55 results about "Scalable computing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

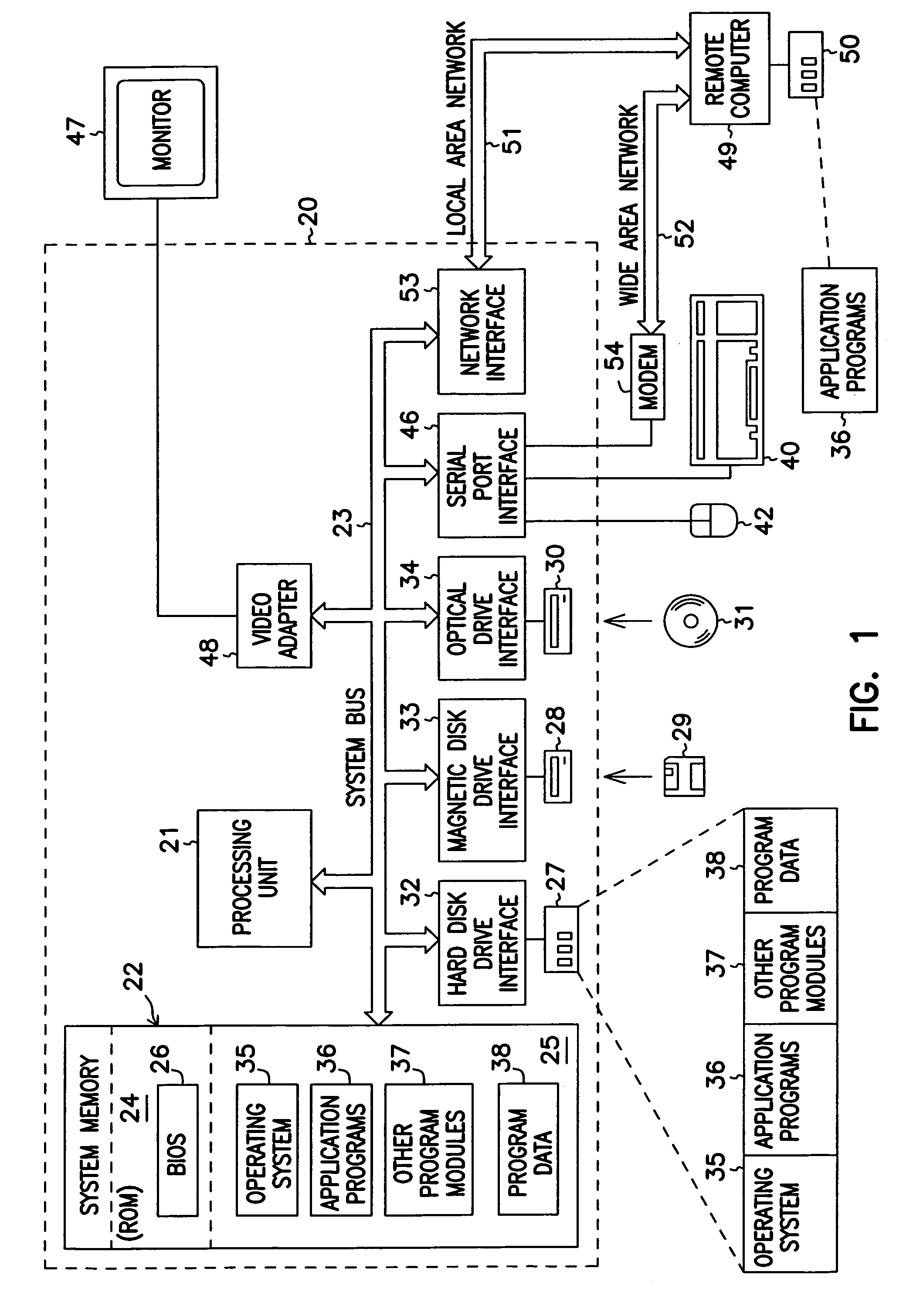

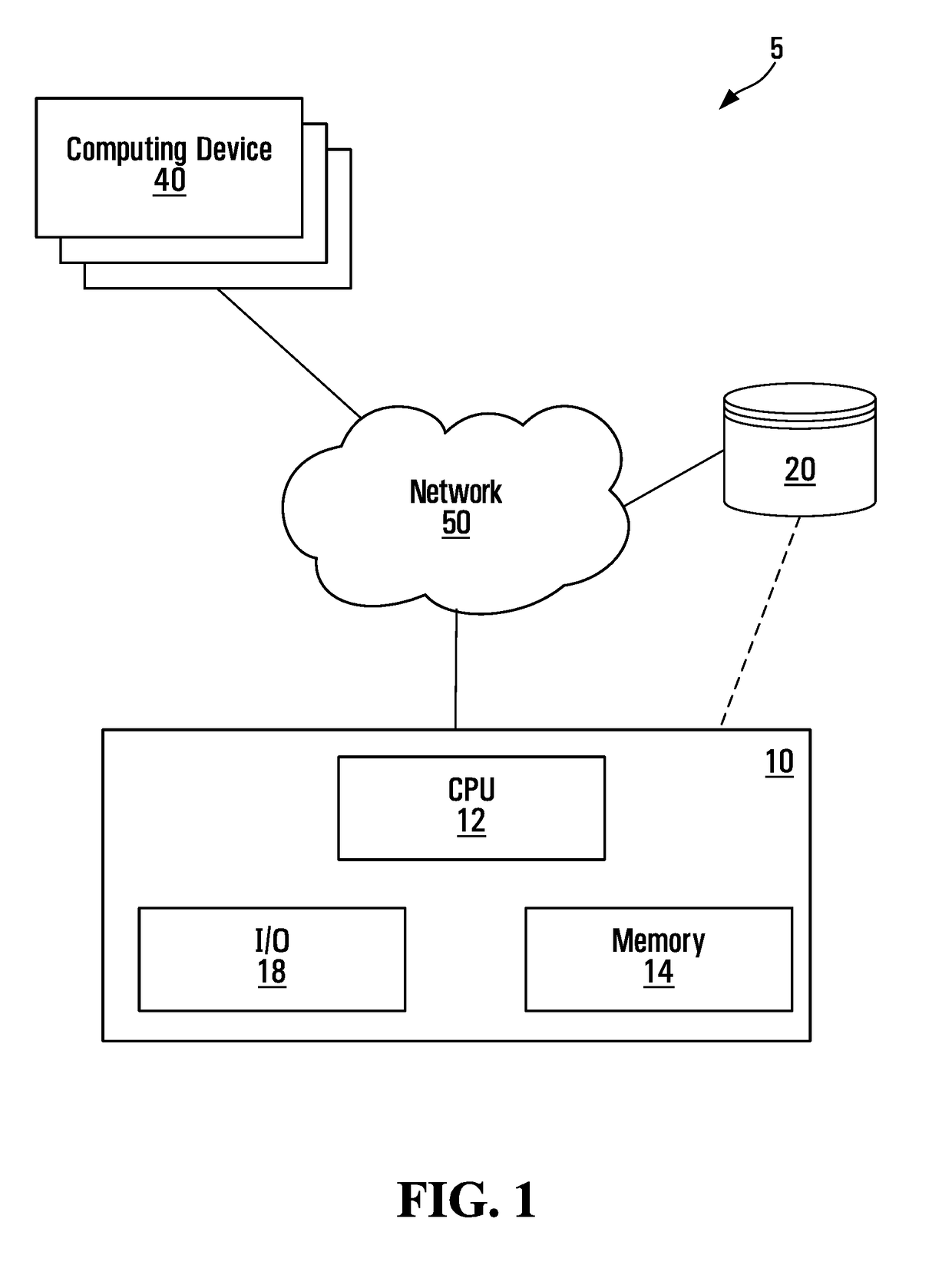

The Scalable Computing research program builds knowledge in high performance computing (HPC) and couples this with scalable cloud computing to support scalable big-data application processing.

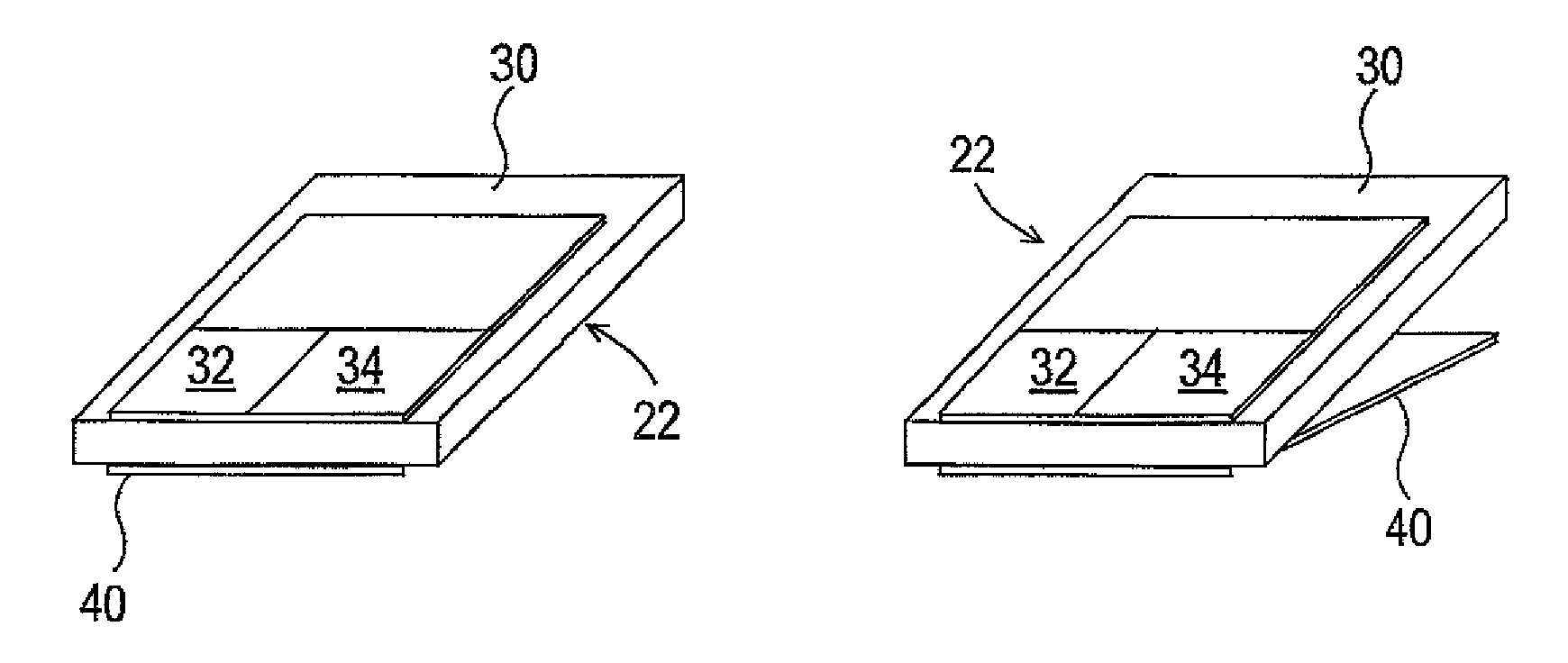

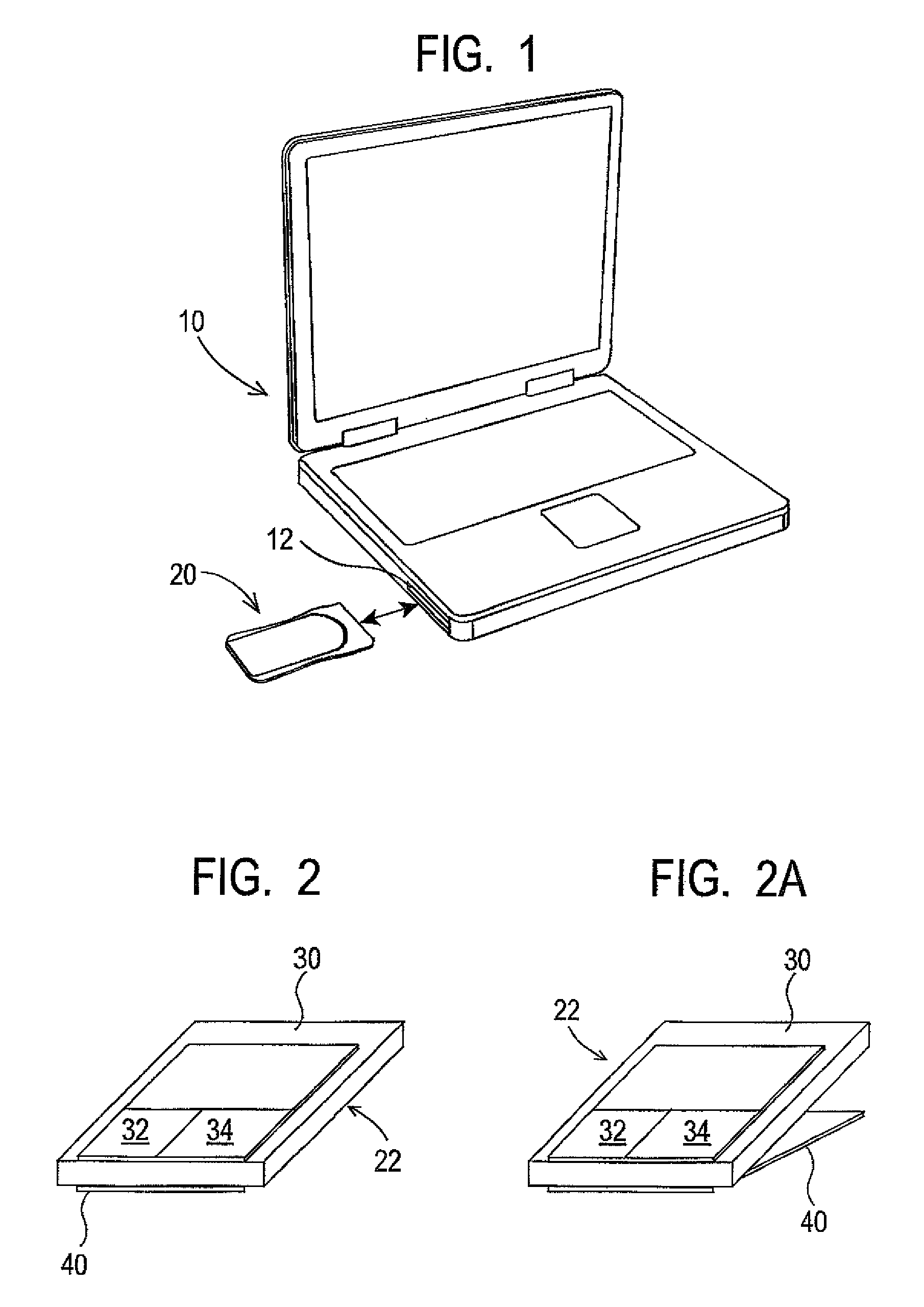

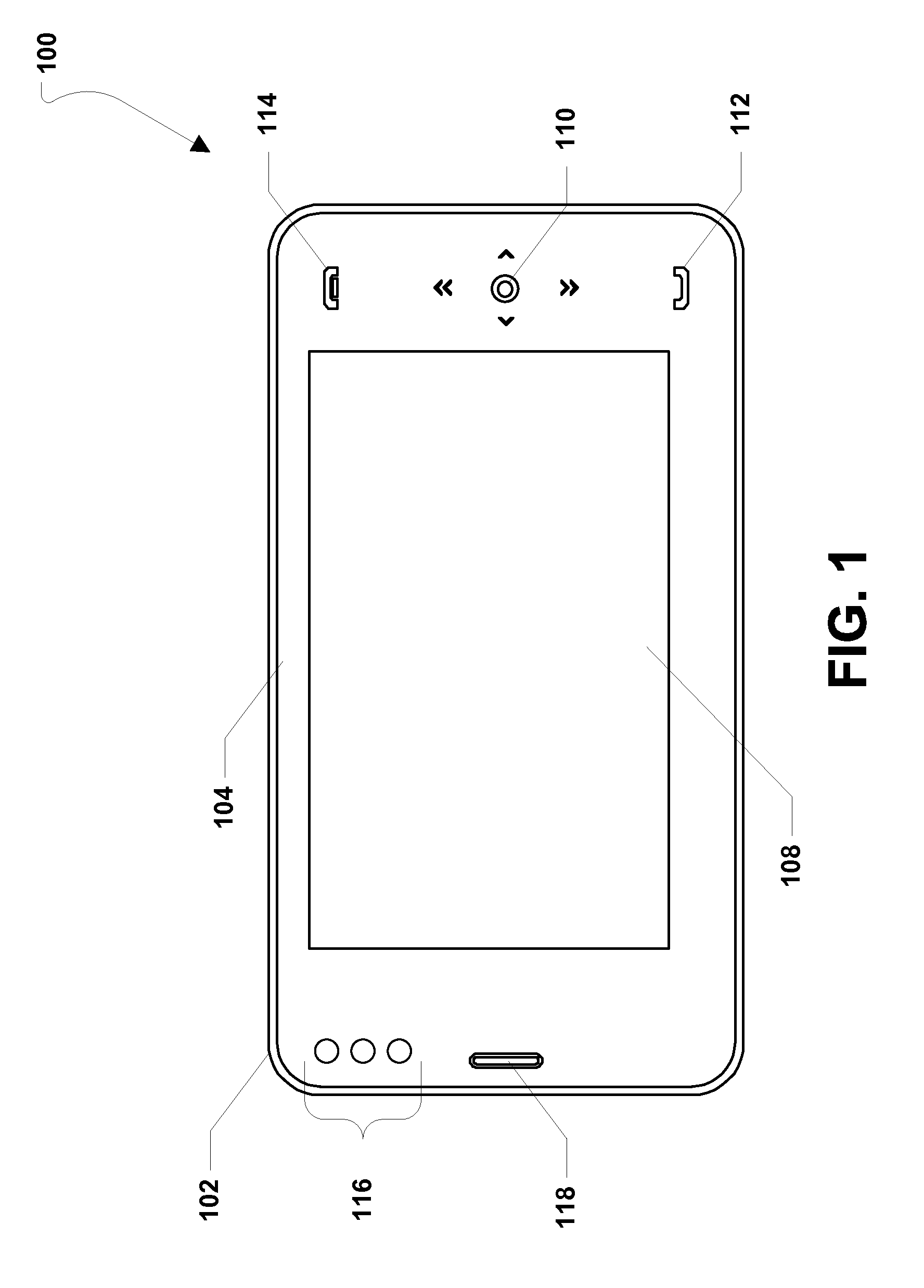

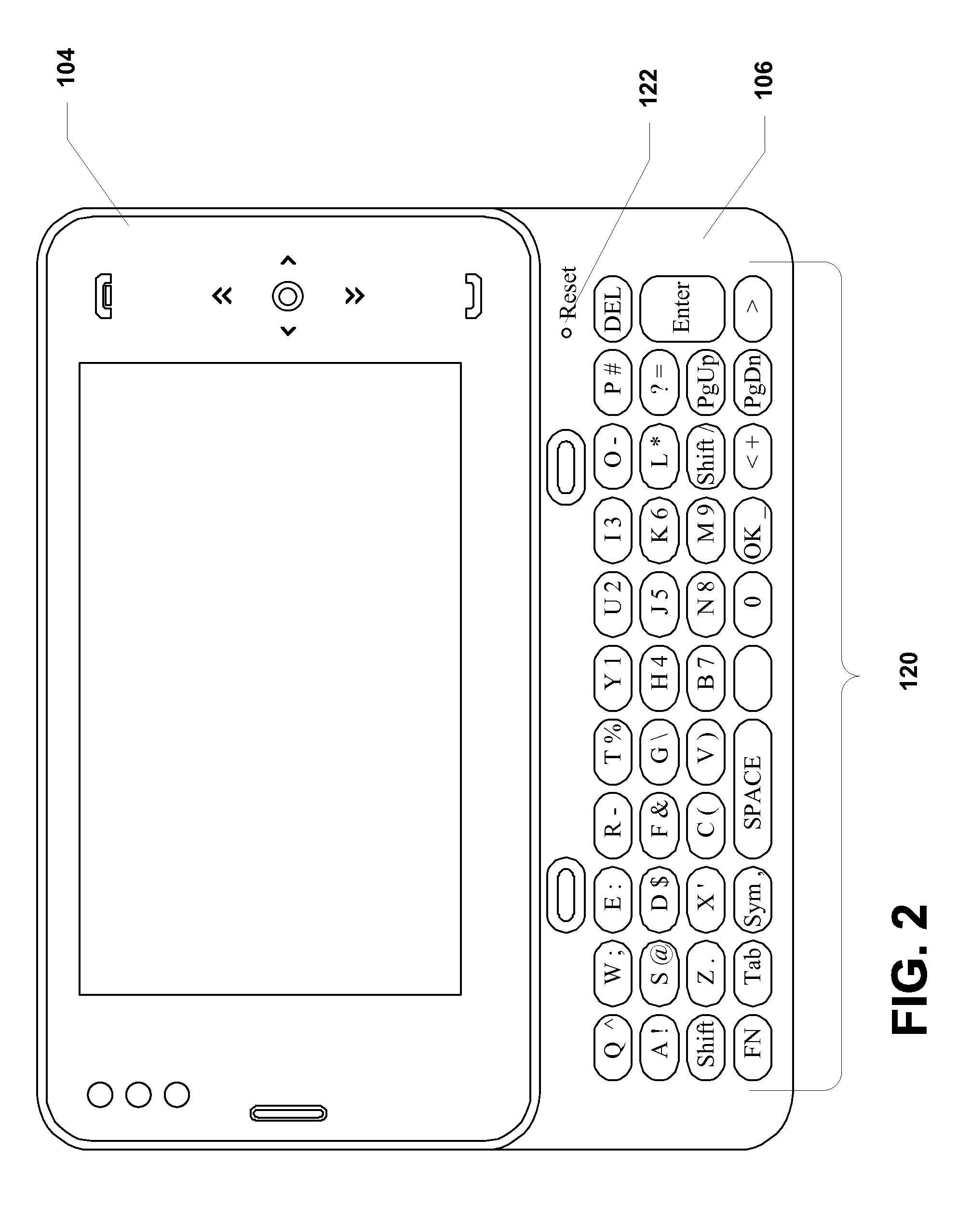

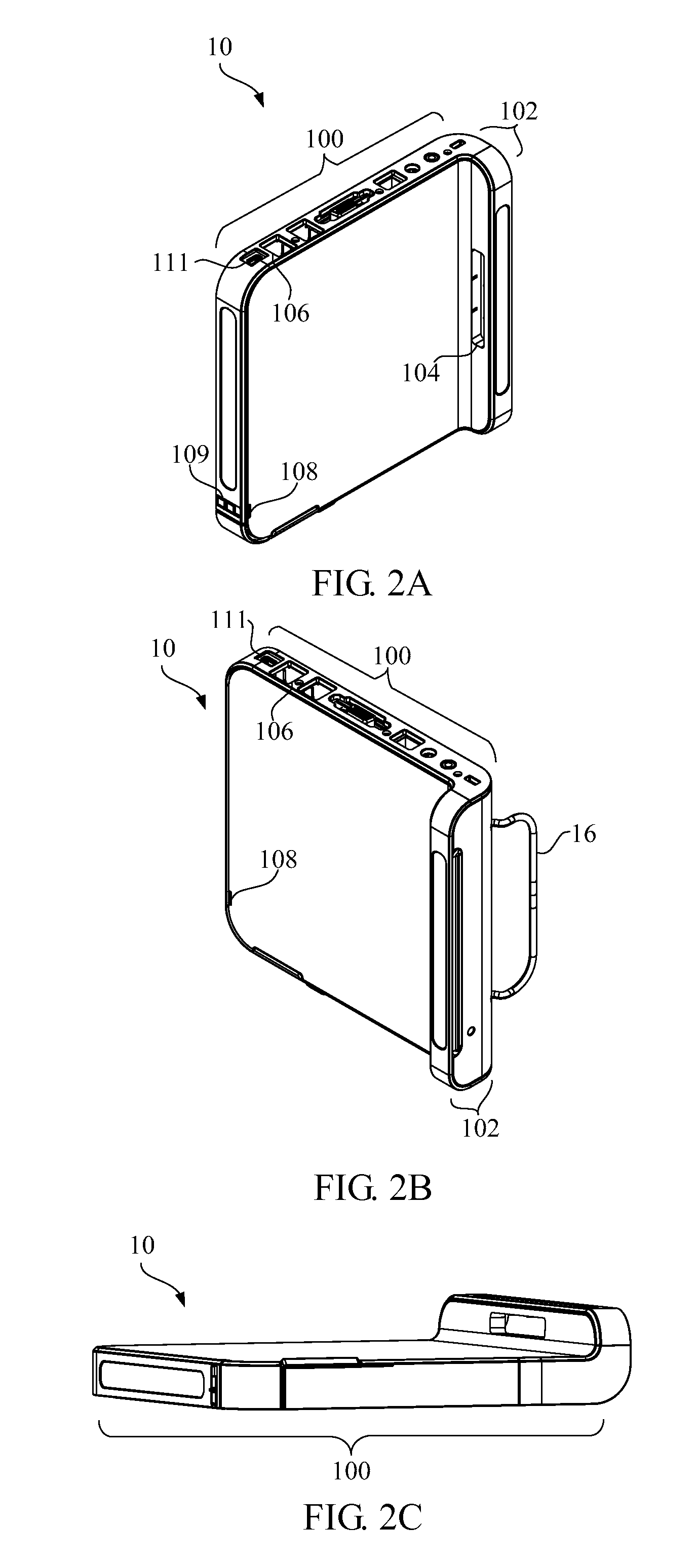

Peripheral devices for portable computer

Inactive | US7233319B2 | Cathode-ray tube indicators, Details for portable computers, Scalable computing, Embedded system

The present invention relates to the storage and recharging of peripheral devices within a computer. In one exemplary embodiment the invention relates to an expandable computer mouse that may be stored and recharged in a port, such as a PCMCIA slot, of a portable computing device.

Owner:NEWTON PERIPHERALS

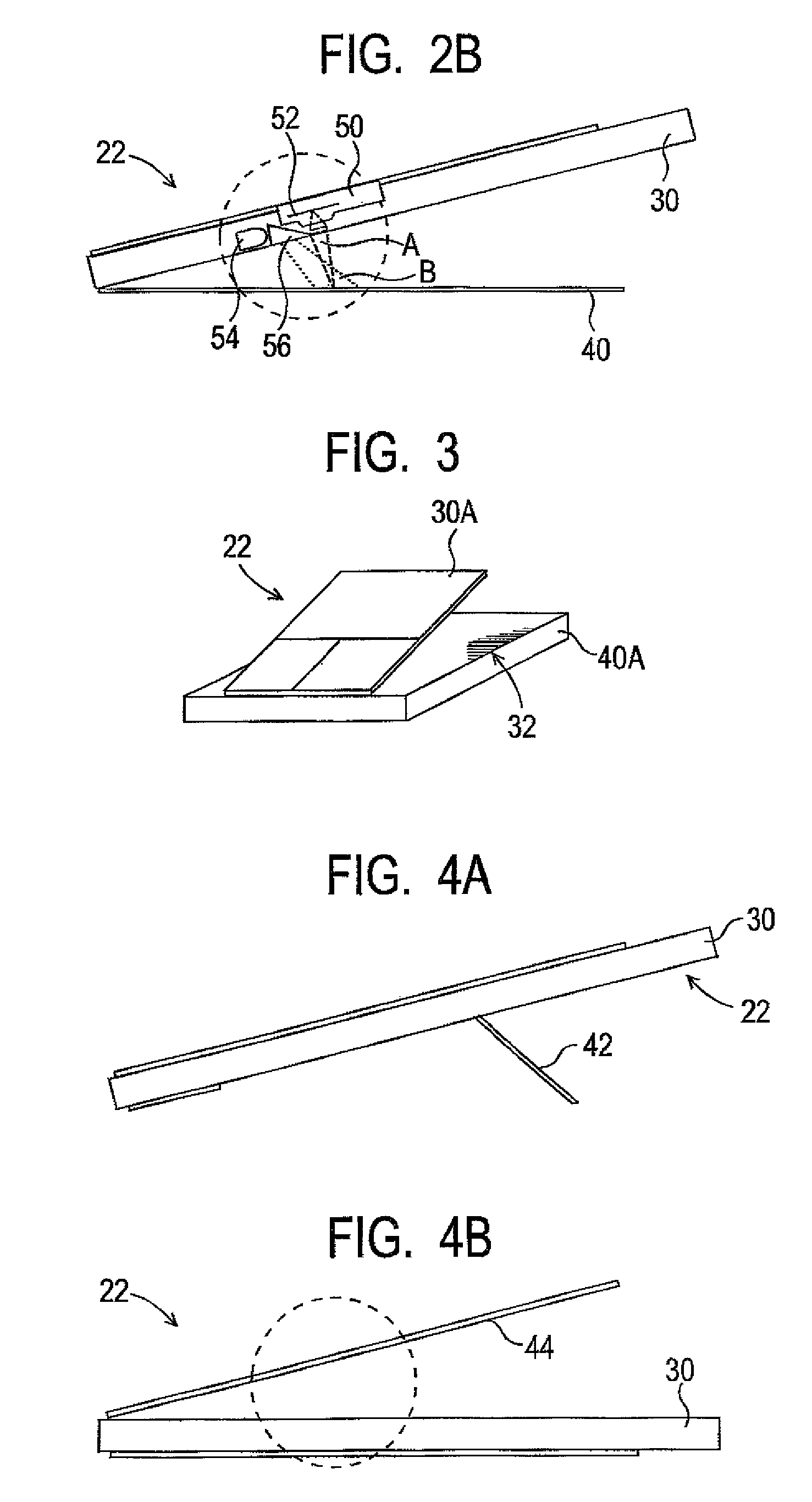

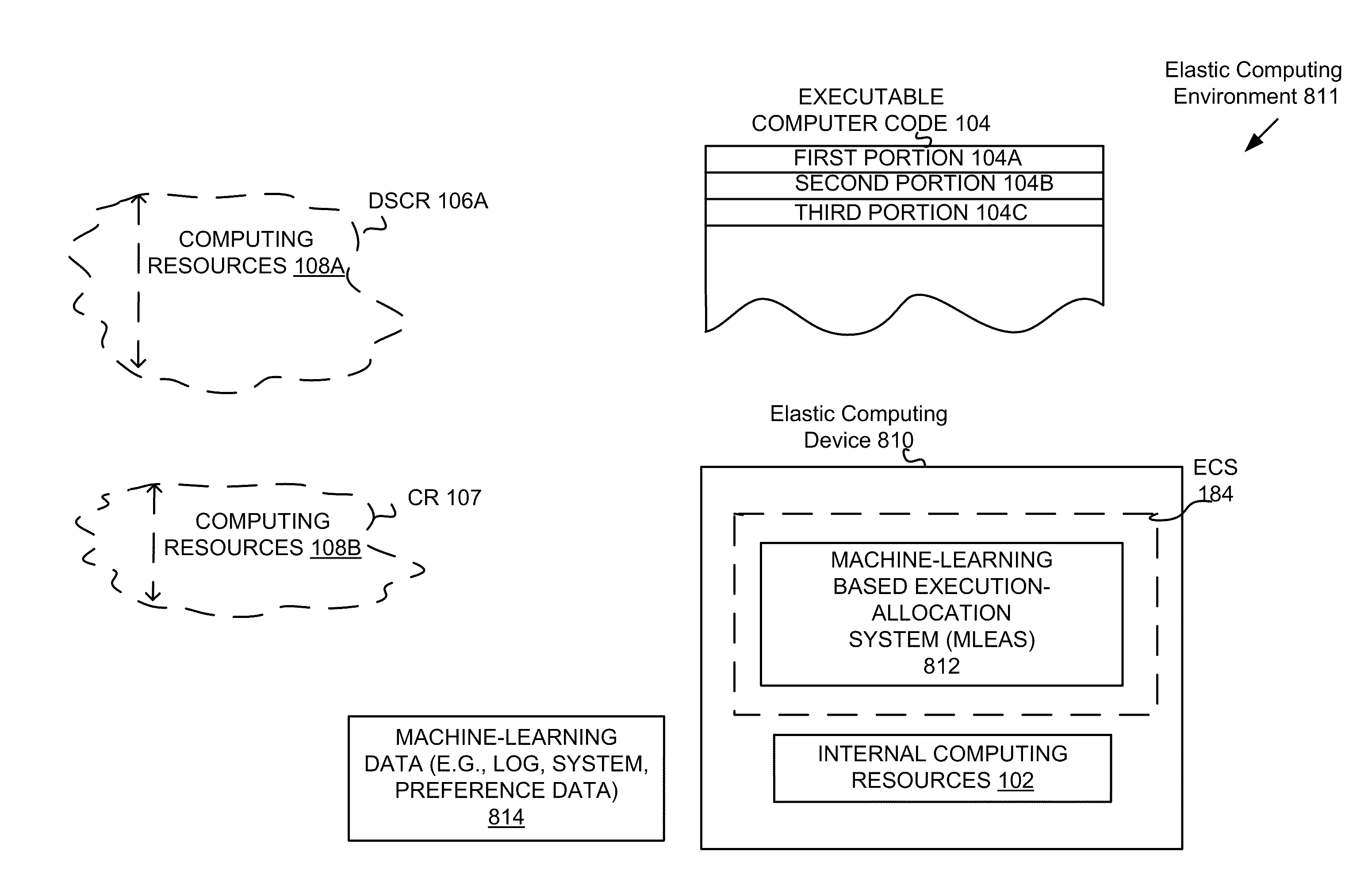

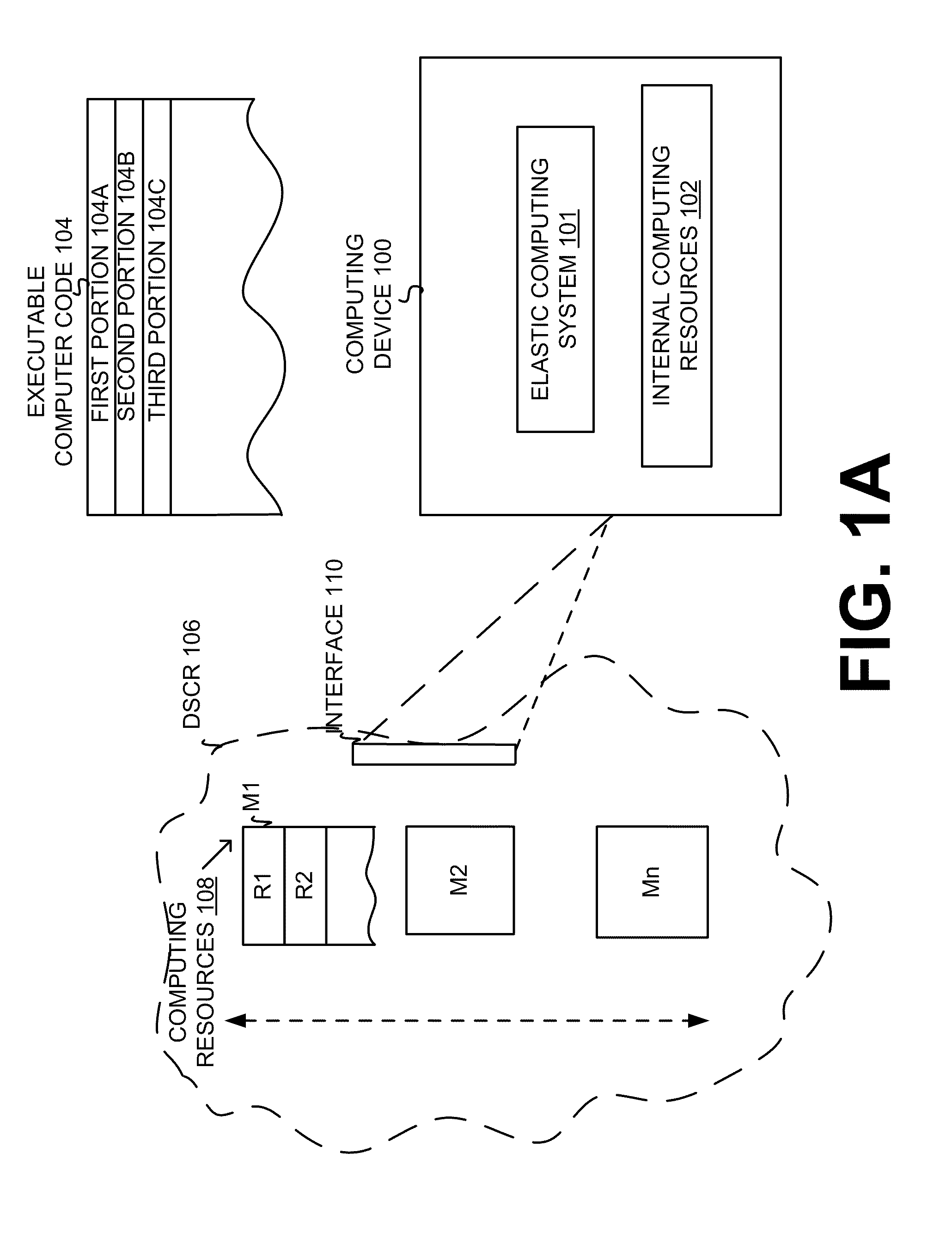

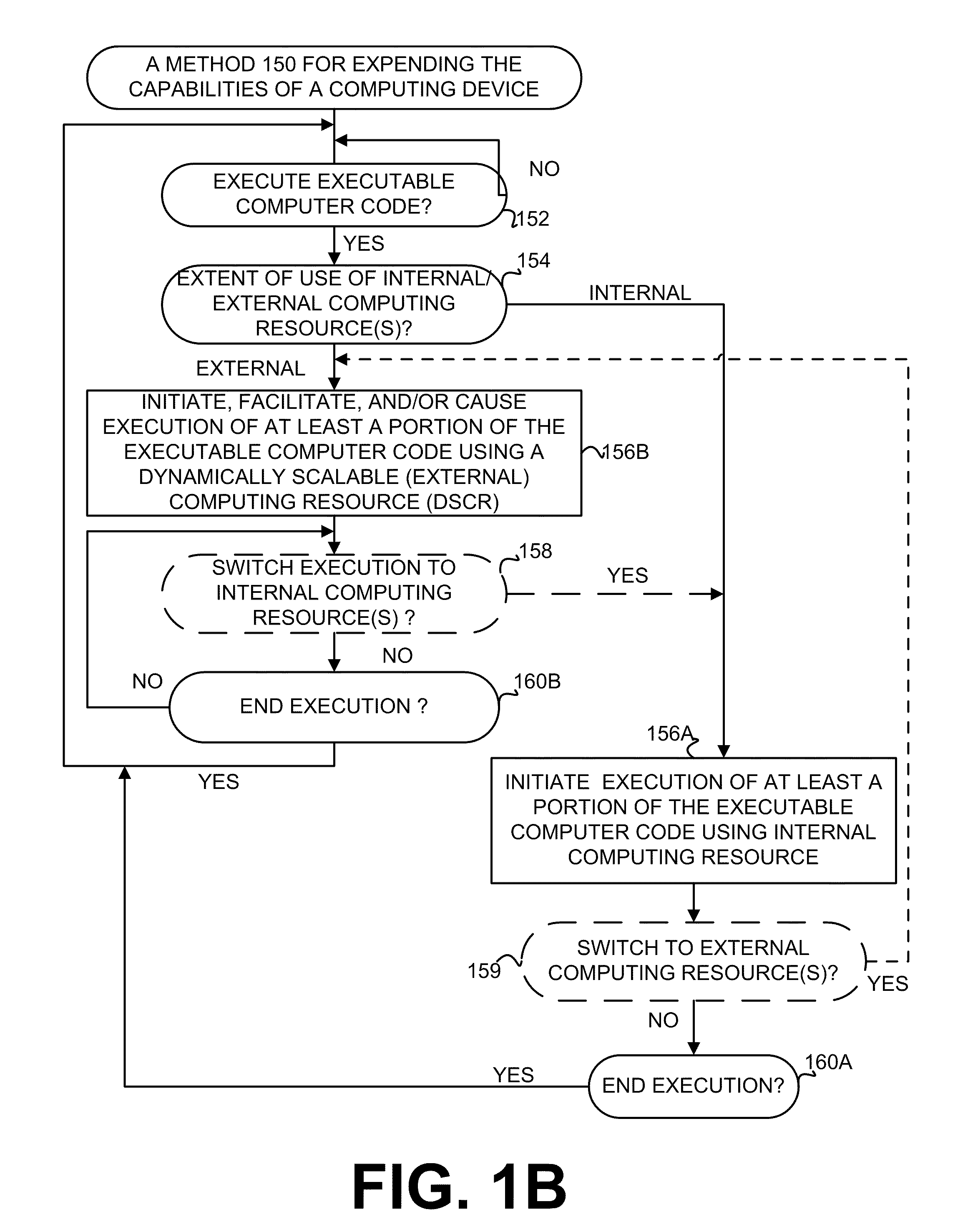

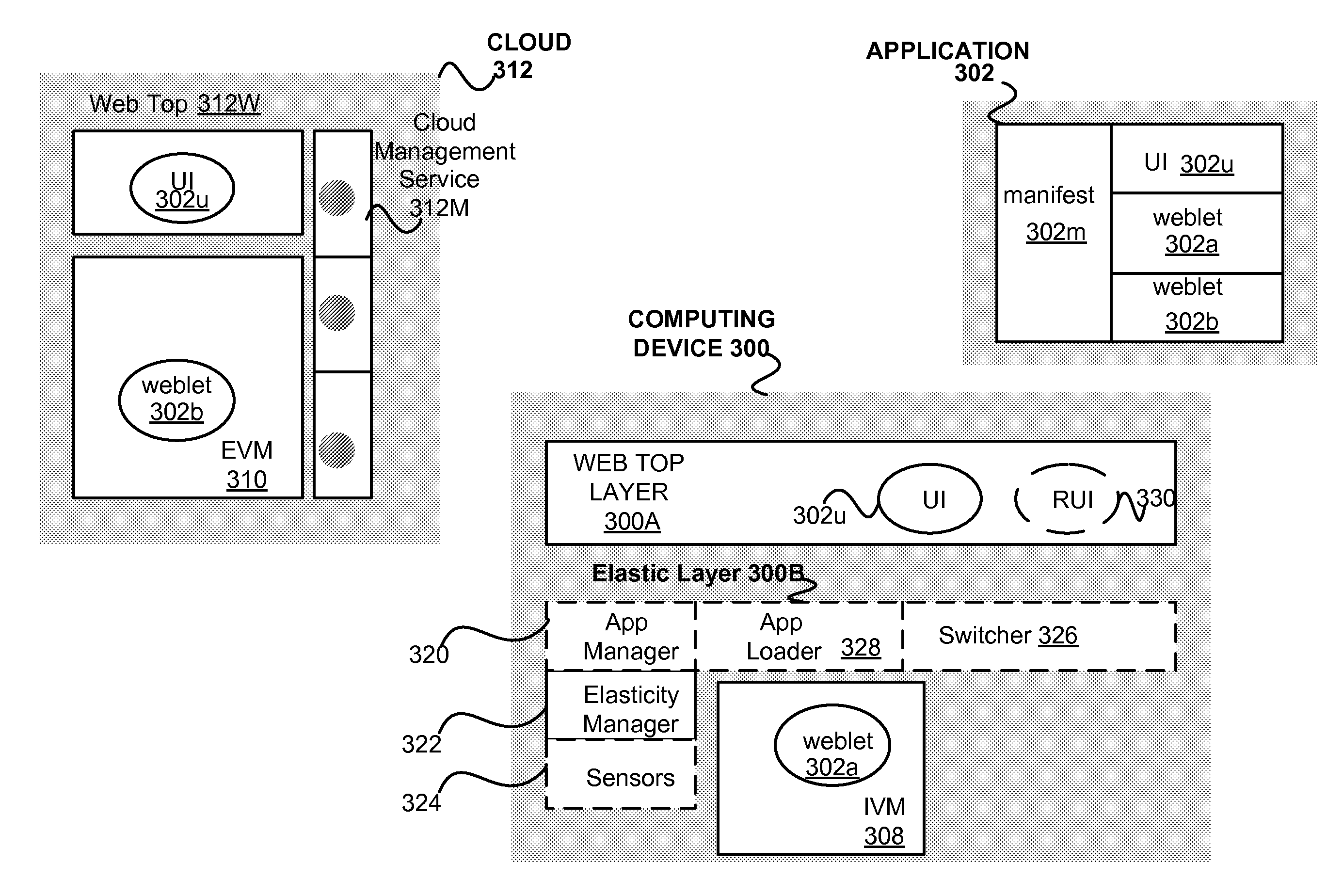

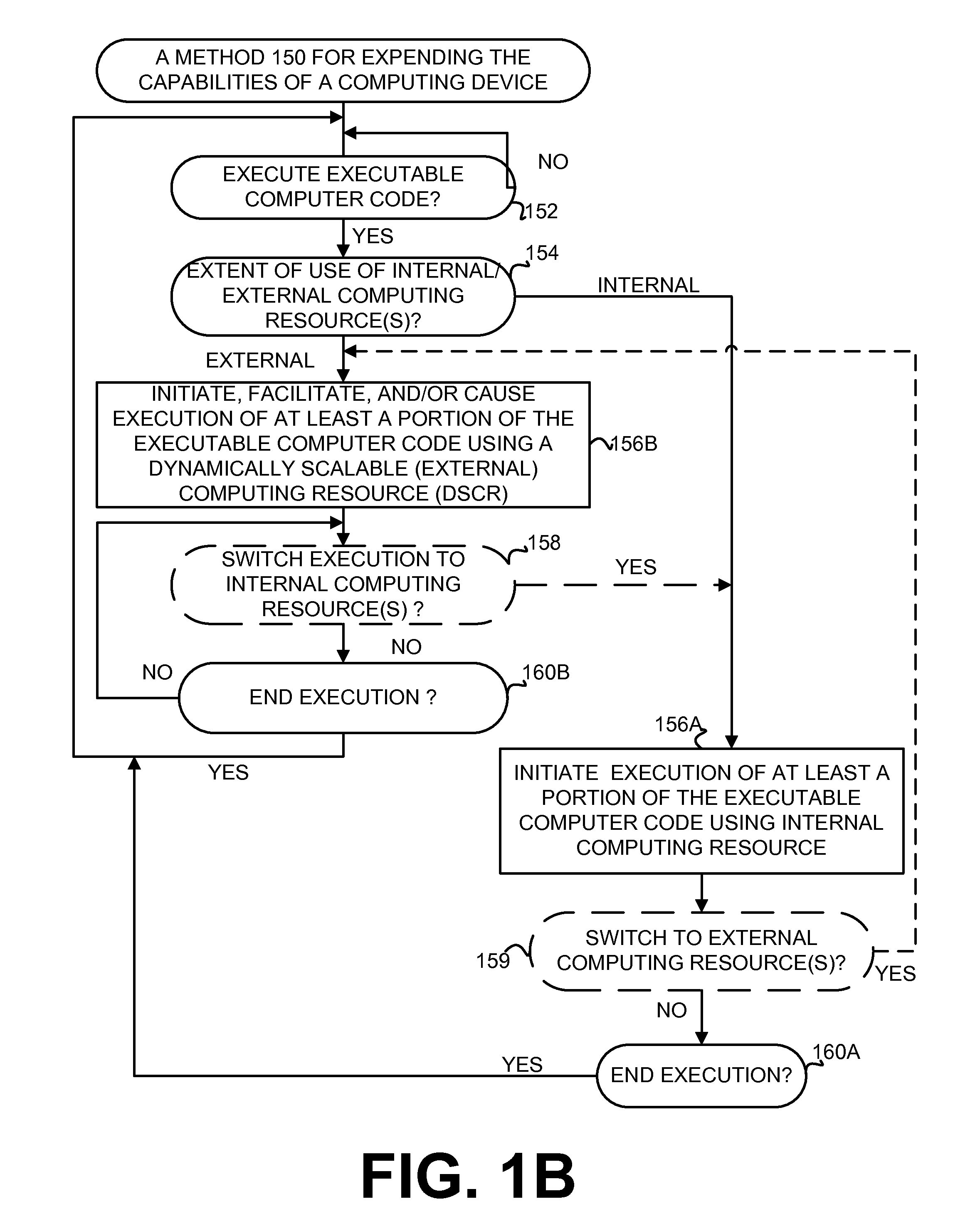

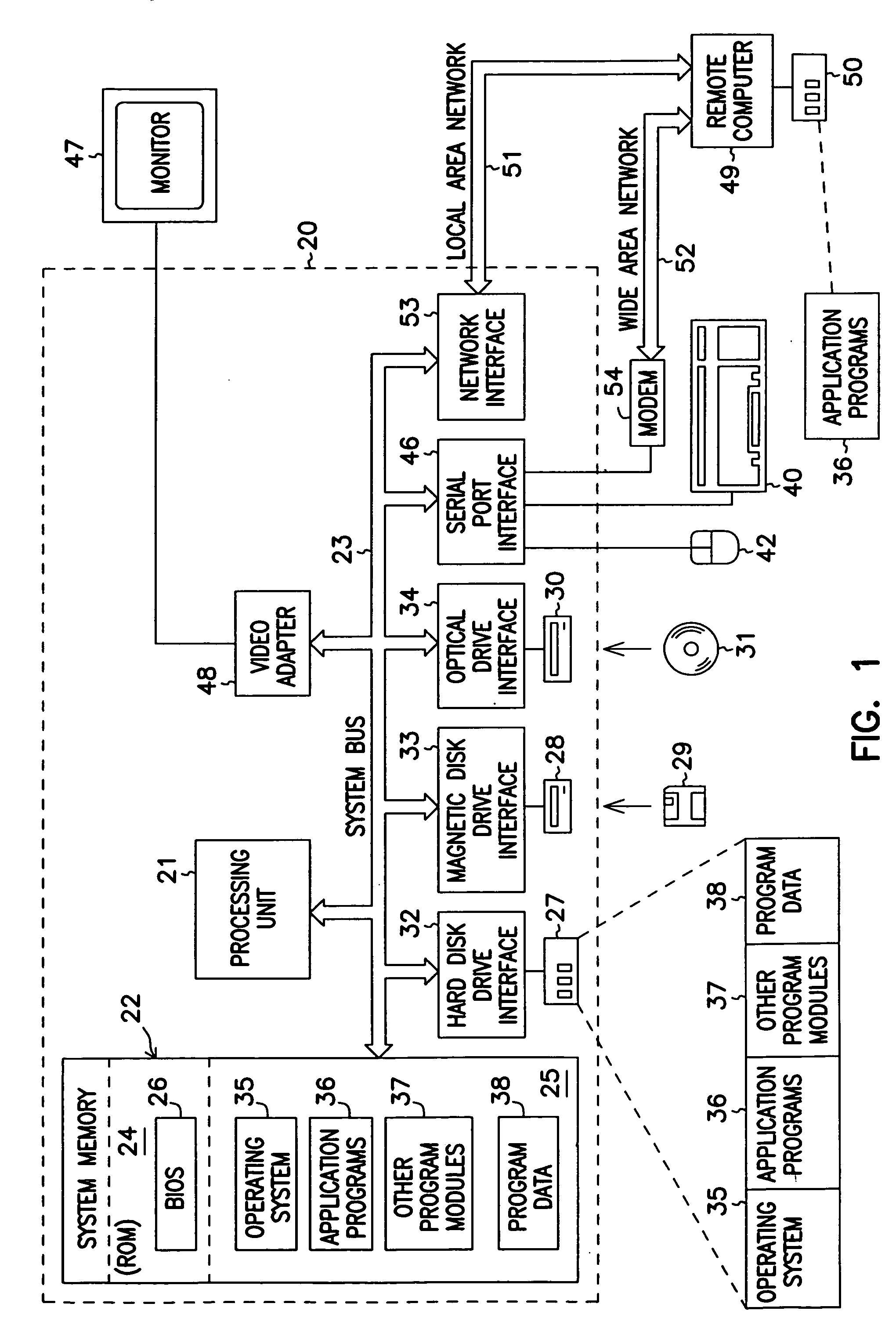

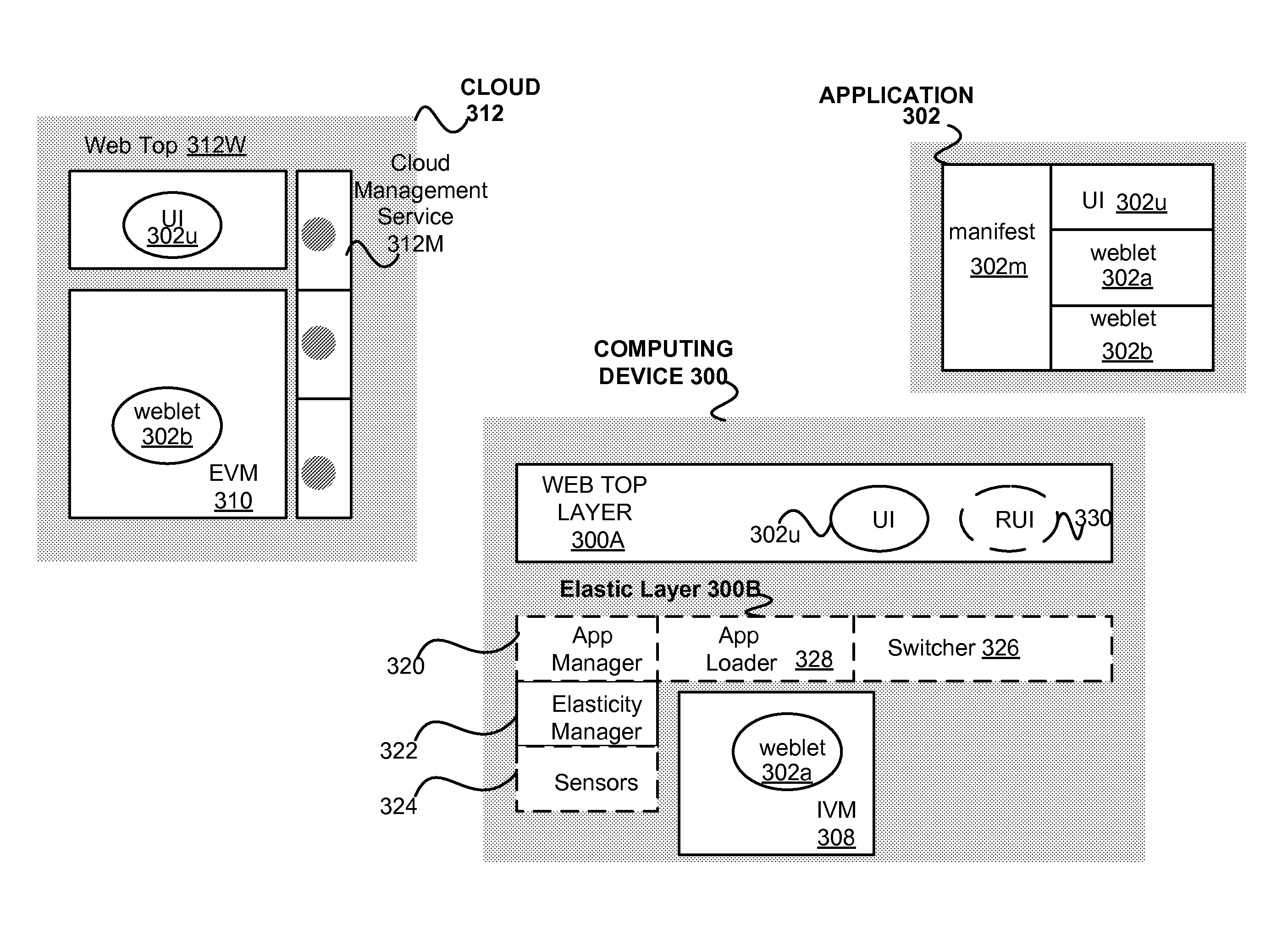

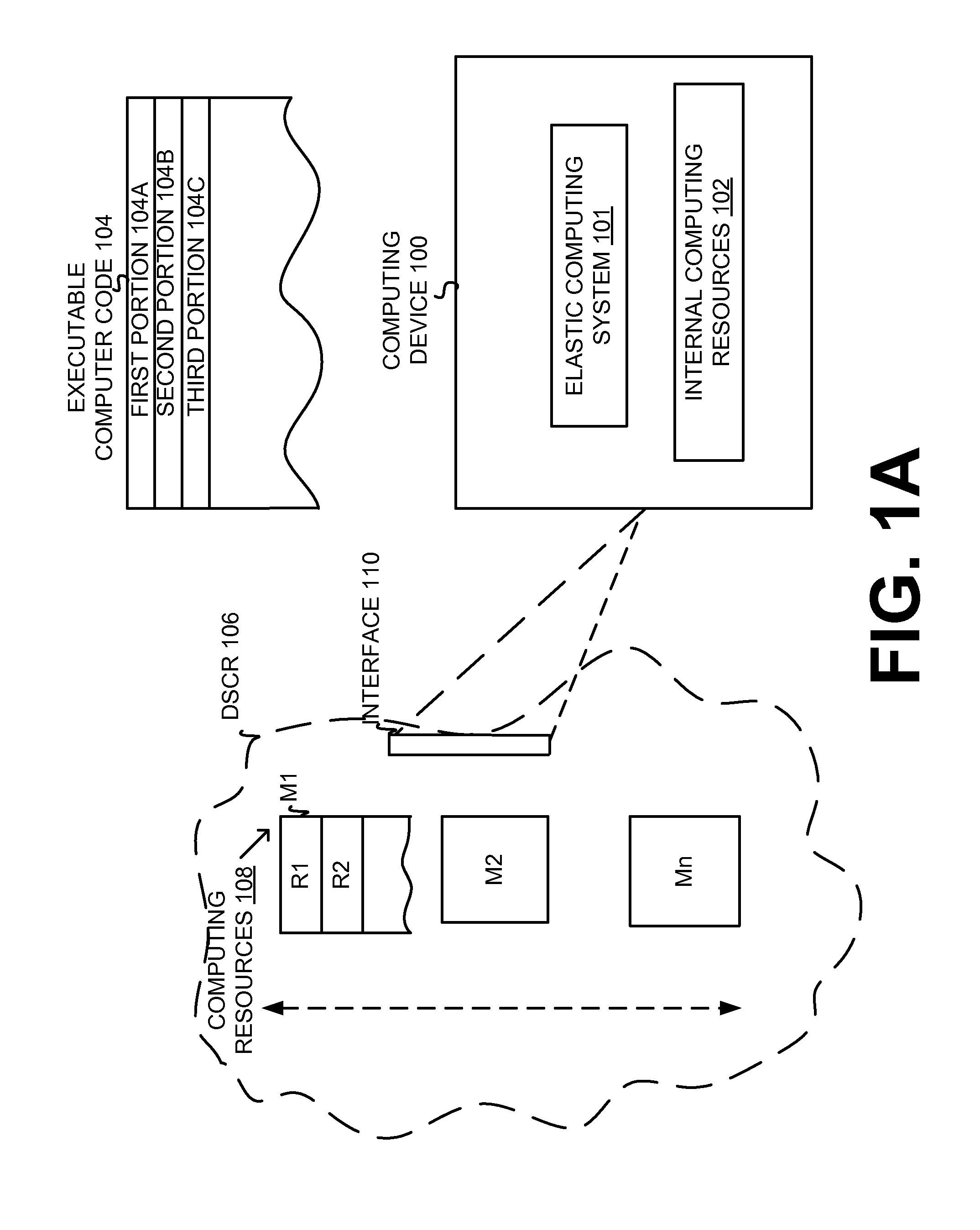

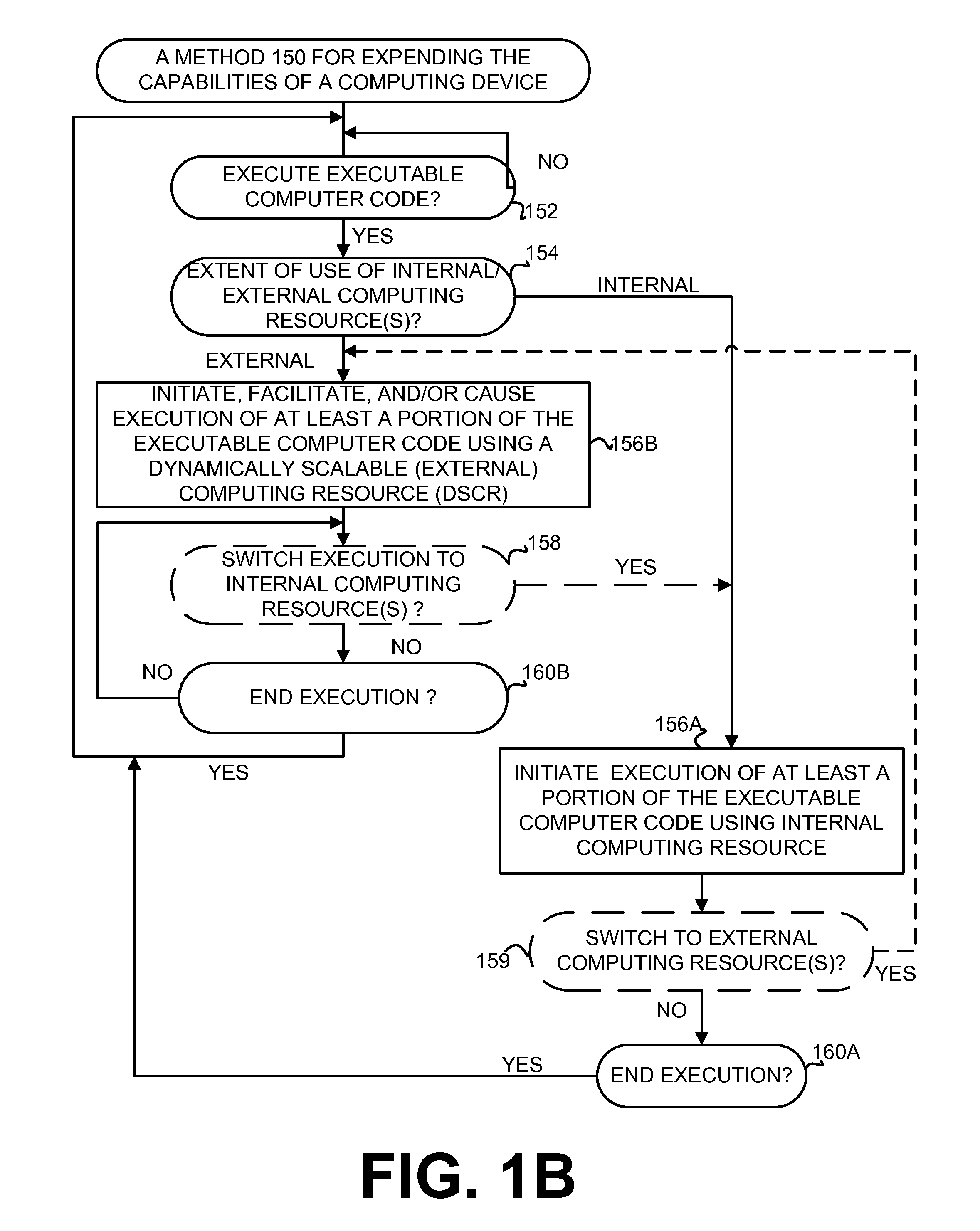

Execution allocation cost assessment for computing systems and environments including elastic computing systems and environments

Inactive | US20110004574A1 | Computing capabilities of a computing system, Effective resources, Resource allocation, Digital computer details, Scalable computing, Parallel computing

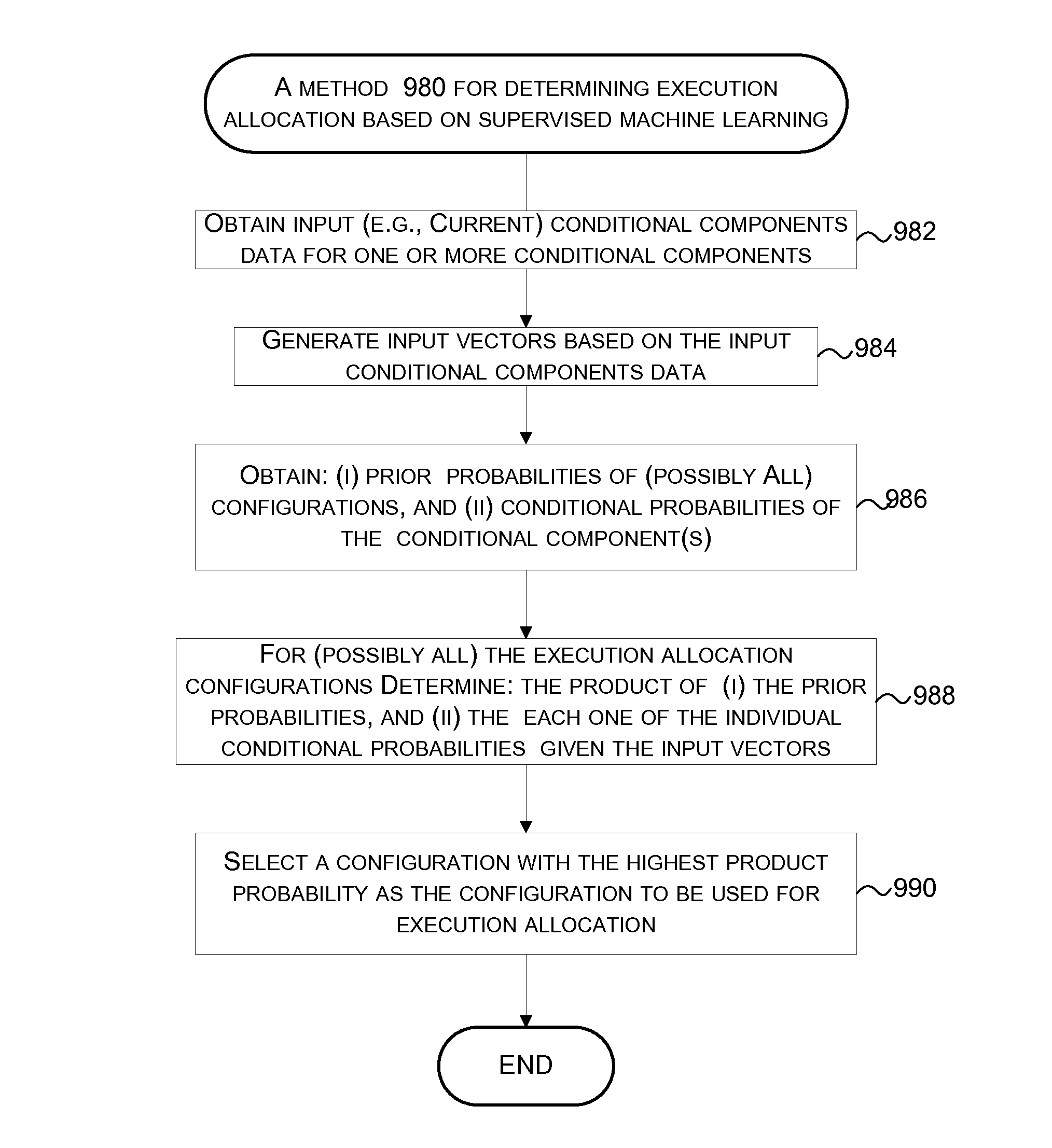

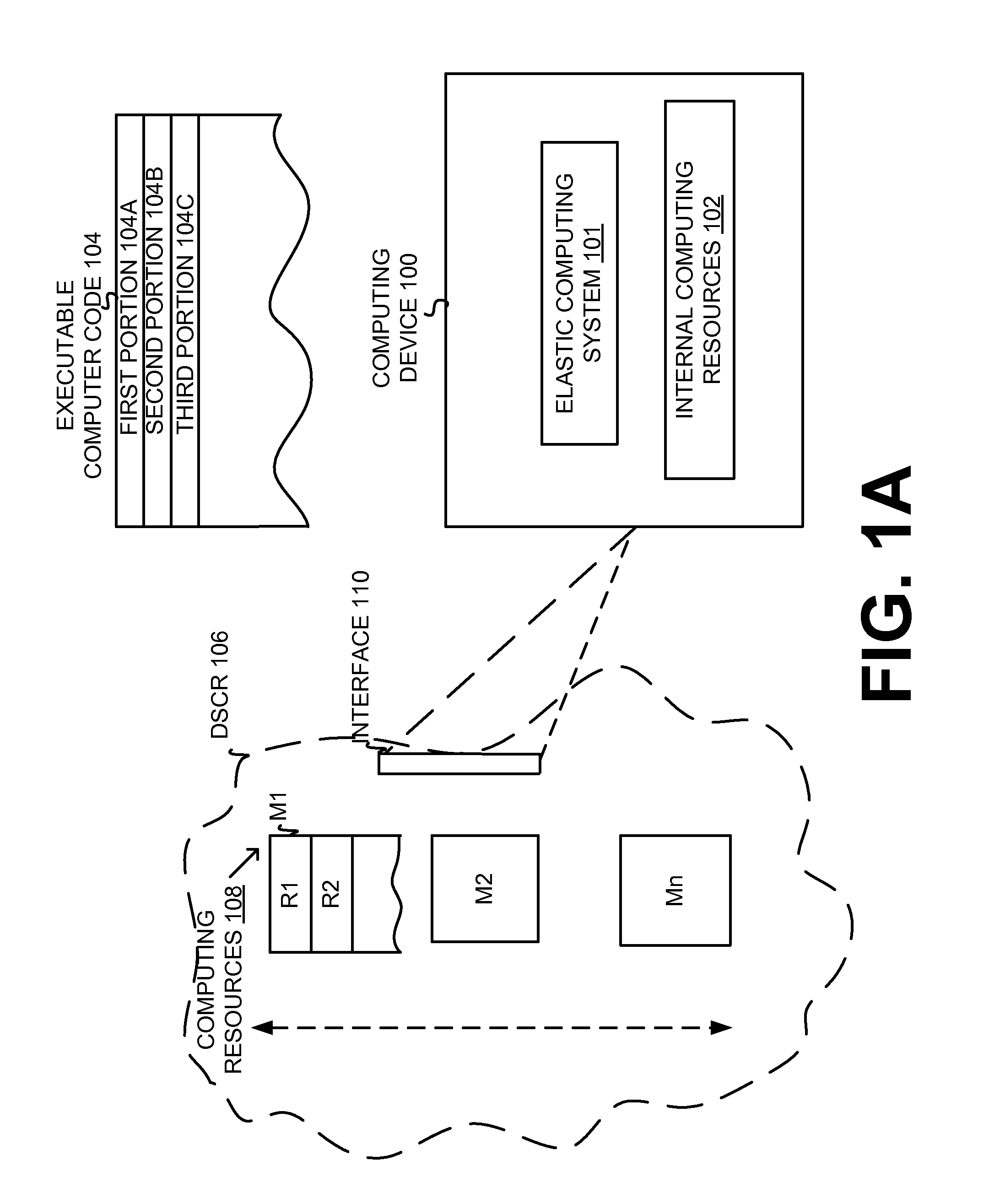

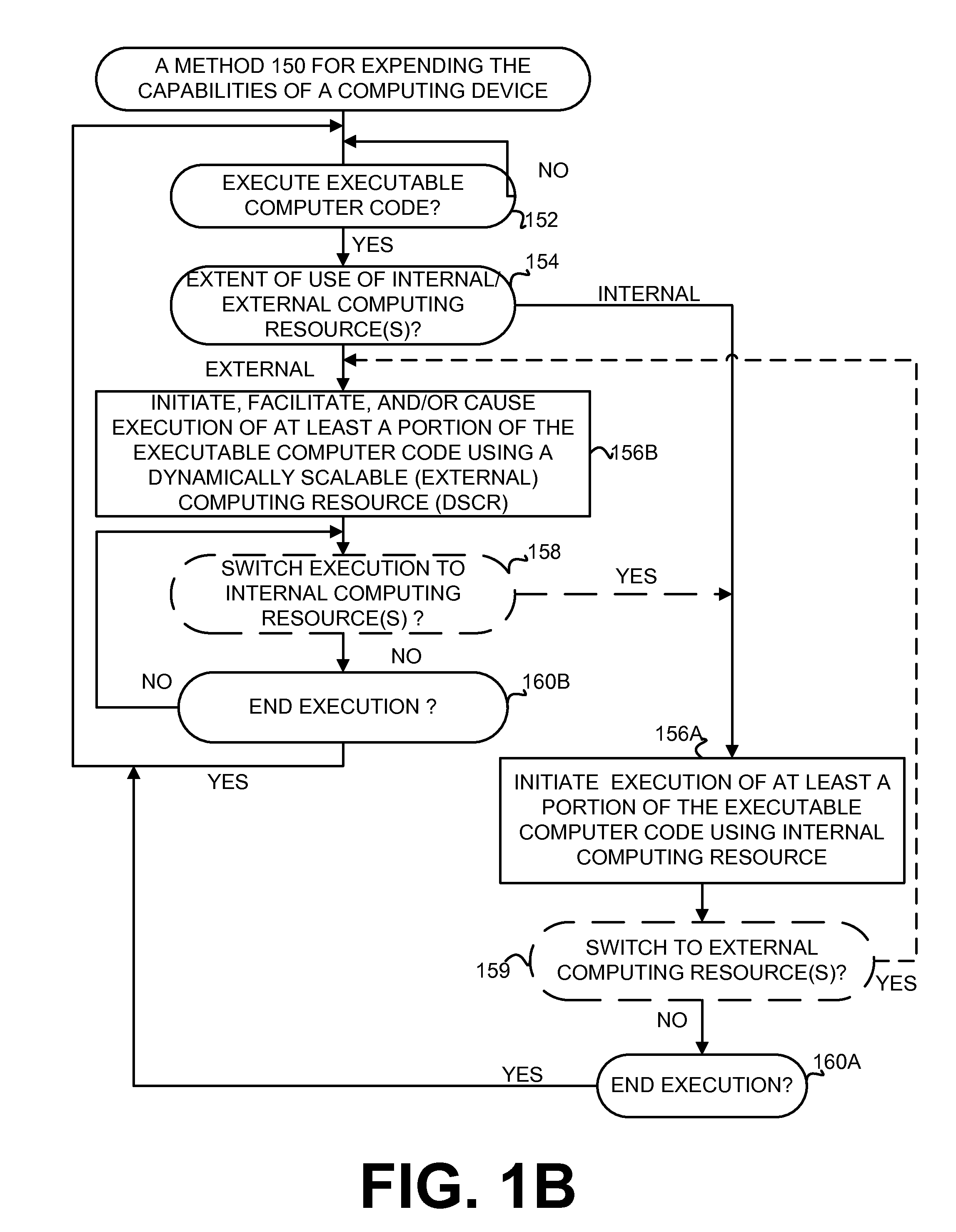

Techniques for allocating individually executable portions of executable code for execution in an Elastic computing environment are disclosed. In an Elastic computing environment, scalable and dynamic external computing resources can be used to effectively extend the computing capabilities beyond those that can be provided by the internal computing resources of a computing system or environment. Machine learning can be used to automatically determine whether to allocate each individual portion of executable code (e.g., a Weblet) for execution to either internal computing resources of a computing system (e.g., a computing device) or external resources of a dynamically scalable computing resource (e.g., a Cloud). For example, status and preference data can be used to train a supervised learning mechanism that allows a computing device to automatically allocate executable code to internal and external computing resources of an Elastic computing environment.

Owner:SAMSUNG ELECTRONICS CO LTD
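
The abstract says status and preference data train a supervised learner that decides, per Weblet, between internal and external resources, but it does not name a model or features. The following is a minimal sketch under stated assumptions: the `Status` record with battery, CPU-load and bandwidth fields is hypothetical, and a plain perceptron stands in for the unspecified learner; it is not the patented method.

```python
from dataclasses import dataclass

LOCAL, CLOUD = -1, 1  # class labels for the two placement targets


@dataclass
class Status:
    """Hypothetical status/preference snapshot taken before running a Weblet."""
    battery: float    # 0.0 (empty) .. 1.0 (full)
    cpu_load: float   # 0.0 (idle) .. 1.0 (saturated)
    bandwidth: float  # 0.0 (offline) .. 1.0 (fast link)

    def features(self) -> list:
        # The trailing 1.0 acts as a bias input.
        return [self.battery, self.cpu_load, self.bandwidth, 1.0]


def score(weights, status):
    return sum(w * x for w, x in zip(weights, status.features()))


def train_perceptron(samples, labels, epochs=100, lr=0.1):
    """Perceptron rule: move the weights toward every misclassified sample."""
    weights = [0.0] * len(samples[0].features())
    for _ in range(epochs):
        mistakes = 0
        for status, label in zip(samples, labels):
            if label * score(weights, status) <= 0:  # wrong side or on the boundary
                weights = [w + lr * label * x
                           for w, x in zip(weights, status.features())]
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights


def allocate(weights, status):
    """Route one Weblet to internal ("local") or external ("cloud") resources."""
    return "cloud" if score(weights, status) > 0 else "local"


# Hypothetical labelled history: offload when the battery is low and the CPU is busy.
TRAINING_SAMPLES = [
    Status(0.9, 0.1, 0.5), Status(0.8, 0.2, 0.9),
    Status(0.2, 0.9, 0.9), Status(0.3, 0.8, 0.7),
]
TRAINING_LABELS = [LOCAL, LOCAL, CLOUD, CLOUD]
```

A perceptron is only a convenient stand-in here: it converges on linearly separable history like this toy set, and any other supervised classifier could take its place behind `allocate`.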

Securely using service providers in elastic computing systems and environments

Inactive | US20110004916A1 | Improve abilities, Easy to use, Digital data authentication, Program control, Scalable computing, Service provision

Access permission can be assigned to a particular individually executable portion of computer executable code (“component-specific access permission”) and enforced when that individually executable portion (or component) accesses the services of a service provider. At least one of the individually executable portions can request the services when executed by a dynamically scalable computing resource provider. In addition, general and component-specific access permissions, associated respectively with the executable computer code as a whole or with one of its specific portions (or components), can be cancelled or rendered inoperable in response to an explicit request for cancellation.

Owner:SAMSUNG ELECTRONICS CO LTD
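
To make the difference between general and component-specific permissions concrete, here is a minimal sketch, assuming a hypothetical in-memory `PermissionRegistry` in which a general grant covers every component of an application, a component-specific grant covers one component, and either can be cancelled. The class, method names and the precedence rule are illustrative assumptions, not taken from the patent.

```python
class PermissionRegistry:
    """Hypothetical store of general and component-specific access permissions."""

    def __init__(self):
        self._general = set()    # (app, service): covers every component of the app
        self._component = set()  # (app, component, service): one component only

    def grant_general(self, app, service):
        self._general.add((app, service))

    def grant_component(self, app, component, service):
        self._component.add((app, component, service))

    def cancel_general(self, app, service):
        # discard() is a no-op if the permission was never granted.
        self._general.discard((app, service))

    def cancel_component(self, app, component, service):
        self._component.discard((app, component, service))

    def may_access(self, app, component, service):
        """Enforcement check run before a component calls a service provider."""
        return ((app, service) in self._general
                or (app, component, service) in self._component)
```

Keeping the two kinds of grant in separate sets makes an explicit cancellation of one component's permission independent of the application-wide grant.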

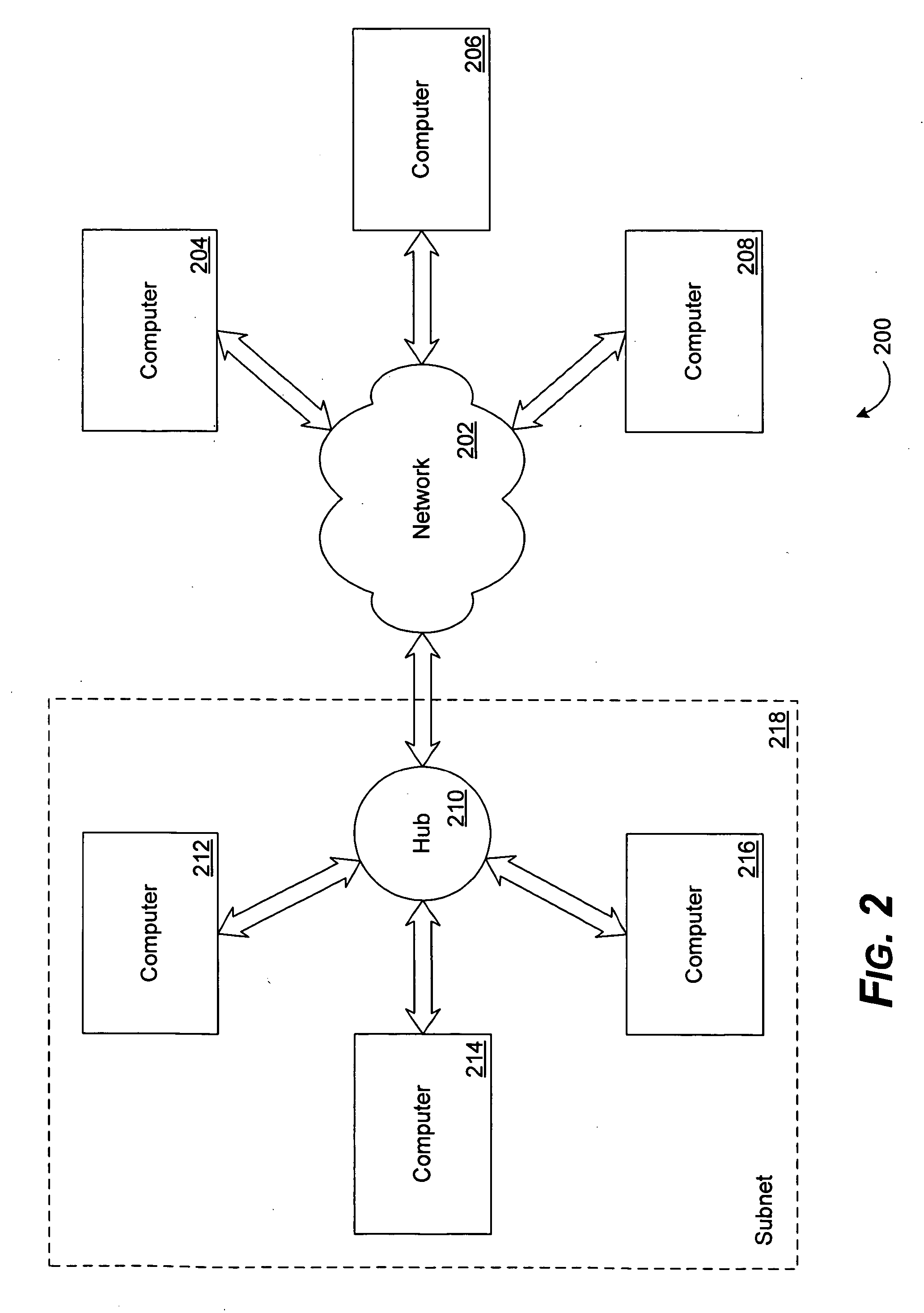

System and method of providing scalable computing between a portable computing device and a portable computing device docking station

Active | US20100250975A1 | Energy efficient ICT, Program control using stored programs, Scalable computing, Docking station

A method of managing processor cores within a portable computing device (PCD) is disclosed and may include determining whether the PCD is docked with a PCD docking station when the PCD is powered on and energizing a first processor core when the PCD is not docked with the PCD docking station. The method may include determining an application processor requirement when an application is selected, determining whether the application processor requirement equals a two processor core condition, and energizing a second processor core when the application processor requirement equals the two processor core condition.

Owner:QUALCOMM INC
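
The core-management steps in the abstract can be sketched as a small state machine. This is a sketch under assumptions: `PortableComputingDevice`, `TWO_CORE_CONDITION` and the integer "cores required" encoding are hypothetical, and the docked branch is deliberately left empty because the abstract does not describe it.

```python
from dataclasses import dataclass, field

TWO_CORE_CONDITION = 2  # hypothetical encoding: the app needs two cores


@dataclass
class PortableComputingDevice:
    docked: bool
    energized: set = field(default_factory=set)  # indices of powered cores

    def power_on(self):
        # Described path: an undocked PCD starts on its first core only.
        if not self.docked:
            self.energized.add(0)
        # The abstract does not spell out the docked case, so nothing is done here.

    def select_application(self, cores_required):
        # Energize the second core only when the app meets the two-core condition.
        if cores_required == TWO_CORE_CONDITION:
            self.energized.add(1)
```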

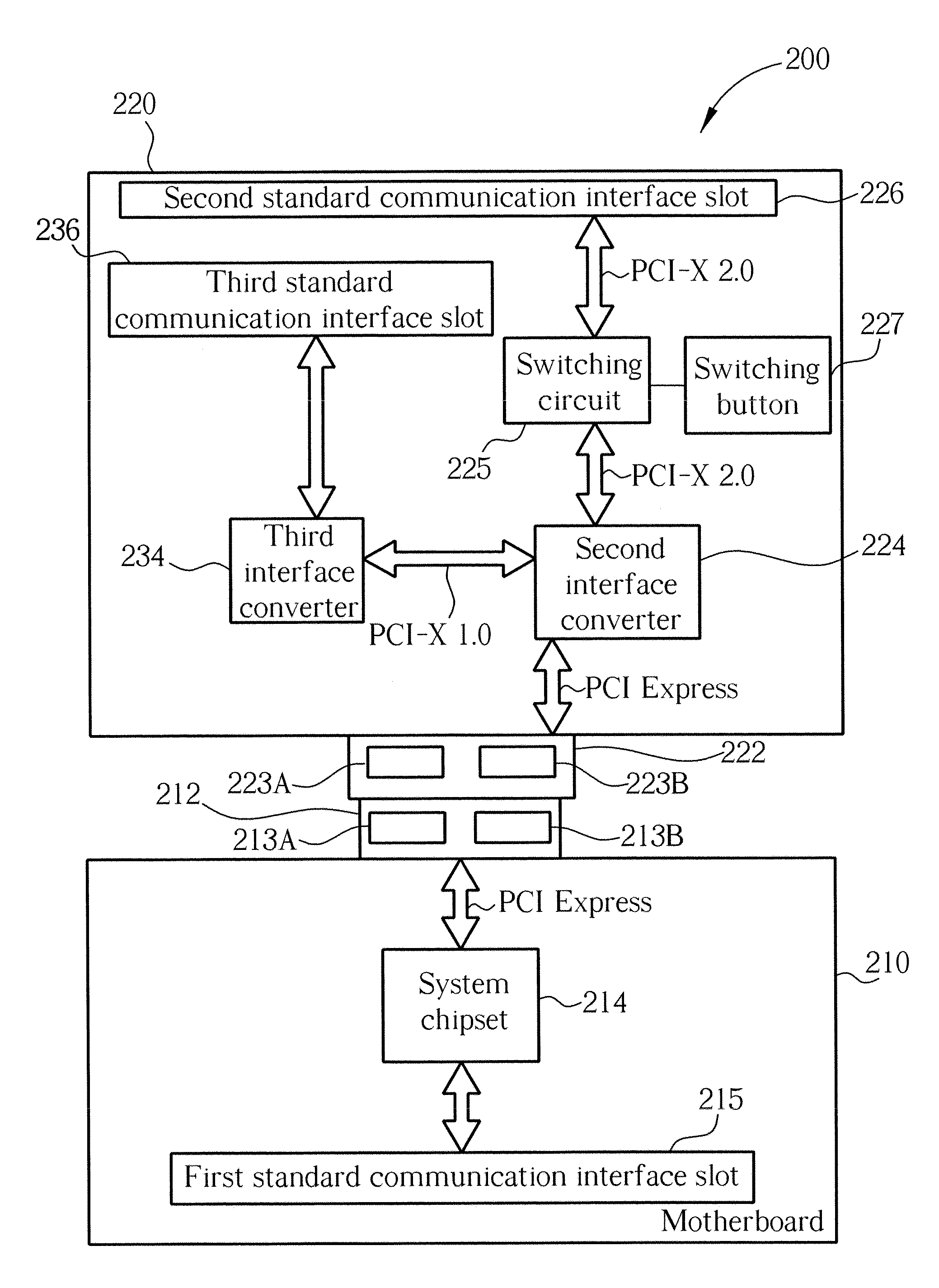

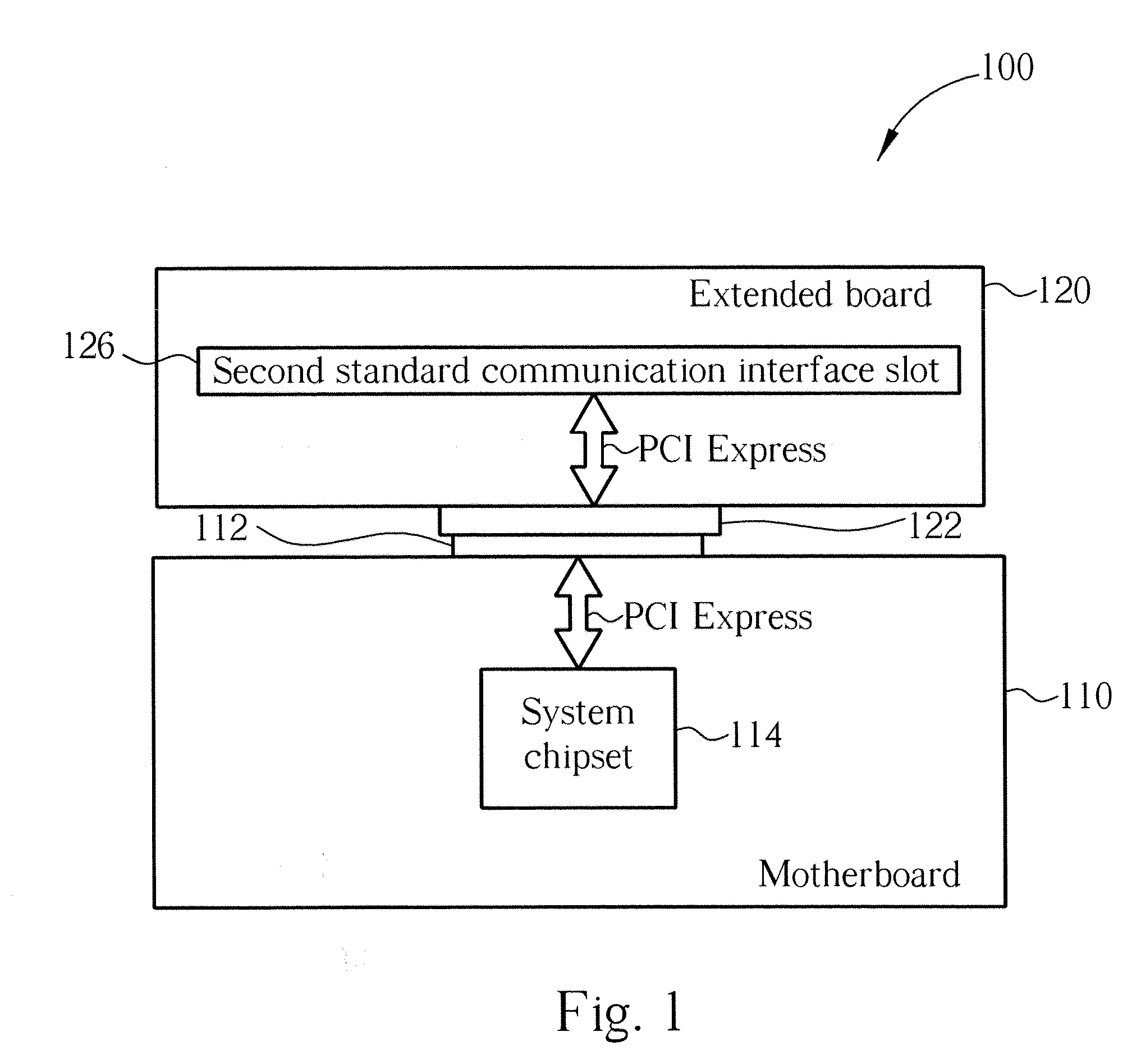

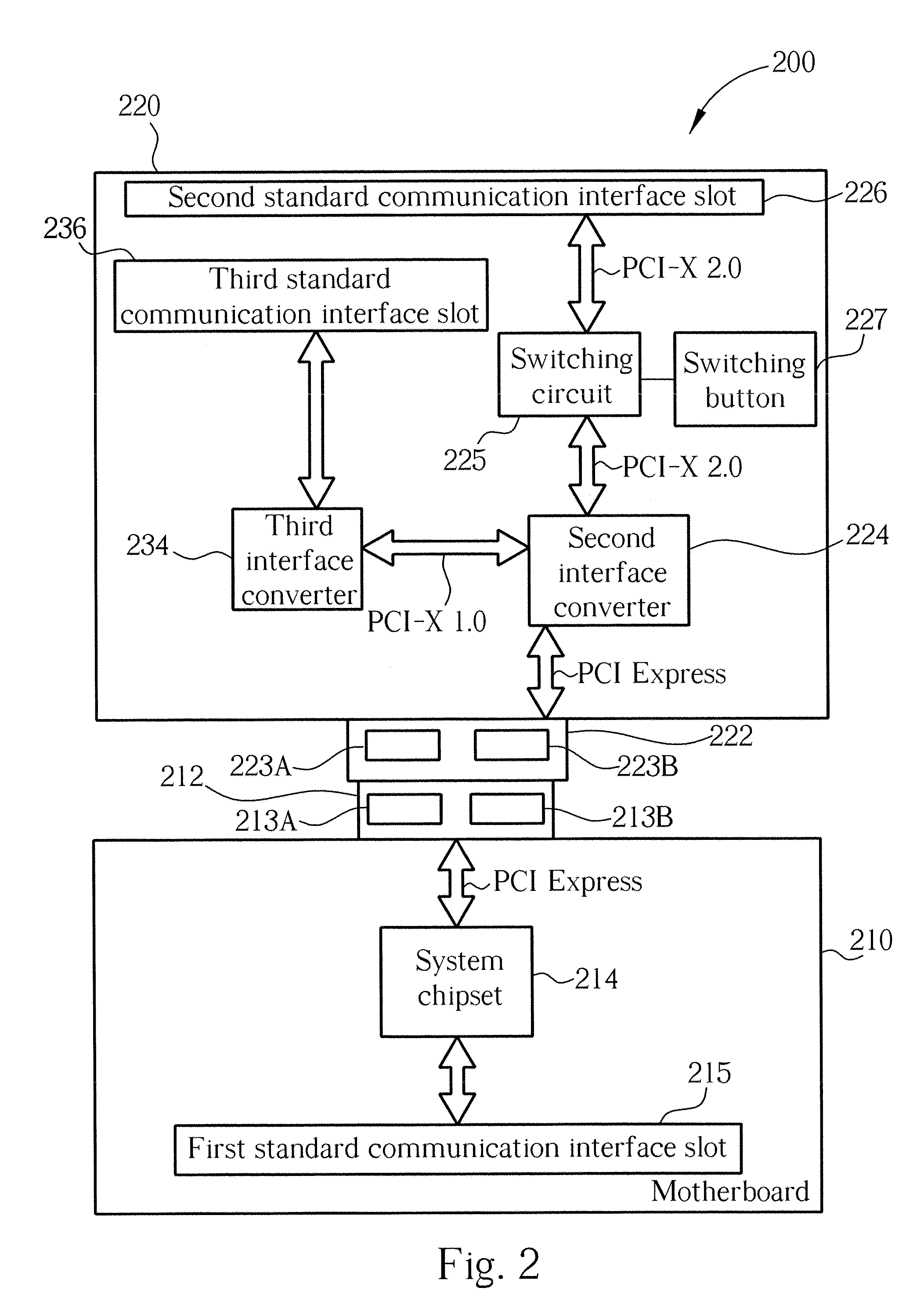

Extendable computer system

Active | US7174407B2 | Increase height, Function increase, Digital data processing details, Component plug-in assemblages, Scalable computing, Computerized system

The present invention provides an extendable computer system including a motherboard for maintaining the functionality of the computer system. The motherboard includes a system chipset and a first extending port electrically connected to the system chipset for extending functionality of the motherboard. The extendable computer system further includes an extended board capable of electrically connecting to the motherboard for extending the functionality of the computer system. The extended board includes a second extending port capable of electrically connecting to the first extending port for electrically connecting the extended board to the system chipset of the computer system, wherein the extending ports consist of a Golden Finger Slot and a matched Golden Finger, and the extended board and the motherboard are aligned in the same plane through the connection of the extending ports.

Owner:WISTRON CORP

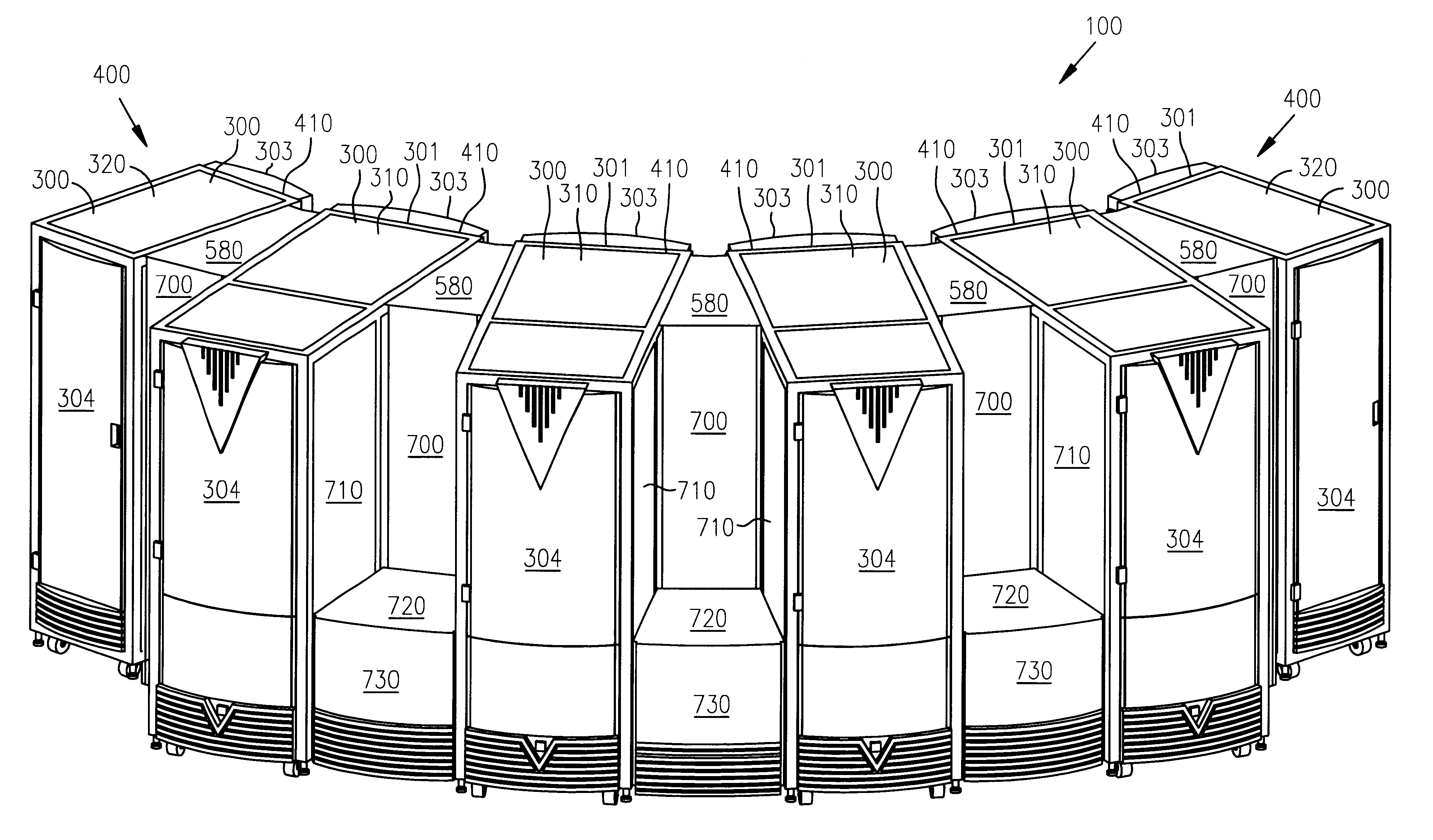

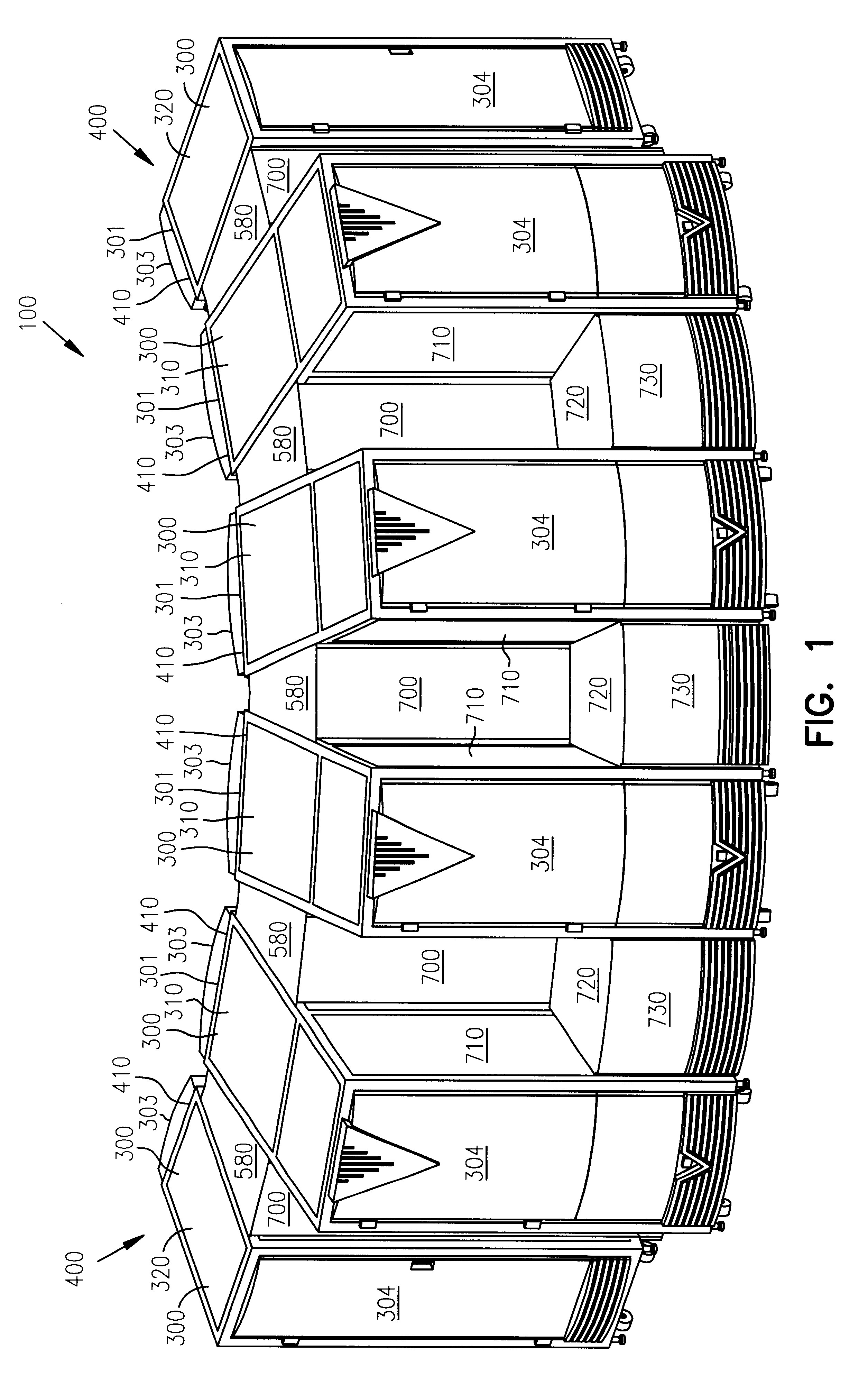

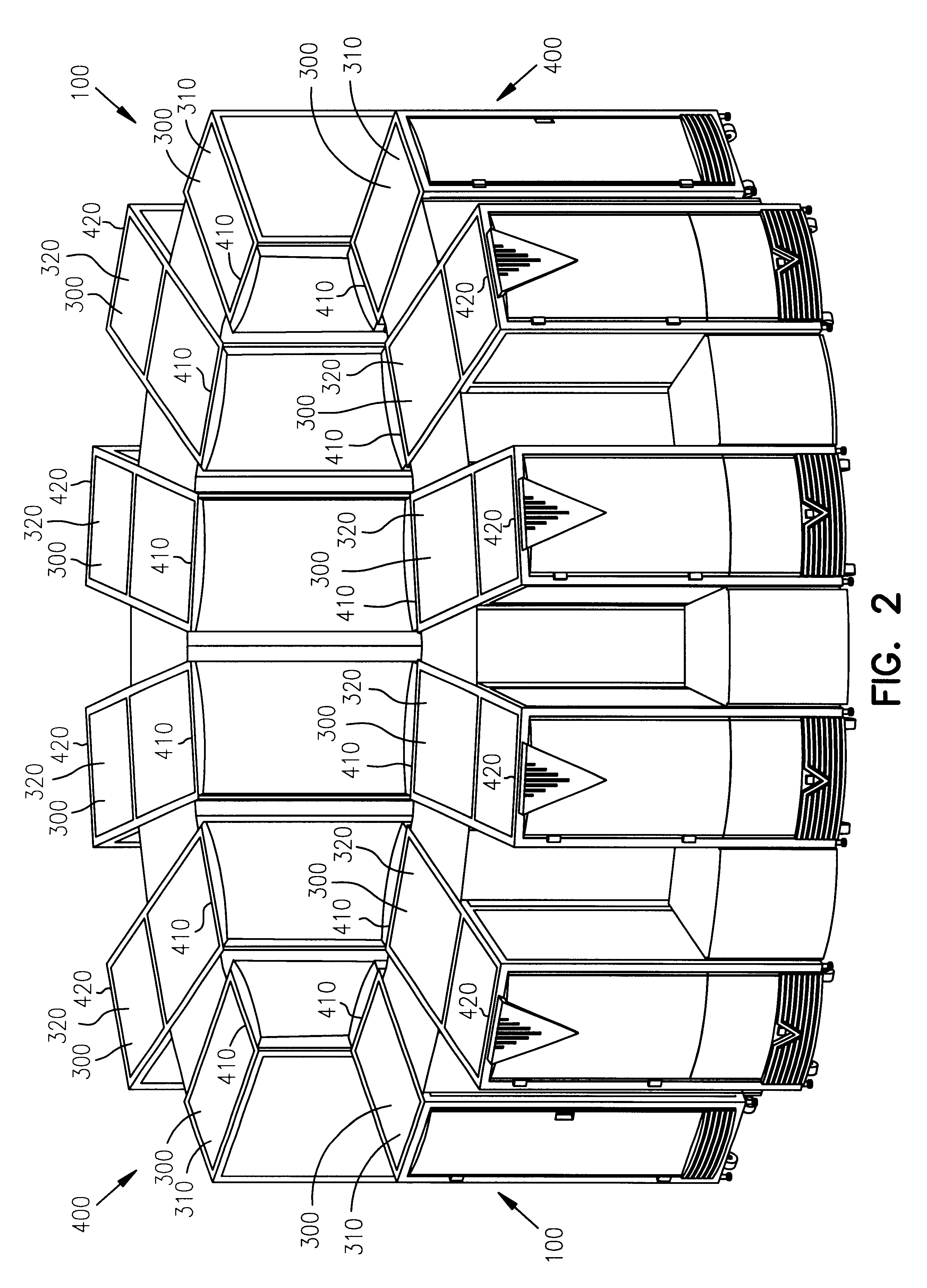

Radial computer system and method

Inactive | US6327143B1 | Increase spacing, Digital processing power distribution, Support structure mounting, Scalable computing, Computerized system

This invention relates to computer systems and hardware, and in particular to a radial computer system, hardware for building a radial computer system and a method for building a radial computer system. According to one aspect of the invention, a clustering concept for a scalable computer system includes computer elements aligned by a joiner into an arc shaped configuration. The radial configuration of the cluster and associated hardware provide a computer system that reduces high speed cable lengths, provides additional connection points for the increased number of cable connections, provides electromagnetic interference shielding, and provides additional space for cooling hardware. These features result in an improved scalable computer system.

Owner:CRAY

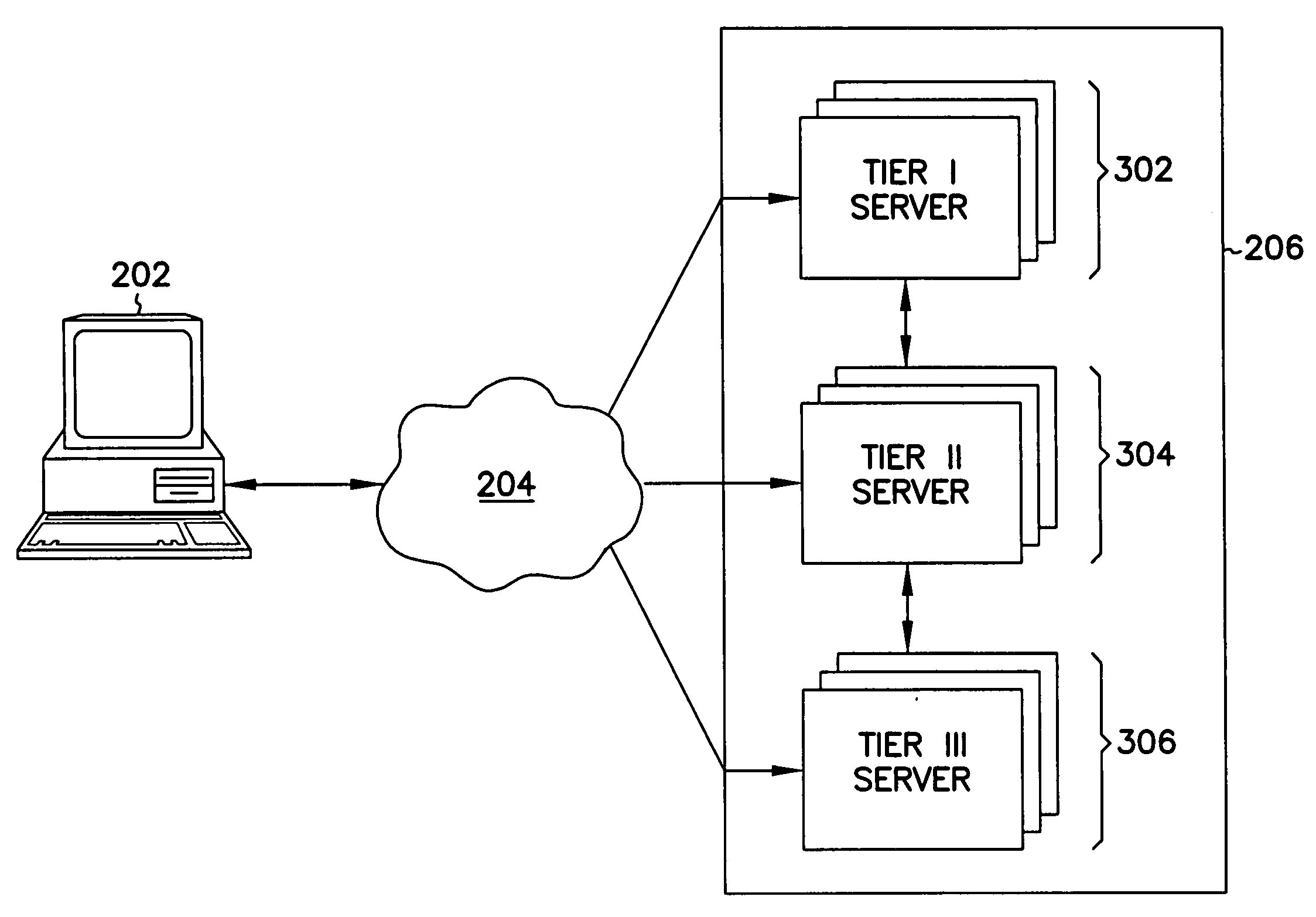

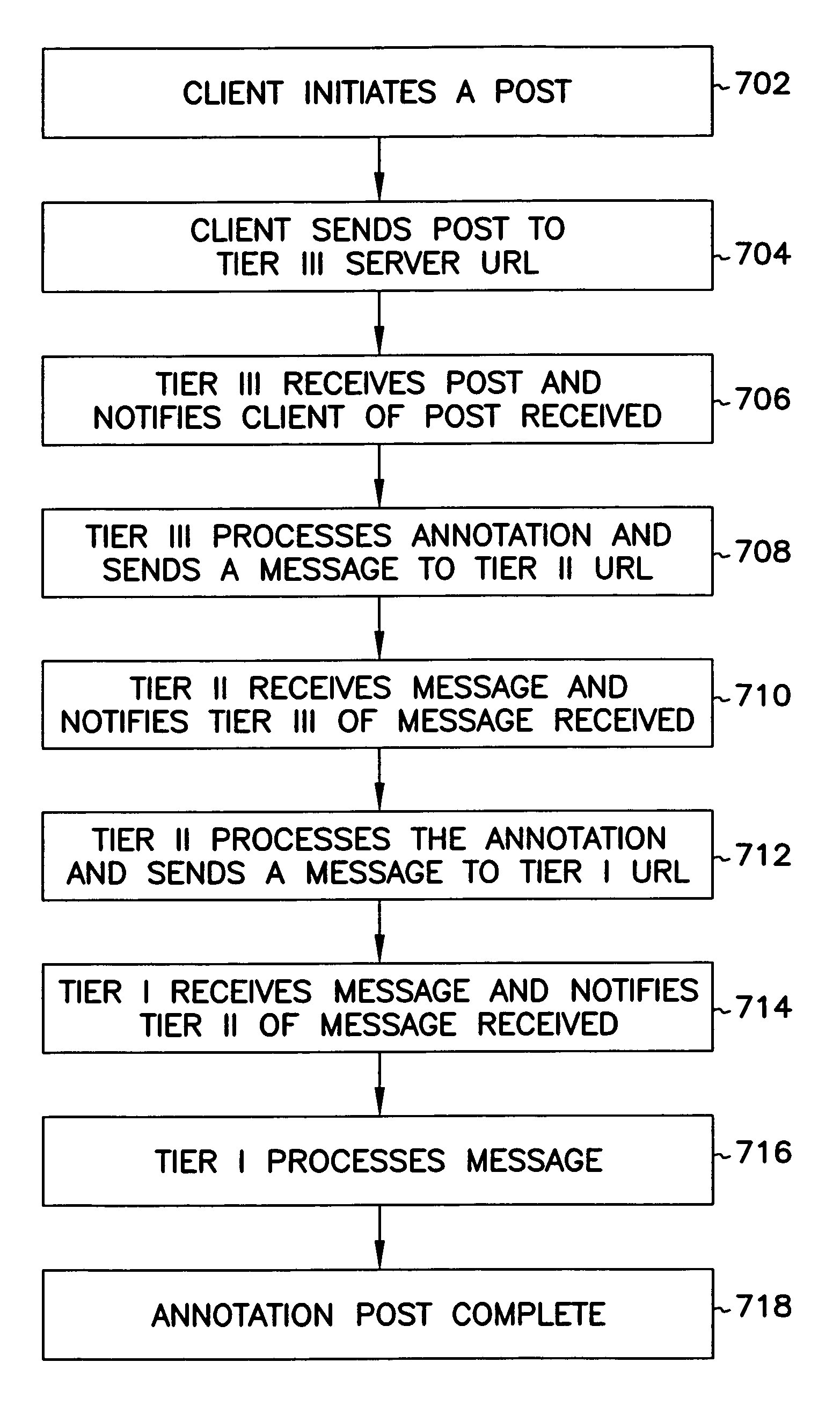

Associating annotations with a content source

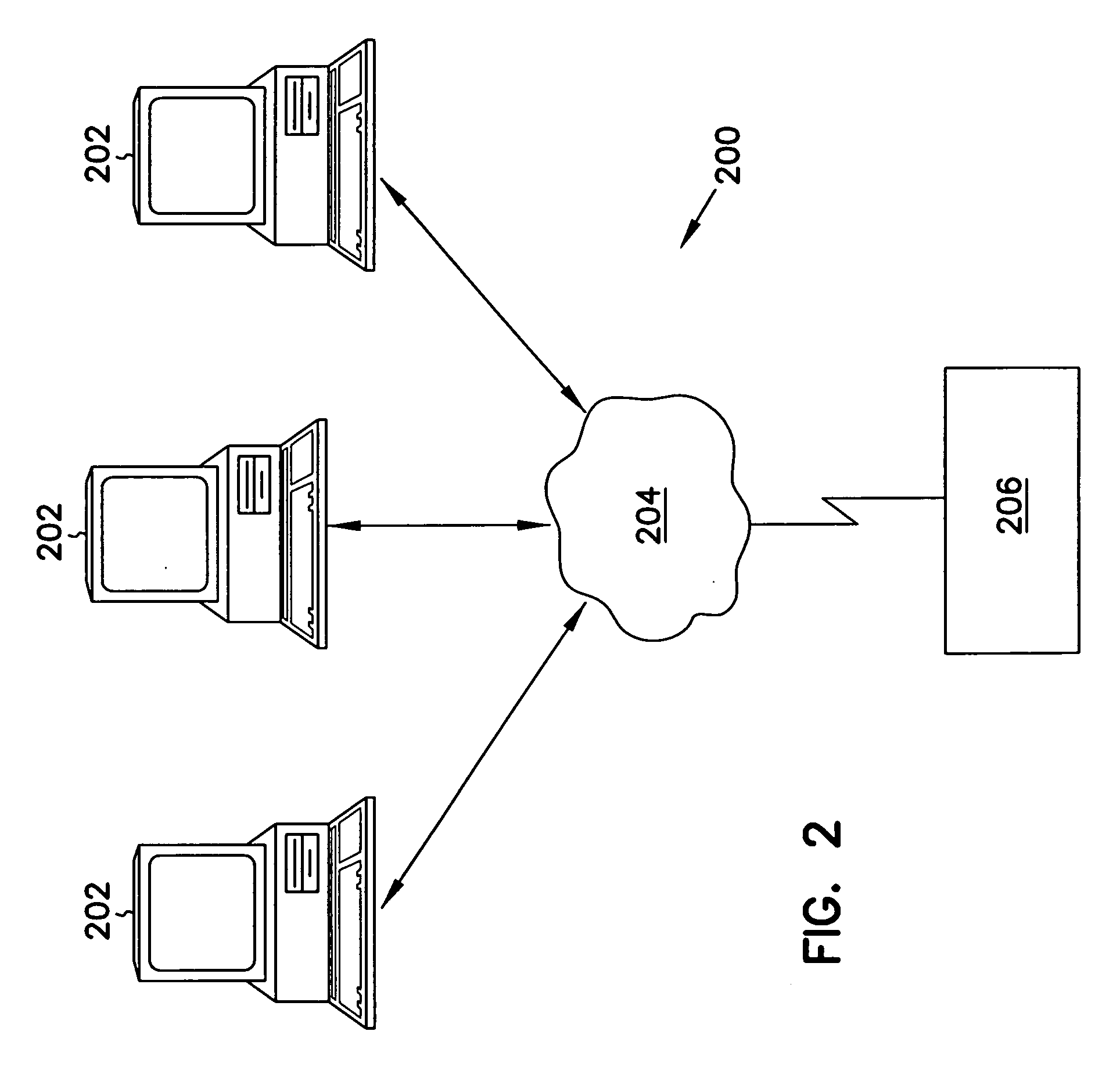

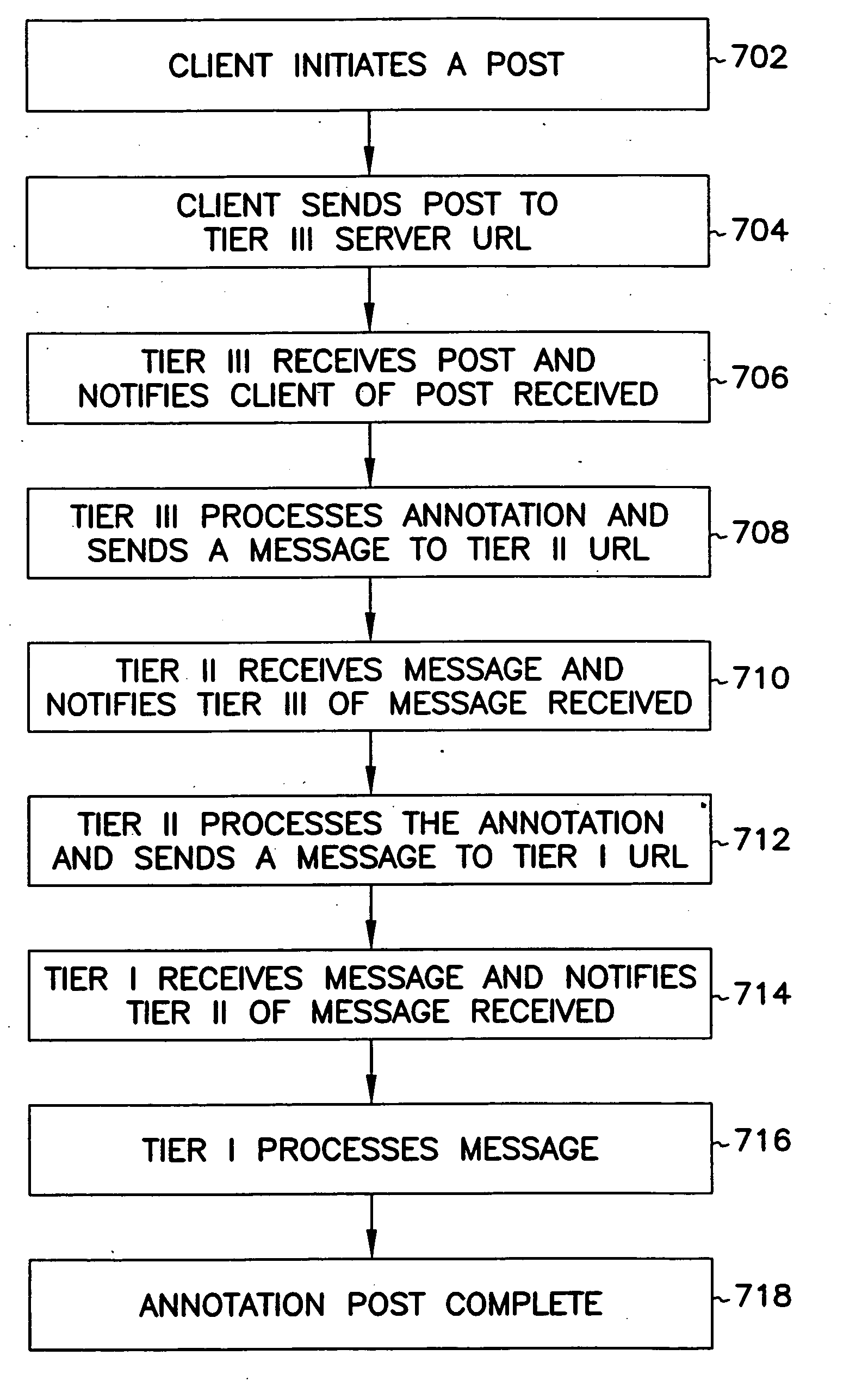

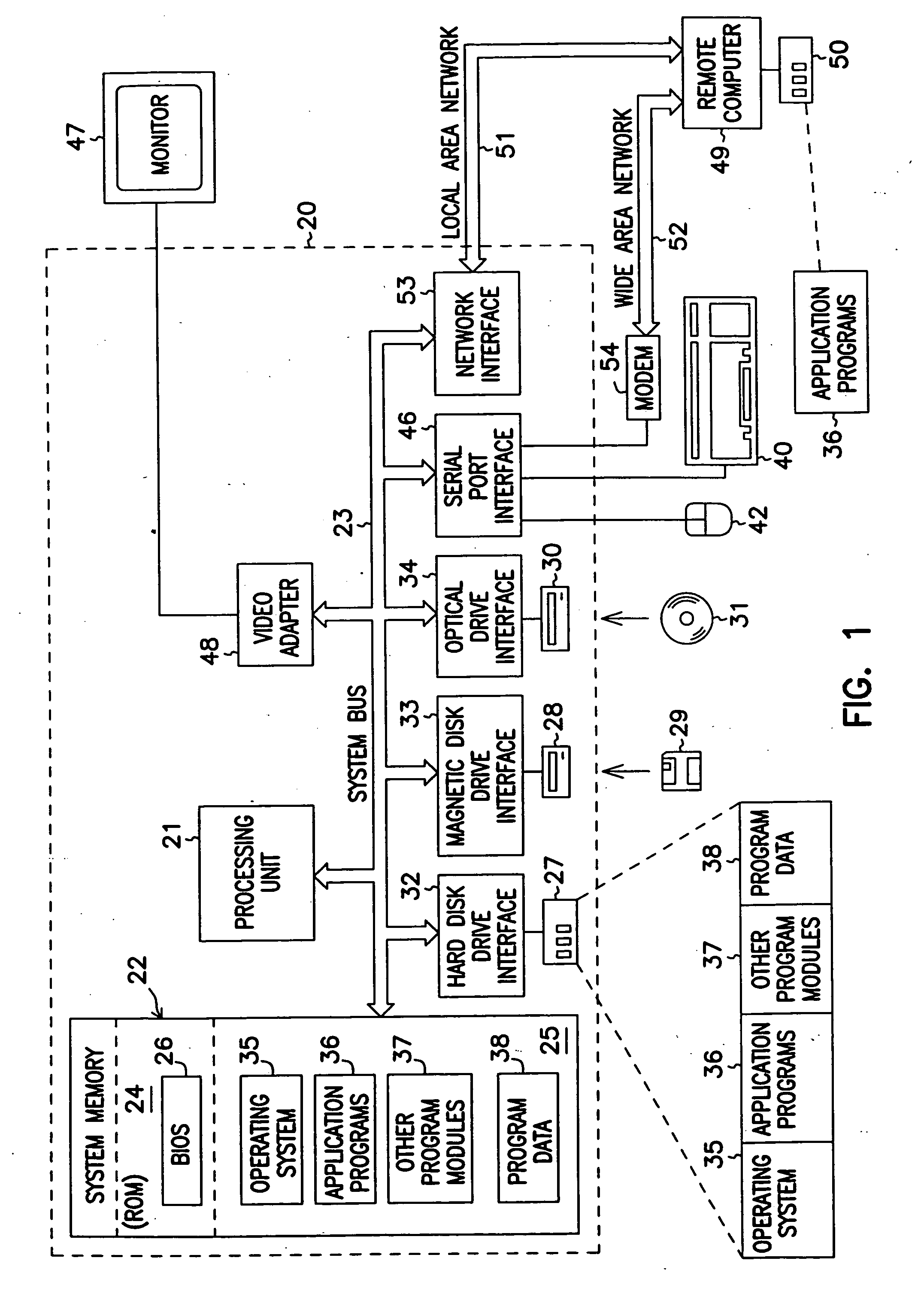

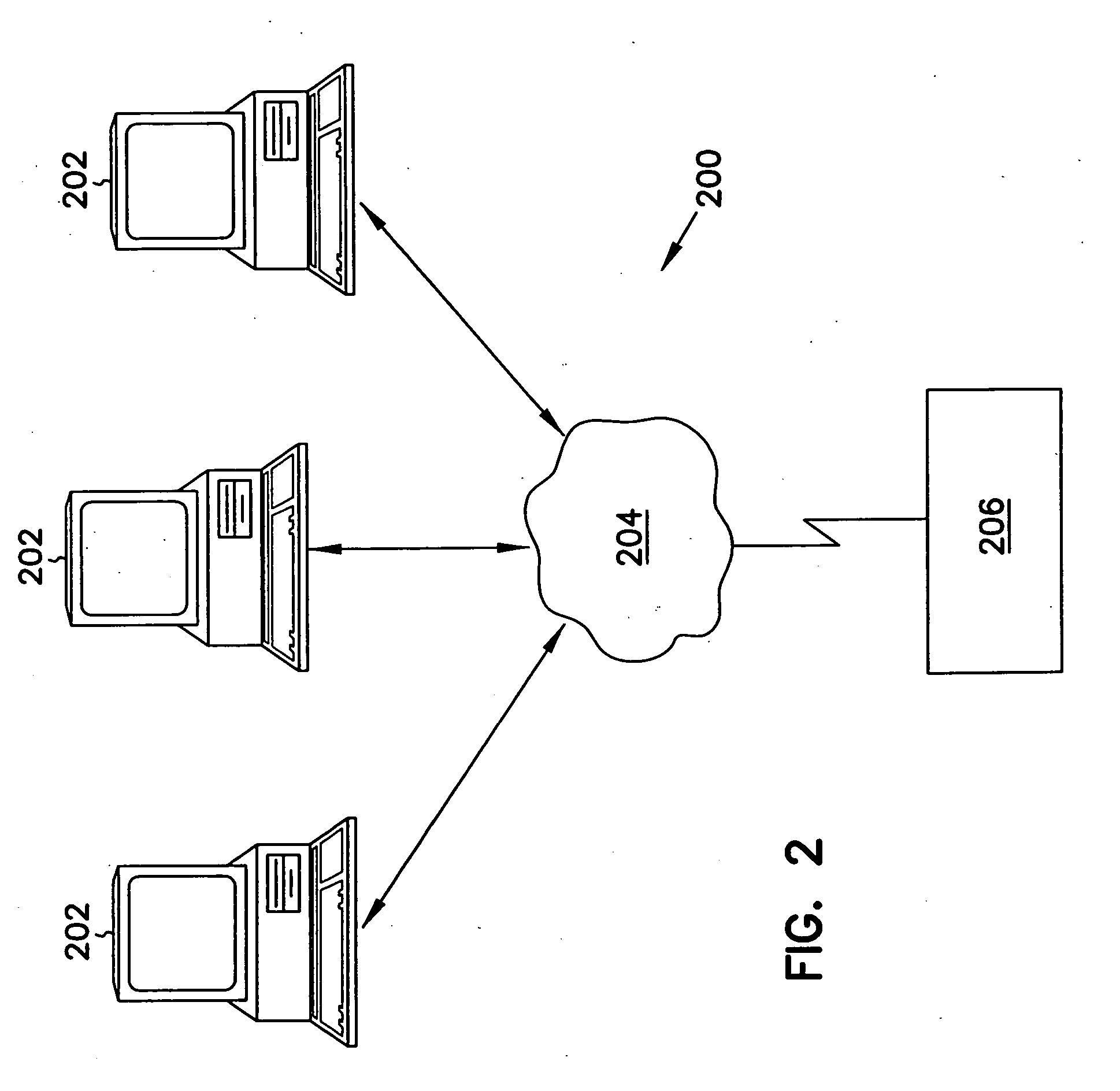

Inactive | US6973616B1 | Special data processing applications, Web data browsing optimisation, Scalable computing, Document Identifier

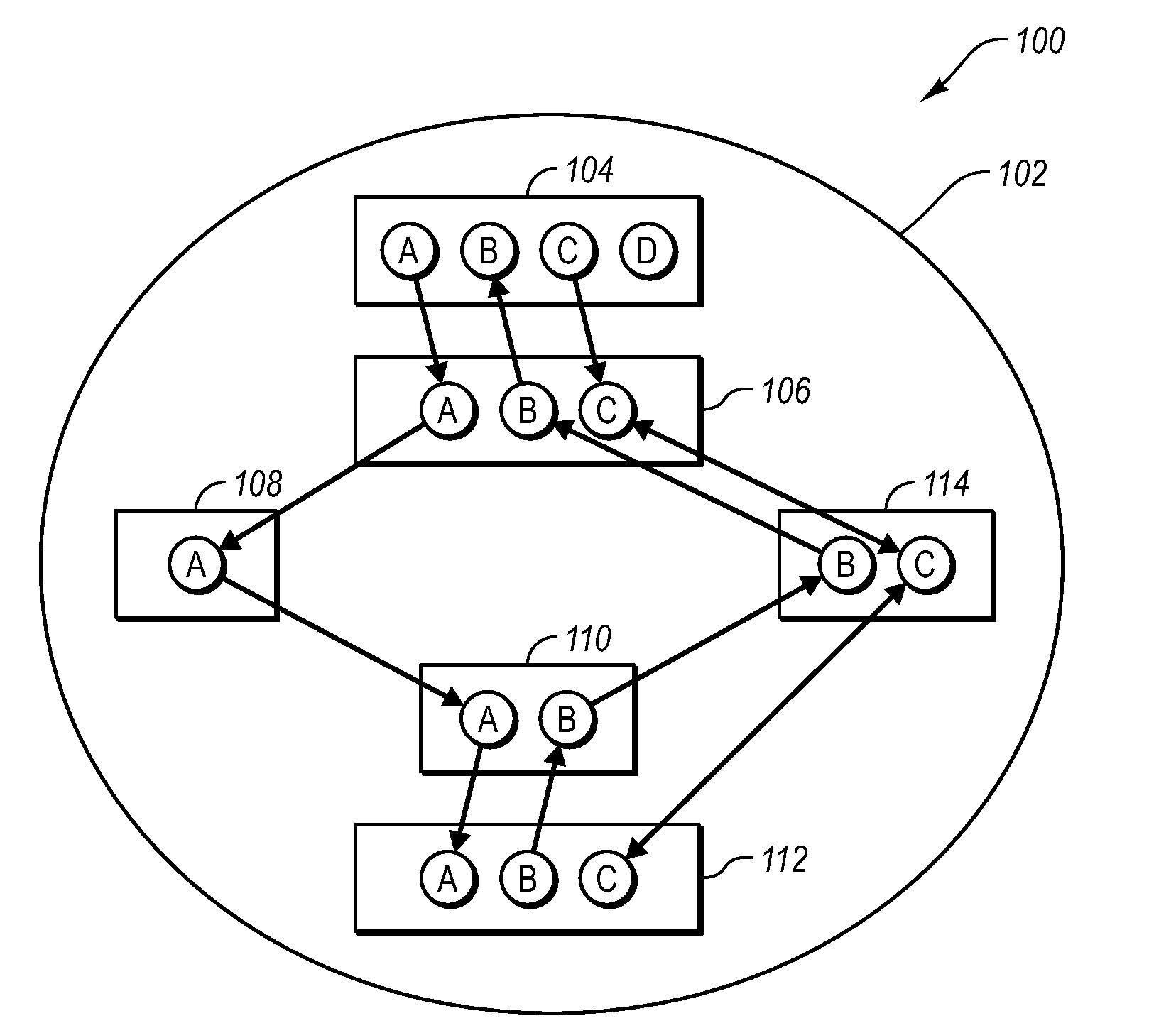

A computing system capable of associating annotations with millions of content sources is described. An annotation is any content associated with a document space. The document space is any document identified by a document identifier. The document space provides the context for the annotation. An annotation is represented as an object having a plurality of properties. The annotation is associated with a content source using a document identifier property. The document identifier property identifies the content source with which the annotation is associated. A scalable computing system for managing annotations responds to requests for presenting annotations to millions of documents a day. The computing system consists of multiple tiers of servers. A tier I server indicates whether there are annotations associated with a content source. A tier II server provides an index to the body of the annotations. A tier III server provides the body of the annotation.

Owner:MICROSOFT TECH LICENSING LLC
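
The three-tier request path described above can be sketched with in-memory dictionaries standing in for each server tier. The class names and storage layout are hypothetical; the point shown is the ordering: tier I answers the cheap "any annotations?" question, tier II maps a document identifier to annotation identifiers, and tier III returns bodies.

```python
class TierI:
    """Answers only: does this document have any annotations?"""
    def __init__(self, annotated_docs):
        self._annotated = set(annotated_docs)

    def has_annotations(self, doc_id):
        return doc_id in self._annotated


class TierII:
    """Index from document identifier to annotation identifiers."""
    def __init__(self, index):
        self._index = index

    def annotation_ids(self, doc_id):
        return self._index.get(doc_id, [])


class TierIII:
    """Stores annotation bodies keyed by annotation identifier."""
    def __init__(self, bodies):
        self._bodies = bodies

    def body(self, annotation_id):
        return self._bodies[annotation_id]


def fetch_annotations(doc_id, tier1, tier2, tier3):
    # Cheap existence check first; unannotated documents never reach tiers II/III.
    if not tier1.has_annotations(doc_id):
        return []
    return [tier3.body(a) for a in tier2.annotation_ids(doc_id)]
```

Because most documents presumably carry no annotations, a design like this lets the first tier absorb the bulk of requests; that motivation is an inference, not a claim from the abstract.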

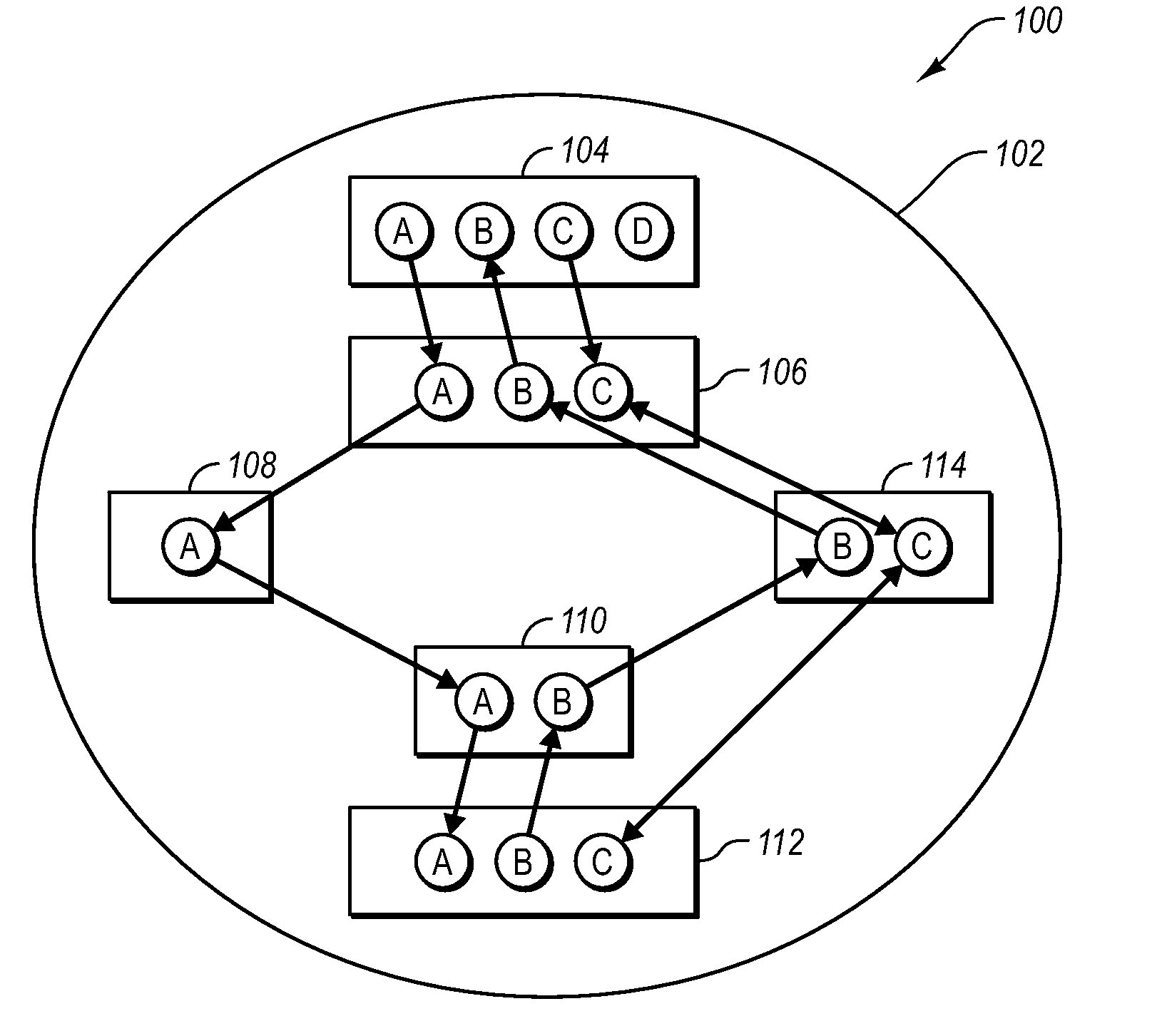

Scalable computing system for managing annotations

Inactive | US20060212795A1 | Digital computer details, Natural language data processing, Scalable computing, Documentation

A scalable computing system for managing annotations is capable of handling requests for annotations to millions of documents a day. The computing system consists of multiple tiers of servers. A tier I server indicates whether there are annotations associated with a content source. A tier II server indexes the annotations. A tier III server stores the body of the annotation.

Owner:MICROSOFT TECH LICENSING LLC +1

Scalable computing system for managing annotations

Inactive | US7051274B1 | Digital computer details, Natural language data processing, Scalable computing, Documentation

A scalable computing system for managing annotations is capable of handling requests for annotations to millions of documents a day. The computing system consists of multiple tiers of servers. A tier I server indicates whether there are annotations associated with a content source. A tier II server indexes the annotations. A tier III server stores the body of the annotation.

Owner:MICROSOFT TECH LICENSING LLC

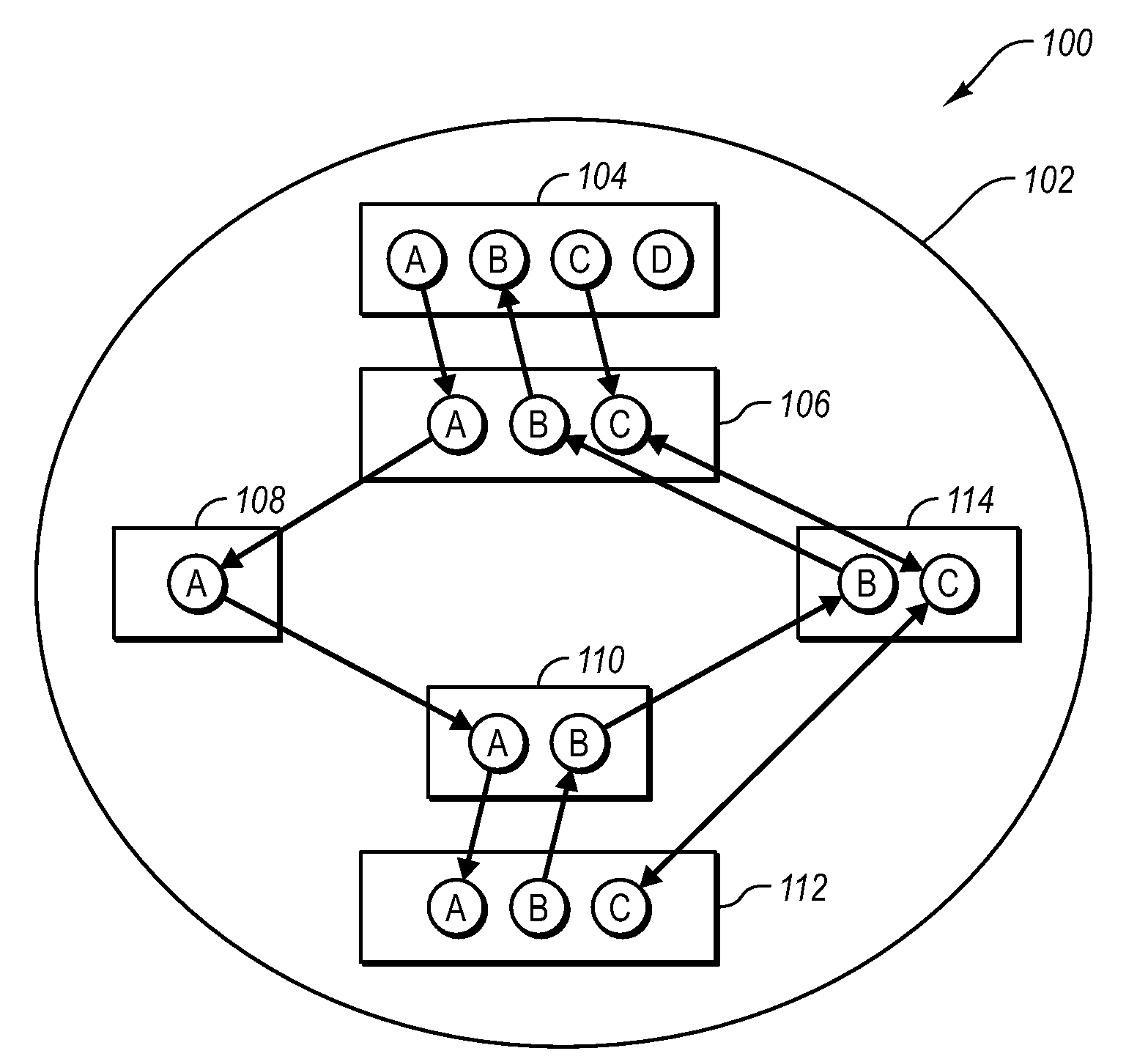

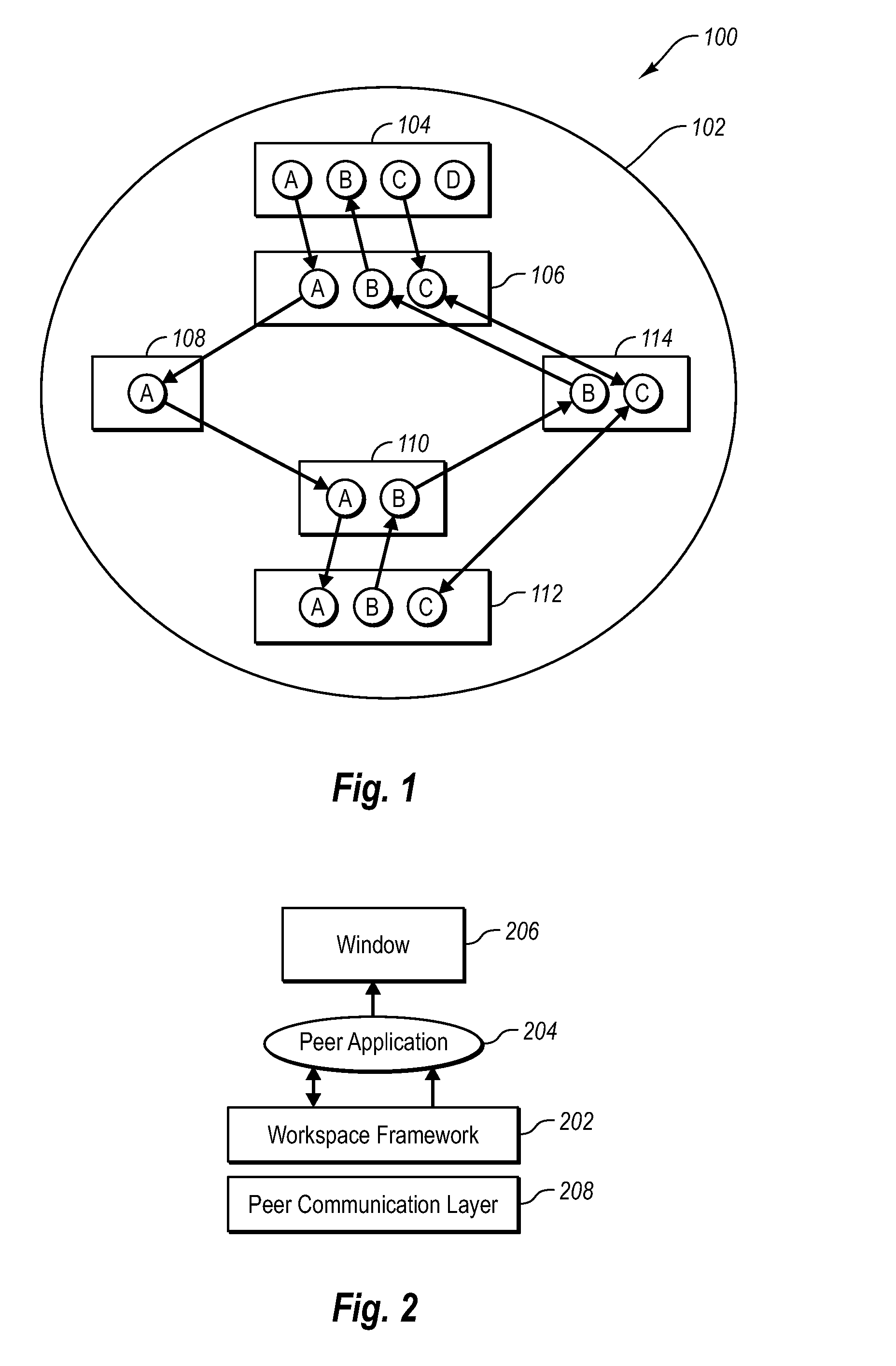

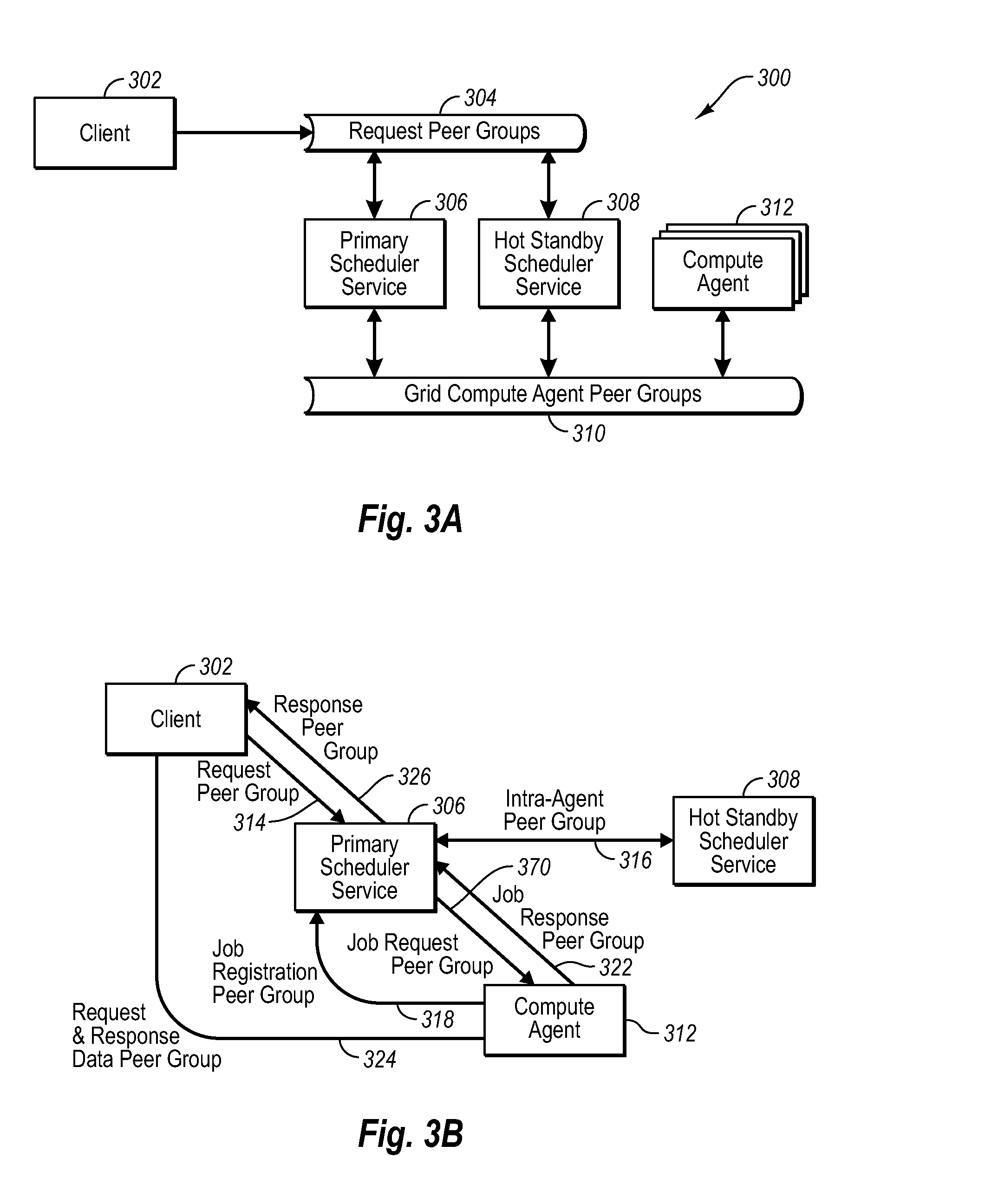

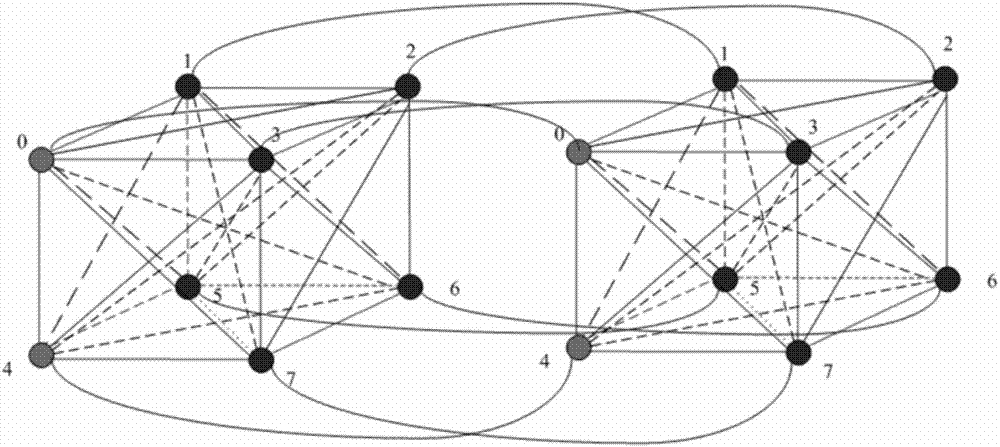

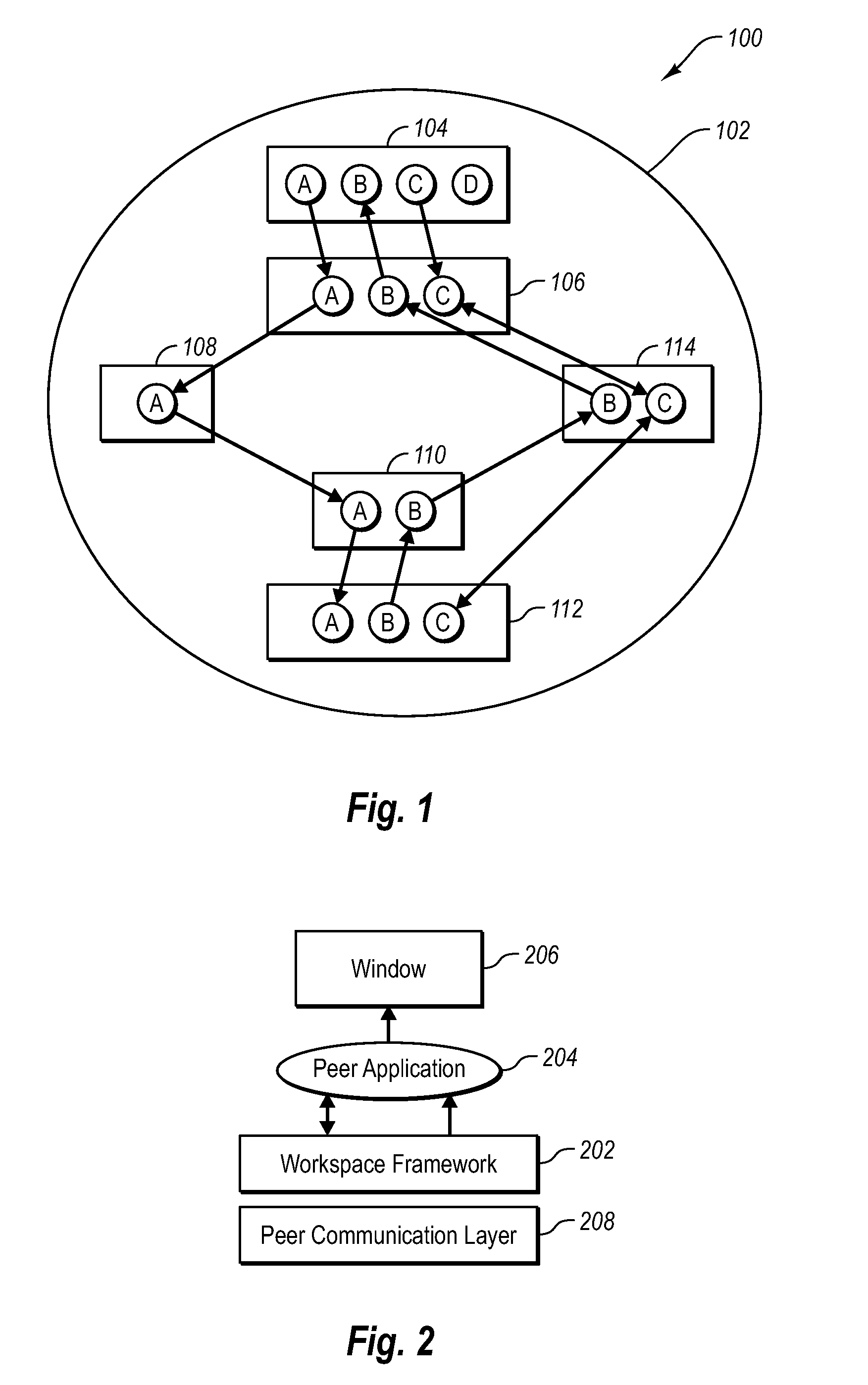

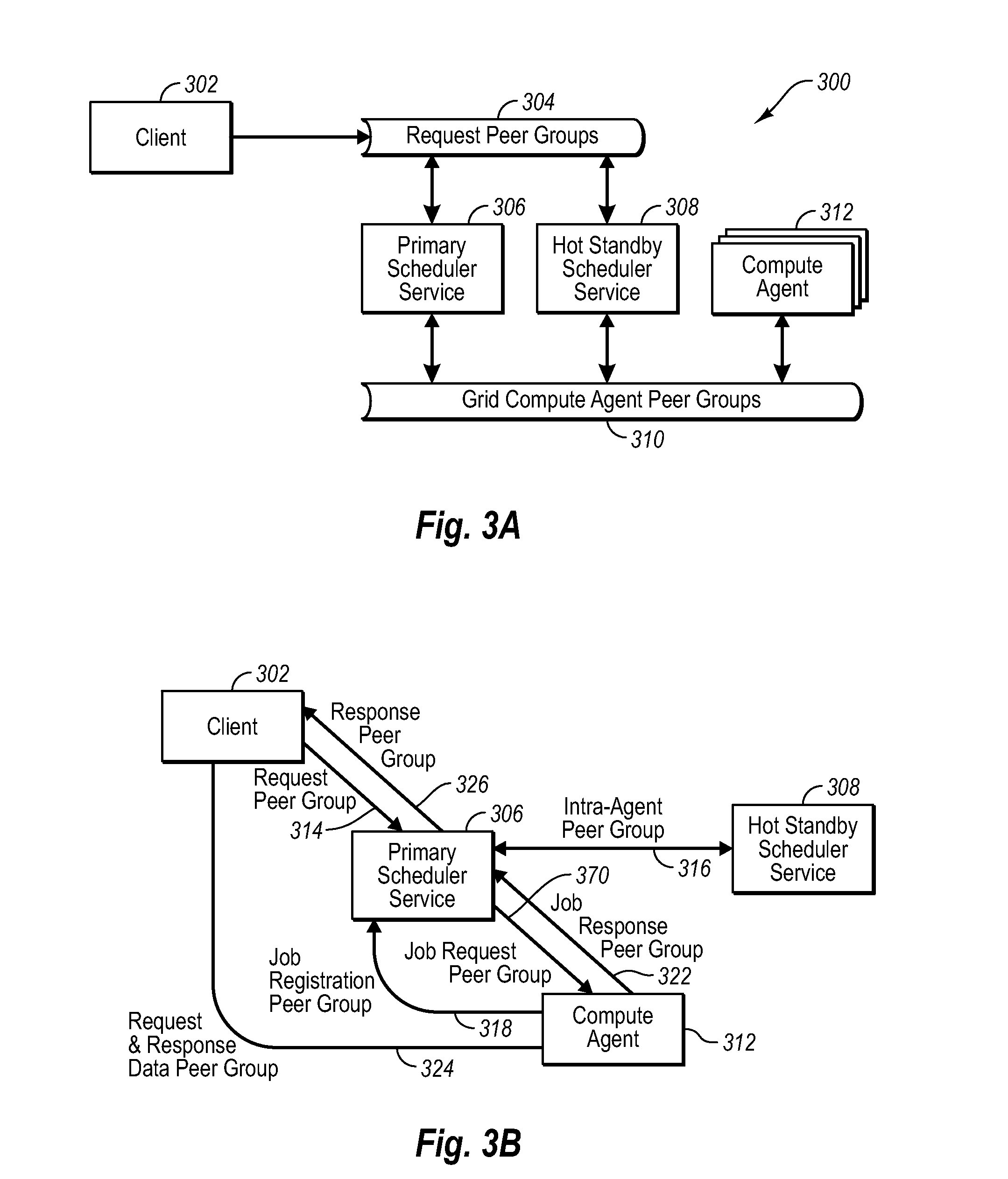

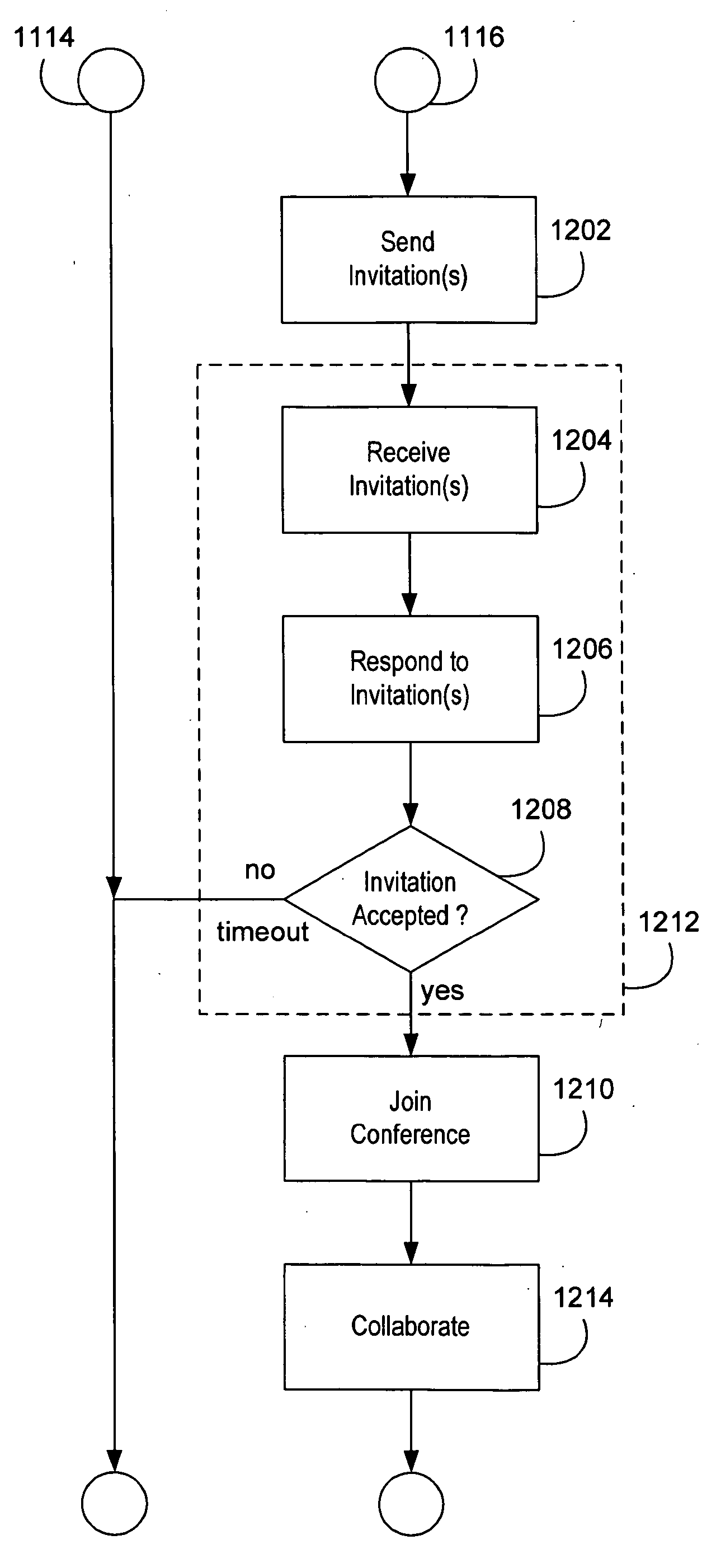

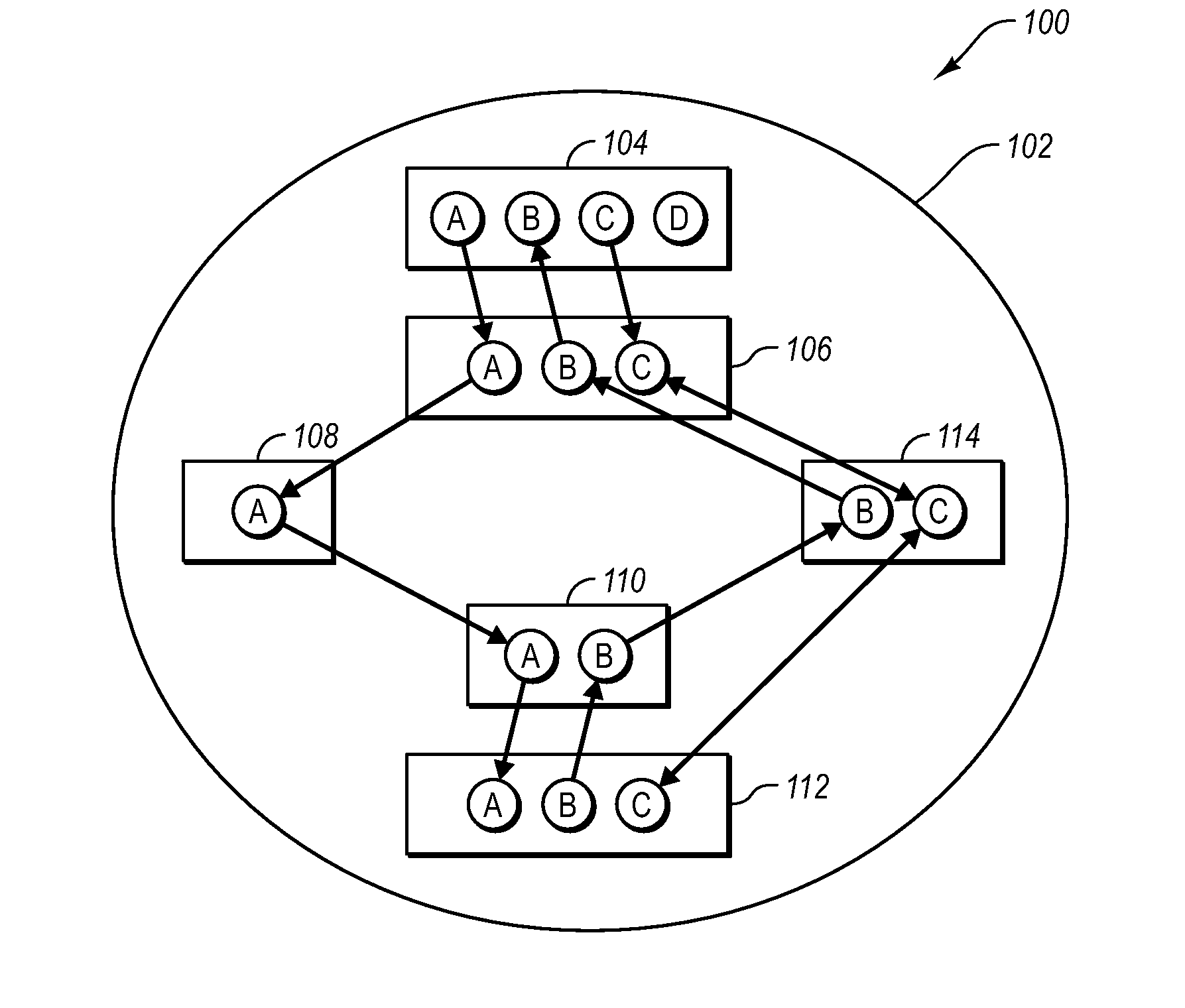

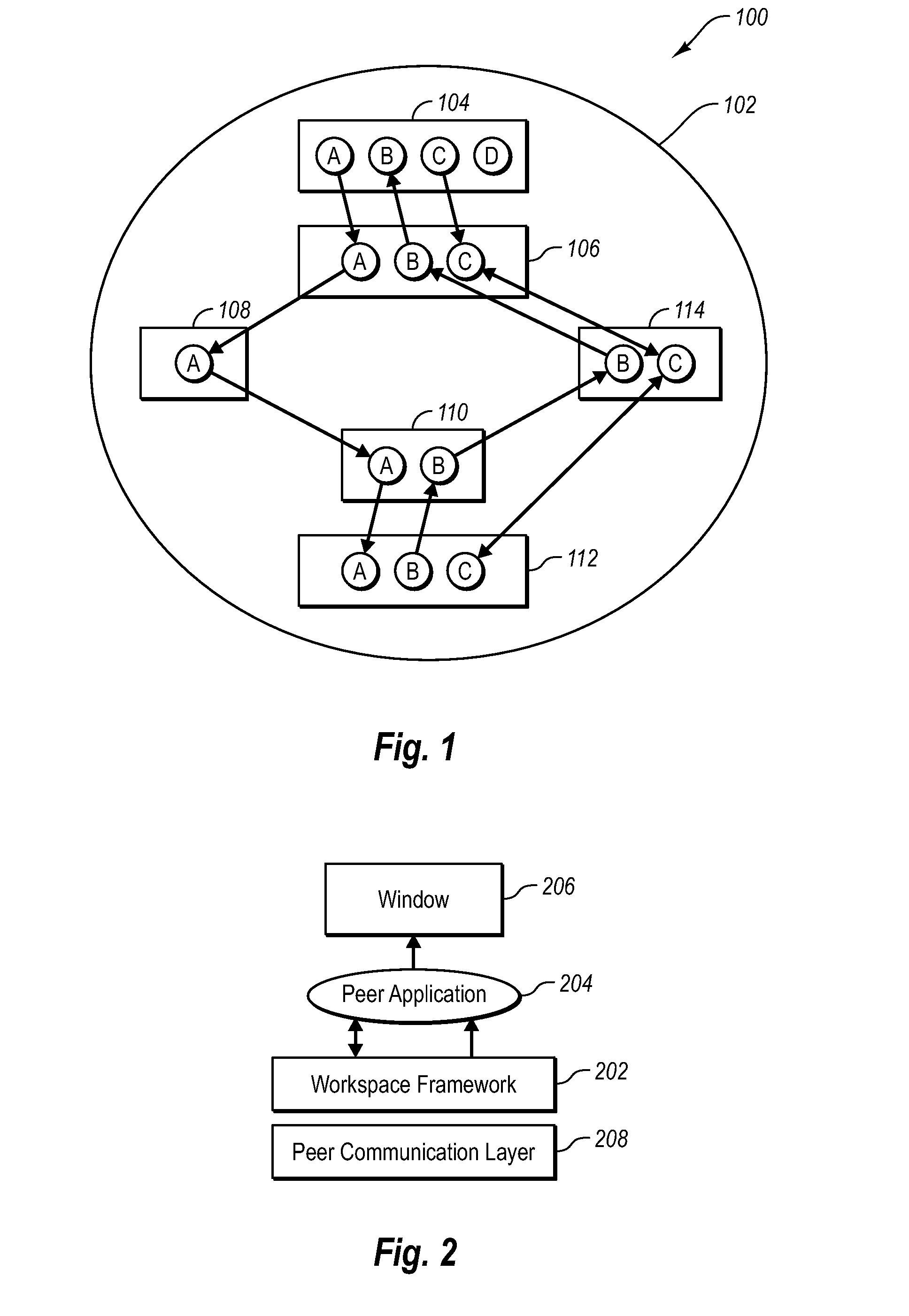

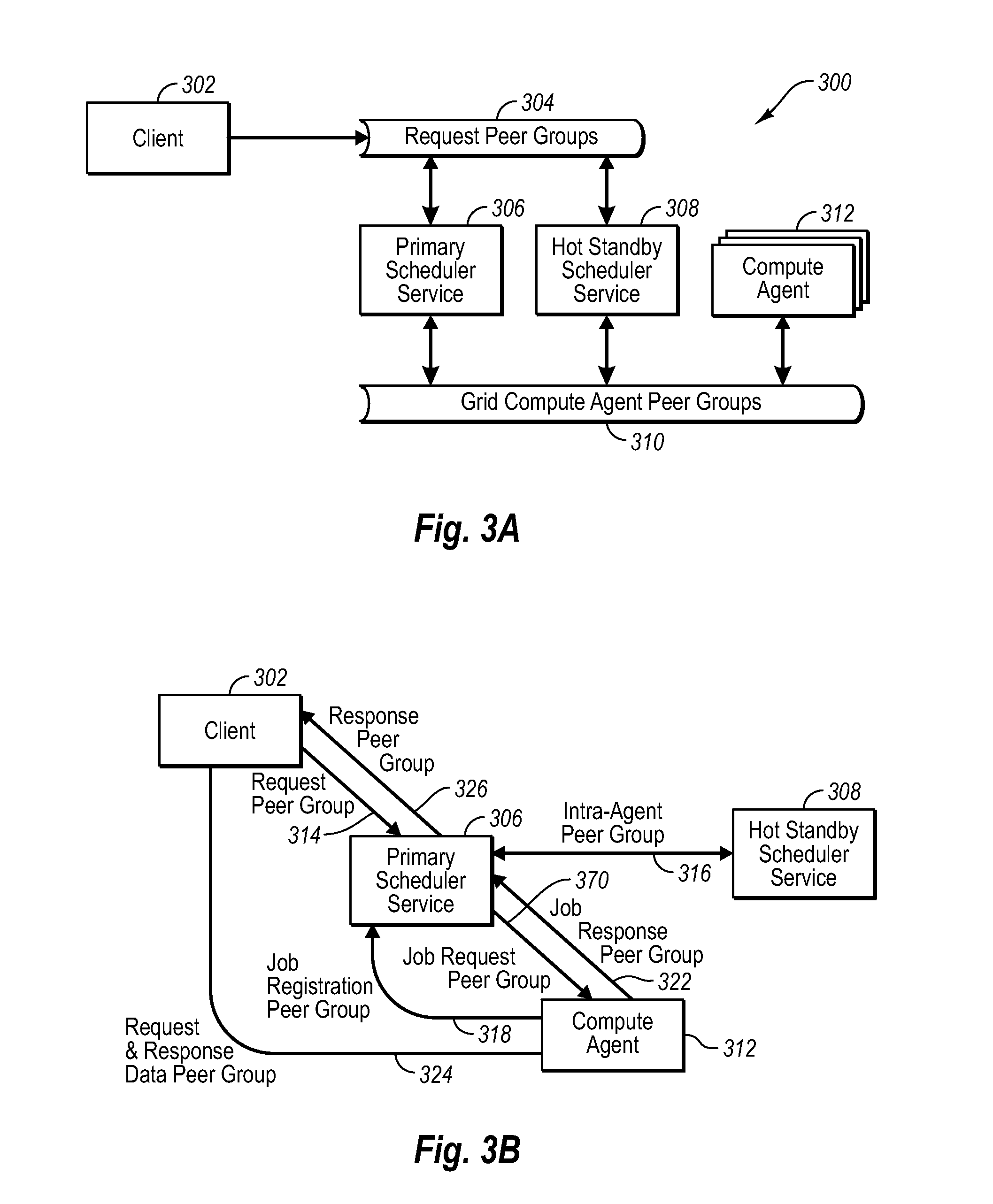

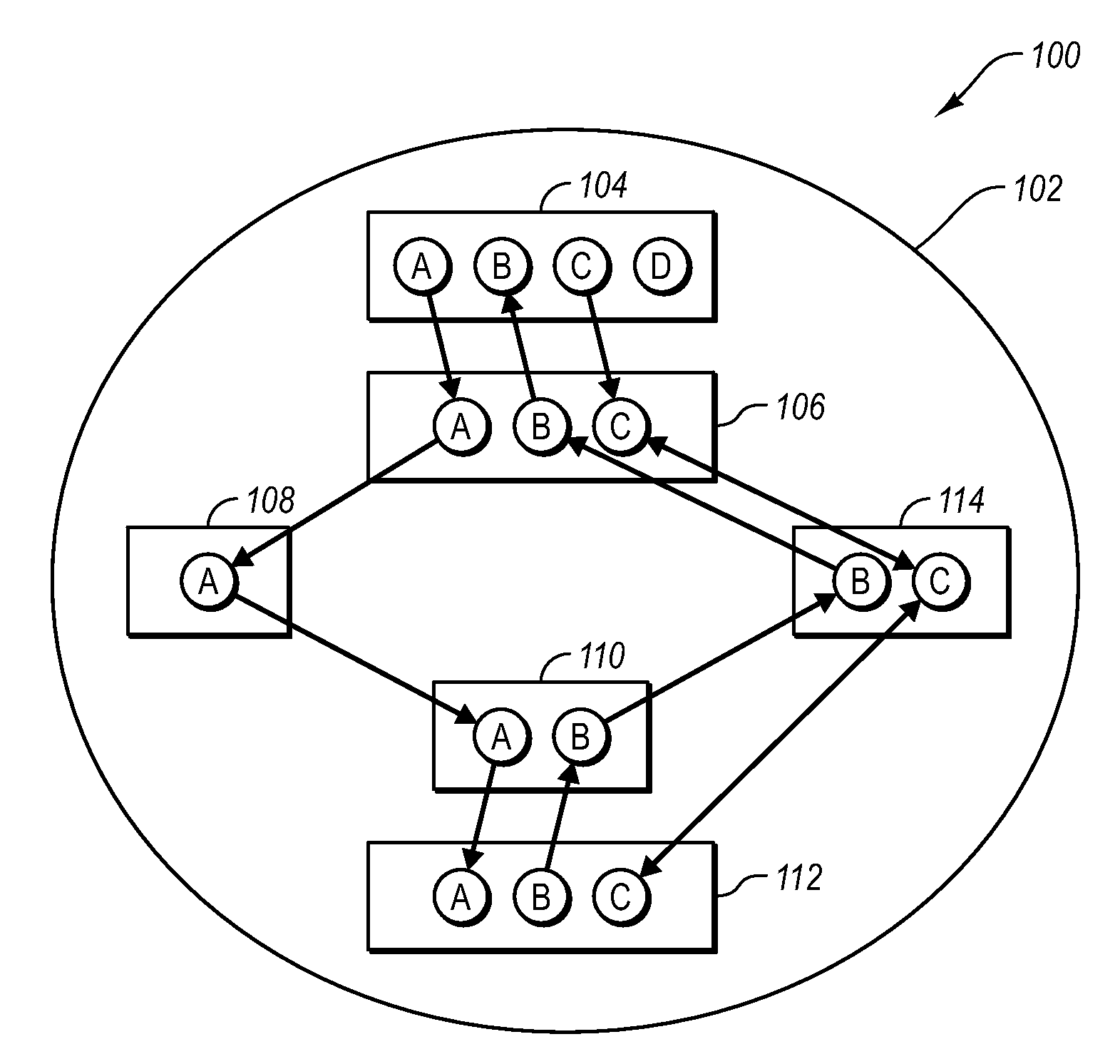

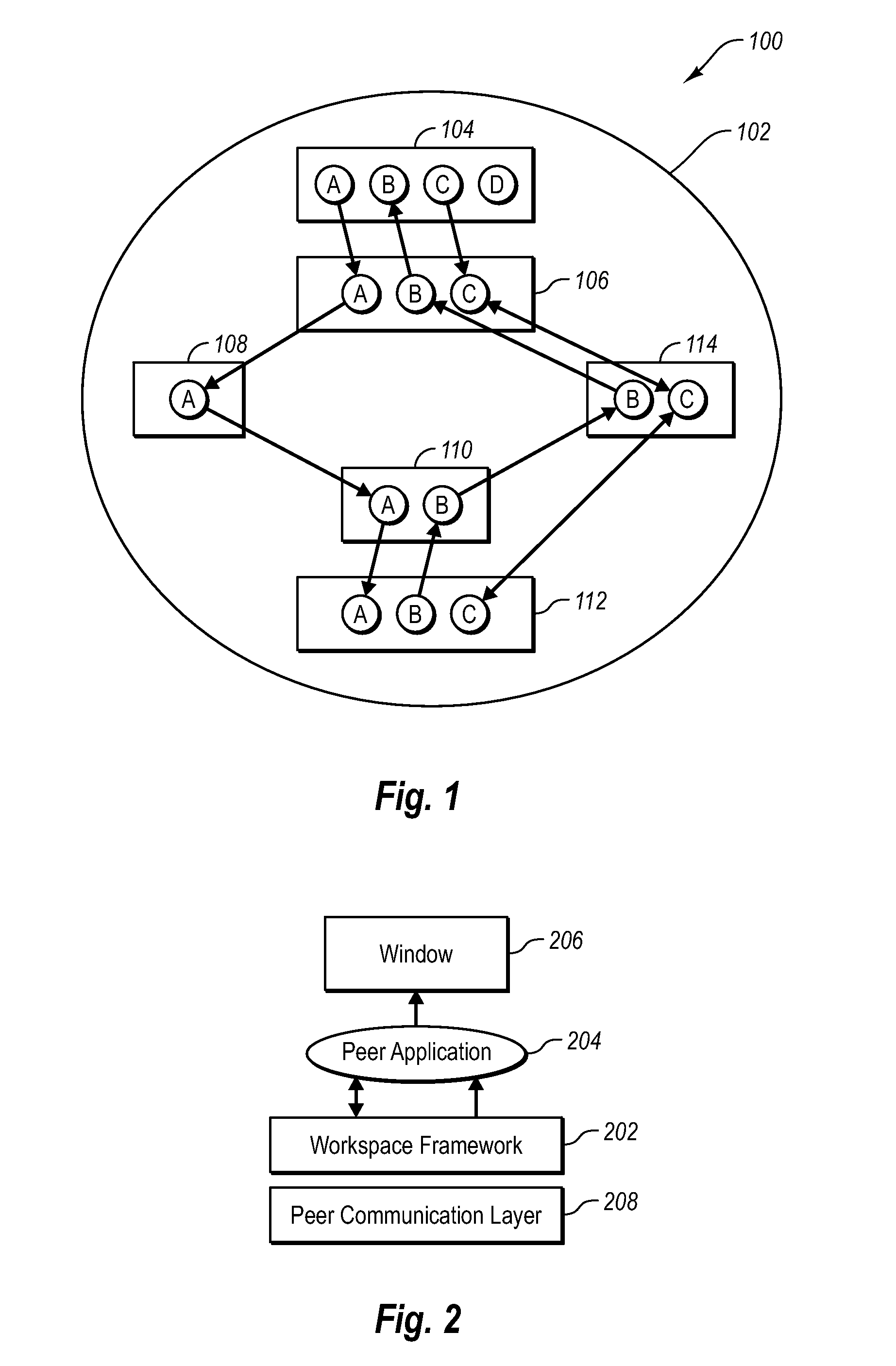

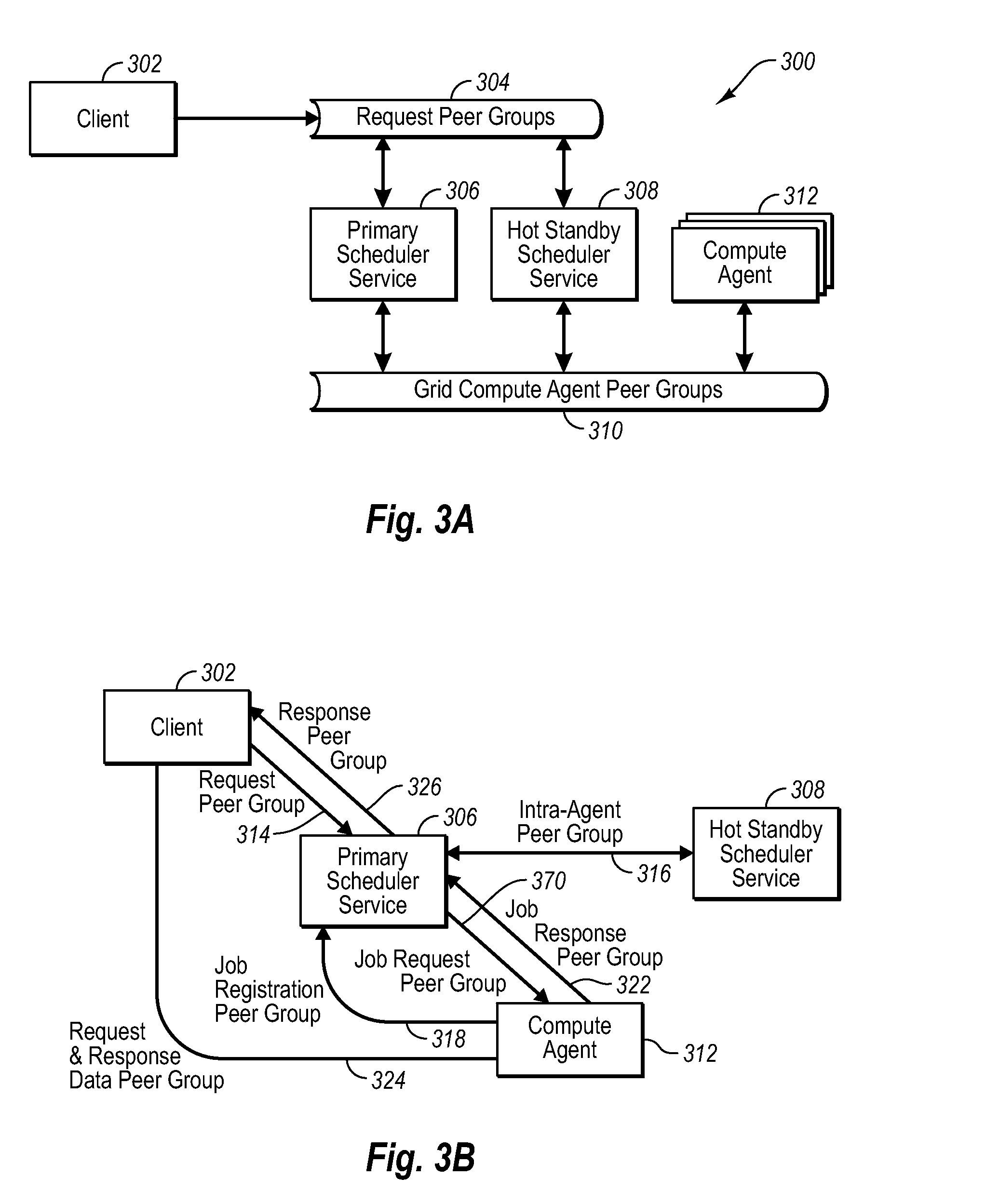

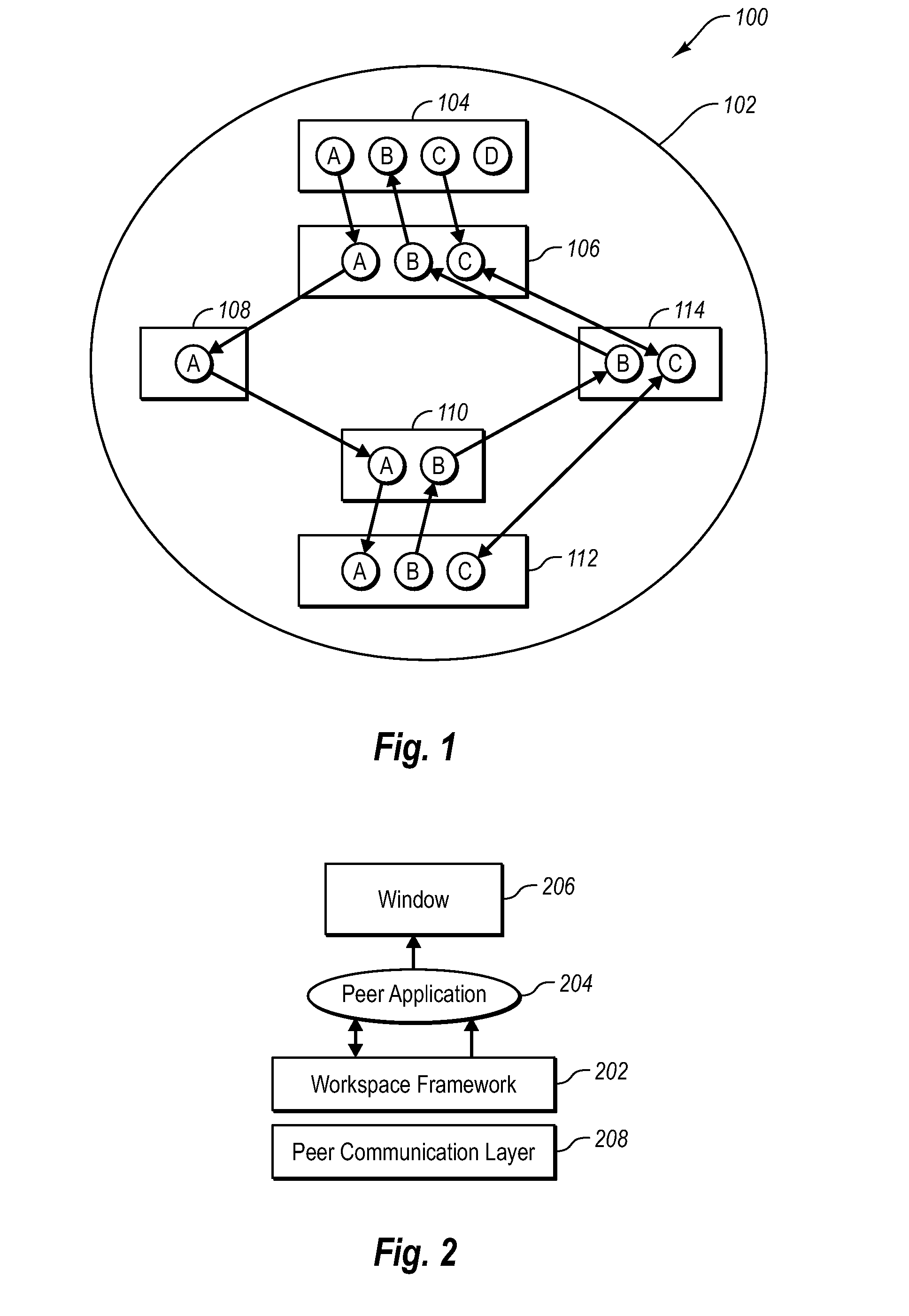

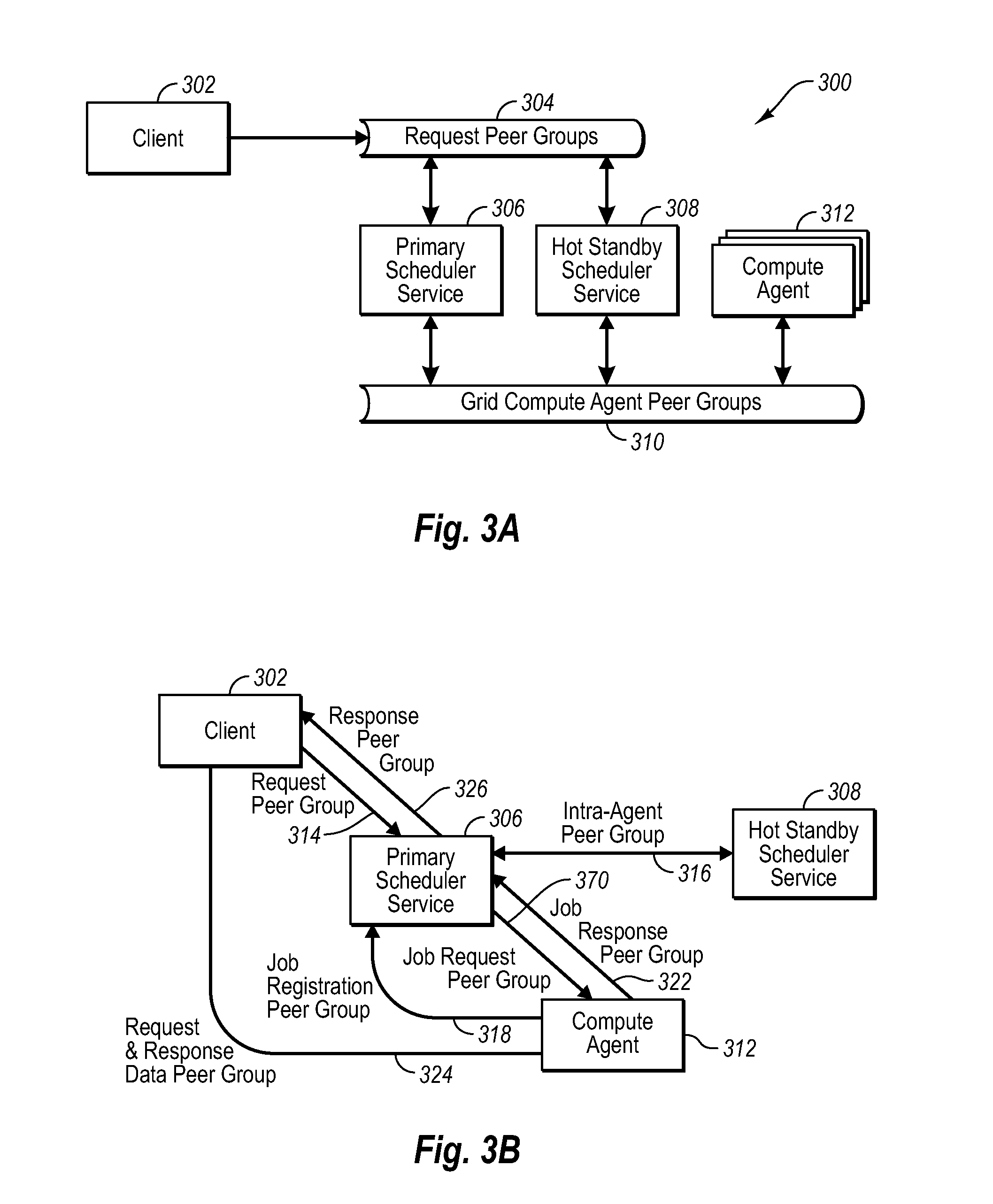

Multiple peer groups for efficient scalable computing

Inactive | US20080080530A1 | Time-division multiplex, Data switching by path configuration, Scalable computing, Group operation

Owner:MICROSOFT TECH LICENSING LLC

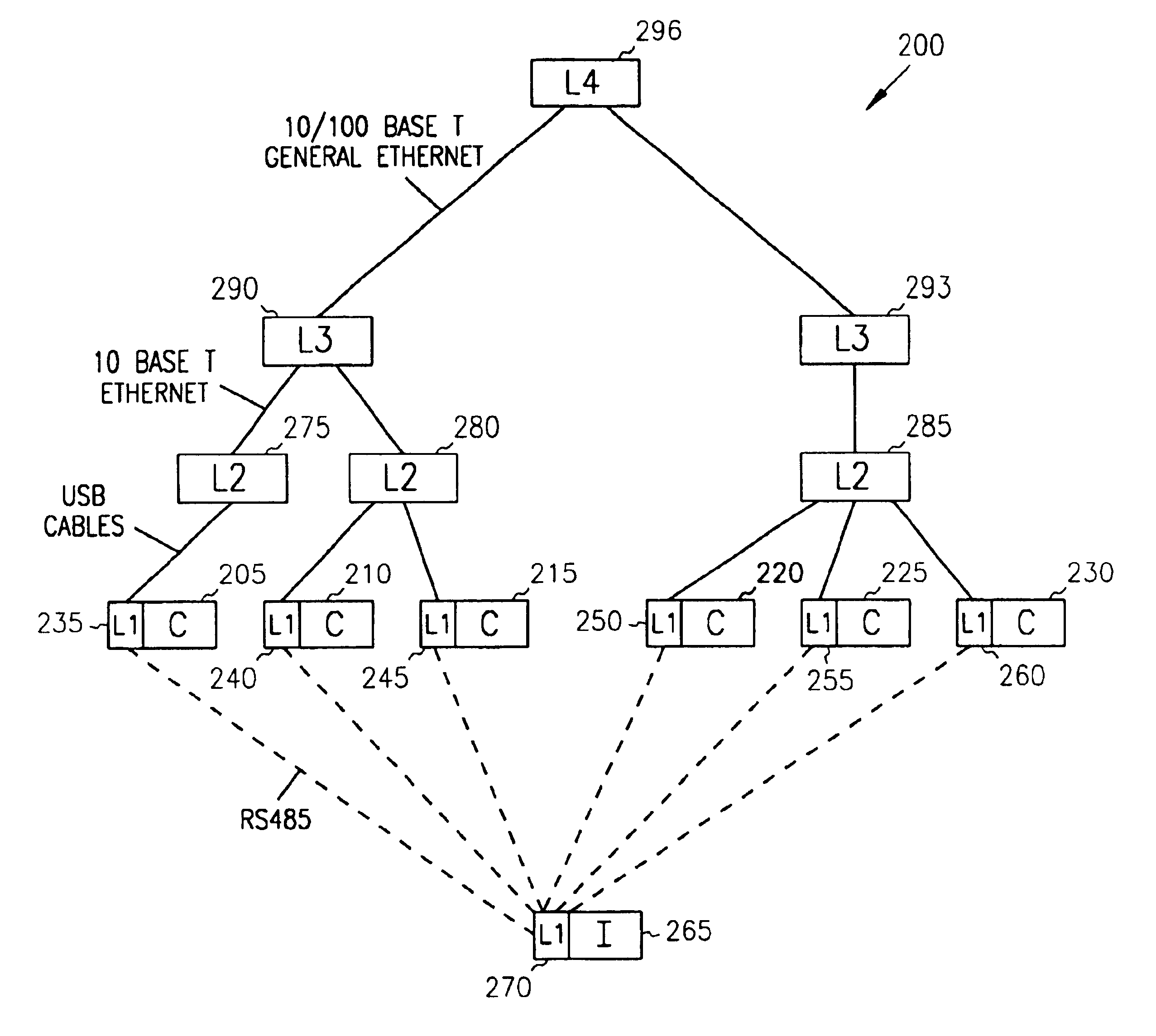

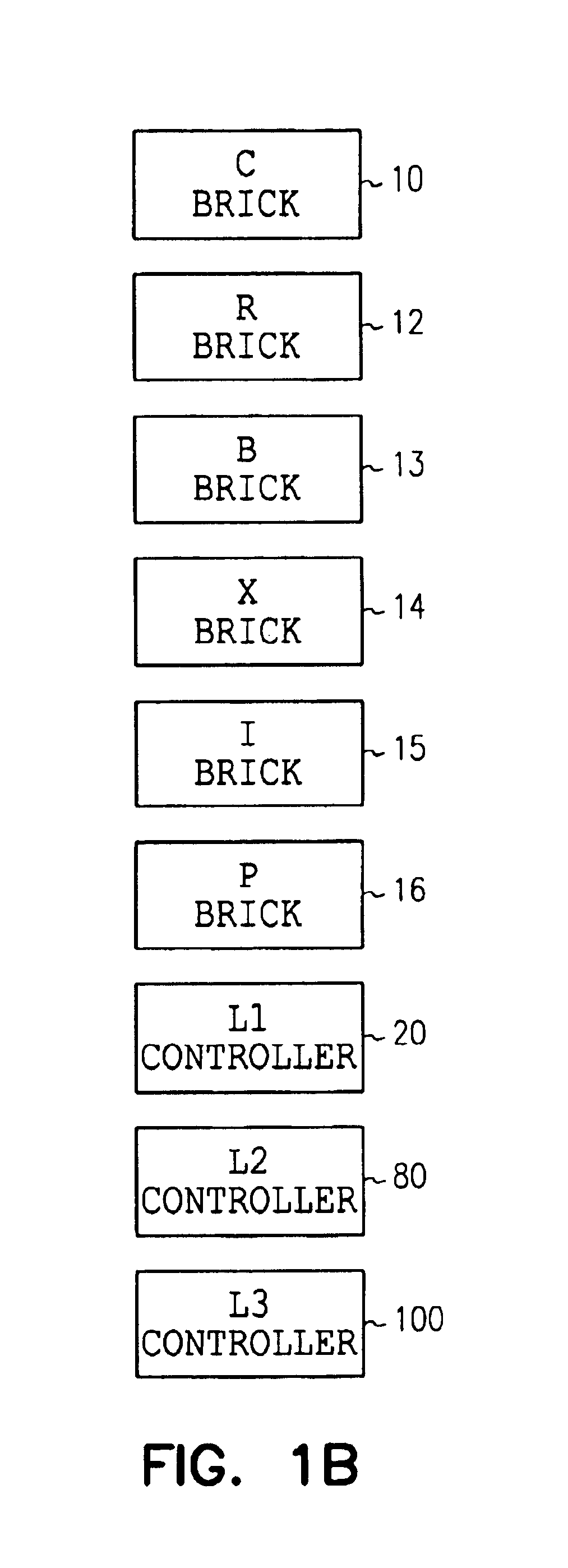

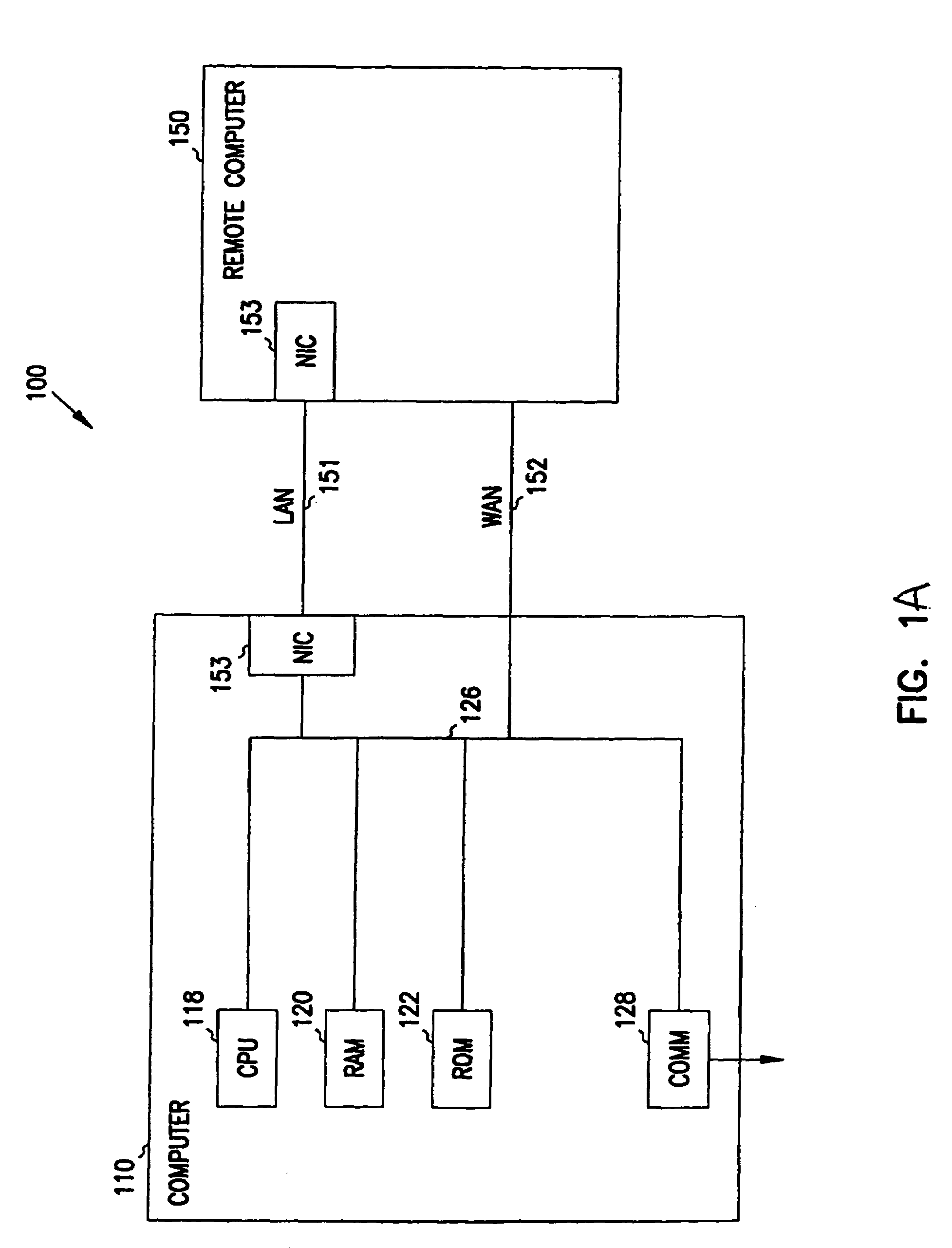

System and method for a hierarchical system management architecture of a highly scalable computing system

Inactive | US6845410B1 | Facilitate error free intercabling, Provide capability, Special data processing applications, Specific program execution arrangements, Scalable computing, Systems management

A modular computer system includes at least two processing functional modules, each including a processing unit adapted to process data and to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one routing functional module is adapted to route data and to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one input or output functional module is adapted to input or output data and to input/output data to other functional modules through at least one port including a plurality of data lines. Each processing, routing, and input or output functional module includes a local controller adapted to control the local operation of the associated functional module, wherein the local controller is adapted to input and output control information over control lines connected to the respective ports of its functional module. At least one system controller functional module is adapted to communicate with one or more local controllers and provide control at a level above the local controllers. Each of the functional modules is adapted to be cabled together with a single cable that includes a plurality of data lines and control lines, such that the control lines in each module are connected together and the data lines in each unit are connected together. Each of the local controllers is adapted to detect the other local controllers to which it is connected and thereby to collectively determine the overall configuration of the system.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
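
The last sentence of the abstract, in which each local controller detects its cabled neighbours and the controllers collectively determine the overall configuration, can be illustrated with a simple gossip-to-fixed-point loop. This is a sketch under assumptions: the module names, the symmetric `CABLING` map and the merge-until-stable scheme are hypothetical choices, not the patented protocol.

```python
def converge_topology(neighbors):
    """Each local controller starts knowing only its own cables, then repeatedly
    merges what its directly connected neighbours know until nothing changes."""
    # Canonical link representation: a sorted (module, module) pair.
    known = {m: {tuple(sorted((m, n))) for n in ns} for m, ns in neighbors.items()}
    changed = True
    while changed:
        changed = False
        for module, ns in neighbors.items():
            for n in ns:
                before = len(known[module])
                known[module] |= known[n]  # learn every link the neighbour knows
                if len(known[module]) != before:
                    changed = True
    return known


# Hypothetical cabling: cpu0 - router0 - io0, with cpu1 also on router0.
CABLING = {
    "cpu0": {"router0"},
    "router0": {"cpu0", "io0", "cpu1"},
    "io0": {"router0"},
    "cpu1": {"router0"},
}
```

Since each controller's link set only grows and is bounded by the real cabling, the loop always terminates, and on a connected system every controller ends with the same full view.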

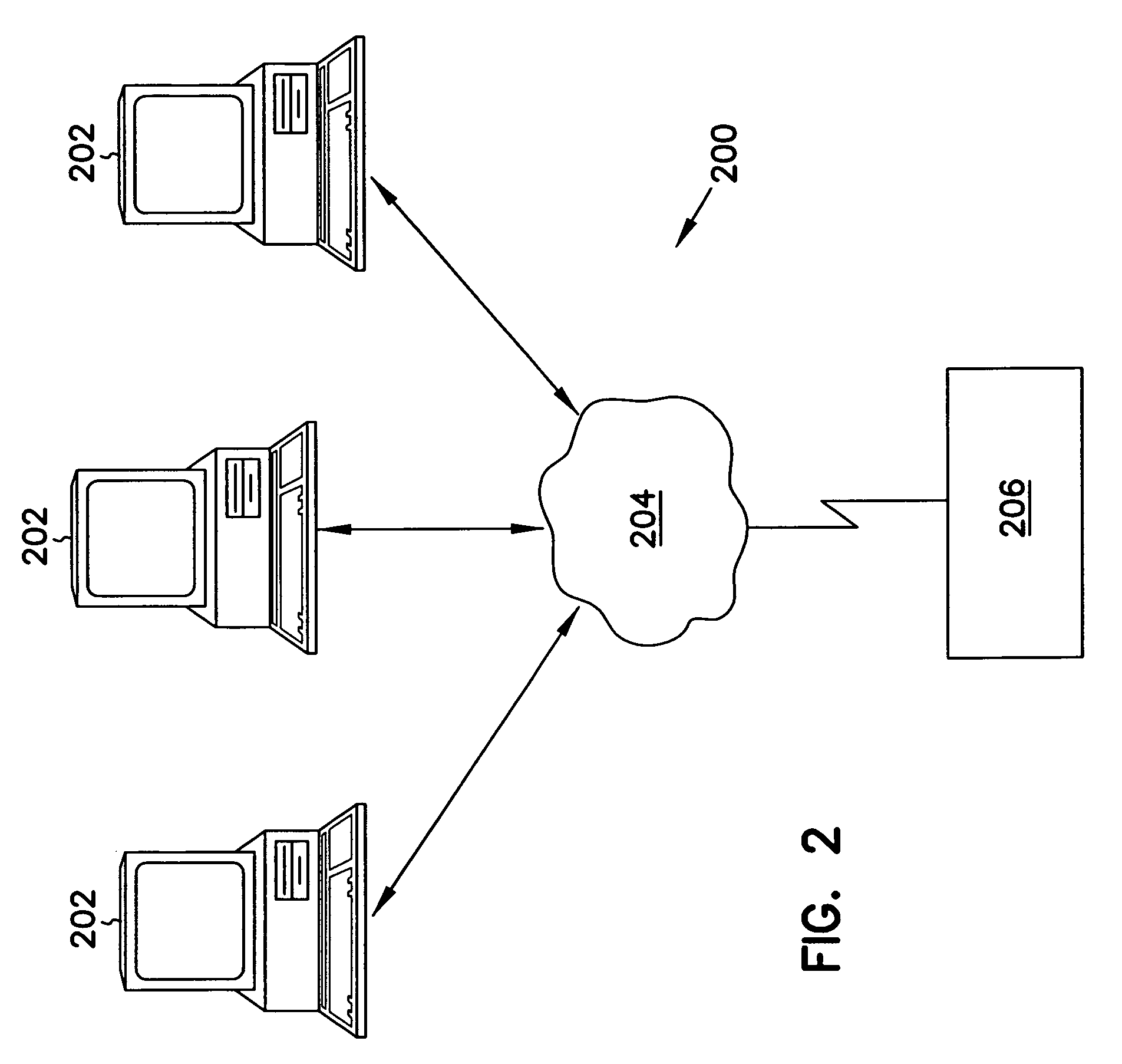

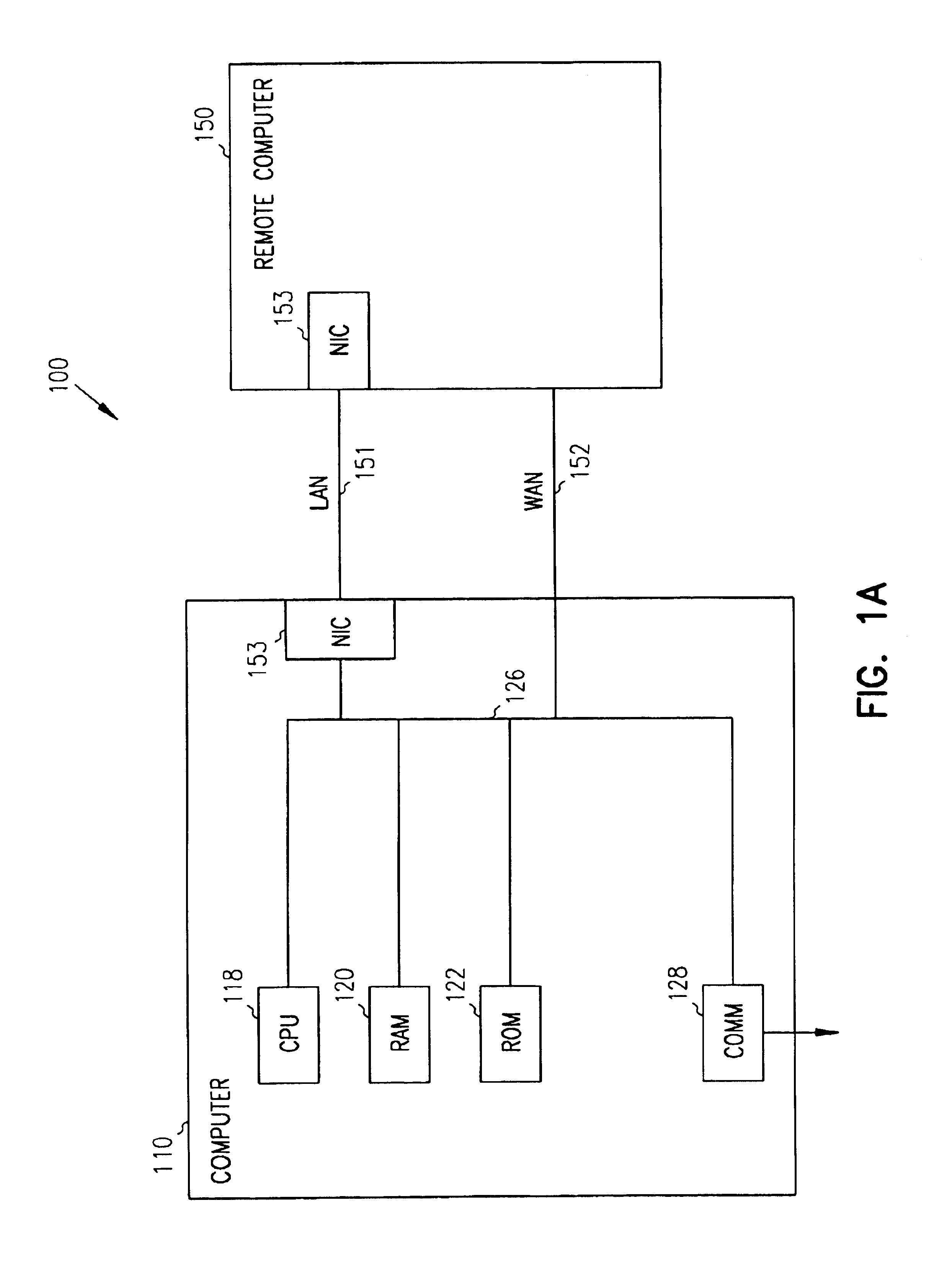

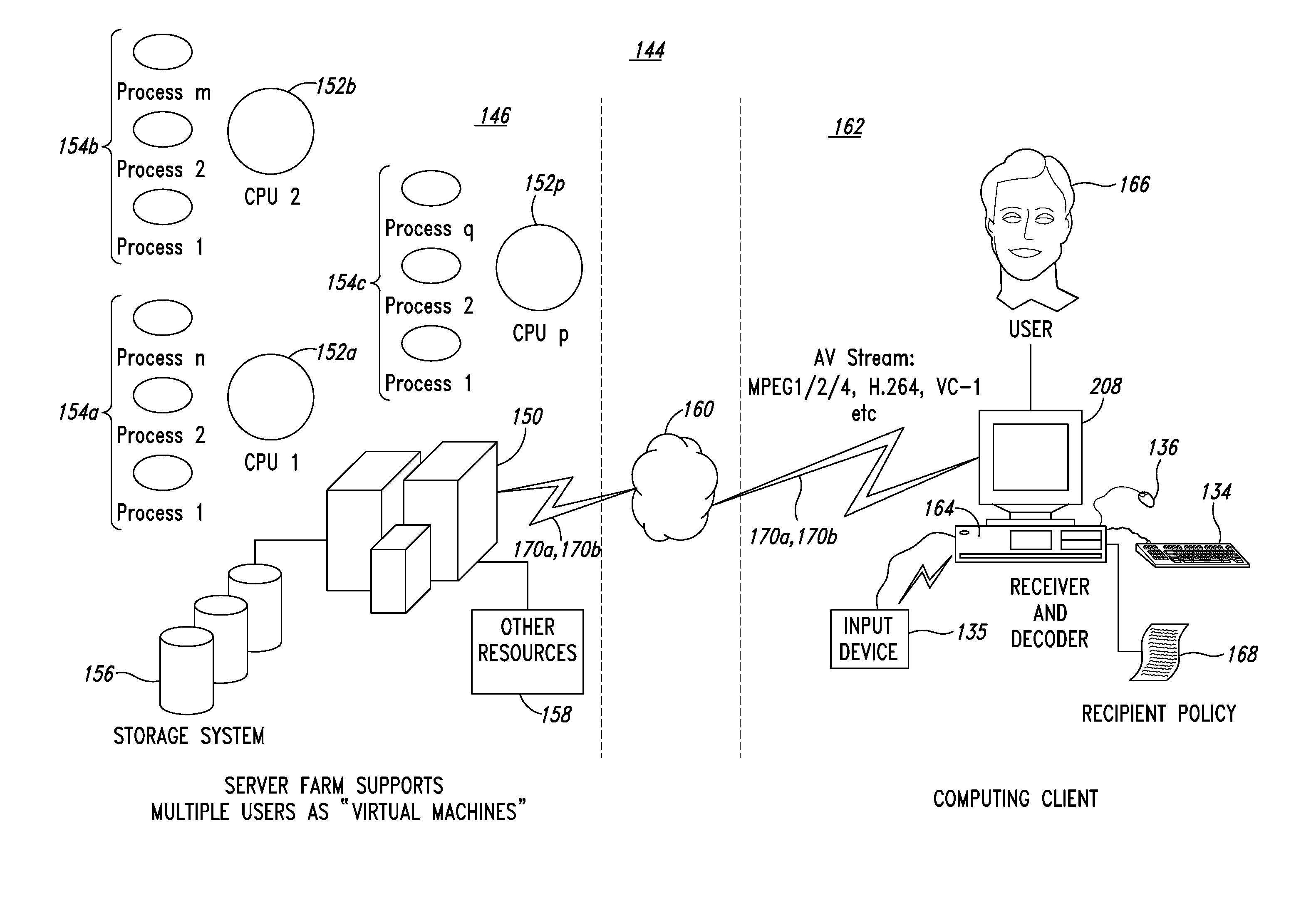

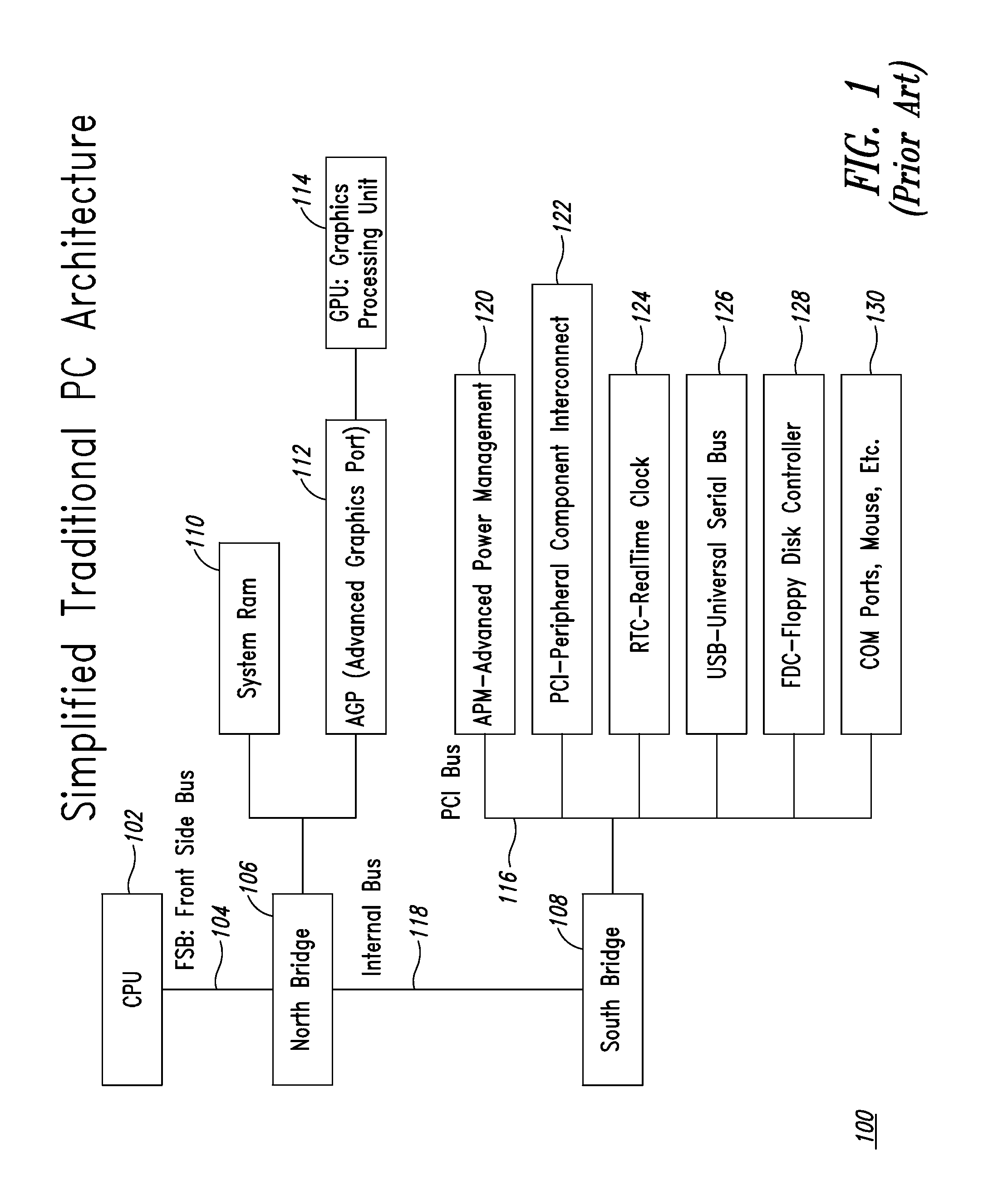

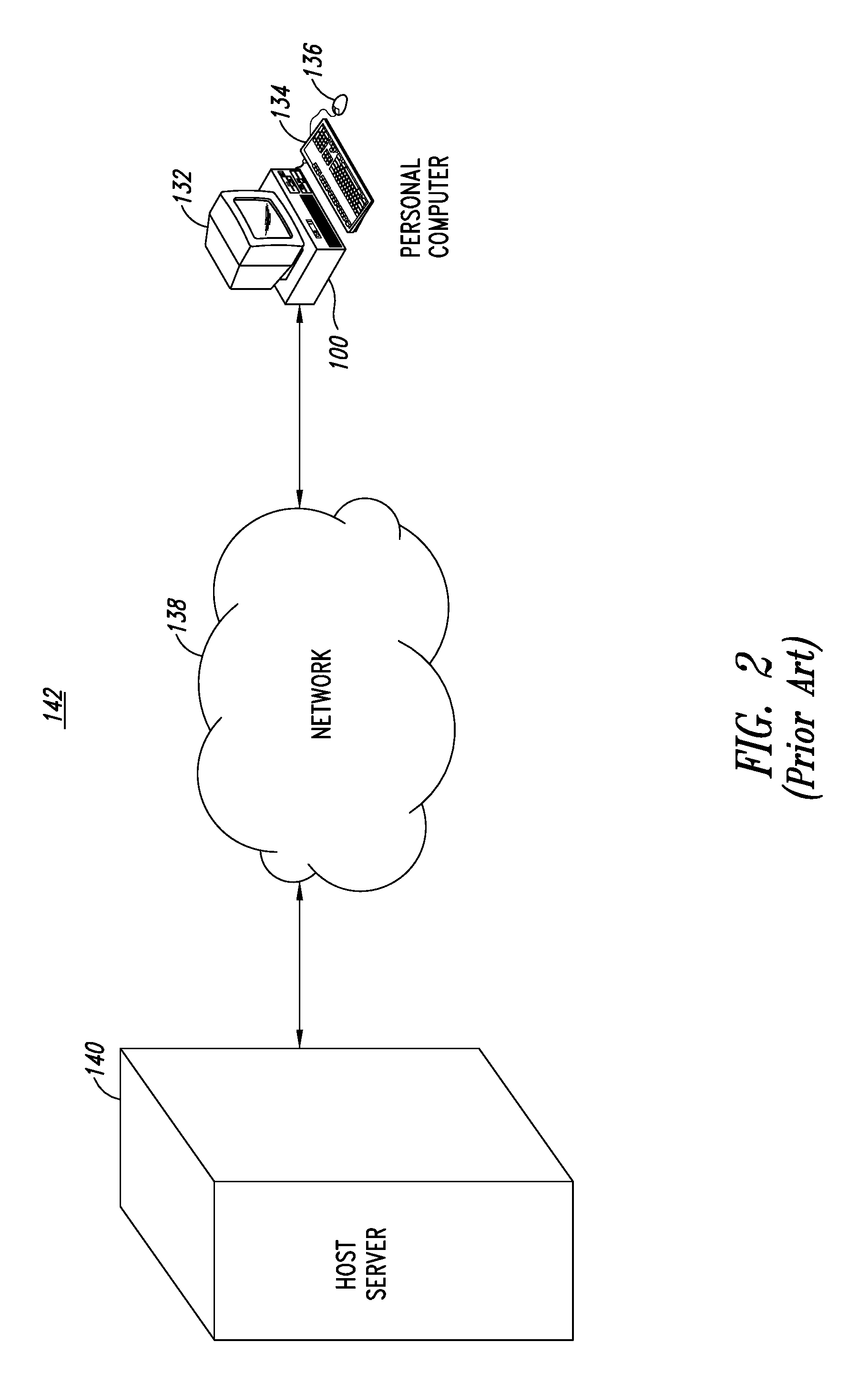

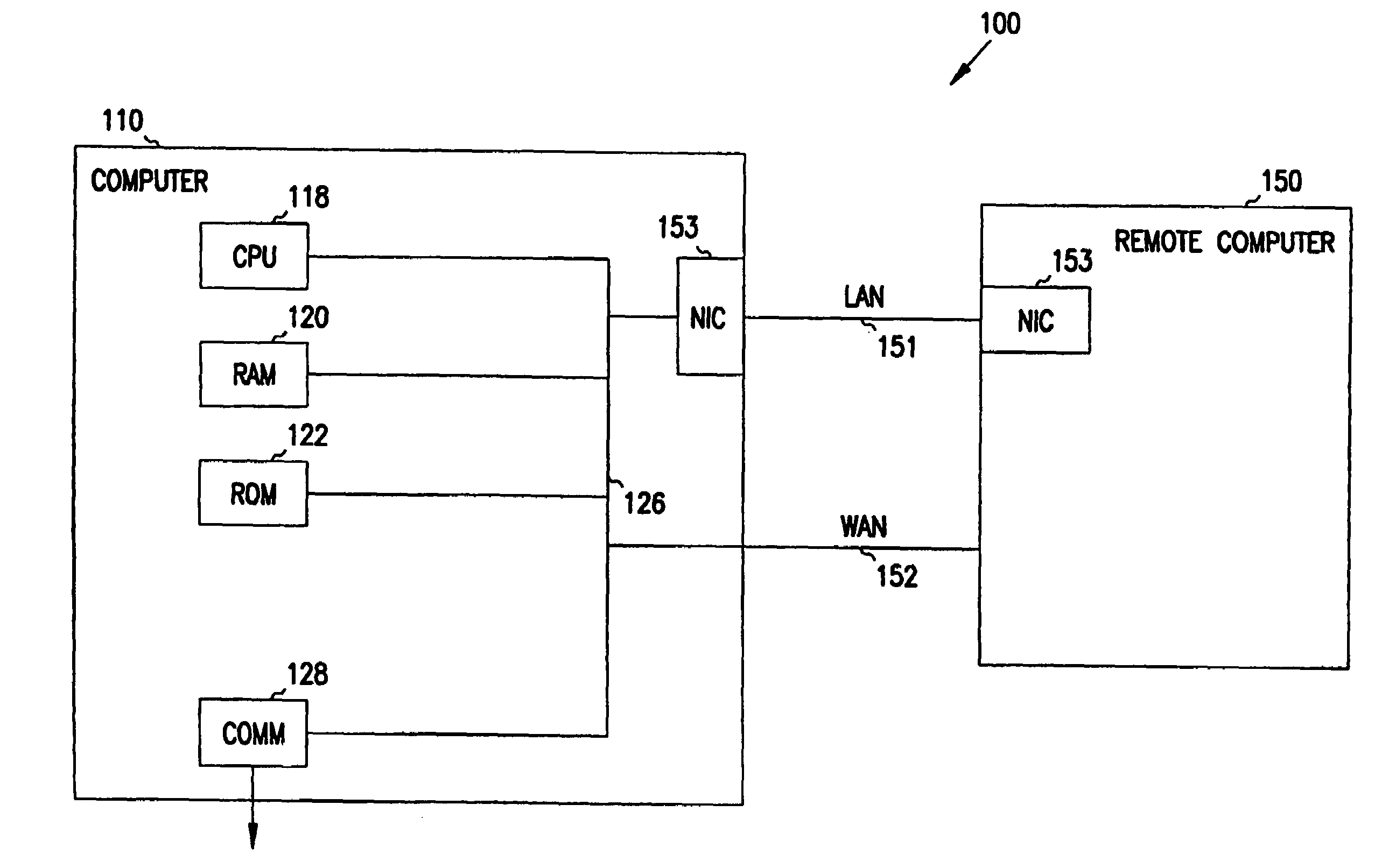

System and method for rendering a high-performance virtual desktop using compression technology

Inactive | US20090323799A1 | Color television with pulse code modulation, Color television with bandwidth reduction, Computer resources, Scalable computing

An embodiment of a network of extendable computer resources creates a virtual computing environment for a remote client. The network allocates at least some of the extendable computer resources to the virtual computing environment and compressively represents video output information of the virtual computing environment as an encoded data stream. The encoded data stream is communicated to the remote client, and input information to control the resources allocated to the virtual computing environment is received from the remote client. An embodiment of a local computing client receives a multiframe motion picture stream of encoded signals that represent the video output of a virtual computing environment hosted by a remote computer source. The local computing client decodes the motion picture stream, accepts input information operable to control the virtual computing environment, and communicates the input information to the remote computer source.

Owner:STMICROELECTRONICS SRL

System and method for a hierarchical system management architecture of a highly scalable computing system

Inactive | US7219156B1 | Hardware monitoring, Multiple digital computer combinations, Scalable computing, Systems management

A modular computer system includes at least two processing functional modules, each including a processing unit adapted to process data and to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one routing functional module is adapted to route data and to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one input or output functional module is adapted to input or output data and to input/output data to other functional modules through at least one port including a plurality of data lines. Each processing, routing, and input or output functional module includes a local controller adapted to control the local operation of the associated functional module, wherein the local controller is adapted to input and output control information over control lines connected to the respective ports of its functional module. At least one system controller functional module is adapted to communicate with one or more local controllers and provide control at a level above the local controllers. Each of the functional modules is adapted to be cabled together with a single cable that includes a plurality of data lines and control lines, such that the control lines in each module are connected together and the data lines in each unit are connected together. Each of the local controllers is adapted to detect the other local controllers to which it is connected and thereby to collectively determine the overall configuration of the system.

Owner:MORGAN STANLEY +1

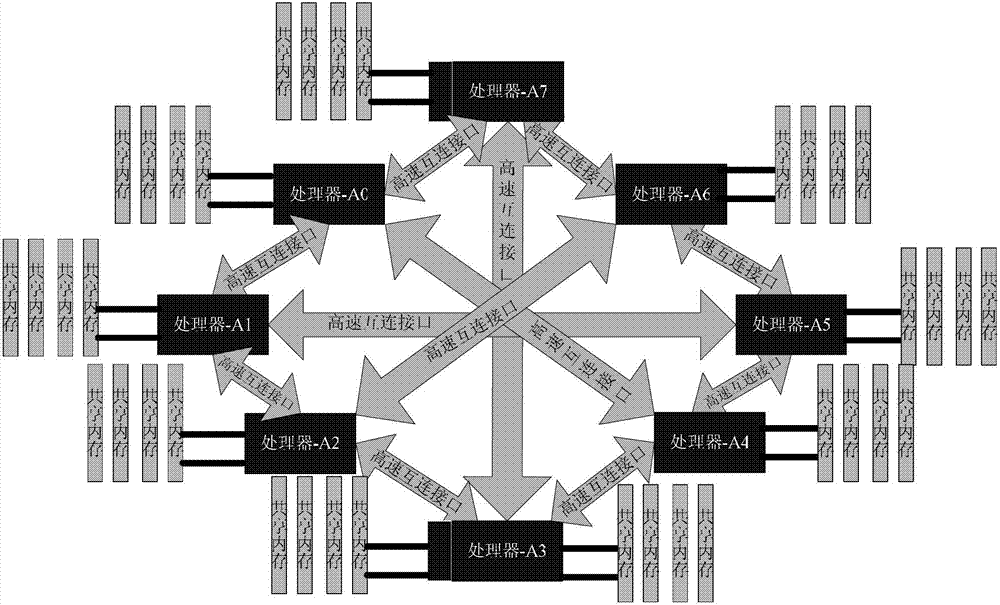

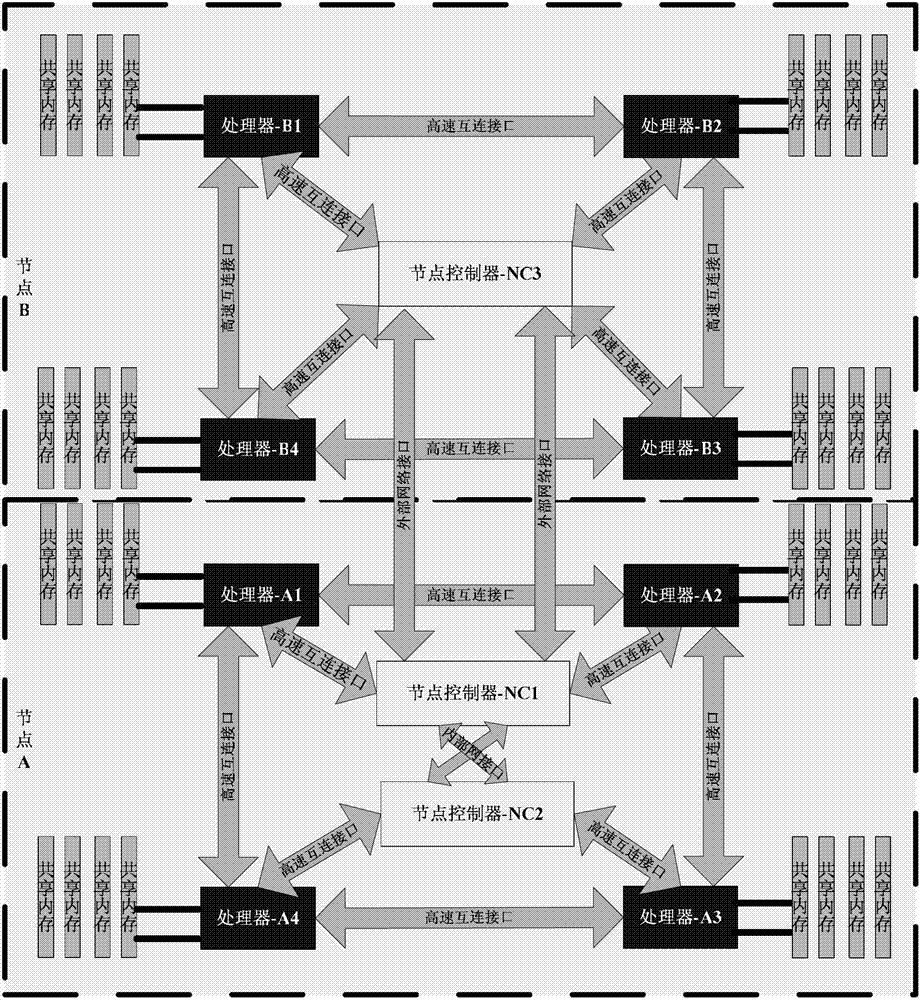

Node controller, parallel computing server system and route method

Active | CN103092807A | Achieve unlimited expansion, Size limit, Multiple digital computer combinations, Scalable computing, Parallel computing

The invention discloses a node controller, a parallel computing server system and a route method. The node controller is located in one node of the parallel computing server system and can comprise a high-speed interconnect interface and an external network interface. The high-speed interconnect interface is connected with the high-speed interconnect interface of a processor in the node and is used for mutual data transmission with it; the external network interface is connected with the external network interfaces of other nodes in the parallel computing server system and is used for mutual data transmission with them. As a result, the scale of the computing server system can be expanded and its performance is improved.

Owner:XFUSION DIGITAL TECH CO LTD

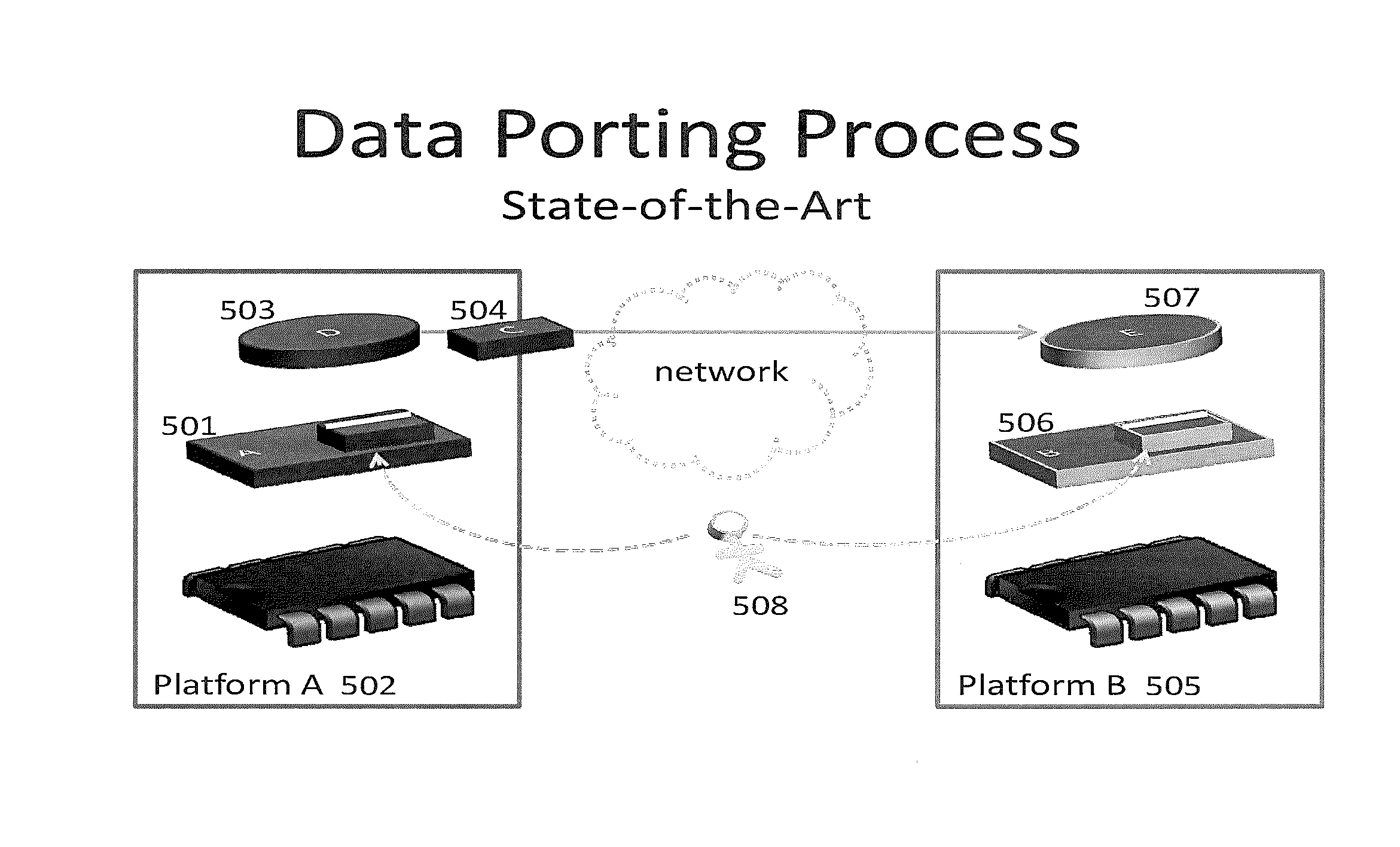

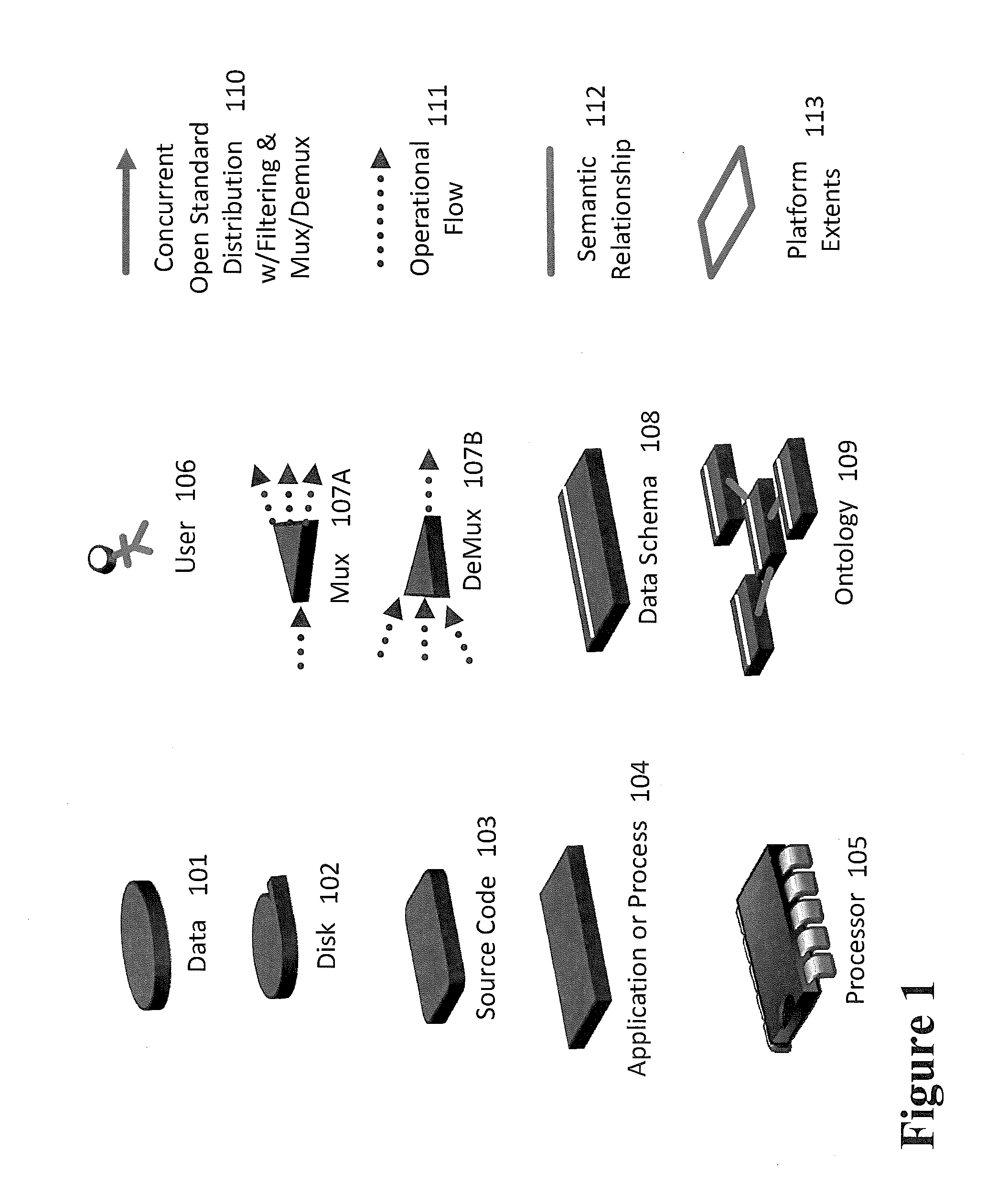

Realtime processing of streaming data

Active | US20160004578A1 | Maximize data capture performance, Improve data collection efficiency, Interprogram communication, Program loading/initiating, Streaming data, Processing

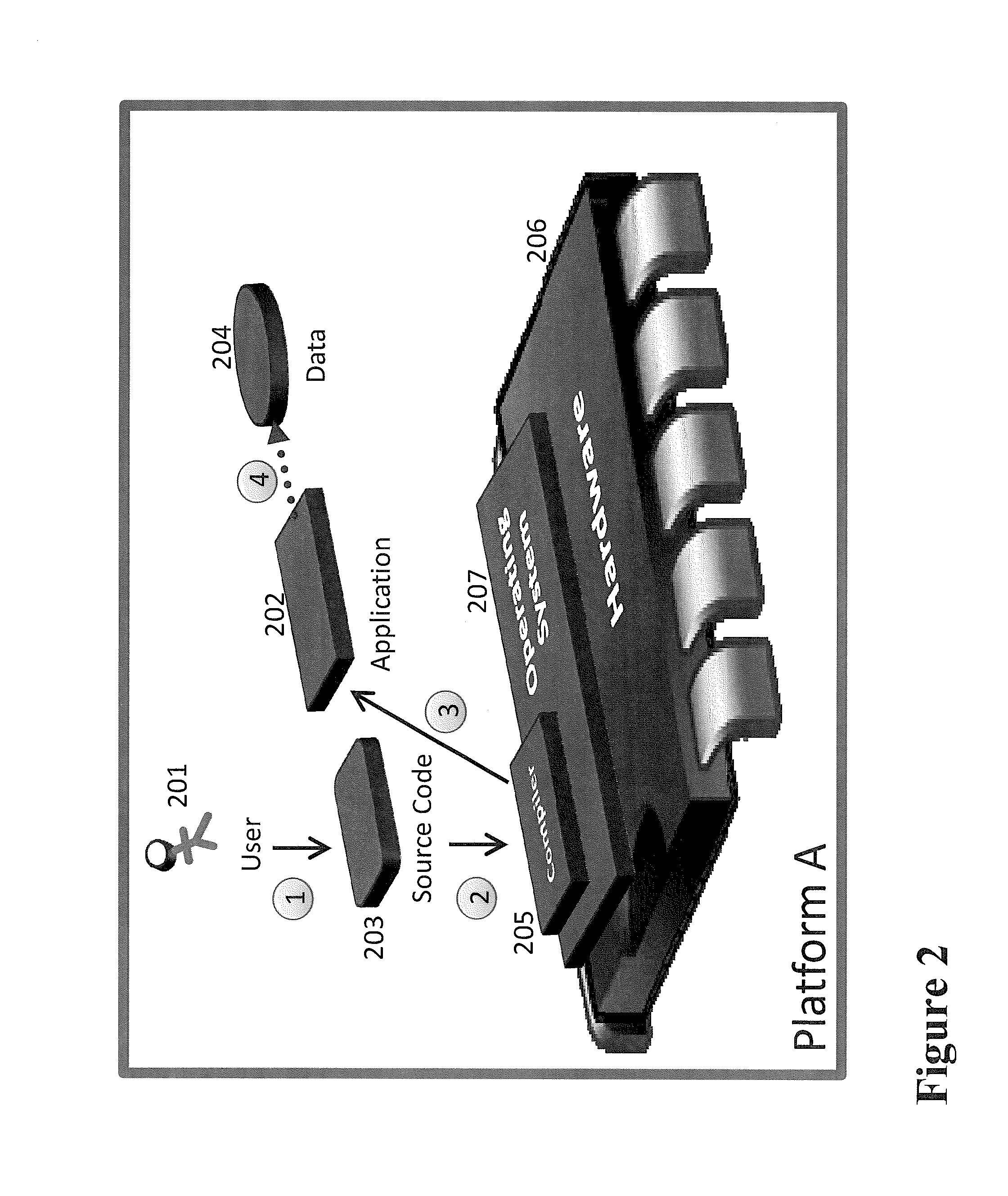

The invention described here is intended to enhance the technology domain of real-time and high-performance distributed computing. This invention provides a connotative and intuitive grammar that allows users to define how data is to be automatically encoded/decoded for transport between computing systems. This capability eliminates the need to hand-craft custom solutions for every combination of platform and transport medium. It is a software framework that can serve as a basis for real-time capture, distribution, and analysis of large volumes and varieties of data moving at rapid or real-time velocity. It can be configured as-is or extended as a framework to filter and extract data from a system for distribution to other systems (including other instances of the framework). Users control all features for capture, filtering, distribution, analysis, and visualization through configuration files (as opposed to software programming) that are read at program startup. It enables scalable computation on large volumes of high-velocity data over distributed heterogeneous platforms. Compared with conventional approaches to data capture, which extract data in proprietary formats and rely on standalone post-run analysis programs in non-real-time, this invention also allows data to be streamed in real time to an open range of analysis and visualization tools. Data treatment options are specified via end-user configuration files rather than by hard-coded software revisions.

Owner:FISHEYE PROD

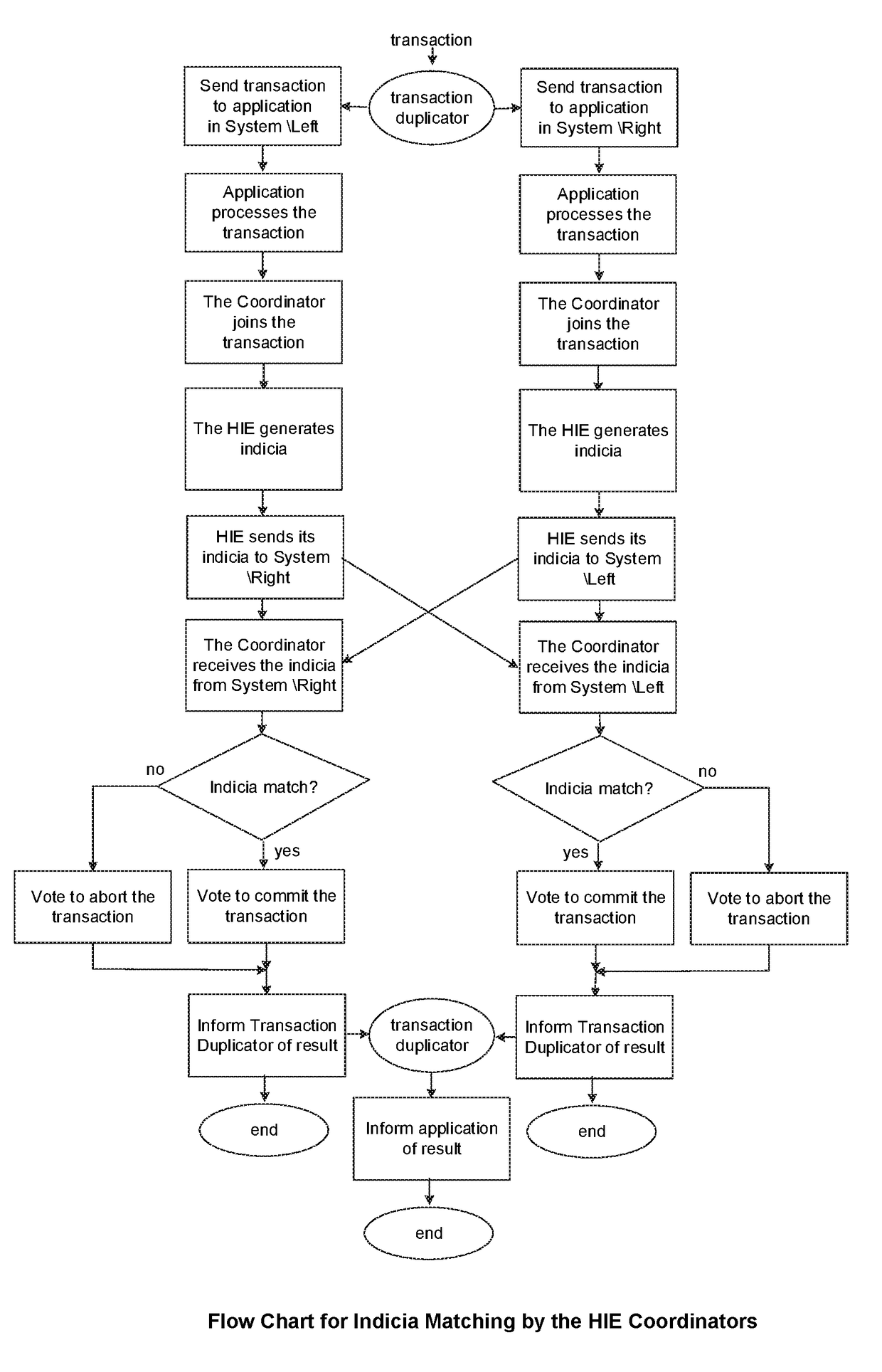

Method of ensuring real-time transaction integrity in the indestructible scalable computing cloud

Active | US9922074B1 | Improve performance, Short response time, Digital data information retrieval, Transmission, Scalable computing, High availability

A method is provided to verify the computational results of a transaction processing system utilizing cloud resources in a high-availability and scalable fashion. A transaction is allowed to modify an application's state only if the validity of the result of the processing of the transaction is verified across the majority of the participating child nodes in the cloud. Otherwise, the transaction is aborted.

Owner:GRAVIC
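
The commit-or-abort rule above can be sketched as a strict-majority vote over the results reported by participating child nodes. This is a minimal sketch, assuming results are comparable strings (for example digests of the computed state change) and that `None` marks a node that returned nothing; the function names are hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_result(results: Sequence[Optional[str]]) -> Optional[str]:
    """Return the result reported by a strict majority of participating child
    nodes, or None if no single result reaches a majority.
    A None entry stands for a node that did not return a result."""
    votes = Counter(r for r in results if r is not None)
    if not votes:
        return None
    value, count = votes.most_common(1)[0]
    return value if 2 * count > len(results) else None


def apply_if_verified(state: dict, key: str, results) -> bool:
    """Commit the transaction's effect only when the majority agrees; else abort."""
    agreed = majority_result(results)
    if agreed is None:
        return False  # abort: application state is left untouched
    state[key] = agreed
    return True
```

Counting silent nodes in the denominator means a transaction cannot commit on the word of a minority just because other nodes failed to answer.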

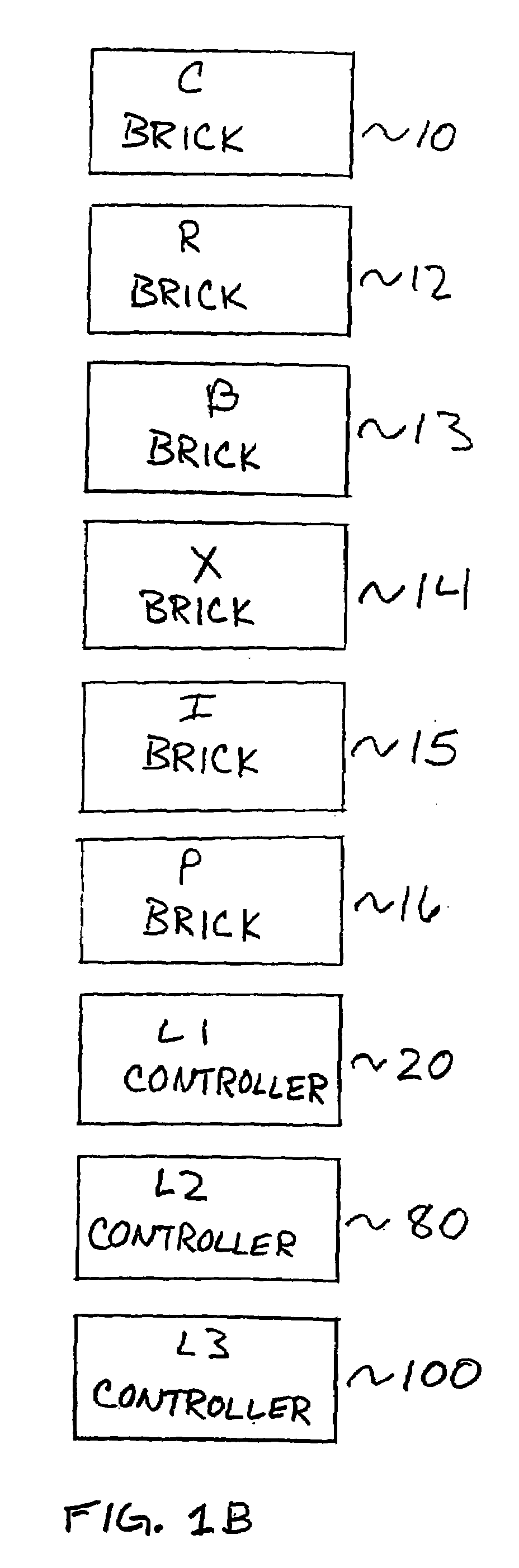

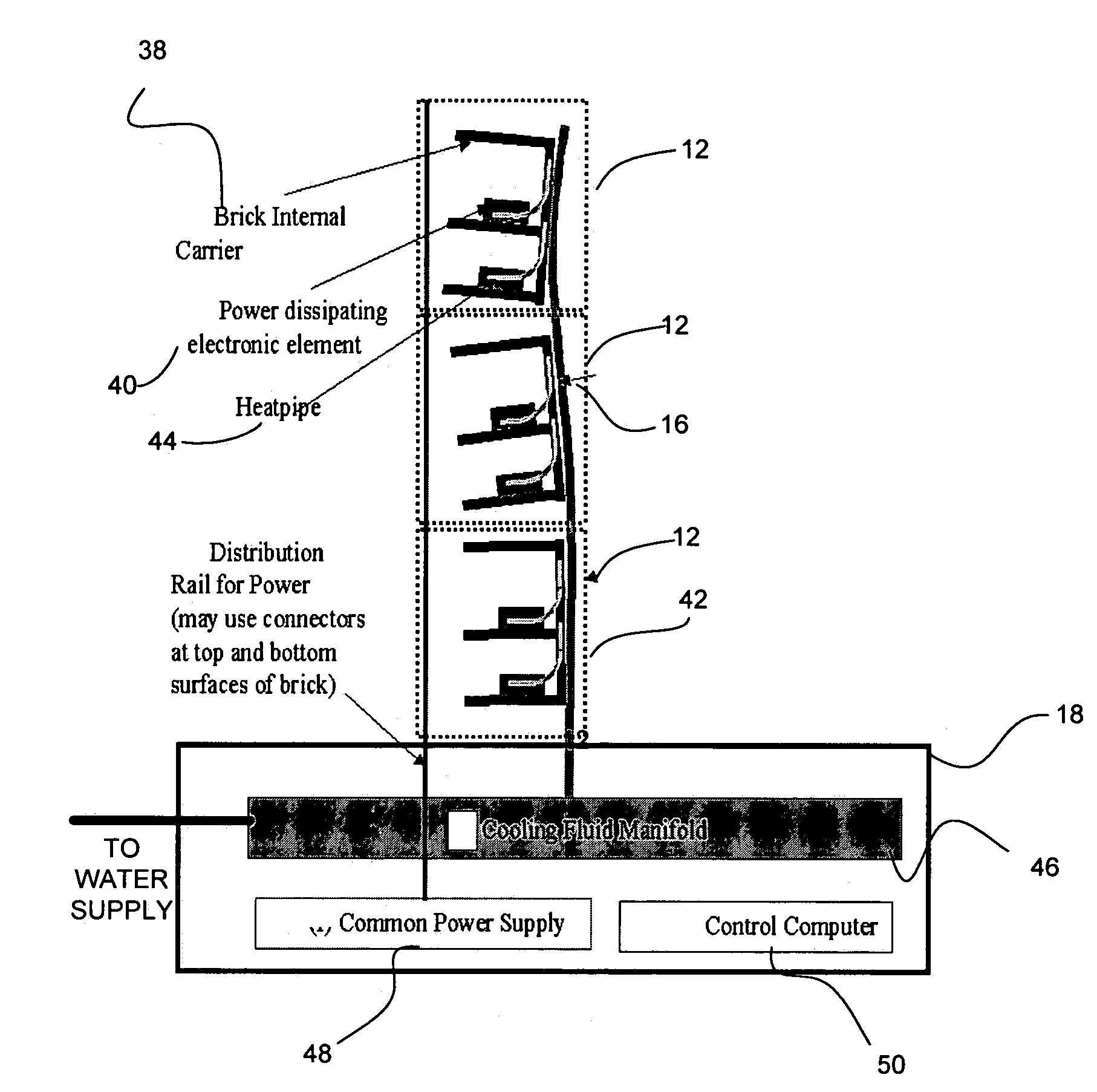

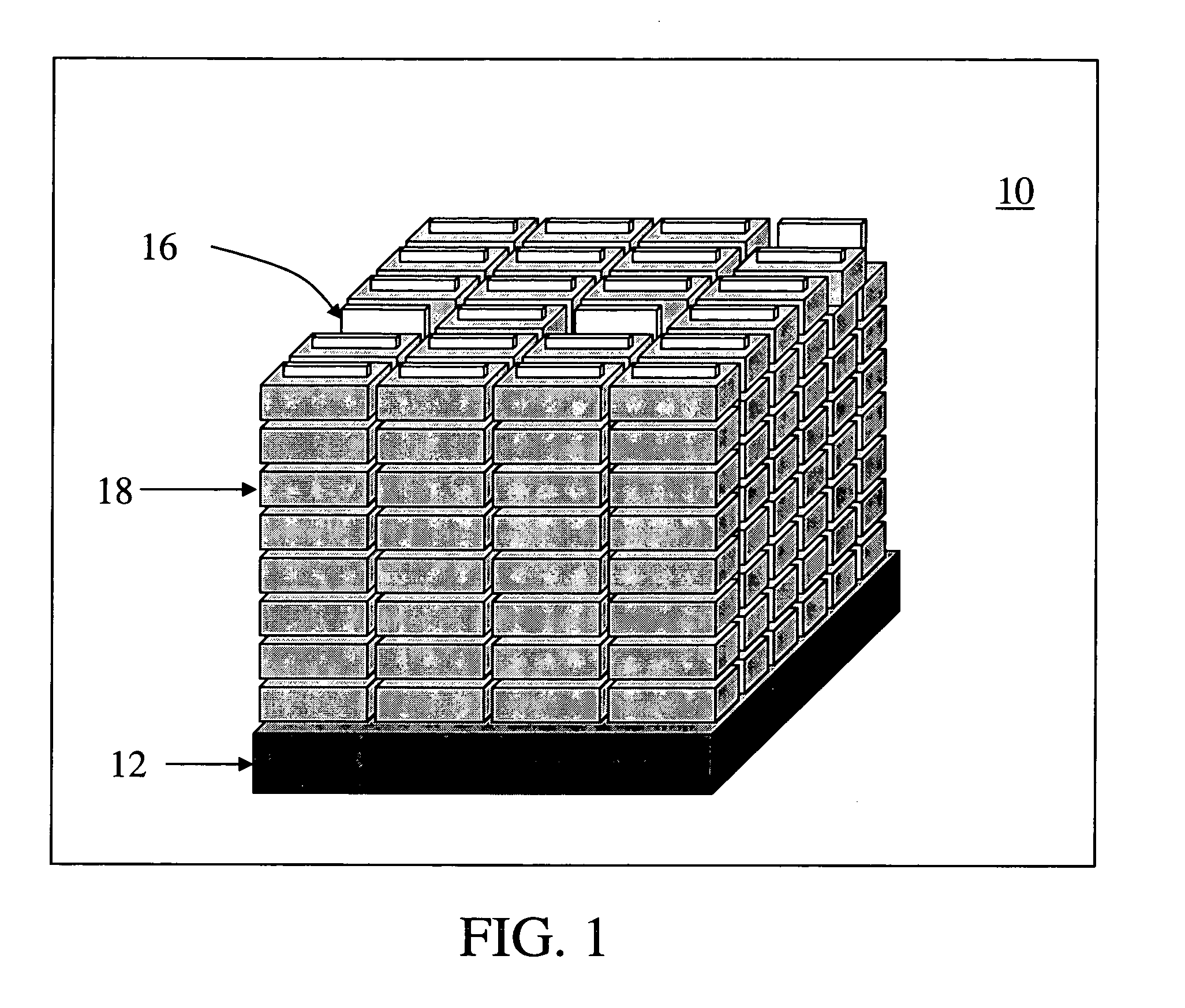

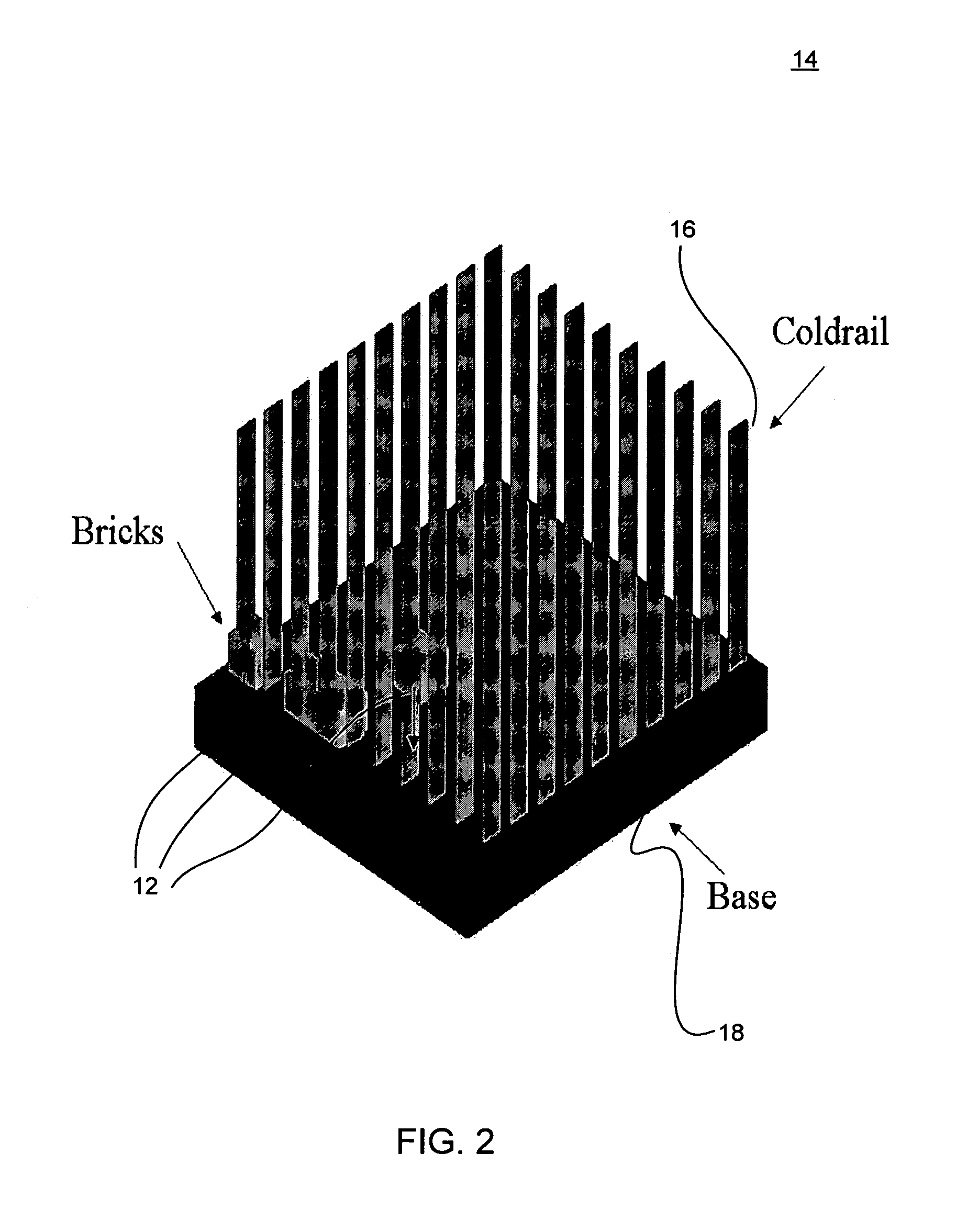

System and method for providing cooling in a three-dimensional infrastructure for massively scalable computers

A three-dimensional computer infrastructure cooling system is provided. The cooling system includes at least one compute, storage, or communications brick. In addition, it includes at least one coldrail to facilitate the removal of heat from the at least one compute, storage, or communications brick. The three-dimensional computer infrastructure also includes a brick-internal carrier within the at least one compute, storage, or communications brick, wherein the brick-internal carrier is attached to the at least one coldrail. Moreover, the three-dimensional computer infrastructure includes a power-dissipating electronic element within the at least one compute, storage, or communications brick, wherein the power-dissipating element is attached to the brick-internal carrier.

Owner:GOOGLE LLC

Multiple peer groups for efficient scalable computing

Inactive | US20080080393A1 | Data switching by path configuration, Network connections, Scalable computing, Group operation

Owner:MICROSOFT TECH LICENSING LLC

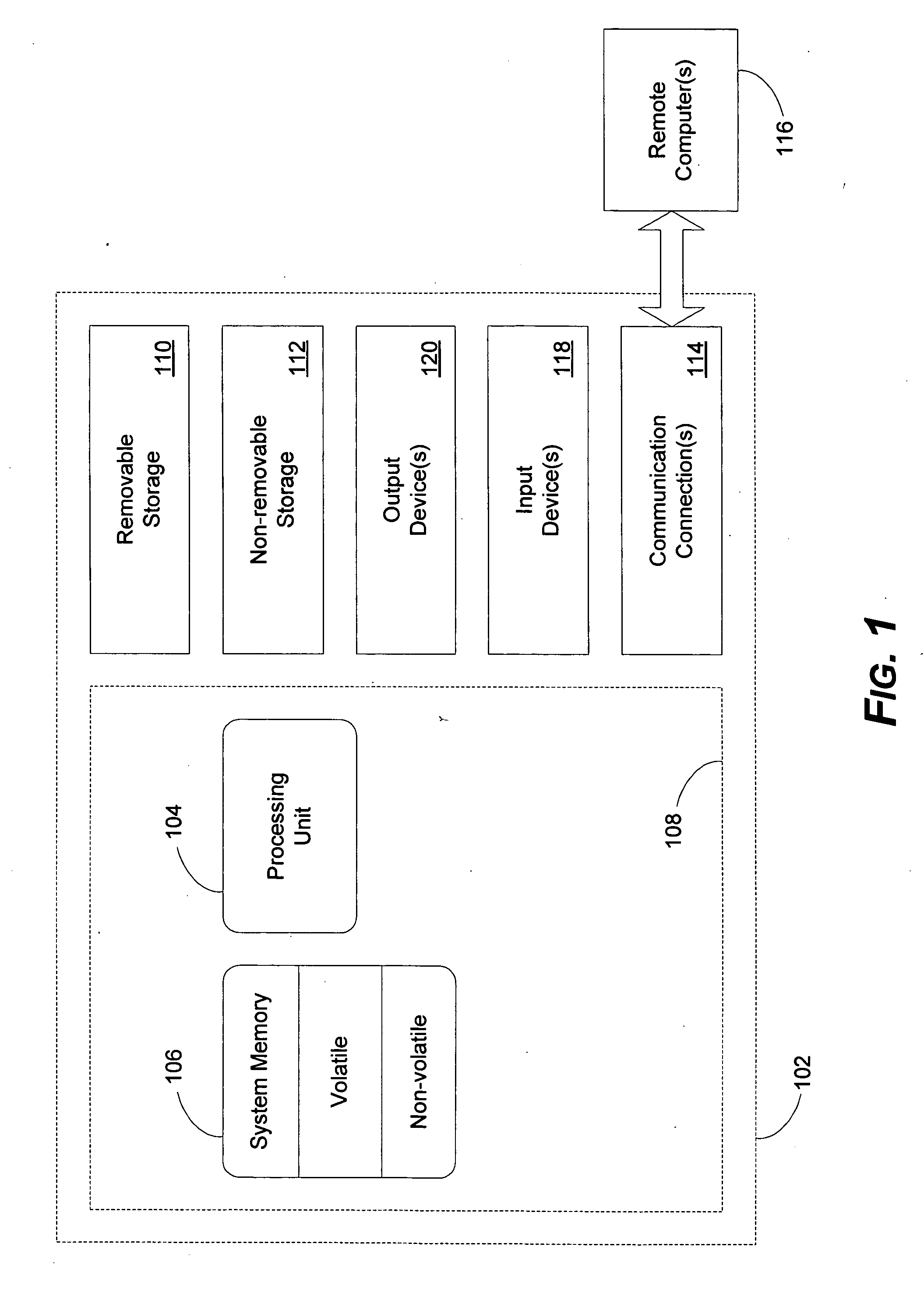

System and method for extensible computer assisted collaboration

A collaborative services platform may include a connectivity service, an activity service and a contact management service. The connectivity service may provide communicative connectivity between users of the collaborative services platform. The activity service may provide one or more collaborative activities supporting various modes of communication. The contact management service may maintain contact information for each of the users of the collaborative services platform. Not every user may be capable of participating in every collaborative activity. The contact information maintained by the contact management service may indicate the collaborative activities in which each user is capable of participating. A set of programmatic objects utilized to implement the collaborative services platform may include contact objects, conference objects, MeContact objects, endpoint objects, published objects and presence objects. The presence object may represent a presence of a particular user in a networked computing environment and may reference multiple collaborative endpoints.

Owner:MICROSOFT TECH LICENSING LLC

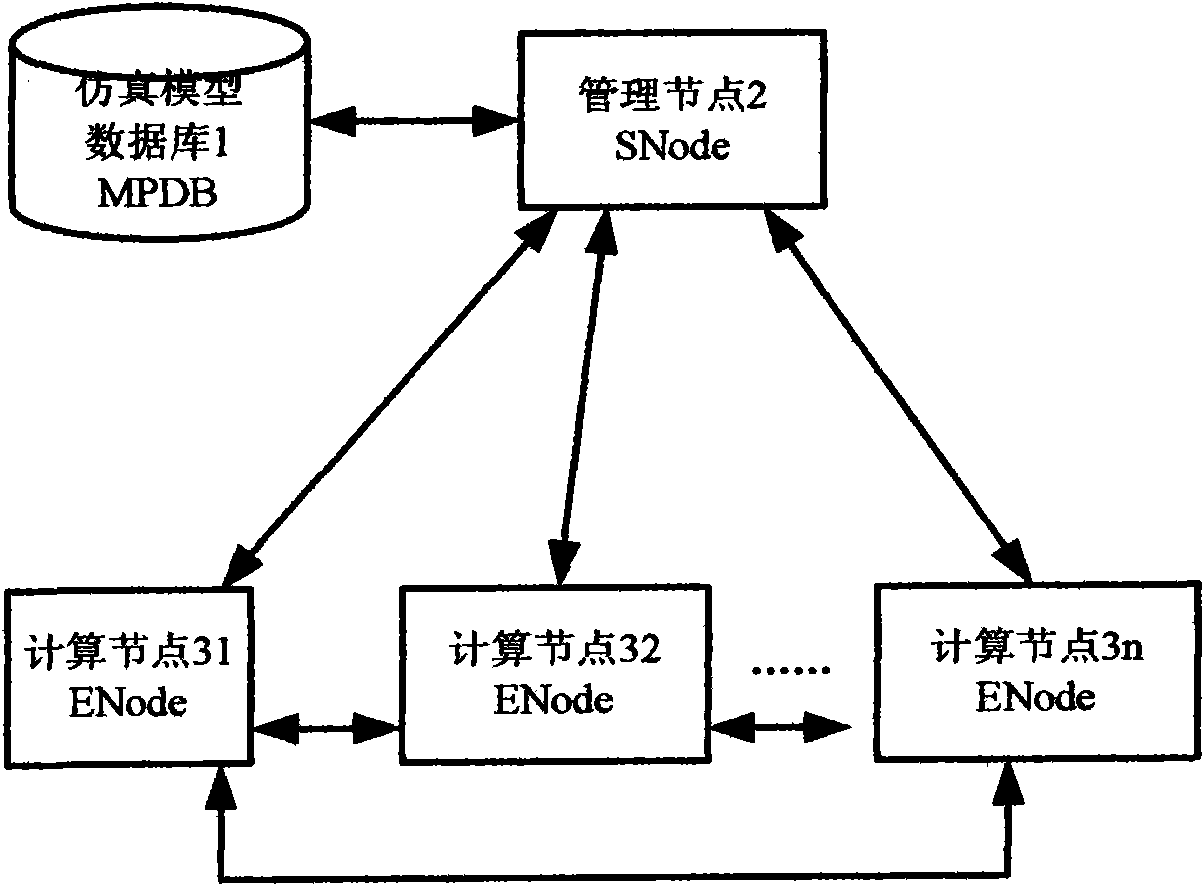

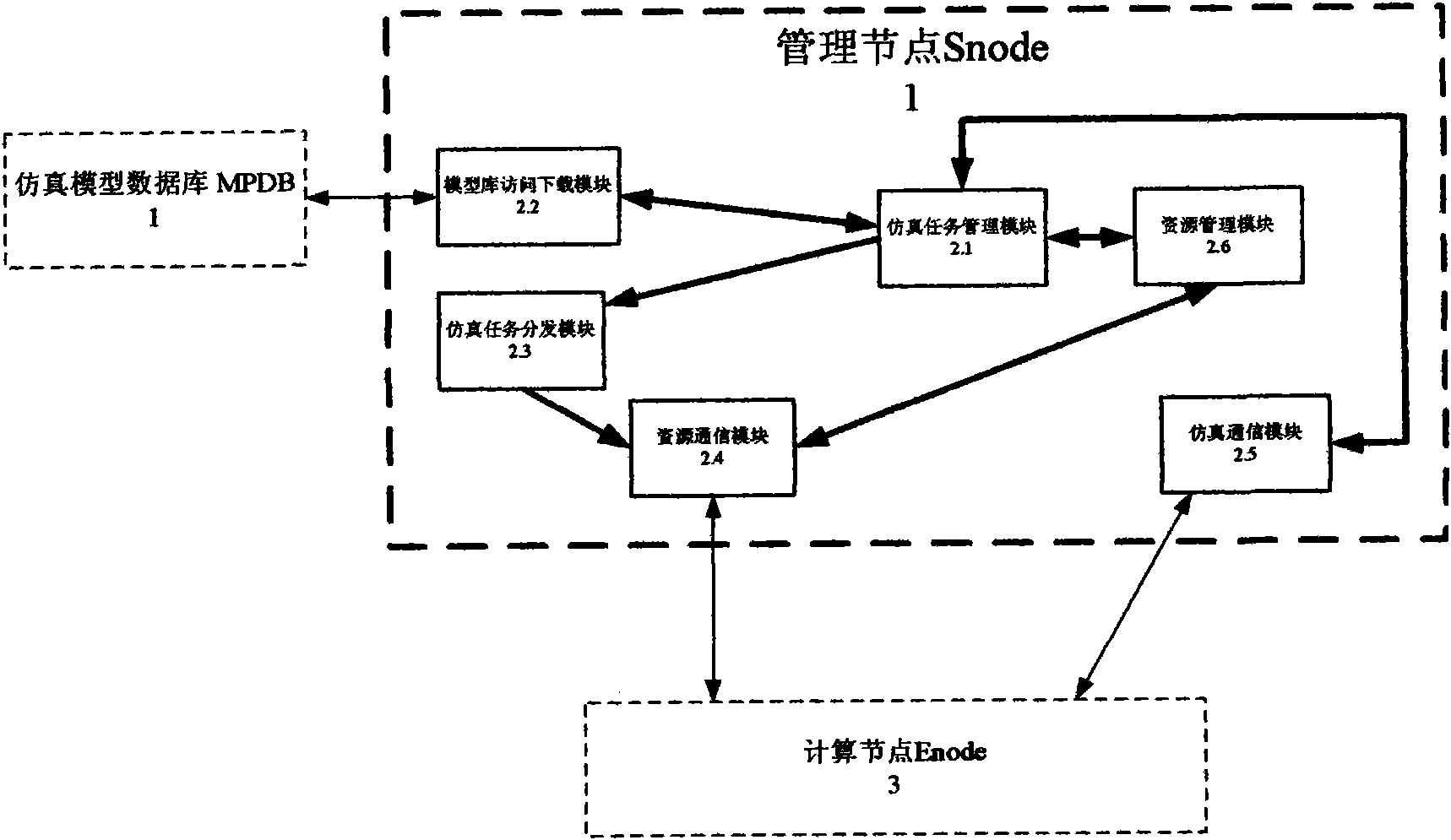

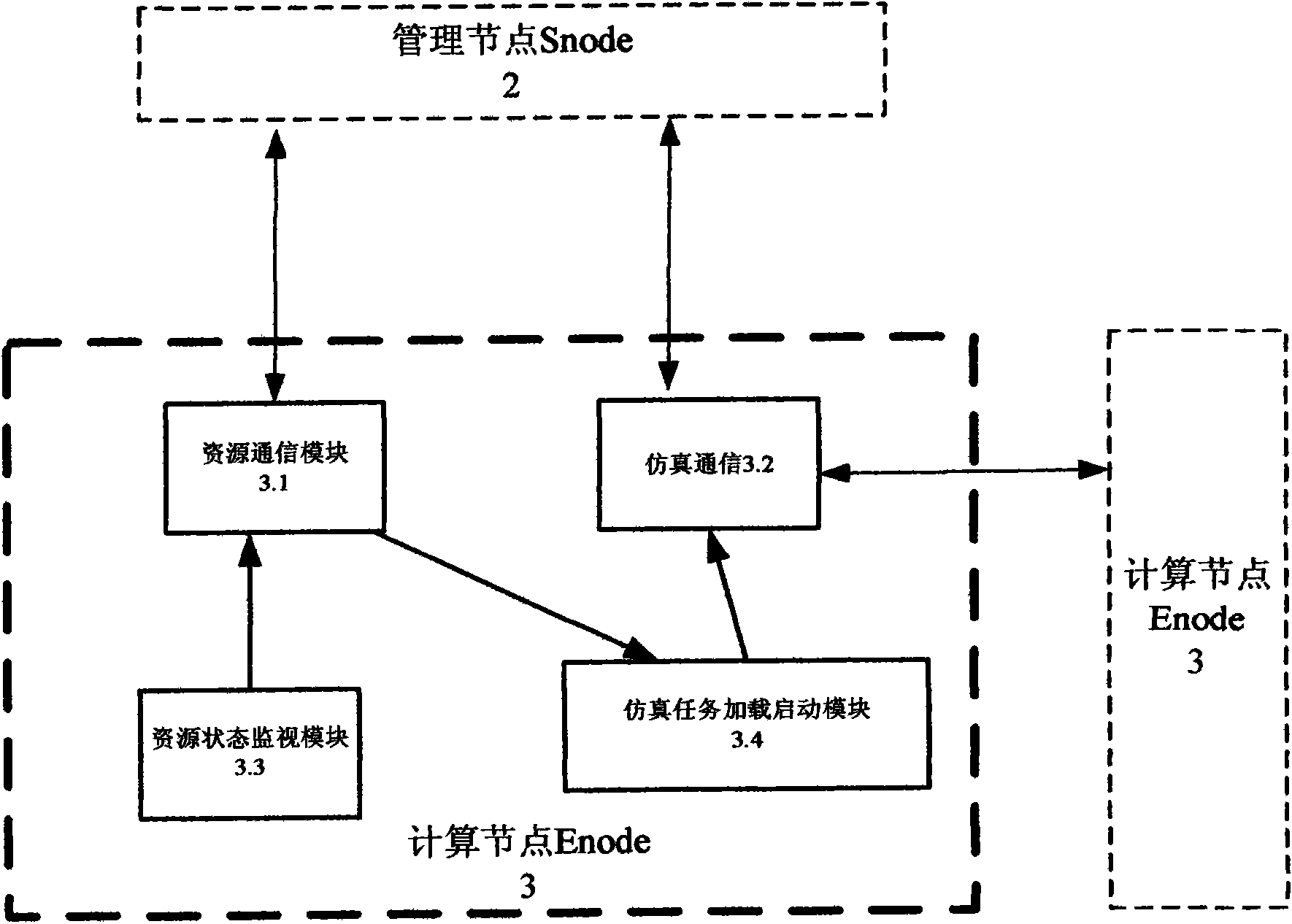

Simulation application-orientated universal extensible computing system

Inactive | CN101937359A | Usable, With load, Software simulation/interpretation/emulation, Special data processing applications, Scalable computing, Resource utilization

The invention discloses a simulation application-orientated universal extensible computing system. The system comprises a simulation model database 1, a simulation application management node 2 and a simulation computing node 3. The simulation model database 1 is the storage center for simulation models; through the simulation application management node 2, a user can submit a newly developed model to the simulation model database 1, and can also search the simulation model database 1 for a desired existing model and download it for use. The advantages of the system are as follows: the simulation application integration platform has universal functions and an extensible scale; the universality of the simulation platform and the plug-and-play simulation models allow dynamic adjustment of the simulation computing load and of resource-use efficiency; the integration efficiency of complex simulation systems oriented to different applications is improved, as is the development and integration efficiency of simulation application systems; and the availability, reliability and resource utilization rate of the simulation application system are improved.

Owner:NO 709 RES INST OF CHINA SHIPBUILDING IND CORP

Execution allocation cost assessment for computing systems and environments including elastic computing systems and environments

Inactive | US8560465B2 | Computing capabilities of a computing system, Effective resources, Digital computer details, Multiprogramming arrangements, Scalable computing, Supervised learning

Owner:SAMSUNG ELECTRONICS CO LTD

Multiple peer groups for efficient scalable computing

Inactive | US7881316B2 | Time-division multiplex, Data switching by path configuration, Scalable computing, Distributed computing

Owner:MICROSOFT TECH LICENSING LLC

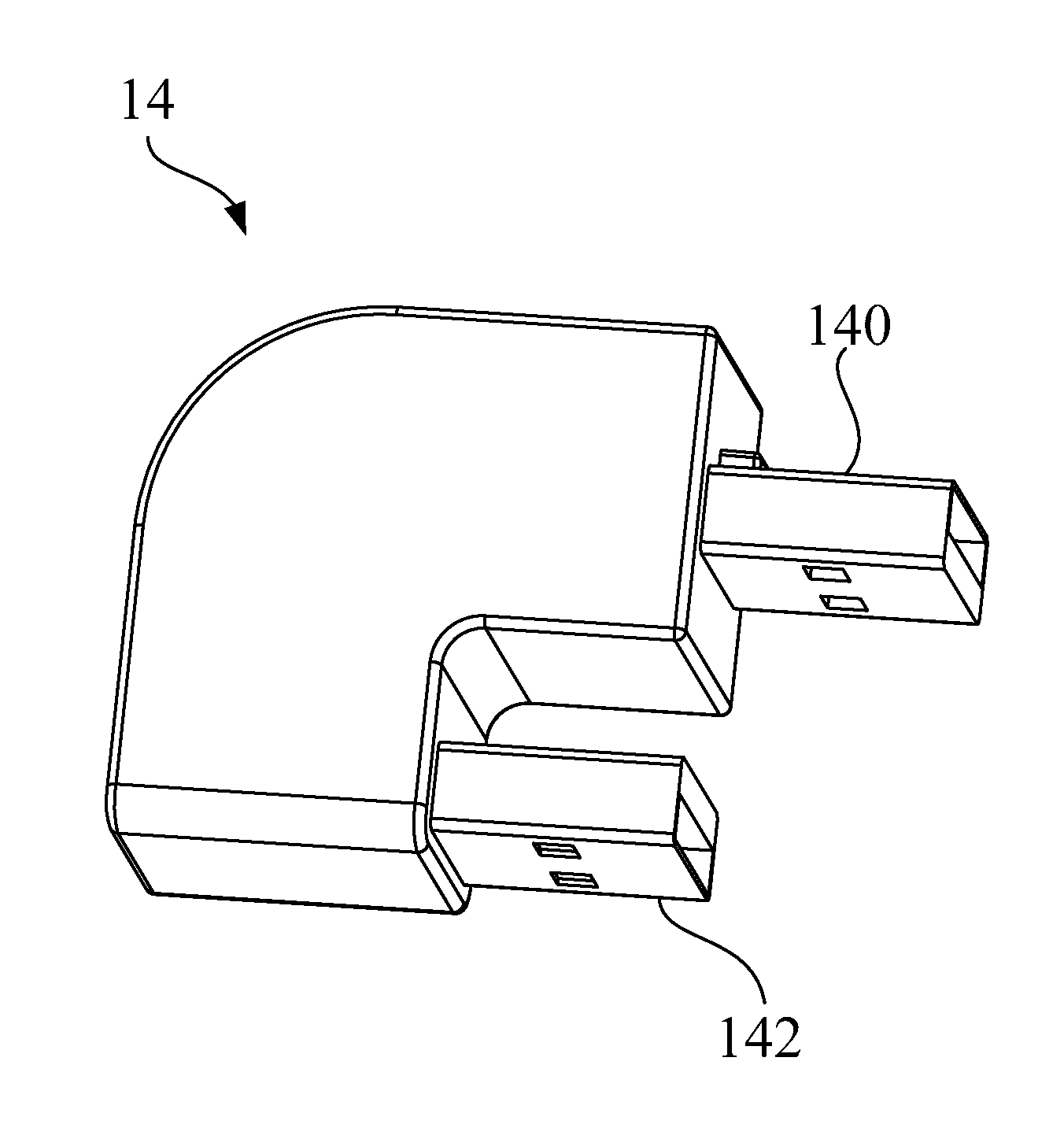

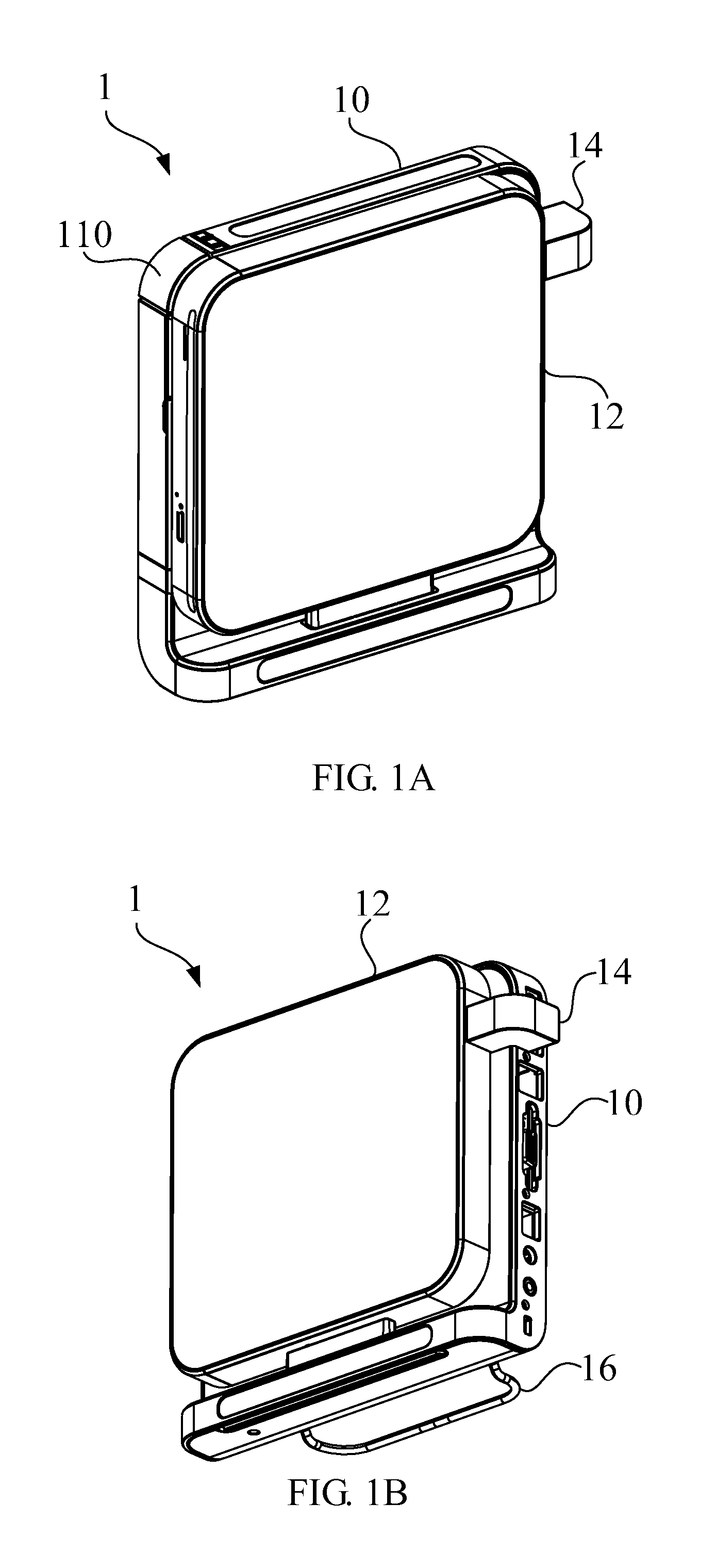

Expandable computer system and fastening device thereof

Active | US8391014B2 | Conveniently disposed, Conveniently and stably fastened, Digital data processing details, Coupling device details, Scalable computing, Computerized system

An expandable computer system and a fastening device are disclosed in this invention. The expandable computer system includes a computer, an expansion device and a rigid component. The expansion device includes a first holding portion disposed at a first side of the expansion device, and a first interface socket disposed at a second side of the expansion device. The computer includes a second holding portion and a second interface socket. The rigid component has two transmission plugs and a transmission circuit. The two transmission plugs are coupled with two terminals of the transmission circuit. When the first holding portion is connected with the second holding portion, the two transmission plugs are detachably connected with the first interface socket and the second interface socket to fasten the expansion device to the computer.

Owner:PEGATRON

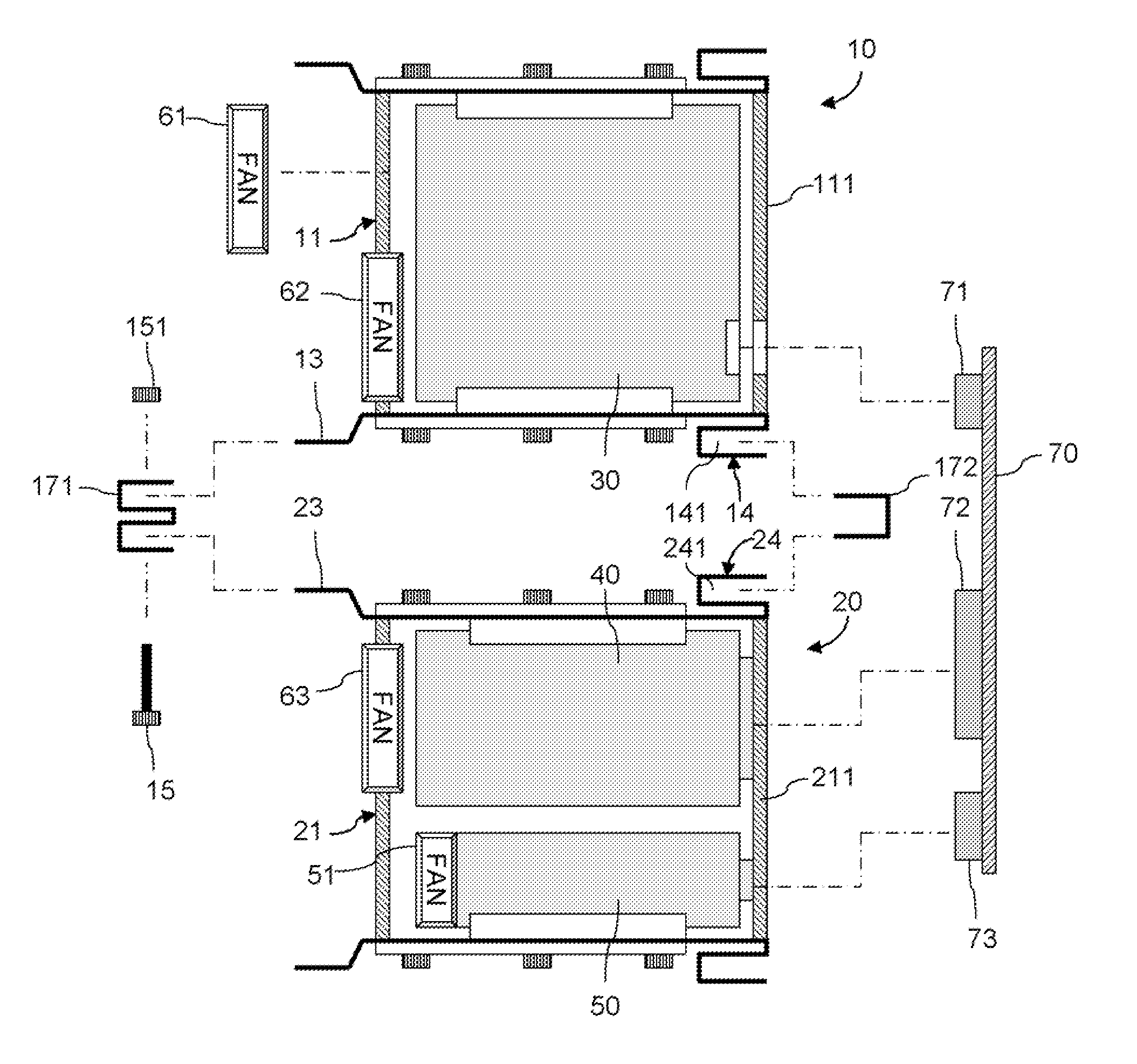

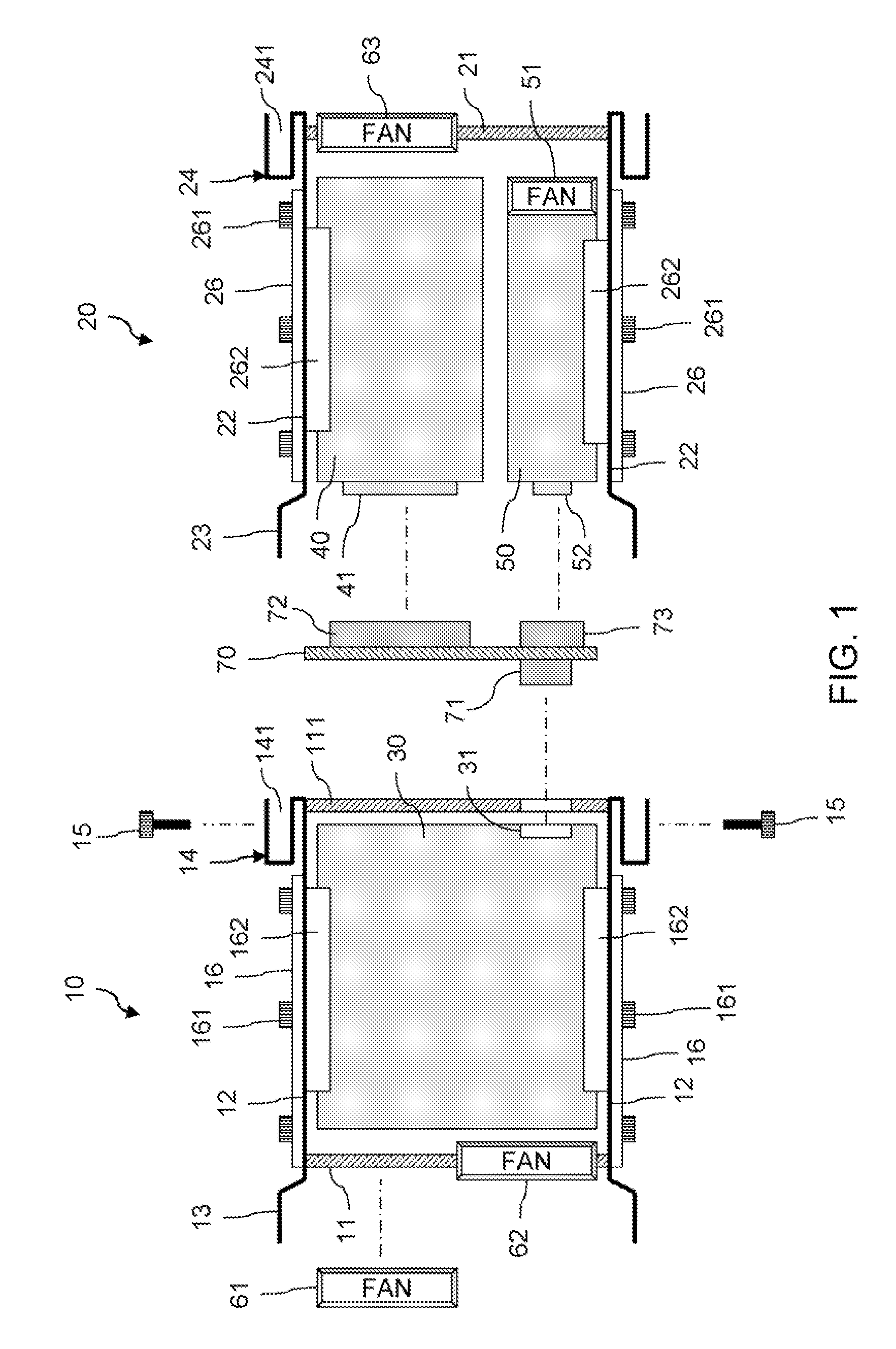

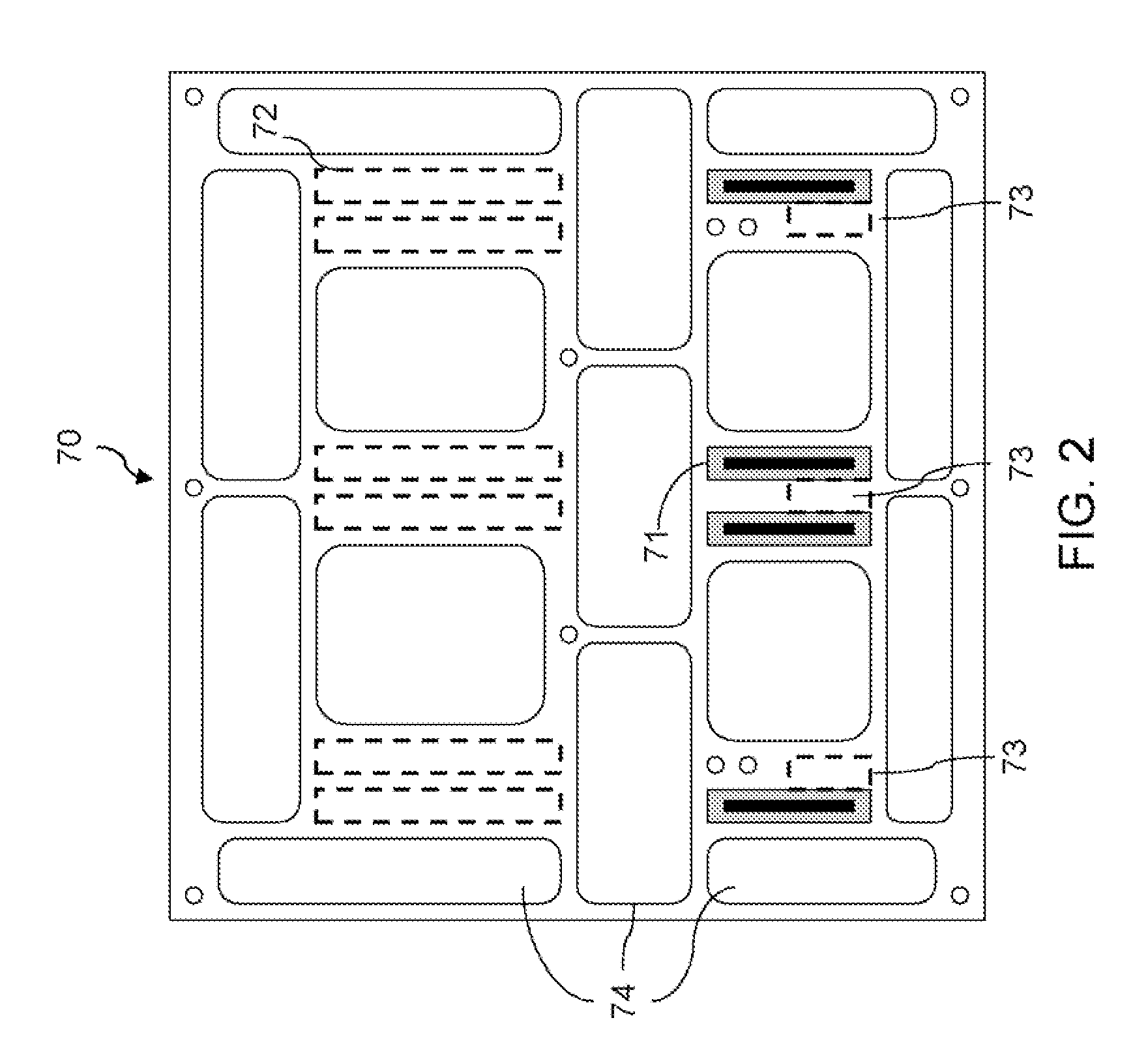

Scalable computer system and reconfigurable chassis module thereof

Active | US7656669B2 | Better accommodate, Maximize space utilization, Digital data processing details, Rack/frame construction, Scalable computing, Modular unit

A scalable computer system includes a reconfigurable chassis module, plural hardware units and one or more inter-planes. The chassis module has plural modular units in which the hardware units are respectively configured. Each of the modular units has a dedicated framework to attach the inter-plane or dedicated fans. The inter-plane connects the separated hardware units between the modular units. Each of the modular units is equipped with compatible male and female joints that engage with each other. Certain fastening assemblies may be applied to secure male-male or female-female joints, thereby enabling the modular units to be connected front-to-back and/or side-by-side.

Owner:MITAC INT CORP

Securely using service providers in elastic computing systems and environments

Inactive | US8601534B2 | Improve abilities, Easy to use, Digital data authentication, Program control, Scalable computing, Service provision

Access permission can be assigned to a particular individually executable portion of computer executable code (“component-specific access permission”) and enforced when that individually executable portion (or component) accesses the services of a service provider. At least one of the individually executable portions can request the services when executed by a dynamically scalable computing resource provider. In addition, general and component-specific access permissions, associated respectively with the executable computer code as a whole or with one of its specific portions (or components), can be cancelled or rendered inoperable in response to an explicit request for cancellation.

Owner:SAMSUNG ELECTRONICS CO LTD

Multiple peer groups for efficient scalable computing

Inactive | US20080080529A1 | Time-division multiplex, Data switching by path configuration, Scalable computing, Distributed computing

Owner:MICROSOFT TECH LICENSING LLC

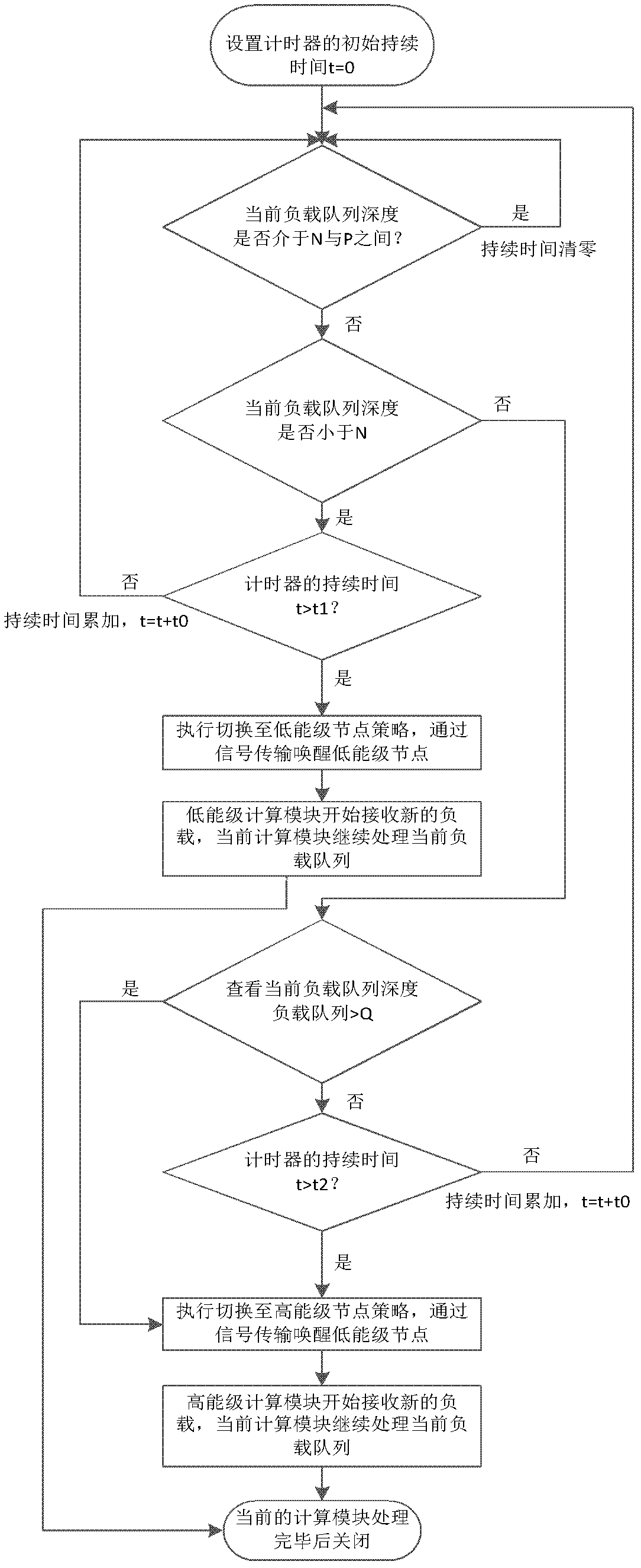

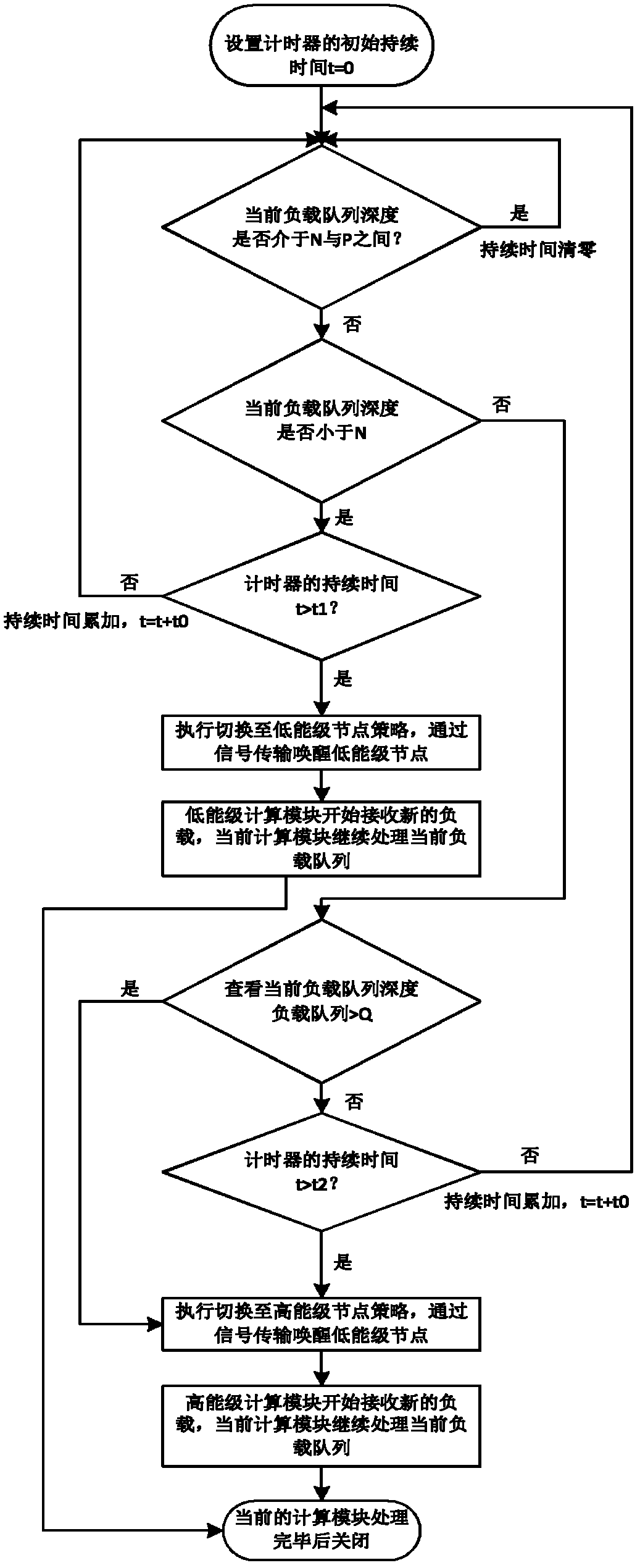

Server and working method thereof

Active | CN102521046A | Solve processing performance bottlenecks, Eliminate overhead, Energy efficient ICT, Resource allocation, Scalable computing, Current load

The invention provides a working method of a server, comprising the following steps: turning on a timer and setting its initial endurance time t to 0; judging whether the depth of the current load queue is between a low load threshold and a high load threshold; if it is not, judging whether the depth of the current load queue is less than the low load threshold; if it is, judging whether the endurance time t of the timer is greater than a low load time threshold; and if it is, switching from the current calculating module to another, lower-energy-level calculating module, which then begins receiving new load. The advantages of the working method are that the performance of the calculating modules can be extended, storage space can be extended flexibly, the energy consumption of the calculating modules can be regulated according to performance requirements, and the idle-load energy consumption of the system can be minimized to achieve high-efficiency service.

Owner:HUAZHONG UNIV OF SCI & TECH
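
The decision sequence in this abstract (timer, queue-depth band check, low-load duration check, step down to a lower-energy module) can be sketched as follows. The class and field names are hypothetical, the thresholds are treated as inclusive, and resetting the timer when the load returns to the band is an assumption. The high-load branch is left as a no-op because the abstract does not describe it.

```python
from dataclasses import dataclass


@dataclass
class EnergyLevelController:
    low: int                   # low load threshold (queue depth)
    high: int                  # high load threshold (queue depth)
    low_time_threshold: float  # how long low load must persist before switching
    levels: list               # compute modules, lowest energy level first
    current: int               # index of the module currently receiving load
    t: float = 0.0             # timer "endurance time", starts at 0

    def tick(self, depth: int, dt: float) -> str:
        """Evaluate the current load queue depth after dt seconds."""
        if self.low <= depth <= self.high:
            self.t = 0.0  # assumption: in-band load resets the timer
        elif depth < self.low:
            self.t += dt
            if self.t > self.low_time_threshold and self.current > 0:
                self.current -= 1  # hand new load to the next lower-energy module
                self.t = 0.0
        # depth > high: the abstract does not describe this branch; no action.
        return self.levels[self.current]
```

Requiring the low load to persist past `low_time_threshold` before switching keeps a brief lull from triggering a module change.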

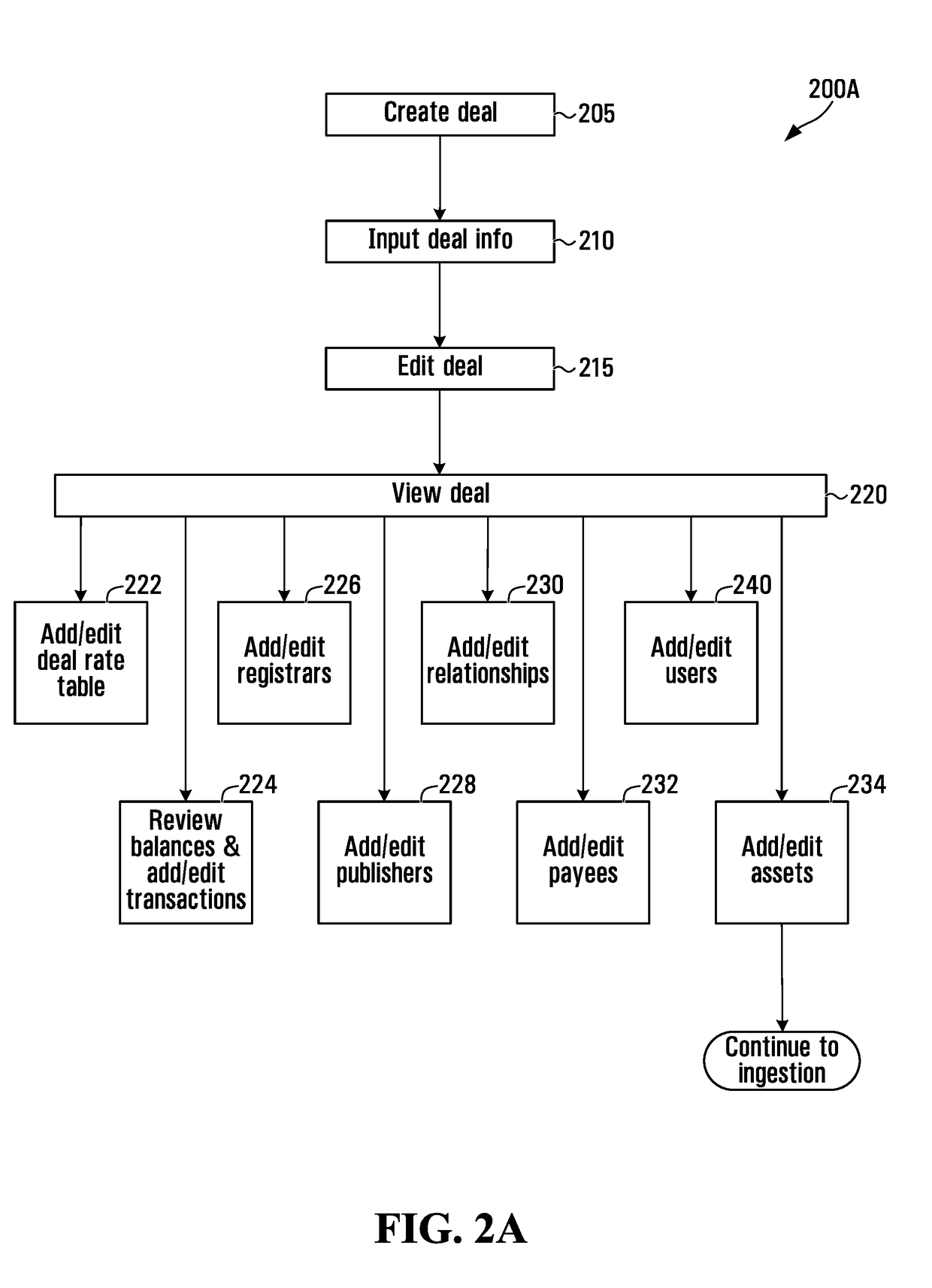

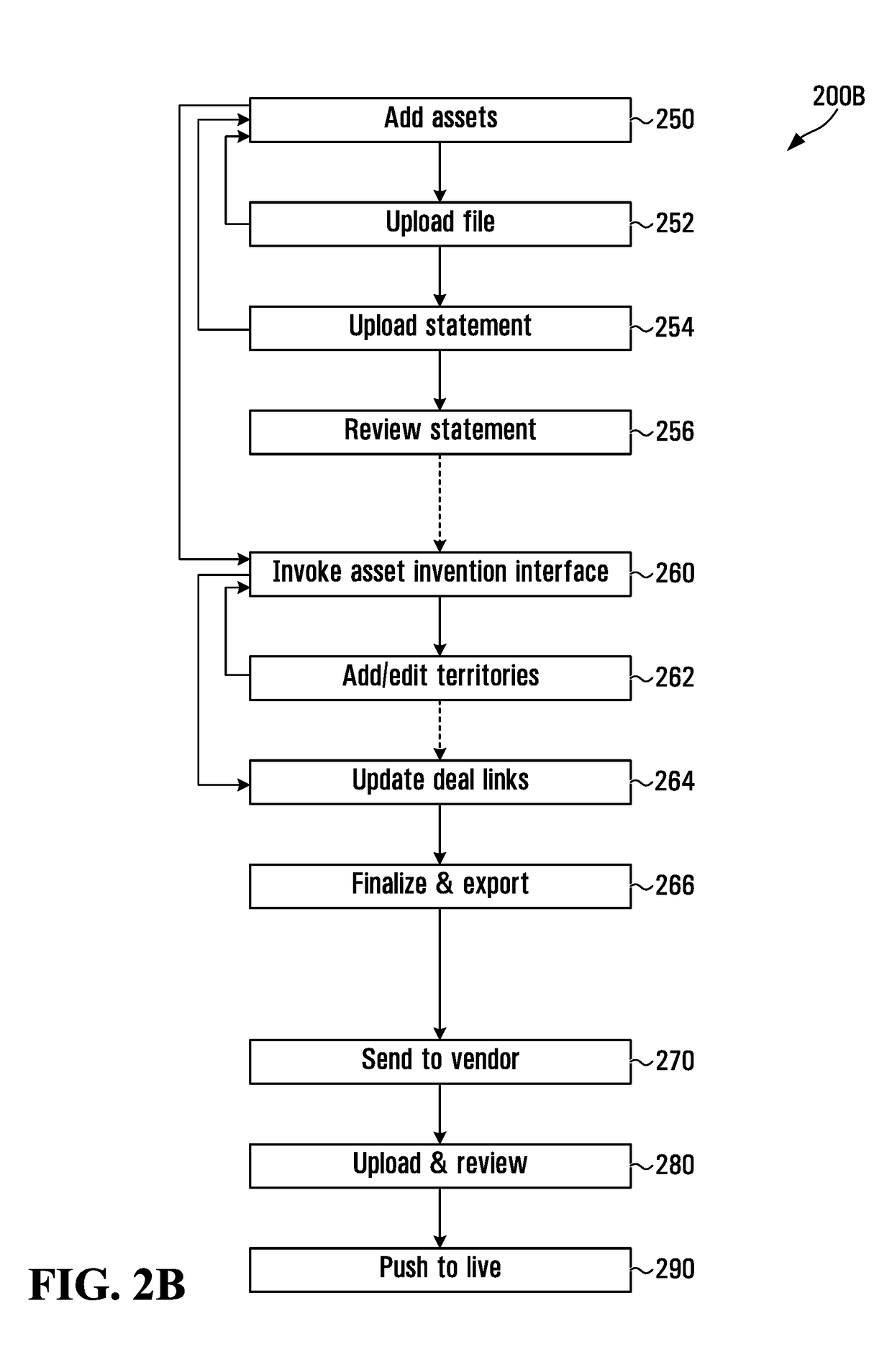

Scalable computing systems and methods for intellectual property rights and royalty management

Scalable computing systems and methods for the management of intellectual property assets and royalty payments. Income can be derived from a number of different sources in association with intellectual property assets. The data from these sources can be conformed into a common format to facilitate the management of intellectual property assets and royalty payments. Intellectual property assets can be organized into deals and stored in a database. The deals can also include parameters such as rate tables and the rights holders associated with the assets of the deal. With the conformed data, the asset listings in the statements can be matched to the assets in the database. The matching can be done automatically, using fuzzy matching, or manually if the automatic and fuzzy matching fail to produce a match. The royalties can then be calculated in parallel for all rights holders of a given asset across all jurisdictions using a dynamic flow network created for particular asset-deal relationships.

Owner:ANTHEM ENTERTAINMENT GP INC
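
The exact-then-fuzzy-then-manual matching step and a per-holder royalty split can be sketched with the standard library. This is a sketch under assumptions: `match_asset`, `split_royalty`, the 0.8 cutoff and cent rounding are illustrative choices, Python's `difflib` stands in for whatever fuzzy matcher the patent uses, and the dynamic flow network and parallel calculation are not modelled.

```python
import difflib
from decimal import Decimal, ROUND_HALF_UP


def match_asset(title, catalog, cutoff=0.8):
    """Match a statement line to a catalogued asset: exact (case-insensitive)
    first, then fuzzy; None means the line goes to manual matching."""
    lookup = {name.lower(): name for name in catalog}
    key = title.strip().lower()
    if key in lookup:
        return lookup[key]
    close = difflib.get_close_matches(key, list(lookup), n=1, cutoff=cutoff)
    return lookup[close[0]] if close else None


def split_royalty(amount, shares):
    """Divide one income amount among rights holders by their fractional shares,
    rounding each payment to cents."""
    cents = Decimal("0.01")
    return {holder: (amount * share).quantize(cents, rounding=ROUND_HALF_UP)
            for holder, share in shares.items()}
```

`Decimal` is used instead of `float` so that payment amounts round predictably to cents.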

Multiple peer groups for efficient scalable computing

Inactive | US20080080528A1 | Time-division multiplex, Data switching by path configuration, Scalable computing, Group operation

Owner:MICROSOFT TECH LICENSING LLC

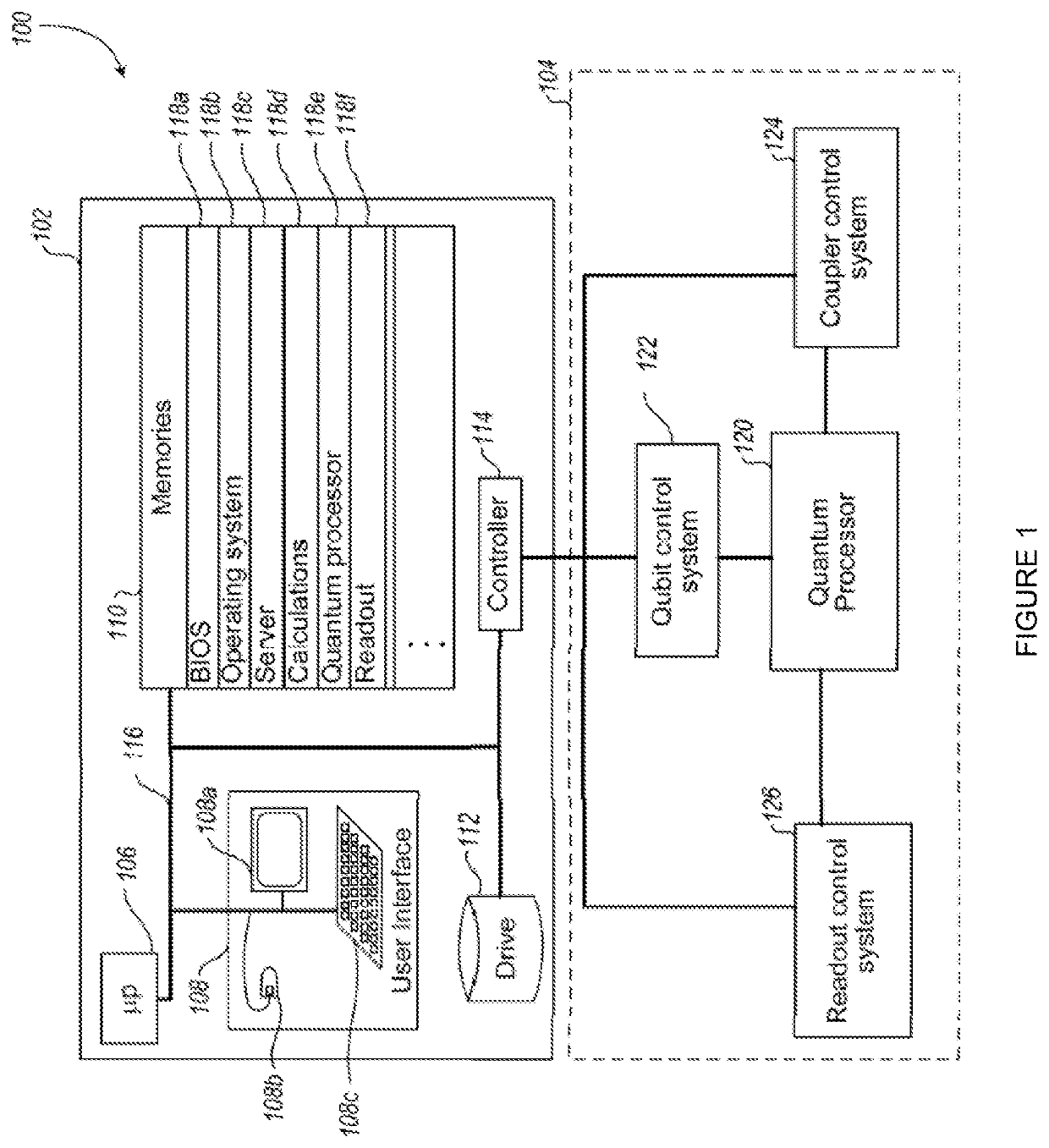

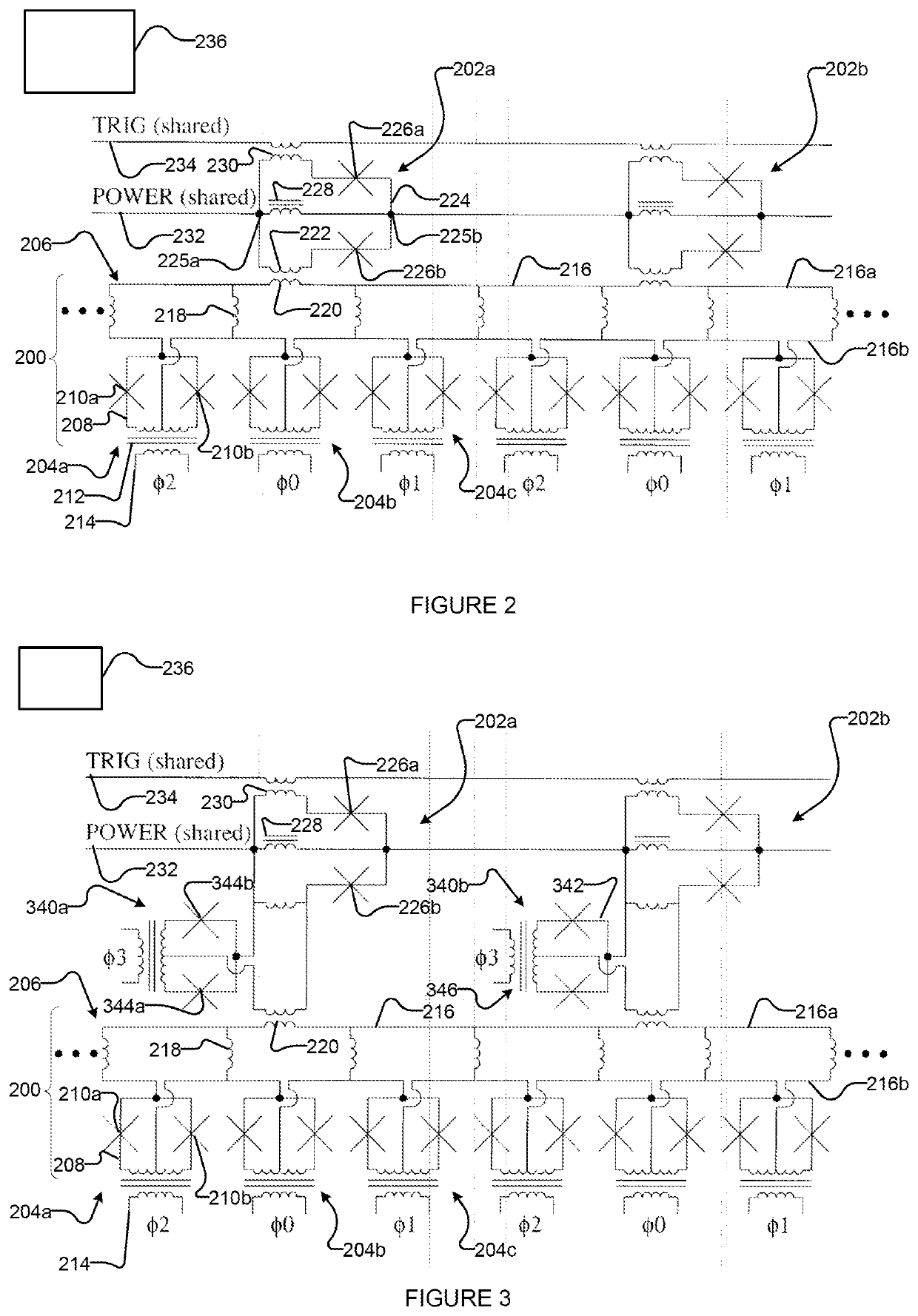

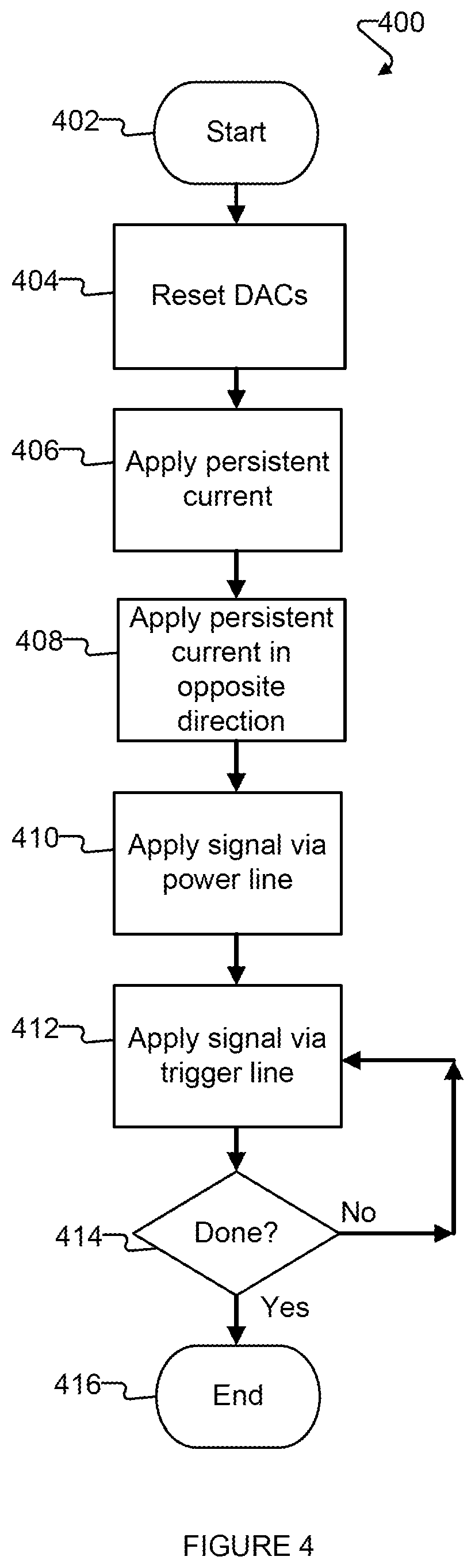

Systems and methods for superconducting devices used in superconducting circuits and scalable computing

Active | US20210013391A1 | Take advantage, Improve performance, Quantum computers, Superconductor details, Shift register, Converters

Approaches useful to the operation of scalable processors with ever larger numbers of logic devices (e.g., qubits) advantageously use QFPs, for example to implement shift registers, multiplexers (i.e., MUXs), de-multiplexers (i.e., DEMUXs), permanent magnetic memories (i.e., PMMs), and the like, and/or employ XY or XYZ addressing schemes, and/or employ control lines that extend in a “braided” pattern across an array of devices. Many of these approaches are particularly suited to implementing input to and/or output from such processors. Superconducting quantum processors comprising superconducting digital-analog converters (DACs) are provided. The DACs may use kinetic inductance to store energy via thin-film superconducting materials and/or series of Josephson junctions, and may use single-loop or multi-loop designs. Particular constructions of energy storage elements are disclosed, including meandering structures. Galvanic connections between DACs and/or with target devices are disclosed, as well as inductive connections.

Owner:1372934 B C LTD

© 2025 PatSnap. All rights reserved.