1012 results about How to "Avoid overhead" patented technology

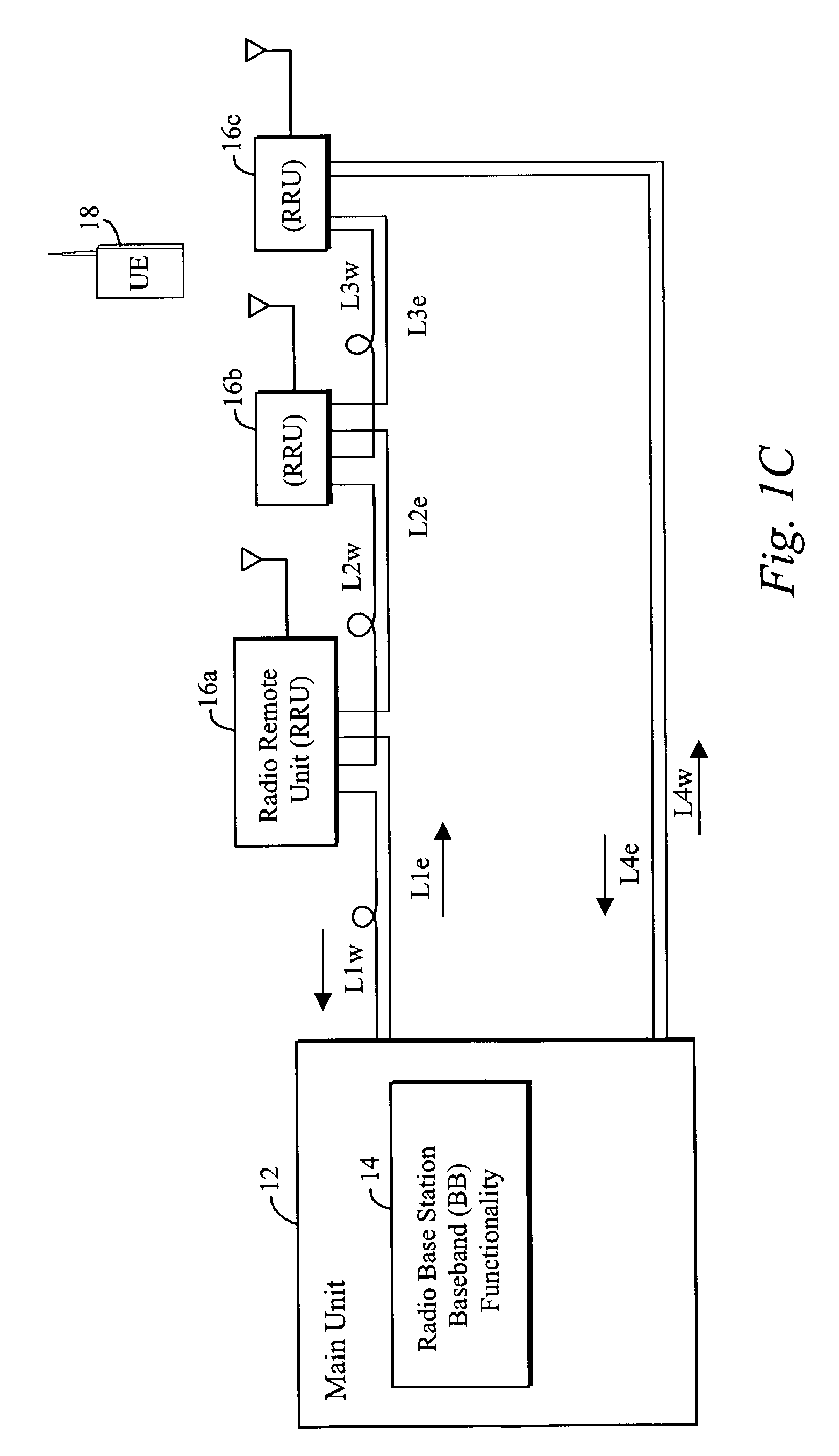

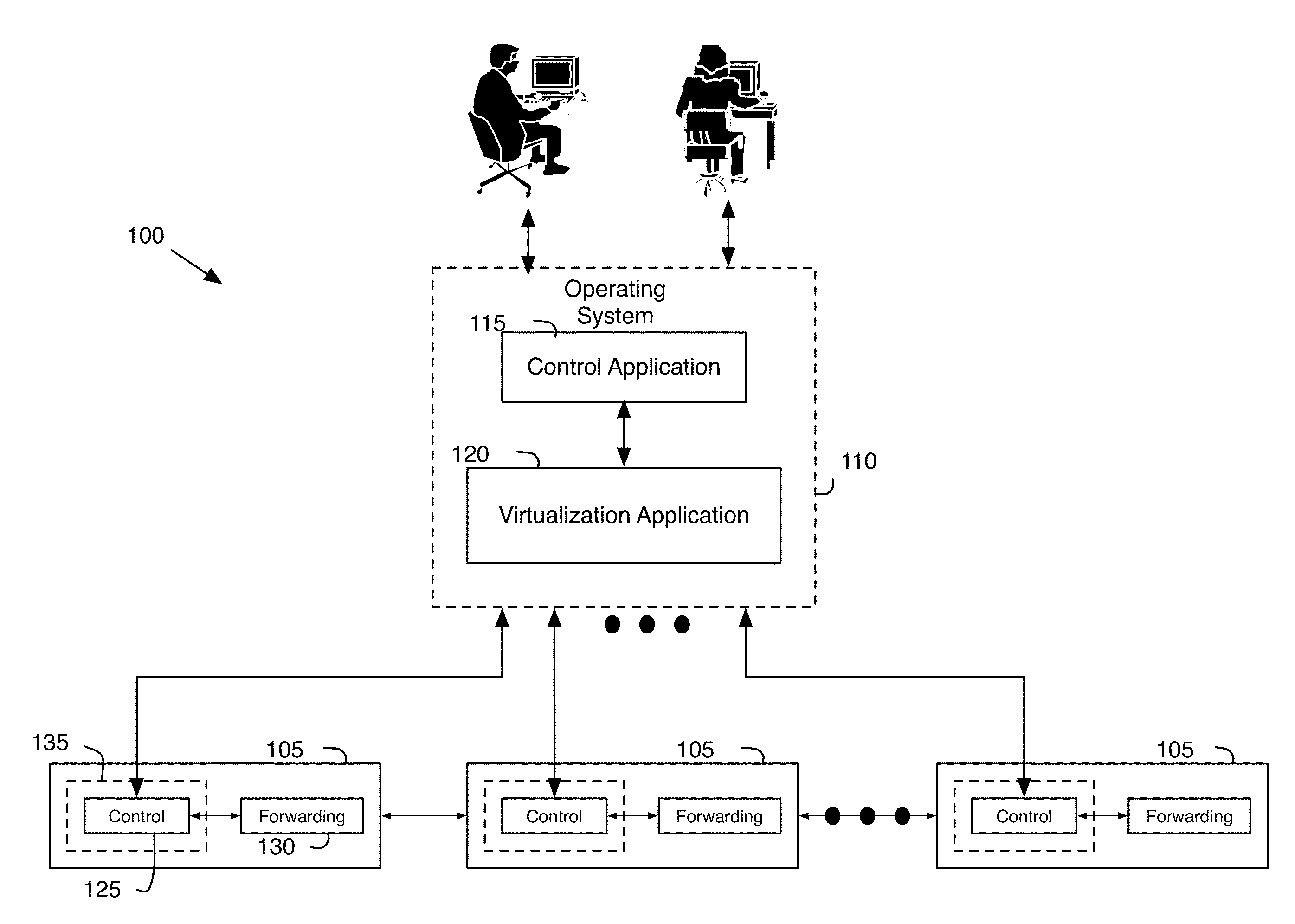

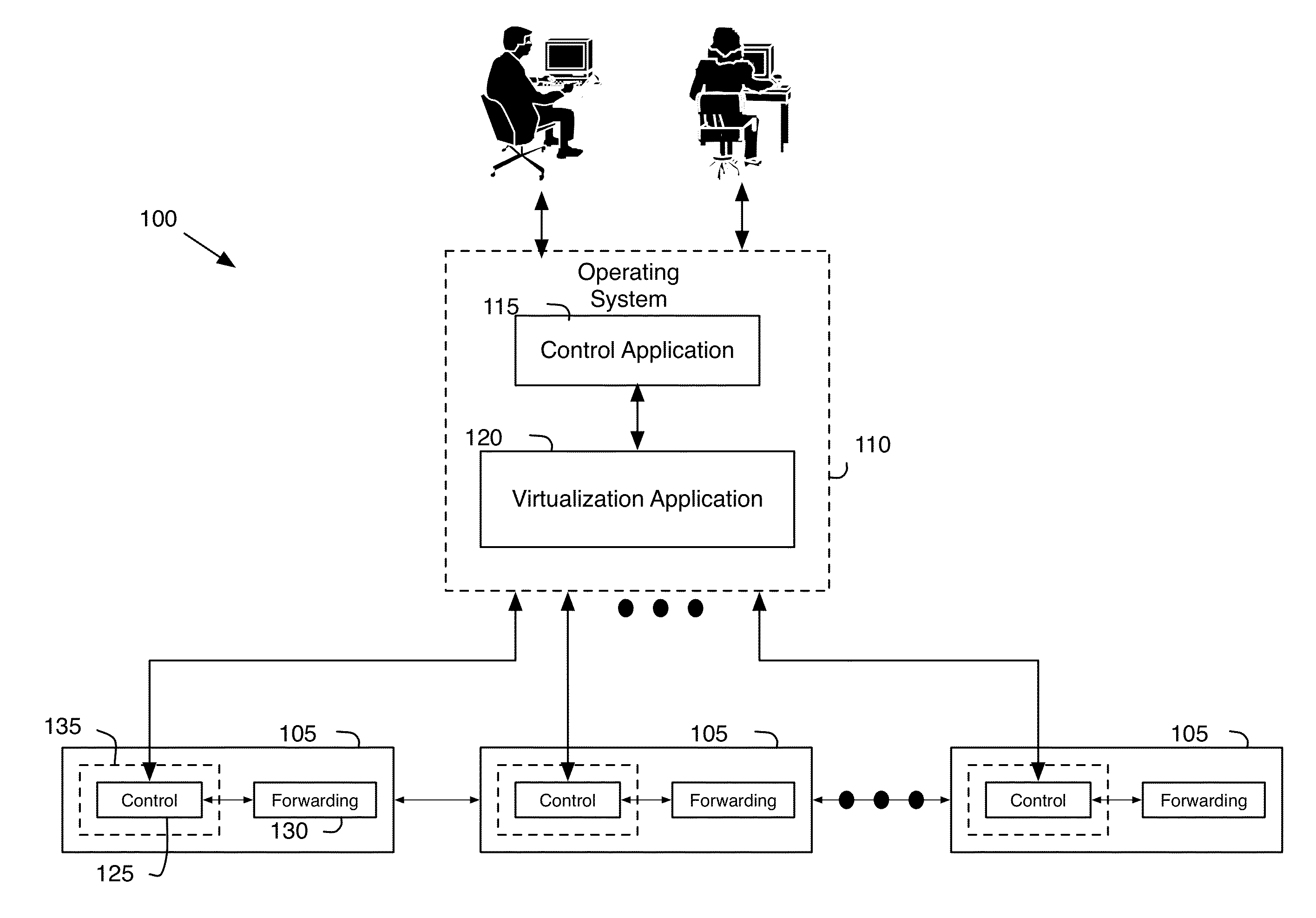

Chassis controller

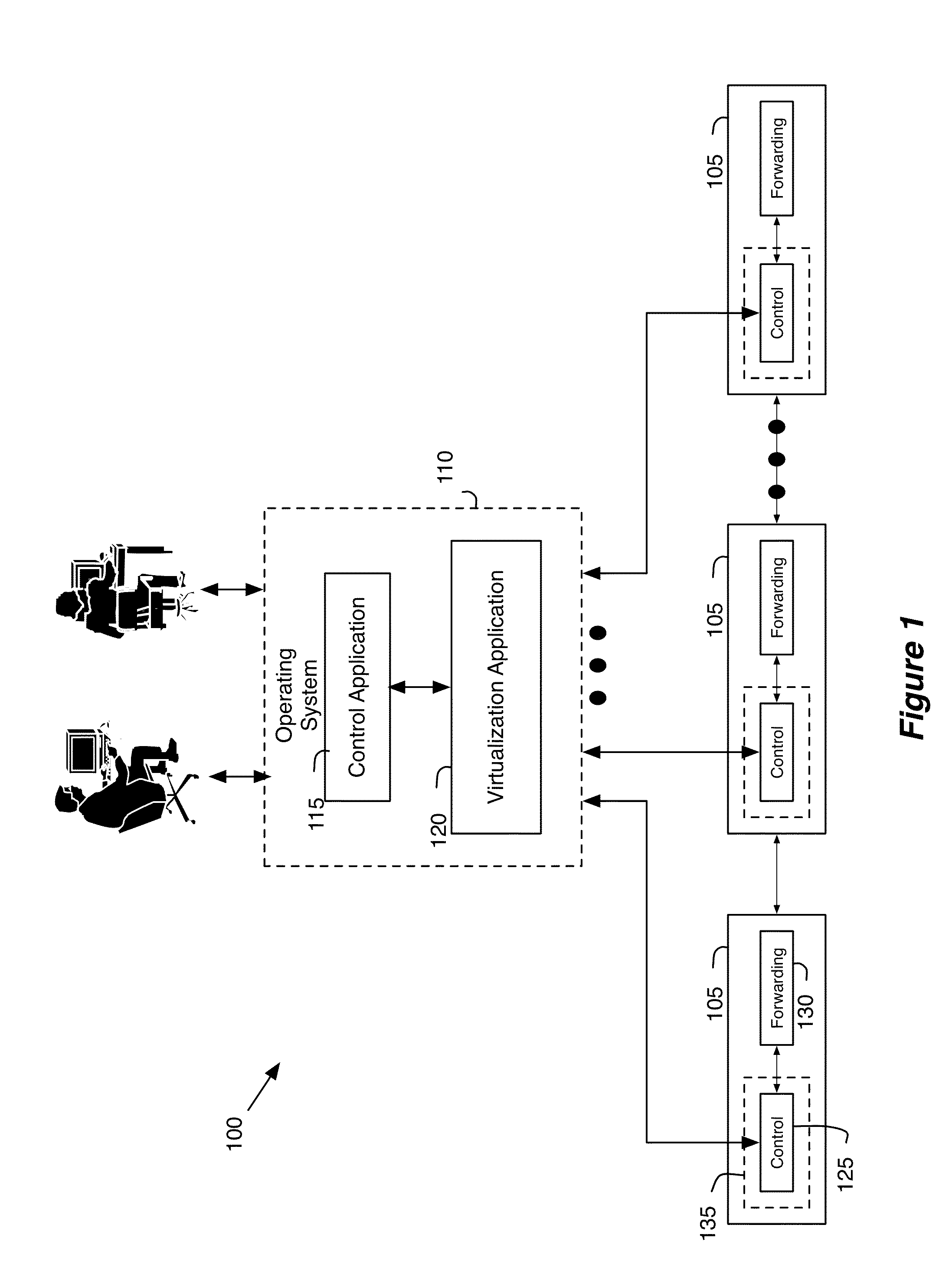

Active | US20130103817A1 | Avoid becoming scalability bottleneck | Control volume | Digital computer details | Electric controllers | Network control | Datapath

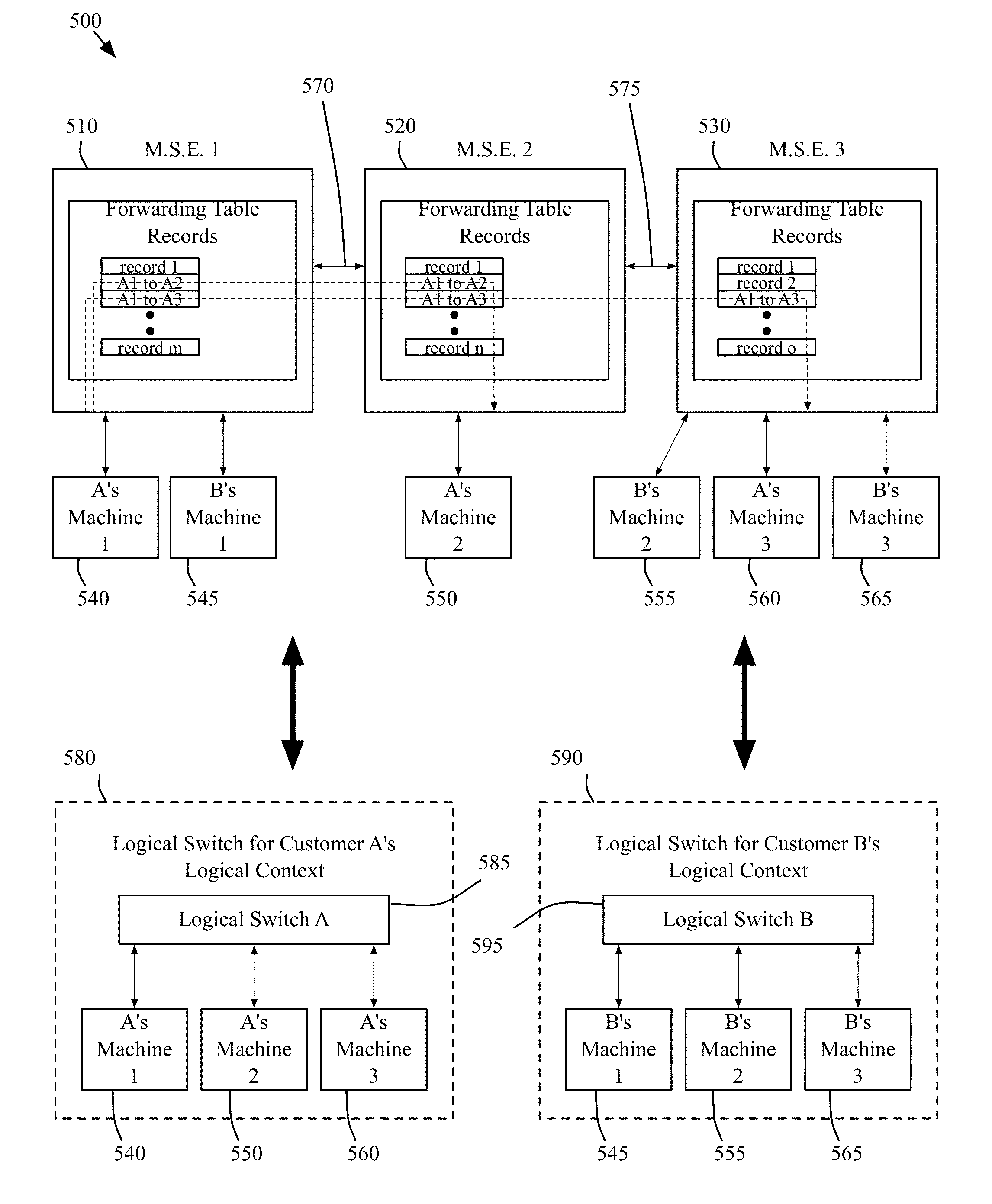

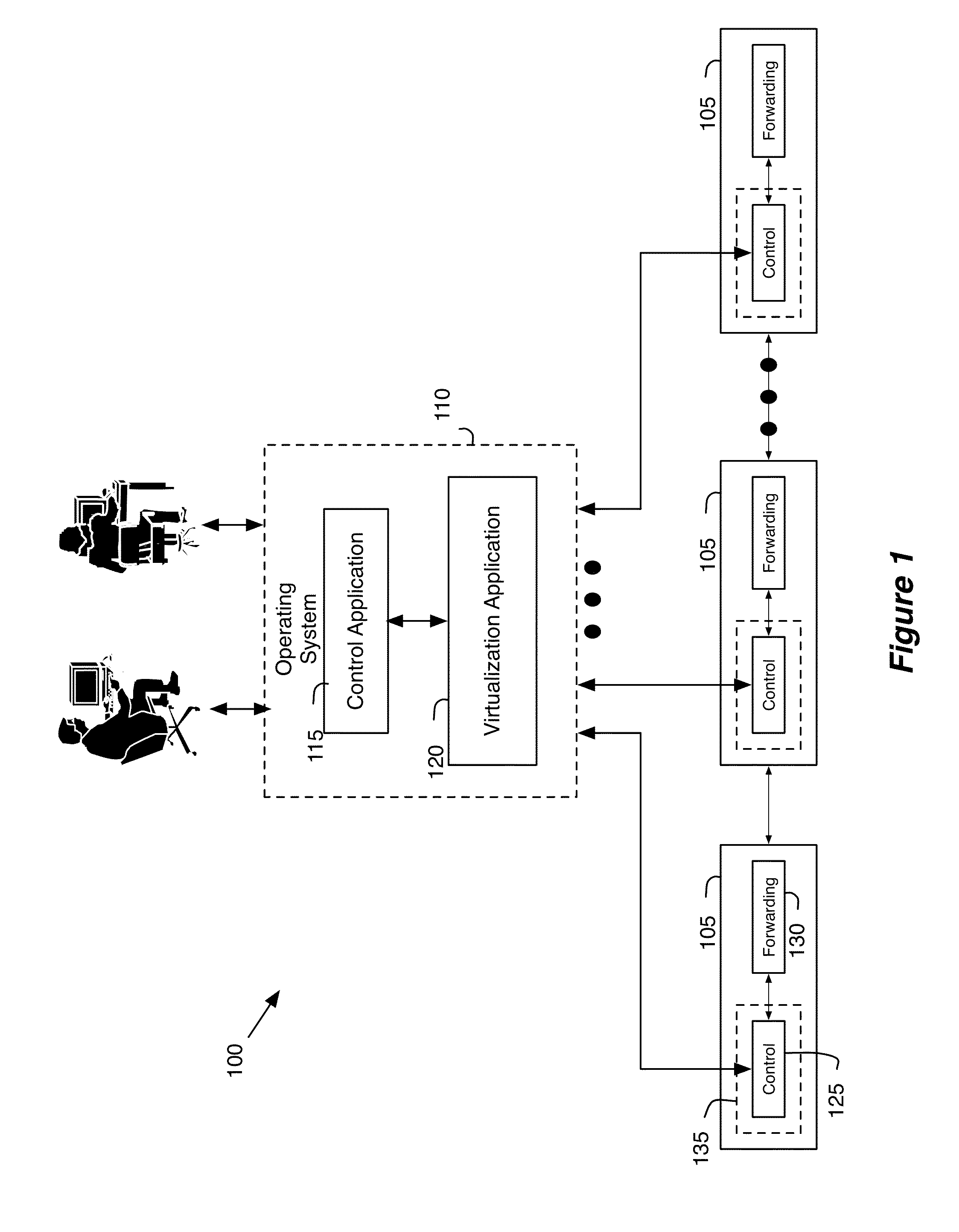

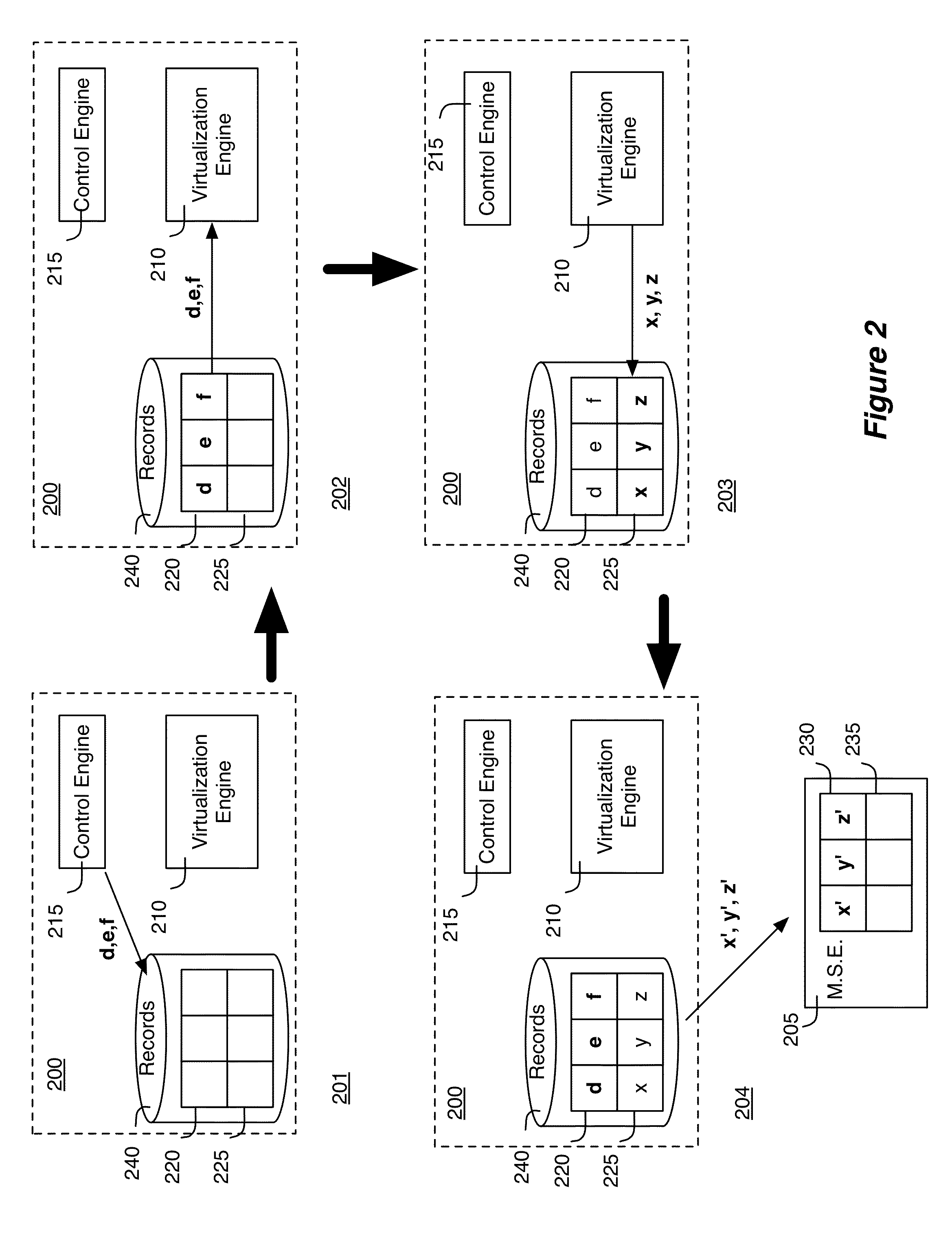

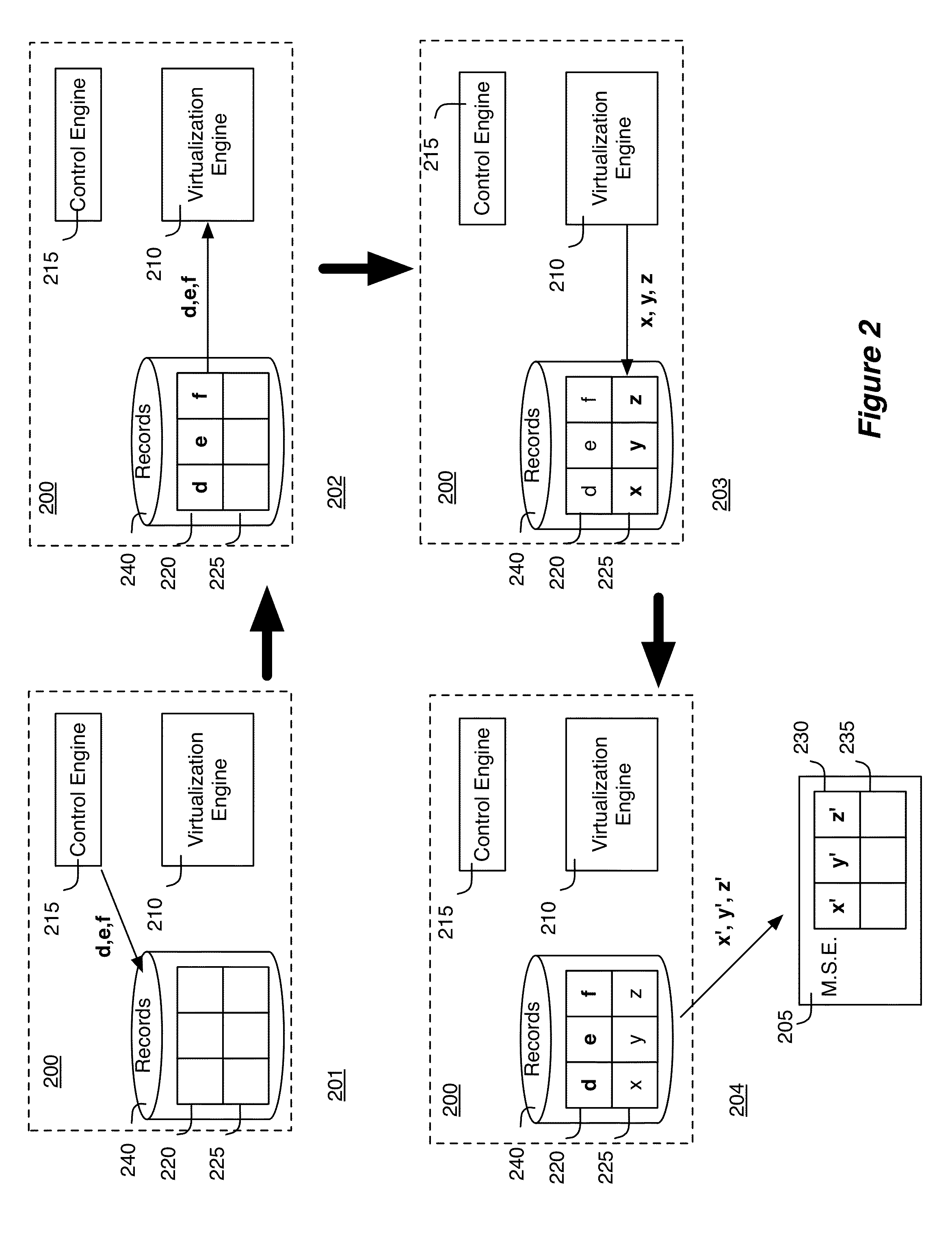

A network control system for generating physical control plane data for managing first and second managed forwarding elements that implement forwarding operations associated with a first logical datapath set is described. The system includes a first controller instance for converting logical control plane data for the first logical datapath set to universal physical control plane (UPCP) data. The system further includes a second controller instance for converting UPCP data to customized physical control plane (CPCP) data for the first managed forwarding element but not the second managed forwarding element. The system further includes a third controller instance for receiving UPCP data generated by the first controller instance, identifying the second controller instance as the controller instance responsible for generating the CPCP data for the first managed forwarding element, and supplying the received UPCP data to the second controller instance.

Owner:NICIRA

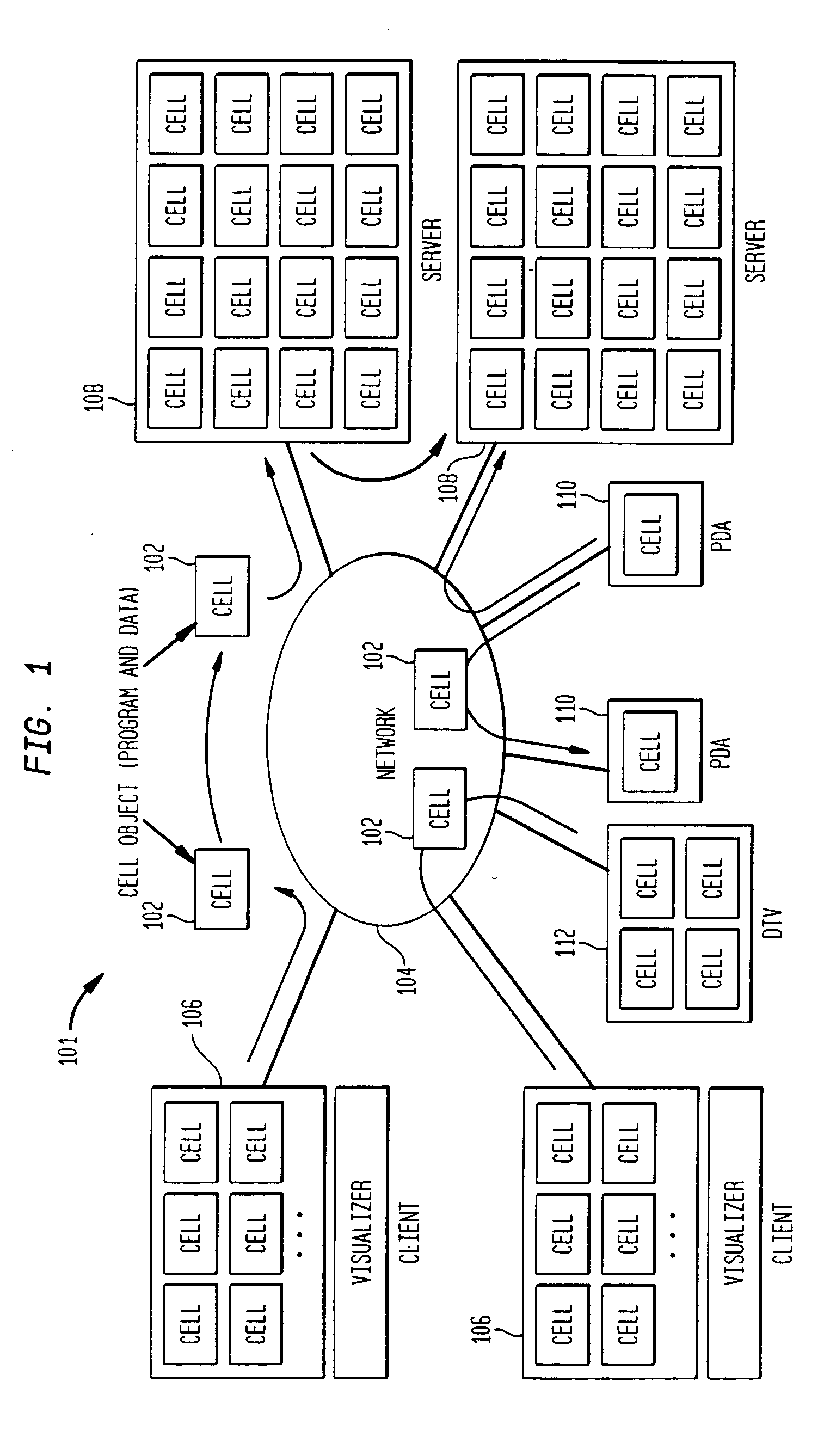

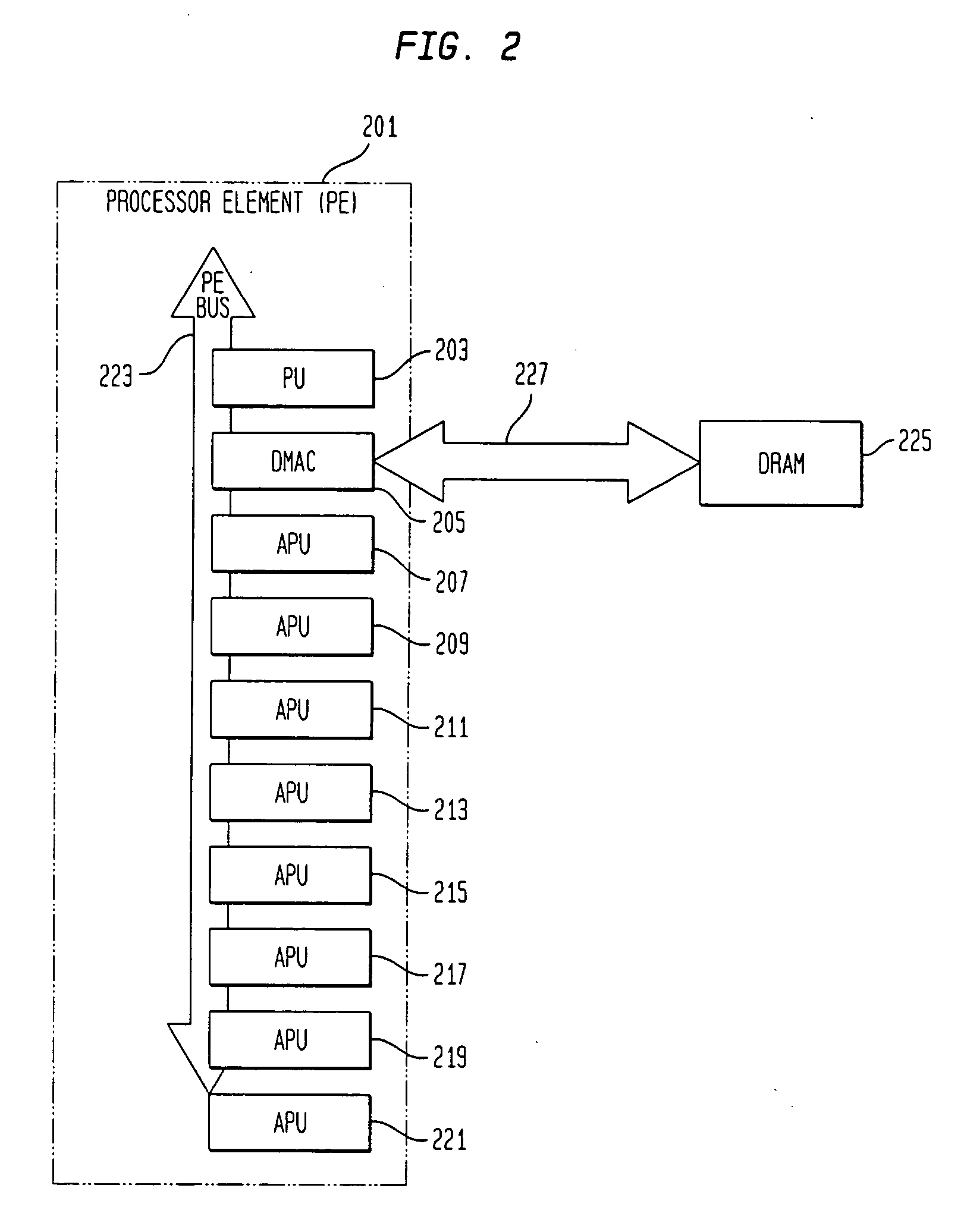

System and method for data synchronization for a computer architecture for broadband networks

Active | US20050081213A1 | Avoid conflict | Avoid computational overhead | Volume/mass flow measurement | Unauthorized memory use protection | Modular structure | Broadband networks

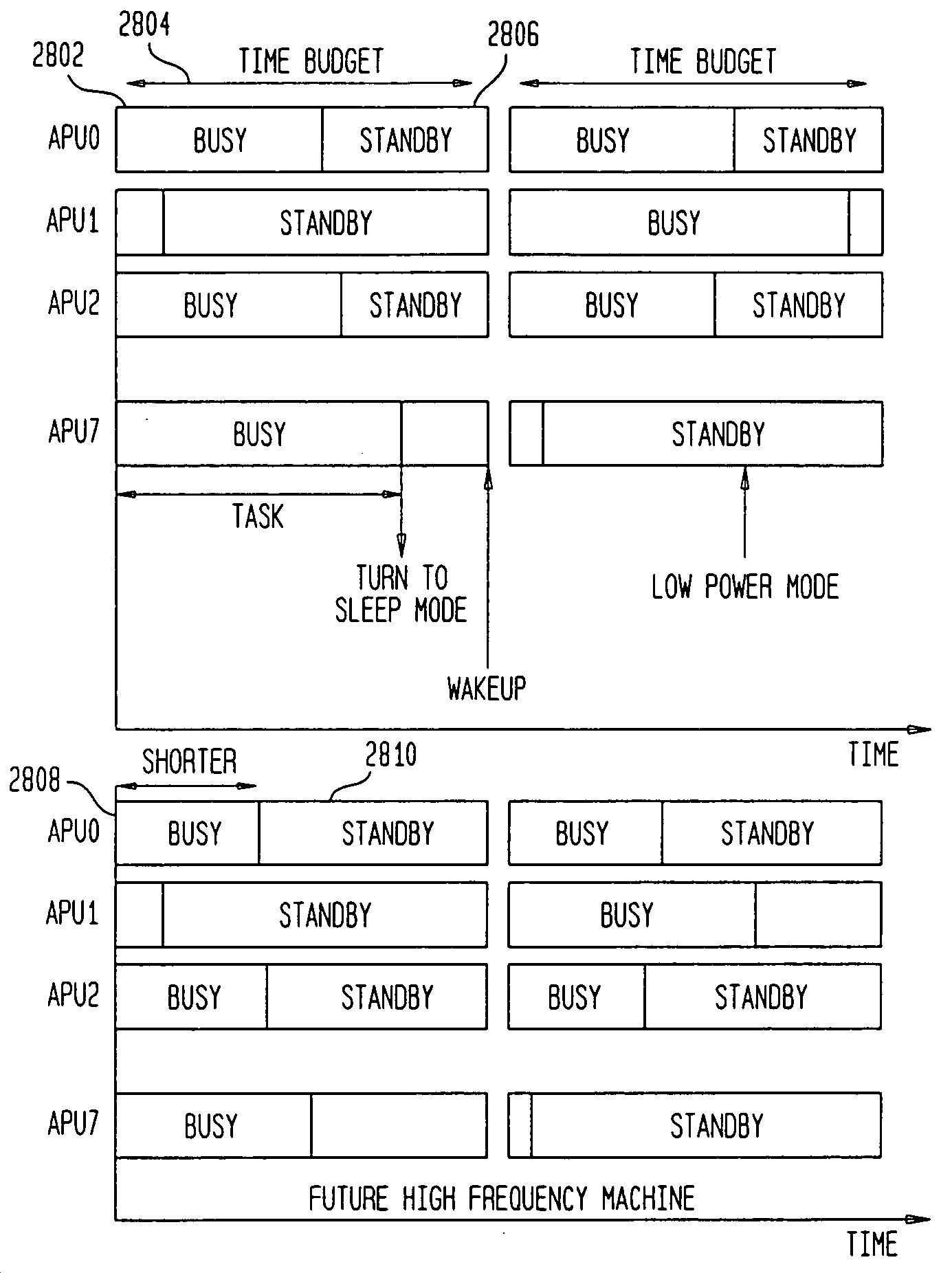

A computer architecture and programming model for high speed processing over broadband networks are provided. The architecture employs a consistent modular structure, a common computing module and uniform software cells. The common computing module includes a control processor, a plurality of processing units, a plurality of local memories from which the processing units process programs, a direct memory access controller and a shared main memory. A synchronized system and method for the coordinated reading and writing of data to and from the shared main memory by the processing units also are provided. A processing system for processing tasks is also provided. The processing system includes processing devices and an absolute timer. The absolute timer defines a time budget. The time budget provides a time period for the completion of tasks by selected processing devices independent of clock frequencies employed by the processing devices for processing the tasks.

Owner:SONY COMPUTER ENTERTAINMENT INC

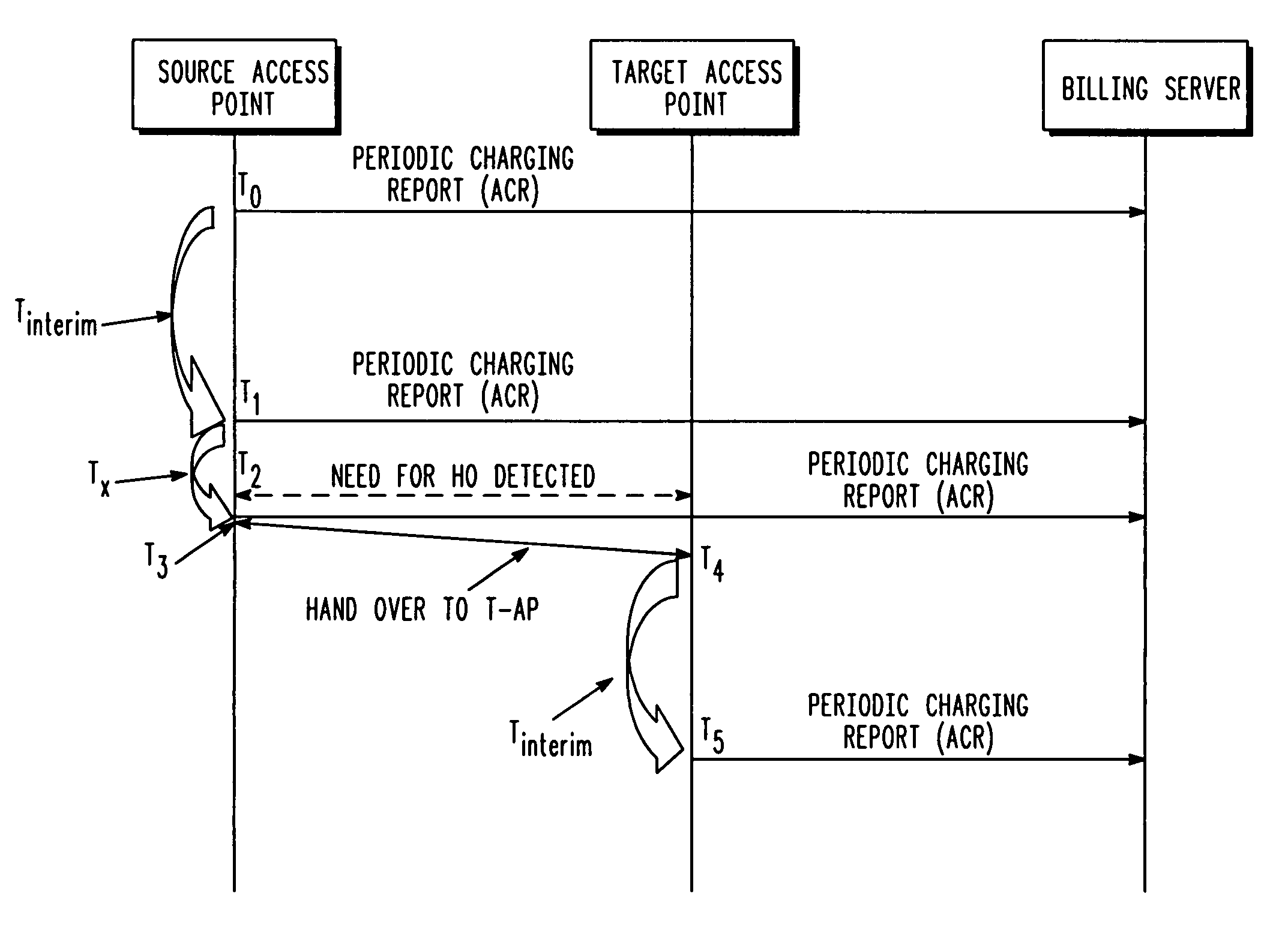

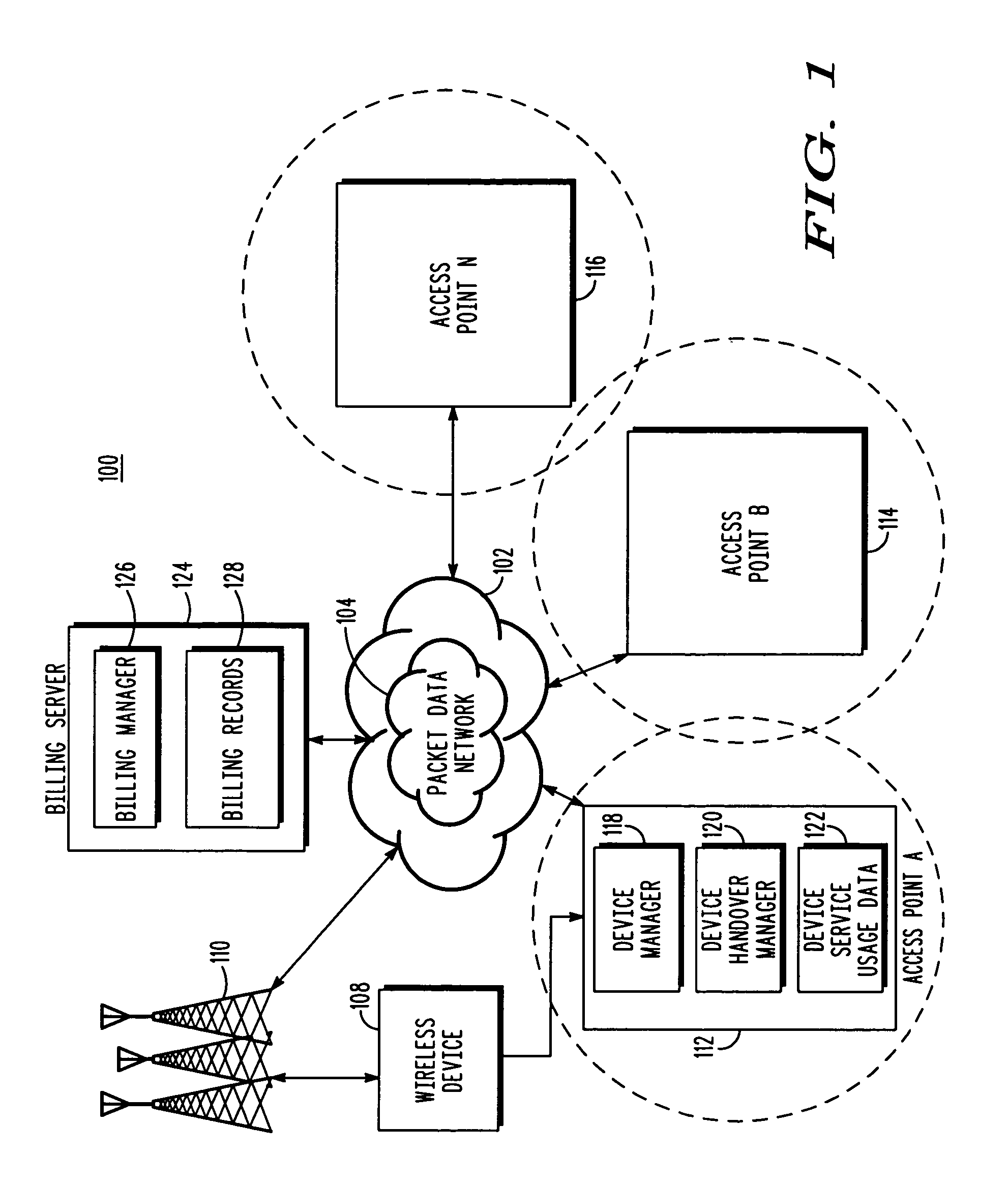

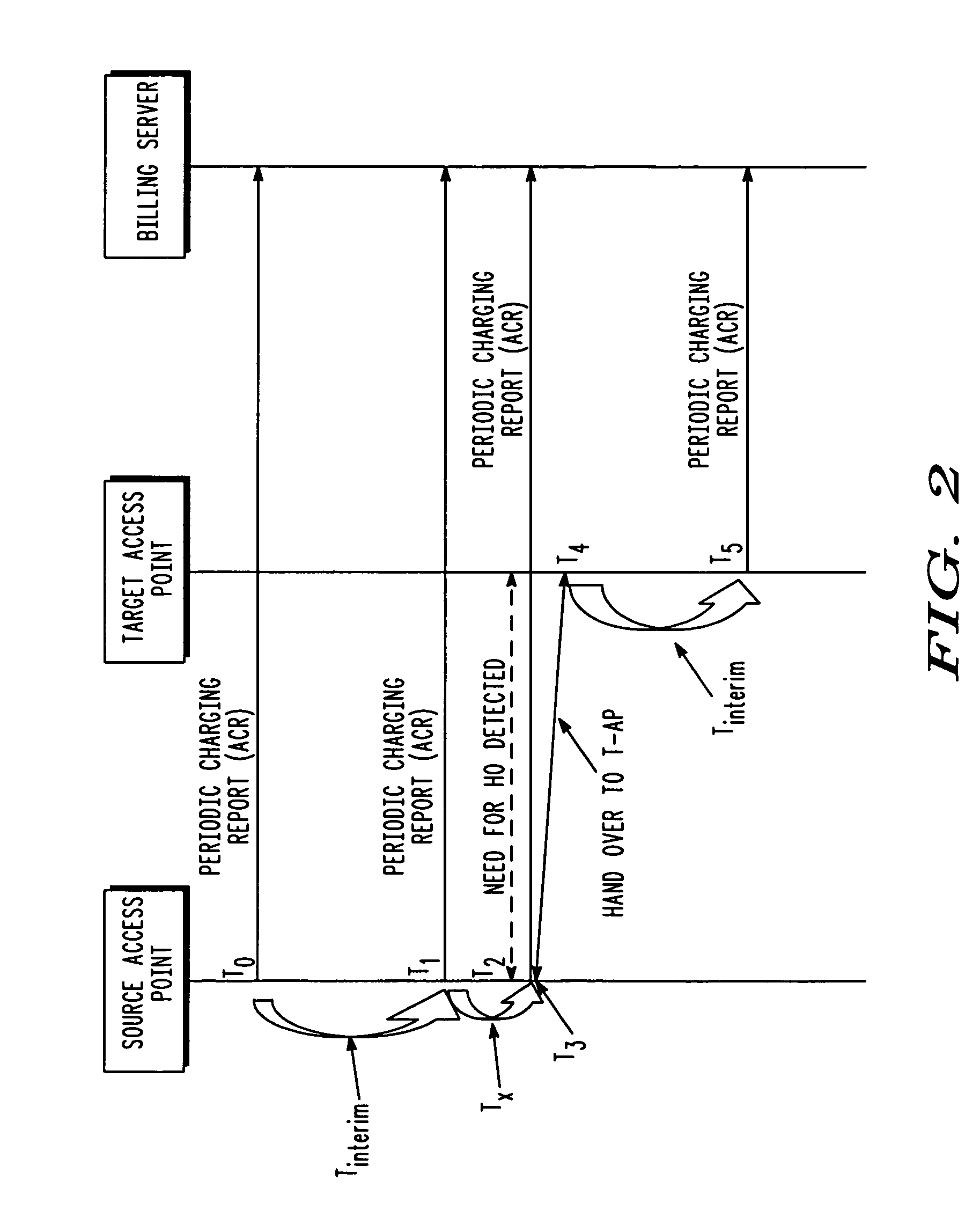

Accurate billing for services used across multiple serving nodes

Inactive | US8463232B2 | Avoid overhead | Maintain integrity | Accounting/billing services | Network traffic/resource management | NODAL | Communications system

Owner:GOOGLE TECHNOLOGY HOLDINGS LLC

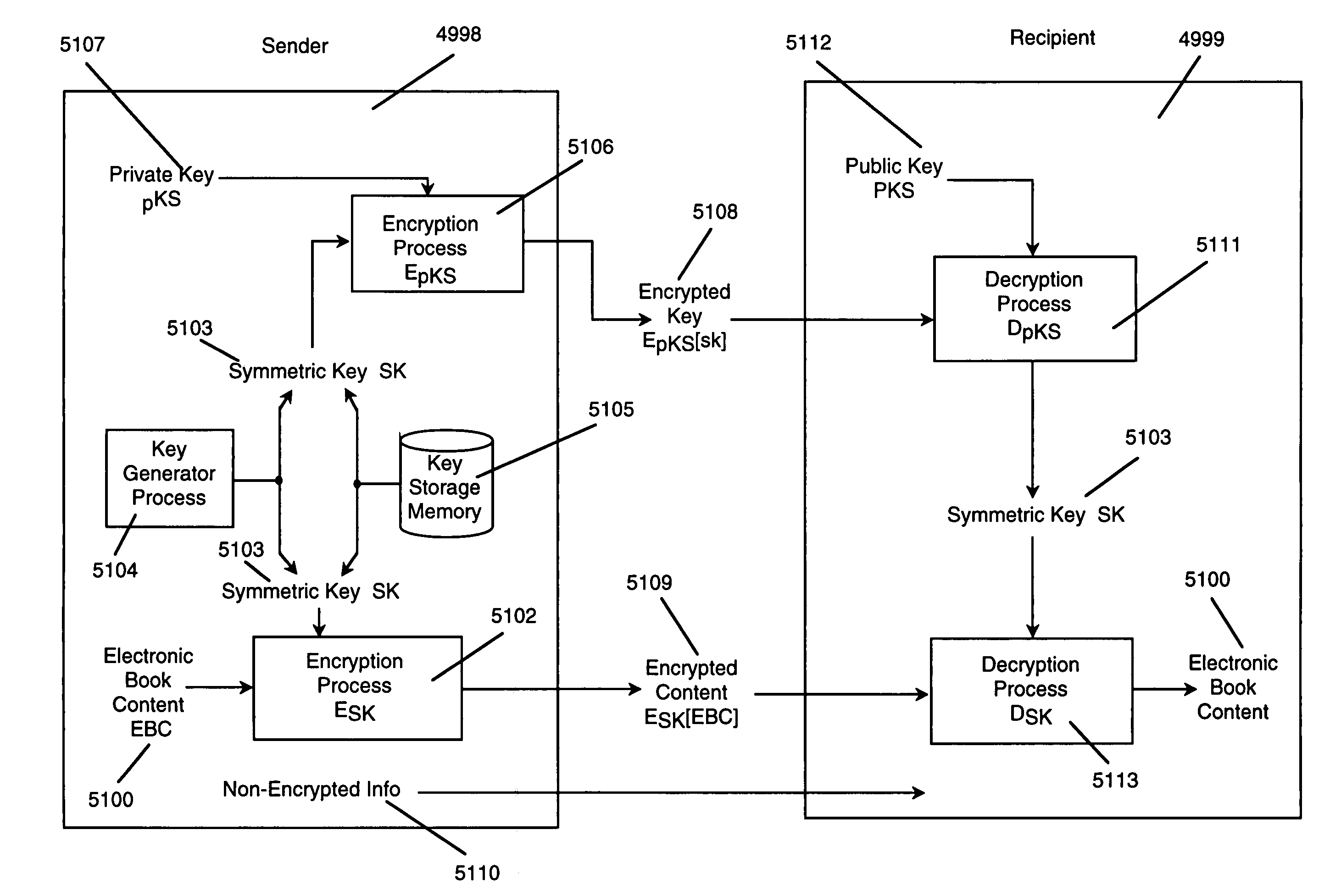

Electronic book security and copyright protection system

Inactive | US7298851B1 | High tech aura | Easy to use | Key distribution for secure communication | Digital data processing details | Graphics | Payment

Owner:ADREA LLC

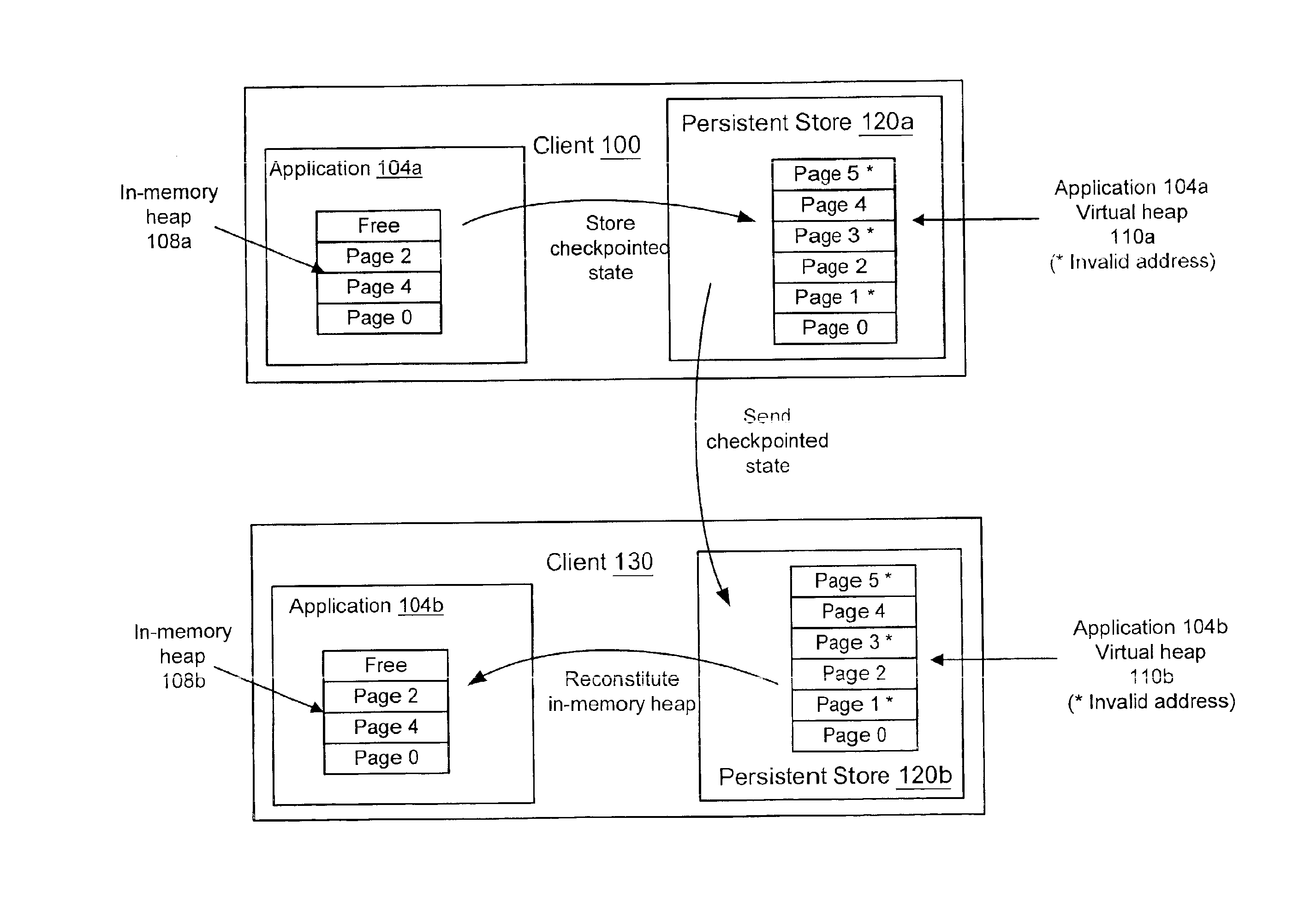

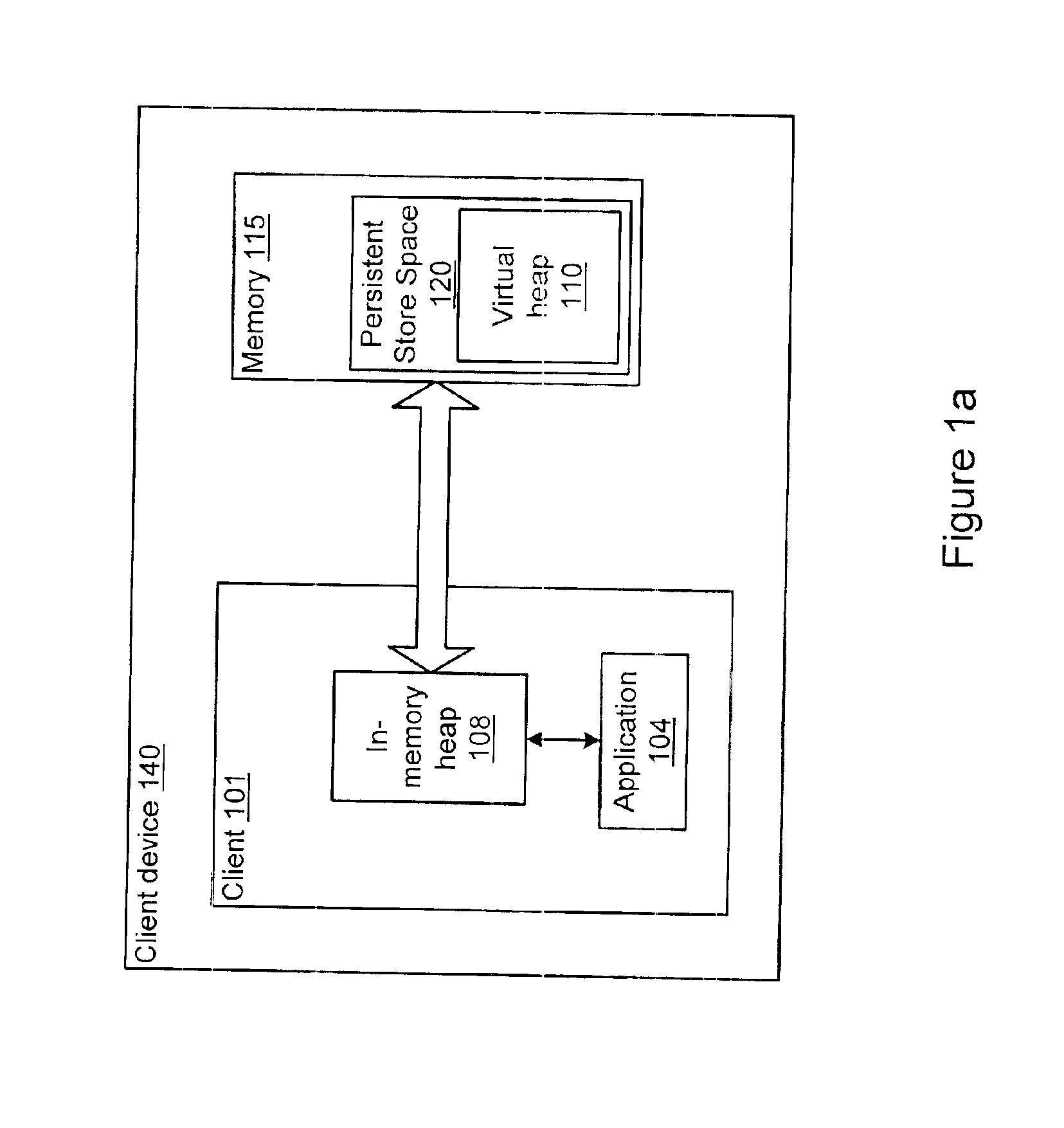

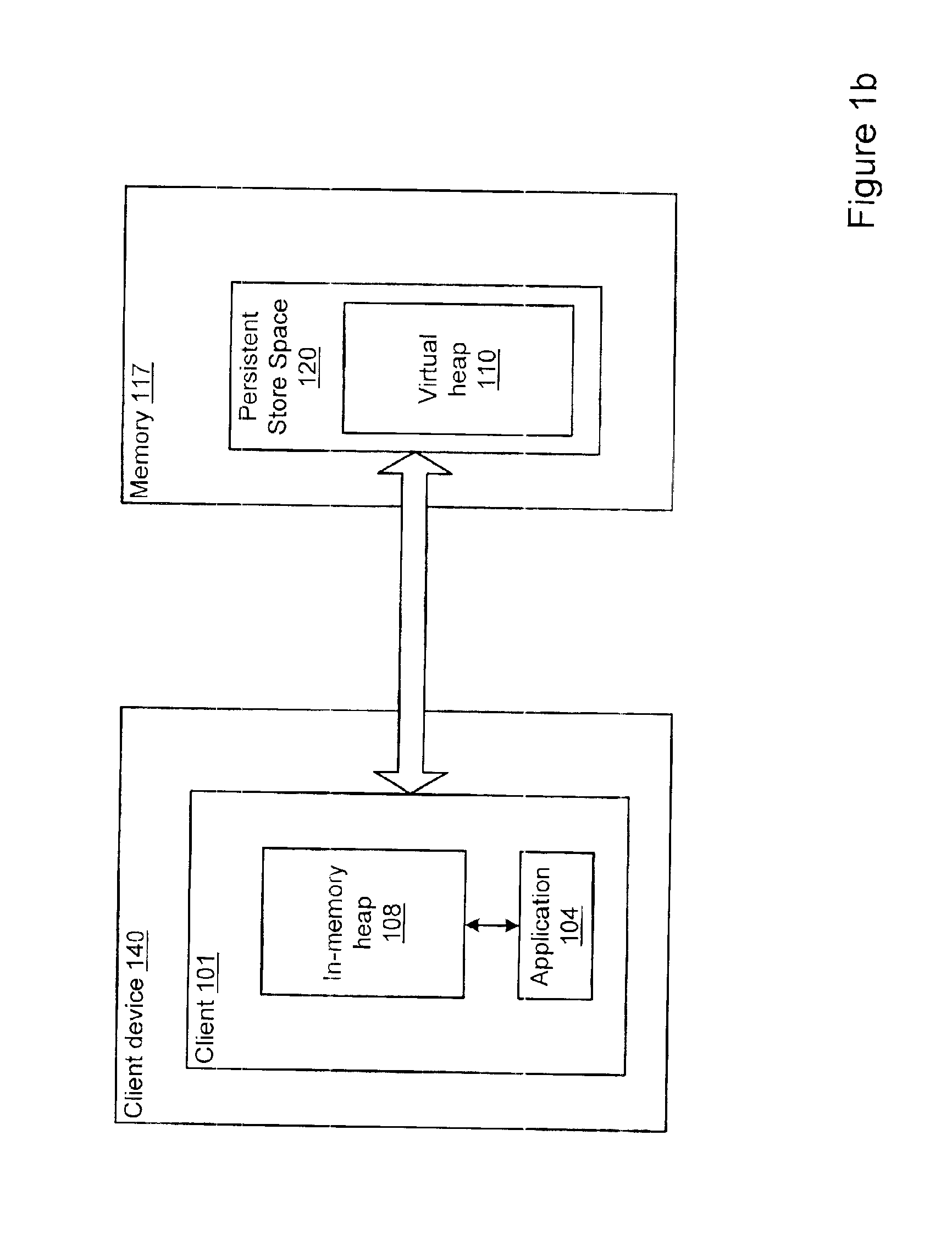

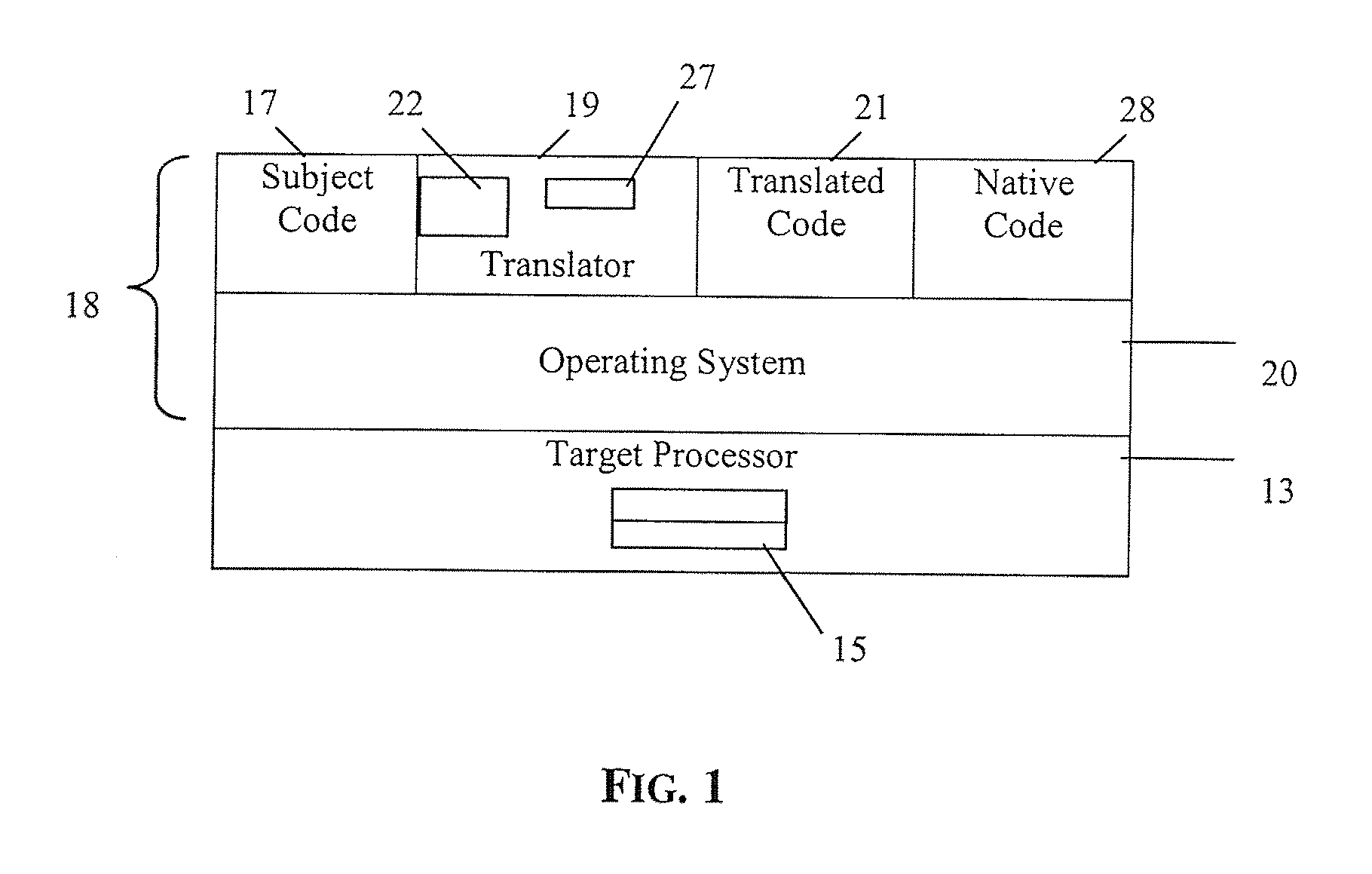

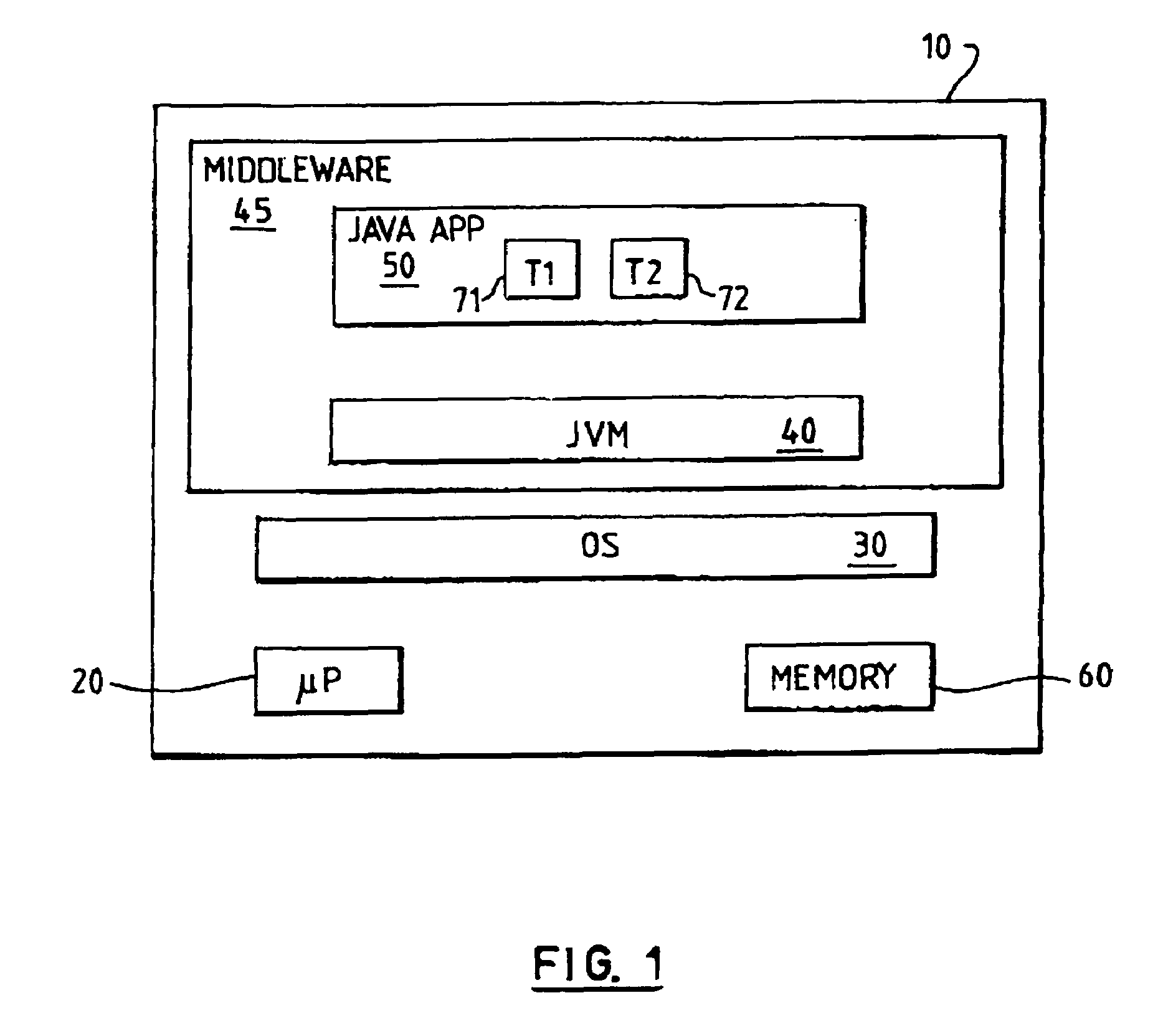

Process persistence in a virtual machine

Inactive | US6854115B1 | Improve performance | Reduce and substantially eliminate fragmentation | Computer security arrangements | Software simulation/interpretation/emulation | Virtual machine | Flash memory

A system and method for providing process persistence in a virtual machine are described. A virtual persistent heap may be provided. The virtual persistent heap may enable the checkpointing of the state of the computation of a virtual machine, including processes executing within the virtual machine, to a persistent storage such as a disk or flash device for future resumption of the computation from the checkpoint. The virtual persistent heap also may enable the migration of the virtual machine computation states, and thus the migration of executing processes, from one machine to another. The saved state of the virtual machine heap may also provide the ability to restart the virtual machine after a system crash or shutdown to the last saved persistent state, and to thus restart a process that was running within the virtual machine prior to the system crash or shutdown to a checkpointed state of the process stored in the virtual persistent heap. This persistent feature is important for small consumer and appliance devices, as these appliances may be shut down and restarted often.

Owner:ORACLE INT CORP

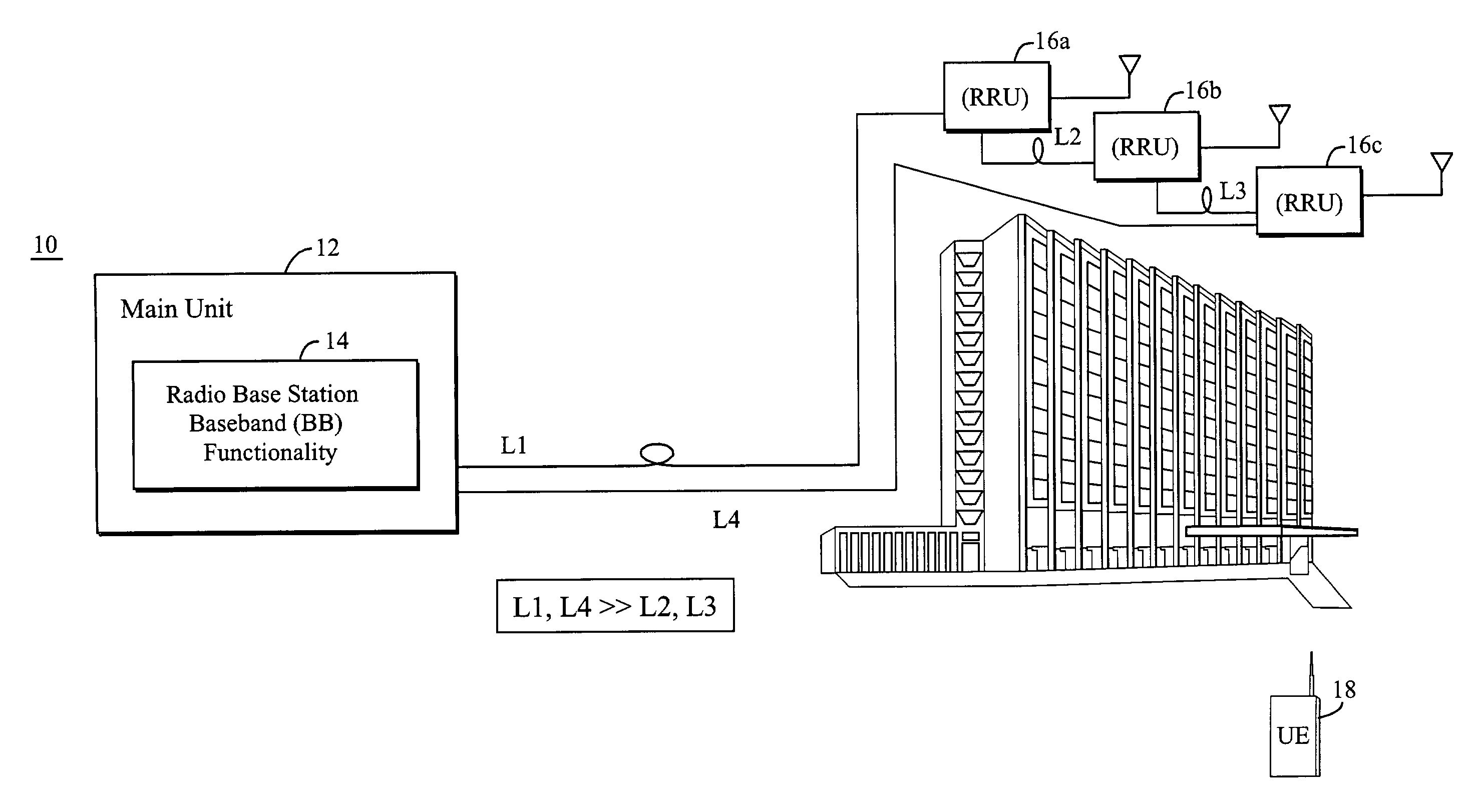

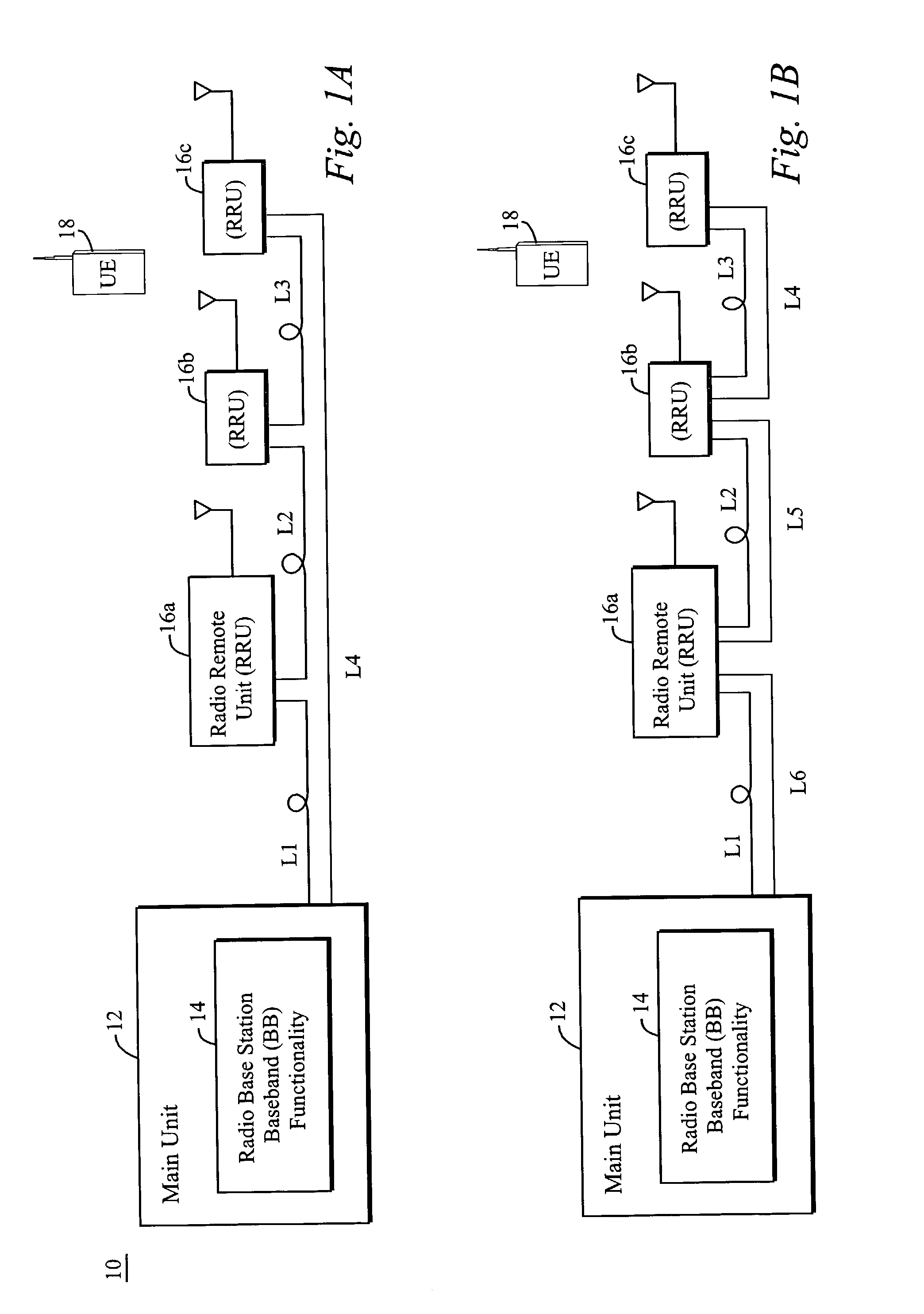

Optical fiber coupling configurations for a main-remote radio base station and a hybrid radio base station

Inactive | US7047028B2 | Easy on receiver | Low cost | Transmitters monitoring | Substation equipment | Single fiber | Engineering

A main-remote radio base station system includes plural remote radio units. Fiber costs are significantly reduced using a single optical fiber that communicates information between the main unit and the remote units connected in a series configuration. Information from the main unit is sent over a first fiber path to the remote units so that the same information is transmitted over the radio interface by the remote units at substantially the same time. The main unit receives the same information from each of the remote units over a second fiber path at substantially the same time. Delay associated with each remote unit is compensated for by advancing a time when information is sent to each remote unit. A data distribution approach over a single fiber avoids the expense of separate fiber couplings between the main unit and each RRU. That approach also avoids the expense of WDM technology including lasers, filters, and OADMs as well as the logistical overhead needed to keep track of different wavelength dependent devices.

Owner:TELEFON AB LM ERICSSON (PUBL)

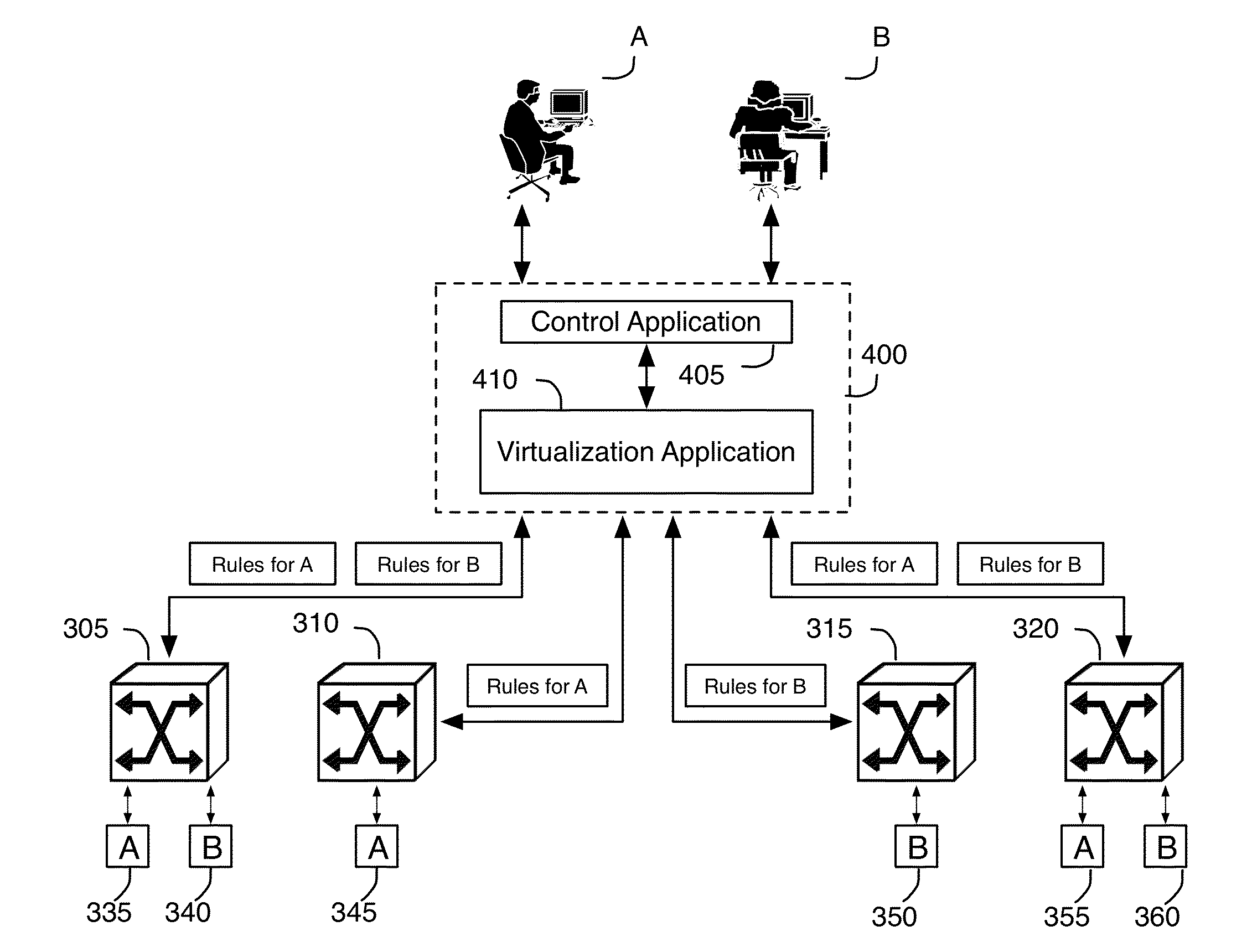

Physical controller

Active | US20130103818A1 | Avoid overhead | Overhead cost | Digital computer details | Electric controllers | Network control | Datapath

A network control system for generating physical control plane data for managing first and second managed forwarding elements that implement forwarding operations associated with a first logical datapath set is described. The system includes a first controller instance for converting logical control plane data for the first logical datapath set to universal physical control plane (UPCP) data. The system further includes a second controller instance for converting UPCP data to customized physical control plane (CPCP) data for the first managed forwarding element but not the second managed forwarding element.

Owner:NICIRA

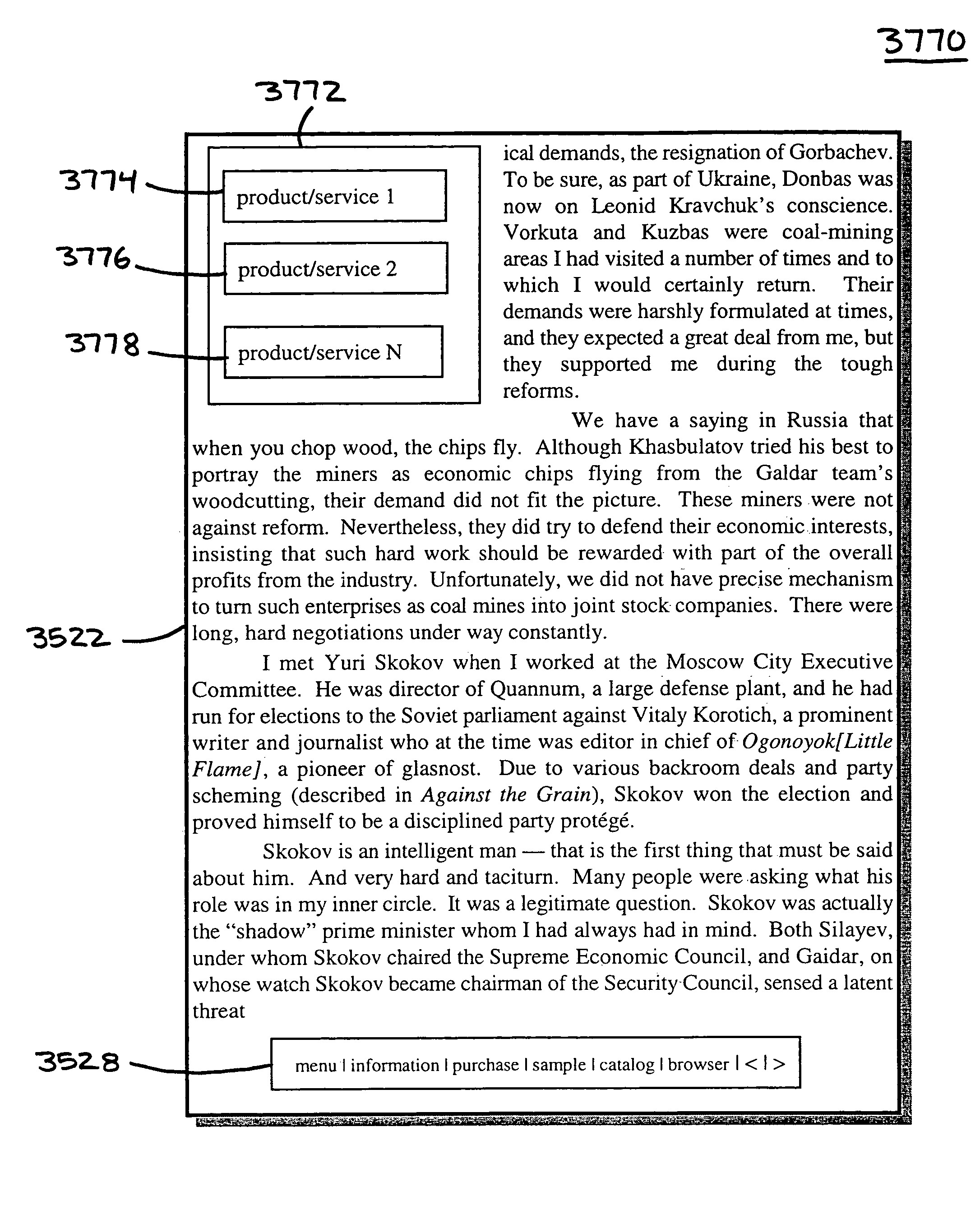

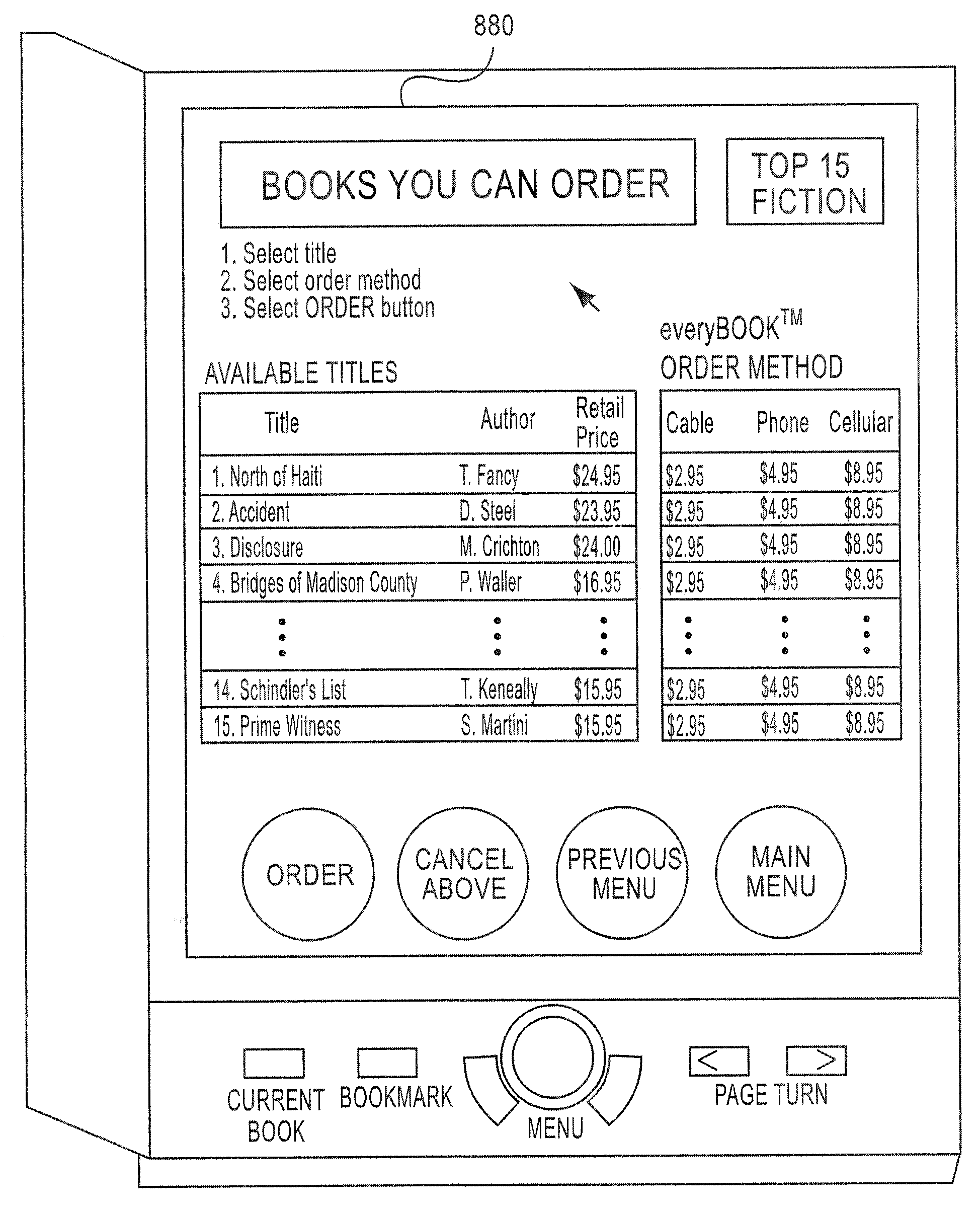

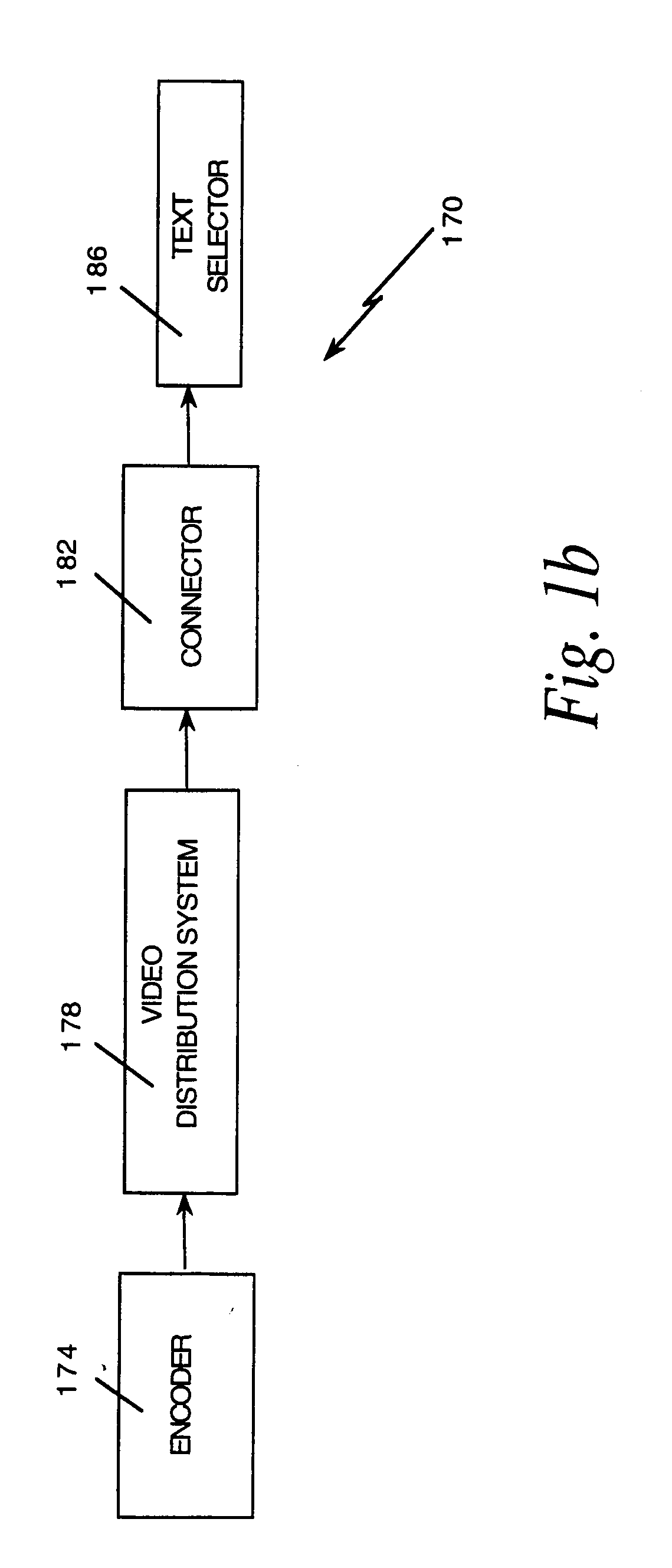

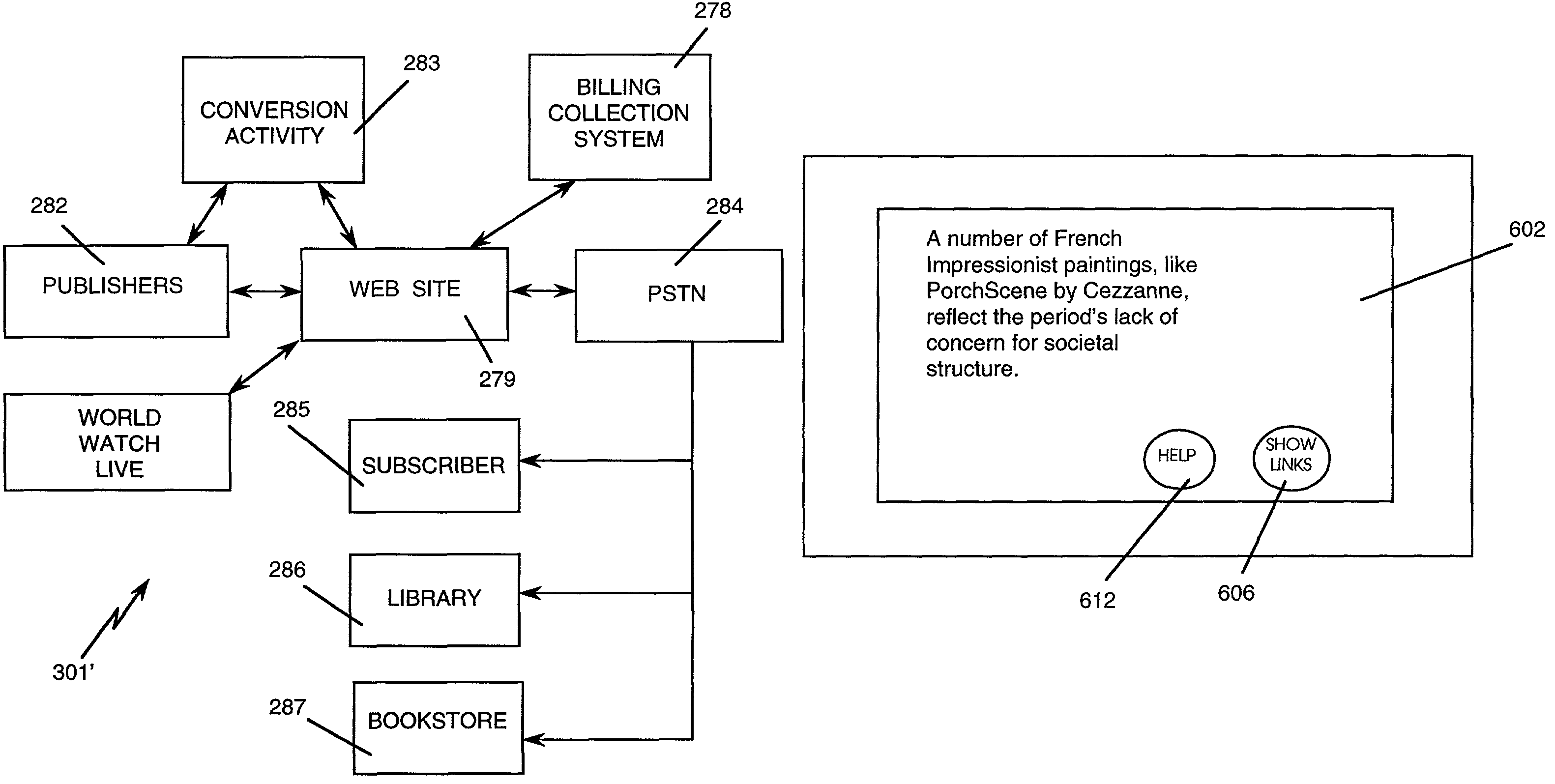

Electronic Book having electronic commerce features

Inactive | US7509270B1 | High tech aura | Easy to use | Advertisements | Special data processing applications | E-commerce | Electronic book

A viewer for displaying an electronic book and providing for electronic commerce. In conjunction with viewing an electronic book, a user can view information about products and services, view an on-line electronic catalog, and receive samples of products available for purchase. By entering a purchase request, the user can purchase products or services. In the case of a digital product, the user can download the purchased product directly into the viewer. The viewer also records statistics concerning purchase and information requests in order to recommend related products or services, or for directing particular types of advertisements to the user.

Owner:DISCOVERY PATENT HLDG

Electronic book having electronic commerce features

Inactive | US20090216623A1 | High tech aura | Easy to use | Advertisements | Special data processing applications | Electronic book | E-commerce

A viewer for displaying an electronic book and providing for electronic commerce. In conjunction with viewing an electronic book, a user can view information about products and services, view an on-line electronic catalog, and receive samples of products available for purchase. By entering a purchase request, the user can purchase products or services. In the case of a digital product, the user can download the purchased product directly into the viewer. The viewer also records statistics concerning purchase and information requests in order to recommend related products or services, or for directing particular types of advertisements to the user.

Owner:DISCOVERY COMM INC

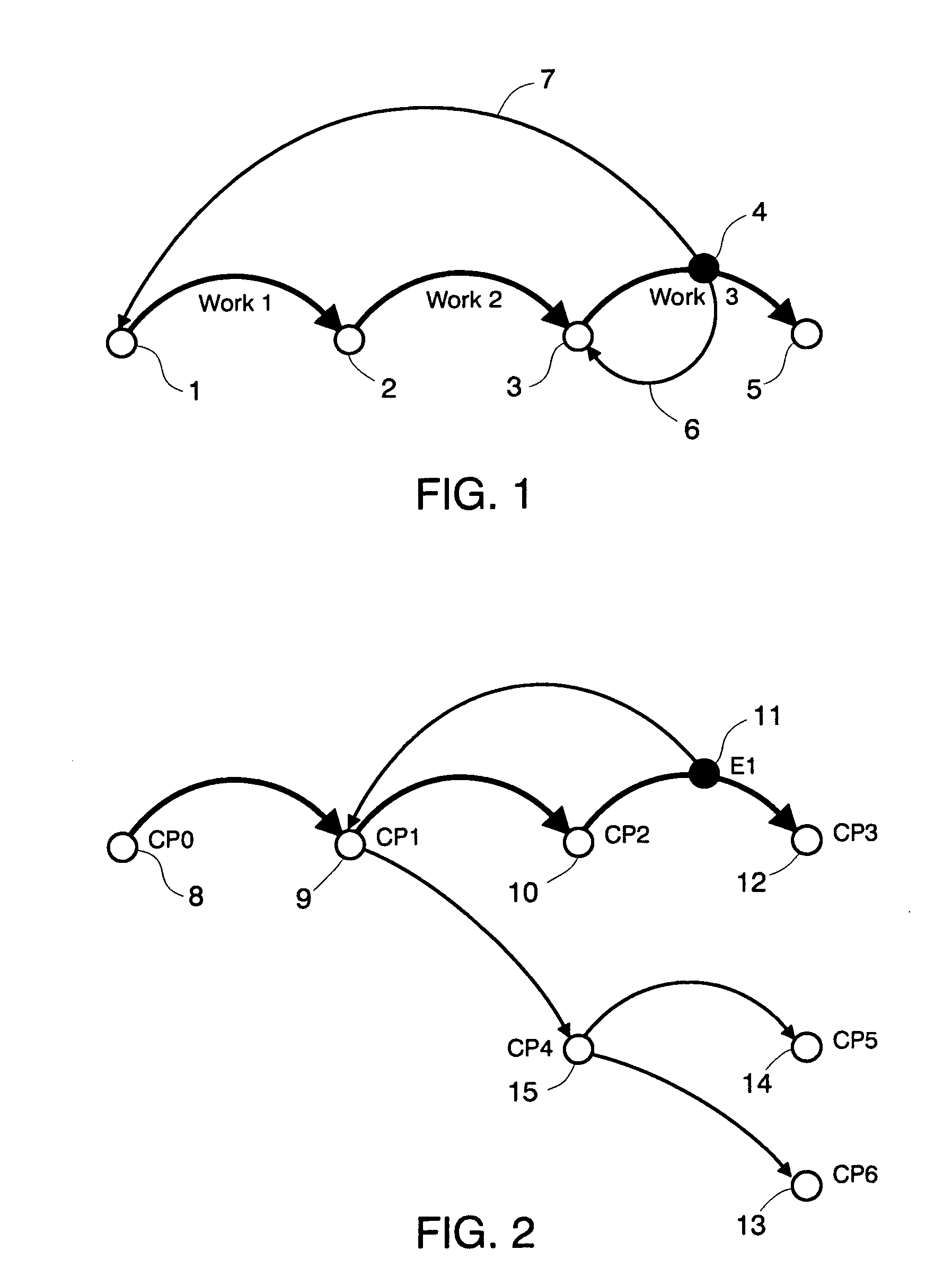

Adaptive method and software architecture for efficient transaction processing and error management

Inactive | US20070174185A1 | Low cost | Avoid overhead | Finance | Error detection/correction | Robustification | Software architecture

Owner:MCGOVERAN DAVID O

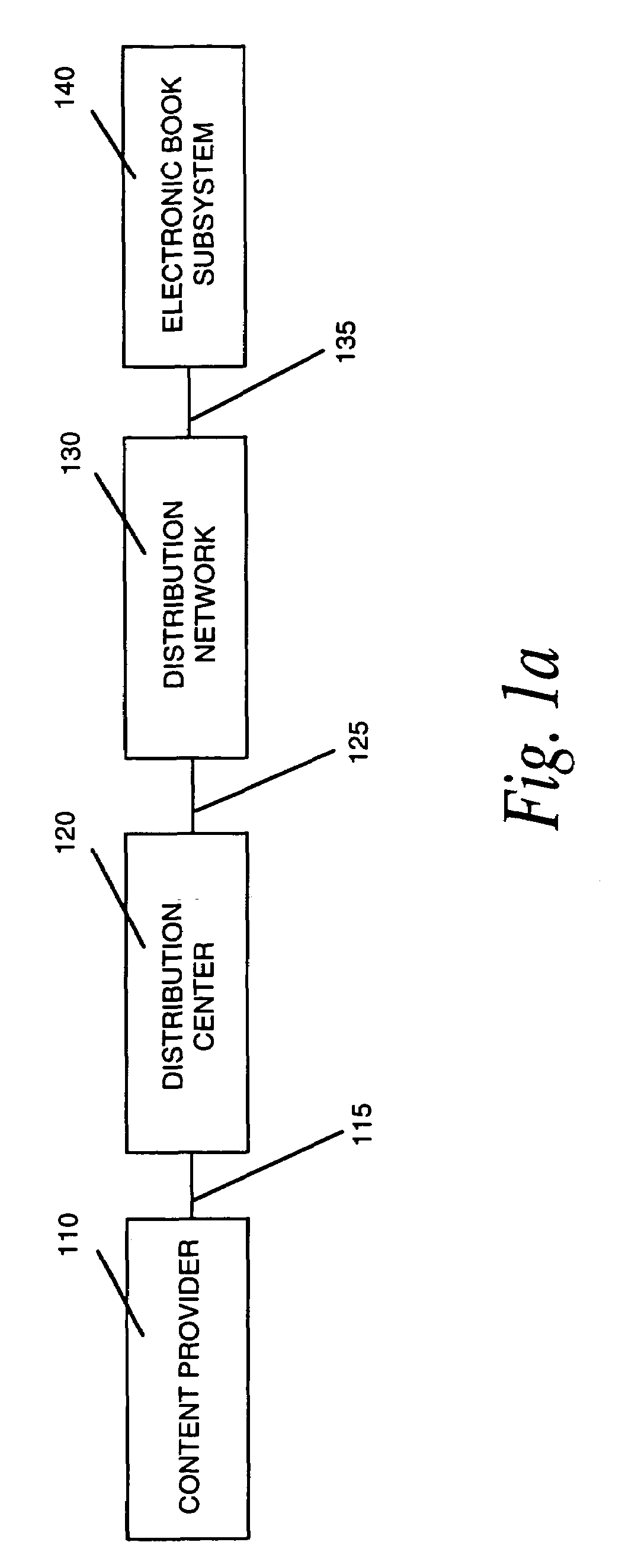

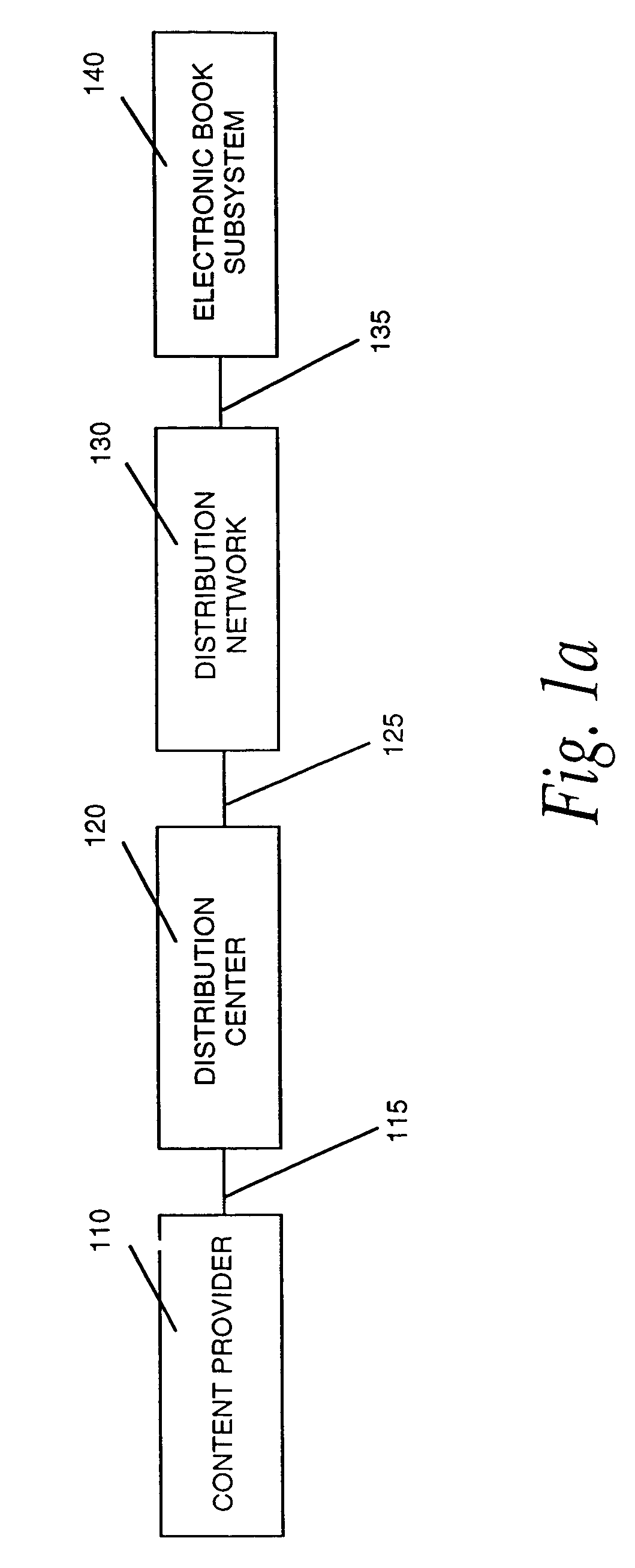

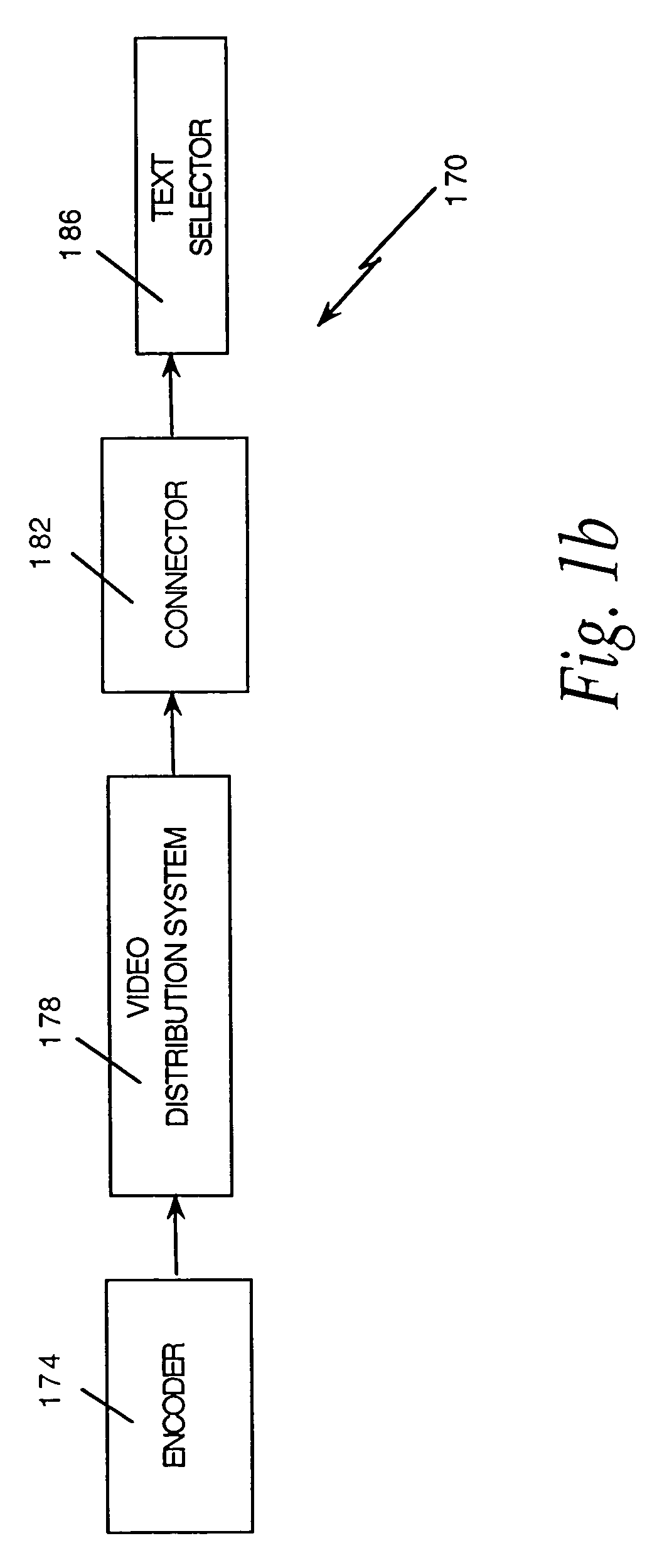

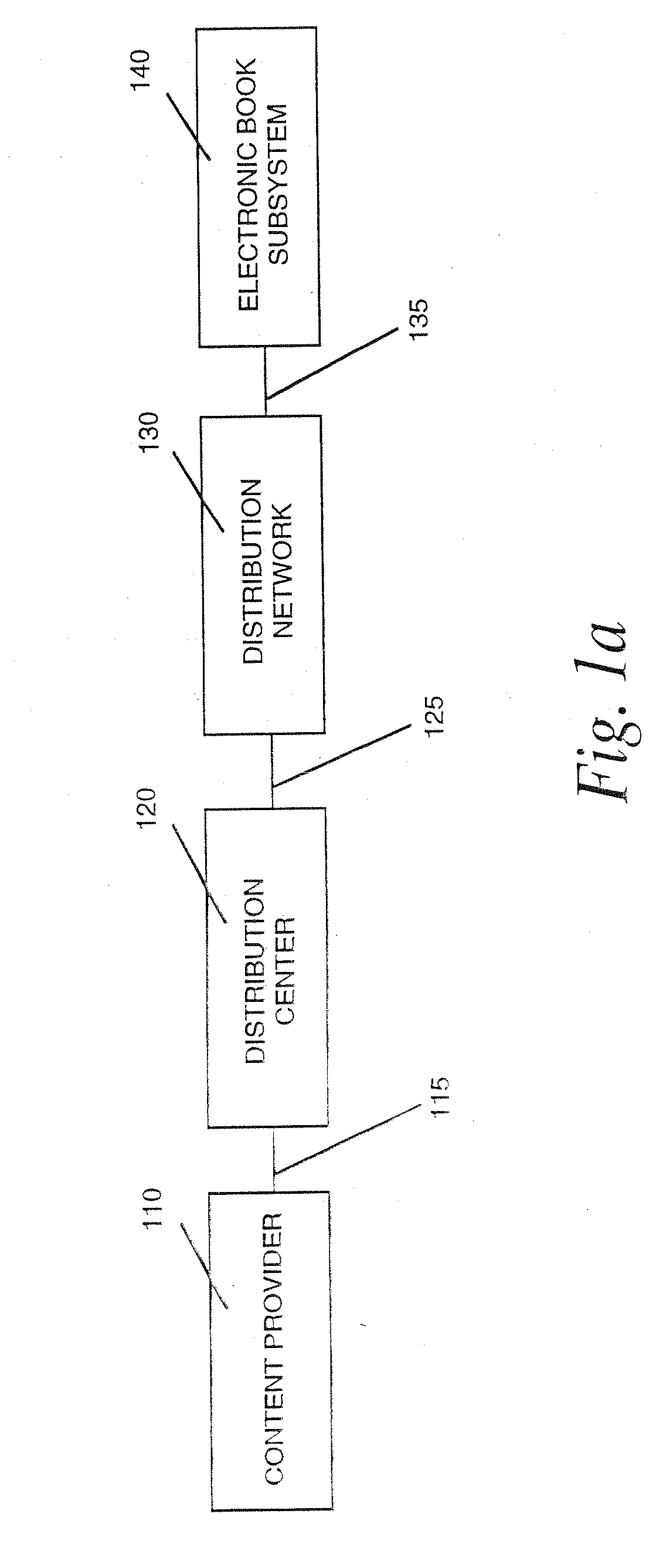

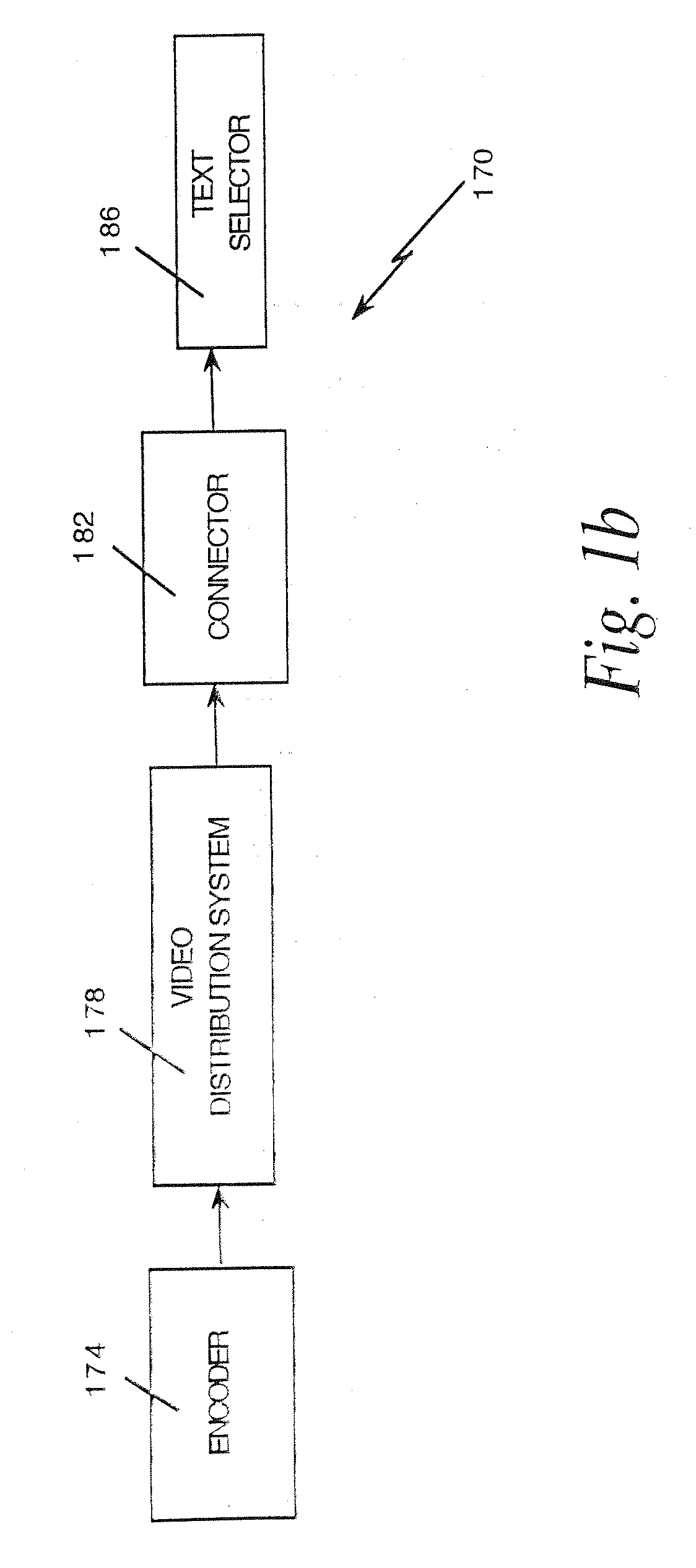

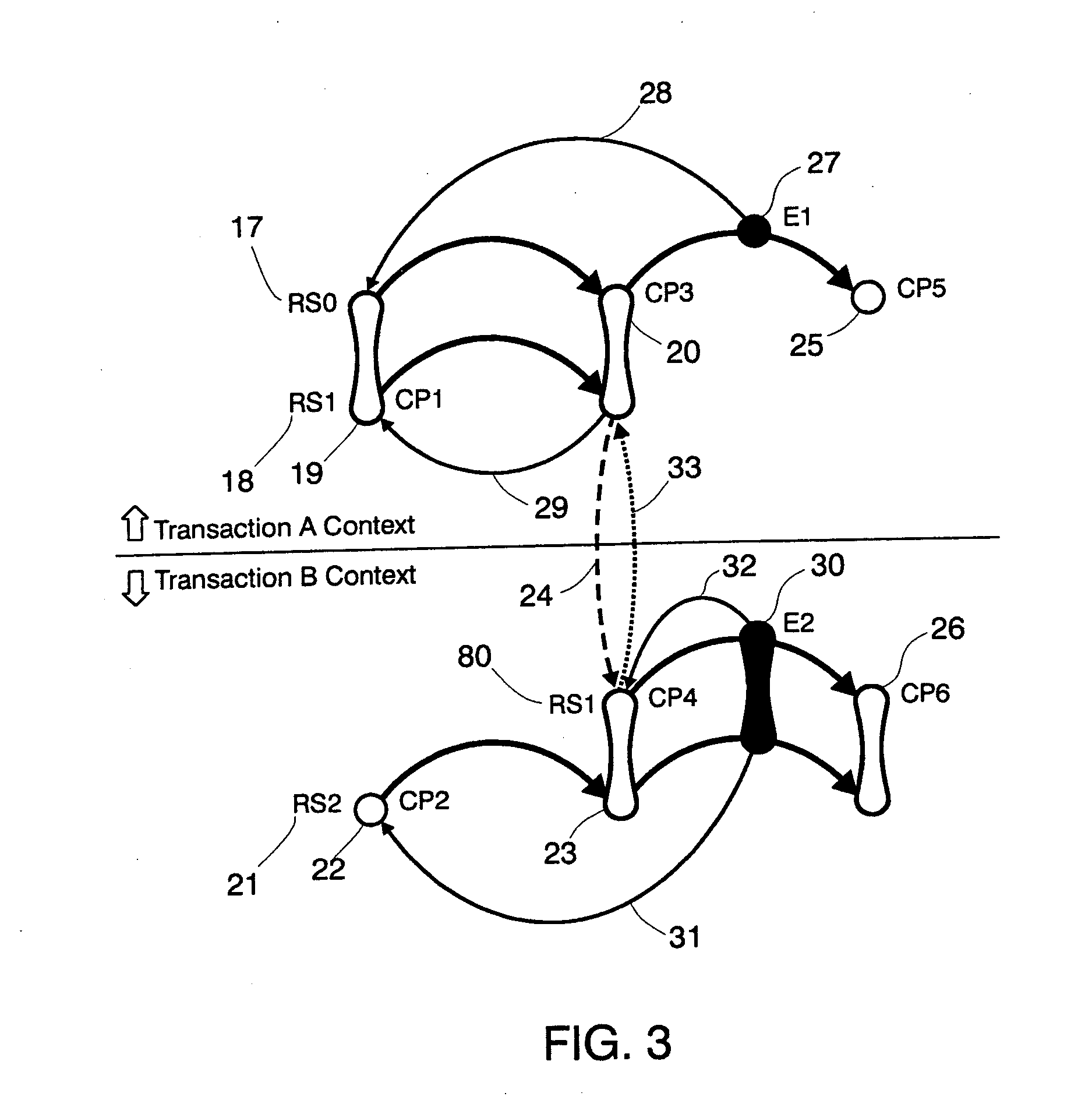

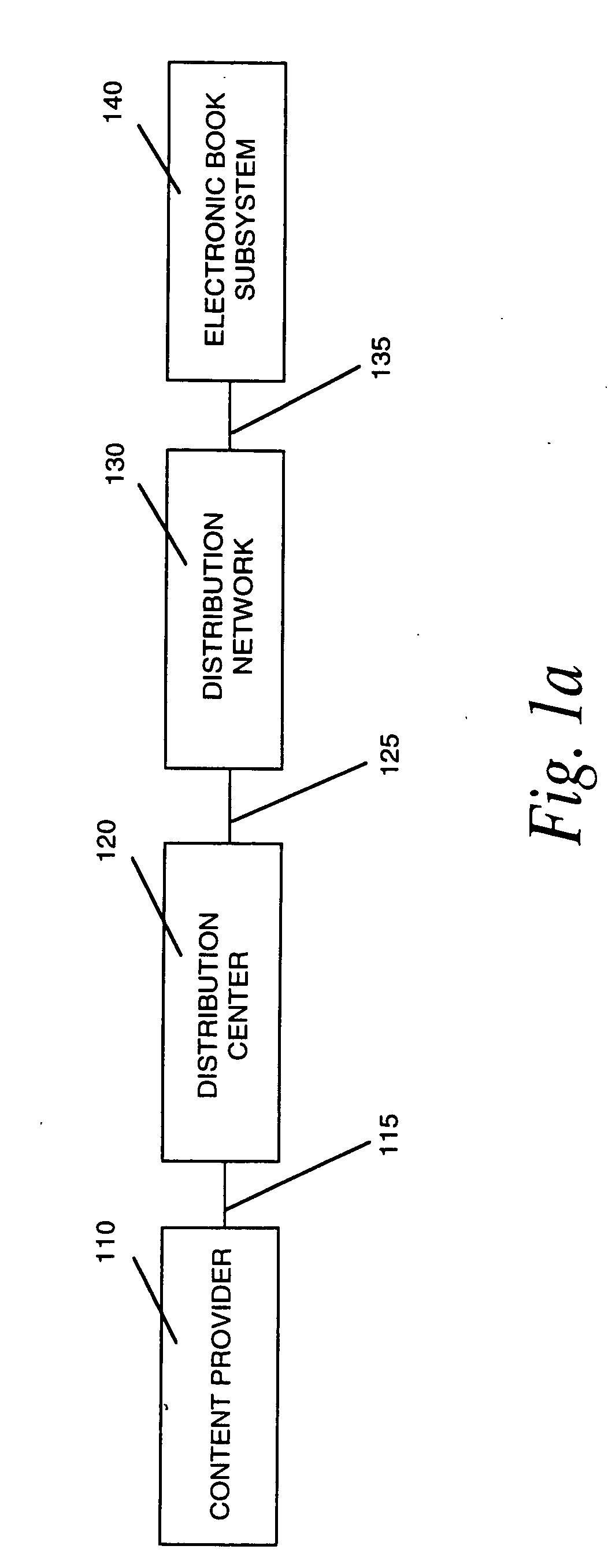

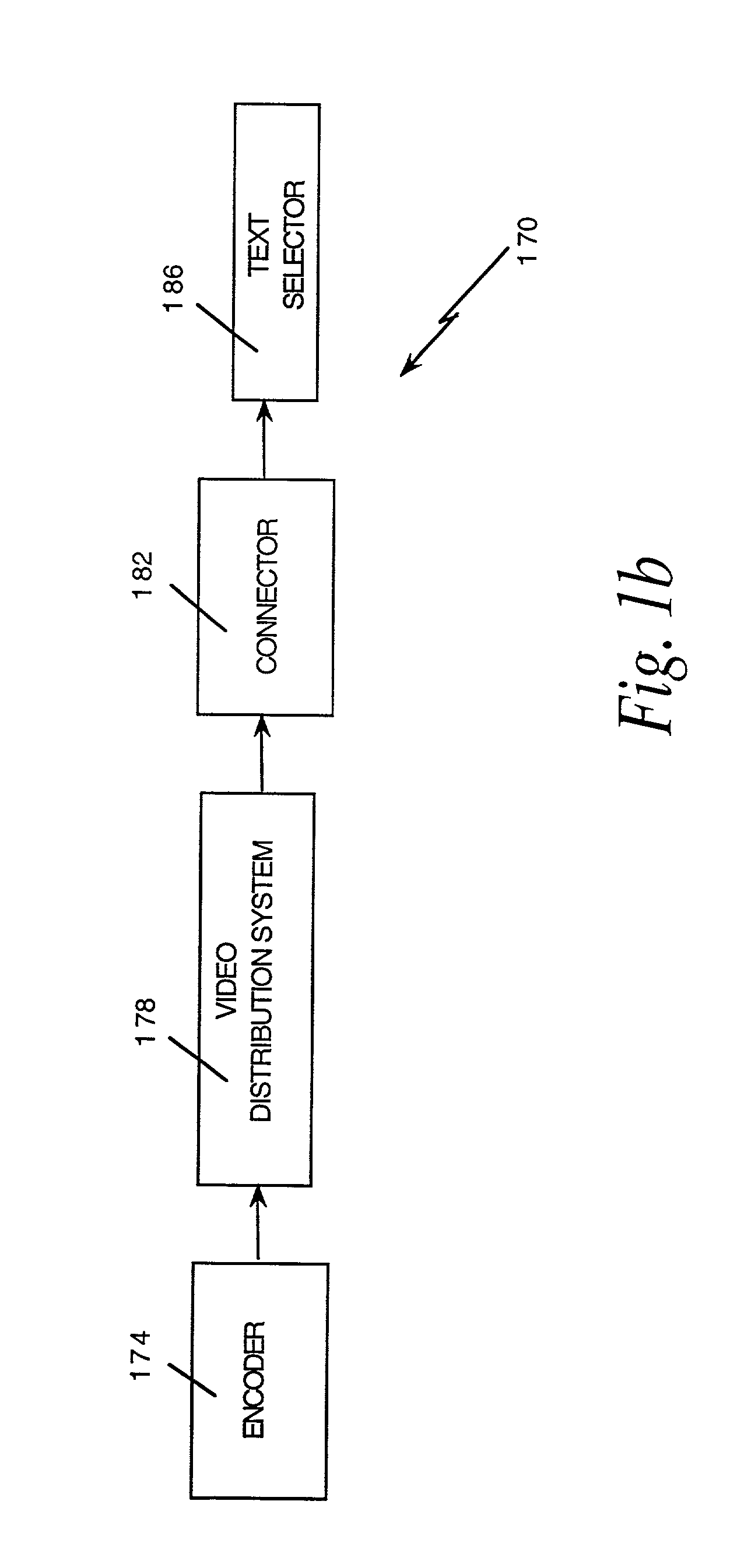

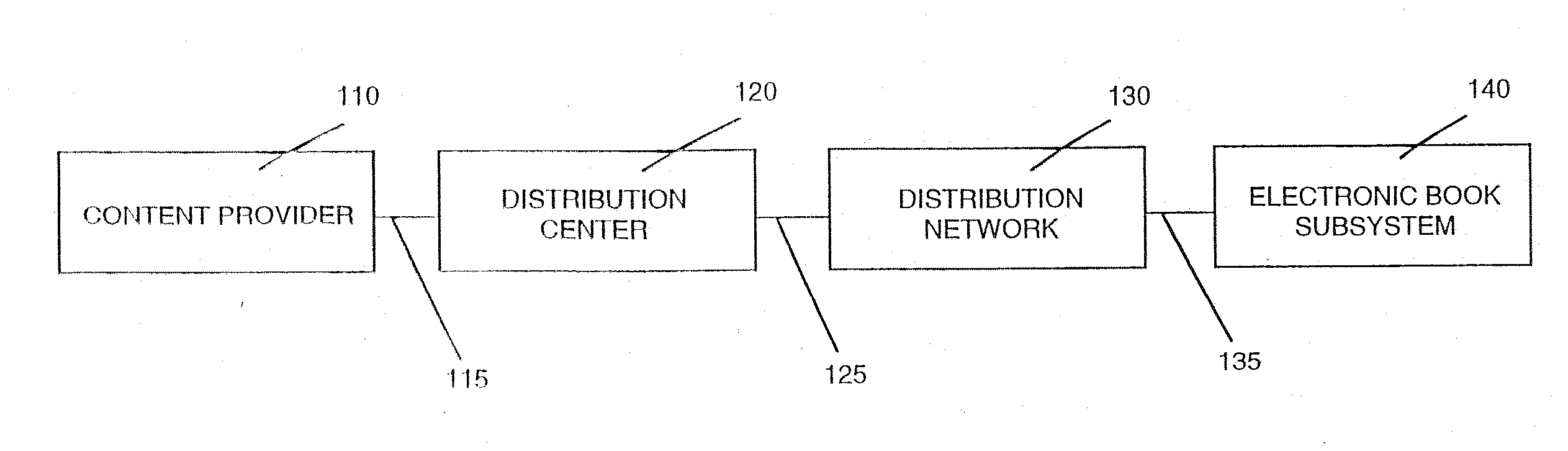

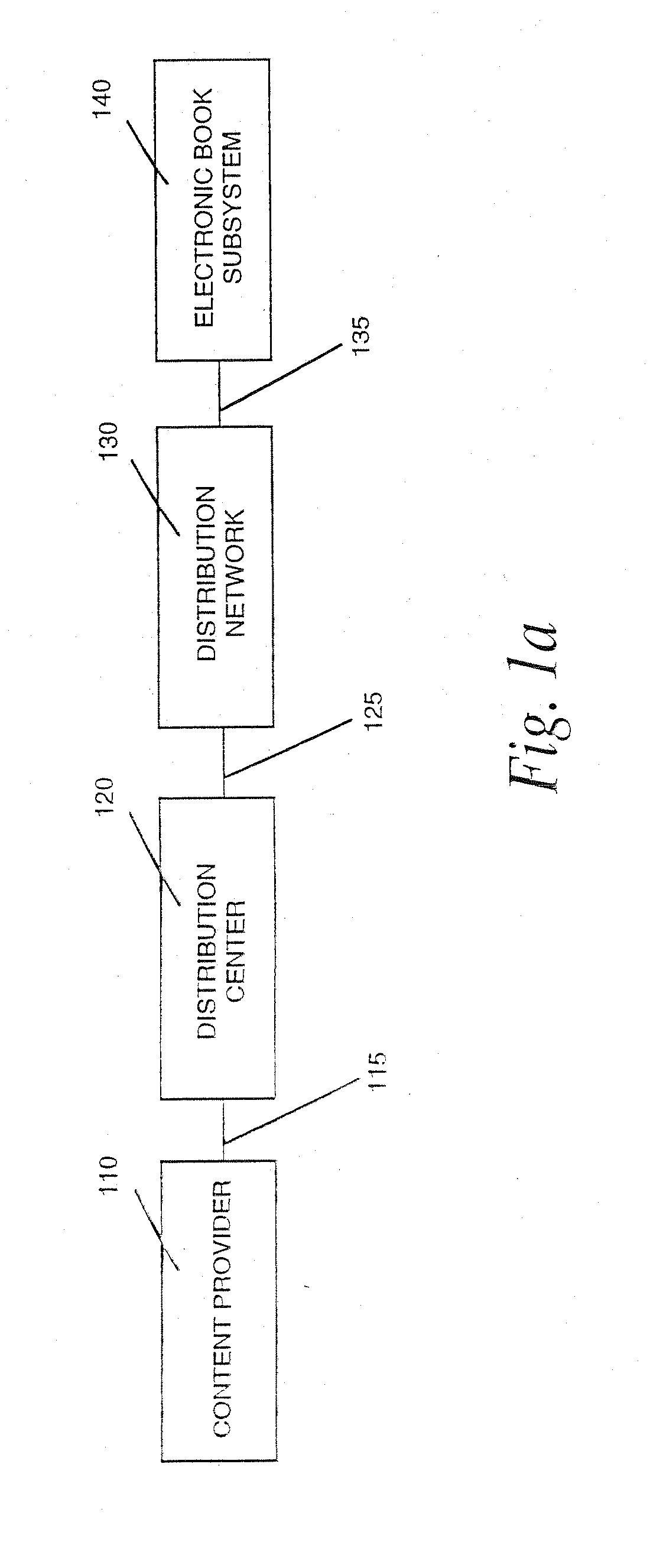

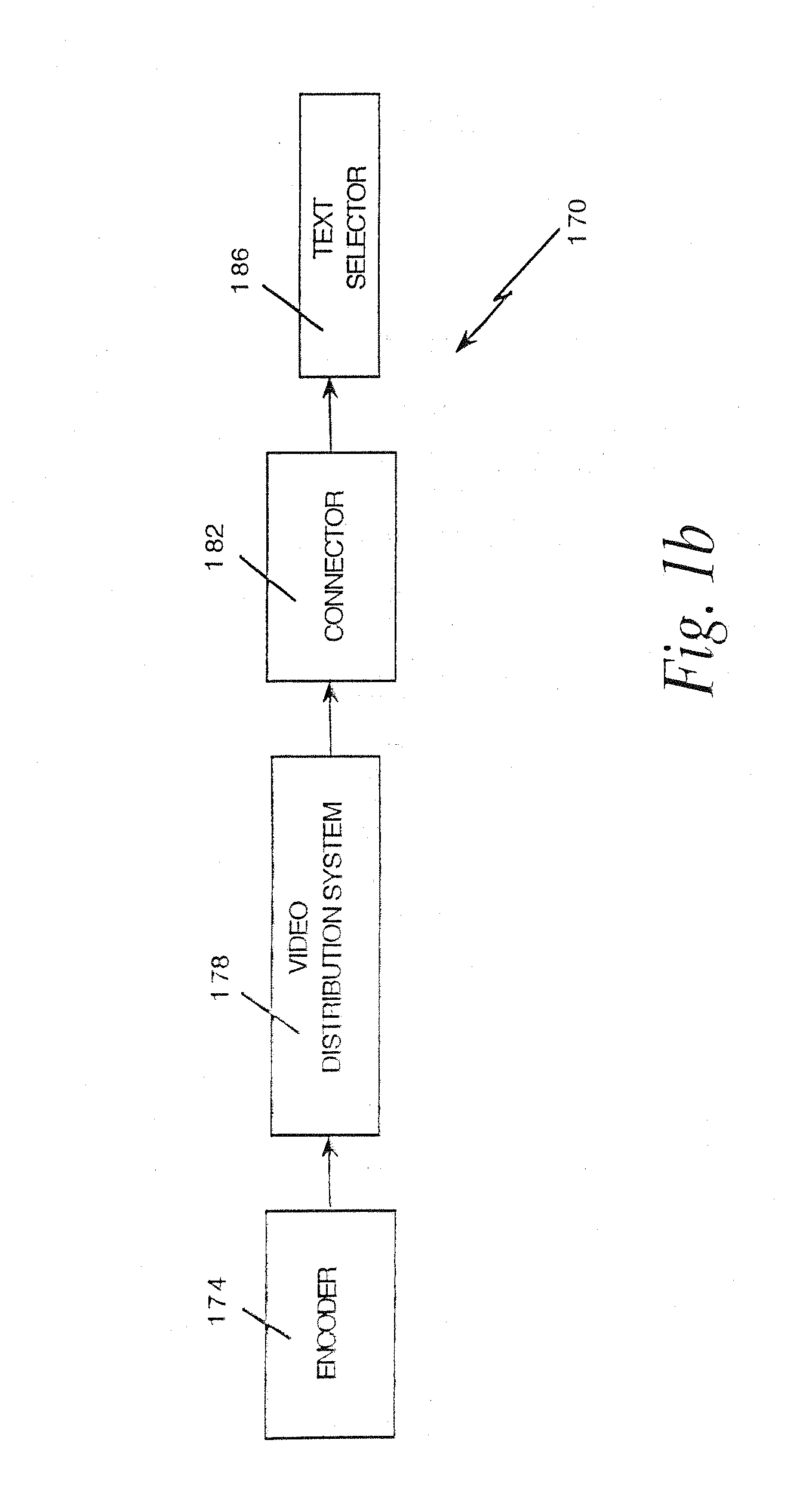

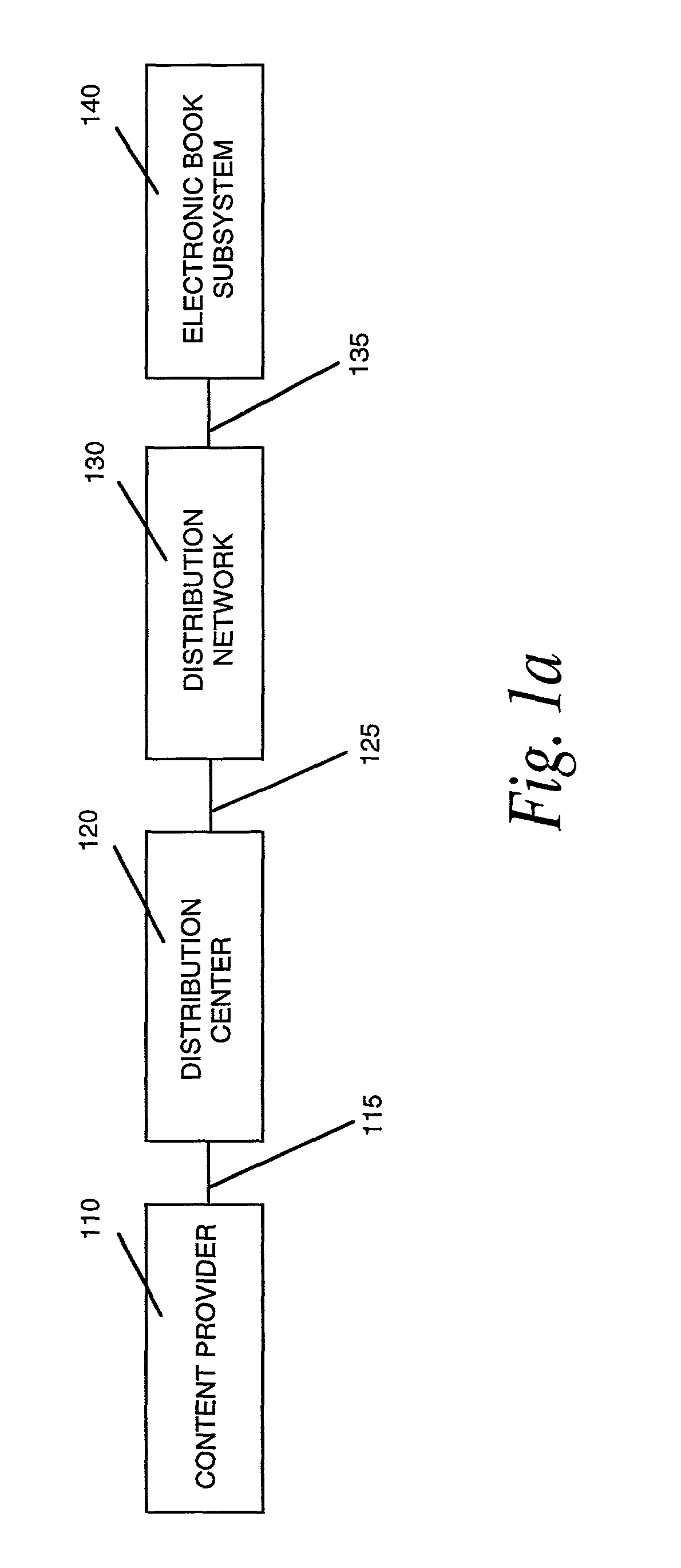

Electronic book security and copyright protection system

Inactive | US20070201702A1 | High tech aura | Easy to use | Key distribution for secure communication | Program/content distribution protection | Graphics | Payment

The invention, electronic book security and copyright protection system, provides for secure distribution of electronic text and graphics to subscribers and secure storage. The method may be executed at a content provider's site, at an operations center, over a video distribution system or over a variety of alternative distribution systems, at a home subsystem, and at a billing and collection system. The content provider or operations center and/or other distribution points perform the functions of manipulation and secure storage of text data, security encryption and coding of text, cataloging of books, message center, and secure delivery functions. The home subsystem connects to a secure video distribution system or variety of alternative secure distribution systems, generates menus and stores text, and transacts through communicating mechanisms. A portable book-shaped viewer is used for secure viewing of the text. A billing system performs the transaction, management, authorization, collection and payments utilizing the telephone system or a variety of alternative communication systems using secure techniques.

Owner:ADREA LLC

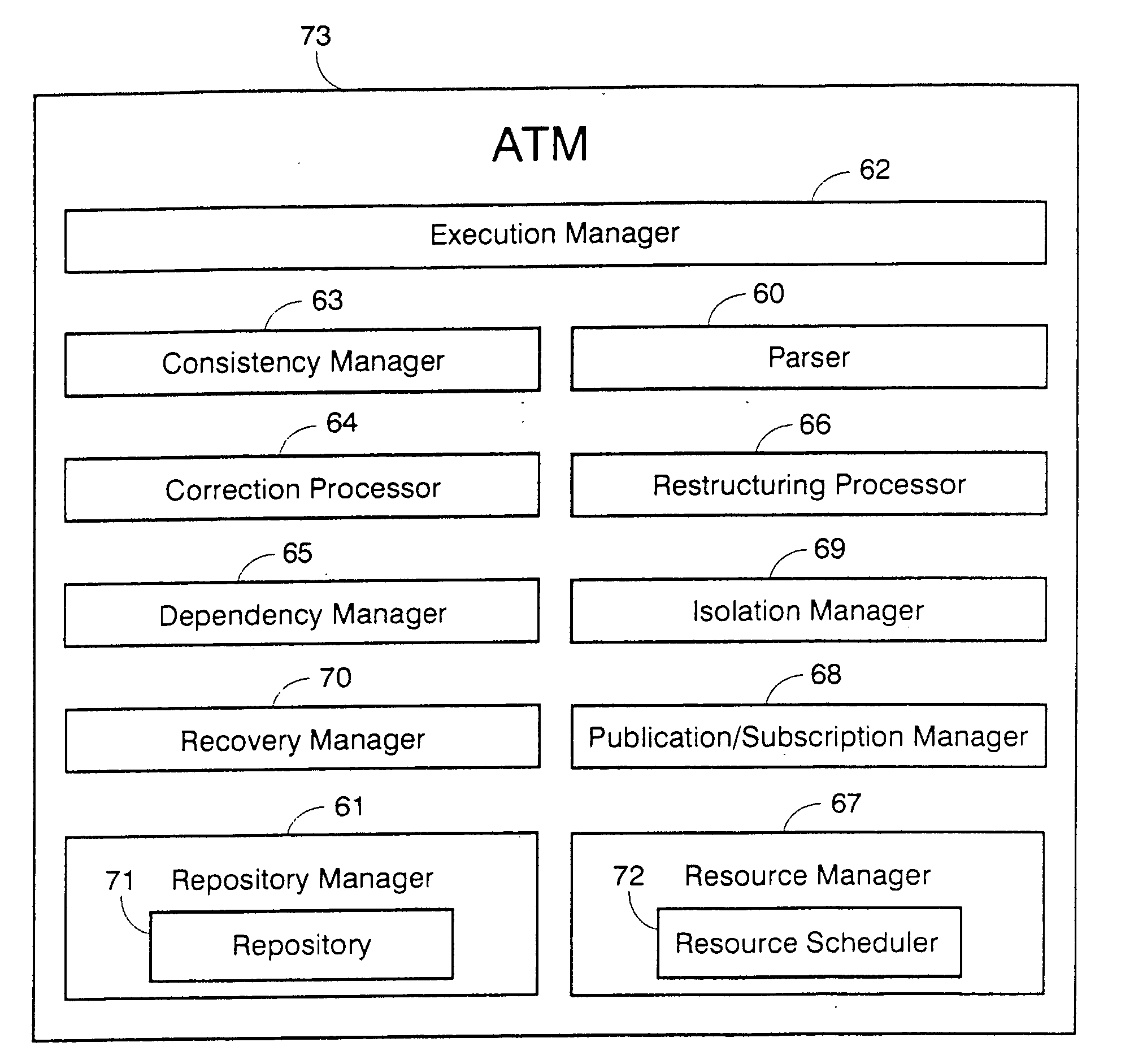

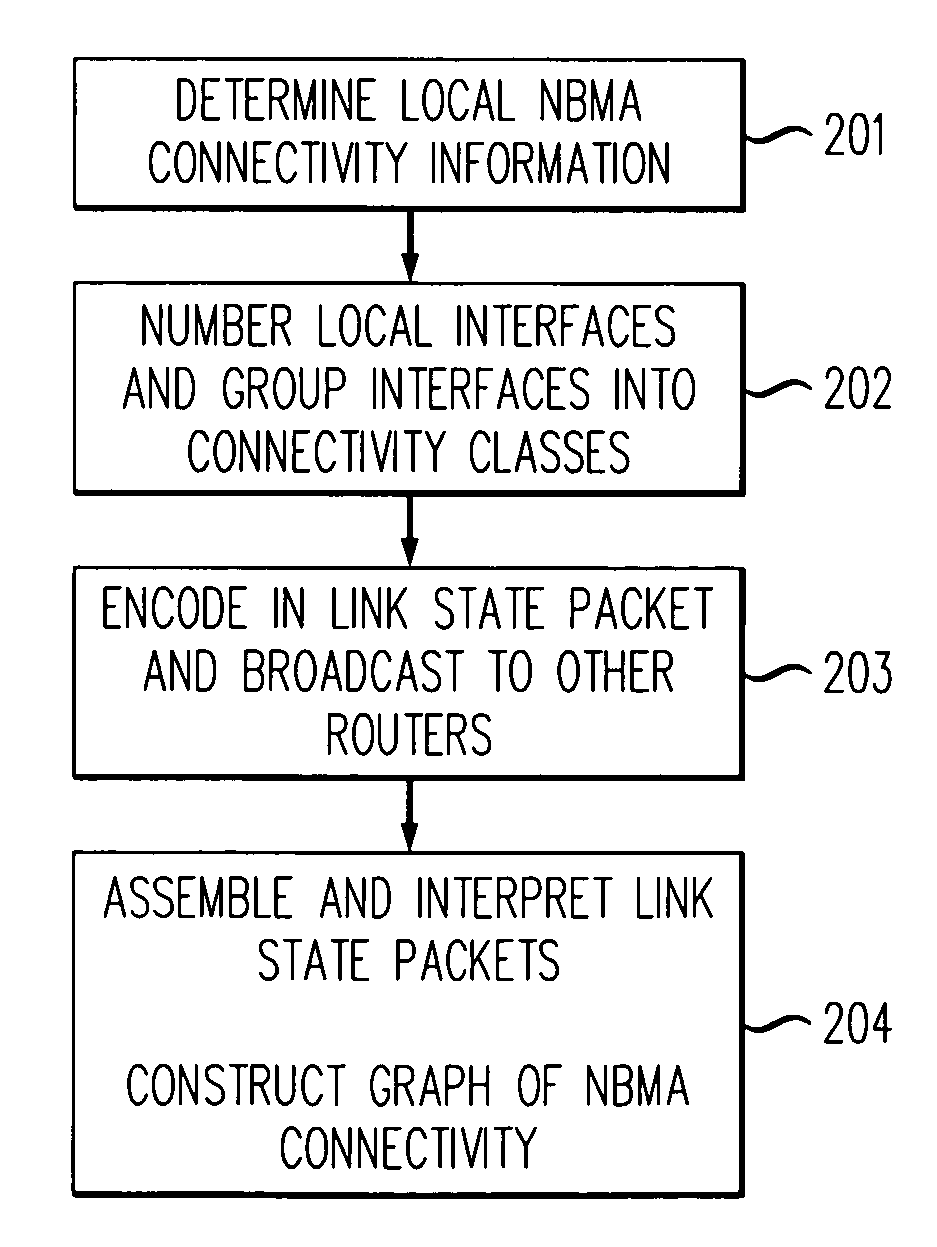

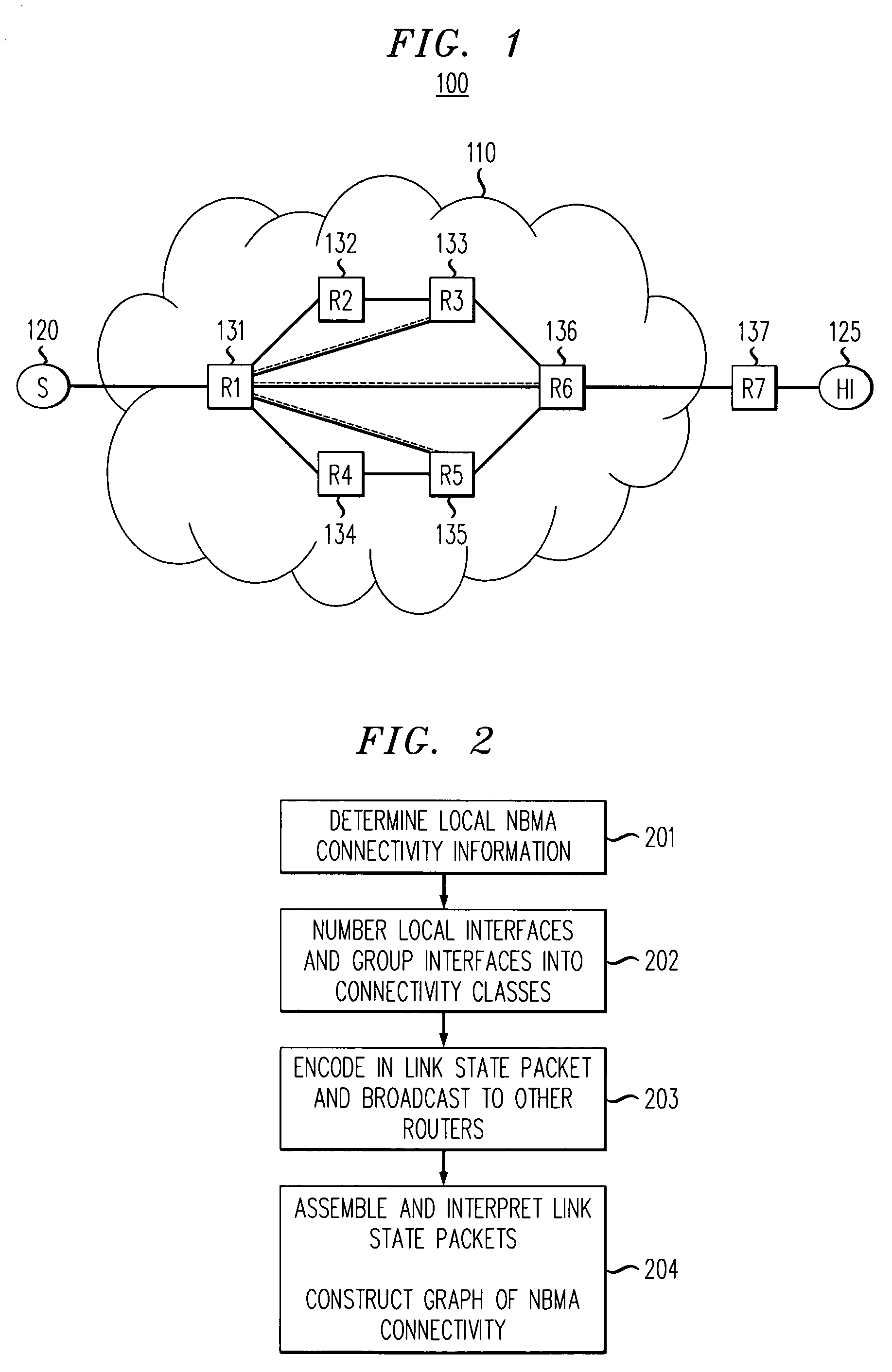

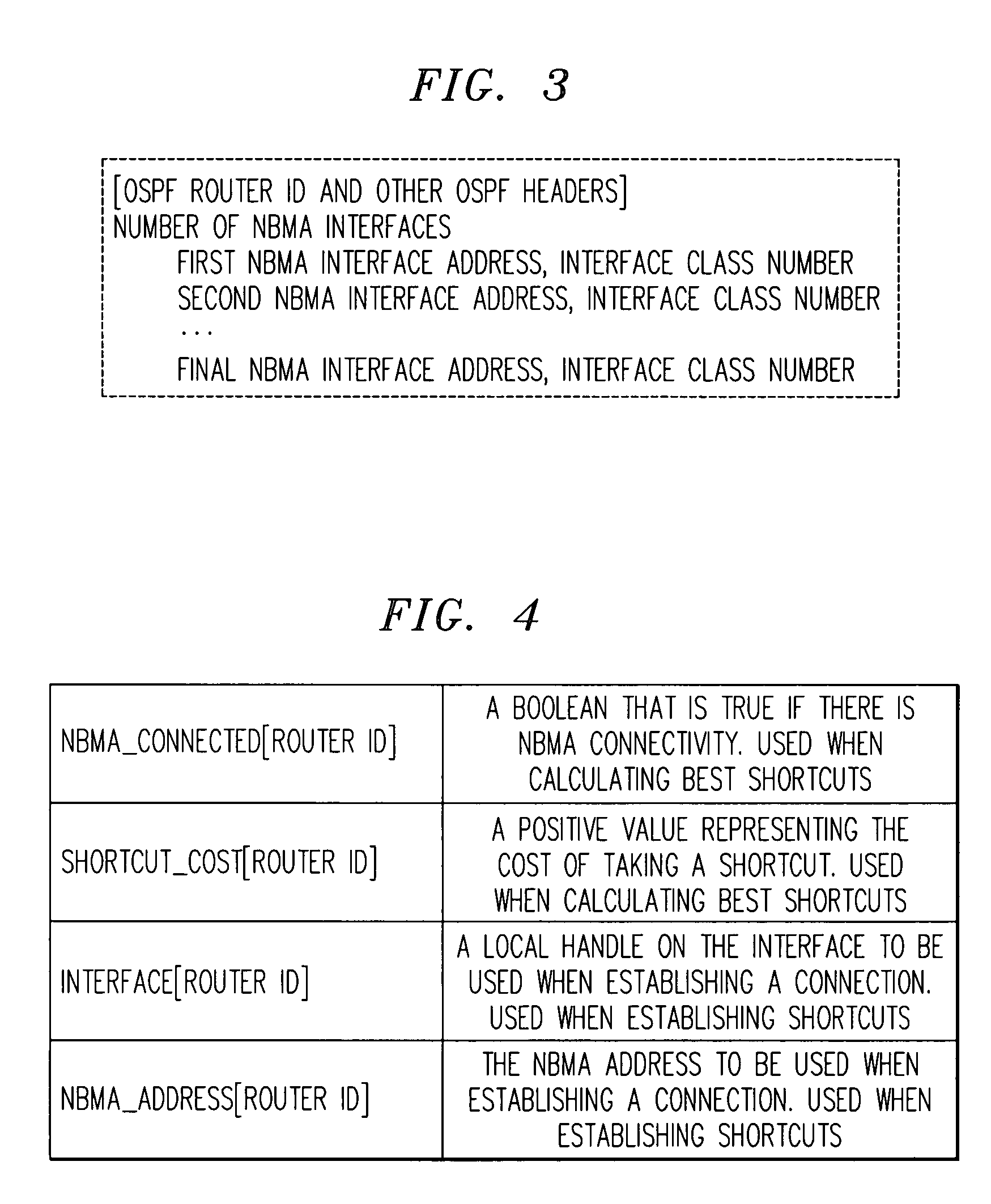

Method for determining non-broadcast multiple access (NBMA) connectivity for routers having multiple local NBMA interfaces

Inactive | US7808968B1 | Avoid overhead | Introduce latency | Data switching by path configuration | Tier 2 network | Link state packet

The present invention discloses an efficient architecture for routing in a very large autonomous system where many of the layer 3 routers are attached to a common connection-oriented layer 2 subnetwork, such as an ATM network. In a preferred embodiment of the invention, a permanent topology of routers coupled to the subnetwork is connected by permanent virtual circuits. The routers can further take advantage of both intra-area and inter-area shortcuts through the layer 2 network to improve network performance. The routers pre-calculate shortcuts using information from link state packets broadcast by other routers and store the shortcuts to a given destination in a forwarding table, along with corresponding entries for a next hop along the permanent topology. The present invention allows the network to continue to operate correctly if layer 2 resource limitations preclude the setup of additional shortcuts.

Owner:AT&T INTPROP I L P

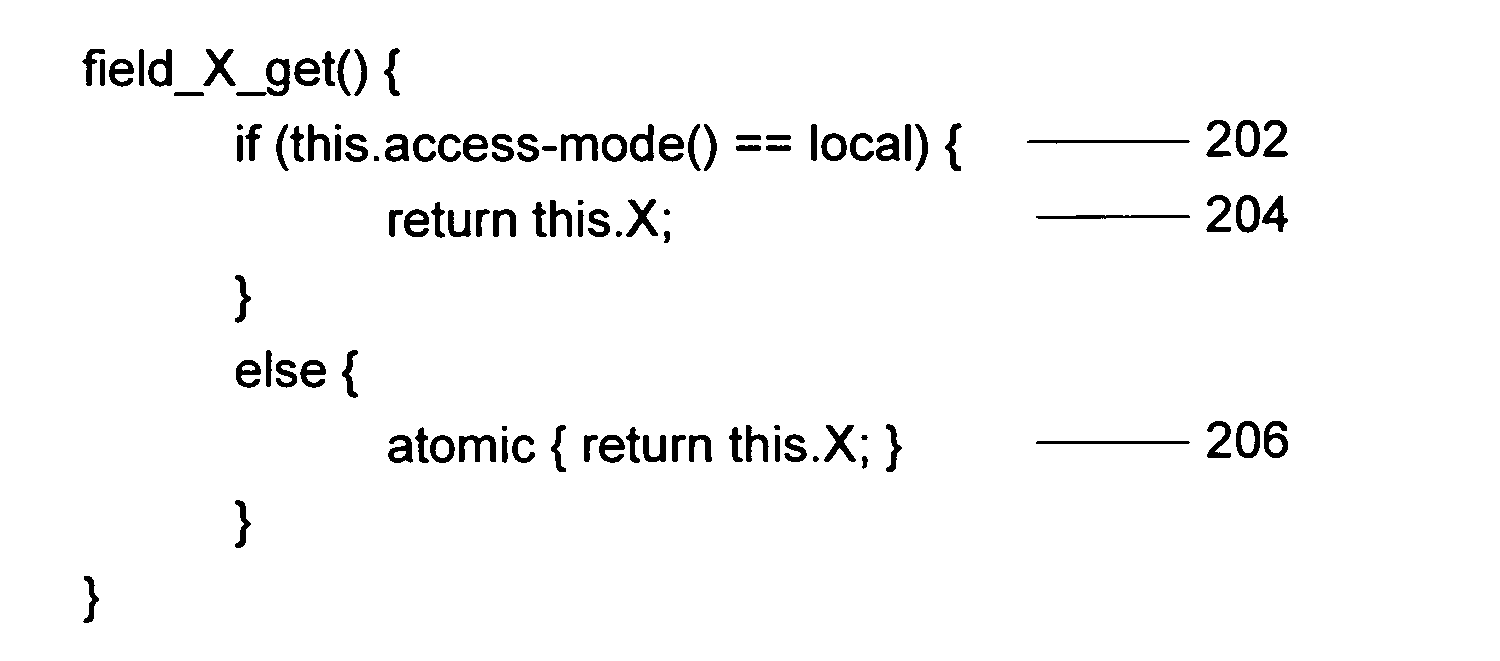

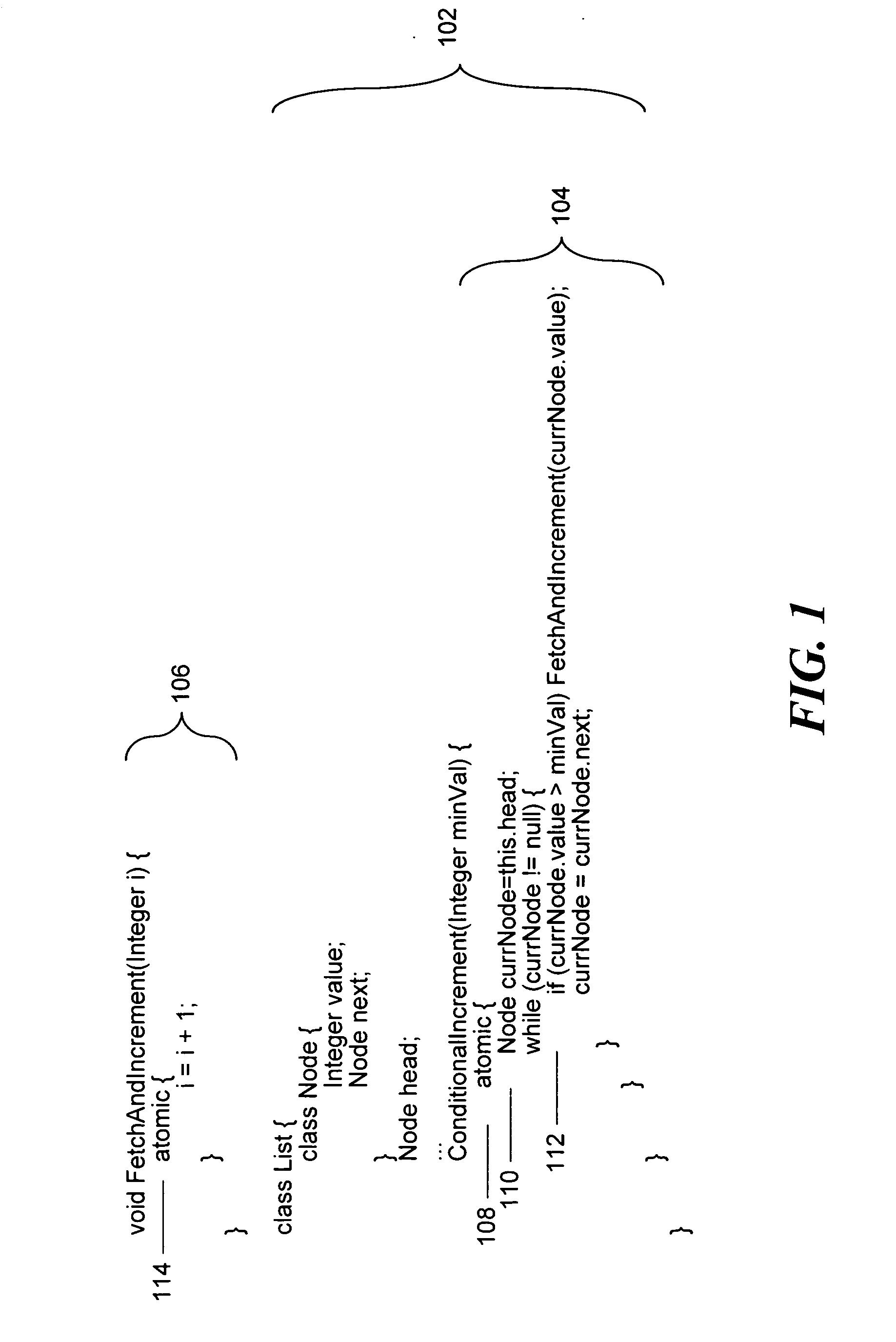

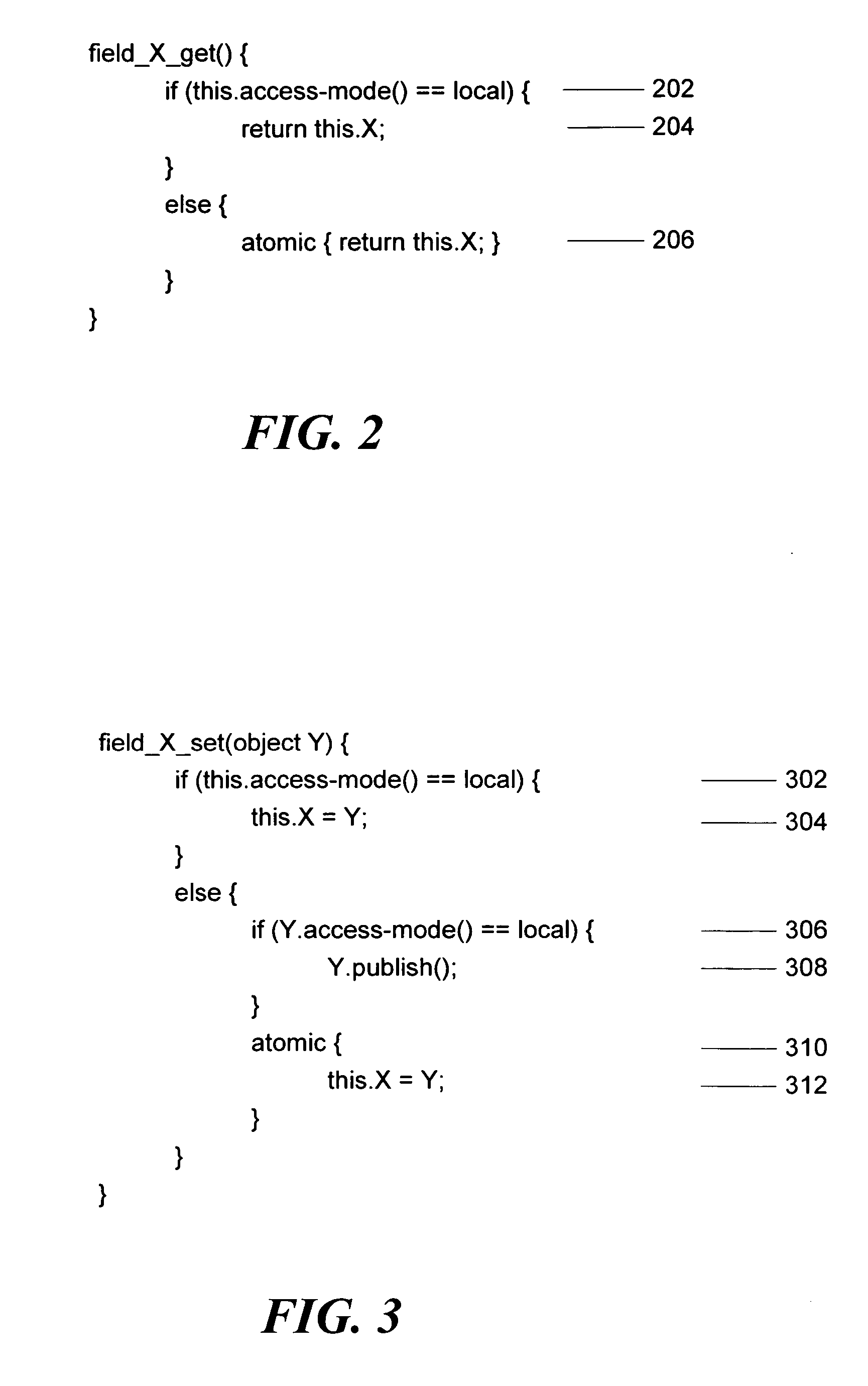

Method and apparatus for improving transactional memory interactions by tracking object visibility

Active | US20070150509A1 | Avoid overhead | Avoid access | Specific program execution arrangements | Special data processing applications | Programming language | Visibility

In a multi-threaded computer system that uses transactional memory, object fields accessed by only one thread are accessed by regular non-transactional read and write operations. When an object may be visible to more than one thread, access by non-transactional code is prevented and all accesses to the fields of that object are performed using transactional code. In one embodiment, the current visibility of an object is stored in the object itself. This stored visibility can be checked at runtime by code that accesses the object fields or code can be generated to check the visibility prior to access during compilation.

Owner:ORACLE INT CORP
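
The visibility check described in the abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the class name `TrackedObject`, the `publish` method, and the use of a plain lock as a stand-in for transactional code are all assumptions made for illustration.

```python
import threading


class TrackedObject:
    """Hypothetical object that stores its own visibility flag."""

    def __init__(self, **fields):
        self._fields = dict(fields)
        self.shared = False             # visibility stored in the object itself
        self._lock = threading.Lock()   # assumption: stand-in for transactional access

    def publish(self):
        """Mark the object as possibly visible to more than one thread."""
        self.shared = True

    def read(self, name):
        if not self.shared:
            # Thread-local object: regular, non-transactional read.
            return self._fields[name]
        with self._lock:                # shared object: guarded access only
            return self._fields[name]

    def write(self, name, value):
        if not self.shared:
            # Thread-local object: regular, non-transactional write.
            self._fields[name] = value
            return
        with self._lock:
            self._fields[name] = value
```

The point of the sketch is the runtime check on `shared` before each access: objects that were never published skip the guarded path entirely, which is where the overhead saving would come from.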

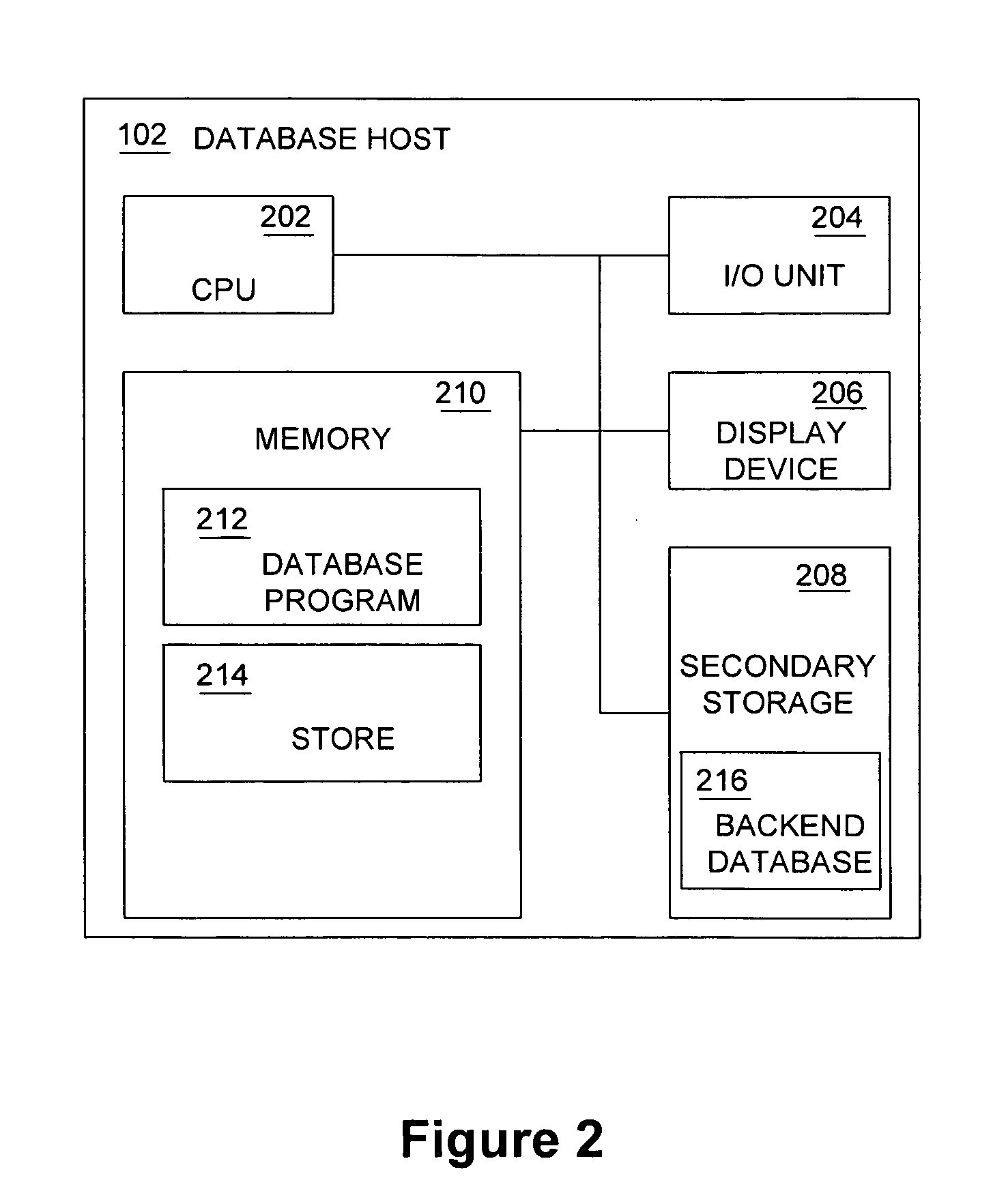

Systems and methods for synchronizing data in a cache and database

Active | US20070239797A1 | Fast read | Avoid overhead | Digital data information retrieval | Special data processing applications | Data processing system | Data store

Methods, systems, and articles of manufacture consistent with the present invention provide for managing a database. A data store is provided that is distributed over at least two sub data processing systems. A first information in the data store is associated with a first consistency level and a second information in the data store is associated with a second consistency level. At least one of the first consistency level and the second consistency level is selected according to an algorithm.

Owner:SUN MICROSYSTEMS INC

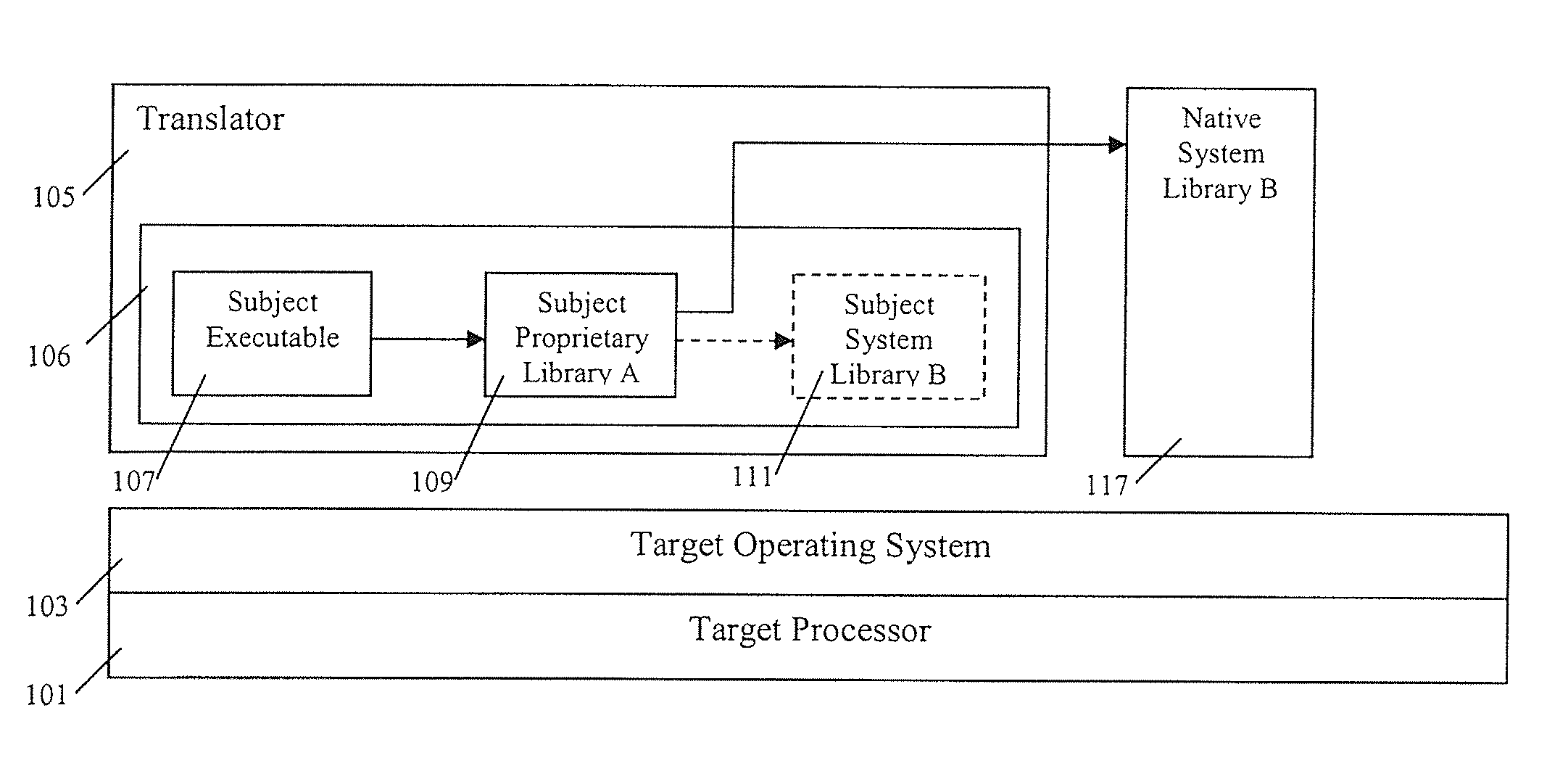

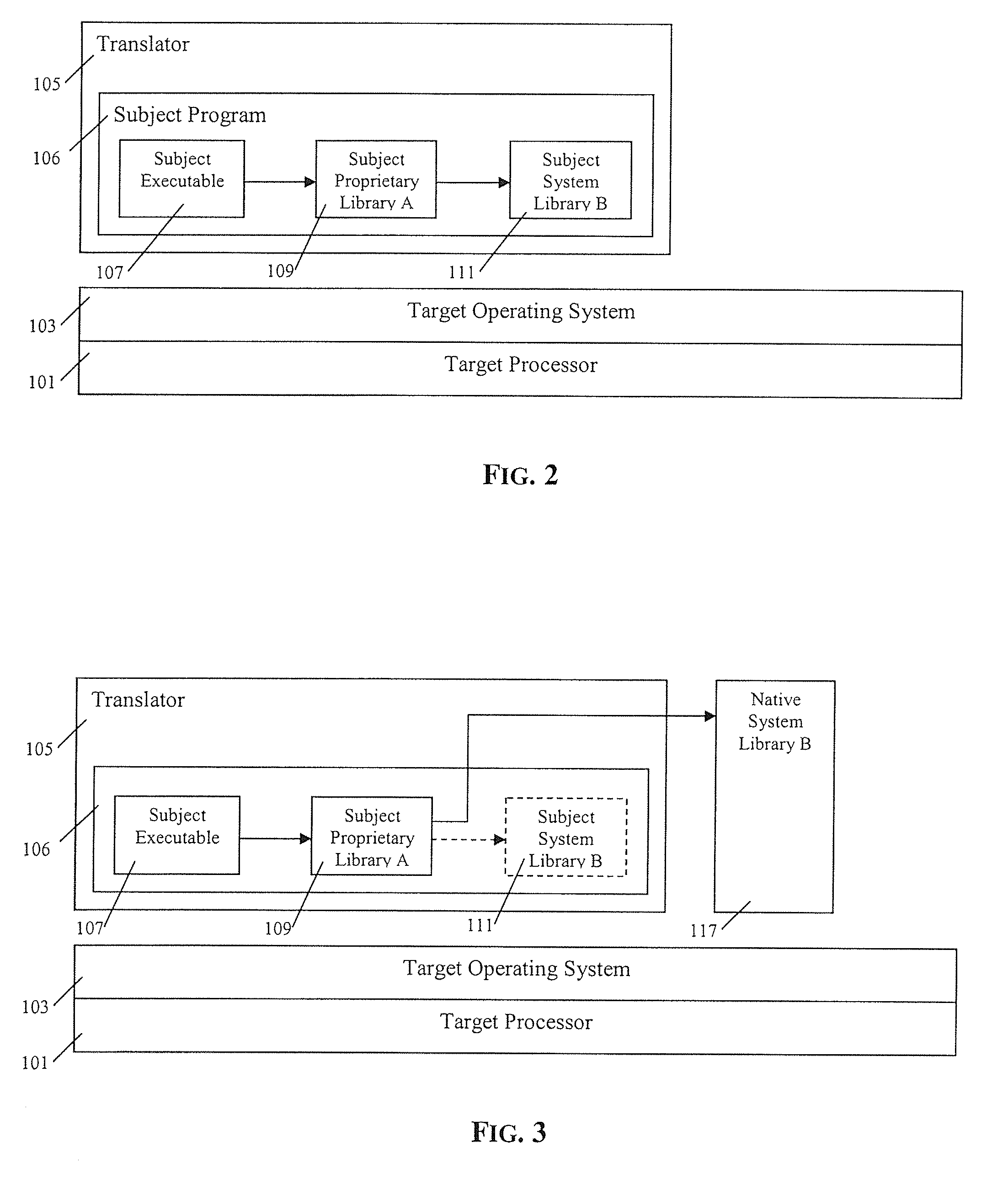

Dynamic native binding

Active | US20090100416A1 | Avoid overhead | Efficient implementation | Binary to binary | Software simulation/interpretation/emulation | Object code | Function prototype

Owner:IBM CORP

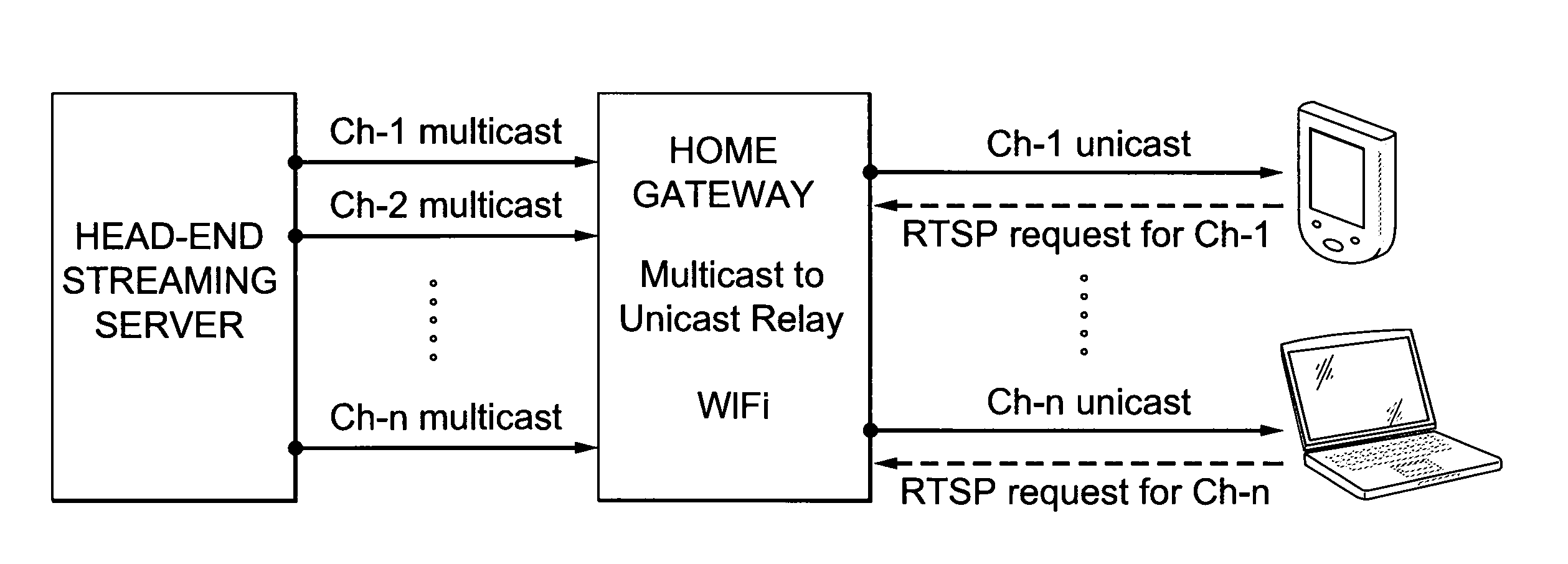

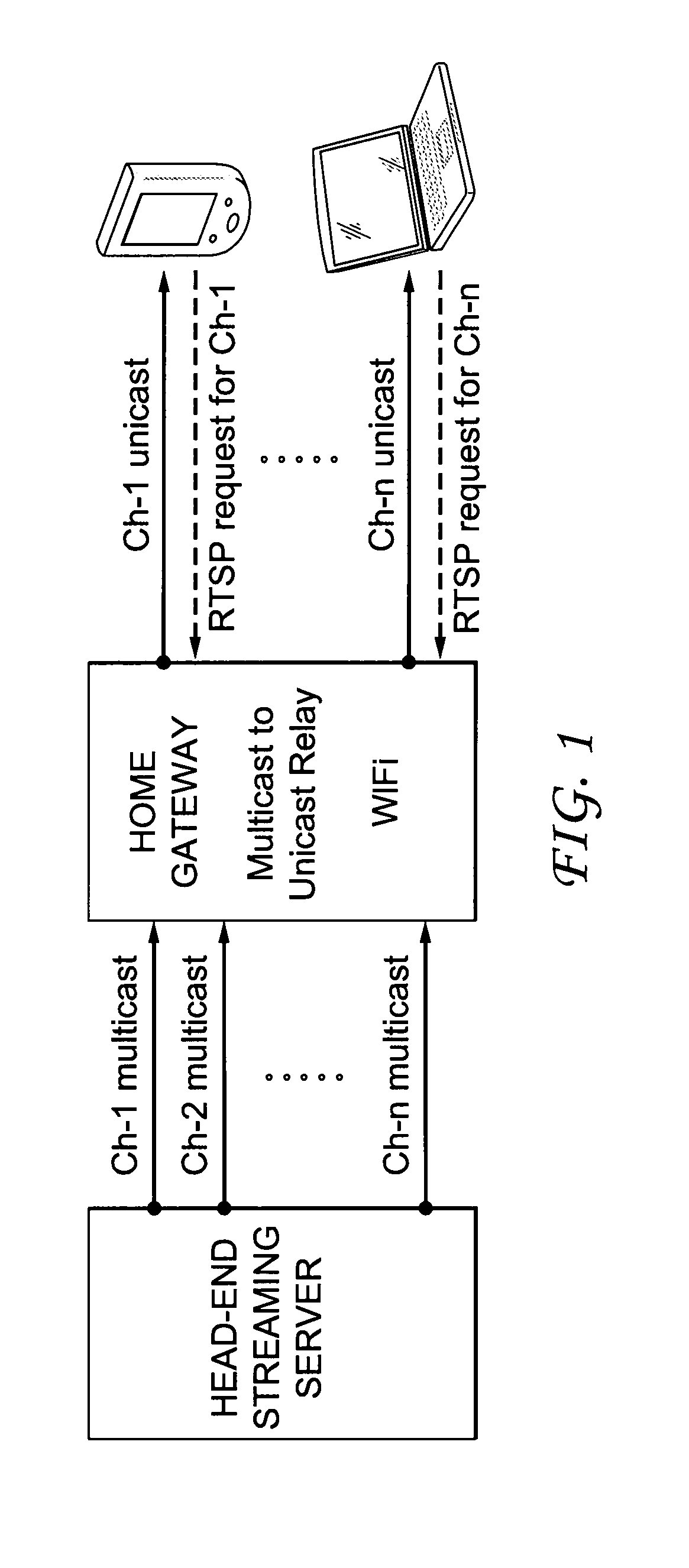

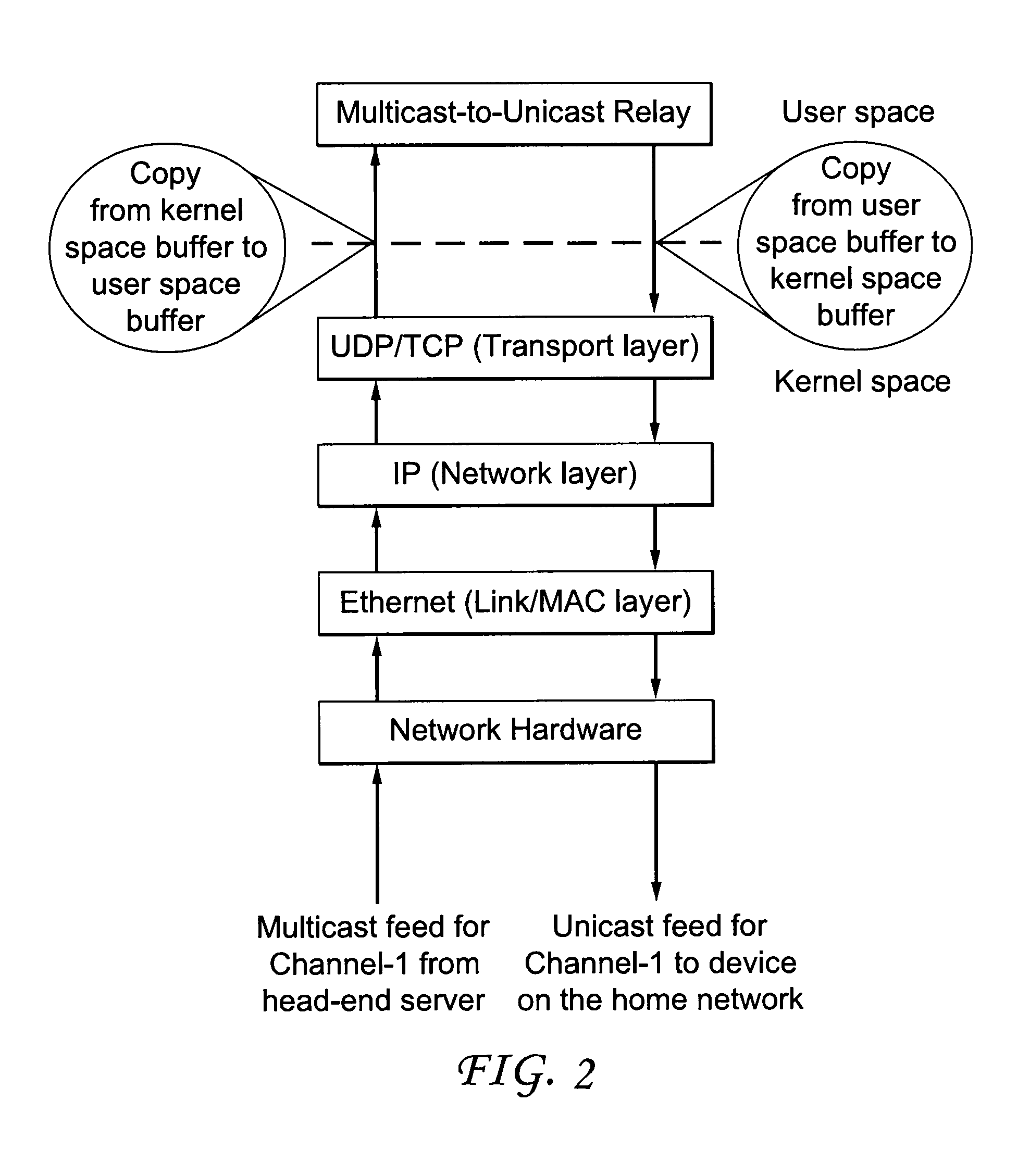

Method and apparatus for converting a multicast session to a unicast session

Inactive | US20130128889A1 | Avoid overhead | Improve error recovery of data | Data switching by path configuration | Network packet | Client-side

A method and apparatus are described including receiving a data packet having a data packet header, storing the received data packet as shared payload, determining if the received data packet is a first data packet, initializing a sequence starting number responsive to the determination, generating a new data packet header, calculating a sequence number for the received data packet using the sequence starting number, inserting the new sequence number into the new data packet header, and unicasting the new data packet header and the shared payload to a plurality of client devices.

Owner:MAGNOLIA LICENSING LLC
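
The steps listed in the abstract above can be sketched roughly as follows. This is only an illustration under stated assumptions: the names `Header` and `UnicastConverter` are hypothetical, the sequence starting number is assumed to be 0, and the sequence number is assumed to be the starting number plus the count of packets already converted, since the abstract does not give the formula.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Header:
    """Hypothetical per-client unicast header."""
    dest: str
    seq: int


class UnicastConverter:
    def __init__(self, clients: List[str]):
        self.clients = list(clients)
        self.seq_start: Optional[int] = None  # unset until the first packet arrives
        self.count = 0                        # packets converted so far

    def convert(self, payload: bytes) -> List[Tuple[Header, bytes]]:
        shared = payload                      # payload is stored once and shared
        if self.seq_start is None:            # is this the first data packet?
            self.seq_start = 0                # assumption: start numbering at 0
        seq = self.seq_start + self.count     # assumption: start + offset
        self.count += 1
        # One new header per client, all pointing at the same payload object.
        return [(Header(dest=c, seq=seq), shared) for c in self.clients]
```

Each client gets its own small header while the payload bytes are referenced rather than copied, which matches the "shared payload" wording of the abstract.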

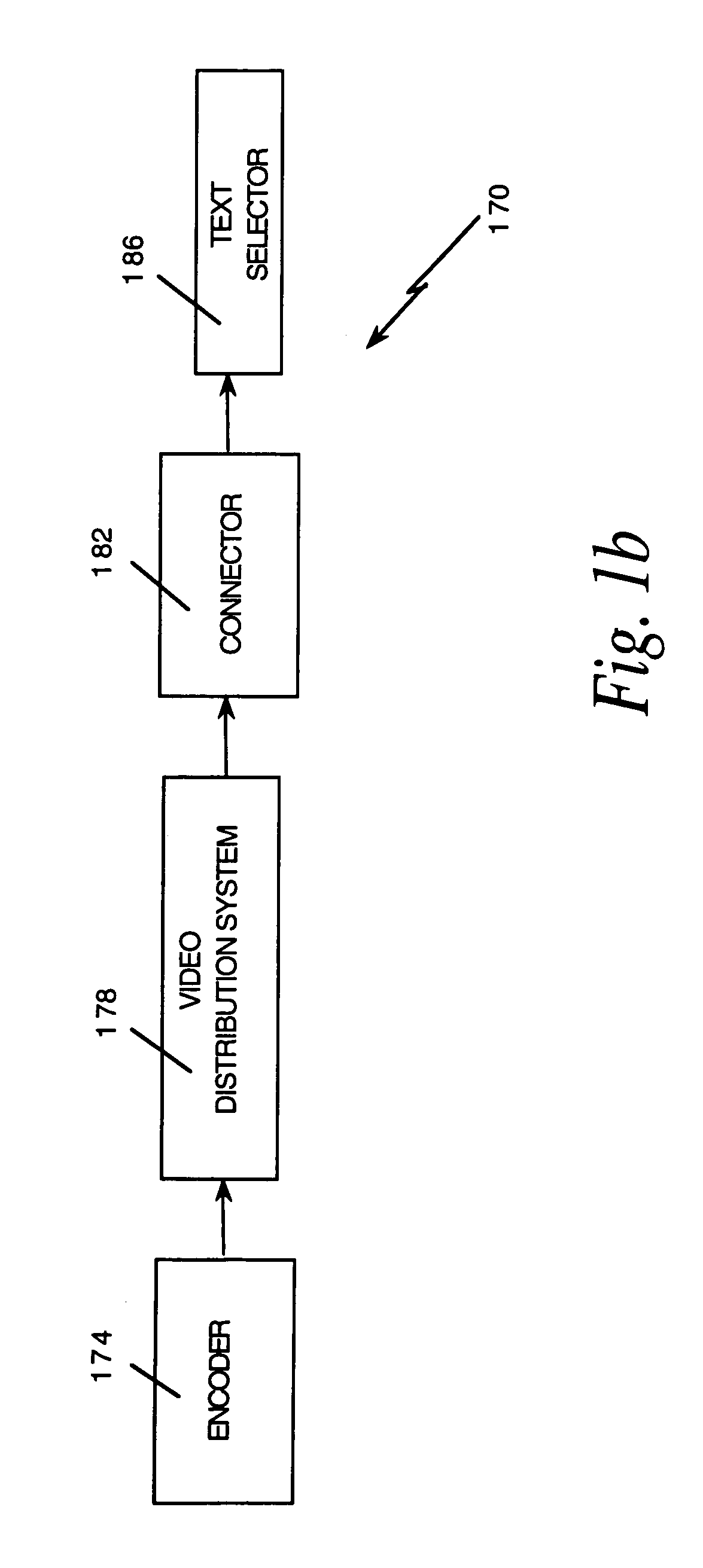

Electronic book connection to world watch live

Inactive | US7849393B1 | High quality video | Quickly educated | Television system details | Analogue secrecy/subscription systems | Relational database | Electronic book

An electronic book selection and delivery system distributes text to subscribers. The system includes the ability to use electronic links as well as a system for creating electronic links between specific electronic books and other electronic files. The links may be used or accessed by a menu system or by operation of a cursor and a select button. The other electronic files could be portions of a specific electronic book, such as a Table of Contents. The other electronic files could also exist external to a specific electronic book. For example, definitions provided in an electronic English-language dictionary could be linked to terms contained in an electronic book. The electronic links may be created by the book publisher or may be subscriber-defined. The links may use standard programming language such as hypertext markup language (HTML). The links may be established through use of a relational database.

Owner:DISCOVERY PATENT HLDG

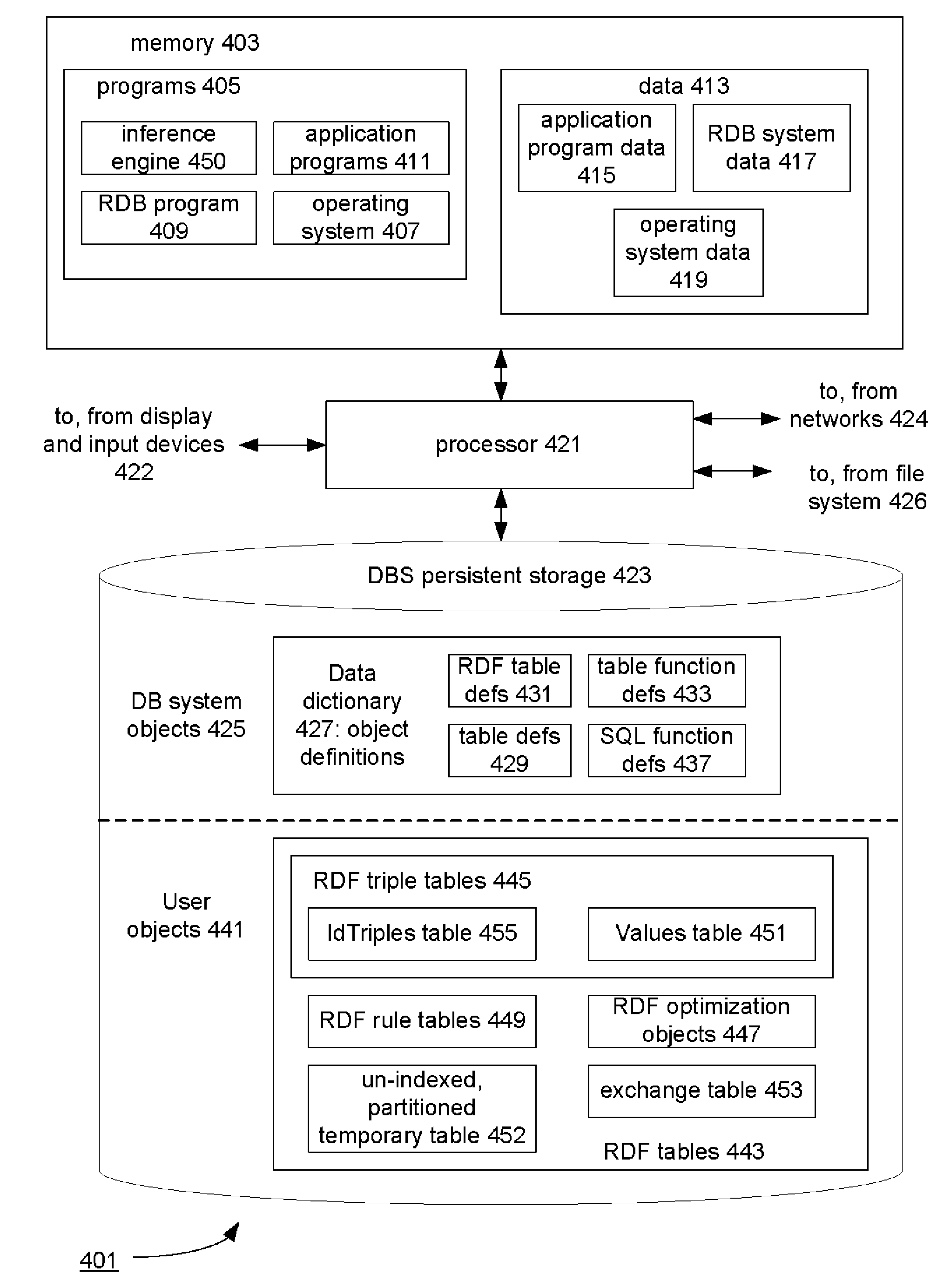

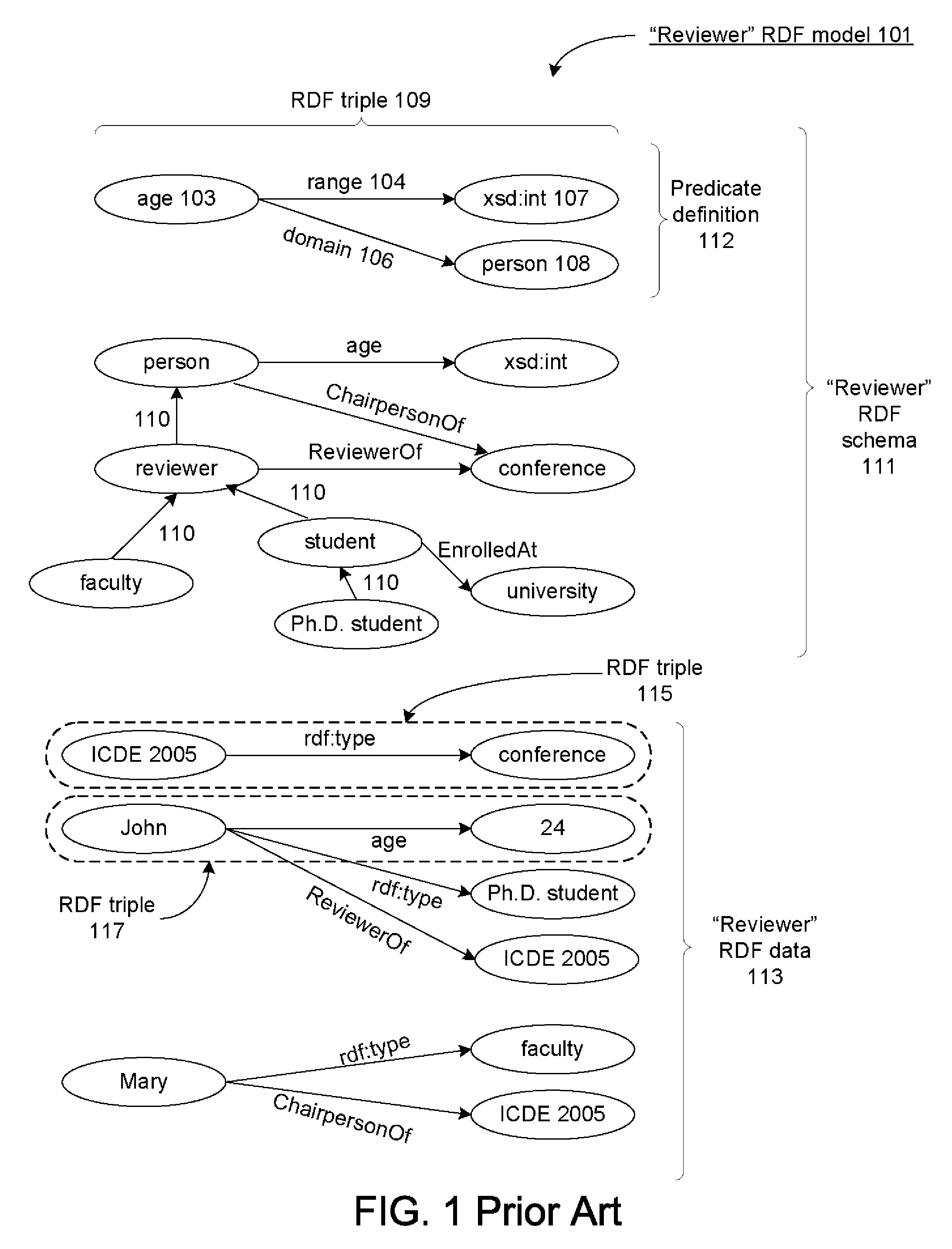

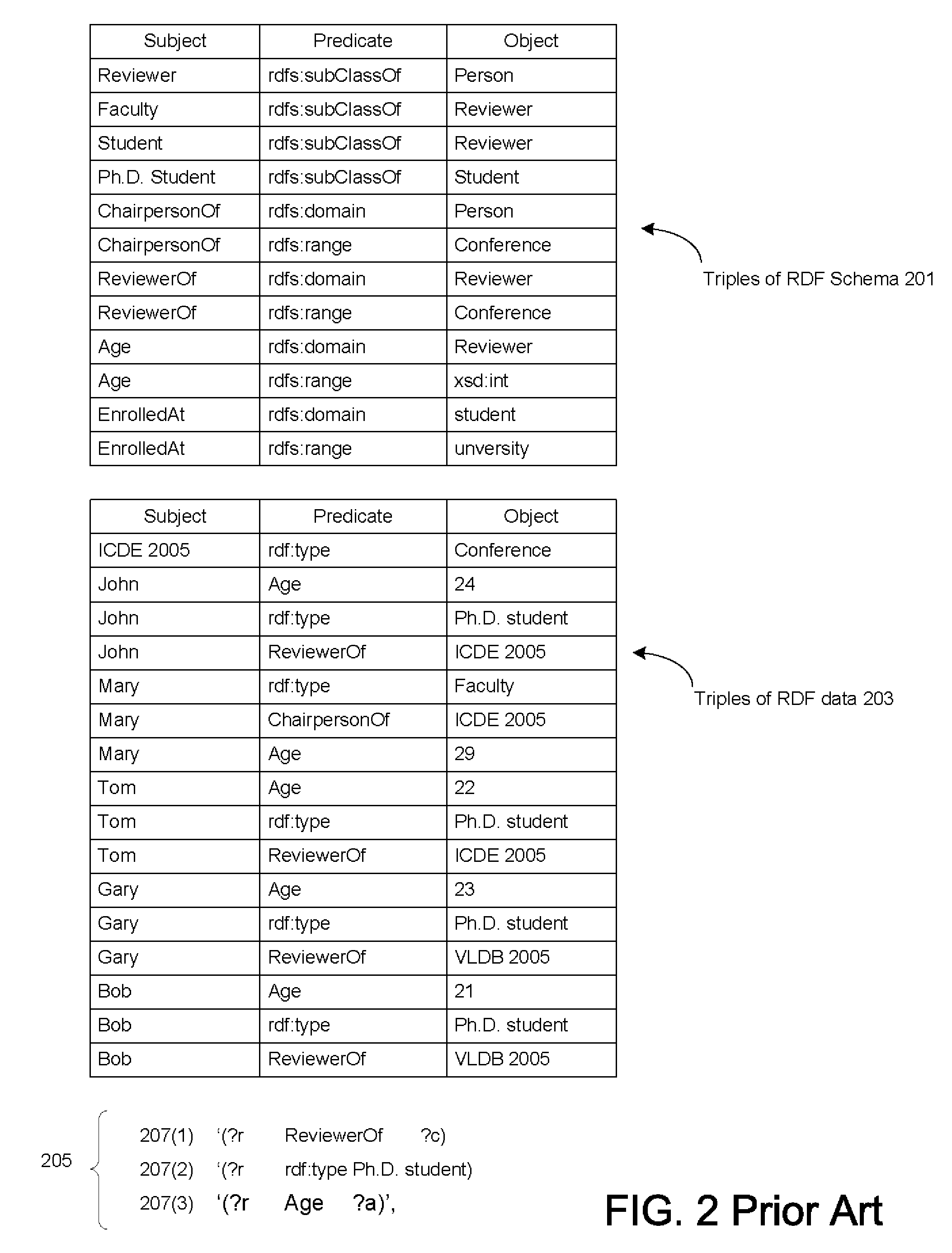

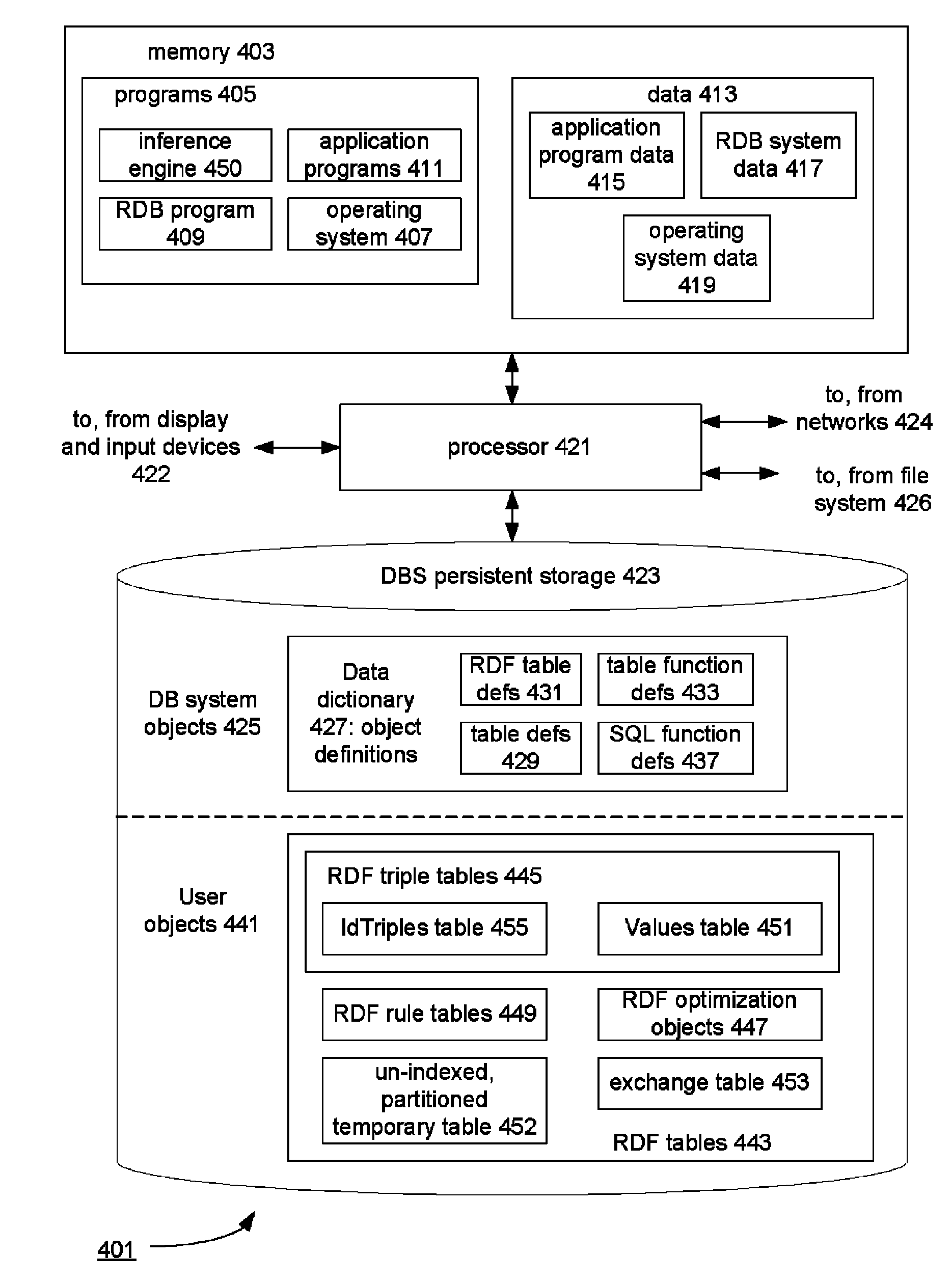

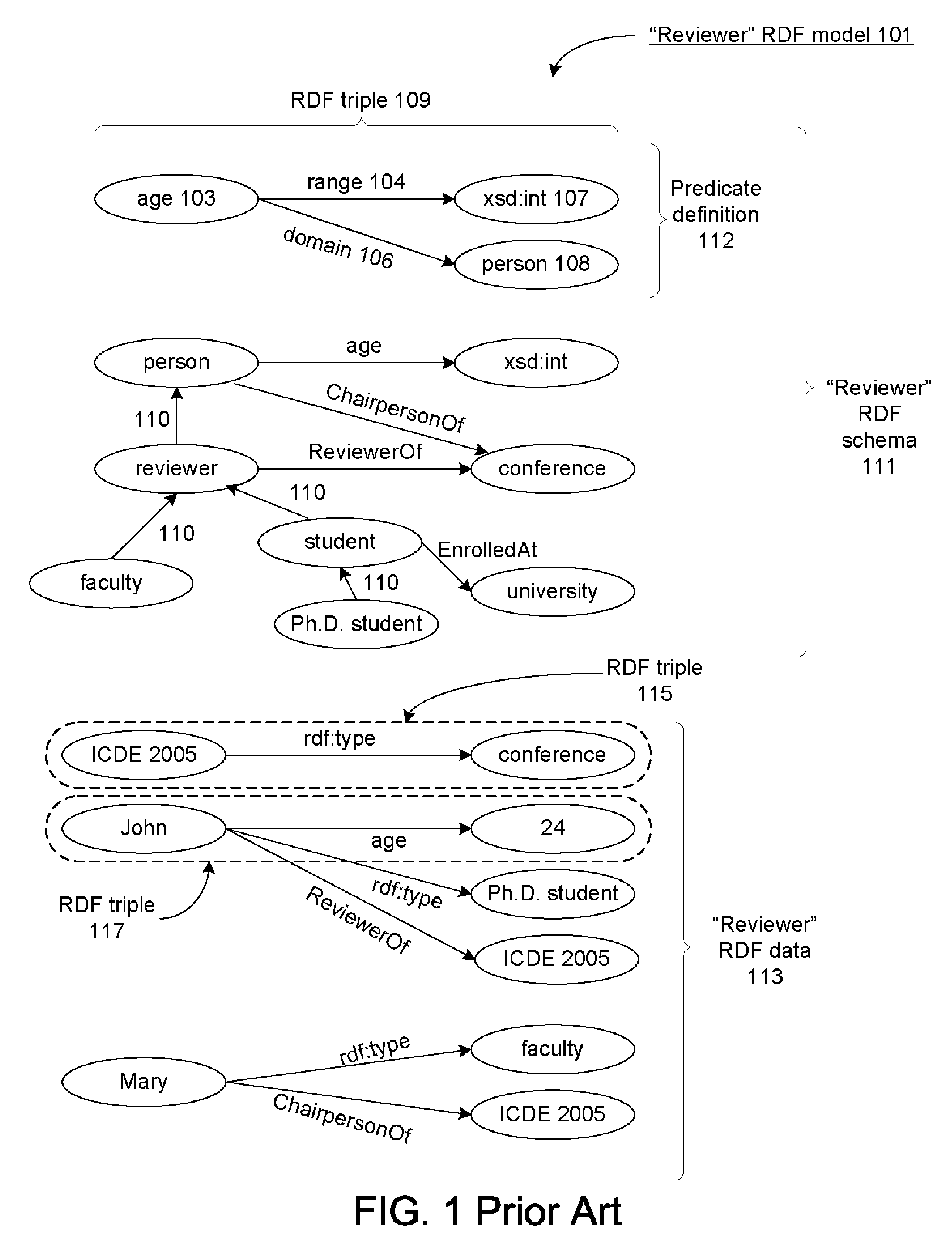

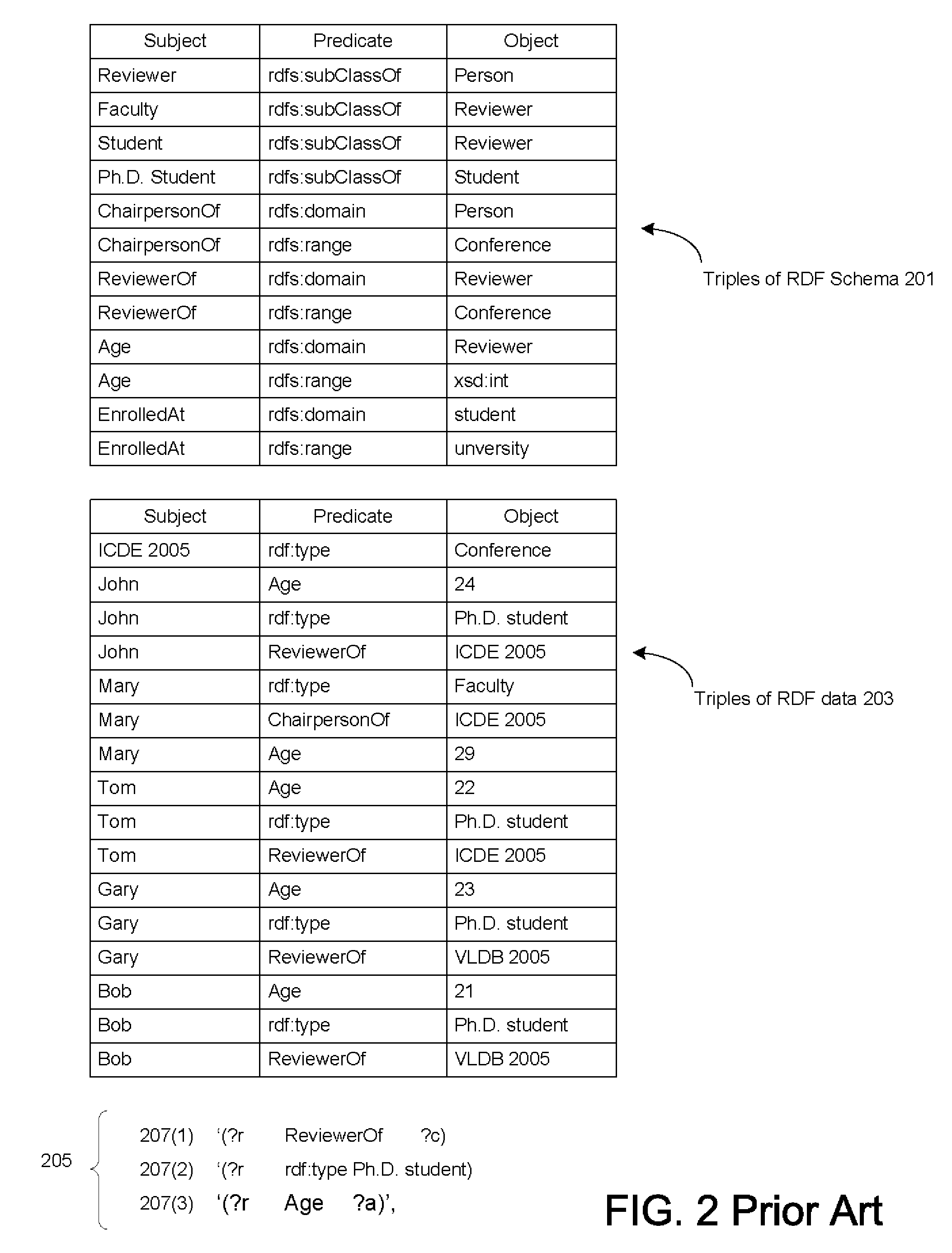

Database-based inference engine for RDFS/OWL constructs

Active | US20100036788A1 | Improve efficiency | Avoid overhead | Digital data processing details | Knowledge representation | Incremental maintenance | Relational database

An un-indexed, partitioned temporary table and an exchange table are used in the inferencing of semantic data in a relational database system. The exchange table has the same structure as a semantic data table storing the semantic data. In the inferencing process, a new partition is created in the semantic data table. Inference rules are executed on the semantic data table, and any newly inferred semantic data generated is added to the temporary table. Once no new data is generated, the inferred semantic data is copied from the temporary table into the exchange table. Indexes that are the same as indexes for the semantic data table are built for the exchange table. The indexed data in the exchange table is then exchanged into the new partition in the semantic data table. By use of the un-indexed, partitioned temporary table, incremental maintenance of indexes is avoided, thus allowing for greater efficiency.

Owner:ORACLE INT CORP

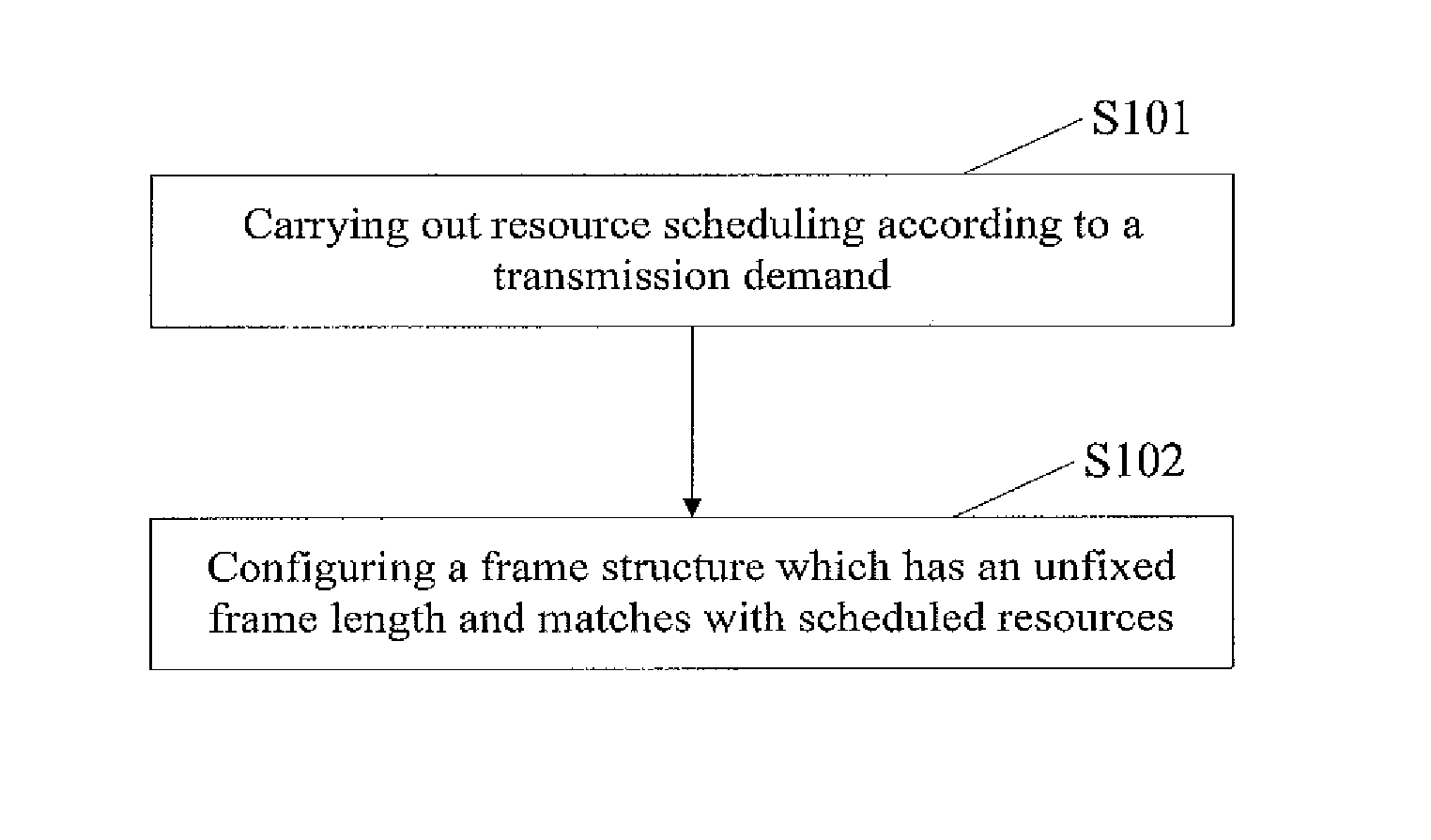

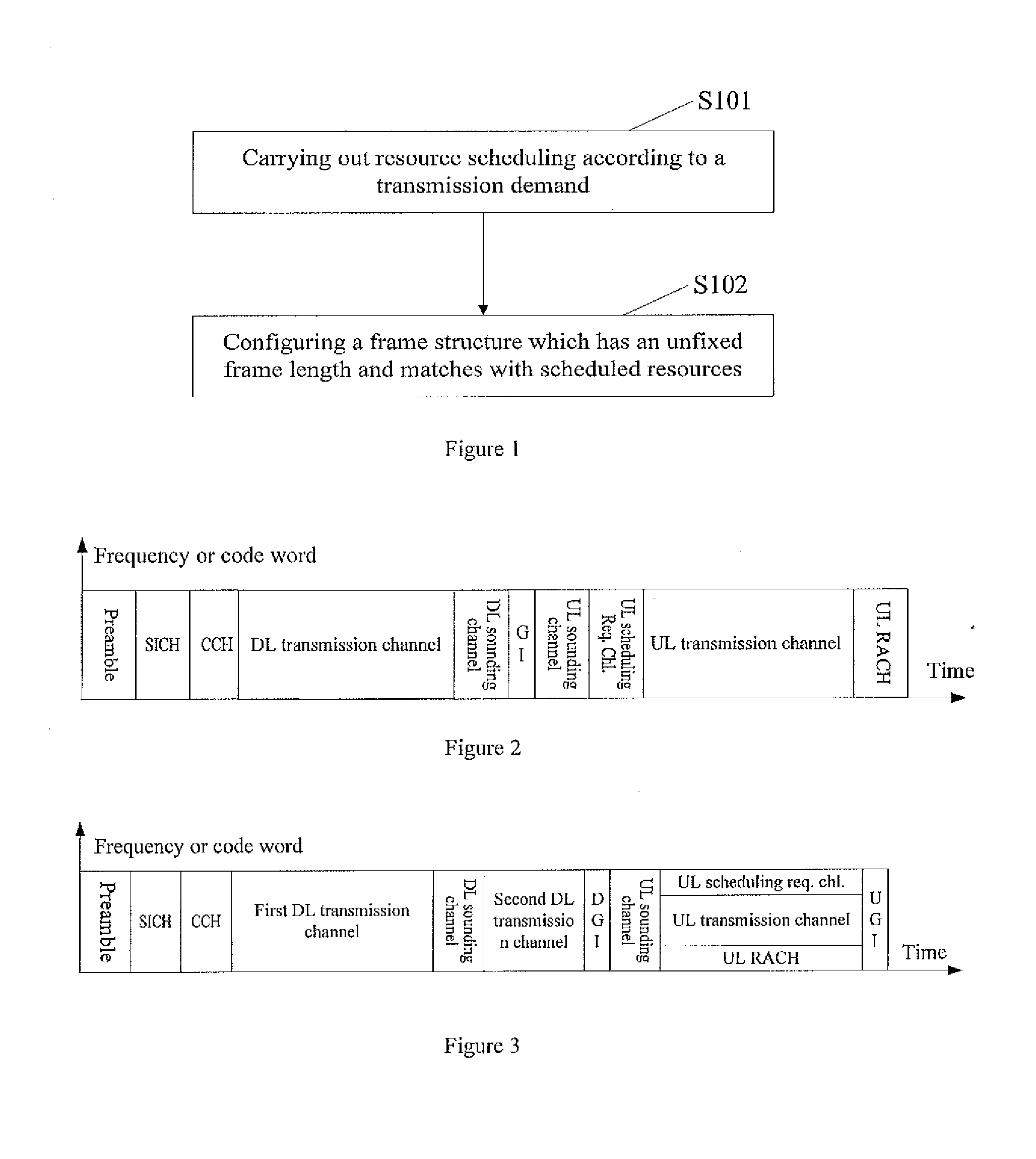

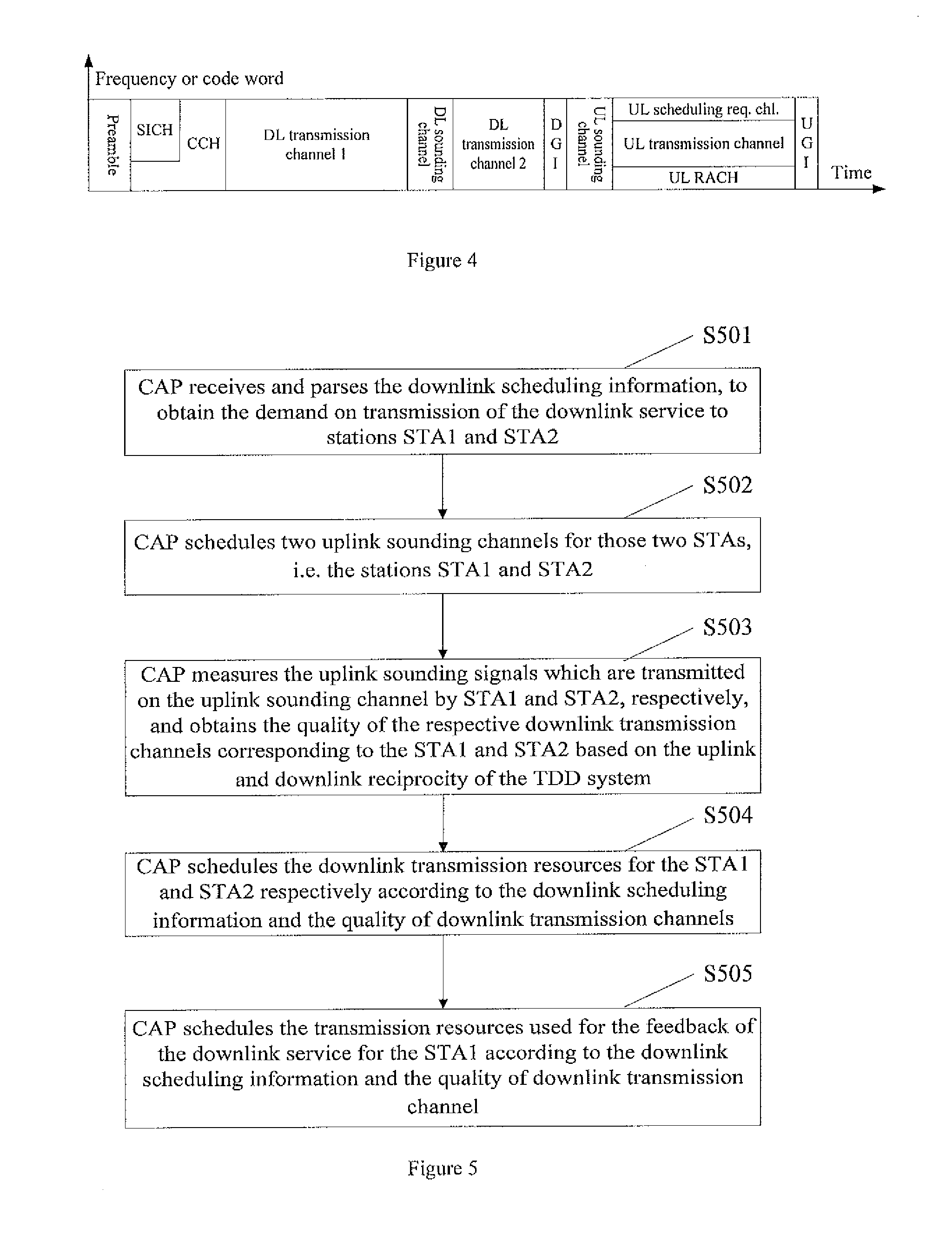

Resource Scheduling Method and Device

Active | US20140064206A1 | Easy to adapt | Improve system efficiency | Network traffic/resource management | Wireless communication services | Fixed frame | Distributed computing

Provided are a resource scheduling method and device. The method comprises scheduling resources according to transmission demands and configuring a frame structure with a non-fixed frame length matching the scheduled resources. The method prevents the waste of wireless resources caused by contention conflicts or random back-off, and can better adapt to the demands of different kinds of data services with varied features in the future.

Owner:BEIJING NUFRONT MOBILE MULTIMEDIA TECH

Electronic book having electronic commerce features

Inactive | US20110153464A1 | High tech aura | Easy to use | Digital data processing details | Two-way working systems | Electronic book | E-commerce

A viewer for displaying an electronic book and providing for electronic commerce. In conjunction with viewing an electronic book, a user can view information about products and services, view an on-line electronic catalog, and receive samples of products available for purchase. By entering a purchase request, the user can purchase products or services. In the case of a digital product, the user can download the purchased product directly into the viewer. The viewer also records statistics concerning purchase and information requests in order to recommend related products or services, or for directing particular types of advertisements to the user.

Owner:ADREA LLC

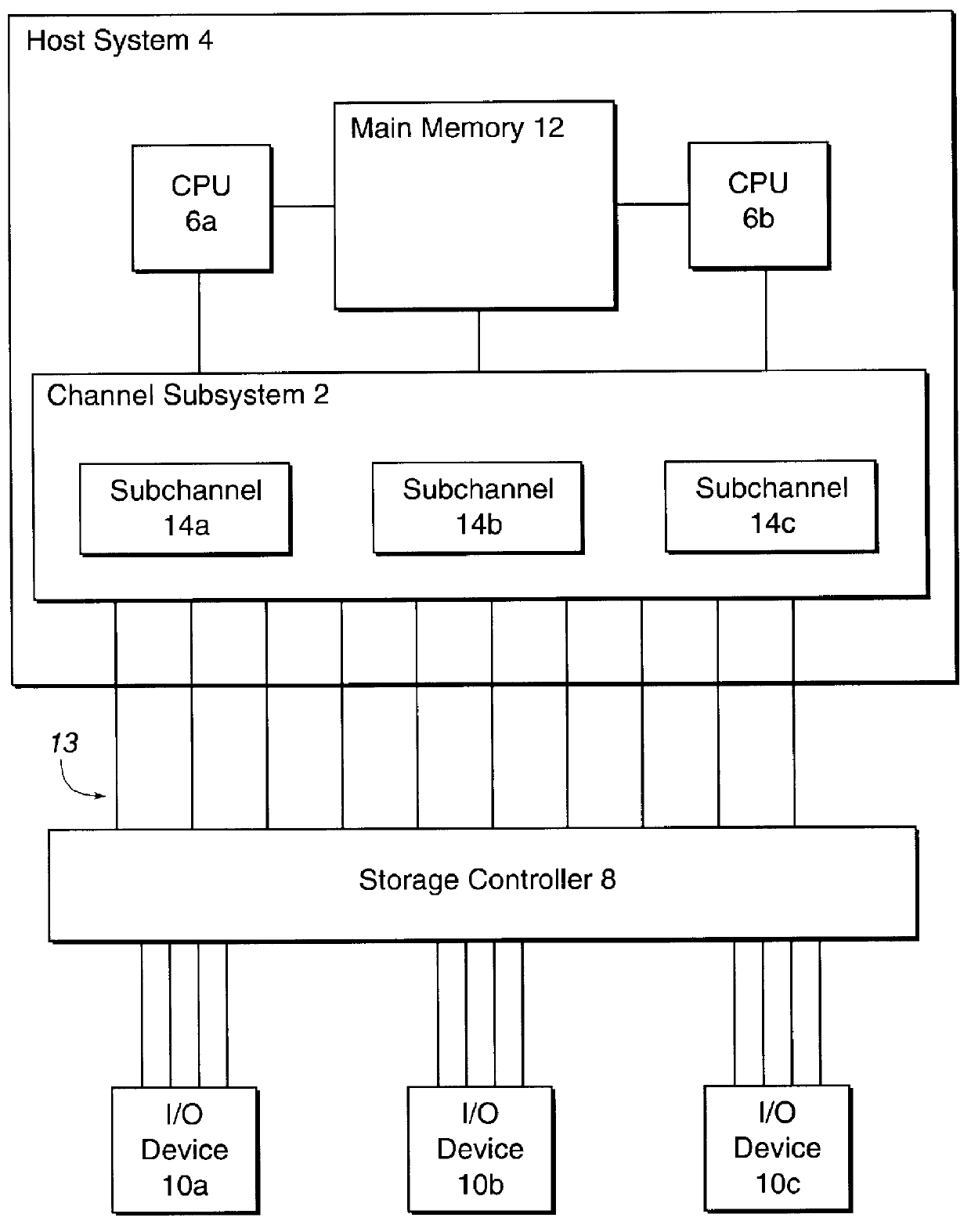

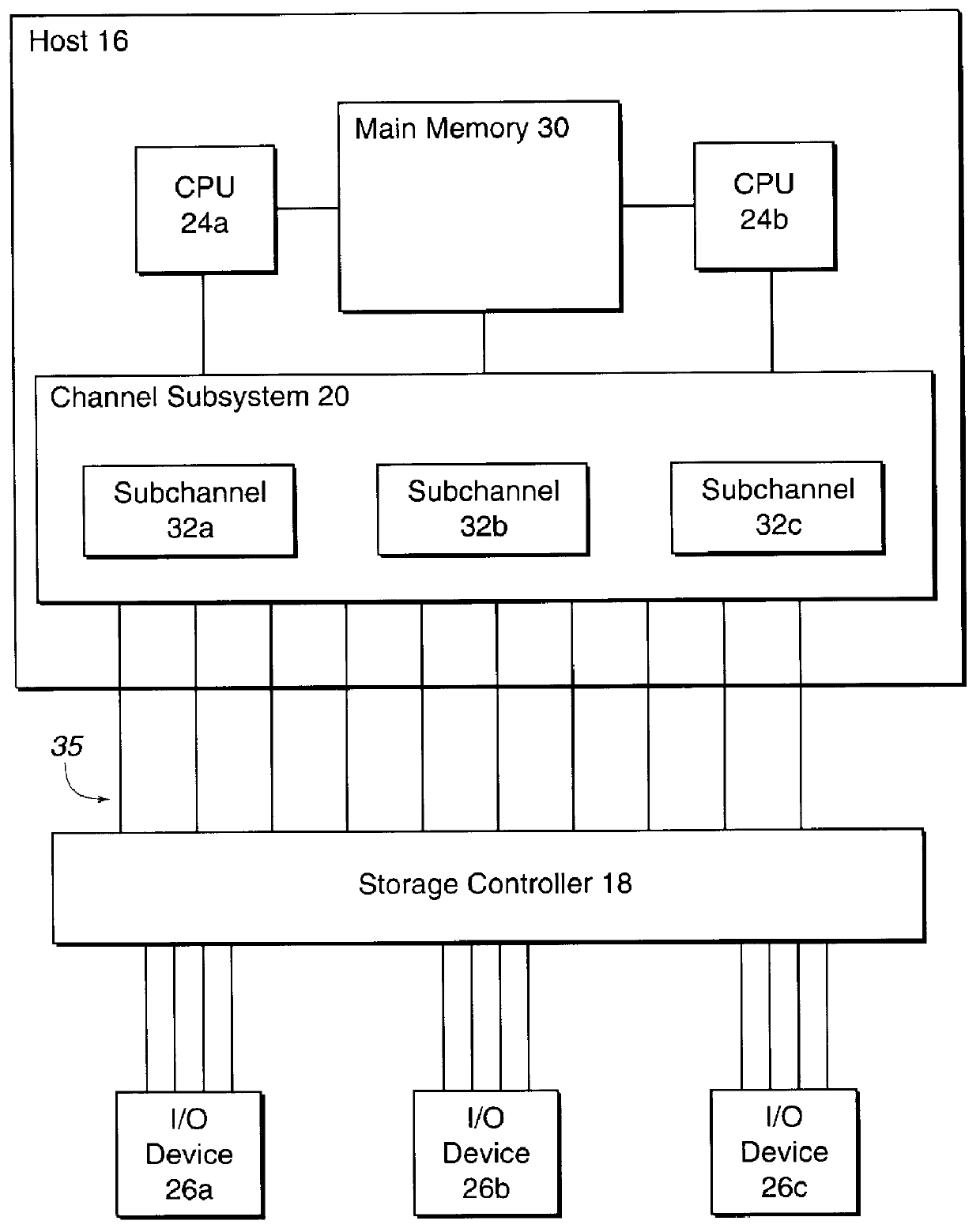

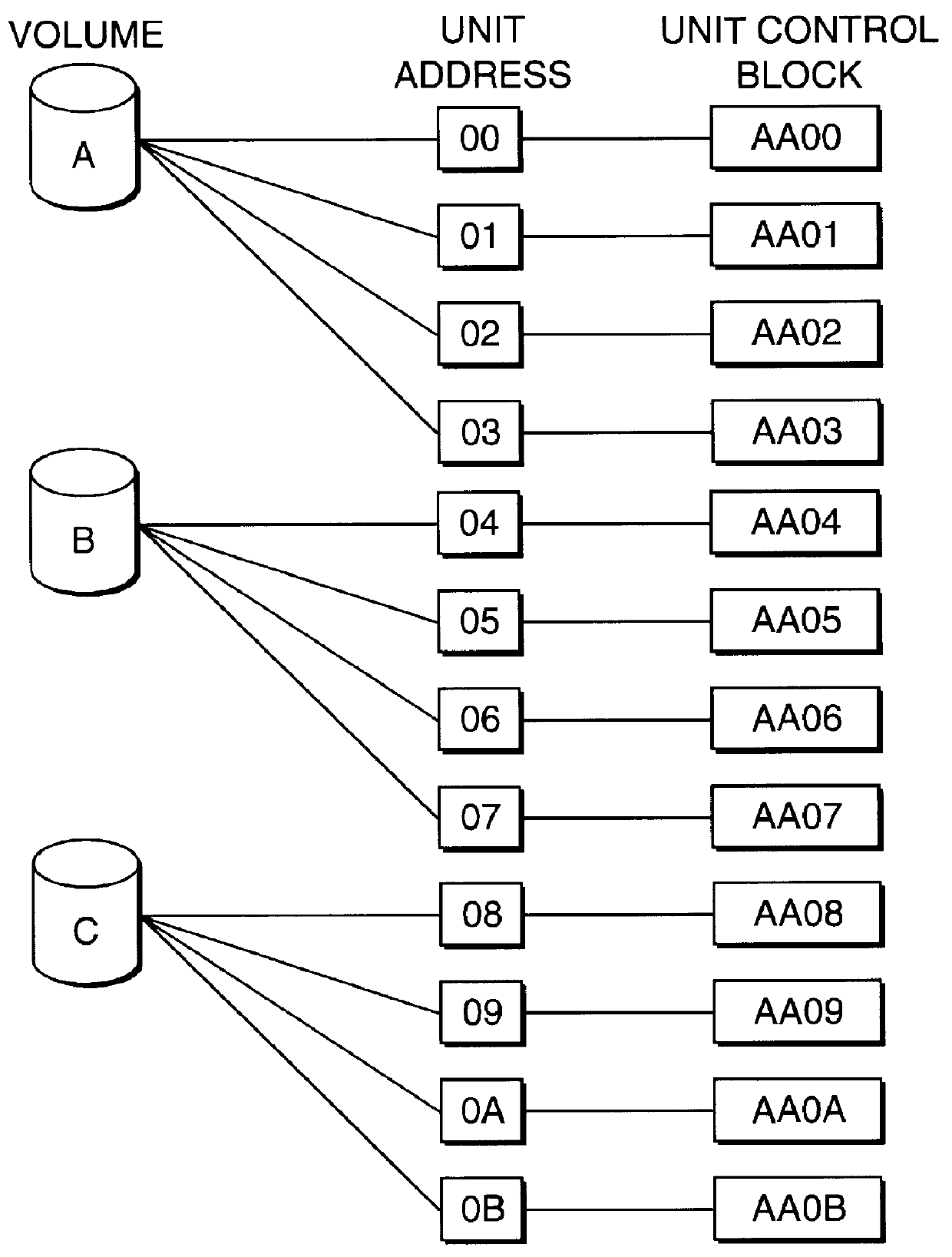

System for reassigning alias addresses to an input/output device

Inactive | US6167459A | Avoid overhead | Reduce need | Memory systems | Input/output processes for data processing | Input/output | Base address

Disclosed is a system for reassigning addresses. A first processing unit, such as a storage controller, provides at least two base addresses for addressing devices, such as volumes of a direct access storage device (DASD) and a plurality of alias addresses. An alias address associated with a base address provides an address for addressing the device addressed by the base address. The first processing unit processes a command transmitted from a second processing unit, such as a host system that accesses the DASD through the storage controller, to reassign an alias address from a first base address to a second base address. The first base address addresses a first device and the second base address addresses a second device. The first processing unit then indicates that the alias address to reassign is not associated with the first base address and is associated with the second base address. The second device addressed by the second base address is capable of being addressed by the reassigned alias address. The first processing unit signals the second processing unit after the first processing unit indicates that the alias address was reassigned from the first base address to the second base address.

Owner:IBM CORP

Database-based inference engine for RDFS/OWL constructs

Active | US8401991B2 | Improve efficiency | Avoid overhead | Digital data processing details | Knowledge representation | Incremental maintenance | Relational database

An un-indexed, partitioned temporary table and an exchange table are used in the inferencing of semantic data in a relational database system. The exchange table has the same structure as a semantic data table storing the semantic data. In the inferencing process, a new partition is created in the semantic data table. Inference rules are executed on the semantic data table, and any newly inferred semantic data generated is added to the temporary table. Once no new data is generated, the inferred semantic data is copied from the temporary table into the exchange table. Indexes that are the same as indexes for the semantic data table are built for the exchange table. The indexed data in the exchange table is then exchanged into the new partition in the semantic data table. By use of the un-indexed, partitioned temporary table, incremental maintenance of indexes is avoided, thus allowing for greater efficiency.

Owner:ORACLE INT CORP

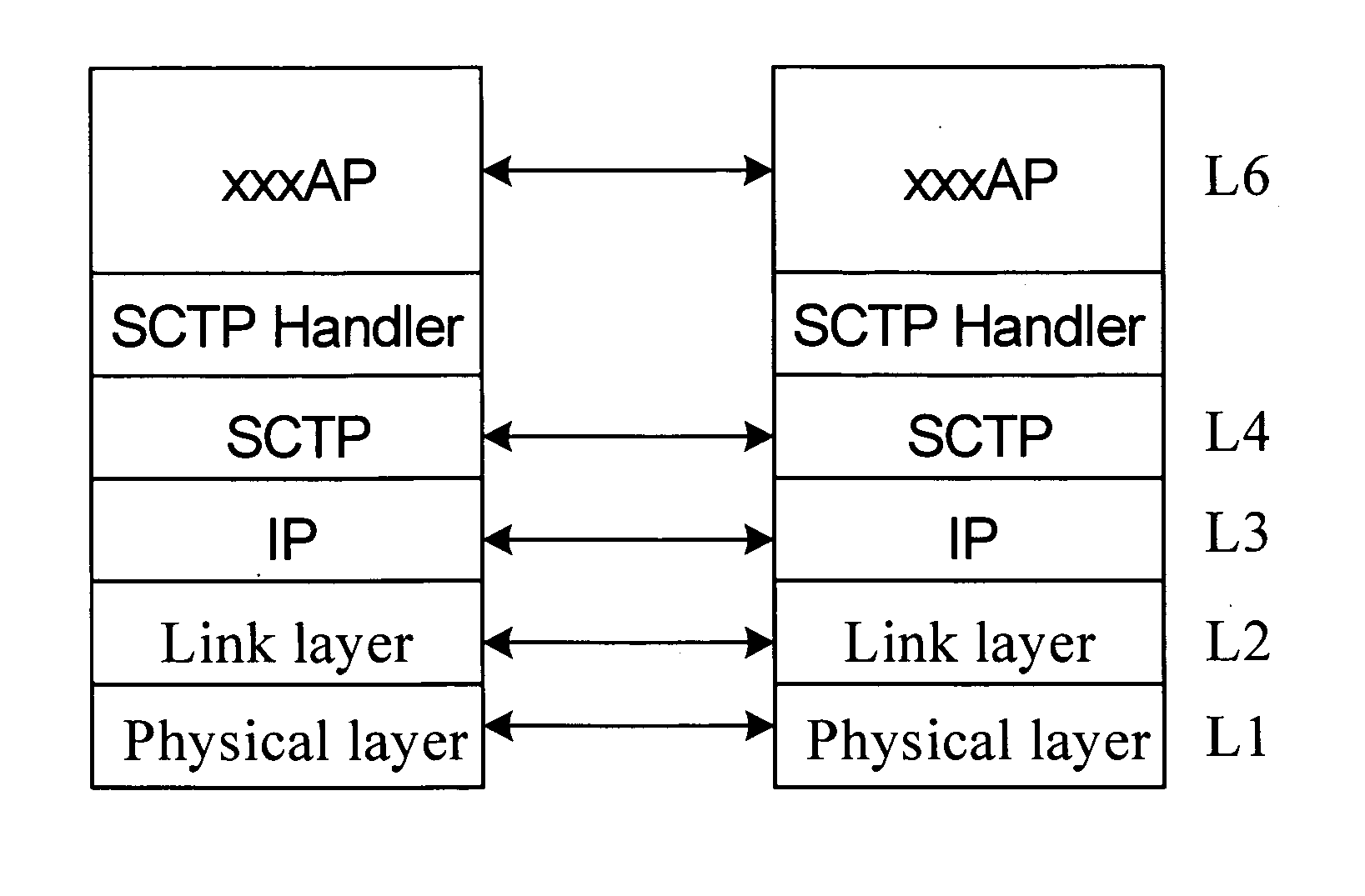

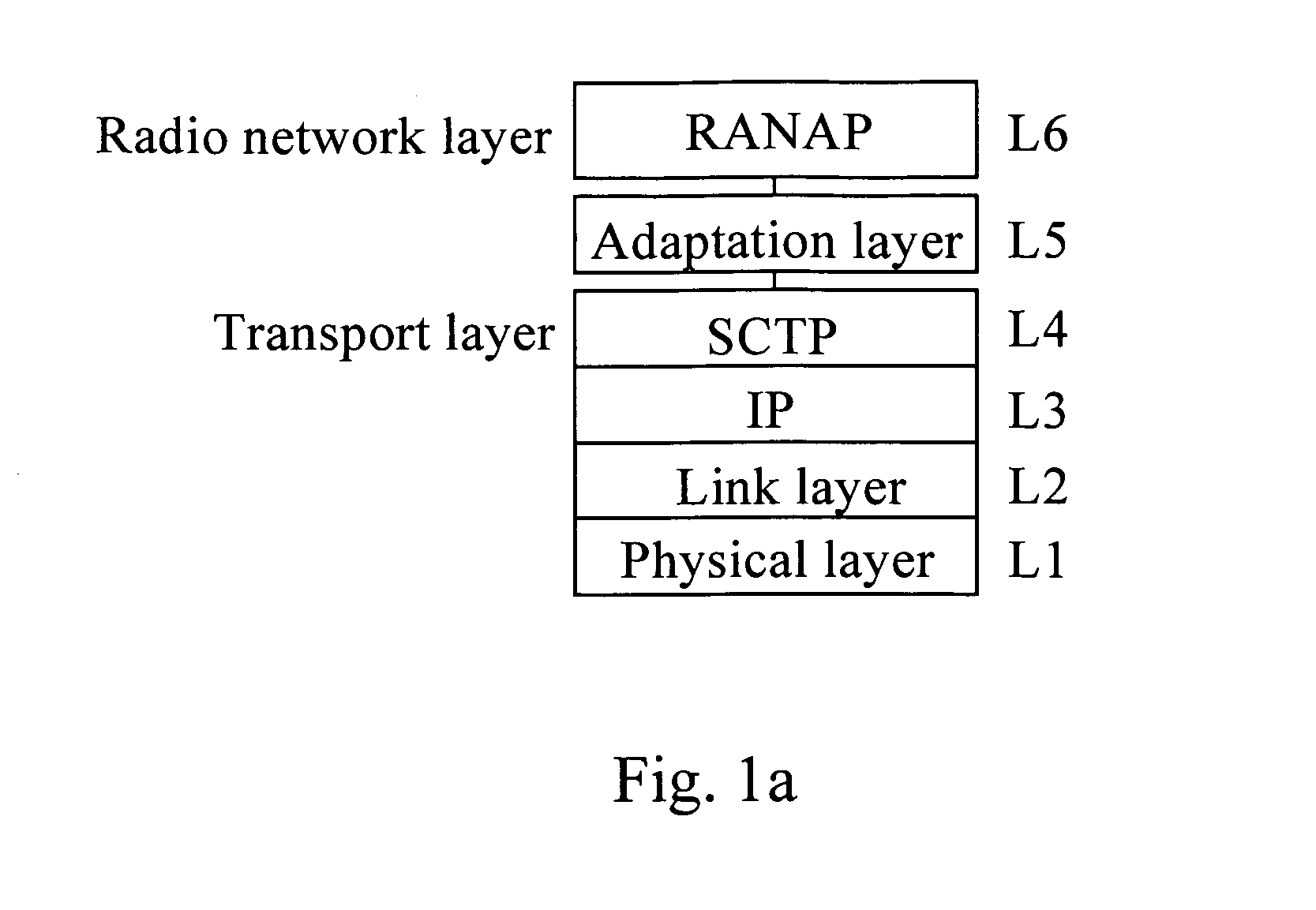

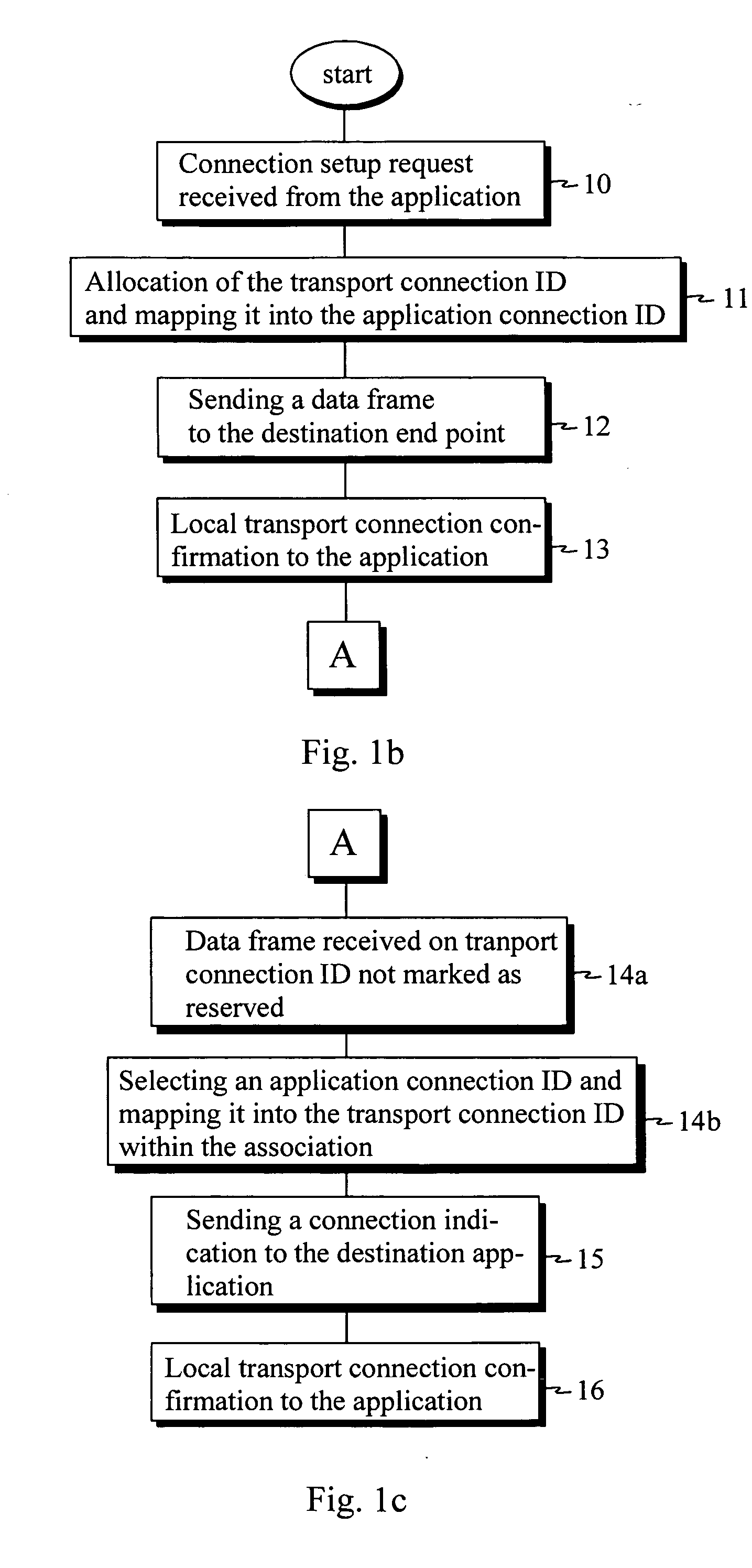

Method and system for sending connection-oriented or connectionless data

Inactive | US20050117529A1 | Mechanism is prevented | Avoid overhead | Error prevention | Transmission systems | Stream Control Transmission Protocol | Protocol stack

The present invention describes a method, system and an interconnecting handler for sending connection-oriented or connectionless data between two endpoints in a protocol architecture. The transport protocol in the protocol architecture is in a preferred embodiment the Stream Control Transmission Protocol (SCTP). The present invention allows the setup and release of a connection when using a simplified protocol stack which is able to provide the same kind of services without using peer-to-peer messages like SCCP or SUA use. The present invention also enables discrimination between connection-oriented and connectionless services without peer-to-peer signalling.

Owner:NOKIA CORP

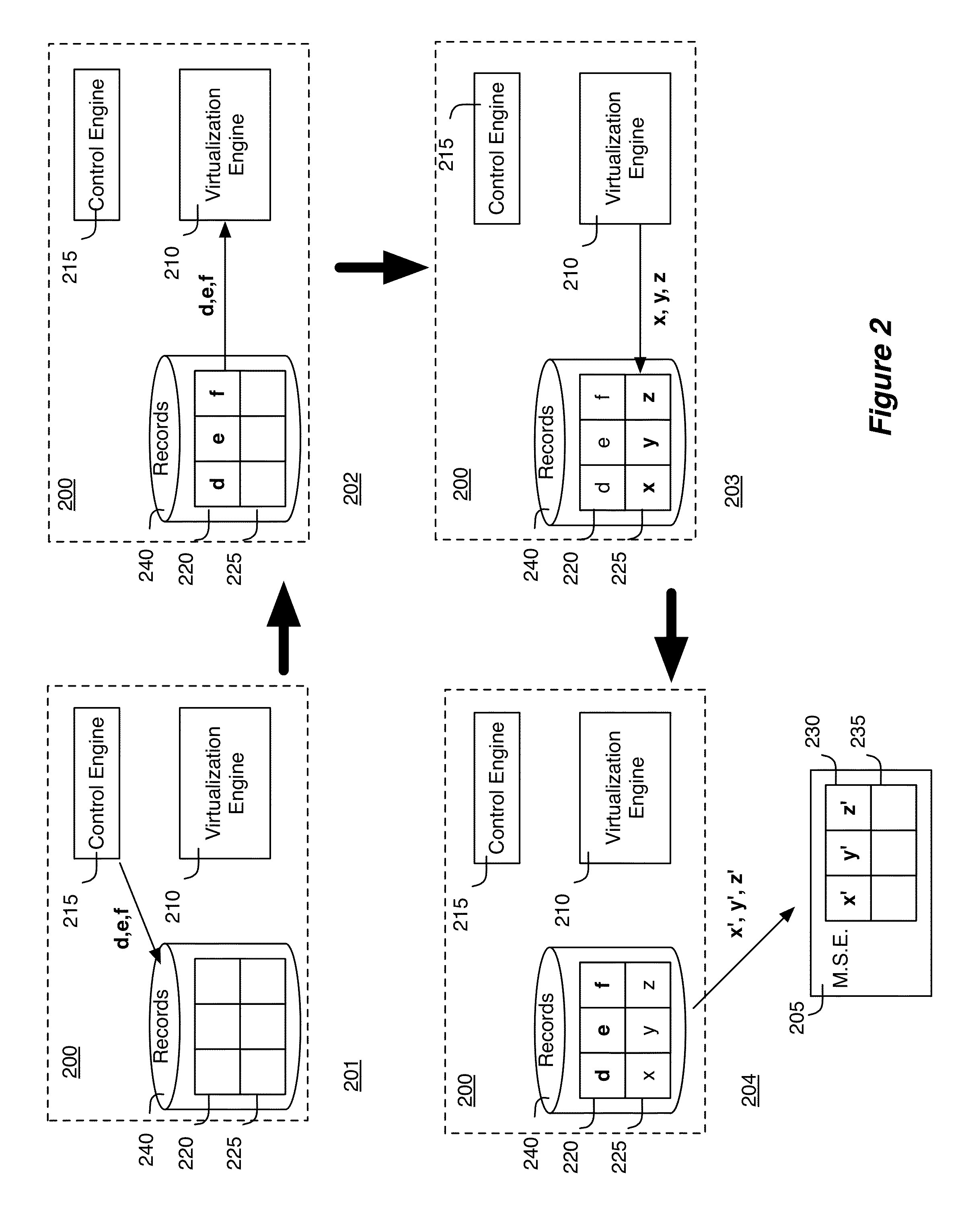

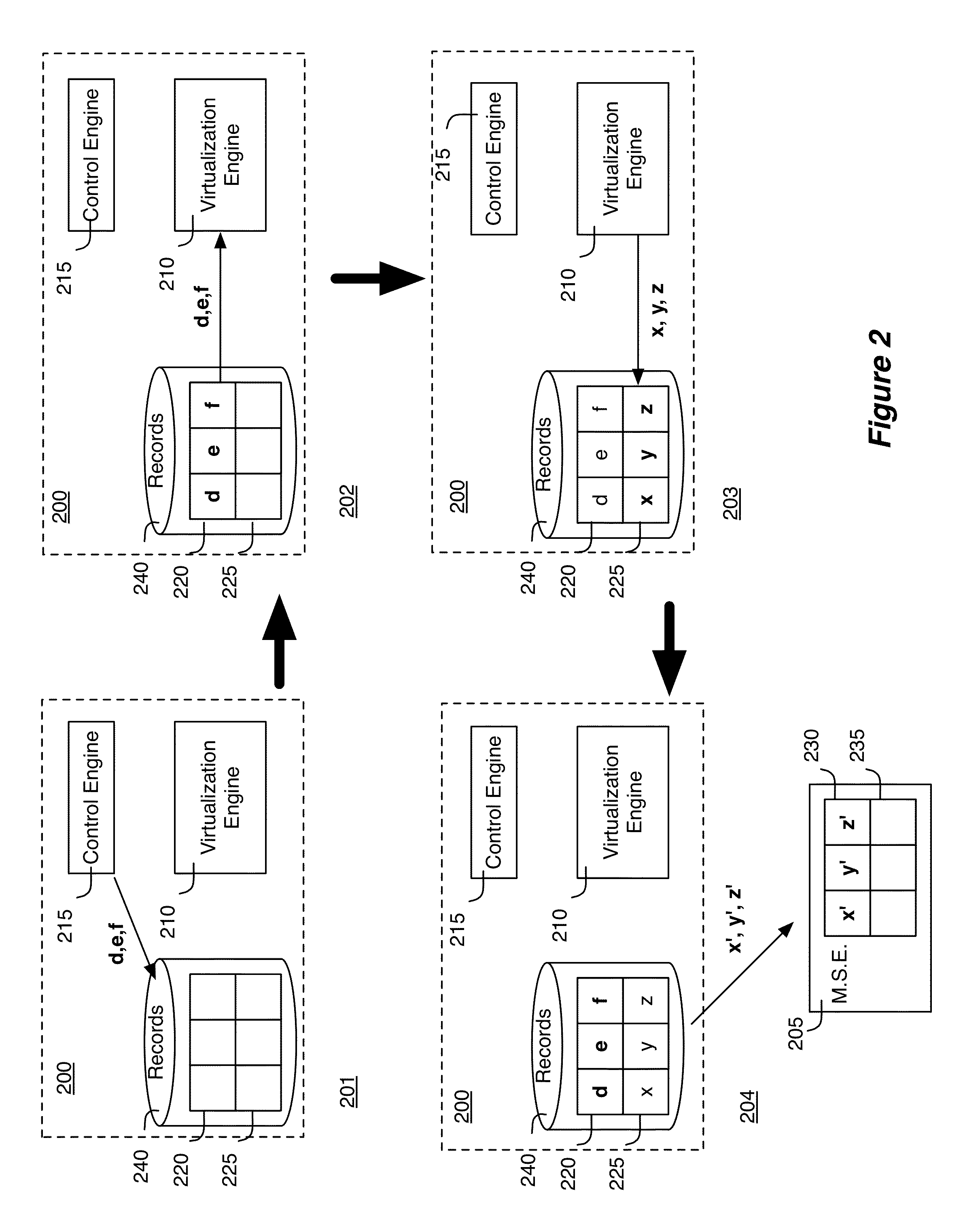

Pull-based state dissemination between managed forwarding elements

Active | US20130212246A1 | Avoid becoming scalability bottleneck | Control volume | Digital computer details | Electric controllers | Information repository | Dissemination

Owner:NICIRA

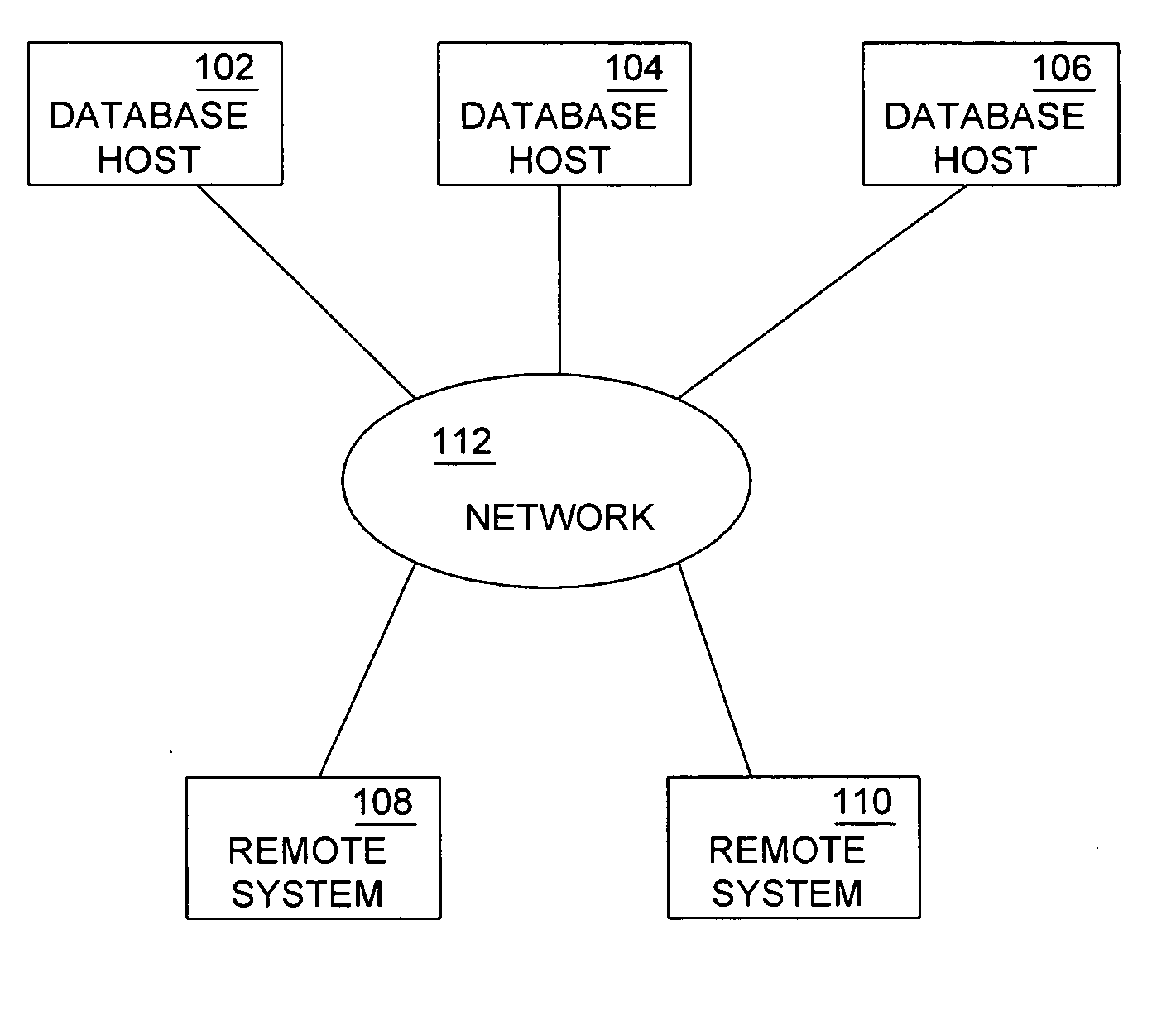

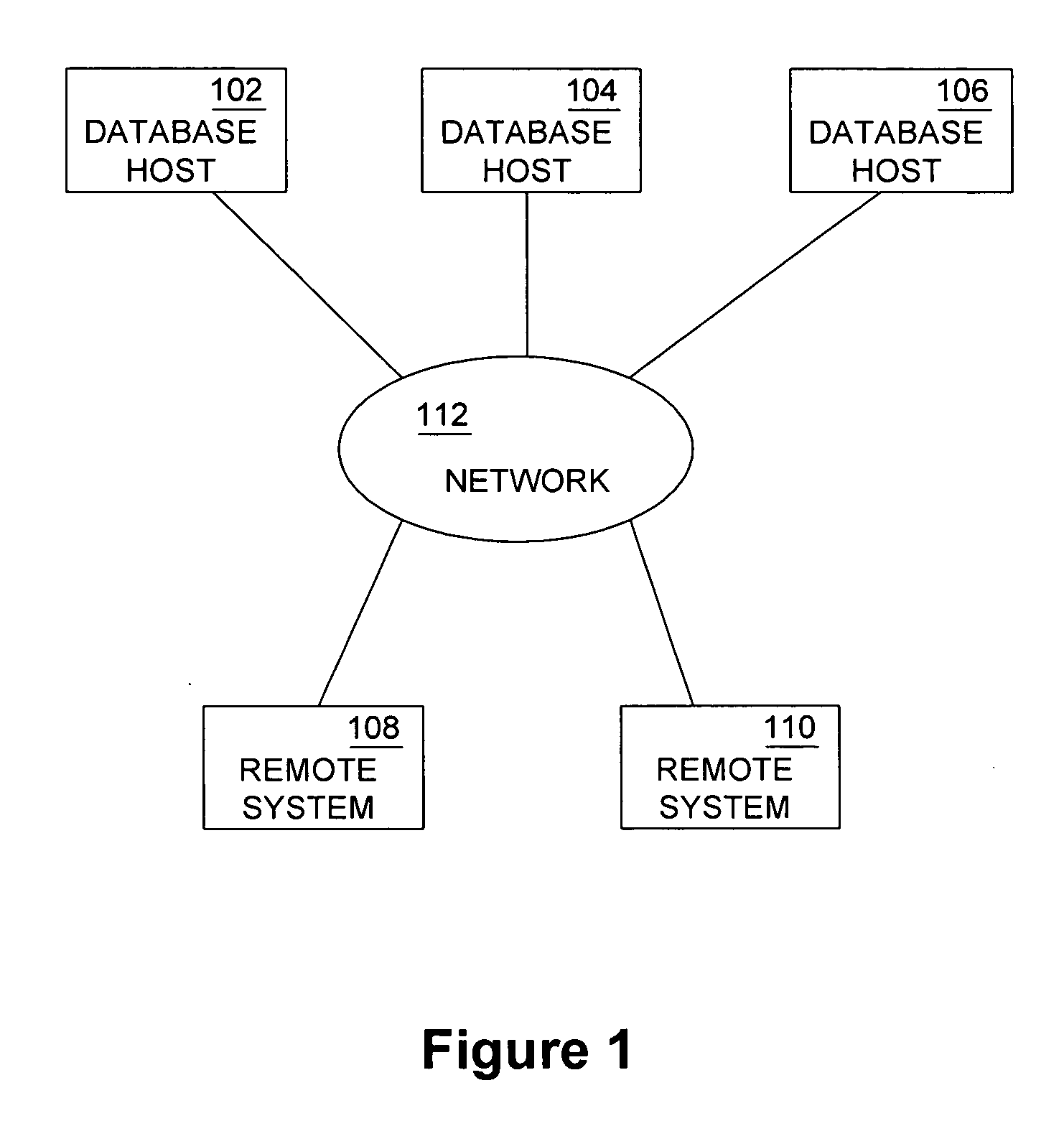

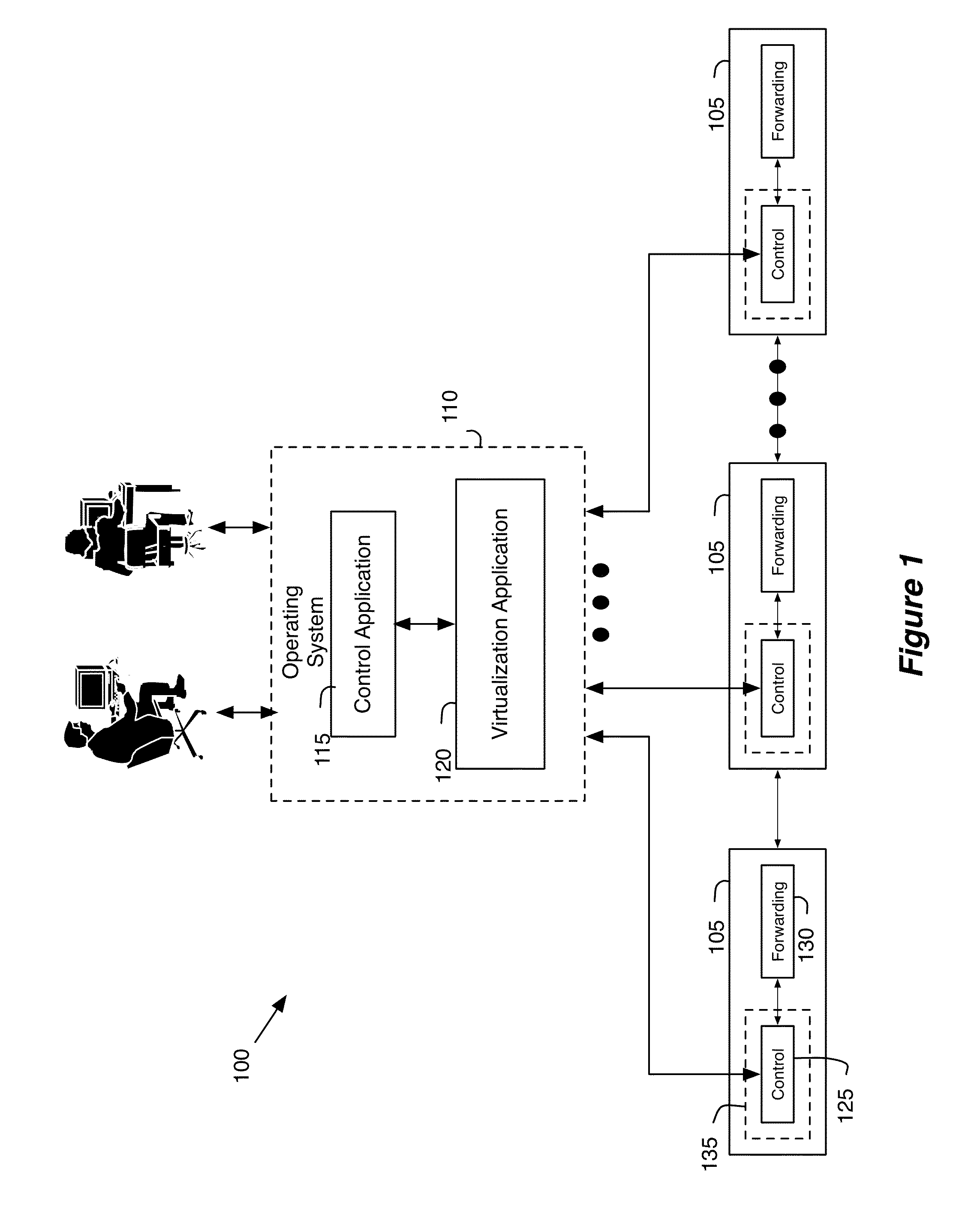

Distributed network control system

Active | US20130212148A1 | Avoid becoming scalability bottleneck | Control volume | Digital computer details | Electric controllers | Control system | Relational database

For a controller of a distributed network control system comprising several controllers for managing forwarding elements that forward data in a network, a method for managing the forwarding elements is described. The method changes a set of data tuples stored in a relational database of the first controller that stores data tuples containing data for managing a set of forwarding elements. The method sends the changed data tuples to at least one of the other controllers of the network control system. The other controller receiving the changed data tuples processes the changed data tuples and sends the processed data tuples to at least one of the managed forwarding elements.

Owner:NICIRA

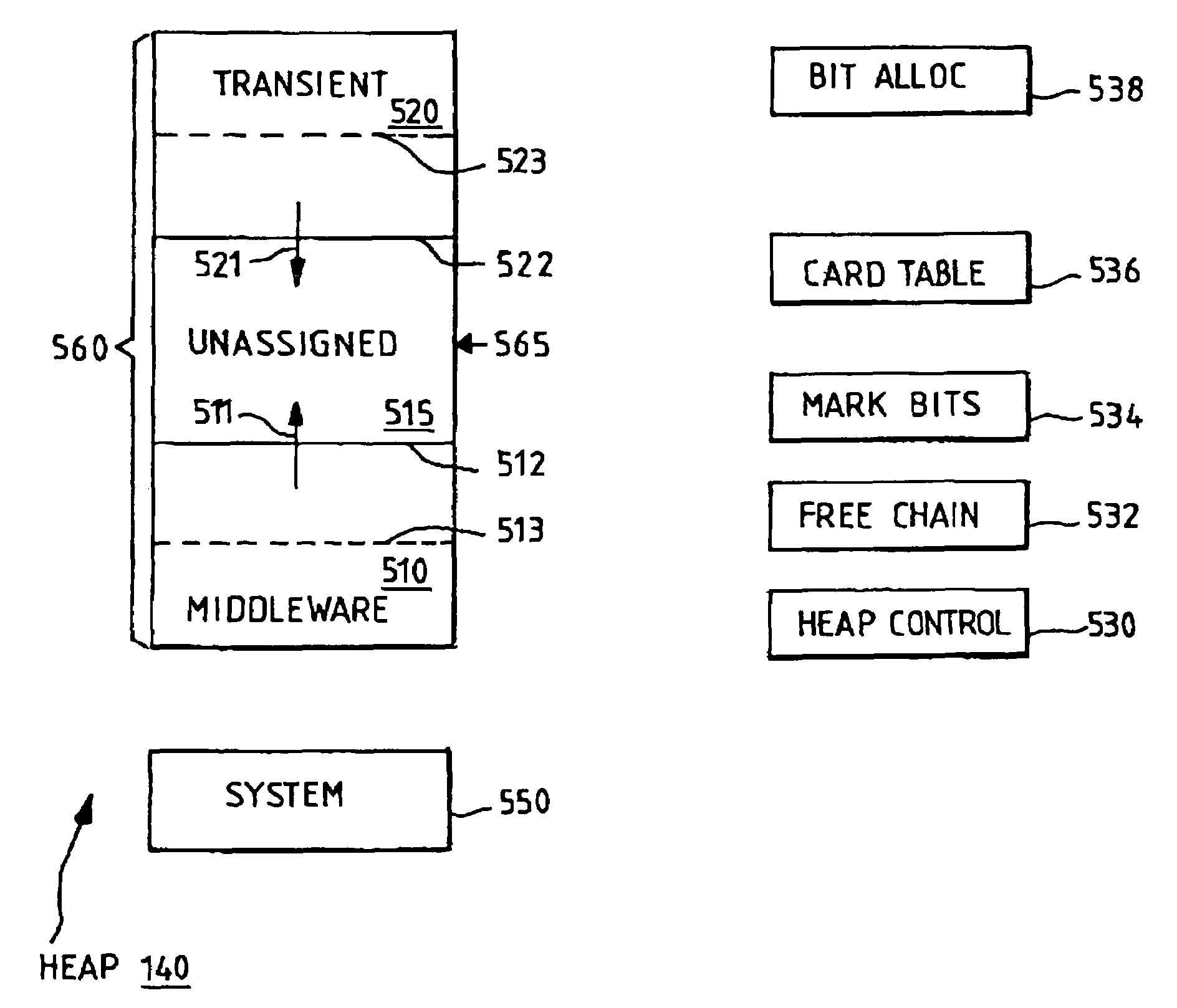

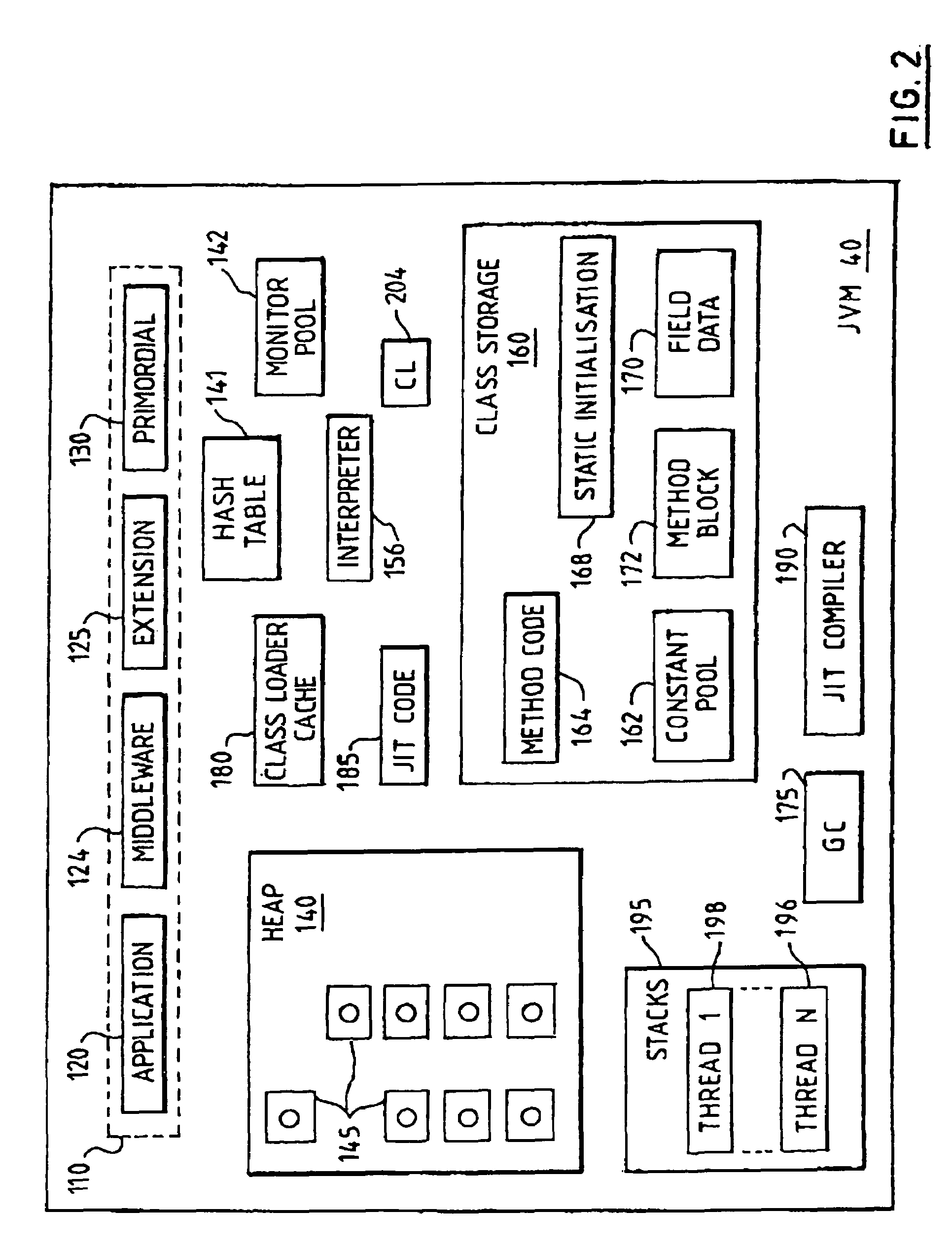

Serially, reusable virtual machine

Inactive | US7263700B1 | Conserve cost | Low cost | Data processing applications | Program loading/initiating | Memory hierarchy | Waste collection

In a virtual machine environment, a method and apparatus use multiple heaps to retain persistent data and transient data. The multiple heaps enable a single virtual machine to be easily resettable, thus avoiding the need to terminate and start a new virtual machine, and enable a single virtual machine to retain data and objects across multiple applications, thus avoiding the computing resource overhead of relinking, reloading, reverifying, and recompiling classes. The memory hierarchy includes a System Heap, a Middleware Heap and a Transient Heap. The use of three heaps enables garbage collection to be selectively targeted to one heap at a time in between applications, thus avoiding this overhead during the life of an application.

Owner:GOOGLE LLC

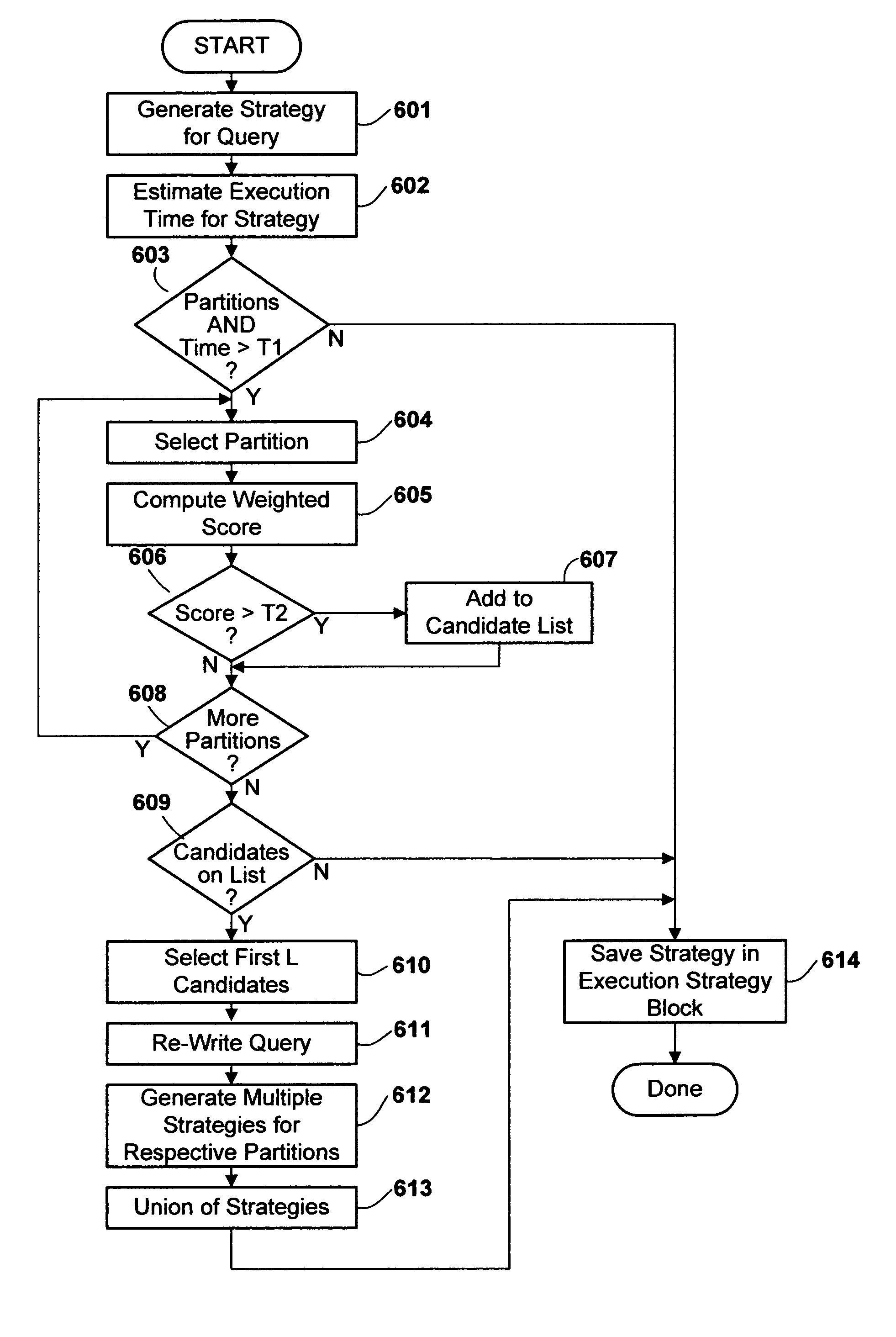

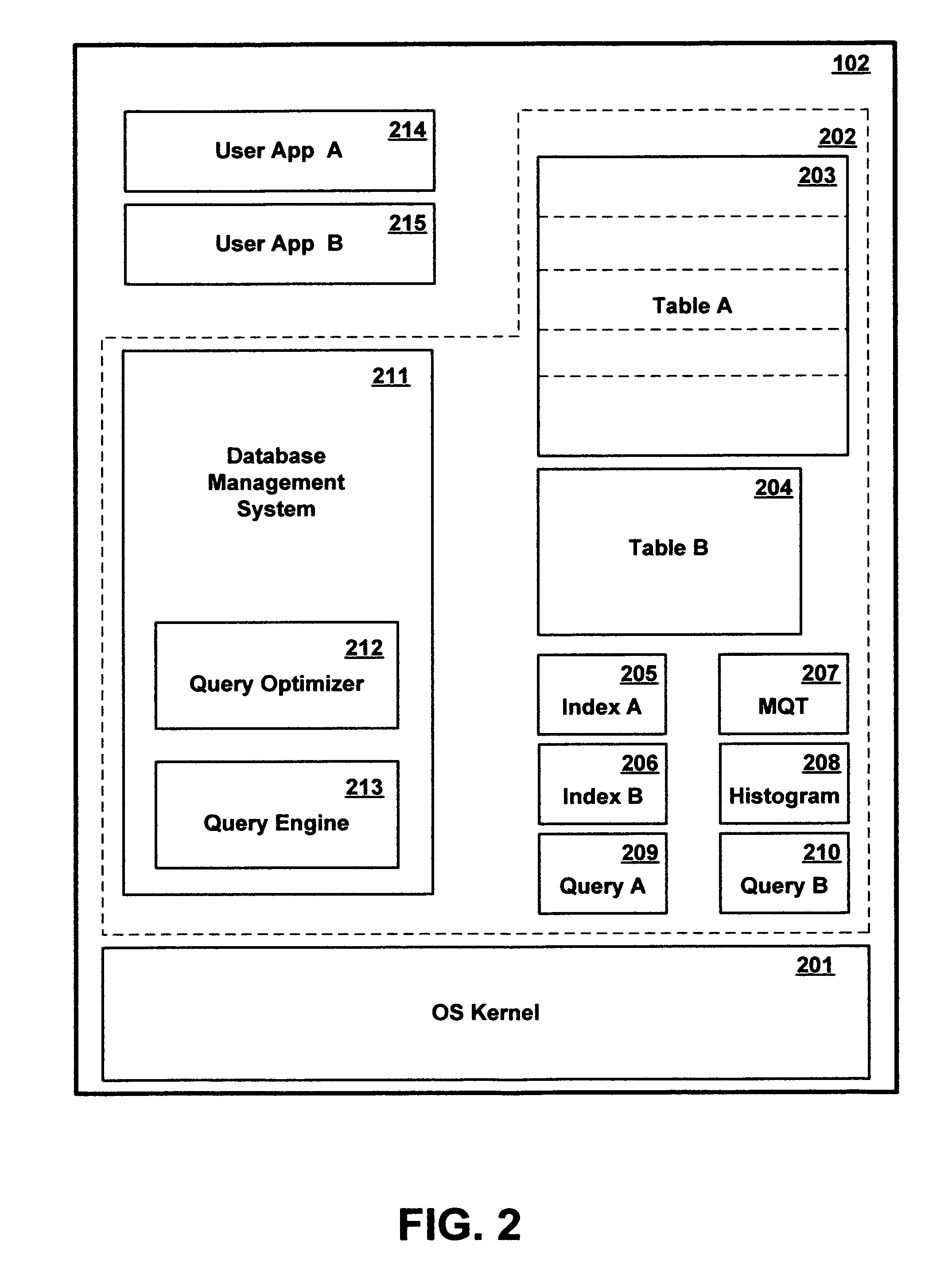

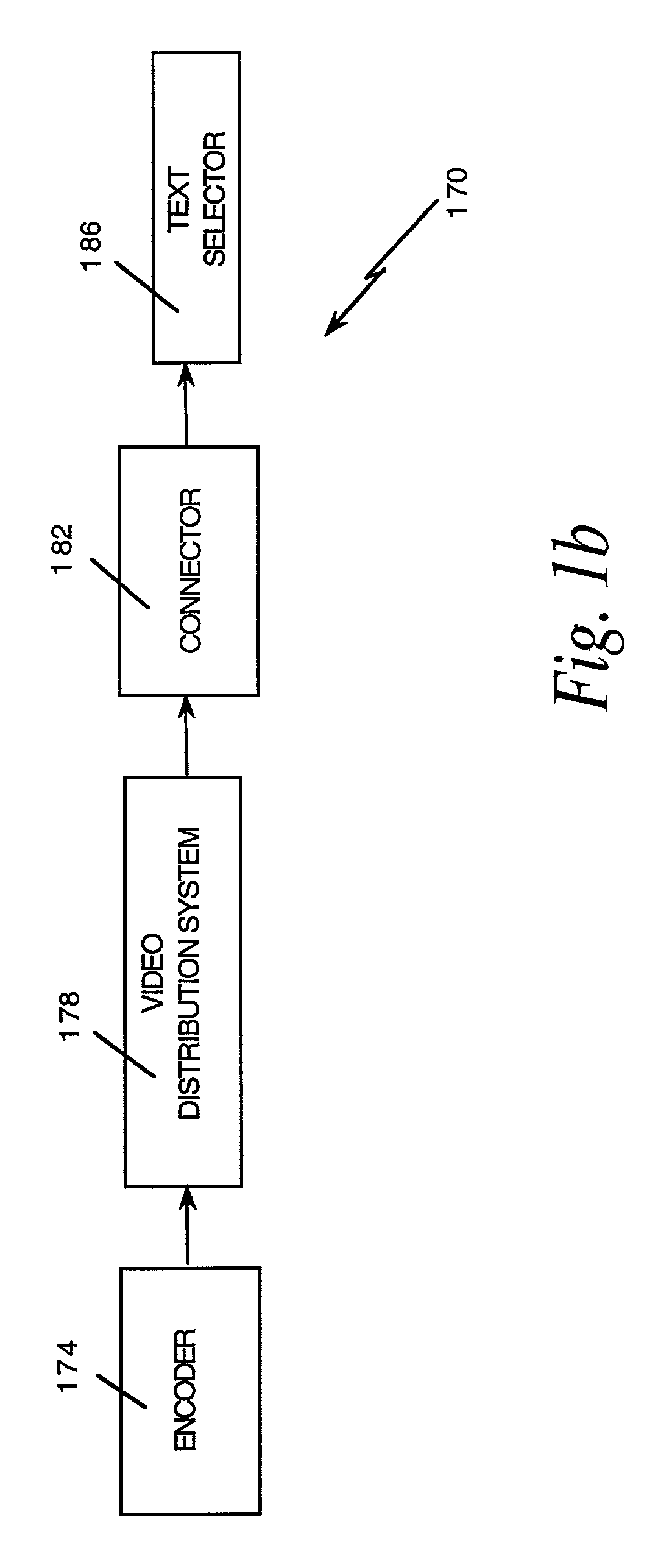

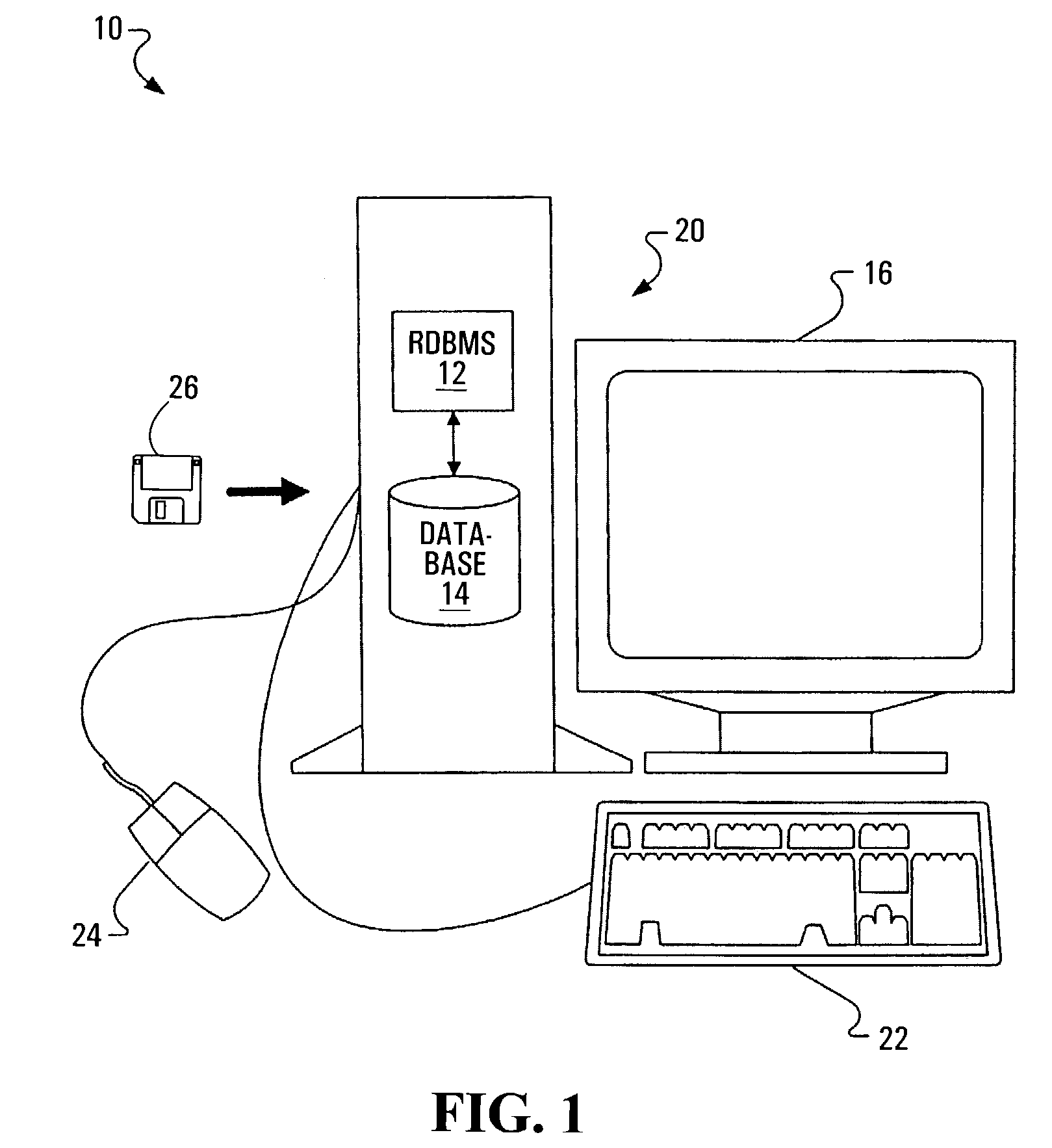

Method and apparatus for dynamically associating different query execution strategies with selective portions of a database table

Inactive | US8386463B2 | Little overhead | Avoid overhead | Digital data information retrieval | Digital data processing details | Database query | Table (database)

A query facility for database queries dynamically determines whether selective portions of a database table are likely to benefit from separate query execution strategies, and constructs appropriate separate execution strategies accordingly. Preferably, the database contains at least one relatively large table comprising multiple partitions, each sharing the definitional structure of the table and containing a different respective discrete subset of the table records. The query facility compares metadata for different partitions to determine whether sufficiently large differences exist among the partitions, and in appropriate cases selects one or more partitions for separate execution strategies. Preferably, partitions are ranked for separate evaluation using a weighting formula which takes into account: (a) the number of indexes for the partition, (b) recency of change activity, and (c) the size of the partition.

Owner:INT BUSINESS MASCH CORP
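
The ranking step in the abstract above names three inputs but not the formula itself. The sketch below shows one way such a weighted score could look; the linear form, the weights, the direction of each term, and the names `PartitionStats`, `score`, and `rank_partitions` are all assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PartitionStats:
    """Hypothetical per-partition metadata."""
    name: str
    index_count: int          # (a) number of indexes for the partition
    days_since_change: float  # (b) recency of change activity
    row_count: int            # (c) size of the partition


def score(p: PartitionStats, max_rows: int,
          w_index: float = 1.0, w_recency: float = 1.0, w_size: float = 1.0) -> float:
    # Assumption: recently changed partitions score higher.
    recency = 1.0 / (1.0 + p.days_since_change)
    # Assumption: size is normalised against the largest partition.
    size = p.row_count / max_rows
    return w_index * p.index_count + w_recency * recency + w_size * size


def rank_partitions(parts: List[PartitionStats]) -> List[PartitionStats]:
    """Return partitions ordered from highest to lowest score."""
    max_rows = max((p.row_count for p in parts), default=1) or 1
    return sorted(parts, key=lambda p: score(p, max_rows), reverse=True)
```

With this scoring, a heavily indexed partition that changed today ranks ahead of a lightly indexed partition of the same size that has been idle for a year.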

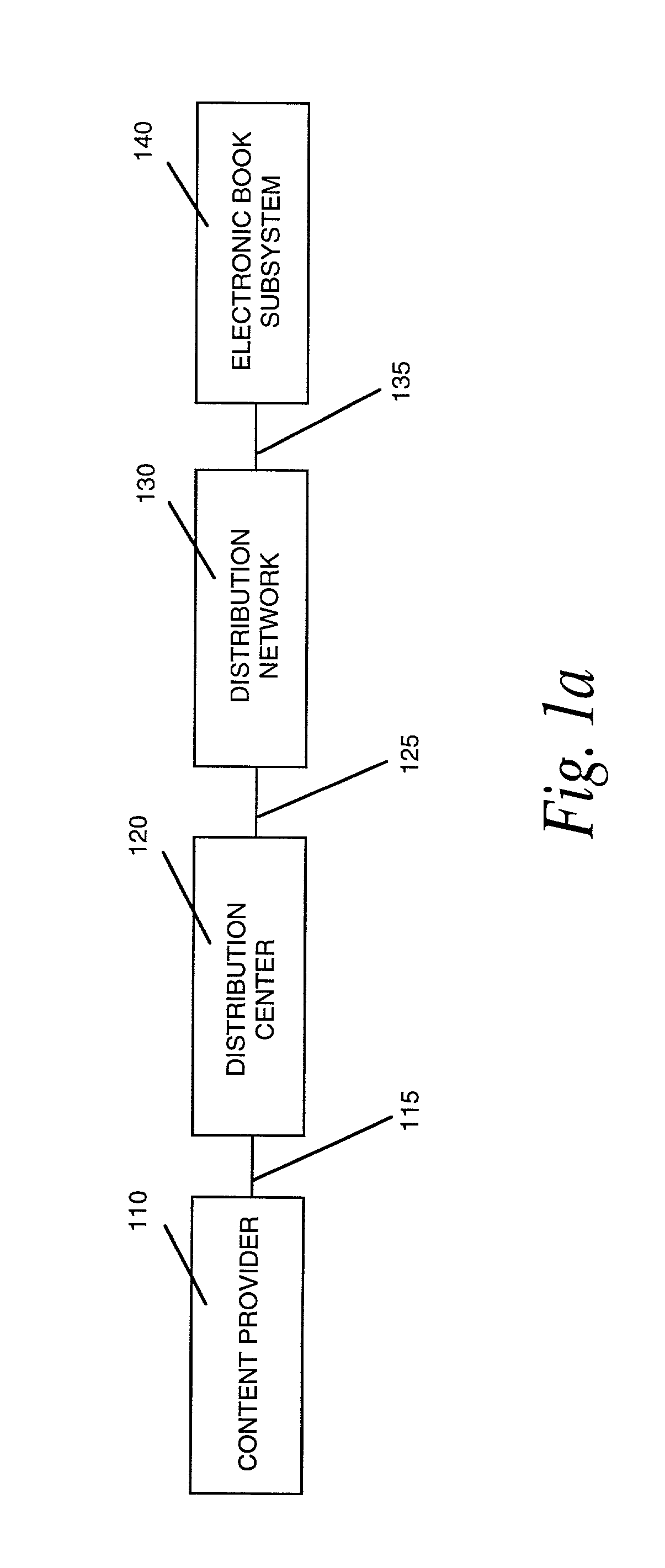

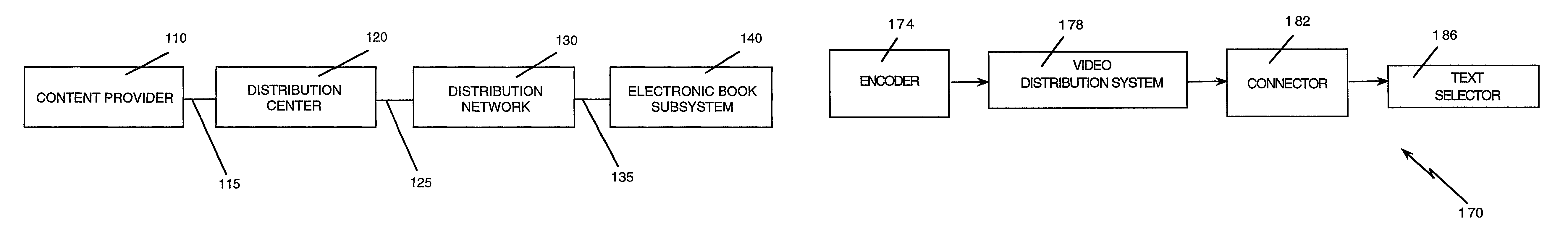

Electronic book alternative delivery systems

Inactive | US7835989B1 | High tech aura | Easy to use | Television system details | Color television details | Graphics | Payment

The invention, an electronic book selection and delivery system, distributes electronic text and graphics to subscribers. The system contains an operations center, a video distribution system or a variety of alternative distribution systems, a home subsystem, and a billing and collection system. The operations center and/or distribution points perform the functions of manipulation of text data, security and coding of text, cataloging of books, message center, and uplink functions. The home subsystem connects to a video distribution system or variety of alternative distribution systems, generates menus and stores text, and transacts through communicating mechanisms. A portable book-shaped viewer is used for viewing the text. The billing system performs the transaction, management, authorization, collection and payments utilizing the telephone system or a variety of alternative communication systems.

Owner:DISCOVERY PATENT HLDG

Method and apparatus for eliminating partitions of a database table from a join query using implicit limitations on a partition key value

Inactive | US20070027860A1 | Significant comprehensive benefits | Avoid overhead | Digital data information retrieval | Special data processing applications | Star schema | Table (database)

A database facility supports database join queries in a database environment having at least one database table divided into multiple partitions based on a partition key value. The facility determines whether the values in a table joined to the partitioned table place an implicit limitation on the partition key, and eliminates from query evaluation any partitions which do not satisfy the implicit limitation. Preferably, the database uses a star schema organization, in which implicit limitations in a relatively small dimension table are used to eliminate partitions in a relatively large fact table.

Owner:IBM CORP
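
The partition elimination idea in the abstract above can be sketched for a small star schema. The sketch assumes range partitioning with inclusive bounds and reduces the implicit limitation to a single min/max range; the function names and the row layout are hypothetical and are not taken from the patent.

```python
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, int]
KeyRange = Tuple[int, int]


def implied_key_range(dimension_rows: List[Row], key: str,
                      predicate: Callable[[Row], bool]) -> Optional[KeyRange]:
    """Derive the implicit limit on the partition key from the dimension table."""
    keys = [row[key] for row in dimension_rows if predicate(row)]
    if not keys:
        return None                      # no dimension row qualifies
    return min(keys), max(keys)


def prune_partitions(partitions: Dict[str, KeyRange],
                     key_range: Optional[KeyRange]) -> List[str]:
    """Keep only fact-table partitions whose bounds overlap the implied range."""
    if key_range is None:
        return []
    lo, hi = key_range
    return [name for name, (p_lo, p_hi) in partitions.items()
            if p_lo <= hi and lo <= p_hi]
```

For example, if a small date dimension is filtered on one year and the fact table is partitioned by `date_id`, only the partitions overlapping that year's `date_id` range need to be evaluated in the join.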

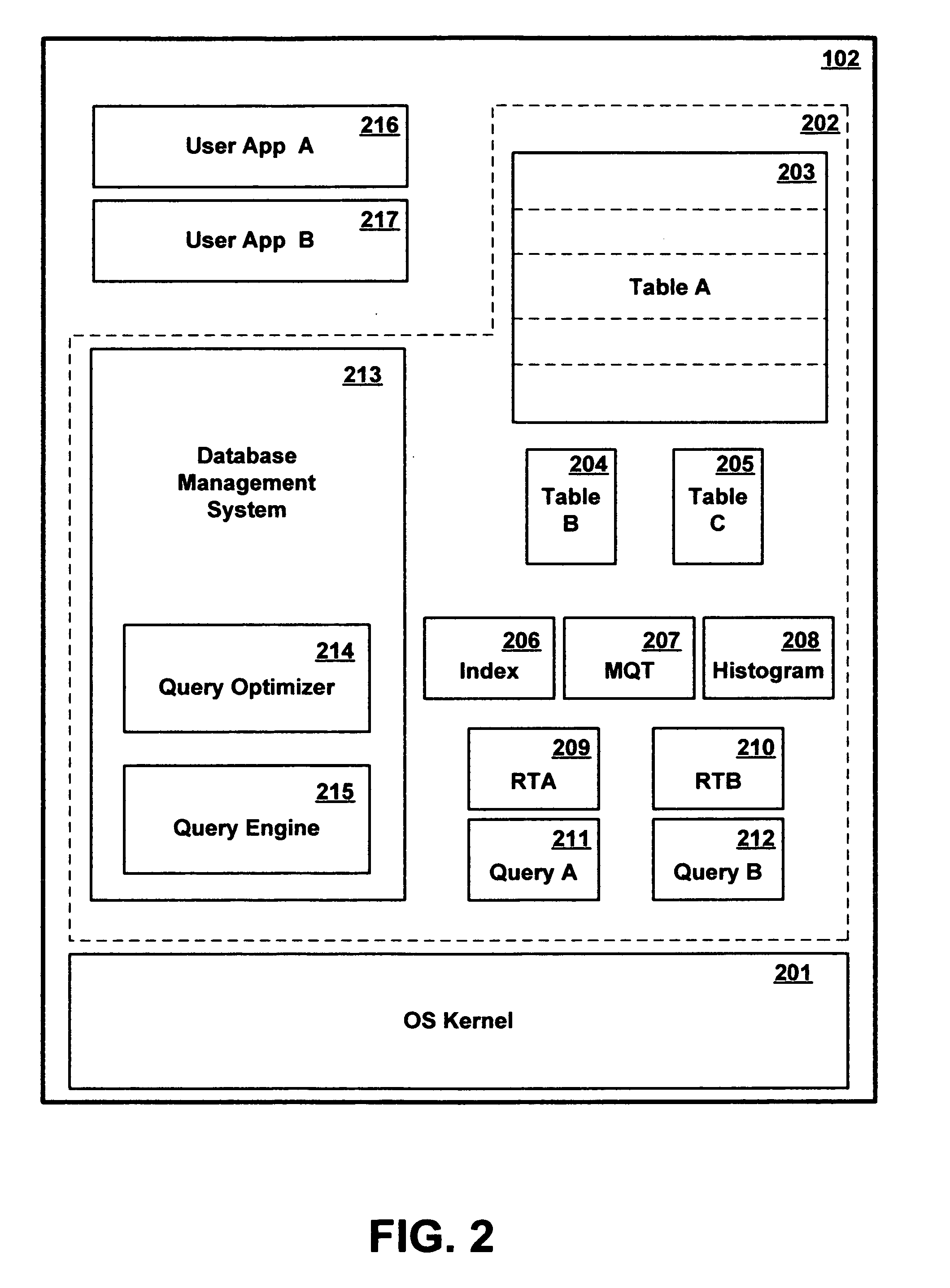

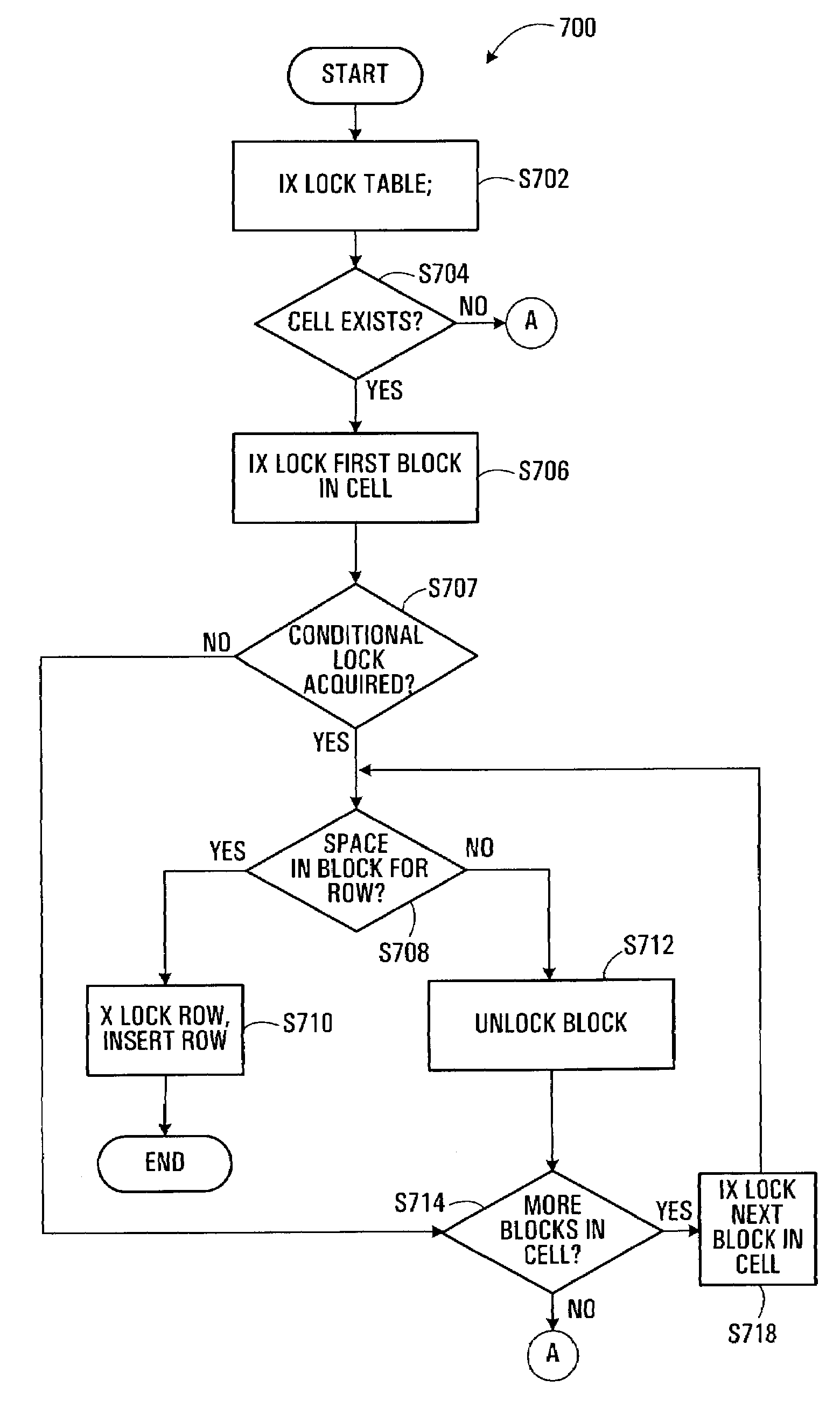

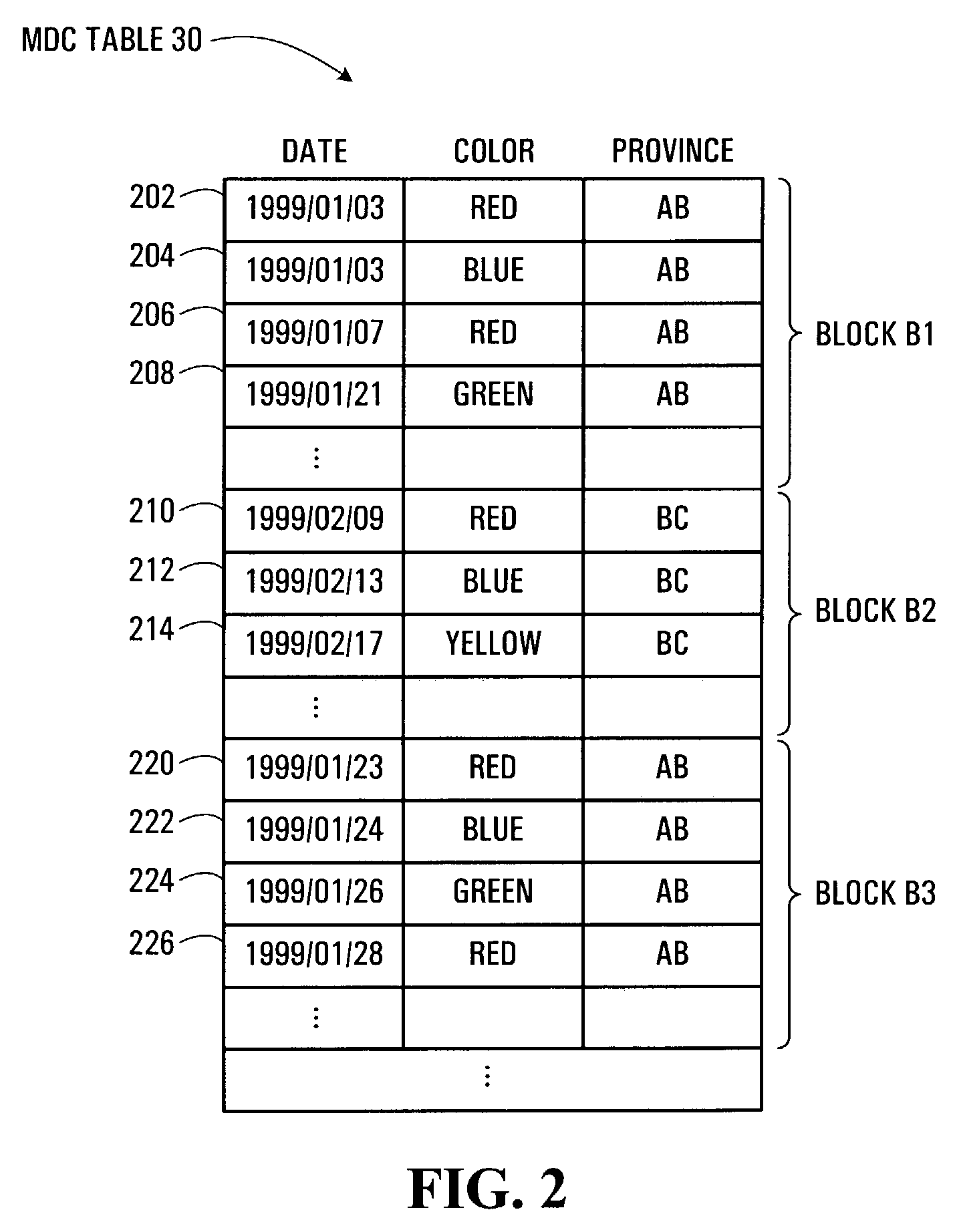

System and method for a multi-level locking hierarchy in a database with multi-dimensional clustering

Inactive | US7236974B2 | Avoiding undue locking overhead | Avoid overhead | Data processing applications | Multi-dimensional databases | Granularity | Relational database

A multi-level locking hierarchy for a relational database includes a locking level applied to a multi-dimensionally clustering table, a locking level applied to blocks within the table, and a locking level applied to rows within the blocks. The hierarchy leverages the multi-dimensional clustering of the table data for efficiency and to reduce lock overhead. Data is normally locked in order of coarser to finer granularity to limit deadlock. When data of finer granularity is locked, data of coarser granularity containing the finer granularity data is also locked. Block lock durations may be employed to ensure that a block remains locked if any contained row remains locked. Block level lock attributes may facilitate detection of at least one of a concurrent scan and a row deletion within a block. Detection of the emptying of a block during a scan of the block may bar scan completion in that block.

Owner:IBM CORP
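
The table, block, and row hierarchy in the abstract above can be illustrated with a small bookkeeping sketch. It tracks locks for a single transaction only and does not model lock modes, conflicts, or scans; the class `HierarchicalLocks` and its methods are hypothetical names, not the patented design.

```python
from collections import defaultdict


class HierarchicalLocks:
    """Hypothetical single-transaction lock bookkeeping: table > block > row."""

    def __init__(self):
        self.acquired = []               # log of acquisitions, in order
        self.blocks = defaultdict(set)   # table -> locked blocks
        self.rows = defaultdict(set)     # (table, block) -> locked rows

    def lock_row(self, table, block, row):
        # Acquire from coarser to finer granularity.
        if not self.blocks[table]:
            self.acquired.append(("table", table))
        if block not in self.blocks[table]:
            self.blocks[table].add(block)
            self.acquired.append(("block", table, block))
        if row not in self.rows[(table, block)]:
            self.rows[(table, block)].add(row)
            self.acquired.append(("row", table, block, row))

    def unlock_row(self, table, block, row):
        self.rows[(table, block)].discard(row)
        # The block stays locked while any contained row remains locked.
        if not self.rows[(table, block)]:
            self.blocks[table].discard(block)

    def block_locked(self, table, block):
        return block in self.blocks[table]

    def table_locked(self, table):
        return bool(self.blocks[table])
```

Locking a row implicitly takes the containing block and table first, and a block is released only once its last locked row is released, mirroring the ordering and block lock duration described in the abstract.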


© 2025 PatSnap. All rights reserved.