48 results about "Shared virtual memory" patented technology

Virtual shared memory (VSM) definition: virtual shared memory is a technique through which multiple processors in a distributed computing architecture are presented with an abstract shared memory.

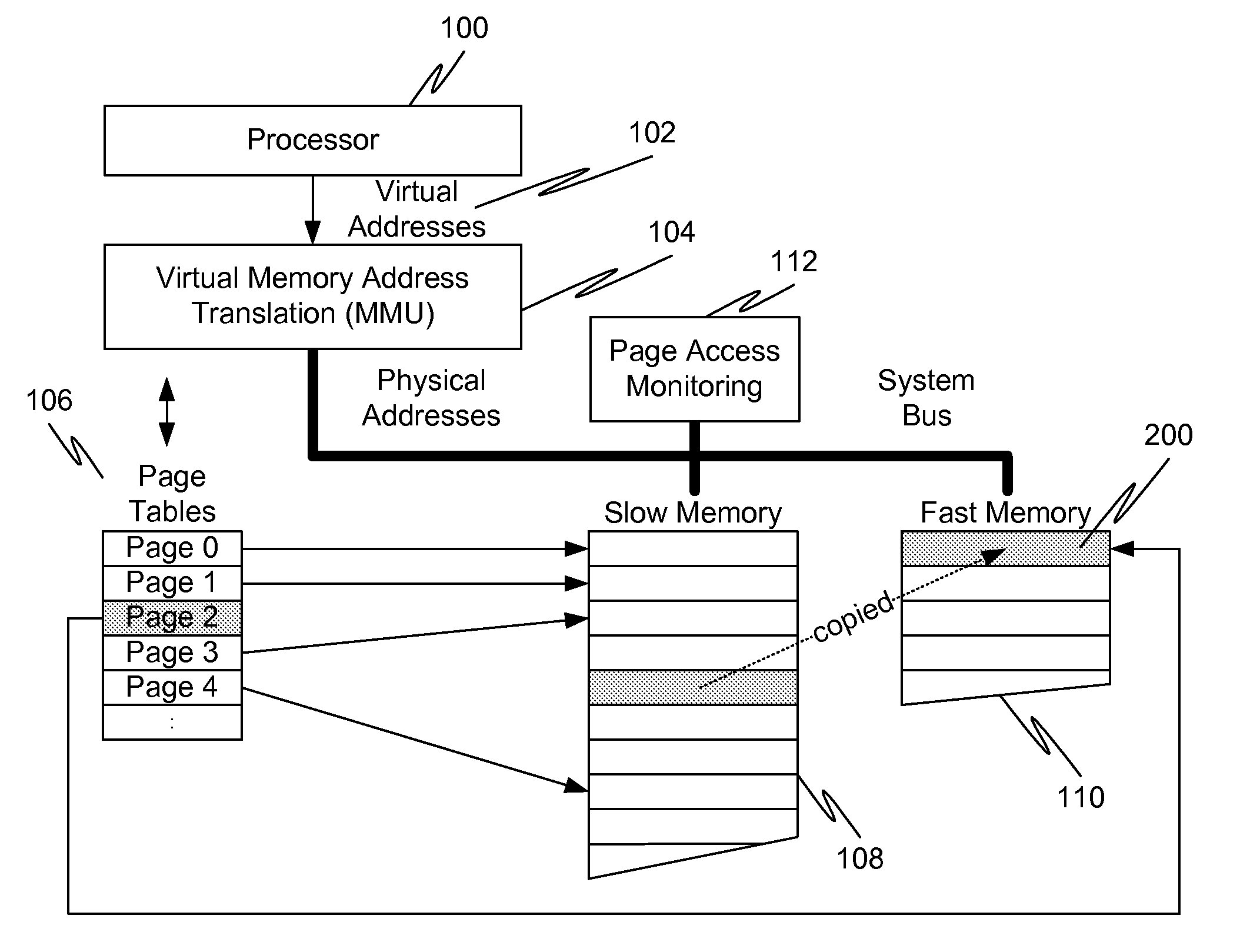

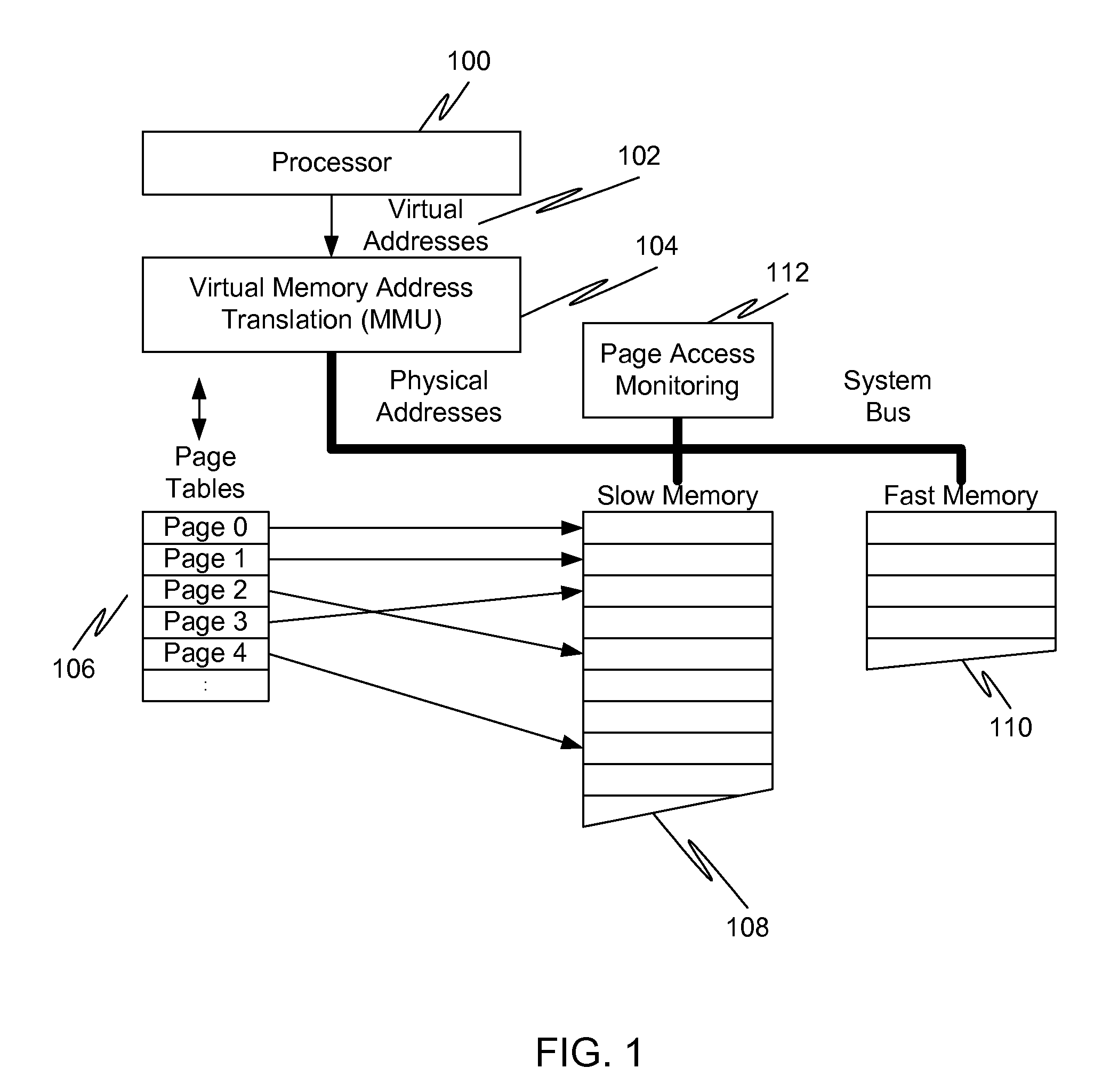

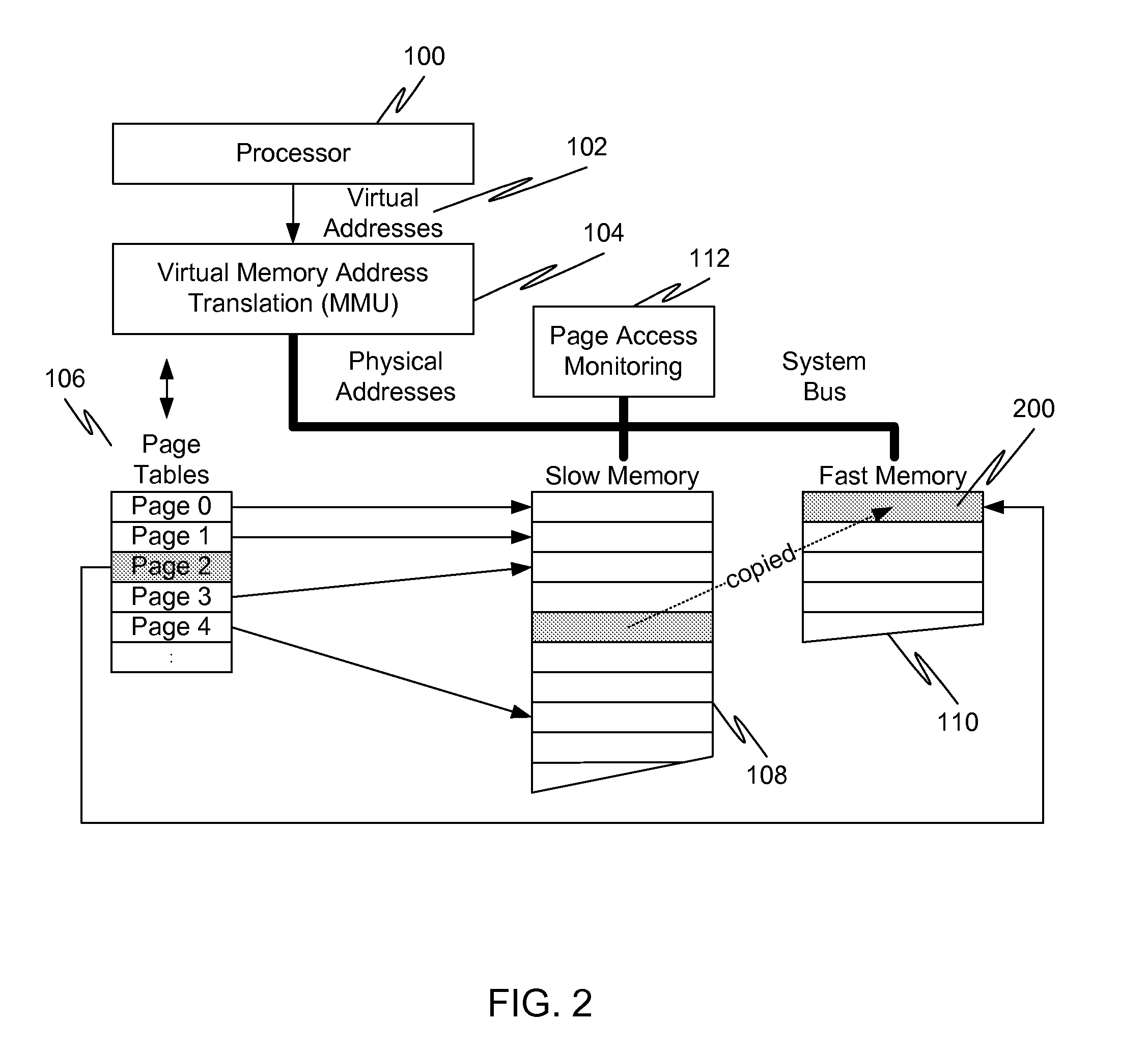

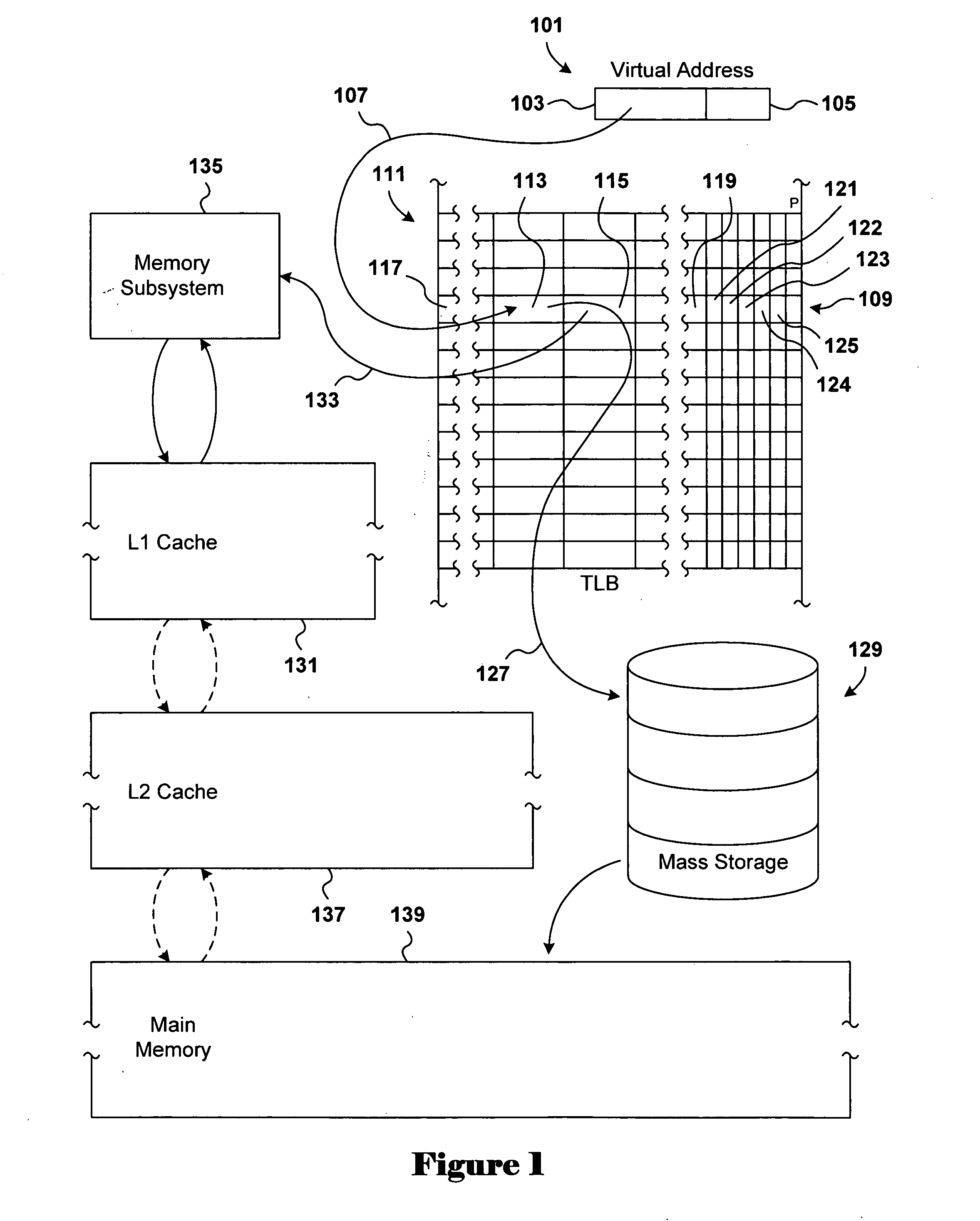

Caching using virtual memory

Inactive | US20120017039A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Page table

In a first embodiment of the present invention, a method for caching in a processor system having virtual memory is provided. The method comprises monitoring slow memory in the processor system to determine frequently accessed pages and, for each frequently accessed page in slow memory, copying the page from slow memory to a location in fast memory and updating the virtual address page tables to reflect the page's new location in fast memory.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
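A minimal Python sketch of the promote-on-hotness flow described above, under stated assumptions: the two tiers are plain dictionaries, a page counts as "frequently accessed" once its reads in one monitoring window reach a hypothetical `hot_threshold`, and the names `TieredMemory` and `promote_hot_pages` are illustrative, not taken from the patent.

```python
from collections import Counter


class TieredMemory:
    """Toy model of slow/fast memory tiers behind a page table (hypothetical names)."""

    def __init__(self, fast_frames: int, hot_threshold: int) -> None:
        self.slow: dict[int, bytes] = {}                  # slow frame -> page contents
        self.fast: dict[int, bytes] = {}                  # fast frame -> page contents
        self.page_table: dict[int, tuple[str, int]] = {}  # virtual page -> (tier, frame)
        self.free_fast = list(range(fast_frames))
        self.hot_threshold = hot_threshold
        self.access_counts: Counter = Counter()           # accesses to slow pages only

    def map_slow(self, vpn: int, frame: int, data: bytes) -> None:
        self.slow[frame] = data
        self.page_table[vpn] = ("slow", frame)

    def read(self, vpn: int) -> bytes:
        tier, frame = self.page_table[vpn]
        if tier == "slow":
            self.access_counts[vpn] += 1                  # monitor slow-memory accesses
            return self.slow[frame]
        return self.fast[frame]

    def promote_hot_pages(self) -> list[int]:
        """Copy frequently accessed slow pages to fast memory and remap them."""
        promoted = []
        for vpn, count in self.access_counts.most_common():
            if count < self.hot_threshold or not self.free_fast:
                break
            _, slow_frame = self.page_table[vpn]
            fast_frame = self.free_fast.pop()
            self.fast[fast_frame] = self.slow[slow_frame]  # copy the page
            self.page_table[vpn] = ("fast", fast_frame)    # update the page table
            promoted.append(vpn)
        self.access_counts.clear()                         # start a new monitoring window
        return promoted


mem = TieredMemory(fast_frames=1, hot_threshold=3)
mem.map_slow(vpn=7, frame=0, data=b"hot")
mem.map_slow(vpn=8, frame=1, data=b"cold")
for _ in range(3):
    mem.read(7)
mem.read(8)
promoted = mem.promote_hot_pages()
```

Only slow-tier reads are counted, so a page that has already been promoted never competes for fast frames again. A real system would also need a demotion path once fast memory fills up.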

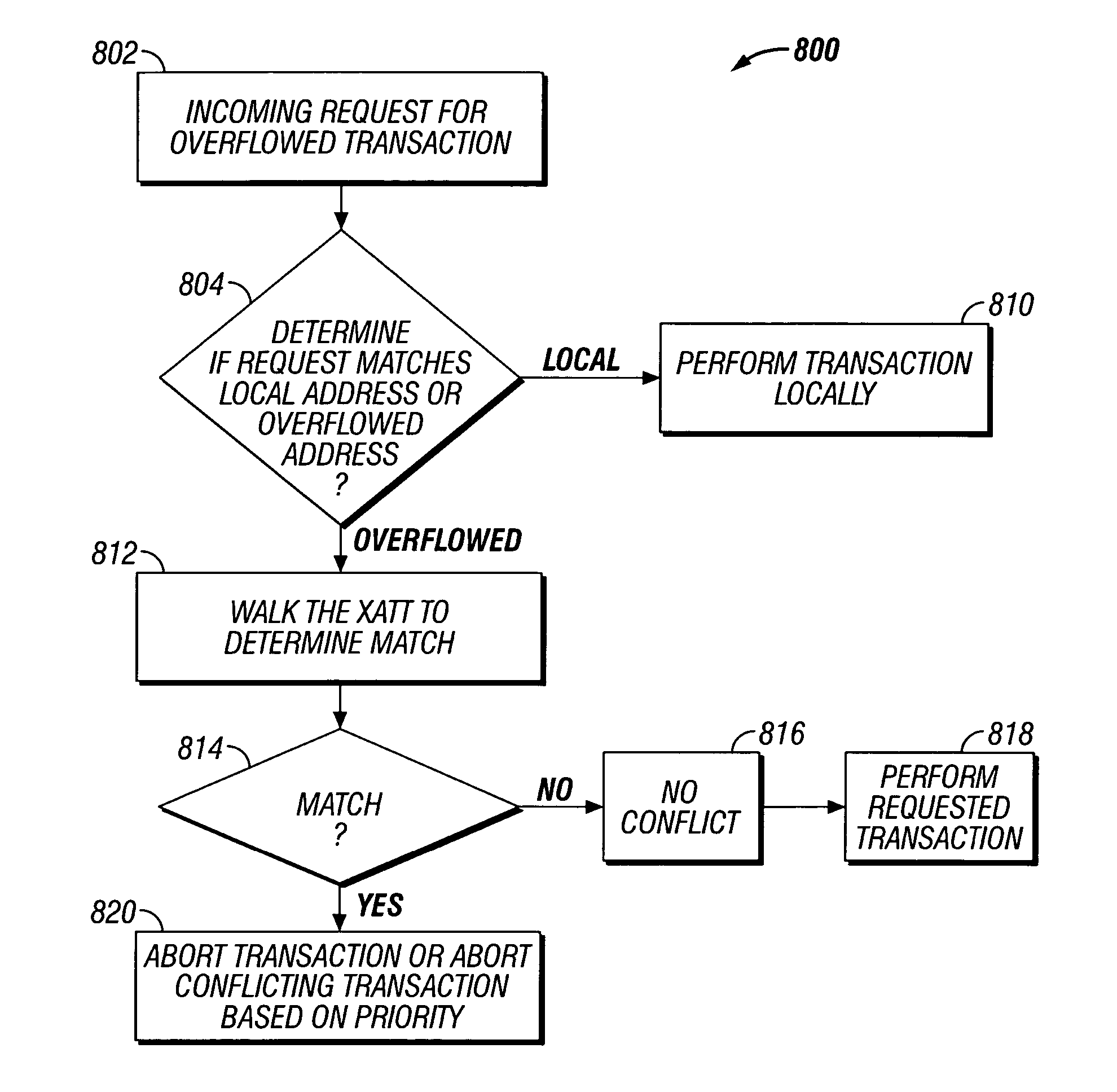

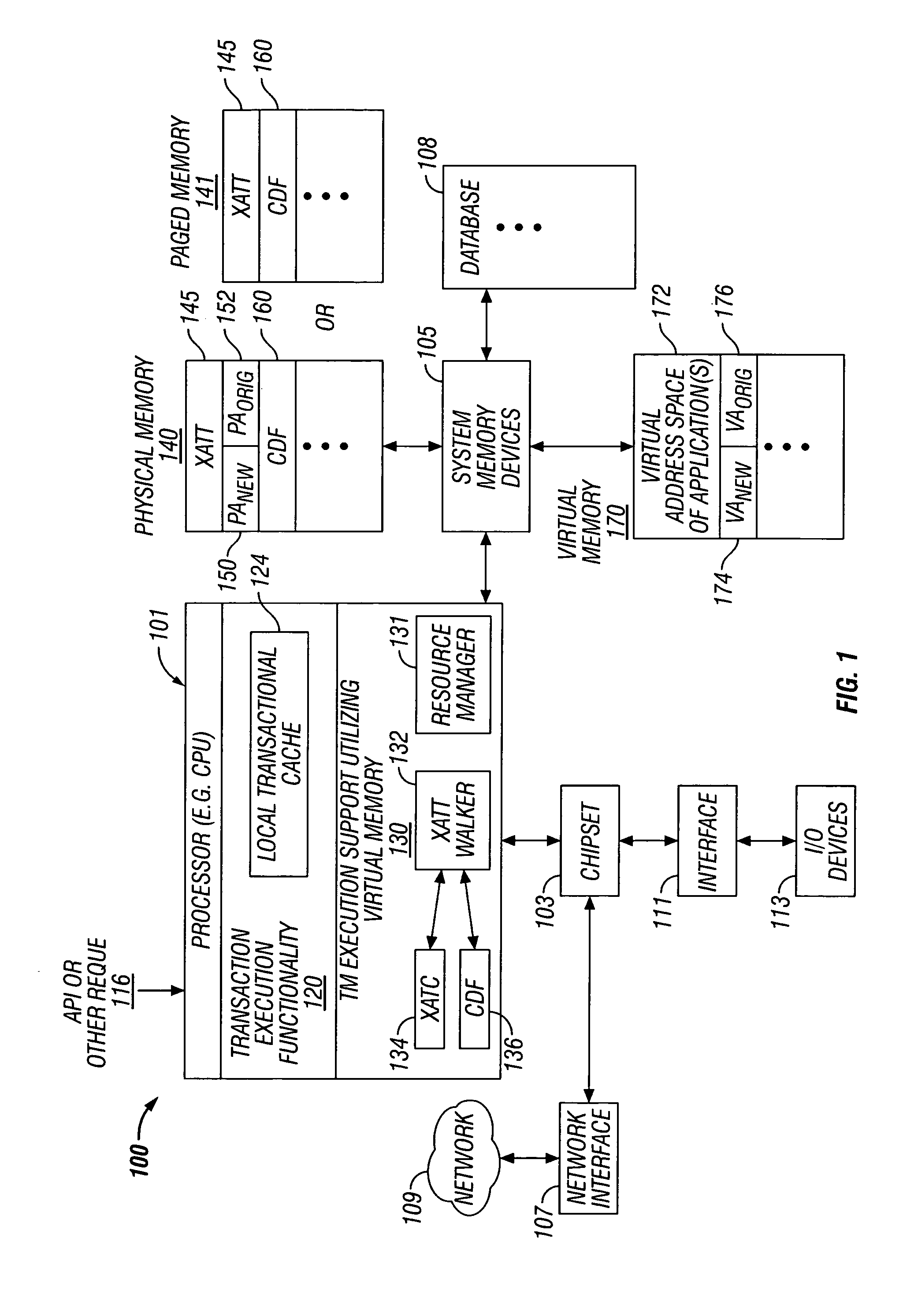

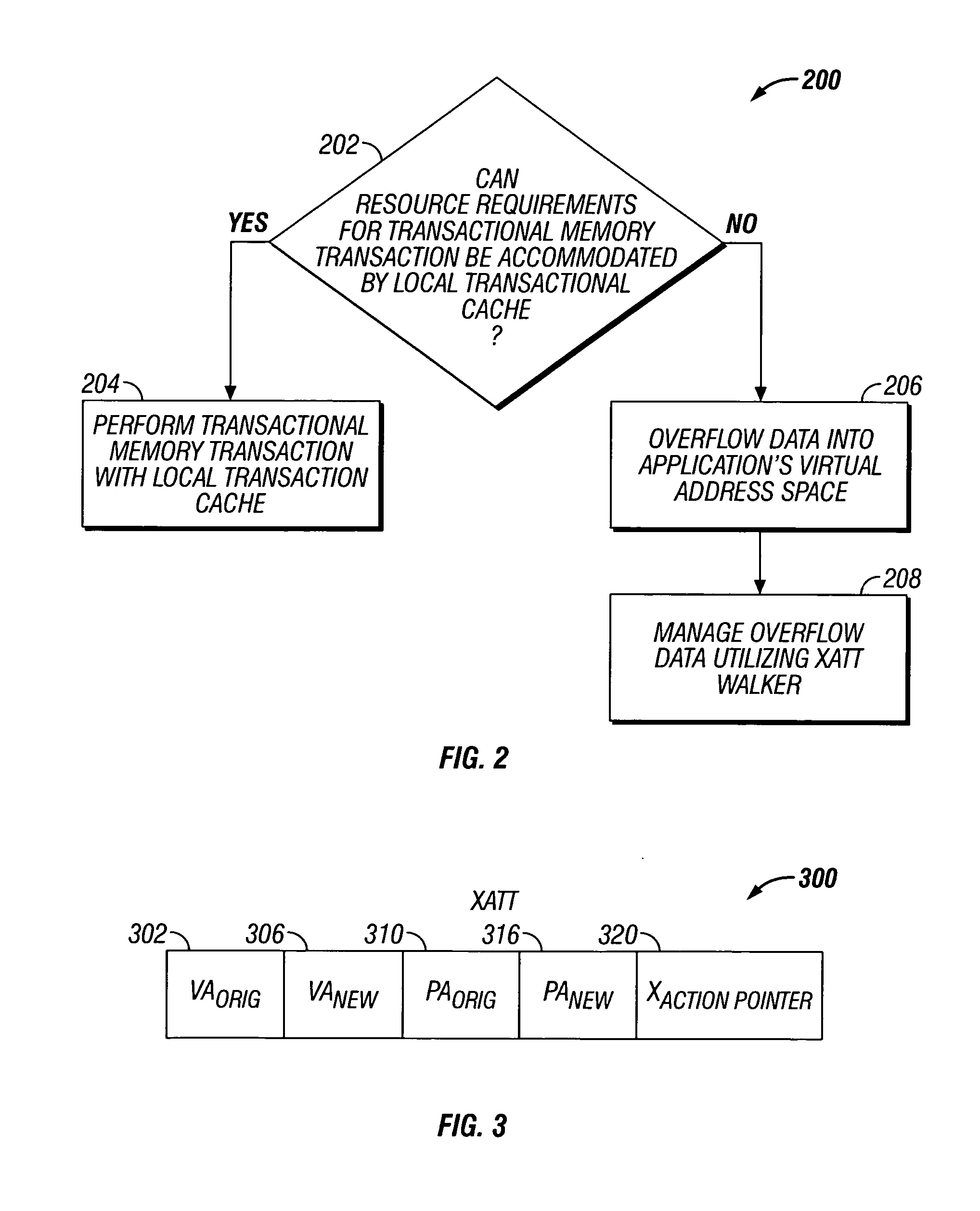

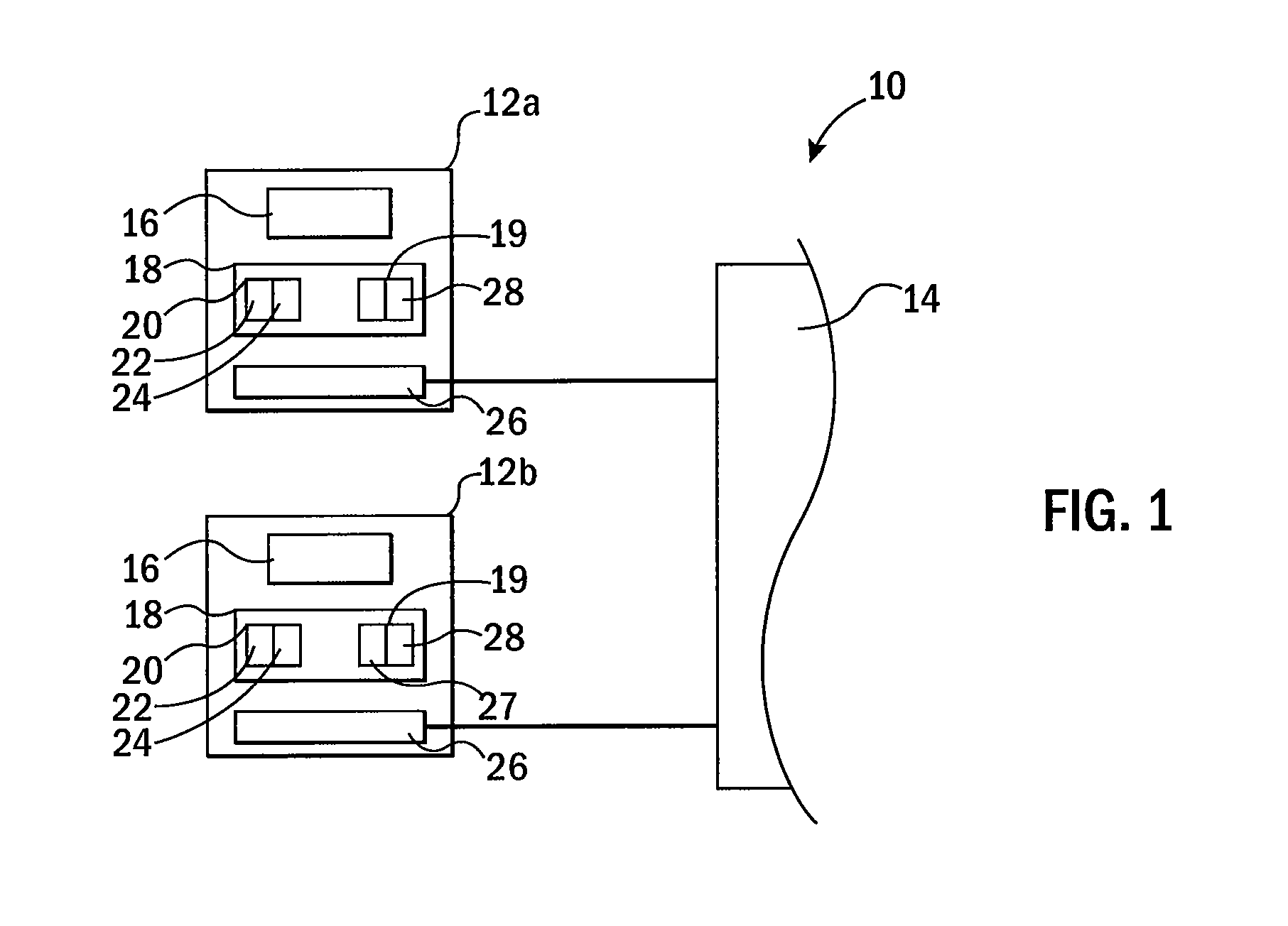

Transactional memory execution utilizing virtual memory

Active | US7685365B2 | Memory addressing/allocation/relocation; Transaction processing; Virtual memory; Transactional memory

Embodiments of the invention relate to transactional memory execution utilizing virtual memory. A processor includes a local transactional cache and a resource manager. Responsive to a transactional memory transaction request from a requesting thread, the resource manager determines whether the local transactional cache is capable of accommodating the request and, if so, the local transactional cache performs the transactional memory transaction. If the local transactional cache cannot accommodate the request, however, data for the transactional memory transaction request is overflowed into the application's virtual address space associated with the requesting thread.

Owner:INTEL CORP
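A toy model of the resource manager's decision, assuming that each written address occupies one cache line and ignoring conflict detection and aborts entirely. `ToyResourceManager` and its methods are hypothetical. The point is only that the commit path is the same whether the speculative writes lived in the cache or in the per-thread overflow area.

```python
class ToyResourceManager:
    """Chooses where a transaction's speculative writes are buffered (toy model)."""

    def __init__(self, cache_lines: int) -> None:
        self.cache_lines = cache_lines                       # capacity of the local transactional cache
        self.overflow_areas: dict[int, dict[int, int]] = {}  # thread id -> overflowed writes

    def execute(self, thread_id: int, writes: dict[int, int],
                memory: dict[int, int]) -> str:
        if len(writes) <= self.cache_lines:
            buffer, where = dict(writes), "cache"            # fits: keep in the local cache
        else:
            buffer = self.overflow_areas.setdefault(thread_id, {})
            buffer.update(writes)                            # spill into the thread's virtual memory
            where = "overflow"
        memory.update(buffer)                                # commit all writes together
        buffer.clear()                                       # release the buffered state
        return where


memory: dict[int, int] = {}
rm = ToyResourceManager(cache_lines=2)
small = rm.execute(thread_id=1, writes={0x10: 1}, memory=memory)
large = rm.execute(thread_id=1, writes={0x20: 2, 0x30: 3, 0x40: 4}, memory=memory)
```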

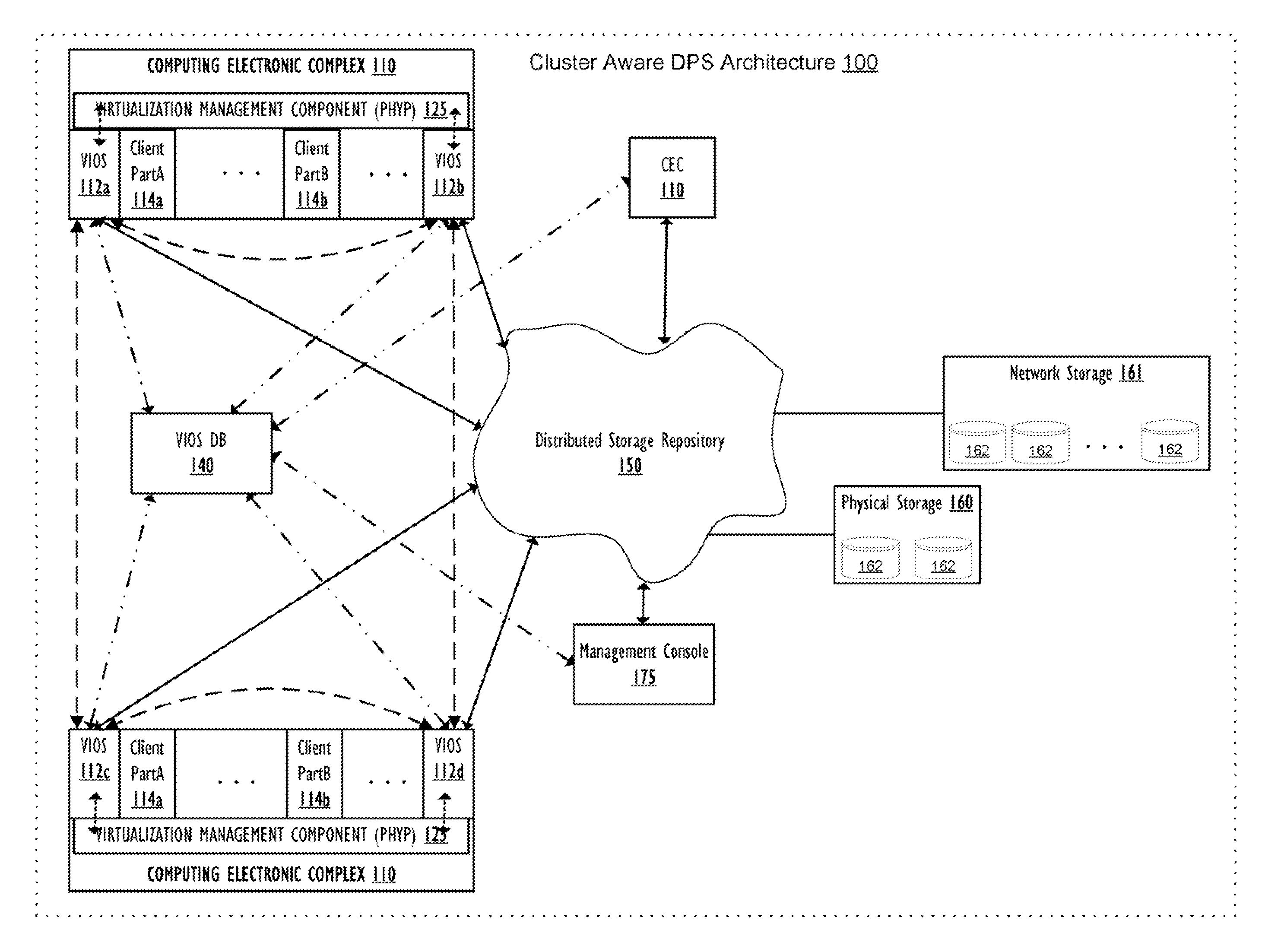

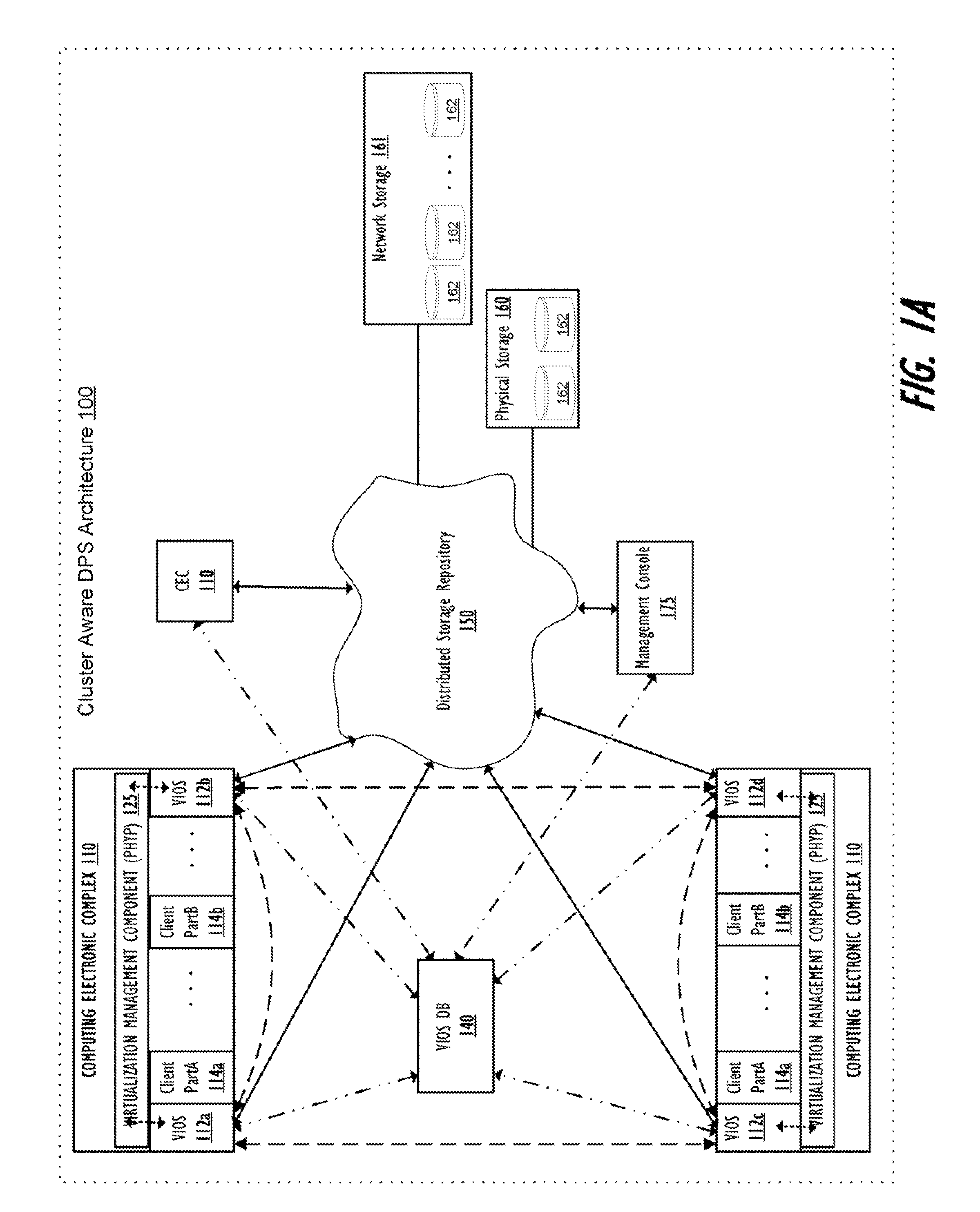

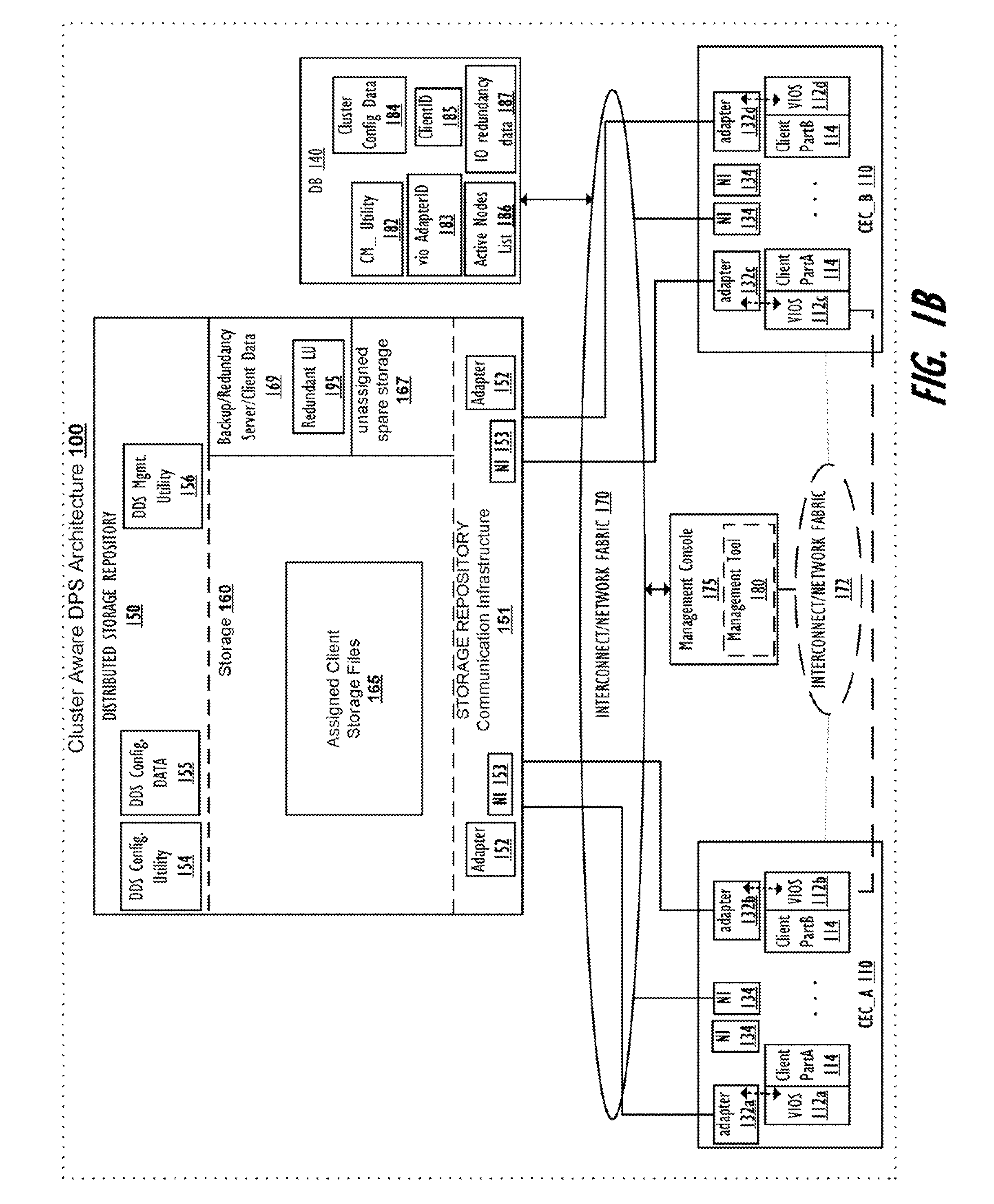

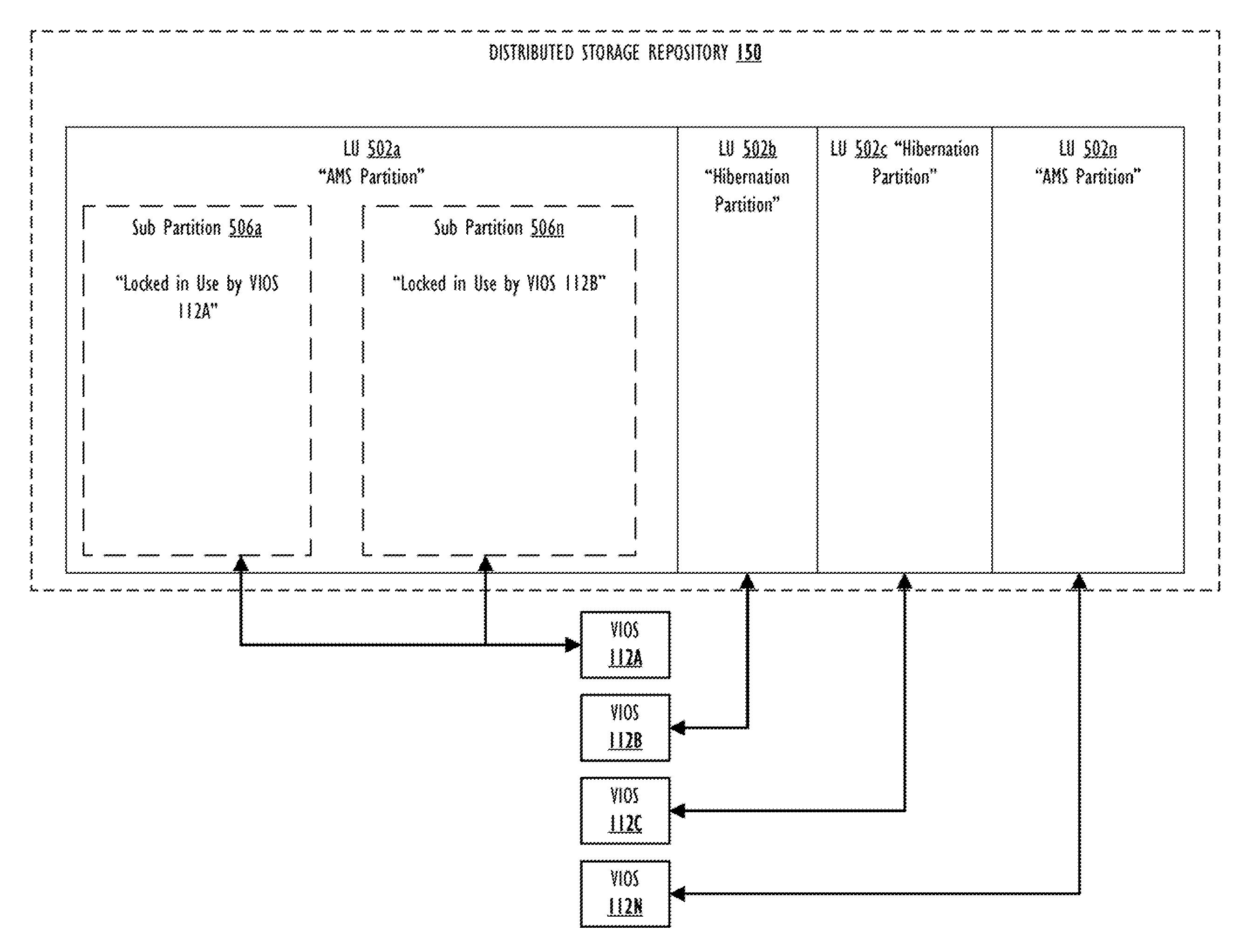

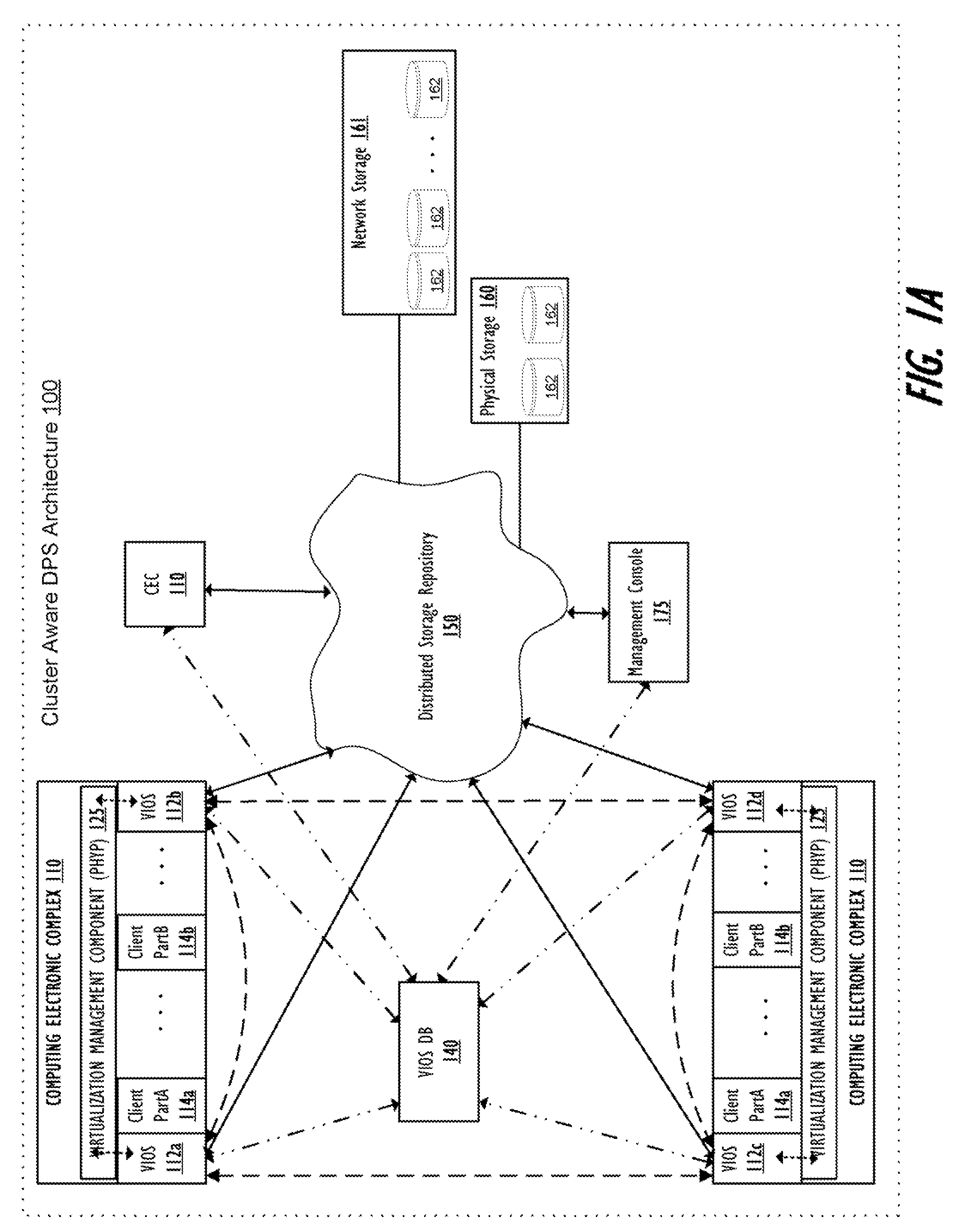

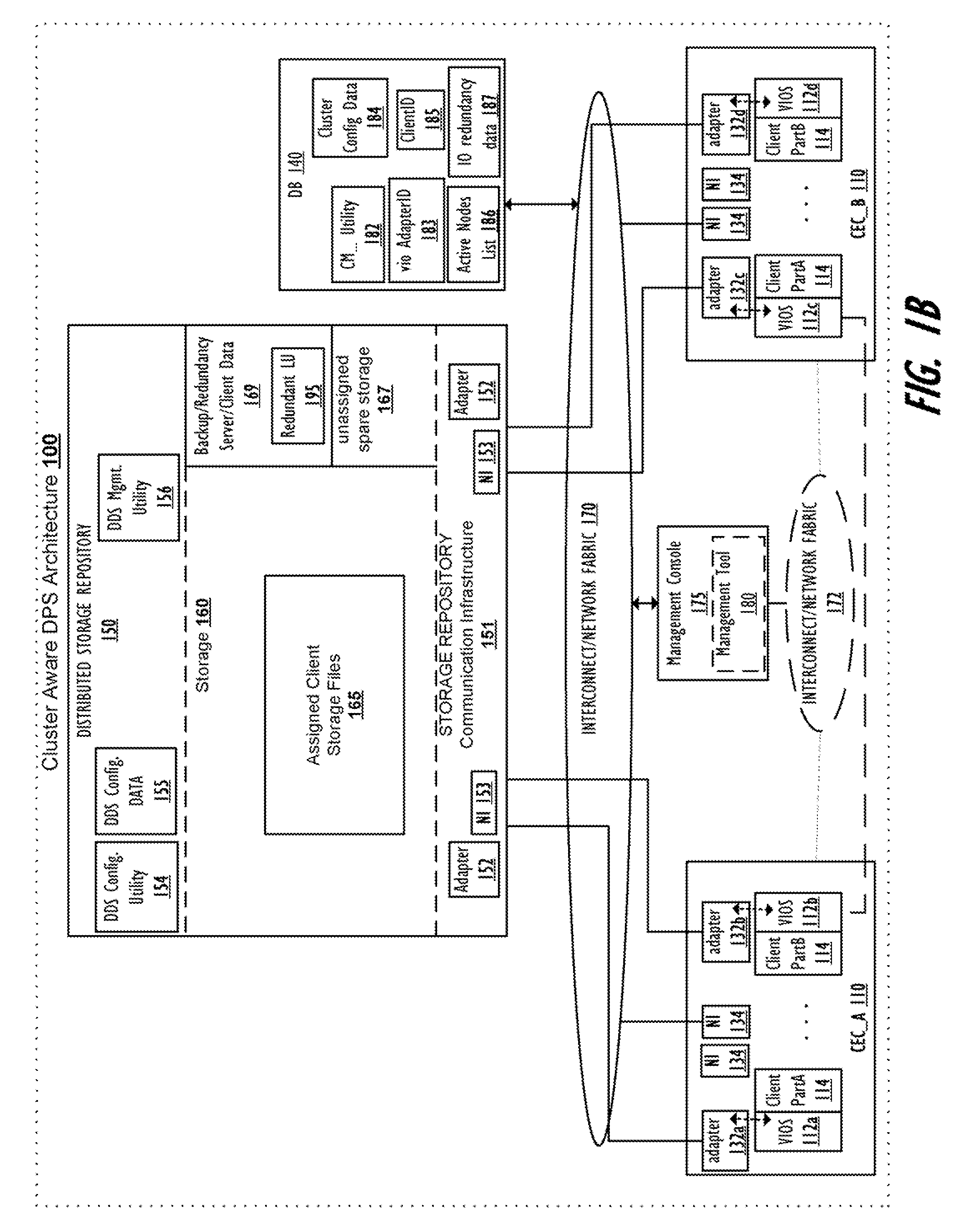

Supporting Virtual Input/Output (I/O) Server (VIOS) Active Memory Sharing in a Cluster Environment

Inactive | US20120110275A1 | Promote migration; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Active memory

A method, system, and computer program product provide a shared virtual memory space via a cluster-aware virtual input/output (I/O) server (VIOS). The VIOS receives a paging file request from a first LPAR and thin-provisions a logical unit (LU) within the virtual memory space as a shared paging file of the same storage amount as the minimum required capacity. The VIOS also autonomously maintains a logical redundancy LU (redundant LU) as a real-time copy of the provisioned/allocated LU; the redundant LU is a dynamic copy that is autonomously updated in response to any change within the allocated LU. When a second VIOS attempts to read an LU currently utilized by a first VIOS, the read request is autonomously redirected to the redundant LU. The redundant LU can be utilized to facilitate migration of a client LPAR to a different computing electronic complex (CEC).

Owner:IBM CORP

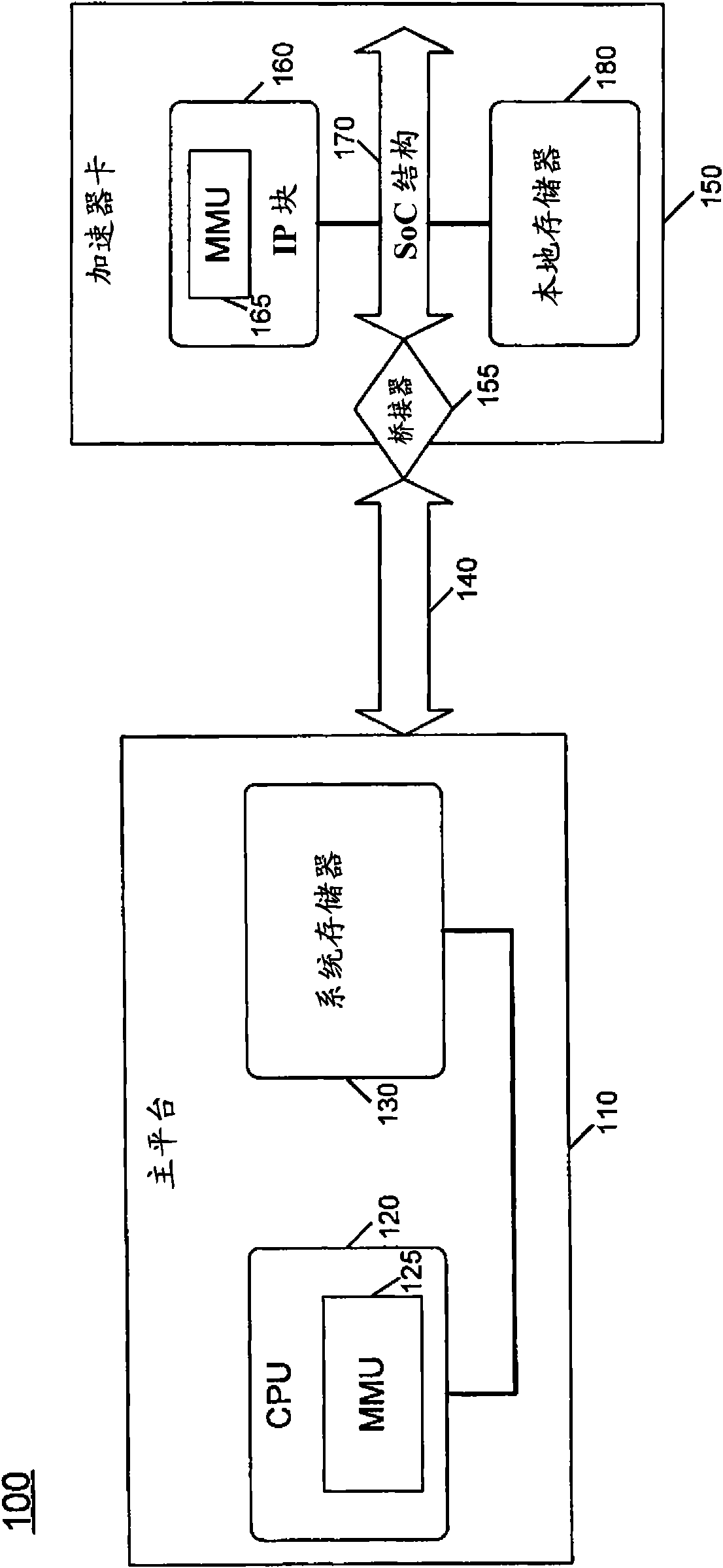

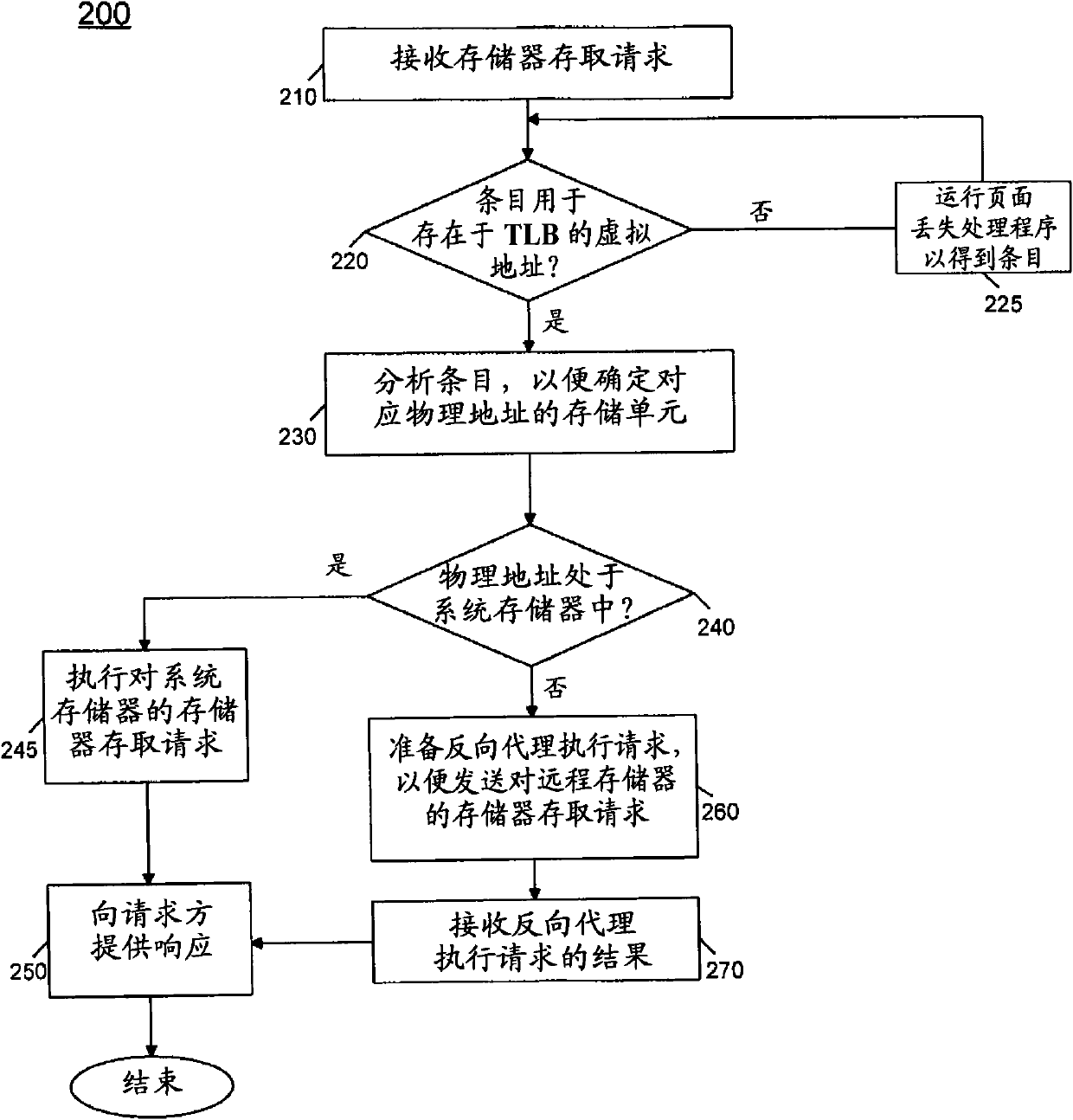

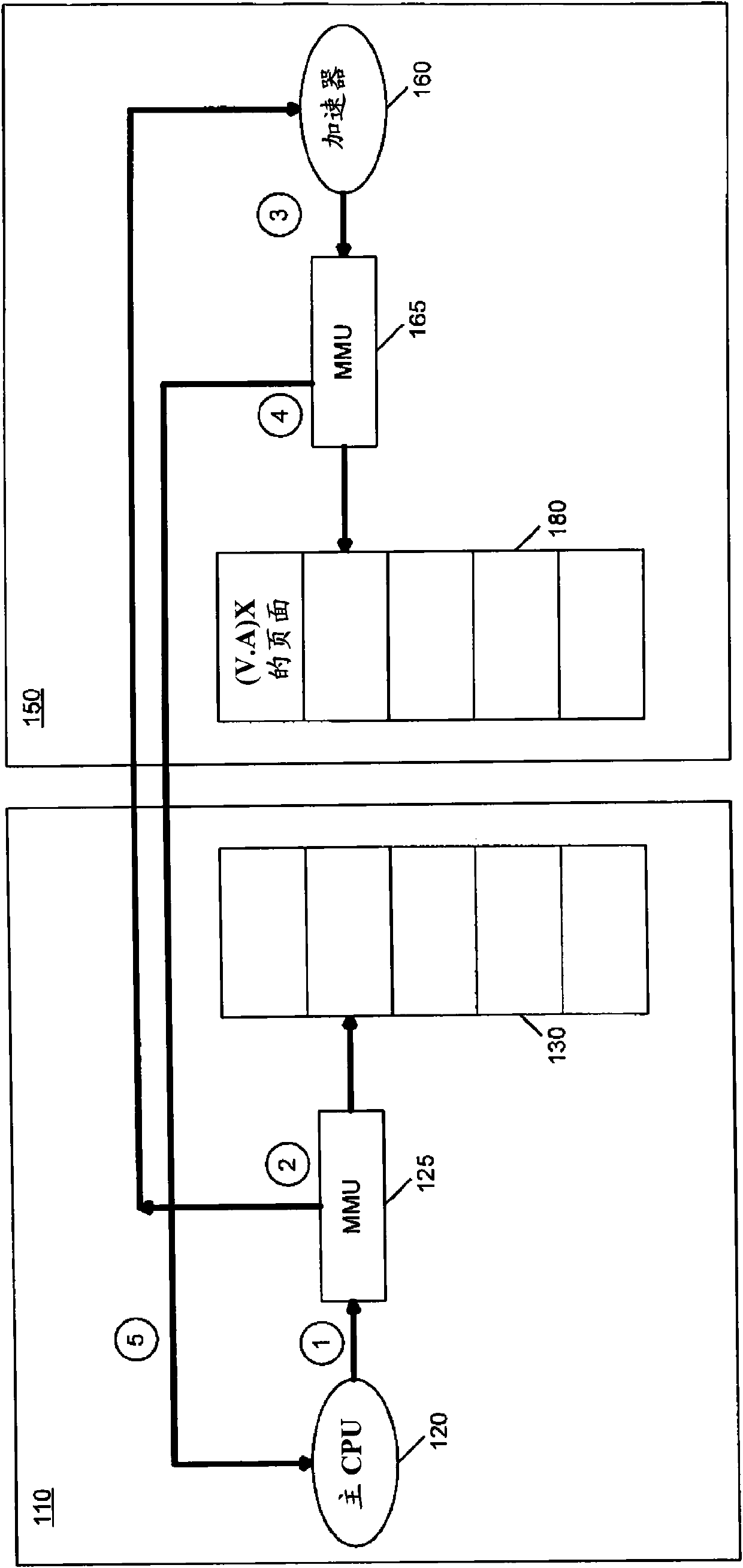

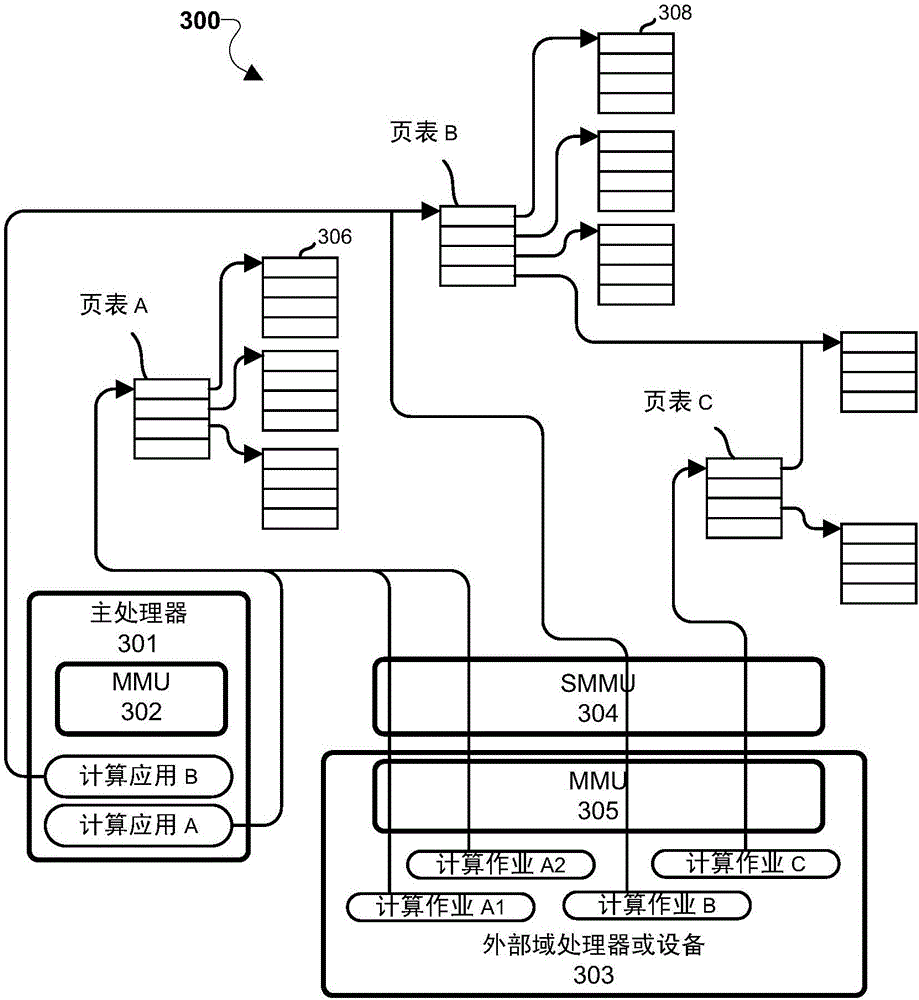

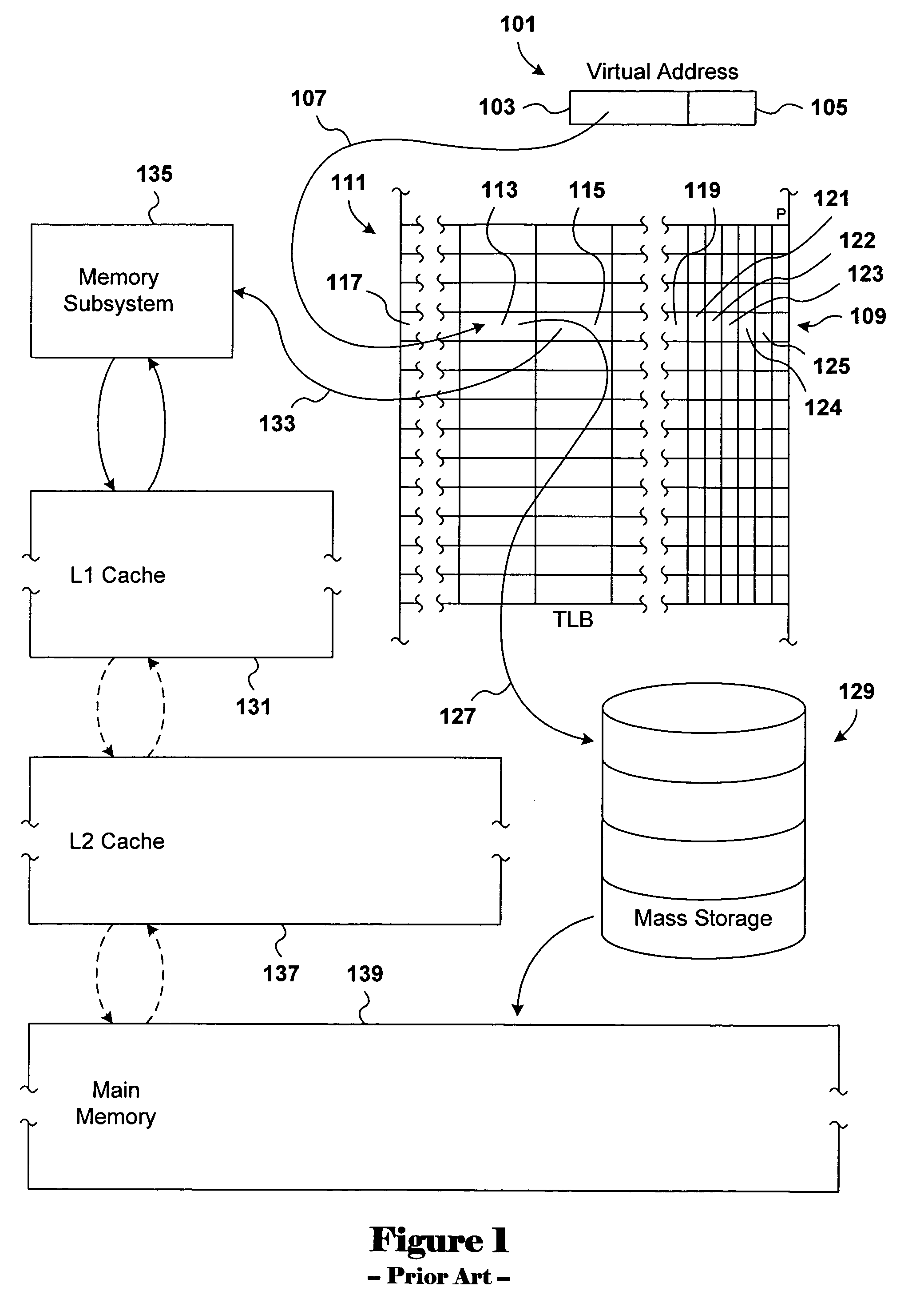

Providing hardware support for shared virtual memory between local and remote physical memory

Inactive | CN102023932A | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Memory management unit; Shared virtual memory

In one embodiment, the present invention includes a memory management unit (MMU) having entries to store virtual address to physical address translations, where each entry includes a location indicator to indicate whether a memory location for the corresponding entry is present in a local or remote memory. In this way, a common virtual memory space can be shared between the two memories, which may be separated by one or more non-coherent links. Other embodiments are described and claimed.

Owner:INTEL CORP
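A sketch of translation entries that carry a location indicator, assuming 4 KiB pages and a two-valued `Location` enum. All names here are illustrative. A caller would use the returned location to decide whether to issue a local access or go over the (possibly non-coherent) link to remote memory.

```python
from dataclasses import dataclass
from enum import Enum

PAGE_SIZE = 4096


class Location(Enum):
    LOCAL = "local"
    REMOTE = "remote"


@dataclass(frozen=True)
class TranslationEntry:
    physical_page: int
    location: Location  # the per-entry location indicator


class ToyMMU:
    def __init__(self) -> None:
        self.entries: dict[int, TranslationEntry] = {}

    def map(self, vpn: int, ppn: int, location: Location) -> None:
        self.entries[vpn] = TranslationEntry(ppn, location)

    def translate(self, vaddr: int) -> tuple[Location, int]:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        entry = self.entries[vpn]  # a KeyError stands in for a page fault
        return entry.location, entry.physical_page * PAGE_SIZE + offset


mmu = ToyMMU()
mmu.map(vpn=0, ppn=3, location=Location.LOCAL)
mmu.map(vpn=1, ppn=5, location=Location.REMOTE)
```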

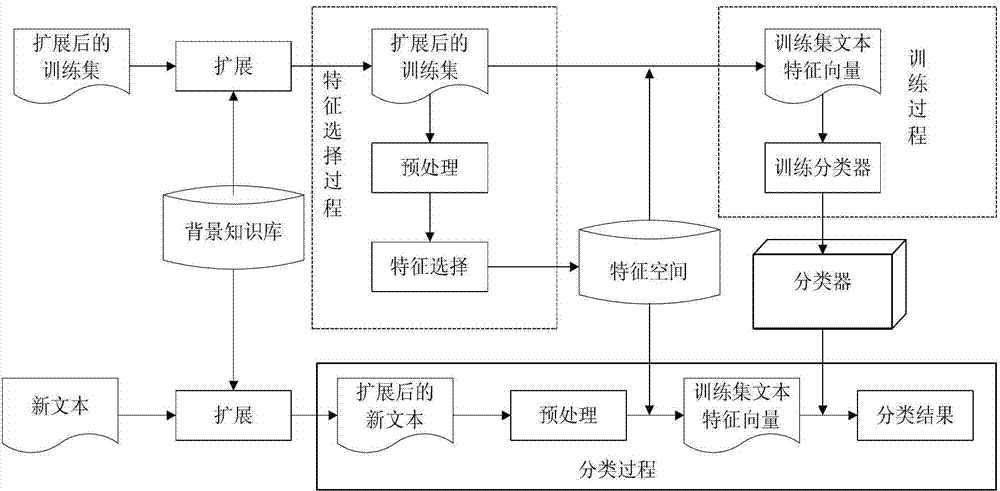

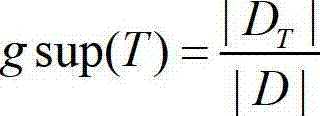

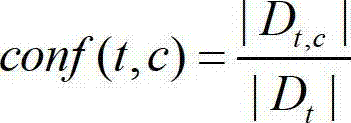

Chinese short text classification method based on characteristic extension

Inactive | CN102955856A | Improve accuracy; Improve recall; Special data processing applications; Classification methods; Data mining

The invention provides a Chinese short text classification method based on feature extension. The method comprises the following steps: (1) build a background knowledge base: mine pairs (two-tuples) of feature words that satisfy a given constraint from a labeled long-text corpus; (2) extend the short texts in the training set: add extension words to each training short text according to an extension rule based on the two-tuples in the background knowledge base; (3) build a classification model: train a support vector machine (SVM) classification model on the extended short-text training set; (4) extend the short text to be classified: add extension words according to an extension rule based on the two-tuples in the background knowledge base and the feature space of the classification model; and (5) generate the classification result from the classification model and the extended short text. Because the long-text corpus enriches the features of the short texts, the method improves the accuracy and recall of short text classification.

Owner:北京洛克威尔科技有限公司
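A sketch of the extension step (steps 2 and 4), assuming the background knowledge base has already been mined into a dictionary that maps a word to the words paired with it. The patent only speaks of "a certain extension rule". The rule used here appends every associated word not already present, optionally restricted to the model's feature space, and is a hypothetical stand-in. SVM training itself is omitted.

```python
from typing import Optional


def extend_short_text(tokens: list[str],
                      knowledge_base: dict[str, set[str]],
                      feature_space: Optional[set[str]] = None) -> list[str]:
    """Append words associated with each token in the background knowledge base.

    When `feature_space` is given (as for texts to be classified), only extension
    words that belong to the trained model's feature space are added.
    """
    extended, seen = list(tokens), set(tokens)
    for word in tokens:
        for related in sorted(knowledge_base.get(word, ())):
            if related in seen:
                continue
            if feature_space is not None and related not in feature_space:
                continue
            extended.append(related)
            seen.add(related)
    return extended


kb = {"apple": {"iphone", "fruit"}, "price": {"cost"}}   # pairs mined from long texts
train_doc = extend_short_text(["apple", "price"], kb)
test_doc = extend_short_text(["apple", "price"], kb, feature_space={"fruit", "cost"})
```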

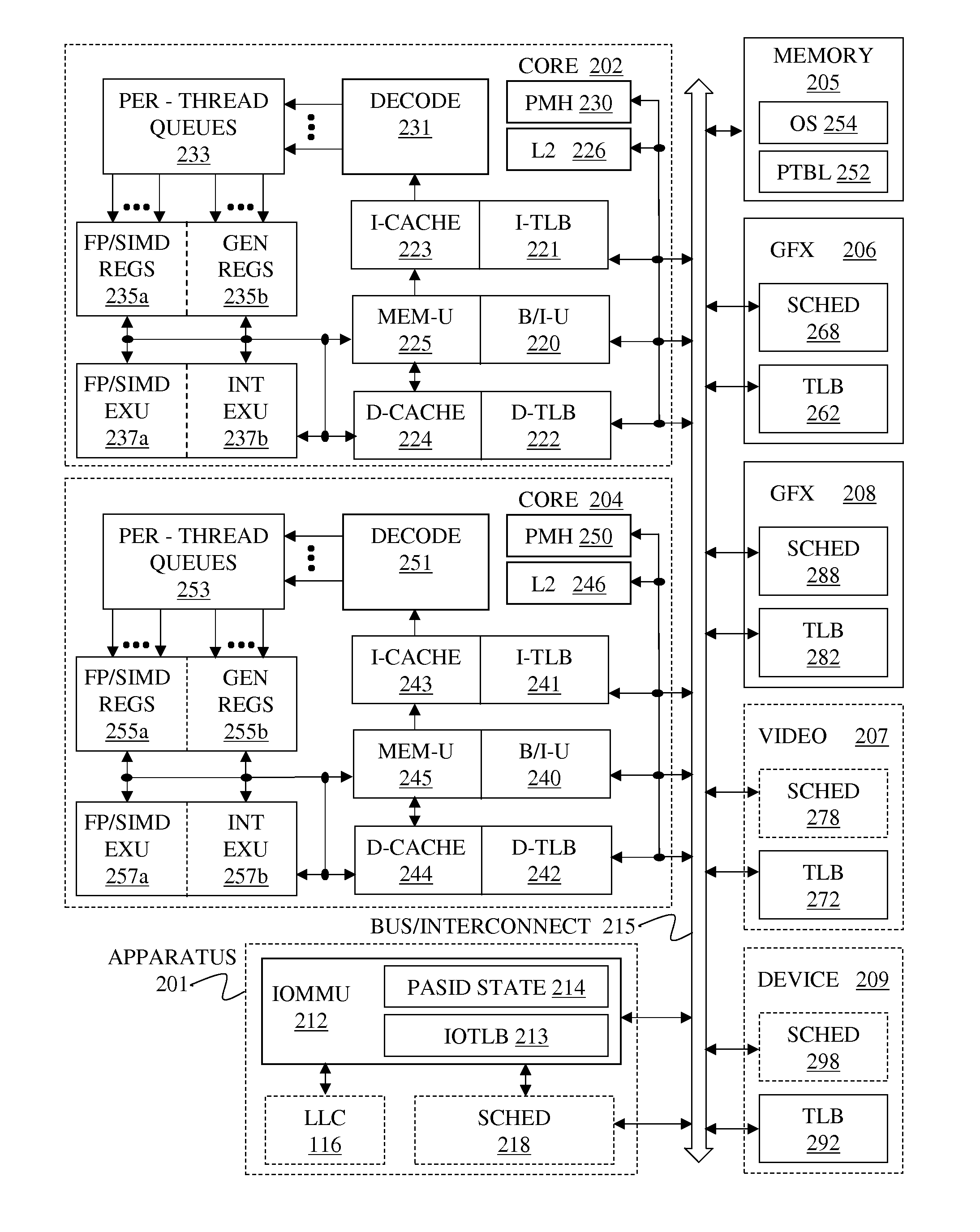

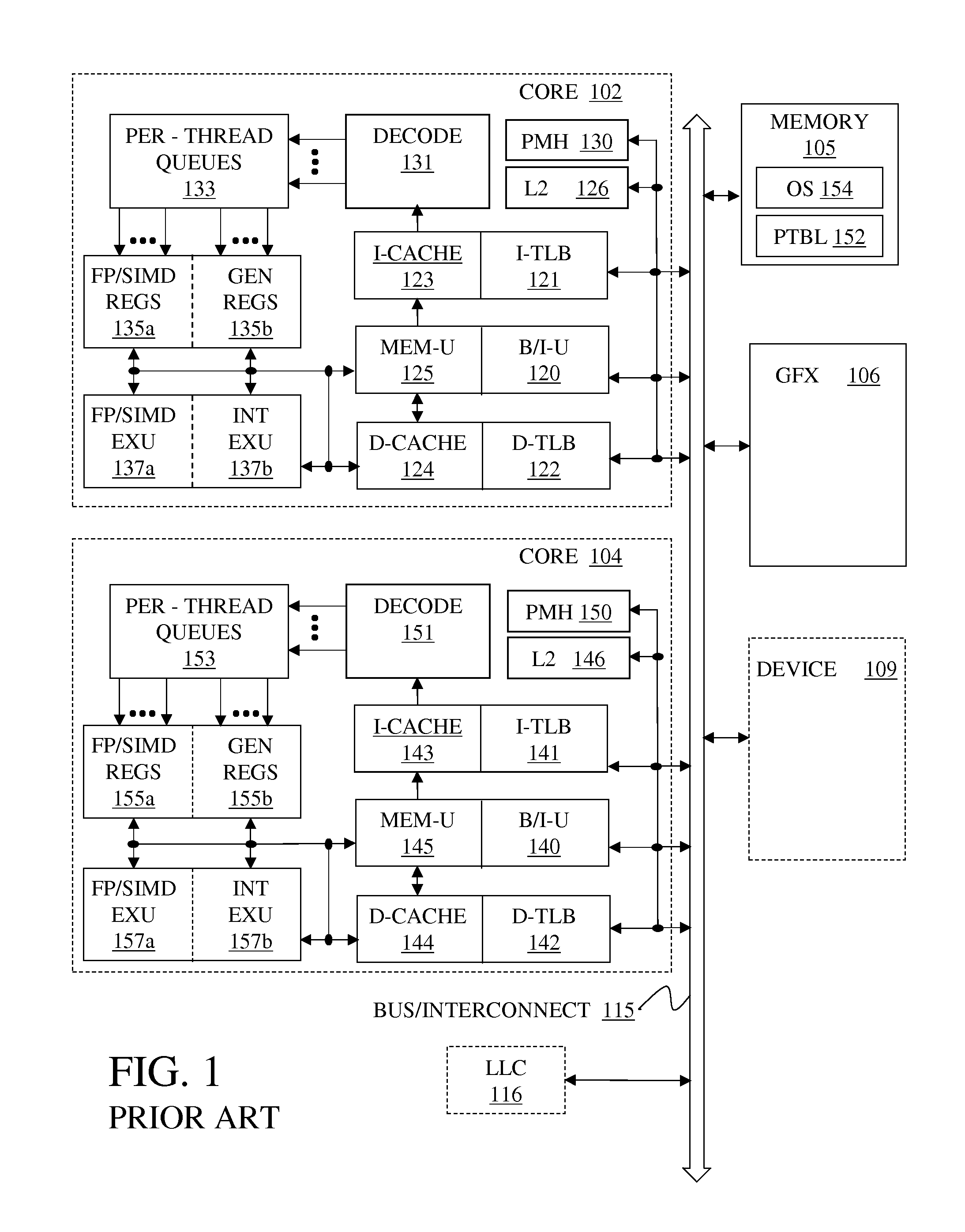

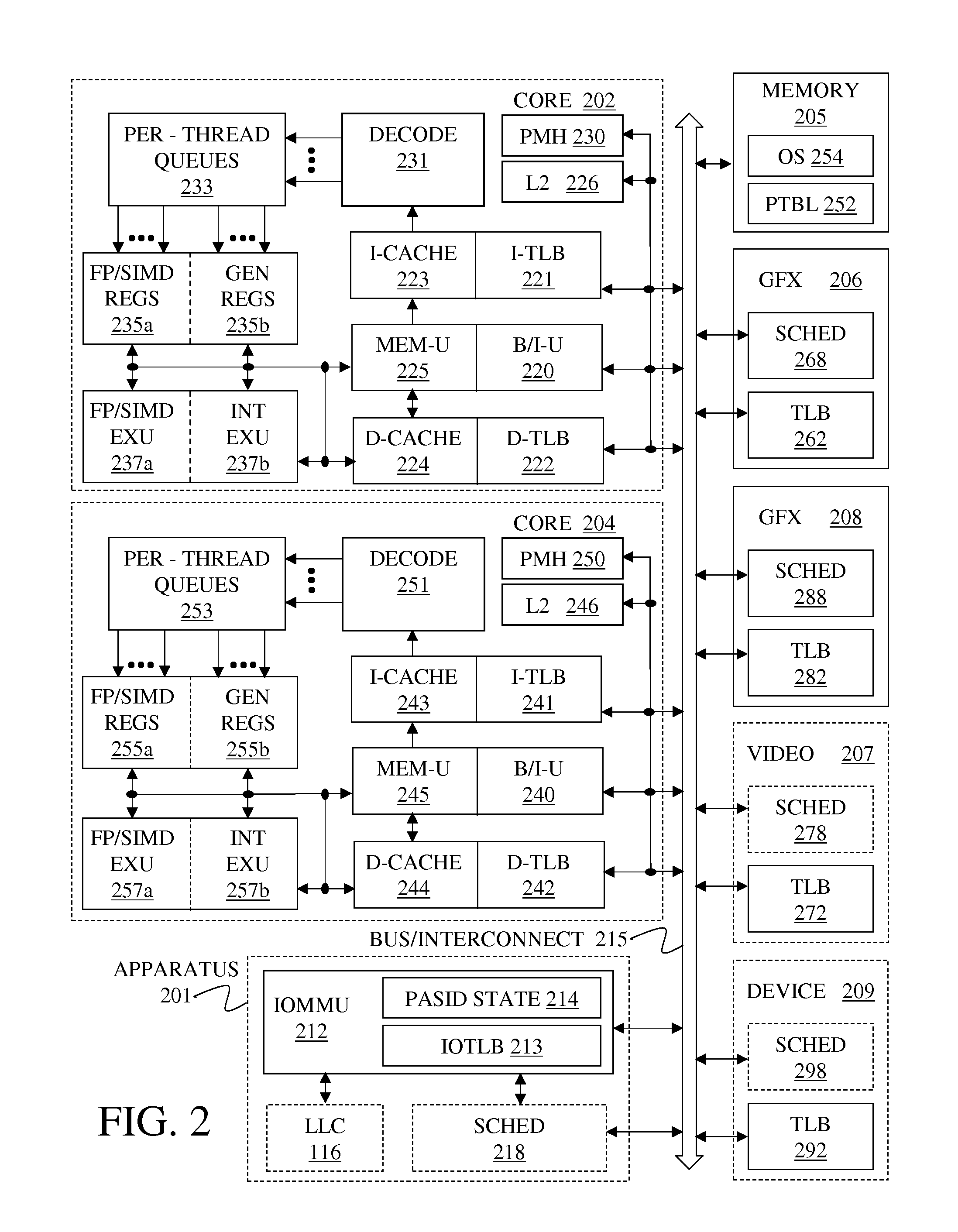

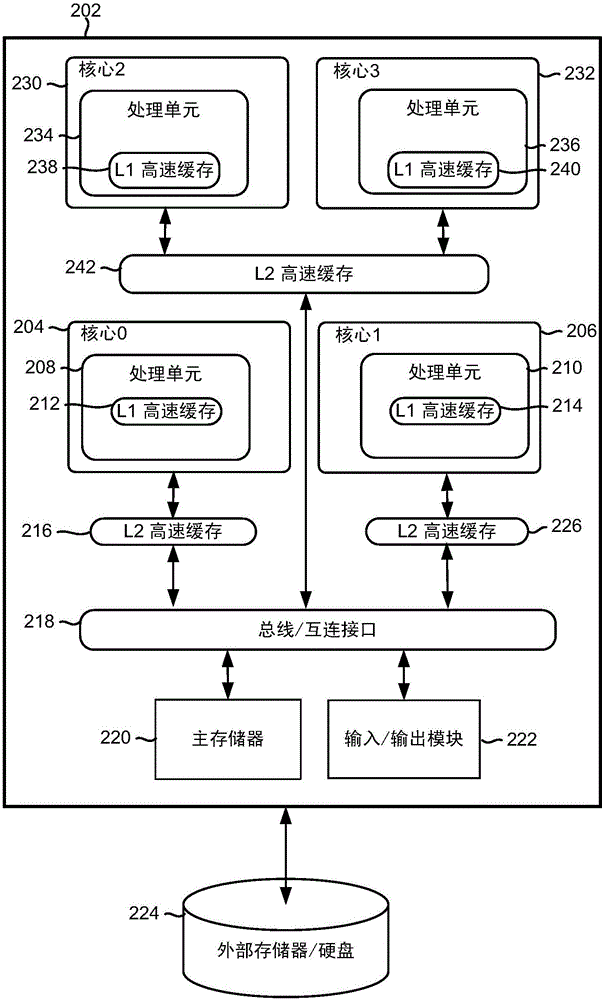

Method and apparatus for TLB shoot-down in a heterogeneous computing system supporting shared virtual memory

Active | US20130031333A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Management unit; Biological activation

Methods and apparatus are disclosed for efficient TLB (translation look-aside buffer) shoot-downs for heterogeneous devices sharing virtual memory in a multi-core system. Embodiments of an apparatus for efficient TLB shoot-downs may include a TLB to store virtual address translation entries, and a memory management unit, coupled with the TLB, to maintain PASID (process address space identifier) state entries corresponding to the virtual address translation entries. The PASID state entries may include an active reference state and a lazy-invalidation state. The memory management unit may perform atomic modification of PASID state entries responsive to receiving PASID state update requests from devices in the multi-core system and read the lazy-invalidation state of the PASID state entries. The memory management unit may send PASID state update responses to the devices to synchronize TLB entries prior to activation responsive to the respective lazy-invalidation state.

Owner:INTEL CORP
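A toy model of the PASID state entries, assuming a fixed set of devices and using a `threading.Lock` in place of the hardware's atomic update. Devices actively using a PASID get an immediate shoot-down. Inactive ones are only marked, and learn on their next activation that they must flush first. Class and method names are hypothetical.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class PasidState:
    active_devices: set = field(default_factory=set)  # "active reference" state
    lazy_flush: set = field(default_factory=set)      # devices with a deferred invalidation


class ToyPasidTable:
    def __init__(self, devices) -> None:
        self.devices = frozenset(devices)
        self._lock = threading.Lock()  # stands in for atomic modification in hardware
        self._states: dict[int, PasidState] = {}

    def _state(self, pasid: int) -> PasidState:
        return self._states.setdefault(pasid, PasidState())

    def invalidate(self, pasid: int) -> set:
        """Return devices needing an immediate shoot-down; defer the rest lazily."""
        with self._lock:
            st = self._state(pasid)
            st.lazy_flush |= self.devices - st.active_devices
            return set(st.active_devices)

    def activate(self, device, pasid: int) -> bool:
        """Return True if the device must flush its TLB entries before using the PASID."""
        with self._lock:
            st = self._state(pasid)
            st.active_devices.add(device)
            must_flush = device in st.lazy_flush
            st.lazy_flush.discard(device)
            return must_flush

    def deactivate(self, device, pasid: int) -> None:
        with self._lock:
            self._state(pasid).active_devices.discard(device)


table = ToyPasidTable({"gpu", "npu"})
gpu_flush = table.activate("gpu", pasid=3)
shoot_down = table.invalidate(pasid=3)
npu_flush = table.activate("npu", pasid=3)
```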

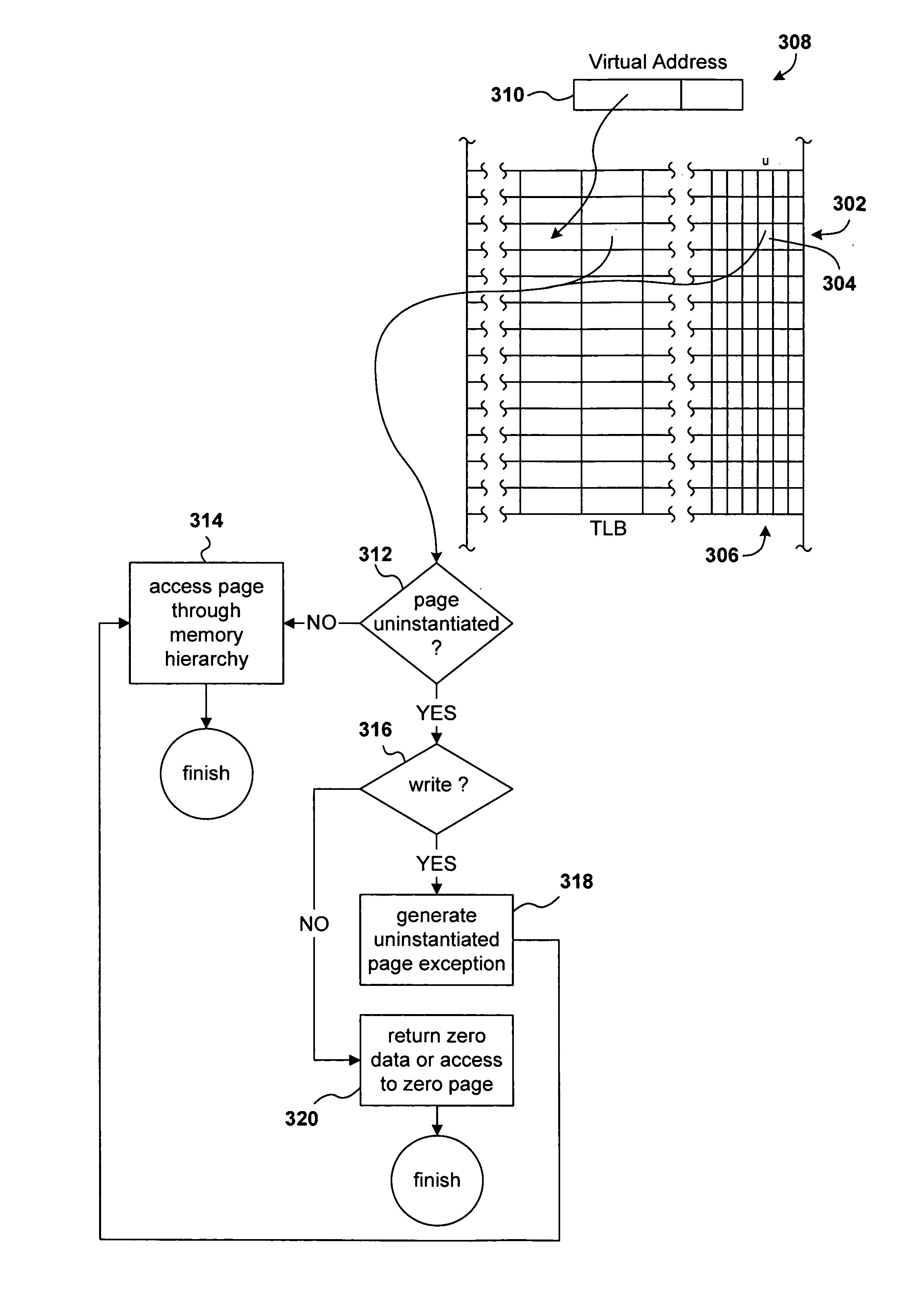

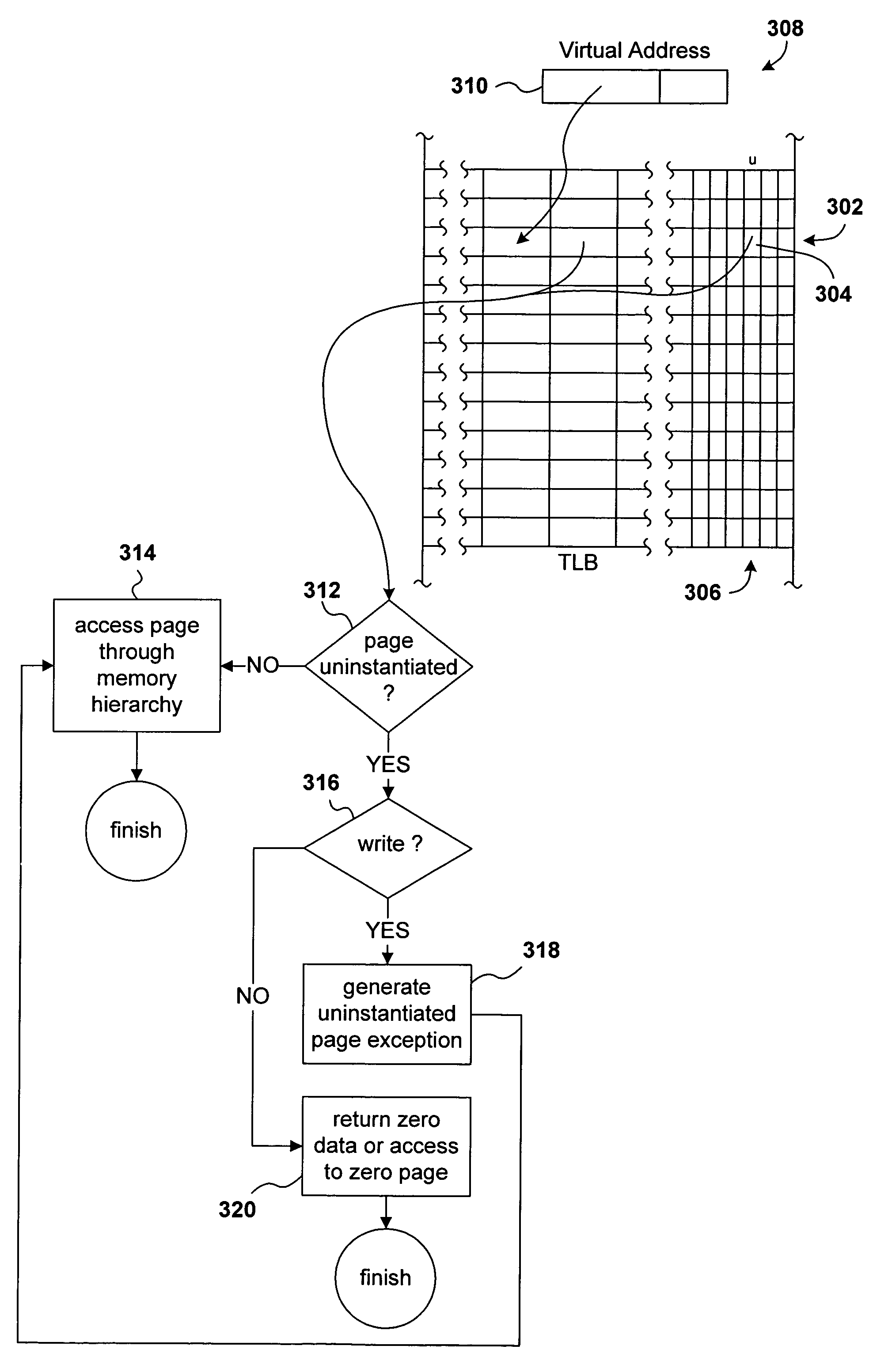

Immediate virtual memory

Active | US20050172098A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Operational system

Various embodiments of the present invention provide for immediate allocation of virtual memory on behalf of processes running within a computer system. One or more bit flags within each translation indicate whether or not a corresponding virtual memory page is immediate. READ access to immediate virtual memory is satisfied by hardware-supplied or software-supplied values. WRITE access to immediate virtual memory raises an exception to allow an operating system to allocate physical memory for storing values written to the immediate virtual memory by the WRITE access.

Owner:GOOGLE LLC
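A sketch of immediate pages in a toy address space, assuming the hardware-supplied read value is zero and that the write exception is modeled as a direct call into an "OS" handler. `ToyAddressSpace` and its methods are illustrative names only.

```python
class ToyAddressSpace:
    """Pages flagged 'immediate' have no physical frame until first written."""

    def __init__(self, page_size: int = 4096, fill_value: int = 0) -> None:
        self.page_size = page_size
        self.fill_value = fill_value         # value supplied for reads of immediate pages
        self.immediate: set[int] = set()     # virtual pages carrying the immediate flag
        self.frames: dict[int, bytearray] = {}

    def allocate_immediate(self, vpn: int) -> None:
        self.immediate.add(vpn)              # no physical memory is reserved yet

    def read(self, addr: int) -> int:
        vpn, offset = divmod(addr, self.page_size)
        if vpn in self.immediate:
            return self.fill_value           # satisfied without touching physical memory
        return self.frames[vpn][offset]

    def write(self, addr: int, value: int) -> None:
        vpn, offset = divmod(addr, self.page_size)
        if vpn in self.immediate:
            self._on_write_fault(vpn)        # models the exception raised on WRITE
        self.frames[vpn][offset] = value

    def _on_write_fault(self, vpn: int) -> None:
        # The "OS" allocates a real frame, pre-filled with the value reads returned.
        self.frames[vpn] = bytearray([self.fill_value]) * self.page_size
        self.immediate.discard(vpn)


space = ToyAddressSpace()
space.allocate_immediate(2)
before = space.read(2 * 4096 + 5)
had_frame_before_write = 2 in space.frames
space.write(2 * 4096 + 5, 9)
```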

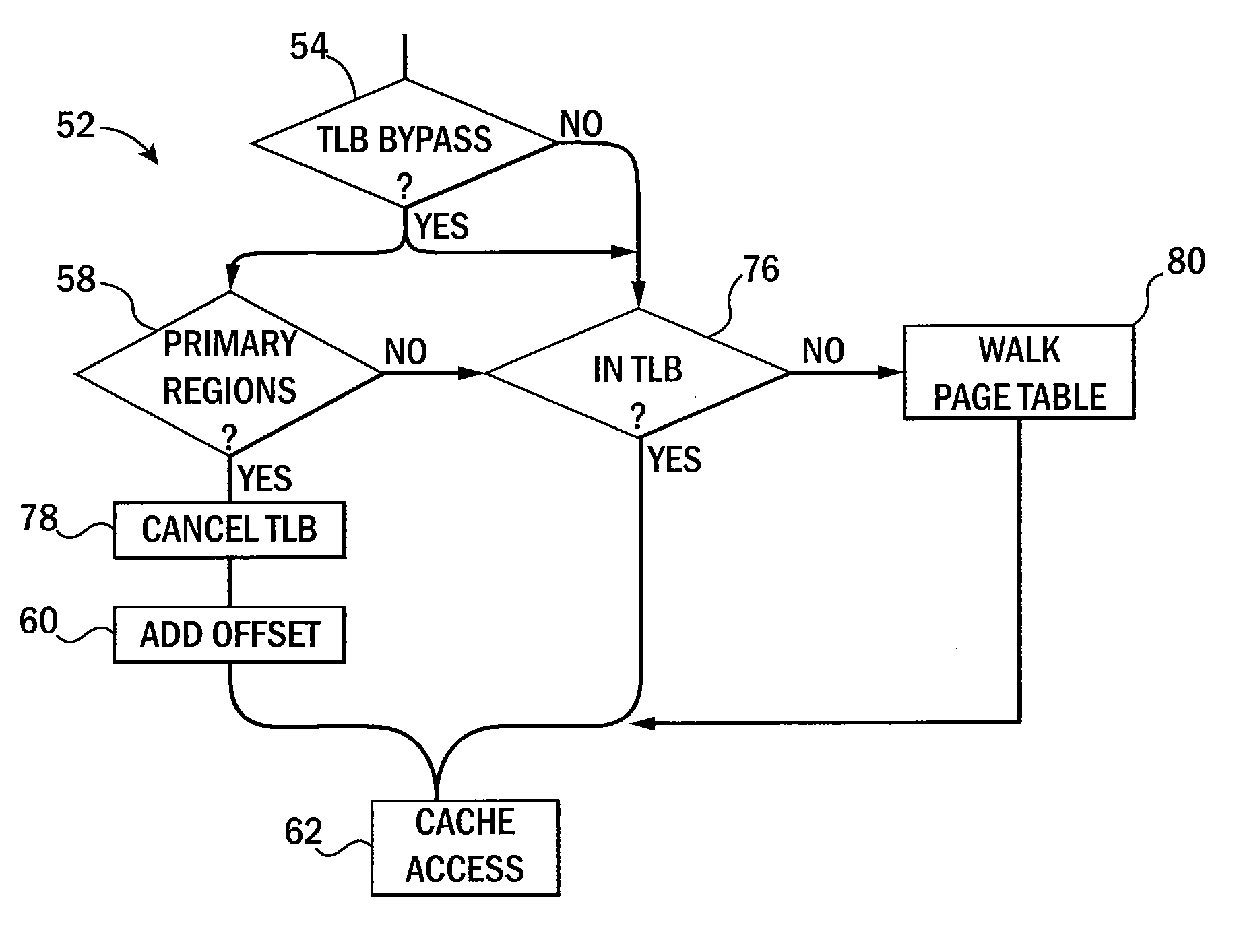

Virtual Memory Management System with Reduced Latency

Active | US20140208064A1 | Lower latency; Eliminate need; Memory addressing/allocation/relocation; Micro-instruction address formation; Memory address; Computerized system

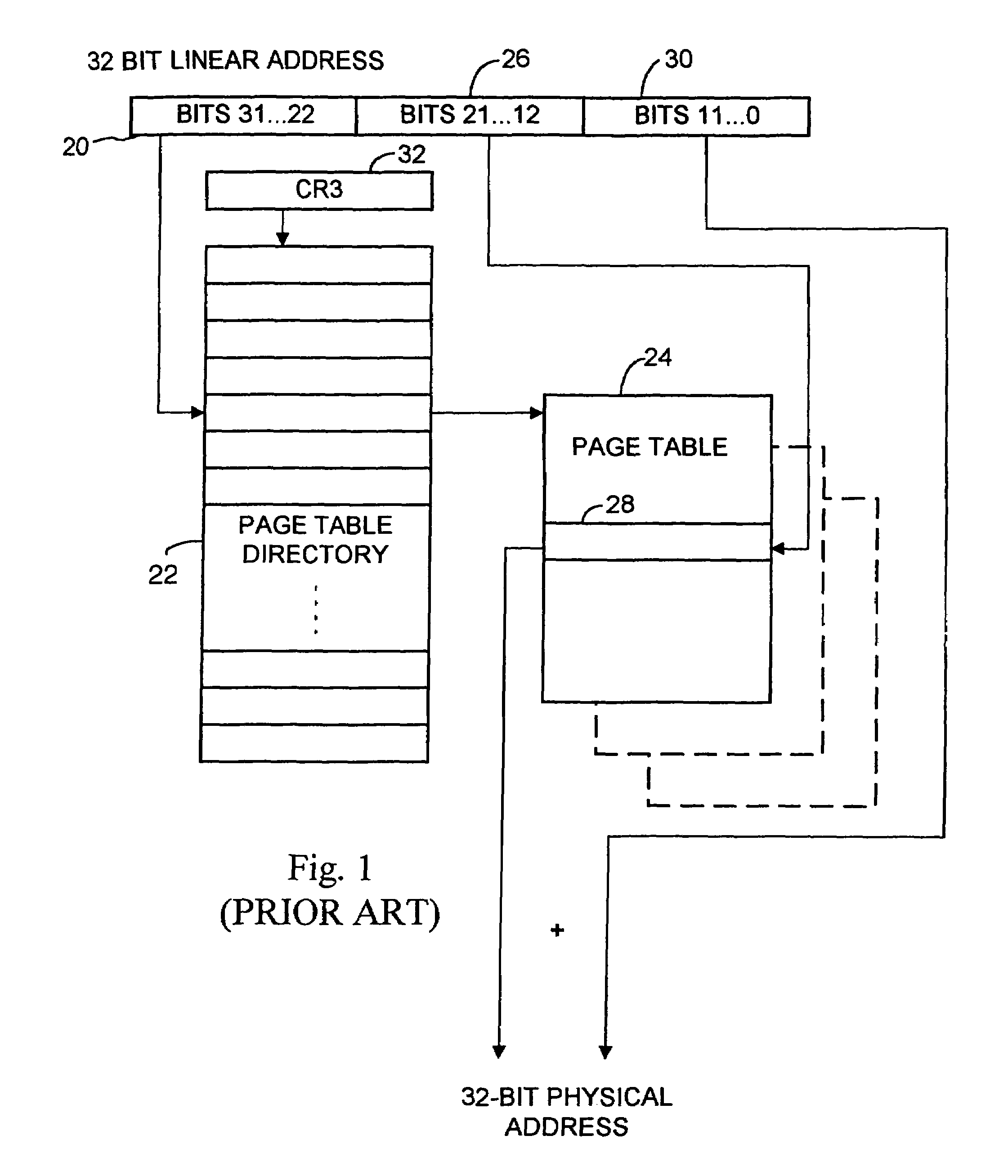

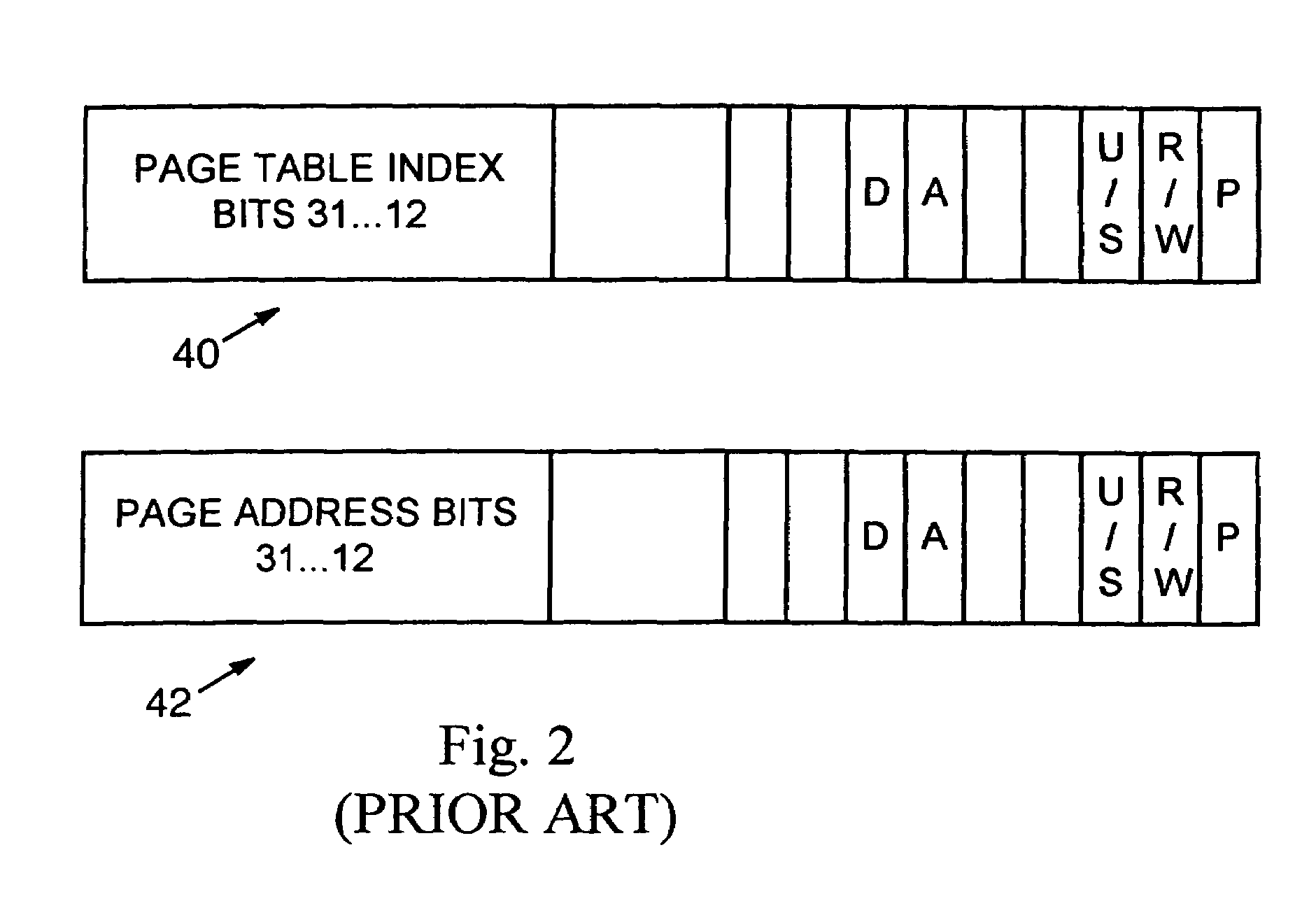

A computer system using virtual memory provides hybrid memory access either through a conventional translation between virtual memory and physical memory using a page table possibly with a translation lookaside buffer, or a high-speed translation using a fixed offset value between virtual memory and physical memory. Selection between these modes of access may be encoded into the address space of virtual memory eliminating the need for a separate tagging operation of specific memory addresses.

Owner:WISCONSIN ALUMNI RES FOUND
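A sketch of the two translation paths. It assumes 48-bit-style addresses in which bit 47, a hypothetical choice, marks a virtual address as belonging to the fixed-offset region, so the mode is read from the address itself and no separate tagging structure is consulted.

```python
PAGE_SIZE = 4096
DIRECT_BIT = 1 << 47  # hypothetical: this virtual-address bit selects fixed-offset mode


def translate(vaddr: int, page_table: dict[int, int], direct_offset: int) -> int:
    if vaddr & DIRECT_BIT:
        # High-speed path: one addition, no page walk and no TLB lookup.
        return (vaddr & ~DIRECT_BIT) + direct_offset
    # Conventional path: page-table lookup (a TLB would cache this result).
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    return page_table[vpn] * PAGE_SIZE + offset
```

For example, `translate(DIRECT_BIT | 0x1000, {}, 0x8000_0000)` takes the fixed-offset path, while `translate(0x2010, {2: 7}, 0)` walks the page table.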

Share memory service system and method of web service oriented applications

Inactive | US20060230118A1 | Low time and complexity; Operating flow; Digital computer details; Transmission; Virtual memory; Web service

A shared memory service system and method for web-service-oriented applications. The method includes declaring an object that must be shared among a plurality of web services as a shared object, storing the shared object in a shared virtual memory, and providing the shared object in the shared virtual memory for access by the web services and a plurality of clients. A shared virtual memory can thereby be simulated in any web-service-oriented application, and managing it gives the application's web services a way to access the shared object directly instead of invoking each other through external calls. This can reduce the complexity of developing service-oriented applications.

Owner:DATA SYST CONSULTING

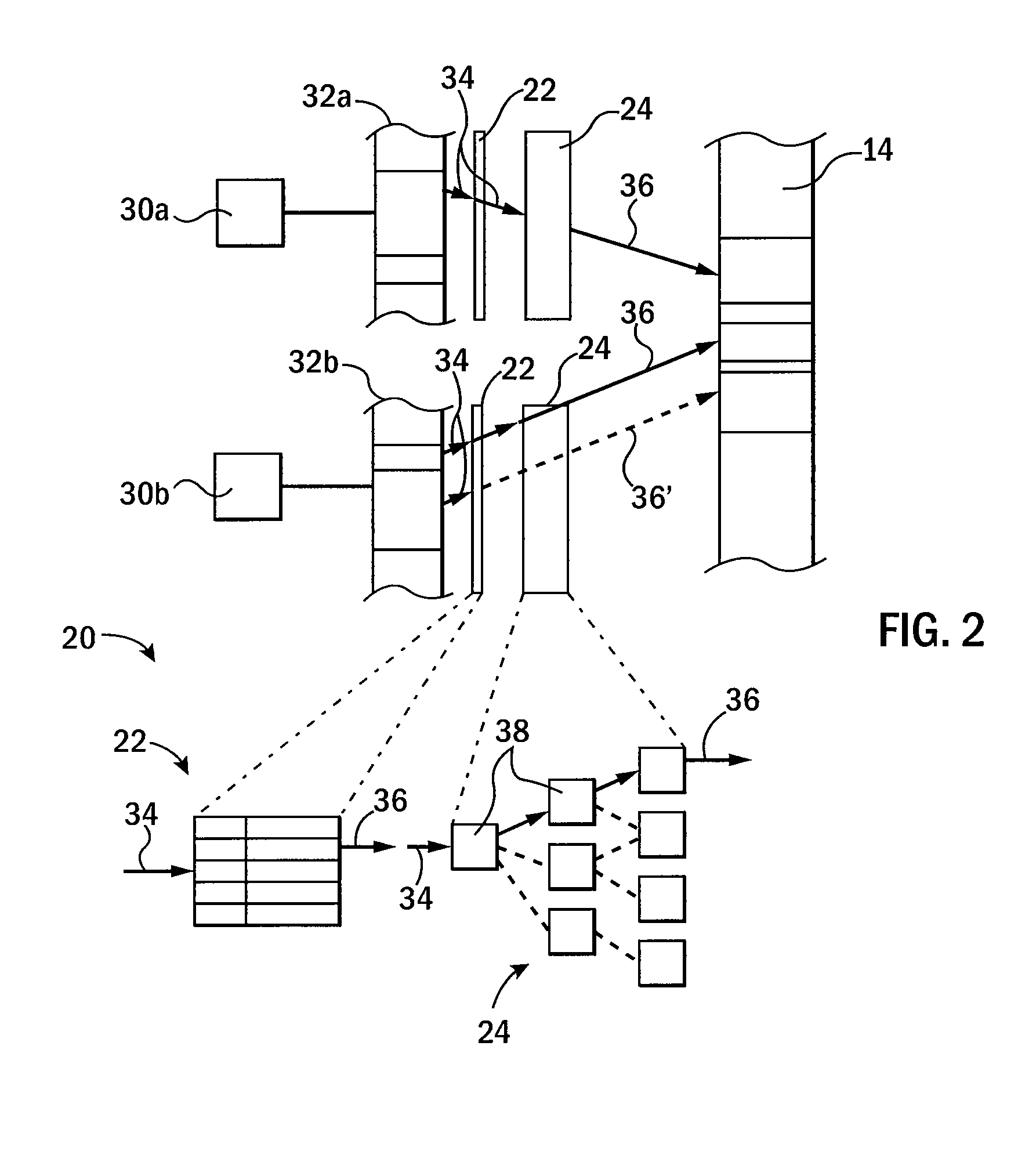

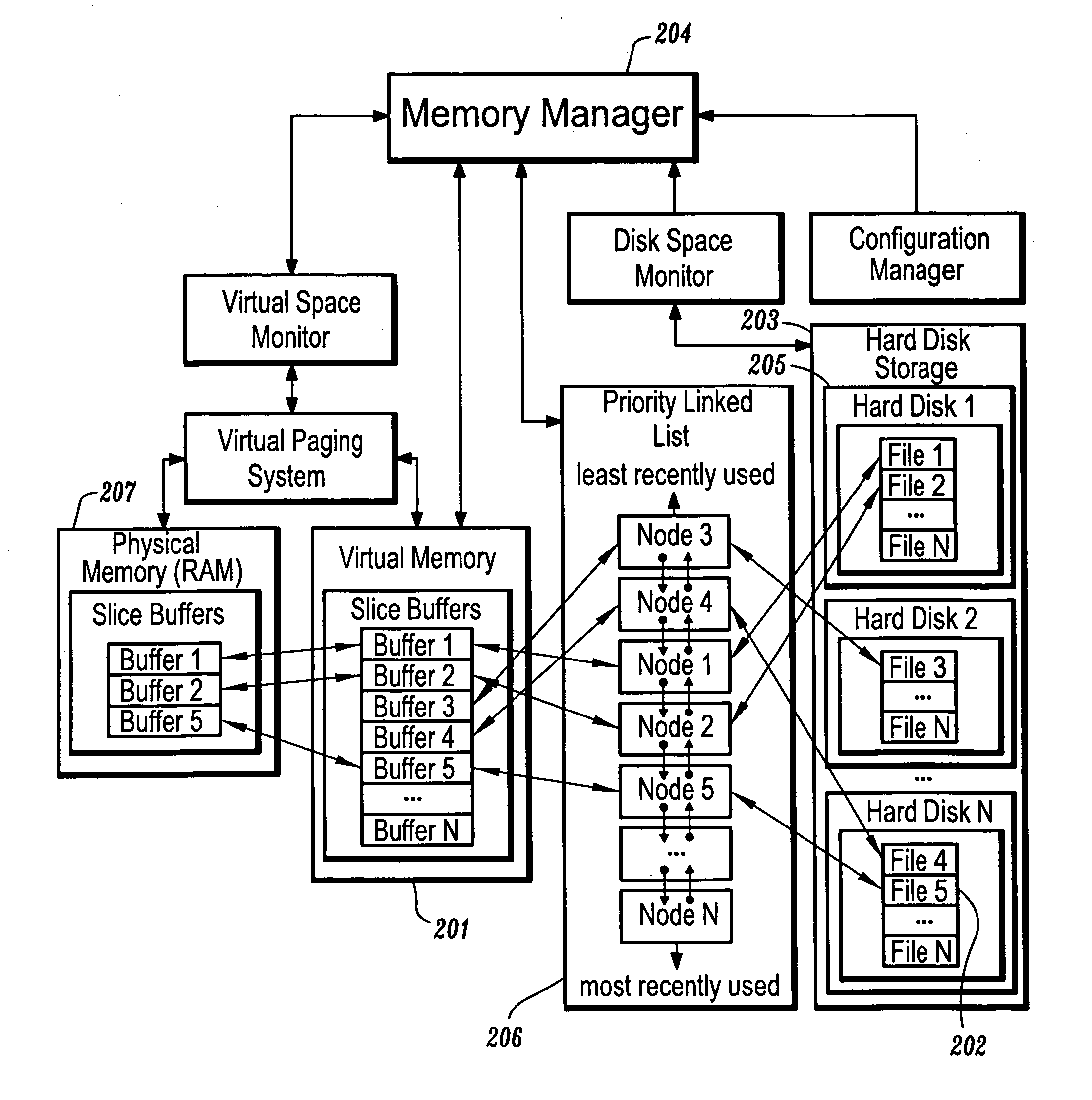

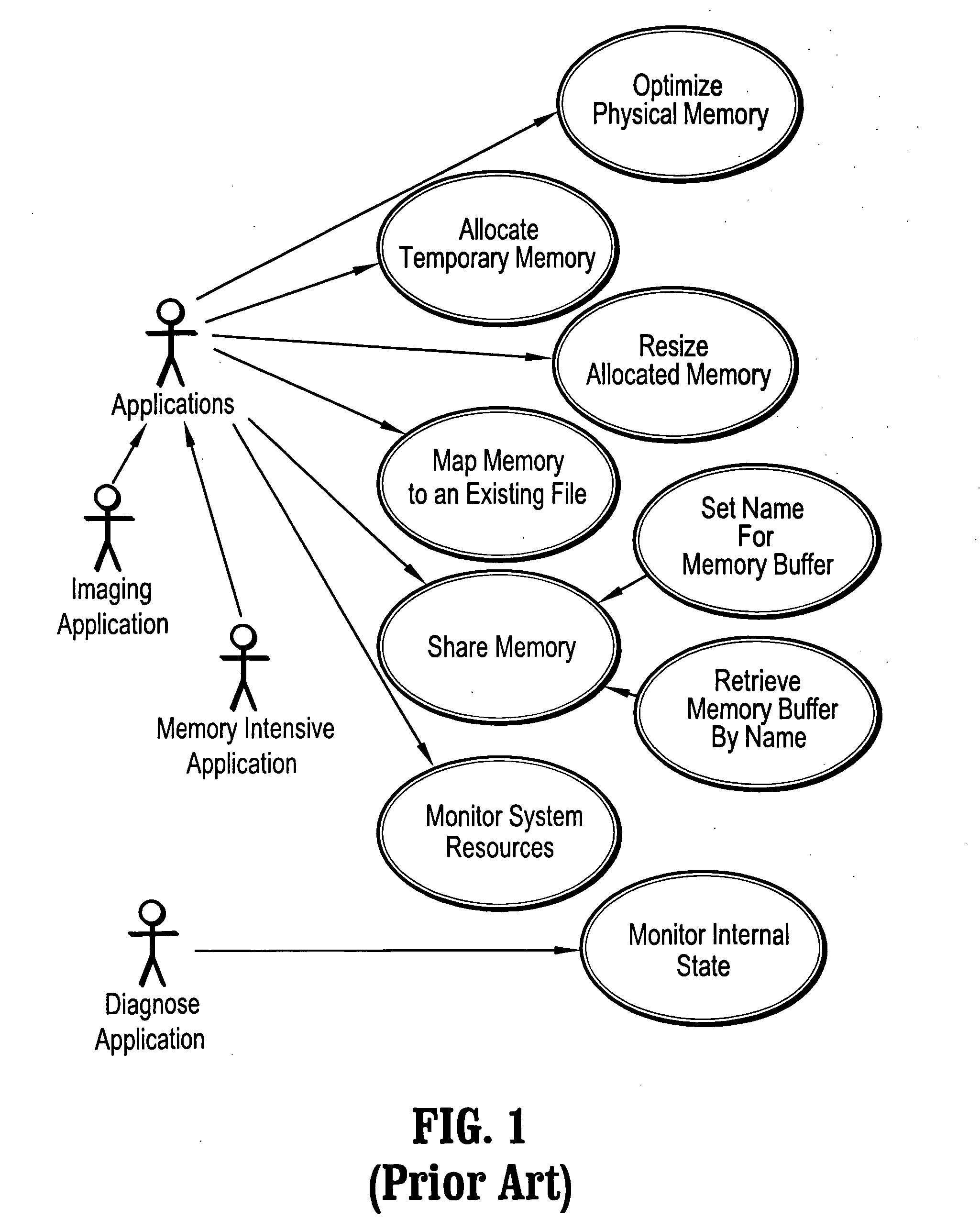

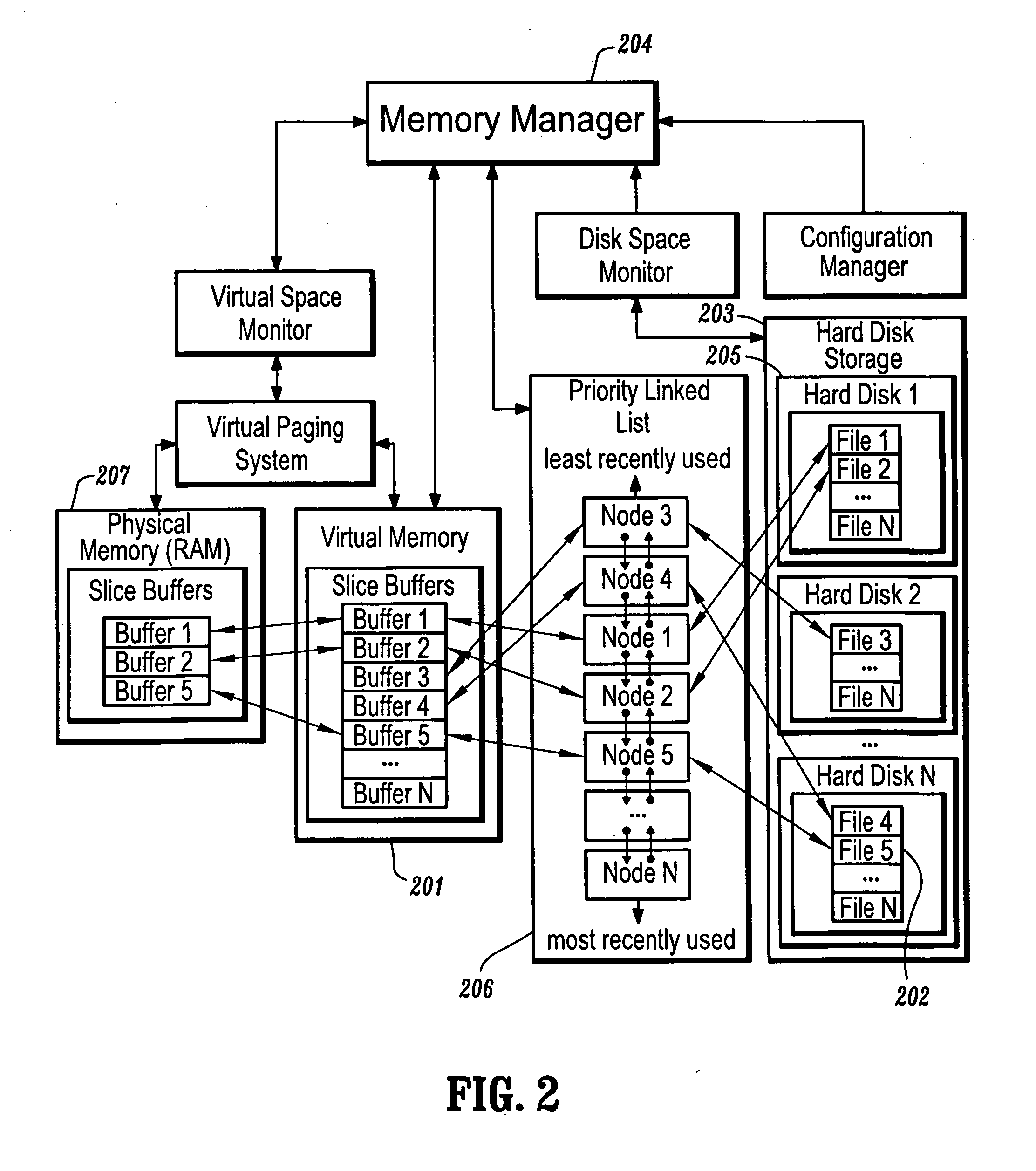

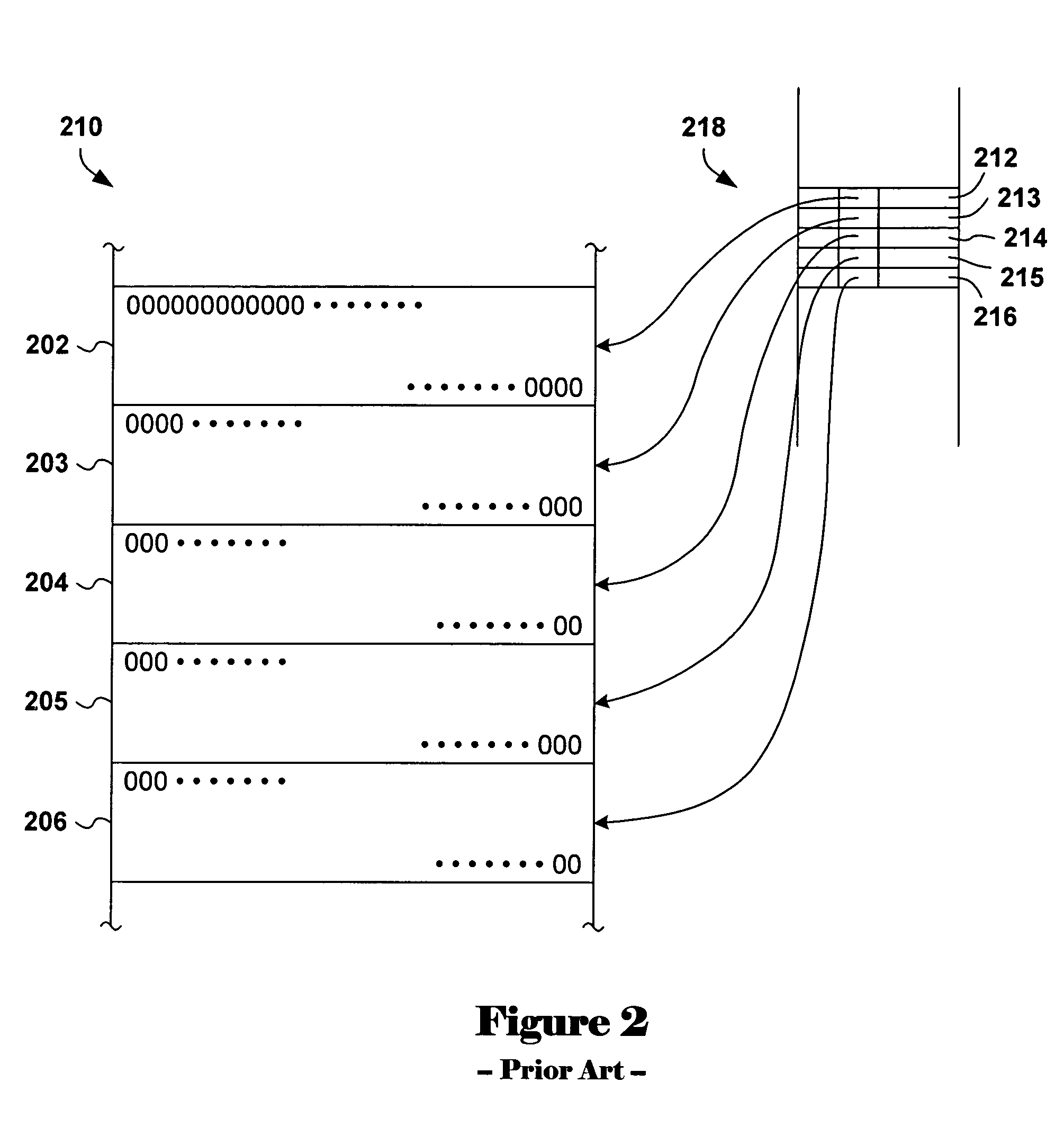

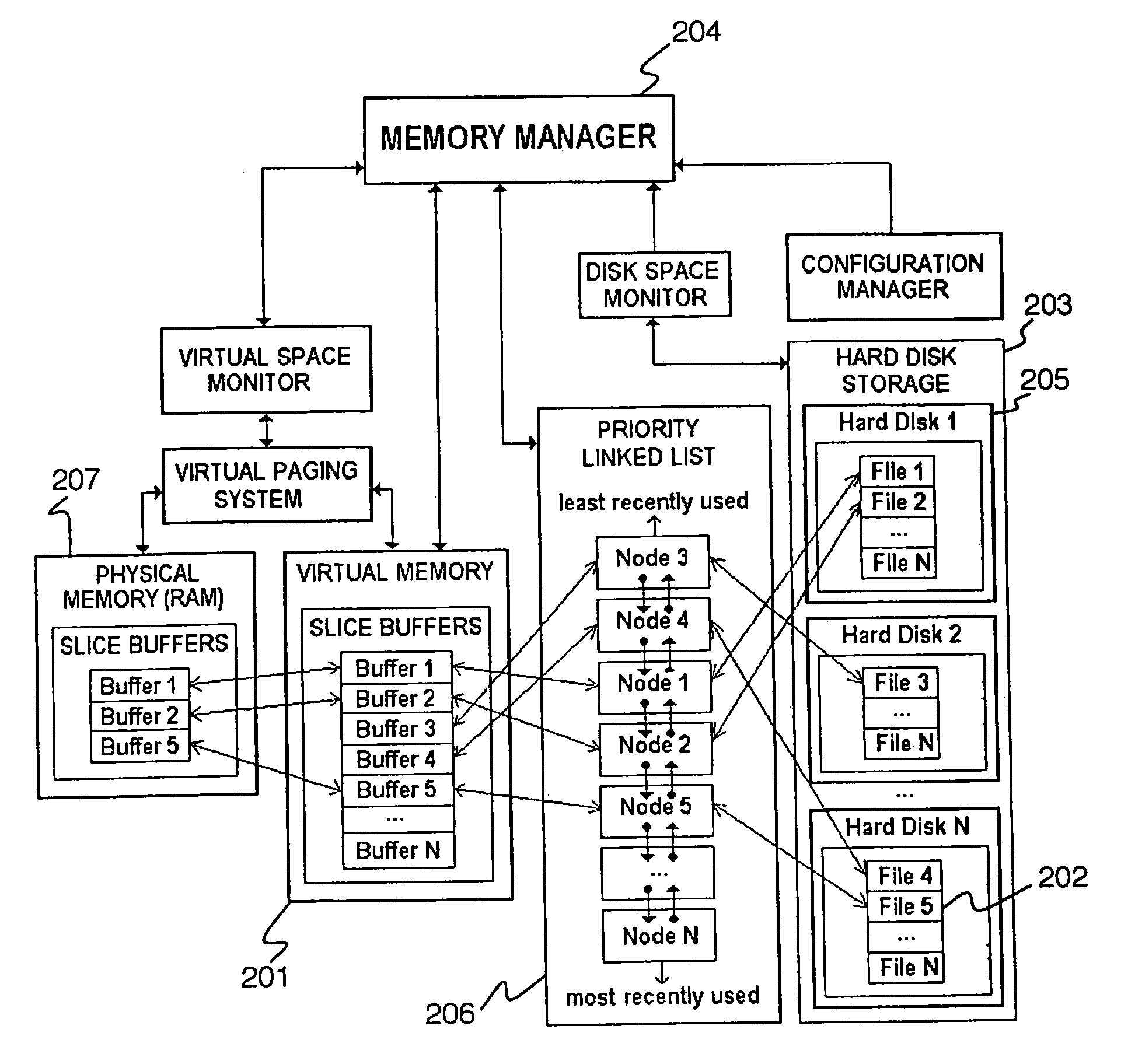

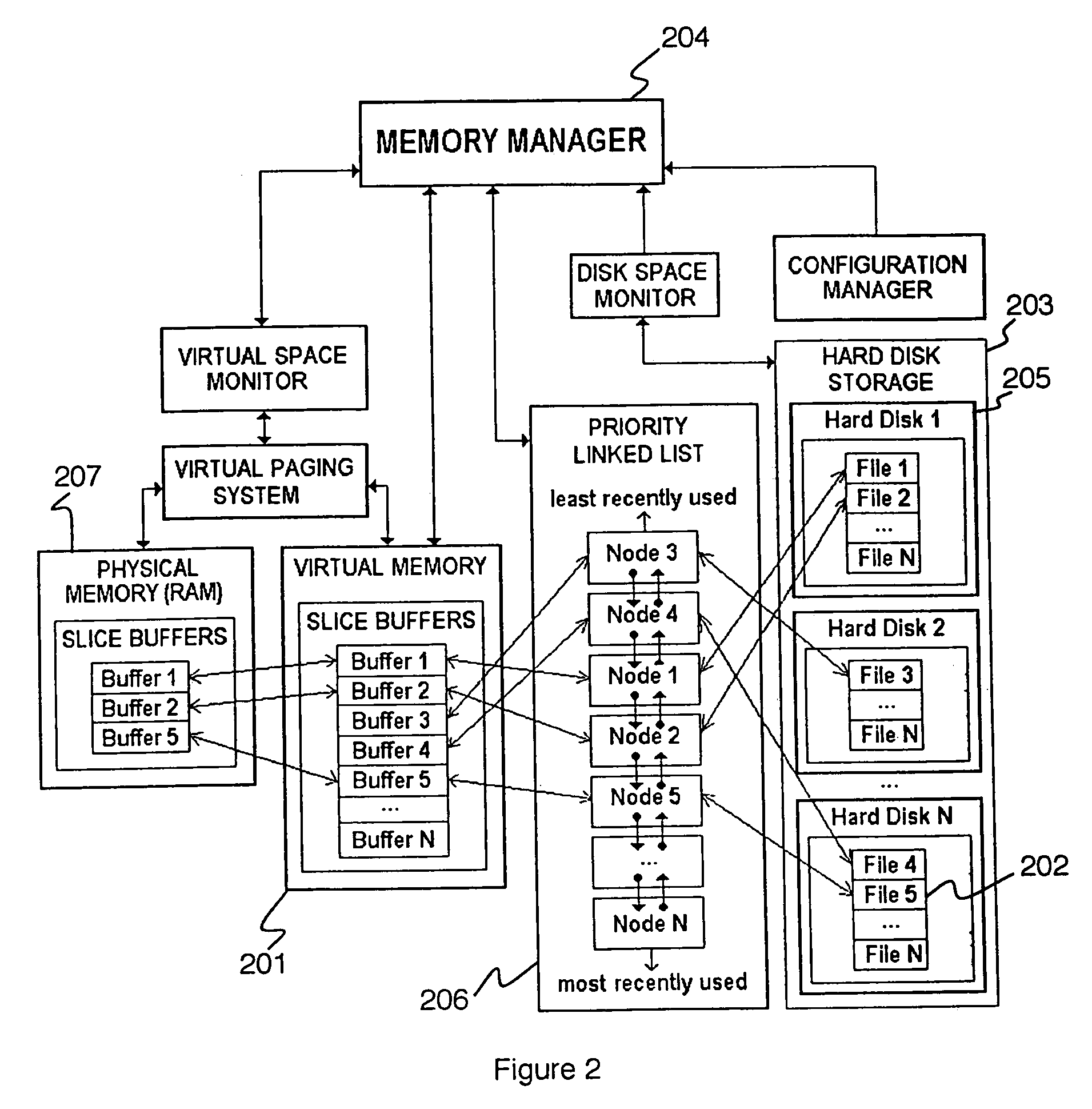

Advanced memory management architecture for large data volumes

Inactive | US20050033934A1 | Memory addressing/allocation/relocation; Input/output processes for data processing; Application software; Paging

An efficient memory management method for handling large data volumes, comprising a memory management interface between a plurality of applications and a physical memory, determining a priority list of buffers accessed by the plurality of applications, providing efficient disk paging based on the priority list, ensuring sufficient physical memory is available, sharing managed data buffers among a plurality of applications, mapping and unmapping data buffers in virtual memory efficiently to overcome the limits of virtual address space.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

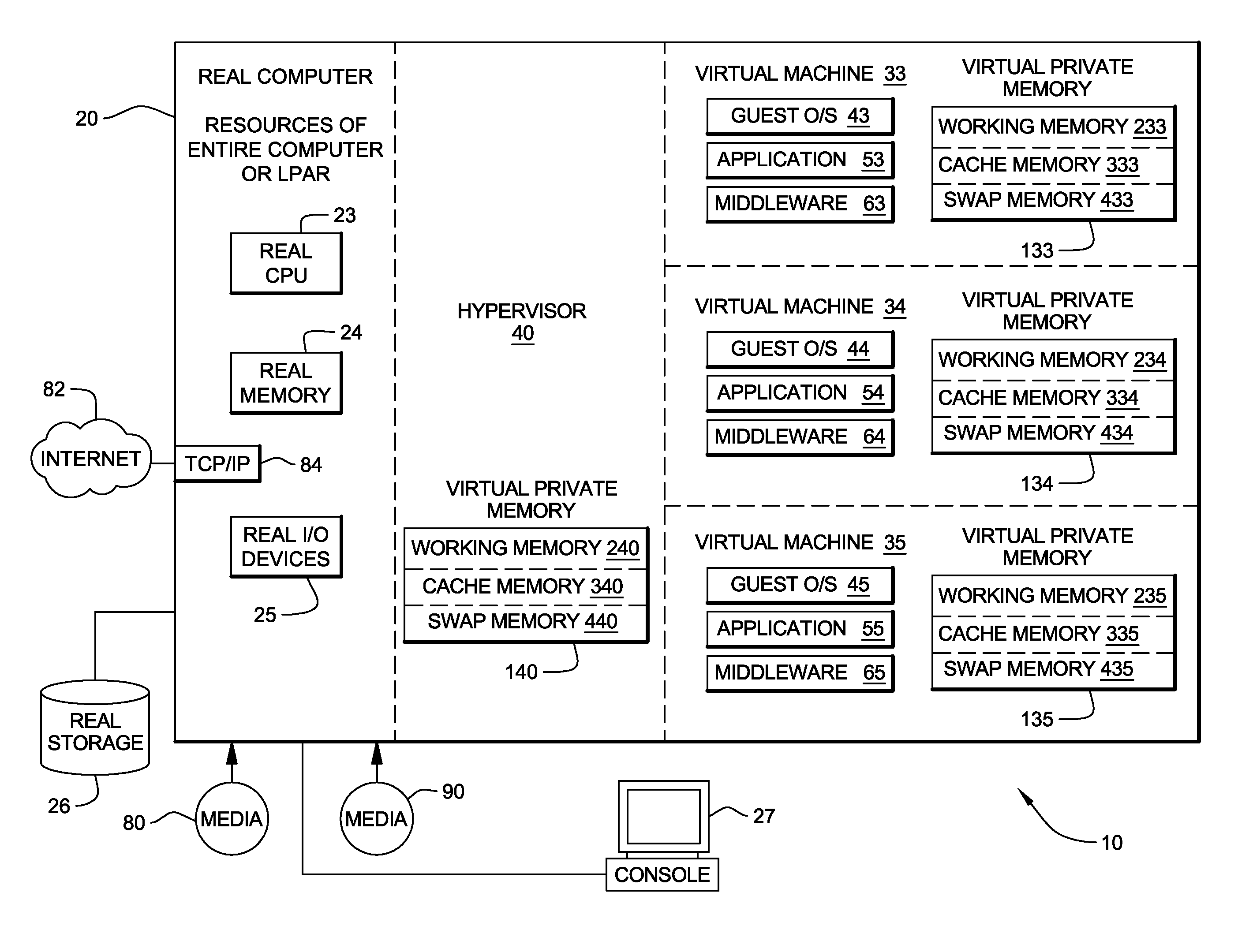

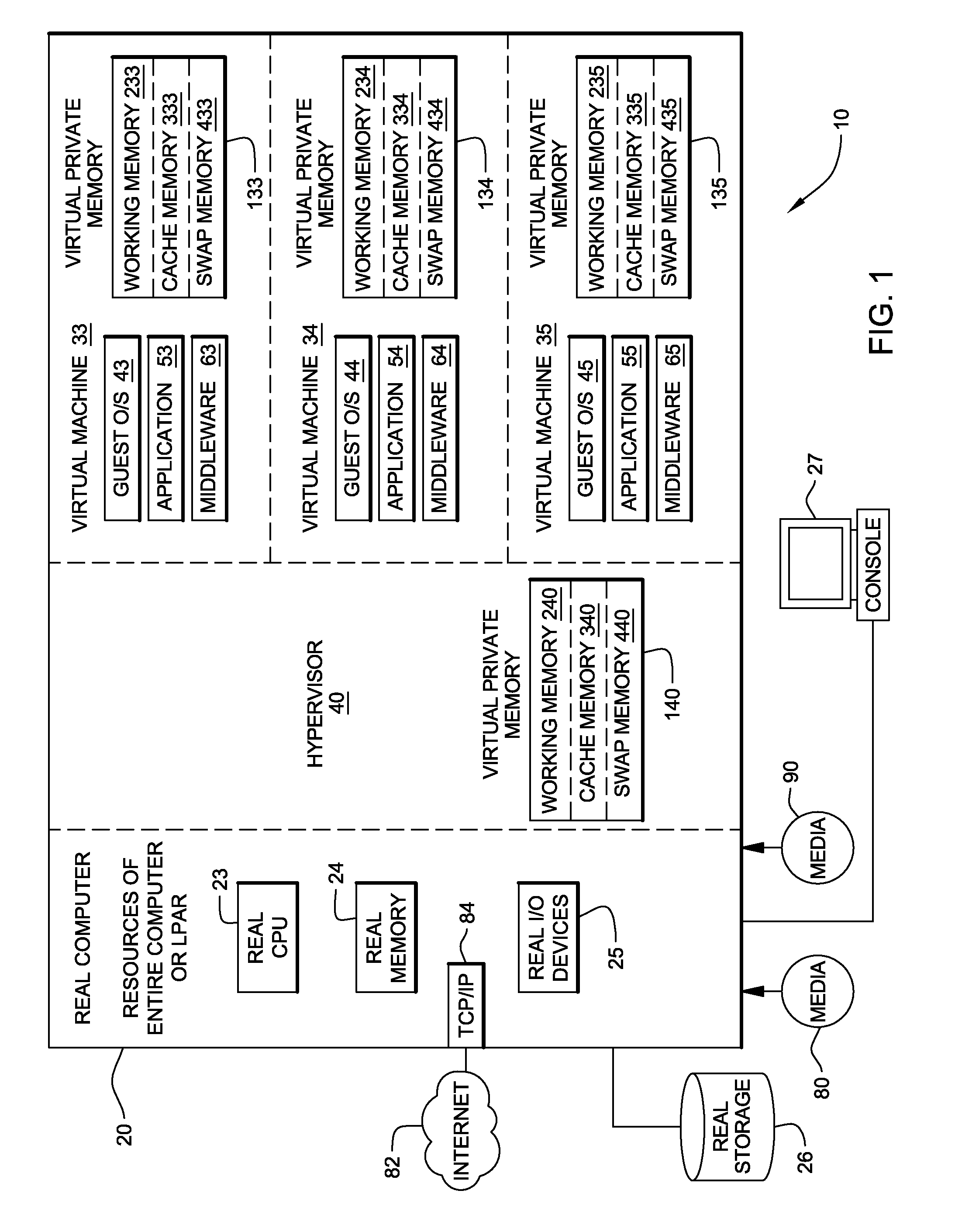

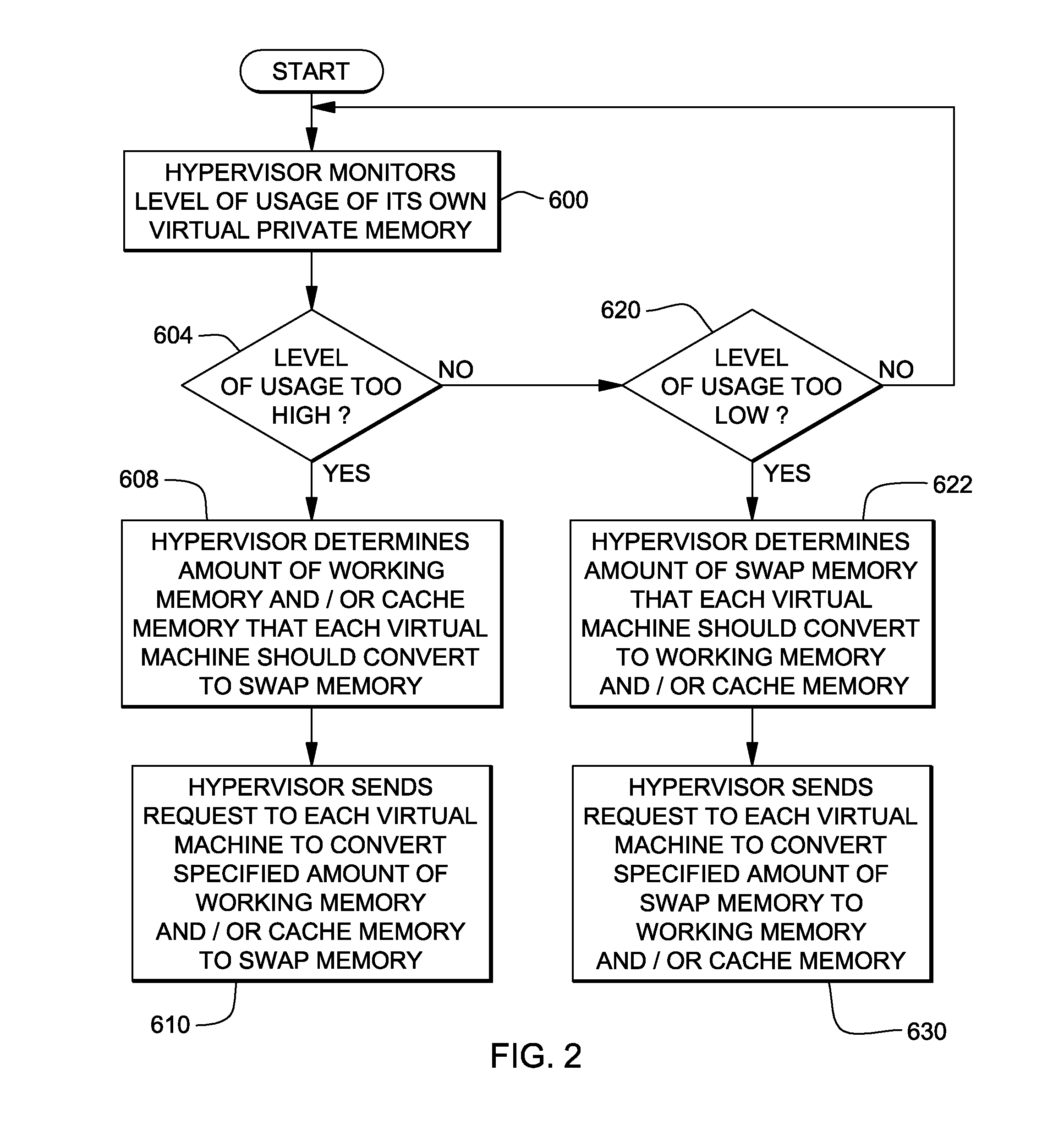

System, method and program to manage virtual memory allocated by a virtual machine control program

Management of virtual memory allocated by a virtual machine control program to a plurality of virtual machines. Each of the virtual machines has an allocation of virtual private memory divided into working memory, cache memory and swap memory. The virtual machine control program determines that it needs additional virtual memory allocation, and in response, makes respective requests to the virtual machines to convert some of their respective working memory and / or cache memory to swap memory. At another time, the virtual machine control program determines that it needs less virtual memory allocation, and in response, makes respective requests to the virtual machines to convert some of their respective swap memory to working memory and / or cache memory.

Owner:INT BUSINESS MASCH CORP
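A bookkeeping sketch of the conversion requests, with sizes in megabytes. The abstract does not specify which memory a virtual machine gives up first. Taking cache before working memory is an assumption, and `VmMemory`, `convert_to_swap` and `convert_from_swap` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class VmMemory:
    working: int  # MB
    cache: int    # MB
    swap: int     # MB


def convert_to_swap(vm: VmMemory, amount: int) -> int:
    """Move up to `amount` MB into swap, taking cache before working memory."""
    from_cache = min(amount, vm.cache)
    from_working = min(amount - from_cache, vm.working)
    vm.cache -= from_cache
    vm.working -= from_working
    vm.swap += from_cache + from_working
    return from_cache + from_working


def convert_from_swap(vm: VmMemory, amount: int) -> int:
    """Return up to `amount` MB of swap to working memory."""
    moved = min(amount, vm.swap)
    vm.swap -= moved
    vm.working += moved
    return moved


vms = [VmMemory(working=512, cache=128, swap=64), VmMemory(working=256, cache=32, swap=0)]
# The control program needs more virtual memory: ask every VM for 100 MB of swap.
granted = [convert_to_swap(vm, 100) for vm in vms]
```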

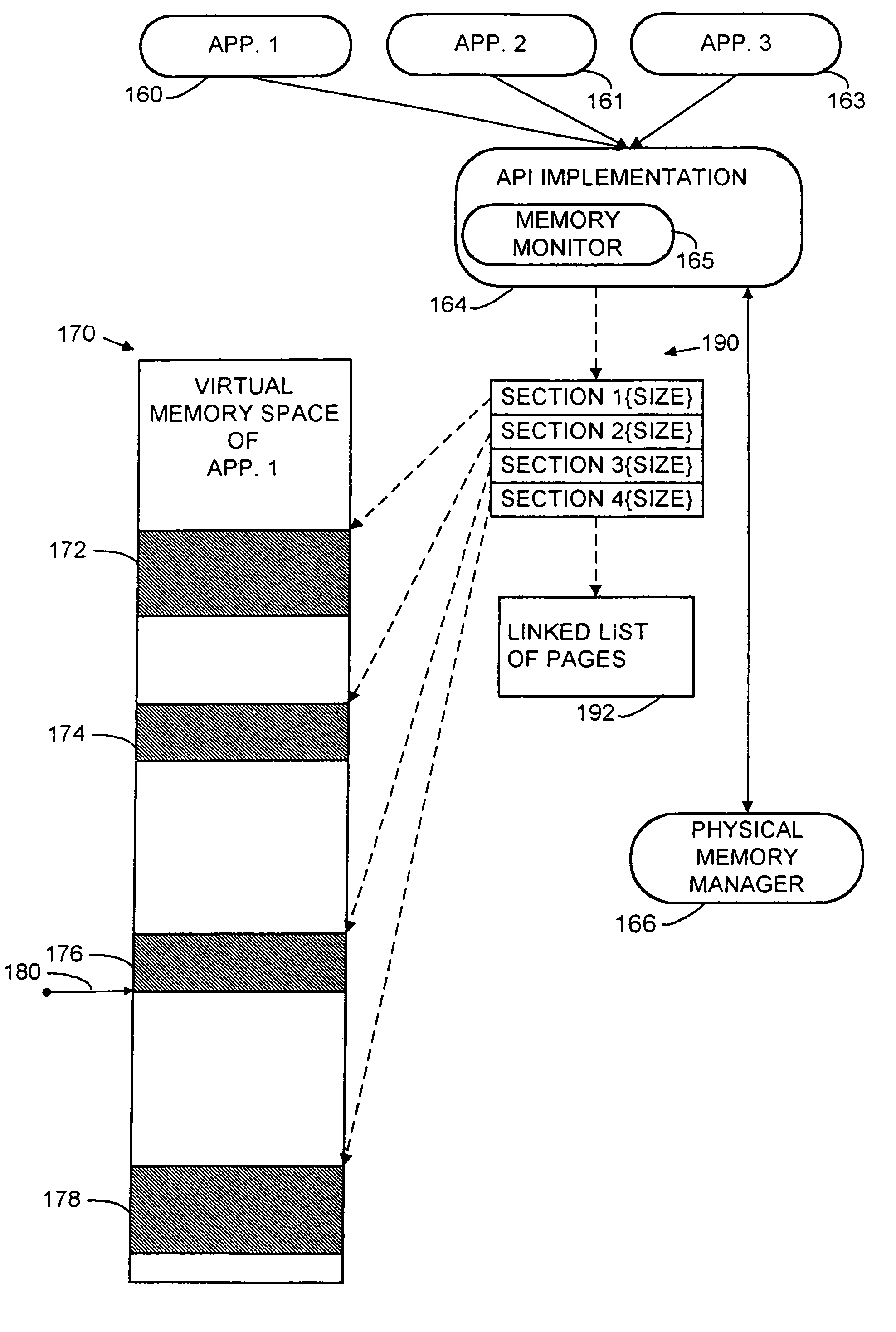

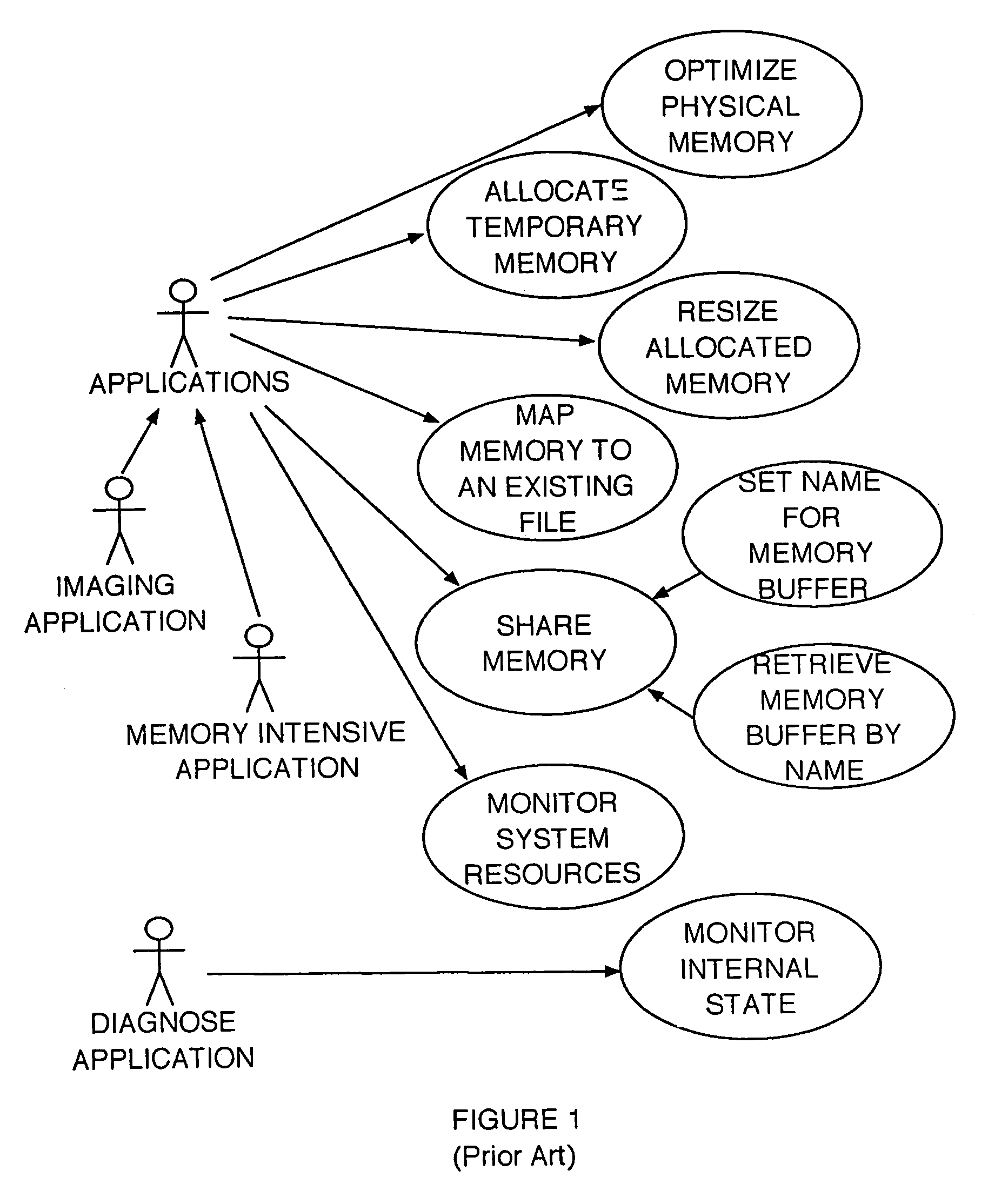

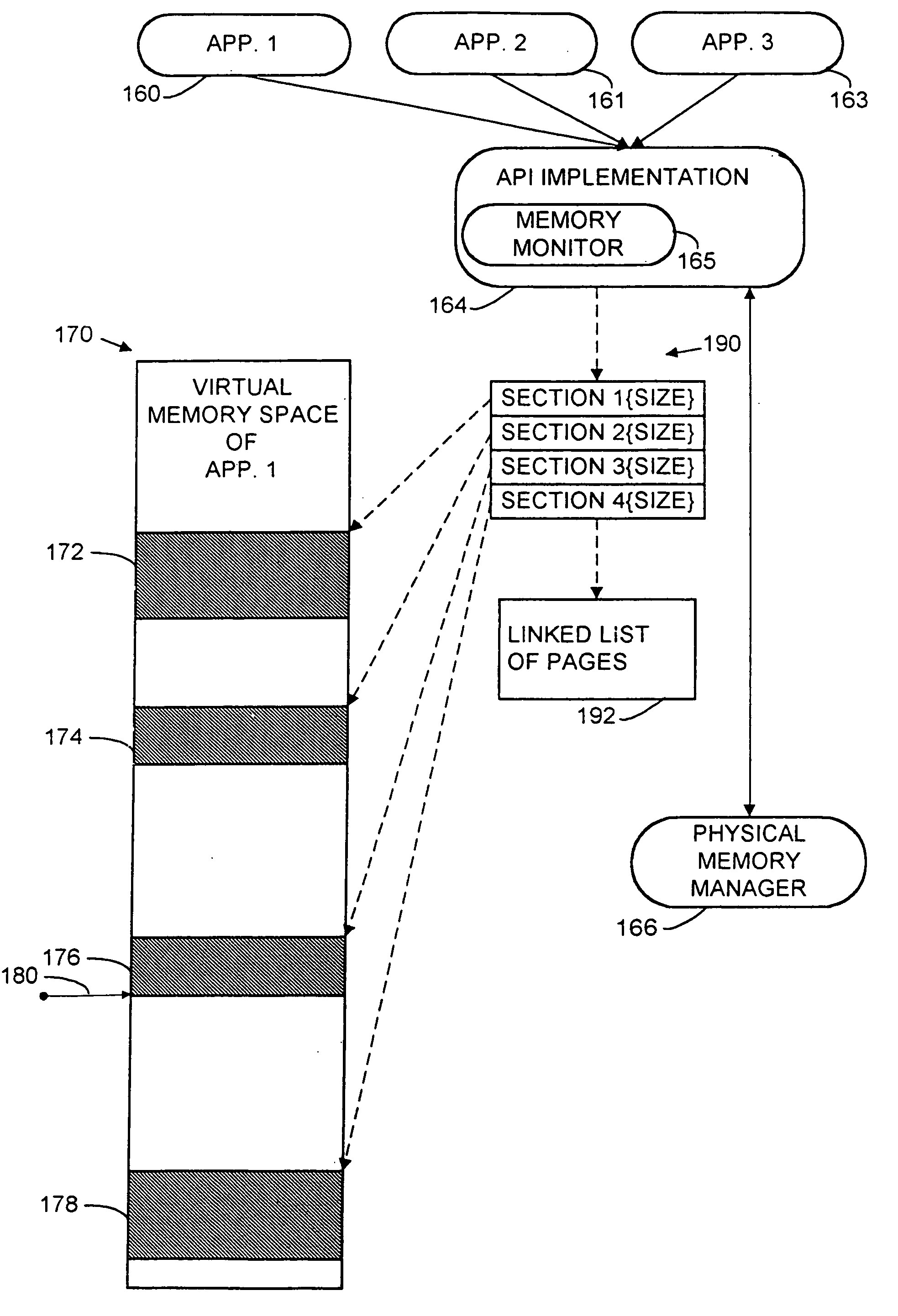

Application programming interface enabling application programs to group code and data to control allocation of physical memory in a virtual memory system

Inactive | US6983467B2 | Improve performance; Prevents page faults; Program initiation/switching; Memory addressing/allocation/relocation; Operational system; Application programming interface

An application programming interface (API) enables application programs in a multitasking operating environment to classify portions of their code and data in a group that the operating system loads into physical memory all at one time. Designed for operating systems that implement virtual memory, this API enables memory-intensive application programs to avoid performance degradation due to swapping of units of memory back and forth between the hard drive and physical memory. Instead of incurring the latency of a page fault whenever the application attempts to access code or data in the group that is not located in physical memory, the API makes sure that all of the code or data in a group is loaded into physical memory at one time. This increases the latency of the initial load operation, but reduces performance degradation for subsequent memory accesses to code or data in the group.

Owner:MICROSOFT TECH LICENSING LLC

Memory allocation improvements

Active | US20140359248A1 | Memory addressing/allocation/relocation; Program control; Virtual memory; Parallel computing

In one embodiment, a memory allocator of a memory manager can service memory allocation requests within a specific size-range from a section of pre-reserved virtual memory. The pre-reserved virtual memory allows allocation requests within a specific size range to be allocated in the pre-reserved region, such that the virtual memory address of a memory allocation serviced from the pre-reserved region can indicate elements of metadata associated with the allocations that would otherwise contribute to overhead for the allocation.

Owner:APPLE INC
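A sketch of the idea that an address inside the pre-reserved region encodes its own size class, so `free` and size queries need no per-allocation header. The base address, per-class span and size classes are hypothetical values, and the allocator only hands out addresses; it does not commit real memory.

```python
REGION_BASE = 0x1_0000_0000        # hypothetical start of the pre-reserved virtual range
CLASS_SPAN = 1 << 20               # hypothetical virtual span reserved per size class
SIZE_CLASSES = (16, 32, 64, 128, 256)


class ToySizeClassAllocator:
    def __init__(self) -> None:
        self.bump = [0] * len(SIZE_CLASSES)            # next unused offset per class
        self.free_lists: list[list[int]] = [[] for _ in SIZE_CLASSES]

    @staticmethod
    def class_index(addr: int) -> int:
        # The address alone identifies the size class: no per-block header needed.
        return (addr - REGION_BASE) // CLASS_SPAN

    def size_of(self, addr: int) -> int:
        return SIZE_CLASSES[self.class_index(addr)]

    def malloc(self, size: int) -> int:
        idx = next((i for i, c in enumerate(SIZE_CLASSES) if size <= c), None)
        if idx is None:
            raise ValueError("size outside the range served by the reserved region")
        if self.free_lists[idx]:
            return self.free_lists[idx].pop()
        if self.bump[idx] + SIZE_CLASSES[idx] > CLASS_SPAN:
            raise MemoryError("size-class span exhausted")
        addr = REGION_BASE + idx * CLASS_SPAN + self.bump[idx]
        self.bump[idx] += SIZE_CLASSES[idx]
        return addr

    def free(self, addr: int) -> None:
        self.free_lists[self.class_index(addr)].append(addr)


alloc = ToySizeClassAllocator()
p = alloc.malloc(20)
q = alloc.malloc(20)
alloc.free(p)
r = alloc.malloc(30)
```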

On-demand shareability conversion in a heterogeneous shared virtual memory

Inactive | CN106575264A | Memory architecture accessing/allocation; Memory systems; Parallel computing; Page table

The aspects include systems and methods of managing virtual memory page shareability. A processor or memory management unit may set in a page table an indication that a virtual memory page is not shareable with an outer domain processor. The processor or memory management unit may monitor for when the outer domain processor attempts or has attempted to access the virtual memory page. In response to the outer domain processor attempting to access the virtual memory page, the processor may perform a virtual memory page operation on the virtual memory page.

Owner:QUALCOMM INC
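A toy model of on-demand conversion. It assumes the "virtual memory page operation" consists of cleaning dirty inner-domain cache lines and then marking the page shareable. The actual operation in the patent may differ, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PageEntry:
    shareable: bool = False
    dirty_in_inner_cache: bool = False


class ToyShareabilityManager:
    def __init__(self) -> None:
        self.page_table: dict[int, PageEntry] = {}
        self.operations: list[tuple[str, int]] = []  # log of page operations performed

    def map_private(self, vpn: int) -> None:
        self.page_table[vpn] = PageEntry(shareable=False)

    def on_outer_access(self, vpn: int) -> PageEntry:
        entry = self.page_table[vpn]
        if not entry.shareable:
            # Conversion happens on demand, only when the outer domain touches the page.
            if entry.dirty_in_inner_cache:
                self.operations.append(("clean", vpn))   # write back inner-domain lines
                entry.dirty_in_inner_cache = False
            entry.shareable = True
            self.operations.append(("make_shareable", vpn))
        return entry


mgr = ToyShareabilityManager()
mgr.map_private(4)
mgr.page_table[4].dirty_in_inner_cache = True
mgr.on_outer_access(4)
mgr.on_outer_access(4)  # already shareable: no further operations
```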

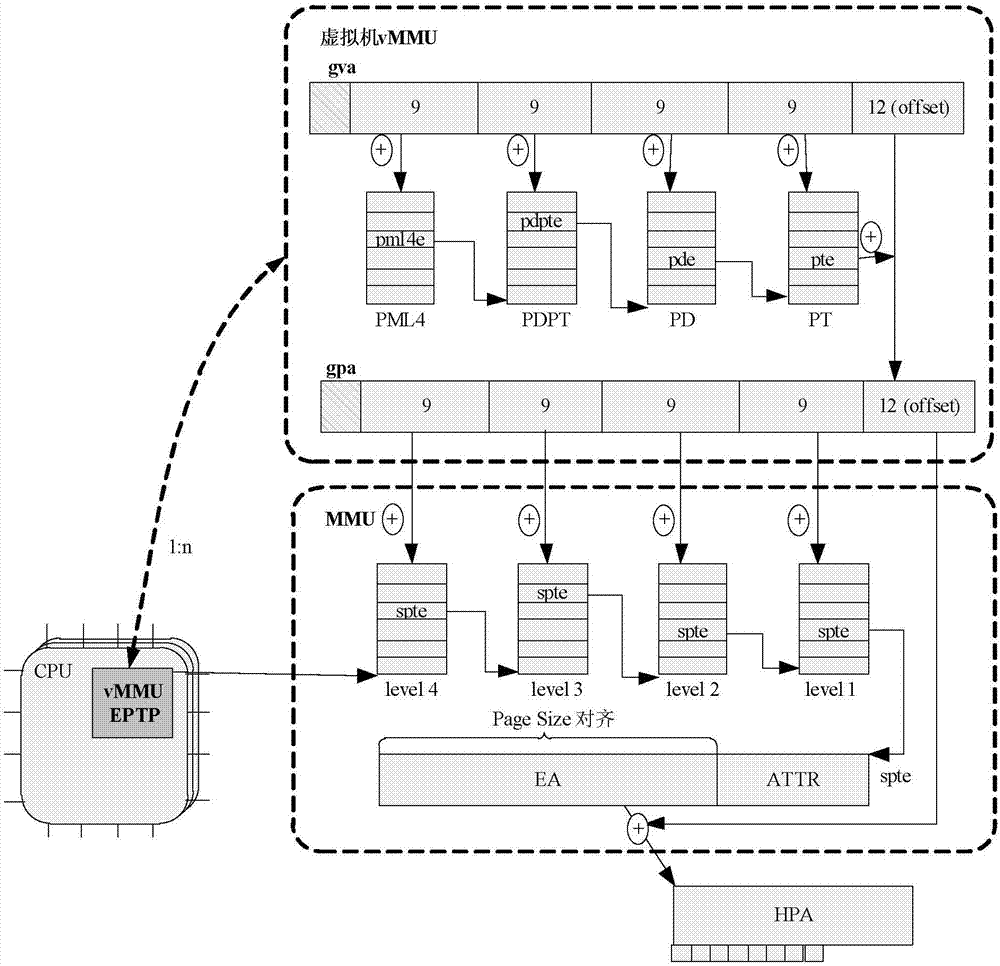

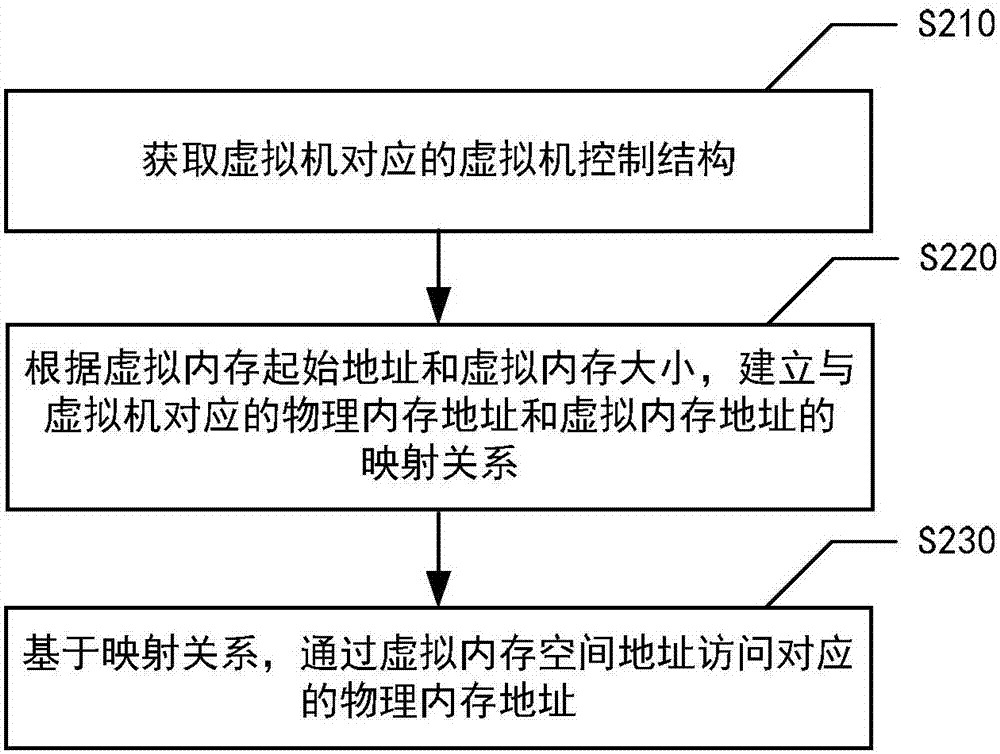

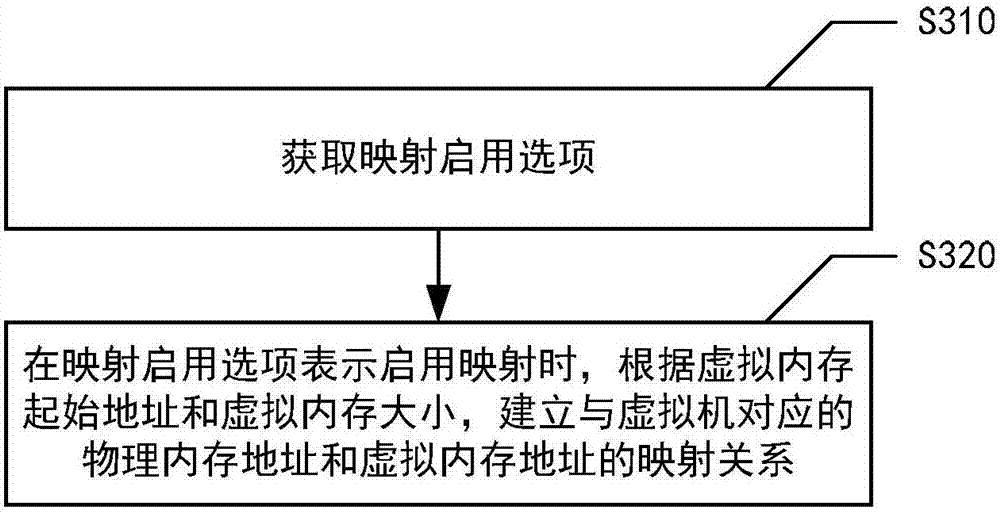

Virtual machine memory access method and system and electronic equipment

Active | CN107341115A | Memory addressing/allocation/relocation; Software simulation/interpretation/emulation; Memory address; Virtual memory

The disclosure provides a virtual machine memory access method comprising the following steps: acquiring a virtual machine control structure corresponding to a virtual machine, the control structure including a virtual memory starting address and a virtual memory size; establishing, according to the virtual memory starting address and the virtual memory size, a mapping between physical memory addresses and the virtual memory addresses corresponding to the virtual machine; and accessing the corresponding physical memory address through a virtual memory address based on that mapping. The disclosure also provides electronic equipment on which the virtual machine is deployed, and a virtual machine memory access system.

Owner:LENOVO (BEIJING) CO LTD

Supporting virtual input/output (I/O) server (VIOS) active memory sharing in a cluster environment

Inactive | US8458413B2 | Promote migration; Memory architecture accessing/allocation; Digital data processing details; Virtual memory; Active memory

A method, system, and computer program product provide a shared virtual memory space via a cluster-aware virtual input/output (I/O) server (VIOS). The VIOS receives a paging file request from a first LPAR and thin-provisions a logical unit (LU) within the virtual memory space as a shared paging file of the same storage amount as the minimum required capacity. The VIOS also autonomously maintains a logical redundancy LU (redundant LU) as a real-time copy of the provisioned/allocated LU; the redundant LU is a dynamic copy that is autonomously updated in response to any change within the allocated LU. When a second VIOS attempts to read an LU currently utilized by a first VIOS, the read request is autonomously redirected to the redundant LU. The redundant LU can be utilized to facilitate migration of a client LPAR to a different computing electronic complex (CEC).

Owner:IBM CORP

Immediate virtual memory

Active | US7313668B2 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Operational system

Various embodiments of the present invention provide for immediate allocation of virtual memory on behalf of processes running within a computer system. One or more bit flags within each translation indicate whether or not a corresponding virtual memory page is immediate. READ access to immediate virtual memory is satisfied by hardware-supplied or software-supplied values. WRITE access to immediate virtual memory raises an exception to allow an operating system to allocate physical memory for storing values written to the immediate virtual memory by the WRITE access.

Owner:GOOGLE LLC

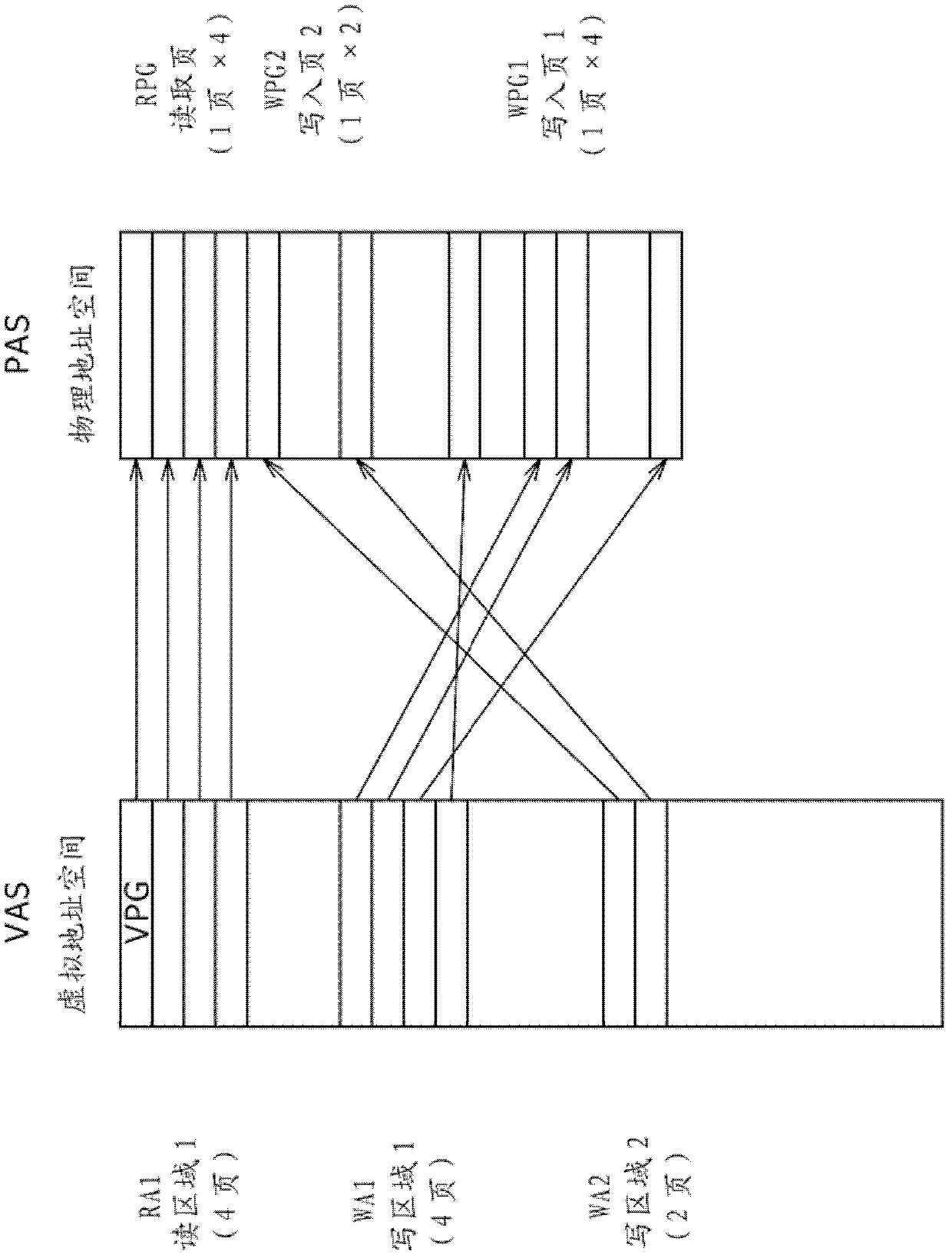

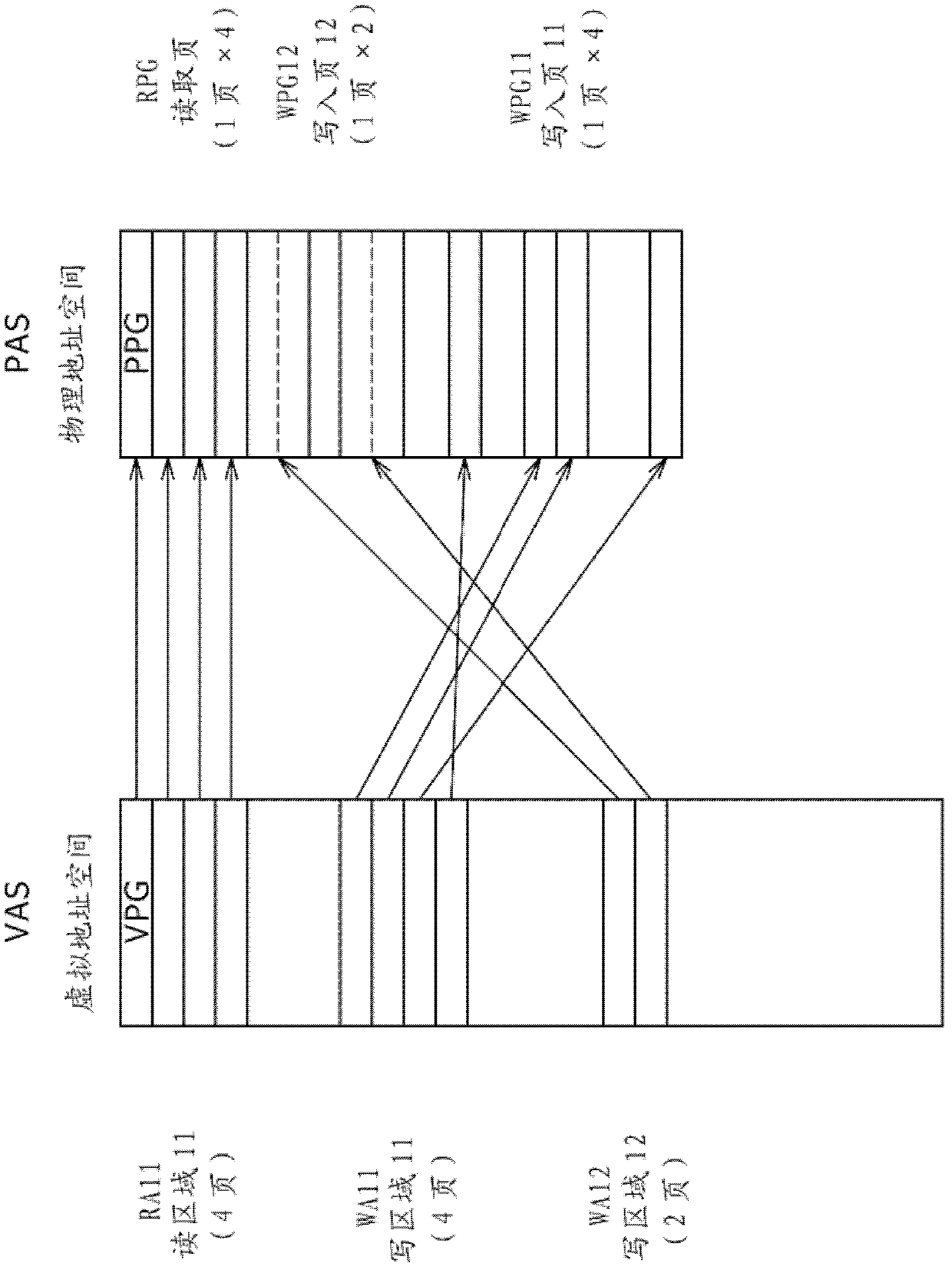

Virtual memory system, virtual memory controlling method, and program

Inactive | CN102707899A | Memory architecture accessing/allocation; Input/output to record carriers; Virtual memory; Shared virtual memory

Disclosed herein is a virtual memory system including a nonvolatile memory allowing random access, having an upper limit to a number of times of rewriting, and including a physical address space accessed via a virtual address; and a virtual memory control section configured to manage the physical address space of the nonvolatile memory in page units, map the physical address space and a virtual address space, and convert an accessed virtual address into a physical address; wherein the virtual memory control section is configured to expand a physical memory capacity allocated to a virtual page in which rewriting occurs.

Owner:SONY SEMICON SOLUTIONS CORP

Advanced memory management architecture for large data volumes

An efficient memory management method for handling large data volumes, comprising a memory management interface between a plurality of applications and a physical memory, determining a priority list of buffers accessed by the plurality of applications, providing efficient disk paging based on the priority list, ensuring sufficient physical memory is available, sharing managed data buffers among a plurality of applications, mapping and unmapping data buffers in virtual memory efficiently to overcome the limits of virtual address space.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

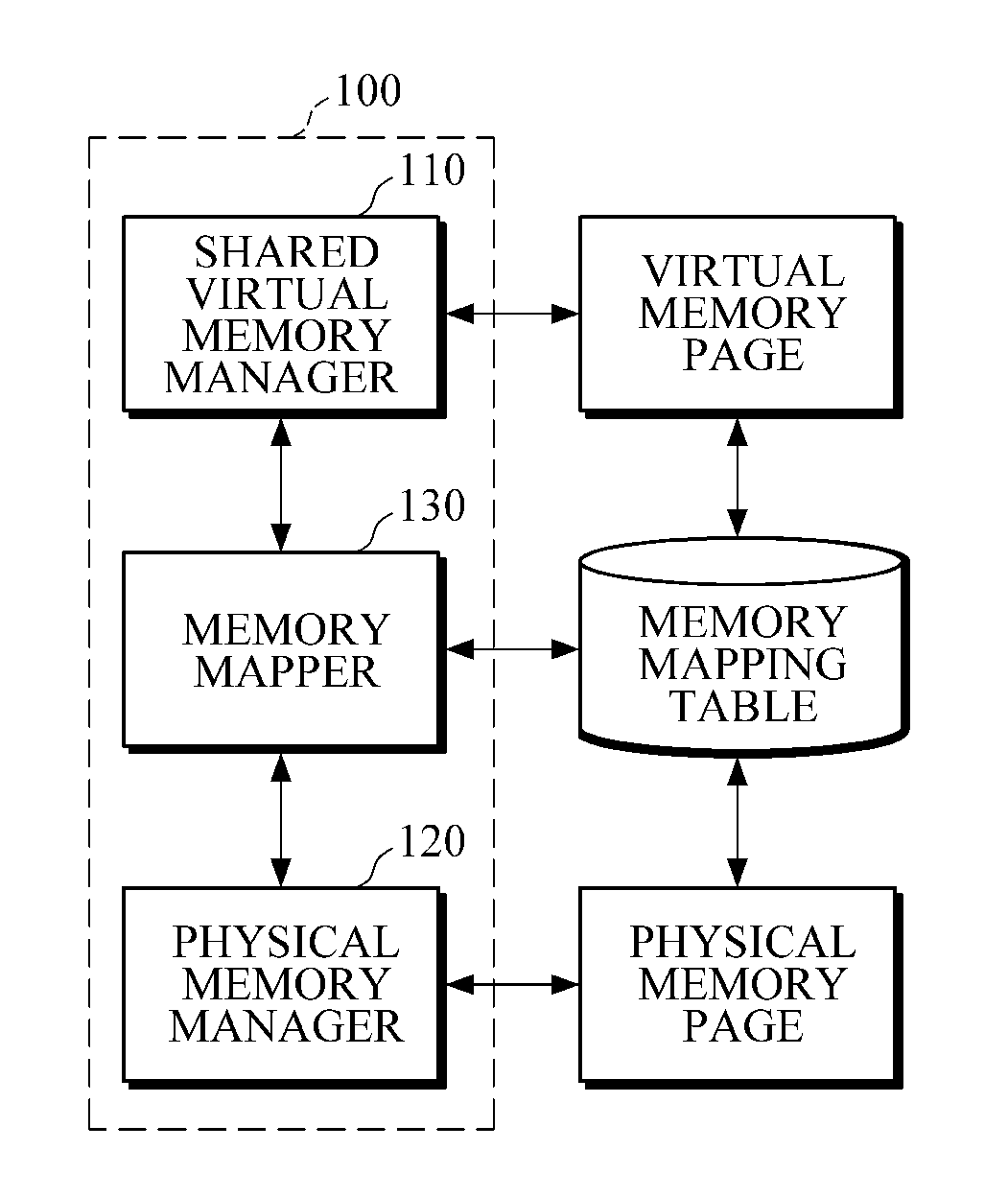

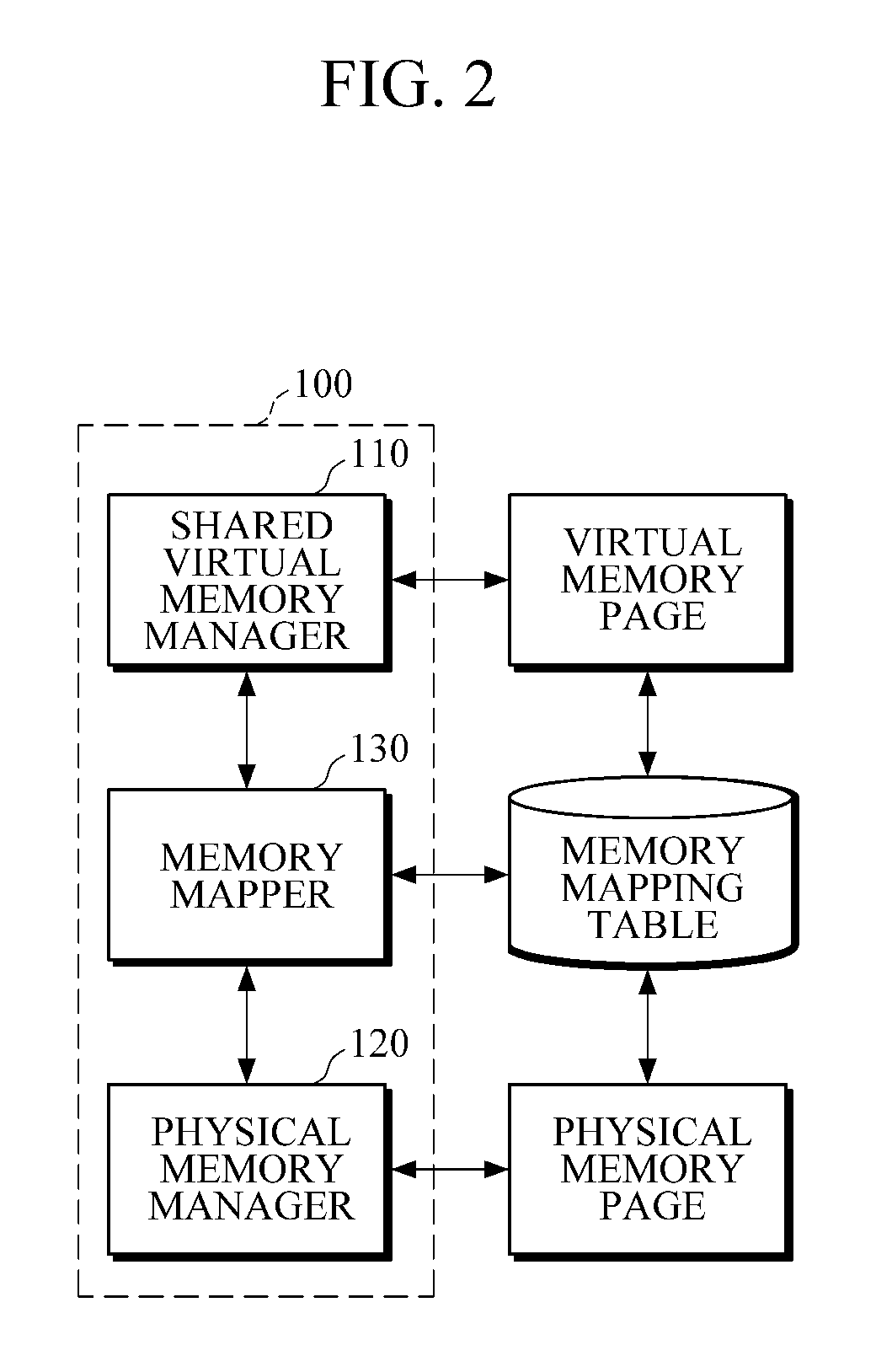

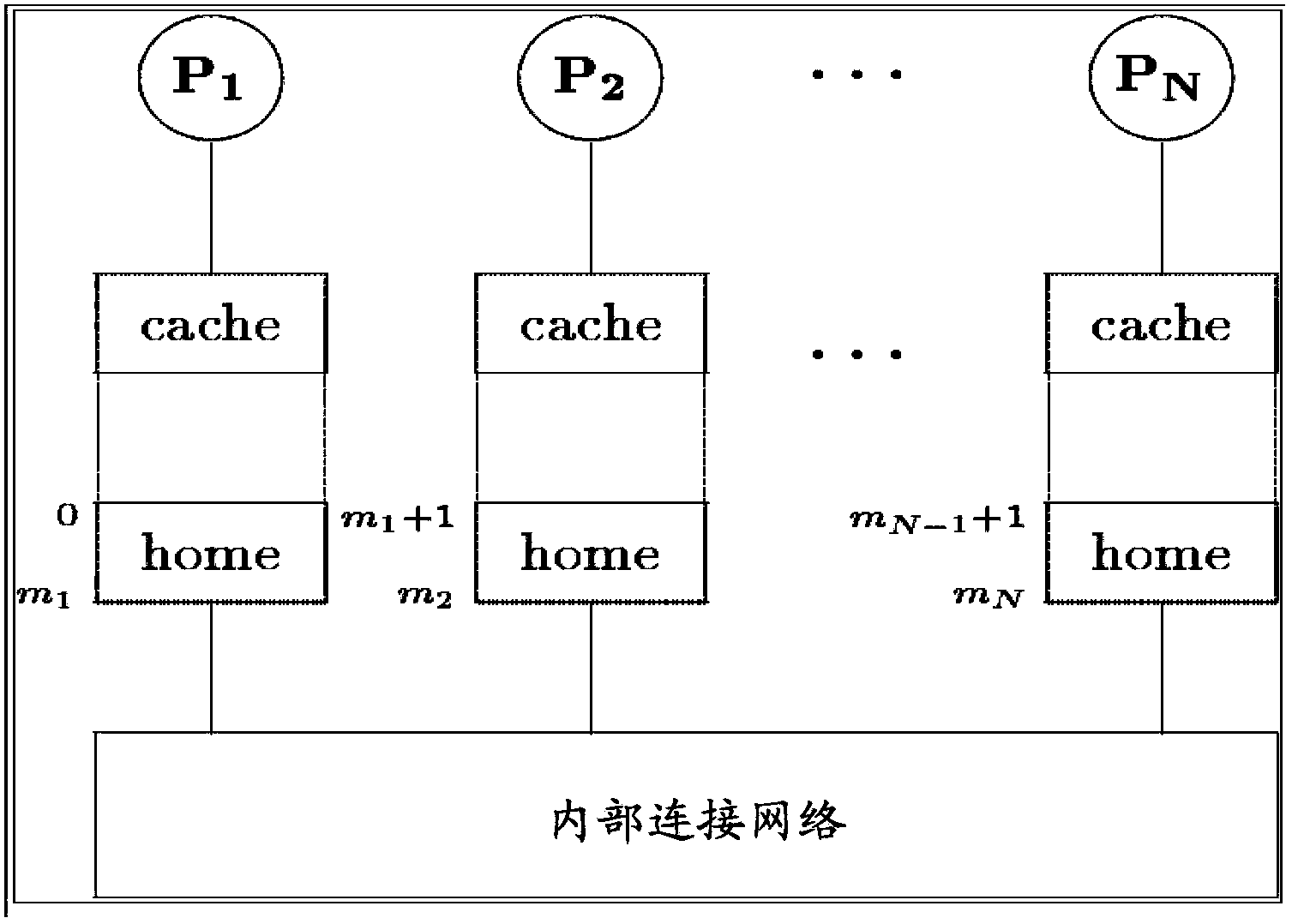

Shared virtual memory management apparatus for providing cache-coherence

Active | US20140040563A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Data coherence

A shared virtual memory management apparatus for ensuring cache coherence. When two or more cores request write permission for the same virtual memory page, the apparatus allocates a physical memory page in which the cores modify data. The changed data are then written back to the original physical memory page, which makes it possible to achieve data coherence in a multi-core hardware environment that does not provide cache coherence.

Owner:SEOUL NAT UNIV R&DB FOUND
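A sketch of the write-then-merge scheme, modeled loosely on the twin-and-diff approach used in software distributed shared memory. Each writing core gets its own copy of the page, and at synchronization only bytes that differ from the original are written back. If two cores change the same byte, the higher core id wins here. That tie-break is an assumption, not something the abstract specifies.

```python
class ToySharedPage:
    def __init__(self, data: bytes) -> None:
        self.original = bytearray(data)             # the original physical page
        self.copies: dict[int, bytearray] = {}      # core id -> separately allocated page

    def write(self, core: int, offset: int, value: int) -> None:
        # A core's first write allocates its own physical copy of the page.
        page = self.copies.setdefault(core, bytearray(self.original))
        page[offset] = value

    def read(self, core: int, offset: int) -> int:
        return self.copies.get(core, self.original)[offset]

    def synchronize(self) -> None:
        """Merge each core's changed bytes back into the original page."""
        baseline = bytes(self.original)             # what every copy started from
        for _, page in sorted(self.copies.items()):
            for i, (old, new) in enumerate(zip(baseline, page)):
                if old != new:
                    self.original[i] = new
        self.copies.clear()


page = ToySharedPage(bytes(4))
page.write(core=0, offset=0, value=1)
page.write(core=1, offset=3, value=2)
core1_sees_before_sync = page.read(core=1, offset=0)
page.synchronize()
```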

Application programming interface enabling application programs to group code and data to control allocation of physical memory in a virtual memory system

Inactive | US20050034136A1 | Improve performance; Prevents page faults; Program initiation/switching; Memory addressing/allocation/relocation; Operational system; Application programming interface

An application programming interface (API) enables application programs in a multitasking operating environment to classify portions of their code and data in a group that the operating system loads into physical memory all at one time. Designed for operating systems that implement virtual memory, this API enables memory-intensive application programs to avoid performance degradation due to swapping of units of memory back and forth between the hard drive and physical memory. Instead of incurring the latency of a page fault whenever the application attempts to access code or data in the group that is not located in physical memory, the API makes sure that all of the code or data in a group is loaded into physical memory at one time. This increases the latency of the initial load operation, but reduces performance degradation for subsequent memory accesses to code or data in the group.

Owner:MICROSOFT TECH LICENSING LLC

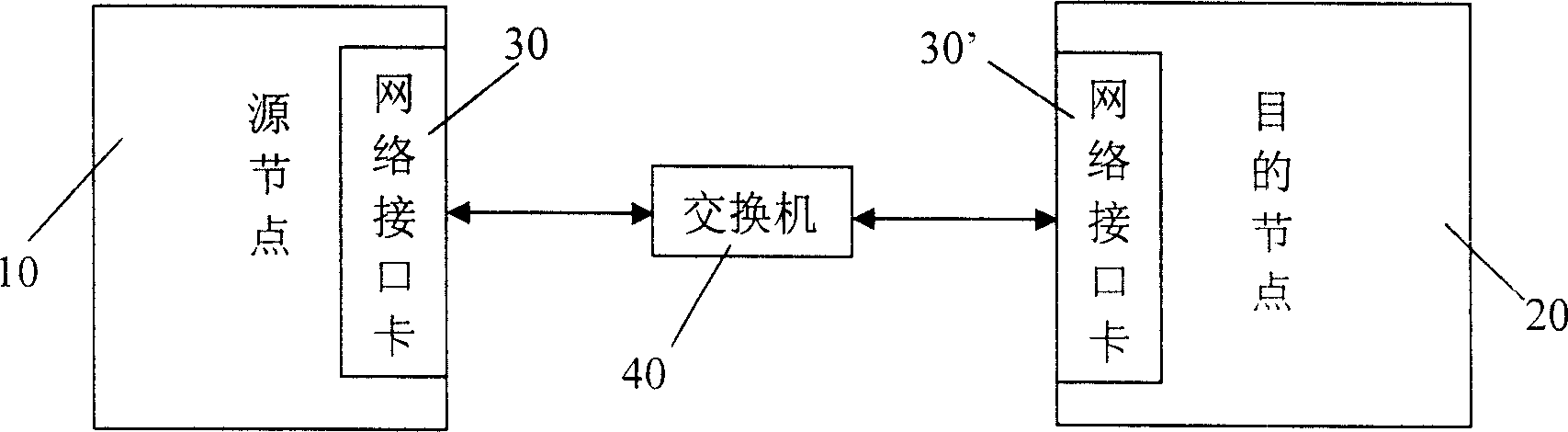

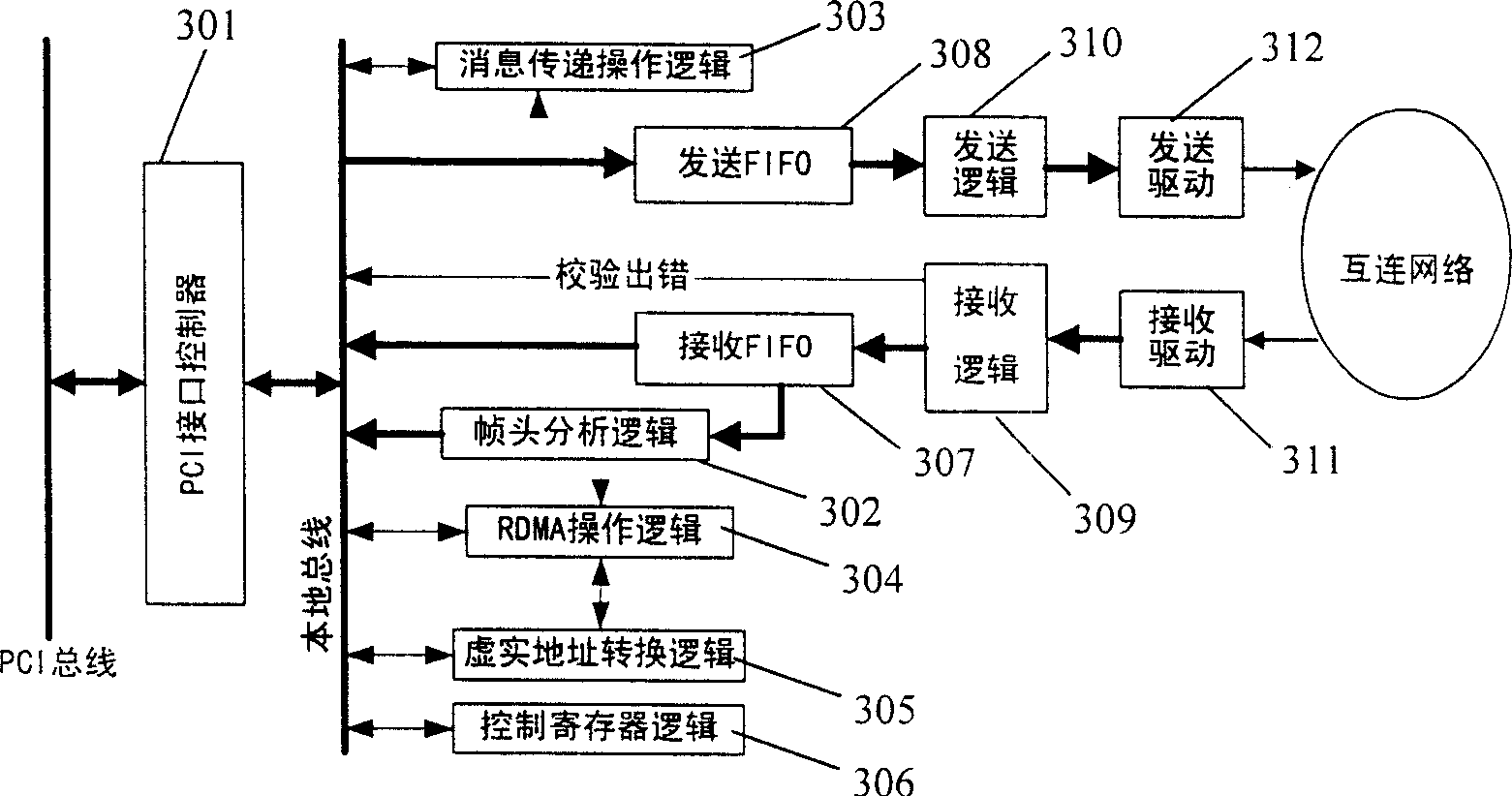

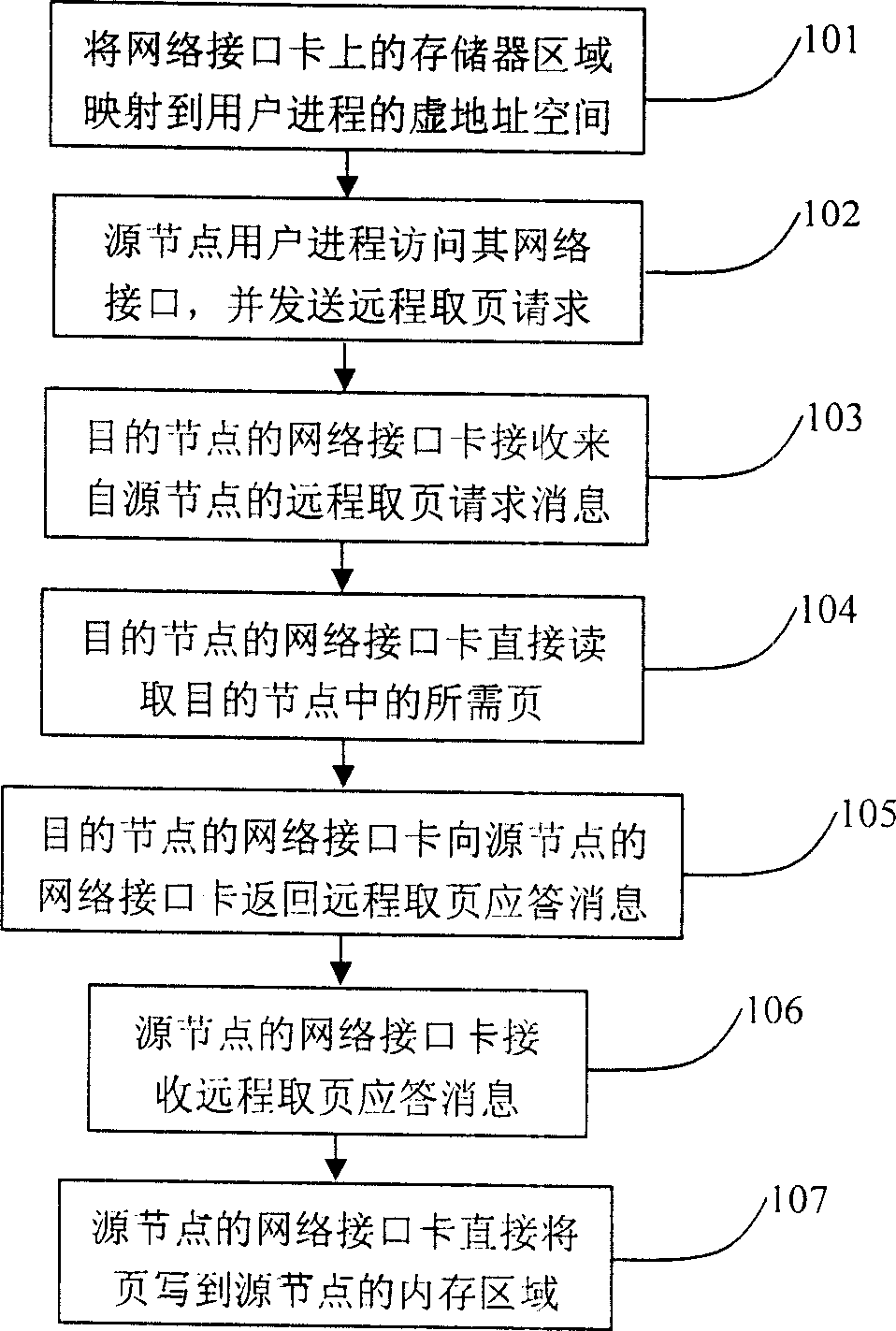

Remote page access method for use in shared virtual memory system and network interface card

Active | CN1705269A | Implement the page fetch operation; Reduce overhead; Memory addressing/allocation/relocation; Data switching networks; Access network; Virtual memory

A remote paging method and network interface card (NIC) for a shared virtual memory system. A memory area in the NIC is mapped into the virtual address space of a user process, so the user process on the source node accesses the NIC directly. It generates a remote paging request message and sends it to the NIC of the destination node, which accesses the required page directly and returns a response message to the source node's NIC; that NIC then writes the page directly into the memory area of the source node. The NIC is extended with frame-header parsing logic, RDMA operation logic, and virtual-to-real address translation. Through this bidirectional cooperation between the user process and the NIC, the invention performs remote paging without interrupting the CPU's current work.

Owner:LOONGSON TECH CORP

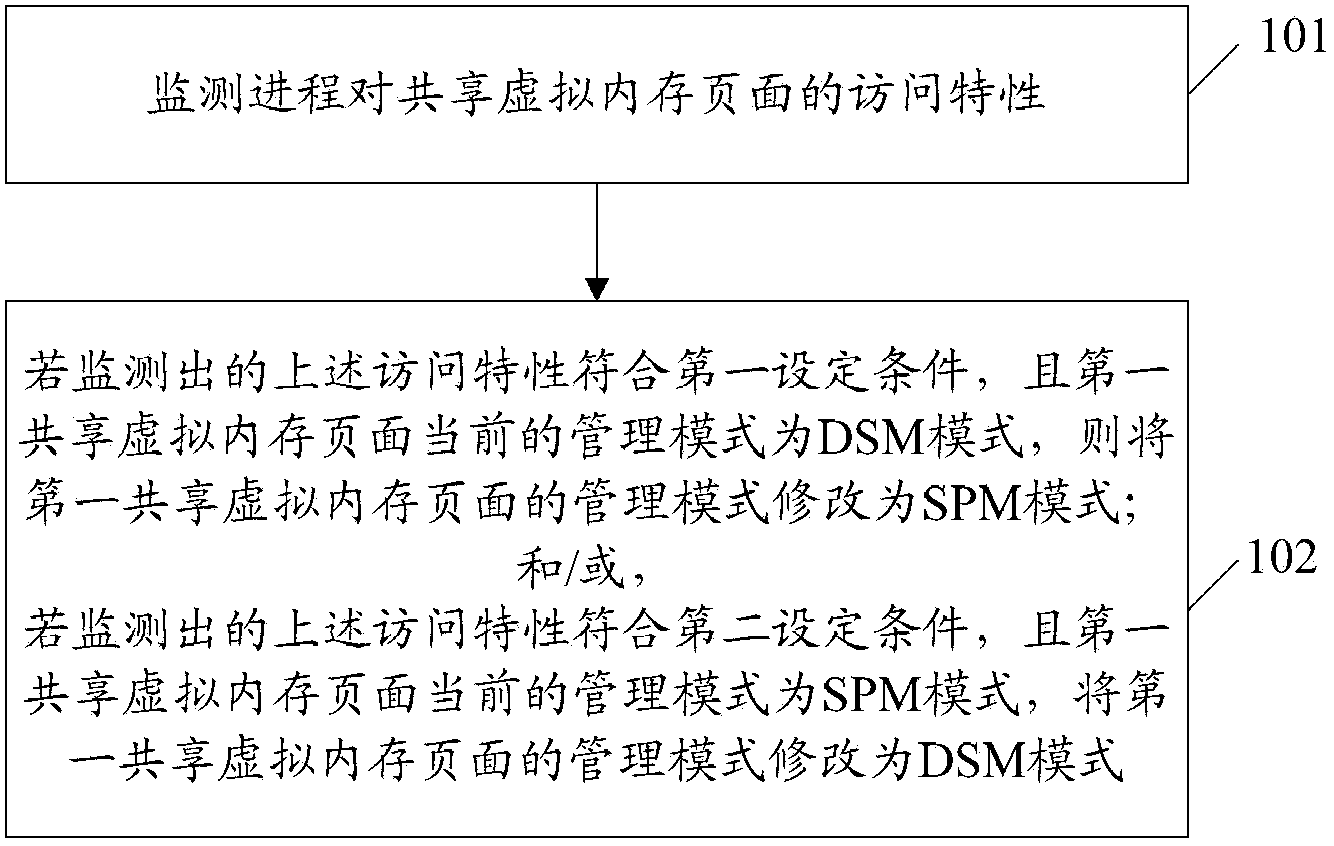

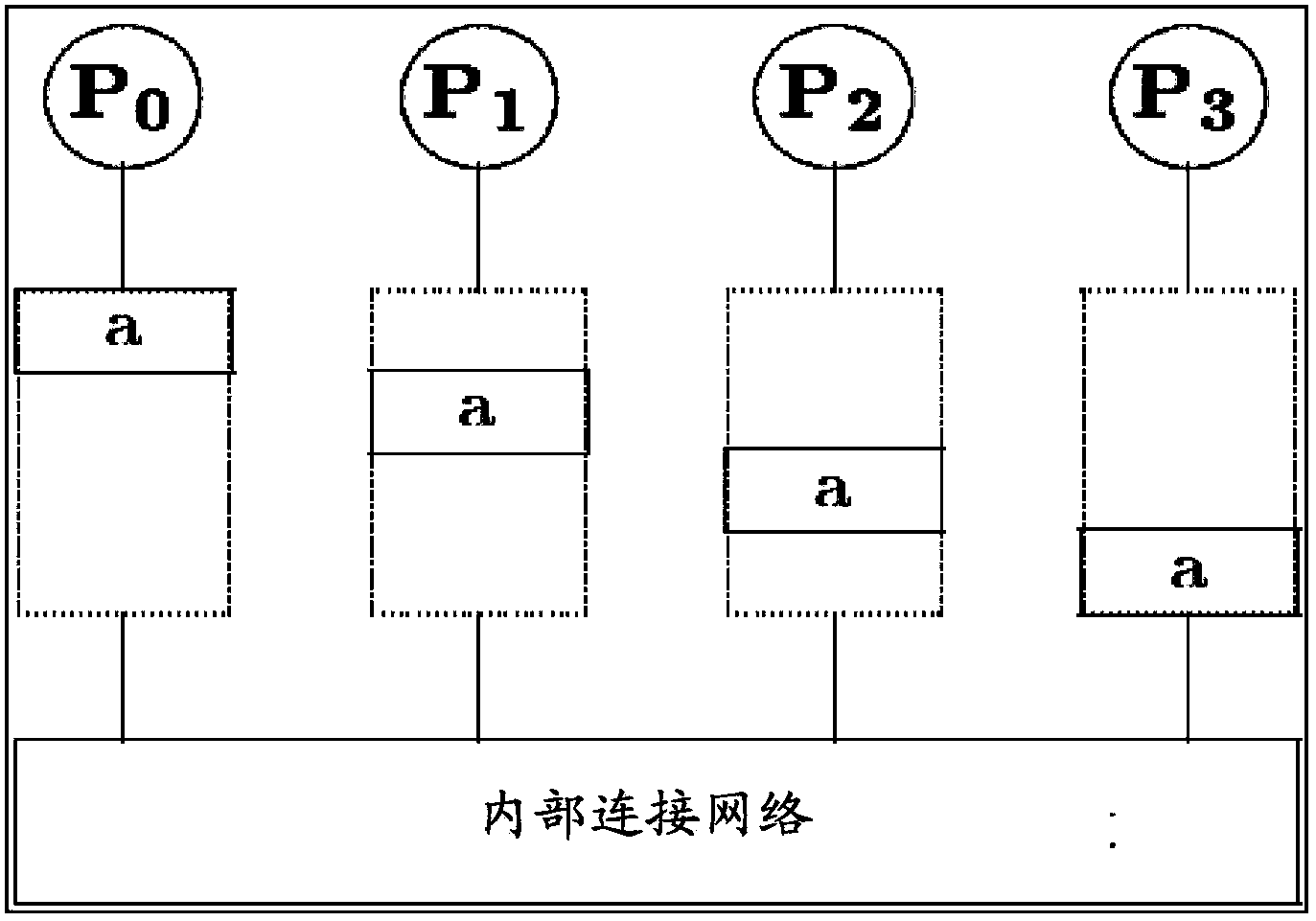

Method and associated equipment for determining management mode of shared virtual memory page

Active | CN103902459A | Improve access performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Current mode

Disclosed are a method and a related device for determining the mode in which a shared virtual memory page is managed. The method comprises: monitoring a process's access characteristic for the shared virtual memory page; and, if the monitored access characteristic satisfies a first preset condition and the page is currently managed in distributed shared memory mode, switching the page to shared physical memory mode. The technical solutions provided by the embodiments of the present invention help improve the access performance of the shared virtual memory.

Owner:HUAWEI TECH CO LTD
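A sketch of the mode decision for one page. The abstract leaves the "first preset condition" open. Here it is assumed to be an access count per monitoring window reaching a hypothetical threshold, and `ToyPageModeMonitor` is an illustrative name.

```python
from enum import Enum


class Mode(Enum):
    DISTRIBUTED_SHARED = "distributed shared memory"
    SHARED_PHYSICAL = "shared physical memory"


class ToyPageModeMonitor:
    """Per-page monitor; the switching condition is a hypothetical access-count threshold."""

    def __init__(self, threshold: int) -> None:
        self.mode = Mode.DISTRIBUTED_SHARED
        self.threshold = threshold
        self.accesses = 0

    def record_access(self) -> None:
        self.accesses += 1

    def end_window(self) -> Mode:
        if self.mode is Mode.DISTRIBUTED_SHARED and self.accesses >= self.threshold:
            self.mode = Mode.SHARED_PHYSICAL   # heavily accessed: keep one physical copy
        self.accesses = 0
        return self.mode


monitor = ToyPageModeMonitor(threshold=100)
for _ in range(40):
    monitor.record_access()
quiet = monitor.end_window()
for _ in range(150):
    monitor.record_access()
busy = monitor.end_window()
```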

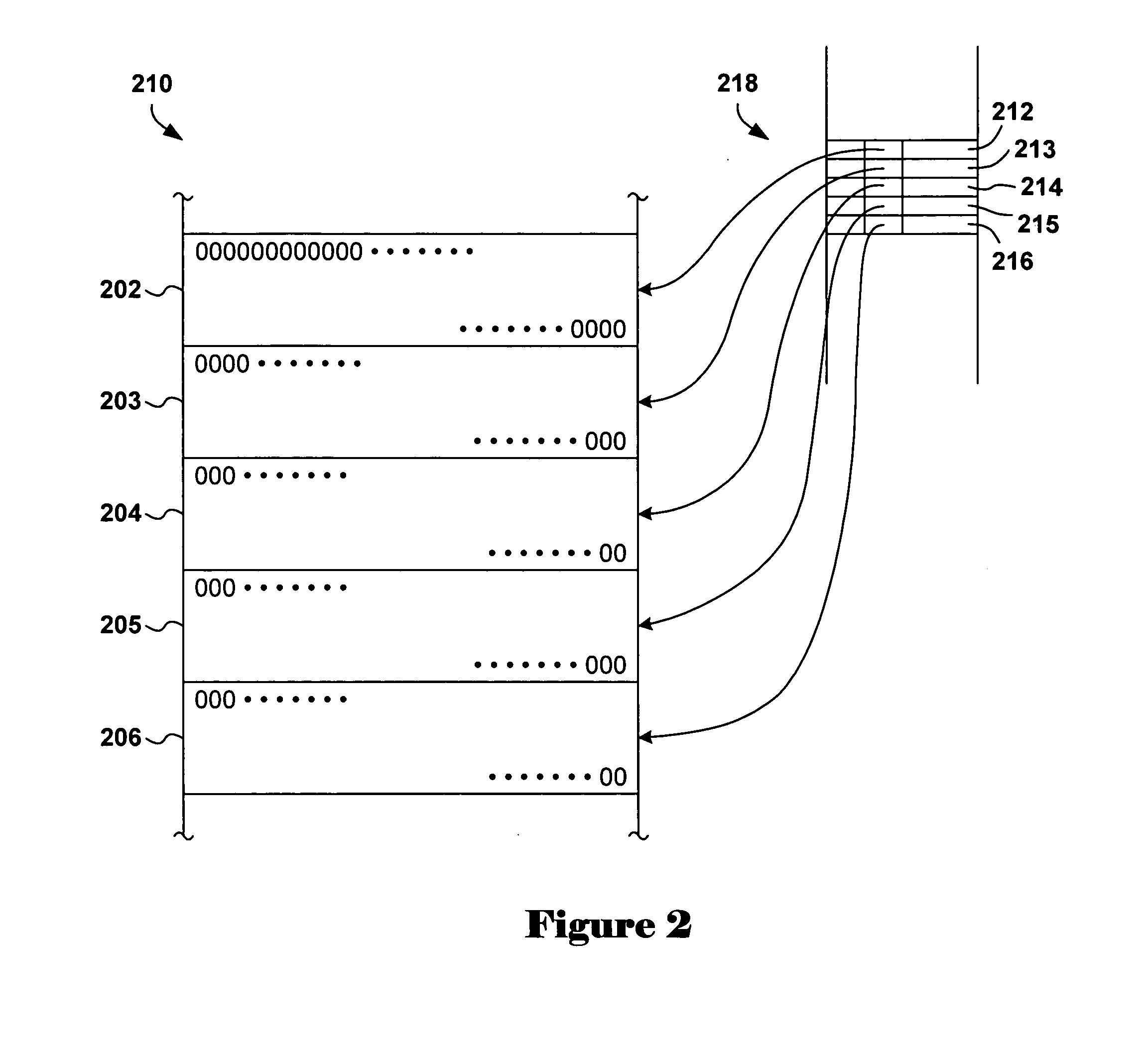

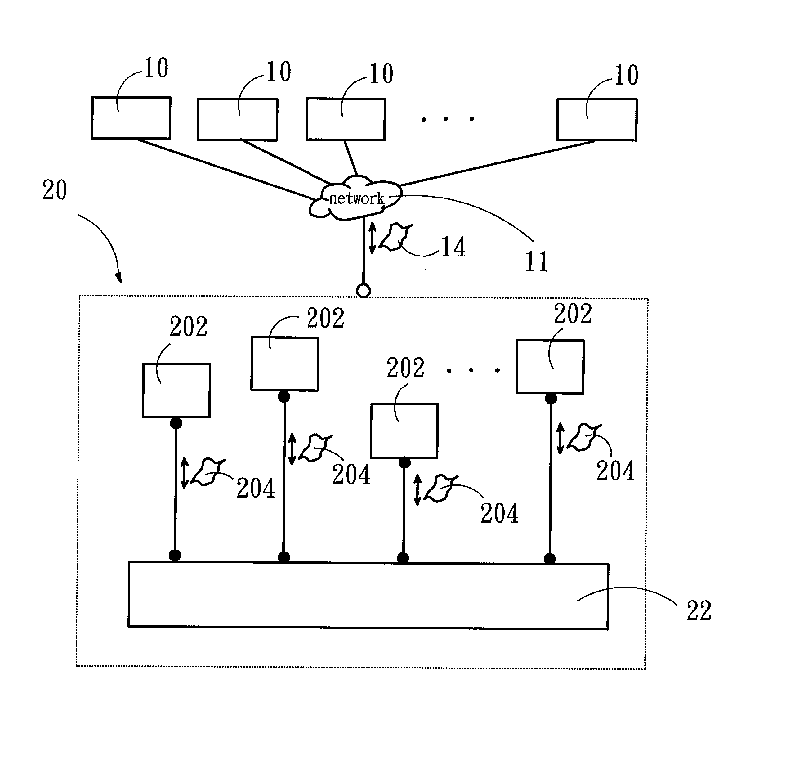

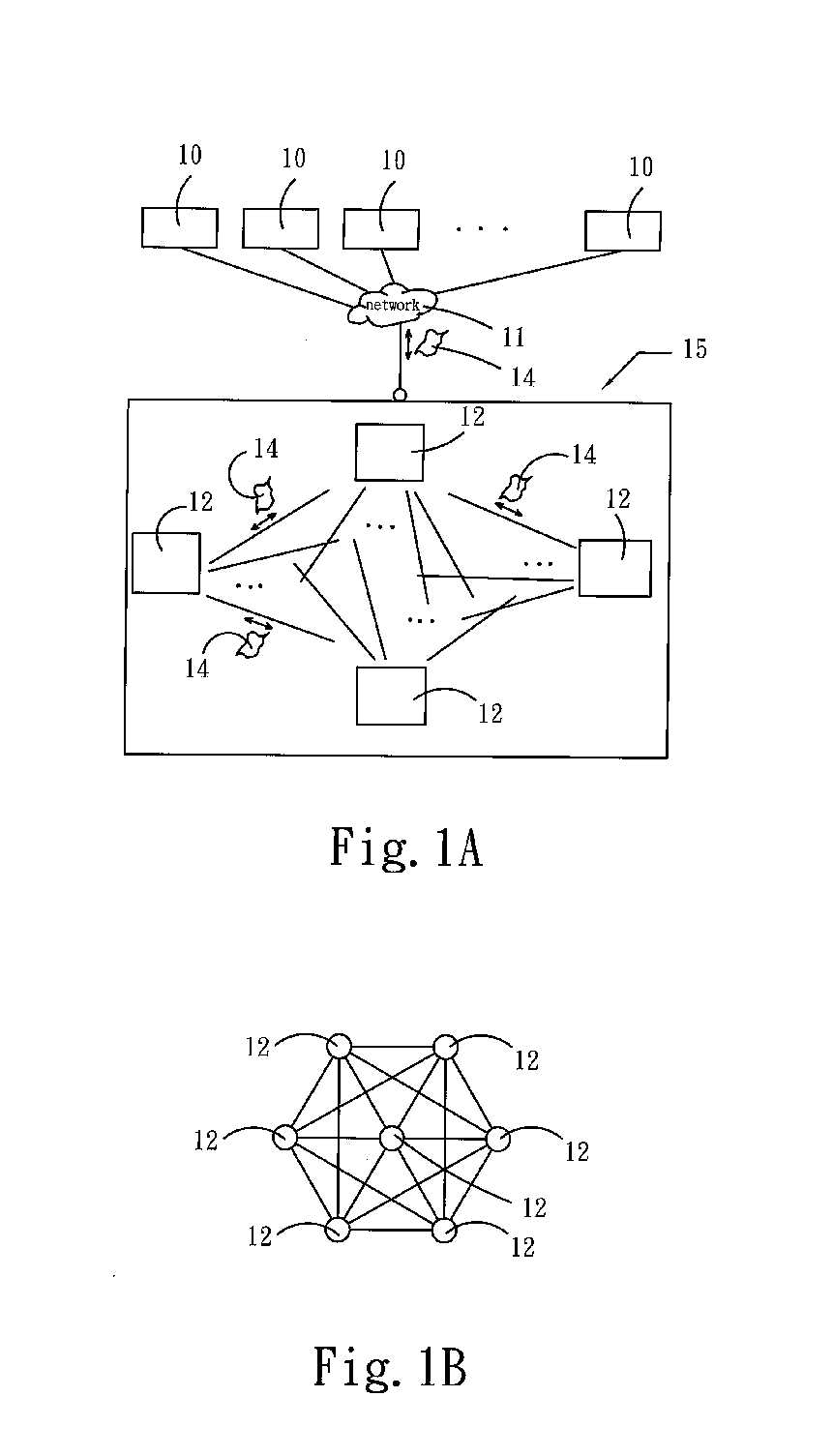

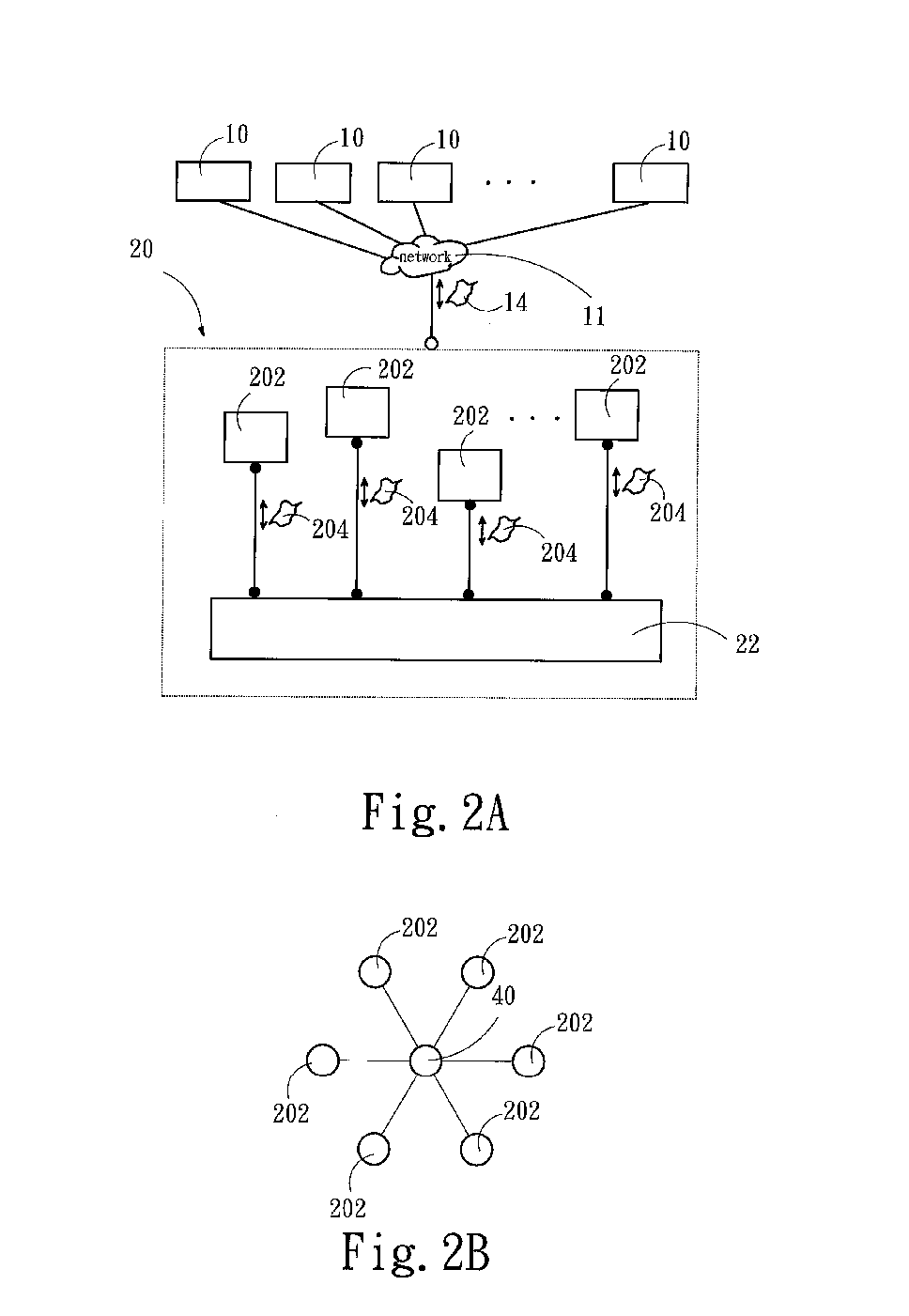

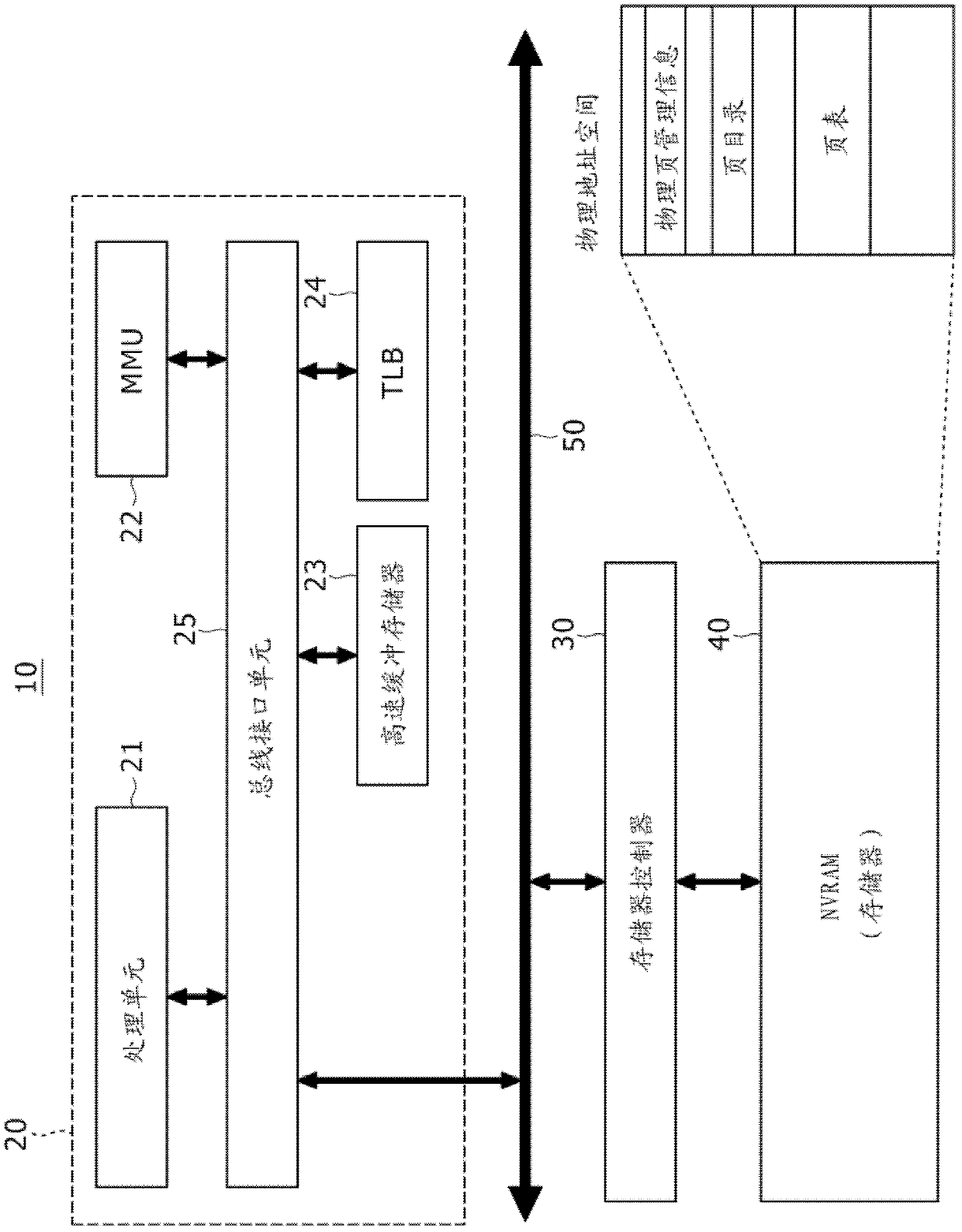

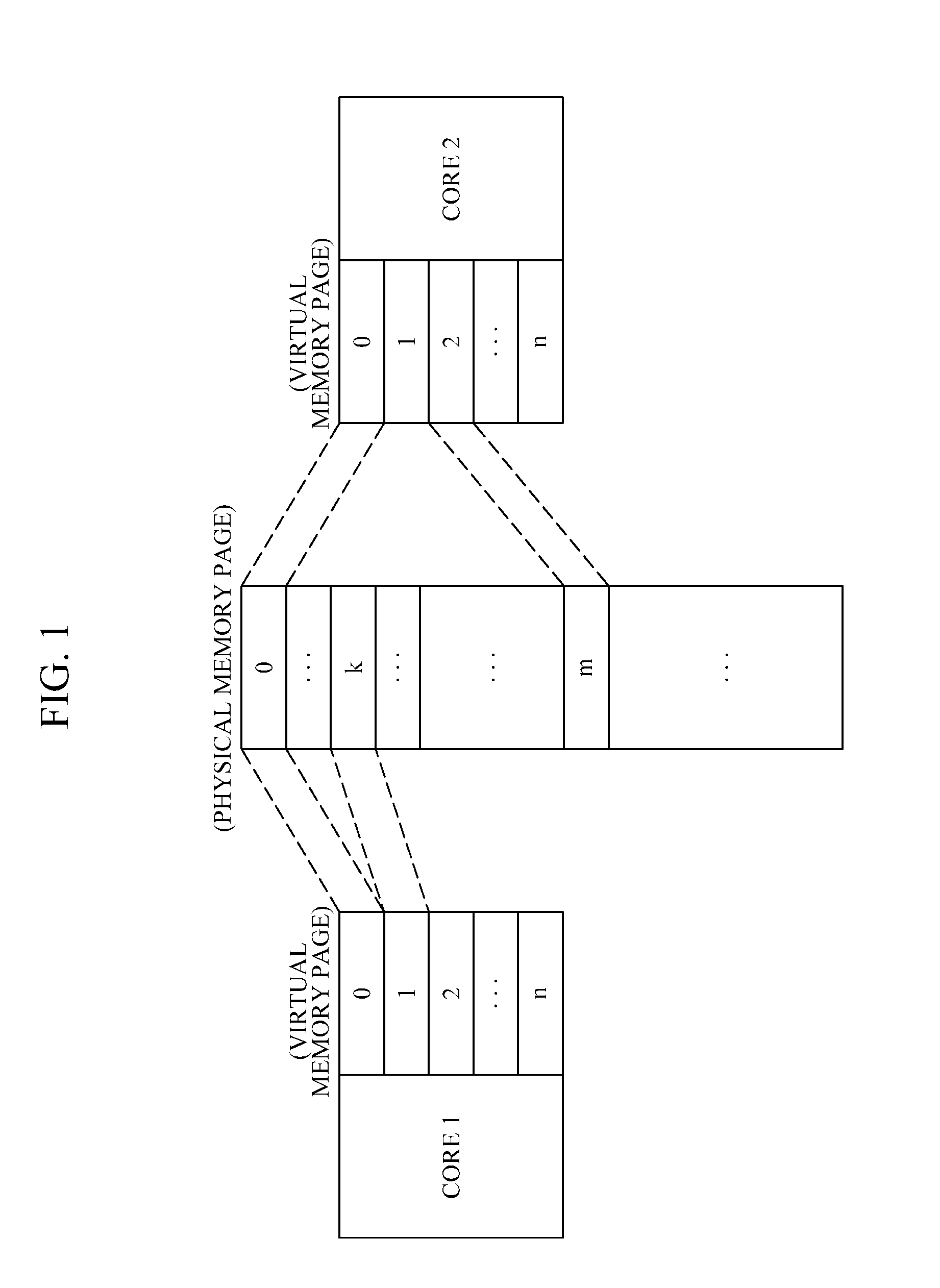

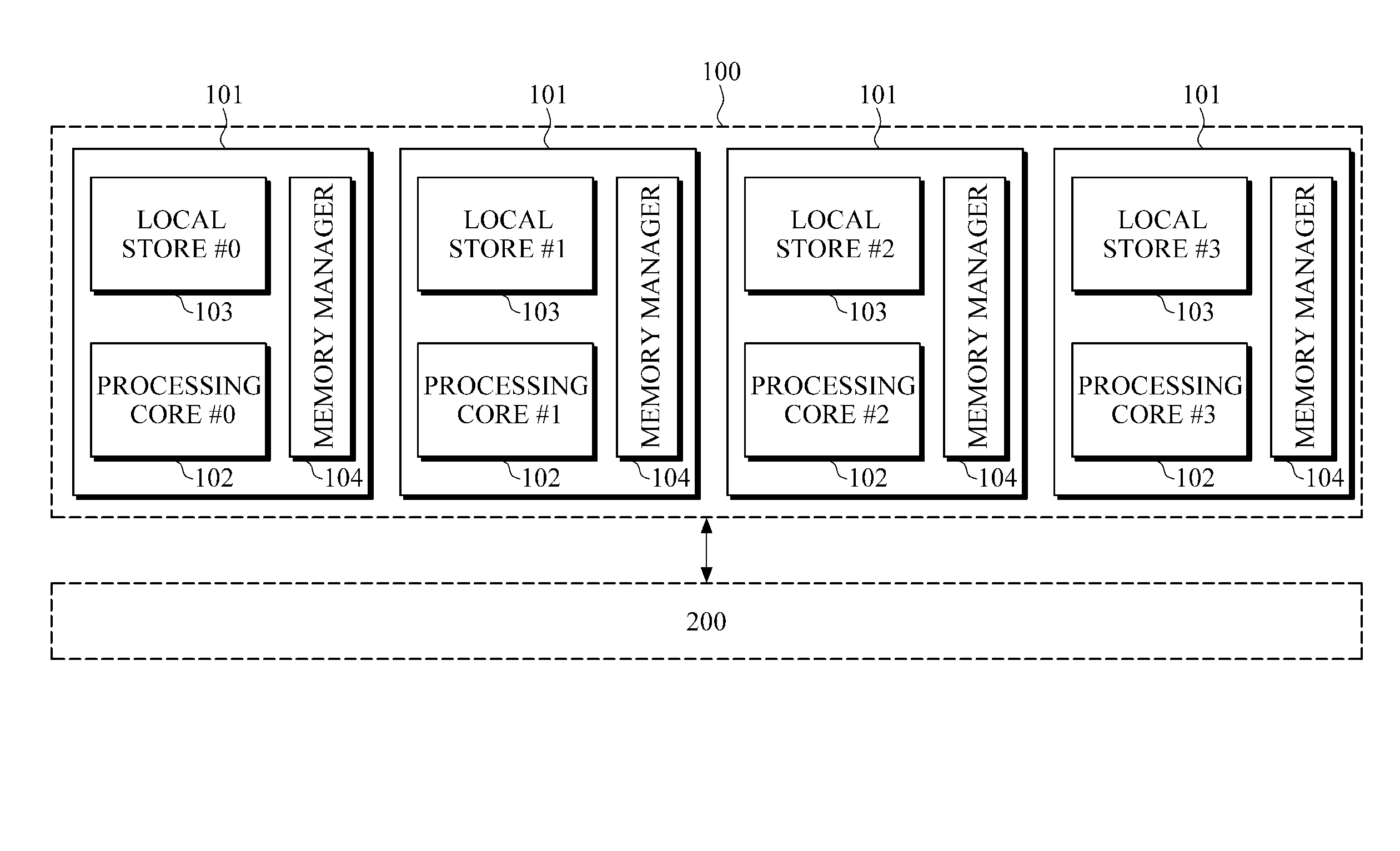

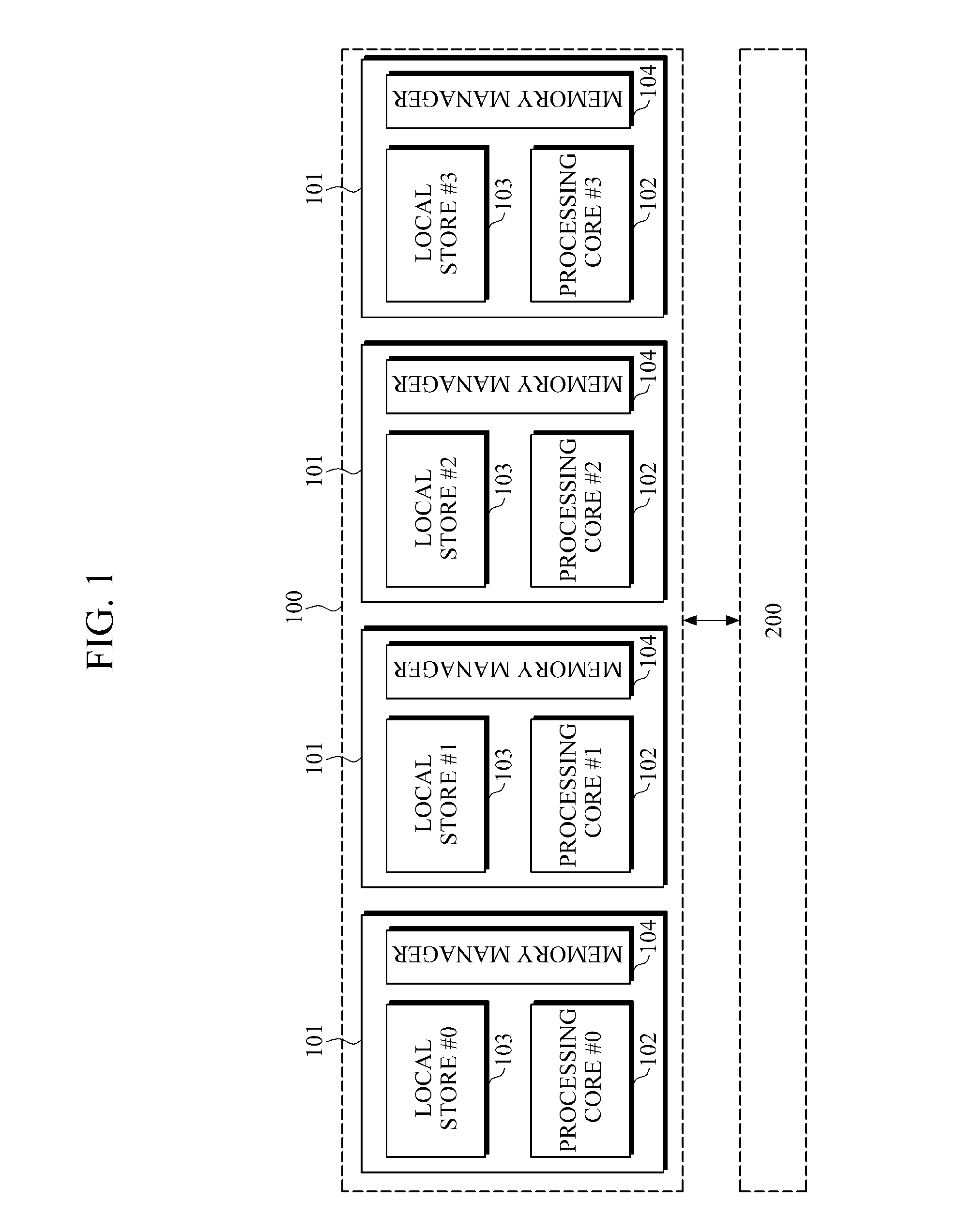

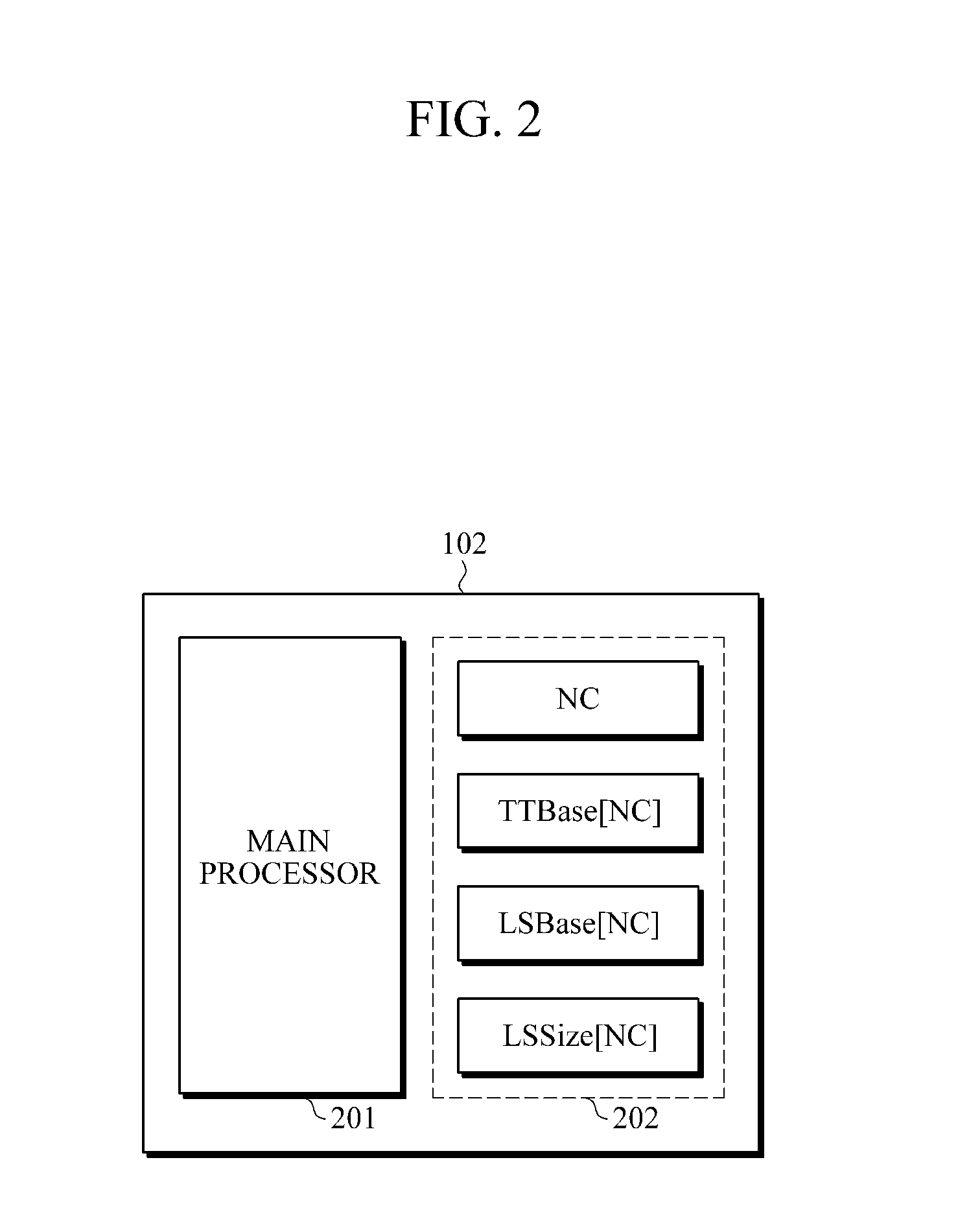

Multiprocessor using a shared virtual memory and method of generating a translation table

Active | US20120089808A1 | Energy efficient ICT; Memory addressing/allocation/relocation; Virtual memory; Processing core

A multiprocessor using a shared virtual memory (SVM) is provided. The multiprocessor includes a plurality of processing cores and a memory manager configured to transform a virtual address into a physical address to allow a processing core to access a memory region corresponding to the physical address.

Owner:SAMSUNG ELECTRONICS CO LTD +1

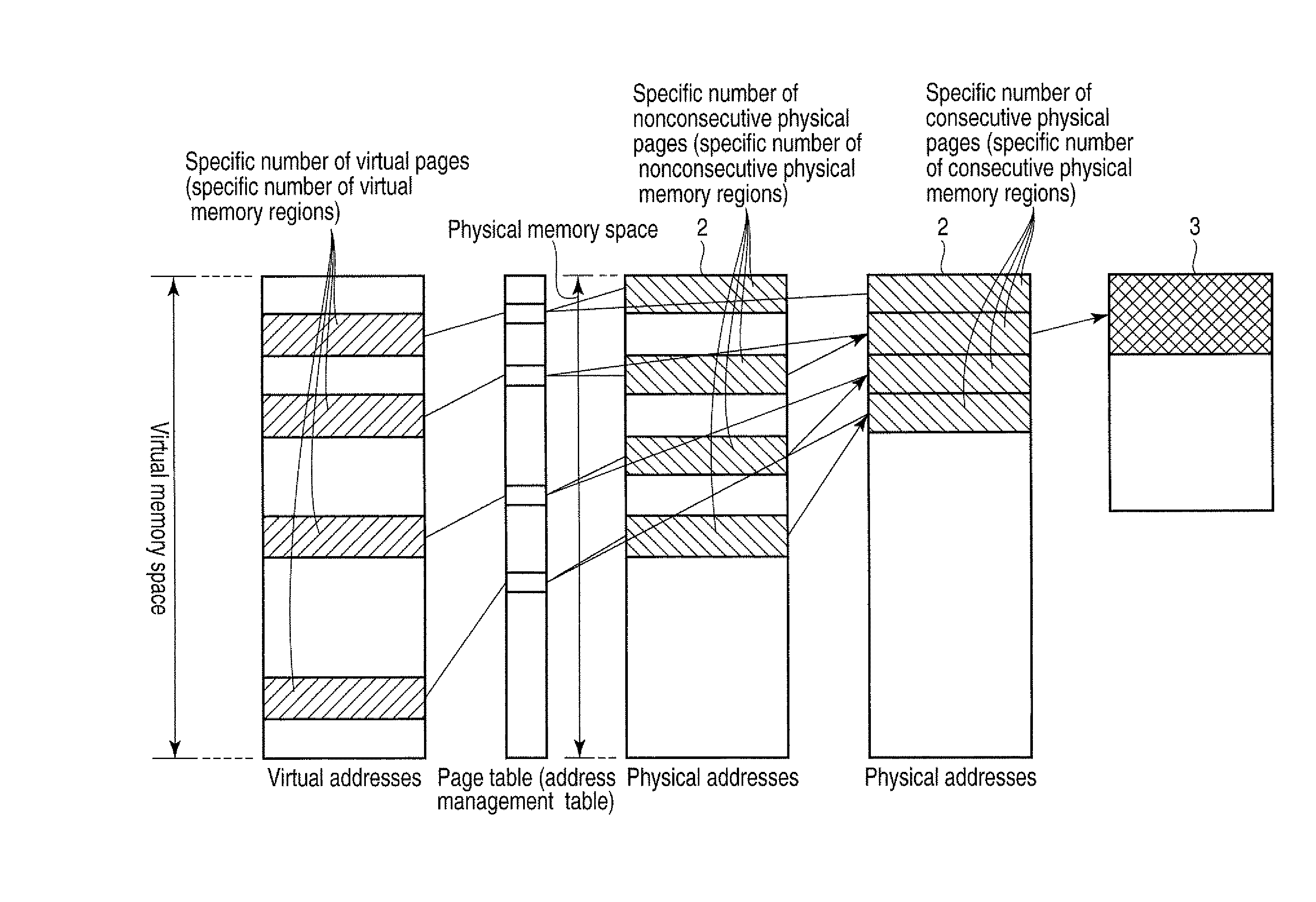

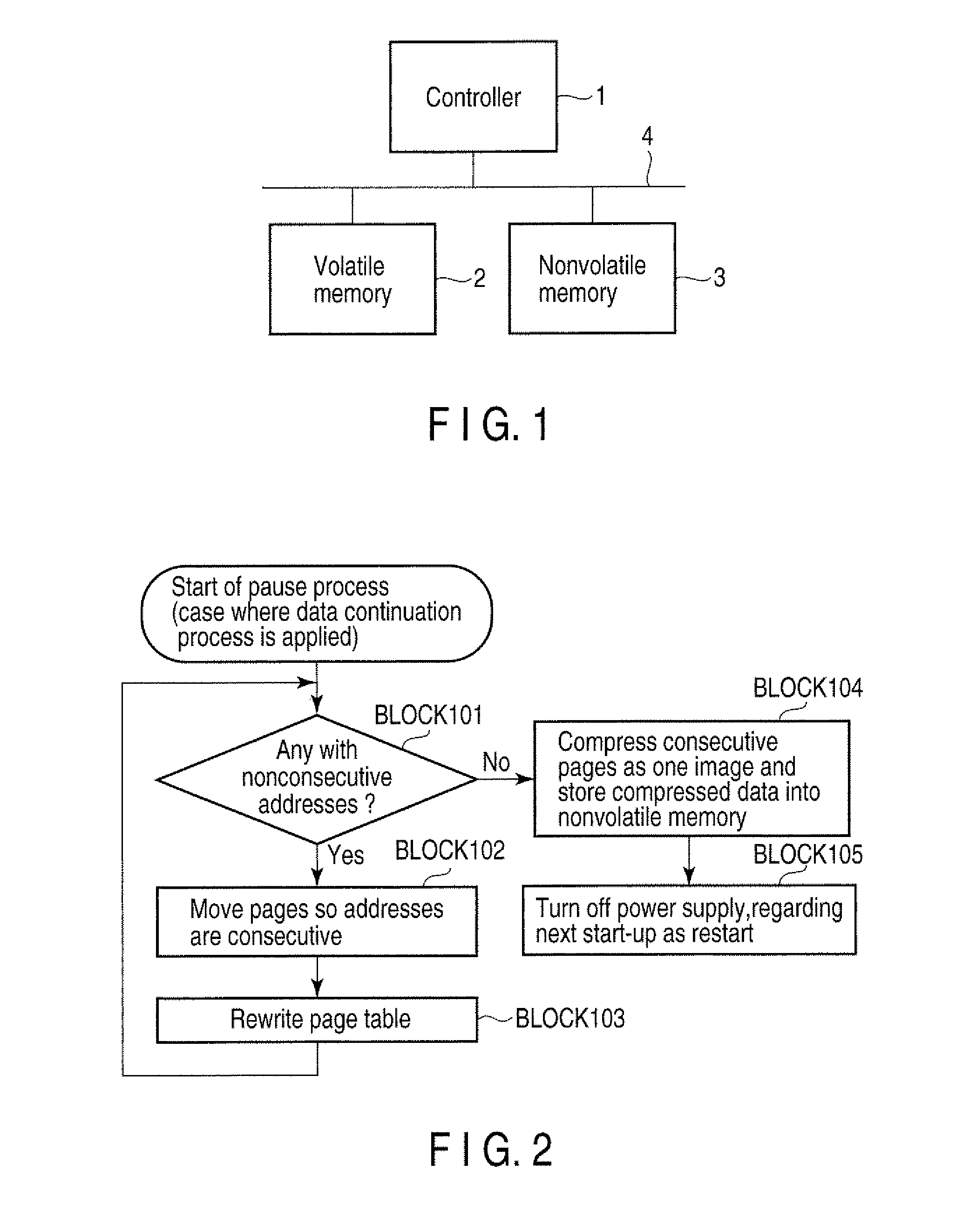

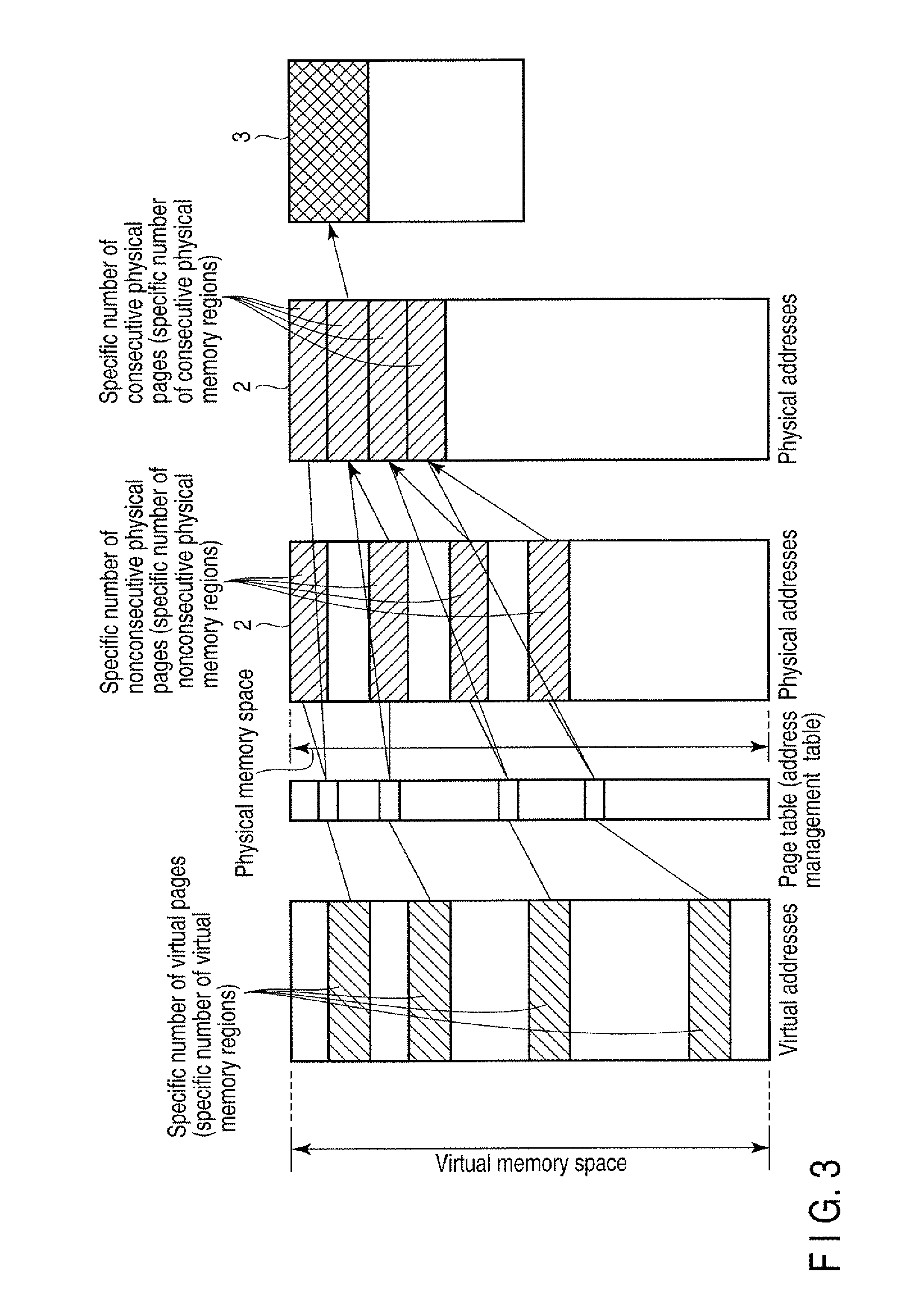

Data Storage Control Apparatus and Data Storage Control Method

Inactive | US20100211750A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Parallel computing

According to one embodiment, a data storage control method is applied to a virtual memory that controls access to data stored in the physical memory regions of a first memory by means of an address management table. The table manages the correspondence between a plurality of virtual addresses, corresponding to a plurality of virtual memory regions, and a plurality of physical addresses, corresponding to the physical memory regions, so that each physical memory region is accessed through its corresponding virtual address. The method includes writing the data stored in a specific number of nonconsecutive physical memory regions, which the table maps to a specific number of virtual memory regions, to the same number of consecutive physical memory regions.

Owner:KK TOSHIBA
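A sketch of the consolidation step on a toy address management table (virtual page to physical frame) and a frame store. It assumes the destination frames are free and do not overlap the sources. The old frames are left in place, since the abstract does not say how they are reclaimed.

```python
def compact(page_table: dict[int, int], memory: dict[int, bytes],
            vpns: list[int], dest_start: int) -> None:
    """Copy the pages behind `vpns` into consecutive frames starting at `dest_start`.

    Assumes the destination frames are free and do not overlap the source frames.
    """
    for i, vpn in enumerate(vpns):
        src, dst = page_table[vpn], dest_start + i
        memory[dst] = memory[src]   # write the data to the consecutive region
        page_table[vpn] = dst       # update the address management table


table = {0: 5, 1: 2, 2: 9}           # virtual page -> physical frame (scattered)
frames = {5: b"A", 2: b"B", 9: b"C"}
compact(table, frames, vpns=[0, 1, 2], dest_start=20)
```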

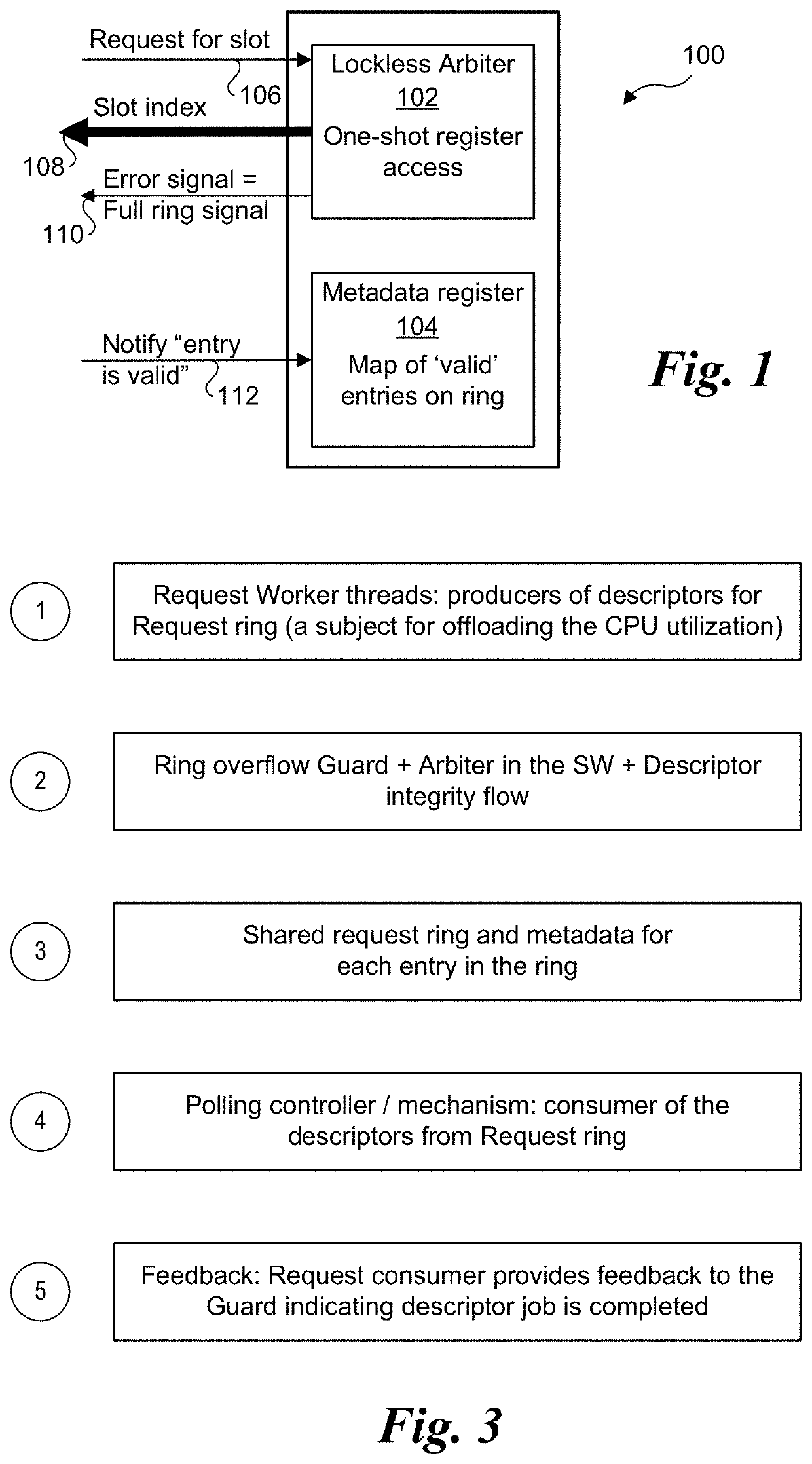

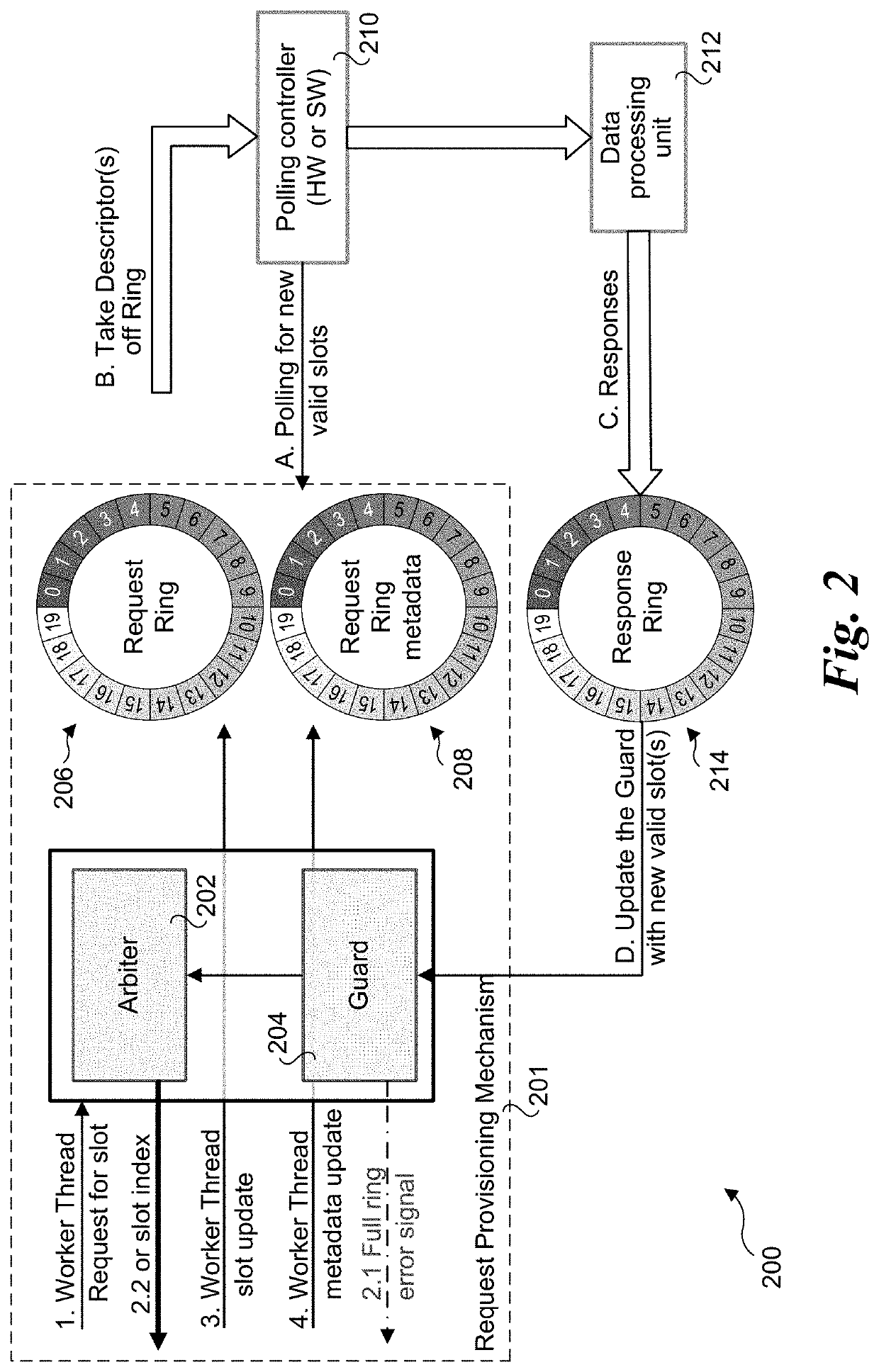

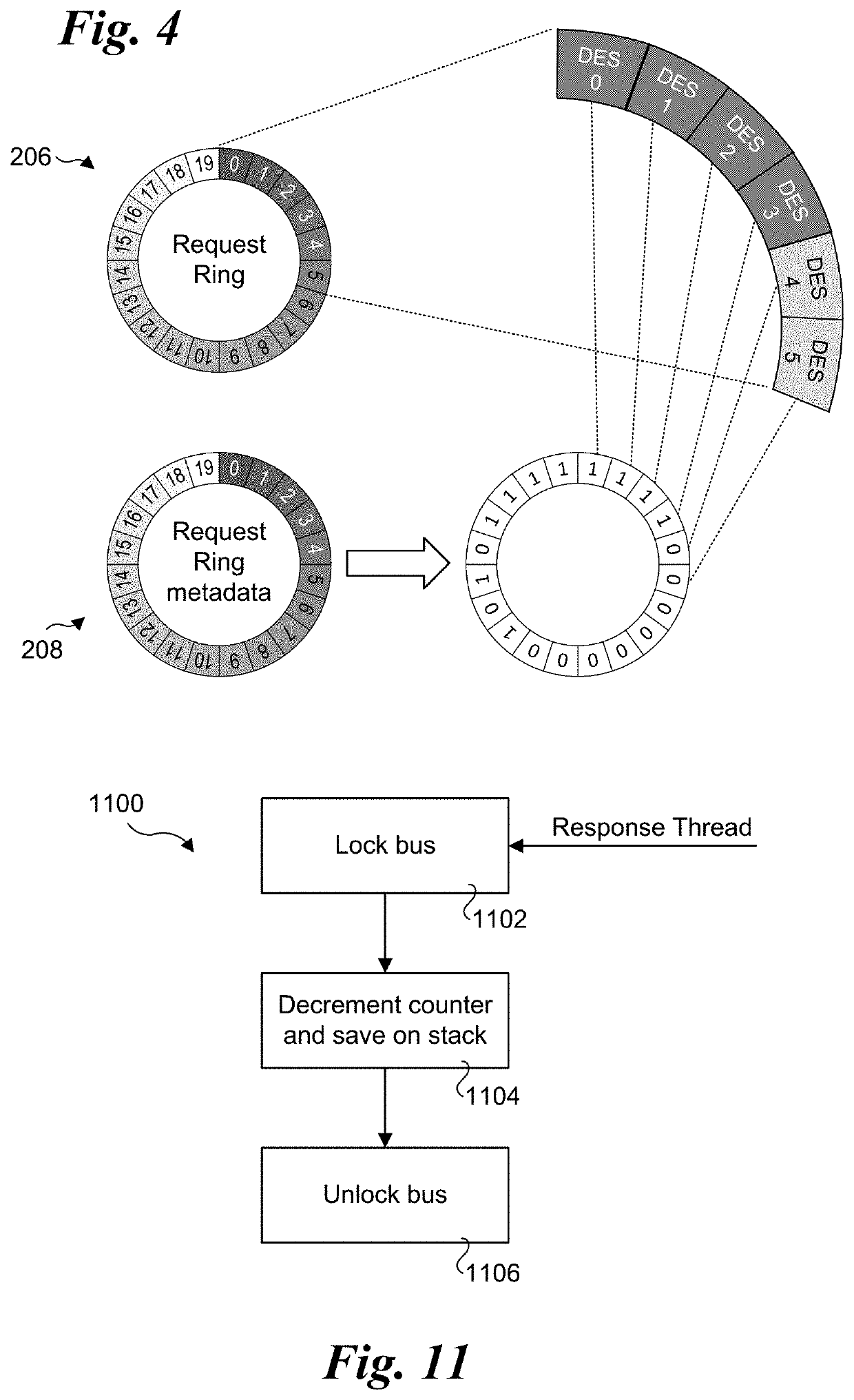

Method for arbitration and access to hardware request ring structures in a concurrent environment

Methods and apparatus for arbitration and access to hardware request ring structures in a concurrent environment. A request ring mechanism is provided that includes an arbiter, a ring overflow guard, a request ring, and request ring metadata, each of which is implemented in shared virtual memory (SVM) on a computing platform that includes a multi-core processor coupled to an offload device having one or more SVM-capable accelerators. Worker threads request access to the request ring to provide job descriptors to be processed by the accelerator(s). A lockless arbiter returns to each worker thread either the index of a slot in which to write a descriptor or information indicating that the ring is full. The scheme enables worker threads to write descriptors to the slots corresponding to the returned indexes without contention from other worker threads. The ring overflow guard prevents valid descriptors from being overwritten before the accelerator(s) take them off the ring. The request ring metadata is used to indicate a valid/invalid status for each ring entry.

Owner:INTEL CORP
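A single-process sketch of the ring protocol. The patent describes a lockless arbiter. Here a `threading.Lock` stands in for the atomic fetch-and-add or compare-and-swap such an arbiter would use, and the accelerator is simulated by a method call. Class and method names are hypothetical.

```python
import threading
from typing import Optional


class ToyRequestRing:
    """Request ring with an arbiter, overflow guard and per-slot valid metadata."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.slots: list = [None] * size
        self.valid = [False] * size      # request ring metadata: valid/invalid per entry
        self._tail = 0                   # next slot the arbiter hands out
        self._head = 0                   # next slot the accelerator consumes
        self._lock = threading.Lock()    # stands in for an atomic fetch-and-add / CAS

    def reserve(self) -> Optional[int]:
        """Arbiter: return a slot index, or None when the ring is full."""
        with self._lock:
            if self._tail - self._head >= self.size:   # overflow guard
                return None
            index = self._tail % self.size
            self._tail += 1
            return index

    def submit(self, index: int, descriptor: str) -> None:
        # The slot is owned by this worker alone, so no further synchronization.
        self.slots[index] = descriptor
        self.valid[index] = True

    def accelerator_take(self) -> Optional[str]:
        index = self._head % self.size
        if not self.valid[index]:
            return None                  # next descriptor not written yet
        descriptor = self.slots[index]
        self.valid[index] = False
        with self._lock:
            self._head += 1              # only now may the slot be reused
        return descriptor


ring = ToyRequestRing(size=2)
a, b = ring.reserve(), ring.reserve()
full = ring.reserve()
ring.submit(b, "job-b")
early = ring.accelerator_take()          # slot a is still empty, so nothing to take
ring.submit(a, "job-a")
first = ring.accelerator_take()
reused = ring.reserve()
second = ring.accelerator_take()
```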

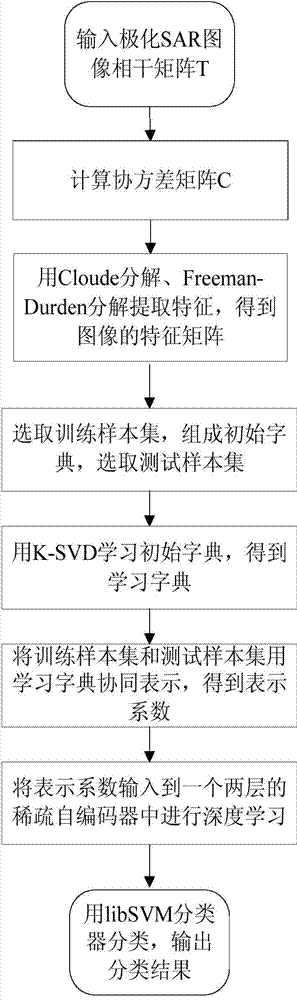

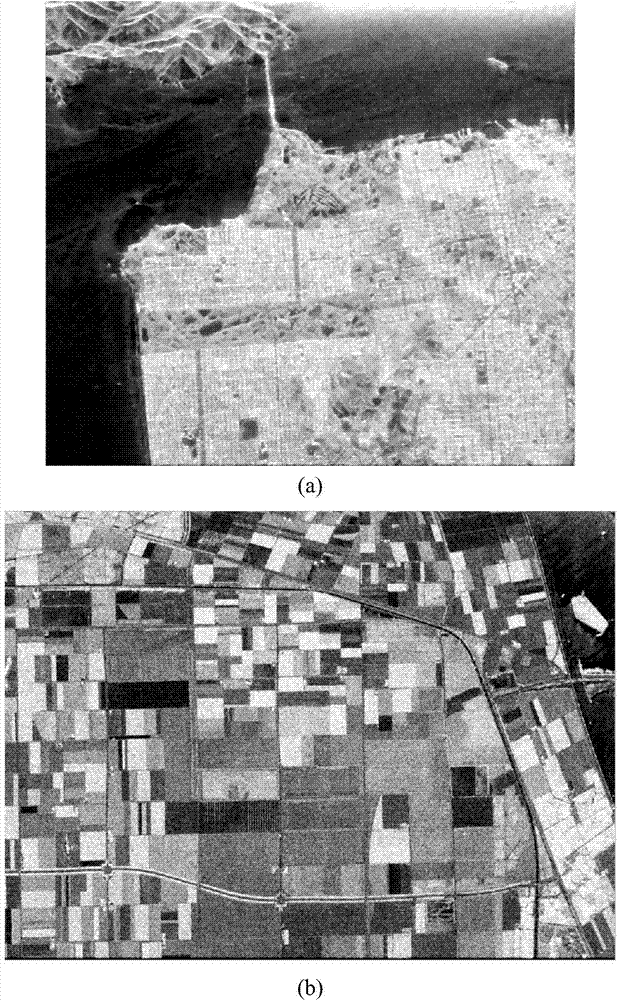

Polarized SAR (Synthetic Aperture Radar) image classifying method based on cooperative representation and deep learning

Active | CN104751173A | Reduce computational complexity; Improve classification accuracy; Character and pattern recognition; Singular value decomposition; Computation complexity

The invention discloses a polarized SAR (Synthetic Aperture Radar) image classification method based on cooperative representation and deep learning, which mainly addresses the high computational complexity and low classification precision of existing methods. The method comprises the following steps: 1, input a polarized SAR image and extract its polarization features; 2, select a training sample set according to the actual ground features, and take the pixels of the entire image as the test sample set; 3, use the features of the training sample set as an initial dictionary and learn a dictionary from it with the K-SVD dictionary-learning algorithm (which is based on singular value decomposition); 4, cooperatively represent the training and test sample sets over the learned dictionary to obtain their representation coefficients; 5, apply deep learning to the representation coefficients of the training and test sample sets to obtain more essential feature representations; and 6, classify the polarized SAR image from the learned representations with a LIBSVM (support vector machine) classifier. The method has low computational complexity and high classification accuracy, and is applicable to polarized SAR image classification.

Owner:XIDIAN UNIV

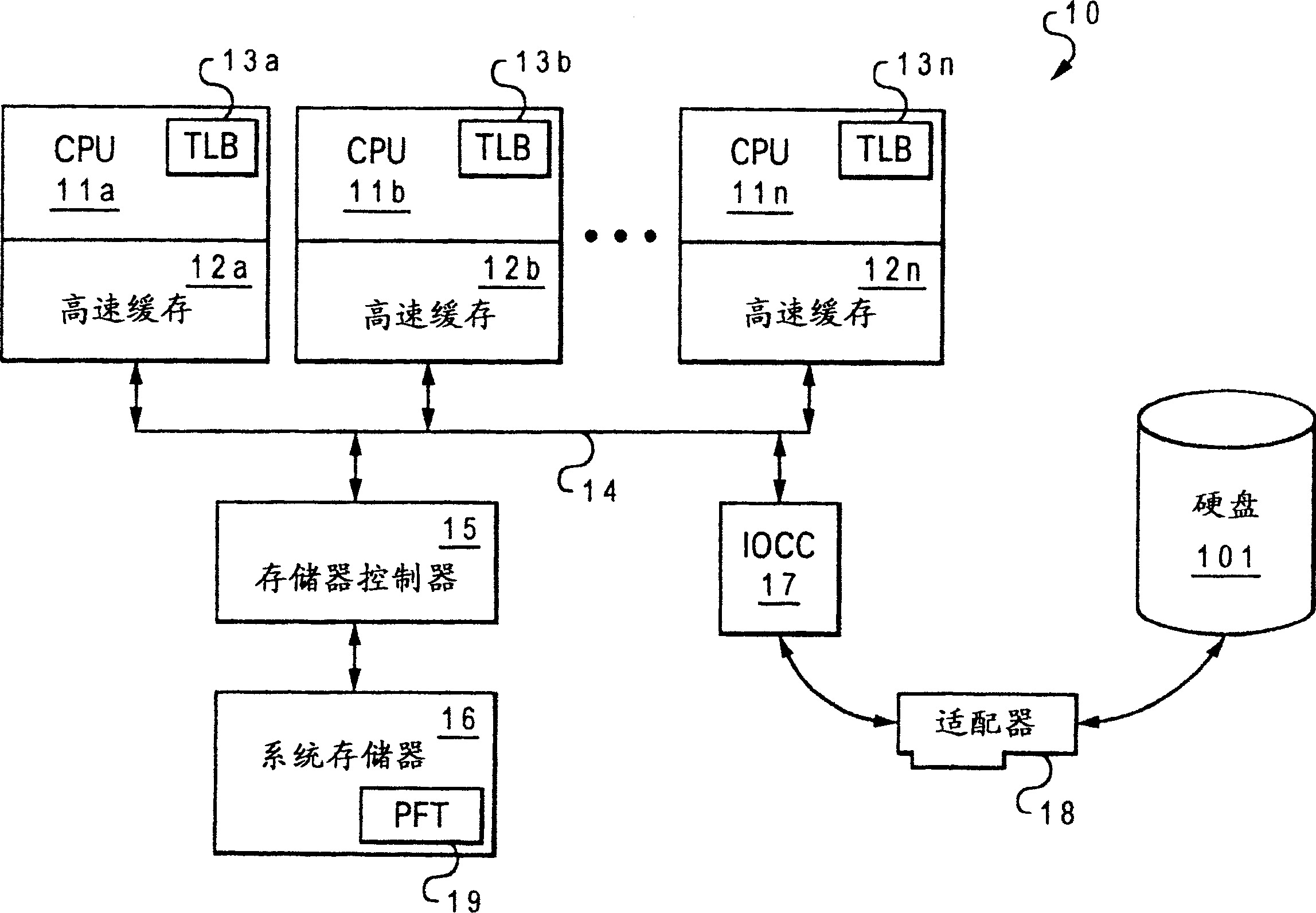

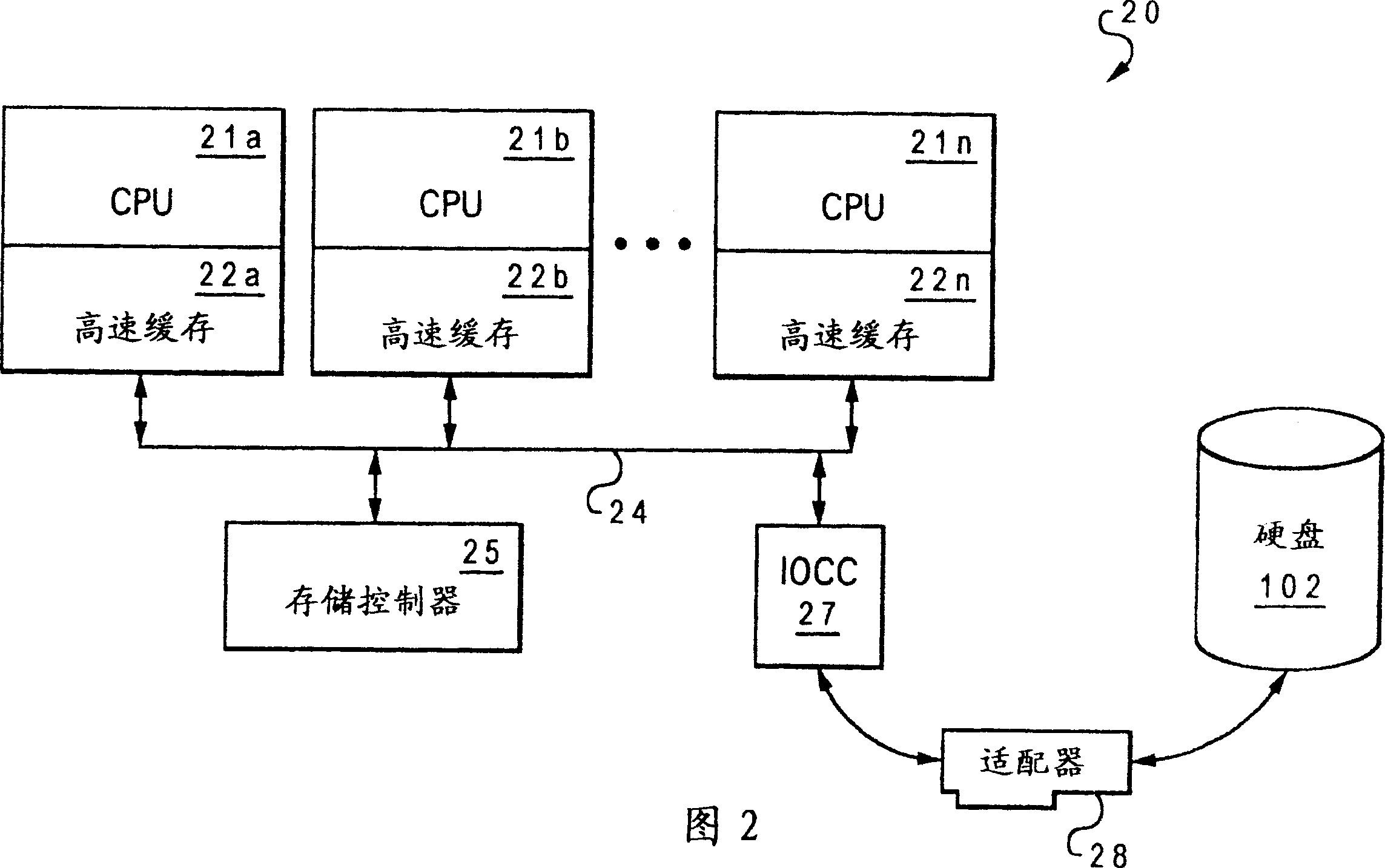

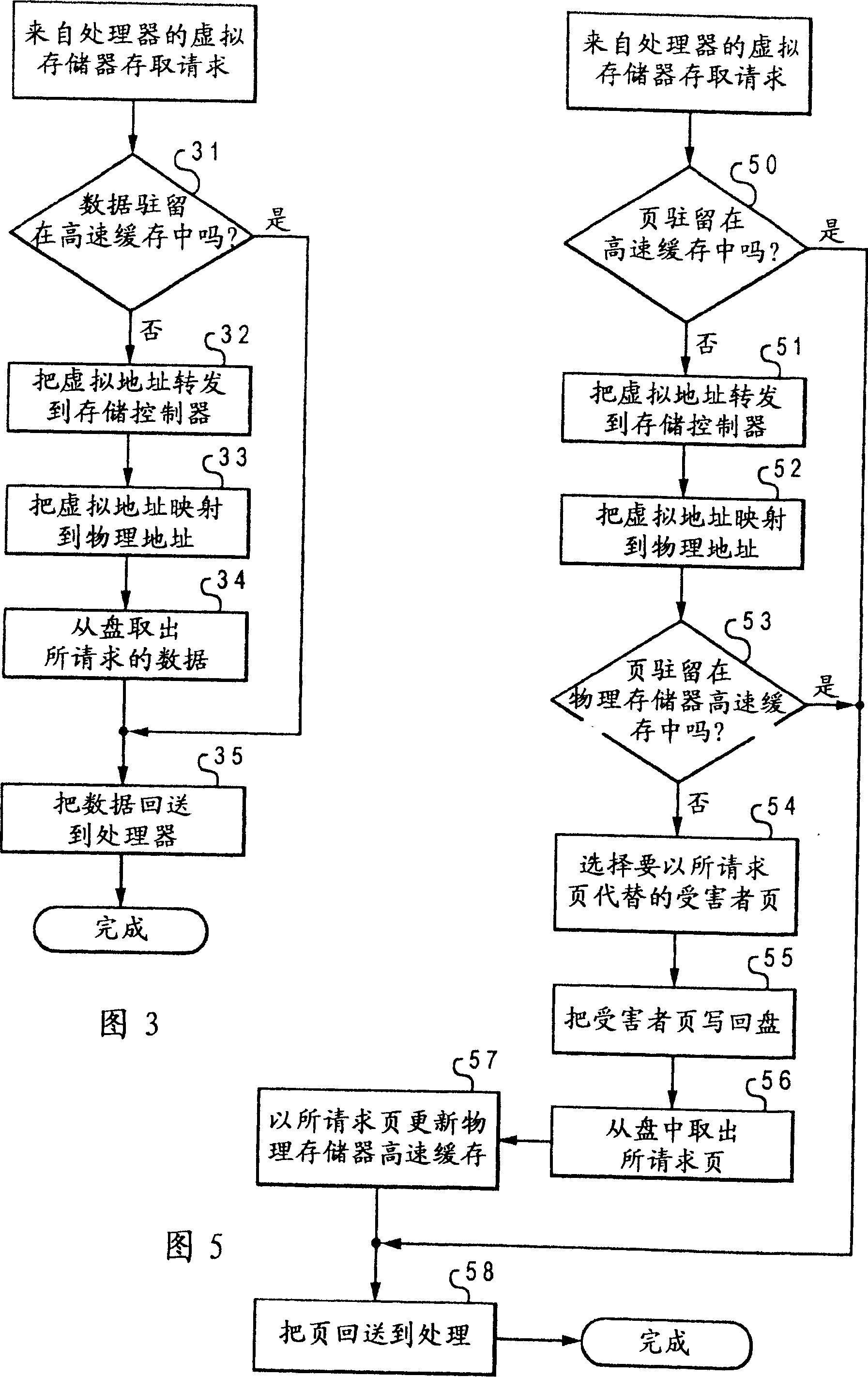

Data processing system capable of processing program utilizing virtual memory

Inactive | CN1506851A | Memory addressing/allocation/relocation; Colour-separation/tonal-correction; Data processing system; Virtual memory

An access request for a data processing system having no system memory is disclosed. The data processing system includes multiple processing units. The processing units have volatile cache memories operating in a virtual address space that is greater than a real address space. The processing units and the respective volatile memories are coupled to a storage controller operating in a physical address space that is equal to the virtual address space. The processing units and the storage controller are coupled to a hard disk via an interconnect. The storage controller, which is coupled to a physical memory cache, allows the mapping of a virtual address from one of the volatile cache memories to a physical disk address directed to a storage location within the hard disk without transitioning through a real address. The physical memory cache contains a subset of information within the hard disk. When a specific set of data is needed, a processing unit generates a virtual memory access request to be received by the storage controller. The storage controller then fetches the data for the requesting processor. The virtual memory access request includes a group of hint bits regarding data prefetch associated with the fetched data.

Owner:IBM CORP

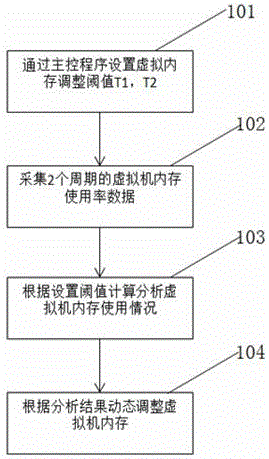

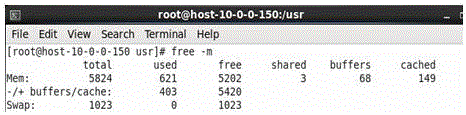

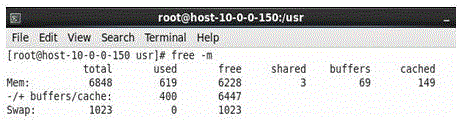

Virtual memory dynamic allocation method of virtual machine

Inactive | CN106598697A | Realize dynamic deployment; Adjust real-time online; Software simulation/interpretation/emulation; Virtual memory; Shared virtual memory

The invention provides a method for dynamically allocating the virtual memory of a virtual machine, realized by a control program built on memory ballooning. First, the control program sets two memory adjustment thresholds: T1 (if memory utilization exceeds T1, memory is expanded) and T2 (if memory utilization falls below T2, memory is reclaimed). Then the memory utilization U1 of each virtual machine is collected and stored in a database. In the next collection cycle, the utilization U2 is also stored in the database and the virtual memory is analyzed: if (U1+U2)/2 > T1, the virtual memory is expanded dynamically; conversely, if (U1+U2)/2 < T2, U1 < T1 and U2 < T1, the virtual memory is reclaimed dynamically. Because T1 and T2 are set according to the actual application, virtual machine memory can be allocated dynamically and effectively, improving memory utilization efficiency.

Owner:CHINA PETROLEUM & CHEM CORP +1
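The threshold rule above, written out as a Python function. The example thresholds and utilization samples are made up for illustration; only the comparison logic comes from the abstract.

```python
def balloon_decision(u1: float, u2: float, t1: float, t2: float) -> str:
    """Apply the two-sample threshold rule; returns 'expand', 'reclaim' or 'keep'."""
    average = (u1 + u2) / 2
    if average > t1:
        return "expand"
    if average < t2 and u1 < t1 and u2 < t1:
        return "reclaim"
    return "keep"


T1, T2 = 0.80, 0.30   # example thresholds; the patent leaves them to the application
samples = {"vm-a": (0.90, 0.85), "vm-b": (0.10, 0.20), "vm-c": (0.50, 0.60)}
decisions = {vm: balloon_decision(u1, u2, T1, T2) for vm, (u1, u2) in samples.items()}
```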

GPU shared virtual memory working set management

Active | US20170060743A1 | Memory architecture accessing/allocation; Memory systems; Computational science; Virtual memory

A method and apparatus of a device that manages virtual memory for a graphics processing unit is described. In an exemplary embodiment, the device manages a graphics processing unit working set of pages. In this embodiment, the device determines the set of pages of the device to be analyzed, where the device includes a central processing unit and the graphics processing unit. The device additionally classifies the set of pages based on a graphics processing unit activity associated with the set of pages and evicts a page of the set of pages based on the classifying.

Owner:APPLE INC
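A sketch of classify-then-evict. It assumes that "GPU activity" is measured as time since the last GPU access and that GPU-inactive pages are evicted before active ones, oldest first. The window size and function names are hypothetical.

```python
def classify_pages(last_gpu_access: dict[str, int], now: int, window: int):
    """Split pages into GPU-active and GPU-inactive by time since last GPU access."""
    active, inactive = [], []
    for page, t in last_gpu_access.items():
        (active if now - t <= window else inactive).append(page)
    return active, inactive


def choose_victim(last_gpu_access: dict[str, int], now: int, window: int):
    """Evict the least recently GPU-used page, preferring GPU-inactive pages."""
    active, inactive = classify_pages(last_gpu_access, now, window)
    pool = inactive or active
    if not pool:
        return None
    return min(pool, key=last_gpu_access.__getitem__)


last_use = {"a": 100, "b": 40, "c": 10}   # page -> time of last GPU access
groups = classify_pages(last_use, now=100, window=50)
victim = choose_victim(last_use, now=100, window=50)
```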

© 2025 PatSnap. All rights reserved.