Providing hardware support for shared virtual memory between local and remote physical memory

This technology concerns remote and local memory. It applies to the field of providing hardware support for shared virtual memory between local and remote physical memory, and addresses problems such as the lack of management or allocation of accelerator memory.

Embodiment Construction

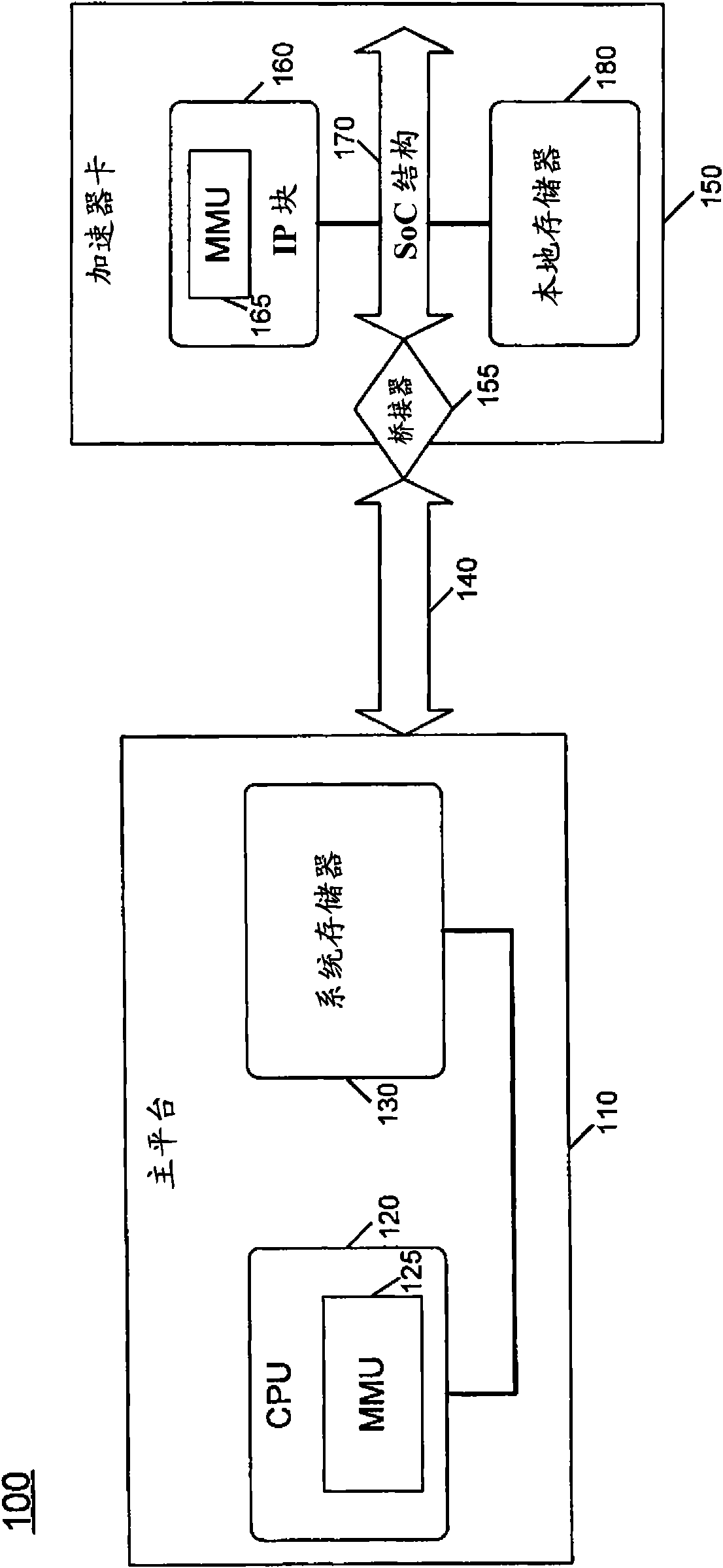

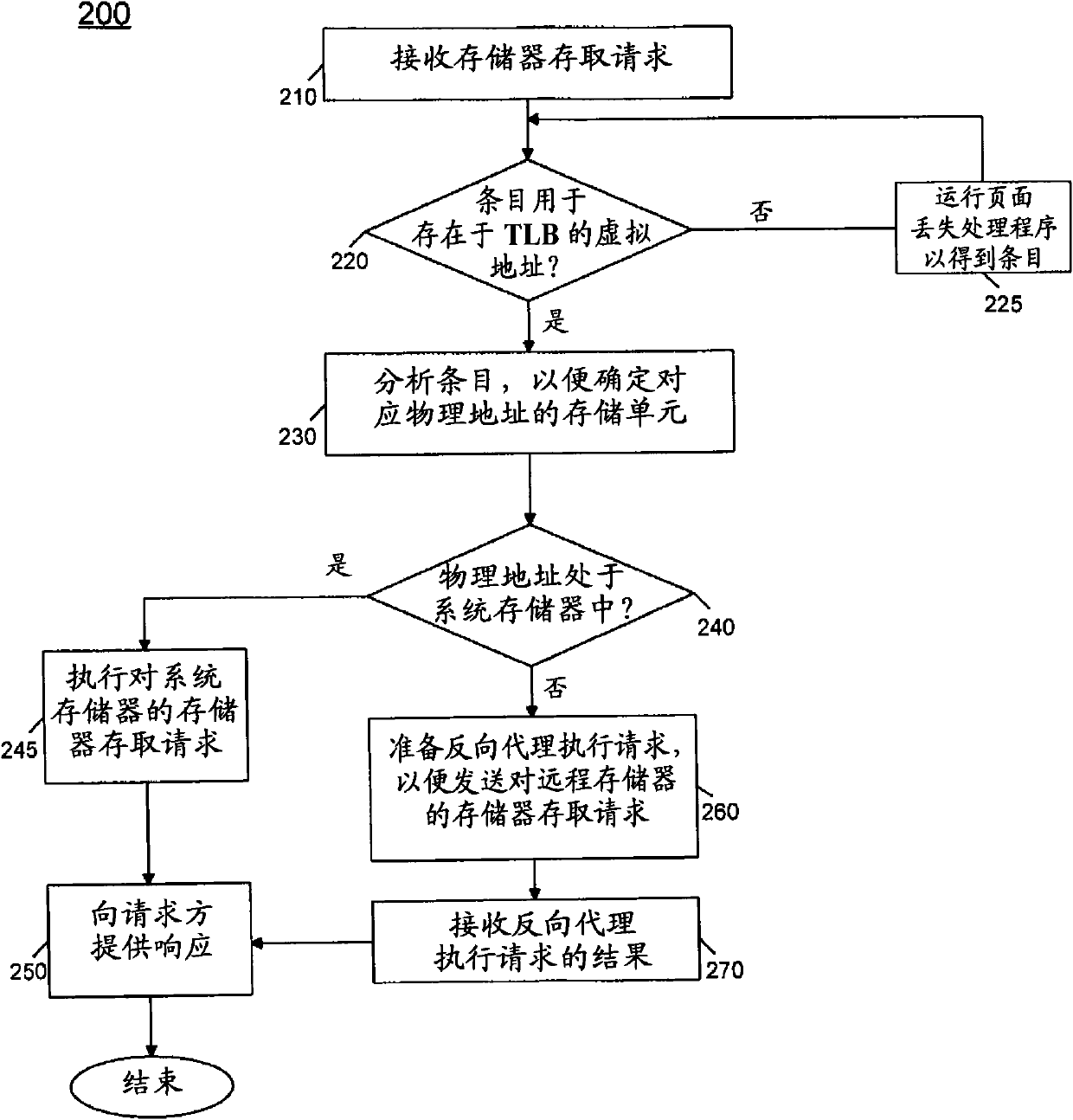

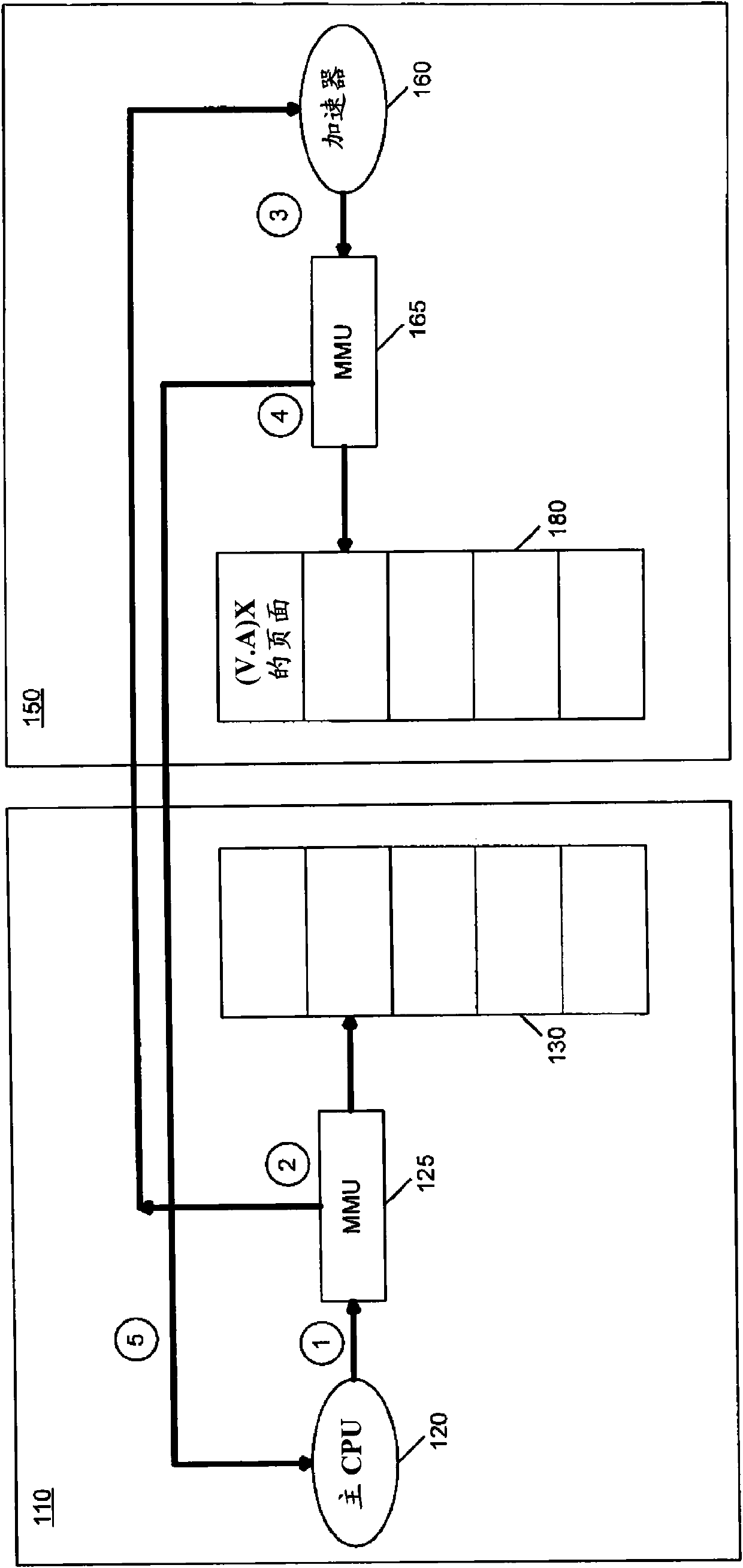

[0015] Embodiments enable a processor (e.g., a central processing unit (CPU) on a socket) to create and manage a fully shared virtual address space with accelerators that are interconnected with the system through a Peripheral Component Interconnect Express (PCIe™) interface or other interfaces. The processor does so by accessing memory that resides on the accelerator and addressing that memory with special load/store transactions. The ability to directly address remote memory increases the effective computing capacity as seen by application software, and lets applications share data seamlessly without explicitly involving a programmer to move data back and forth. In this way, memory can be shared without resorting to memory protection and faulting on virtual address accesses in order to redirect the pending memory access so that it is completed from a fault handler. Thus, existing shared-memory multi-core processing can be extended to include accelerators that are not on-socket, but are instead connected via peripheral non-coherent links.
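The idea of one virtual address space that spans local and accelerator memory can be illustrated with a small software model. The sketch below is only an illustration under stated assumptions, not the mechanism claimed in this document: the names `PageEntry`, `SharedAddressSpace`, the `remote` flag, the 4096-byte page size, and the transaction counter are all hypothetical. It shows a load or store being routed to local or remote memory at translation time, with no fault handler involved.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size for this sketch


@dataclass
class PageEntry:
    """Hypothetical translation entry: a frame number plus a location flag."""
    frame: int
    remote: bool  # True if the frame lives in accelerator (remote) memory


class SharedAddressSpace:
    """Toy model of one virtual address space backed by two physical memories."""

    def __init__(self):
        self.page_table = {}          # virtual page number -> PageEntry
        self.local_mem = {}           # physical address -> value (CPU-attached)
        self.remote_mem = {}          # physical address -> value (accelerator)
        self.remote_transactions = 0  # simulated transactions over the link

    def map_page(self, vpn, frame, remote):
        """Install a mapping for virtual page `vpn`."""
        self.page_table[vpn] = PageEntry(frame, remote)

    def _translate(self, vaddr):
        """Return (physical address, is_remote); raises KeyError if unmapped."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        entry = self.page_table[vpn]
        return entry.frame * PAGE_SIZE + offset, entry.remote

    def store(self, vaddr, value):
        paddr, remote = self._translate(vaddr)
        if remote:
            # Stand-in for a special store transaction sent over the link.
            self.remote_transactions += 1
            self.remote_mem[paddr] = value
        else:
            self.local_mem[paddr] = value

    def load(self, vaddr):
        paddr, remote = self._translate(vaddr)
        if remote:
            # Stand-in for a special load transaction sent over the link.
            self.remote_transactions += 1
            return self.remote_mem.get(paddr, 0)
        return self.local_mem.get(paddr, 0)


# Usage: virtual page 0 is local, virtual page 1 is on the accelerator.
space = SharedAddressSpace()
space.map_page(vpn=0, frame=7, remote=False)
space.map_page(vpn=1, frame=3, remote=True)
space.store(0x10, 11)              # lands in local memory
space.store(PAGE_SIZE + 0x20, 22)  # lands in accelerator memory
```

The application-level code uses the same `load` and `store` calls for both pages; only the translation entry decides where the access goes, which mirrors the point that software need not move data back and forth explicitly.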

[0016] In co...
