Polarimetric SAR (Synthetic Aperture Radar) image classification method based on collaborative representation and deep learning

A technology based on collaborative representation and deep learning, applied in the field of image processing. It addresses problems such as high computational complexity, improving classification accuracy while reducing both the time consumed by classification and the computational complexity.


Embodiment Construction

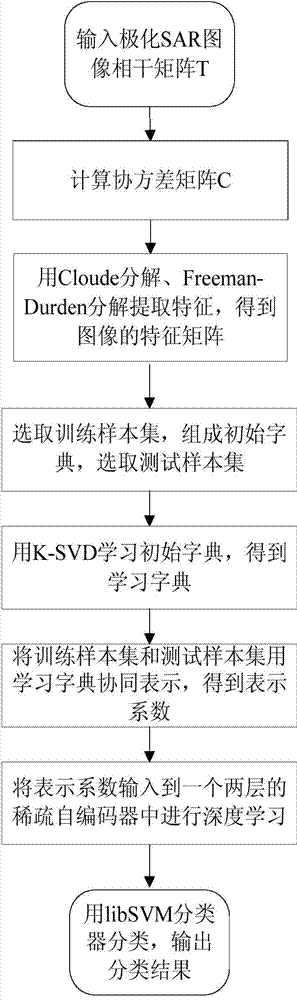

[0027] Referring to Figure 1, the specific implementation steps of the present invention are as follows:

[0028] Step 1: Calculate the polarization covariance matrix C and the total power characteristic parameter S.

[0029] (1a) Obtain the 3×3 polarization coherence matrix T of each pixel of the input polarimetric SAR image.

[0030] (1b) Calculate the polarization covariance matrix C of each pixel by the following formula: $C = M T M'$,

[0031] where $M = \frac{1}{\sqrt{2}} m$, $m = \begin{bmatrix} 1 & 0 & 1 \\ 1 & 0 & -1 \\ 0 & \sqrt{2} & 0 \end{bmatrix}$, and $M'$ denotes the transpose of $M$.

[0032] (1c) Form the total power characteristic parameter S from the three diagonal elements $T_{11}$, $T_{22}$, $T_{33}$ of T: $S = T_{11} + T_{22} + T_{33}$.
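Step 1 can be sketched in a few lines of NumPy. This is a minimal illustration, not the patent's implementation: it assumes the per-pixel coherence matrices are stored as a complex array of shape (rows, cols, 3, 3), and the function names `covariance_from_coherence` and `total_power` are hypothetical. The formula $C = M T M'$ is applied exactly as written in step (1b). Because $M$ is orthogonal ($M M' = I$), the transformation preserves the trace, so S also equals the trace of C, and T can be recovered as $M' C M$.

```python
import numpy as np

# M = (1/sqrt(2)) * m, with m as defined in step (1b)
_m = np.array([[1.0, 0.0, 1.0],
               [1.0, 0.0, -1.0],
               [0.0, np.sqrt(2.0), 0.0]])
M = _m / np.sqrt(2.0)


def covariance_from_coherence(T):
    """Step (1b): C = M T M' for one 3x3 matrix or a stack of shape (..., 3, 3).

    M is real, so M' is its plain transpose; T may be complex Hermitian.
    The @ operator broadcasts M over any leading (row, column) axes.
    """
    T = np.asarray(T)
    return M @ T @ M.T


def total_power(T):
    """Step (1c): S = T11 + T22 + T33, i.e. the trace of T, per pixel (real part)."""
    T = np.asarray(T)
    return np.real(np.trace(T, axis1=-2, axis2=-1))


# Usage on a hypothetical 4x5 image of random Hermitian coherence matrices
rng = np.random.default_rng(0)
k = rng.normal(size=(4, 5, 3)) + 1j * rng.normal(size=(4, 5, 3))
T = k[..., :, None] * k[..., None, :].conj()   # rank-1 T = k k^H per pixel
C = covariance_from_coherence(T)
S = total_power(T)
print(C.shape, S.shape)  # prints (4, 5, 3, 3) (4, 5)
```

Working on the whole image stack at once avoids a Python loop over pixels; `@` and `np.trace` both act on the trailing two axes.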

[0033] Step 2: Extract polarization features.

[0034] (2a) From the polarization coherence matrix T of each pixel, extract two scattering parameters, the scattering entropy H and the anisotropy A, through the Cloude de...
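The source text is truncated at this step, so the exact formulas it uses are not shown. As an assumption, the sketch below computes H and the parameter A of step (2a) with the standard Cloude-Pottier eigenvalue definitions, where A is the anisotropy: with eigenvalues $\lambda_1 \ge \lambda_2 \ge \lambda_3$ of T and $p_i = \lambda_i / \sum_j \lambda_j$, $H = -\sum_i p_i \log_3 p_i$ and $A = (\lambda_2 - \lambda_3)/(\lambda_2 + \lambda_3)$. The function name `entropy_anisotropy` and the `eps` guard are hypothetical.

```python
import numpy as np


def entropy_anisotropy(T, eps=1e-12):
    """Scattering entropy H and anisotropy A from Hermitian T of shape (..., 3, 3).

    Assumed standard Cloude-Pottier definitions:
      p_i = lambda_i / (lambda_1 + lambda_2 + lambda_3)
      H   = -sum_i p_i * log3(p_i)
      A   = (lambda_2 - lambda_3) / (lambda_2 + lambda_3), lambda_1 >= lambda_2 >= lambda_3
    """
    lam = np.linalg.eigvalsh(np.asarray(T))      # real eigenvalues, ascending order
    lam = np.clip(lam, 0.0, None)[..., ::-1]    # drop tiny negative round-off, sort descending
    p = lam / np.maximum(lam.sum(axis=-1, keepdims=True), eps)
    # Treat 0 * log(0) as 0; dividing by log(3) keeps H in [0, 1]
    safe_p = np.where(p > 0, p, 1.0)
    H = -np.sum(p * np.log(safe_p), axis=-1) / np.log(3.0)
    # eps avoids 0/0 when lambda_2 = lambda_3 = 0 (single-mechanism target)
    A = (lam[..., 1] - lam[..., 2]) / np.maximum(lam[..., 1] + lam[..., 2], eps)
    return H, A


# Usage: a fully depolarized (identity) target gives H close to 1 and A close to 0
H_id, A_id = entropy_anisotropy(np.eye(3))
```

For a whole image, pass the (rows, cols, 3, 3) array of T; `np.linalg.eigvalsh` operates on the trailing two axes, so H and A come back with shape (rows, cols).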
