50 results about "Software pipelining" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

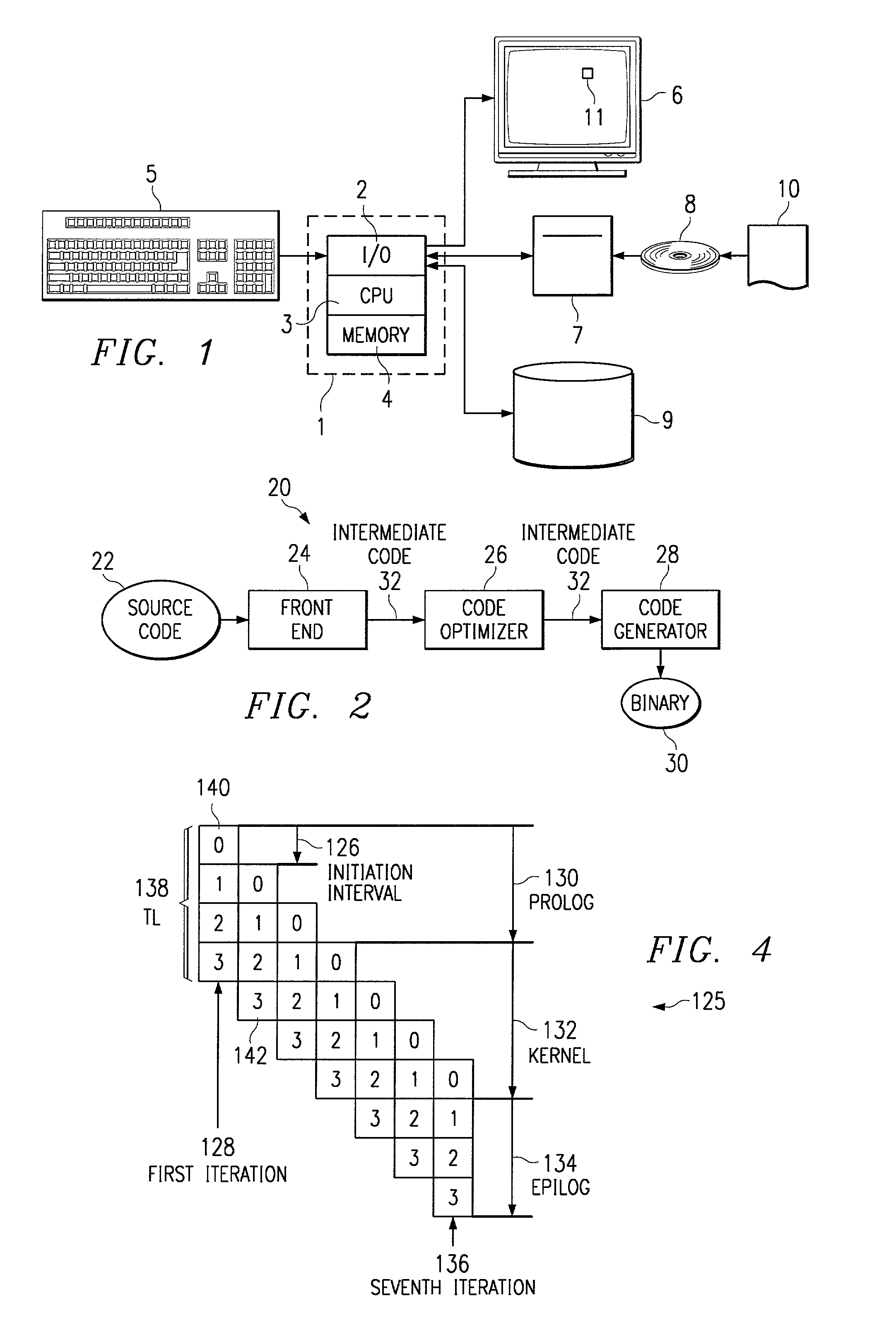

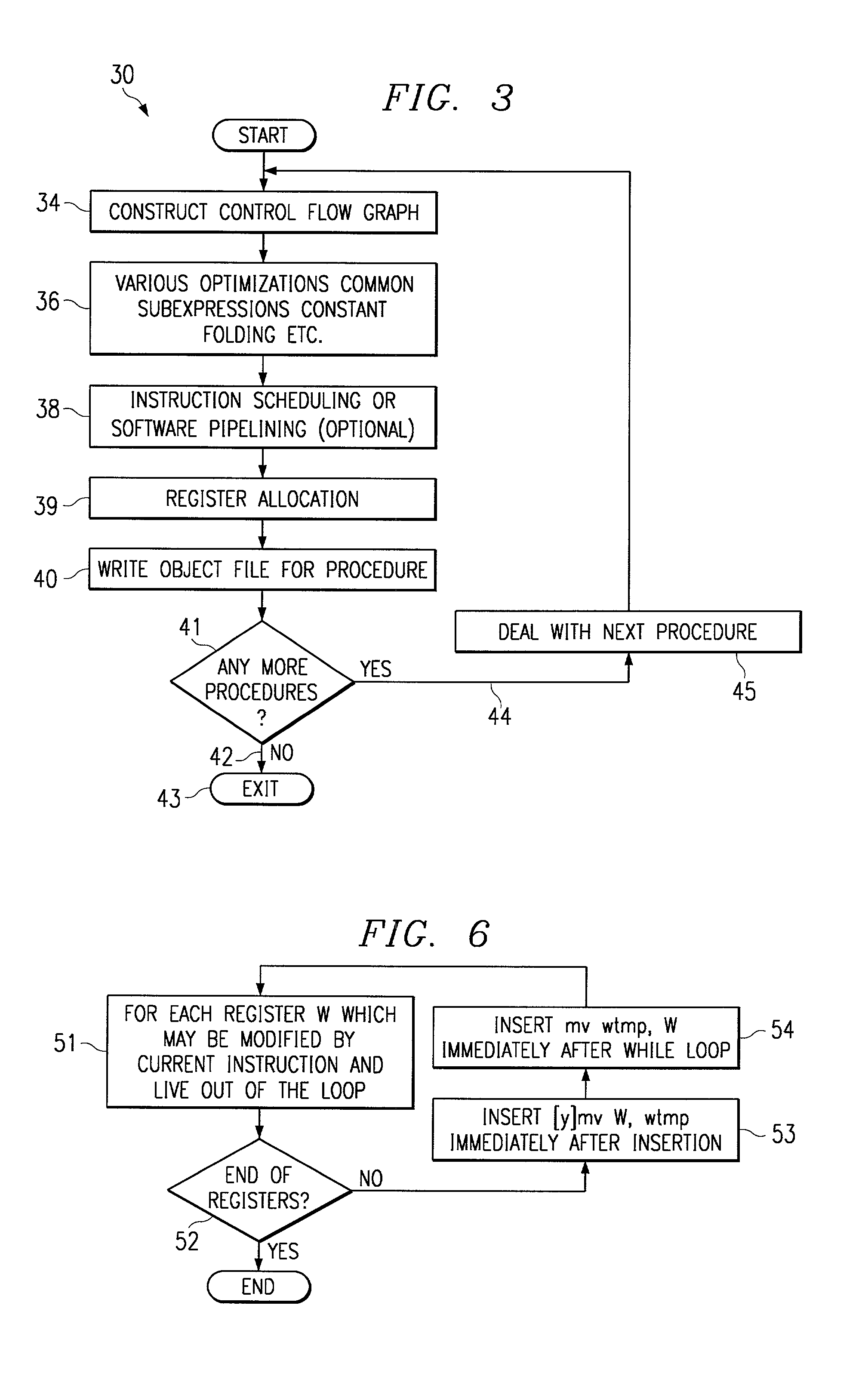

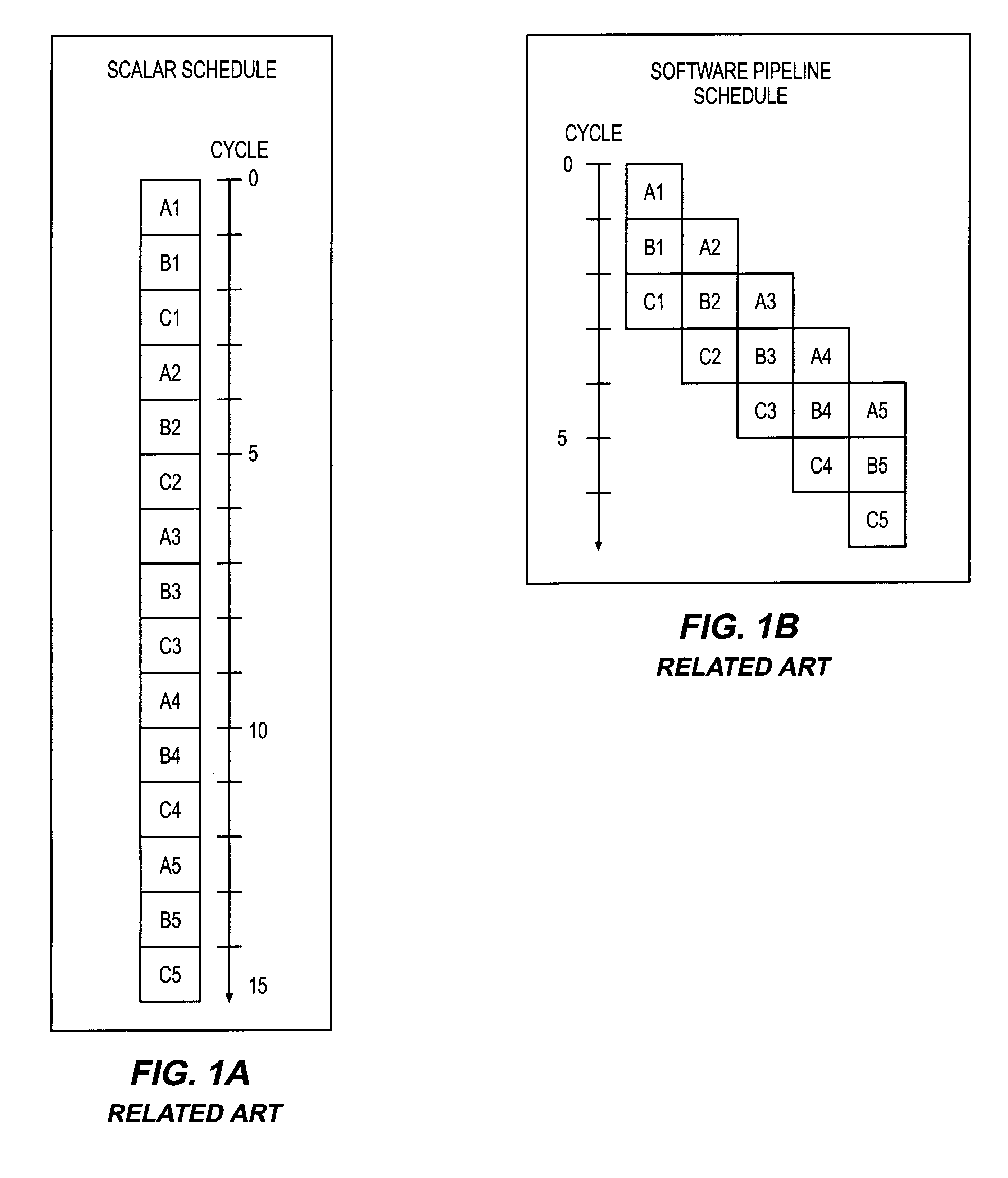

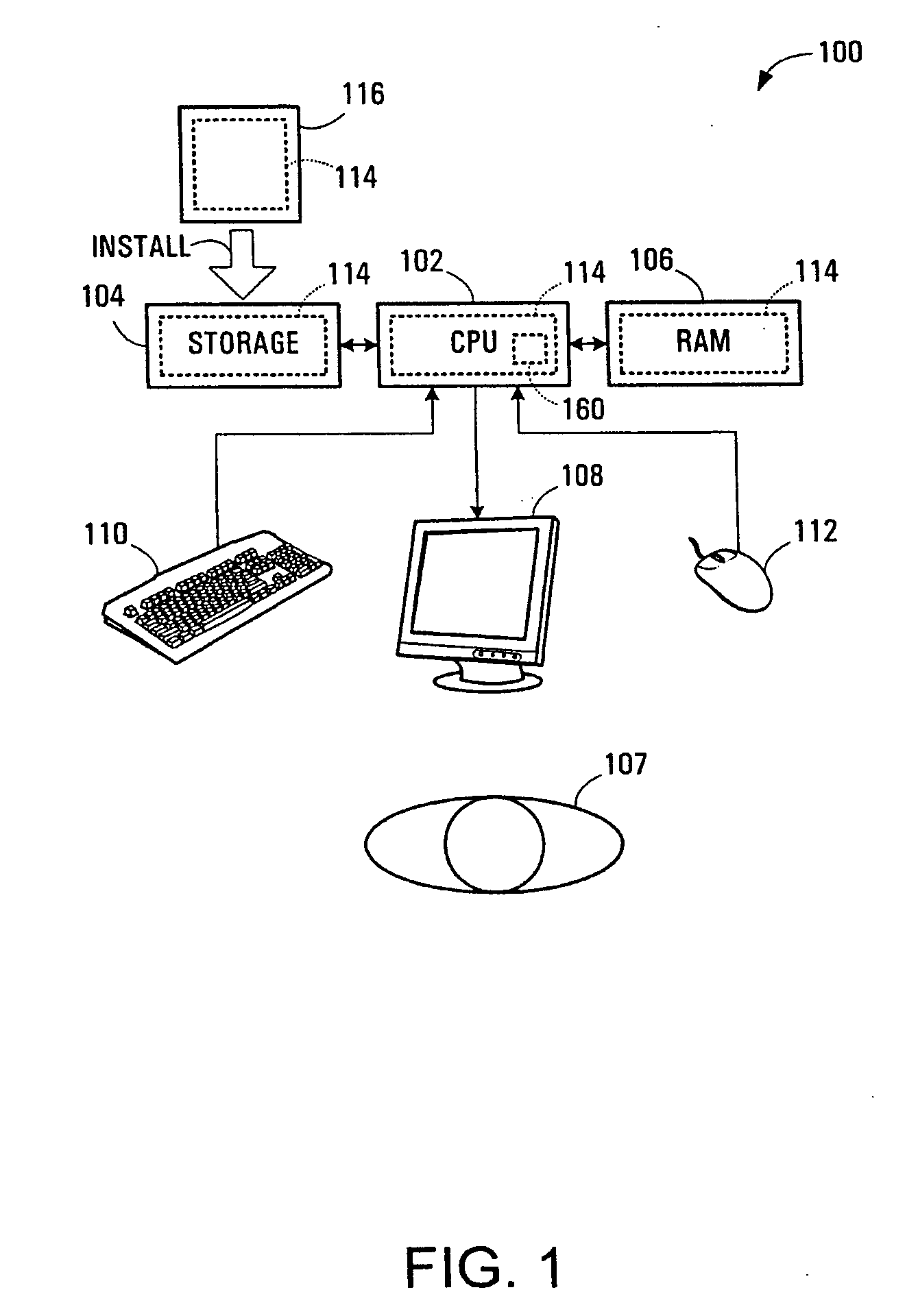

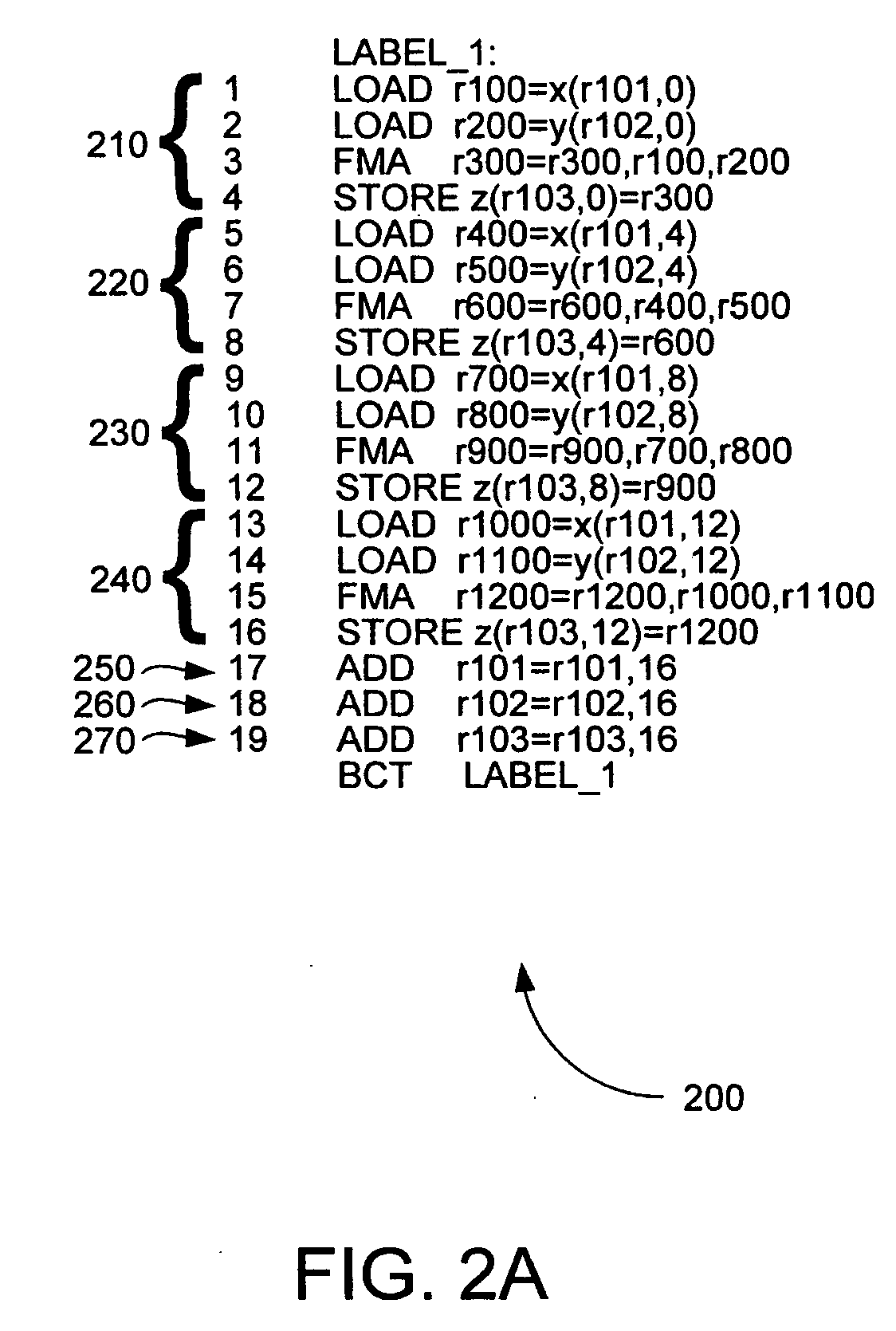

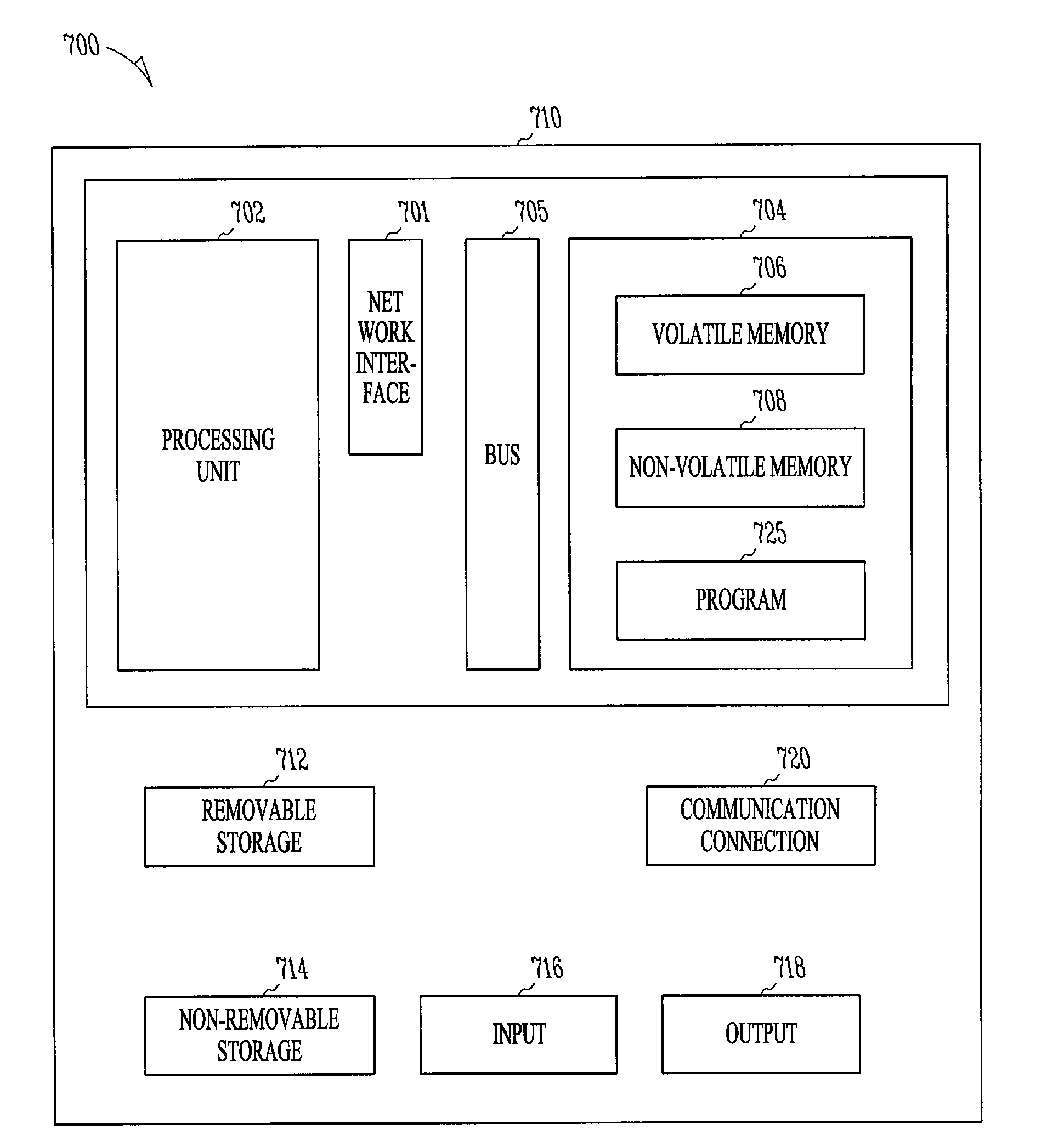

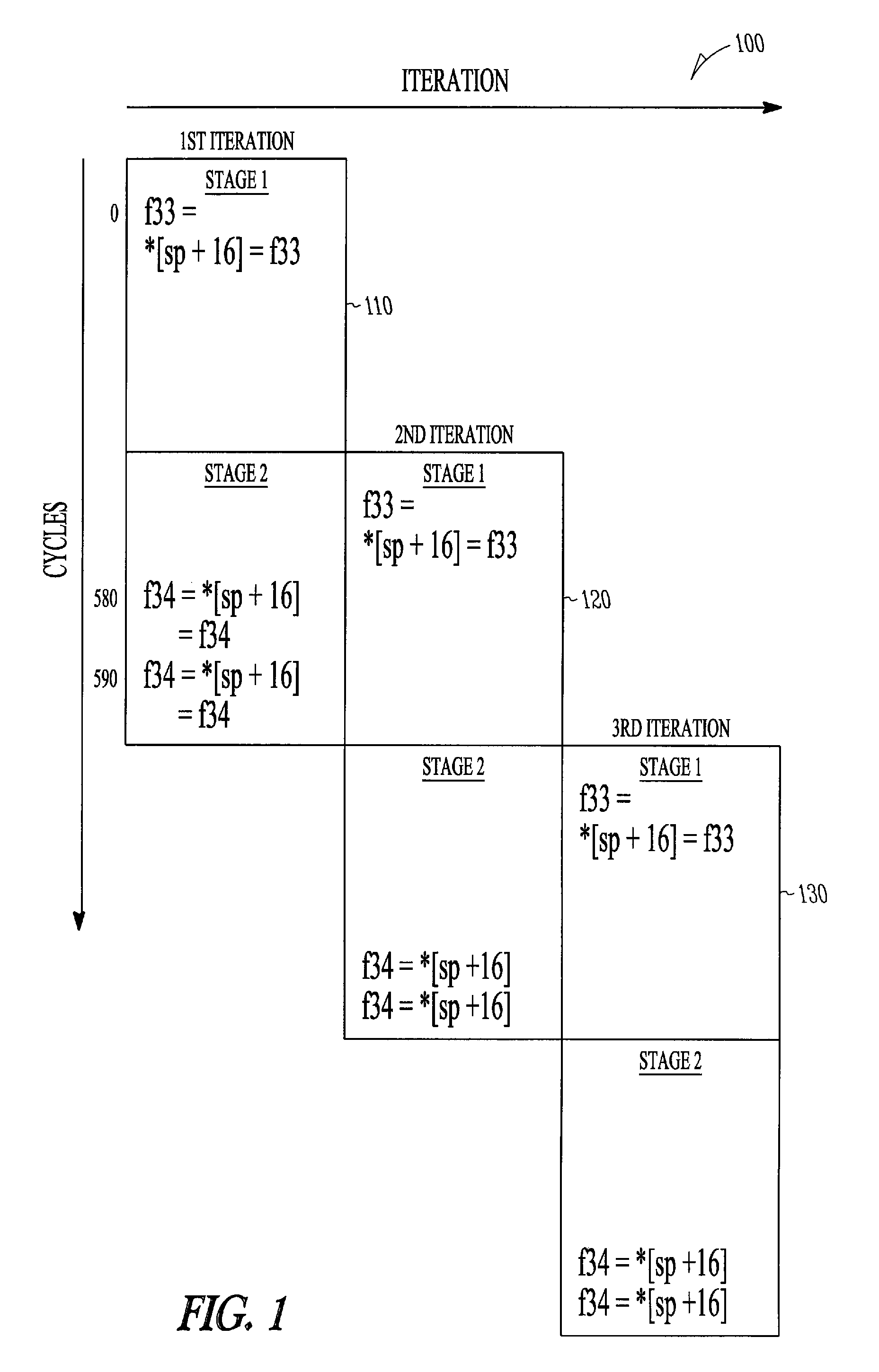

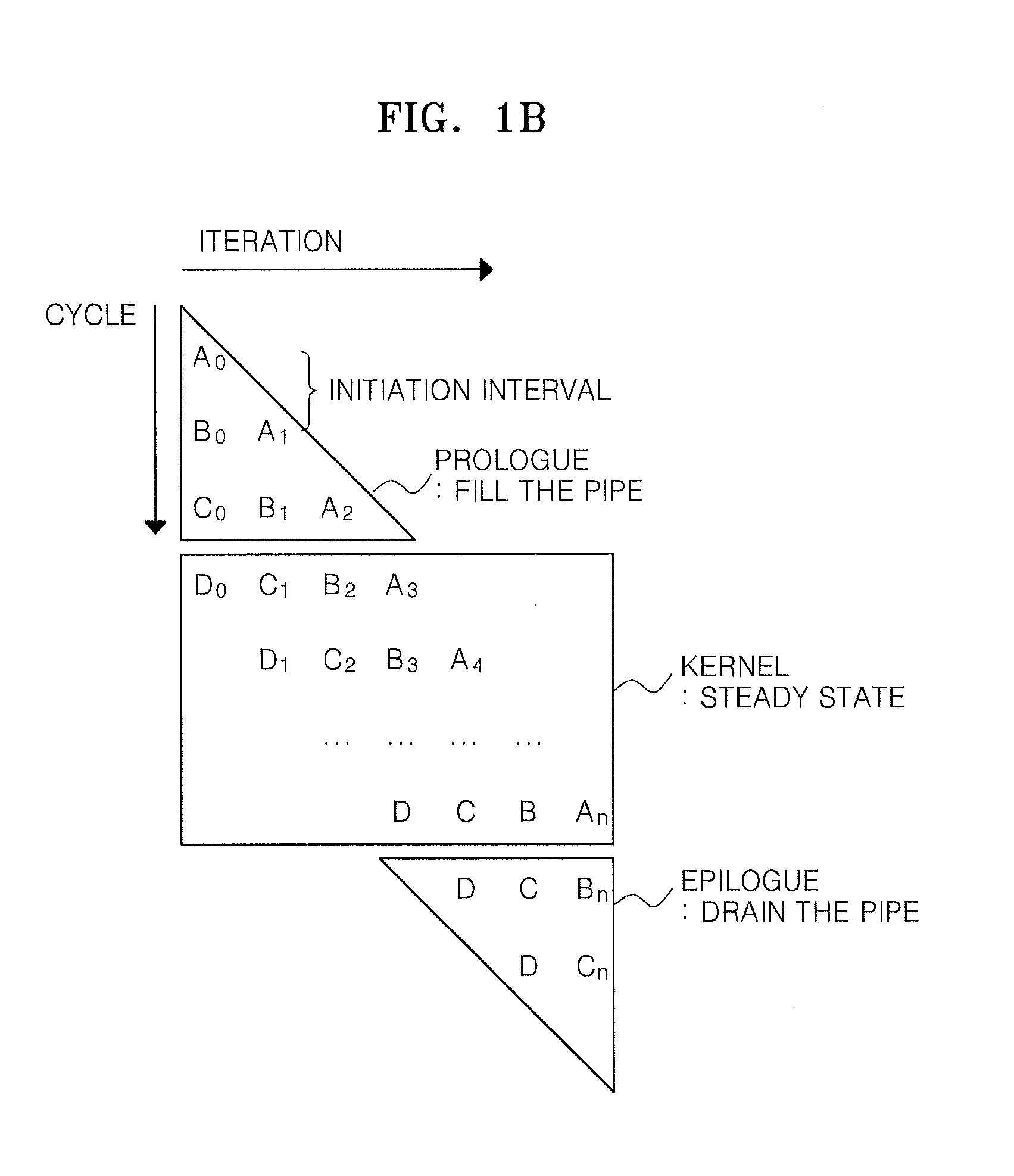

In computer science, software pipelining is a technique used to optimize loops in a manner that parallels hardware pipelining. Software pipelining is a type of out-of-order execution, except that the reordering is done by a compiler (or, in the case of hand-written assembly code, by the programmer) instead of the processor. Some computer architectures have explicit support for software pipelining, notably Intel's IA-64 architecture.
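
As a minimal sketch of the idea, the following Python code restructures a three-stage loop (load, compute, store) by hand into a prologue, a kernel, and an epilogue. The function names and the doubling operation are hypothetical, chosen only for illustration. Python does not execute the stages in parallel, so this shows the shape of the transformed loop rather than any speedup.

```python
def plain_loop(src):
    """Reference loop: each iteration runs load -> compute -> store in order."""
    dst = [0] * len(src)
    for i in range(len(src)):
        loaded = src[i]          # stage 1: load
        product = loaded * 2     # stage 2: compute
        dst[i] = product         # stage 3: store
    return dst


def pipelined_loop(src):
    """Same computation, restructured so one kernel pass touches three iterations."""
    n = len(src)
    if n < 2:
        return plain_loop(src)   # too short to fill the pipeline
    dst = [0] * n

    # Prologue: start iterations 0 and 1 to fill the pipeline.
    loaded = src[0]
    product = loaded * 2
    loaded = src[1]

    # Kernel: store for iteration i, compute for i + 1, load for i + 2.
    for i in range(n - 2):
        dst[i] = product
        product = loaded * 2
        loaded = src[i + 2]

    # Epilogue: drain the two iterations still in flight.
    dst[n - 2] = product
    dst[n - 1] = loaded * 2
    return dst
```

On a machine with several functional units, the three statements in the kernel belong to different iterations and have no dependence on one another within a pass, which is what lets a compiler schedule them together.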

Method and apparatus for prefetching recursive data structures

Inactive | US6848029B2 | Improve cache hit ratio | Potential throughput of the computer system | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Application software | Cache hit rate

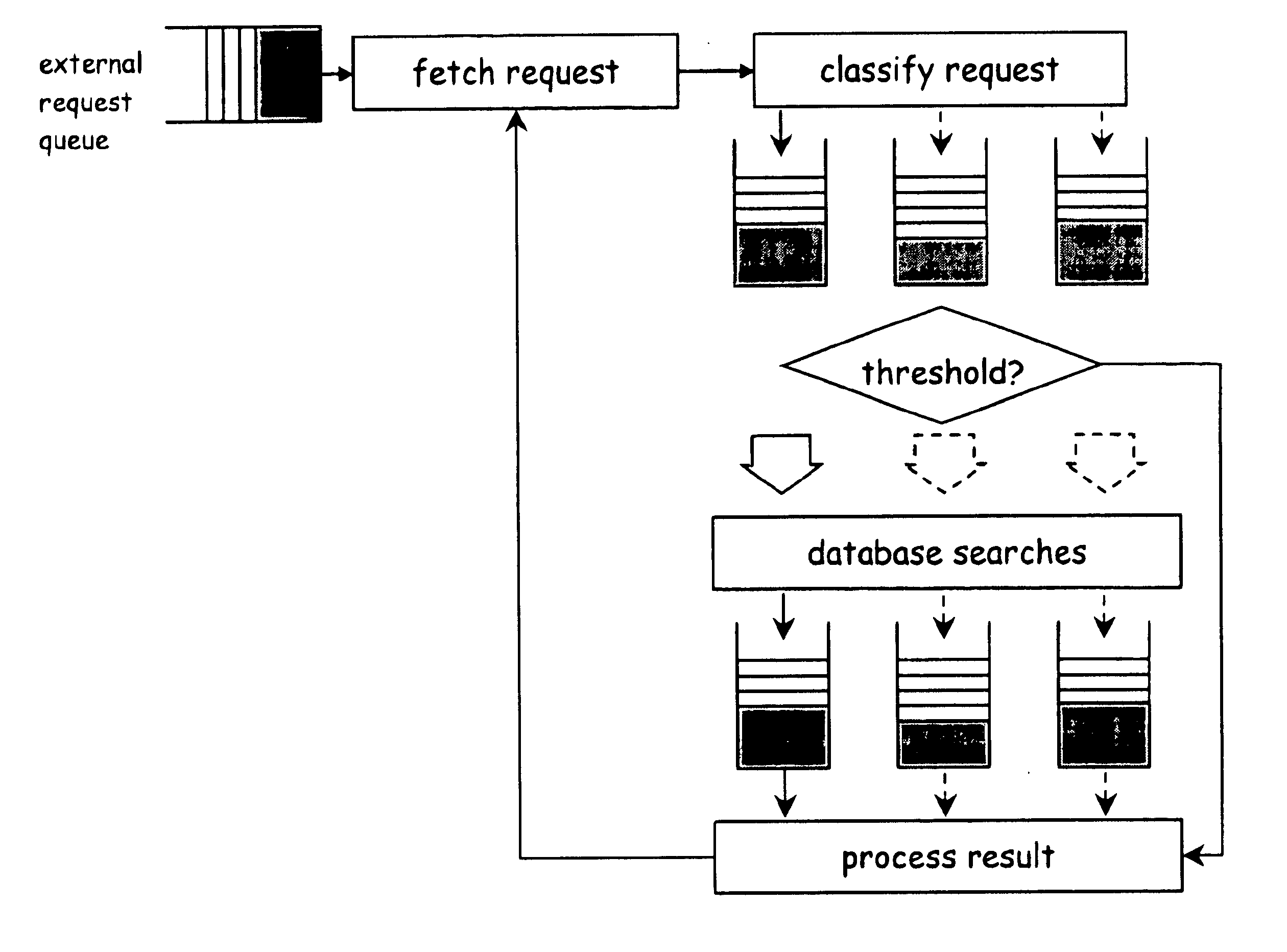

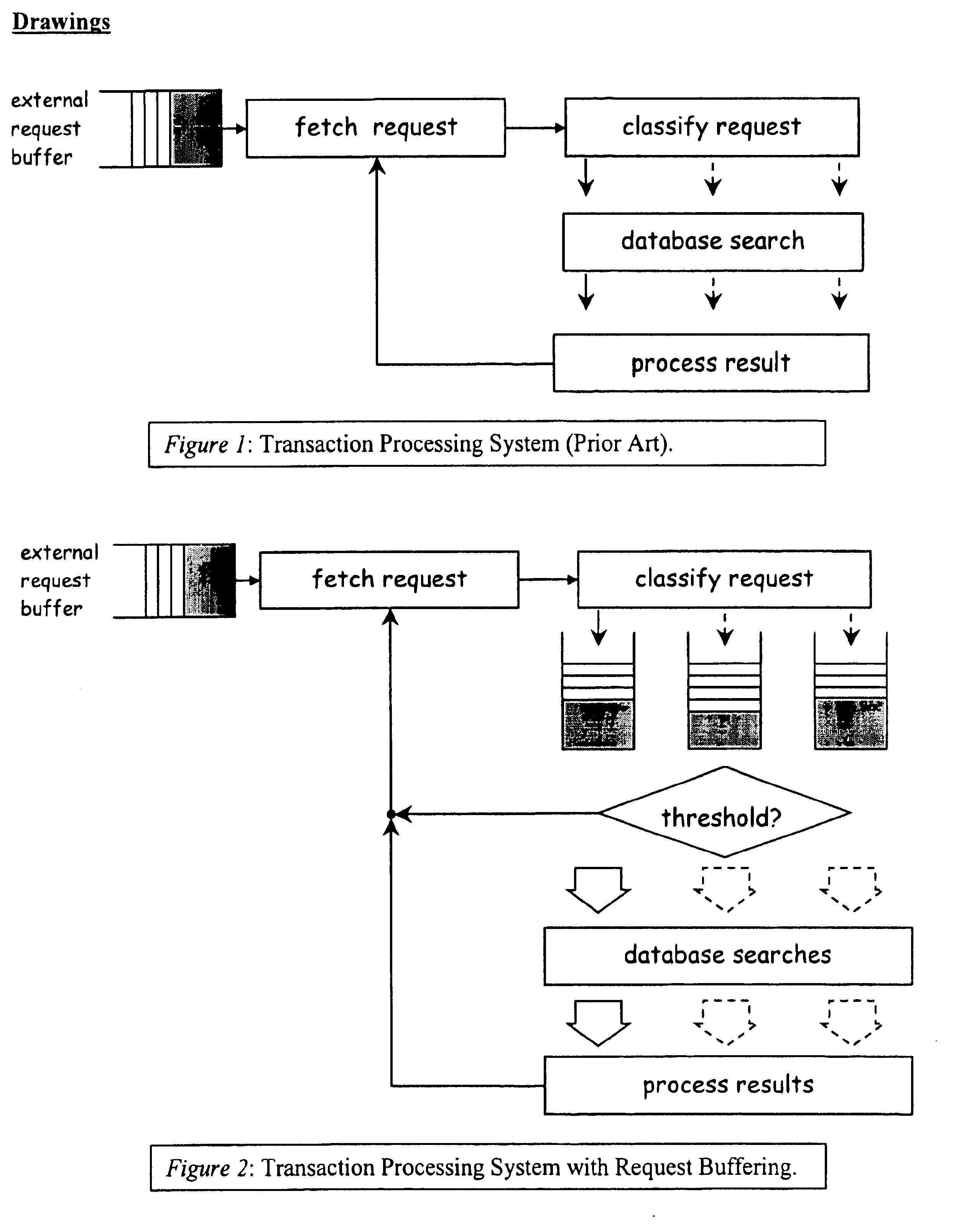

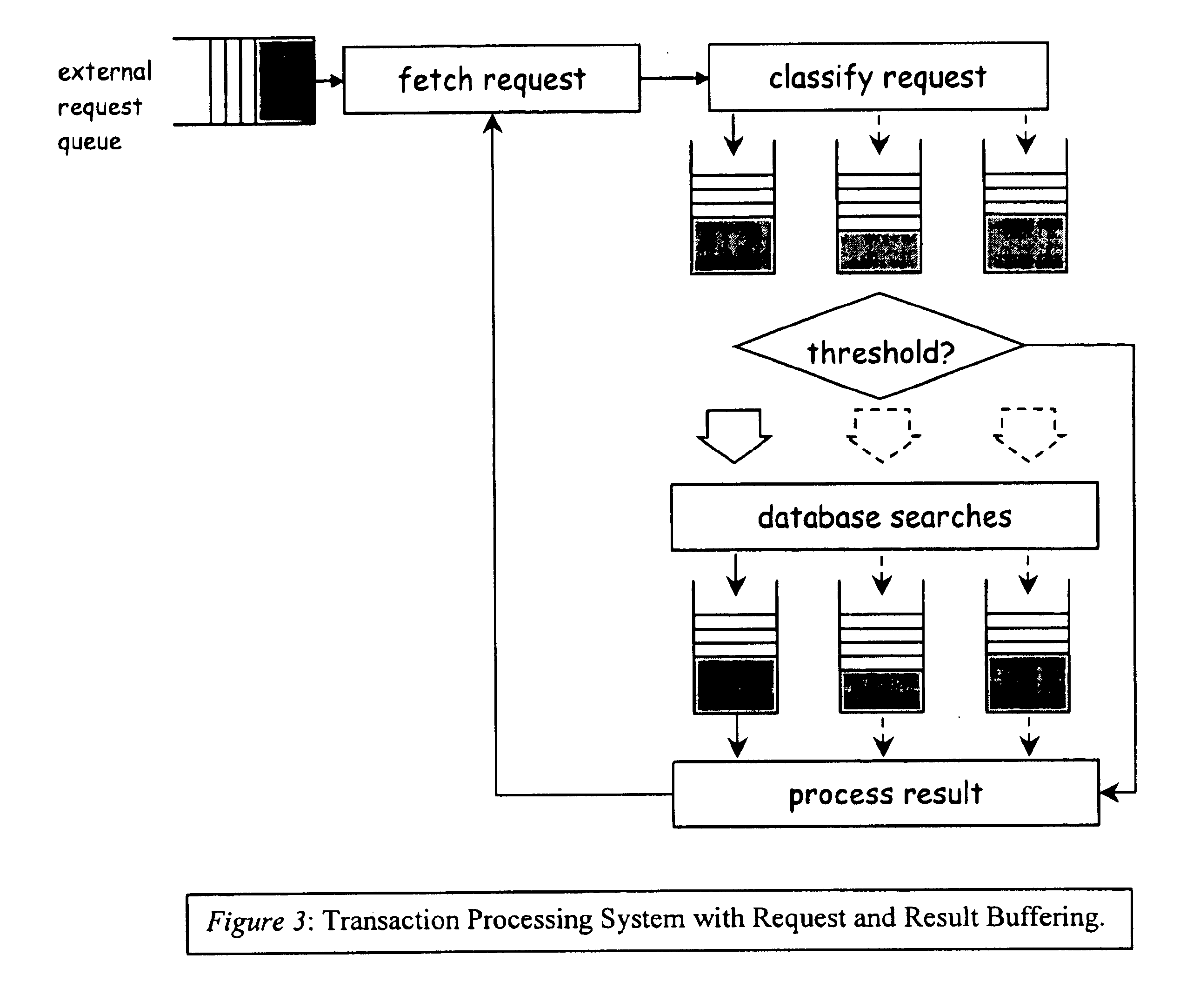

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1
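
The aggregation-and-pipelining idea in this abstract can be sketched, under simplifying assumptions, as a round-robin over several binary search tree lookups. The `Node` class, the `pipelined_lookups` function, and the `width` parameter are hypothetical names, and the thresholds mentioned in the abstract are omitted. Python has no prefetch instruction, so a comment marks where a native implementation would issue one.

```python
from collections import deque


class Node:
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right = key, left, right


def pipelined_lookups(root, keys, width=4):
    """Look up every key, keeping up to `width` traversals in flight."""
    pending = deque(enumerate(keys))      # accumulated requests
    in_flight = deque()                   # (request index, key, current node)
    found = [False] * len(keys)

    while pending or in_flight:
        # Refill the in-flight set as requests retire.
        while pending and len(in_flight) < width:
            idx, key = pending.popleft()
            in_flight.append((idx, key, root))

        # Advance one request by one node, then rotate to the next request.
        idx, key, node = in_flight.popleft()
        if node is None:
            continue                      # retired: key absent
        if key == node.key:
            found[idx] = True             # retired: key present
            continue
        child = node.left if key < node.key else node.right
        # A native implementation would issue a prefetch for `child` here,
        # so its latency overlaps with work on the other in-flight requests.
        in_flight.append((idx, key, child))
    return found
```

Each request advances only one node per turn, so by the time a request comes around again the node it needs has had `width - 1` other steps in which to arrive in cache.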

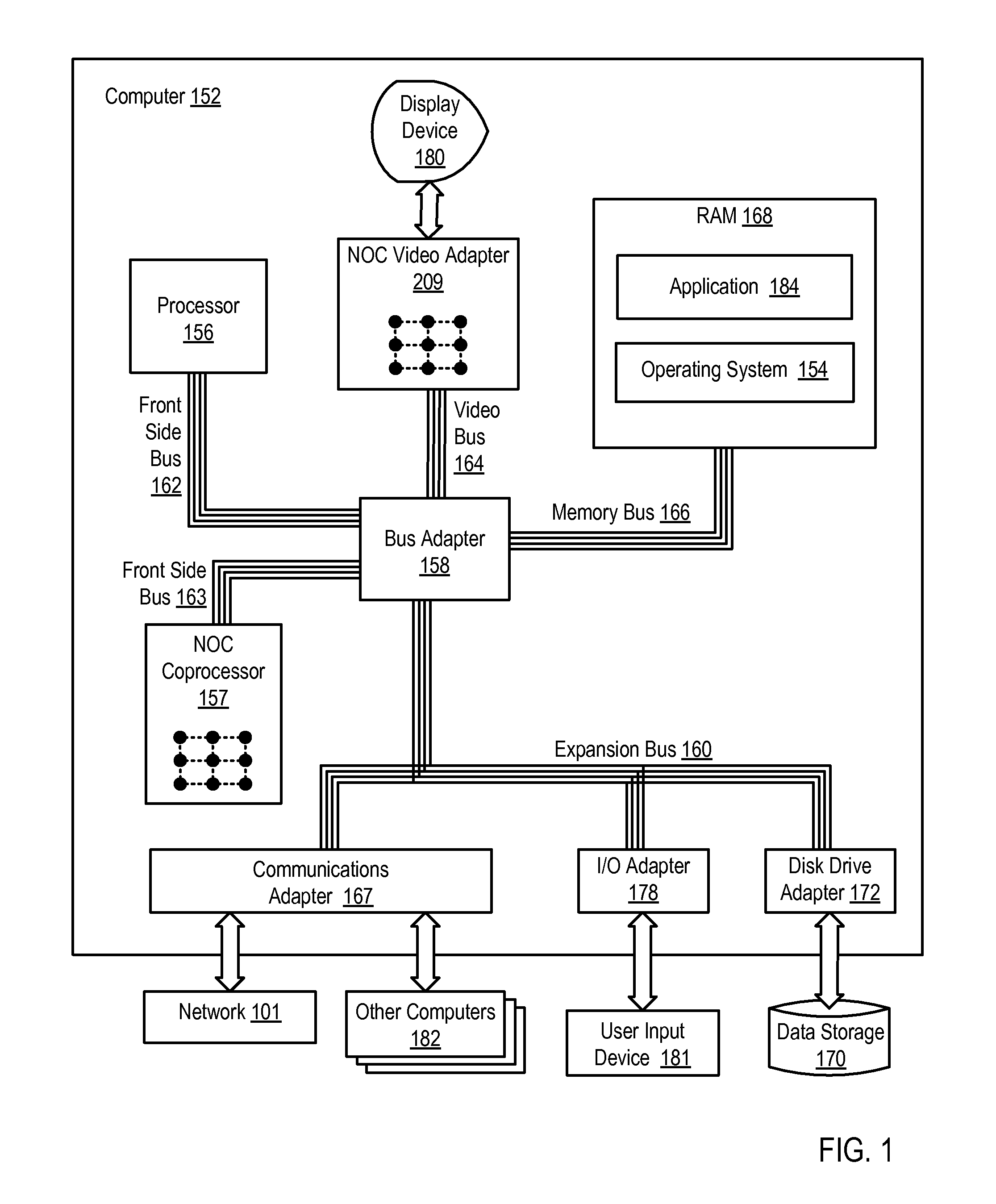

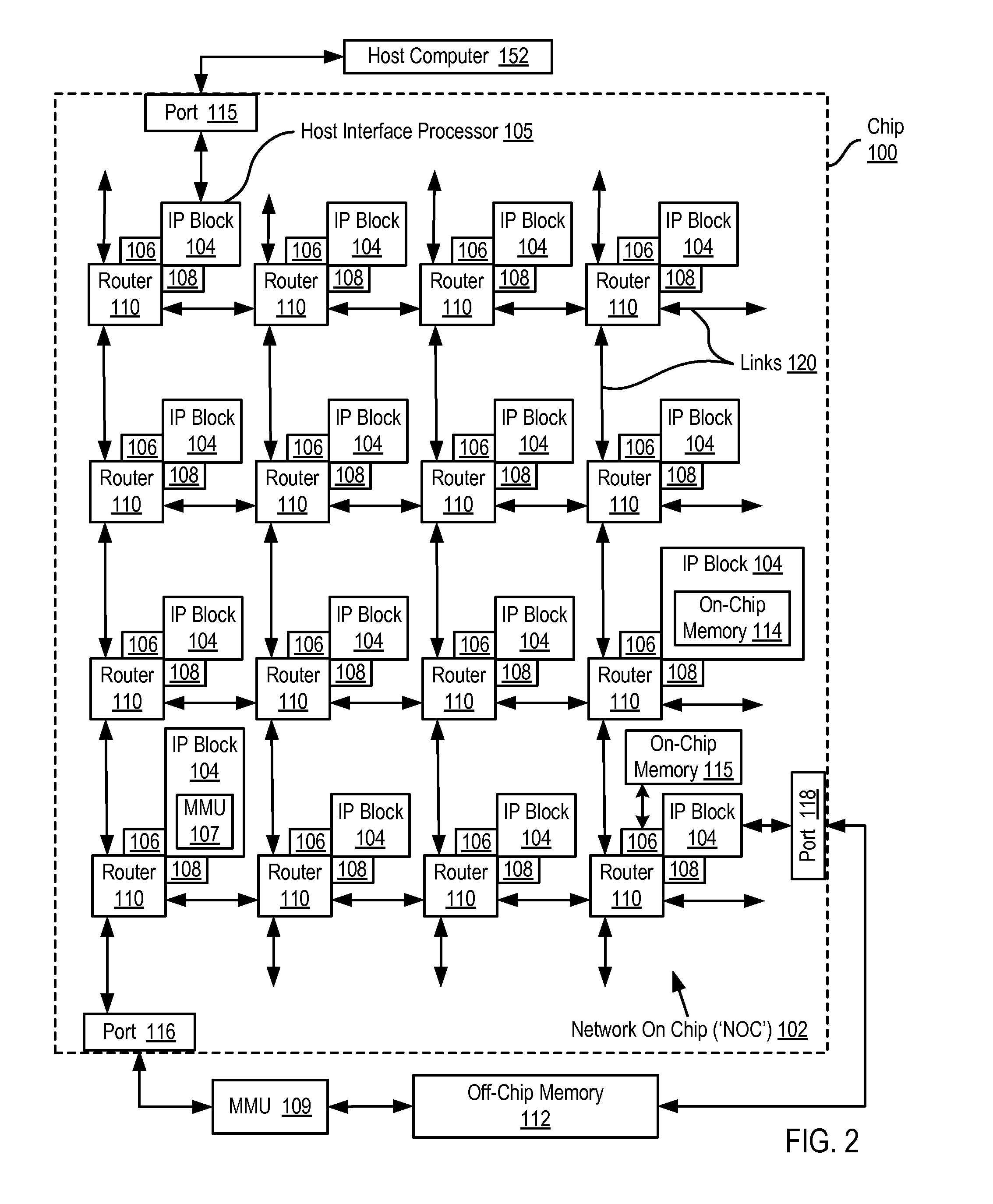

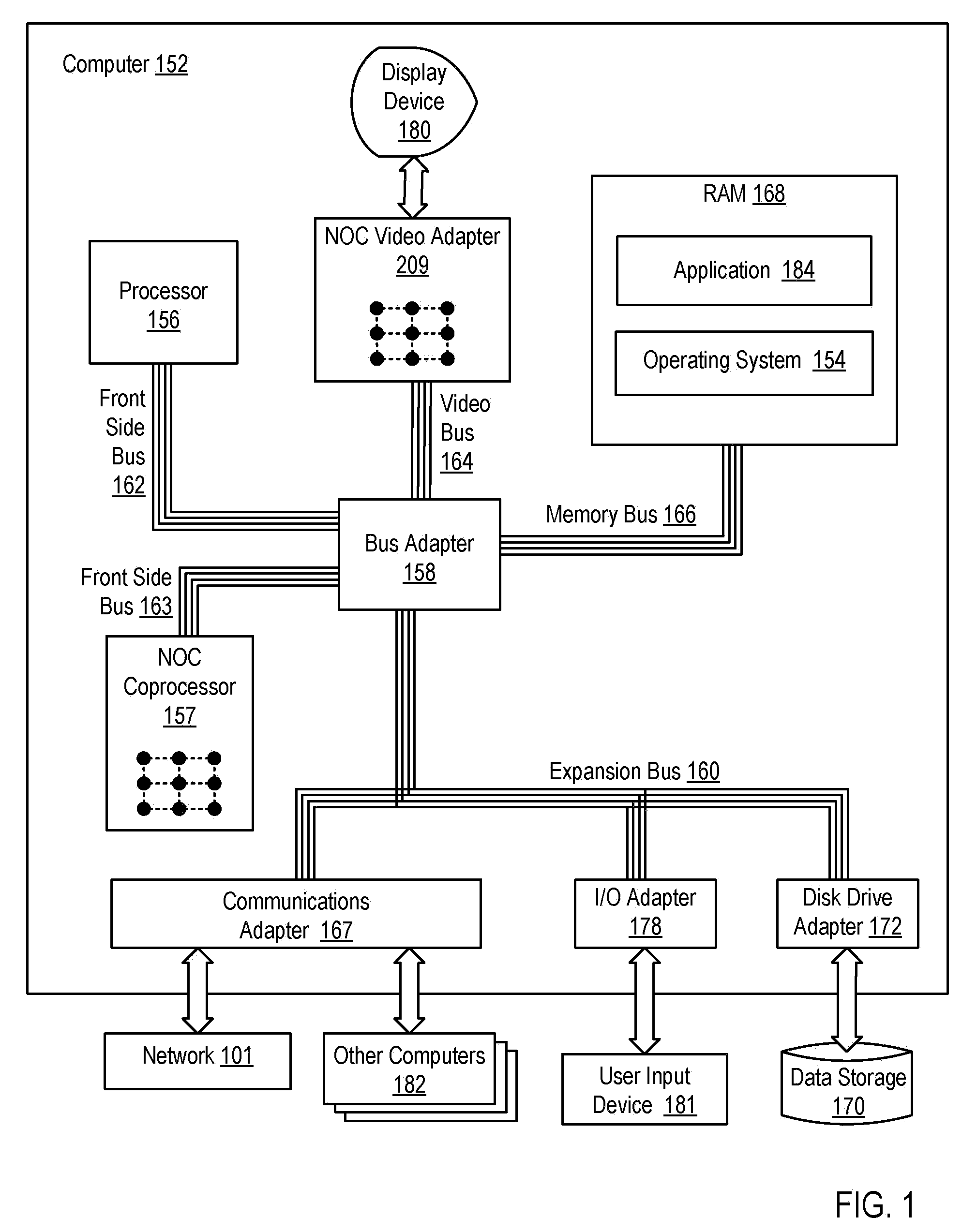

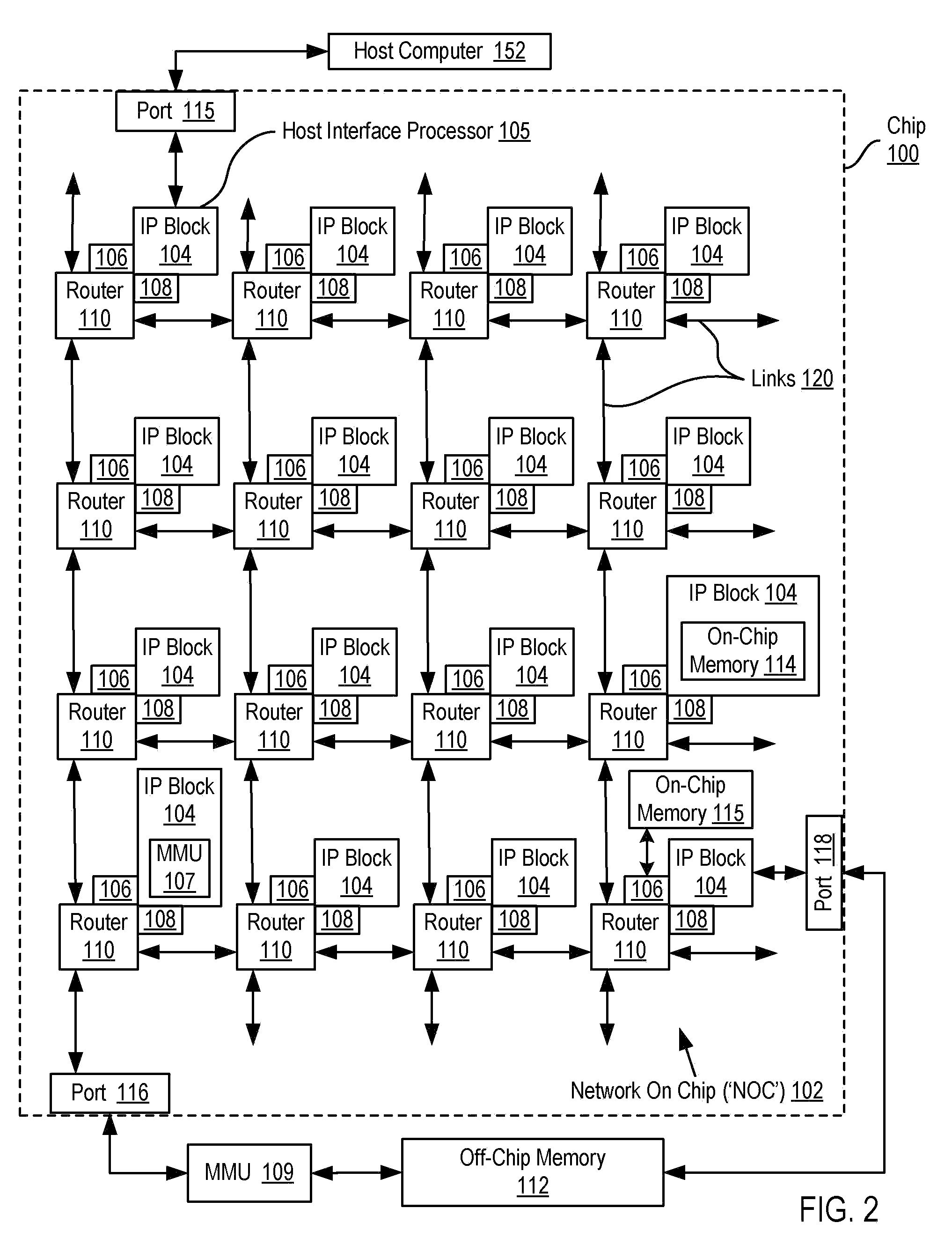

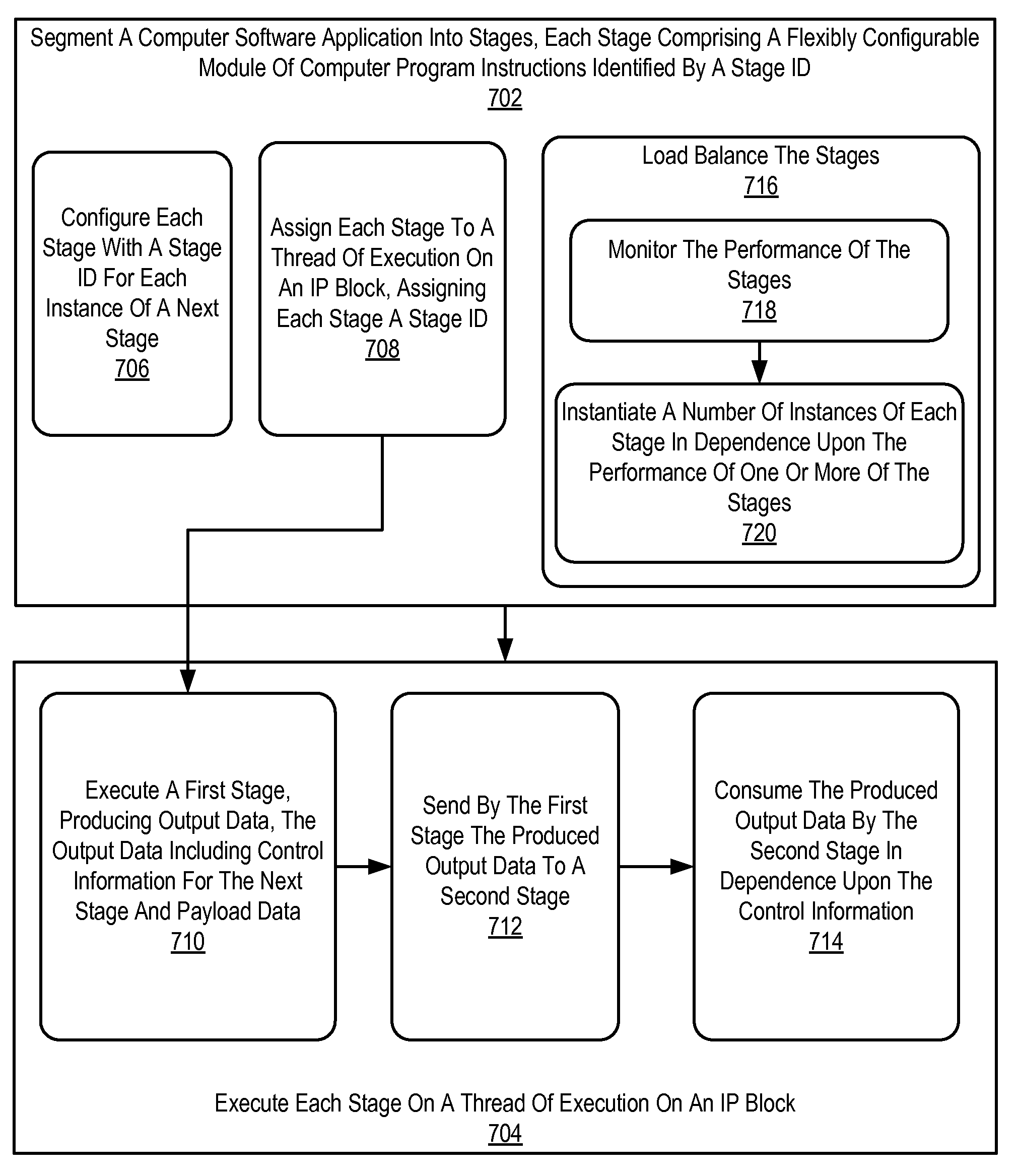

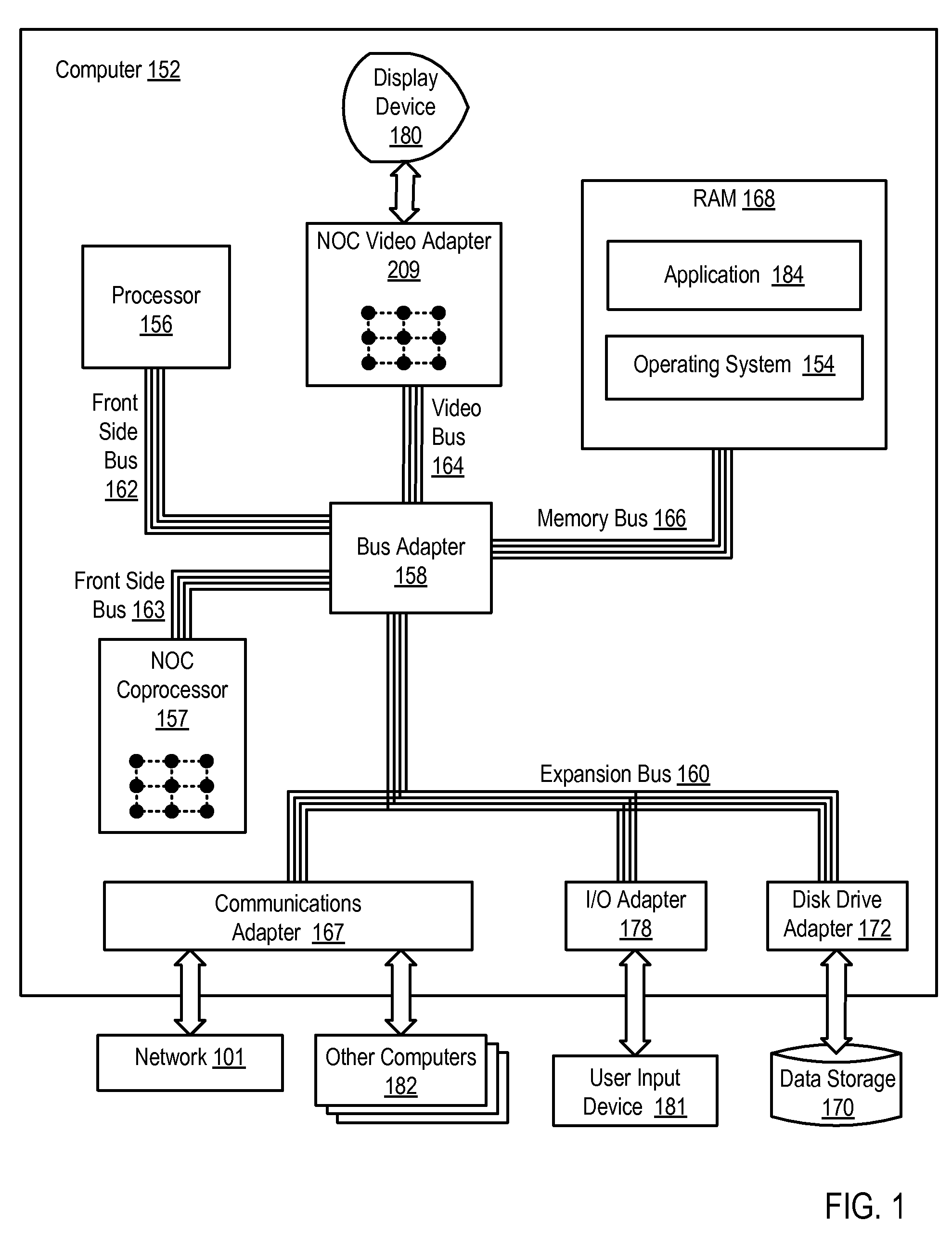

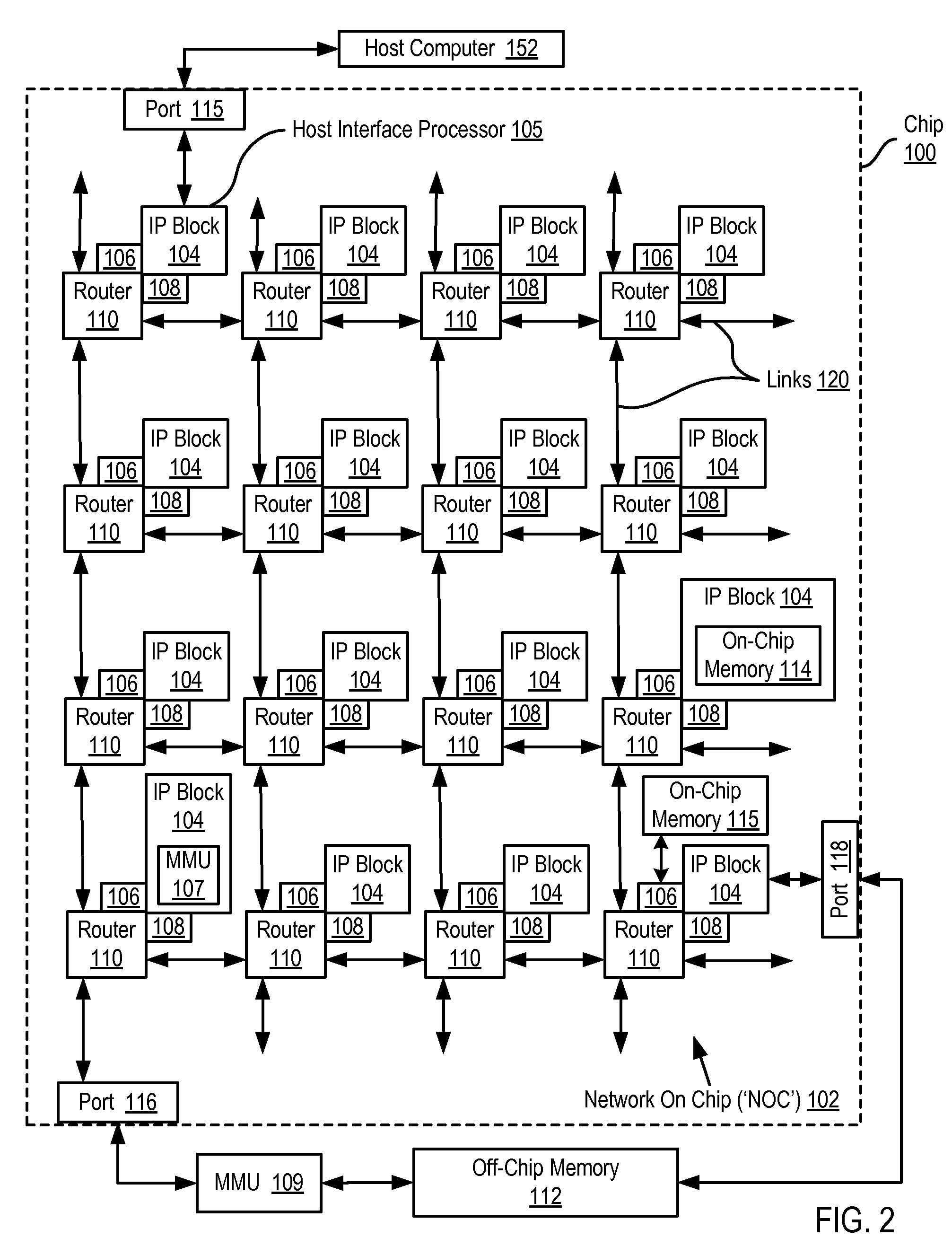

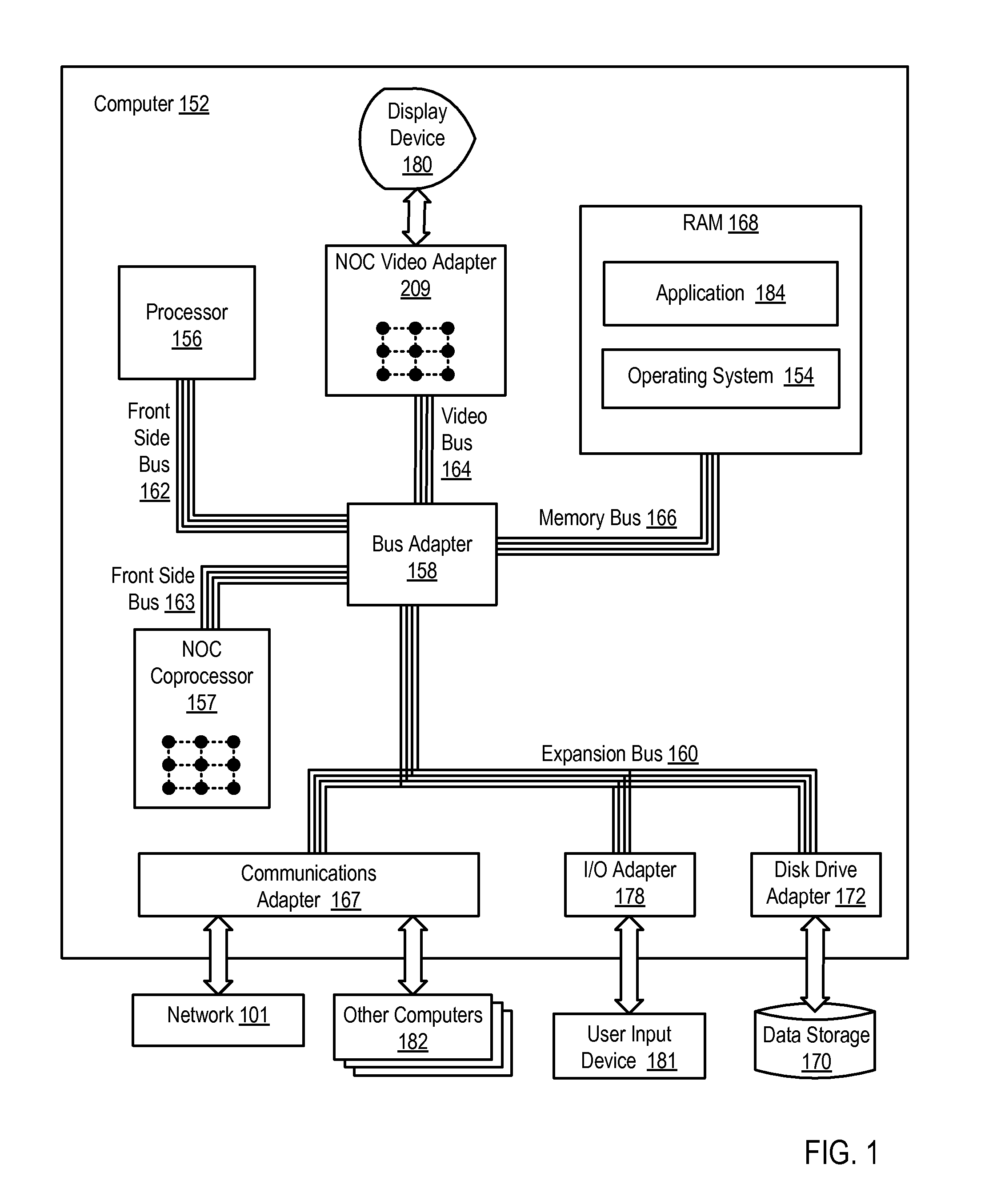

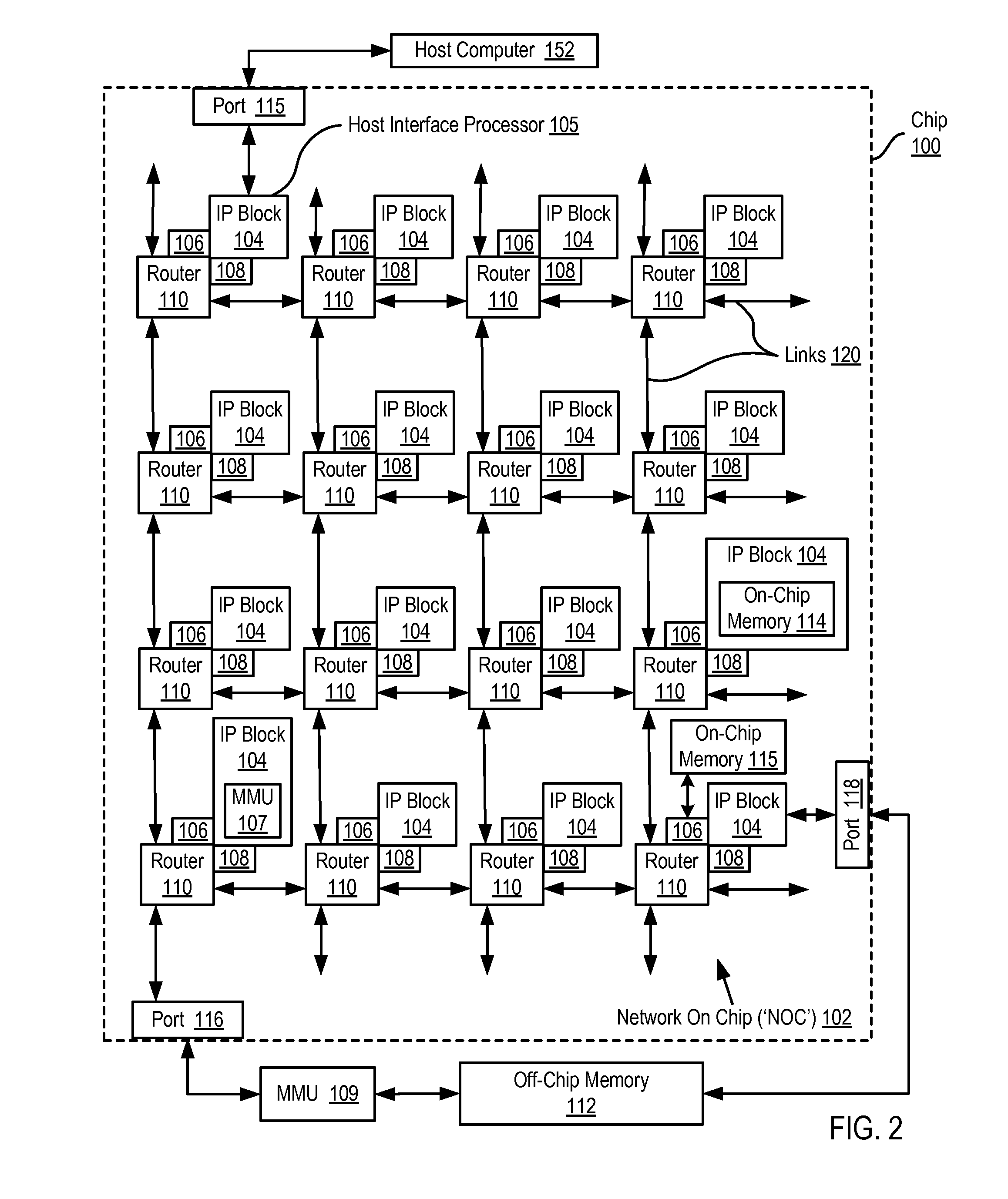

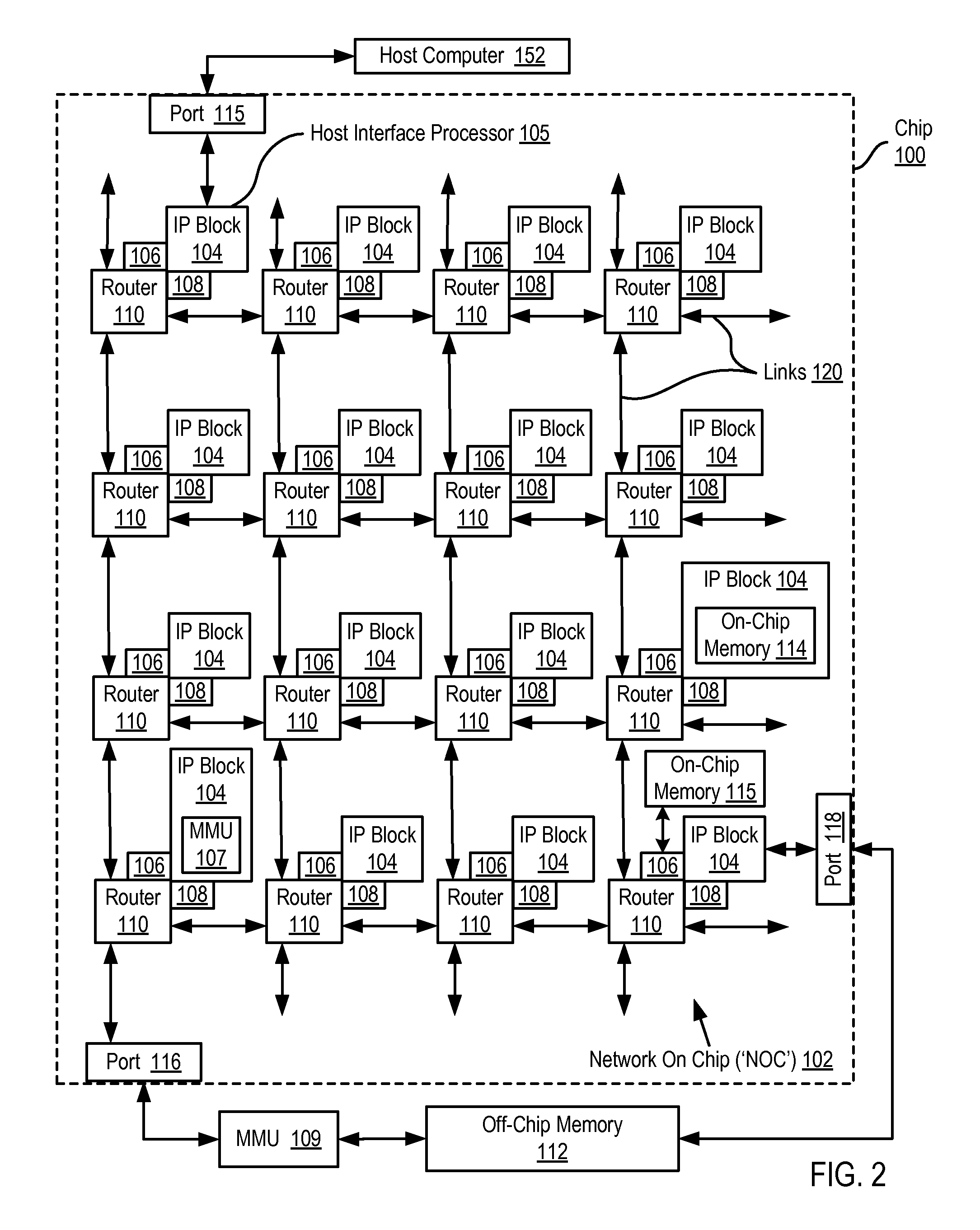

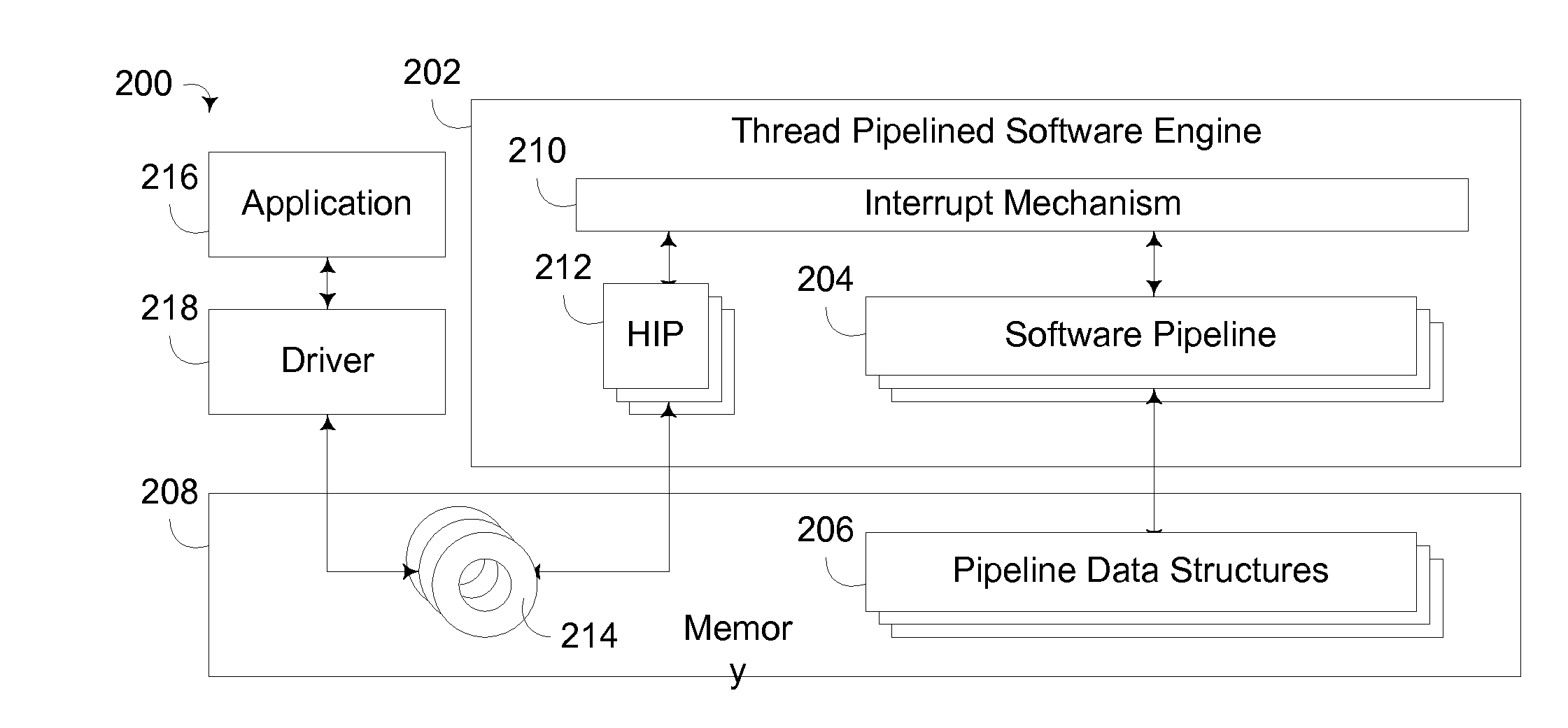

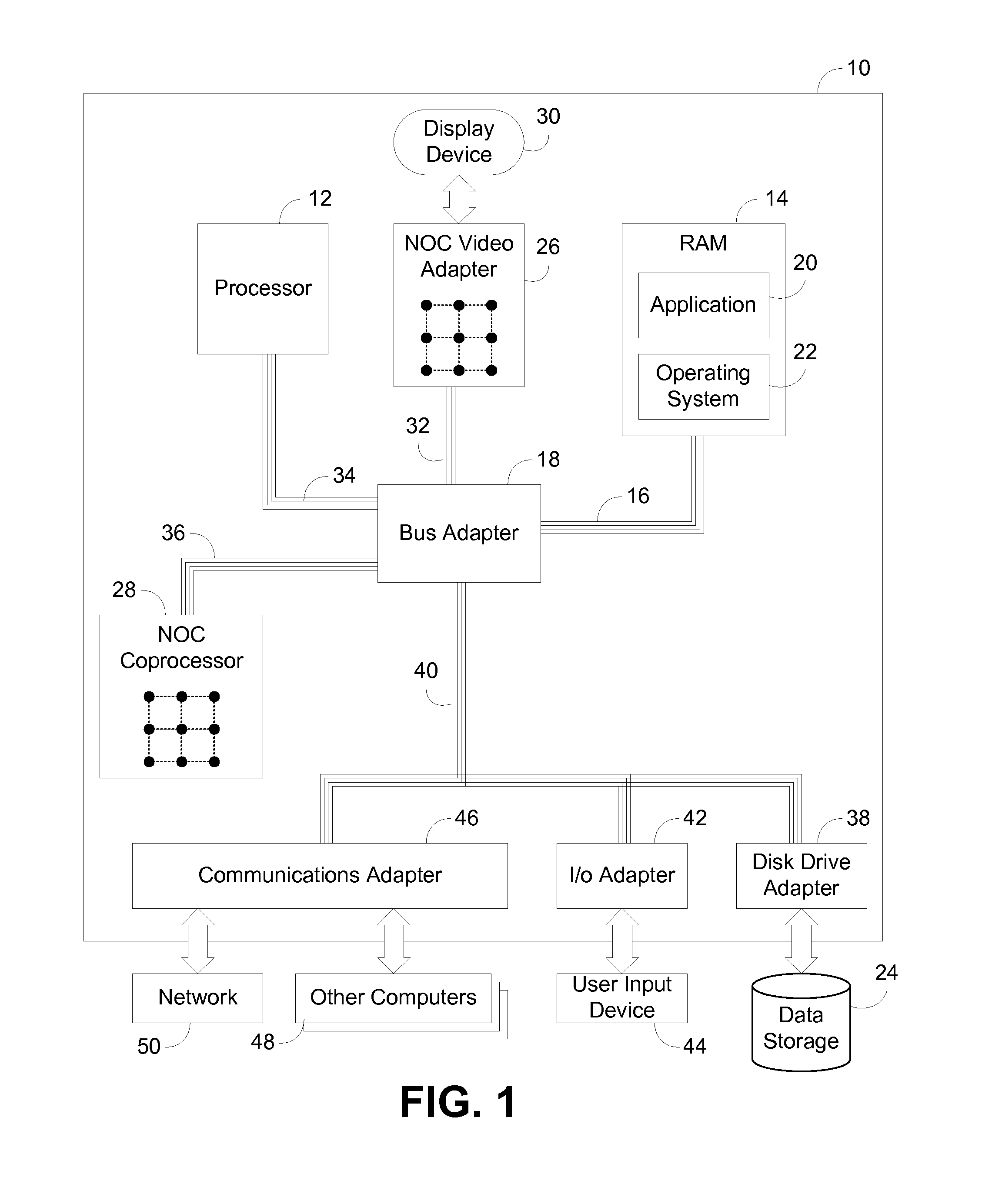

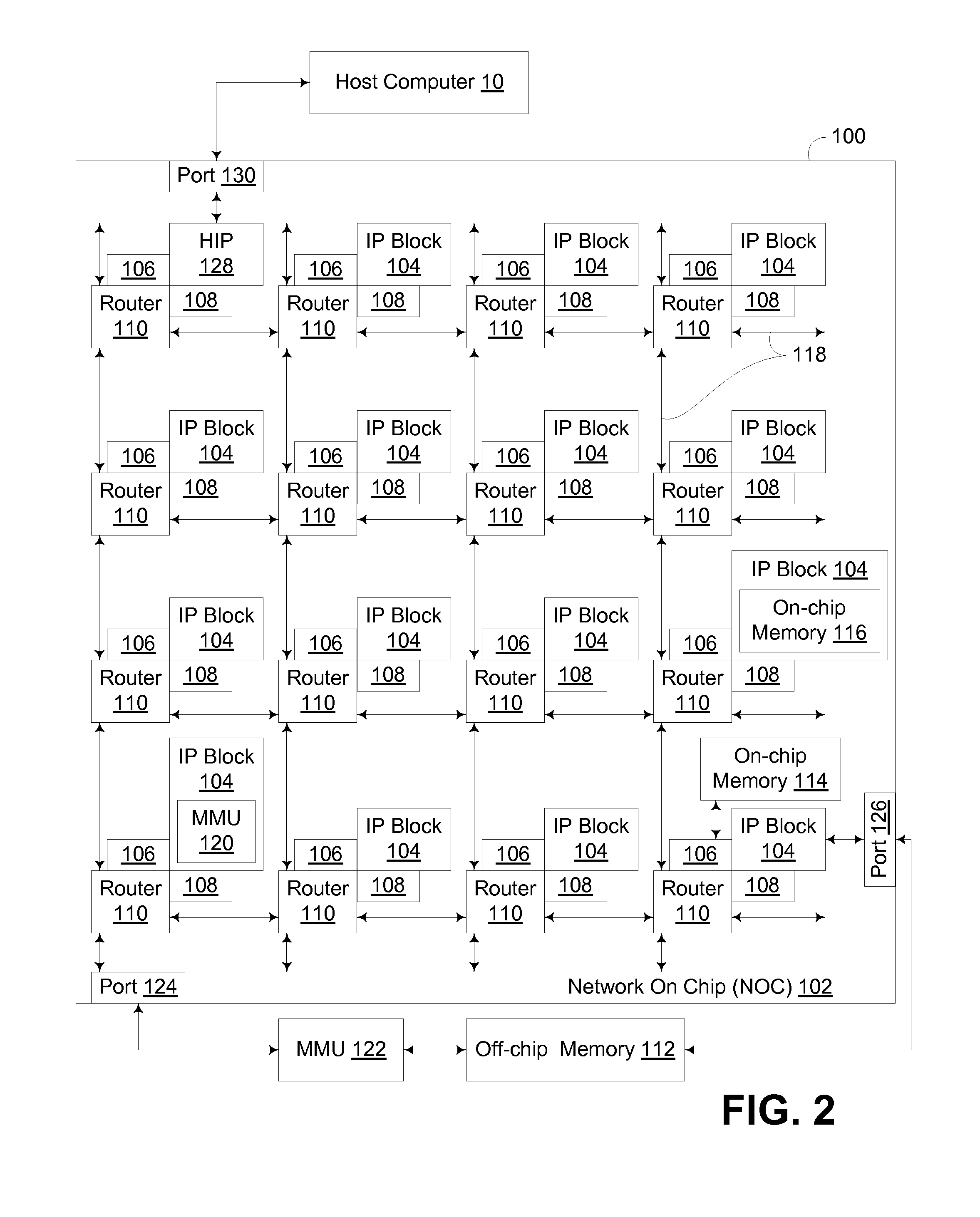

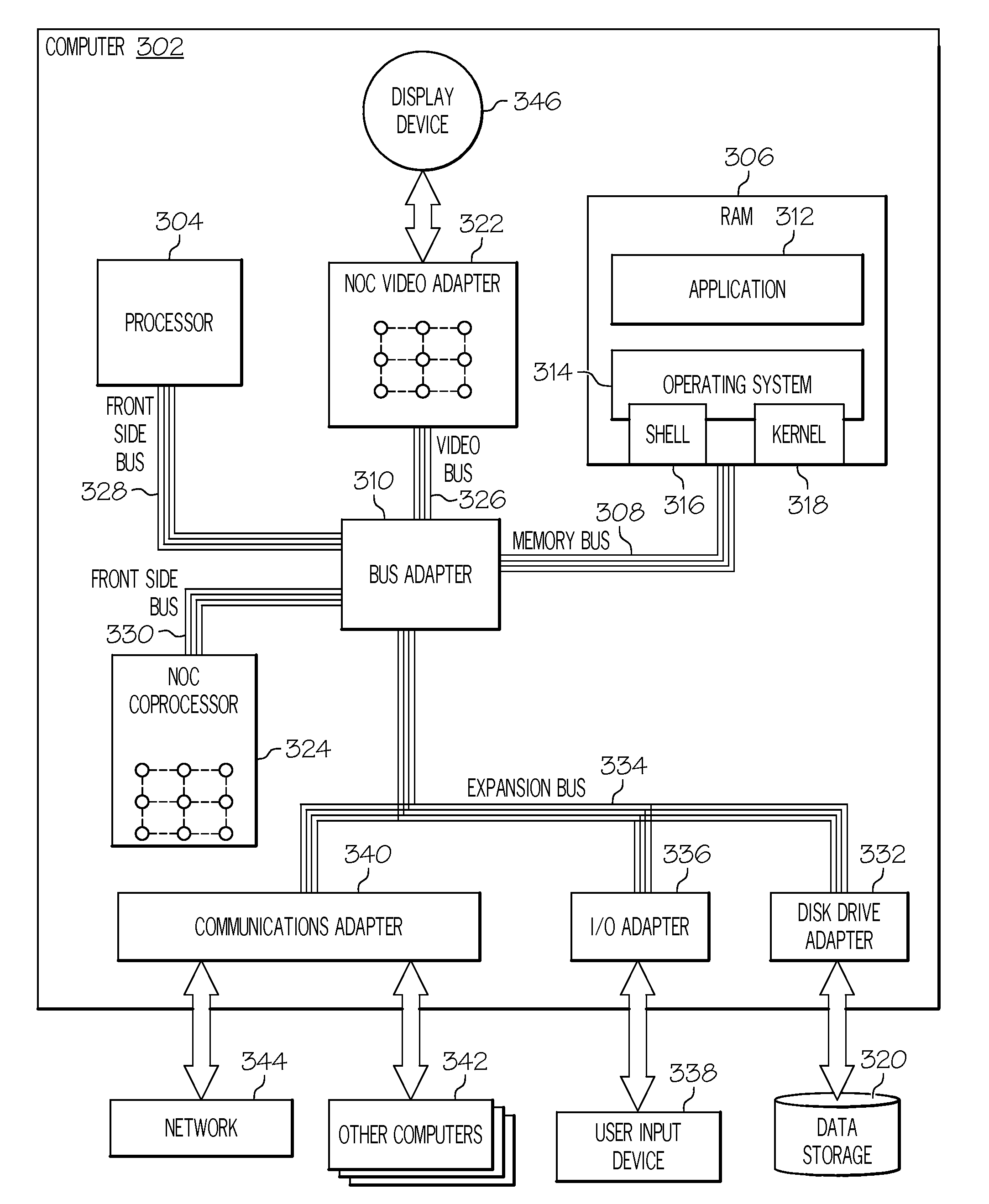

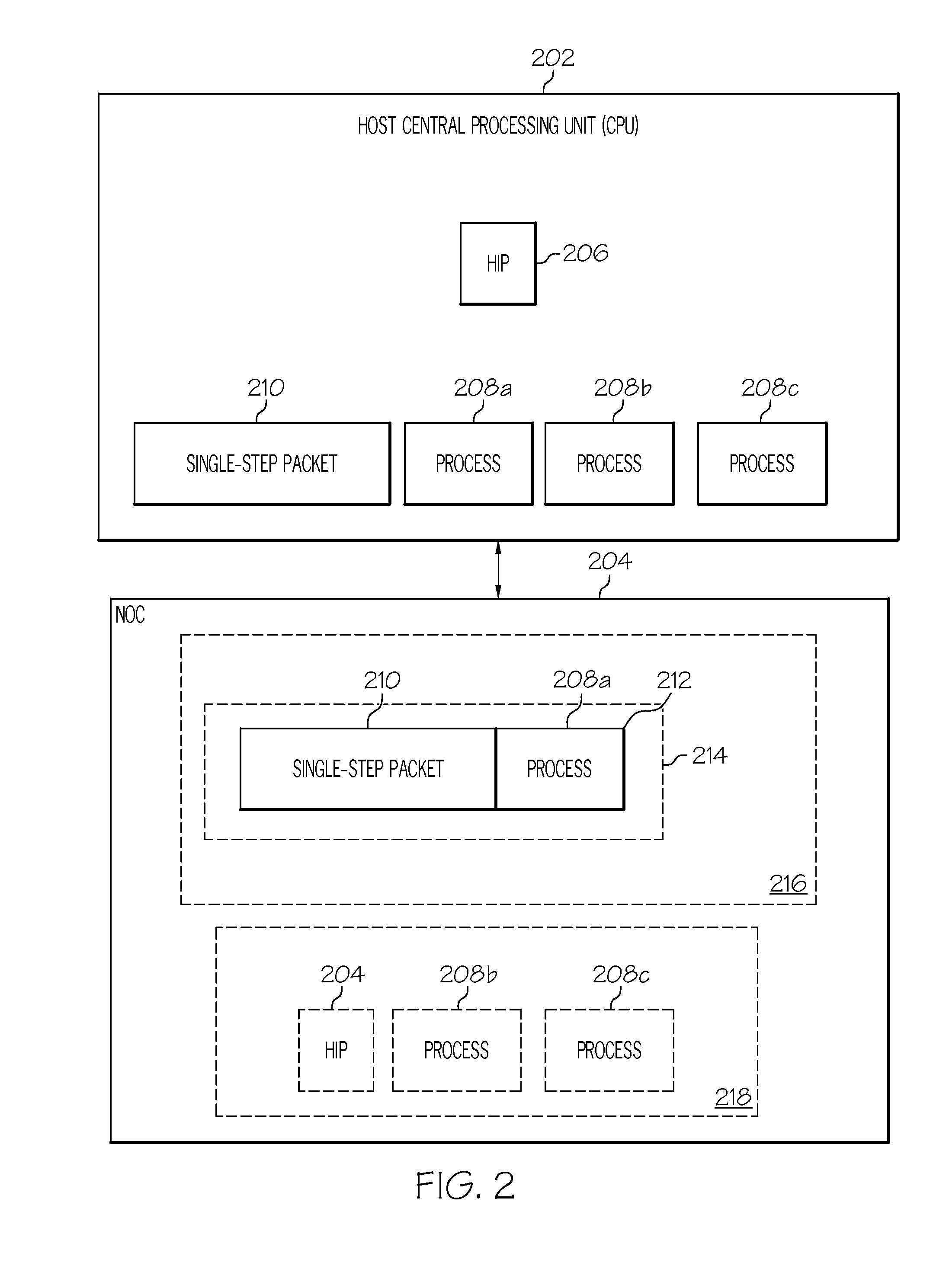

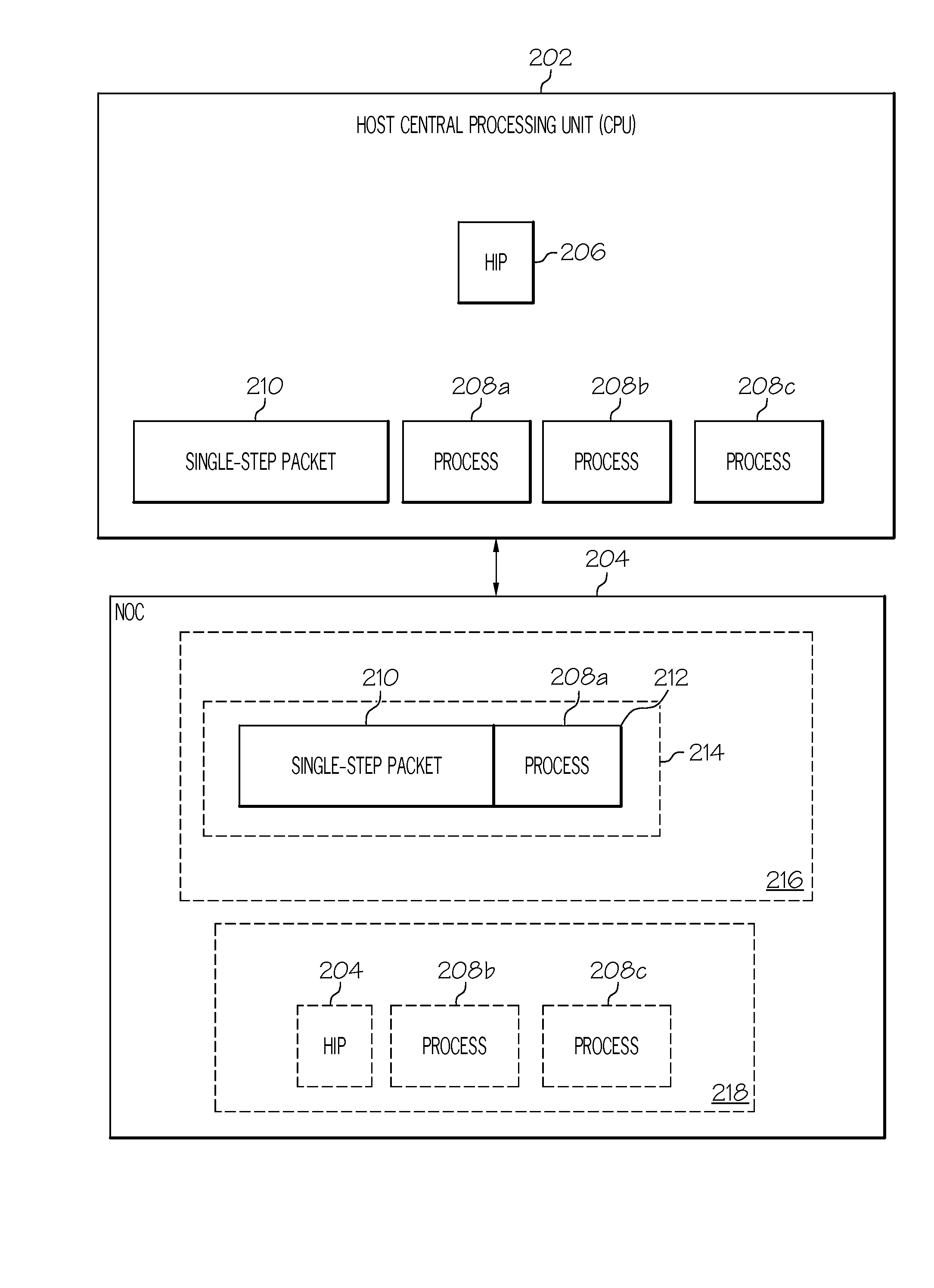

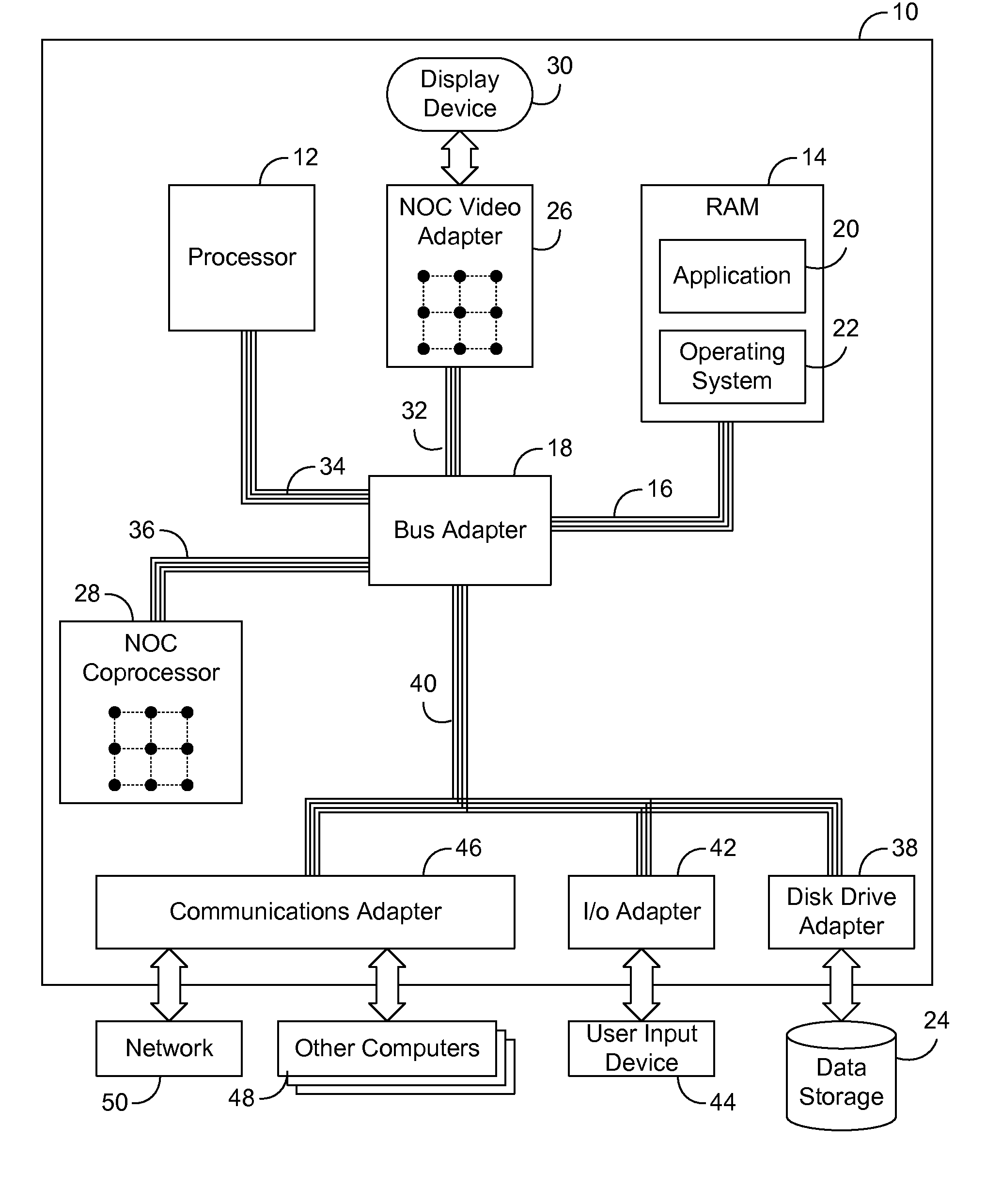

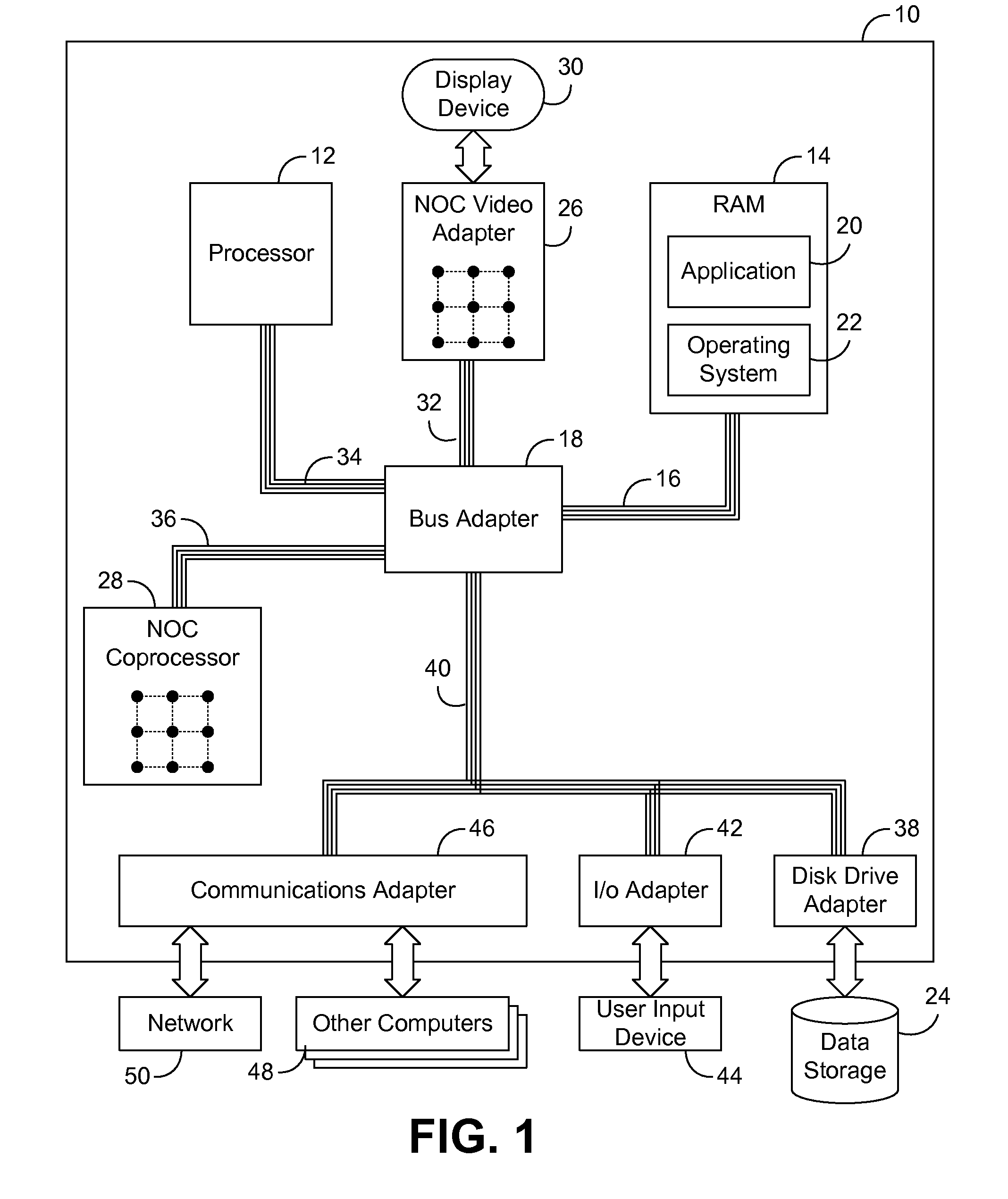

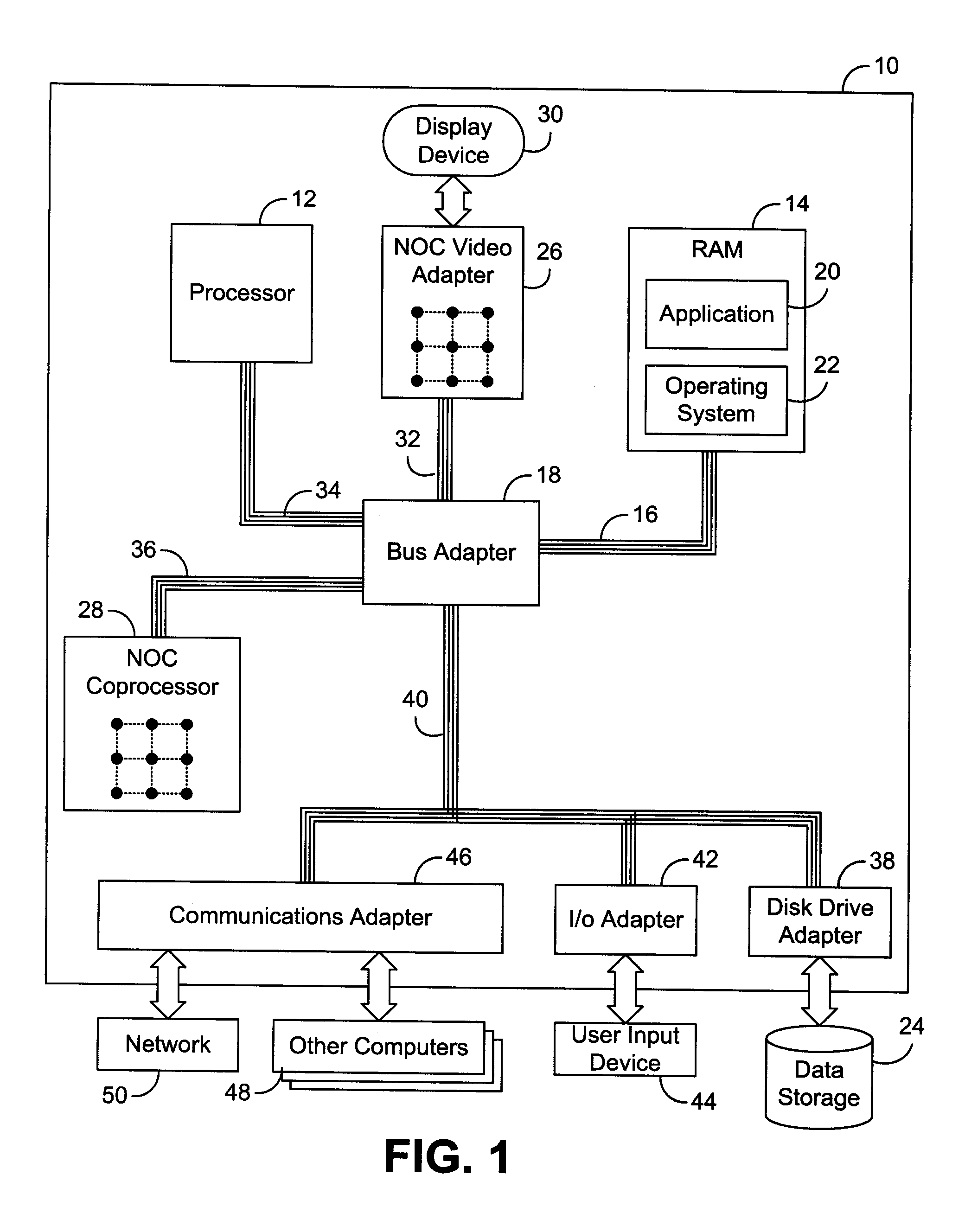

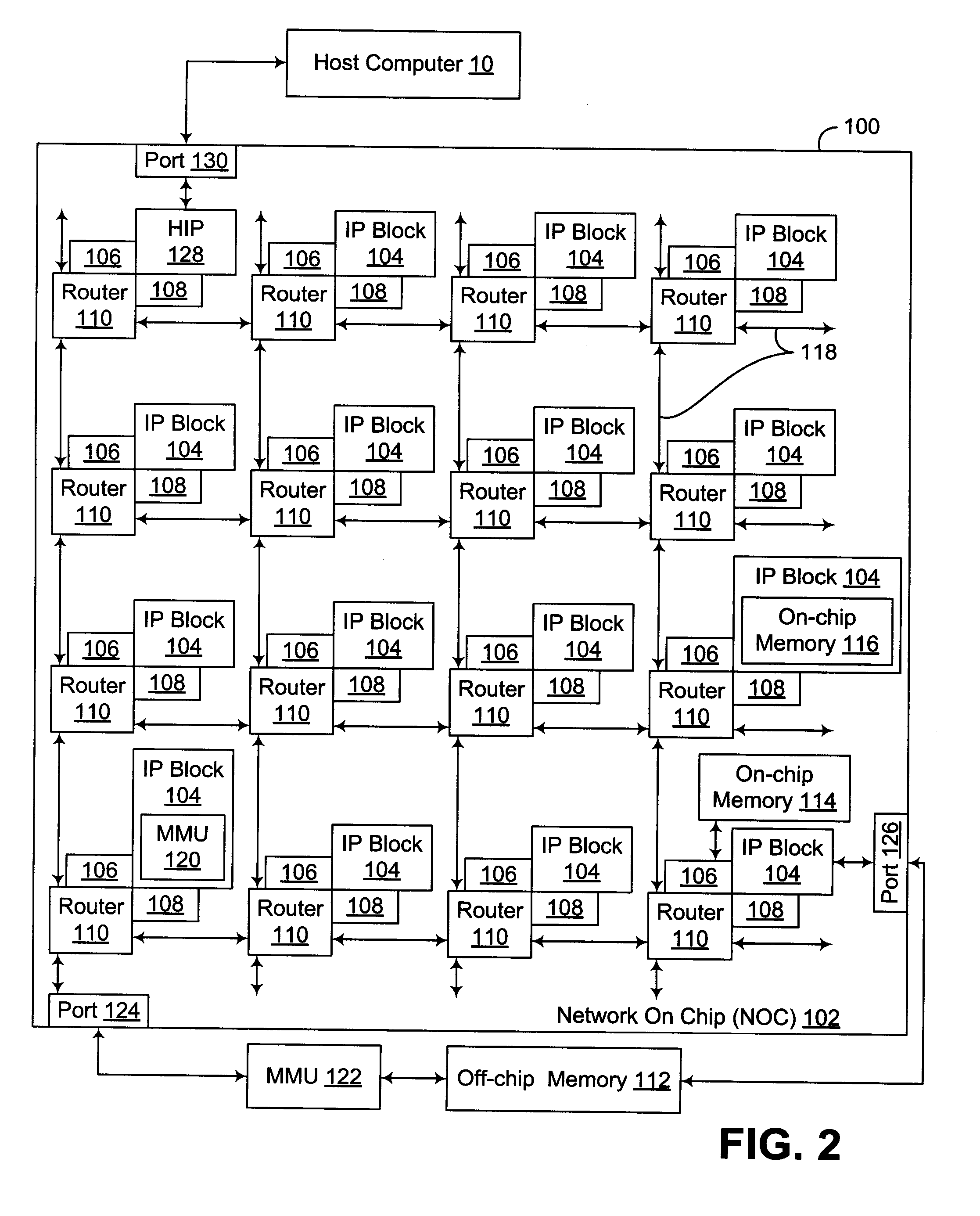

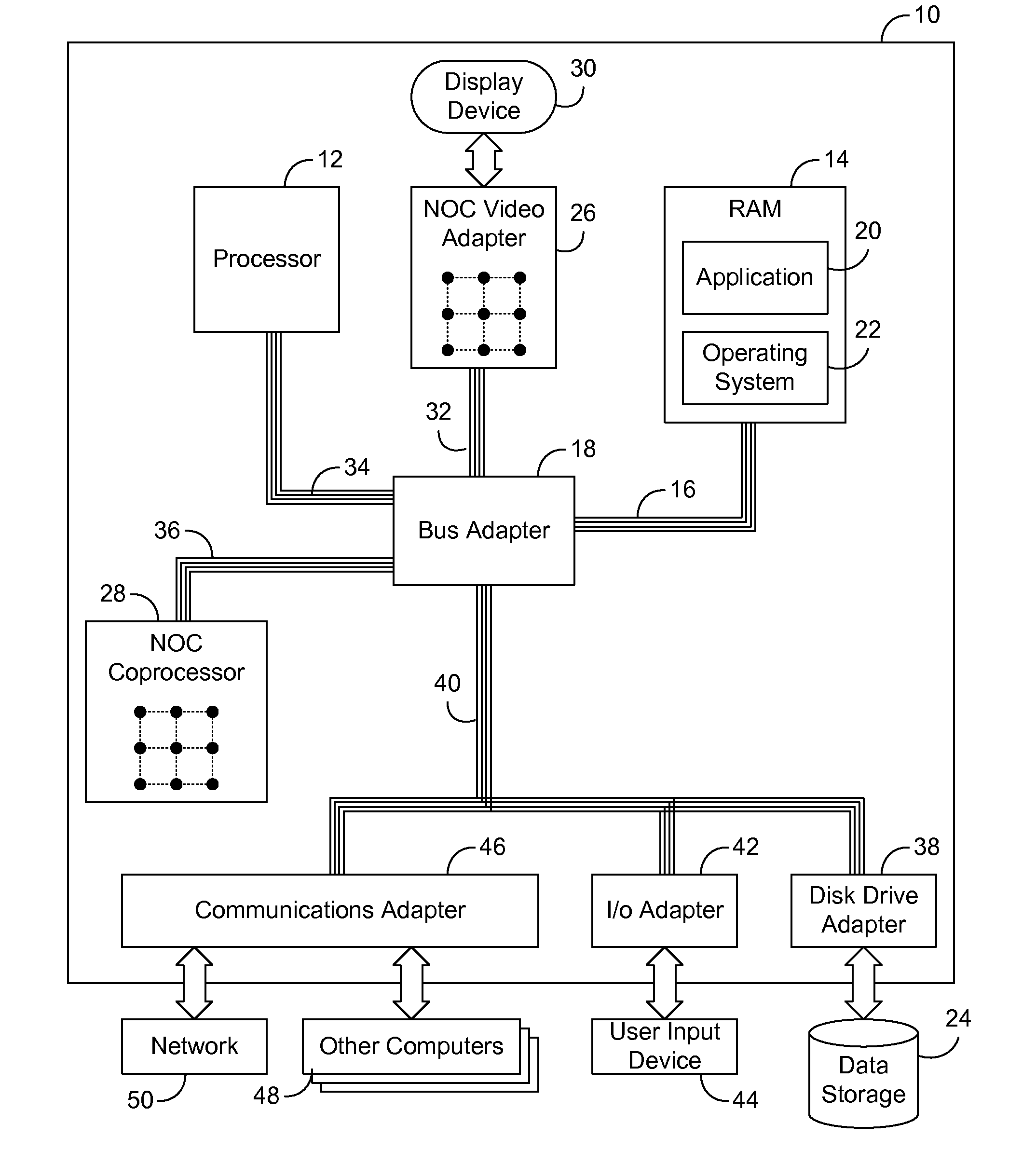

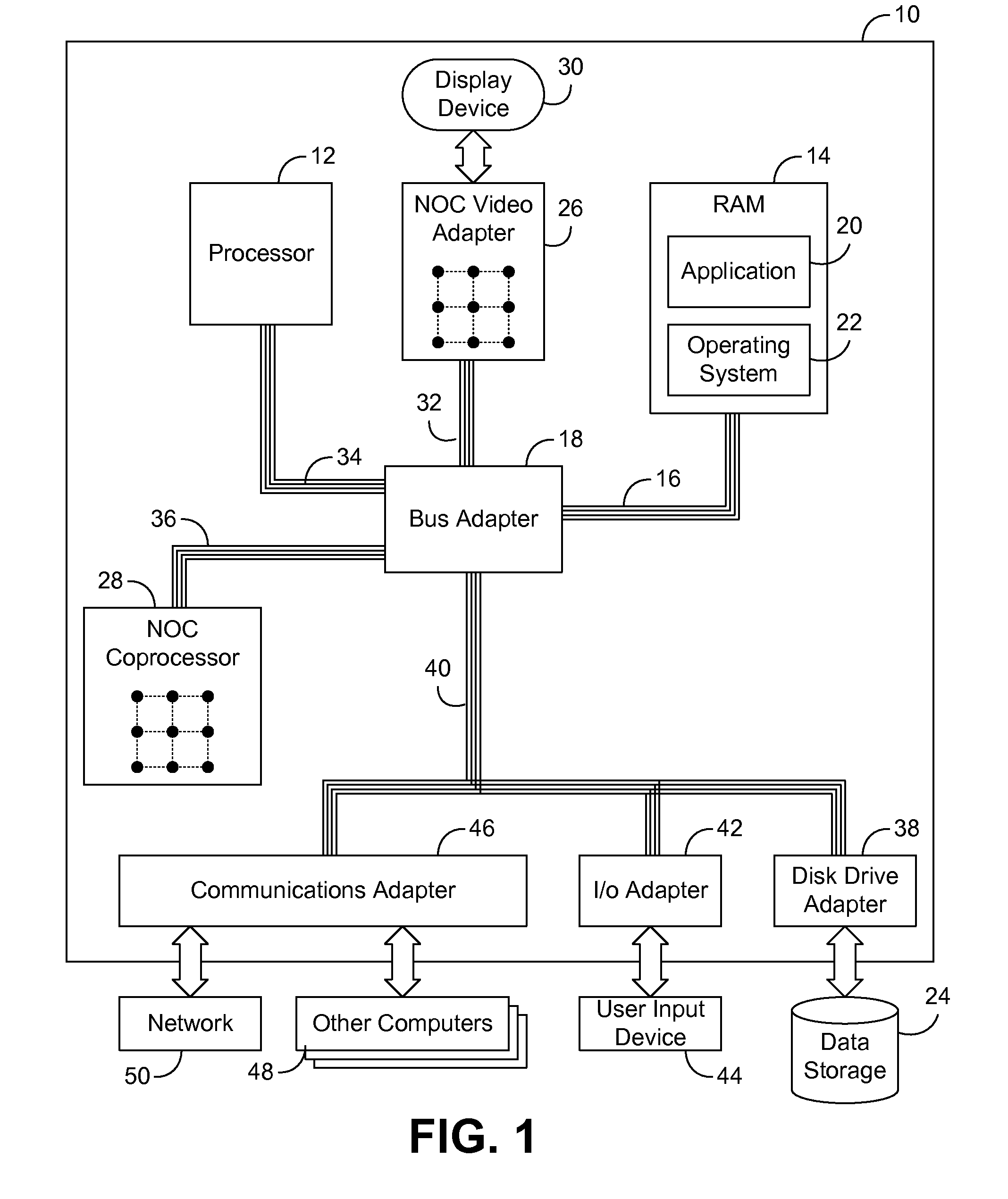

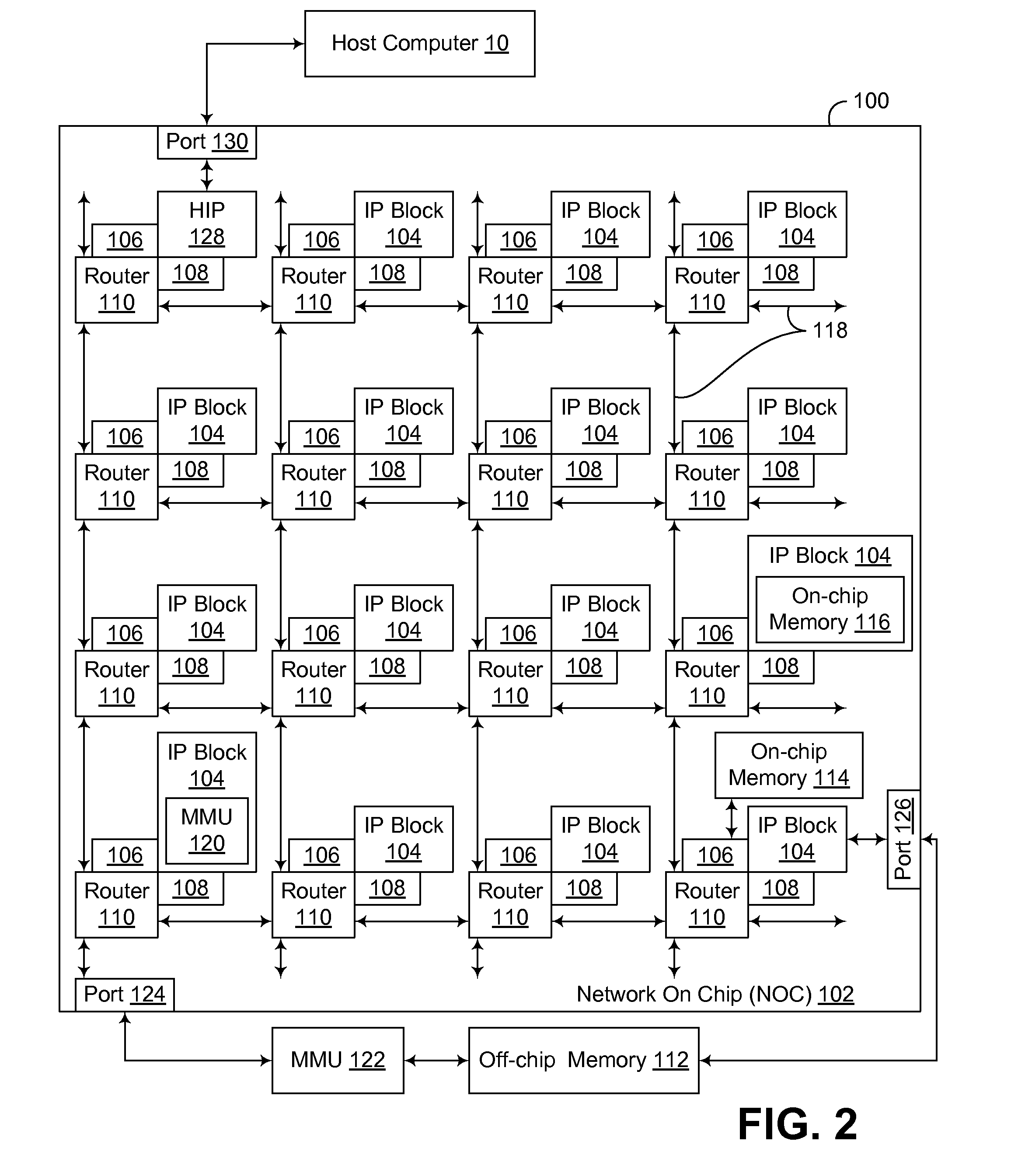

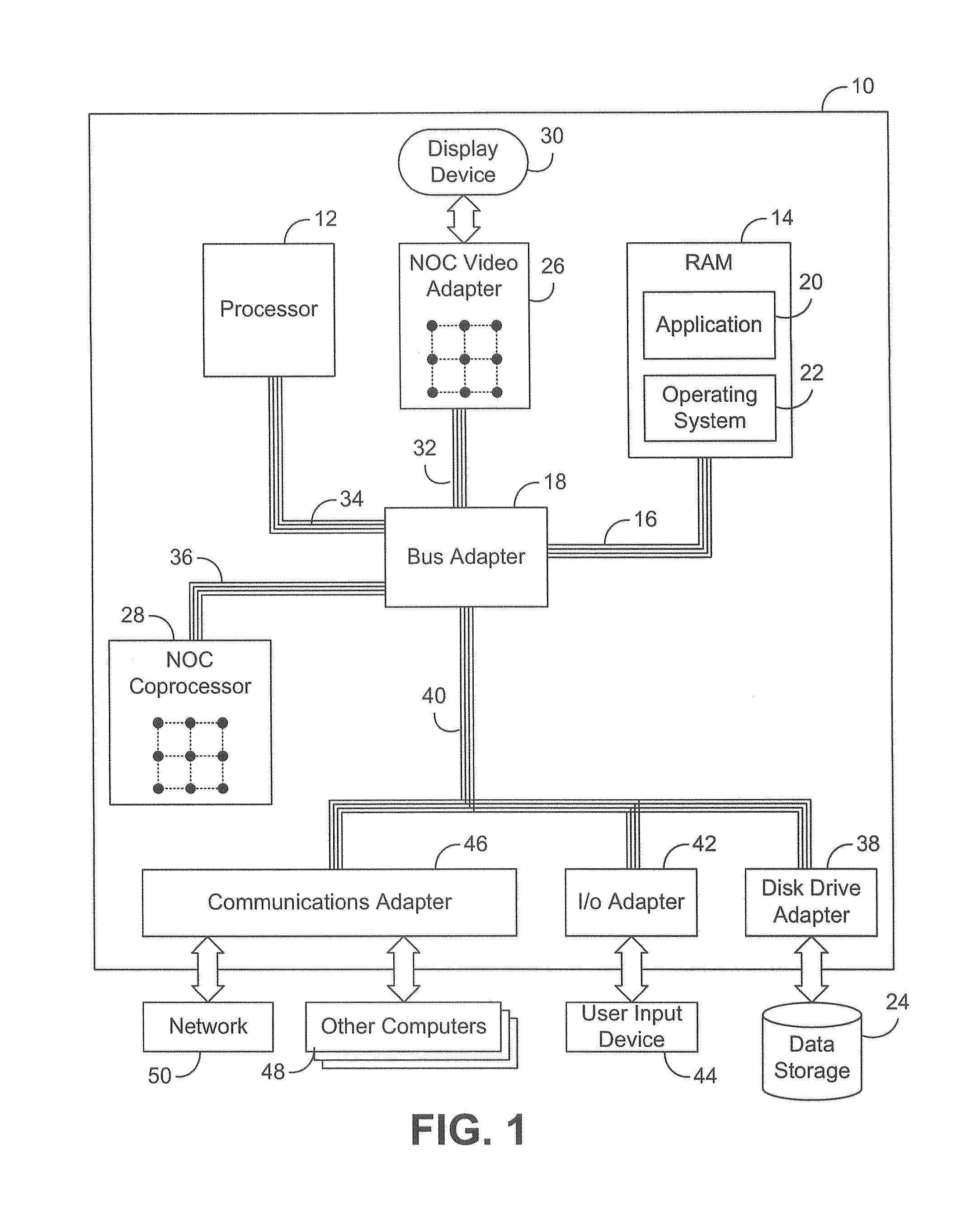

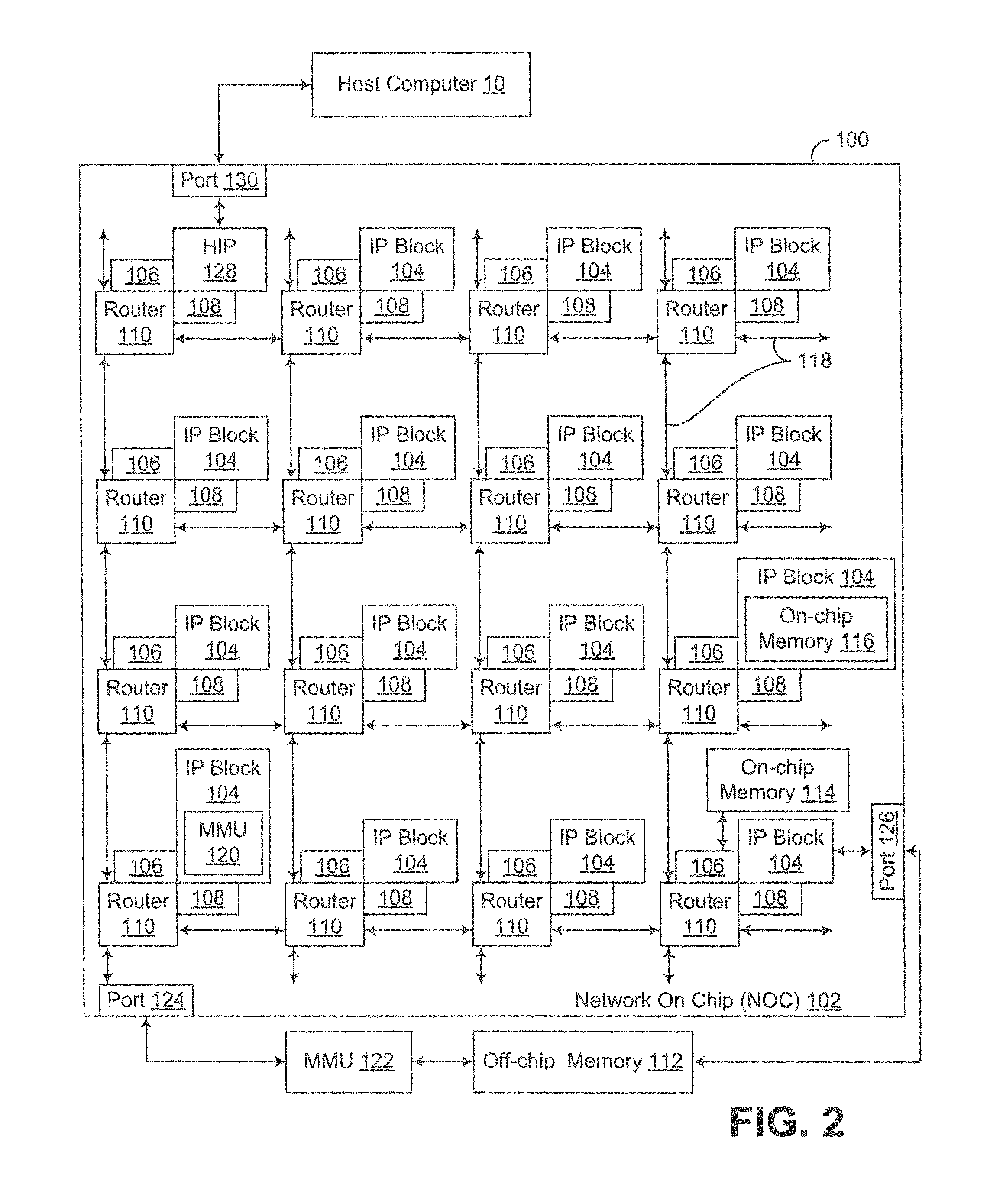

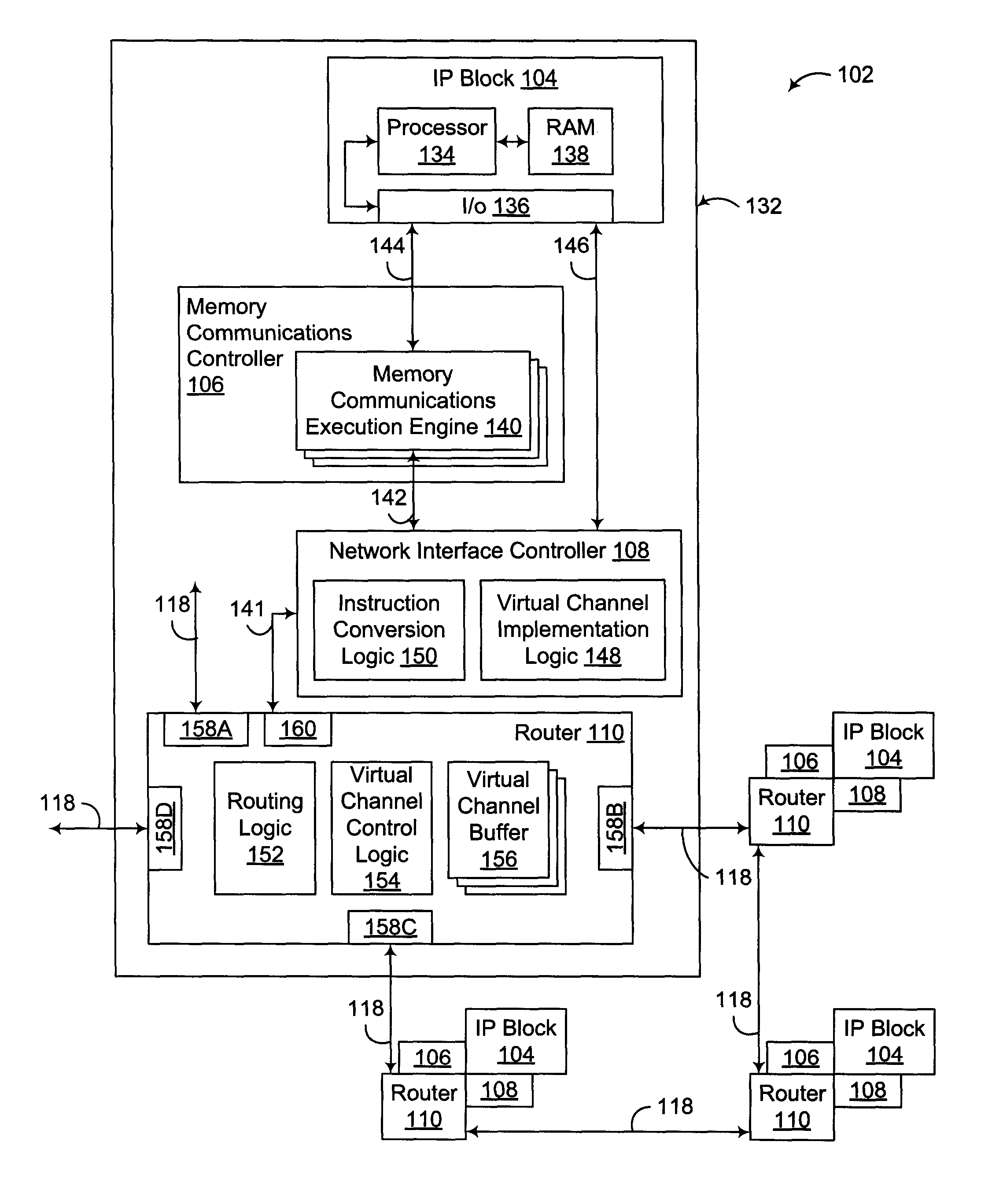

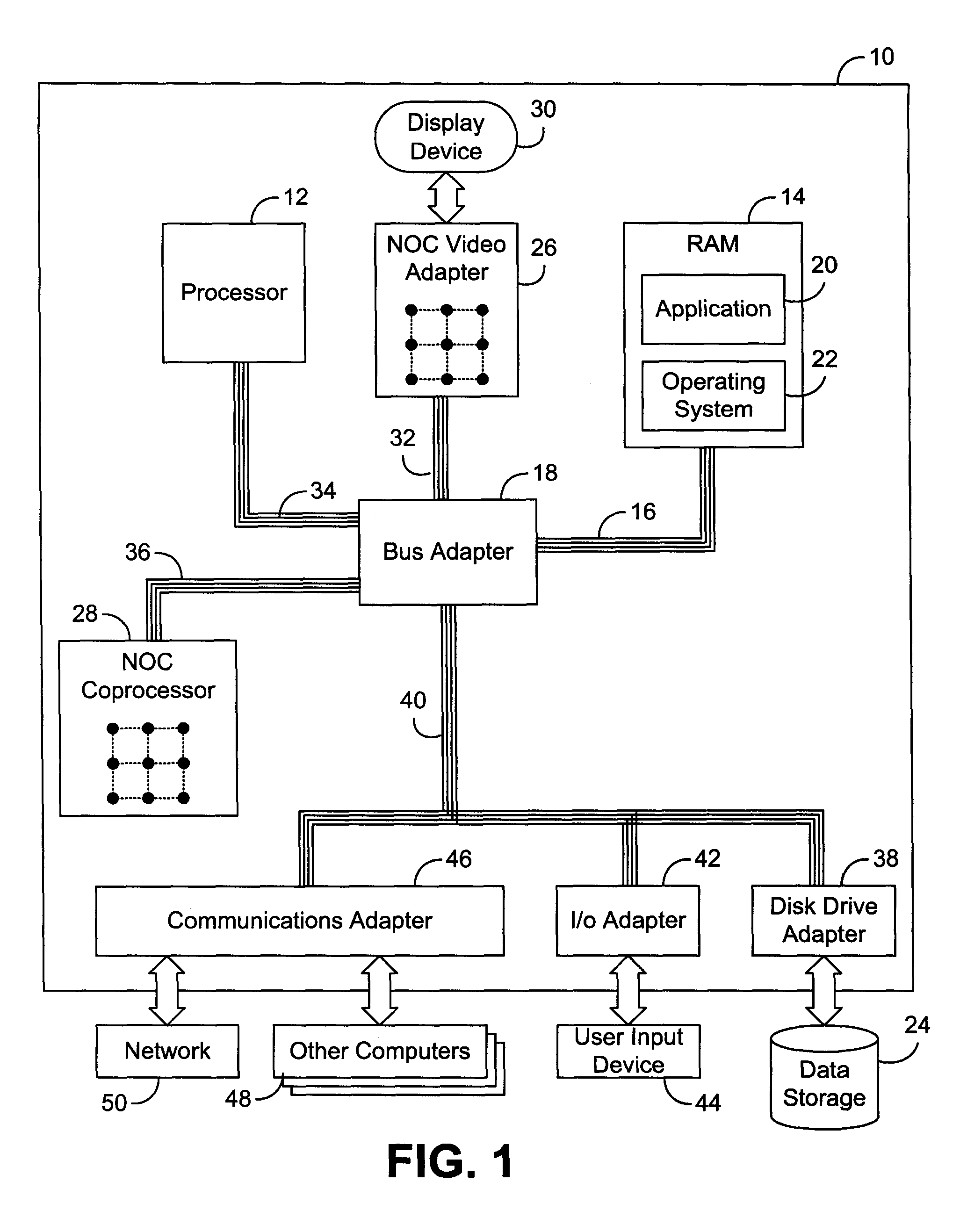

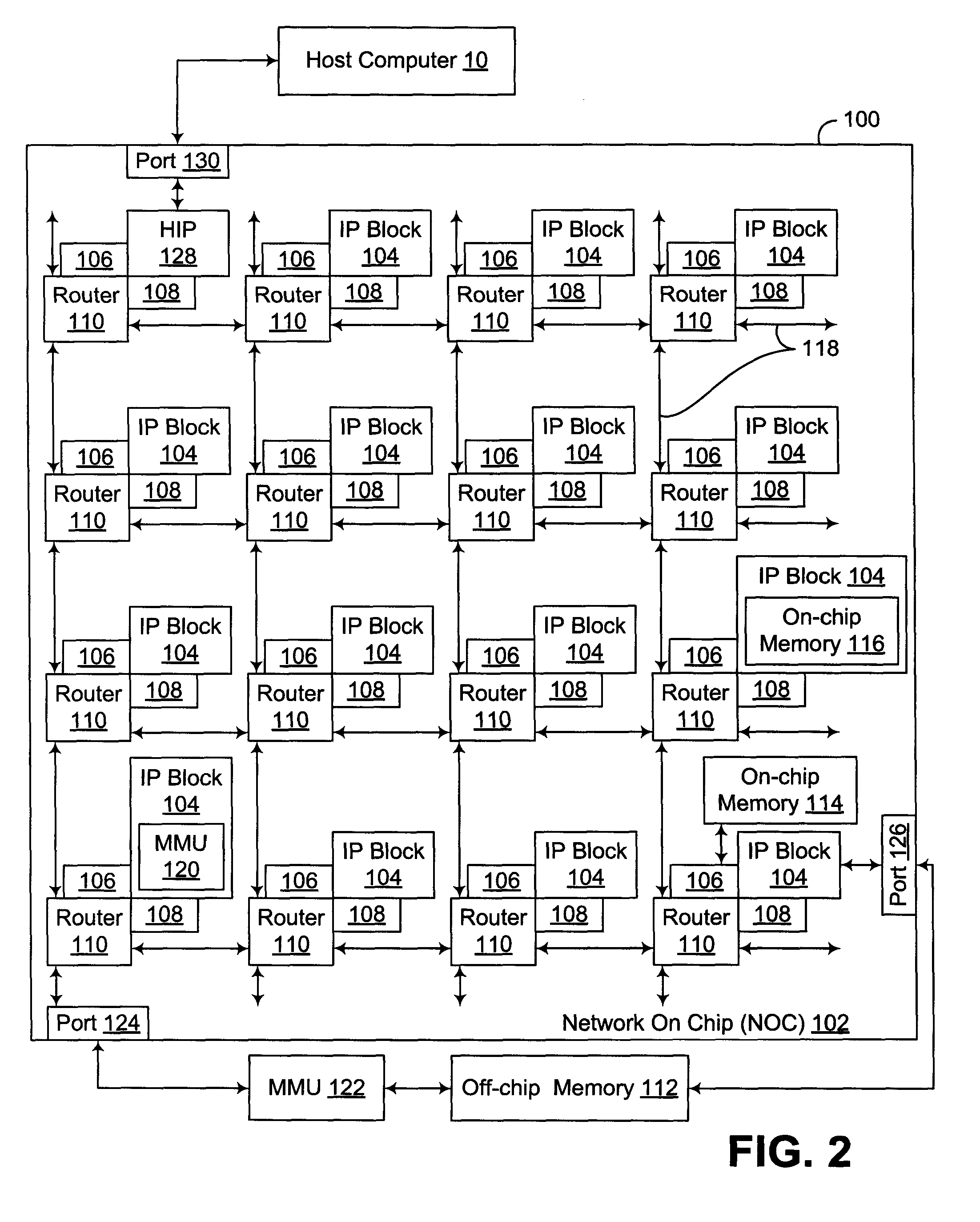

Dynamic Virtual Software Pipelining On A Network On Chip

Inactive | US20090282222A1 | General purpose stored program computer | Multiprogramming arrangements | Parallel computing | Software pipelining

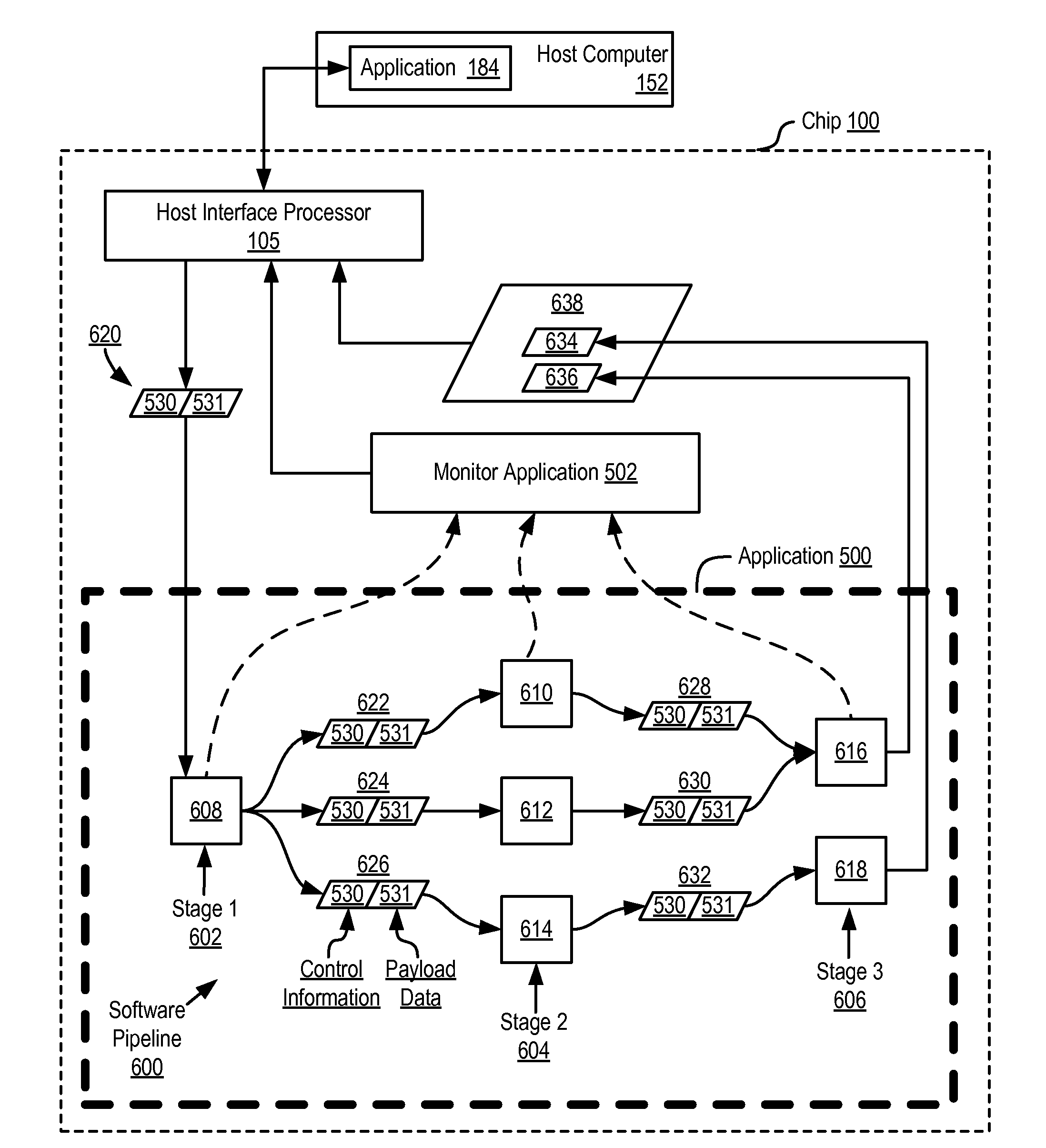

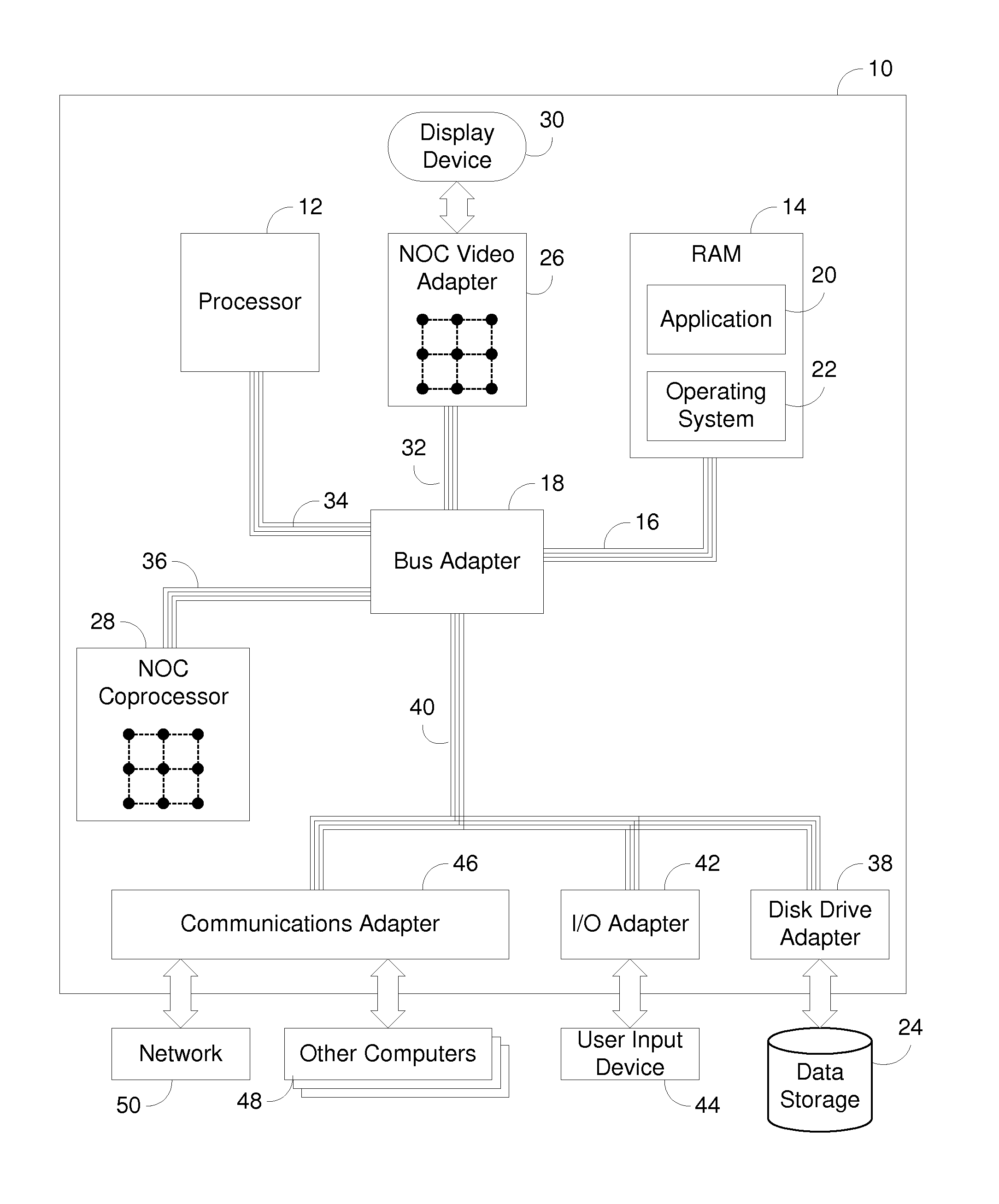

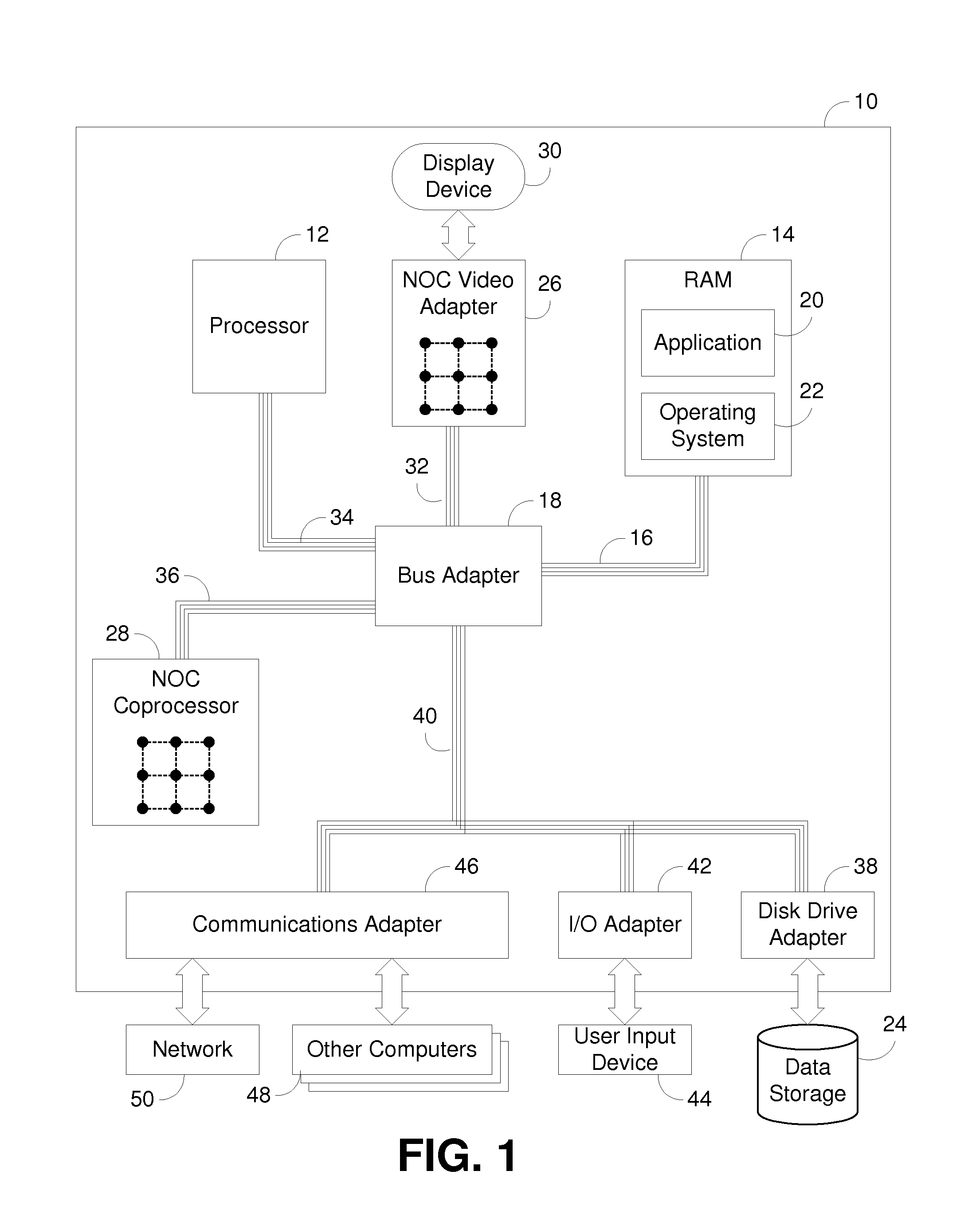

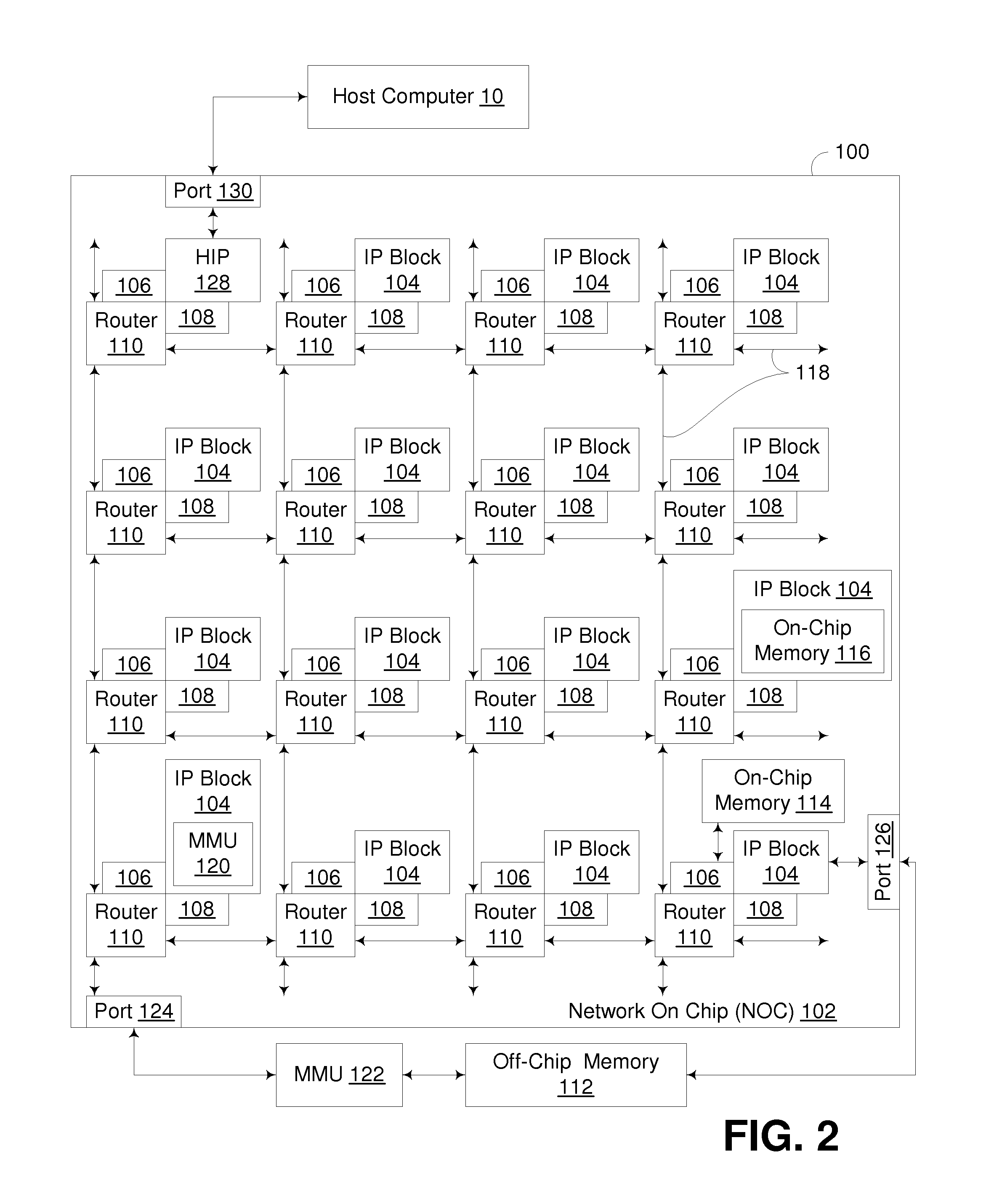

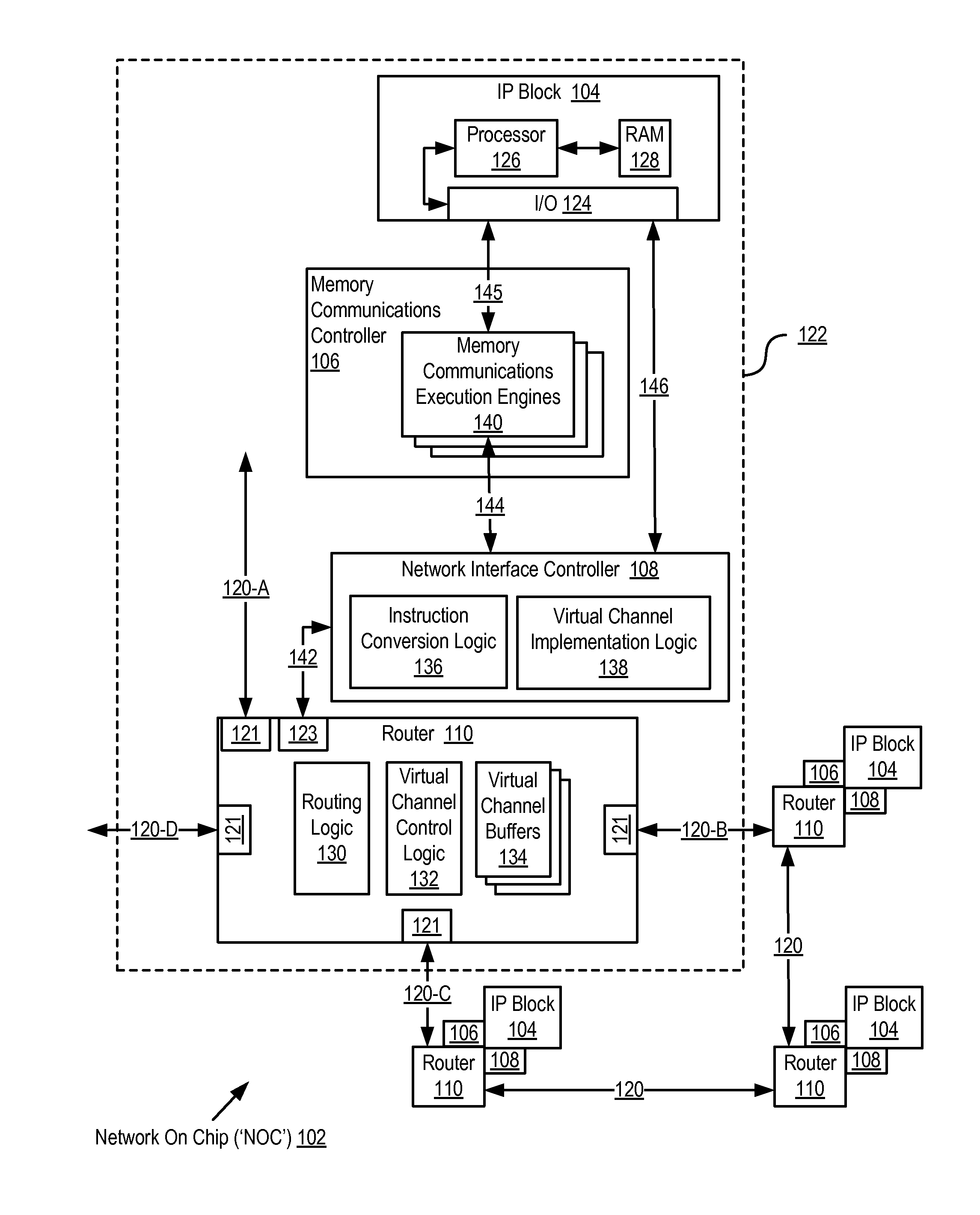

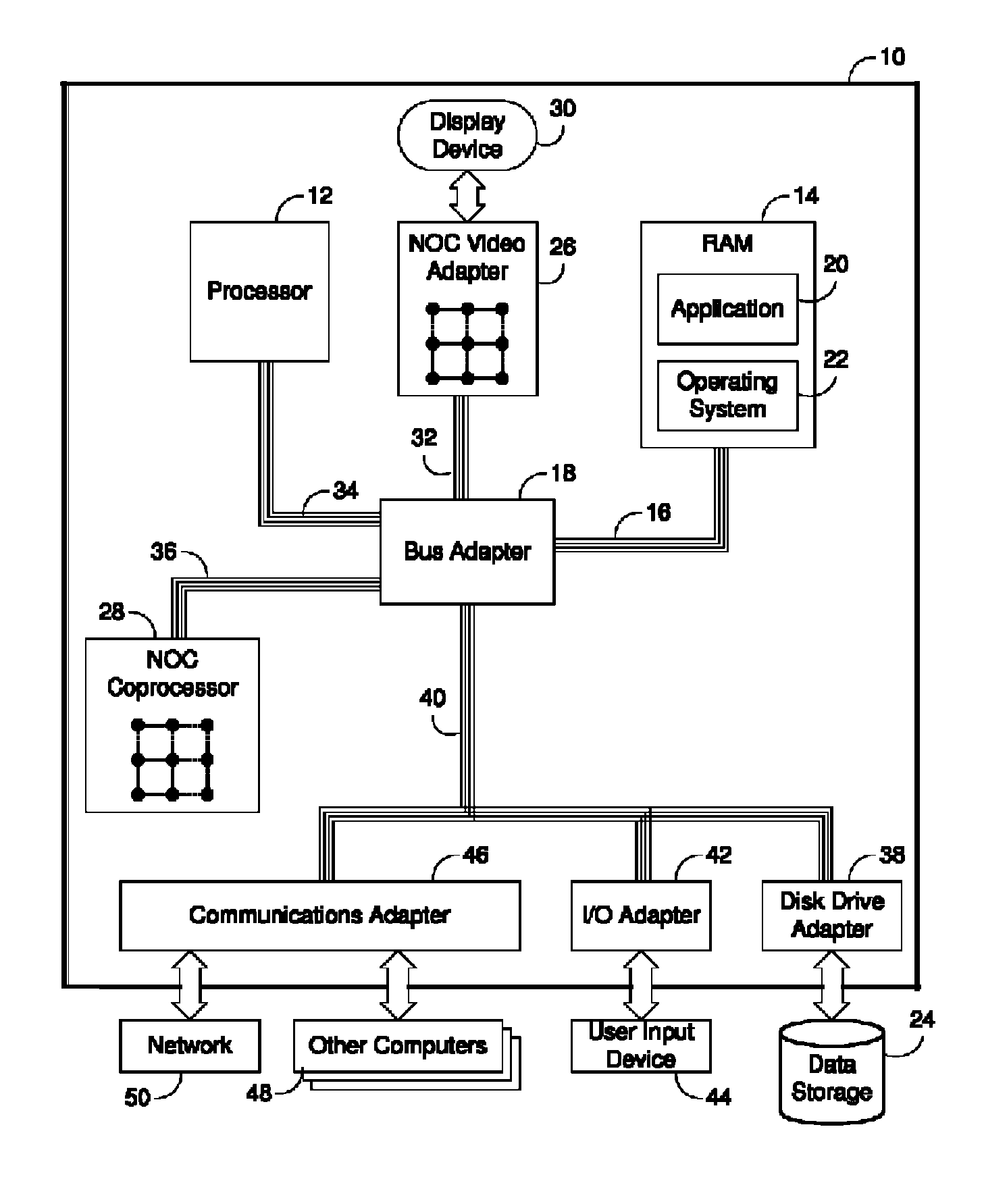

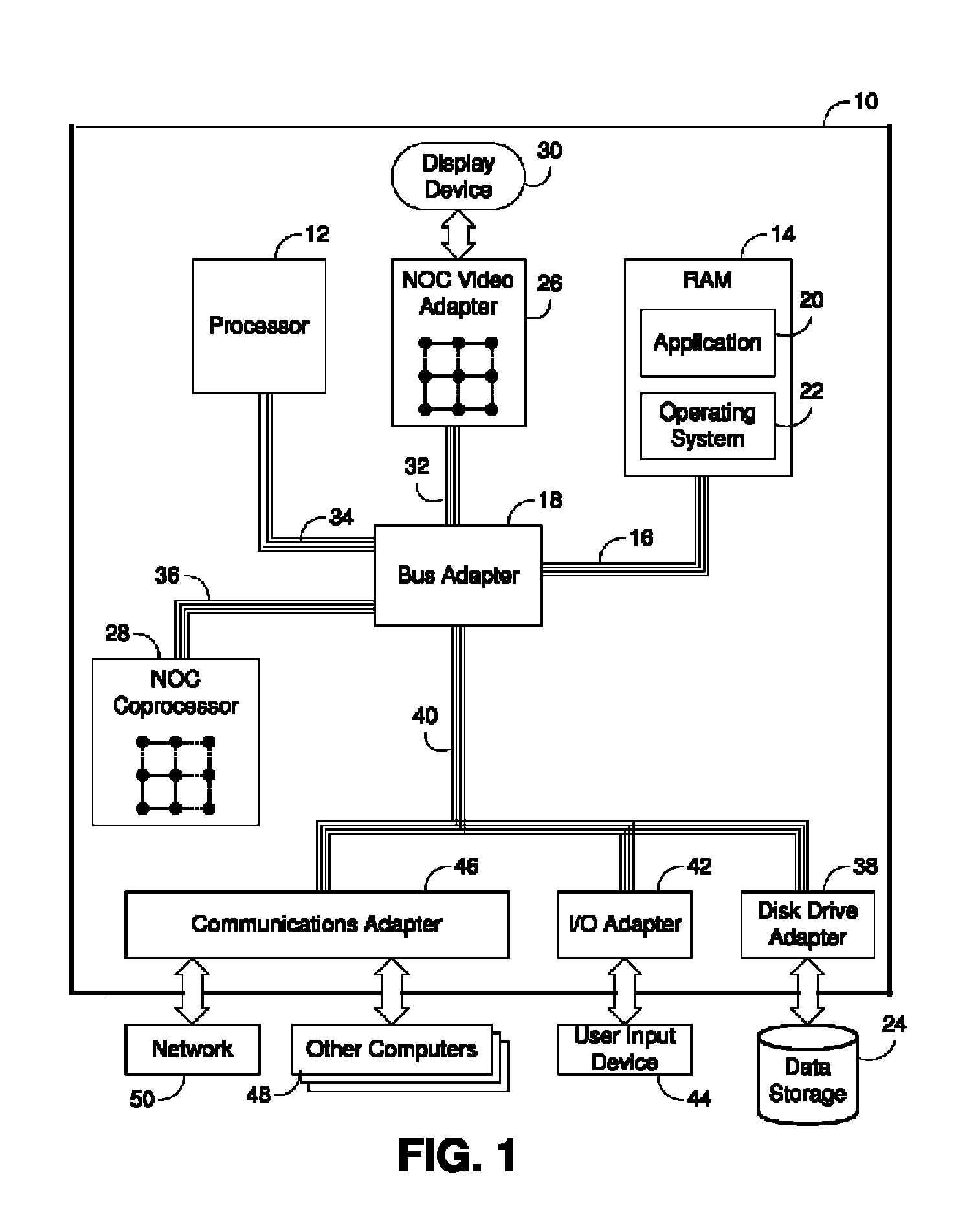

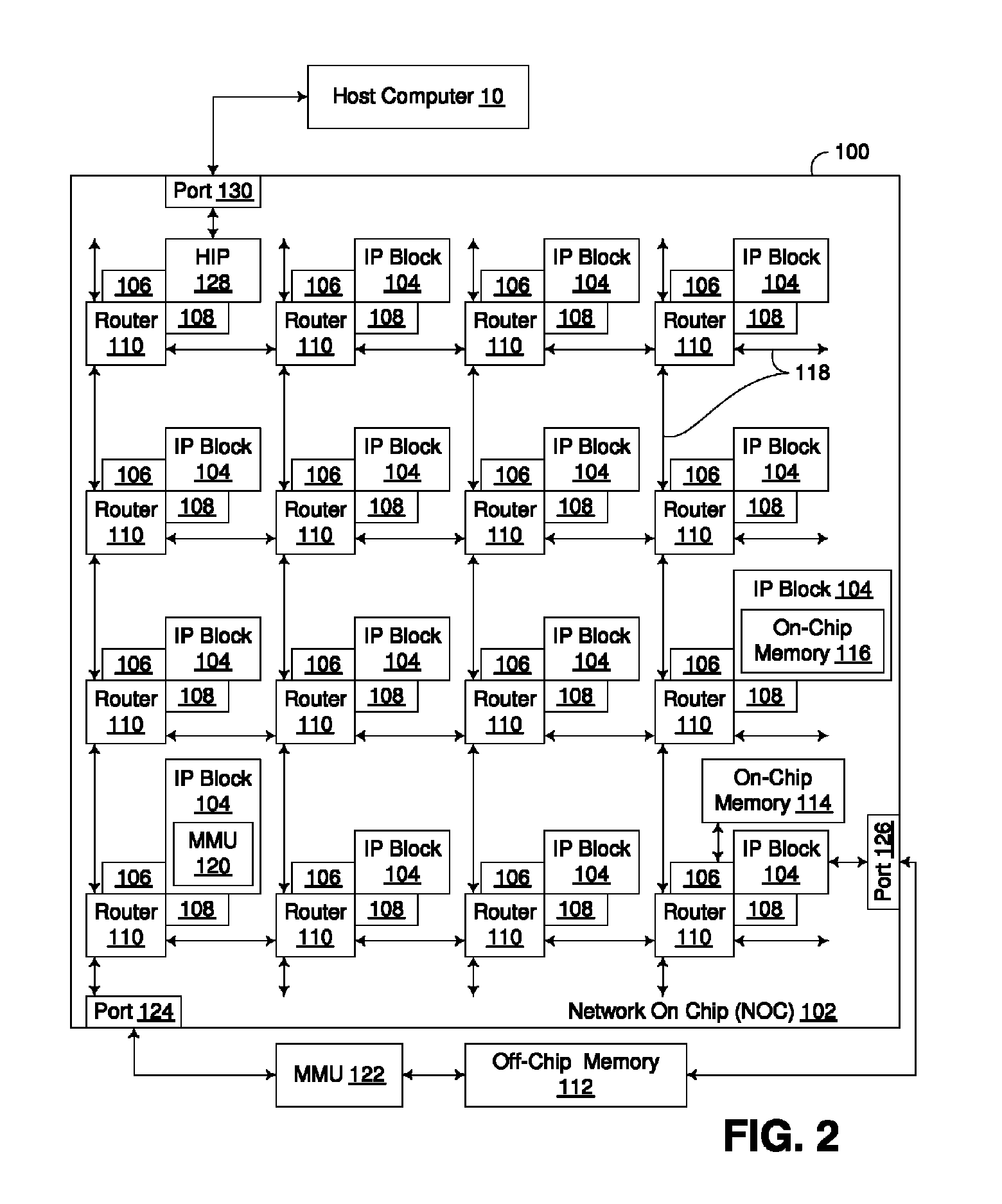

A NOC for dynamic virtual software pipelining including IP blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, the NOC also including: a computer software application segmented into stages, each stage comprising a flexibly configurable module of computer program instructions identified by a stage ID, each stage assigned to a thread of execution on an IP block; and each stage executing on a thread of execution on an IP block, including a first stage executing on an IP block, producing output data and sending by the first stage the produced output data to a second stage, the output data including control information for the next stage and payload data; and the second stage consuming the produced output data in dependence upon the control information.

Owner:IBM CORP
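
A staged software pipeline of this kind can be illustrated, very loosely, with two threads connected by a queue. This is only a sketch: it models stages as Python threads rather than threads on IP blocks of a NOC, and the stage functions, the `STOP` sentinel, and the `"square"`/`"negate"` control values are all hypothetical. What it does show is a first stage sending output that pairs control information with payload data, and a second stage consuming that output in dependence upon the control information.

```python
import queue
import threading

STOP = object()  # sentinel telling a stage to shut down


def first_stage(inputs, outbox):
    """Produce messages that pair control information with payload data."""
    for value in inputs:
        control = "square" if value % 2 == 0 else "negate"
        outbox.put((control, value))
    outbox.put(STOP)


def second_stage(inbox, results):
    """Consume each message in dependence upon its control information."""
    while True:
        message = inbox.get()
        if message is STOP:
            break
        control, payload = message
        results.append(payload * payload if control == "square" else -payload)


def run_pipeline(inputs):
    channel = queue.Queue()
    results = []
    stages = [
        threading.Thread(target=first_stage, args=(inputs, channel)),
        threading.Thread(target=second_stage, args=(channel, results)),
    ]
    for stage in stages:
        stage.start()
    for stage in stages:
        stage.join()
    return results
```

Because the queue is first-in, first-out and has a single consumer, results come out in input order even though the two stages run concurrently.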

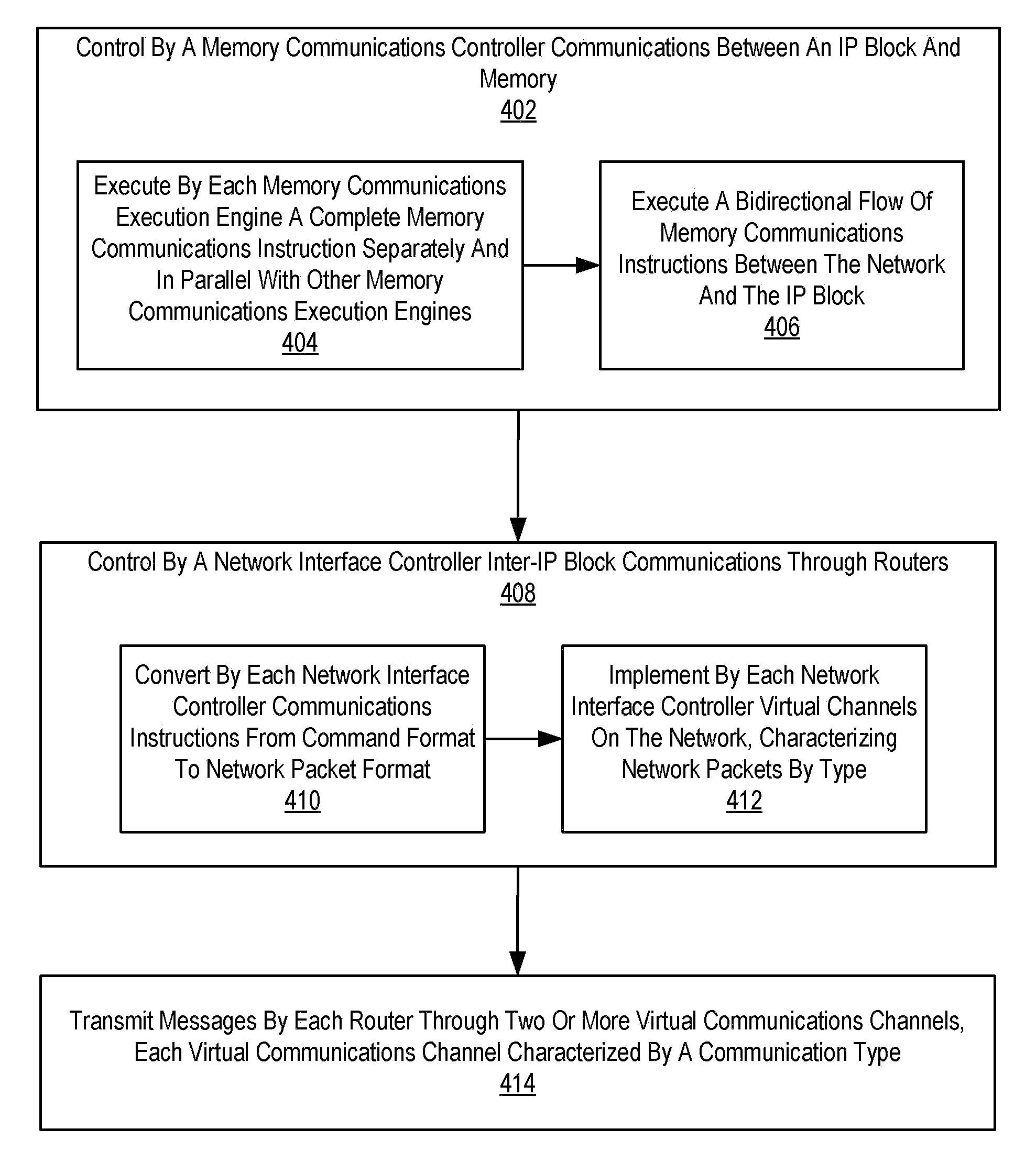

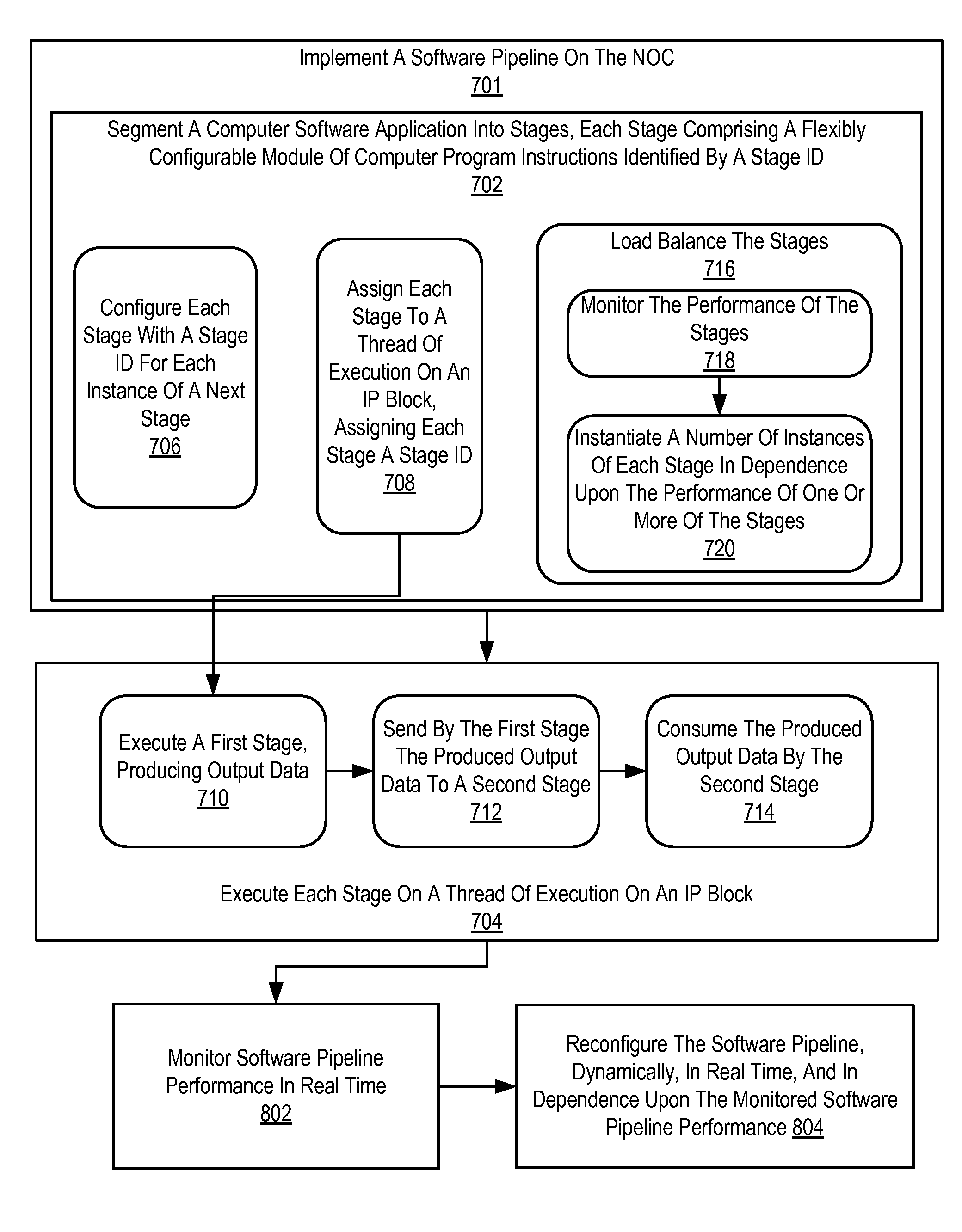

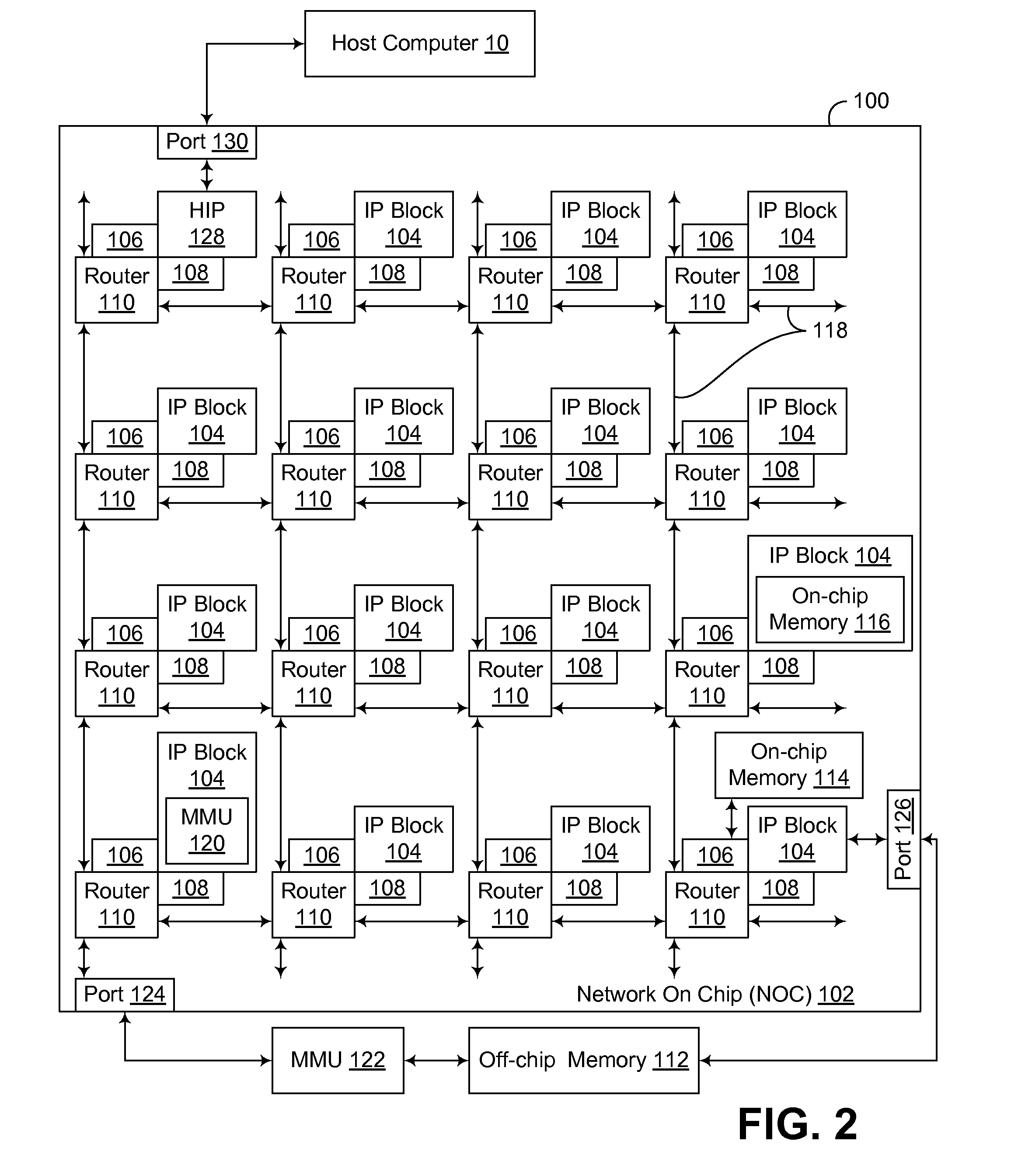

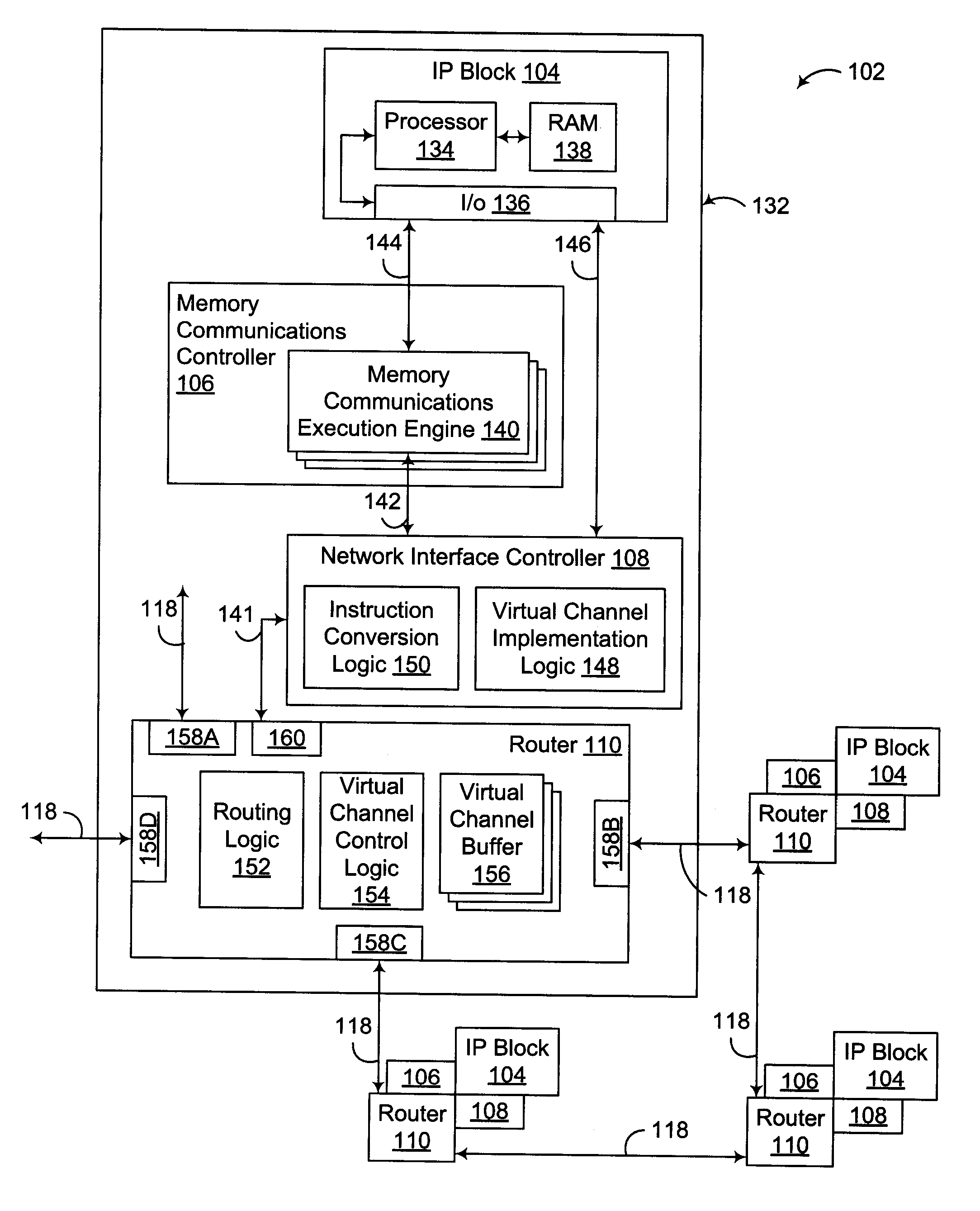

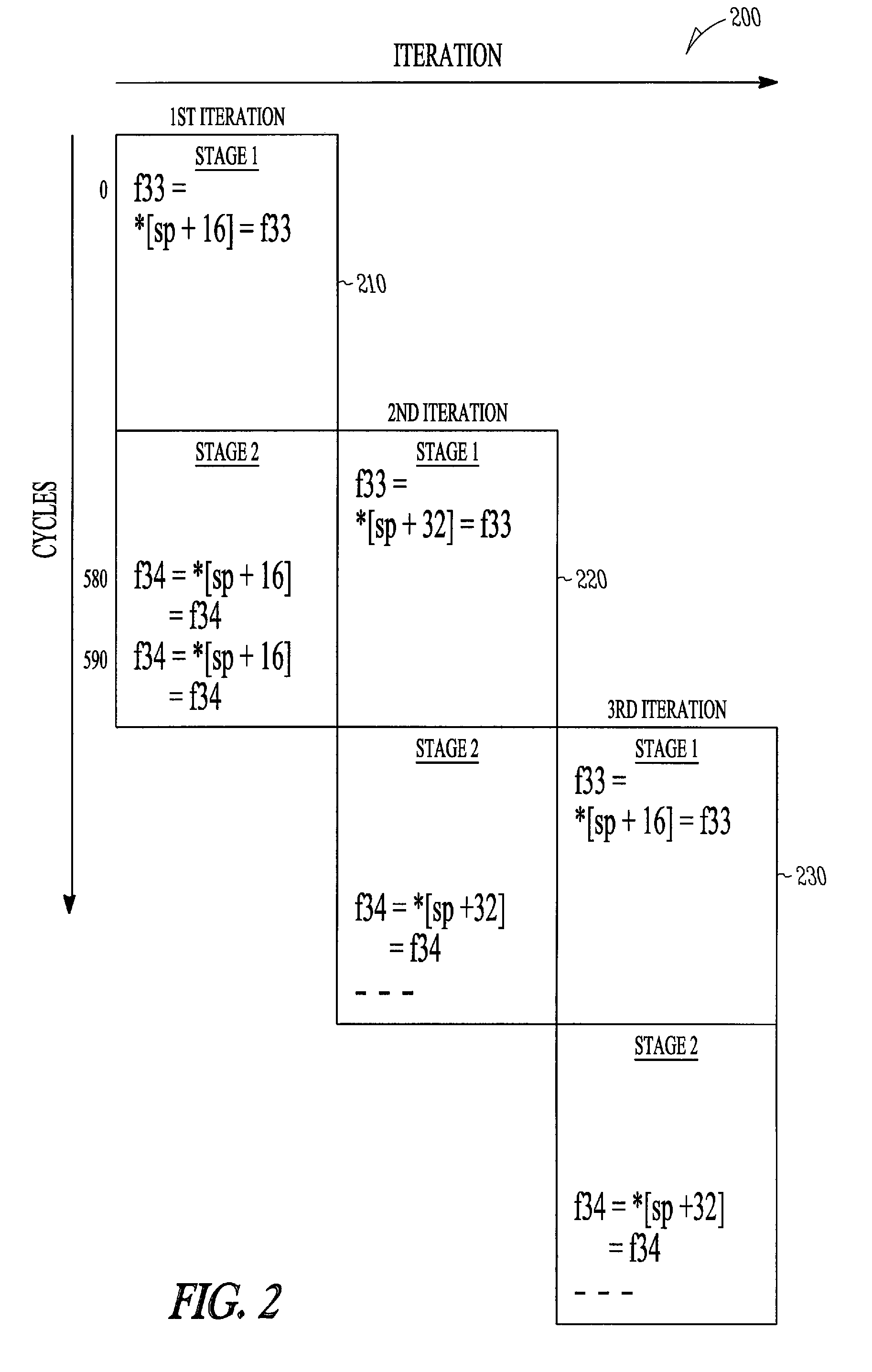

Software Pipelining on a Network on Chip

Inactive | US20090125706A1 | Digital computer details | Concurrent instruction execution | Parallel computing | Communication control

A network on chip (‘NOC’) that includes integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, with each IP block adapted to a router through a memory communications controller and a network interface controller, where each memory communications controller controls communications between an IP block and memory, and each network interface controller controls inter-IP block communications through routers, the NOC also including a computer software application segmented into stages, each stage comprising a flexibly configurable module of computer program instructions identified by a stage ID, with each stage executing on a thread of execution on an IP block.

Owner:IBM CORP

Dynamic virtual software pipelining on a network on chip

Inactive | US8020168B2 | General purpose stored program computer | Multiprogramming arrangements | Parallel computing | Communication control

A NOC for dynamic virtual software pipelining including IP blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, the NOC also including: a computer software application segmented into stages, each stage comprising a flexibly configurable module of computer program instructions identified by a stage ID, each stage assigned to a thread of execution on an IP block; and each stage executing on a thread of execution on an IP block, including a first stage executing on an IP block, producing output data and sending by the first stage the produced output data to a second stage, the output data including control information for the next stage and payload data; and the second stage consuming the produced output data in dependence upon the control information.

Owner:IBM CORP

Rolling Context Data Structure for Maintaining State Data in a Multithreaded Image Processing Pipeline

Inactive | US20090231349A1 | Processor architectures/configuration | Electric digital data processing | Multiple context | Imaging processing

A multithreaded rendering software pipeline architecture utilizes a rolling context data structure to store multiple contexts that are associated with different image elements that are being processed in the software pipeline. Each context stores state data for a particular image element, and the association of each image element with a context is maintained as the image element is passed from stage to stage of the software pipeline, thus ensuring that the state used by the different stages of the software pipeline when processing the image element remains coherent irrespective of state changes made for other image elements being processed by the software pipeline. Multiple image elements may therefore be processed concurrently by the software pipeline, and often without regard for synchronization or serialization of state changes that affect only certain image elements.

Owner:IBM CORP
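
One way to picture a rolling context structure is as a small ring of state snapshots, where a state change creates a new context instead of mutating the one that in-flight image elements still refer to. The sketch below is an assumption-laden illustration, not the patented design: the `RollingContexts` class, its methods, and the copy-on-change policy are hypothetical, and it assumes the ring has more slots than there are contexts still in use.

```python
class RollingContexts:
    """Ring of state snapshots; elements keep the context ID they were issued."""

    def __init__(self, slots, initial_state):
        self._ring = [dict(initial_state)] + [None] * (slots - 1)
        self._current = 0

    def current(self):
        """Context ID to attach to the next image element entering the pipeline."""
        return self._current

    def change_state(self, **updates):
        """Copy the current context into the next slot and apply updates there."""
        nxt = (self._current + 1) % len(self._ring)
        self._ring[nxt] = {**self._ring[self._current], **updates}
        self._current = nxt
        return nxt

    def state(self, context_id):
        """State that a pipeline stage should use for an element with this ID."""
        return self._ring[context_id]
```

An element tagged with an earlier context ID keeps seeing its own state at every stage, regardless of state changes made for elements that entered the pipeline later.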

Software Pipelining On A Network On Chip

Memory sharing in a software pipeline on a network on chip (‘NOC’), the NOC including integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, with each IP block adapted to a router through a memory communications controller and a network interface controller, where each memory communications controller controls communications between an IP block and memory, and each network interface controller controls inter-IP block communications through routers, including segmenting a computer software application into stages of a software pipeline, the software pipeline comprising one or more paths of execution; allocating memory to be shared among at least two stages including creating a smart pointer, the smart pointer including data elements for determining when the shared memory can be deallocated; determining, in dependence upon the data elements for determining when the shared memory can be deallocated, that the shared memory can be deallocated; and deallocating the shared memory.

Owner:INT BUSINESS MASCH CORP
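
The smart pointer described here carries data that indicates when shared memory can be deallocated. A minimal sketch of that idea, assuming the data element is simply the set of stages that still hold the buffer, might look like the following. The `SharedBuffer` class and its `release` method are hypothetical, and setting `data` to `None` stands in for actual deallocation.

```python
class SharedBuffer:
    """Buffer shared by several stages, freed when the last holder releases it."""

    def __init__(self, data, stage_ids):
        self.data = bytearray(data)
        self._holders = set(stage_ids)   # data element used to decide on deallocation

    def release(self, stage_id):
        """Record that `stage_id` is done; return True if this call freed the buffer."""
        self._holders.discard(stage_id)
        if not self._holders and self.data is not None:
            self.data = None             # stands in for deallocating the memory
            return True
        return False
```

Tracking holders by stage ID rather than by a bare counter makes a repeated release from the same stage harmless.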

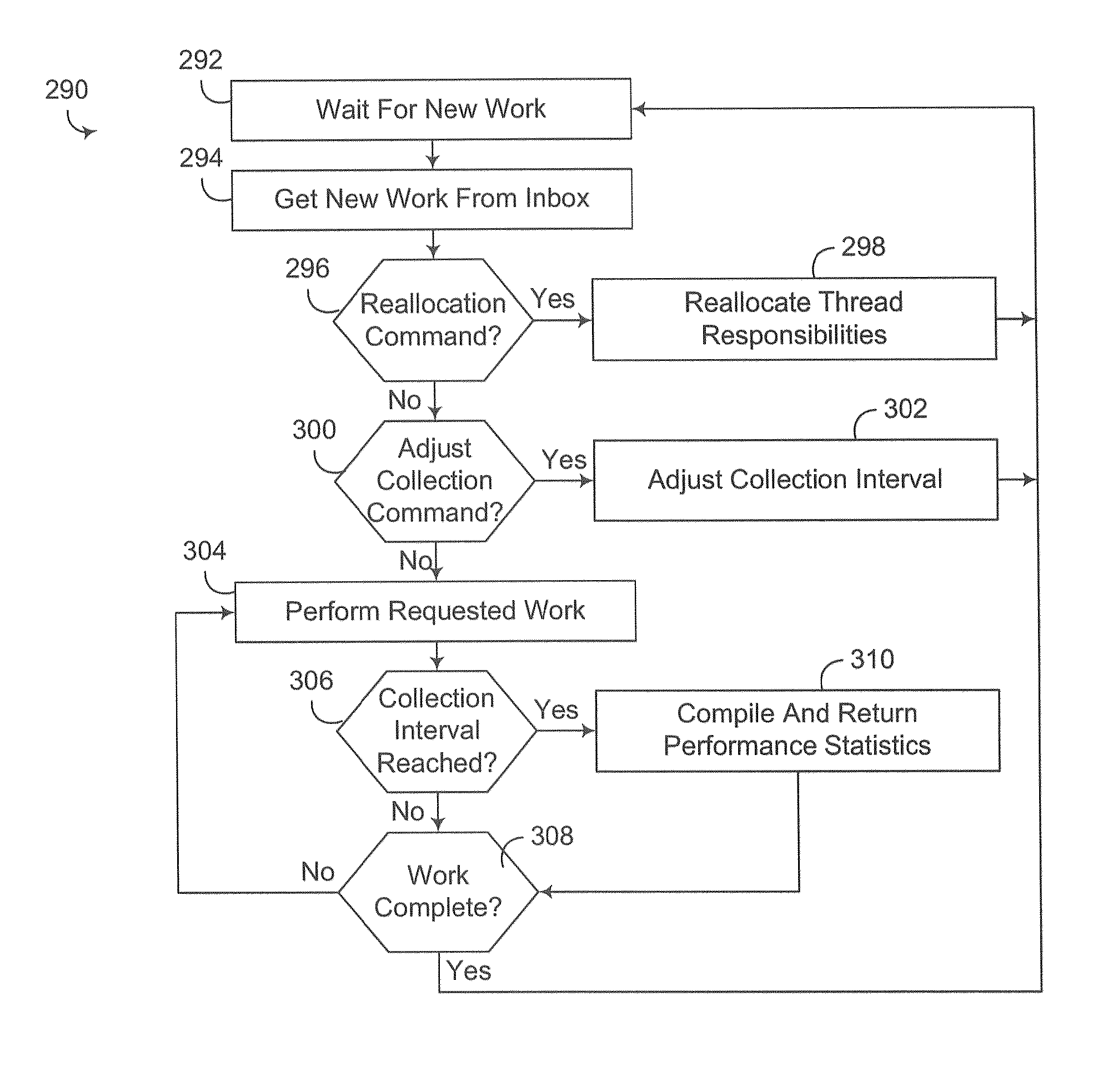

Monitoring software pipeline performance on a network on chip

Inactive | US7958340B2 | Error detection/correction | Digital computer details | Parallel computing | Computer module

Software pipelining on a network on chip (‘NOC’), the NOC including integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, each memory communications controller controlling communication between an IP block and memory, and each network interface controller controlling inter-IP block communications through routers. Embodiments of the present invention include implementing a software pipeline on the NOC, including segmenting a computer software application into stages, each stage comprising a flexibly configurable module of computer program instructions identified by a stage ID; executing each stage of the software pipeline on a thread of execution on an IP block; monitoring software pipeline performance in real time; and reconfiguring the software pipeline, dynamically, in real time, and in dependence upon the monitored software pipeline performance.

Owner:INT BUSINESS MASCH CORP

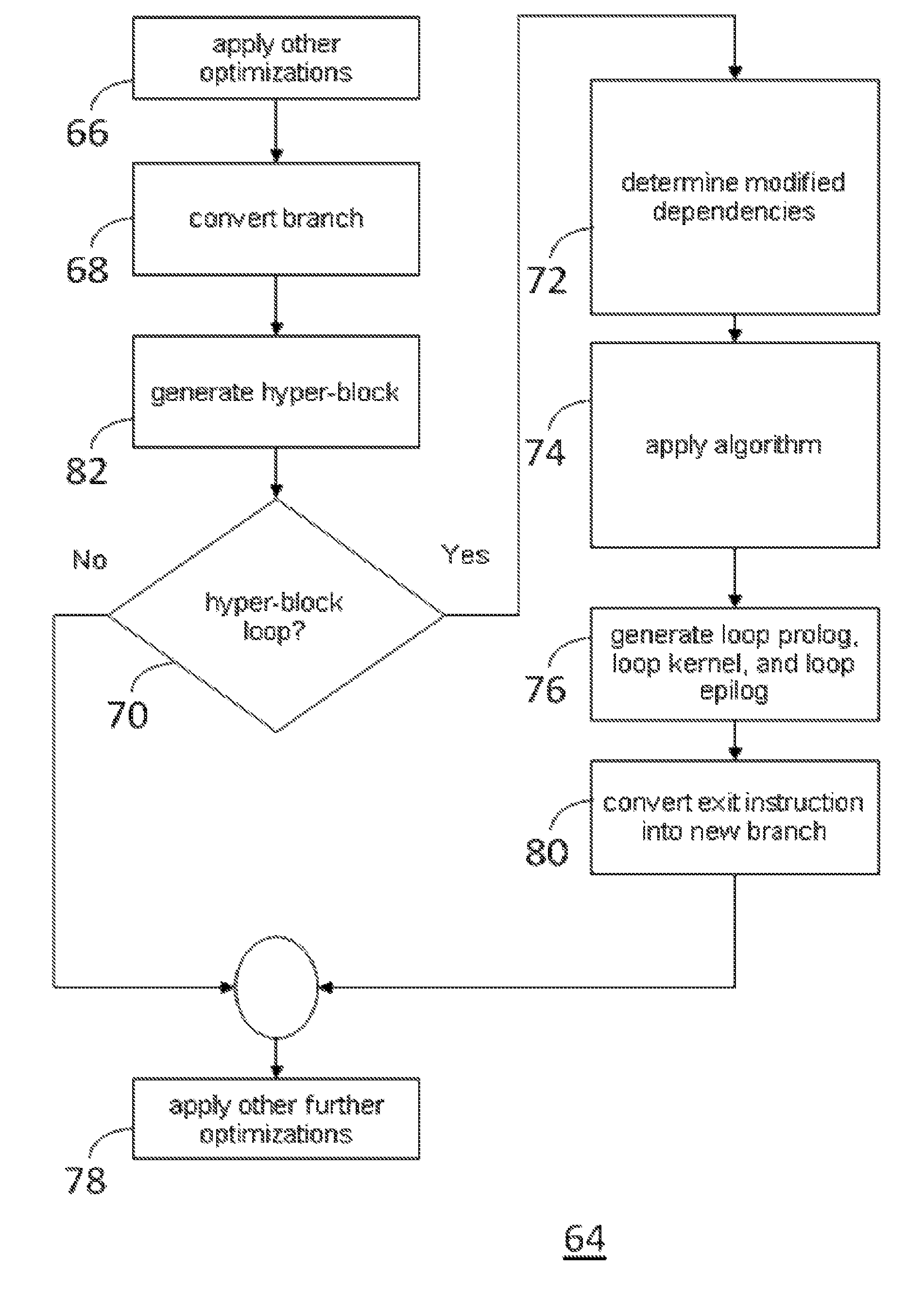

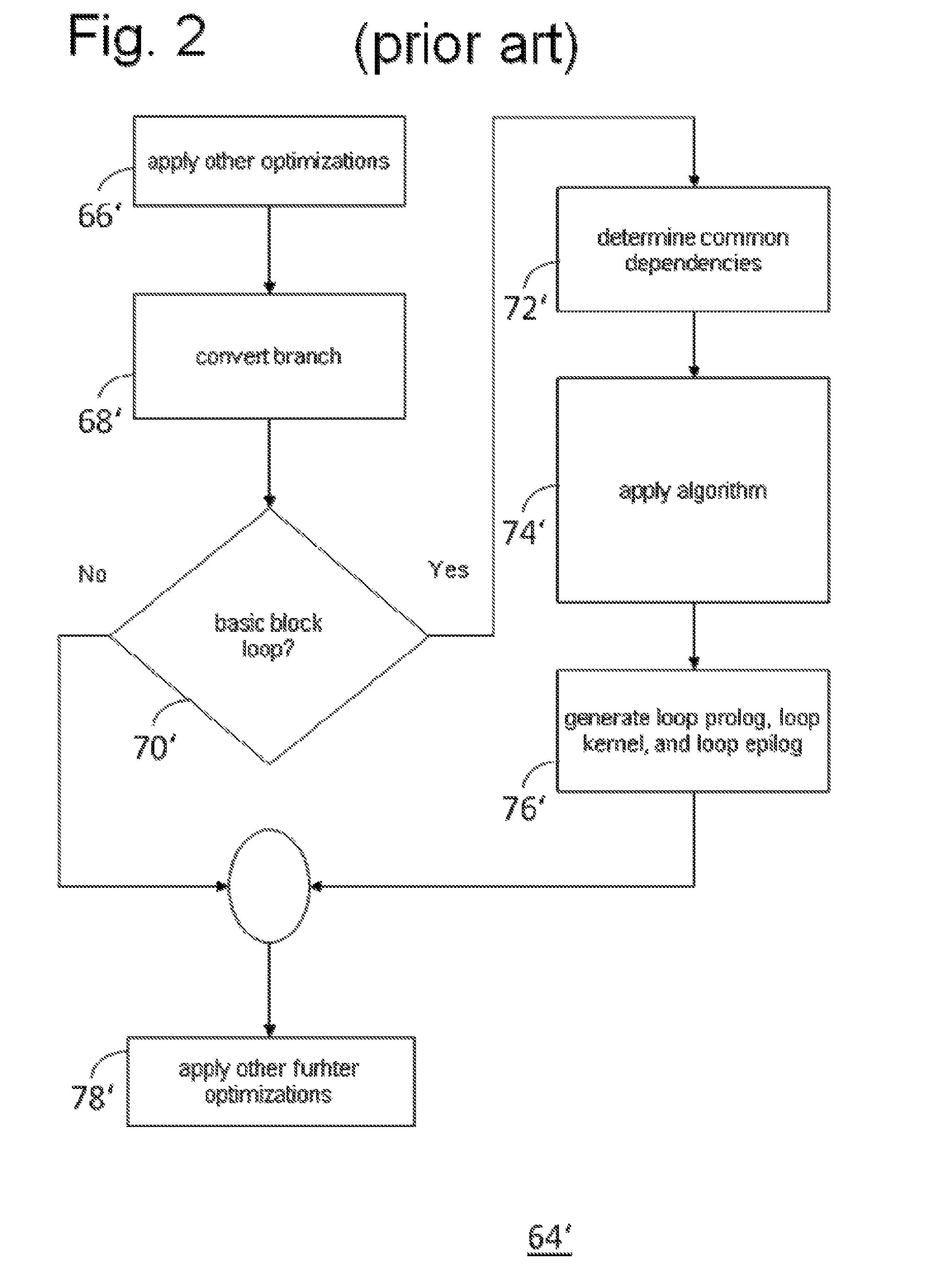

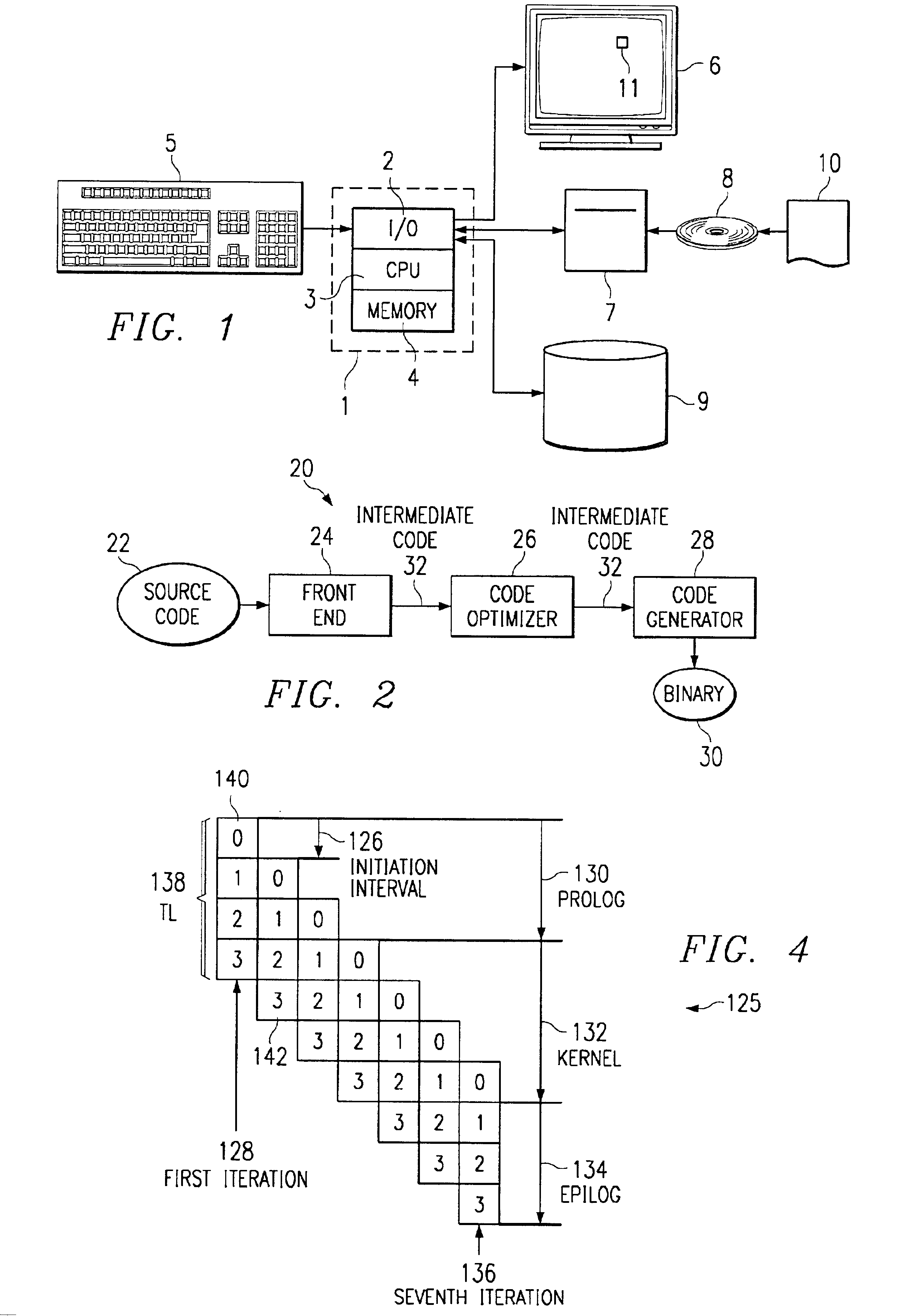

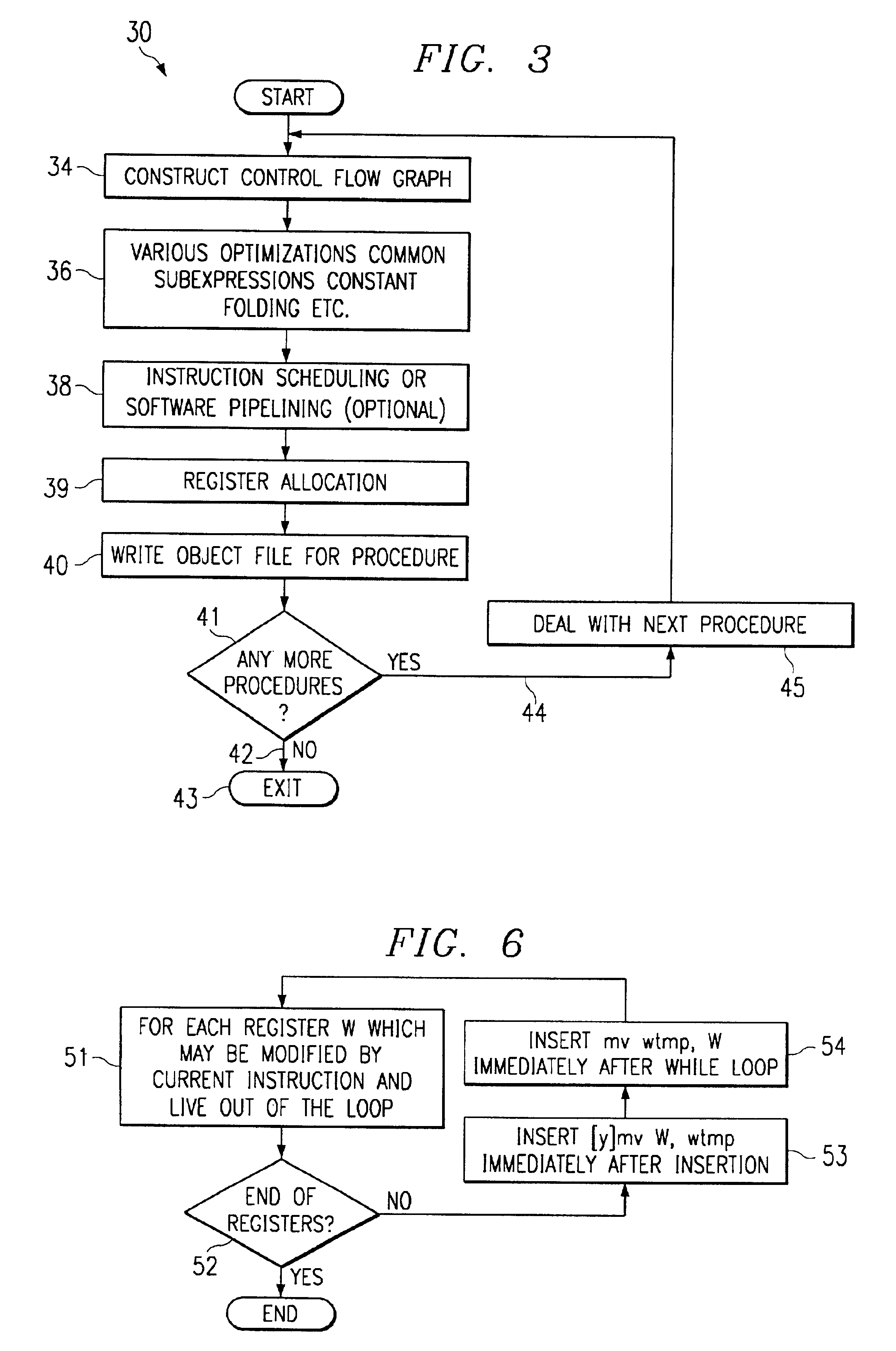

Computer system and a method for generating an optimized program code

A computer system for generating an optimized program code from a program code having a loop with an exit branch, wherein the computer system comprises a processing unit, wherein the processing unit is arranged to convert an exit instruction of the exit branch into a predicated exit instruction, wherein the processing unit is arranged to determine common dependencies within the loop, wherein the processing unit is arranged to generate modified dependencies by adding additional dependencies to the common dependencies, and wherein the processing unit is arranged to apply an algorithm that uses software pipelining for generating an optimized program code for the loop based on the modified dependencies.

Owner:NXP USA INC

Rendering of stereoscopic images with multithreaded rendering software pipeline

Inactive | US20110063285A1 | Reduce the burden on | Reduce overhead | Stereoscopic systems | 3D-image rendering | Computer graphics (images) | Software pipelining

A circuit arrangement, program product and method render stereoscopic images in a multithreaded rendering software pipeline using first and second rendering channels respectively configured to render left and right views for the stereoscopic image. Separate transformations are applied to received vertex data to generate transformed vertex data for use by each of the first and second rendering channels in rendering the left and right views for the stereoscopic image.

Owner:IBM CORP

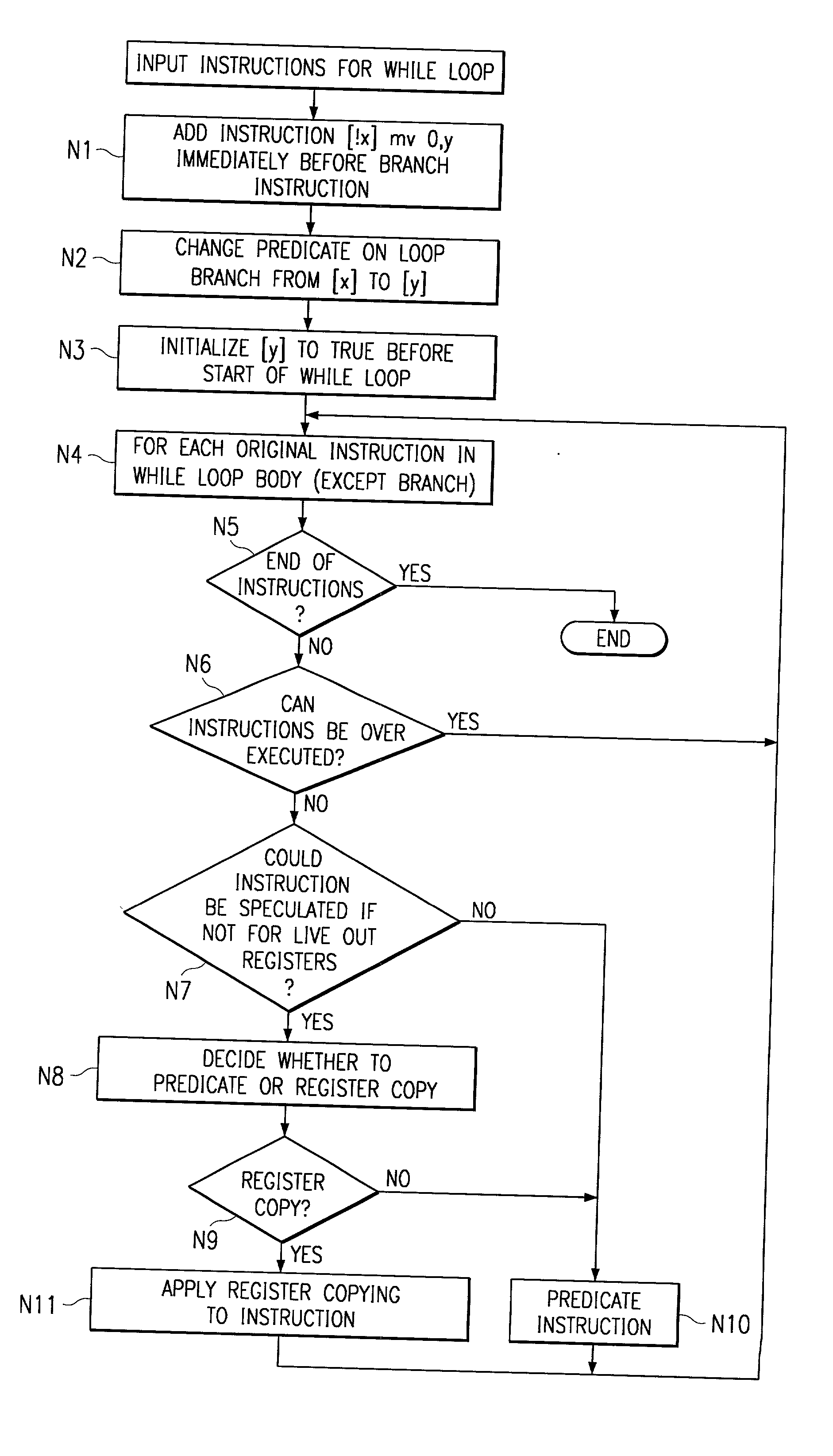

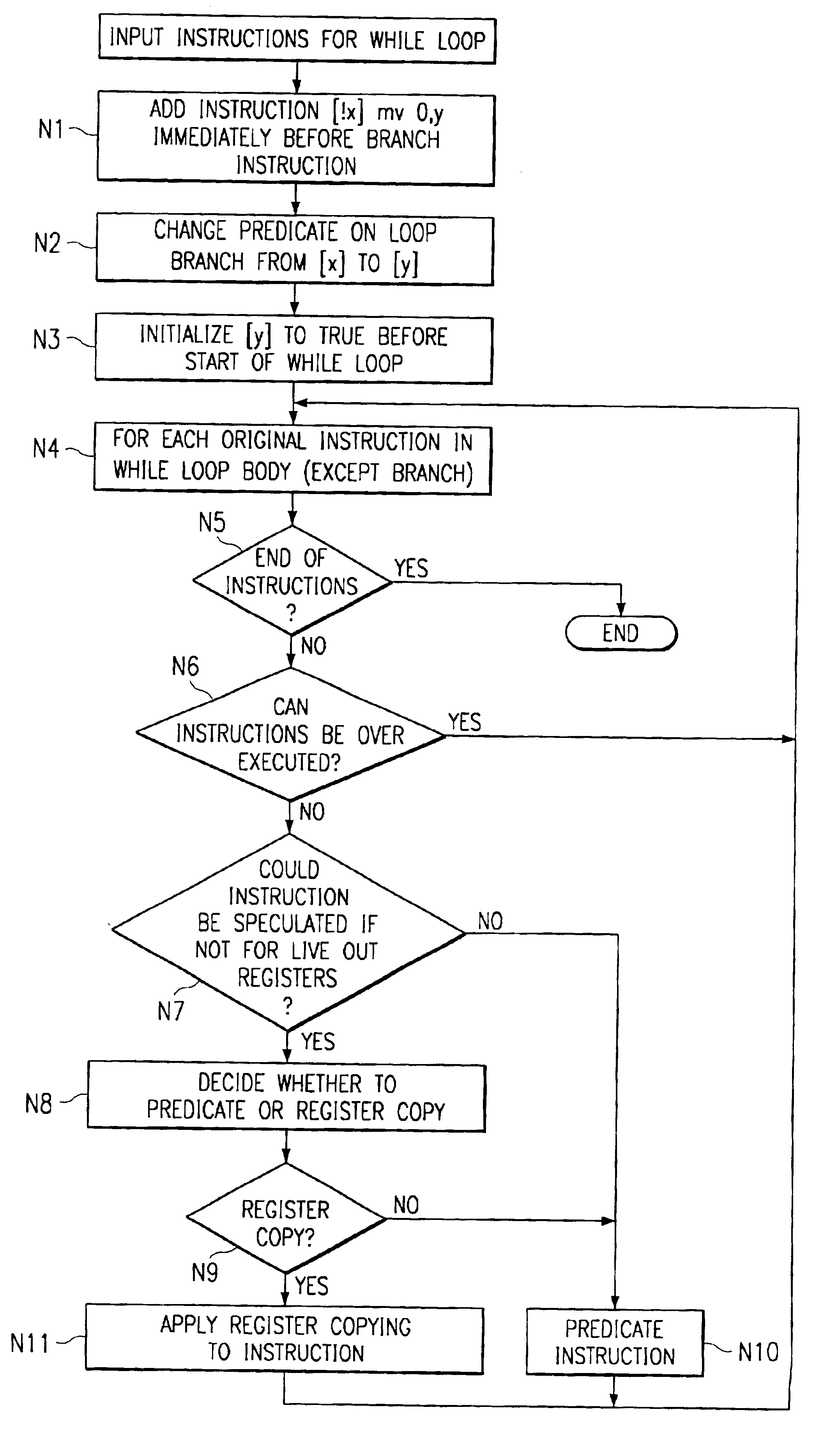

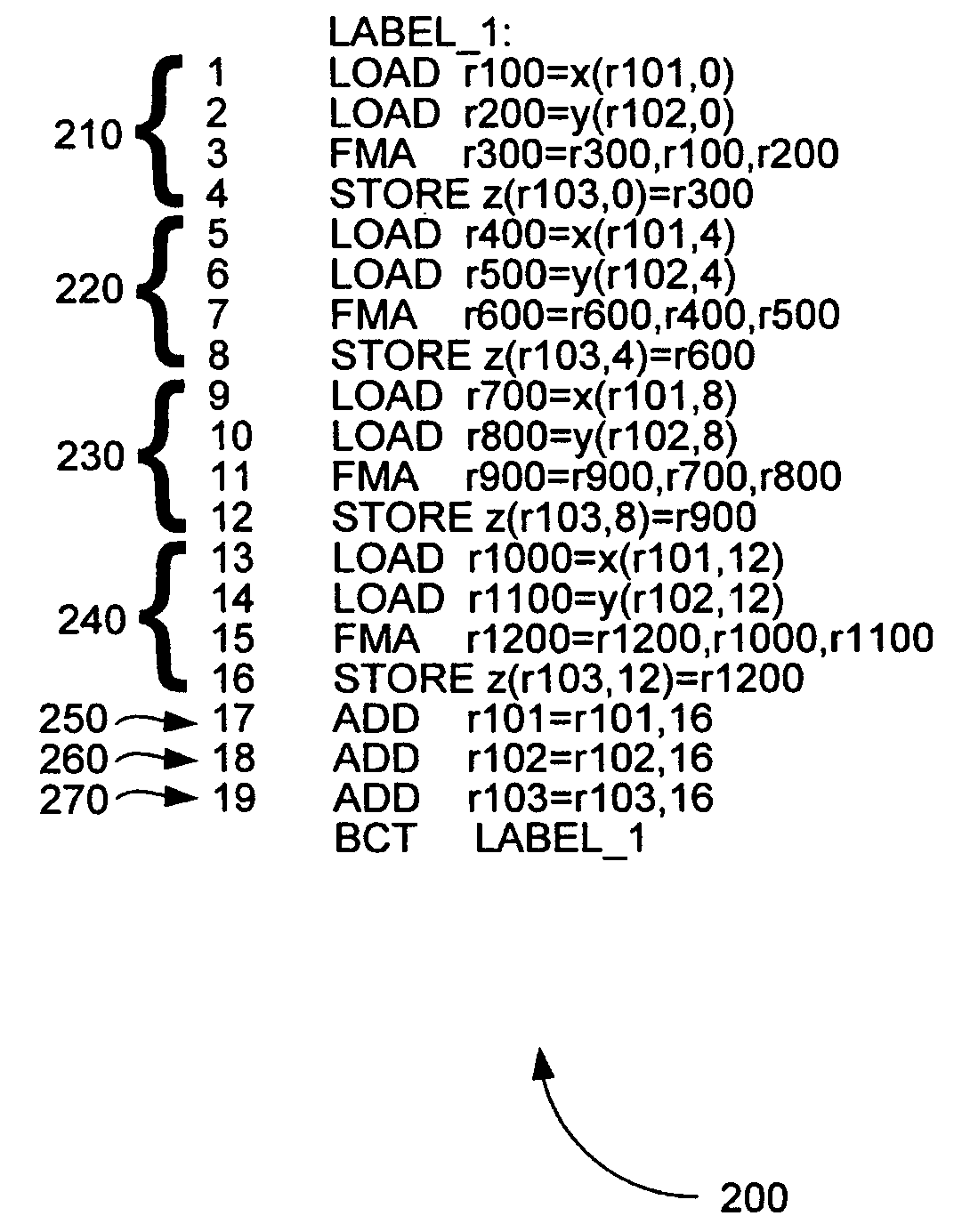

Method for software pipelining of irregular conditional control loops

Inactive | US20020120923A1 | Suppresses increase in code size | Reduce running time | Software engineering | Digital computer details | Processor register | Parallel computing

Owner:TEXAS INSTR INC

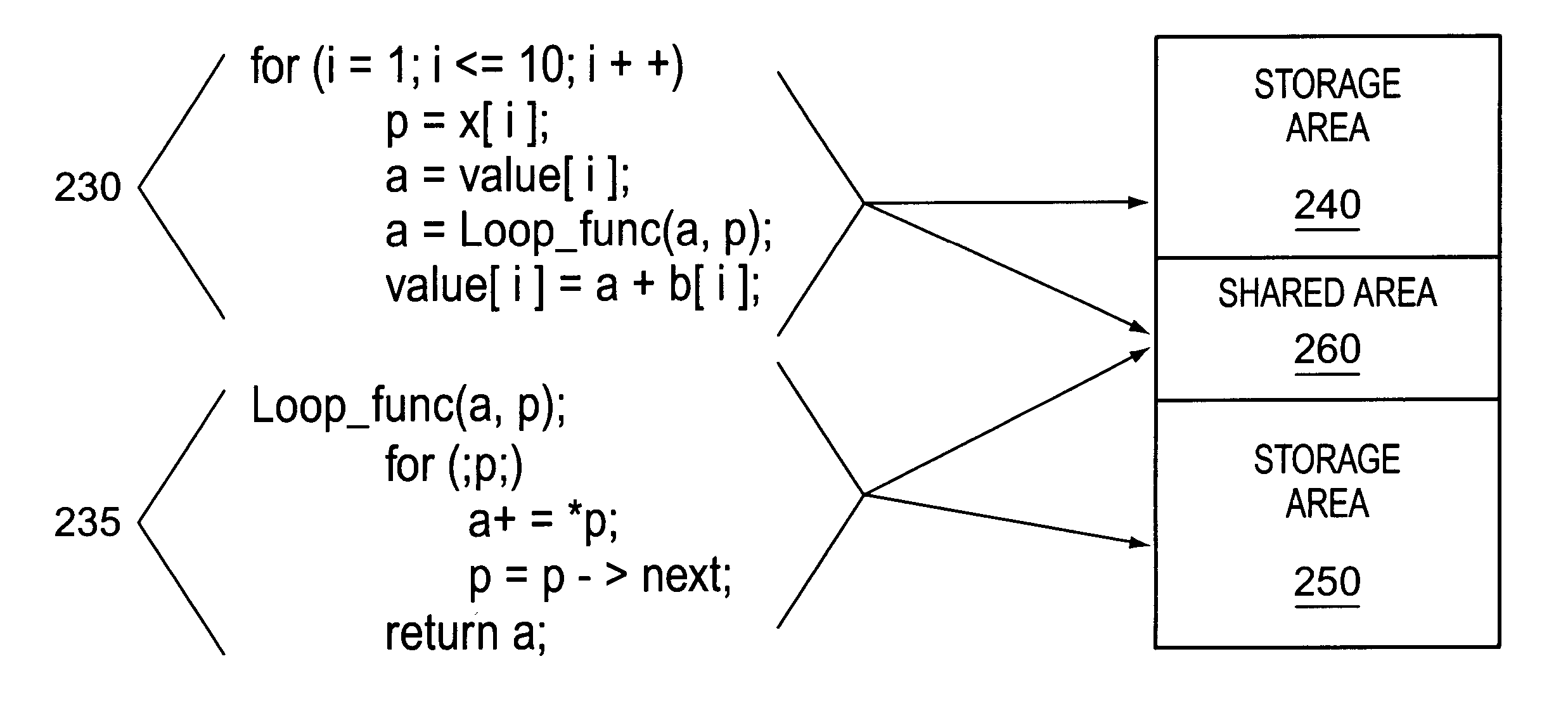

Method and apparatus for software pipelining of nested loops

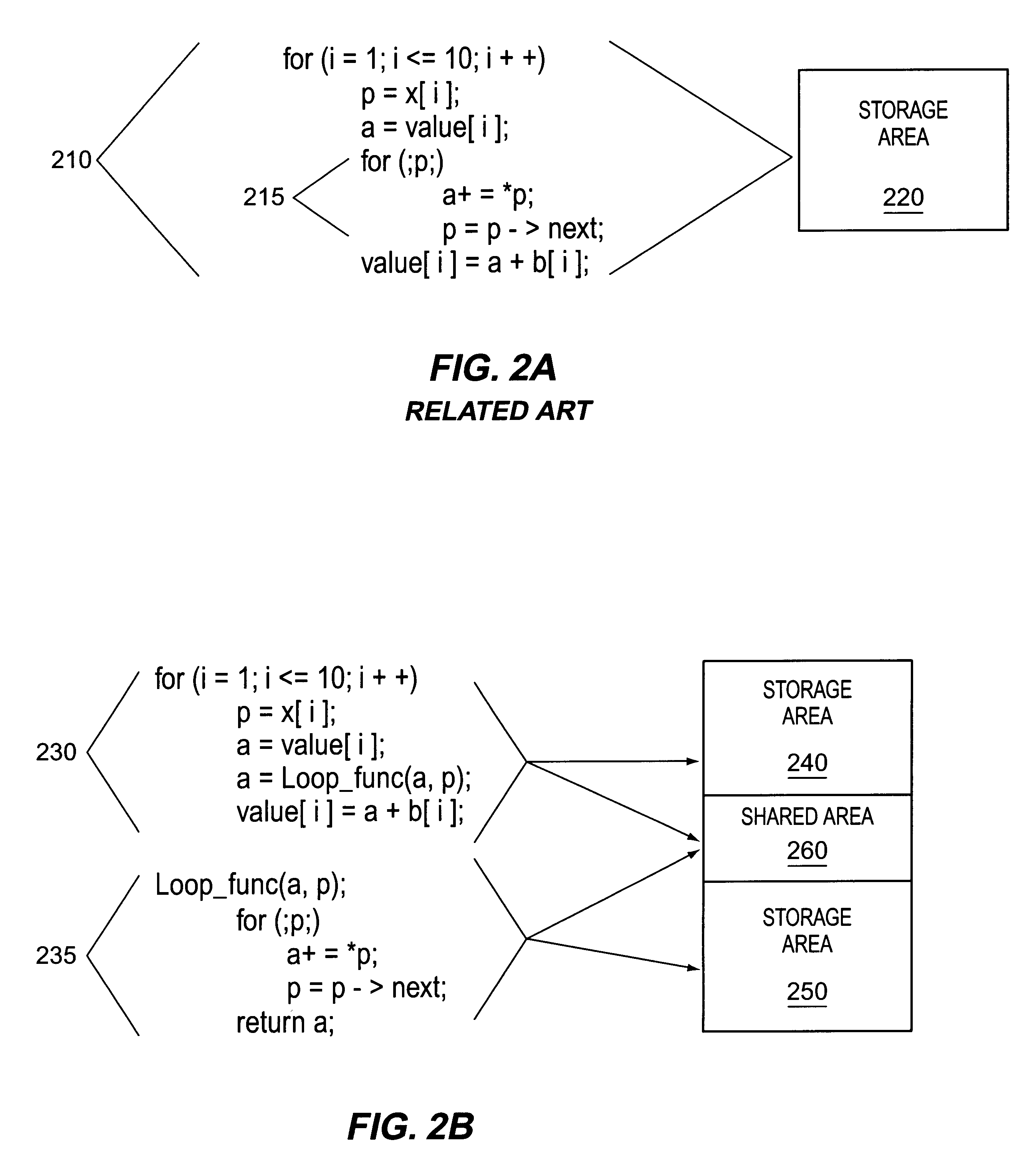

A method for executing software pipelined executable code generated by compiling a set of unexecutable instructions having an inner loop and an outer loop is disclosed. Instructions are executed that perform the operations specified in the outer loop using a first storage area. A second storage area is allocated for use when performing the operations specified in the inner loop. Instructions are then executed that perform the operations specified in the inner loop using the second storage area, wherein at least certain storage locations in the first storage area are not alterable while the operations specified in the inner loop are being performed.

Owner:INTEL CORP

Throughput-aware software pipelining for highly multi-threaded systems

Active | US20130111453A1 | Reducing scalarity | Reducing latency setting | Software engineering | Program control | Resource utilization | Processor register

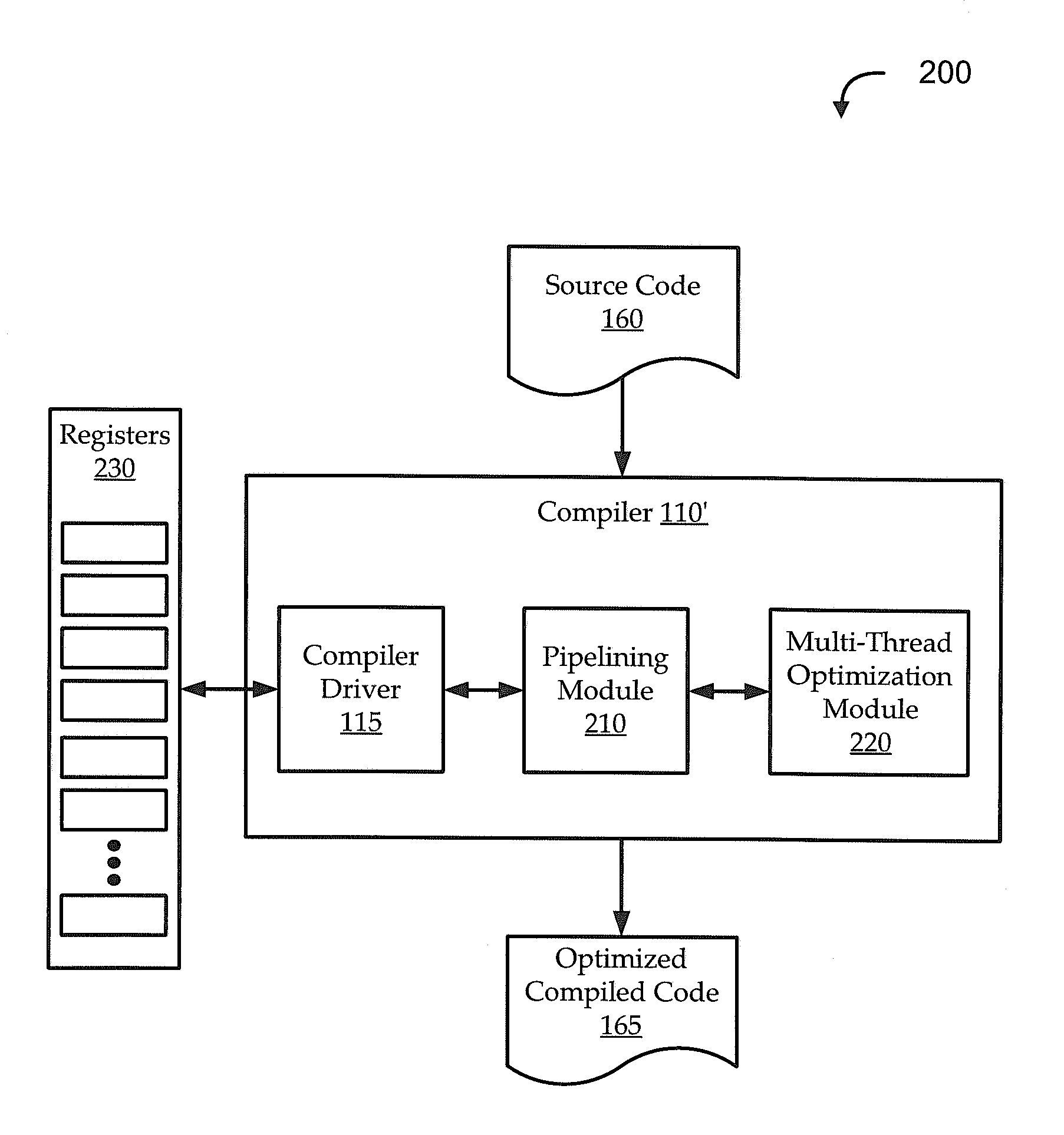

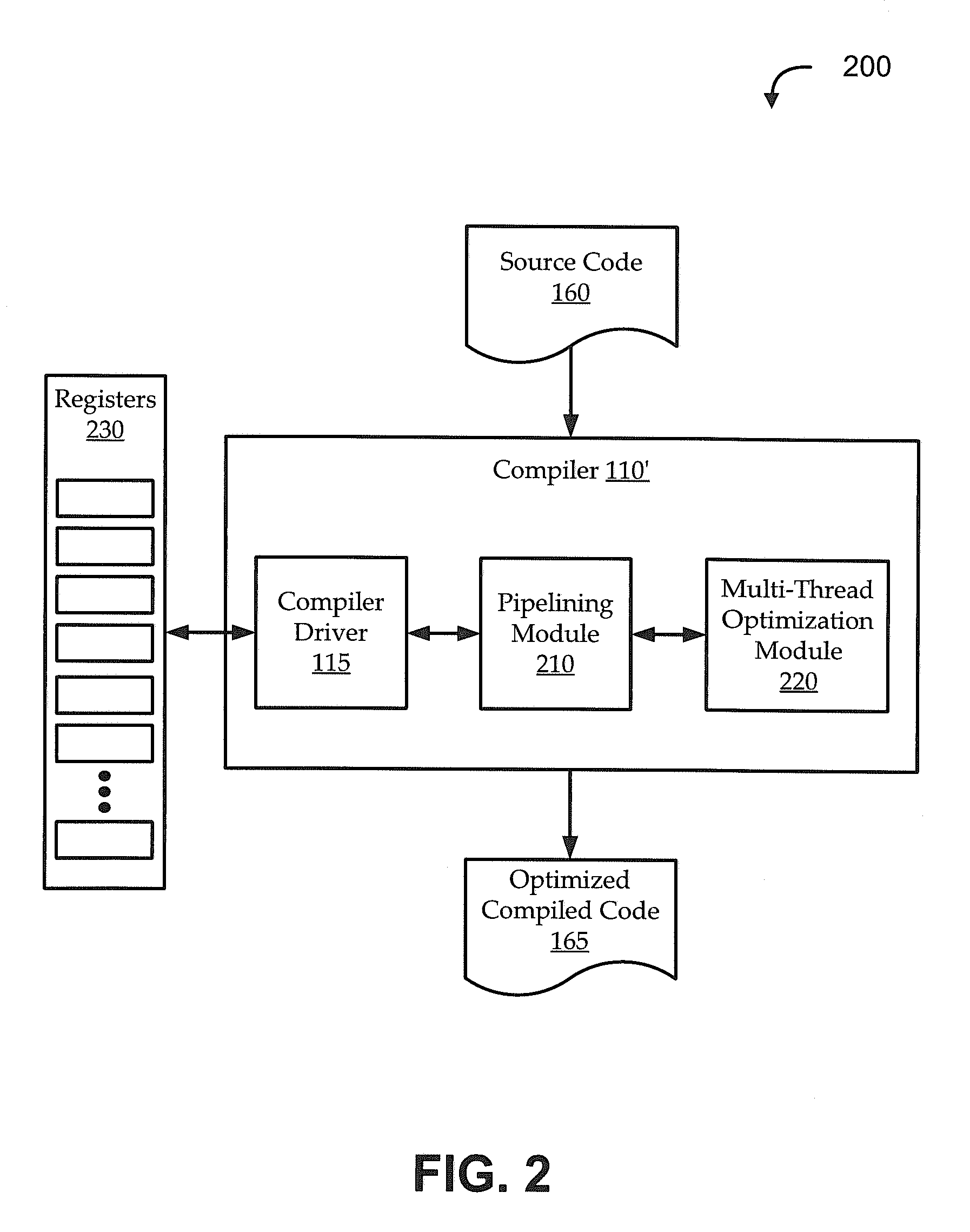

Embodiments of the invention provide systems and methods for throughput-aware software pipelining in compilers to produce optimal code for single-thread and multi-thread execution on multi-threaded systems. A loop is identified within source code as a candidate for software pipelining. An attempt is made to generate pipelined code (e.g., generate an instruction schedule and a set of register assignments) for the loop in satisfaction of throughput-aware pipelining criteria, like maximum register count, minimum trip count, target core pipeline resource utilization, maximum code size, etc. If the attempt fails to generate code in satisfaction of the criteria, embodiments adjust one or more settings (e.g., by reducing scalarity or latency settings being used to generate the instruction schedule). Additional attempts are made to generate pipelined code in satisfaction of the criteria by iteratively adjusting the settings, regenerating the code using the adjusted settings, and recalculating whether the code satisfies the criteria.

Owner:ORACLE INT CORP
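
The retry loop in this abstract (attempt, check criteria, adjust settings, try again) can be sketched as follows. Everything concrete here is an assumption made for illustration: `try_schedule` is a toy cost model rather than a real pipeliner, the only criterion checked is a maximum register count, and the order in which settings are reduced (latency first, then scalarity) is arbitrary.

```python
def try_schedule(num_ops, scalarity, latency):
    """Toy stand-in for a pipeliner: returns (register_count, kernel_cycles)."""
    kernel_cycles = -(-num_ops // scalarity)          # ceil(num_ops / scalarity)
    stages_in_flight = -(-latency // kernel_cycles)   # iterations overlapped
    return num_ops * stages_in_flight, kernel_cycles


def pipeline_with_budget(num_ops, scalarity, latency, max_registers):
    """Regenerate the schedule with reduced settings until the budget is met."""
    while True:
        registers, cycles = try_schedule(num_ops, scalarity, latency)
        if registers <= max_registers:
            return {"scalarity": scalarity, "latency": latency,
                    "registers": registers, "kernel_cycles": cycles}
        if latency > 1:
            latency -= 1          # assumed policy: relax the latency setting first
        elif scalarity > 1:
            scalarity -= 1        # then relax the scalarity setting
        else:
            return None           # no setting satisfies the criterion
```

A real compiler would check several criteria at once, such as trip count and code size, but the control flow stays the same: each failed attempt changes a setting and triggers another attempt.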

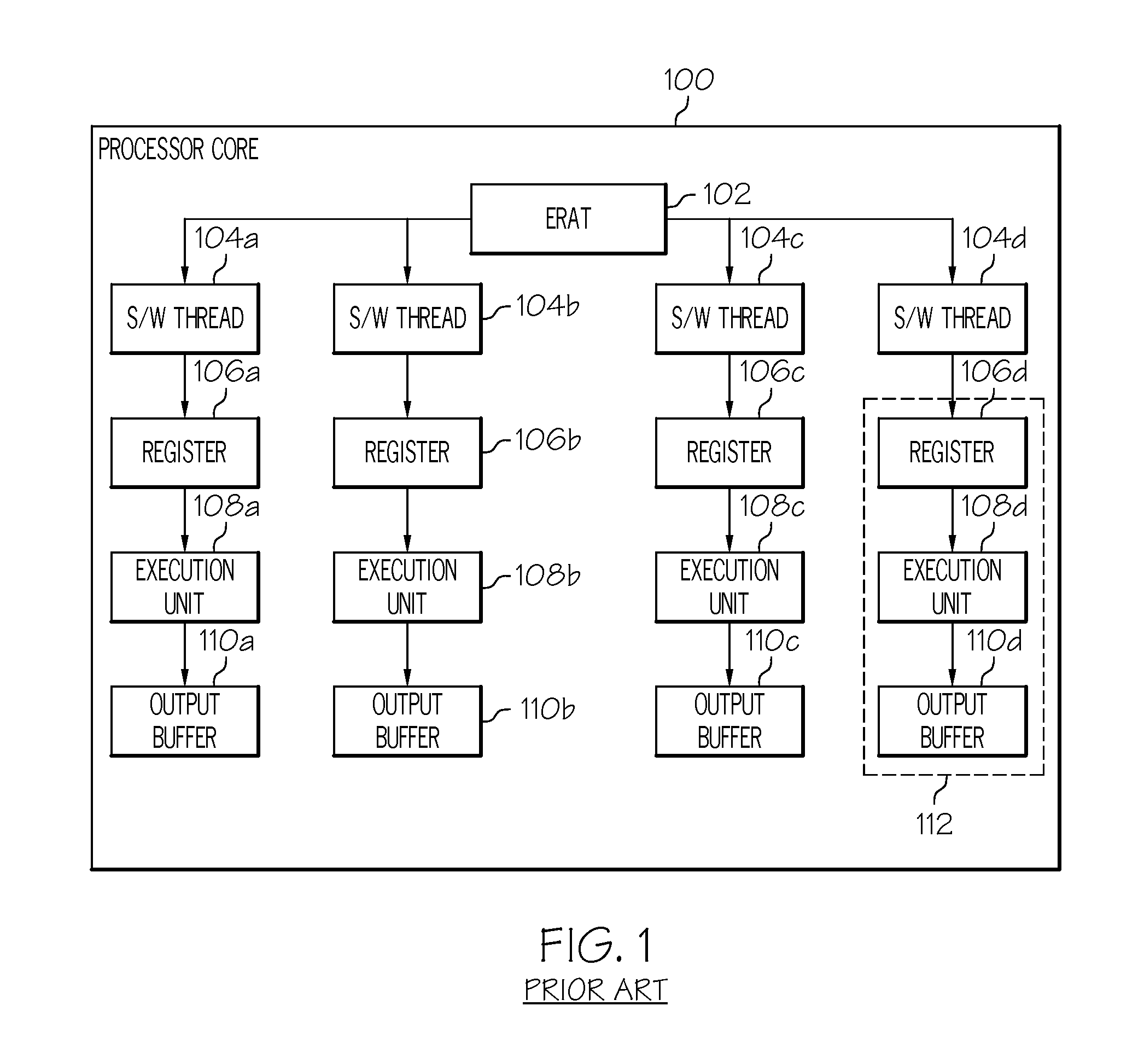

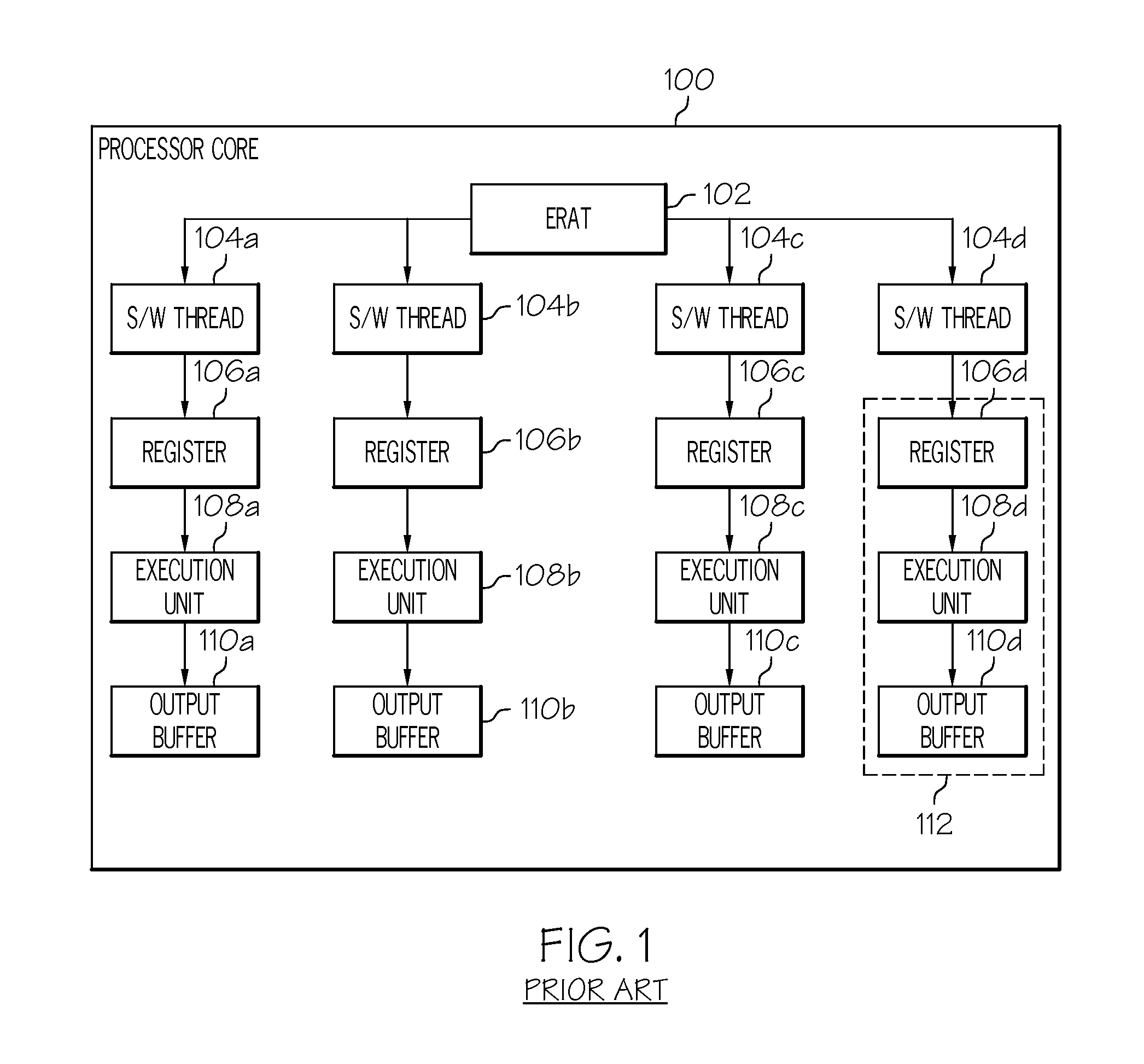

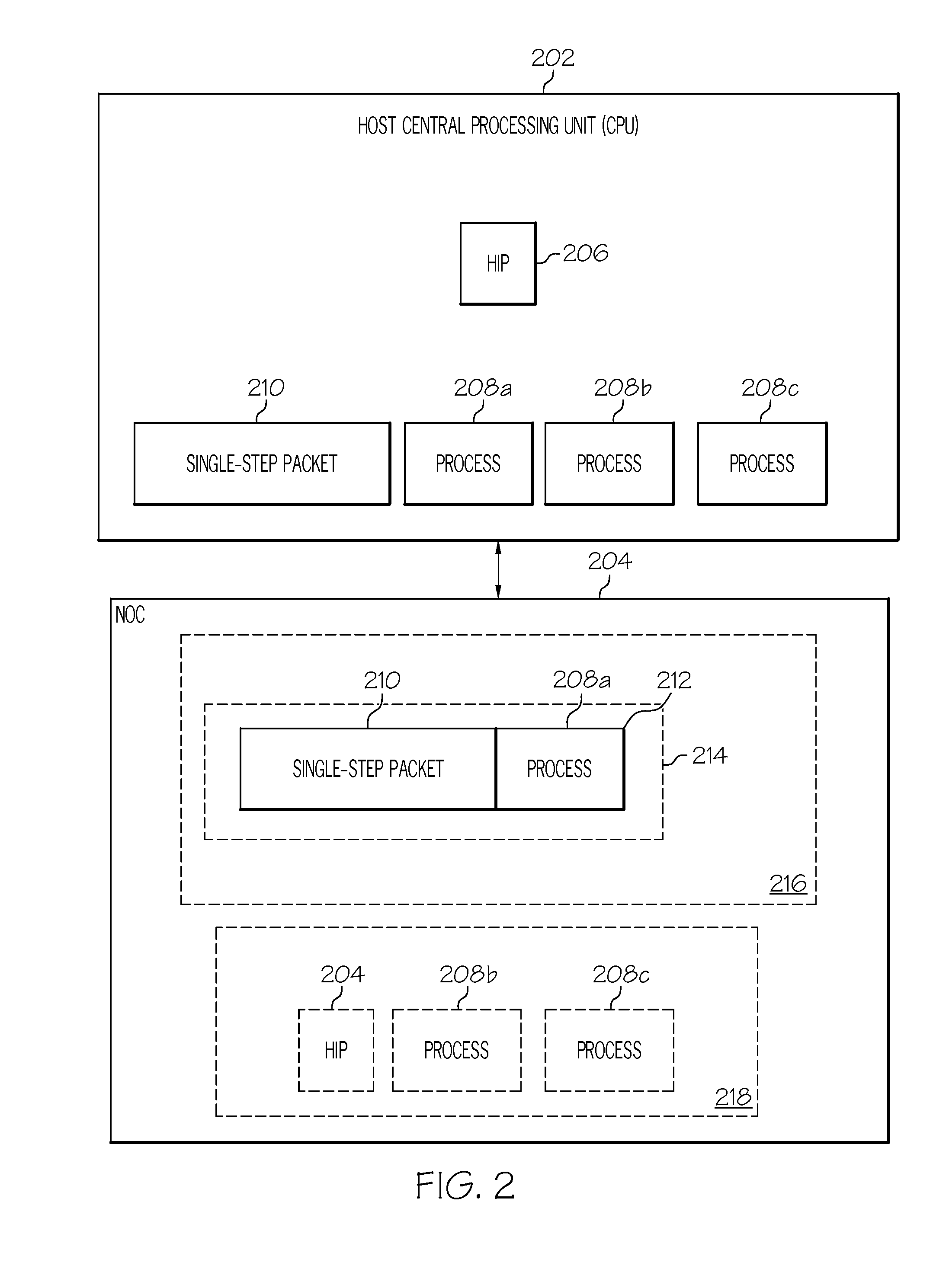

Single step mode in a software pipeline within a highly threaded network on a chip microprocessor

A hardware thread is selectively forced to single step the execution of software instructions from a work packet granule. A “single step” packet is associated with a work packet granule. The work packet granule, with the associated “single step” packet, is dispatched as an appended work packet granule to a preselected hardware thread in a processor core, which, in one embodiment, is located at a node in a Network On a Chip (NOC). The work packet granule then executes in a single step mode until completion.

Owner:IBM CORP

Single step mode in a software pipeline within a highly threaded network on a chip microprocessor

Inactive | US8140832B2 | Error detection/correction | Digital computer details | Hardware thread | Software pipelining

A hardware thread is selectively forced to single step the execution of software instructions from a work packet granule. A “single step” packet is associated with a work packet granule. The work packet granule, with the associated “single step” packet, is dispatched as an appended work packet granule to a preselected hardware thread in a processor core, which, in one embodiment, is located at a node in a Network On a Chip (NOC). The work packet granule then executes in a single step mode until completion.

Owner:INT BUSINESS MASCH CORP

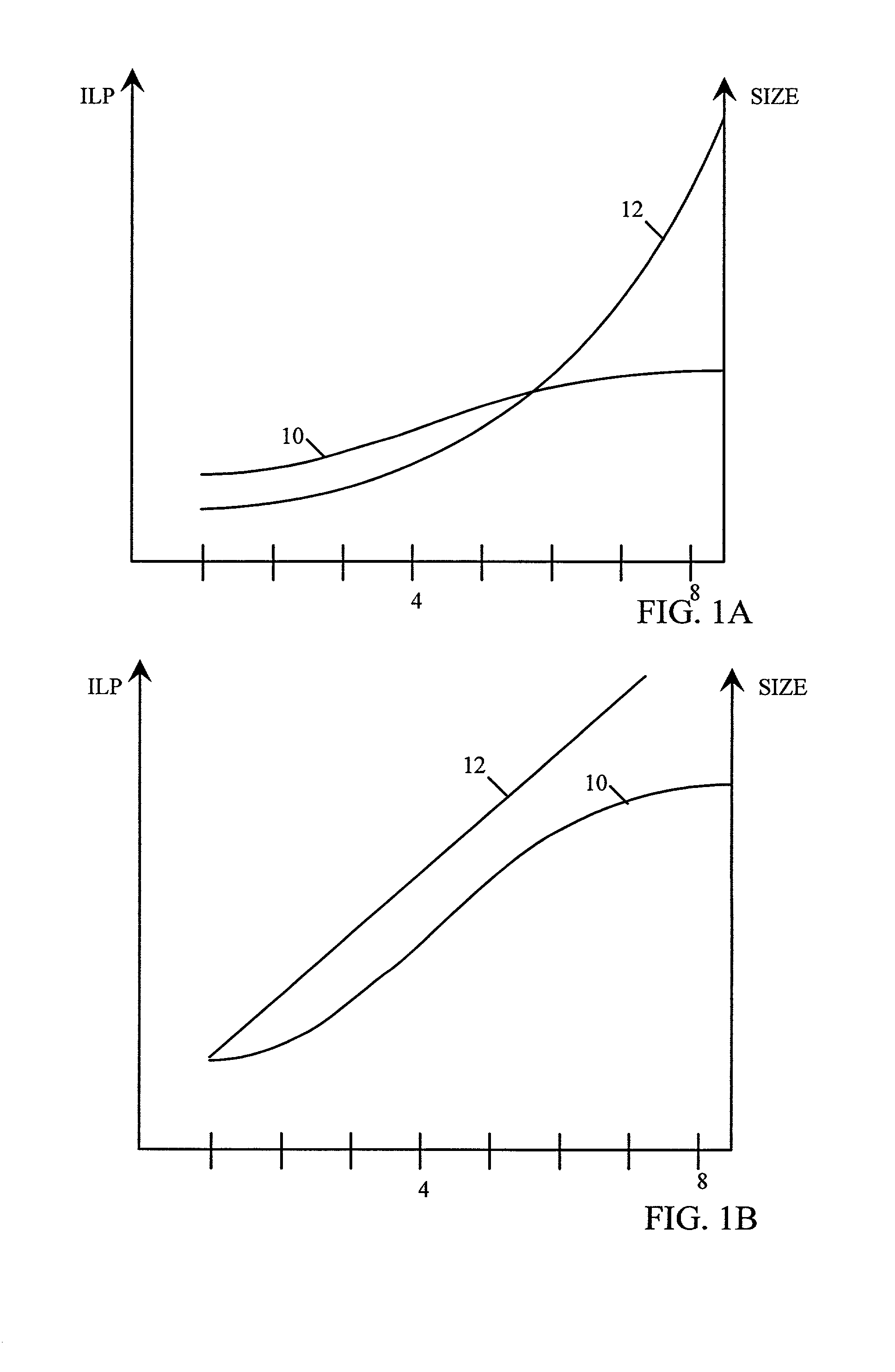

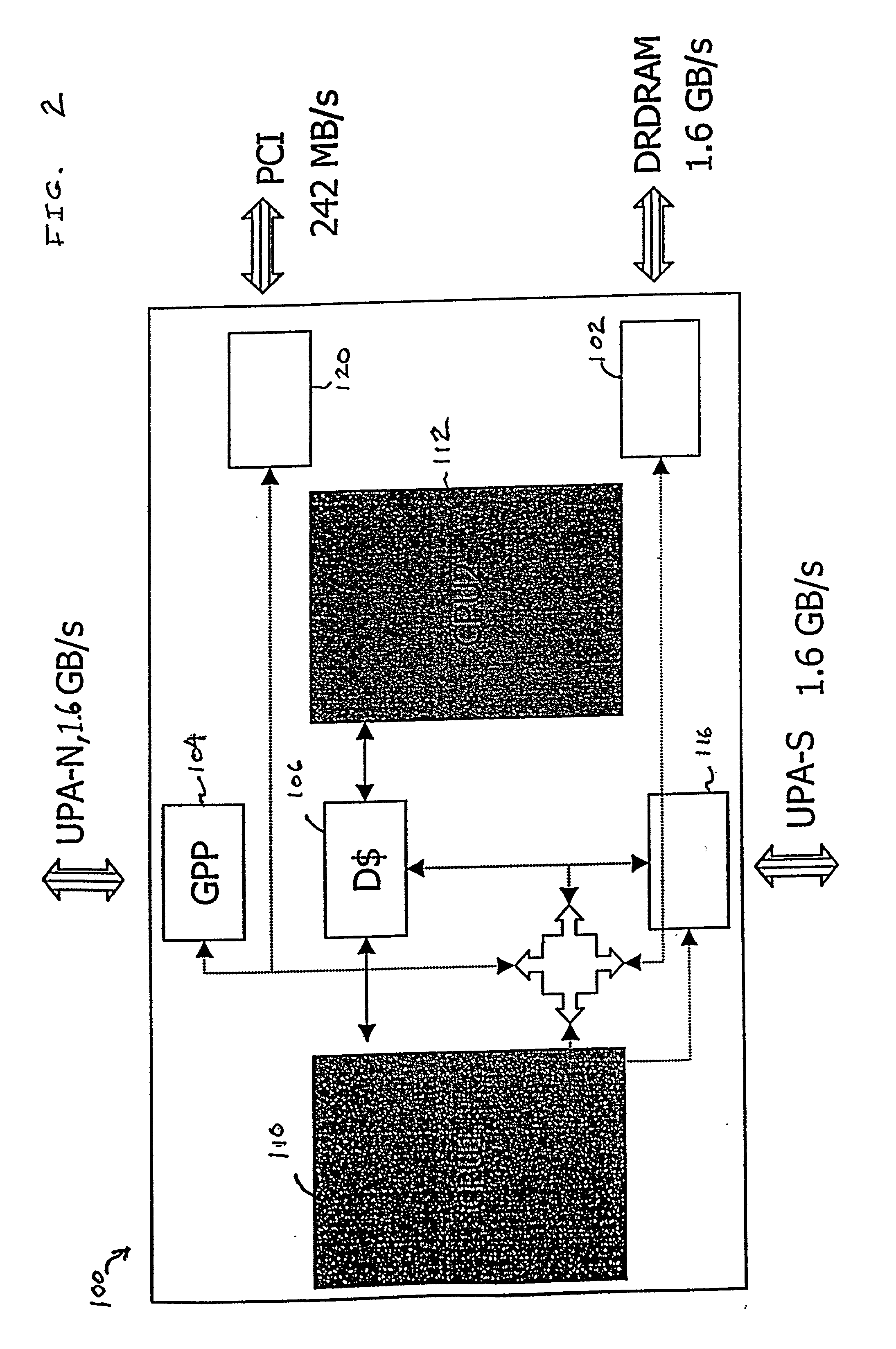

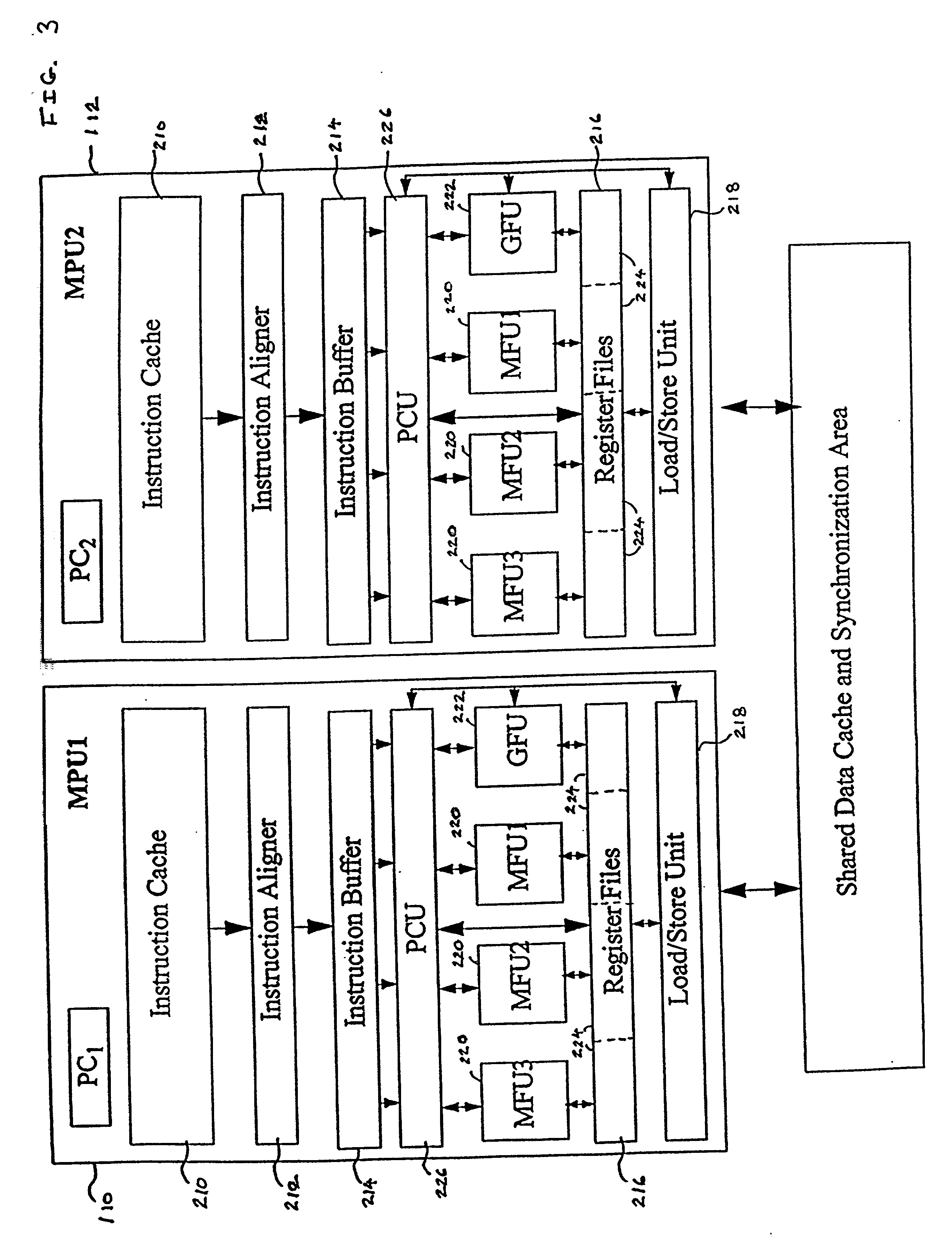

Variable issue-width vliw processor

Inactive | US20010042187A1 | Register arrangements | Instruction analysis | Main processing unit | Software pipelining

A processor has a flexible architecture that efficiently handles computing applications having a range of instruction-level parallelism from a very low degree to a very high degree of instruction-level parallelism. The processor includes a plurality of processing units, an individual processing unit of the plurality of processing units including a multiple-instruction parallel execution path. For computing applications having a low degree of instruction-level parallelism, the processor includes control logic that controls the plurality of processing units to execute instructions mutually independently in a plurality of independent execution threads. For computing applications having a high degree of instruction-level parallelism, the processor further includes control logic that controls the plurality of processing units with a low thread synchronization to operate in combination using spatial software pipelining in the manner of a single wide-issue processor. The control logic in the processor alternatively controls the plurality of processing units to operate: (1) in a multiple-thread operation on the basis of a highly parallel structure including multiple independent parallel execution paths for executing in parallel across threads and a multiple-instruction parallel pathway within a thread, and (2) in a single-thread wide-issue operation on the basis of the highly parallel structure including multiple parallel execution paths with low level synchronization for executing the single wide-issue thread. The multiple independent parallel execution paths include functional units that execute an instruction set including special data-handling instructions that are advantageous in a multiple-thread environment.

Owner:SUN MICROSYSTEMS INC

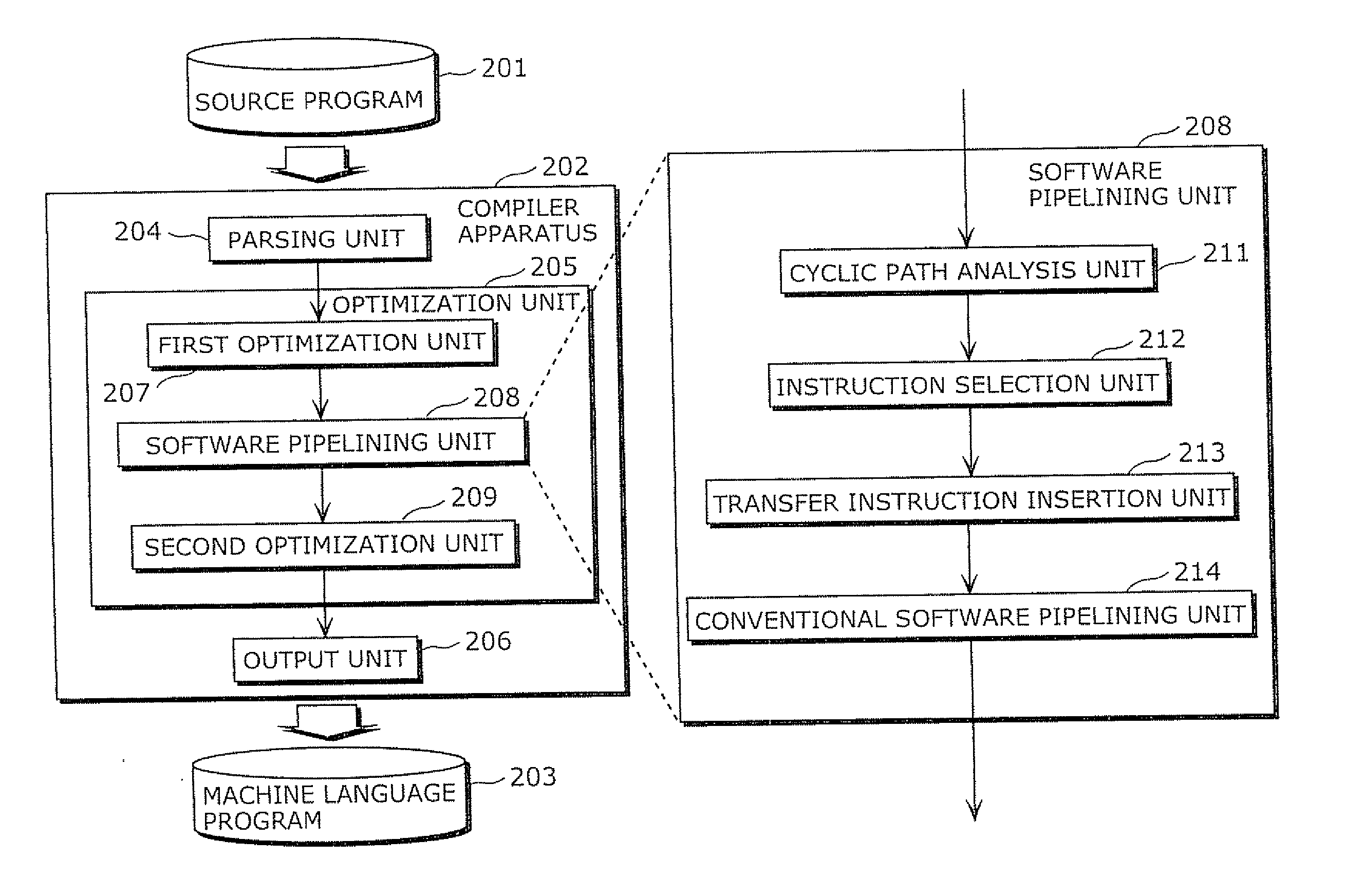

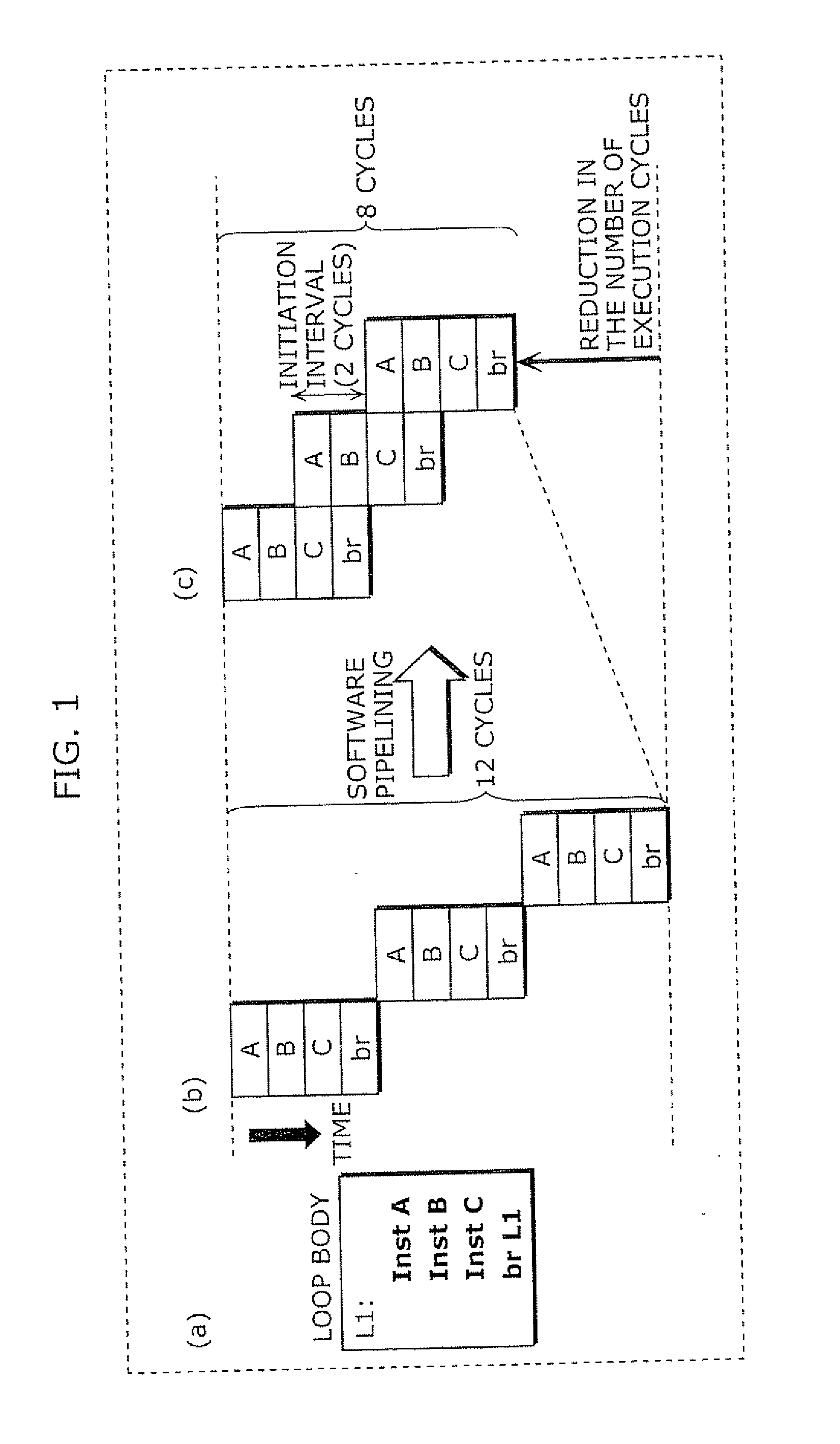

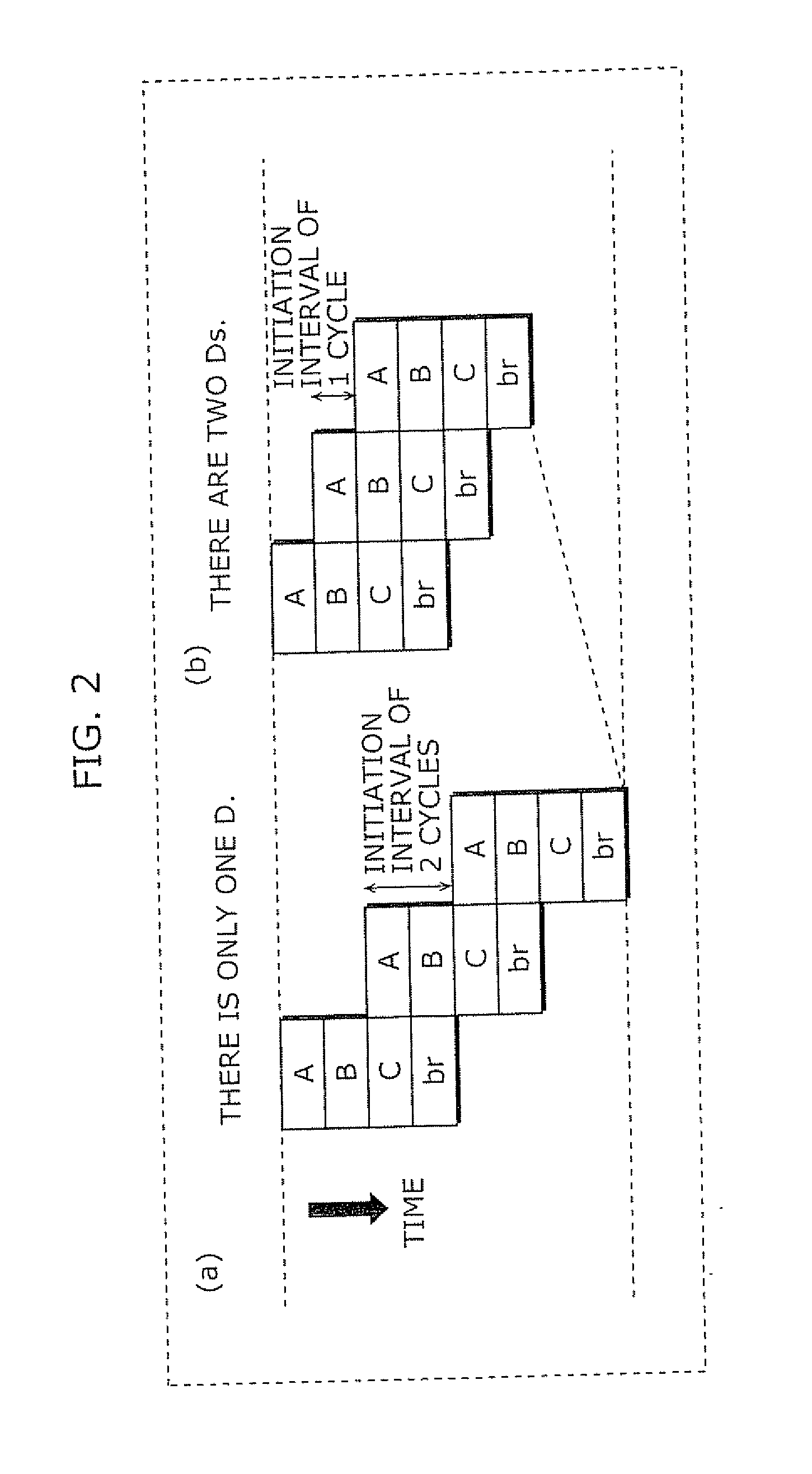

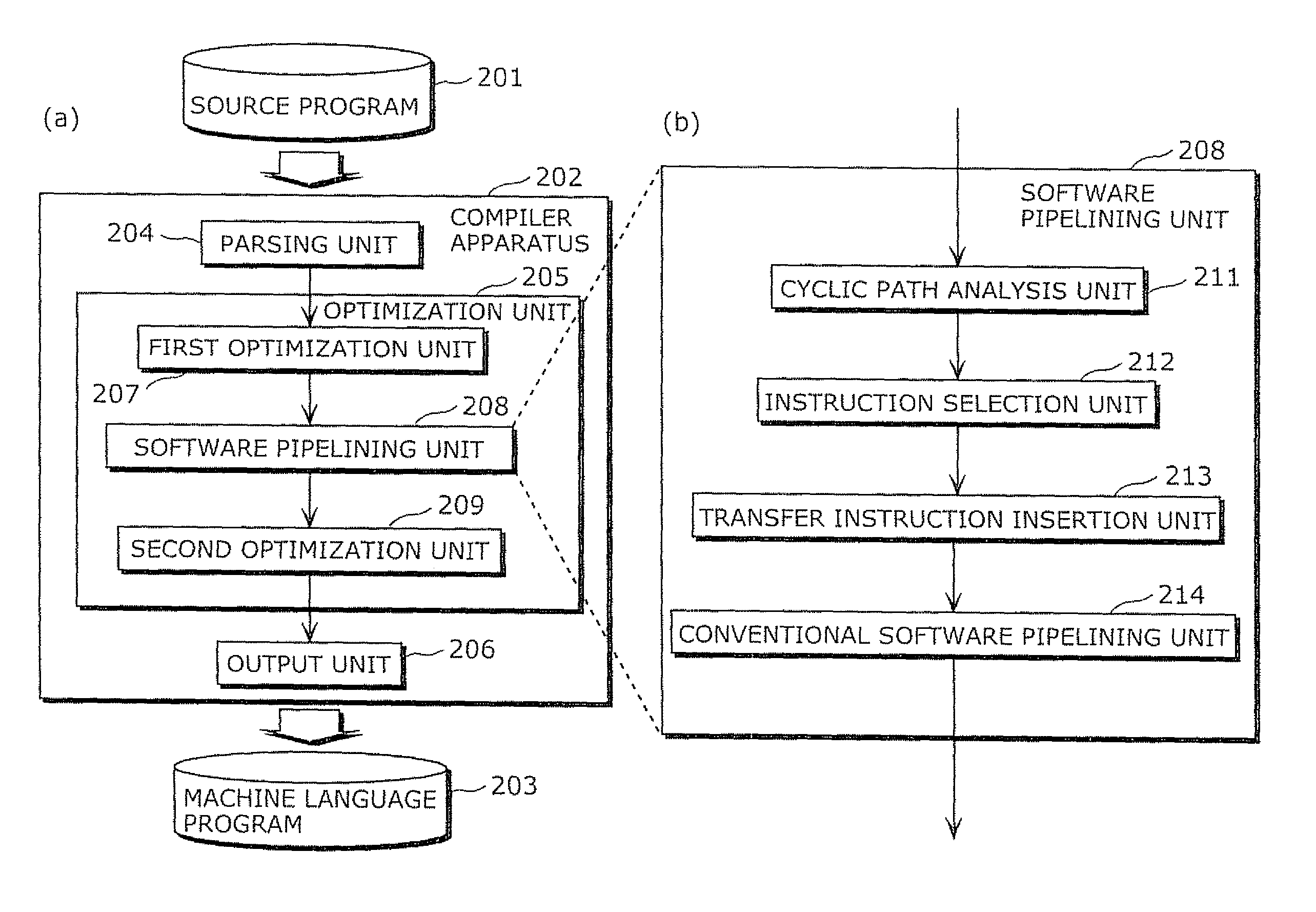

Compiler apparatus

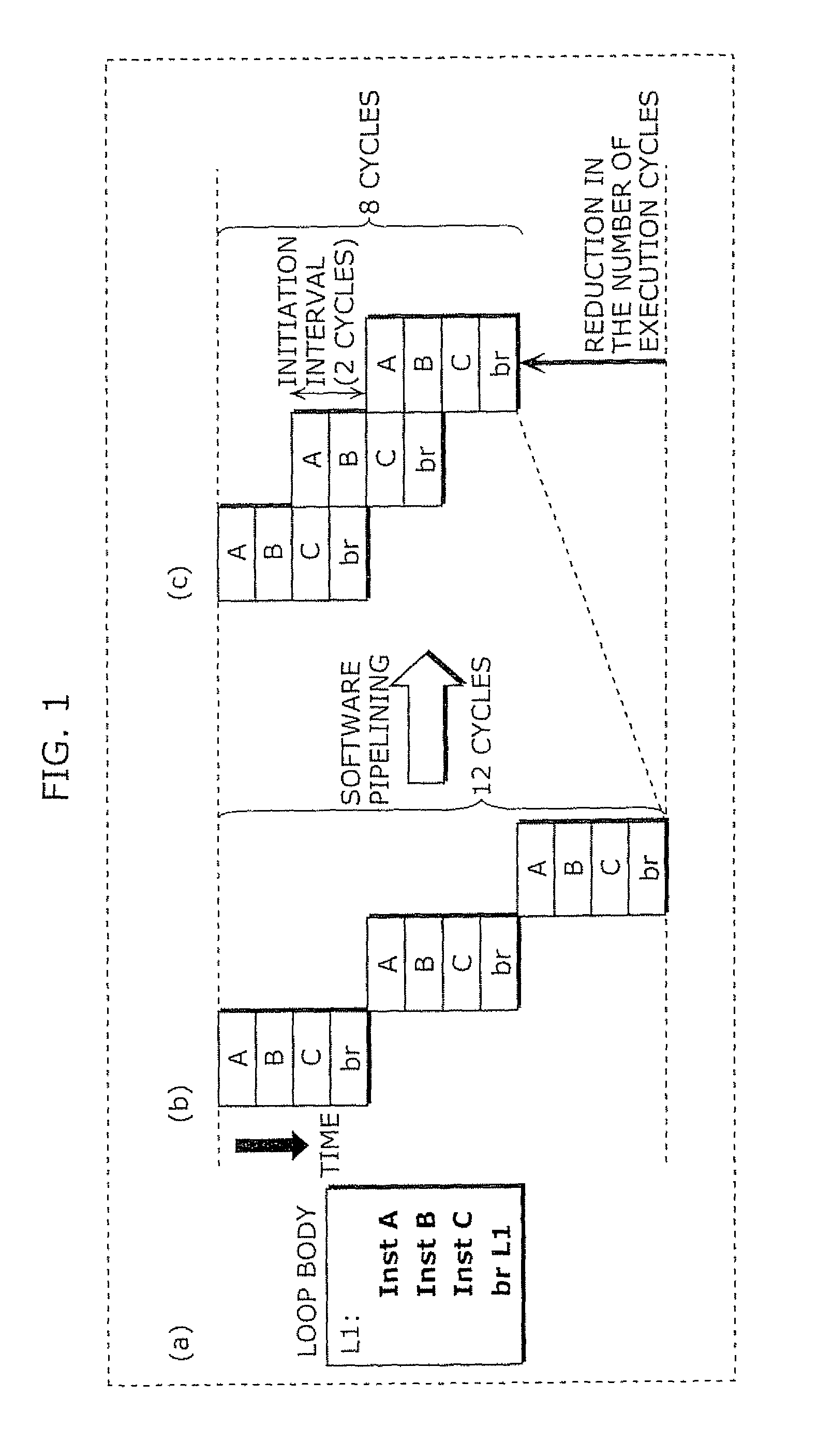

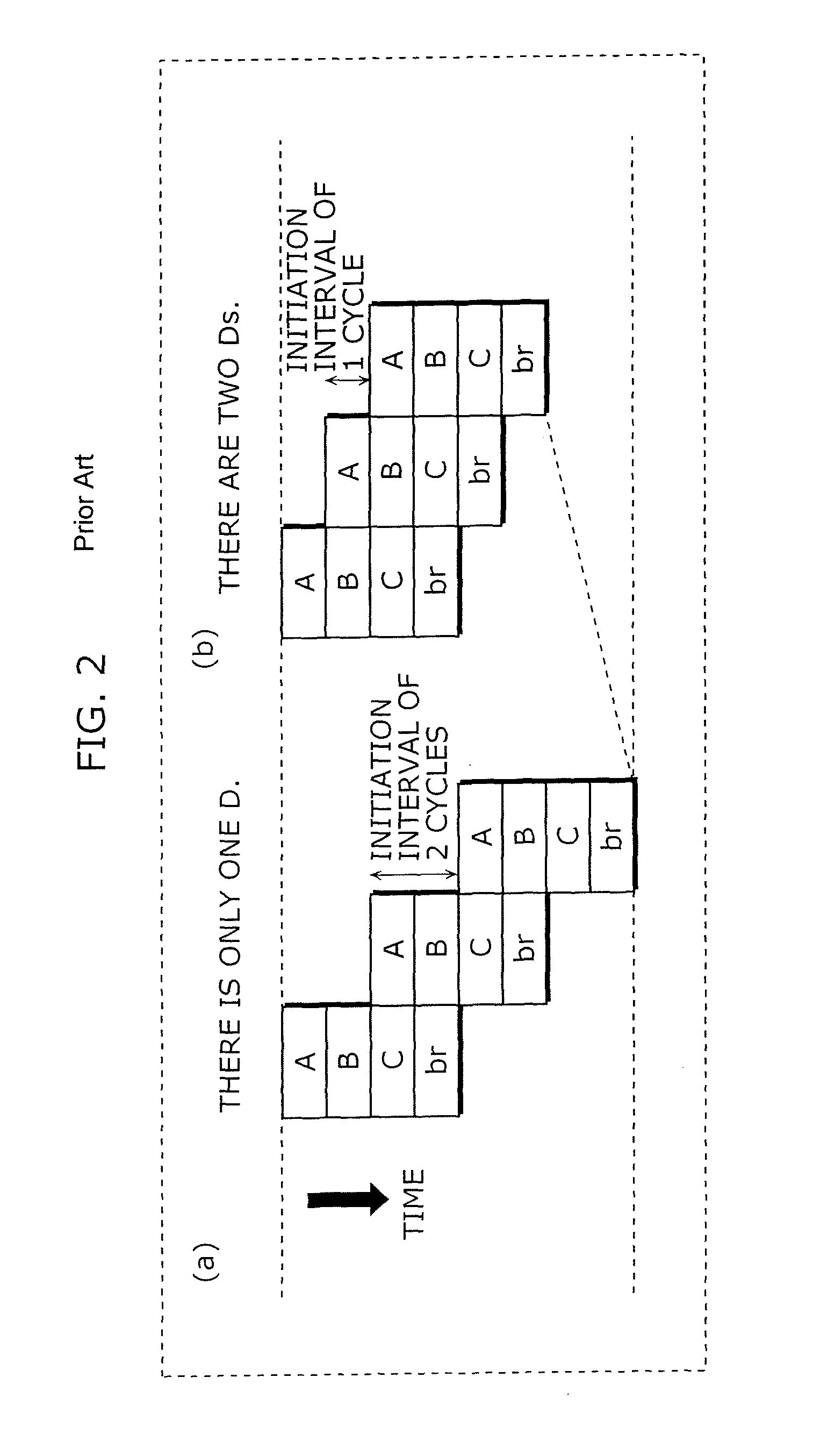

Active | US20060277529A1 | Reduce the number of executions | Reduce in quantity | Software engineering | Program control | Cyclic process | Operand

A compiler apparatus, which can perform software pipelining optimization that has a considerable effect of reducing the number of execution cycles taken to complete a loop process, converts a source program into a machine program for a processor which is capable of parallel processing. The compiler apparatus is composed of: a parsing unit operable to parse the source program and then to convert the source program into an intermediate program which is described in an intermediate language; an optimization unit operable to optimize the intermediate program; and a conversion unit operable to convert the optimized intermediate program into the machine language program, wherein the optimization unit is operable to execute software pipelining, by inserting a transfer instruction, which is used for transferring data between operands, into a loop process included in the intermediate program so that a data dependence relation is changed.

Owner:SOCIONEXT INC

Rolling texture context data structure for maintaining texture data in a multithreaded image processing pipeline

Inactive | US20110292063A1 | Easy access | Multiprogramming arrangements | Character and pattern recognition | Imaging processing | Computer graphics (images)

A multithreaded rendering software pipeline architecture utilizes a rolling texture context data structure to store multiple texture contexts that are associated with different textures that are being processed in the software pipeline. Each texture context stores state data for a particular texture, and facilitates the access to texture data by multiple, parallel stages in a software pipeline. In addition, texture contexts are capable of being “rolled”, or copied to enable different stages of a rendering pipeline that require different state data for a particular texture to separately access the texture data independently from one another, and without the necessity for stalling the pipeline to ensure synchronization of shared texture data among the stages of the pipeline.

Owner:IBM CORP

Streaming physics collision detection in multithreaded rendering software pipeline

Inactive | US20110283086A1 | Reduce memory bandwidth requirement | Improve performance | Program control using wired connections | General purpose stored program computer | Computer hardware | Hardware thread

A circuit arrangement, program product and method stream level of detail components between hardware threads in a multithreaded circuit arrangement to perform physics collision detection. Typically, a master hardware thread, e.g., a component loader hardware thread, is used to retrieve level of detail data for an object from a memory and stream the data to one or more slave hardware threads, e.g., collision detection hardware threads, to perform the actual collision detection. Because the slave hardware threads receive the level of detail data from the master thread, typically the slave hardware threads are not required to load the data from the memory, thereby reducing memory bandwidth requirements and accelerating performance.

Owner:IBM CORP

Rolling texture context data structure for maintaining texture data in a multithreaded image processing pipeline

Inactive | US8405670B2 | Easy access | Character and pattern recognition | Multiprogramming arrangements | Imaging processing | Computer graphics (images)

A multithreaded rendering software pipeline architecture utilizes a rolling texture context data structure to store multiple texture contexts that are associated with different textures that are being processed in the software pipeline. Each texture context stores state data for a particular texture, and facilitates the access to texture data by multiple, parallel stages in a software pipeline. In addition, texture contexts are capable of being “rolled”, or copied to enable different stages of a rendering pipeline that require different state data for a particular texture to separately access the texture data independently from one another, and without the necessity for stalling the pipeline to ensure synchronization of shared texture data among the stages of the pipeline.

Owner:INT BUSINESS MASCH CORP

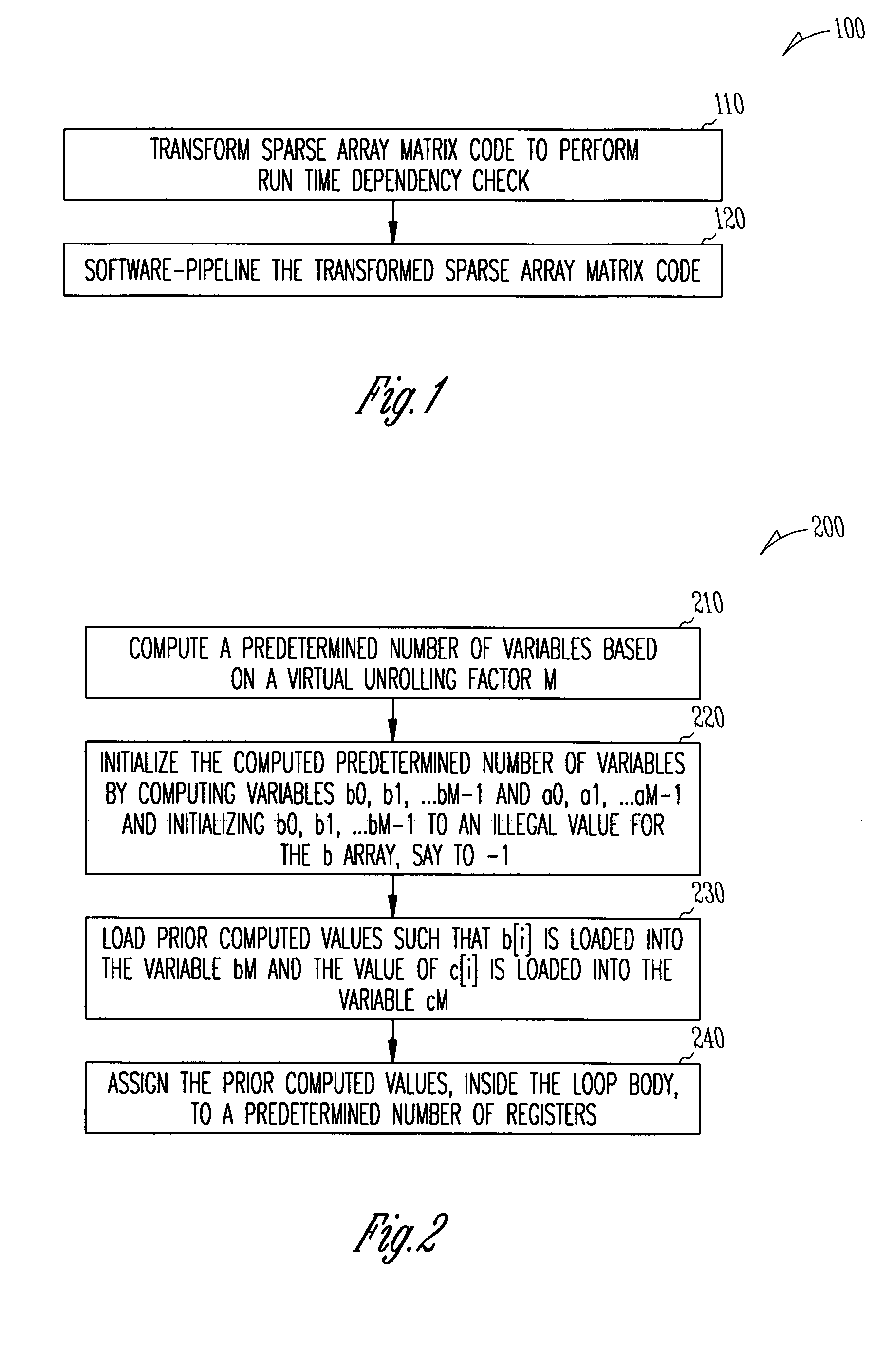

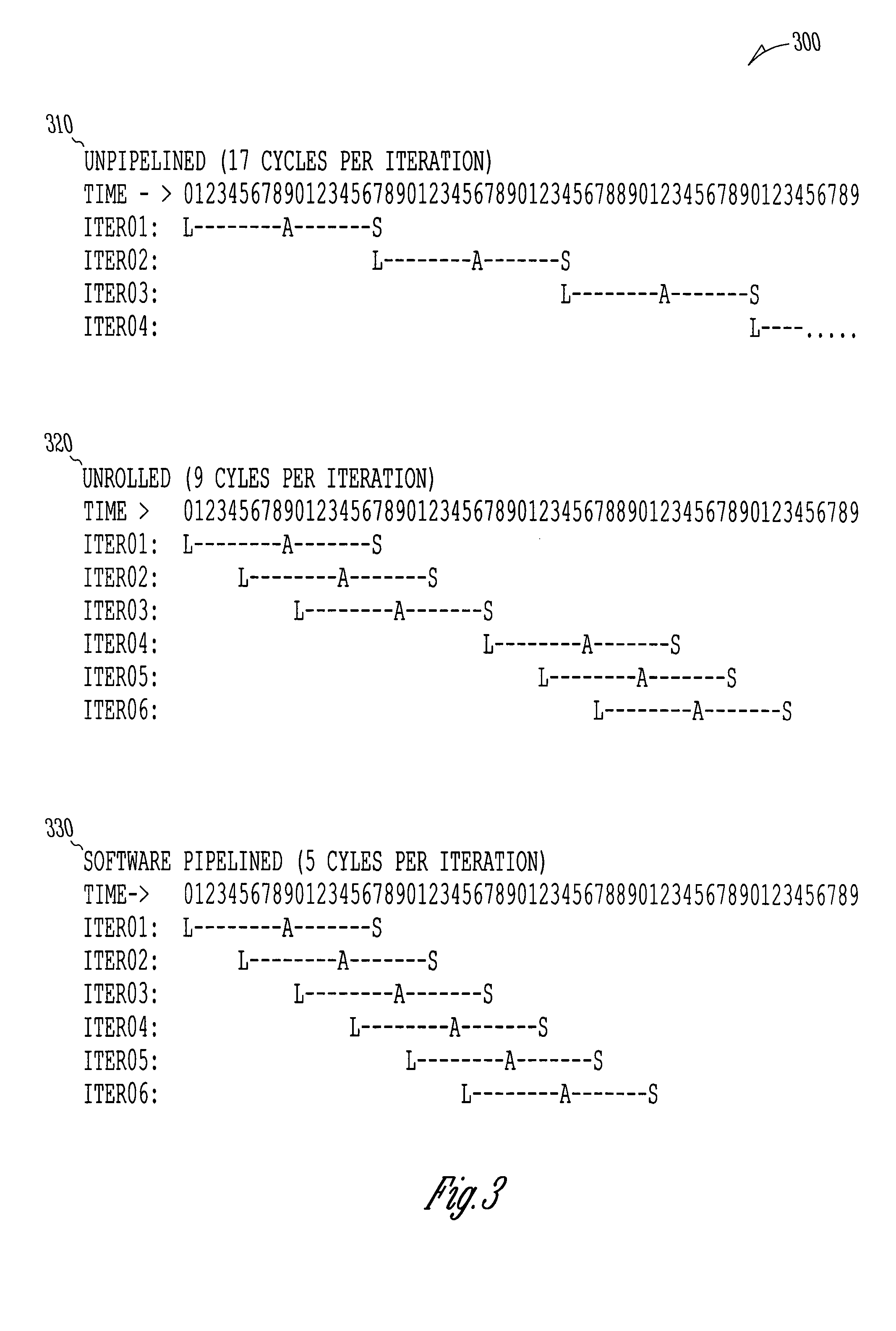

System and method for software-pipelining of loops with sparse matrix routines

A method that uses software-pipelining to translate programs, from higher level languages into equivalent object or machine language code for execution on a computer, including sparse arrays / matrices. In one example embodiment, this is accomplished by transforming sparse array matrix source code and software-pipelining the transformed source code to reduce recurrence initiation interval, decrease run time, and enhance performance.

Owner:INTEL CORP

Method for software pipelining of irregular conditional control loops

Inactive | US6892380B2 | Suppresses increase in code size | Minimum trip count | Software engineering | Digital computer details | Processor register | Parallel computing

A method for software pipelining of irregular conditional control loops including pre-processing the loops so they can be safely software pipelined. The pre-processing step ensures that each original instruction in the loop body can be over-executed as many times as necessary. During the pre-processing stage, each instruction in the loop body is processed in turn (N4). If the instruction can be safely speculatively executed, it is left alone (N6). If it could be safely speculatively executed except that it modifies registers that are live out of the loop, then the instruction can be pre-processed using predication or register copying (N7, N8, N9). Otherwise, predication must be applied (N10). Predication is the process of guarding an instruction. When the guard condition is true, the instruction executes as though it were unguarded. When the guard condition is false, the instruction is nullified.

Owner:TEXAS INSTR INC
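
Predication as described here can be illustrated with a small sketch, assuming a loop that exits at the first negative value. The function names and the `extra_iterations` parameter are hypothetical. The second version deliberately runs past the original exit point, as a software-pipelined loop might, and relies on a guard to nullify the update once the exit condition has fired.

```python
def first_negative_index(values):
    """Irregular loop: exits as soon as a negative value is seen."""
    i = 0
    while i < len(values) and values[i] >= 0:
        i += 1
    return i


def first_negative_index_predicated(values, extra_iterations=2):
    """Same result, but the body may be over-executed because it is guarded."""
    i = 0
    guard = True                                  # true until the exit condition fires
    for step in range(len(values) + extra_iterations):
        in_range = step < len(values)
        # Short-circuit evaluation keeps the read of values[step] guarded too.
        guard = guard and in_range and values[step] >= 0
        # Predicated update: executes normally when the guard is true,
        # and is nullified (leaves i unchanged) when the guard is false.
        i = i + 1 if guard else i
    return i
```

The variable `i` plays the role of a register that is live out of the loop: over-executing an unguarded `i += 1` would corrupt the result, which is why the update has to be guarded.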

Scheduling technique for software pipelining

Inactive | US20050034111A1 | Reduce pressure | Convenient teaching | Software engineering | Program control | Scheduling instructions | Processor register

An improved scheduling technique for software pipelining is disclosed which is designed to find schedules requiring fewer processor clock cycles and reduce register pressure hot spots when scheduling multiple groups of instructions (e.g. as represented by multiple sub-graphs of a DDG) which are independent, and substantially identical. The improvement in instruction scheduling and reduction of hot spots is achieved by evenly distributing such groups of instructions around the schedule for a given loop.

Owner:IBM CORP

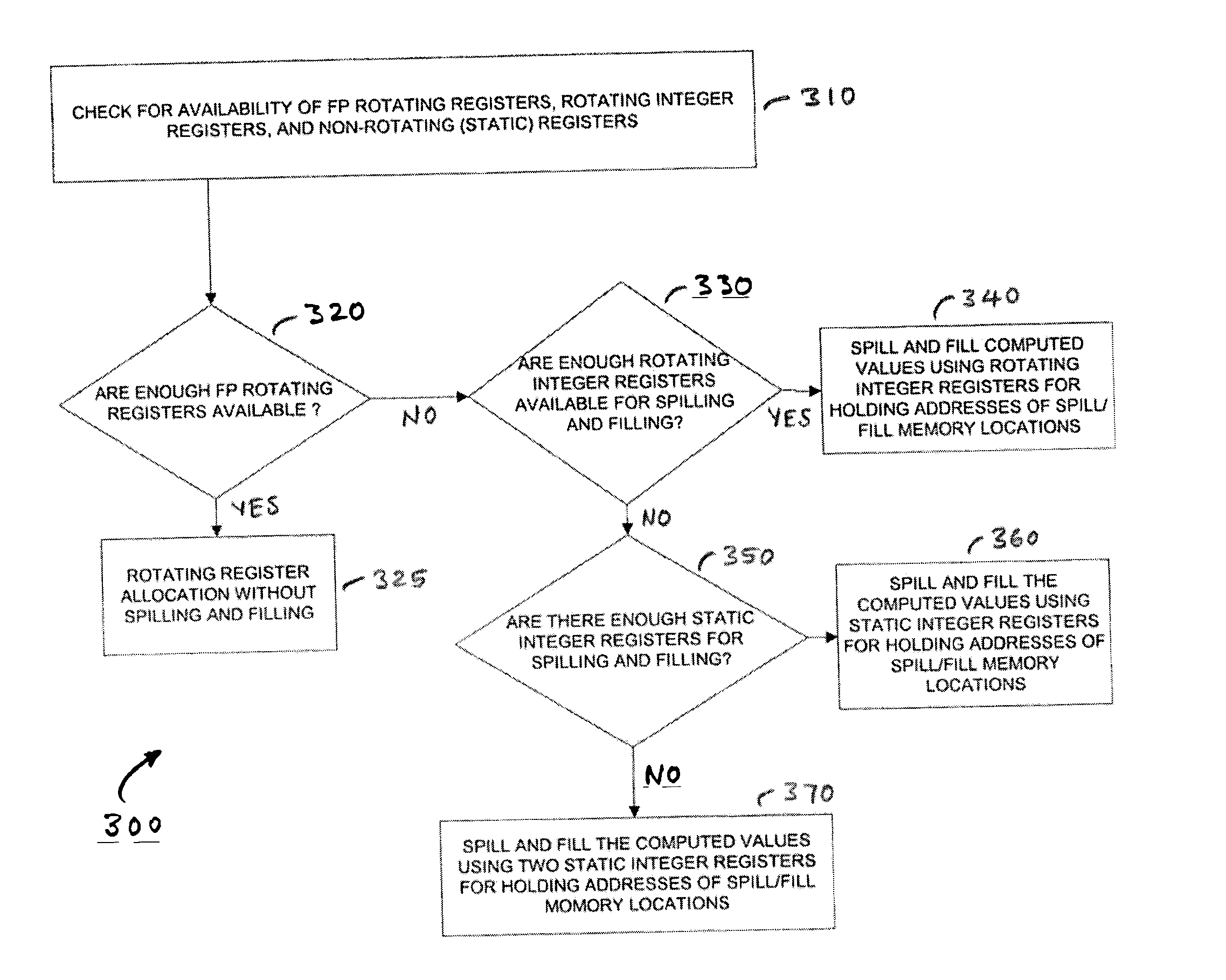

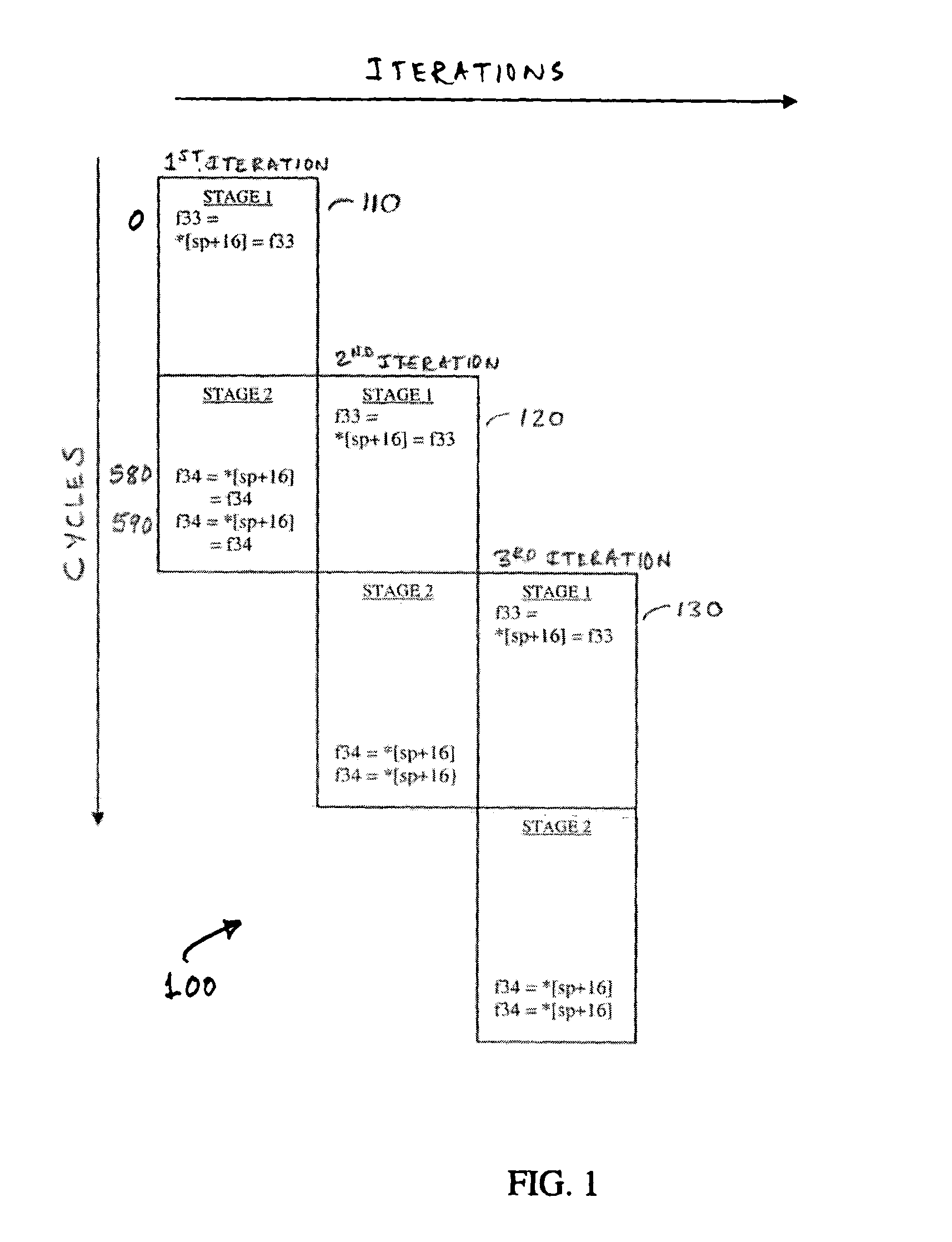

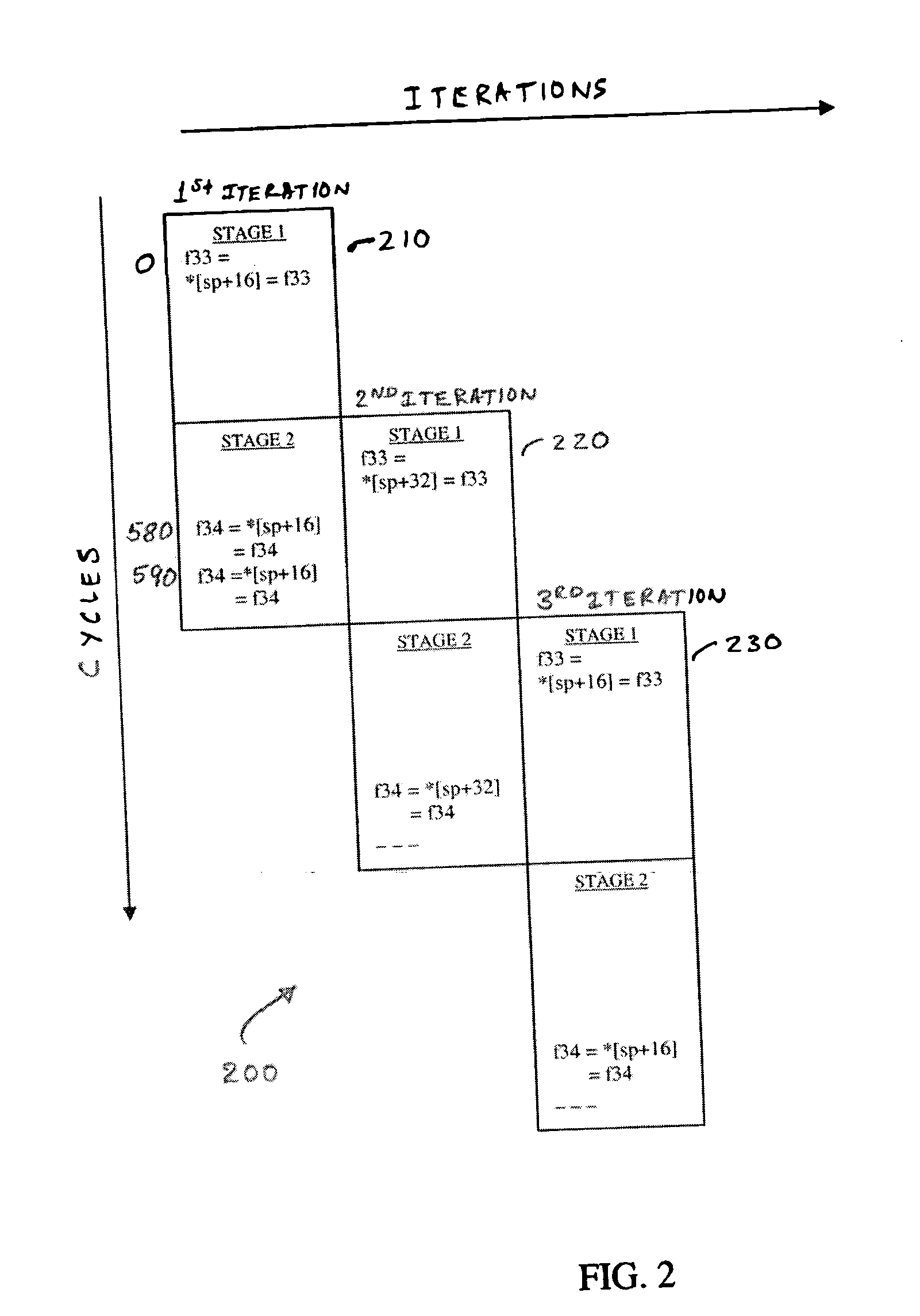

System, method, and apparatus for spilling and filling rotating registers in software-pipelined loops

An efficient method for software-pipelining (SWP) of loops to translate programs, from higher level languages into equivalent object or machine language code for execution on a computer. In one example embodiment, this is accomplished by spilling and filling multiple computed values, in a register, that are live across multiple stages in a software-pipelined loop, using multiple rotating stack memory locations to reduce compiler-time of SWP, and complexity of the implemented SWP.

Owner:INTEL CORP

Multithreaded software rendering pipeline with dynamic performance-based reallocation of raster threads

Inactive | US8587596B2 | Improve balance | Multiprogramming arrangements | Multiple digital computer combinations | Parallel computing | Software pipelining

A multithreaded rendering software pipeline architecture dynamically reallocates regions of an image space to raster threads based upon performance data collected by the raster threads. The reallocation of the regions typically includes resizing the regions assigned to particular raster threads and / or reassigning regions to different raster threads to better balance the relative workloads of the raster threads.

Owner:INT BUSINESS MASCH CORP

System, method, and apparatus for spilling and filling rotating registers in software-pipelined loops

Inactive | US20050071607A1 | Register arrangements | Software engineering | Parallel computing | Software pipelining

An efficient method for software-pipelining (SWP) of loops to translate programs, from higher level languages into equivalent object or machine language code for execution on a computer. In one example embodiment, this is accomplished by spilling and filling multiple computed values, in a register, that are live across multiple stages in a software-pipelined loop, using multiple rotating stack memory locations to reduce compiler-time of SWP, and complexity of the implemented SWP.

Owner:INTEL CORP

Compiler apparatus

Active | US7856629B2 | Reduce the number of executions | Reduce in quantity | Software engineering | Program control | Cyclic process | Operand

A compiler apparatus, which can perform software pipelining optimization that has a considerable effect of reducing the number of execution cycles taken to complete a loop process, converts a source program into a machine program for a processor which is capable of parallel processing. The compiler apparatus is composed of: a parsing unit operable to parse the source program and then to convert the source program into an intermediate program which is described in an intermediate language; an optimization unit operable to optimize the intermediate program; and a conversion unit operable to convert the optimized intermediate program into the machine language program, wherein the optimization unit is operable to execute software pipelining, by inserting a transfer instruction, which is used for transferring data between operands, into a loop process included in the intermediate program so that a data dependence relation is changed.

Owner:SOCIONEXT INC

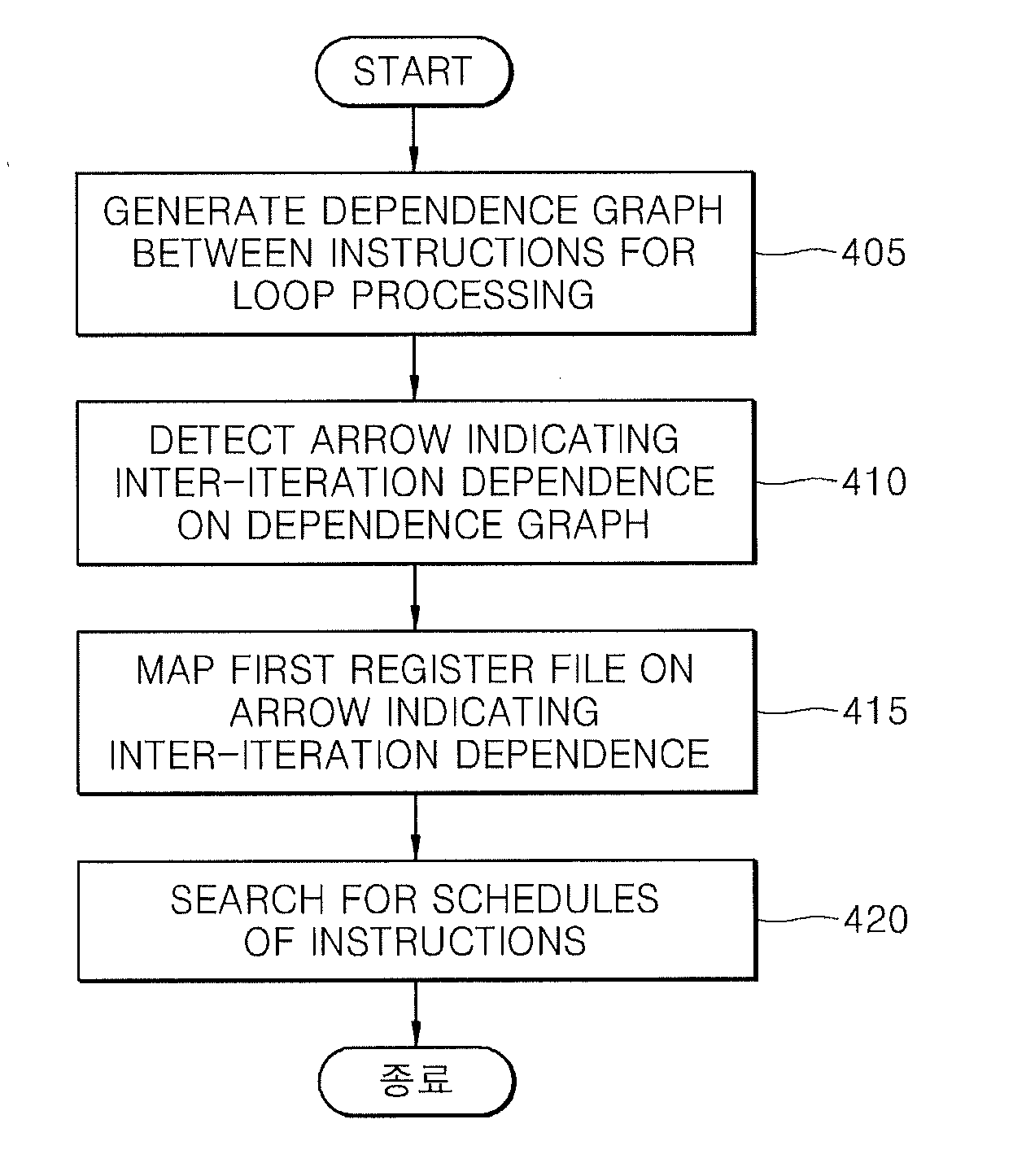

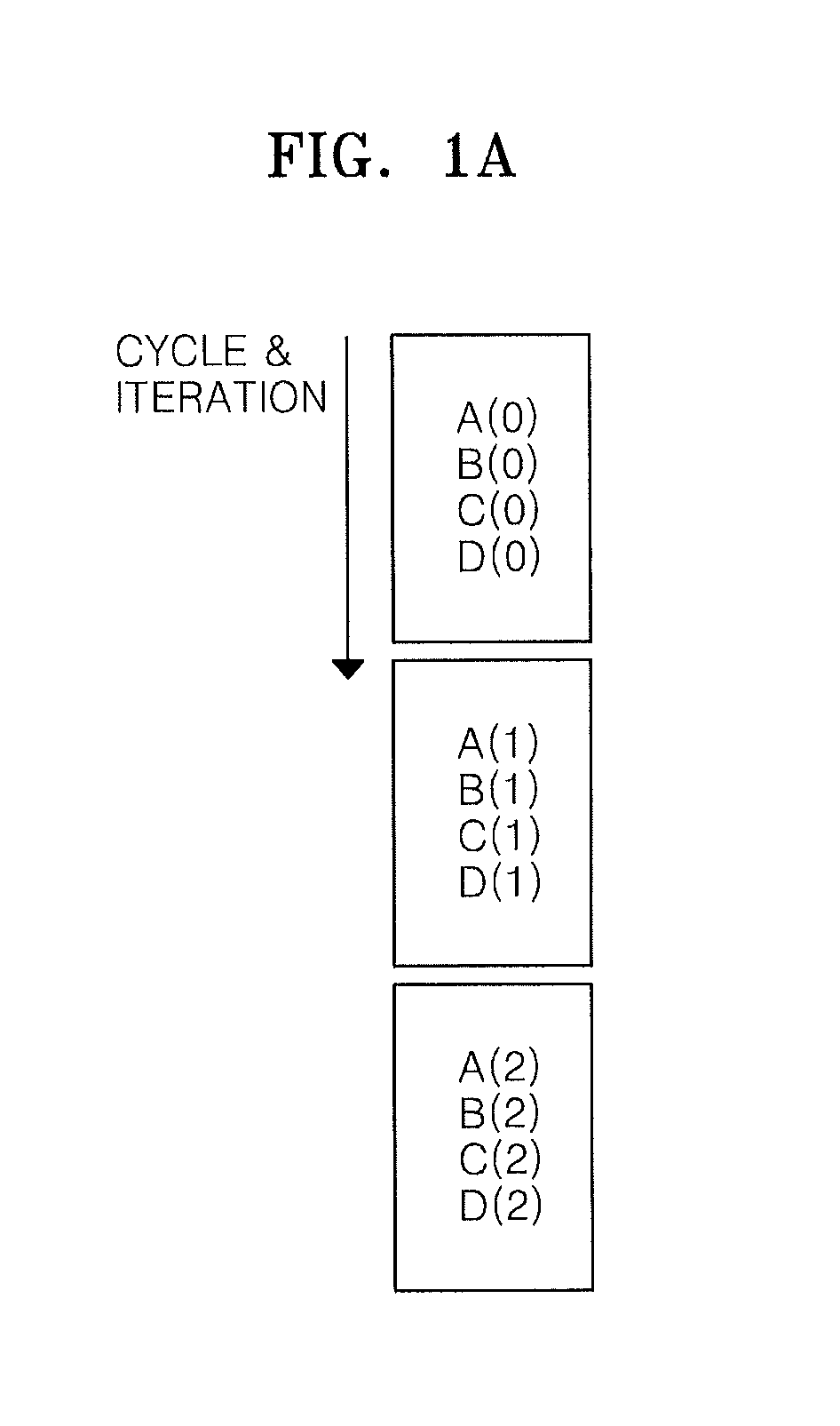

Method and apparatus for instruction scheduling using software pipelining

Inactive | US20150100950A1 | Program control using stored programs | Software engineering | Time schedule | Scheduling instructions

A method for scheduling loop processing of a reconfigurable processor includes generating a dependence graph of instructions for the loop processing; mapping a first register file of the reconfigurable processor on an arrow indicating inter-iteration dependence on the dependence graph; and searching for schedules of the instructions based on the mapping result.

Owner:SAMSUNG ELECTRONICS CO LTD

Rolling context data structure for maintaining state data in a multithreaded image processing pipeline

Inactive | US8330765B2 | Processor architectures/configuration | Electric digital data processing | Multiple context | Imaging processing

A multithreaded rendering software pipeline architecture utilizes a rolling context data structure to store multiple contexts that are associated with different image elements that are being processed in the software pipeline. Each context stores state data for a particular image element, and the association of each image element with a context is maintained as the image element is passed from stage to stage of the software pipeline, thus ensuring that the state used by the different stages of the software pipeline when processing the image element remains coherent irrespective of state changes made for other image elements being processed by the software pipeline. Multiple image elements may therefore be processed concurrently by the software pipeline, and often without regard for synchronization or serialization of state changes that affect only certain image elements.

Owner:INT BUSINESS MASCH CORP

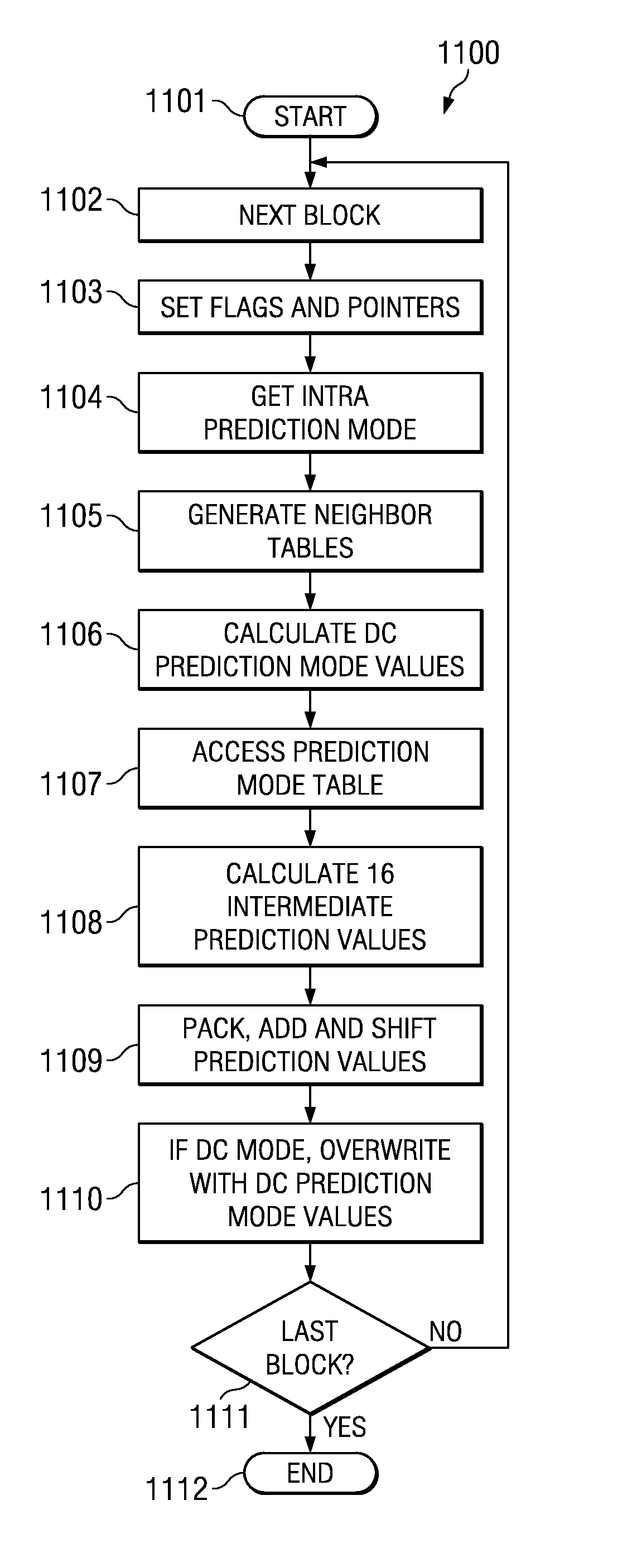

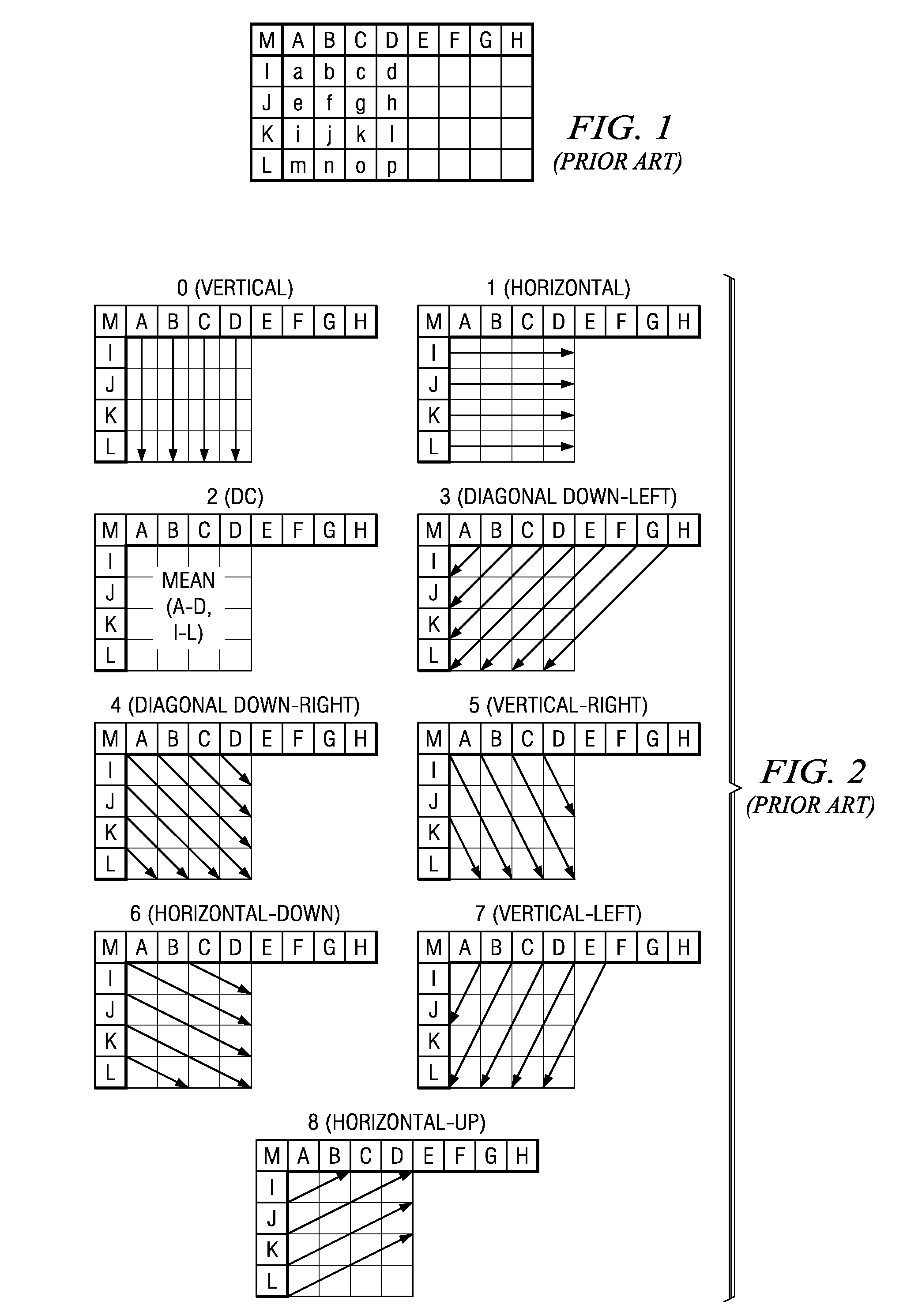

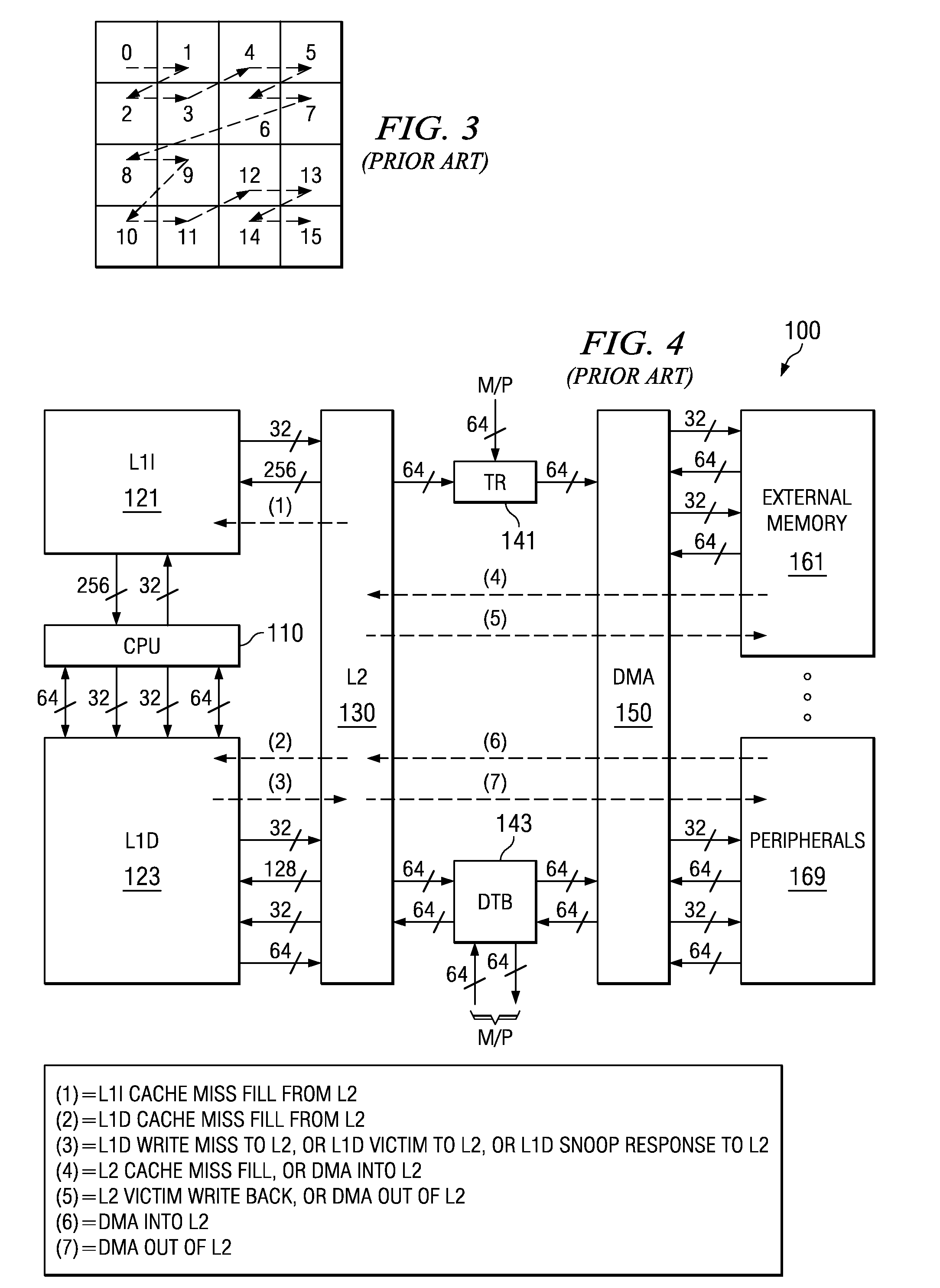

Efficient implementation of H.264 4 by 4 intra prediction on a VLIW processor

Active | US8233537B2 | Eliminates branching | Guaranteed maximum utilization | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Theoretical computer science | Software pipelining

This invention is useful in video compression standards that support a rich set of intra prediction modes. This invention uses a unique table creation and lookup approach to software pipeline the prediction process for all pixels within a block. The table stores constant data and pointer data into a neighbor pixel table. Indexing into the table based upon the current intra prediction mode for each pixel of a block recalls constant data and other pixel data for calculation of an intra prediction value.

Owner:TEXAS INSTR INC

Streaming physics collision detection in multithreaded rendering software pipeline

Inactive | US8564600B2 | Meet cutting requirements | Improve performance | General purpose stored program computer | Character and pattern recognition | Hardware thread | Computer hardware

A circuit arrangement, program product and method stream level of detail components between hardware threads in a multithreaded circuit arrangement to perform physics collision detection. Typically, a master hardware thread, e.g., a component loader hardware thread, is used to retrieve level of detail data for an object from a memory and stream the data to one or more slave hardware threads, e.g., collision detection hardware threads, to perform the actual collision detection. Because the slave hardware threads receive the level of detail data from the master thread, typically the slave hardware threads are not required to load the data from the memory, thereby reducing memory bandwidth requirements and accelerating performance.

Owner:INT BUSINESS MASCH CORP
