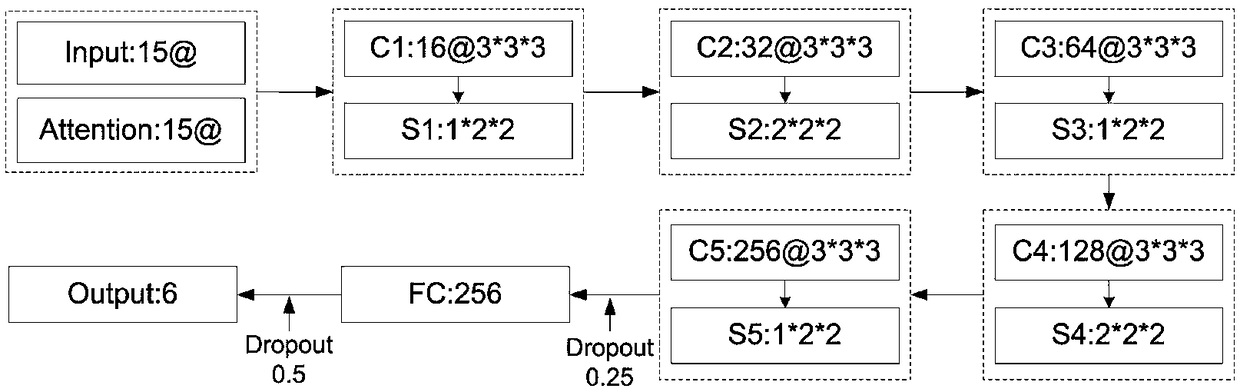

Human behavior recognition method based on attention mechanism and 3D convolutional neural network

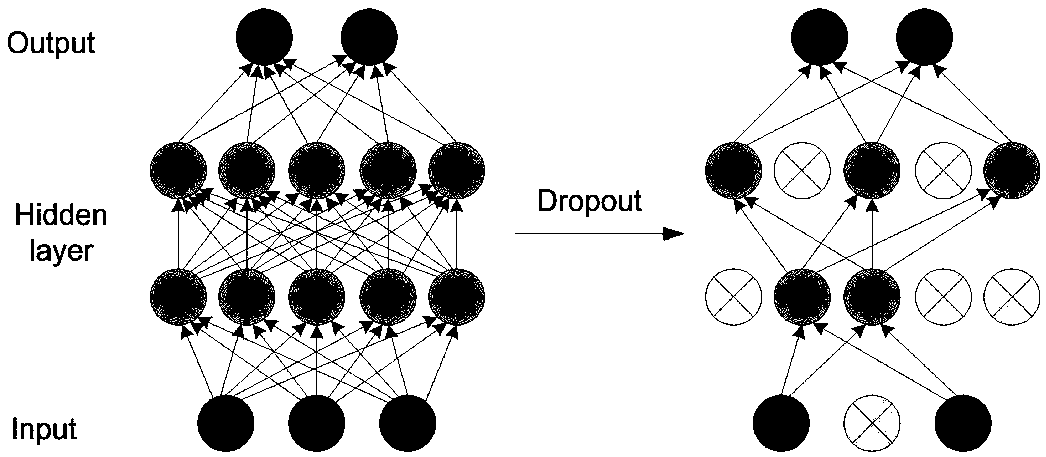

A human behavior recognition method based on an attention mechanism and a 3D convolutional neural network, applied in the field of human behavior recognition. It addresses two problems: the difficulty of showing the essential characteristics of actions, and the relatively large impact this has on recognition results. The stated effects are improved network recognition accuracy and prevention of overfitting.


Embodiment 2

[0098] The specific scheme of Embodiment 2 is as follows:

[0099] The frame difference channel in this embodiment is calculated using the three-frame difference method; the calculation process is shown in Figure 7. Taking three adjacent frames as a group and differencing them further makes it easier to detect the regions that change before and after the intermediate frame. The frame difference describes how the human body's posture differs over the course of a movement, and the frame difference matrix describes the regions of the whole frame cube that should receive attention.

[0100] 1. Attention matrix of the present embodiment (three-frame difference method):

[0101] 1) Select three consecutive frames $I_{t-1}(x,y)$, $I_t(x,y)$, $I_{t+1}(x,y)$ from the video frame sequence, and calculate the differences $D_{t-1,t}(x,y)$ and $D_{t,t+1}(x,y)$ between each pair of adjacent frames:

[0102]

[0103] 2) For the obtained difference image, selec...
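Step 1) above can be illustrated with a minimal Python sketch. It covers only the pairwise differences $D_{t-1,t}$ and $D_{t,t+1}$. The equation in [0102] is not available here, so the per-pixel absolute difference used below is an assumption taken from the common form of the three-frame difference method, not a confirmed detail of this embodiment. The function name `adjacent_frame_differences` and the toy frames are hypothetical, and the frames are assumed to be single-channel (grayscale) arrays of equal shape.

```python
import numpy as np


def adjacent_frame_differences(prev_frame, curr_frame, next_frame):
    """Return (D_{t-1,t}, D_{t,t+1}) for three consecutive grayscale frames.

    Assumption: each difference is the per-pixel absolute difference, as in
    the common three-frame difference method. The source equation is missing,
    so this may differ from the patented formula.
    """
    # Cast to a signed type so that uint8 subtraction cannot wrap around.
    prev_f = np.asarray(prev_frame, dtype=np.int32)
    curr_f = np.asarray(curr_frame, dtype=np.int32)
    next_f = np.asarray(next_frame, dtype=np.int32)
    if not (prev_f.shape == curr_f.shape == next_f.shape):
        raise ValueError("all three frames must have the same shape")

    d_prev_curr = np.abs(curr_f - prev_f)  # D_{t-1,t}(x, y)
    d_curr_next = np.abs(next_f - curr_f)  # D_{t,t+1}(x, y)
    return d_prev_curr, d_curr_next


# Toy example: a single bright pixel moving one column to the right per frame.
frames = np.zeros((3, 4, 4), dtype=np.uint8)
frames[0, 1, 1] = 200
frames[1, 1, 2] = 200
frames[2, 1, 3] = 200

d01, d12 = adjacent_frame_differences(frames[0], frames[1], frames[2])
print(d01)
print(d12)
```

In the toy example, each difference image is nonzero only at the pixel the object left and the pixel it moved to, which is the sense in which the frame difference highlights regions of change around the intermediate frame.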
