Virtual viewpoint image generation method based on a generative adversarial network

A technology for virtual viewpoint image generation, applied in the fields of stereo vision and deep learning. It addresses problems such as inapplicability to natural images, and it compensates for hardware limitations and offers strong scalability.


Detailed Description of the Embodiments

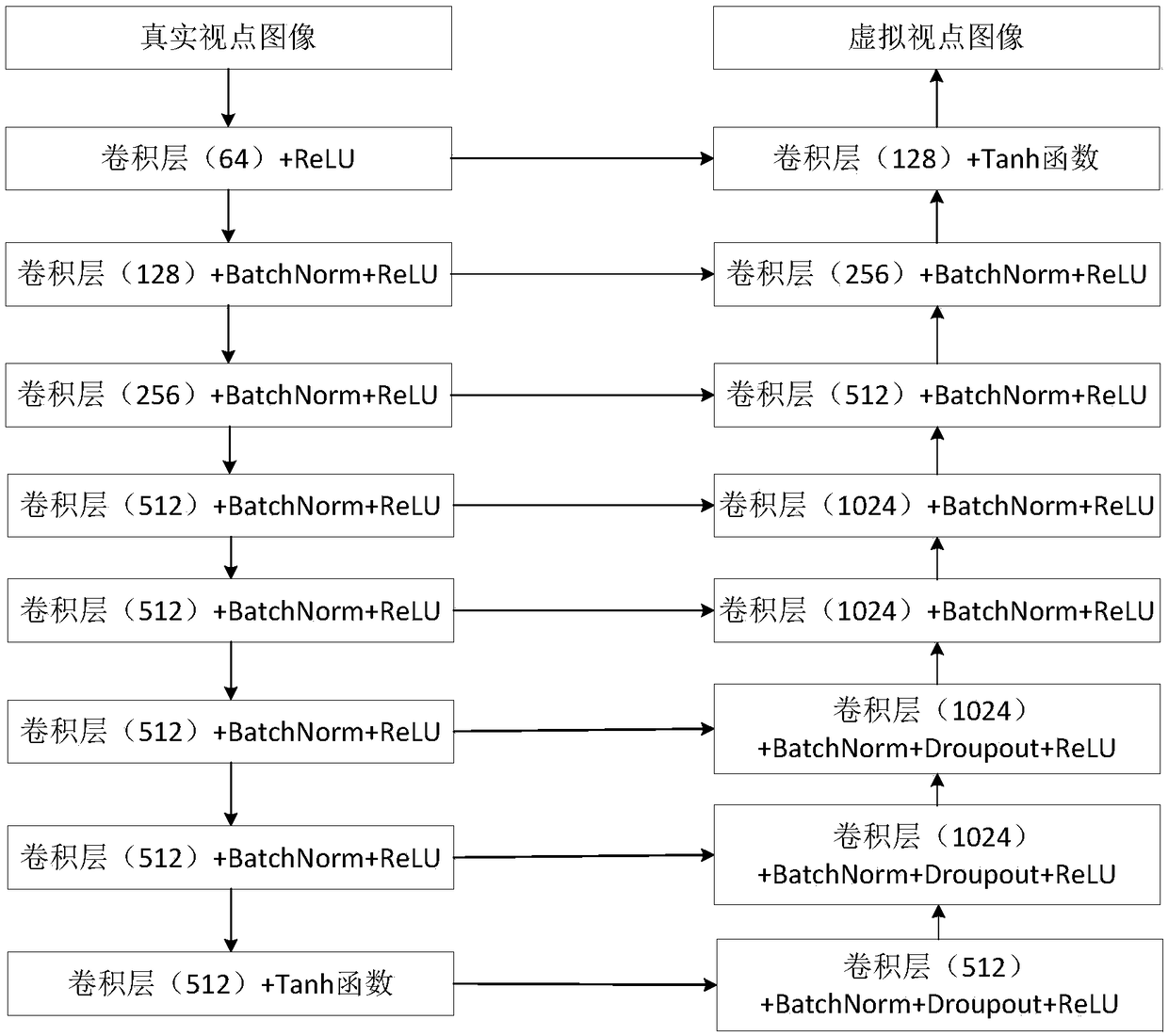

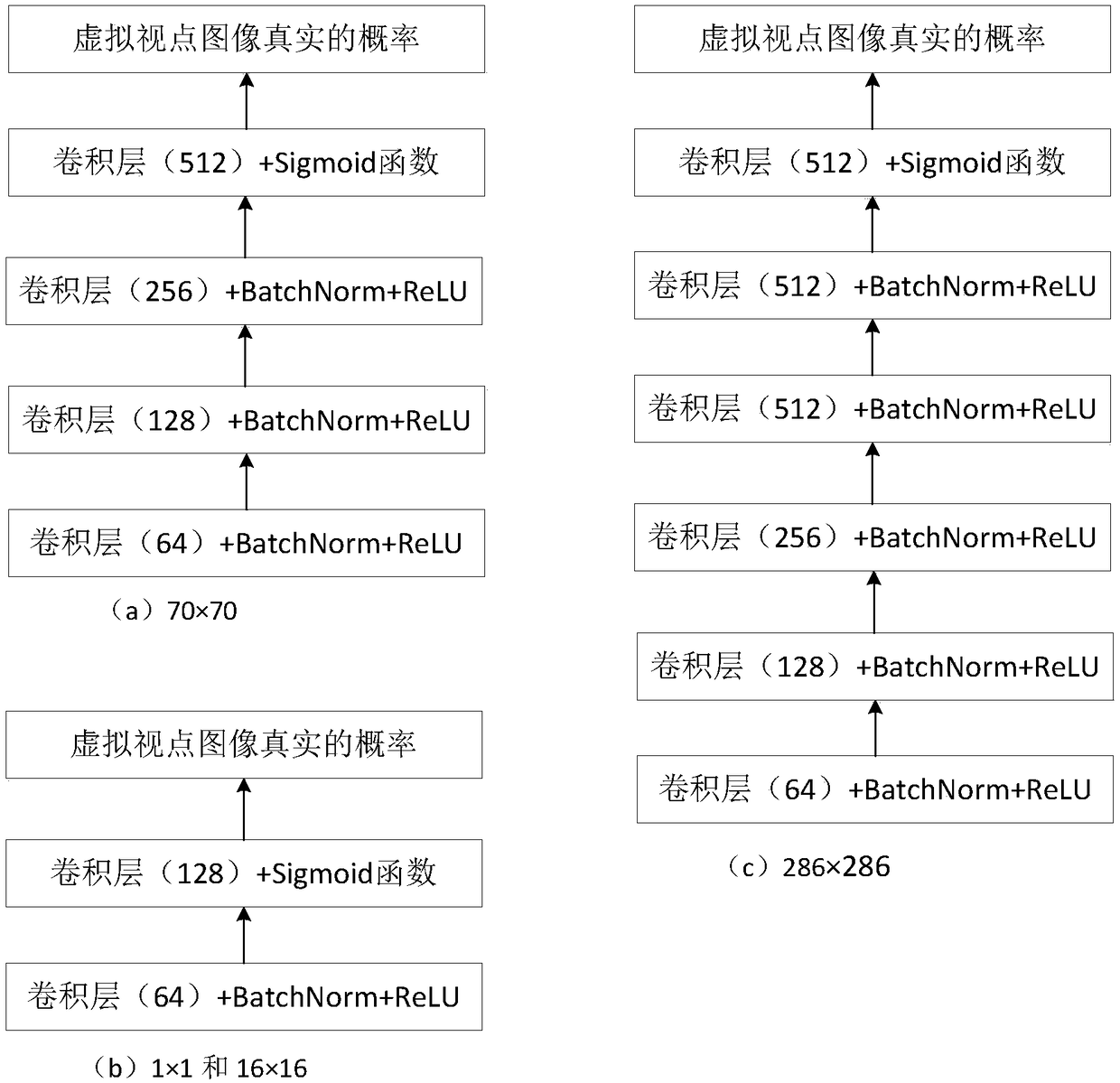

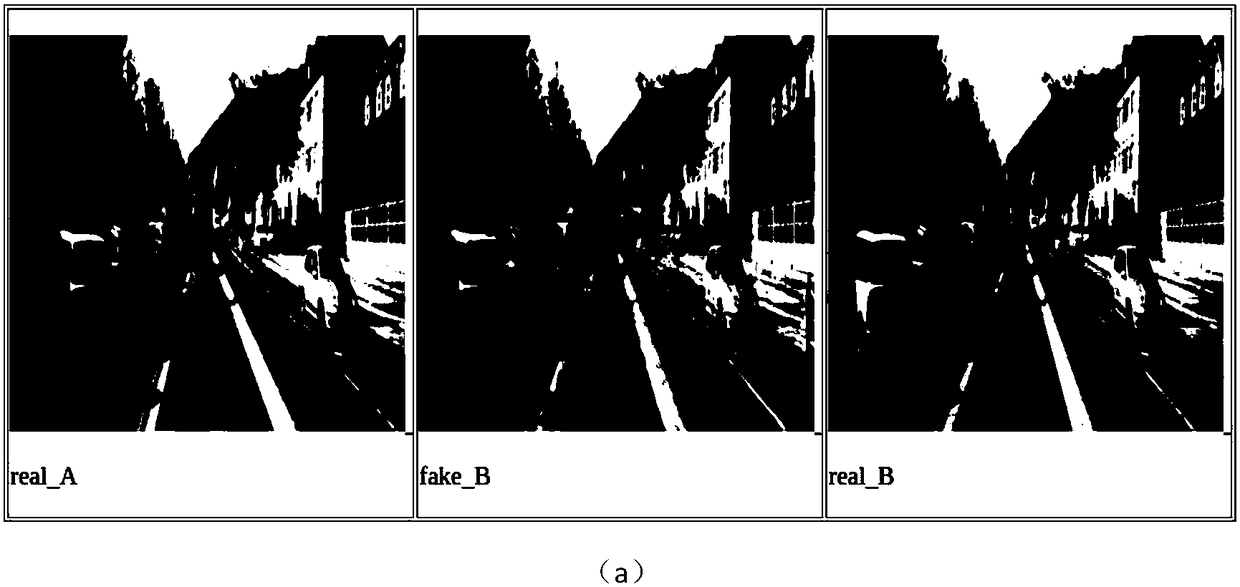

[0017] The invention uses a generative adversarial network (GAN) model from deep learning and takes road scene images from the KITTI dataset as the research object. It generates virtual viewpoint images of road scenes from a single monocular image, without relying on information such as depth or disparity, and can be generalized to virtual viewpoint image generation for other natural images.
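The text here does not specify the network architecture or the loss functions. As a hedged illustration only, the sketch below assumes a pix2pix-style conditional GAN objective: a discriminator scores (input view, target view) pairs, and the generator is trained with an adversarial term plus an L1 reconstruction term against the real second view of the stereo pair. The function names and the weight `l1_weight=100.0` (the pix2pix default) are assumptions, not details taken from the patent.

```python
import numpy as np

# Hypothetical loss sketch (assumed pix2pix-style objective, not the patent's stated method).

def bce(pred, target, eps=1e-7):
    # Binary cross-entropy averaged over all discriminator outputs.
    pred = np.clip(pred, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))

def discriminator_loss(d_real, d_fake):
    # D should output 1 for (input view, real target view) pairs
    # and 0 for (input view, generated view) pairs.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake, generated, target_view, l1_weight=100.0):
    # Adversarial term: G wants D to label its output as real.
    adv = bce(d_fake, np.ones_like(d_fake))
    # Reconstruction term: mean absolute pixel error against the real target view.
    rec = float(np.mean(np.abs(generated - target_view)))
    return adv + l1_weight * rec
```

When the discriminator is maximally uncertain (outputs of 0.5) and the generated view matches the target exactly, the generator loss reduces to ln 2, the adversarial term alone.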

[0018] In order to make the purpose and technical solution of the present invention clearer, the embodiments of the present invention will be further described in detail below.

[0019] 1. Build the dataset

[0020] This experiment uses the KITTI 2015 stereo dataset. Because this dataset is relatively small, the present invention enlarges it through data augmentation. In view of the characteristics of binocular (stereo) images, this experiment adopts traditional data augmentation methods, including horizontal flipping and cropping. Through data augmentation...

