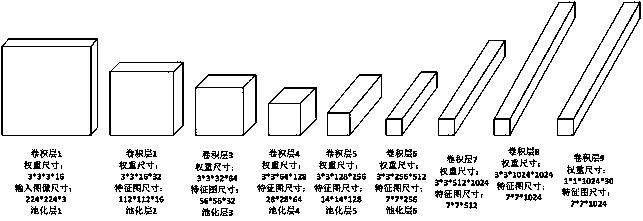

A CNN-based low-precision training and 8-bit integer quantized inference method

An inference method and low-precision model technique in the field of convolutional neural networks that addresses large precision loss and insufficient computational efficiency, with the effects of controlling and reducing precision loss and saving computation time.

Detailed Description of the Embodiments

[0027] It should be noted that, where no conflict arises, the embodiments of the present invention and the features in those embodiments may be combined with one another.

[0028] Specific embodiments of the invention will be described in detail below.

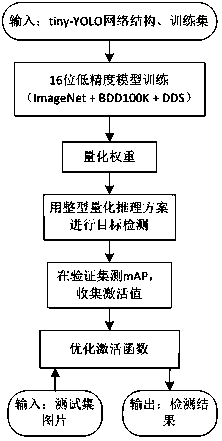

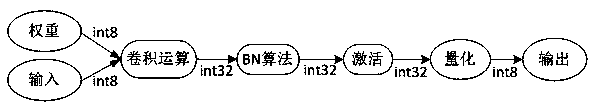

[0029] The technical solution of the present invention has two stages. The first stage trains the model with a 16-bit low-precision fixed-point algorithm to obtain a target-detection model, that is, its weights. The second stage applies an 8-bit integer quantization scheme: the weights are quantized, the activation values are quantized to 8-bit integer data, and inference is then carried out with 8-bit integers.
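The second stage can be illustrated with a minimal sketch. The excerpt does not say how the 8-bit scale factors are chosen, so the code below assumes symmetric, per-tensor quantization with a max-absolute-value scale mapped onto [-127, 127]; the helper names `quantize_int8` and `int8_matmul` are hypothetical. Products of int8 values are accumulated in int32, and the accumulator is rescaled by the product of the two scales to return to real values.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Hypothetical helper: symmetric per-tensor int8 quantization.

    Assumption (not stated in the source): scale = max|x| / 127, so values
    map onto the symmetric integer range [-127, 127].
    """
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid division by zero
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def int8_matmul(a_q: np.ndarray, a_scale: float,
                w_q: np.ndarray, w_scale: float) -> np.ndarray:
    """Hypothetical helper: integer matmul with int32 accumulation.

    Widening to int32 before multiplying prevents int8 overflow; the
    accumulator is then rescaled back to real values.
    """
    acc = a_q.astype(np.int32) @ w_q.astype(np.int32)
    return acc.astype(np.float32) * np.float32(a_scale * w_scale)
```

Running `int8_matmul` on quantized copies of two float matrices gives a result close to their float32 product. Per-channel scales and calibration over sample activations are common refinements of this scheme, but whether the patent uses either is not stated in this excerpt.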

[0030] Specific steps are as follows:

[0031] A. Train the model with the 16-bit low-precision fixed-point algorithm, that is, use 2 bits to represent the integer part and 14 bits to represent the fractional part, and use rounding to convert 32-bit floating-point ...
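Step A's number format (2 integer bits, 14 fractional bits, rounding from 32-bit floating point) can be sketched as follows. This assumes a signed two's-complement 16-bit code in which the sign bit is one of the 2 integer bits, giving a range of [-2, 2 - 2^-14] with a resolution of 2^-14 ≈ 6.1 × 10^-5, and it assumes out-of-range values saturate; the truncated text confirms neither. The function names `to_fixed_q2_14`, `from_fixed_q2_14` and `fake_quantize` are hypothetical.

```python
import numpy as np

FRAC_BITS = 14                         # 14 fractional bits, per step A
TOTAL_BITS = 16                        # 16-bit code in total
SCALE = 1 << FRAC_BITS                 # 16384 codes per unit
# Assumption: signed two's complement, sign bit counted among the 2 integer bits.
Q_MIN = -(1 << (TOTAL_BITS - 1))       # -32768  -> real value -2.0
Q_MAX = (1 << (TOTAL_BITS - 1)) - 1    #  32767  -> real value 2 - 2**-14


def to_fixed_q2_14(x: np.ndarray) -> np.ndarray:
    """Round float values to the nearest 16-bit fixed-point code.

    Assumption: values outside the representable range are saturated.
    np.round rounds exact halves to the nearest even code.
    """
    codes = np.round(np.asarray(x, dtype=np.float64) * SCALE)
    return np.clip(codes, Q_MIN, Q_MAX).astype(np.int16)


def from_fixed_q2_14(q: np.ndarray) -> np.ndarray:
    """Map fixed-point codes back to float32 real values."""
    return q.astype(np.float32) / np.float32(SCALE)


def fake_quantize(x: np.ndarray) -> np.ndarray:
    """Float in, float out: snaps values onto the 16-bit fixed-point grid."""
    return from_fixed_q2_14(to_fixed_q2_14(x))
```

For example, `to_fixed_q2_14(np.array([0.5, -1.25, 3.0]))` yields the codes 8192, -20480 and 32767 (the last saturated). Note that `np.round` rounds halves to even; the rounding mode the patent actually uses is not specified in this excerpt.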
