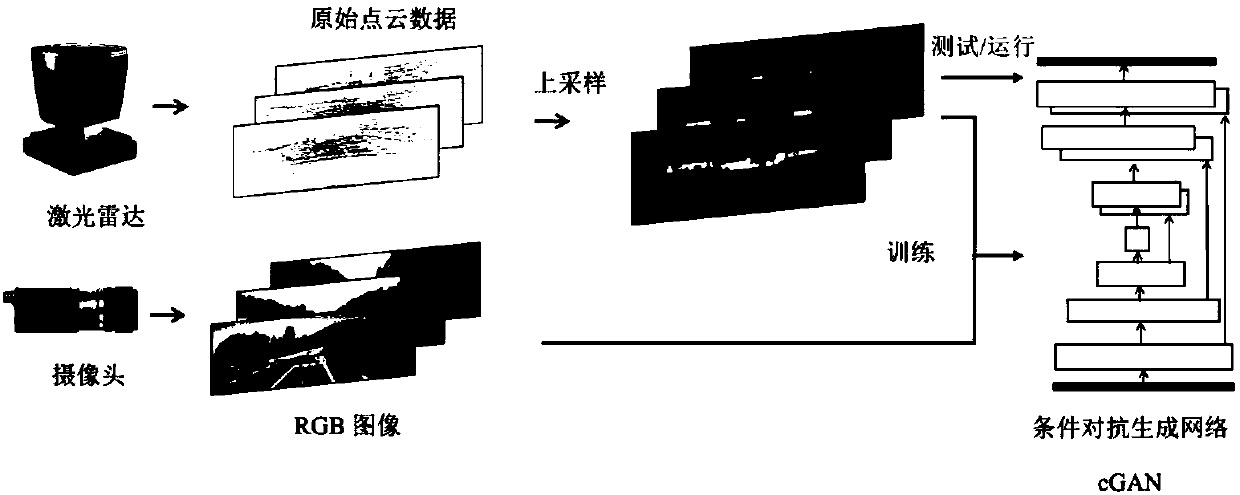

Radar-generated color semantic image system and method based on a conditional generative adversarial network

This color-map and radar technology, applied in the fields of sensors and artificial intelligence, addresses problems such as incomplete road-environment information, increased load on the computing chips that drain unmanned vehicles' batteries, and inaccurate results. It avoids imaging uncertainty and inaccuracy, and its effects include stability, elimination of road shadows, and high efficiency.

Detailed Description of the Embodiments

[0039] The present invention will be further described in detail with reference to the accompanying drawings and embodiments.

[0040] The radar-generated color semantic image system and method of the present invention are based on a generative adversarial network and use machine learning and deep learning algorithms. The performance of machine learning and deep learning algorithms depends on the model architecture, but a bigger factor is whether the problem is completely specified. The present invention solves a color semantic road-scene reconstruction problem that has not previously been considered, and a specification of this problem has not been studied in academia. The present invention selects a generative adversarial network framework capable of specifying the target problem, uses calibrated radar data, and performs upsampling to obtain as much road depth information as possible. At the same time, the problem specification of image pairs formed by upsamp...
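The paragraph above states only that calibrated radar data are upsampled to recover as much road depth as possible and then formed into image pairs for the adversarial network. The following is a minimal sketch of that data-preparation idea under assumptions that are not in the source: the calibration has already transformed radar points into the camera frame, the camera follows a pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a simple nearest-valid-pixel fill stands in for the patent's unspecified upsampling method. The function names `project_to_sparse_depth`, `upsample_nearest`, and `build_training_pair` are illustrative only.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]    # (x, y, z) in the camera frame (assumed calibrated)
Grid = List[List[Optional[float]]]    # depth image; None marks "no radar return"


def project_to_sparse_depth(points: Sequence[Point], fx: float, fy: float,
                            cx: float, cy: float, width: int, height: int) -> Grid:
    """Project camera-frame radar points onto a sparse depth image (pinhole model, assumed)."""
    depth: Grid = [[None] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the camera, so it cannot be imaged
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # If several returns hit one pixel, keep the closest (visible) one.
            if depth[v][u] is None or z < depth[v][u]:
                depth[v][u] = z
    return depth


def upsample_nearest(sparse: Grid, max_radius: int) -> Grid:
    """Fill empty pixels from the smallest square window (up to max_radius) that
    contains any valid depth, taking the smallest depth found in that window.
    A stand-in for the unspecified upsampling step; pixels with no valid
    neighbour within max_radius stay None."""
    h, w = len(sparse), len(sparse[0])
    dense = [row[:] for row in sparse]
    for v in range(h):
        for u in range(w):
            if sparse[v][u] is not None:
                continue
            for r in range(1, max_radius + 1):
                found = [sparse[j][i]
                         for j in range(max(0, v - r), min(h, v + r + 1))
                         for i in range(max(0, u - r), min(w, u + r + 1))
                         if sparse[j][i] is not None]
                if found:
                    dense[v][u] = min(found)
                    break
    return dense


def build_training_pair(depth: Grid, label: List[List[int]],
                        fill: float = 0.0) -> Tuple[List[List[float]], List[List[int]]]:
    """Pair a densified depth image (condition) with a color semantic label image (target).
    Remaining holes are replaced by `fill`; shapes must match."""
    if len(depth) != len(label) or any(len(a) != len(b) for a, b in zip(depth, label)):
        raise ValueError("depth and label images must have the same shape")
    condition = [[fill if d is None else d for d in row] for row in depth]
    target = [row[:] for row in label]
    return condition, target
```

In a conditional GAN setup, the generator would take the first element of each pair as its condition and learn to produce the second; how the patent itself encodes the pairs is not given in this excerpt.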
