An image-to-image translation method based on a discriminative region candidate adversarial network

A region- and image-based technology in the field of image processing that addresses unbalanced color distribution, artifacts, and low image resolution, producing higher-resolution images with fewer artifacts.

Embodiment

[0040] The following embodiments are preferred embodiments of the present application.

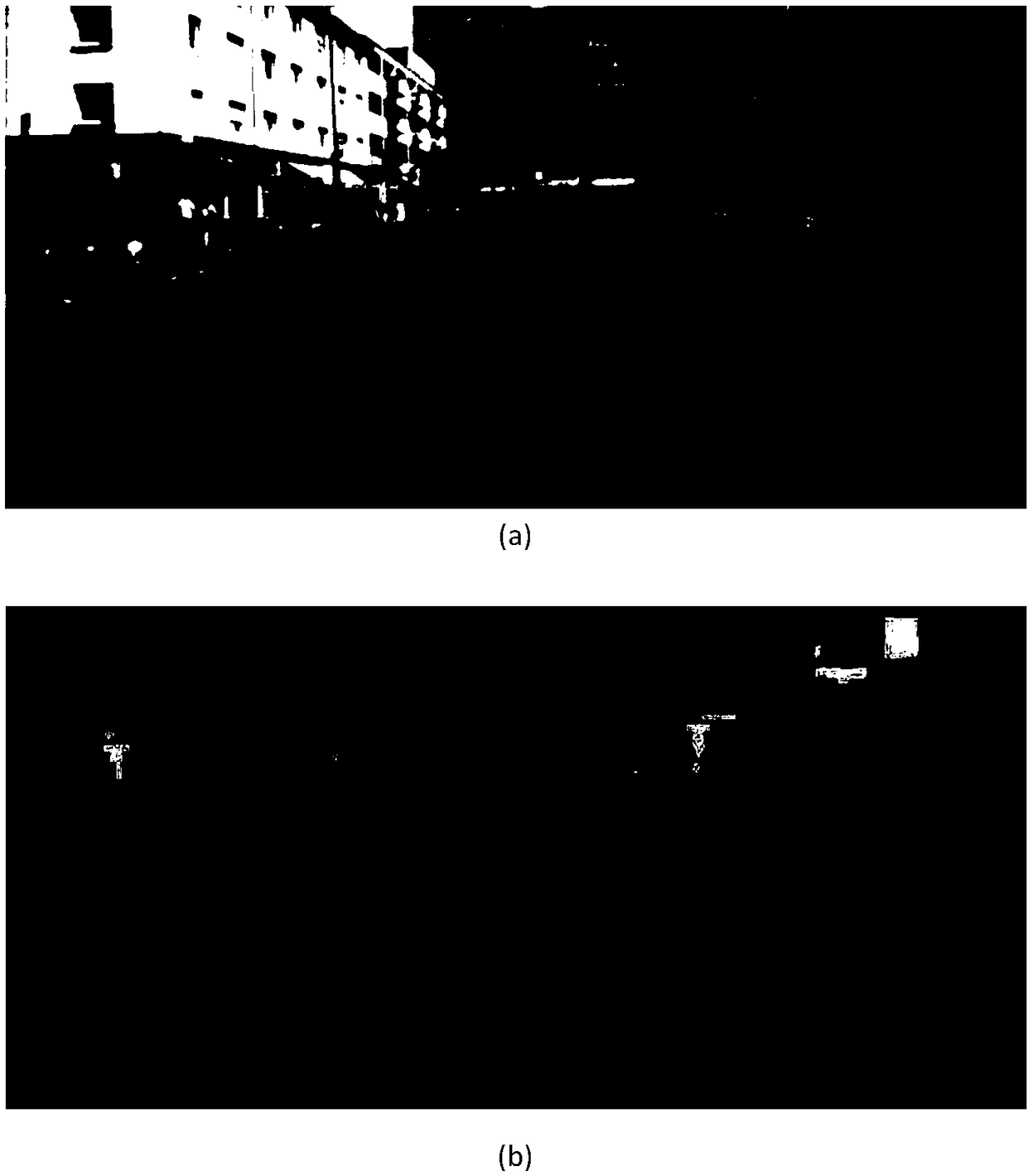

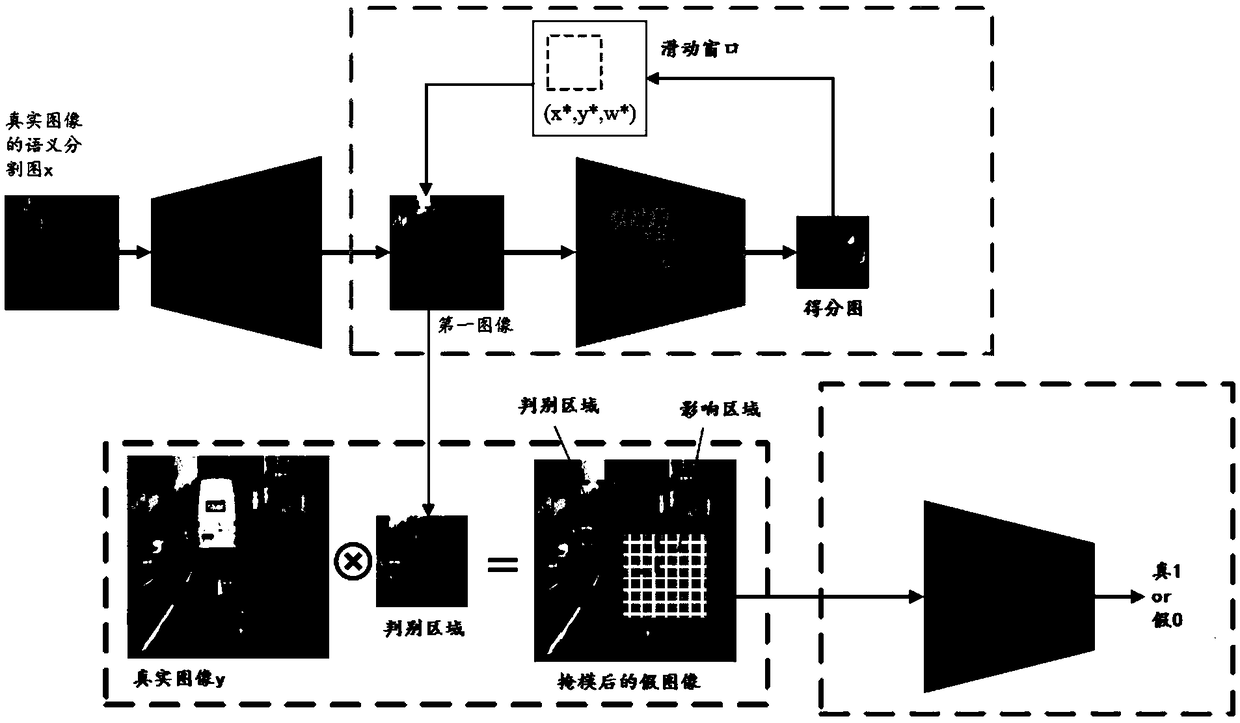

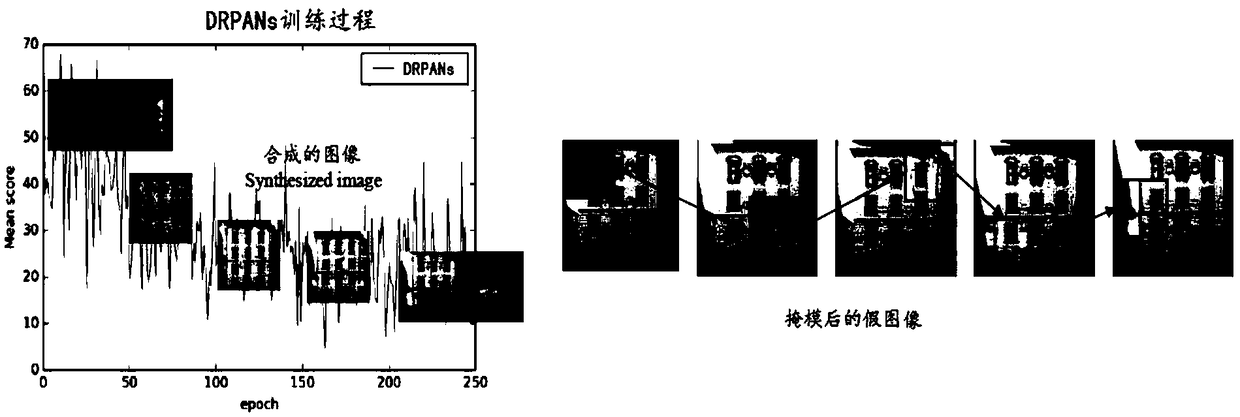

[0041] An image-to-image translation method based on a discriminative region candidate adversarial network. The embodiment of the present application proposes a discriminative region candidate adversarial network (DRPANs, Discriminative Region Proposal Adversarial Networks) for high-quality image-to-image translation. The discriminative region candidate adversarial network includes a generator, an image block discriminator (Patch Discriminator), and a corrector. The image block discriminator is a PatchGAN Markovian discriminator that extracts a discriminative region, which is used to generate a masked fake image.
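The region-extraction step in [0041] can be sketched as follows. This is a minimal illustration under stated assumptions, not the application's implementation: all function names are hypothetical, images are plain nested lists of grayscale values, a higher PatchGAN score is assumed to mean "more real", each score-map cell is mapped to an equal, non-overlapping image tile (real PatchGAN receptive fields are larger and overlap), and the masked fake image is assumed to be the real image with the selected region replaced by the corresponding region of the generated image.

```python
from typing import List, Tuple

# Hypothetical representation: a grayscale H x W image as nested lists.
Image = List[List[float]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def most_fake_patch(score_map: List[List[float]]) -> Tuple[int, int]:
    """Return (row, col) of the score-map cell the discriminator rates least real.

    Assumption: a higher score means "more real" (e.g. a sigmoid PatchGAN output).
    """
    best = (0, 0)
    for i, row in enumerate(score_map):
        for j, score in enumerate(row):
            if score < score_map[best[0]][best[1]]:
                best = (i, j)
    return best


def cell_to_box(cell: Tuple[int, int],
                score_shape: Tuple[int, int],
                image_shape: Tuple[int, int]) -> Box:
    """Map a score-map cell to a pixel box.

    Simplification (assumed): each cell covers an equal, non-overlapping tile.
    Real PatchGAN receptive fields are larger and overlap.
    """
    (i, j), (h, w), (H, W) = cell, score_shape, image_shape
    tile_h, tile_w = H // h, W // w
    return i * tile_h, j * tile_w, (i + 1) * tile_h, (j + 1) * tile_w


def masked_fake(real: Image, fake: Image, box: Box) -> Image:
    """Return a copy of `real` whose pixels inside `box` come from `fake`."""
    top, left, bottom, right = box
    out = [row[:] for row in real]  # copy so the real image is left untouched
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = fake[y][x]
    return out


if __name__ == "__main__":
    real = [[1.0] * 4 for _ in range(4)]
    fake = [[0.0] * 4 for _ in range(4)]
    scores = [[0.9, 0.8], [0.2, 0.7]]         # 2 x 2 PatchGAN score map
    cell = most_fake_patch(scores)            # (1, 0): lowest score 0.2
    box = cell_to_box(cell, (2, 2), (4, 4))   # (2, 0, 4, 2)
    for row in masked_fake(real, fake, box):
        print(row)
```

Selecting the single lowest-scoring cell is the simplest possible proposal rule; a practical version would more likely operate on tensors and map cells back through the discriminator's actual receptive field.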

[0042] As shown in Figure 2, the method includes the following steps:

[0043] S1: Input the semantic segmentation map of the real image into the generator to generate the first image;

[0044] Here, image semantic segmentation means that the machine autom...
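Step S1 feeds a semantic segmentation map to the generator. The text shown here does not specify how that map is encoded; a common convention in conditional GANs, assumed here purely for illustration, is to expand the per-pixel class-index map into one binary channel per class. The function name below is hypothetical.

```python
from typing import List


def one_hot_label_map(labels: List[List[int]], num_classes: int) -> List[List[List[int]]]:
    """Convert an H x W map of class indices into `num_classes` binary H x W planes.

    Plane c is 1 exactly where the segmentation map assigns class c.
    """
    planes = [[[0] * len(row) for row in labels] for _ in range(num_classes)]
    for y, row in enumerate(labels):
        for x, c in enumerate(row):
            if not 0 <= c < num_classes:
                raise ValueError(f"label {c} at ({y}, {x}) is outside [0, {num_classes})")
            planes[c][y][x] = 1
    return planes


if __name__ == "__main__":
    seg = [[0, 1], [2, 1]]  # tiny 2 x 2 segmentation map with 3 classes
    for c, plane in enumerate(one_hot_label_map(seg, 3)):
        print(c, plane)
```

One-hot channels avoid implying an ordering between class indices, which a single integer channel would.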
