116 results about "Human visual perception" patented technology

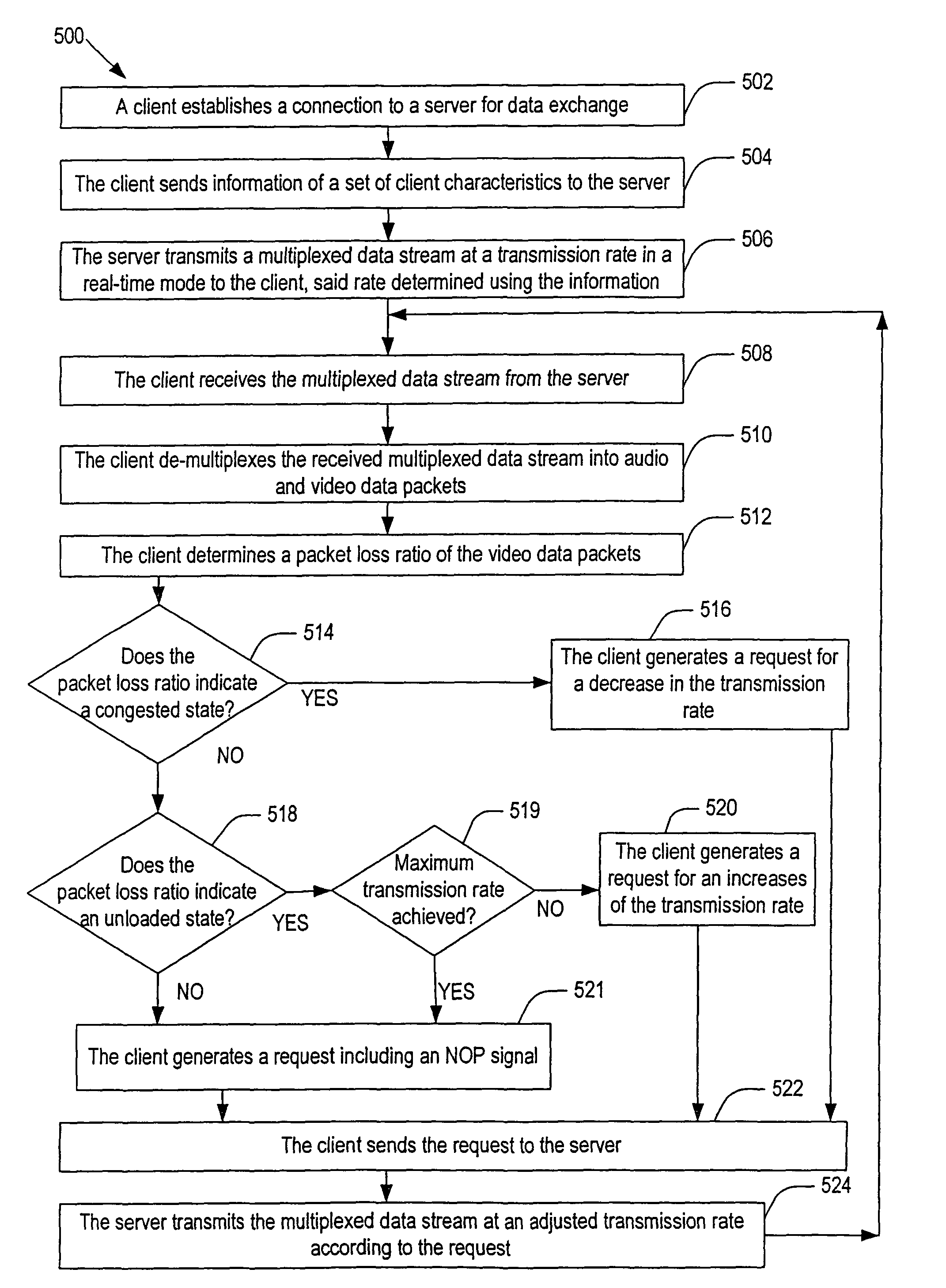

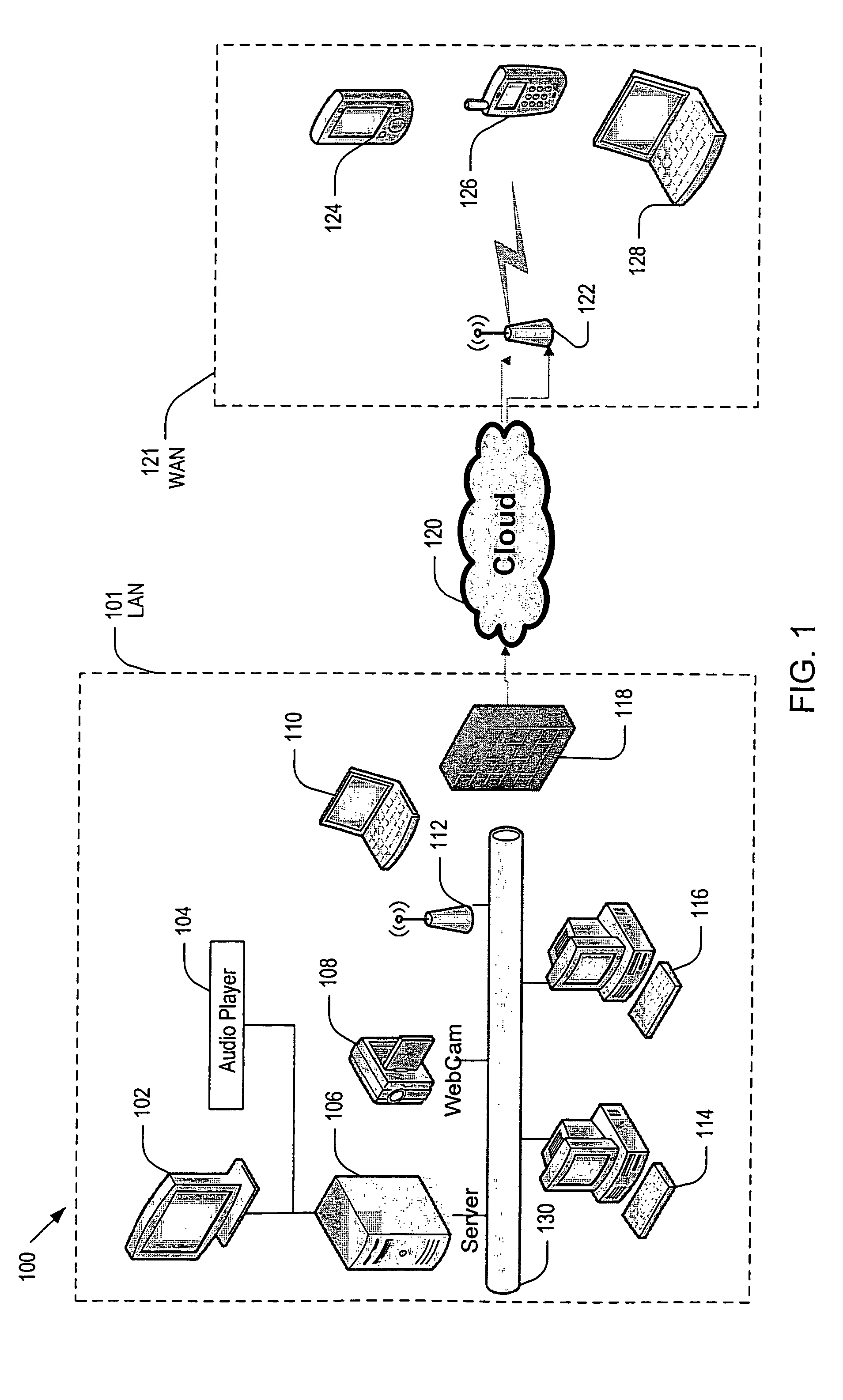

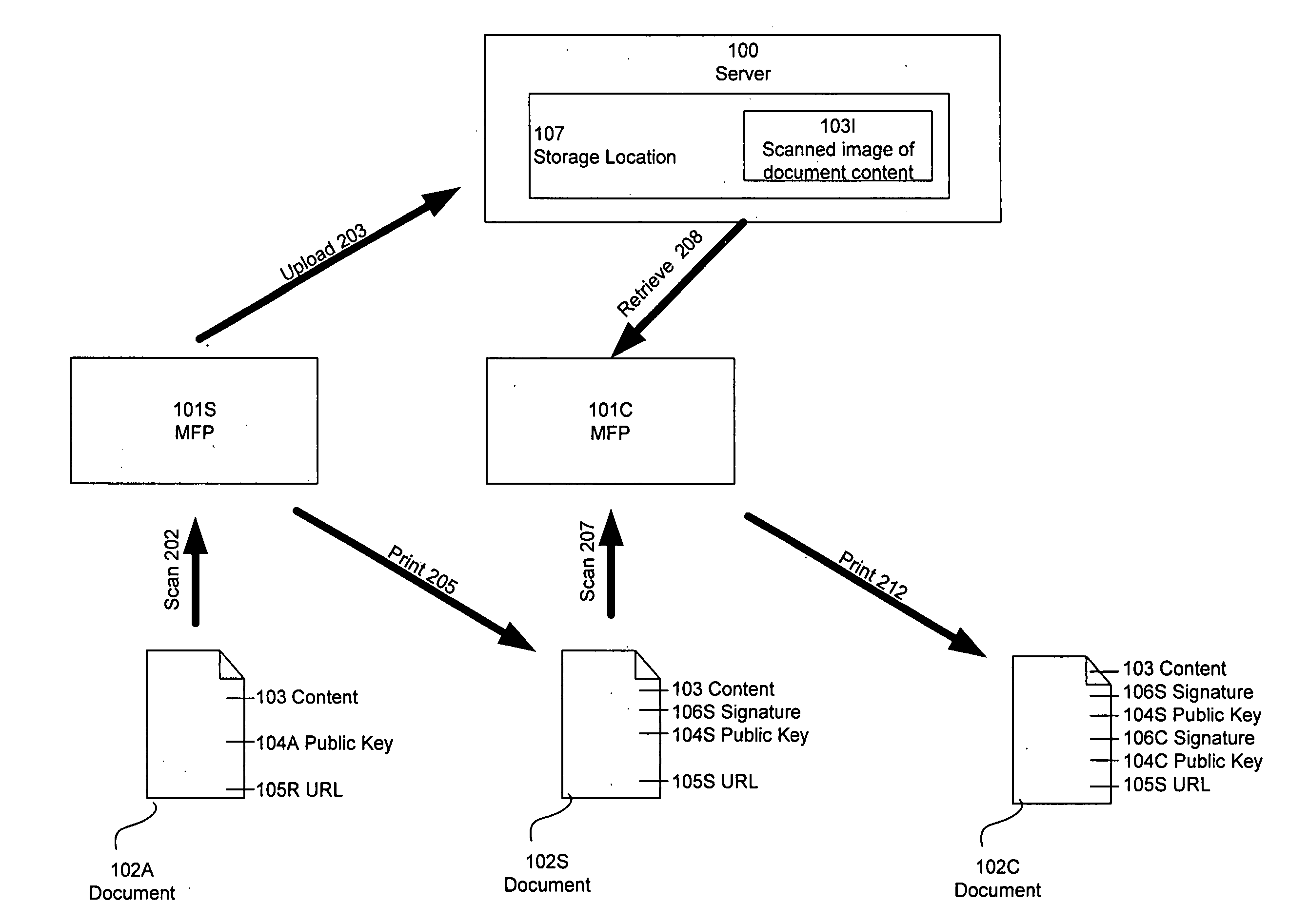

Adaptive media transport management for continuous media stream over LAN/WAN environment

Inactive; US7984179B1; Simplify complexity; Reduce design cost; Multiple digital computer combinations; Selective content distribution; Data stream; Control channel

Methods and systems for transmitting compressed audio/video data streams across conventional networks or channels in real time. Each system employs the Adaptive Rate Control (ARC) technique, which is based on the detection of defective packets and other influencing factors such as overall system performance, usage model, and channel characteristics. To control data stream congestion in the channel and keep the visual display quality above a certain level, the invention exploits human visual perception and its adaptability to changing visual conditions. The ARC technique relies on the client's capacity to calculate the quality of video packets received from a server and to send the server a desired transmission rate. This approach reduces the hardware and software implementation complexity otherwise imposed on the server, and lowers the overall design cost by shifting the burden of bandwidth monitoring and transmission control from the server to the client.

Owner:SEXTANT NAVIGATION
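The client-driven feedback loop described in the abstract above can be illustrated with a minimal sketch. The abstract does not disclose the actual ARC rule, so this example substitutes a plain additive-increase/multiplicative-decrease policy; the class name `ClientRateController`, its fields, and every default value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClientRateController:
    """Hypothetical client-side controller; all names and defaults are illustrative."""
    rate_kbps: float
    min_kbps: float = 200.0
    max_kbps: float = 8000.0
    defect_threshold: float = 0.02   # assumed tolerable share of defective packets
    backoff: float = 0.75            # multiplicative decrease factor
    step_kbps: float = 100.0         # additive increase per clean interval

    def update(self, received: int, defective: int) -> float:
        """Return the rate the client would ask the server to use next."""
        # Treat an interval with no packets at all as fully defective.
        defect_ratio = defective / received if received > 0 else 1.0
        if defect_ratio > self.defect_threshold:
            self.rate_kbps = max(self.min_kbps, self.rate_kbps * self.backoff)
        else:
            self.rate_kbps = min(self.max_kbps, self.rate_kbps + self.step_kbps)
        return self.rate_kbps


controller = ClientRateController(rate_kbps=1000.0)
print(controller.update(received=100, defective=10))  # 10% defective: back off
print(controller.update(received=100, defective=0))   # clean interval: probe upward
```

Keeping the decision on the client mirrors the abstract's point that the server only needs to honor a requested rate rather than monitor the channel itself.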

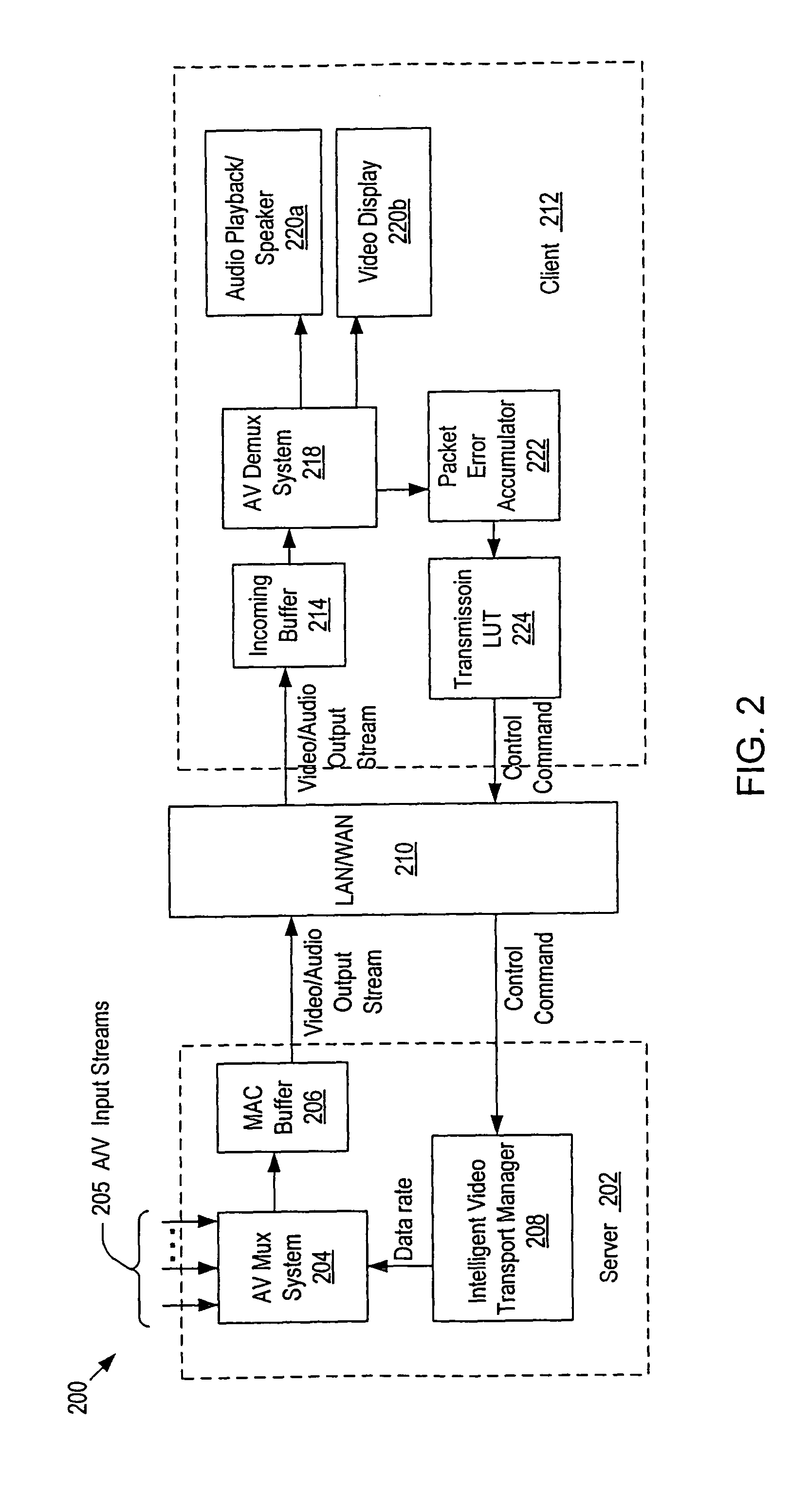

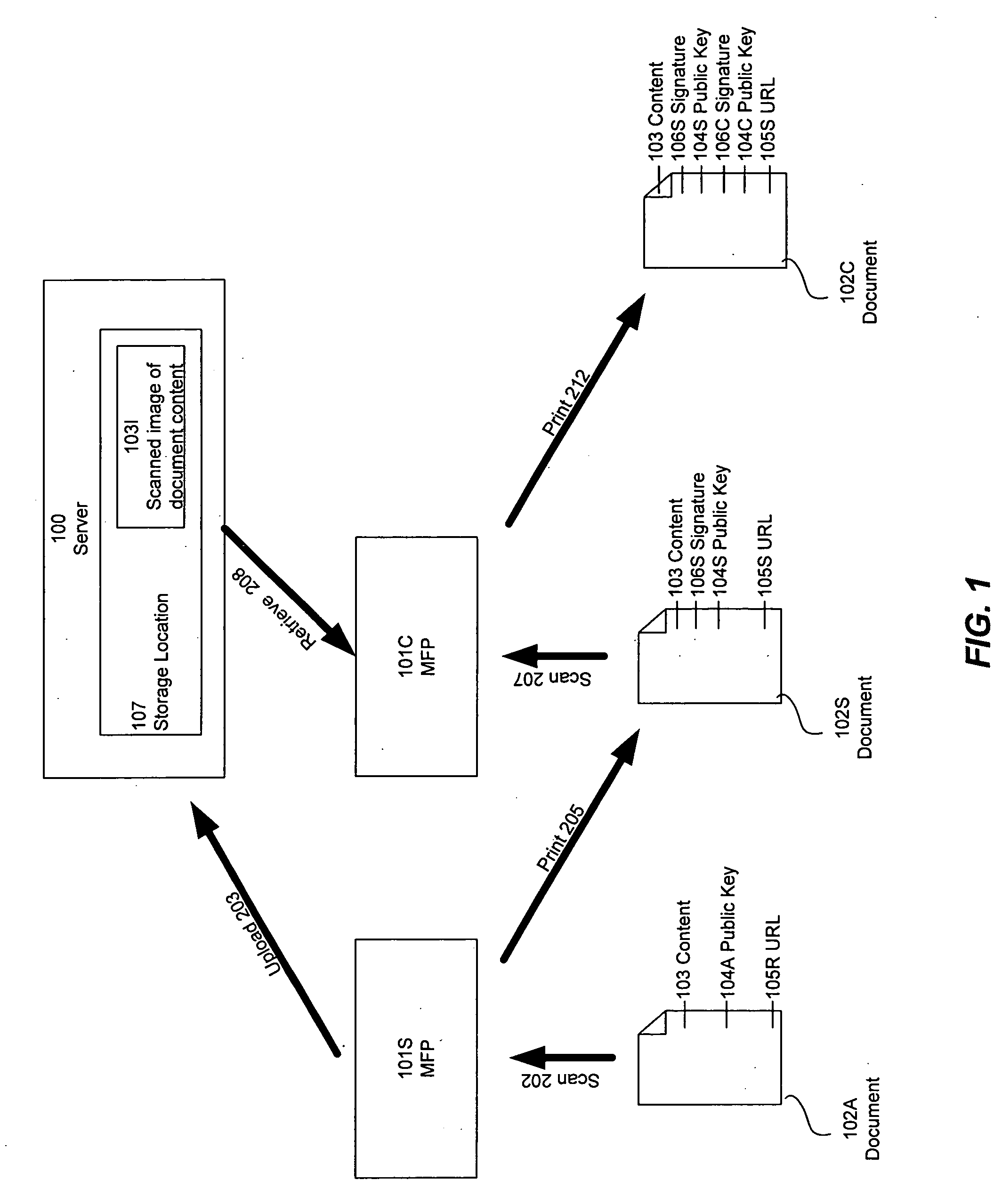

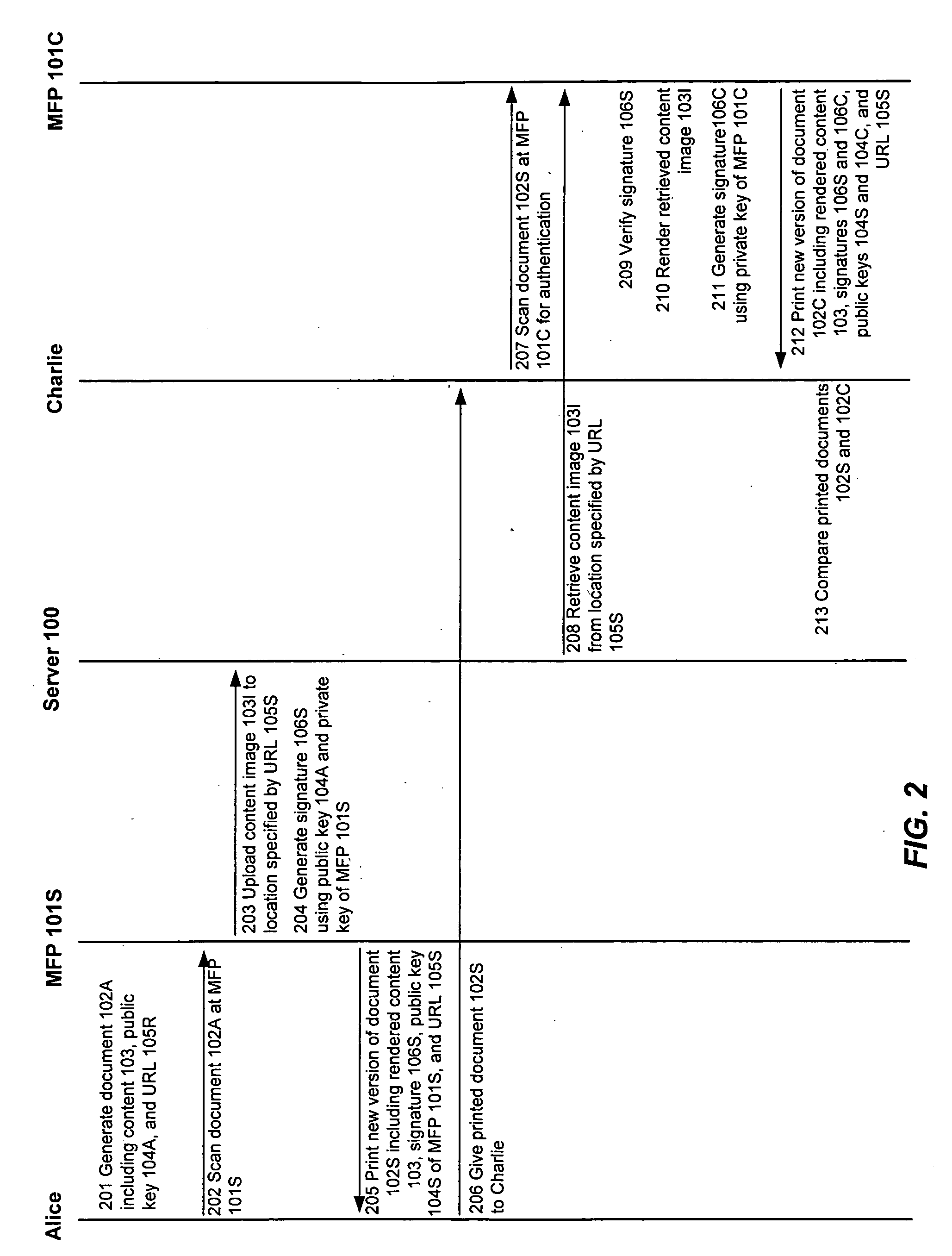

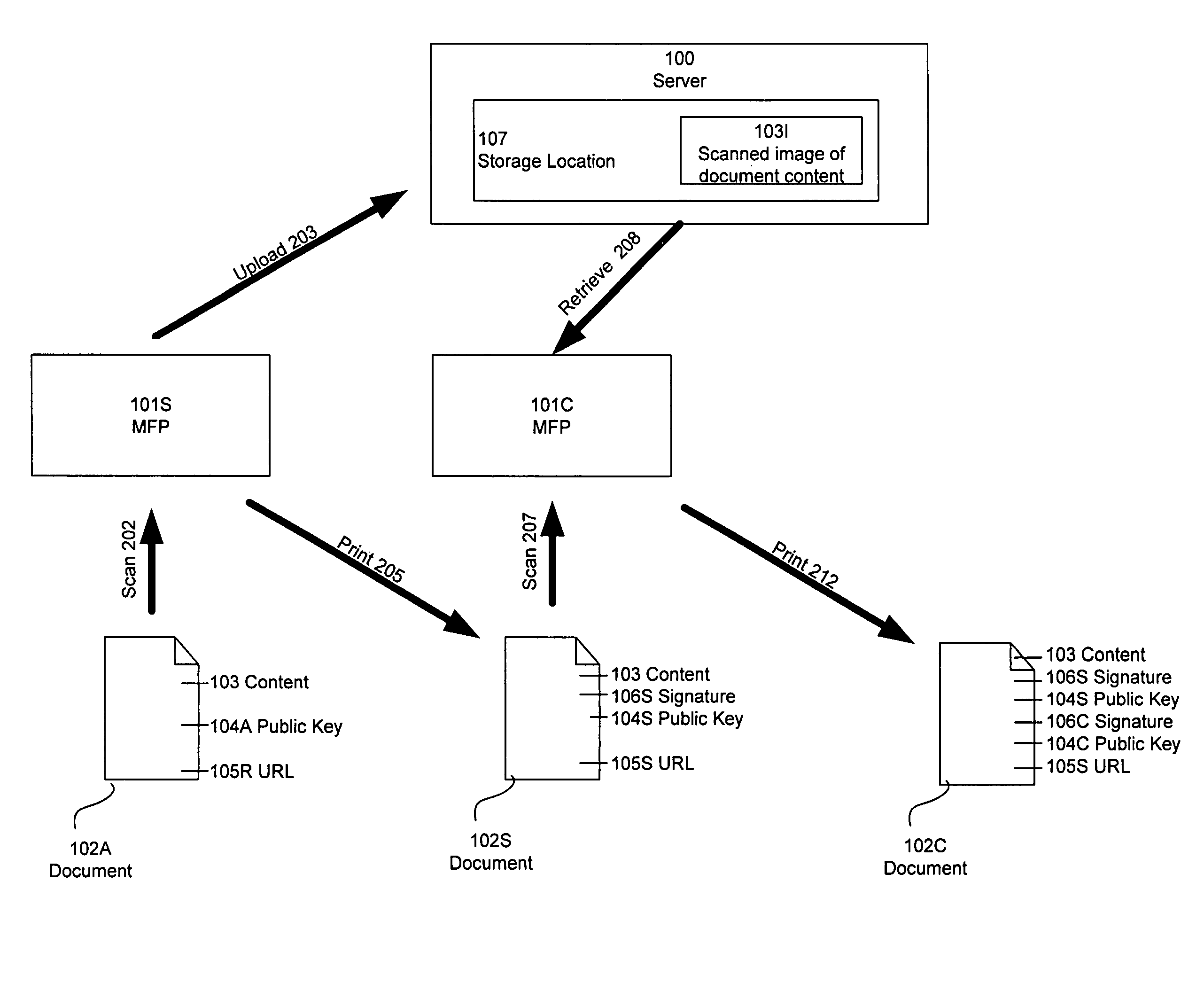

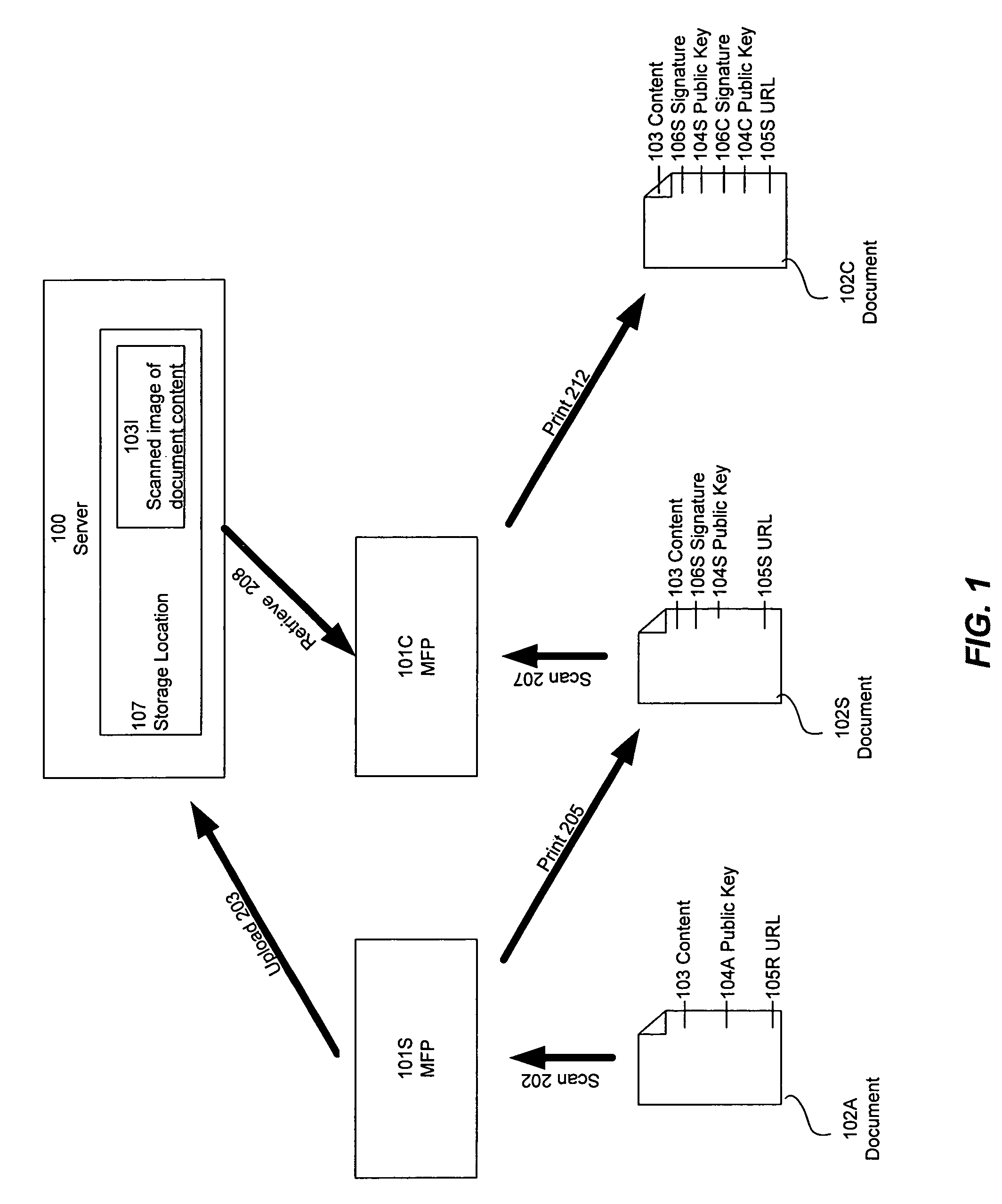

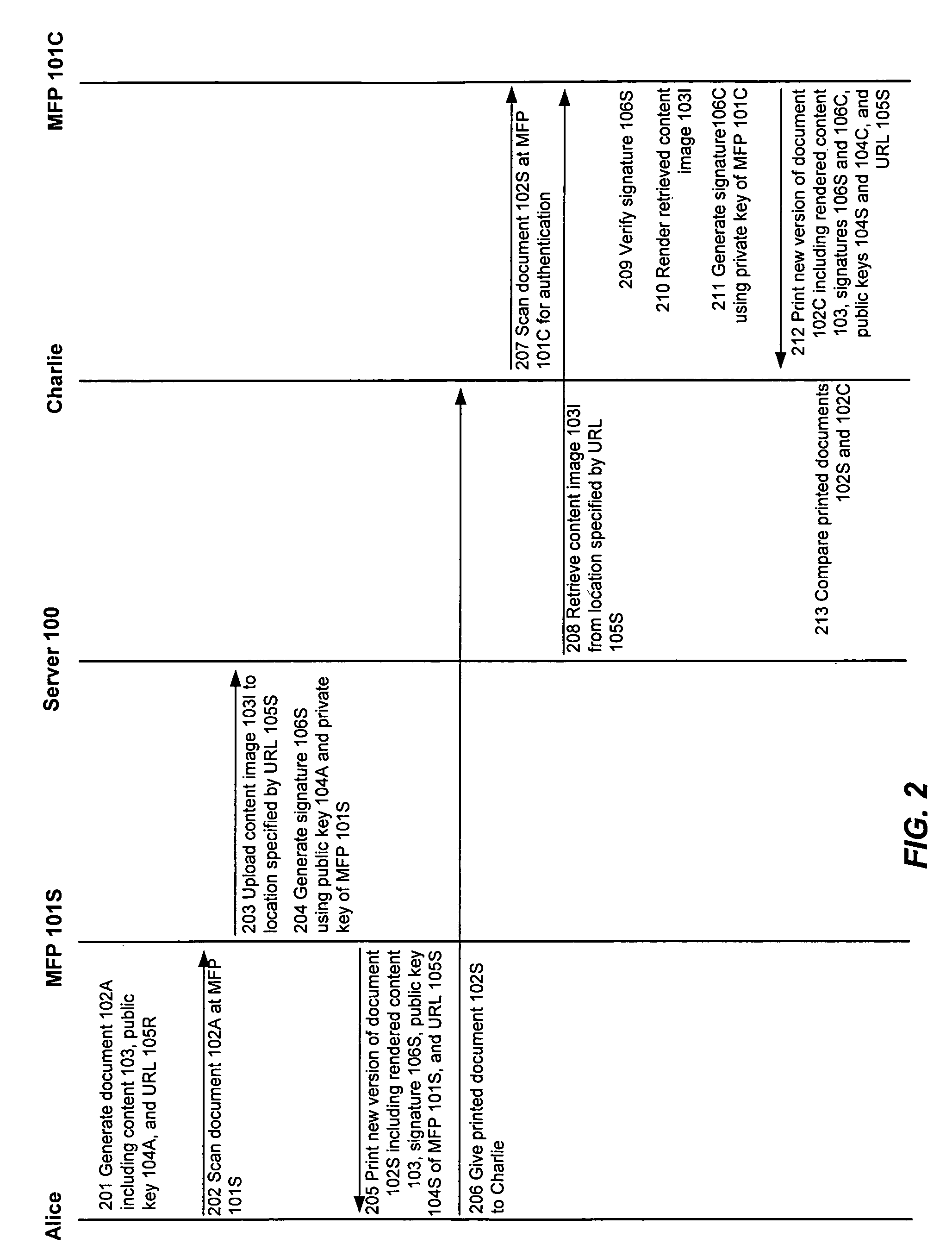

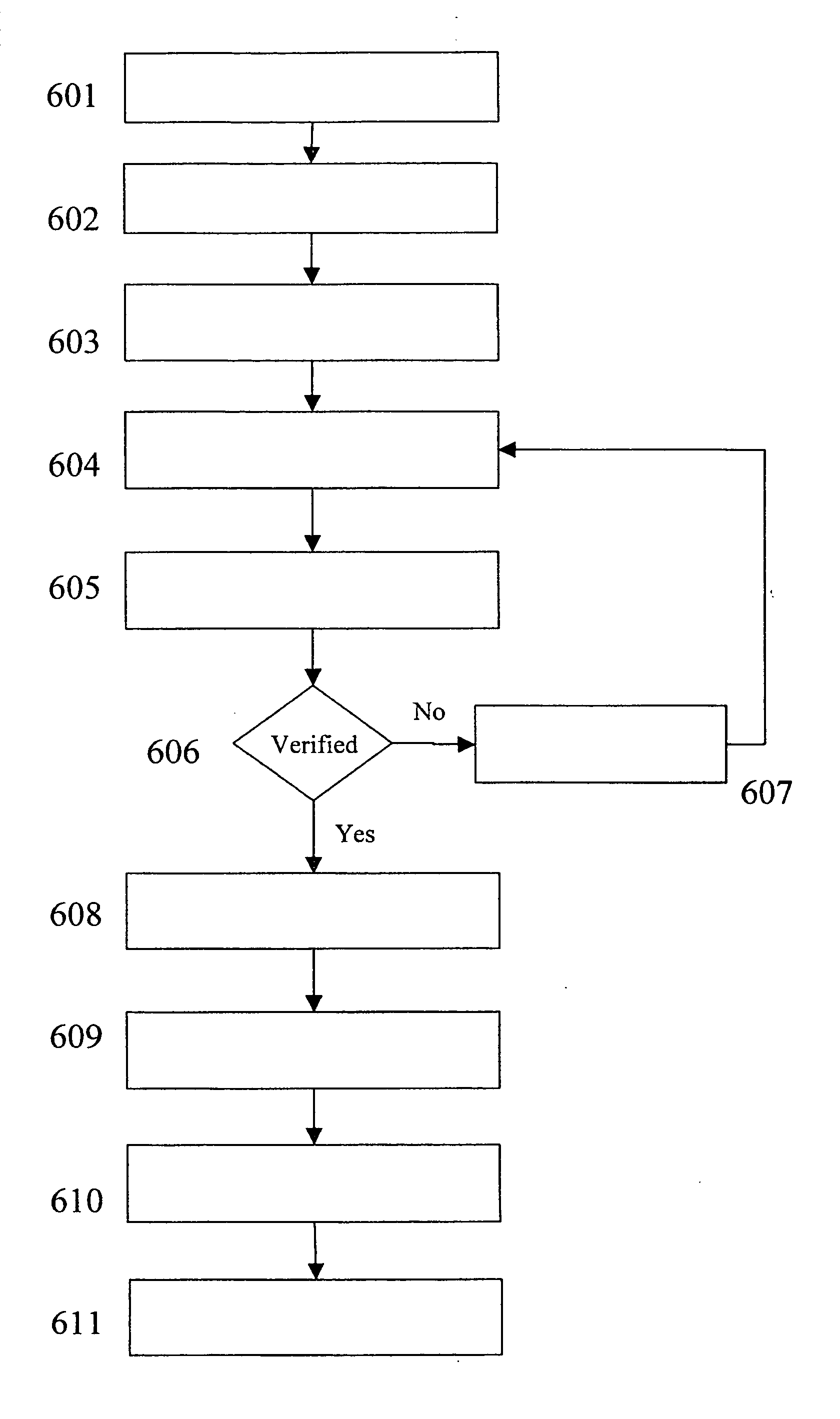

Document authentication combining digital signature verification and visual comparison

Inactive; US20060117182A1; Easy to detect; Efficiency advantage; User identity/authority verification; Digital data protection; Visual comparison; Digital signature

A document authentication system and method combine digital and non-electronic (or visual) authentication methodologies in an integrated, unified manner. As well as providing indicia of digital authentication, the invention generates a physical artifact that can be validated by unaided human visual perception. The present invention thus provides an opportunity to improve the level of trust in authentication of documents, while preserving the advantages of both traditional and digital authentication mechanisms.

Owner:RICOH KK

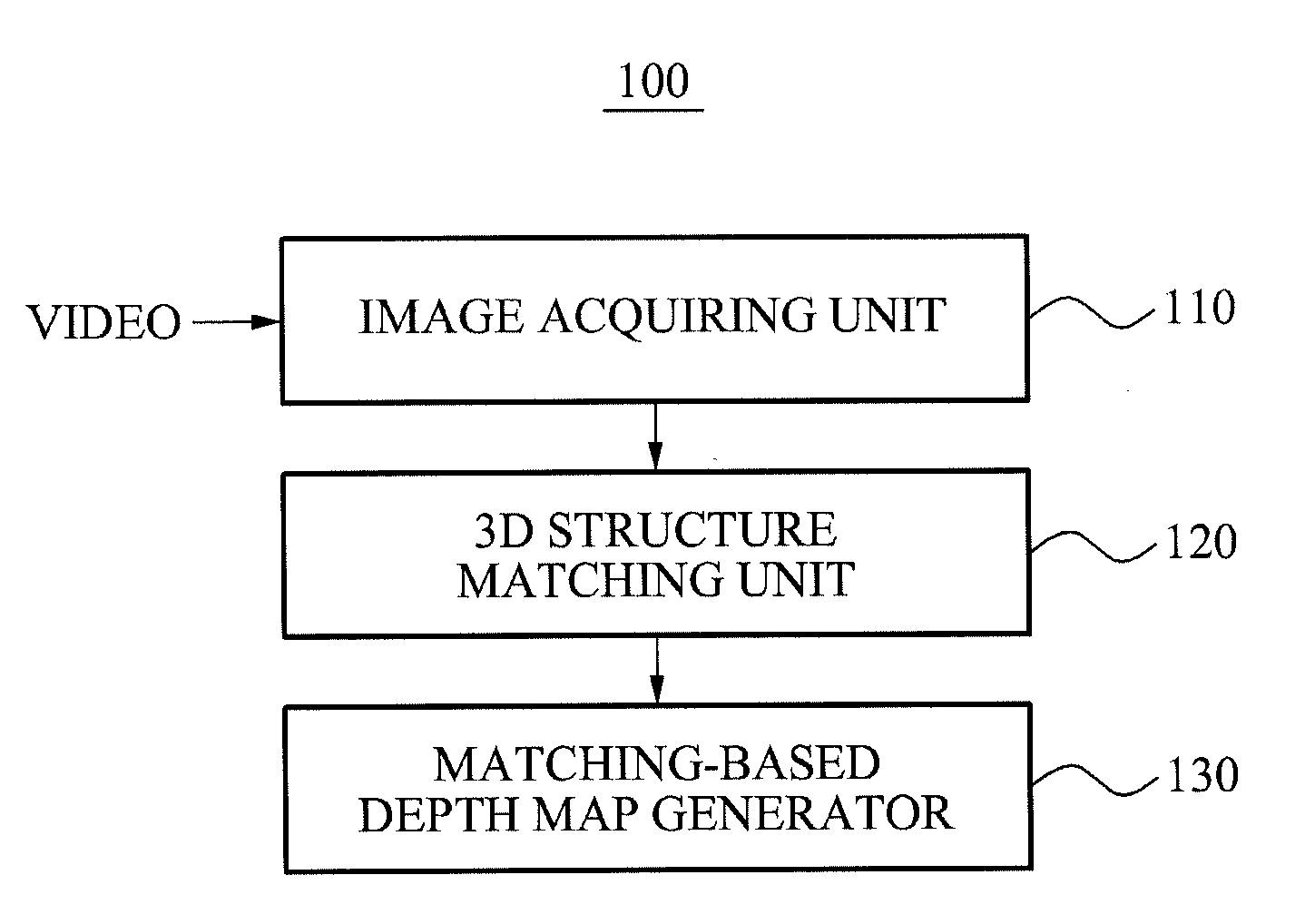

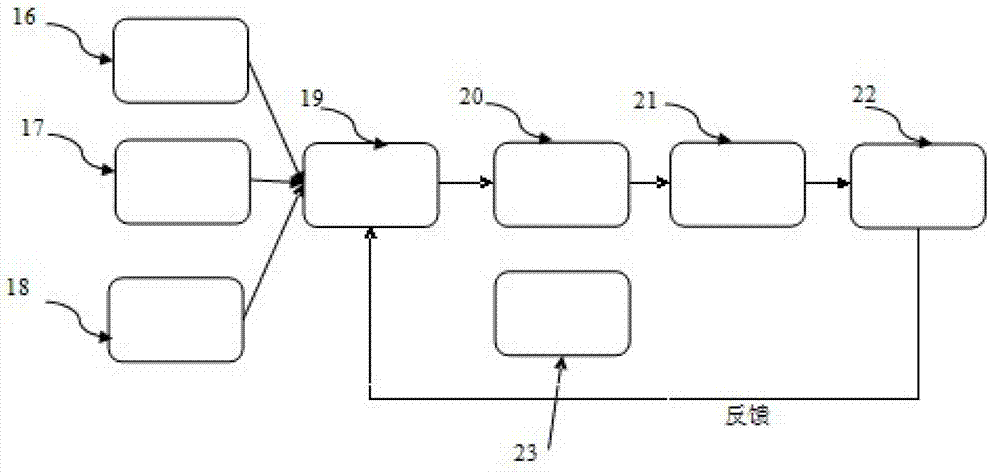

Apparatus, method and computer-readable medium generating depth map

Disclosed are an apparatus, a method, and a computer-readable medium for automatically generating a depth map corresponding to each two-dimensional (2D) image in a video. The apparatus includes: an image acquiring unit to acquire a plurality of 2D images that are temporally consecutive in an input video; a saliency map generator to generate at least one saliency map corresponding to a current 2D image among the plurality of 2D images based on a Human Visual Perception (HVP) model; a saliency-based depth map generator; a three-dimensional (3D) structure matching unit to calculate matching scores between the current 2D image and a plurality of 3D typical structures that are stored in advance, and to determine the 3D typical structure having the highest matching score to be the 3D structure of the current 2D image; a matching-based depth map generator; a combined depth map generator to combine the saliency-based depth map and the matching-based depth map into a combined depth map; and a spatial and temporal smoothing unit to spatially and temporally smooth the combined depth map.

Owner:SAMSUNG ELECTRONICS CO LTD

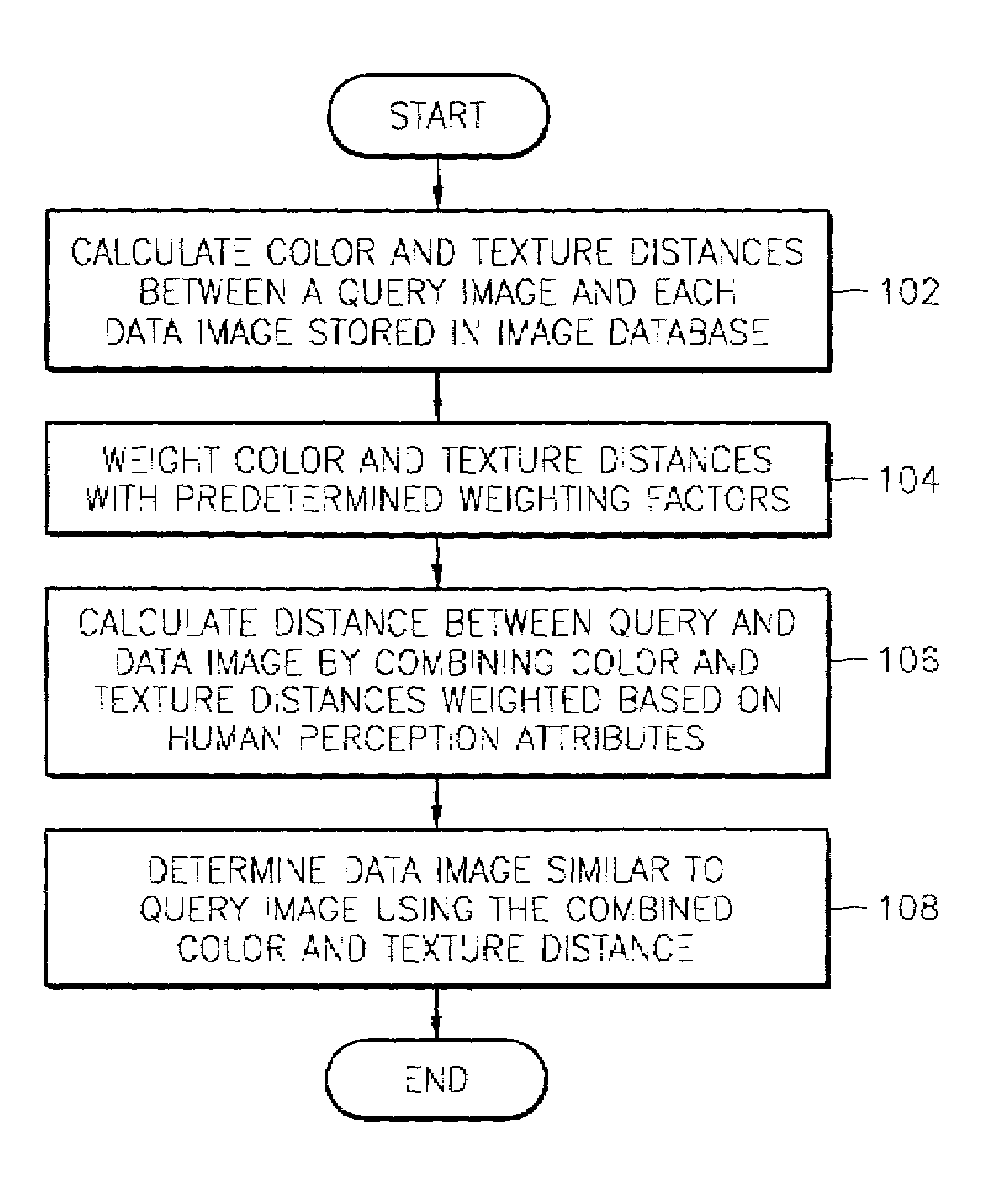

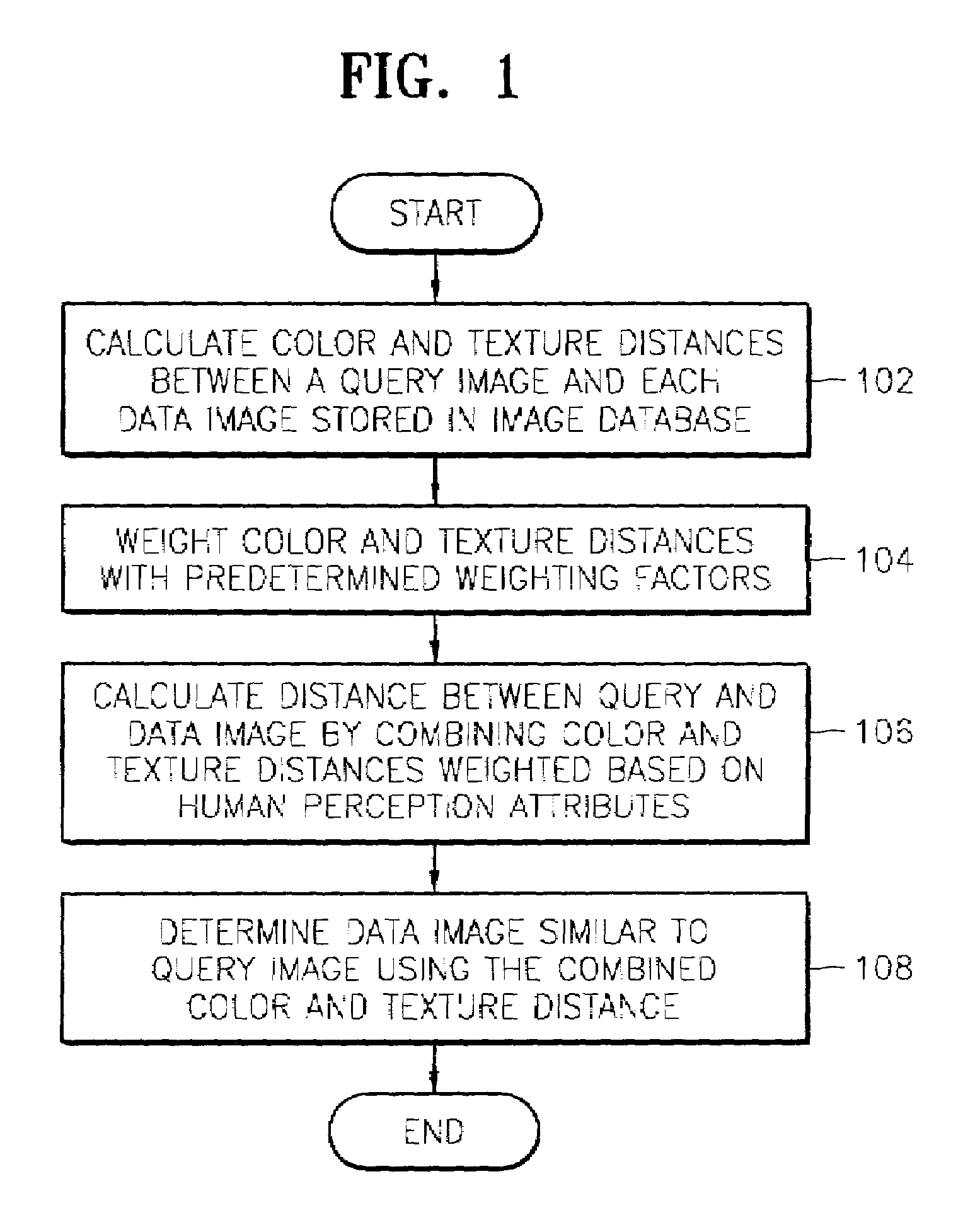

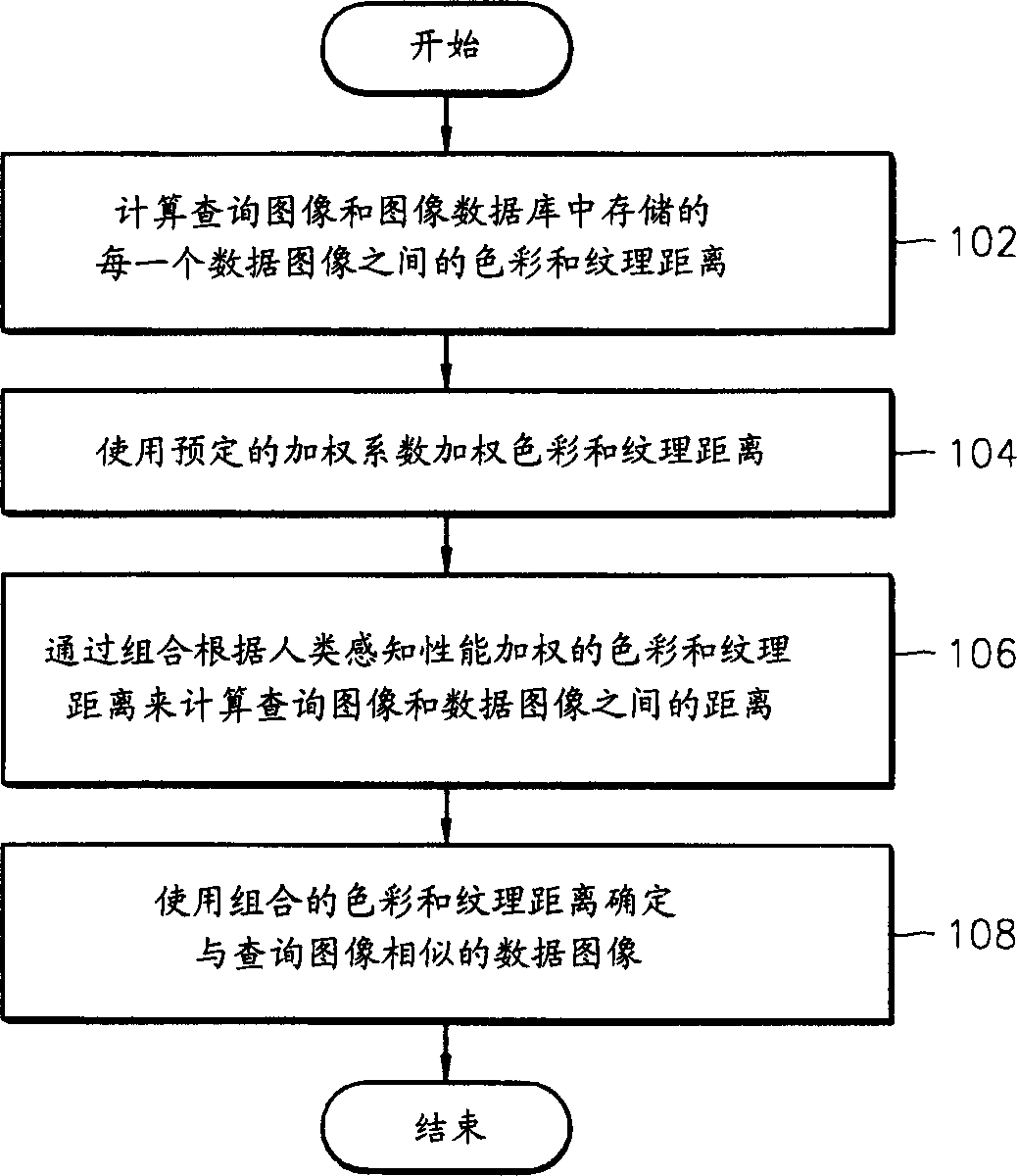

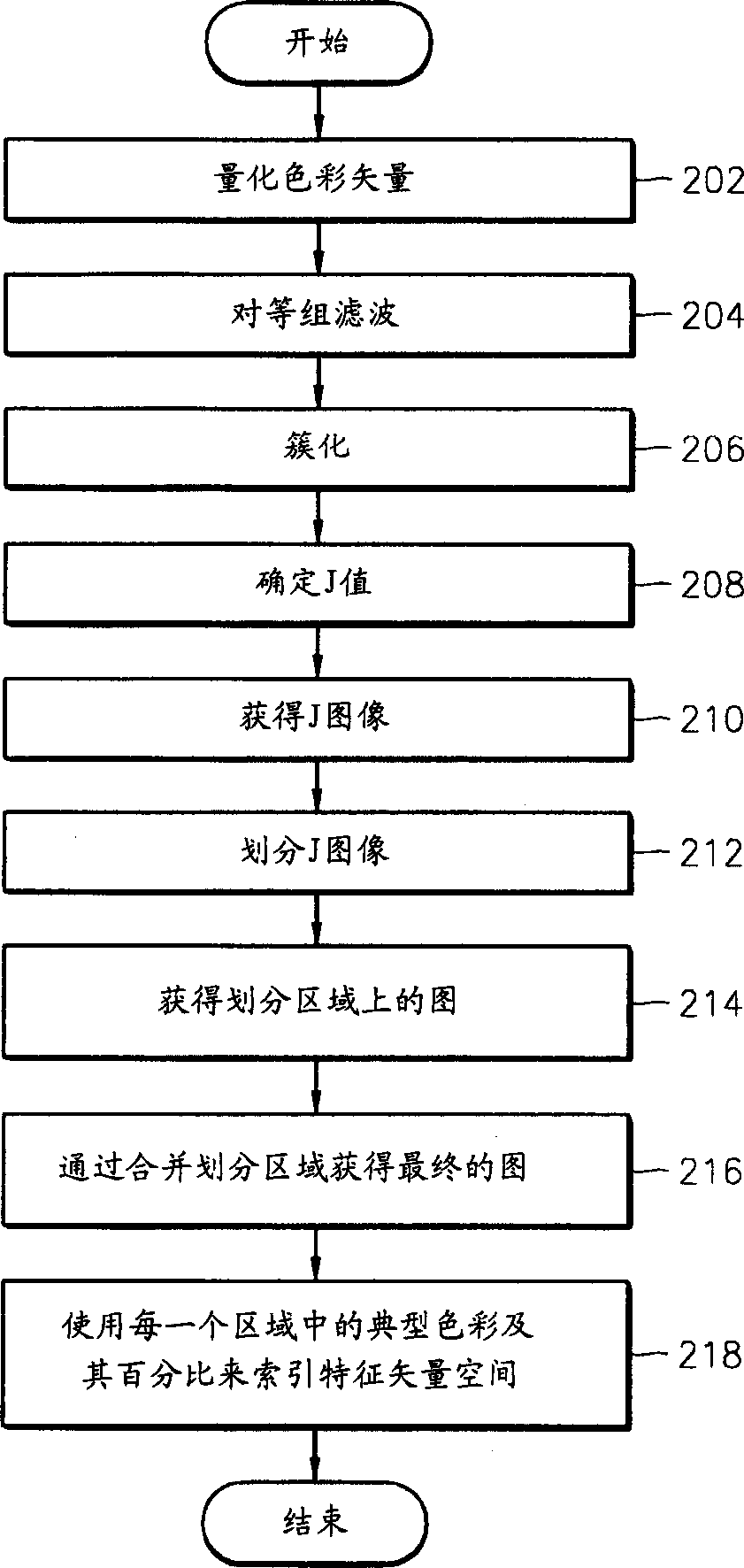

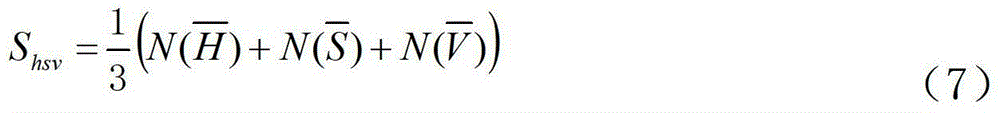

Image retrieval method based on combination of color and texture features

Inactive; US7062083B2; Improve performance; Television system details; Data processing applications; Single image; Image retrieval

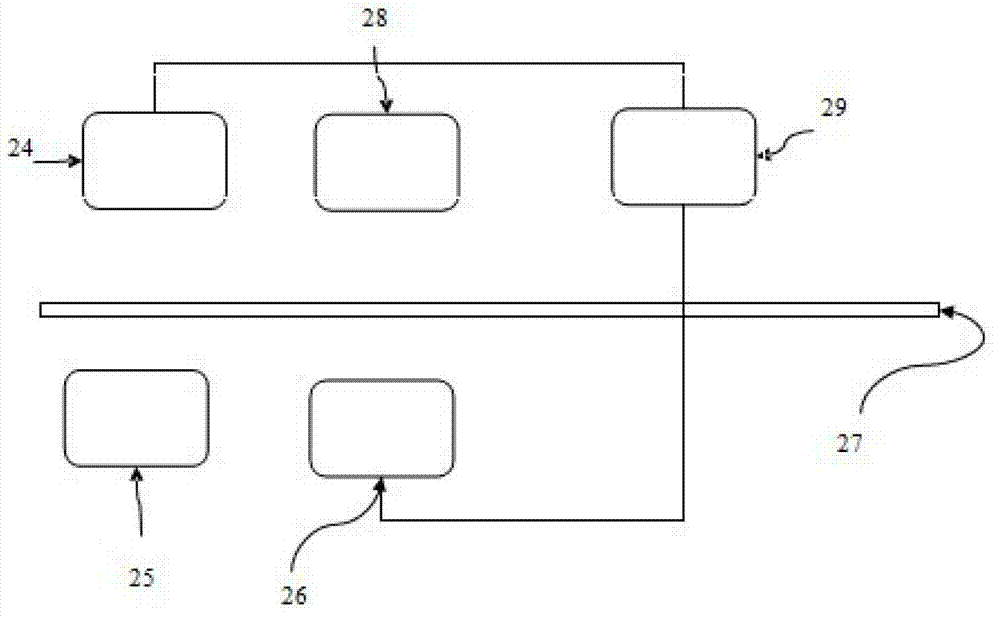

An image retrieval method with improved performance in which a data image similar to a query image is retrieved by appropriately combining color and texture features. The method for retrieving a data image similar to a query image in an image database containing a plurality of data images involves: calculating color and texture distances between a query image and each data image in the image database; weighting the calculated color and texture distances with predetermined weighting factors; calculating a feature distance between the query image and each data image by combining the weighted color and texture distances by considering human visual perception attributes; and determining the data image similar to the query image using the feature distance. According to the image retrieval method, data images similar to a query image can be retrieved based on the human visual perception mechanism by combining both color and texture features extracted from image regions. In particular, the image region based retrieval enables accurate retrieval of more objects and many kinds of information from a single image.

Owner:SAMSUNG ELECTRONICS CO LTD
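The weighting and ranking steps in the abstract above can be sketched as follows. The abstract does not state how the weighted distances are combined, so a simple weighted sum is assumed here; the function names, the weights 0.6 and 0.4, and the sample distances are all hypothetical, and both distances are assumed to be pre-normalized to the same range.

```python
def feature_distance(color_d, texture_d, w_color=0.6, w_texture=0.4):
    """Combine two normalized distances with a weighted sum (an assumed rule)."""
    return w_color * color_d + w_texture * texture_d


def rank_images(distances, w_color=0.6, w_texture=0.4):
    """Rank image ids from most to least similar to the query.

    distances maps image id -> (color distance, texture distance) to the query.
    """
    scored = [
        (feature_distance(c, t, w_color, w_texture), image_id)
        for image_id, (c, t) in distances.items()
    ]
    return [image_id for _, image_id in sorted(scored)]


# Made-up distances for three database images.
sample = {"a": (0.1, 0.9), "b": (0.5, 0.2), "c": (0.3, 0.3)}
print(rank_images(sample))                                # balanced weights
print(rank_images(sample, w_color=1.0, w_texture=0.0))    # color only
```

Changing the weights changes the ranking, which is why the choice of weighting factors matters for matching what a viewer would consider similar.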

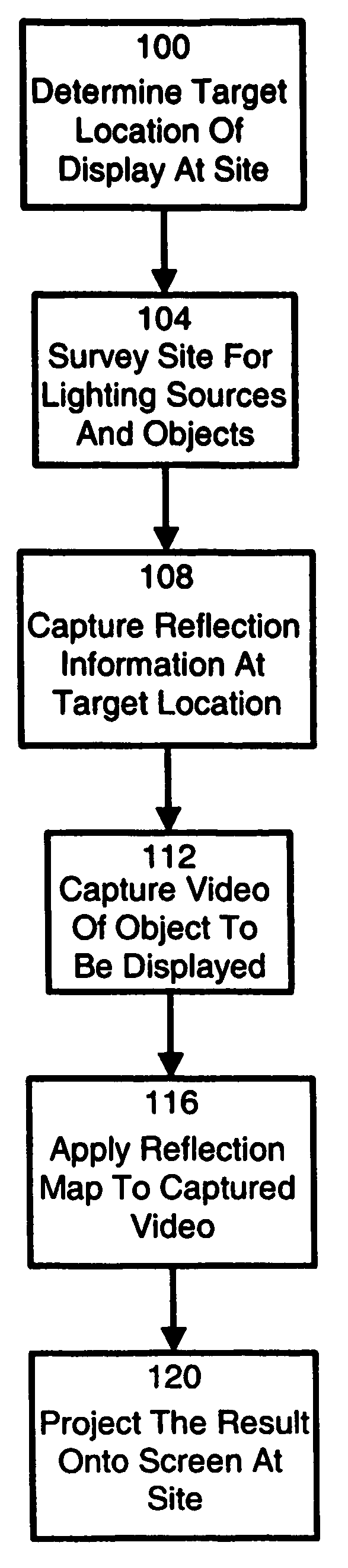

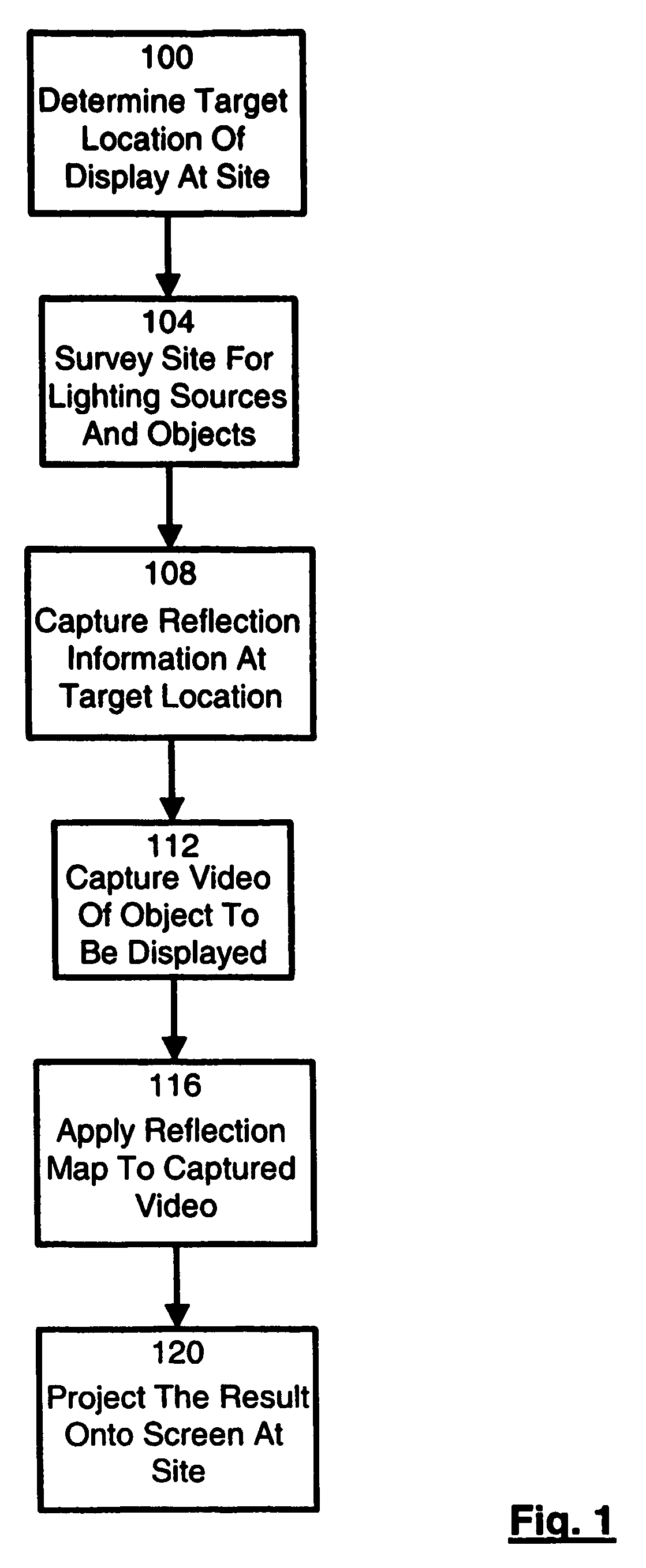

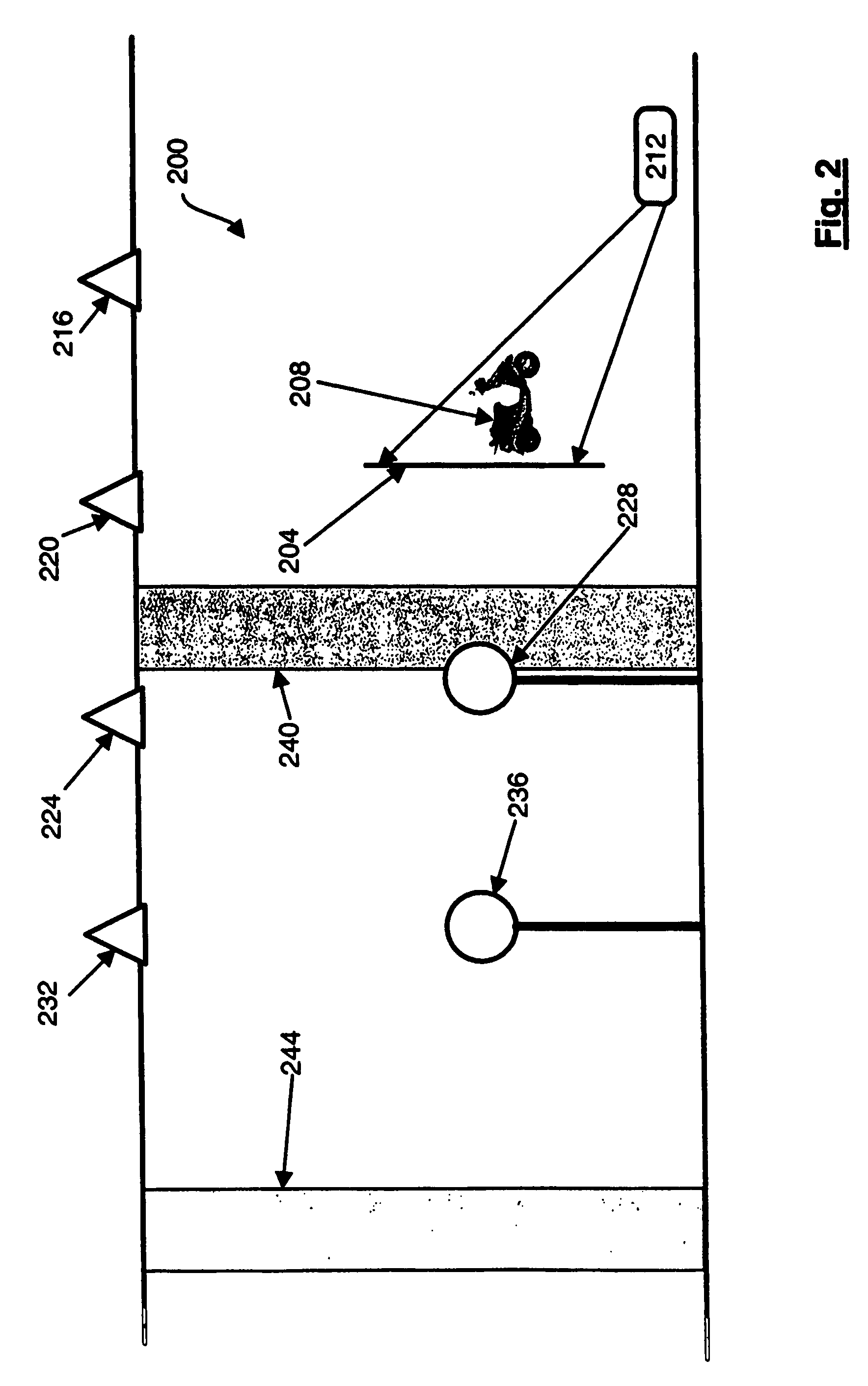

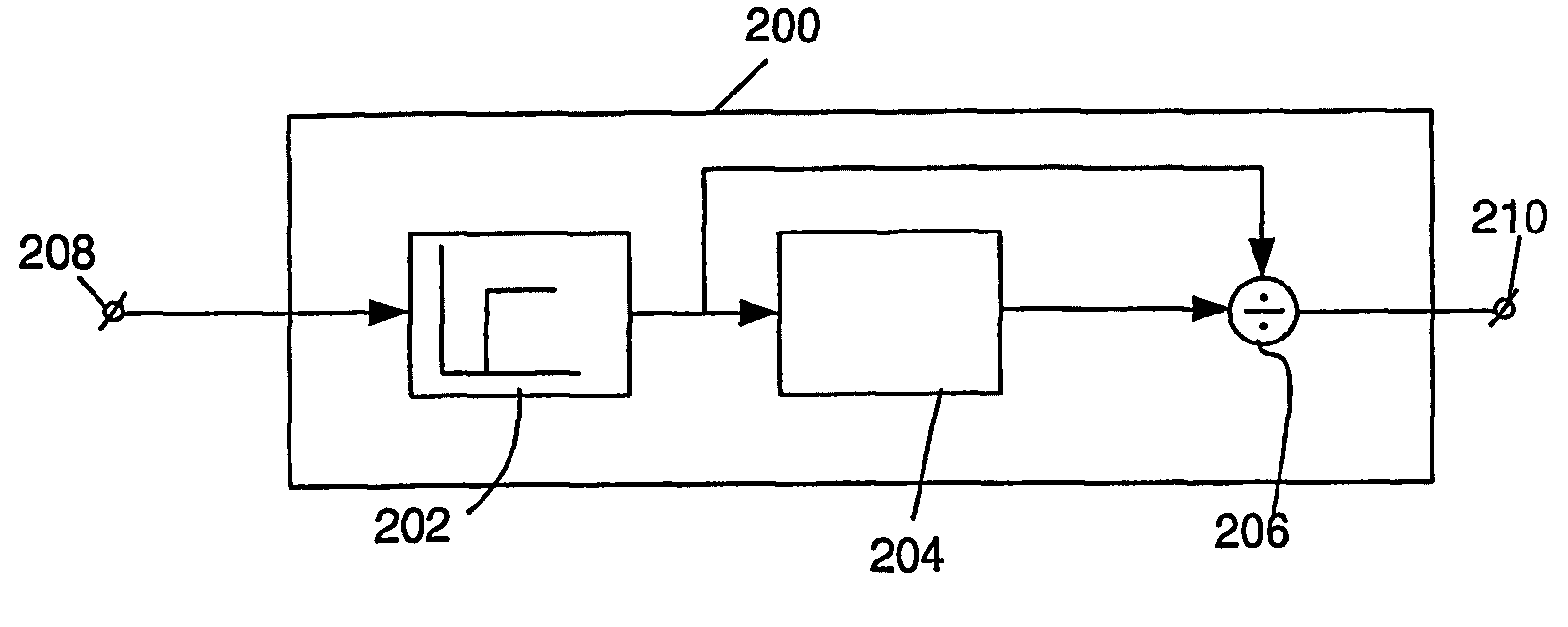

Three dimensional display method, system and apparatus

Inactive; US7385600B2; Effective display; Projectors; Picture reproducers using projection devices; Ambient lighting; Projection screen

Owner:1614367 ONTARIO

Method of and scaling unit for scaling a three-dimensional model and display apparatus

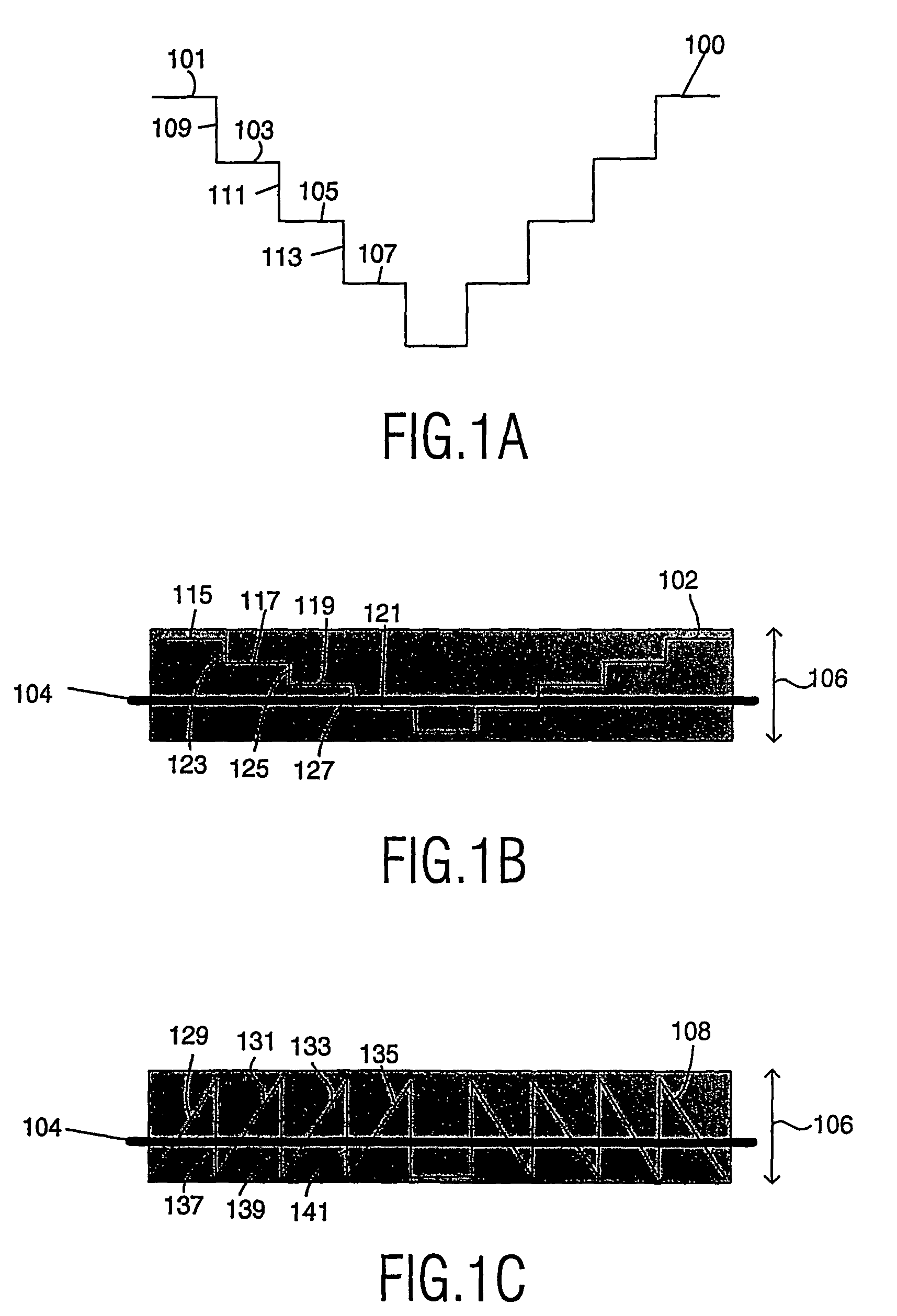

Inactive; US7167188B2; Easy to scale; Geometric image transformation; Cathode-ray tube indicators; Three dimensionality; Scale unit

A method of scaling a three-dimensional model (100) into a scaled three-dimensional model (108) in a dimension related to depth, based on properties of human visual perception. The method discriminates between relevant parts of the information represented by the three-dimensional model, to which human visual perception is sensitive, and irrelevant parts, to which human visual perception is insensitive. Such properties include, e.g., sensitivity to a discontinuity in a signal representing depth and sensitivity to a difference of luminance values between neighboring pixels of a two-dimensional view of the three-dimensional model.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

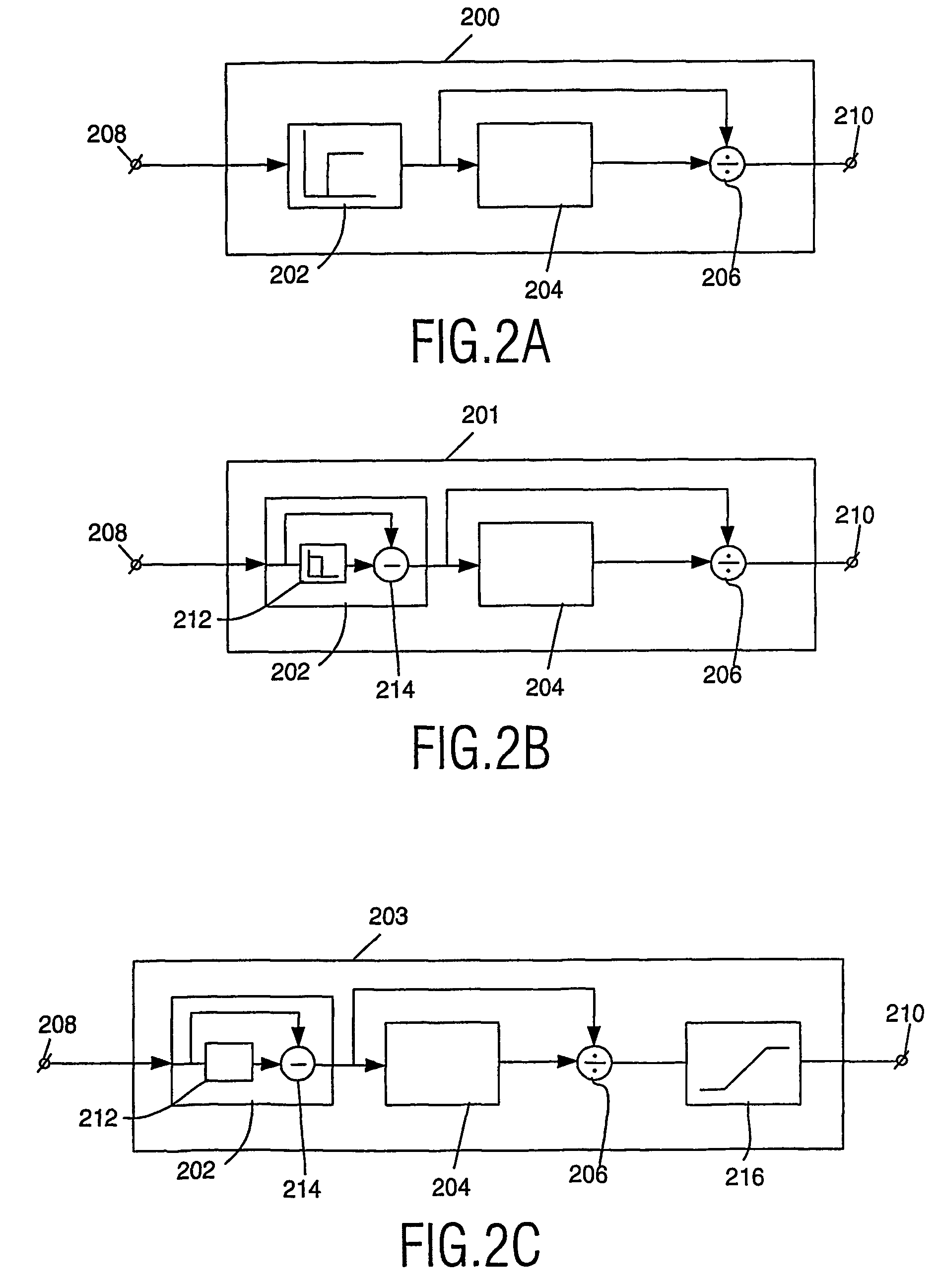

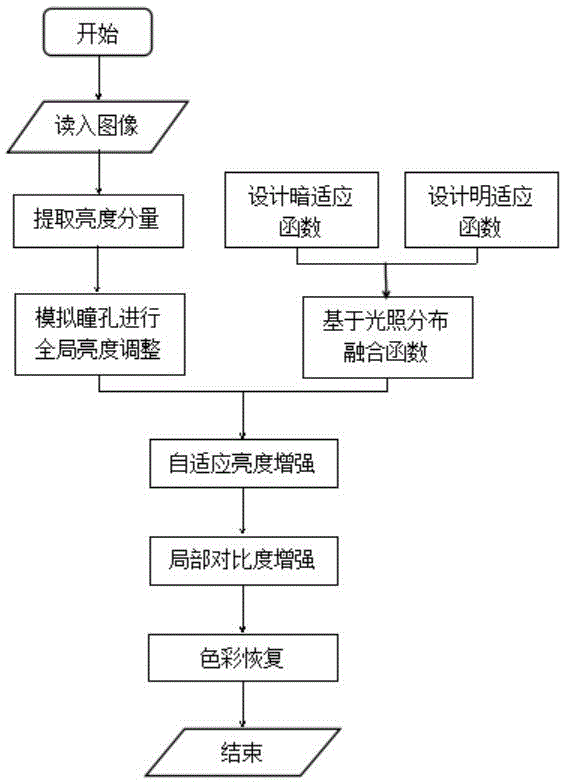

Human visual perception simulation-based self-adaptive low-illumination image enhancement method

Active; CN105046663A; Increase brightness level; Enhancement effect is good; Image enhancement; Color image; Pupil

Owner:SOUTHWEST UNIV OF SCI & TECH

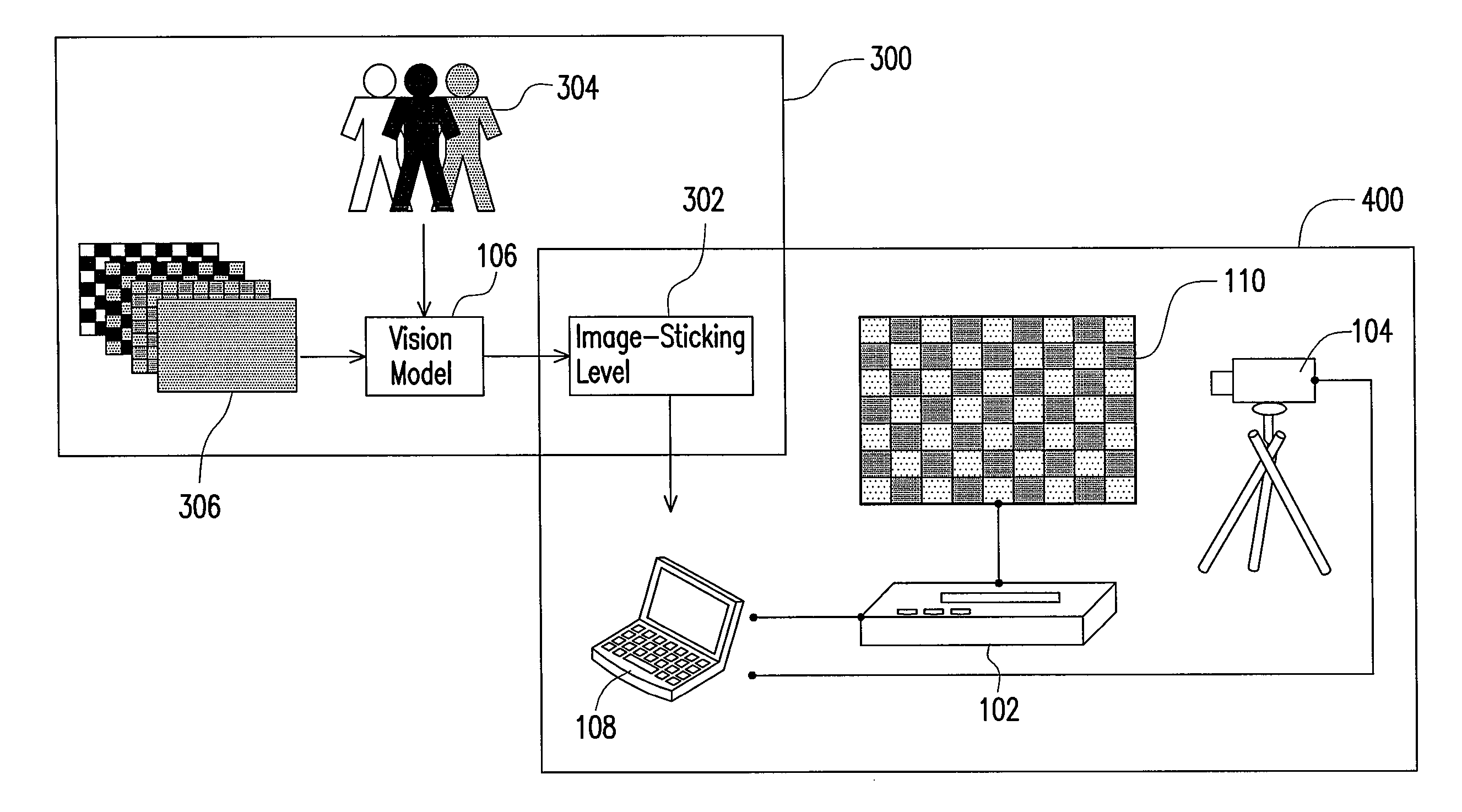

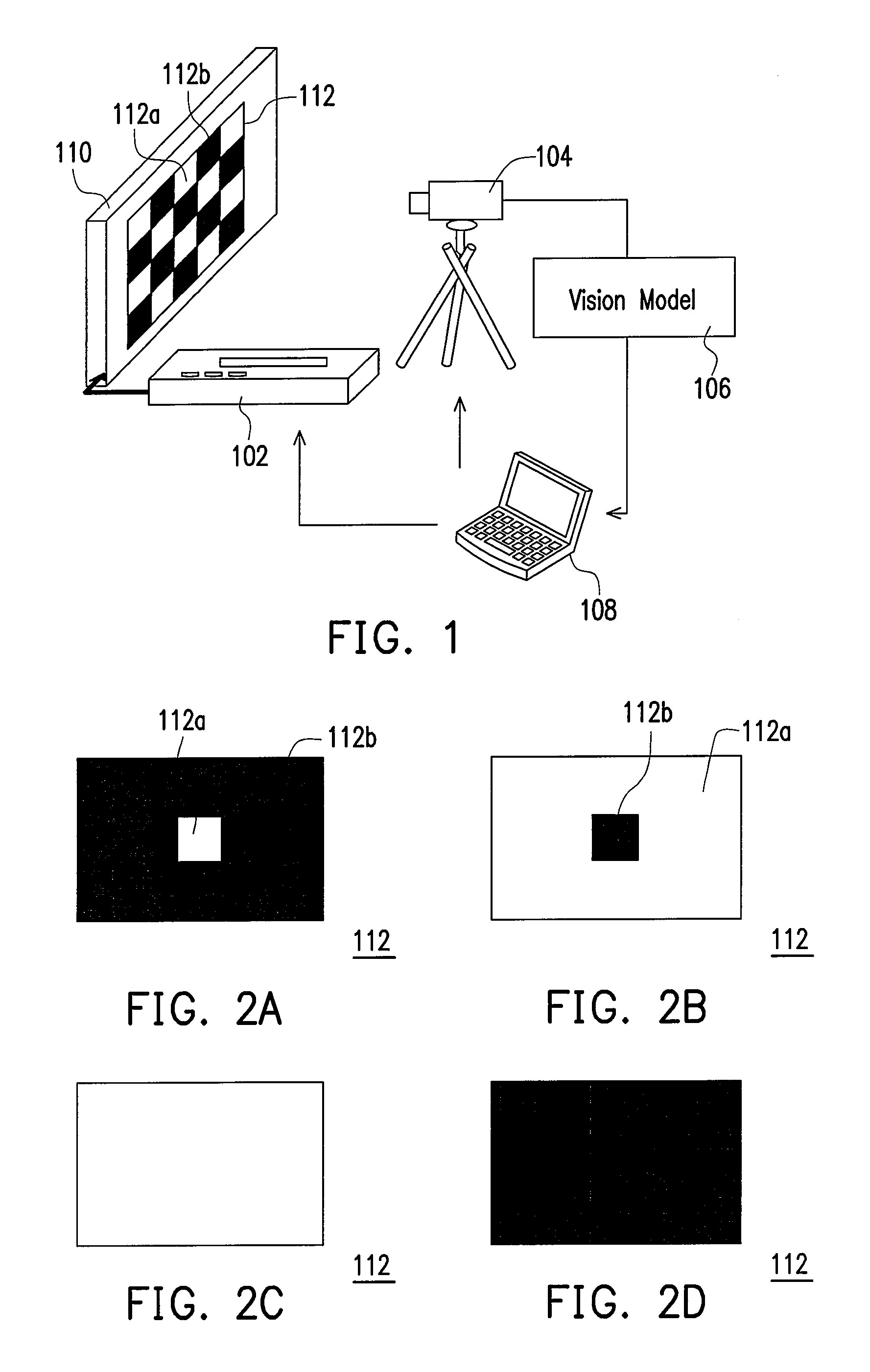

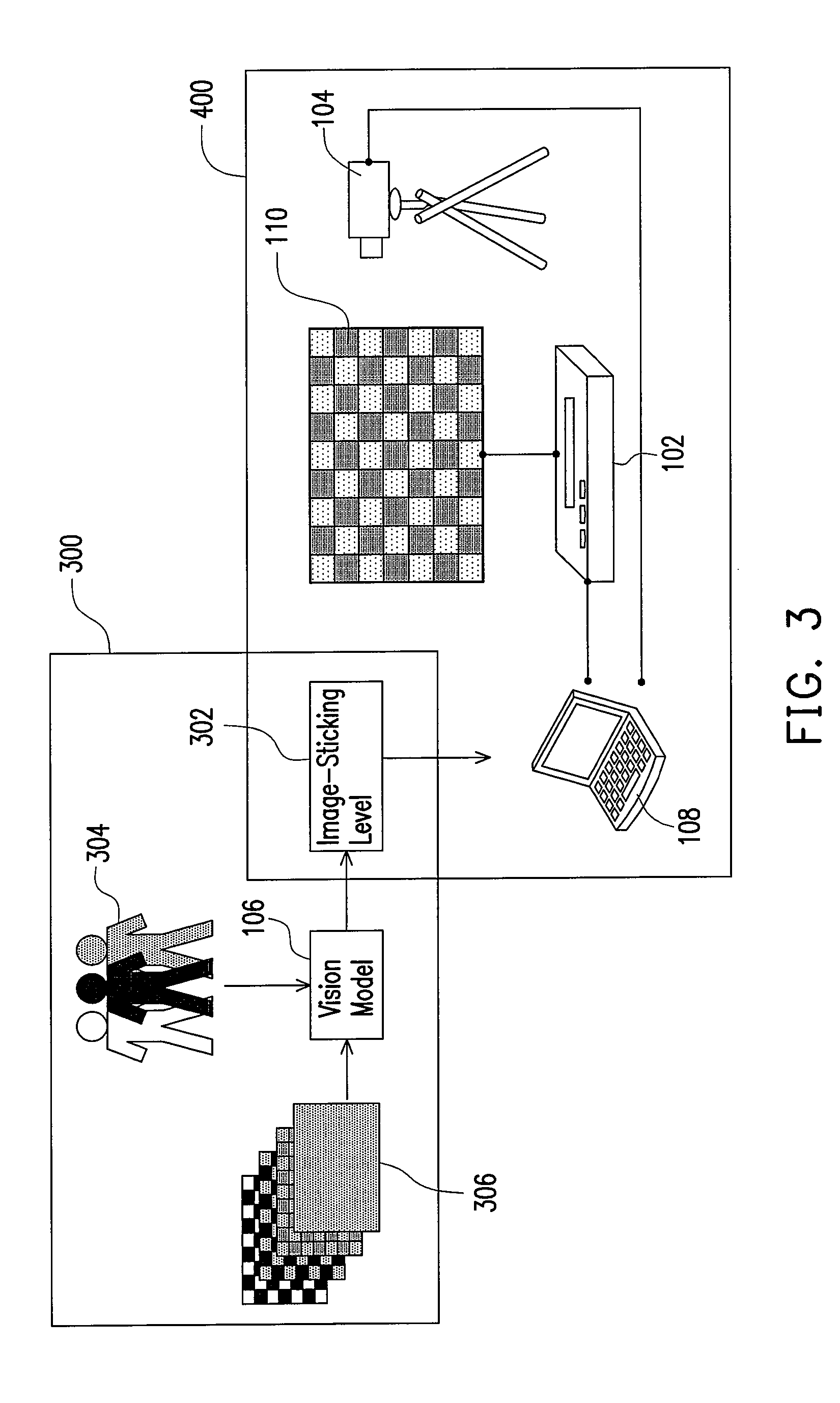

Method and apparatus of detecting image-sticking of display device

Inactive; US20090096778A1; Cathode-ray tube indicators; Input/output processes for data processing; Gray level; Test frame

A method of measuring image-sticking of a display device based on a vision model is described. A display device is provided, and a test frame is displayed on the display device. The test frame has a pattern formed by a maximum gray level and a minimum gray level. After burning-in the test frame for a period of time, a gray frame at a medium gray level is displayed on the display device. The test frame, including portions of extreme black and extreme white, leaves image-sticking on the gray pattern at a medium gray level at the same time. Next, an image capture device is used to capture the image-sticking on the display device after burn-in. Finally, a vision model is employed to simulate the human visual perception on the image-sticking and to grade the image-sticking according to the human eye sensitivity for viewing the image-sticking on the display device.

Owner:TAIWAN TFT LCD ASSOC +6

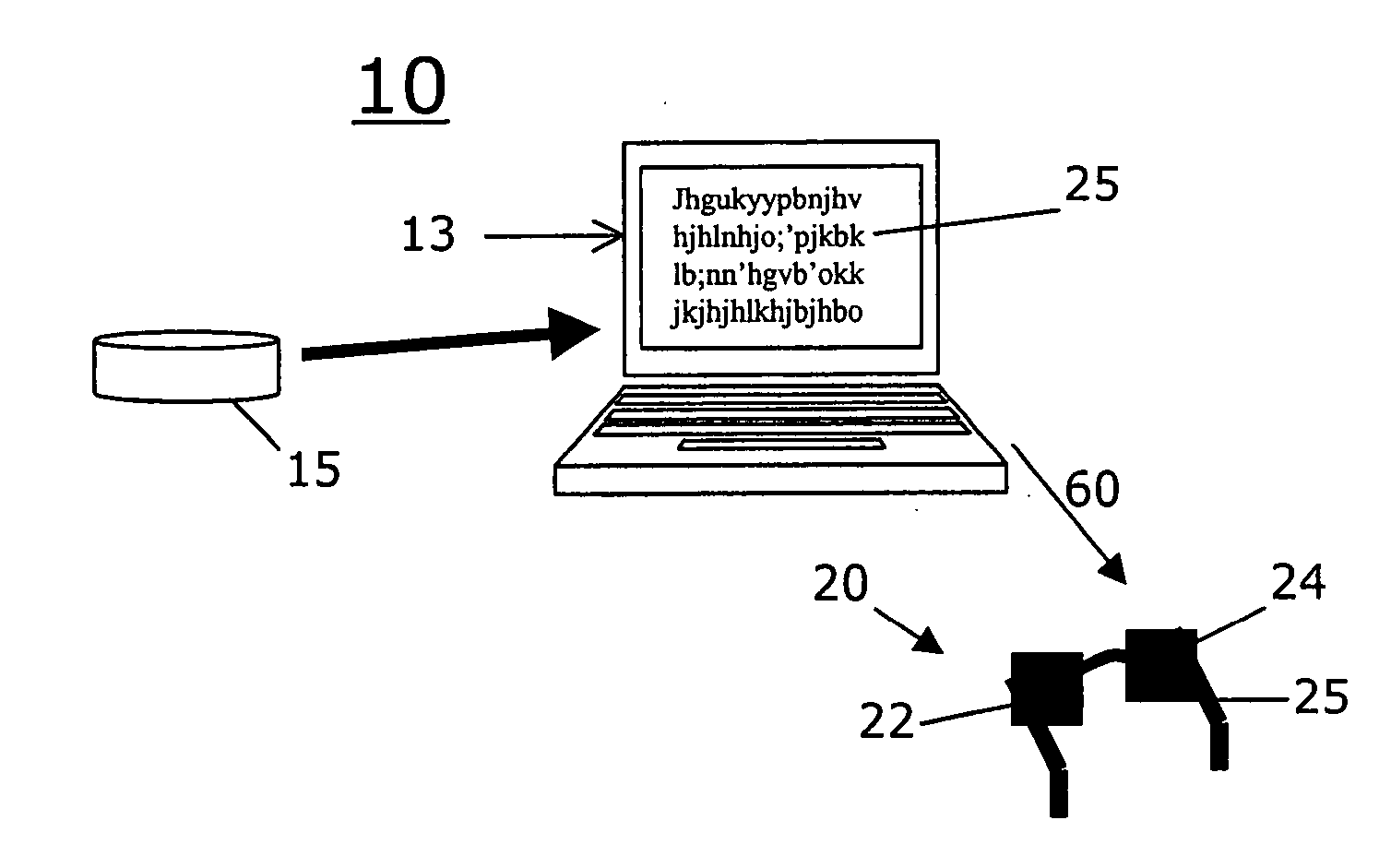

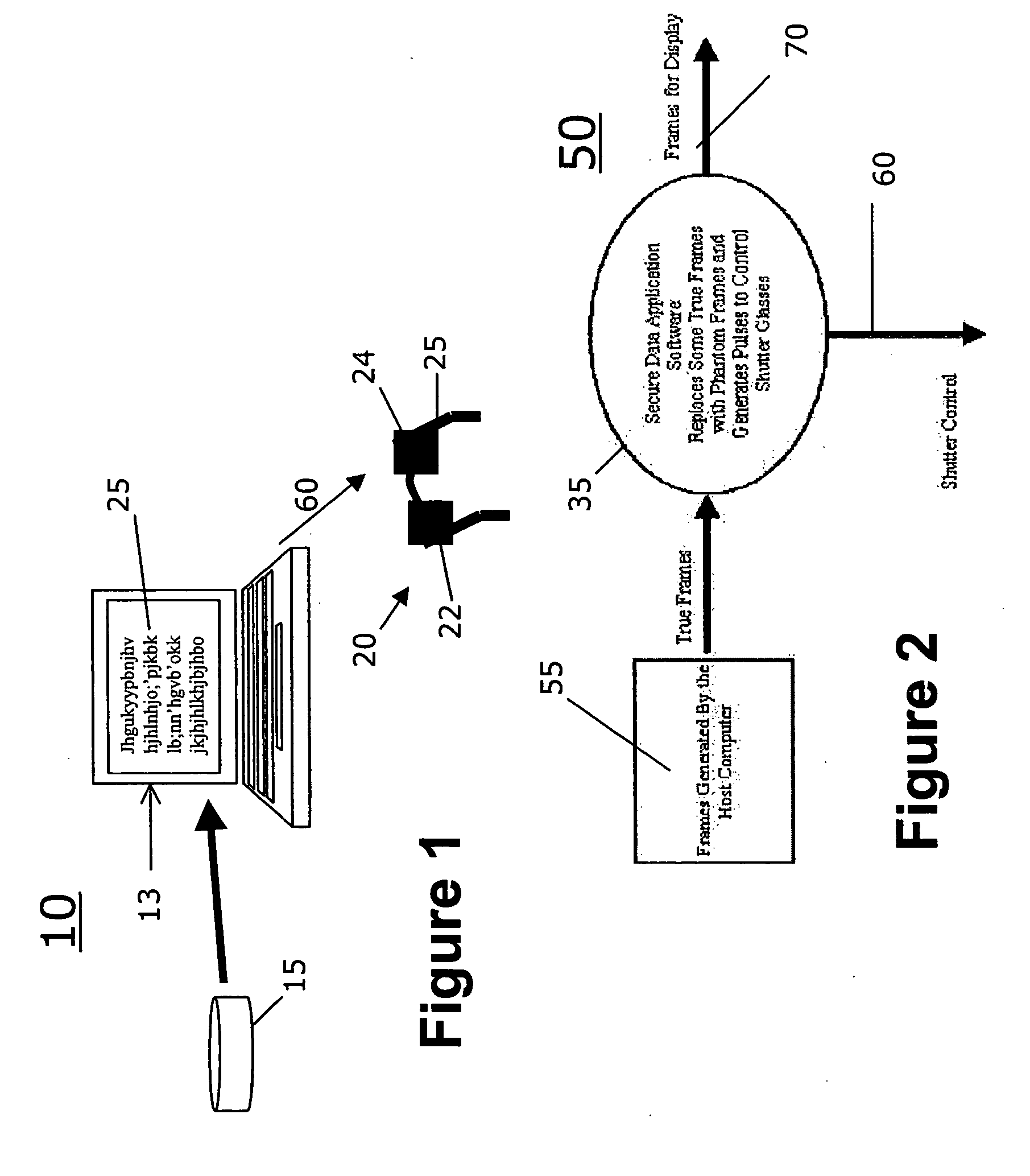

Method for displaying private/secure data

Inactive; US20070247392A1; Minimally invasive; Cathode-ray tube indicators; Computer graphics (images); Display device

A system and method for allowing users to view secure data while excluding others from viewing. The method includes generating a stream of first data frames including secure data content to be viewed on a display device; inserting second data frames within the stream in a manner to thereby render a displayed image of the secure data unreadable on the display device. Shutter glasses, to be worn by an authorized user, are provided to receive signals from the computer device and, in response to received signals, filter out the second data frames thus enabling the viewer to view the secure data to the exclusion of nearby observers. The second frames are generated and interspersed within the first video frames having secure data by software executing on a conventional computing device. The second frames may include random text interspersed according to a regular or irregular pattern, or may include color and intensities to exploit deficiencies of human visual perception.

Owner:IBM CORP

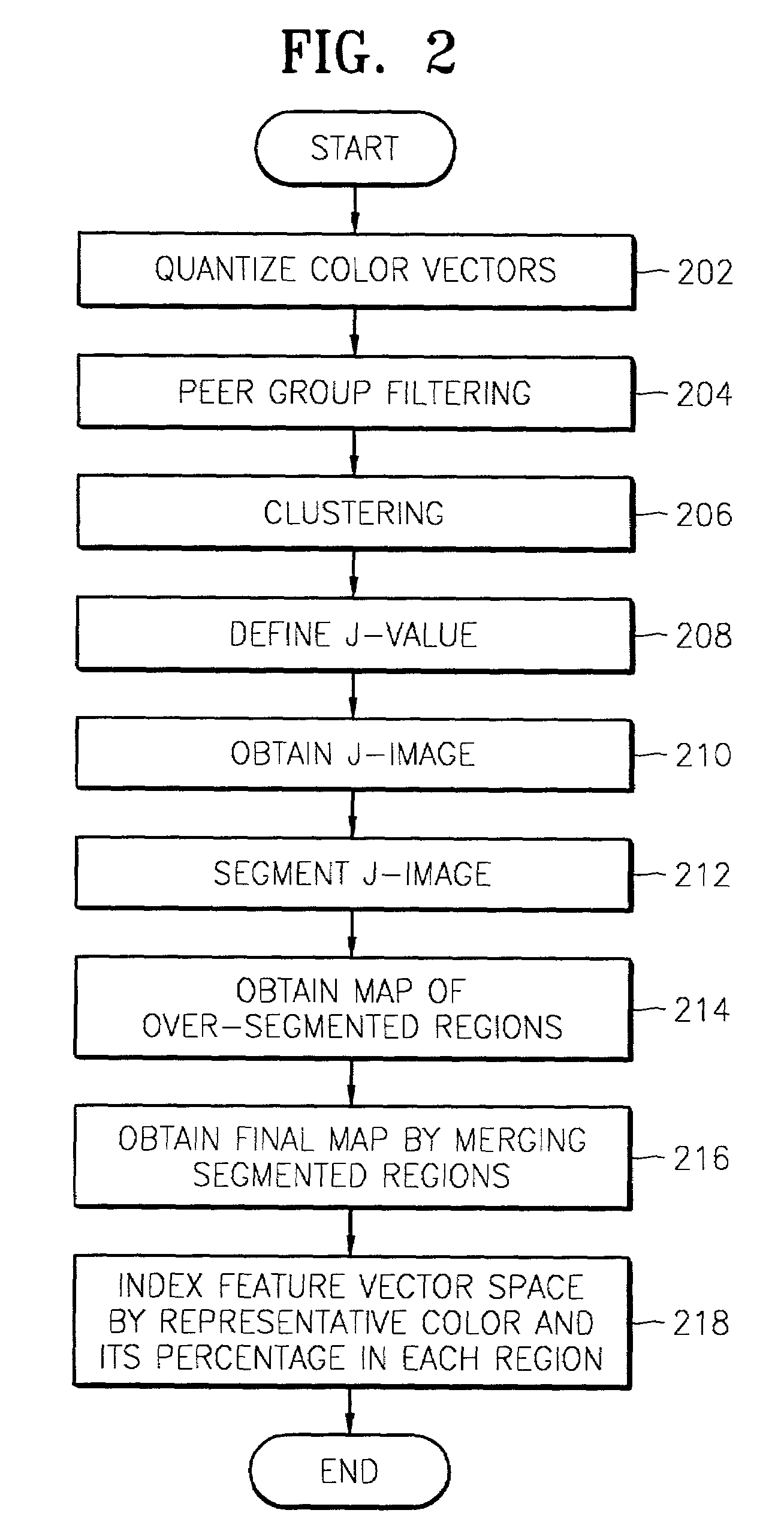

Image retrieval method based on color and image characteristic combination

An image retrieval method with improved performance in which a data image similar to a query image is retrieved by appropriately combining colour and texture features. The method for retrieving a data image similar to a query image in an image database containing a plurality of data images involves: calculating colour and texture distances between a query image and each data image in the image database; weighting the calculated colour and texture distances with predetermined weighting factors; calculating a feature distance between the query image and each data image by combining the weighted colour and texture distances by considering human visual perception attributes; and determining the data image similar to the query image using the feature distance. According to the image retrieval method, data images similar to a query image can be retrieved based on the human visual perception mechanism by combining both colour and texture features extracted from image regions. In particular, the image region based retrieval enables much more accurate retrieval of more objects and many kinds of information from a single image.

Owner:SAMSUNG ELECTRONICS CO LTD

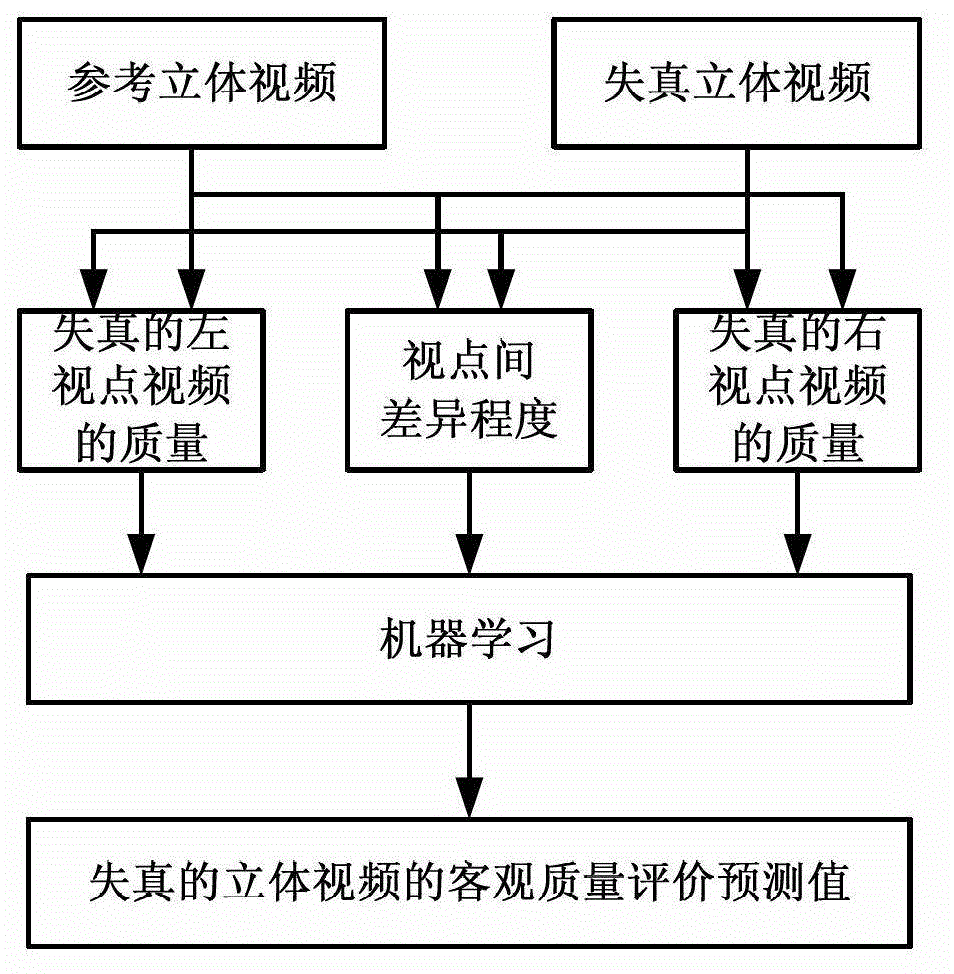

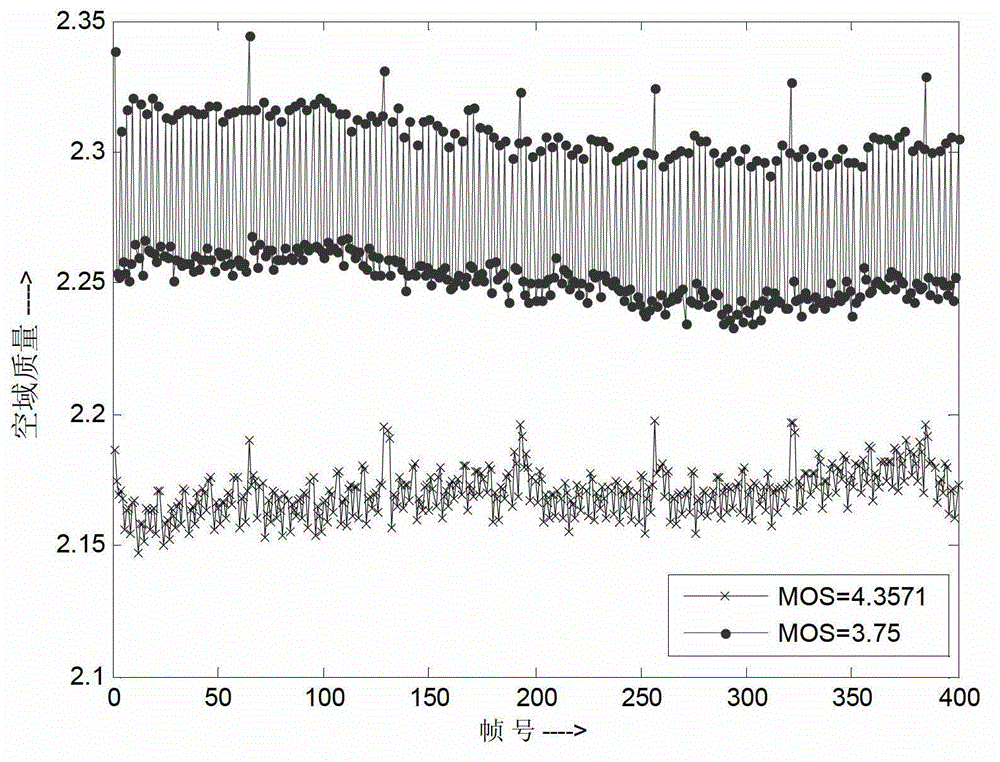

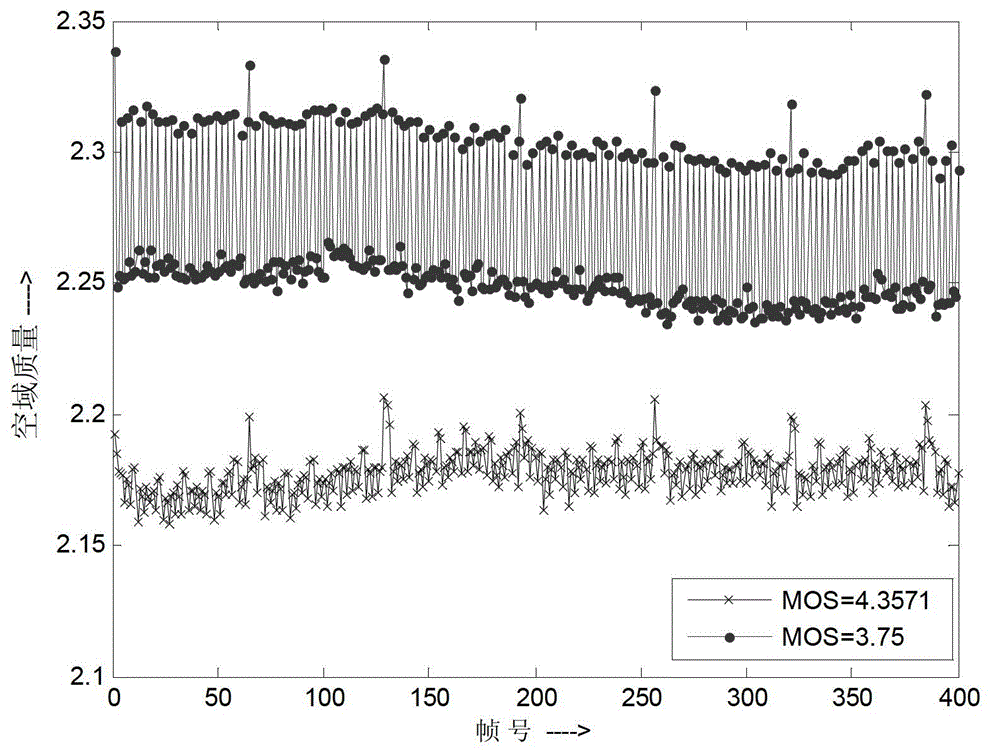

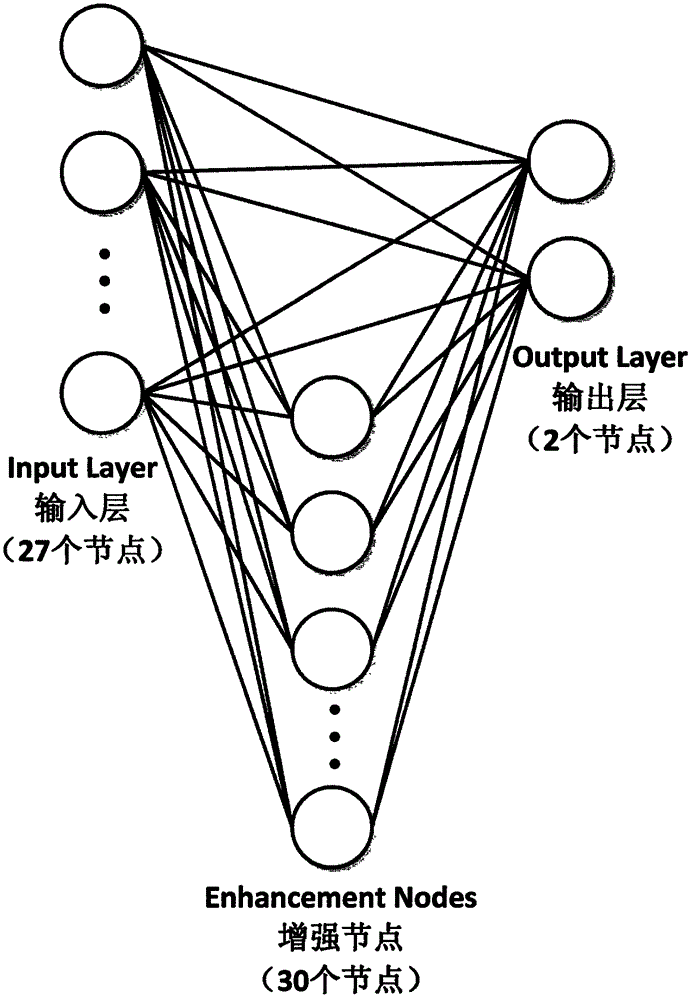

Stereoscopic video objective quality evaluation method based on machine learning

Inactive; CN103338379A; Reflect changes in visual quality; Get evaluation result; Television systems; Stereoscopic systems; Stereoscopic video; Singular value decomposition

The invention discloses a stereoscopic video objective quality evaluation method based on machine learning. When spatial domain quality of luminance component images of single-frame images is evaluated, each image block of luminance component images of each frame of image in original and distorted stereoscopic videos is subjected to singular value decomposition, and dot product of singular vectors obtained from singular value decomposition is adopted to evaluate distortion degree of each frame of image in the distorted stereoscopic video. Because the singular vectors can greatly reflect structural information of images, when the dot product of the singular vectors is adopted to evaluate the quality of the images, changes of the structural information are considered, and therefore, evaluation results can reflect changes of visual quality of the stereoscopic video more objectively when the stereoscopic video is under various kinds of distortion influences. According to the stereoscopic video objective quality evaluation method based on machine learning, a method of machine learning is adopted to process the relations between objective quality evaluation predicted values and the quality of a left-view point video and a right-view point video, and degree of difference among point views of the left-view point video and the right-view point video, and therefore, evaluation results which are more consistent with human visual perception can be effectively obtained.

Owner:NINGBO UNIV

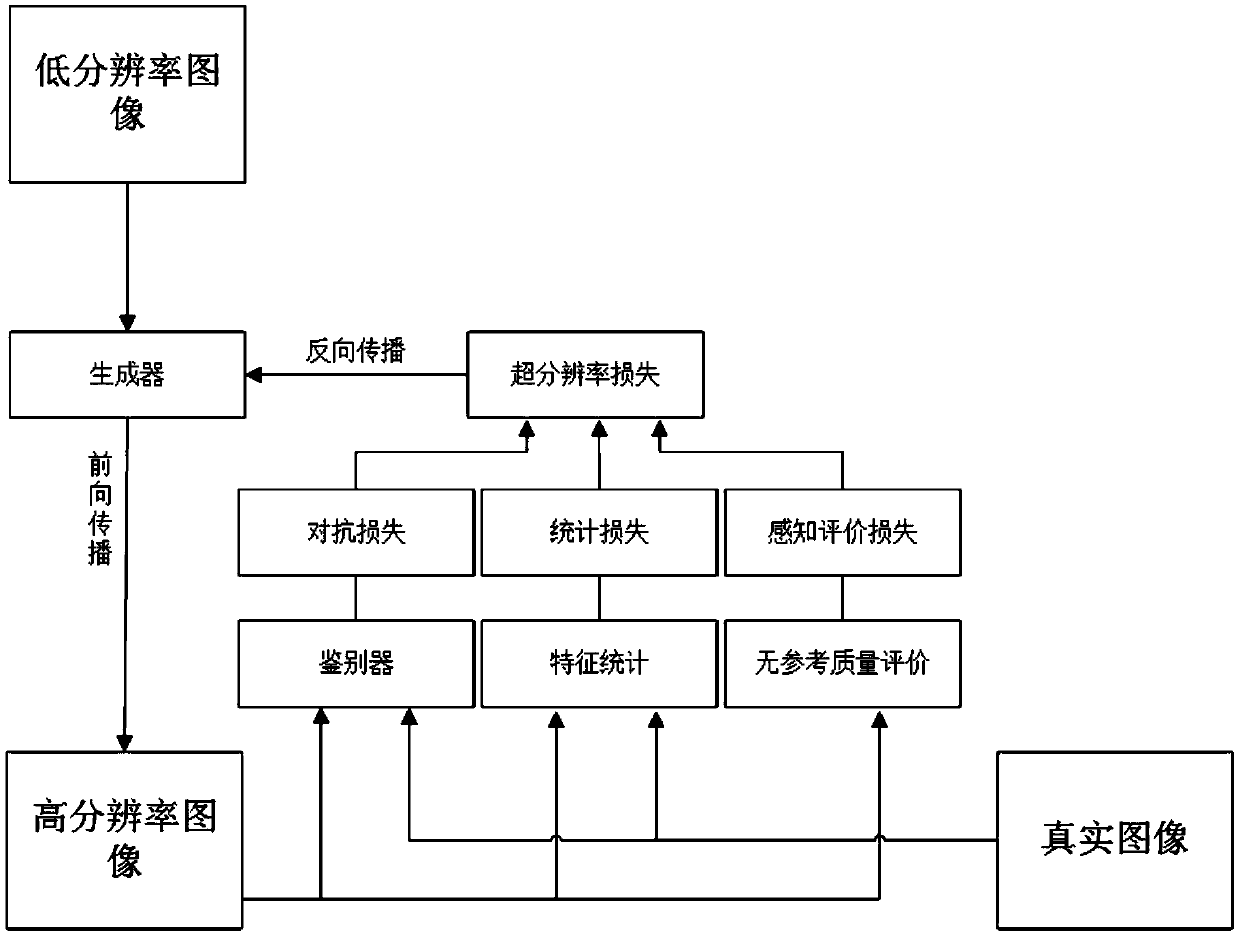

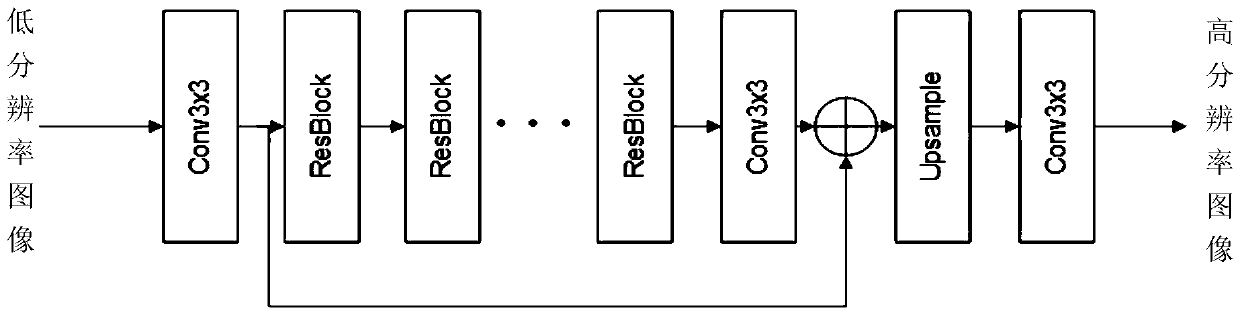

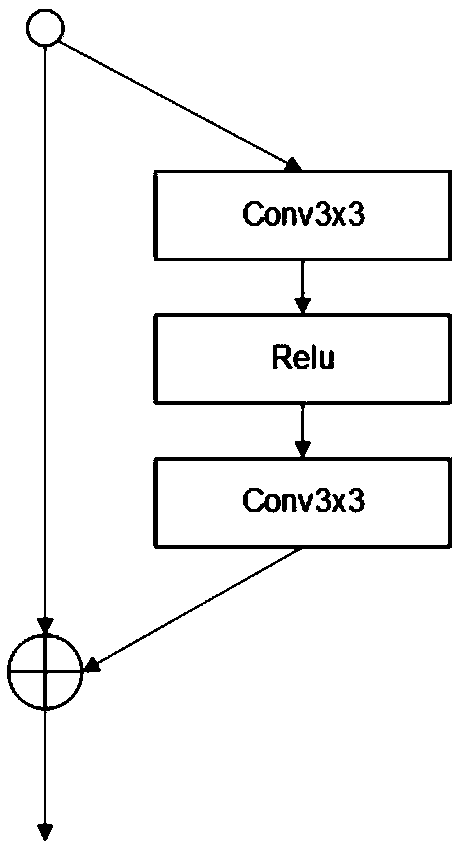

An image super-resolution reconstruction method based on non-reference quality evaluation and feature statistics

Active; CN109559276A; Rich real texture details; Improve human perception; Image enhancement; Image analysis; Pattern recognition; Reconstruction method

According to the invention, a super-resolution reconstructed picture is more consistent with the visual perception of the human eye, while the feature structure of the picture is maintained. The invention provides an image super-resolution reconstruction method based on no-reference quality evaluation and feature statistics. An adversarial learning network model is constructed to generate more high-frequency detail edges, so that the image appears to have more texture; the tone, brightness, and sharpness of the image are adjusted through no-reference quality evaluation, so that the generated image is closer to human visual perception; and the internal feature structure of the image is maintained through feature statistics. Compared with traditional super-resolution reconstruction methods, the high-resolution image generated by this method has richer realistic texture detail, its perceptual quality for the human eye is improved, and the content of the image is not damaged.

Owner:WUHAN UNIV

Document authentication combining digital signature verification and visual comparison

Inactive; US8037310B2; Easy to detect; Efficient combination; User identity/authority verification; Digital data protection; Visual comparison; Digital signature

A document authentication system and method combine digital and non-electronic (or visual) authentication methodologies in an integrated, unified manner. As well as providing indicia of digital authentication, the invention generates a physical artifact that can be validated by unaided human visual perception. The present invention thus provides an opportunity to improve the level of trust in authentication of documents, while preserving the advantages of both traditional and digital authentication mechanisms.

Owner:RICOH KK

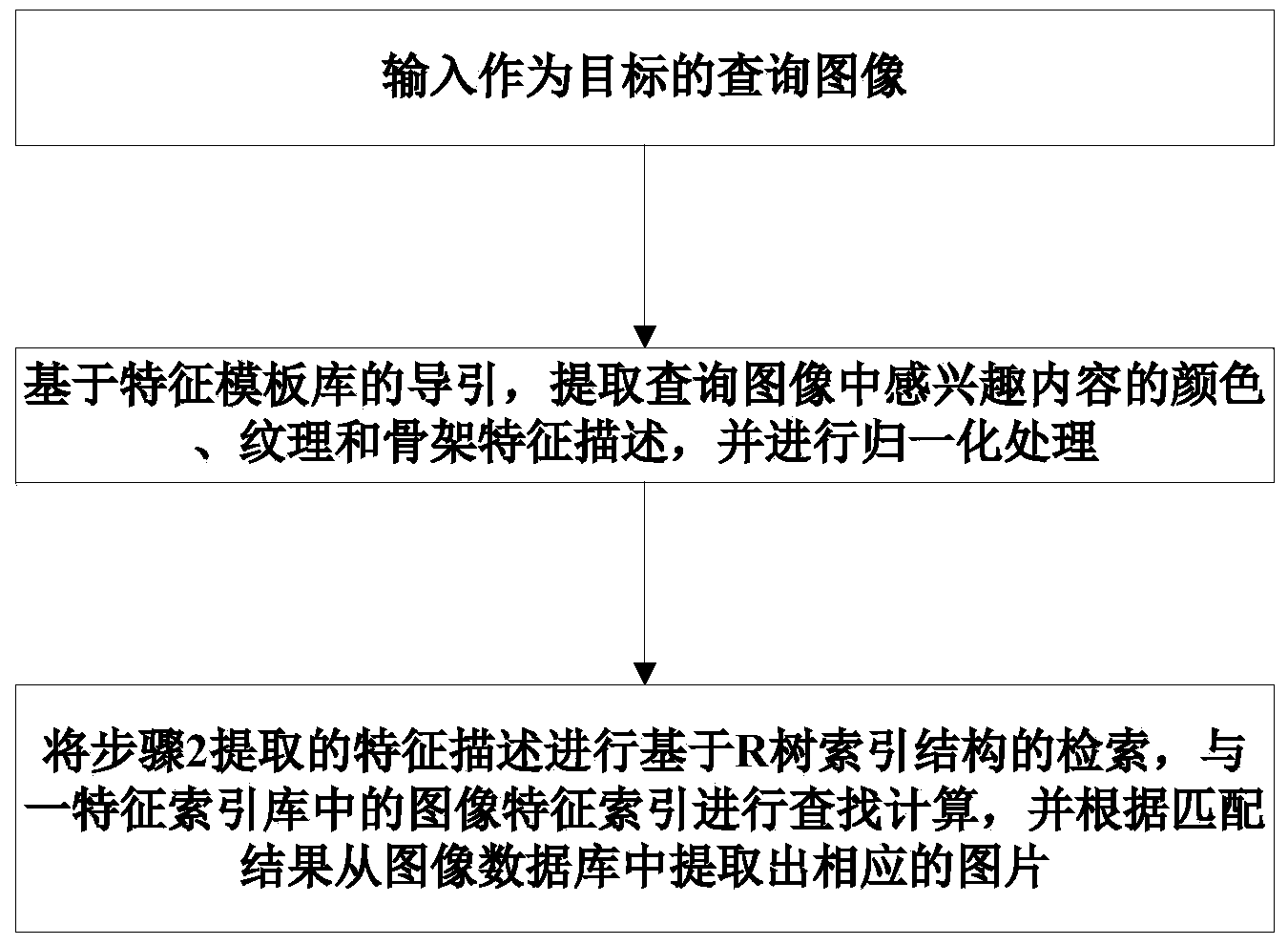

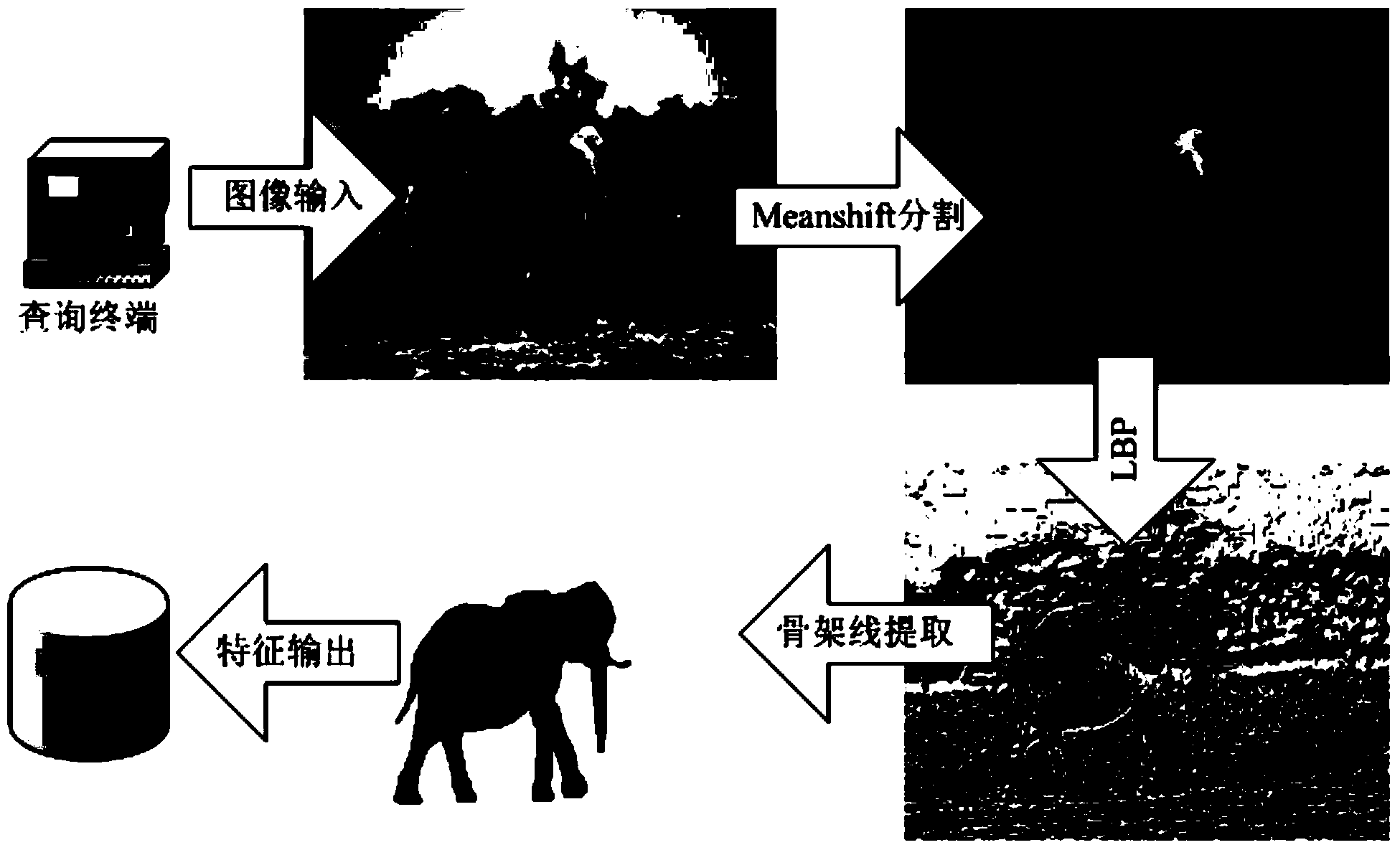

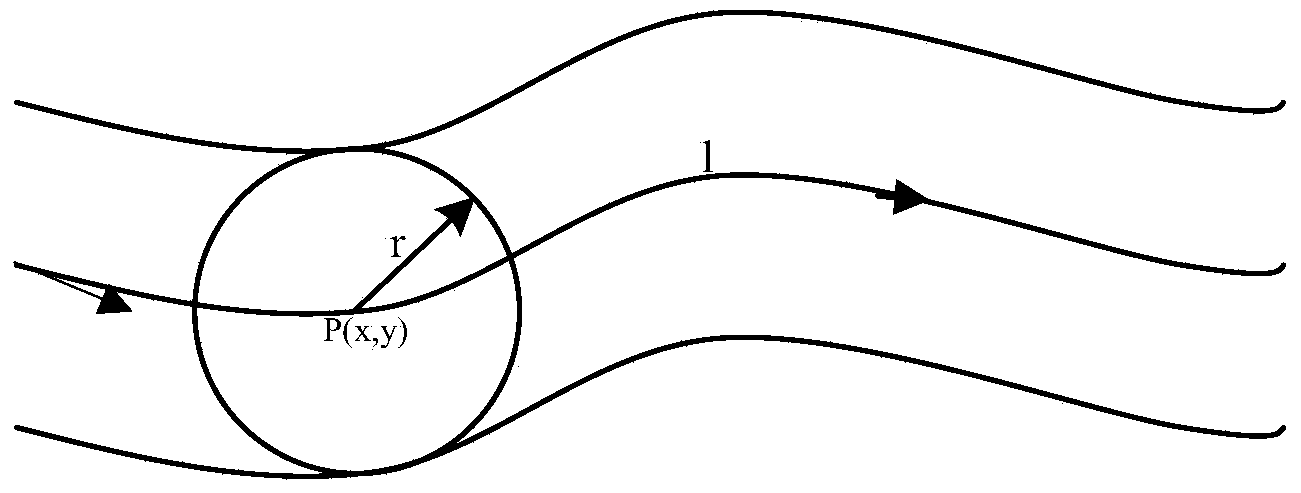

Colorful animal image retrieval method based on content and colorful animal image retrieval system based on content

Active; CN103870569A; Overcoming the Impact of Matched Retrieval; Reduce matching calculations; Image analysis; Character and pattern recognition; Image retrieval; R-tree

The invention provides a content-based color animal image retrieval method and system. The method comprises the following steps: step 1, inputting a query image as the retrieval object; step 2, extracting color, texture, and framework feature descriptions of the content of interest in the query image under the guidance of a feature template library, and normalizing them, wherein the feature template library stores feature descriptions of the colors, textures, and frameworks of different animals, formed on the basis of sample statistics; step 3, performing retrieval on the feature descriptions extracted in step 2 using an R-tree index structure, searching the image feature indexes in a feature index library, and extracting the corresponding image from an image database according to the matching result. The method and system can overcome, to a certain extent, the influence of illumination changes on image retrieval, describe the angle and posture semantics of the queried animal through framework feature analysis during retrieval, and generate retrieval results consistent with human visual perception.

Owner:NANJING NORTH OPTICAL ELECTRONICS

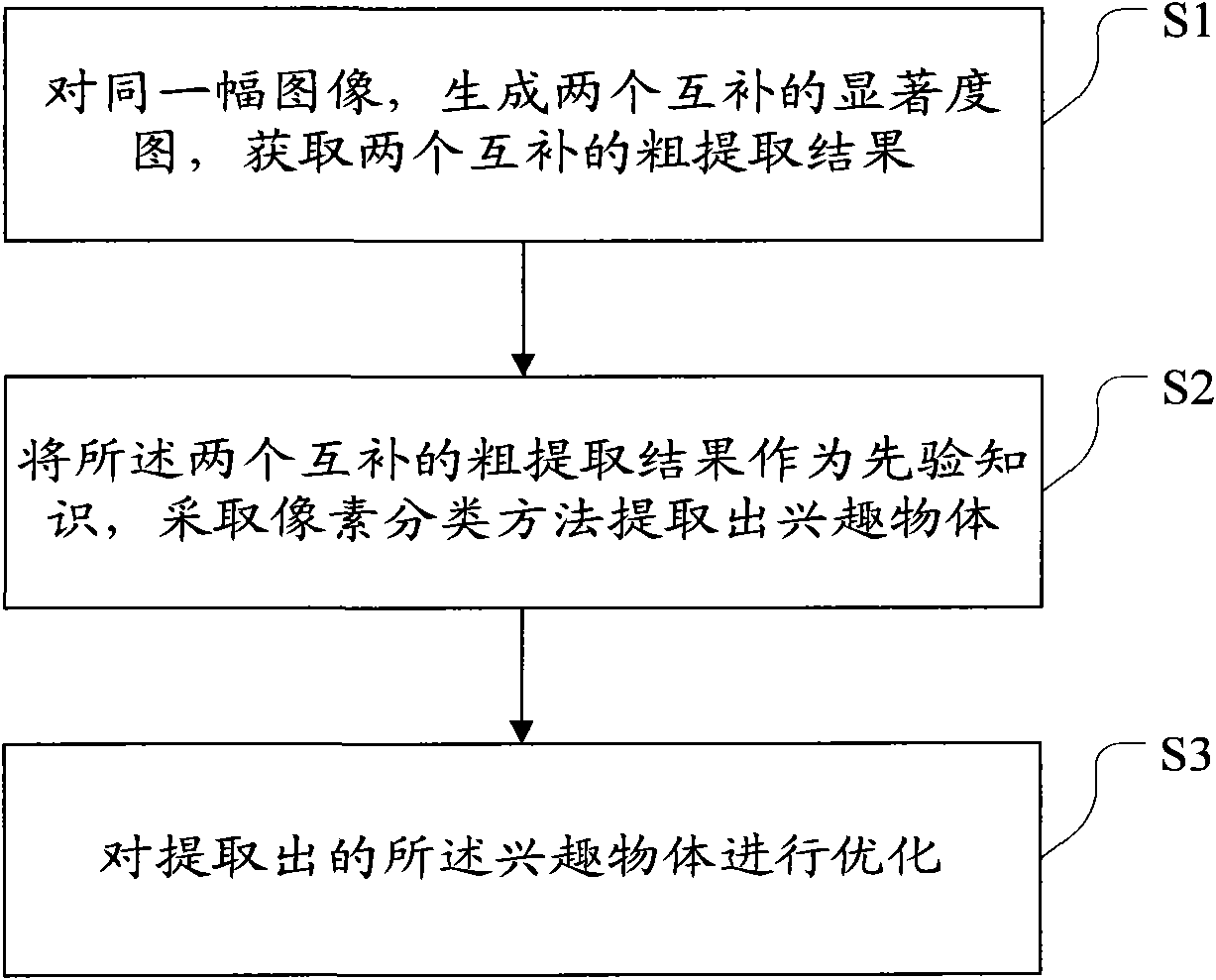

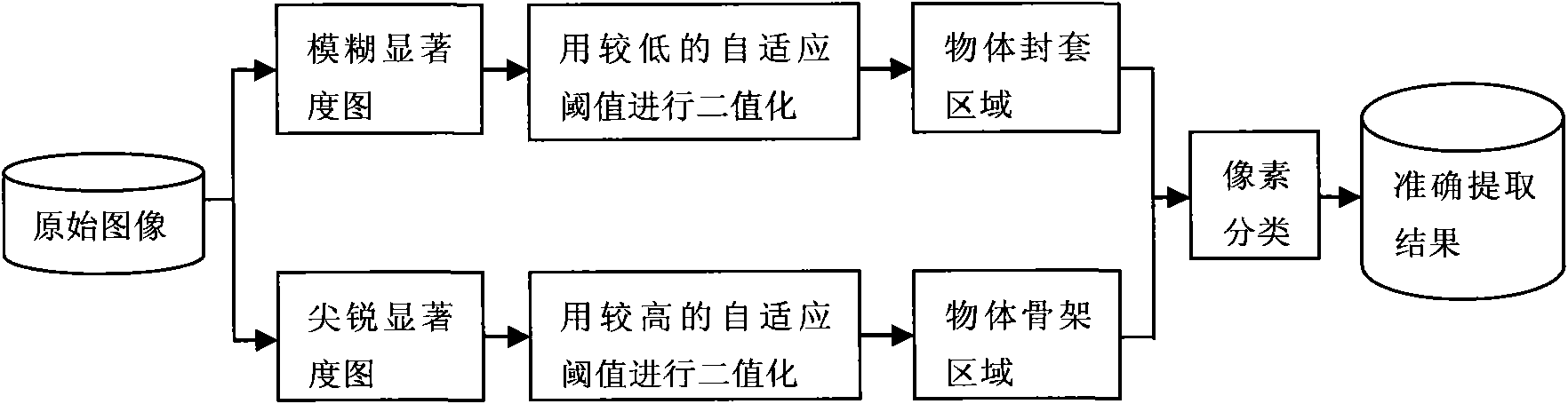

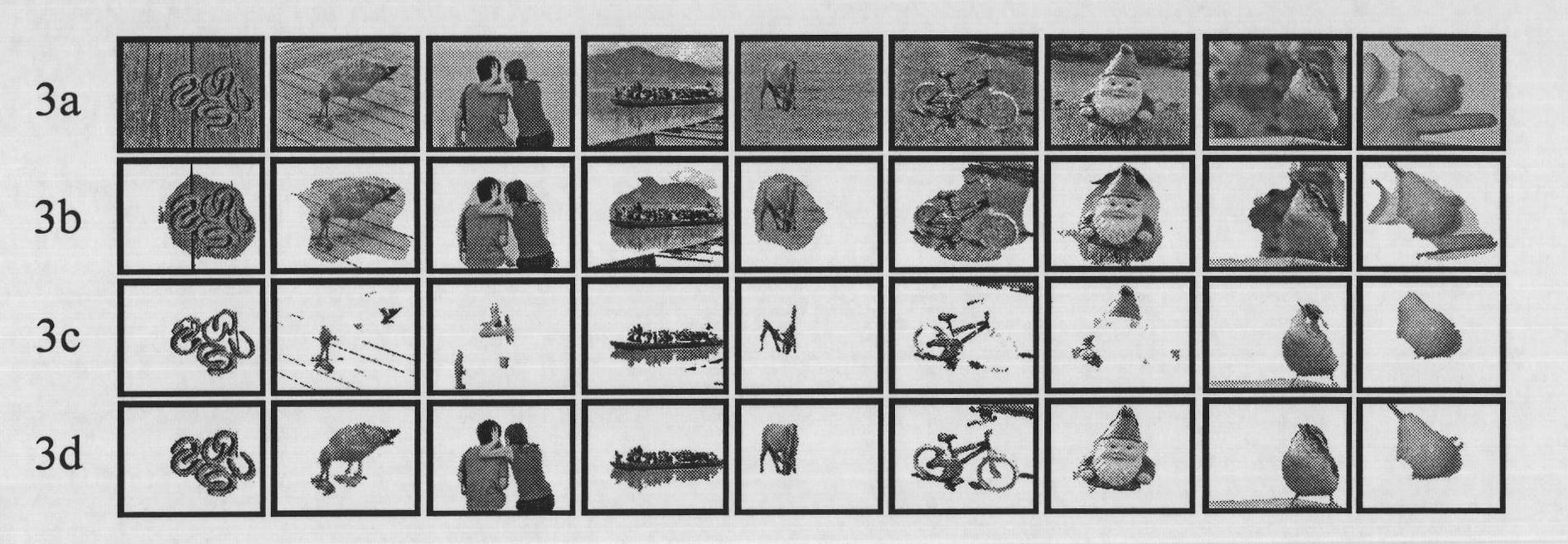

Image interested object automatic retrieving method and system based on complementary significant degree image

Inactive; CN101847264A; High simulation; Robust; Image enhancement; Image analysis; Computer vision; Human visual perception

The invention discloses a method and system for automatically retrieving objects of interest in an image based on complementary saliency maps. The method comprises the steps of: generating two complementary saliency maps for the same image to obtain two complementary coarse retrieval results; taking the complementary coarse results as prior knowledge and retrieving the objects of interest using a pixel classification method; and optimizing the objects of interest. Given any image, the invention retrieves the object of interest automatically and accurately while simulating human visual perception, and efficiently addresses the problem of incomplete results in saliency-based methods.

Owner:PEKING UNIV

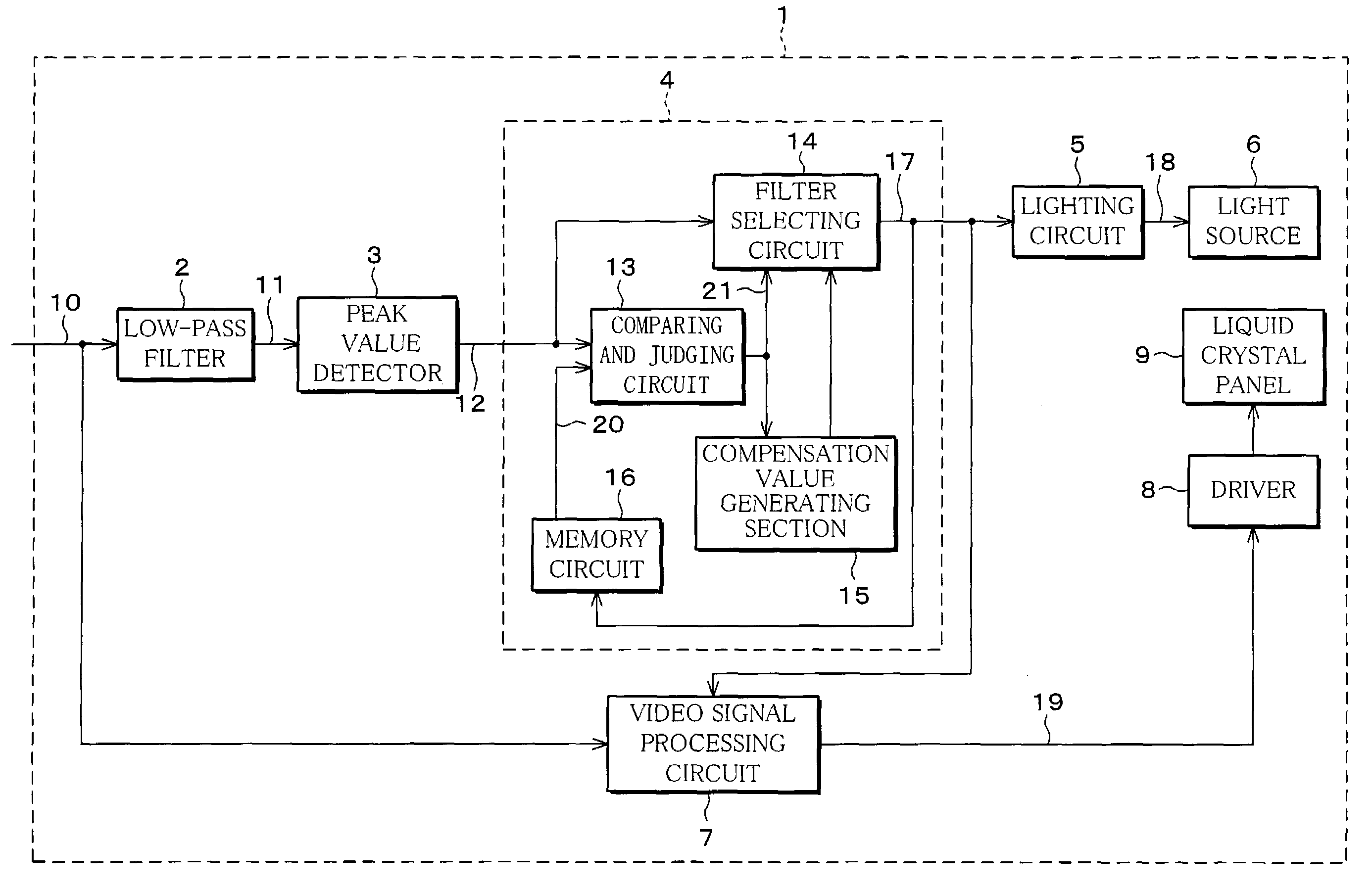

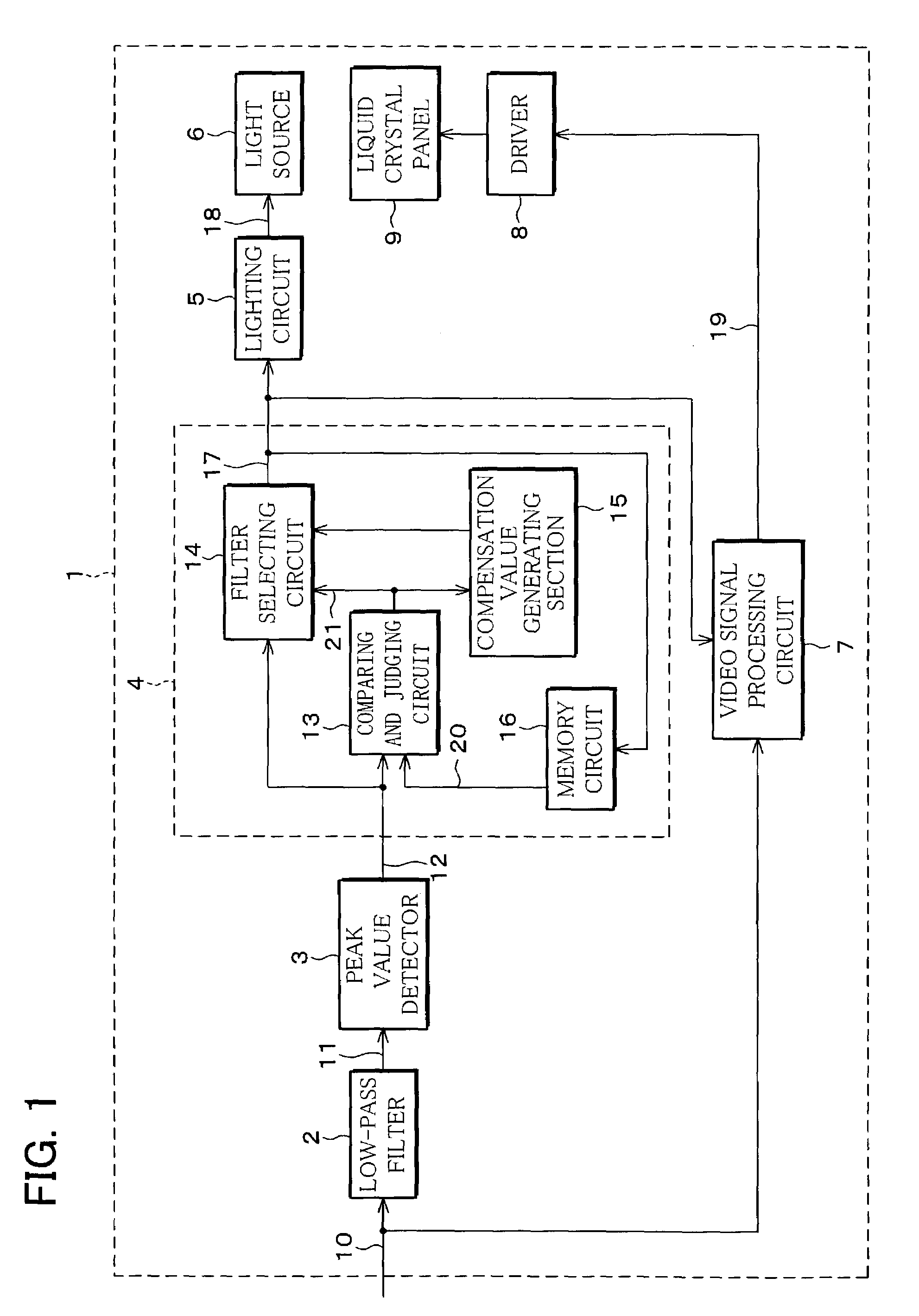

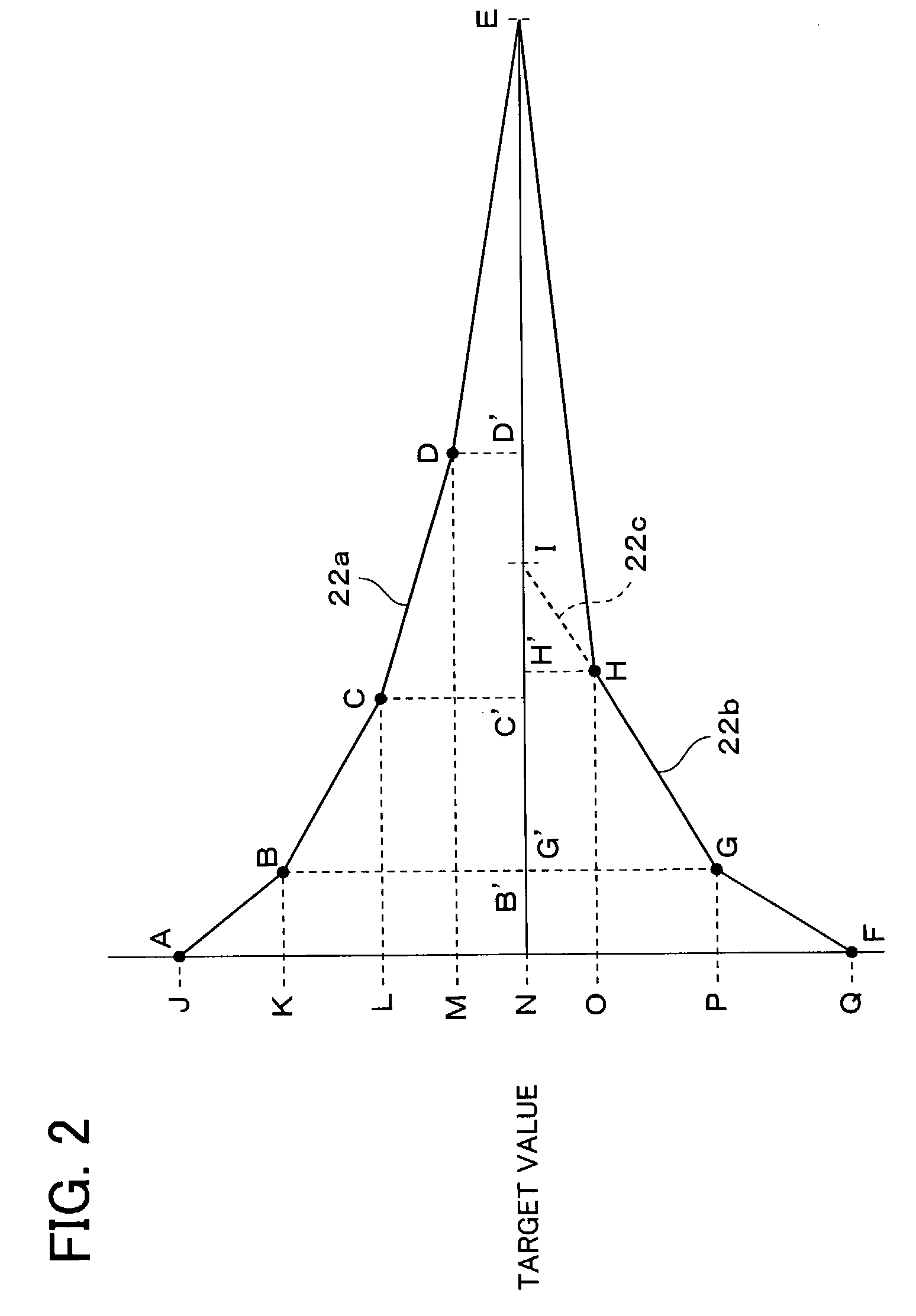

Image display device and image display method

Inactive; US7330172B2; Avoid flickering; Display can be suppressed; Static indicating devices; Illuminance; Computer graphics (images)

An image display device controls the video signal and the lighting circuit according to the input video signal, so as to improve contrast, so that flicker or “bright black display”, which may be caused when the display characteristics do not match the human visual characteristics, is eliminated. The image display device includes a filter circuitry, in which a filter selecting circuit changes the filter characteristic according to the difference of a video signal and memory data stored in a memory circuit. The filter circuitry selects different filter characteristics for the display change from dark to bright and for the display change from bright to dark. This prevents display flicker, which may be caused due to saturation of human visual perception with respect to changes in gradation and illuminance level.

Owner:SHARP KK

Method and system for detecting of errors on optical storage media

A method of grading a level of damage to digital data scans the digital data for errors. Based on the detected errors and on predetermined data based on at least one of human audio perception and human visual perception, a grade of plain quality is determined. The grade of plain quality is then provided as an output from the system for interpretation by a user or a subsequent system.

Owner:CLARESTOW CORP

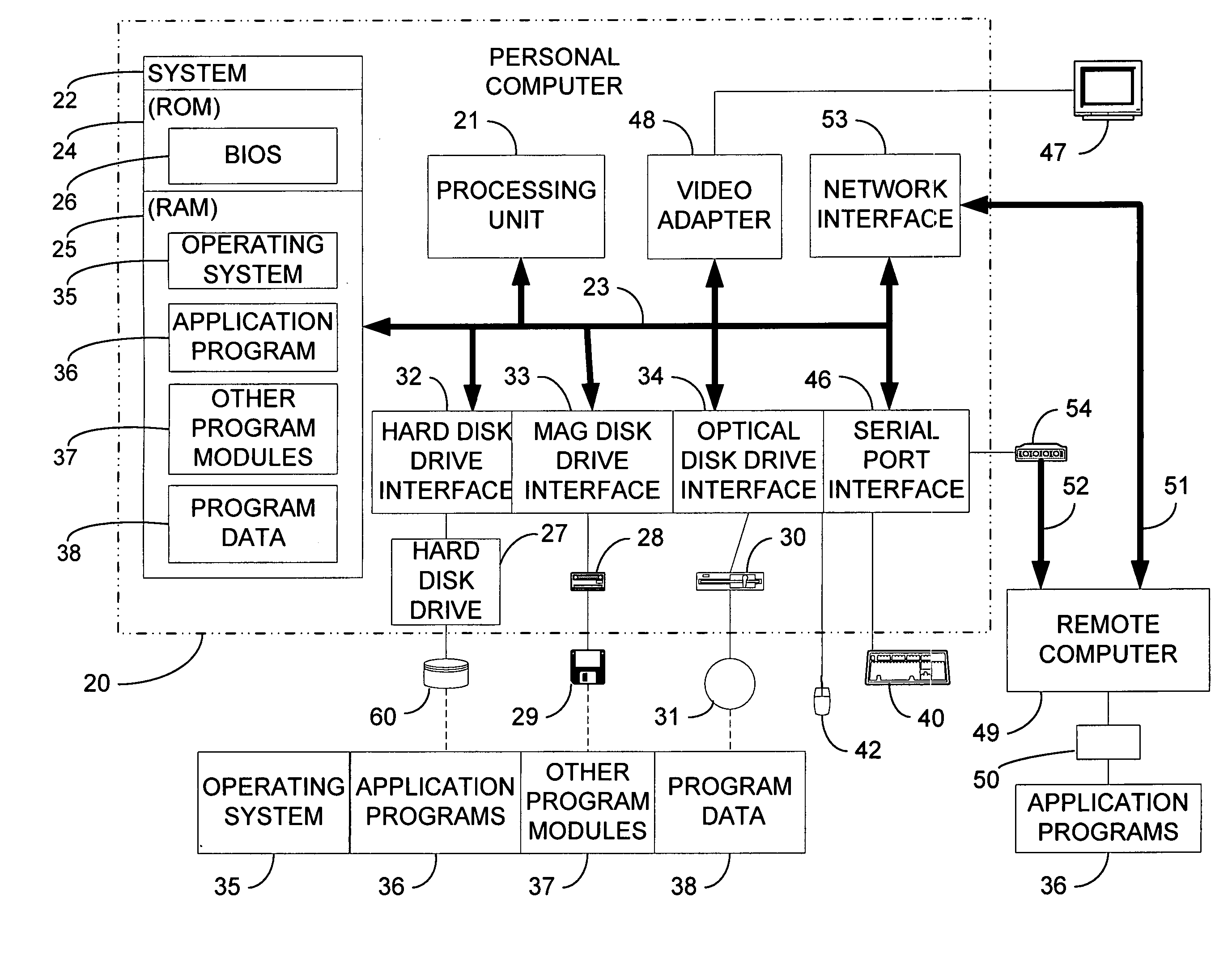

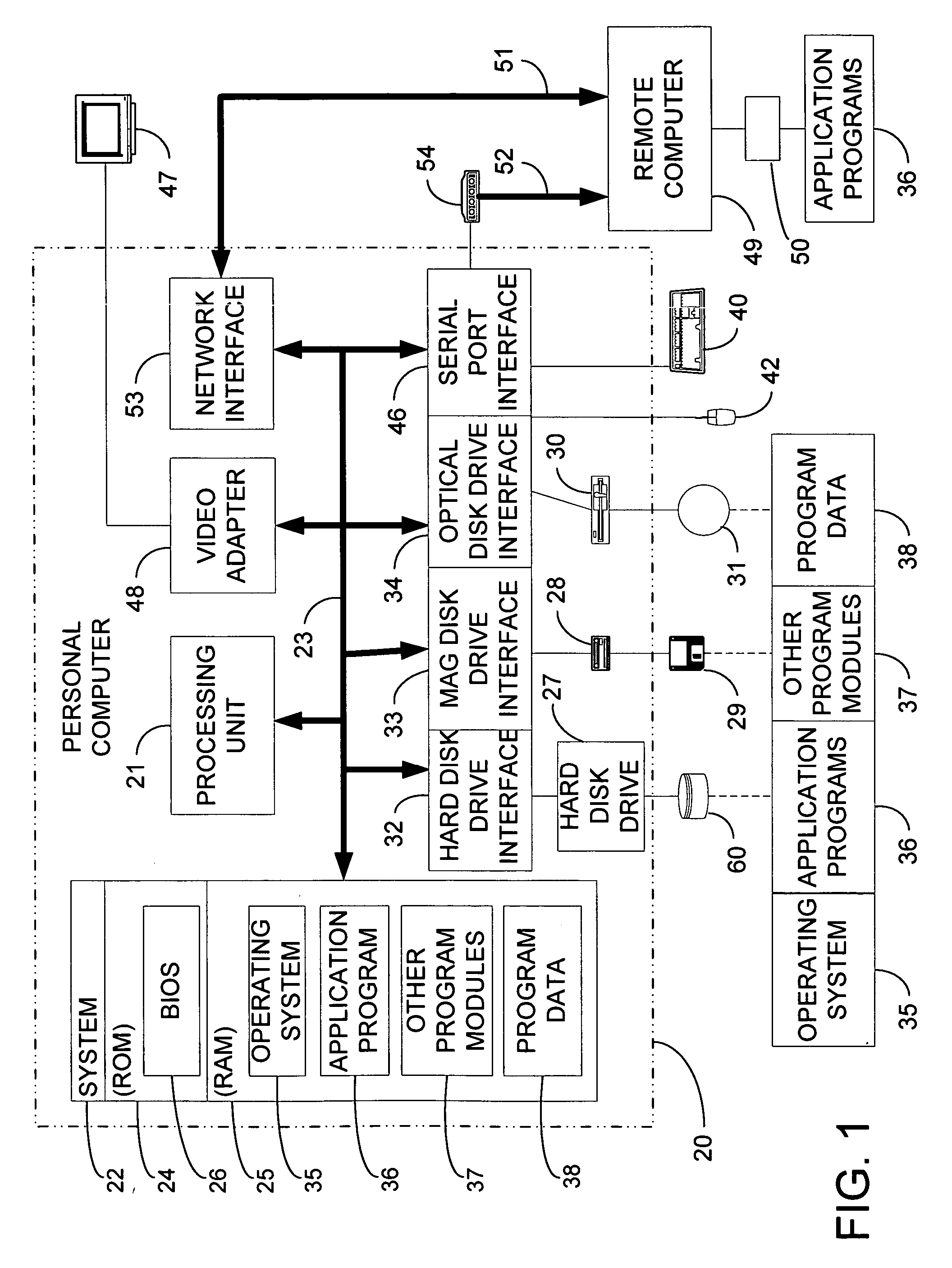

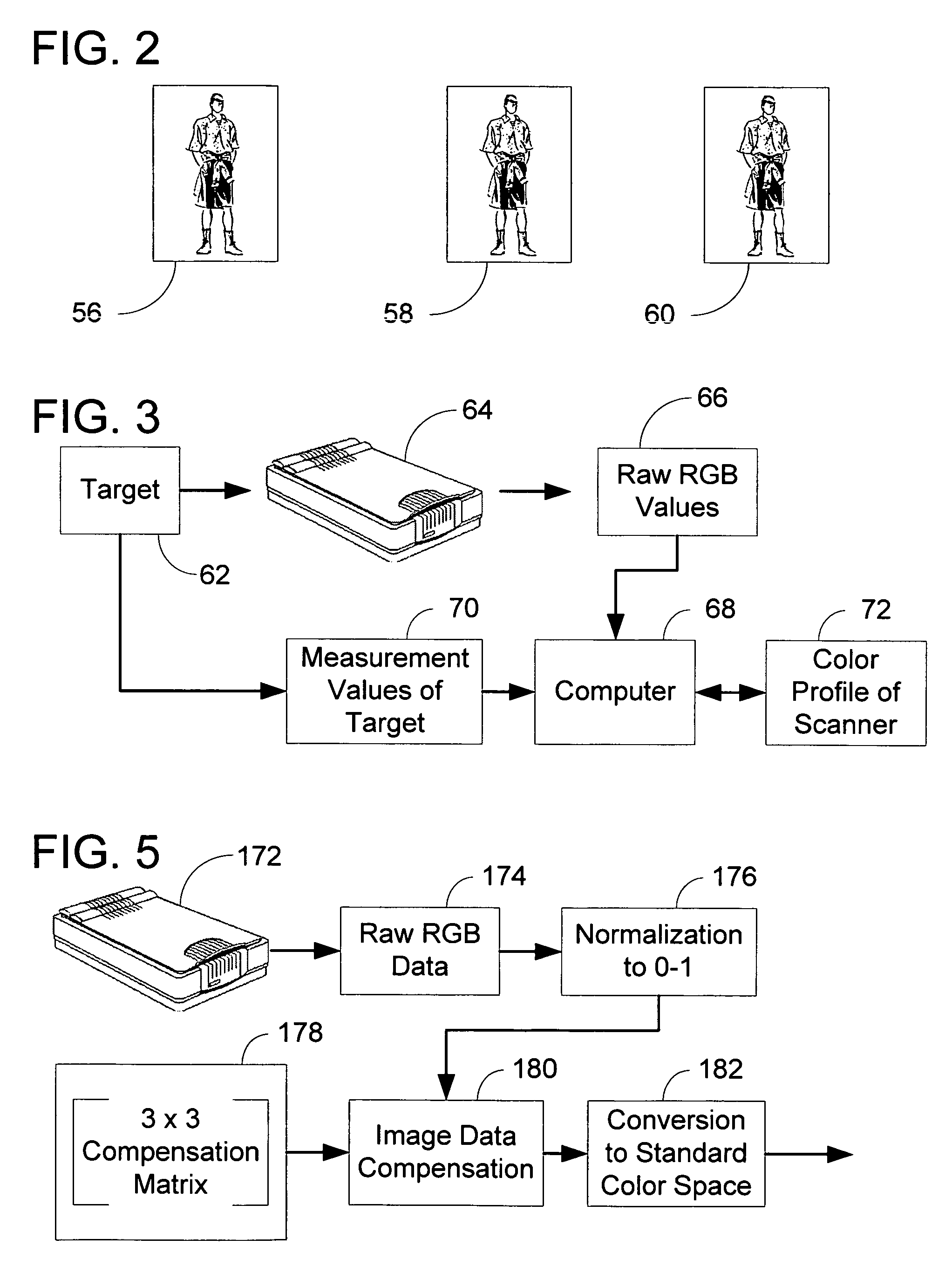

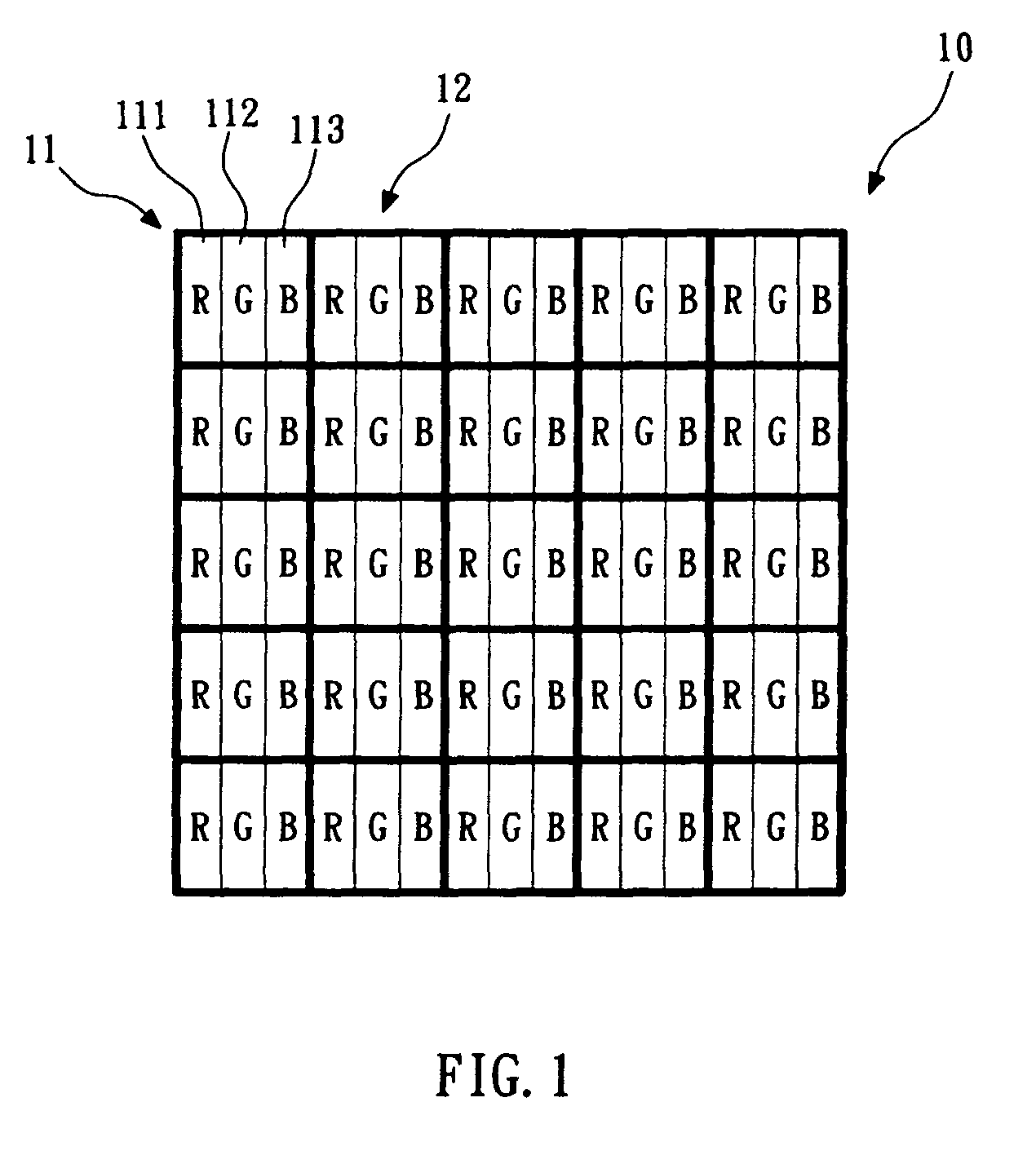

Method of achieving high color fidelity in a digital image capture device and a capture device incorporating same

Inactive; US7043385B2; High color fidelity; Reduce errors; Image analysis; Structural/machines measurement; Measurement device; Imaging data

A method for calibration of digital image capture devices is presented. This simplified method provides a calibration based on the human visual perception of the colors input into the device using simple test targets, measurement devices, and software with a minimum of labor and expertise. This analysis may be performed using the data analysis tools of a conventional electronic spreadsheet. The method normalizes the test target data to both black and white, and converts the normalized data into the color space of the capture device through white point adaptation. The raw captured image data is also normalized to both black and white, and is regressed with the converted, normalized target data to determine the expected measurement values. These values are used to compensate the device output to achieve a high level of color fidelity. To ensure that the level of fidelity is acceptable, the CIE color difference equations are used.

Owner:MICROSOFT TECH LICENSING LLC
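Two steps from the abstract above are simple enough to sketch: normalizing a reading to the black and white references, and checking a color difference. The abstract does not say which CIE color difference equation is used, so CIE76 (Euclidean distance in CIELAB) is assumed here as the simplest one. White point adaptation and the regression step are not shown, and the function names and sample values are hypothetical.

```python
import math


def normalize(value, black, white):
    """Map a raw reading so that the black reference is 0.0 and white is 1.0."""
    return (value - black) / (white - black)


def delta_e76(lab1, lab2):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(lab1, lab2)))


# Made-up 8-bit reading with black at 16 and white at 240.
print(normalize(128, black=16, white=240))
# Made-up target and measured colors in CIELAB.
print(delta_e76((50.0, 0.0, 0.0), (53.0, 4.0, 0.0)))
```

A calibration loop could compare `delta_e76` against an acceptance limit chosen by the user; no such limit is given in the abstract.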

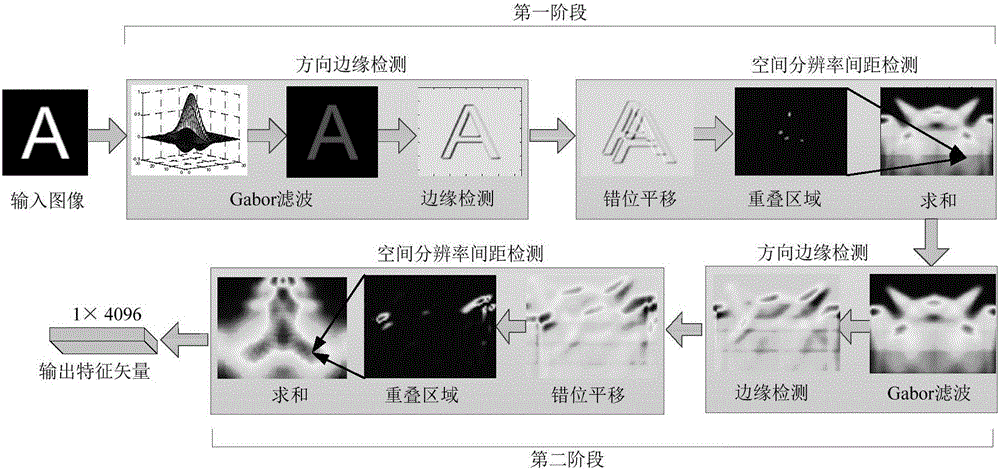

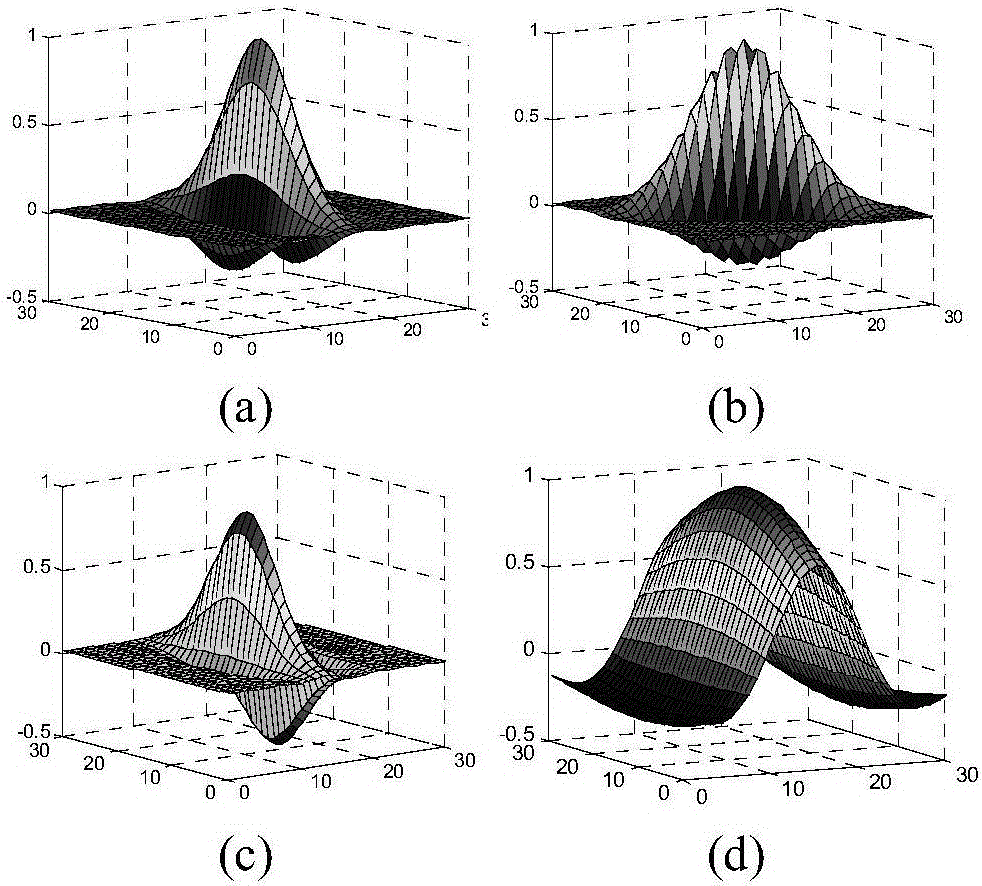

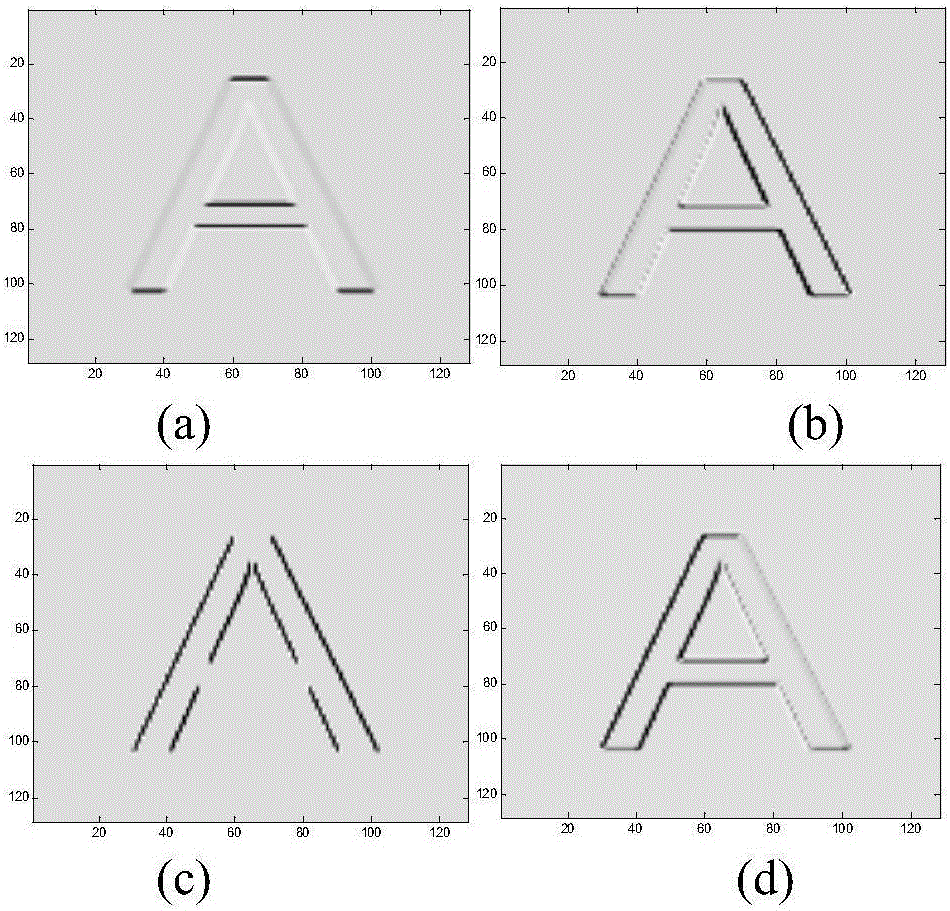

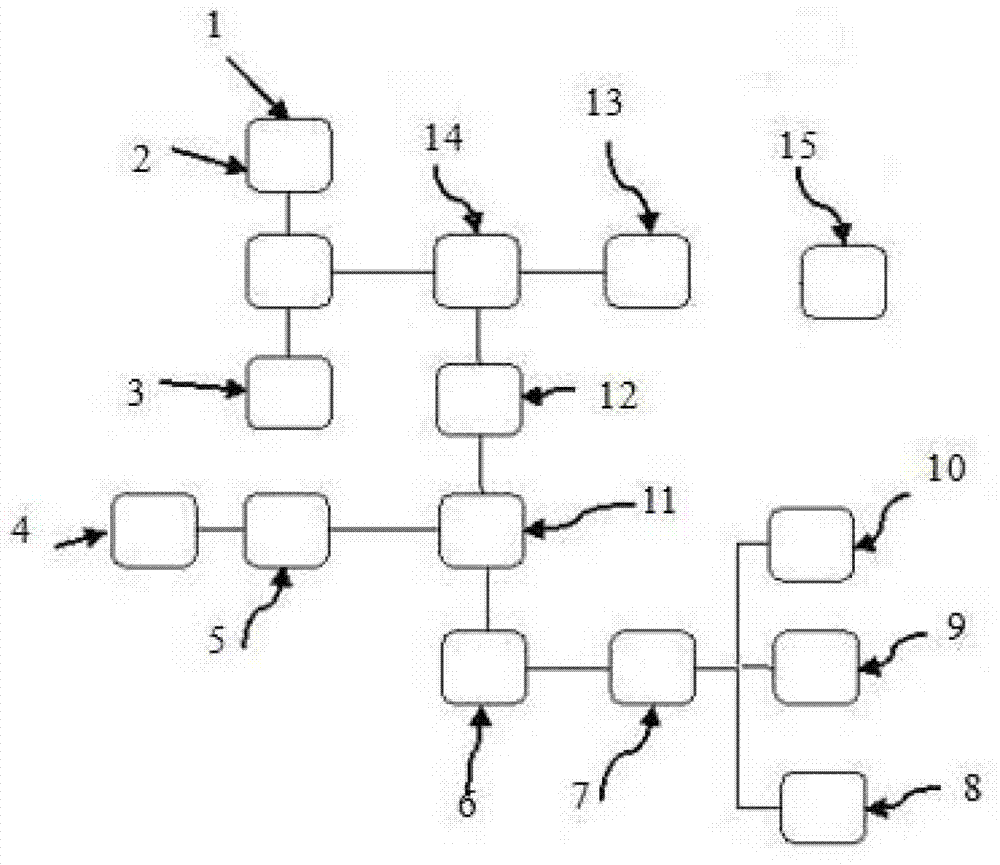

Bionic vision transformation-based image RSTN (rotation, scaling, translation and noise) invariant attributive feature extraction and recognition method

Active; CN105809173A; The RSTN invariant property has; Invariant properties have; Character and pattern recognition; Feature extraction; Image resolution

The invention discloses a bionic vision transformation-based image RSTN (rotation, scaling, translation and noise) invariant attributive feature extraction and recognition method. The method includes the following steps: 1) the original image is converted to grayscale and resized using bilinear interpolation; 2) the directional edges of the target image are detected with a filter-filter stage based on a Gabor filter and a bipolar filter F, so that an edge image E is obtained; 3) the spatial resolution pitch detection value of the edge image E is calculated, so that a first-stage output image S1 is obtained; and 4) the directional edge detection of step 2) and the spatial resolution pitch detection of step 3) are applied again to the first-stage output image S1, so that a second-stage feature output image S2 is obtained and the invariant attributive features can be obtained. By simulating the human visual perception mechanism and using the bionic vision transformation-based RSTN invariant attributive features, the method improves the accuracy of image recognition and enhances robustness to noise.

Owner:CENT SOUTH UNIV

Copper strip surface defect identifying device for simulating human visual perception mechanism and method thereof

Inactive; CN103091330A; Easy to detect; Improve classification performance; Optically investigating flaws/contamination; Copper; Computer science

The invention discloses a copper strip surface defect identification device that simulates the human visual perception mechanism, and a method thereof. The device and method can identify various defects, such as hollows, scratches, greasy dirt, deckle edges, and gaps, that may arise during the reproduction, storage, and transportation of a copper strip. They improve defect detection and classification on the copper strip surface, increase identification accuracy and speed, and are highly efficient. They meet the current requirements of enterprises for detecting and storing copper strip surface defect information, are also suitable for surface defect detection on other materials such as steel plates, and have wide application prospects and market value.

Owner:HOHAI UNIV CHANGZHOU

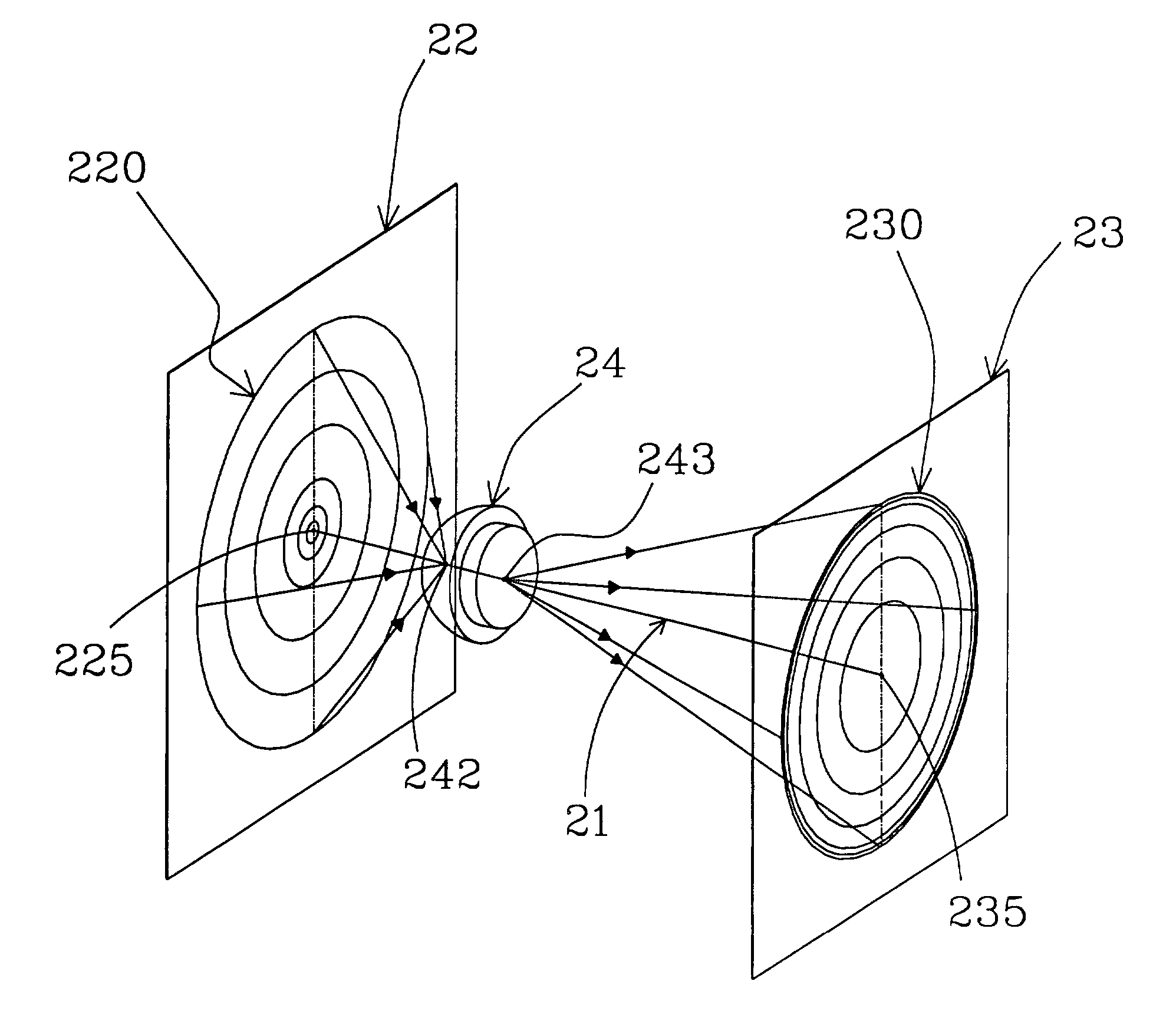

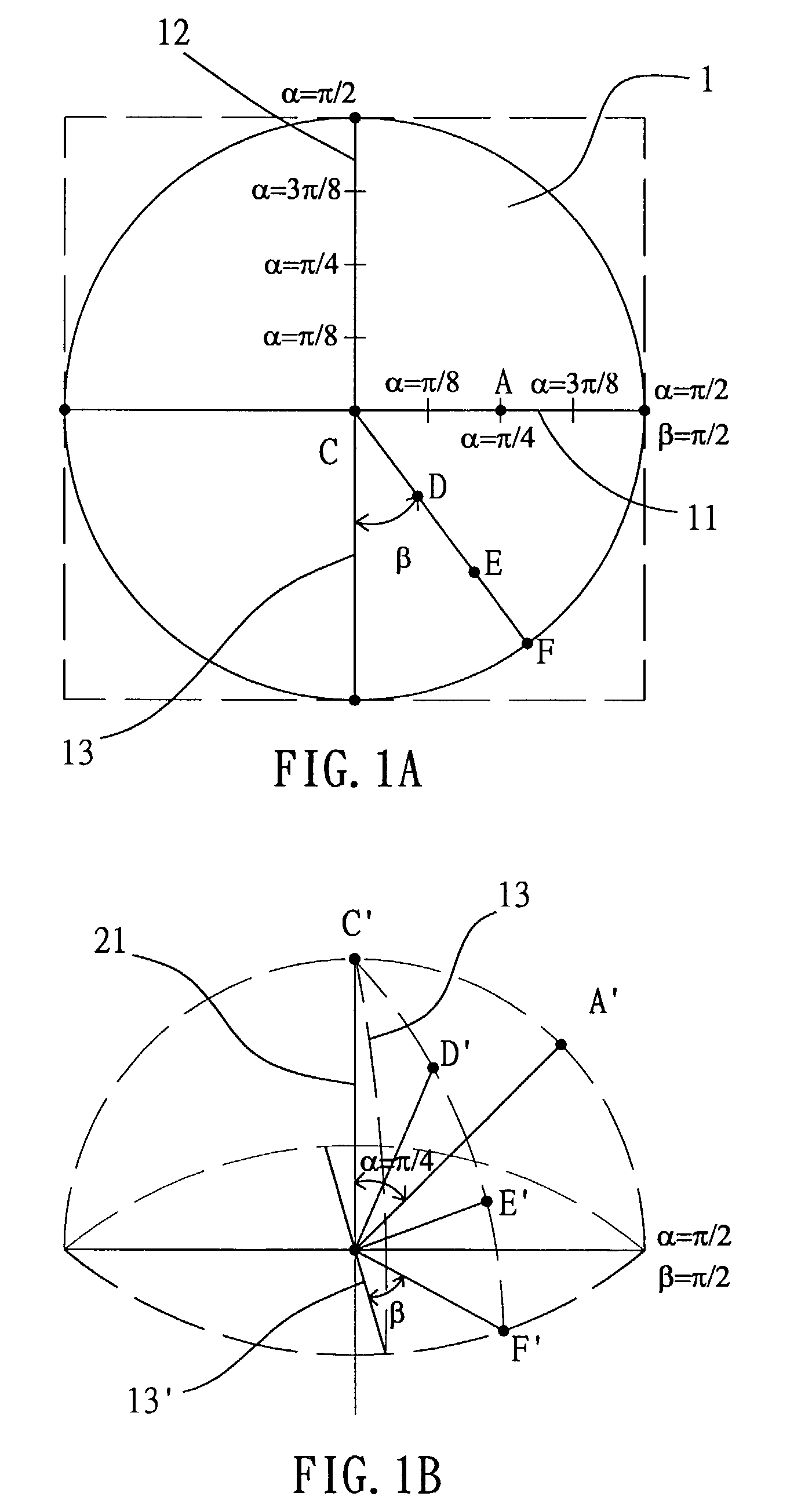

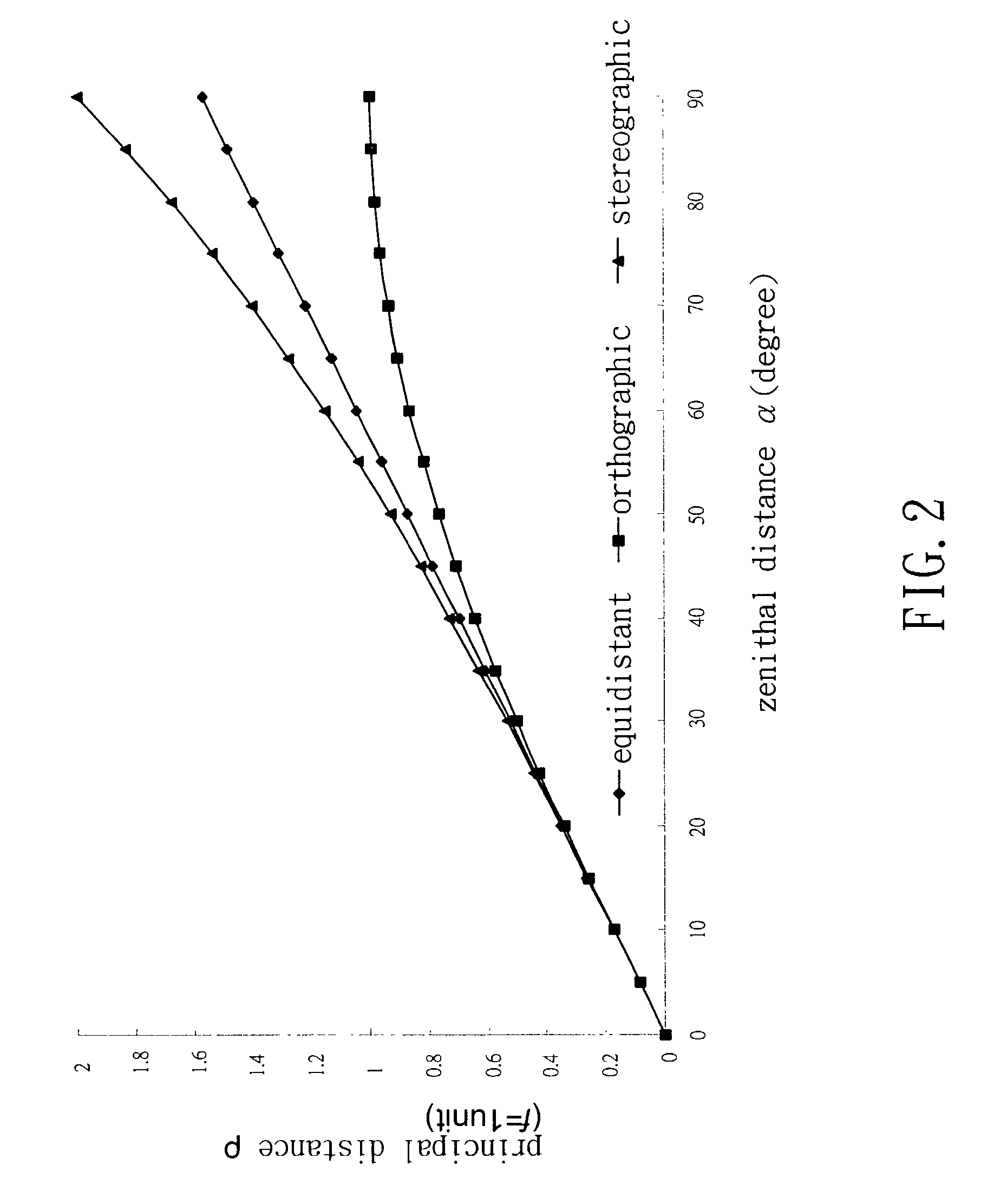

Method for presenting fisheye-camera images

Inactive; US7042508B2; Simple method; Low cost method; Television system details; Geometric image transformation; Image compression; Principal point

The present invention is a method for presenting fisheye-camera images. A calibration target with a concentric-and-symmetric pattern (PCT) is utilized to assist in parameterizing a fisheye camera (FIS) in order to ascertain the optical parameters comprising the principal point, the focal length constant and the projection function of the FIS. Hence, the position of an imaged point relative to the principal point on the image plane directly reflects the corresponding zenithal distance α and azimuthal distance β of the sight ray in space, so that the imaged point can be normalized onto a small sphere. Further, following the map projections in cartography that transform the global geometry into flat maps, the area of interest in a field of view can be transformed by a suitable projection method. The image transforming method disclosed in the invention is simple, low-cost, suitable for various FISs with different projection mechanisms, and capable of transforming the fisheye-camera images for particular functions, such as approximating normal human visual perception, video data encryption, and image compression / transformation with high fidelity.

Owner:APPRO TECH

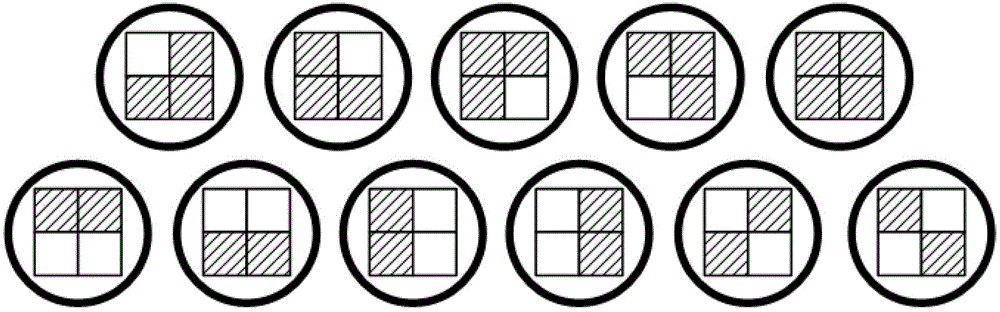

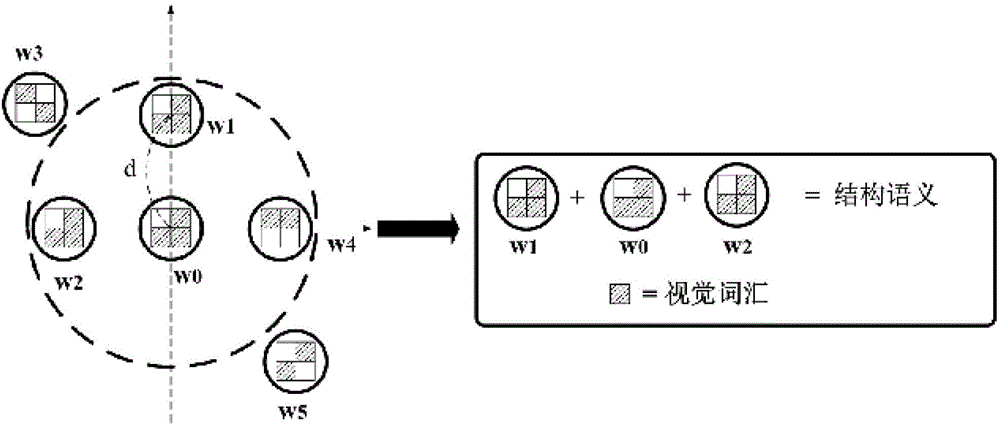

Image search method based on structure semantic histogram

Inactive; CN103336830A; Rich relevant information; Special data processing applications; Distillation; Vision based

The invention provides an image search method based on a structure semantic histogram. The method comprises the steps that the advantages based on a visual perception mechanism and a vision vocabulary model are considered for image searching, a novel image feature expressing method of the structure semantic histogram is provided, the structure semantic histogram can be regarded as the distillation of a mainstream vision vocabulary model, is used for analyzing natural images, and has more abundant information than a mainstream vision vocabulary method. The image search method integrates the advantages of vision vocabularies, semantic features and the histogram, simulates a human visual perception mechanism in a certain degree, and can express structure space information, vision significance information and uniform color information of semantic information and the vision vocabularies.

Owner:刘广海

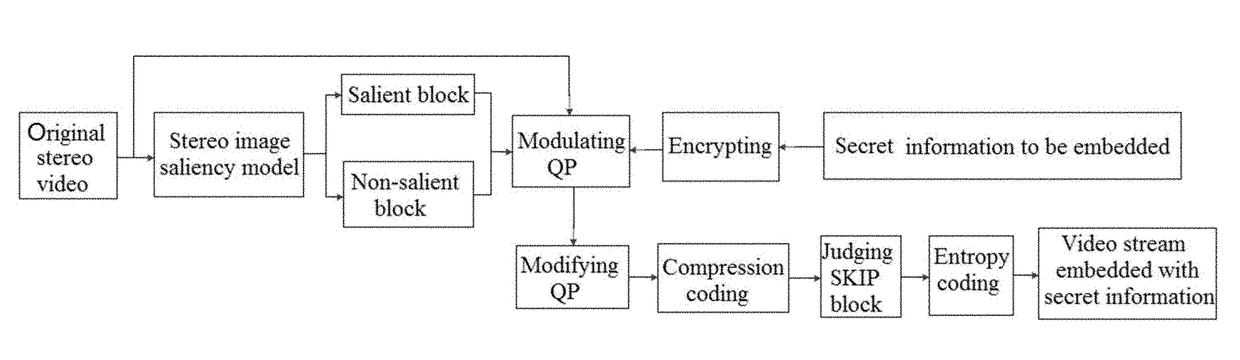

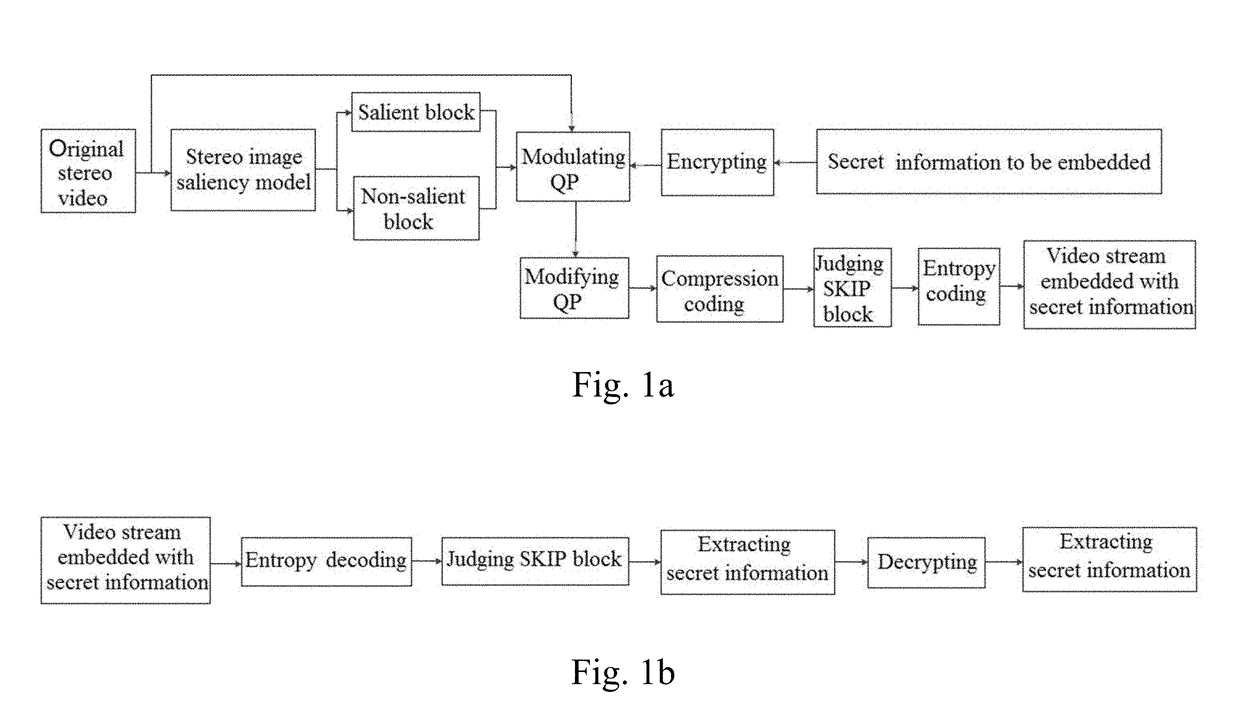

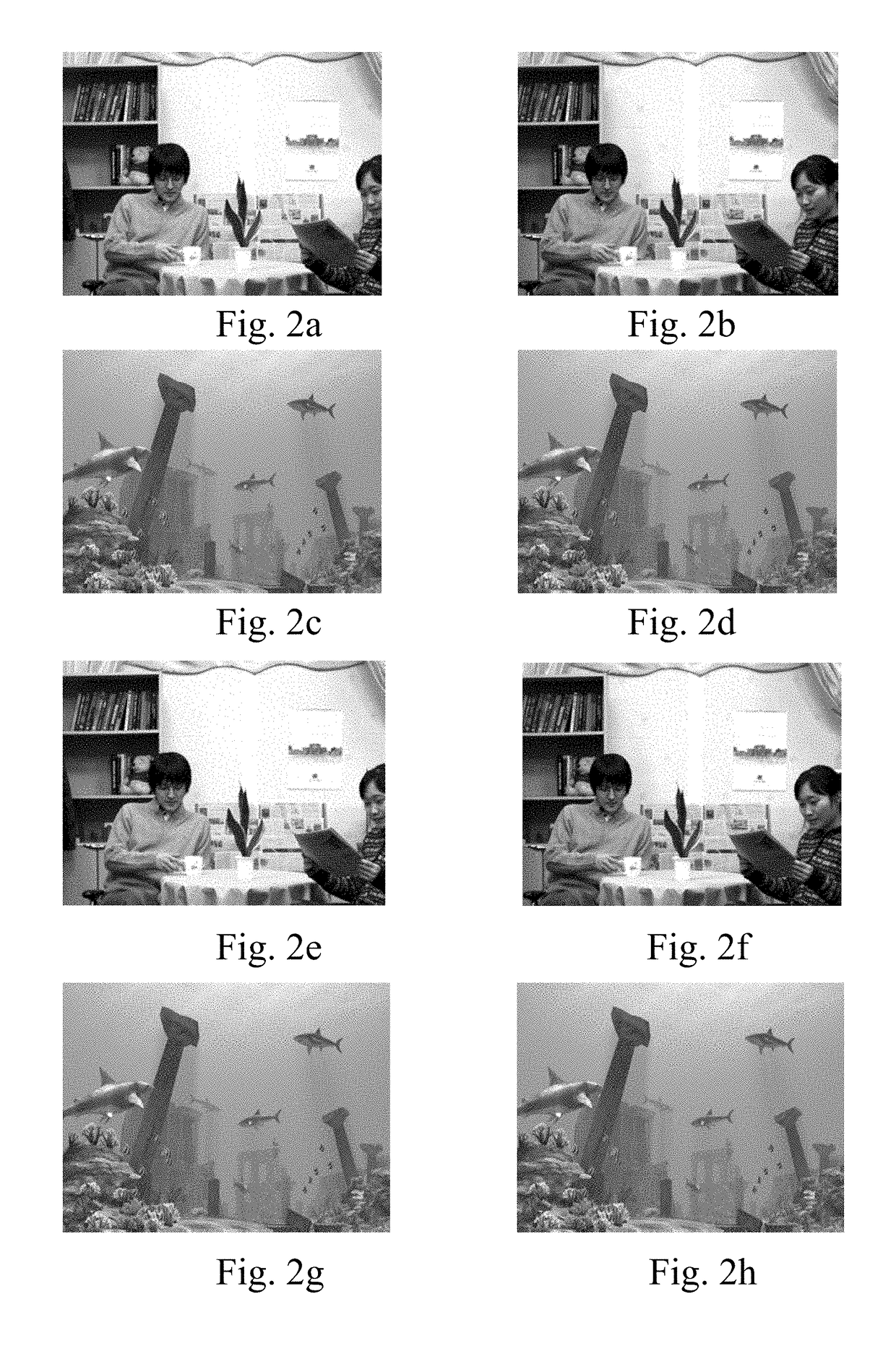

3D-HEVC inter-frame information hiding method based on visual perception

Active; US20170374347A1; Improve algorithm performance; Reduce complexity; Digital video signal modification; Stereoscopic systems; Pattern recognition; Side information

A 3D-HEVC inter-frame information hiding method based on visual perception includes steps of information embedding and information extraction. In the step of information embedding, human visual perception characteristics are considered: stereo salient images are obtained by a stereo image salient model, and the stereo salient images are divided into salient blocks and non-salient blocks with an Otsu threshold. The coding quantization parameters are modified according to different modulation rules for different regions. Then, based on the modified quantization parameters, the coding-tree-units are coded to complete the information embedding. In the step of information extraction, the original video is not needed, no side information needs to be transmitted, and the secret information can be blindly extracted. The present invention takes human visual perception characteristics into account and selects P frames and B frames as embedded frames, effectively reducing the loss of subjective quality in the stereo video.

Owner:NINGBO UNIV
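The block classification step in the abstract above relies on an Otsu threshold. The following is a minimal pure-Python sketch of Otsu's method applied to per-block saliency values; it assumes each block is summarized by one integer saliency value in 0..255, which the abstract does not specify. The function names and sample values are hypothetical, and the quantization parameter modulation rules are not reproduced.

```python
def otsu_threshold(values, levels=256):
    """Return the threshold t that maximizes between-class variance.

    Values <= t form one class and values > t form the other.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0      # number of values in the lower class
    sum0 = 0    # sum of values in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def split_blocks(block_saliency):
    """Split block indices into salient and non-salient using the Otsu threshold."""
    t = otsu_threshold(block_saliency)
    salient = [i for i, s in enumerate(block_saliency) if s > t]
    non_salient = [i for i, s in enumerate(block_saliency) if s <= t]
    return t, salient, non_salient


# Made-up per-block saliency values.
print(split_blocks([10, 200, 12, 210, 11, 205, 10, 199]))
```

An encoder following the abstract would then apply one modulation rule to the salient indices and another to the non-salient ones.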

Digital image edge information extracting method

Inactive; CN1881255A; Improve accuracy; Improve digital image quality; Image enhancement; Television systems; Image extraction; Imaging quality

The invention relates to a method for extracting the edge information of a digital image, characterized by: determining the gradient image of the digital image; masking the gradient image based on human visual sensitivity characteristics; and extracting the edge information of the digital image from the masked gradient image. The invention makes the edge extraction result consistent with the analyzed edge condition, so that the extracted edges match human visual sensitivity characteristics, which benefits subsequent applications and improves digital image quality in video communication.

Owner:HUAWEI TECH CO LTD

Moving target detection method on basis of pulse coupled neural network

Active; CN103700118A; Improve robustness; Improve accuracy; Image enhancement; Image analysis; Pattern recognition; Video image

The invention discloses a moving target detection method on the basis of a pulse coupled neural network, which comprises the following steps of a, sensing a video image sequence by utilizing the pulse coupled neural network and extracting global features of a video image; b, establishing a global feature histogram of each pixel; c, for each pixel, utilizing the global feature histograms corresponding to first K frames to establish an initial background model of the pixel; d, for each pixel, calculating similarity of a global feature histogram of a current frame image and the corresponding global feature histogram in the background model and detecting whether the pixel is a moving target; e, for each pixel, utilizing the global feature histogram of the current frame image to update the background model of the pixel. The moving target detection method uses the integral characteristics of human visual perception for reference, utilizes the pulse coupled neural network to extract the global features of the image and is beneficial for inhibiting the negative influence of disturbance of a dynamic background on detection of the moving target so as to improve accuracy of detecting the moving target.

Owner:NORTHEASTERN UNIV
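Steps c to e of the abstract above (build a per-pixel background model from histograms, compare, update) can be sketched for a single pixel as follows. The pulse coupled neural network feature extraction is not reproduced; histograms are taken as given, normalized lists. The similarity measure (histogram intersection), the update rule (blending into the closest model histogram), and all names and default values are assumptions, since the abstract does not specify them.

```python
def intersection(h1, h2):
    """Histogram intersection of two normalized histograms (1.0 means identical)."""
    return sum(min(a, b) for a, b in zip(h1, h2))


class PixelBackgroundModel:
    """Hypothetical background model for one pixel, built from the first K histograms."""

    def __init__(self, initial_histograms, match_threshold=0.7, learning_rate=0.05):
        self.model = [list(h) for h in initial_histograms]
        self.match_threshold = match_threshold
        self.learning_rate = learning_rate

    def is_moving(self, hist):
        """Flag the pixel as moving if no model histogram is similar enough."""
        best = max(intersection(hist, m) for m in self.model)
        return best < self.match_threshold

    def update(self, hist):
        """Blend the current histogram into the most similar model histogram."""
        idx = max(range(len(self.model)),
                  key=lambda i: intersection(hist, self.model[i]))
        a = self.learning_rate
        self.model[idx] = [(1 - a) * m + a * h
                           for m, h in zip(self.model[idx], hist)]


# Made-up three-bin histograms from the first K = 2 frames.
pixel = PixelBackgroundModel([[0.5, 0.5, 0.0], [0.4, 0.6, 0.0]])
print(pixel.is_moving([0.5, 0.5, 0.0]))   # matches the background model
print(pixel.is_moving([0.0, 0.1, 0.9]))   # very different histogram
```

A full detector would keep one such model per pixel and call `is_moving` followed by `update` on every frame.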

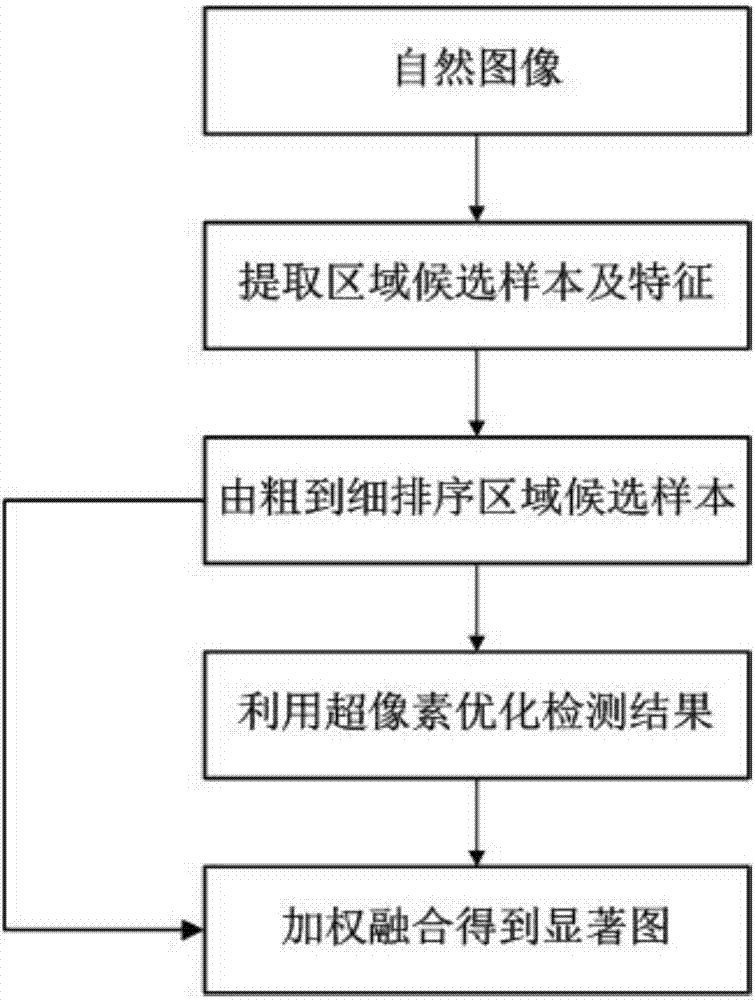

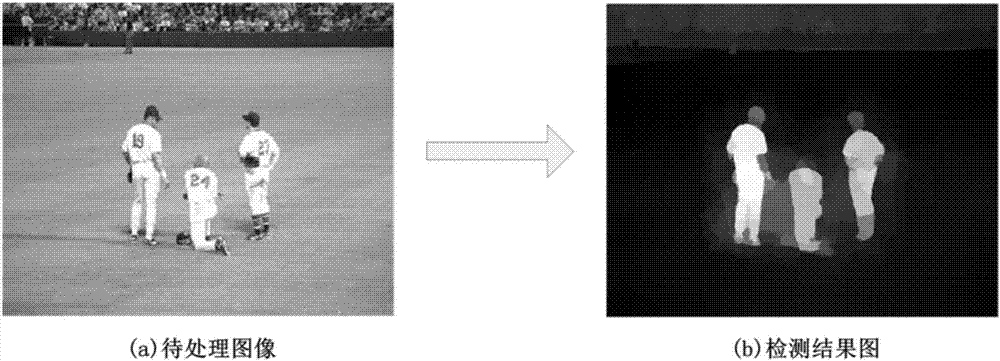

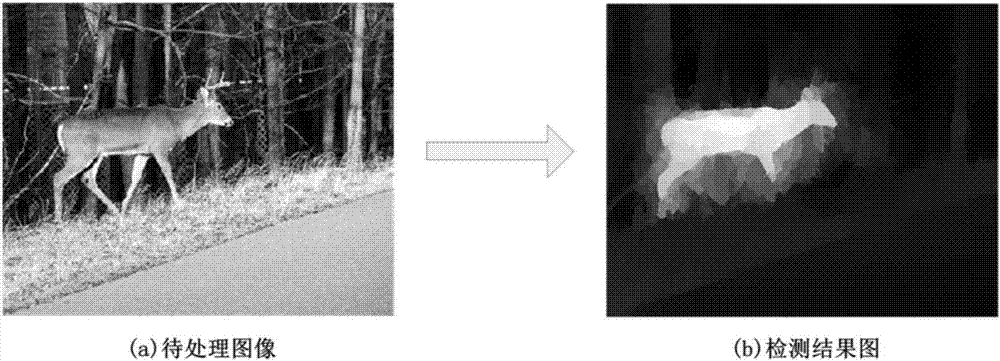

Significance detection method based on selection of regional candidate sample

Active; CN107103608A; Efficient detection; Conform to visual perception; Image enhancement; Image analysis; Pattern recognition; Sample selection

The invention provides a significance detection method based on selection of a regional candidate sample, and belongs to the technical field of artificial intelligence. On the basis of existing prior knowledge, depth characteristics and a classifier are introduced, and a selecting mechanism from rough to fine is used, the significance and targeted performance of the regional candidate sample are evaluated, a detection result is further optimized by utilizing super pixels, and a significant object in an image can be detected effectively. Compared with a traditional method, the detection result is more accurate. Especially for a multi-object image or an image in which the object is very similar to background, the detection result satisfies visual perception of humans more, and an obtained significance map is more accurate.

Owner:DALIAN UNIV OF TECH

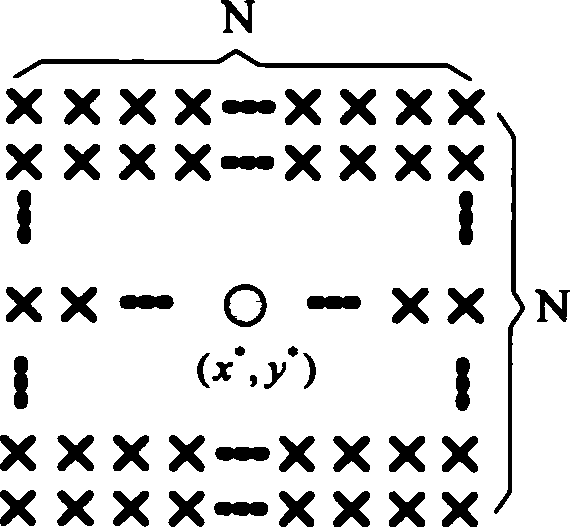

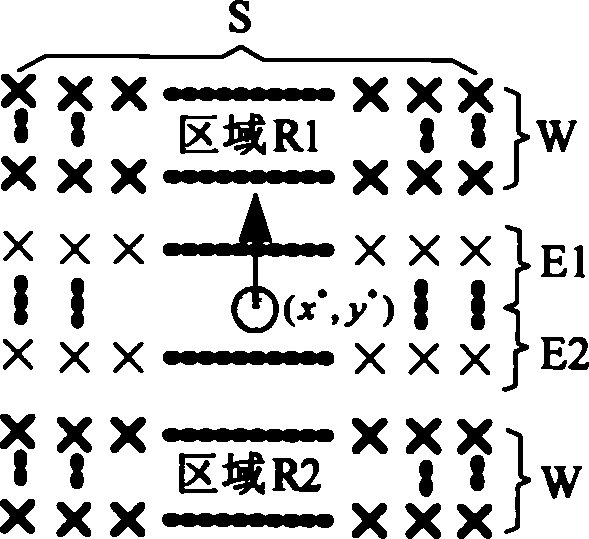

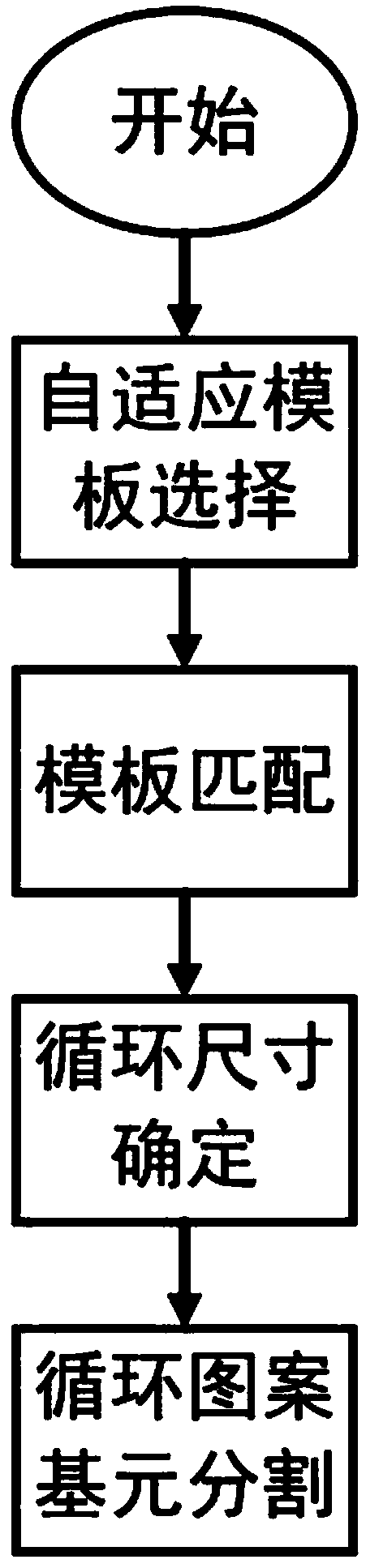

Element segmentation method of fabric printing cyclic pattern based on adaptive template matching

Active; CN109242858A; Conform to visual perception; High speed; Image enhancement; Image analysis; Template matching; Manual segmentation

The invention relates to a method for segmenting the pattern primitives of fabric printing cyclic patterns based on adaptive template matching, comprising the following steps: 1. adaptive template selection: automatically determining a template image of adaptive size in an image containing the printing cyclic pattern; 2. template matching: using the template image from step 1 as the template, template matching is carried out against the original image, and regions similar to the template pattern in the original image are found; 3. determining the cycle size: determining the size of the pattern cycle according to the positional relationship between the regions similar to the template pattern found in step 2; 4. cyclic pattern primitive segmentation: according to the cycle size determined in step 3, the original image is traversed and a complete pattern primitive conforming to human visual perception is segmented. The invention can automatically segment a complete pattern primitive from an image with a cyclic pattern, avoids the slow speed, low efficiency, and poor precision of manual segmentation, and accelerates the development and design of printing patterns.

Owner:ZHEJIANG SCI-TECH UNIV

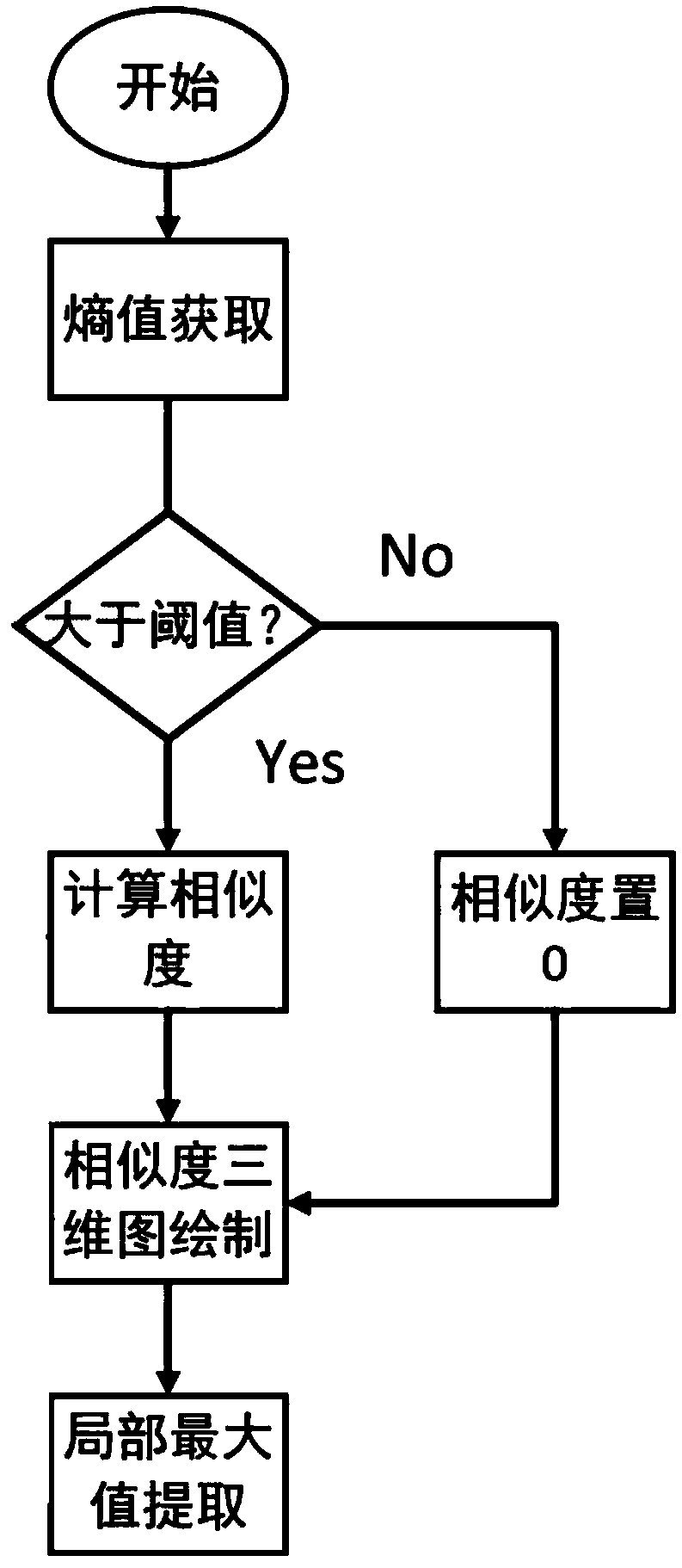

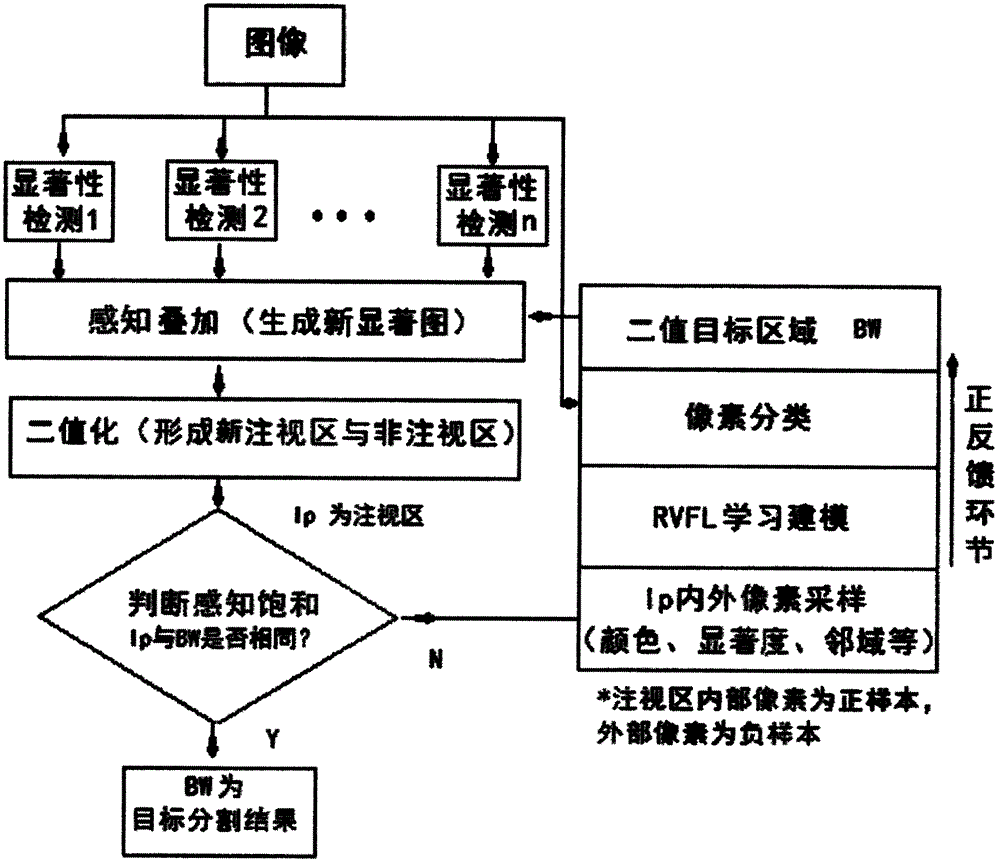

Saliency detection method based on visual perception positive feedback

The invention discloses a saliency detection method based on visual perception positive feedback, comprising: 1) using various existing saliency detection methods to detect image saliency initially; 2) superposing the above results to generate a new comprehensive saliency image, and binarizing the image by means of thresholding method to form binary fixation area Ip; 3) acquiring few pixel samples inside and outside the Ip repeatedly, training, and parallelly constructing various RVFL (random vector functional link) neural network models; classifying pixels by the multiple neural network models, and integrating to form binary target output BW; 4) returning the BW as a neural fired pulse to step 2, and superposing with the comprehensive saliency diagram to form an iterative cycle; 5) during iterating, if the input Ip and output BW in positive feedback link are substantially the same, indicating that perception is saturated, and stopping iteration. Ip or BW is the most salient target segmentation result in the image. Human vision is simulated by superposition of various saliency detection methods and iteration of visual perception positive feedback, and a visual saliency image more like human visual perception is obtained.

Owner:CHINA JILIANG UNIV

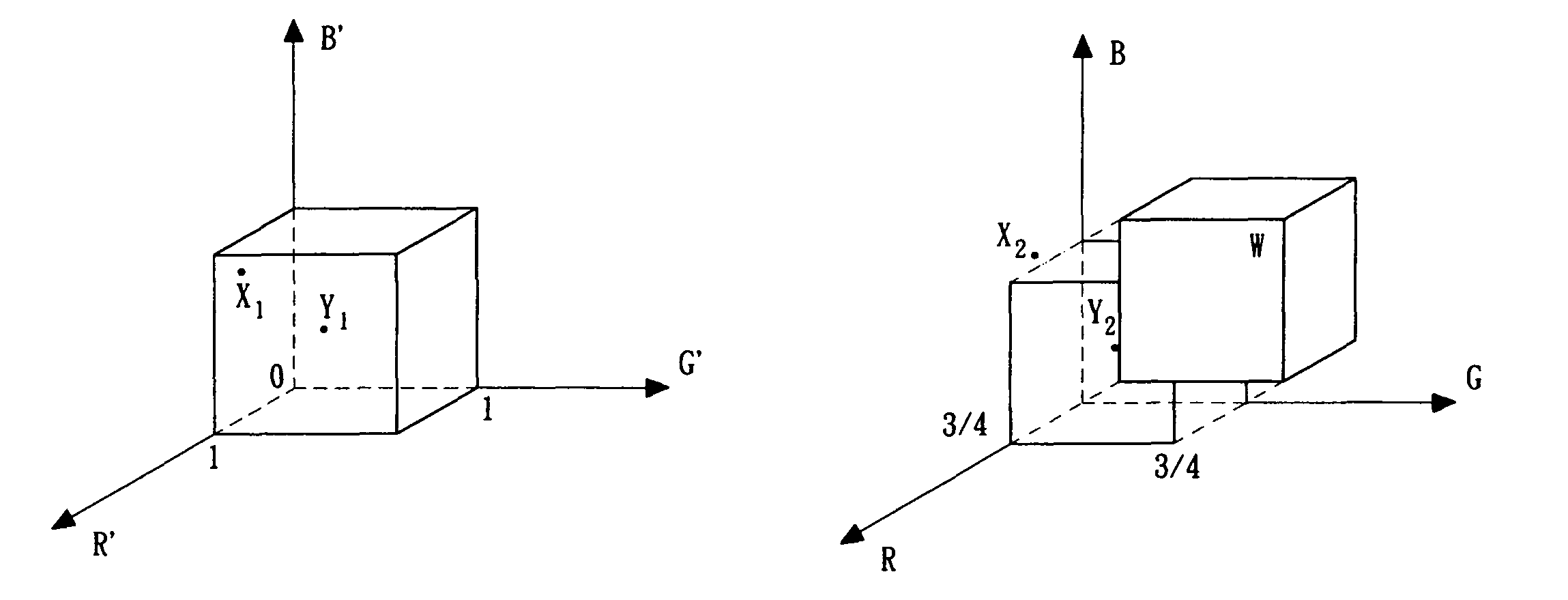

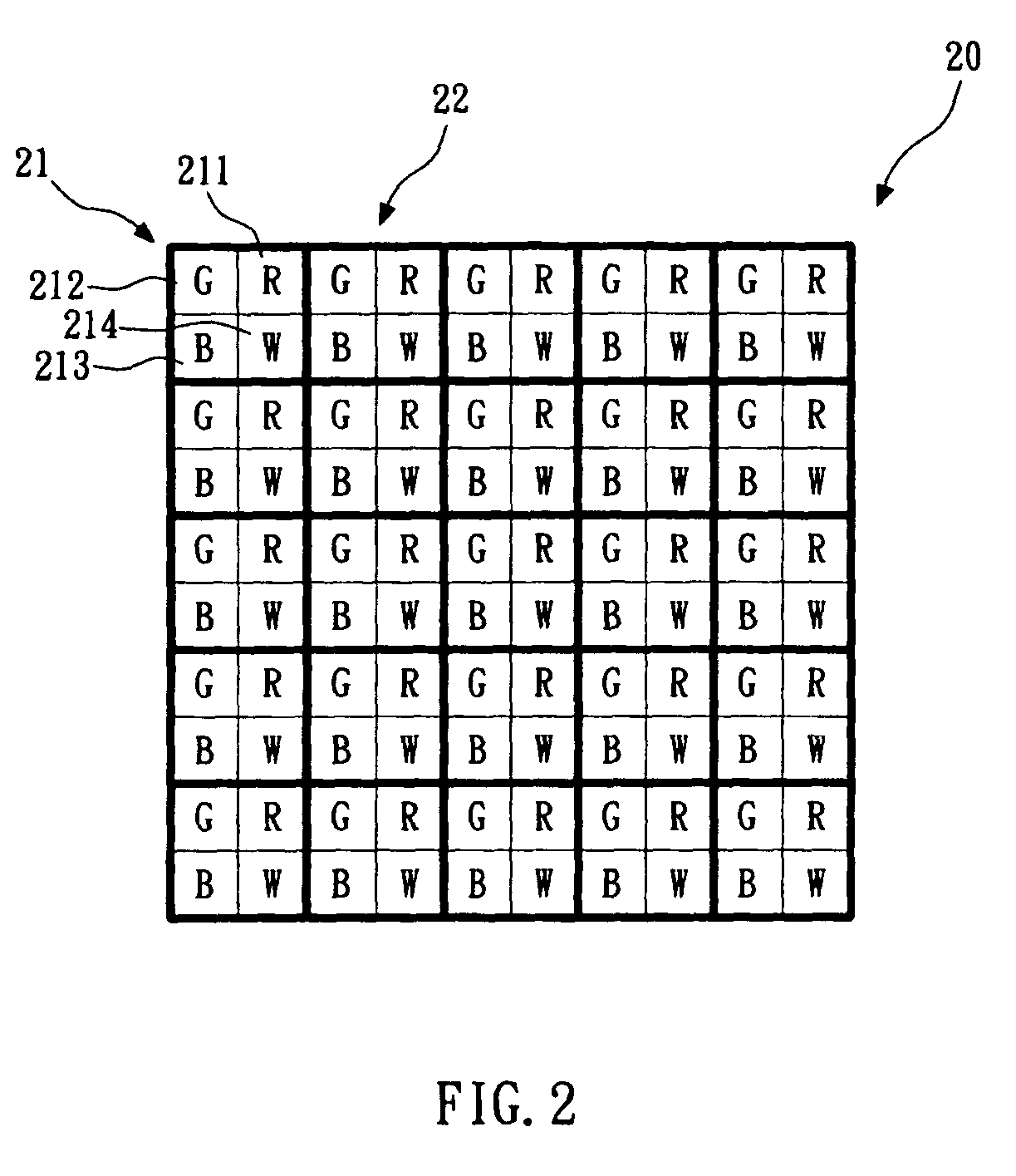

Perceptual color matching method between two different polychromatic displays

Active; US7742205B2; Increase contrast; Digitally marking record carriers; Digital computer details; Luminous intensity; Colored white

Owner:VP ASSETAB

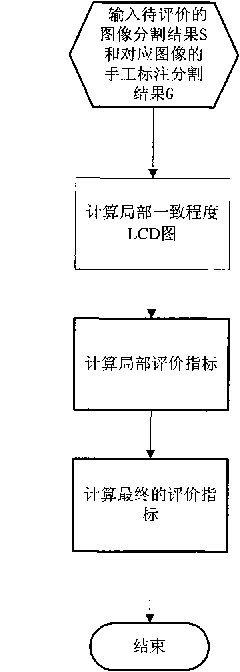

Performance analysis method for automatic segmentation result of image

The invention discloses a performance analysis method for the automatic segmentation result of an image. The method comprises the following steps: assigning each pixel a weight by calculating the degree of perceptual consistency among multiple manually marked segmentation results at that pixel, where a higher degree of consistency yields a higher weight; and finally calculating a weighted evaluation value as the final evaluation index for the segmentation result under evaluation. The performance index obtained by the method quantitatively reflects the degree of agreement between the automatic segmentation result produced by a computer algorithm and the manually marked segmentation results, and thereby objectively reflects how close an automatic image segmentation algorithm is to human visual perception.

Owner:WUHAN CITMS TECH CO LTD
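The weighting idea in the abstract above can be sketched for binary masks. The abstract does not define how perceptual consistency is measured or how the weighted index is computed, so this example assumes that the weight of a pixel is the share of annotators who agree with the majority label, and that the score is the weighted share of pixels where the automatic result matches the majority. The function name and sample masks are hypothetical.

```python
def weighted_agreement_score(auto_mask, manual_masks):
    """Score an automatic binary mask against several manual binary masks.

    All masks are flat lists of 0/1 of equal length. Pixels on which the
    annotators agree more strongly contribute more to the score.
    """
    n = len(manual_masks)
    num = 0.0
    den = 0.0
    for i, auto_label in enumerate(auto_mask):
        p = sum(m[i] for m in manual_masks) / n   # share voting for label 1
        majority = 1 if p >= 0.5 else 0
        weight = max(p, 1 - p)                    # consistency among annotators
        den += weight
        if auto_label == majority:
            num += weight
    return num / den


# Three made-up manual segmentations of a four-pixel image.
manual = [[1, 1, 0, 0], [1, 0, 0, 0], [1, 1, 0, 1]]
print(weighted_agreement_score([1, 1, 0, 0], manual))  # matches the majority everywhere
print(weighted_agreement_score([1, 0, 0, 0], manual))  # error on a disputed pixel
print(weighted_agreement_score([0, 1, 0, 0], manual))  # error on a unanimous pixel
```

Under these assumptions, an error on a pixel where all annotators agree lowers the score more than an error on a pixel where they disagree.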
