Automatic panoramic video stitching method based on SURF feature tracking and matching

An automatic panoramic video stitching technique, applied in image data processing, instrumentation, and computing, that addresses low feature-matching efficiency and poor stitching quality and achieves faster, more efficient processing.

Detailed Description of Embodiments

[0069] Exemplary embodiments of the present invention will be described in detail with reference to the following drawings.

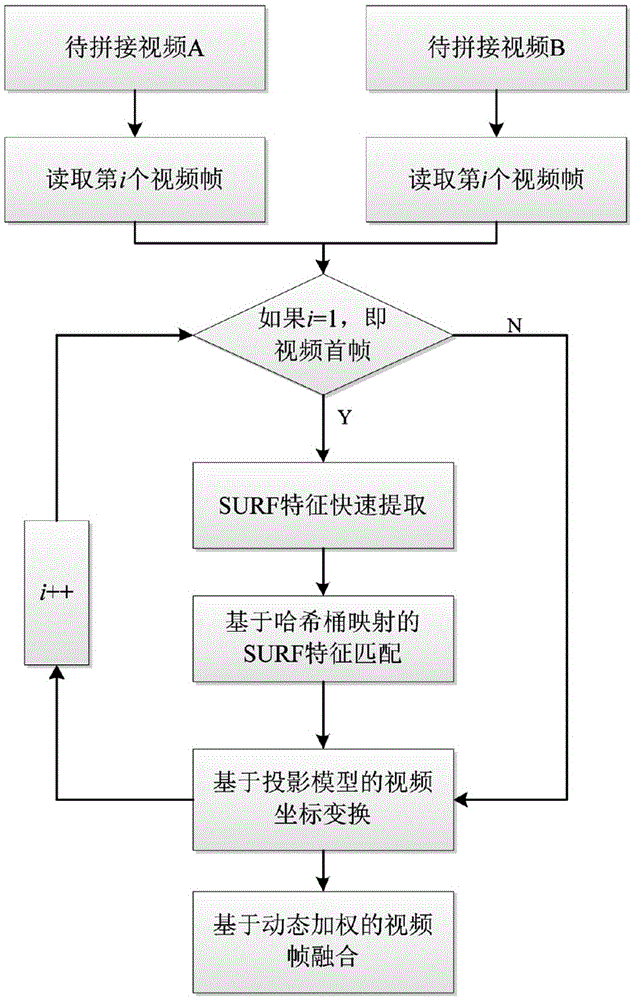

[0070] Embodiments of the present invention provide an automatic panoramic video stitching method based on SURF feature tracking and matching. As shown in Figure 1, the method proceeds as follows:

[0071] Step 1: Read the i-th frame from each of the two videos to be stitched;

[0072] Step 2: If i = 1, that is, the frame is the first frame of the video, proceed to Step 3; otherwise, proceed directly to Step 5;

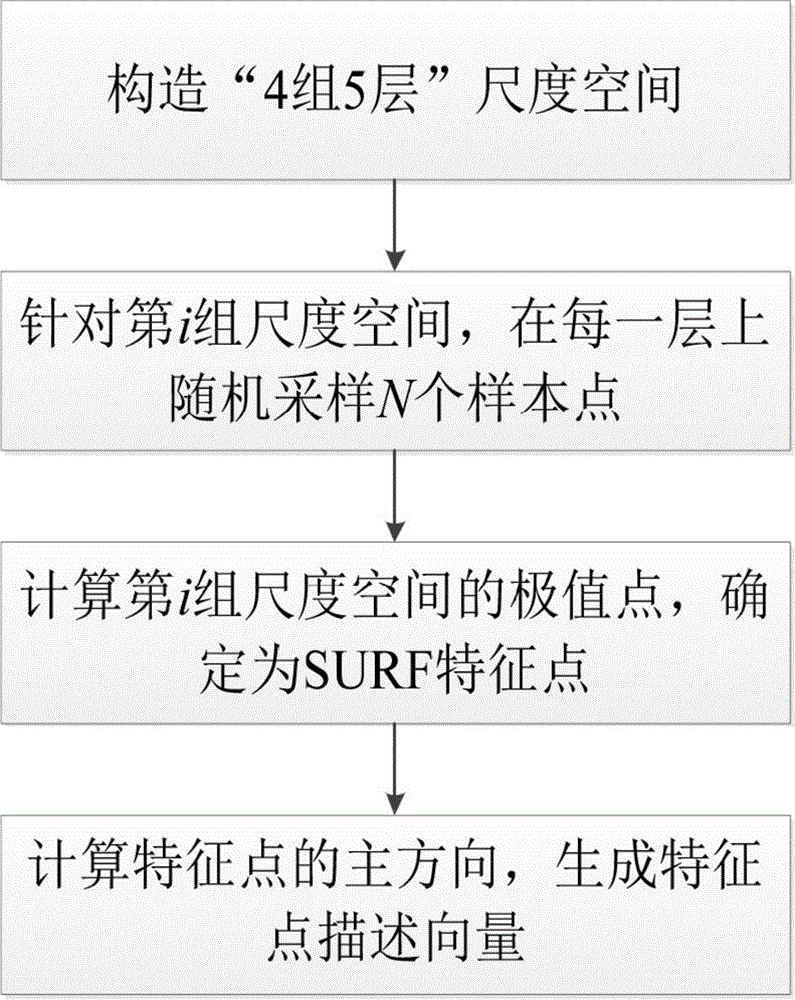

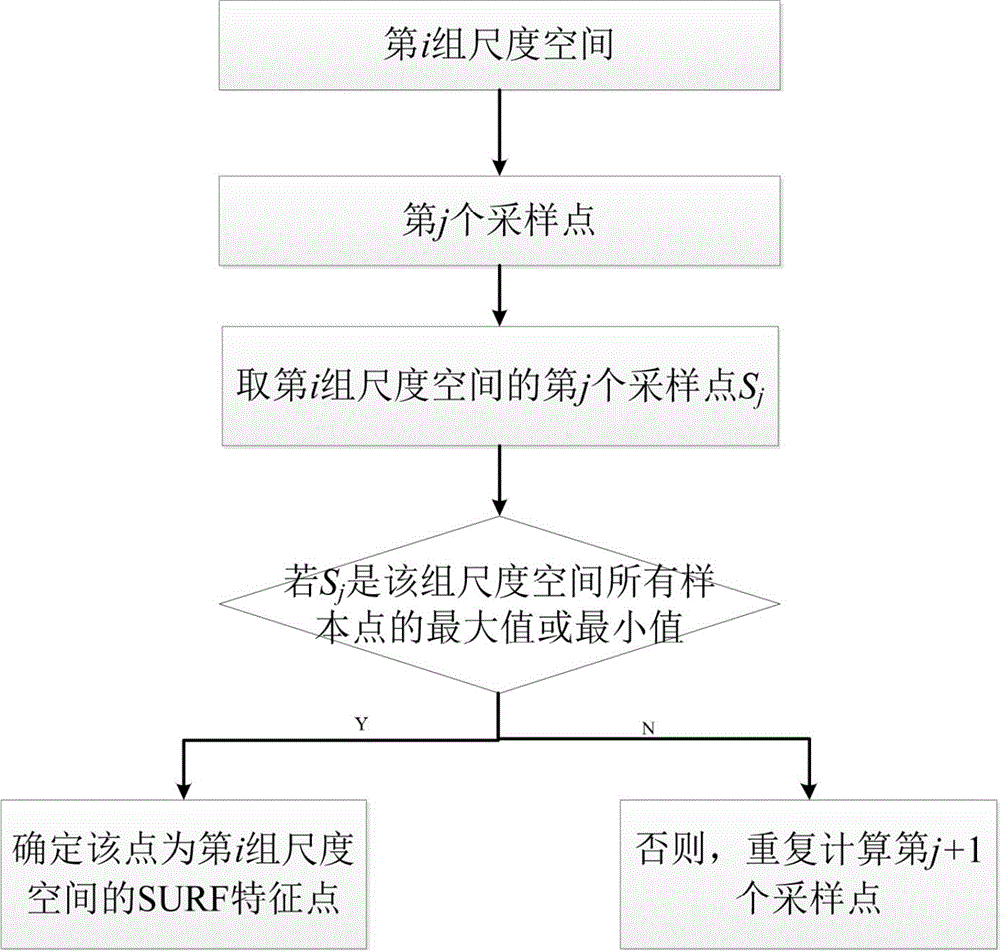

[0073] Step 3: Rapidly extract SURF feature points from the first frames of the two videos and generate a descriptor vector for each feature point;

[0074] Step 4: For the first frames of the two videos, use hash mapping and bucket storage to search for pairs of similar SURF feature vectors, which form the similar-feature set;

[0075] Step 5: Based on the video coordinate transformation of the projection mod...
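Step 4 above names hash mapping and bucket storage but this excerpt does not say which hash function is used. The following is a minimal sketch of the general idea, assuming random-hyperplane locality-sensitive hashing as the hash mapping (an assumption, not taken from the patent). Descriptors are plain lists of floats, and the function names, `n_bits`, and `max_sq_dist` are hypothetical. Descriptors from the second frame are stored in buckets, and each descriptor from the first frame is compared only with the contents of its own bucket rather than with every descriptor.

```python
import random
from collections import defaultdict


def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random hyperplanes (assumed hash family, not from the source)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def hash_key(vec, planes):
    """Map a descriptor to a bucket key: one sign bit per hyperplane."""
    return tuple(sum(p * v for p, v in zip(plane, vec)) >= 0.0 for plane in planes)


def sq_dist(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_by_buckets(desc_a, desc_b, n_bits=4, max_sq_dist=0.1, seed=0):
    """Return (index_a, index_b) pairs of similar descriptors.

    Descriptors of frame B are stored in buckets keyed by their hash.
    Each descriptor of frame A is compared only with its own bucket.
    """
    if not desc_a or not desc_b:
        return []
    planes = make_hyperplanes(len(desc_a[0]), n_bits, seed)

    # Bucket storage: hash key -> indices of frame-B descriptors.
    buckets = defaultdict(list)
    for j, d in enumerate(desc_b):
        buckets[hash_key(d, planes)].append(j)

    pairs = []
    for i, d in enumerate(desc_a):
        candidates = buckets.get(hash_key(d, planes), [])
        if not candidates:
            continue
        # Nearest candidate inside the bucket, accepted only if close enough.
        best = min(candidates, key=lambda j: sq_dist(d, desc_b[j]))
        if sq_dist(d, desc_b[best]) <= max_sq_dist:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    frame_a = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.5, 0.5, 0.5, 0.5]]
    frame_b = [[0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0], [1.0, 0.0, 0.0, 0.0]]
    print(match_by_buckets(frame_a, frame_b))
```

With a single hash table, two nearly identical descriptors can still land in different buckets and be missed. A common remedy is to use several tables with independent hyperplanes, trading memory for recall. Whether the patented method does so is not stated in this excerpt.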
