Visual inertial navigation SLAM method based on ground plane hypothesis

A SLAM technology based on a ground plane hypothesis, applied in image data processing, instrumentation, computing, etc., to solve problems such as decreased positioning accuracy and continuity

Embodiment Construction

[0143] The present invention is described in further detail below with reference to the accompanying drawings.

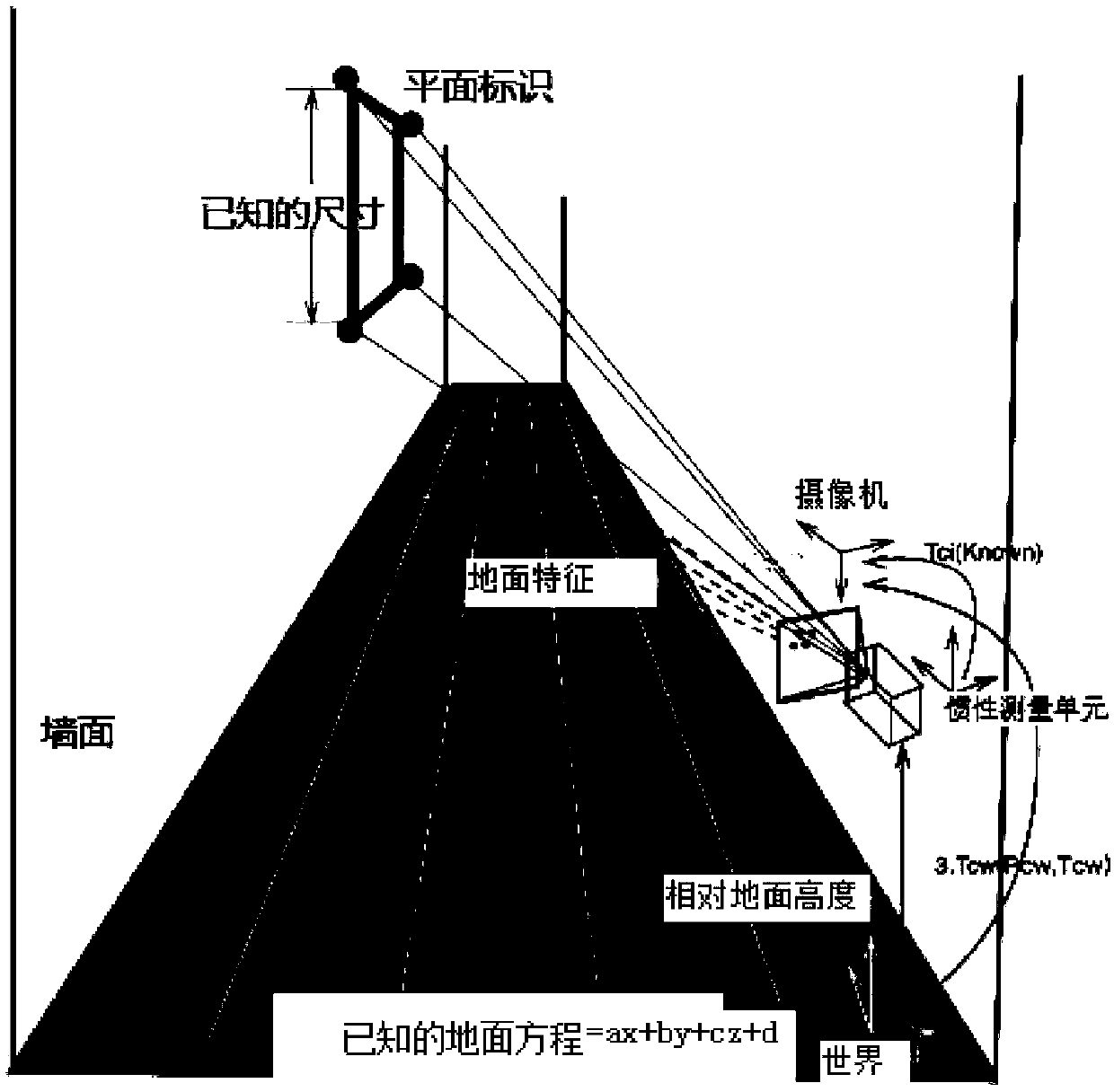

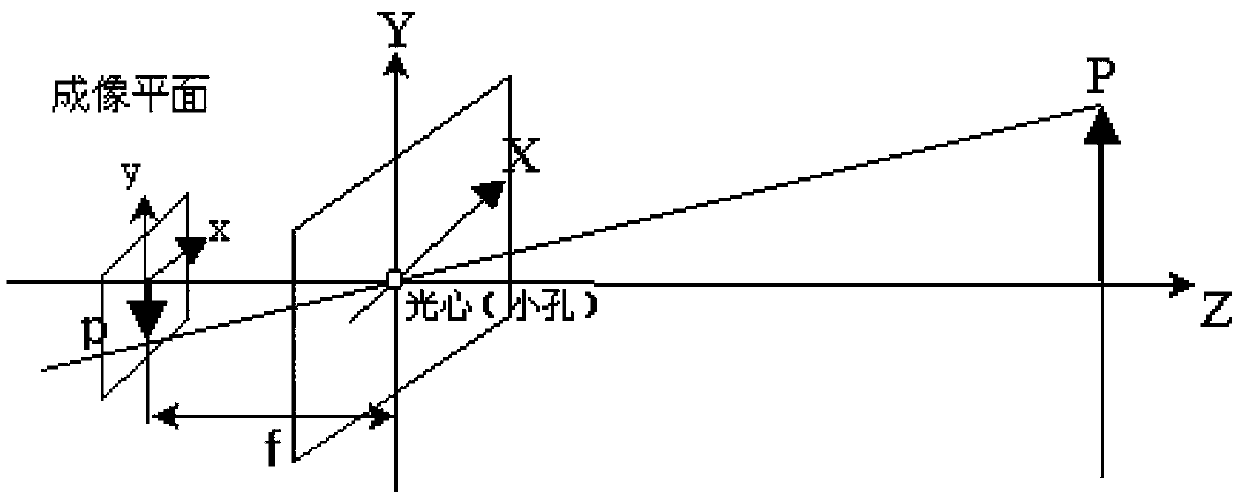

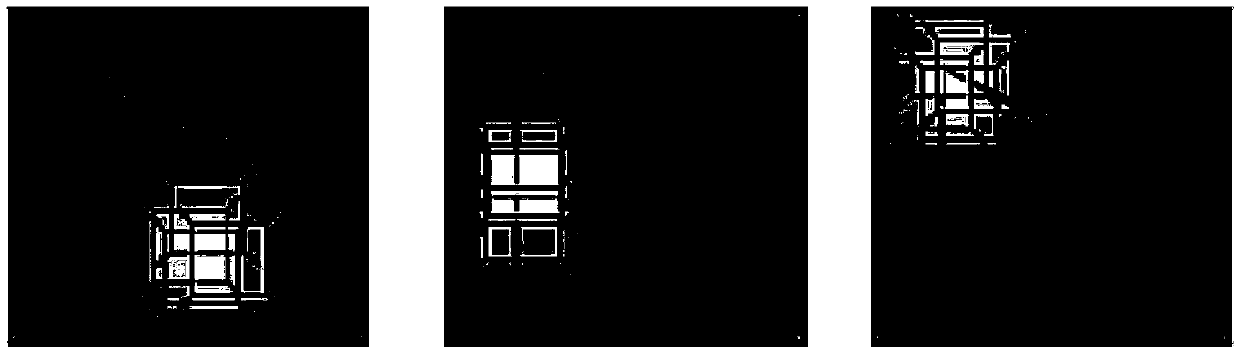

[0144] The present invention proposes a visual-inertial SLAM method (VIGO) based on the ground plane assumption. It adds feature points on the ground and feature points on planar road signs as map features for SLAM. To make positioning more robust and continuous and to recover the true scale, the method adds an inertial sensor and incorporates the IMU pre-integration data into the optimization framework. The camera pose estimate is thereby constrained globally, which improves accuracy. In addition, the ground area is clearly reconstructed in the resulting 3D map, providing richer information for subsequent AR or robotics applications. The overall framework is shown in Figure 1.
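The excerpt does not say how the ground plane assumption enters the optimization. One common way to express such a constraint, shown here purely as an illustrative sketch and not as the patent's formulation, is a point-to-plane residual: each map point labeled as ground is penalized by its signed distance to a plane n·p + d = 0. The function names, the plane parameterization, and the sample points below are all assumptions made for illustration.

```python
import math


def normalize_plane(n, d):
    """Scale the plane (n, d), defined by n.p + d = 0, so that n has unit length."""
    norm = math.sqrt(sum(c * c for c in n))
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    return tuple(c / norm for c in n), d / norm


def point_plane_residual(point, n, d):
    """Signed distance from a 3D point to the plane n.p + d = 0 (n must be unit length)."""
    return sum(ni * pi for ni, pi in zip(n, point)) + d


def ground_cost(points, n, d):
    """Sum of squared point-to-plane distances over points assumed to lie on the ground."""
    n, d = normalize_plane(n, d)
    return sum(point_plane_residual(p, n, d) ** 2 for p in points)


# Hypothetical data: three map points near an assumed ground plane z = 0.
ground_points = [(1.0, 2.0, 0.0), (3.0, -1.0, 0.1), (0.5, 0.5, -0.1)]
# The normal is deliberately not unit length to show that it gets normalized.
cost = ground_cost(ground_points, (0.0, 0.0, 2.0), 0.0)
print(f"ground-plane cost: {cost:.4f}")
```

In a full system a term like this would be one of several residuals, alongside reprojection and IMU pre-integration terms, minimized jointly by a nonlinear least-squares solver. How the terms are weighted and which points count as ground are design choices that this excerpt does not specify.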

[0145] The visual-inertial SLAM method based on the ground plane hypothesis according to the present invention compr...
