Calculation apparatus and method for accelerator chip accelerating deep neural network algorithm

The technology concerns deep neural networks and acceleration chips, and is applied in the field of computing devices for acceleration chips. It is intended to solve problems such as non-compliance, increased chip power consumption, and an increased number of reads of intermediate values.

Embodiment Construction

[0051] To make the object, technical solution and advantages of the present invention clearer, the computing device and method of the acceleration chip for accelerating the deep neural network algorithm are described in further detail below with reference to the accompanying drawings.

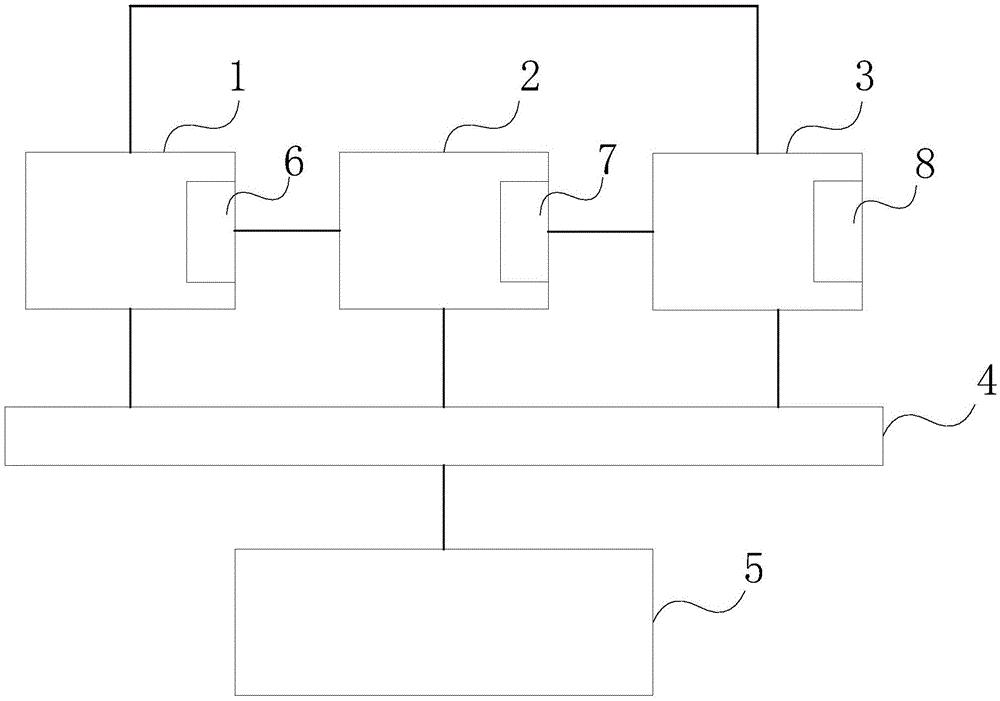

[0052] FIG. 1 is a diagram of the relationship between the constituent modules of the computing device of the acceleration chip for accelerating the deep neural network algorithm and the main memory. The device includes a main memory 5, a vector addition processor 1, a vector function value operator 2 and a vector multiply-adder 3. The vector addition processor 1, the vector function value operator 2 and the vector multiply-adder 3 have intermediate value storage areas 6, 7 and 8, respectively, and can read from and write to the main memory 5 at the same time. The vector addition processor 1 is configured to carry out t...
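The arrangement in FIG. 1 can be pictured with a small software sketch. The text above is cut off before it states what each unit computes, so the roles below are only inferred from the unit names, and every identifier (`MainMemory`, `Unit`, `layer_forward`, the keys `"W"`, `"x"`, `"b"`, `"y"`, and the choice of ReLU as the function) is a hypothetical chosen for illustration, not taken from the patent. The sketch shows one possible reading: each unit keeps its partial result in its own intermediate storage area, so the main memory is touched only for the inputs and the final output.

```python
from dataclasses import dataclass, field


@dataclass
class MainMemory:
    """Stand-in for main memory 5; counts accesses so traffic is visible."""
    data: dict = field(default_factory=dict)
    reads: int = 0
    writes: int = 0

    def read(self, key):
        self.reads += 1
        return list(self.data[key])

    def write(self, key, vec):
        self.writes += 1
        self.data[key] = list(vec)


class Unit:
    """A functional unit with its own intermediate value storage area."""
    def __init__(self, memory):
        self.memory = memory
        self.intermediate = {}  # models storage area 6, 7 or 8


class VectorMultiplyAdder(Unit):  # unit 3 (assumed role: weights times input)
    def run(self, weights_key, input_key, out):
        rows = self.memory.read(weights_key)
        x = self.memory.read(input_key)
        self.intermediate[out] = [sum(w * v for w, v in zip(row, x)) for row in rows]
        return self.intermediate[out]


class VectorAdditionProcessor(Unit):  # unit 1 (assumed role: add a bias vector)
    def run(self, vec, bias_key, out):
        bias = self.memory.read(bias_key)
        self.intermediate[out] = [v + b for v, b in zip(vec, bias)]
        return self.intermediate[out]


class VectorFunctionValueOperator(Unit):  # unit 2 (assumed role: elementwise function)
    def run(self, vec, func, out):
        self.intermediate[out] = [func(v) for v in vec]
        return self.intermediate[out]


def relu(v):
    # Illustrative activation only; the source does not name a function.
    return max(0, v)


def layer_forward(memory):
    """One layer: partial results pass between units, not through main memory."""
    mac = VectorMultiplyAdder(memory)
    add = VectorAdditionProcessor(memory)
    fn = VectorFunctionValueOperator(memory)
    partial = mac.run("W", "x", "Wx")
    biased = add.run(partial, "b", "Wx+b")
    result = fn.run(biased, relu, "y")
    memory.write("y", result)  # only the final output goes back to main memory
    return result
```

In this sketch a single layer costs three main-memory reads (weights, input, bias) and one write, because the two intermediate vectors stay inside the units. Whether the patented device schedules its units this way cannot be confirmed from the truncated text.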
