Human body action detection and positioning method based on space-time combination

A human body action detection and positioning method, applied in character and pattern recognition, instruments, computer parts, etc. It addresses the problem that existing detection results need to be improved, and it handles the large variation in video length.


Embodiment Construction

[0044] The present invention is described in further detail below with reference to the accompanying drawing and a specific embodiment:

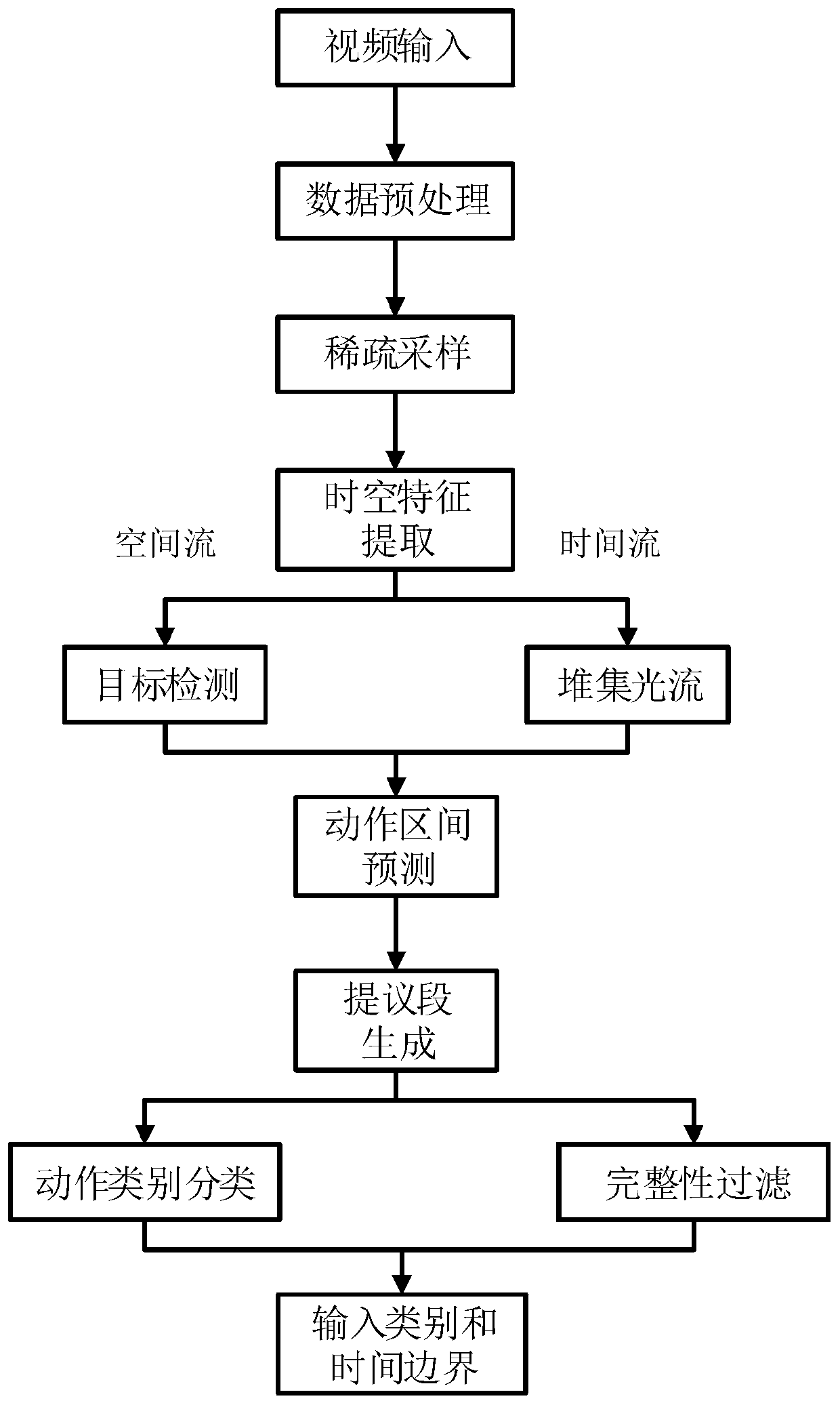

[0045] Figure 1 shows a flow chart of the human action detection and localization method based on spatio-temporal combination according to the present invention. The method includes:

[0046] S1, data preprocessing: divide an input untrimmed video into K equal-length video sequences, and divide the entire data set into a training set, a validation set and a test set in the ratio 7:2:1. The original data comes from the streaming media server of an offshore oil production platform. The monitoring equipment on each offshore platform remains static, with the working platform as the monitored scene. The real-time monitoring video is transmitted by microwave and stored in the streaming media server.
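The two operations in step S1 can be sketched as follows. This is only an illustrative sketch, not the patented implementation: the function names `split_into_segments` and `split_dataset` are hypothetical, the handling of frame counts that are not divisible by K (segments differ by at most one frame) is an assumption, and the seeded random shuffle before the 7:2:1 split is also an assumption, since the text does not say how samples are assigned to each subset.

```python
import random


def split_into_segments(num_frames, k):
    """Return k (start, end) frame-index ranges covering [0, num_frames).

    Ranges are half-open. When num_frames is not divisible by k, segment
    lengths differ by at most one frame (an assumption; the source only
    says "equal-length").
    """
    if k <= 0 or num_frames < k:
        raise ValueError("need k > 0 and at least k frames")
    bounds = [i * num_frames // k for i in range(k + 1)]
    return [(bounds[i], bounds[i + 1]) for i in range(k)]


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items and split them into train/validation/test subsets.

    The default ratios follow the 7:2:1 division in S1. The seeded
    shuffle is an assumption made here for reproducibility.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

For example, `split_into_segments(300, 3)` yields three 100-frame ranges, and `split_dataset(range(100))` yields subsets of 70, 20 and 10 items.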

[0047] S2, sparse samp...
