Video action recognition method based on a temporal segmentation network

A temporal segmentation and action recognition technique, applied in the field of action recognition, that addresses the loss of important information in videos, limited data resources, and limited video duration.

Detailed Description of Embodiments

[0056] It should be noted that, provided there is no conflict, the embodiments of the present application and the features in those embodiments may be combined with each other. The present invention is described in further detail below with reference to the drawings and specific embodiments.

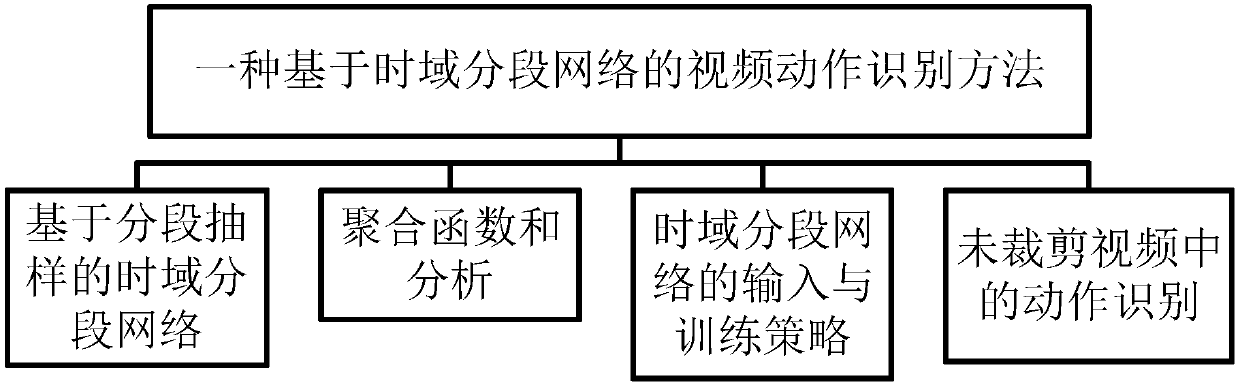

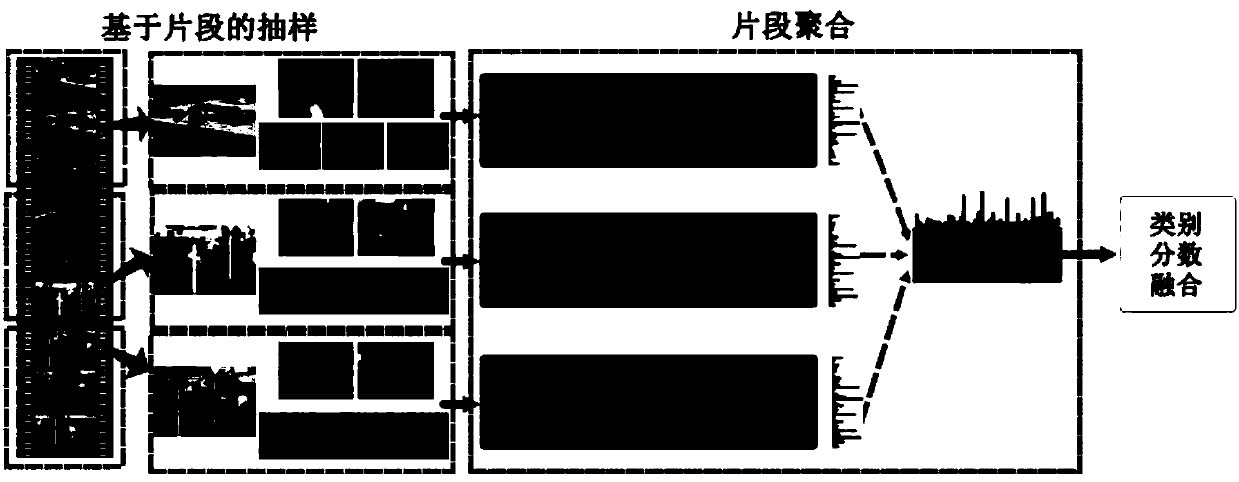

[0057] FIG. 1 is a system framework diagram of the video action recognition method based on a temporal segmentation network according to the present invention. It mainly comprises: the temporal segmentation network (TSN) based on segmented sampling; aggregation functions and their analysis; the input and training strategy of the temporal segmentation network; and action recognition in untrimmed videos.
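The segmented sampling that TSN is built on can be sketched as follows. This is a minimal sketch, assuming that the video is split into K segments of equal duration and that one frame index is drawn uniformly at random from each segment during training, as in the original TSN formulation. The function name `segment_sample` and the centre-frame behaviour when no random generator is passed (test time) are illustrative assumptions, not details taken from this patent.

```python
import random
from typing import List, Optional


def segment_sample(num_frames: int, num_segments: int,
                   rng: Optional[random.Random] = None) -> List[int]:
    """Split frames [0, num_frames) into num_segments equal-duration segments
    and return one frame index per segment (hypothetical helper).

    With an rng (training), the index is drawn uniformly at random inside
    each segment; without one (assumed test-time behaviour), the centre
    frame of each segment is returned.
    """
    if num_frames < 1 or num_segments < 1:
        raise ValueError("num_frames and num_segments must be positive")
    indices = []
    for k in range(num_segments):
        # Integer arithmetic keeps segment boundaries exact.
        start = k * num_frames // num_segments
        # Guarantee a non-empty range even when segments are shorter than a frame.
        end = max((k + 1) * num_frames // num_segments, start + 1)
        if rng is not None:
            indices.append(rng.randrange(start, end))
        else:
            indices.append((start + end - 1) // 2)
    return indices


if __name__ == "__main__":
    print(segment_sample(300, 3))                    # [49, 149, 249]
    print(segment_sample(300, 3, random.Random(0)))  # one random frame per segment
```

Because each snippet is drawn from its own segment, a fixed number of frames covers the whole video regardless of its length, which is what lets the network model long-range temporal structure at a constant cost.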

[0058] Aggregation functions and analysis: the consensus (aggregation) function is an important component of the TSN framework. Five aggregation functions are proposed: max pooling, average pooling, top-K pooling, weighted averaging, and attention weighting.
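The five aggregation functions can be sketched over per-segment class scores as below. This is a minimal pure-Python sketch under stated assumptions: each of the K segments yields a vector of C class scores; top-K pooling (with parameter `k`) averages the `k` largest segment scores per class; weighted averaging uses one weight per segment (learned in practice, supplied here as plain numbers); and attention weighting normalises one attention logit per segment with a softmax before weighting. How the attention logits are computed is not stated in this section, so they are taken as inputs. The final softmax over the consensus scores follows the usual TSN setup. All function names are hypothetical.

```python
import math
from typing import List, Sequence

# scores[k][c]: class-c score produced by the network for segment k (K x C).
Scores = Sequence[Sequence[float]]


def max_pool(scores: Scores) -> List[float]:
    """Per class, keep the highest score over all segments."""
    return [max(col) for col in zip(*scores)]


def avg_pool(scores: Scores) -> List[float]:
    """Per class, average the scores of all segments."""
    n = len(scores)
    return [sum(col) / n for col in zip(*scores)]


def top_k_pool(scores: Scores, k: int) -> List[float]:
    """Per class, average the k highest segment scores (1 <= k <= K)."""
    if not 1 <= k <= len(scores):
        raise ValueError("k must lie between 1 and the number of segments")
    return [sum(sorted(col, reverse=True)[:k]) / k for col in zip(*scores)]


def weighted_avg(scores: Scores, weights: Sequence[float]) -> List[float]:
    """Per class, sum the segment scores scaled by one weight per segment."""
    if len(weights) != len(scores):
        raise ValueError("need exactly one weight per segment")
    return [sum(w * s for w, s in zip(weights, col)) for col in zip(*scores)]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def attention_pool(scores: Scores, att_logits: Sequence[float]) -> List[float]:
    """Weighted average whose weights are a softmax over per-segment
    attention logits (assumed to be given as inputs)."""
    return weighted_avg(scores, softmax(att_logits))


if __name__ == "__main__":
    seg_scores = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]  # K = 3 segments, C = 2 classes
    print(max_pool(seg_scores))           # [3.0, 4.0]
    print(avg_pool(seg_scores))           # [2.0, 2.0]
    print(top_k_pool(seg_scores, 2))      # [2.5, 3.0]
    print(softmax(avg_pool(seg_scores)))  # final class probabilities
```

With `k = 1`, top-K pooling reduces to max pooling, and with `k` equal to the number of segments it reduces to average pooling, so it can be read as an interpolation between the two.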

[0059] Max pooling: in this aggregation function, assig...
