Behavior recognition method based on trajectory and convolutional neural network feature extraction

A behavior recognition method based on trajectory and convolutional neural network feature extraction. It addresses the large amount of computation and the insufficient feature expression ability of existing approaches, yielding features with strong robustness and discriminative power while reducing computational complexity and feature dimension and improving algorithm efficiency.



Embodiment 1

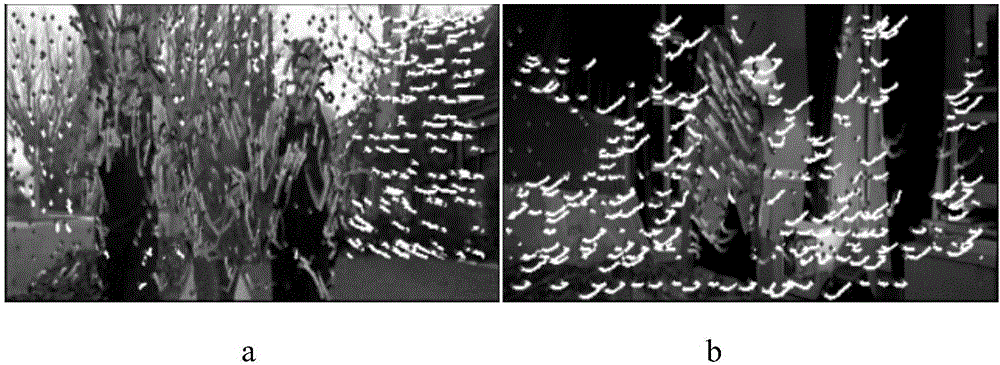

[0038] For human behavior recognition, traditional methods generally extract the trajectory points generated during human motion and compute unsupervised feature descriptors in the spatio-temporal neighborhood of those points, such as the histogram of oriented gradients (HOG), the histogram of optical flow (HOF), and the motion boundary histogram (MBH). These descriptors are then combined with the Fisher transform and principal component analysis for final classification and recognition. However, unsupervised feature descriptors generally suffer from insufficient feature representation ability and high computational complexity.

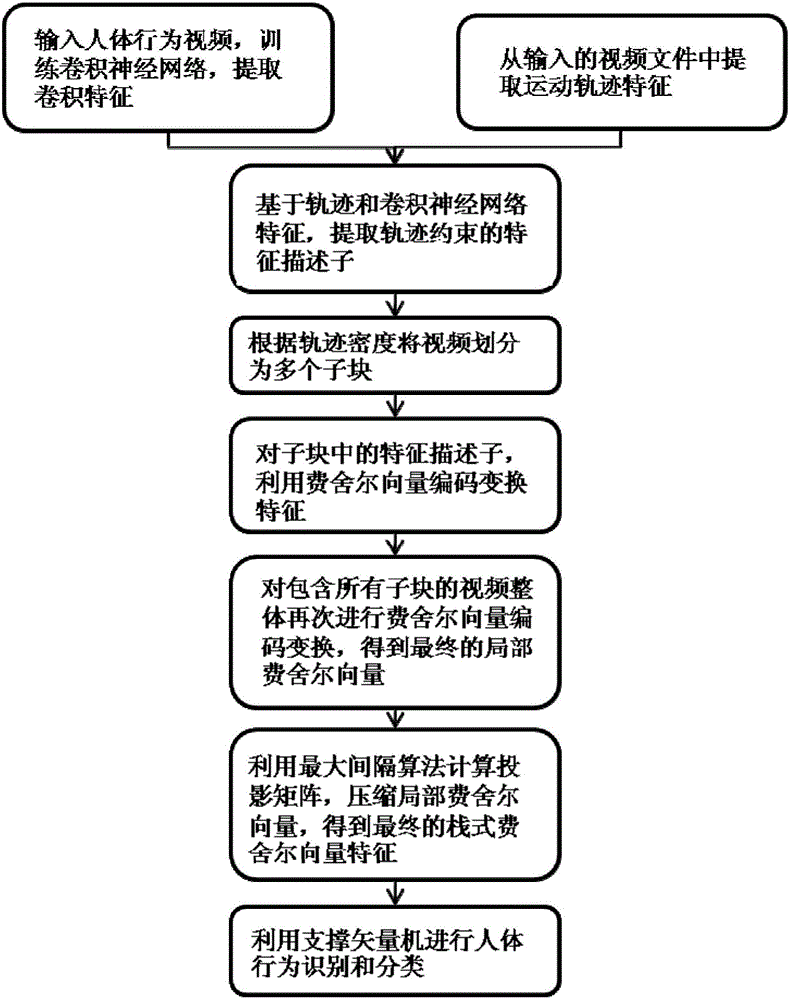

[0039] To avoid the problems of the prior art, improve the effectiveness and accuracy of human behavior recognition, and reduce redundant computation, the present invention proposes a behavior recognition method based on trajectory and convolutional neural network stacked feature transformation (see Figure 1), including the following steps:

[0040] (...

Embodiment 2

[0057] The behavior recognition method based on trajectory and convolutional neural network feature transformation is the same as in Embodiment 1, with the following refinement.

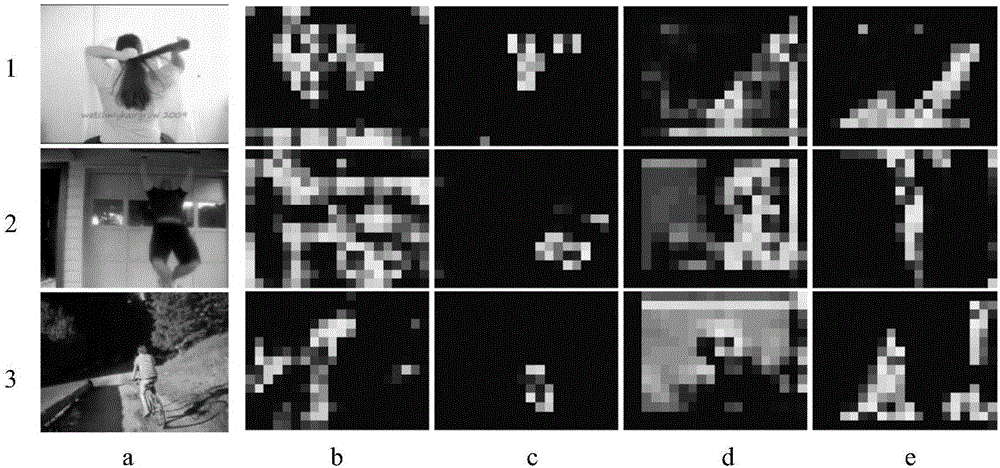

[0058] In step (2.4), extracting trajectory-constrained convolutional-layer features with the convolutional neural network specifically includes the following steps:

[0059] (2.4.1) Train the convolutional neural network (CNN): extract video frames and their corresponding class labels from the human behavior videos as the input of the CNN, and extract convolutional features for each input video frame. The CNN consists of 5 convolutional layers and 3 fully connected layers.

[0060] Different layers of a convolutional neural network capture different behavior patterns, from low-level edges and textures to complex objects and targets; higher layers have larger receptive fields and therefore yield more discriminative features.
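The growth of the receptive field with depth can be illustrated with a short calculation. The sketch below is only an illustration: the source states that the network has 5 convolutional layers and 3 fully connected layers but gives no kernel sizes or strides, so the AlexNet-like values in `LAYERS` (including the two pooling layers) are assumptions, and the function name `receptive_fields` is hypothetical.

```python
# Receptive-field growth through a stack of convolution and pooling layers.
# The (name, kernel, stride) values below are ASSUMED (AlexNet-like); the
# source only states "5 convolutional layers and 3 fully connected layers".
LAYERS = [
    ("conv1", 11, 4),
    ("pool1", 3, 2),
    ("conv2", 5, 1),
    ("pool2", 3, 2),
    ("conv3", 3, 1),
    ("conv4", 3, 1),
    ("conv5", 3, 1),
]


def receptive_fields(layers):
    """Return {layer name: receptive-field side length in input pixels}."""
    rf, jump = 1, 1  # an input pixel sees itself; neighbours are 1 pixel apart
    out = {}
    for name, kernel, stride in layers:
        rf += (kernel - 1) * jump  # each extra kernel tap widens the field by one jump
        jump *= stride             # striding spreads output units further apart
        out[name] = rf
    return out


if __name__ == "__main__":
    for name, size in receptive_fields(LAYERS).items():
        print(f"{name}: {size} x {size} input pixels")
```

Under these assumed hyperparameters, a unit in the fifth convolutional layer covers a much larger input region than a unit in the first, which is consistent with the statement that higher layers respond to larger structures.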

[0061] (2.4.2) Obt...

Embodiment 3

[0070] The behavior recognition method based on trajectory and convolutional neural network feature transformation is the same as in Embodiment 1, with the following refinement.

[0071] The maximum-margin feature transformation method described in step (3) is specifically as follows:

[0072] The local Fisher vectors of each sample in the labeled sample set used for training are sampled, and on the sampled subset $\{\phi_i, y_i\}_{i=1,\ldots,N}$ the projection matrix $U \in \mathbb{R}^{p \times 2Kd}$, $p \ll 2Kd$, is learned with the maximum-margin feature transformation method, where $N$ is the number of local Fisher vectors in the sampled subset.

[0073] Using a one-vs-rest strategy, the multi-class problem over the behavior sample set with B classes is converted into multiple binary classification problems for learning the projection matrix, and the maximum margin is solved in each binary classification problem. The maximum-margin constraint is as follows:

[0074] $y'_i (w U \phi_i + b) > 1, \quad i = 1, \ldots, N$

[0075] where $y'_i \in \{-1, 1\}$ is the class label of the...
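The one-vs-rest relabeling and the margin constraint in [0074] can be sketched numerically. This is a minimal illustration, not the patented learning procedure: it does not learn U, it only evaluates the constraint for given U, w, and b. The helper names `one_vs_rest_labels` and `margin_satisfied` and all toy values are hypothetical, and the vector dimension D stands in for 2Kd.

```python
import numpy as np


def one_vs_rest_labels(labels, positive_class):
    """Map multi-class labels to +1 (positive_class) or -1 (every other class)."""
    return np.where(np.asarray(labels) == positive_class, 1, -1)


def margin_satisfied(U, w, b, phi, y_bin):
    """Check y'_i (w U phi_i + b) > 1 for every row phi_i of phi.

    U: (p, D) projection, w: (p,) classifier weights, b: scalar bias,
    phi: (N, D) local Fisher vectors, y_bin: (N,) labels in {-1, +1}.
    """
    scores = phi @ U.T @ w + b  # w U phi_i + b for each sample
    return y_bin * scores > 1


# Toy data; every value here is hypothetical.
phi = np.array([[2.0, 0.0, 0.0, 1.0],
                [0.0, 2.0, 1.0, 0.0],
                [3.0, 0.0, 0.0, 0.0]])  # N = 3 vectors of dimension D = 4
labels = np.array([0, 1, 0])            # B = 2 behavior classes
U = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]])    # p = 2 rows, so p < D
w = np.array([1.0, -1.0])
b = 0.0

y_bin = one_vs_rest_labels(labels, positive_class=0)
ok = margin_satisfied(U, w, b, phi, y_bin)
print(y_bin, ok)
```

Repeating the relabeling once per class gives B binary problems, each with its own w and b, while the projection U reduces every vector from D to p dimensions before classification.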
