174 results about How to "Improve programming performance" patented technology

Card for a set top terminal

Inactive · US6181335B1 · Speed up search · Improve throughput · Television system details · Pulse modulation television signal transmission · Data signal · User Friendly

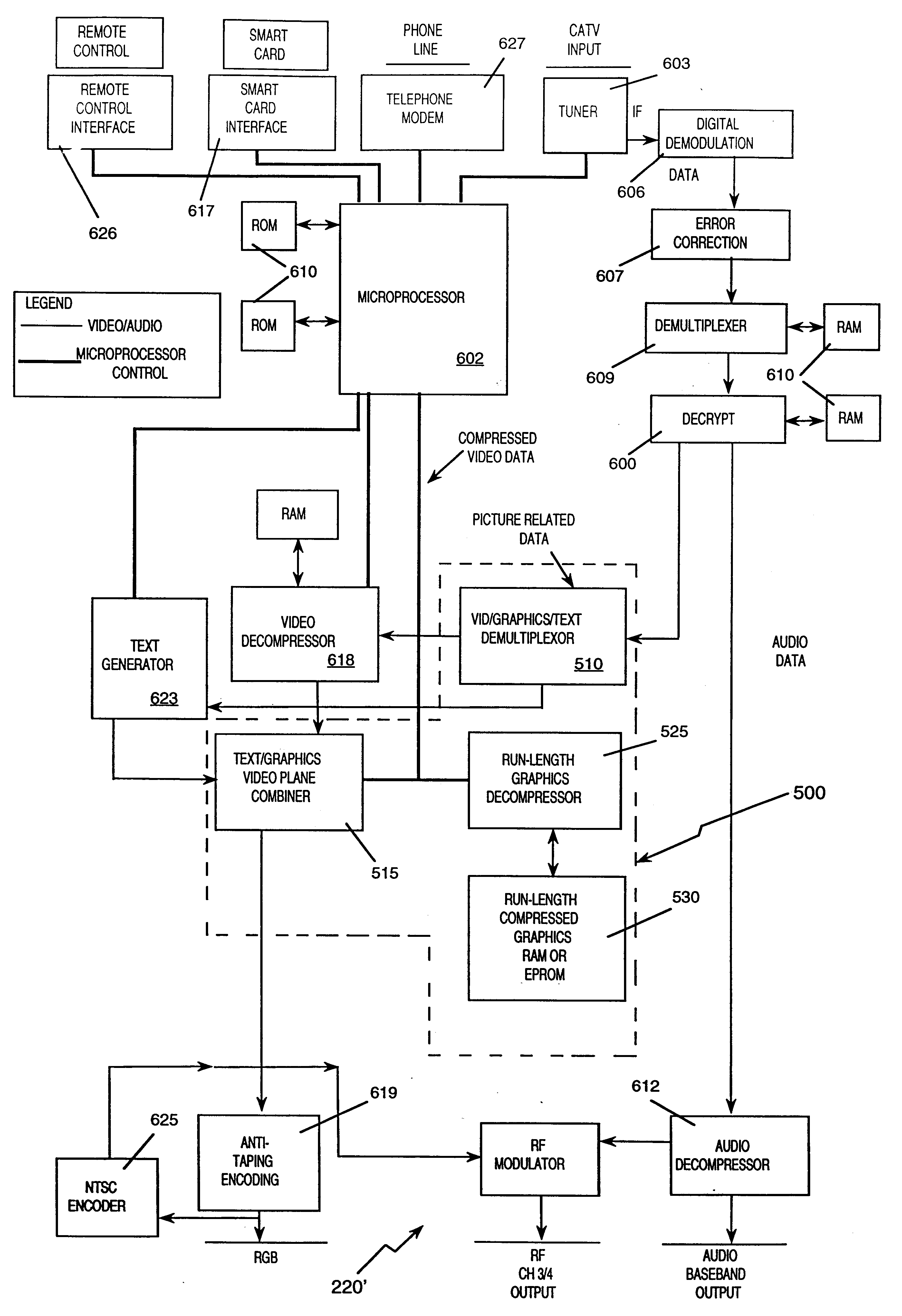

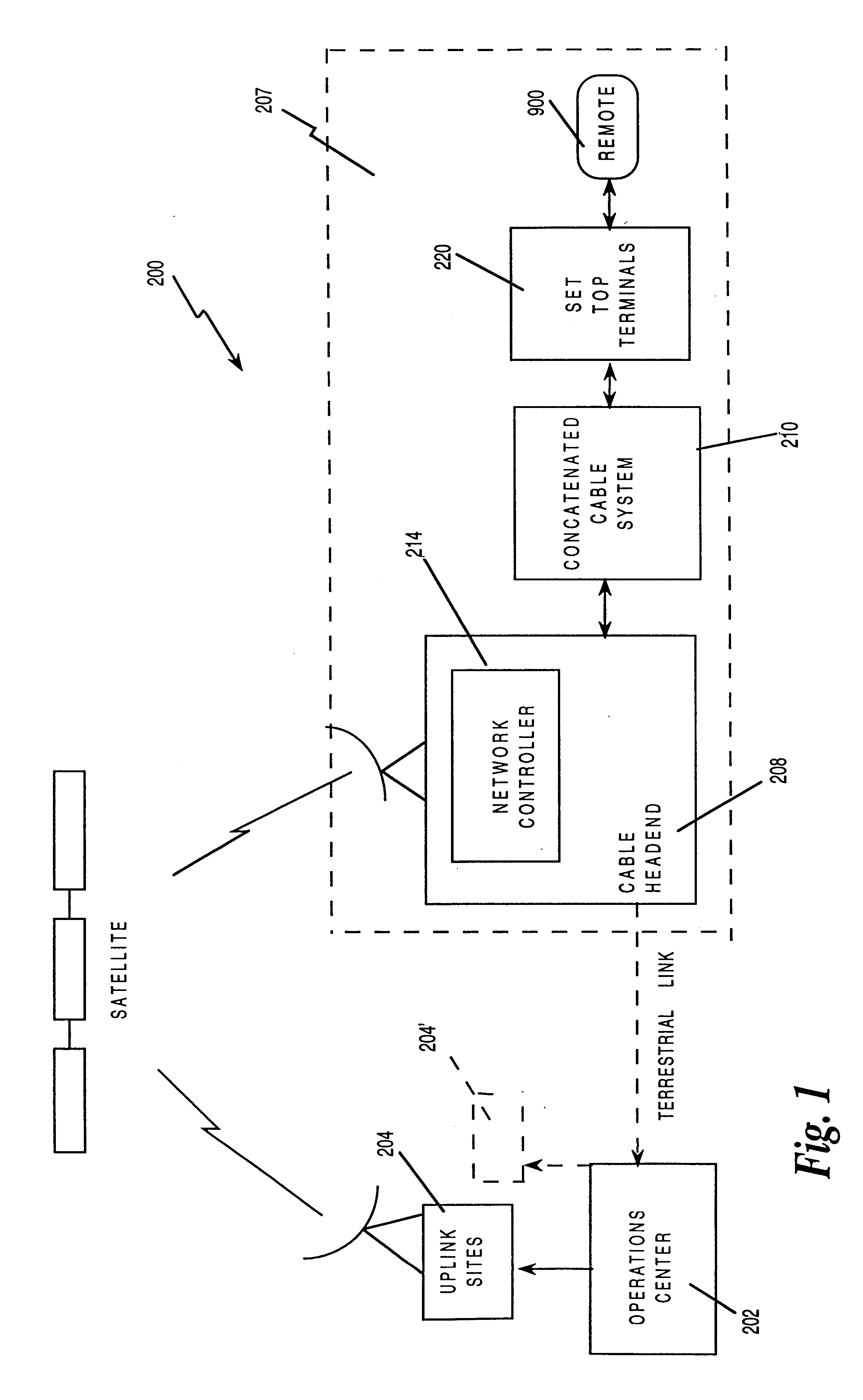

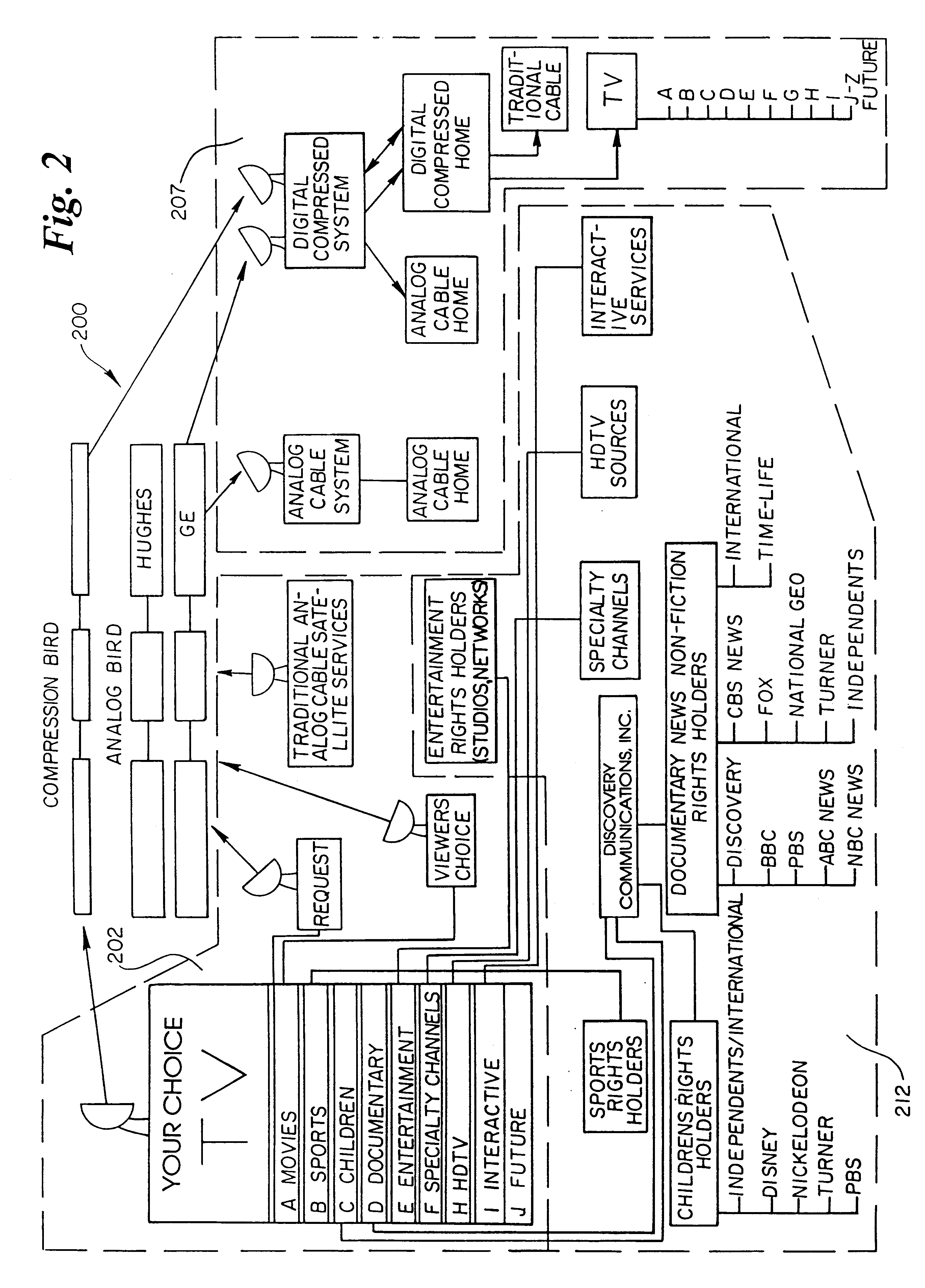

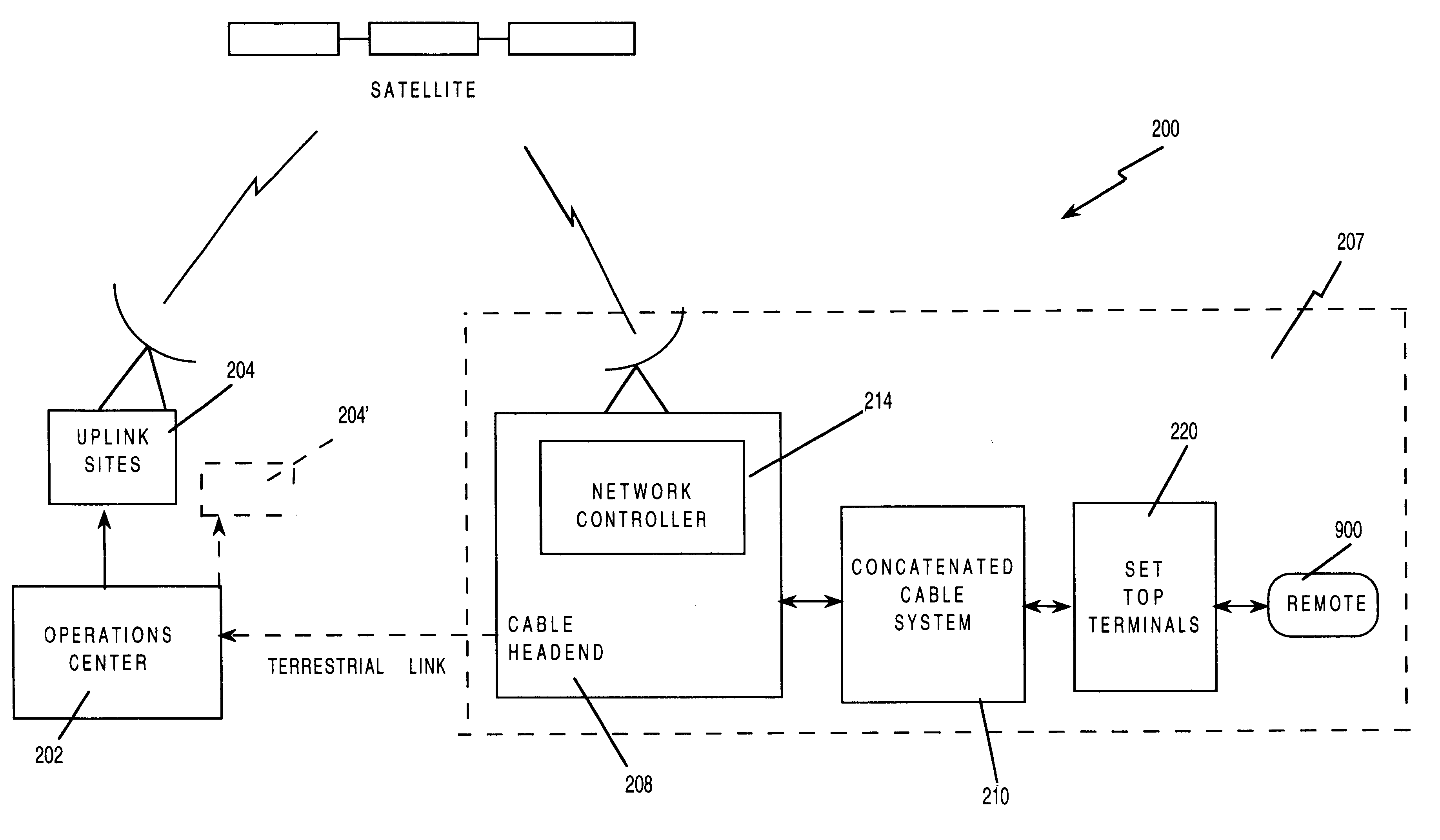

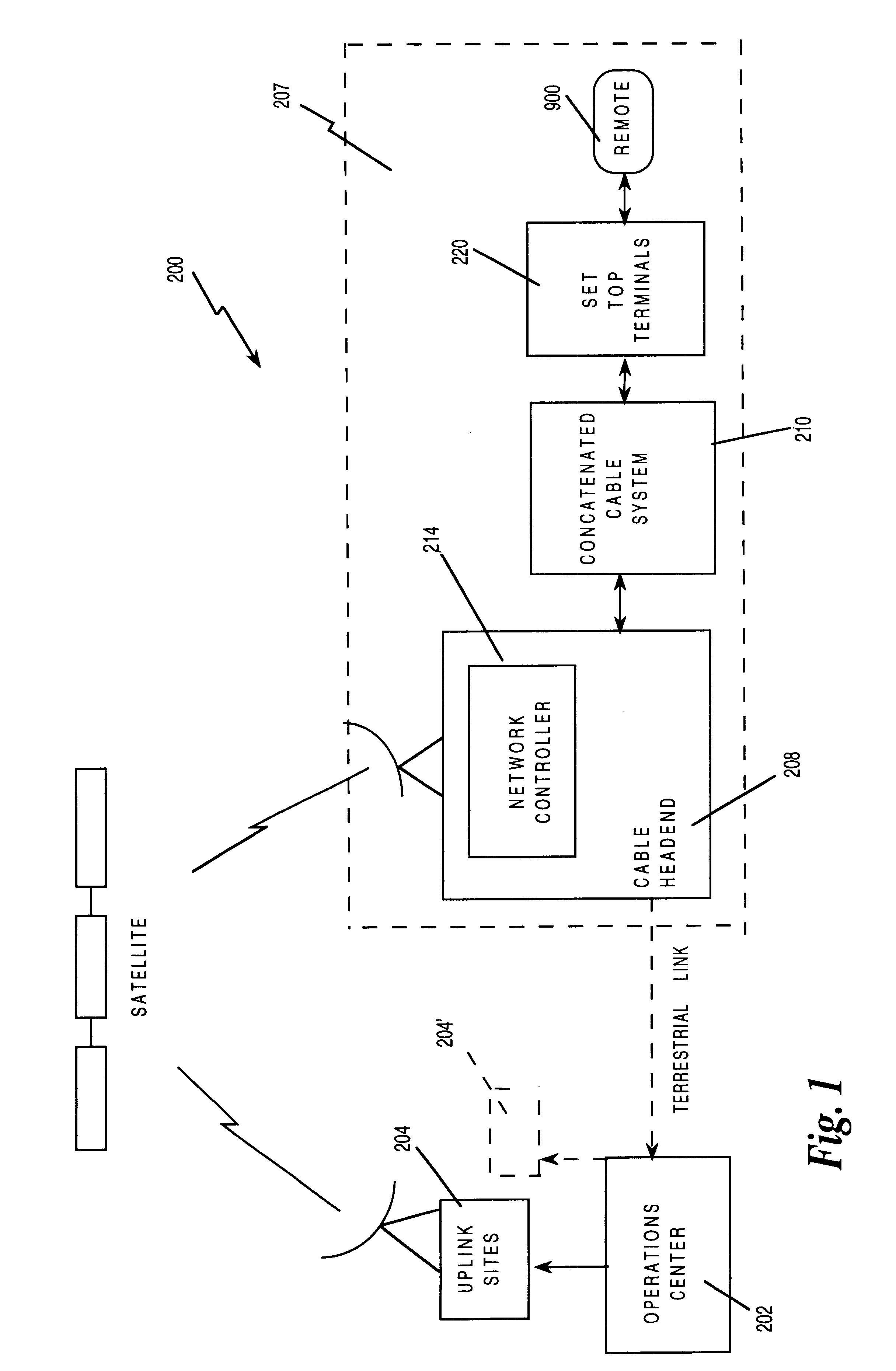

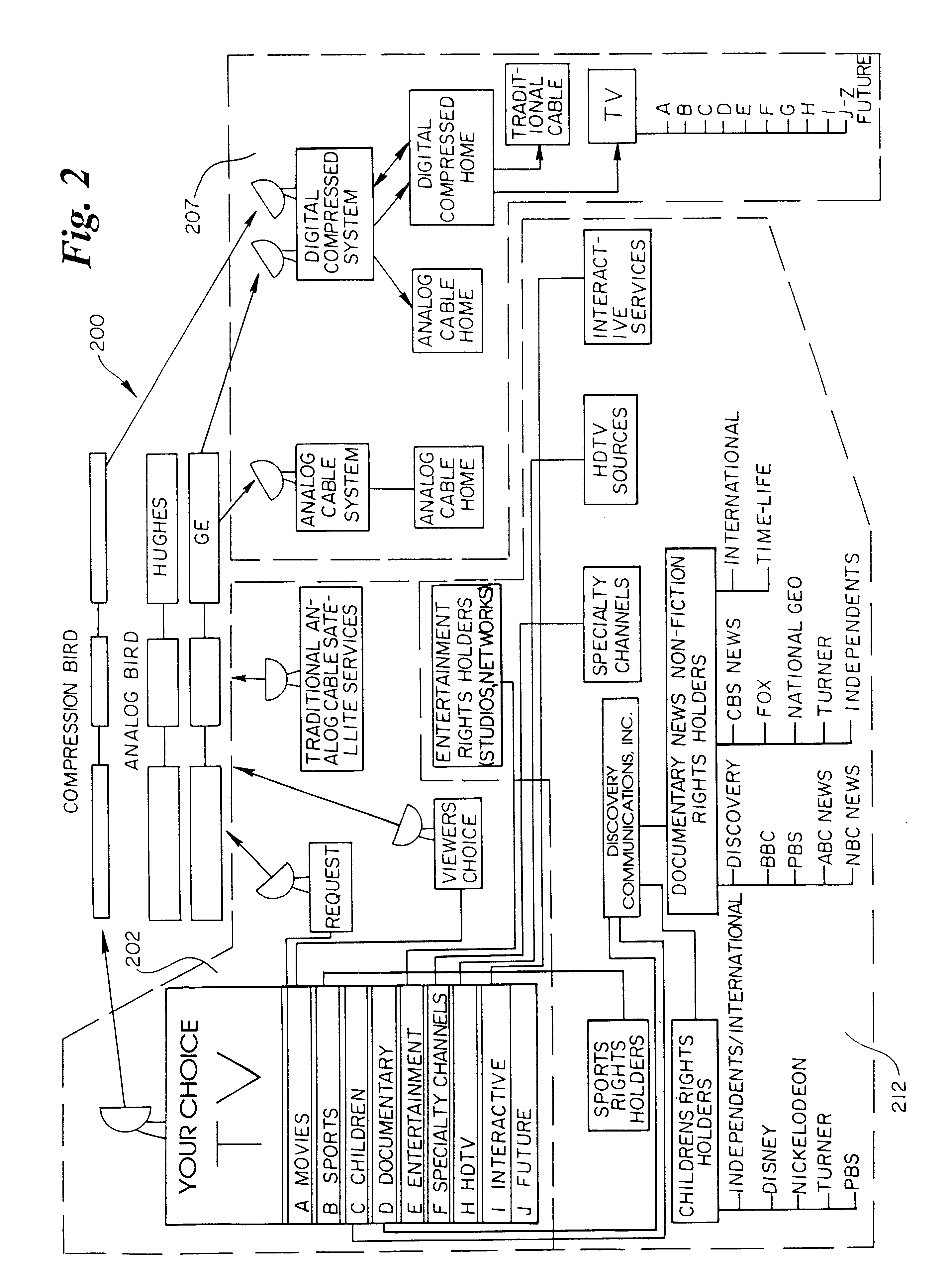

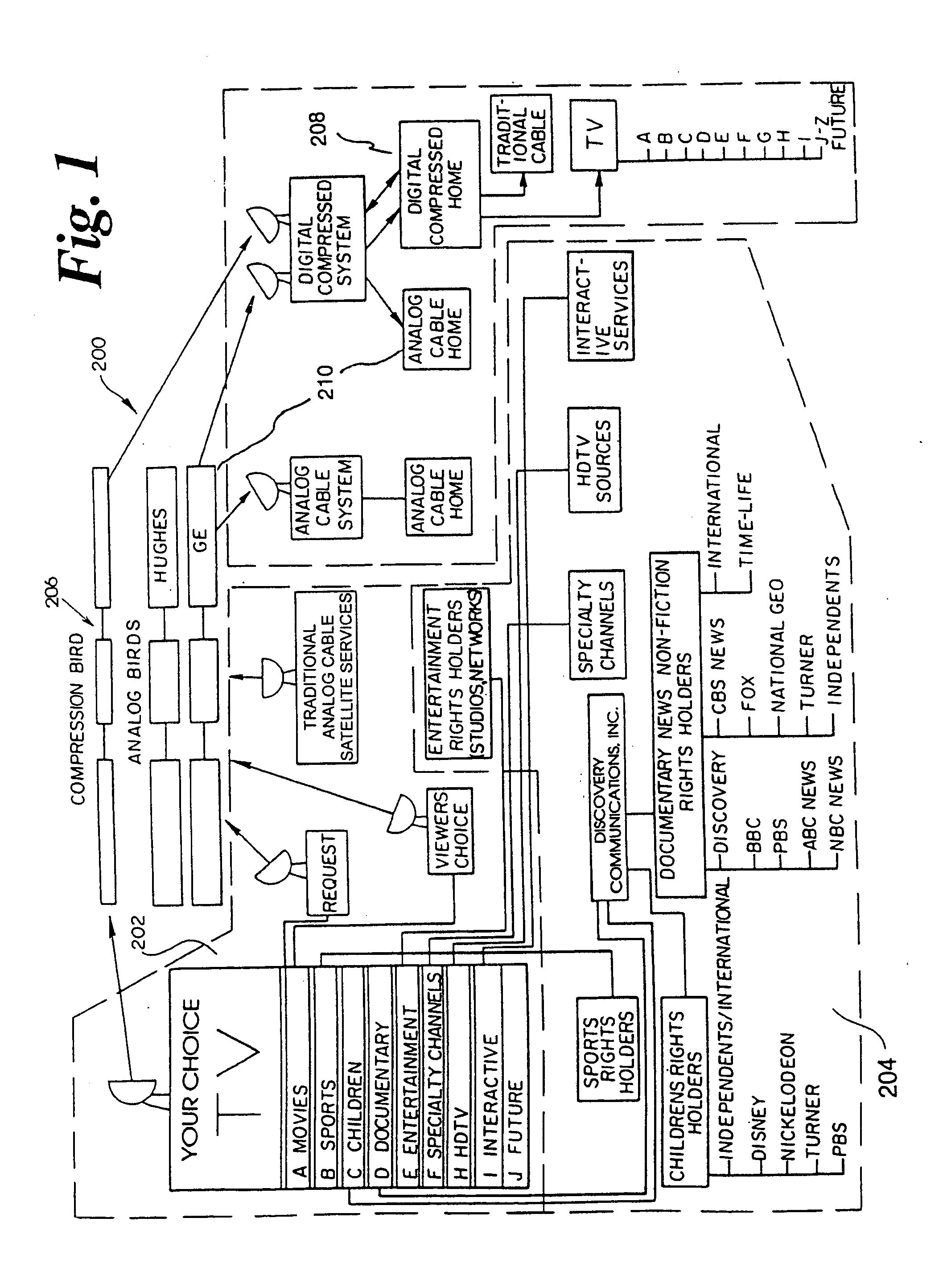

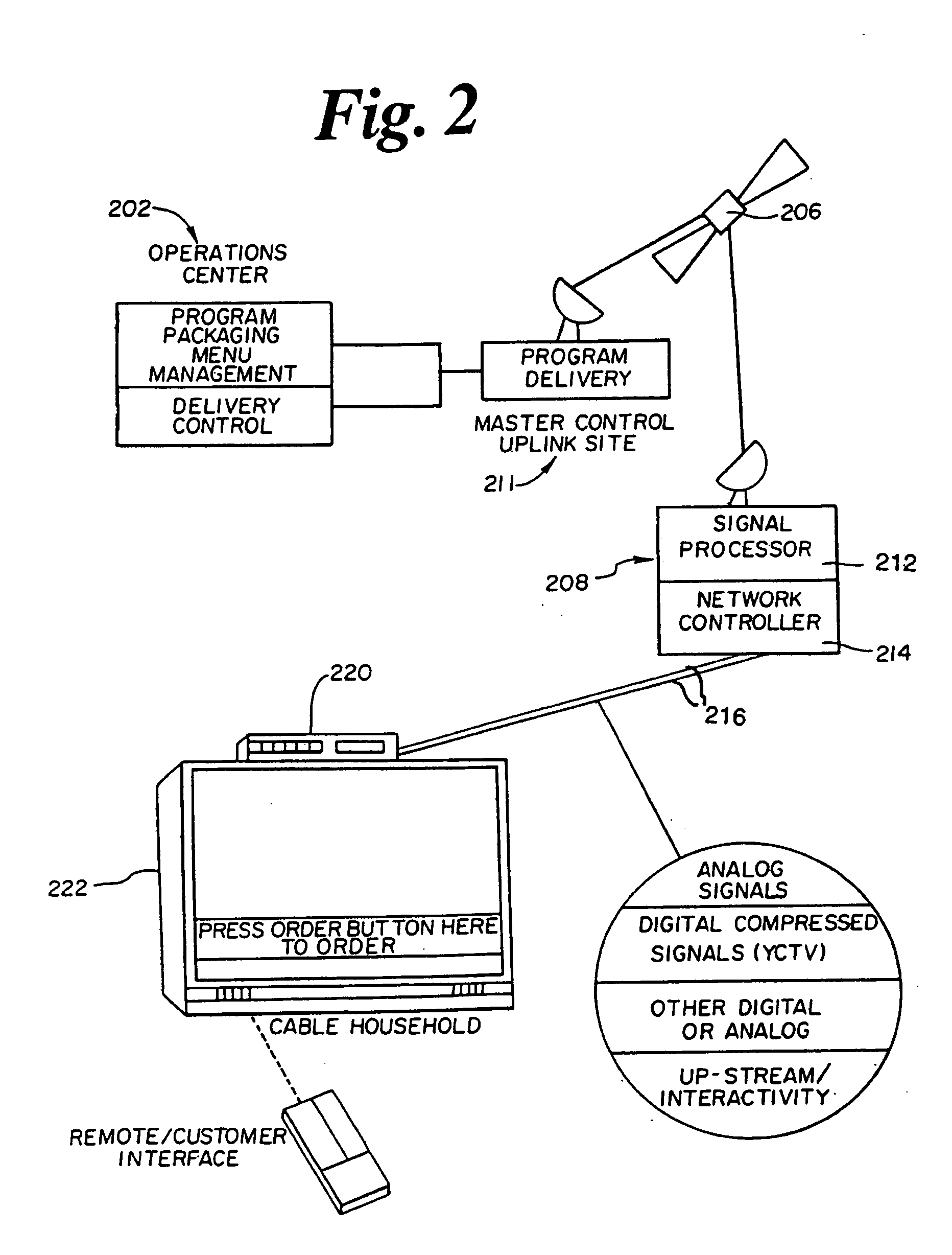

An apparatus for upgrading a viewer interface for a television program delivery system (200) is described. The invention relates to methods and devices for viewer pathways to television programs and services. Specifically, the apparatus involves hardware and software used in conjunction with the interface and a television at the viewer home to create a user friendly menu based approach to accessing programs and services. The apparatus is particularly useful in a program delivery system (200) with hundreds of programs and a data signal carrying program information. The disclosure describes menu generation and menu selection of television programs.

Owner:COMCAST IP HLDG I

Set top terminal that stores programs locally and generates menus

Inactive · US6828993B1 · Improve throughput · Improve programming performance · Television system details · Pulse modulation television signal transmission · Data signal · Computer science

A viewer interface for a television program delivery system is described. The innovation relates to methods and devices for viewer pathways to television programs. Specifically, the interface involves hardware and software used in conjunction with a television at the viewer home to create a user friendly menu based approach to television program access. The device is particularly useful in a program delivery system with hundreds of programs and a data signal carrying program information. The disclosure describes menu generation and menu selection of television programs.

Owner:COMCAST IP HLDG I

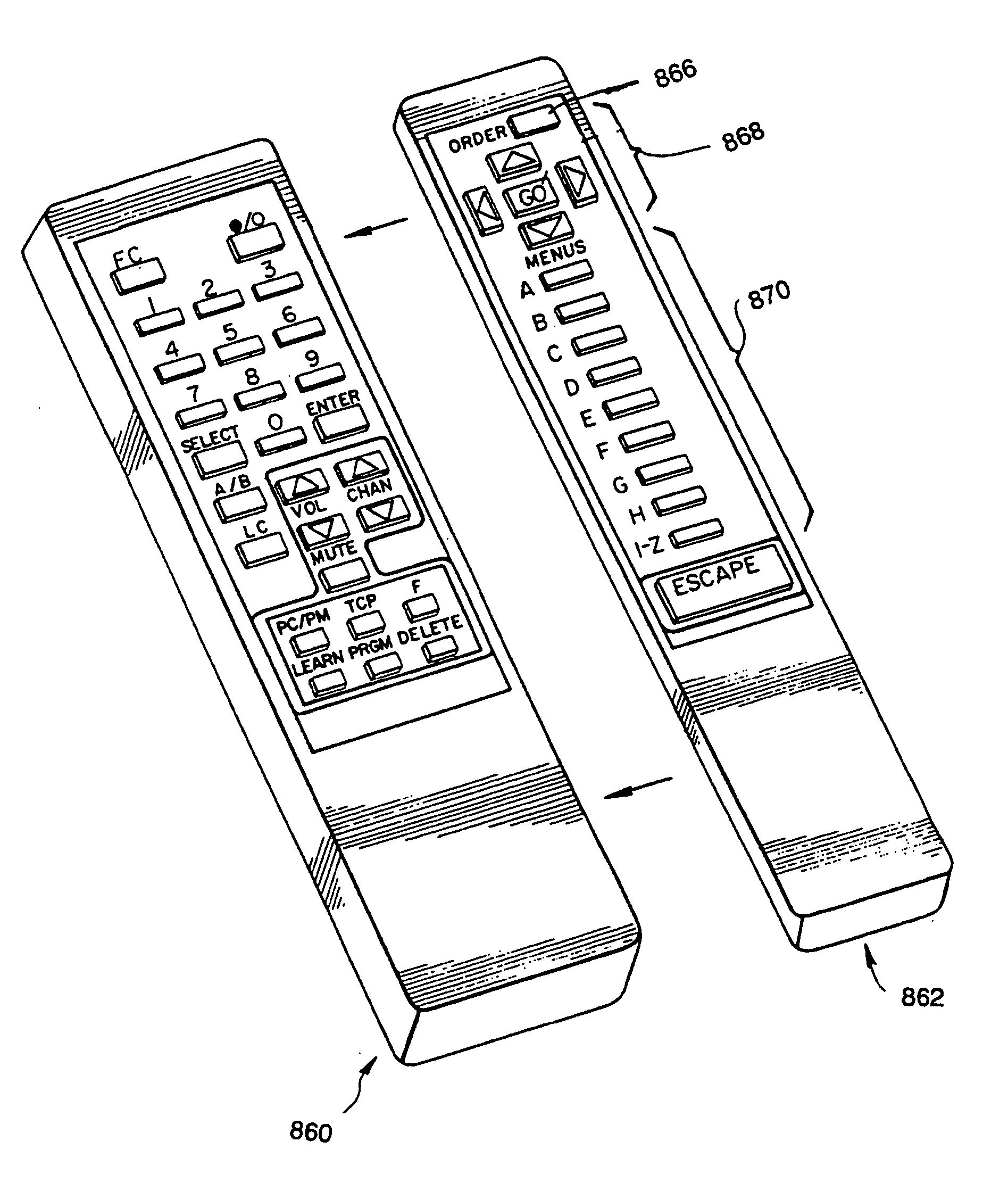

Remote control for menu driven subscriber access to television programming

Inactive · US20050157217A1 · Improve throughput · Improve programming performance · Pulse modulation television signal transmission · Broadcast information characterisation · Multiplexing · Remote control

An expanded television program delivery system is disclosed which allows viewers to select television and audio program choices from a series of menus. Menus are partially stored in a set-top terminal in each subscriber's home. The menus may be reprogrammed by signals sent from a headend or from a central operations center. The system allows for a great number of television signals to be transmitted by using digital compression techniques. An operations center with computer-assisted packaging allows various television, audio and data signals to be combined, compressed and multiplexed into signals transmitted on various channels to a cable headend which distributes the signals to individual set-top terminals. Various types of menus may be used and the menus may incorporate information included within the video / data signal received by the set-top terminal. A remote control unit with icon buttons allows a subscriber to select programs based upon a series of major menus, submenus, and during program menus. Various billing and statistics gathering methods for the program delivery system are also disclosed.

Owner:COMCAST IP HLDG I

Data center network architecture

Active · US20130108263A1 · Enhance performance · Efficiently allocate bandwidth · Data switching by path configuration · Optical multiplex · Computational resource · Distributed computing

Data center network architectures that can reduce the cost and complexity of data center networks. The data center network architectures can employ optical network topologies and optical nodes to efficiently allocate bandwidth within the data center networks, while reducing the physical interconnectivity requirements of the data center networks. The data center network architectures also allow computing resources within data center networks to be controlled and provisioned based at least in part on a combined network topology and application component topology, thereby enhancing overall application program performance.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

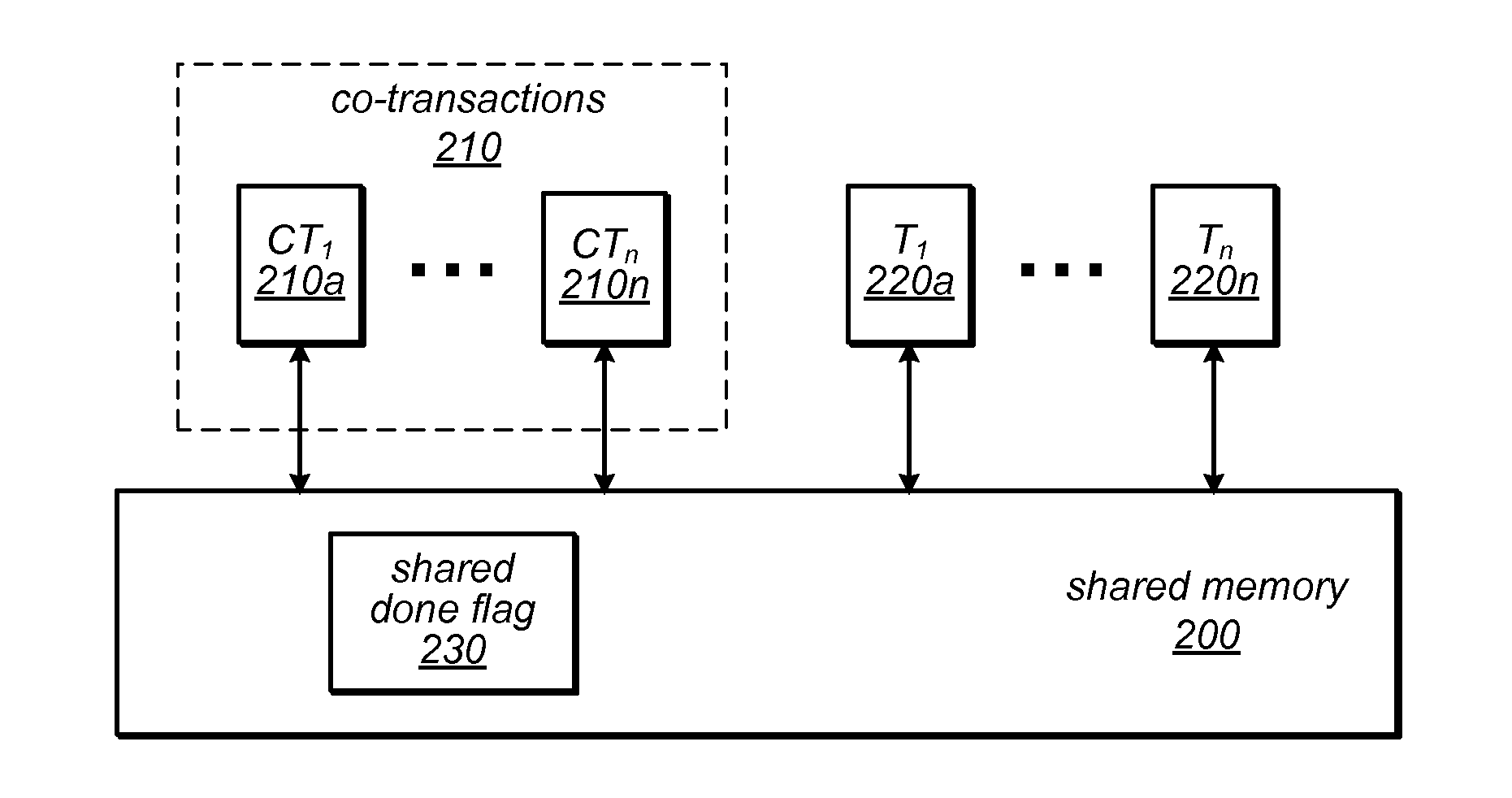

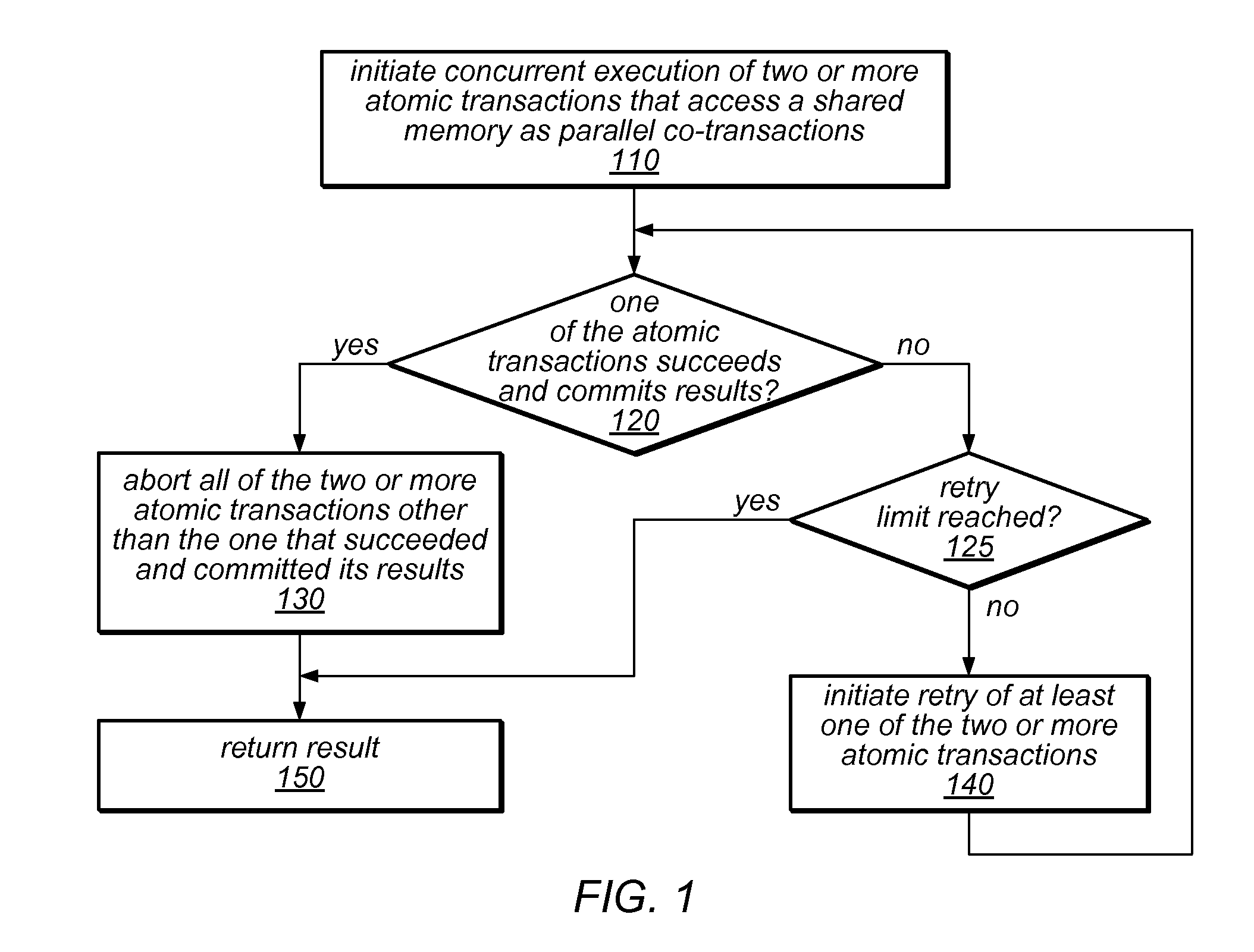

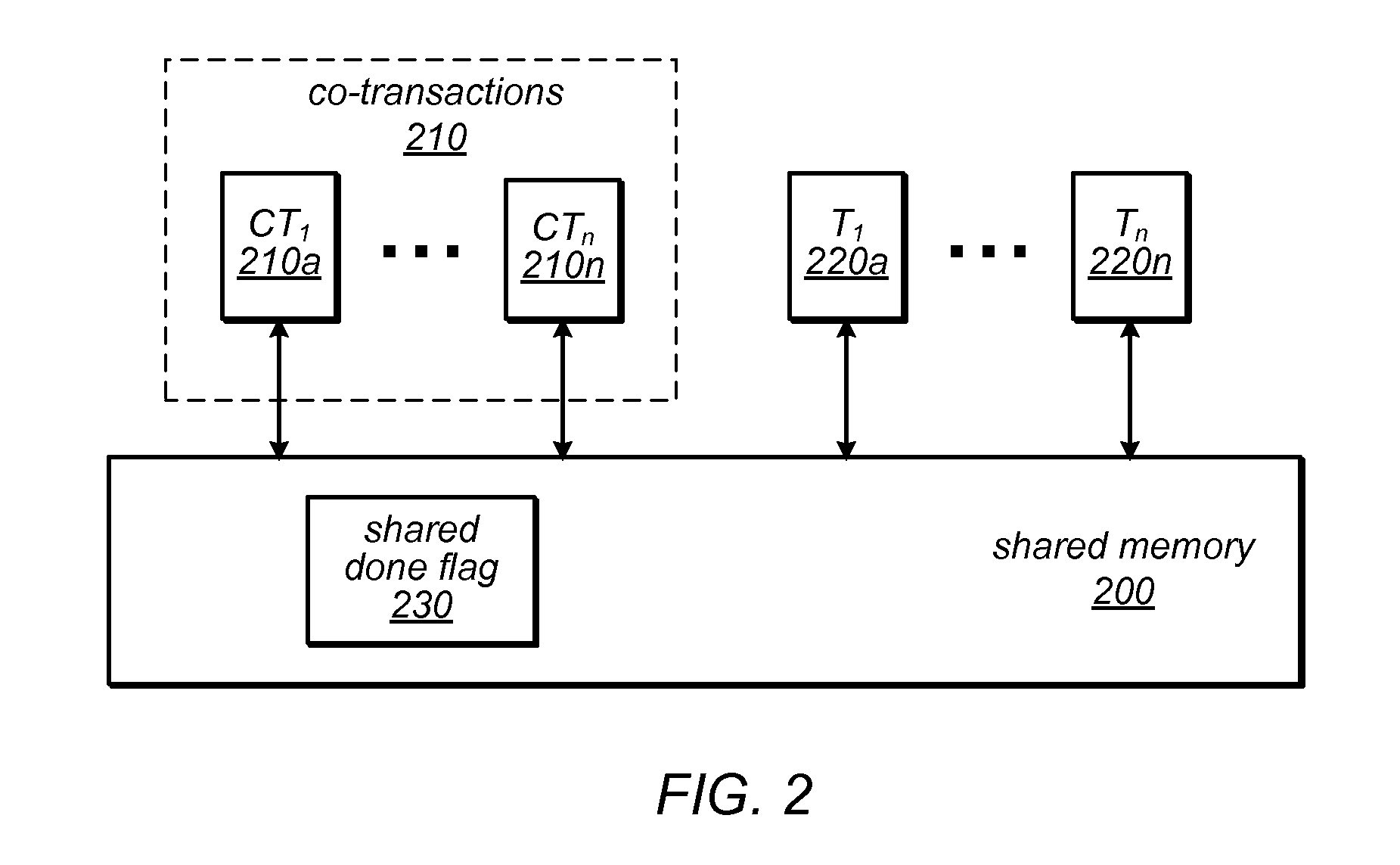

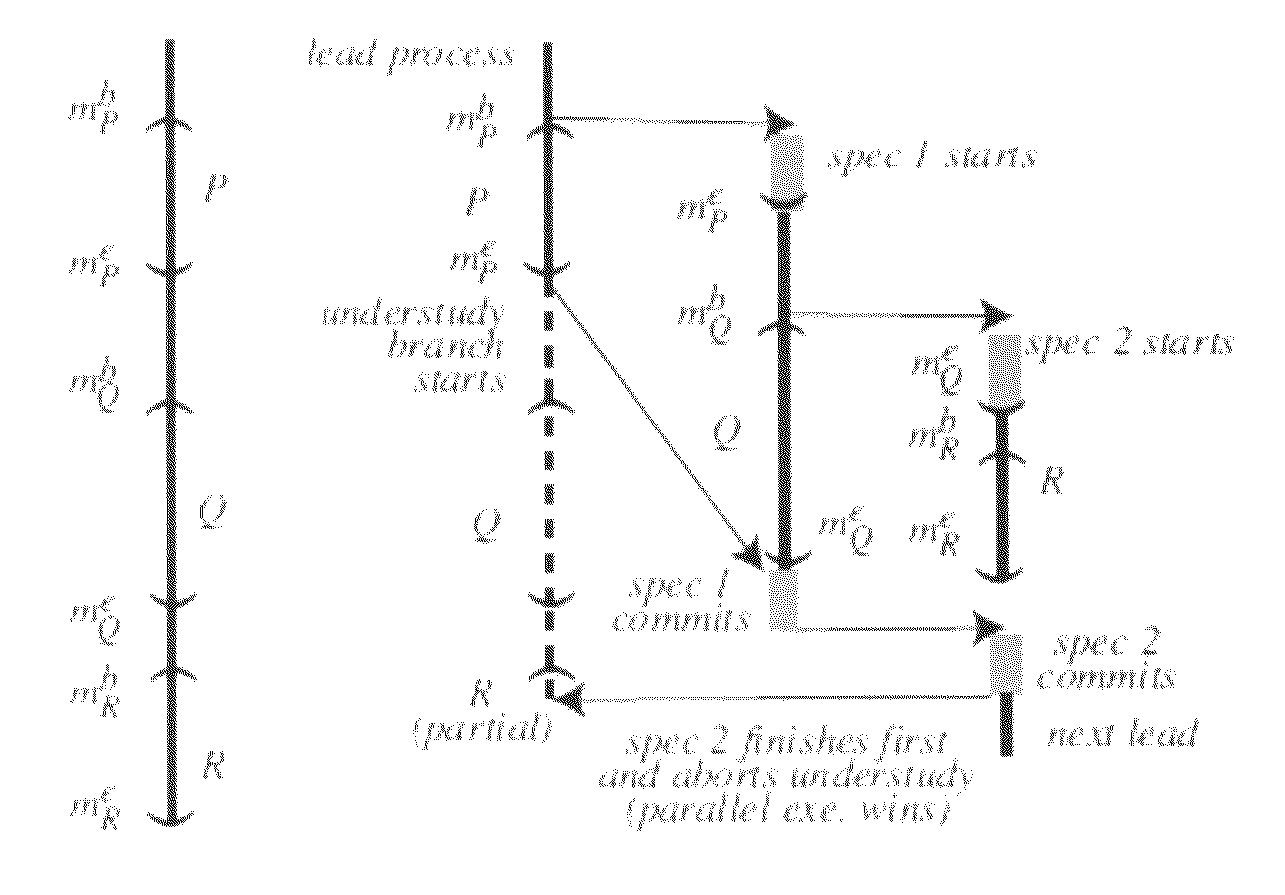

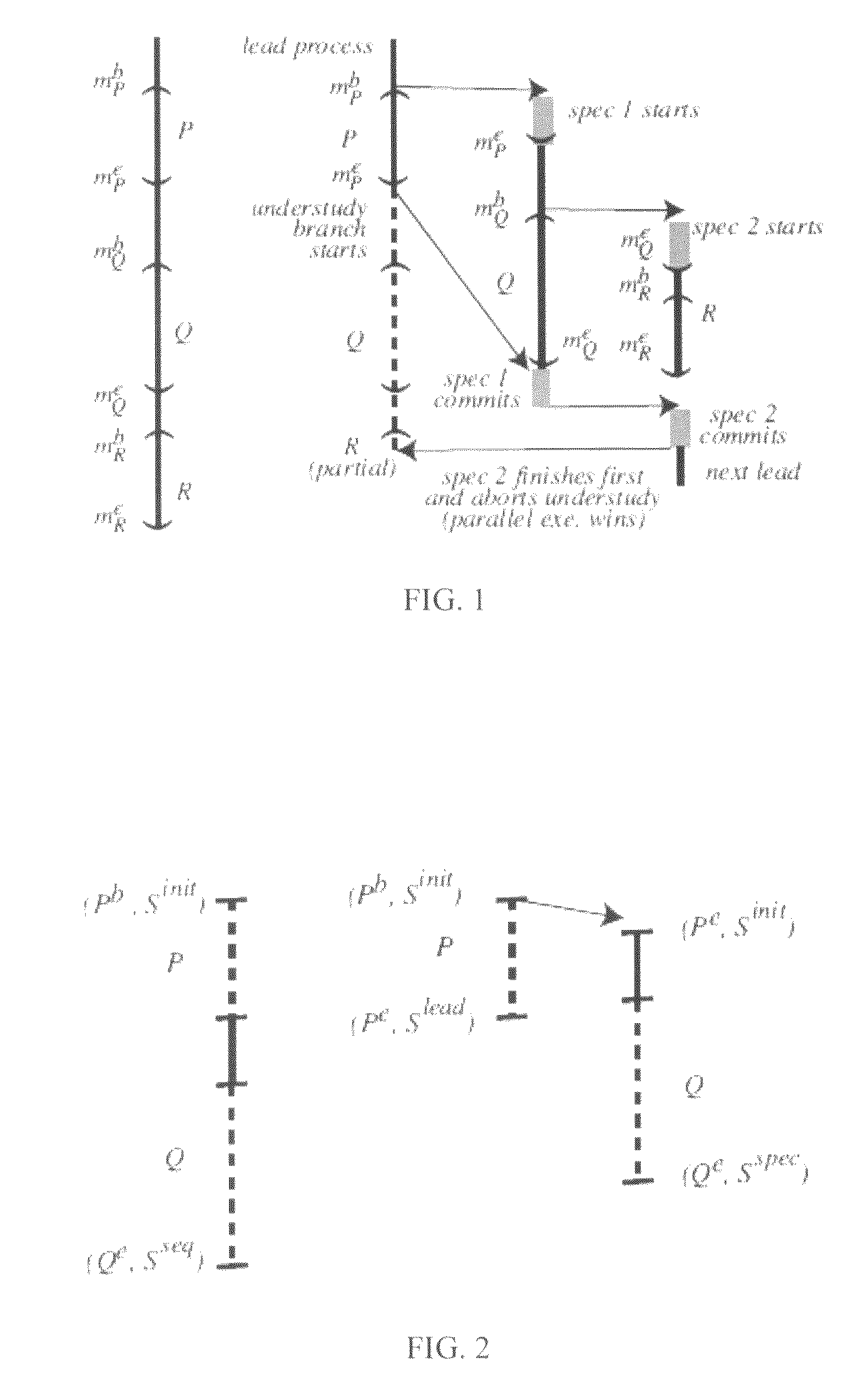

System and Method for Executing a Transaction Using Parallel Co-Transactions

Active · US20110246993A1 · Improve programming performance · Simple and efficient implementation · Memory systems · Transaction processing · Transactional memory · Shared memory

The transactional memory system described herein may implement parallel co-transactions that access a shared memory such that at most one of the co-transactions in a set will succeed and all others will fail (e.g., be aborted). Co-transactions may improve the performance of programs that use transactional memory by attempting to perform the same high-level operation using multiple algorithmic approaches, transactional memory implementations and / or speculation options in parallel, and allowing only the first to complete to commit its results. If none of the co-transactions succeeds, one or more may be retried, possibly using a different approach and / or transactional memory implementation. The at-most-one property may be managed through the use of a shared “done” flag. Conflicts between co-transactions in a set and accesses made by transactions or activities outside the set may be managed using lazy write ownership acquisition and / or a priority-based approach. Each co-transaction may execute on a different processor resource.

Owner:ORACLE INT CORP
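
The at-most-one property described in this abstract can be illustrated with a small sketch. It is not the patented implementation: Python threads stand in for processor resources, a `threading.Event` guarded by a lock stands in for the shared "done" flag, and the names `run_co_transactions`, `worker` and `approaches` are hypothetical.

```python
import threading

def run_co_transactions(approaches):
    """Run every approach in its own thread; at most one commits its result."""
    done = threading.Event()        # stand-in for the shared "done" flag
    commit_lock = threading.Lock()  # makes the check-and-set of the flag atomic
    committed = []                  # will hold at most one (name, result) pair

    def worker(name, fn):
        result = fn()               # speculative work, not yet visible to others
        with commit_lock:
            if done.is_set():
                return              # another co-transaction already won: abort
            done.set()
            committed.append((name, result))

    threads = [threading.Thread(target=worker, args=(n, f)) for n, f in approaches]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return committed[0] if committed else None

# Two hypothetical algorithmic approaches to the same high-level operation.
winner = run_co_transactions([
    ("loop", lambda: sum(range(10))),
    ("closed_form", lambda: 9 * 10 // 2),
])
```

Which approach wins depends on thread scheduling, but both compute the same value, so the committed result is the same either way.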

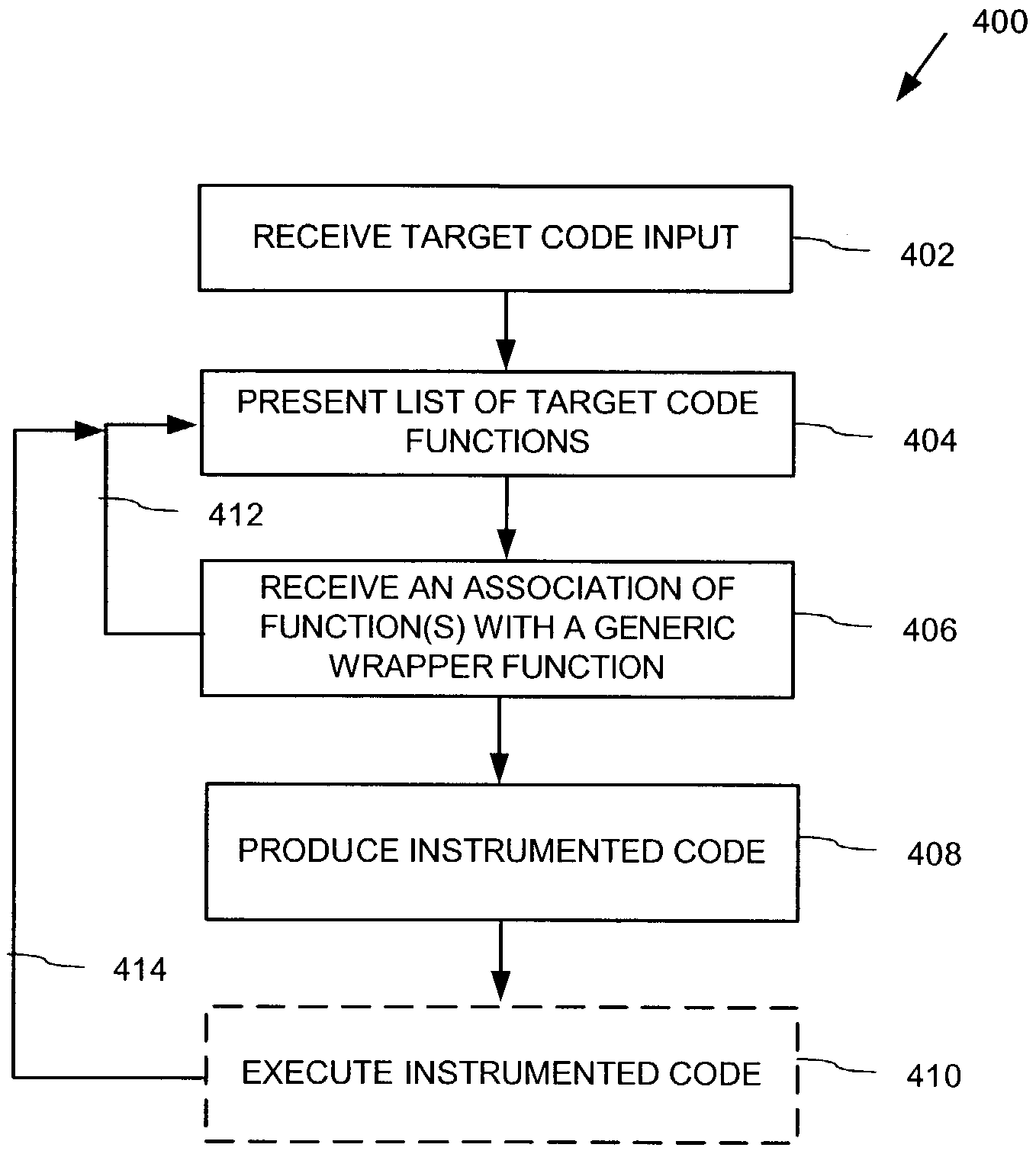

Generic wrapper scheme

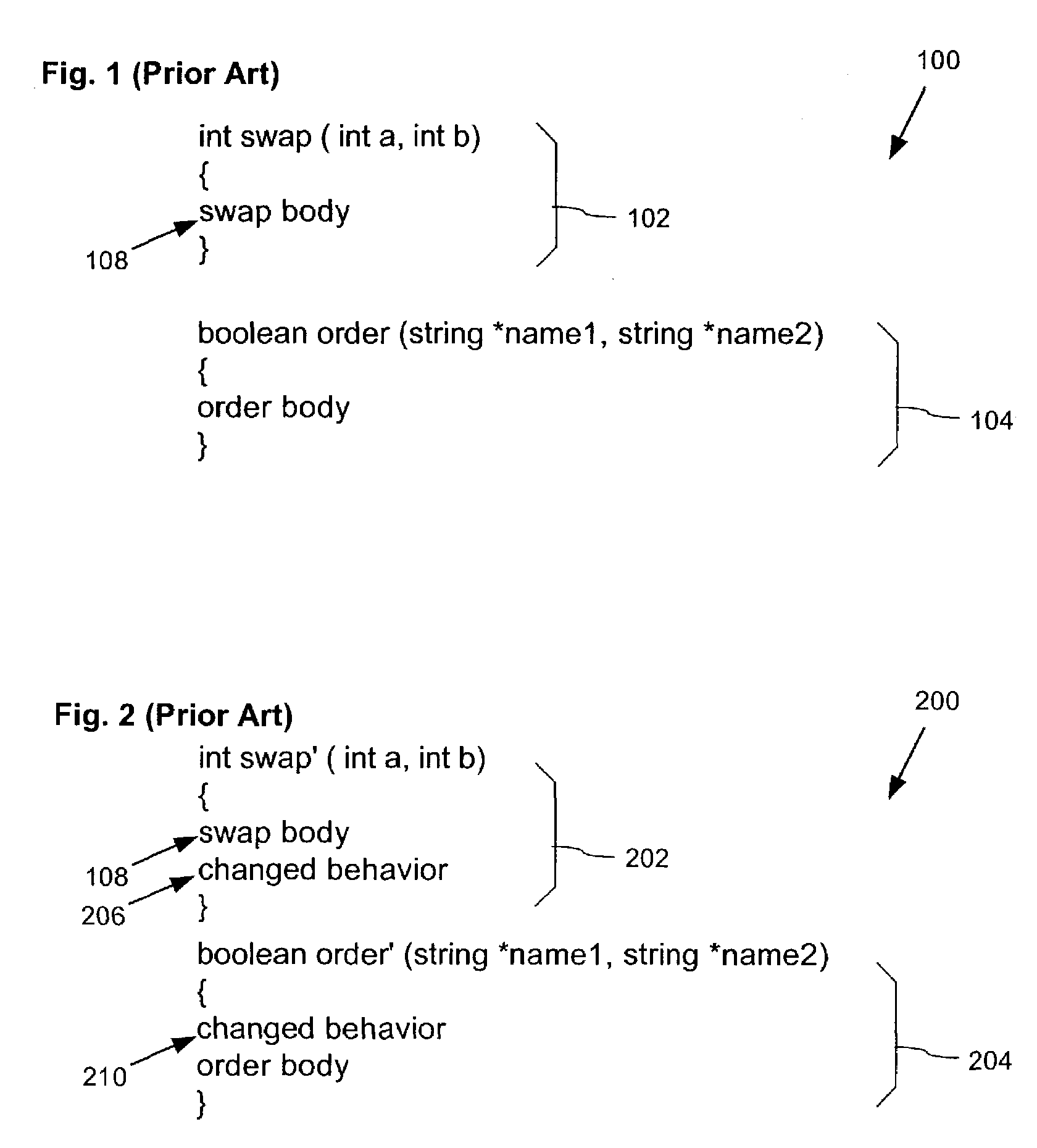

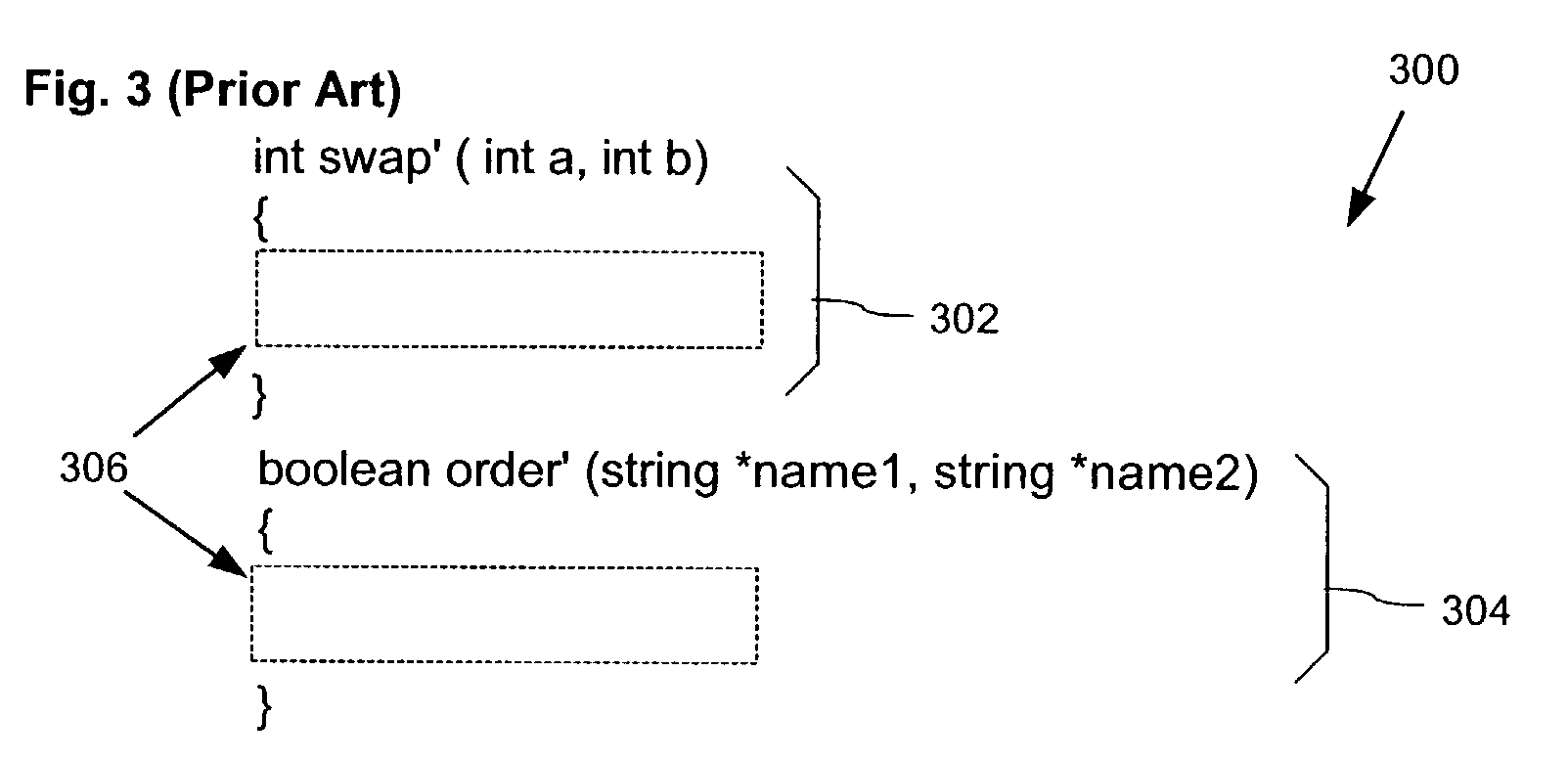

Inactive · US7353501B2 · Improve programming performance · Behavior change · Digital computer details · Software testing/debugging · Instrument function · Parallel computing

A method instruments a function in an executable file so that the instrumented function calls a generic preprocessor prior to execution of the body of the function. After the preprocessor modifies the original function's incoming parameters, the body of the function itself is executed. Finally, execution is directed to a generic postprocessor prior to returning from the function. The postprocessor modifies the outgoing parameters and return value. In one implementation, the parameters of an instrumented function are described and packaged into a descriptor data structure. The descriptor data structure is passed to the generic preprocessor and postprocessor. A generic processor uses the descriptor to select changed behaviors based on the calling context.

Owner:MICROSOFT TECH LICENSING LLC
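
The preprocessor / body / postprocessor flow in this abstract resembles a function decorator. The sketch below is only an analogy at the source level (the patent instruments an executable file); the descriptor layout and the names `instrument`, `generic_pre` and `generic_post` are assumptions, and it only handles functions whose parameters can all be passed by keyword.

```python
import functools
import inspect

def generic_pre(desc):
    # Hypothetical behavior change, selected by calling context (function name):
    # cap the incoming "factor" parameter of scale() at 10.
    if desc["name"] == "scale":
        desc["args"]["factor"] = min(desc["args"]["factor"], 10)

def generic_post(desc):
    # Hypothetical behavior change on the way out: round the return value.
    if desc["name"] == "scale":
        desc["result"] = round(desc["result"], 2)

def instrument(fn):
    sig = inspect.signature(fn)

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        bound = sig.bind(*args, **kwargs)
        bound.apply_defaults()
        # Package the call into a descriptor shared by both generic processors.
        desc = {"name": fn.__name__, "args": dict(bound.arguments), "result": None}
        generic_pre(desc)                     # may modify incoming parameters
        desc["result"] = fn(**desc["args"])   # body of the original function
        generic_post(desc)                    # may modify the return value
        return desc["result"]

    return wrapper

@instrument
def scale(value, factor=2):
    return value * factor
```

With these hypothetical rules, `scale(3, 100)` runs the body with `factor` capped at 10, while `scale(3)` passes through unchanged.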

Integrated circuit card programming modules, systems and methods

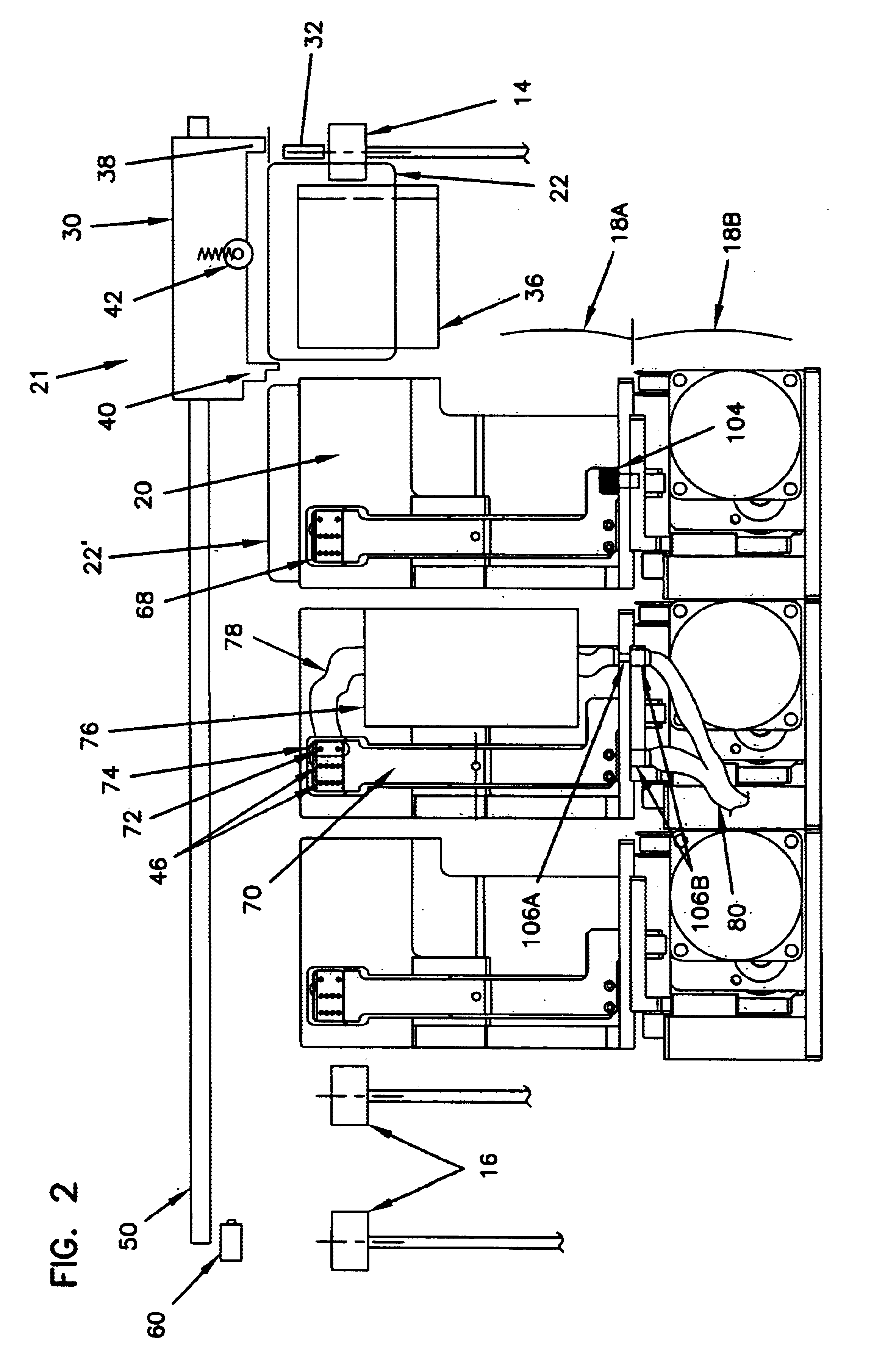

Inactive · US6695205B1 · Improve programming performance · Space minimization · Digitally marking record carriers · Other printing matter · Computer module · Modularity

The invention relates to the programming of integrated circuit cards. More particularly, the invention concerns systems and methods for integrated circuit card programming, as well as modules used for integrated circuit card programming. Each module includes a movable cassette mechanism having a plurality of card programming stations thereon. The use of multiple card programming stations permits simultaneous programming of a plurality of cards. Further, the use of a single cassette in the module permits the size of the module to be reduced significantly. A modular concept is more readily adaptable to customer needs and requirements. For instance, if a customer requires more card production than that provided by a single module, a second module that is identical to the first module can be connected to the first module so that the two modules working together provide two cassettes. Additional modules can be added to further increase card production capacity.

Owner:ENTRUST CORP

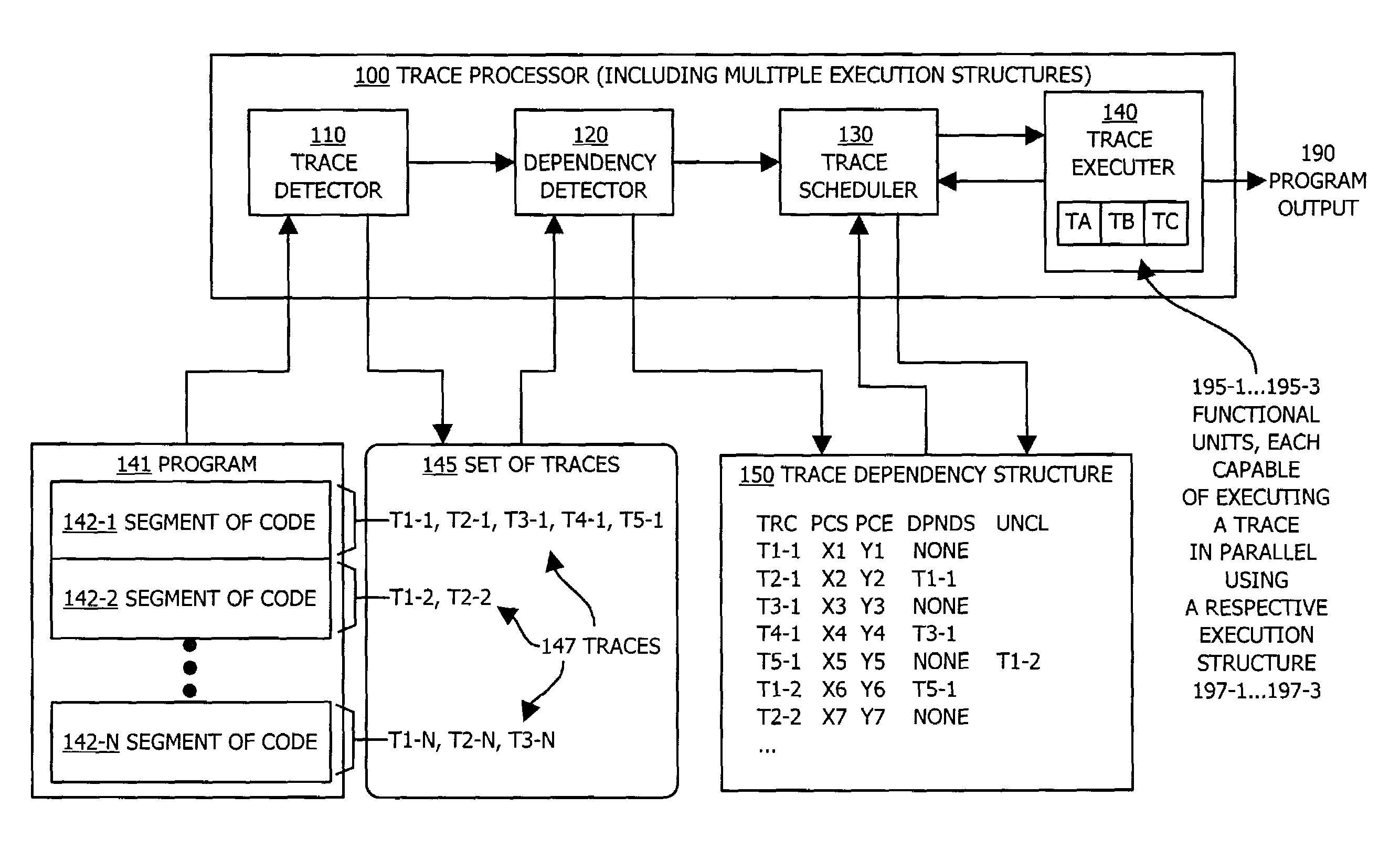

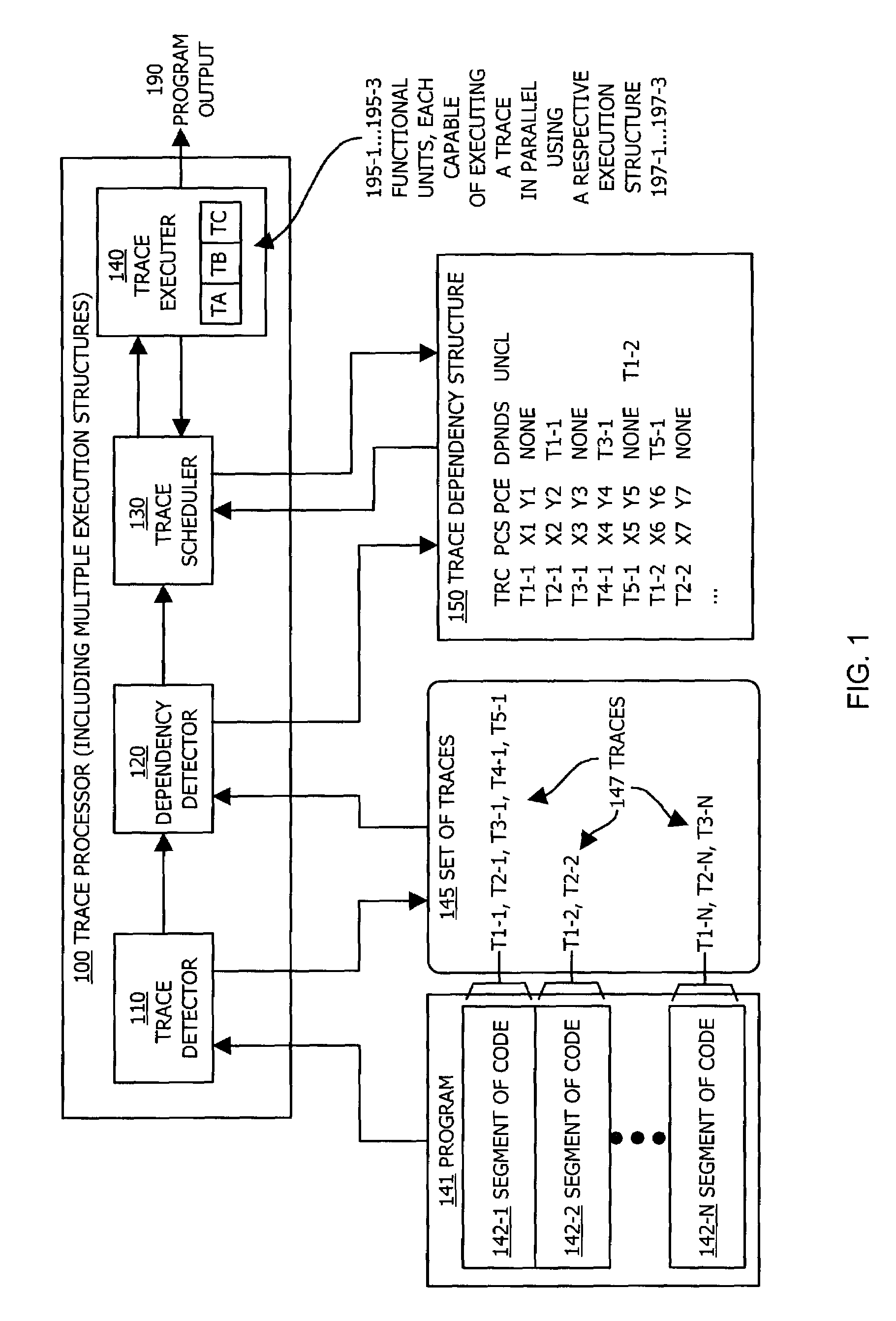

Methods and apparatus of an architecture supporting execution of instructions in parallel

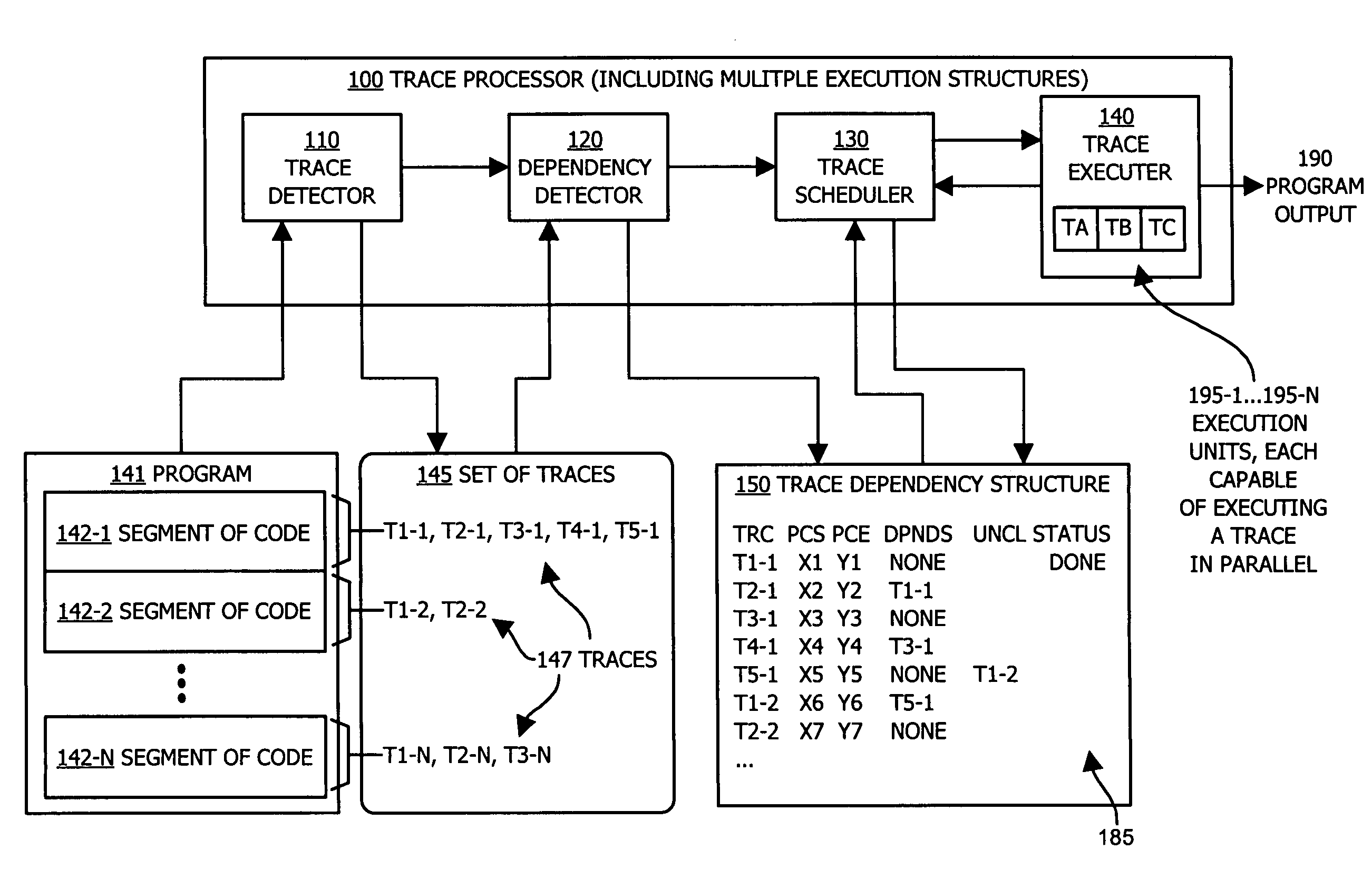

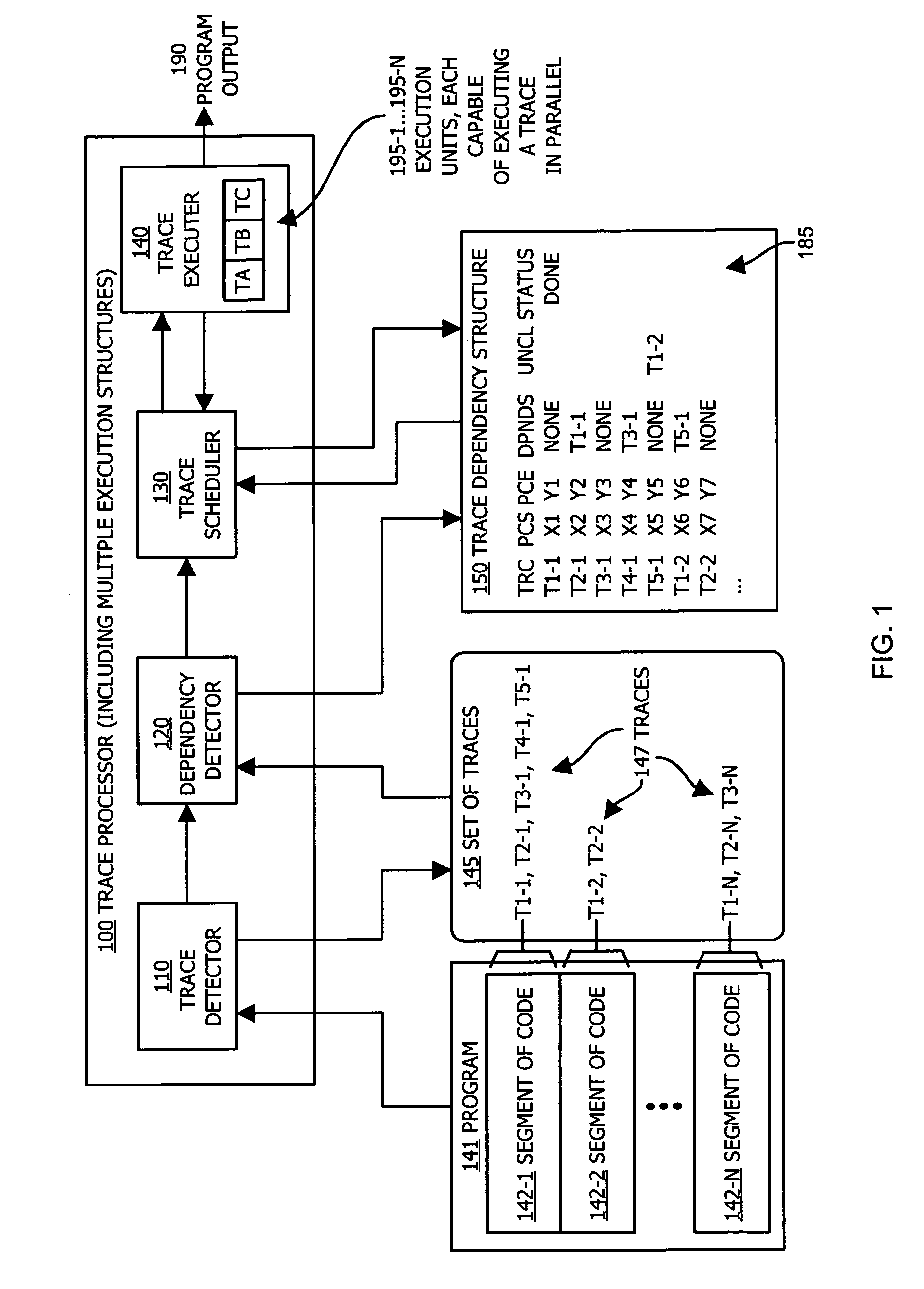

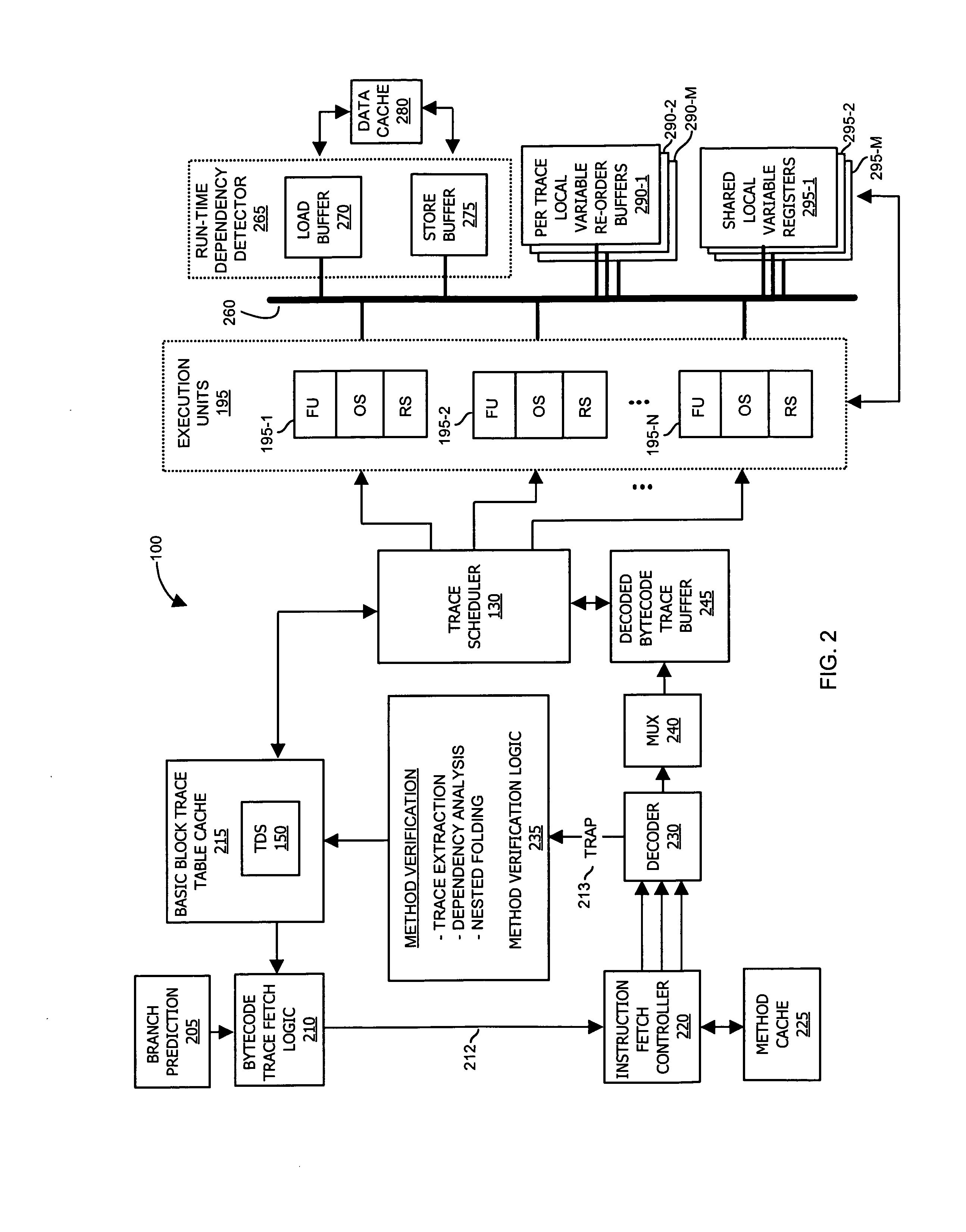

Active · US7600221B1 · Improve execution speed · Improve execution time · Digital computer details · Specific program execution arrangements · Operand · Running time

A processing architecture supports executing instructions in parallel after identifying at least one level of dependency associated with a set of traces within a segment of code. Each trace represents a sequence of logical instructions within the segment of code that can be executed in a corresponding operand stack. Scheduling information is generated based on a dependency order identified among the set of traces. Thus, multiple traces may be scheduled for parallel execution unless a dependency order indicates that a second trace is dependent upon a first trace. In this instance, the first trace is executed prior to the second trace. Trace dependencies may be identified at run-time as well as prior to execution of traces in parallel. Results associated with execution of a trace are stored in a temporary buffer (instead of memory) until after it is known that a data dependency was not detected at run-time.

Owner:ORACLE INT CORP
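
Scheduling traces by dependency order, as this abstract describes, can be sketched as grouping traces into levels: every trace in a level depends only on traces in earlier levels, so the traces within one level may run in parallel. The function name `schedule_levels` and the dictionary input format are assumptions, and every trace named as a dependency is assumed to appear as a key.

```python
def schedule_levels(deps):
    """deps maps each trace to the set of traces it depends on.

    Returns a list of levels; traces in the same level have no mutual
    dependency and could be executed in parallel.
    """
    remaining = {trace: set(d) for trace, d in deps.items()}
    levels = []
    while remaining:
        ready = sorted(t for t, d in remaining.items() if not d)
        if not ready:
            raise ValueError("cyclic dependency among traces")
        levels.append(ready)
        for t in ready:
            del remaining[t]
        for d in remaining.values():
            d.difference_update(ready)   # these dependencies are now satisfied
    return levels

# Hypothetical trace set: T2 depends on T1, T4 depends on T2 and T3.
levels = schedule_levels({
    "T1": set(),
    "T2": {"T1"},
    "T3": set(),
    "T4": {"T2", "T3"},
})
```

Here `T1` and `T3` form the first level, `T2` the second and `T4` the third, so a first trace always completes before any trace that depends on it.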

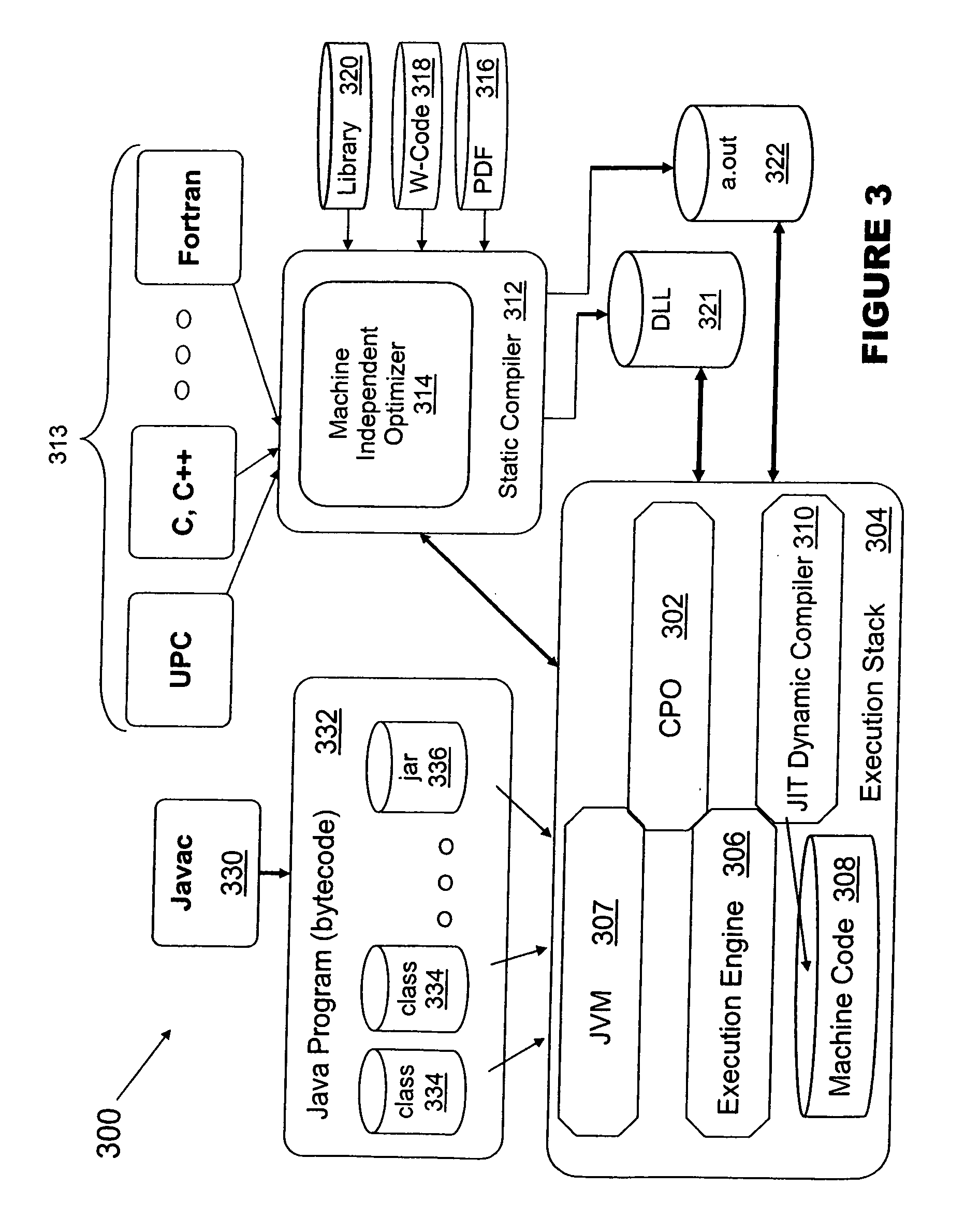

Methods and apparatus for executing instructions in parallel

Active · US7210127B1 · Eliminate operation · Limited ability · Specific program execution arrangements · Memory systems · Parallel computing · Java bytecode

A system, method and apparatus for executing instructions in parallel identify a set of traces within a segment of code, such as Java bytecode. Each trace represents a sequence of instructions within the segment of code that are execution structure dependent, such as stack dependent. The system, method and apparatus identify a dependency order between traces in the identified set of traces. The dependency order indicates traces that are dependent upon operation of other traces in the segment of code. The system, method and apparatus can then execute traces within the set of traces in parallel and in an execution order that is based on the identified dependency order, such that at least two traces are executed in parallel and such that if the dependency order indicates that a second trace is dependent upon a first trace, the first trace is executed prior to the second trace. This system provides bytecode level parallelism for Java and other applications that utilize execution structure-based architectures and identifies and efficiently eliminates Java bytecode stack dependency.

Owner:ORACLE INT CORP

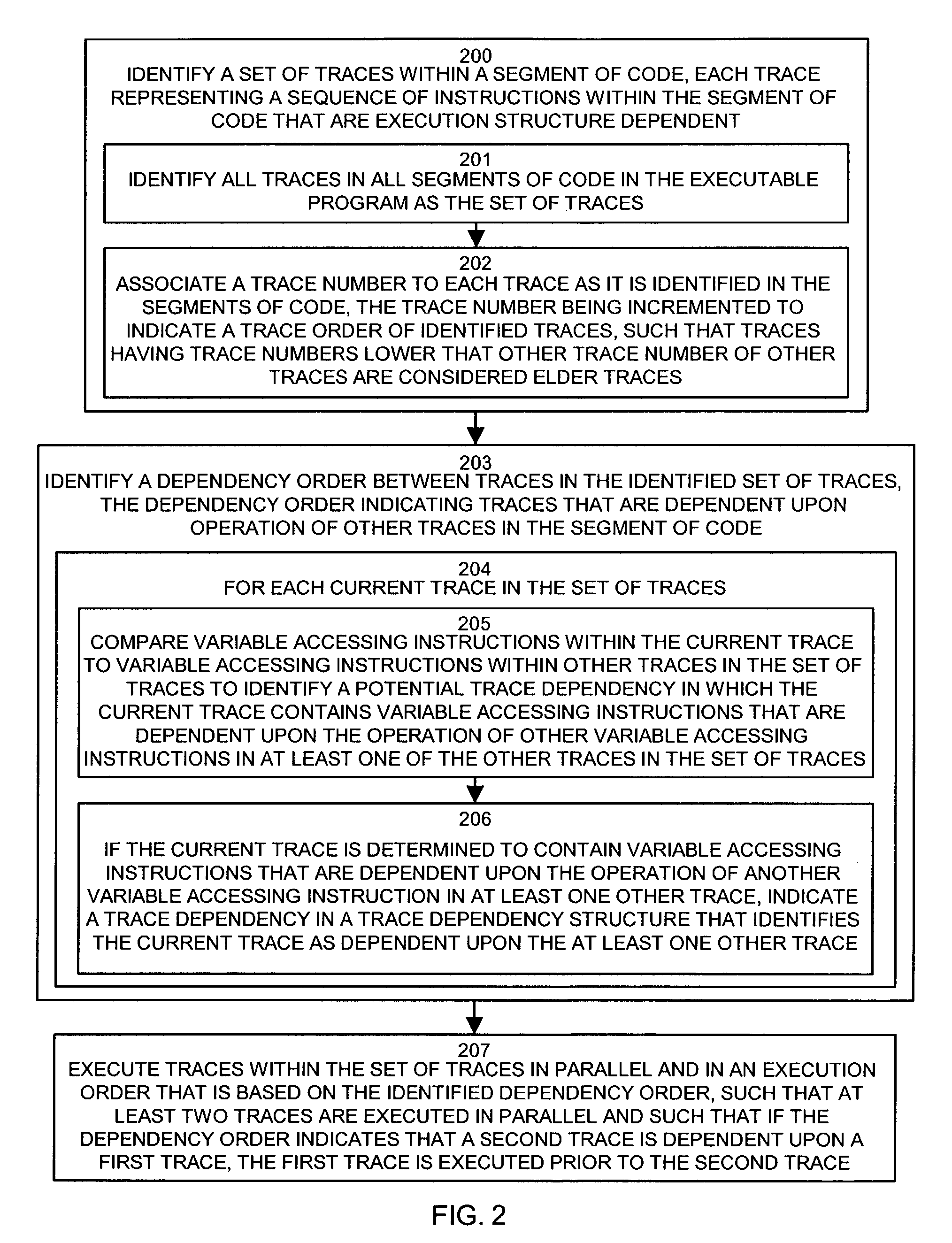

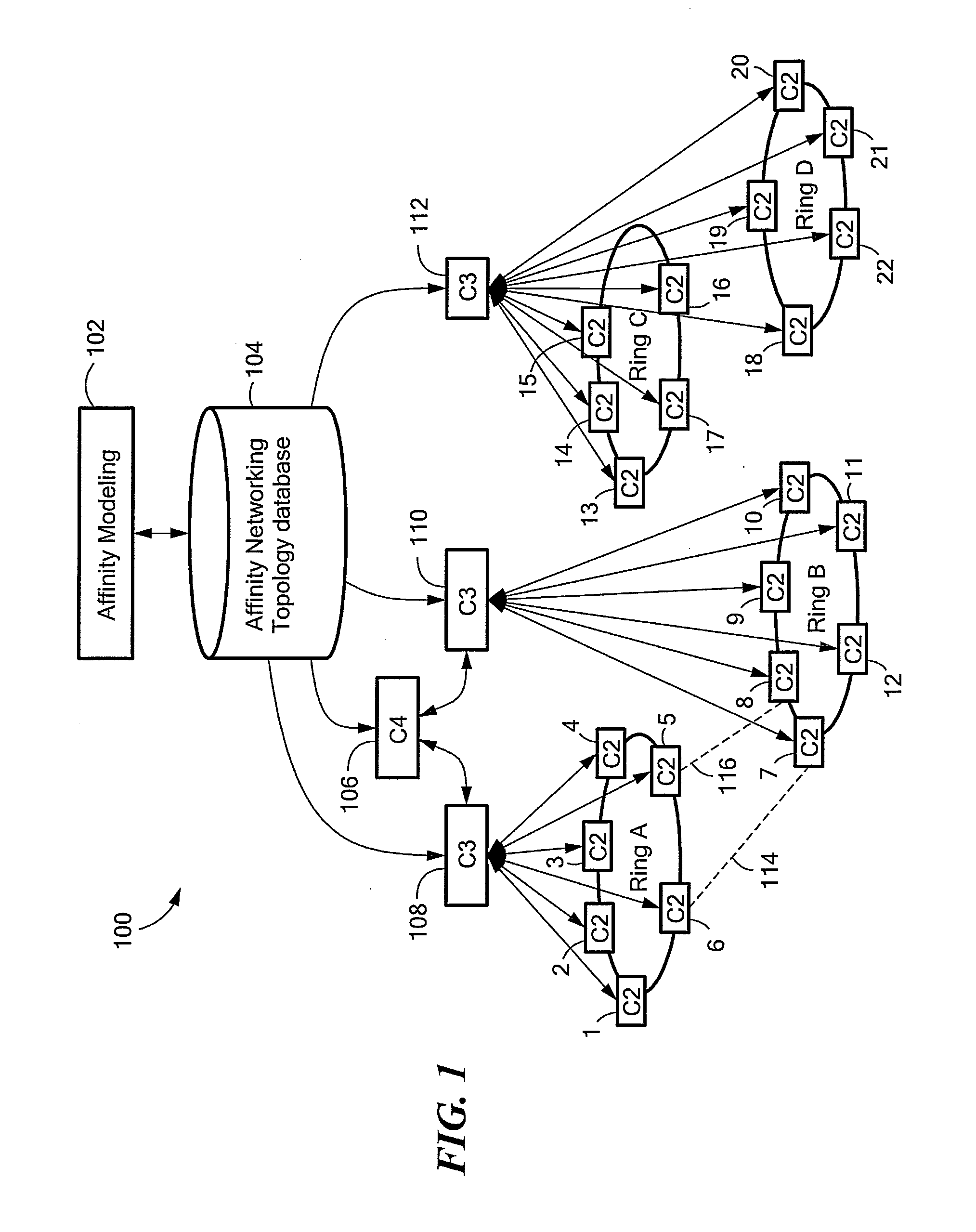

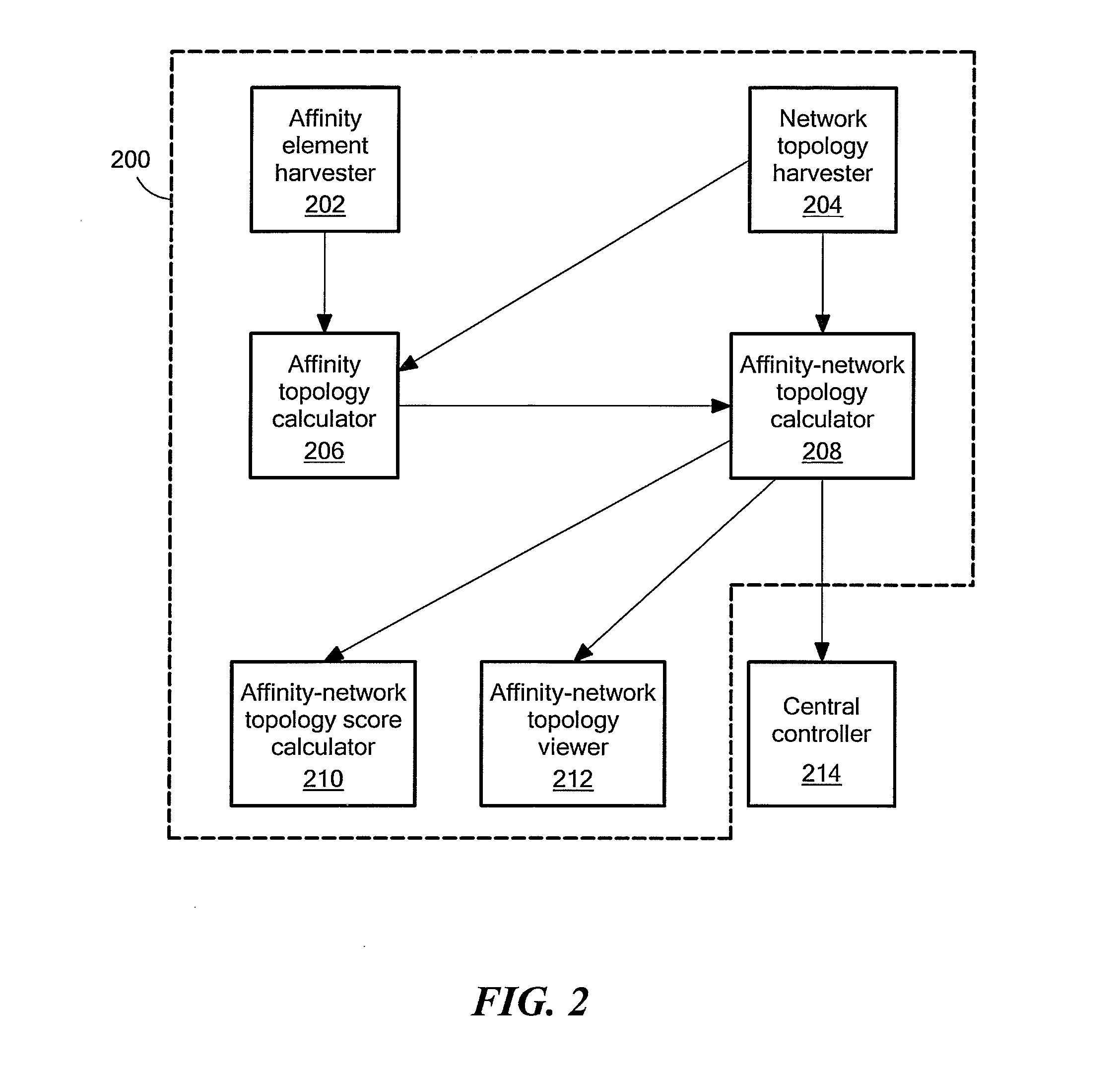

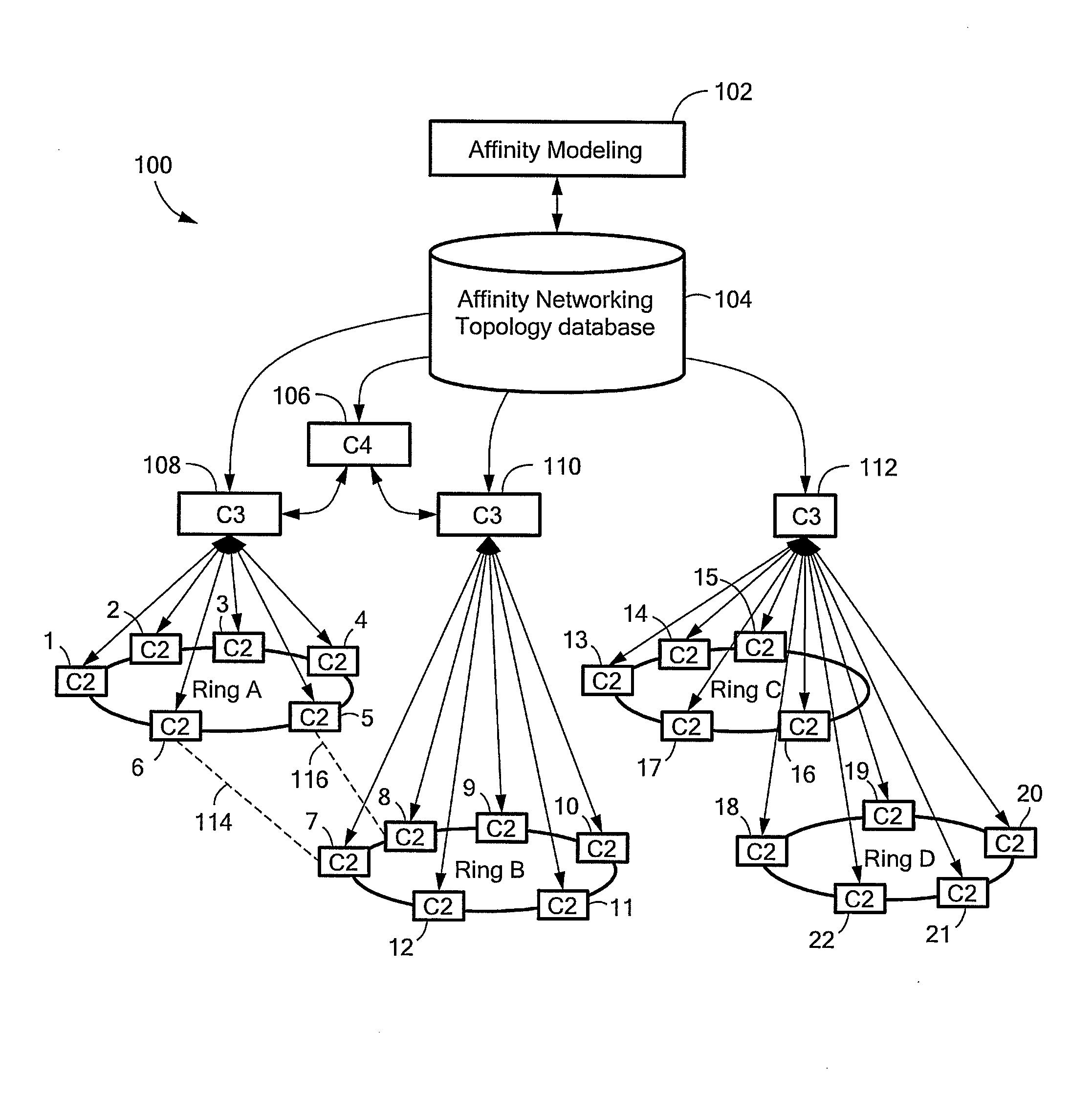

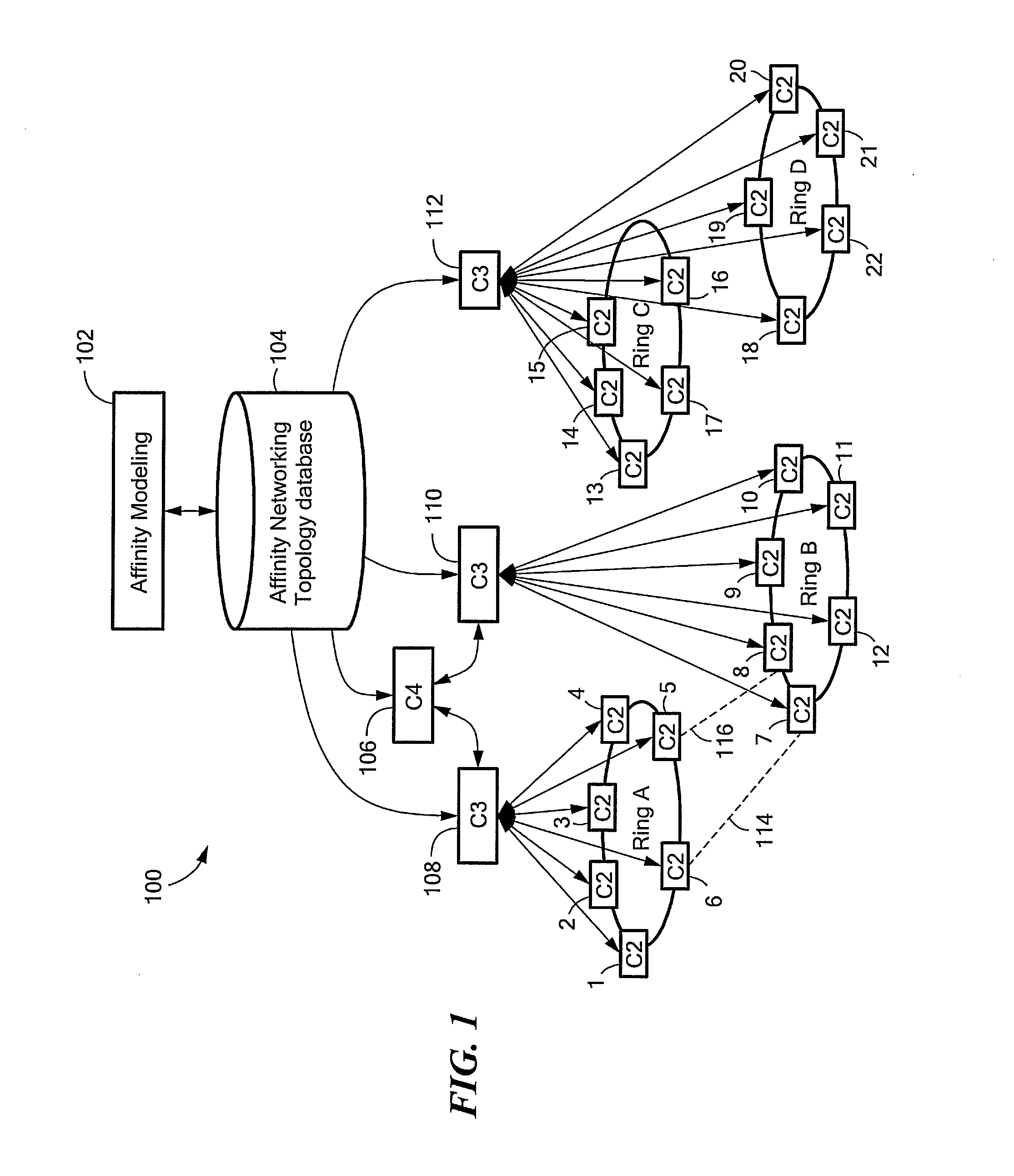

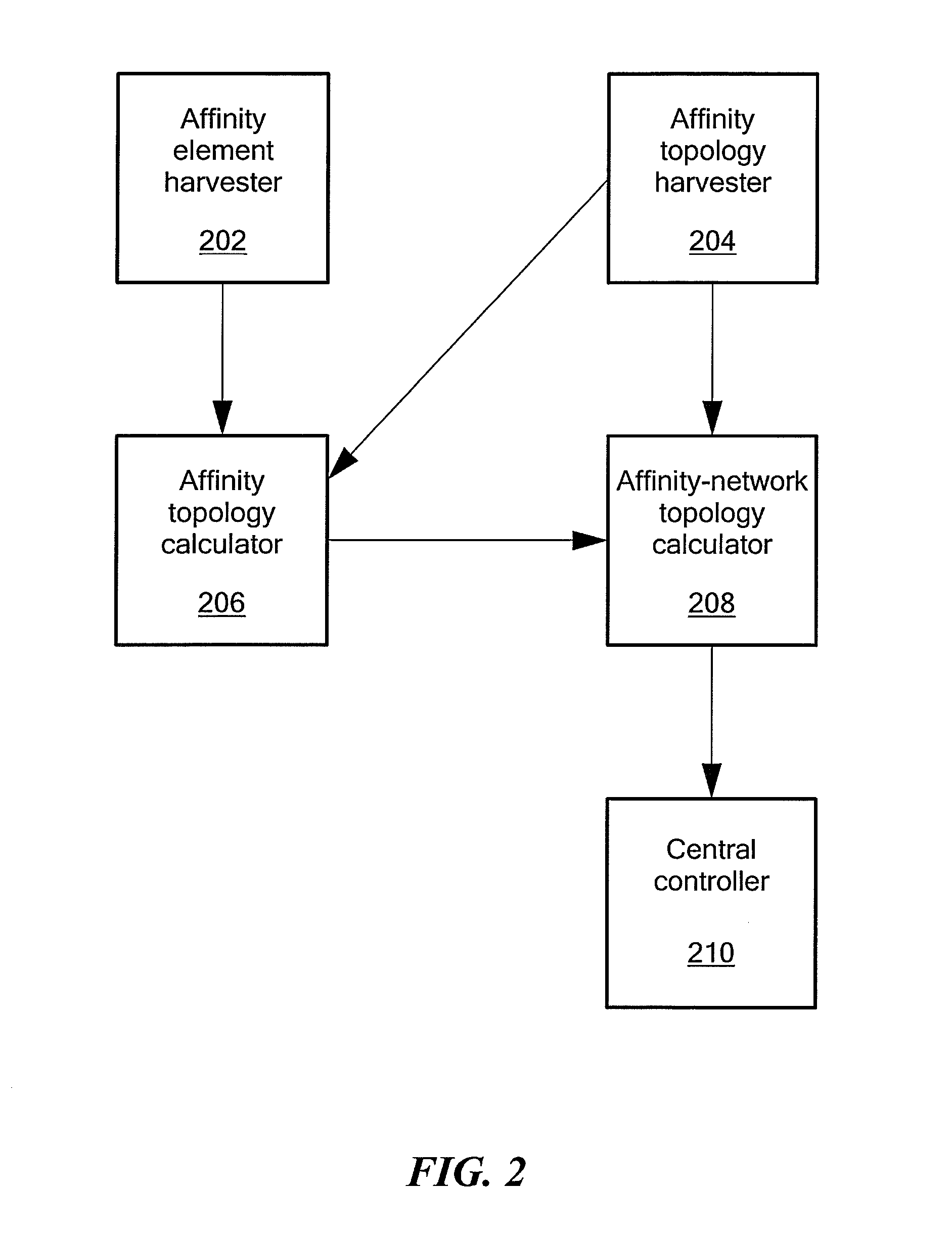

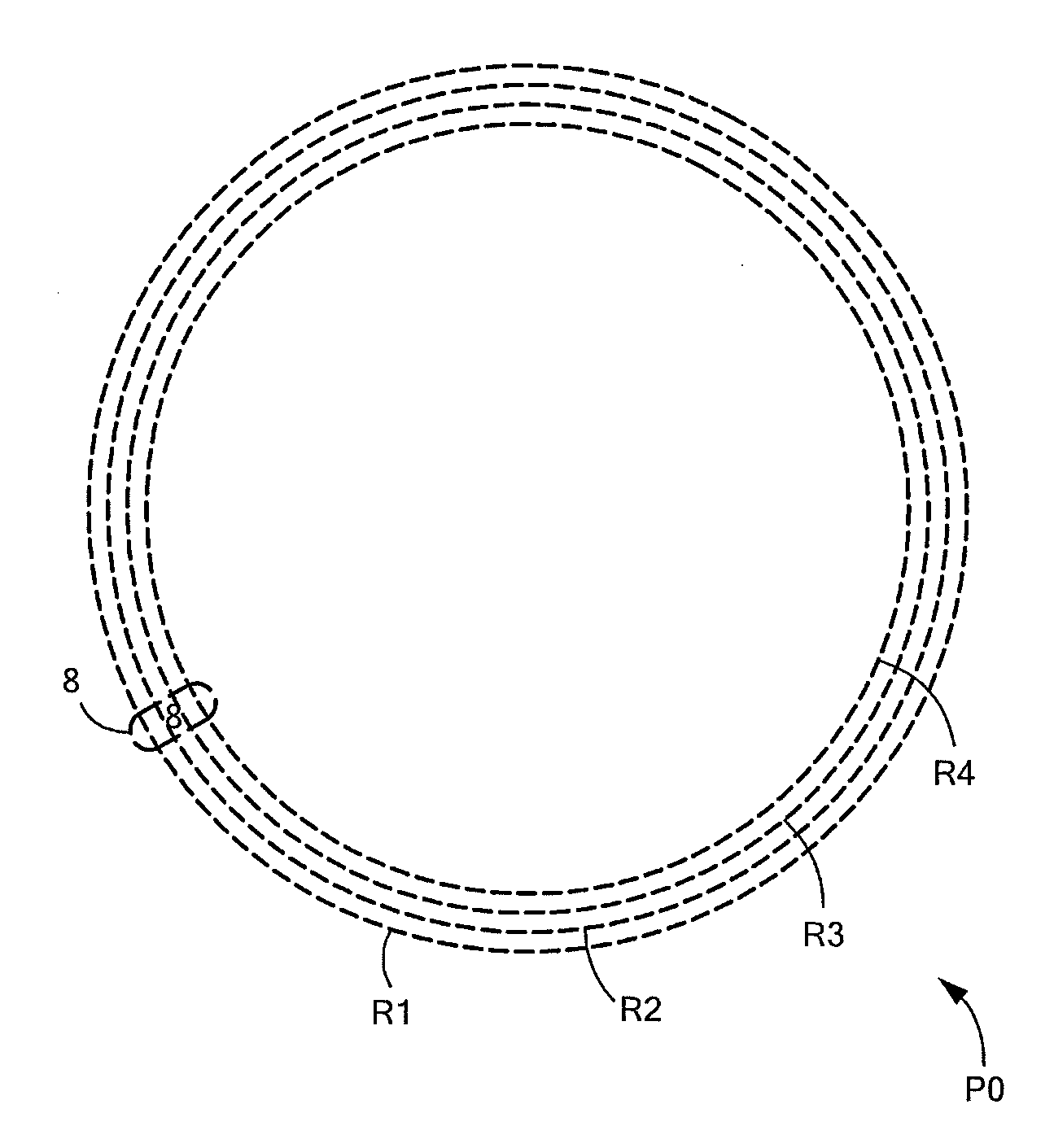

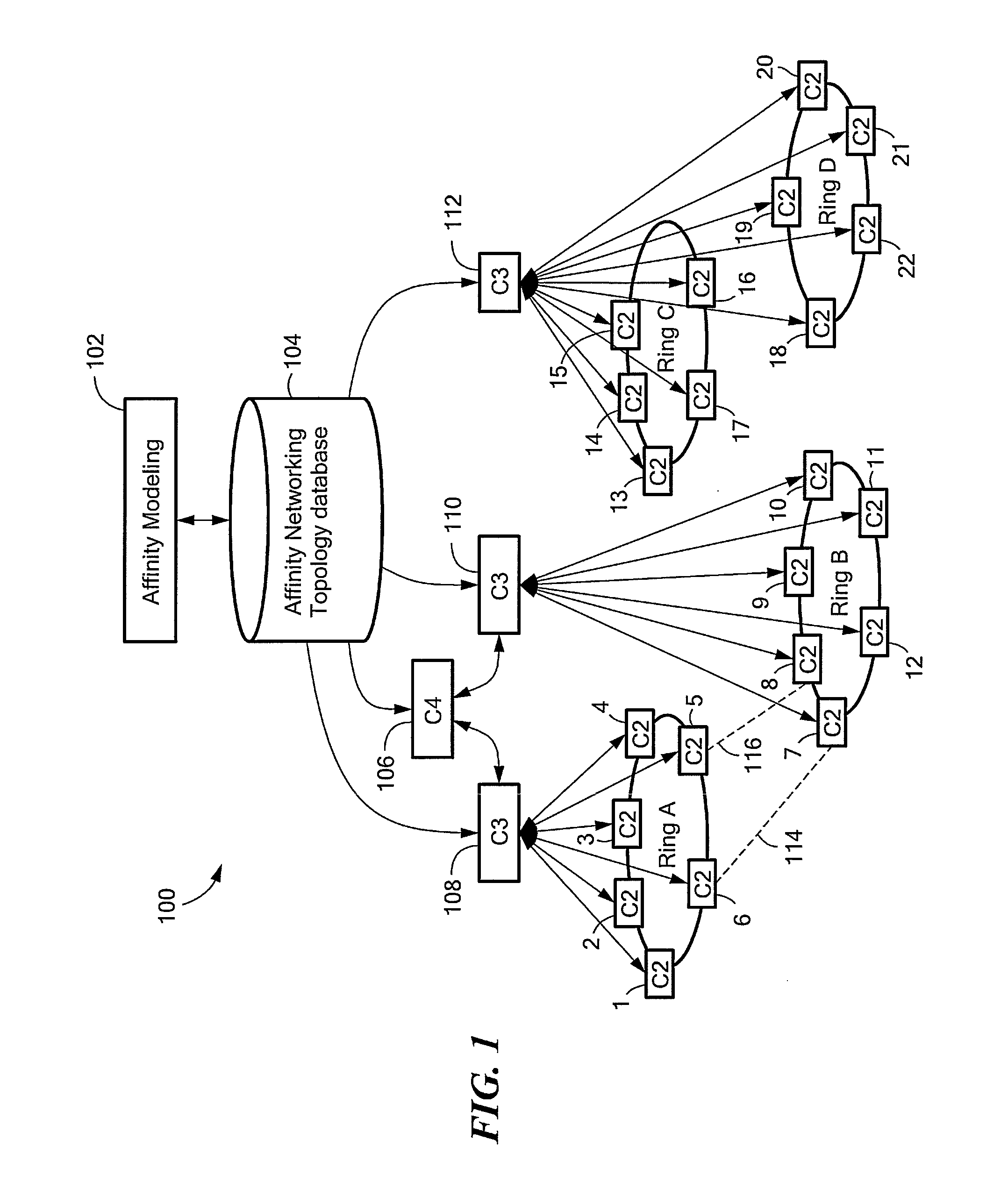

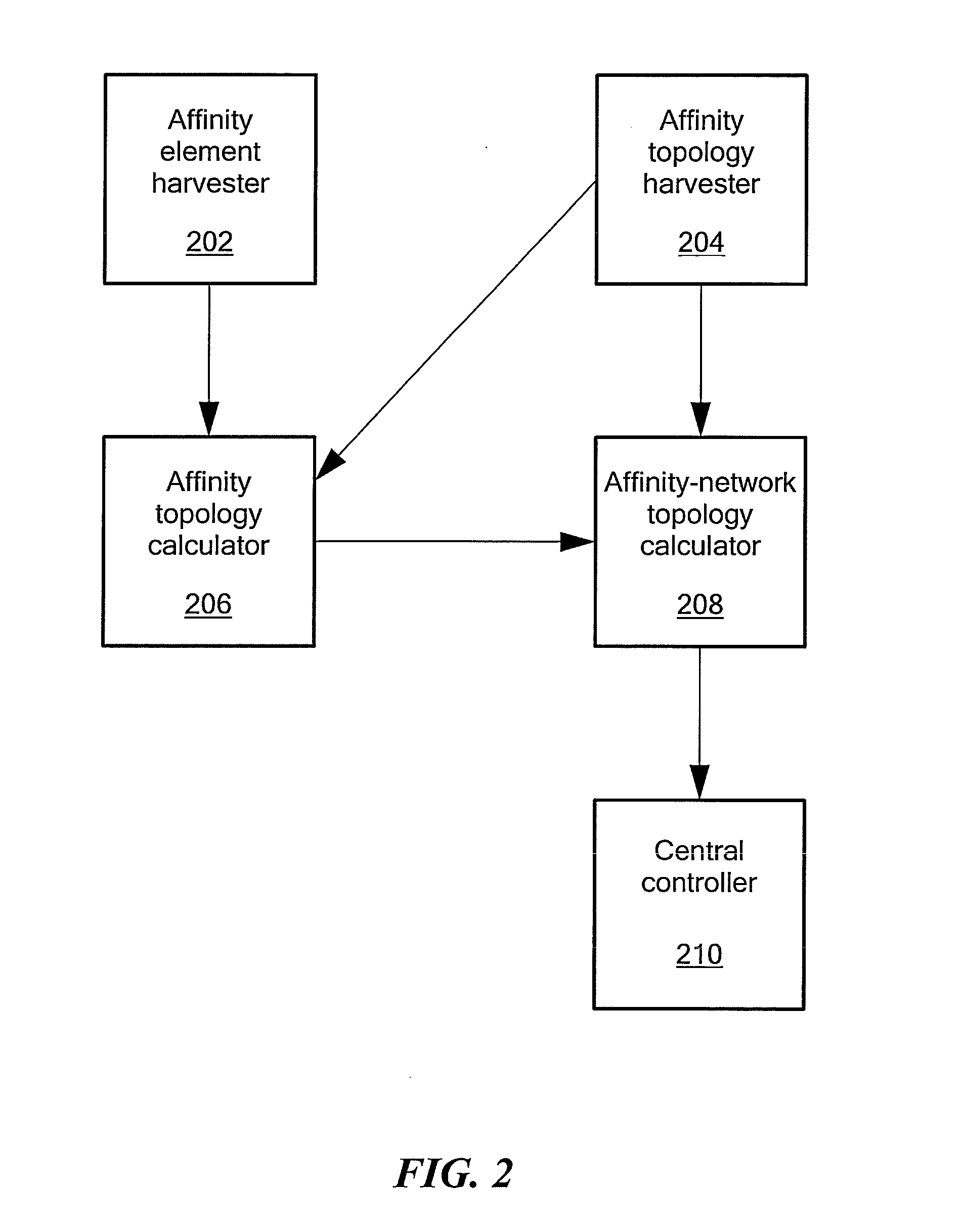

Affinity modeling in a data center network

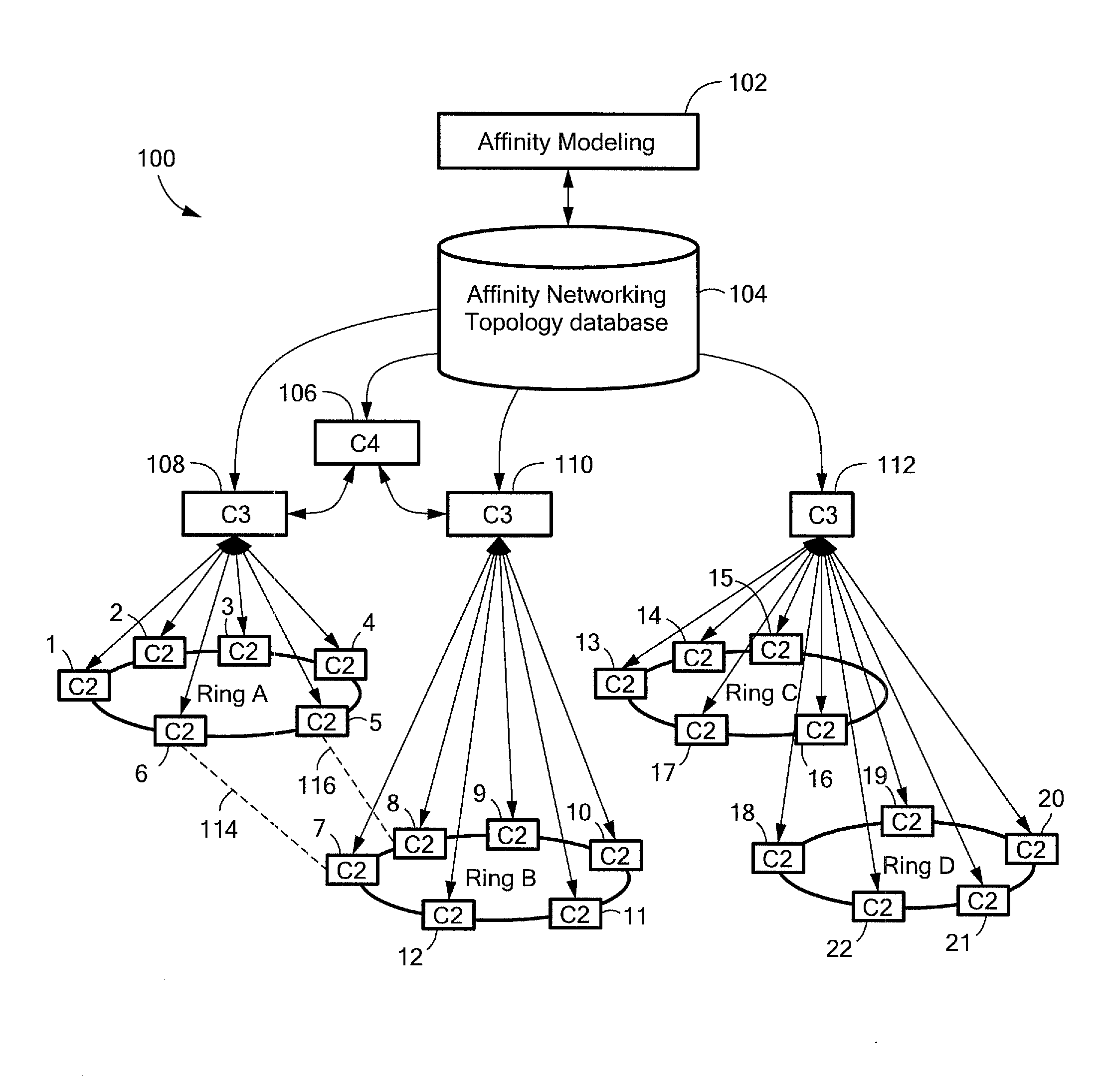

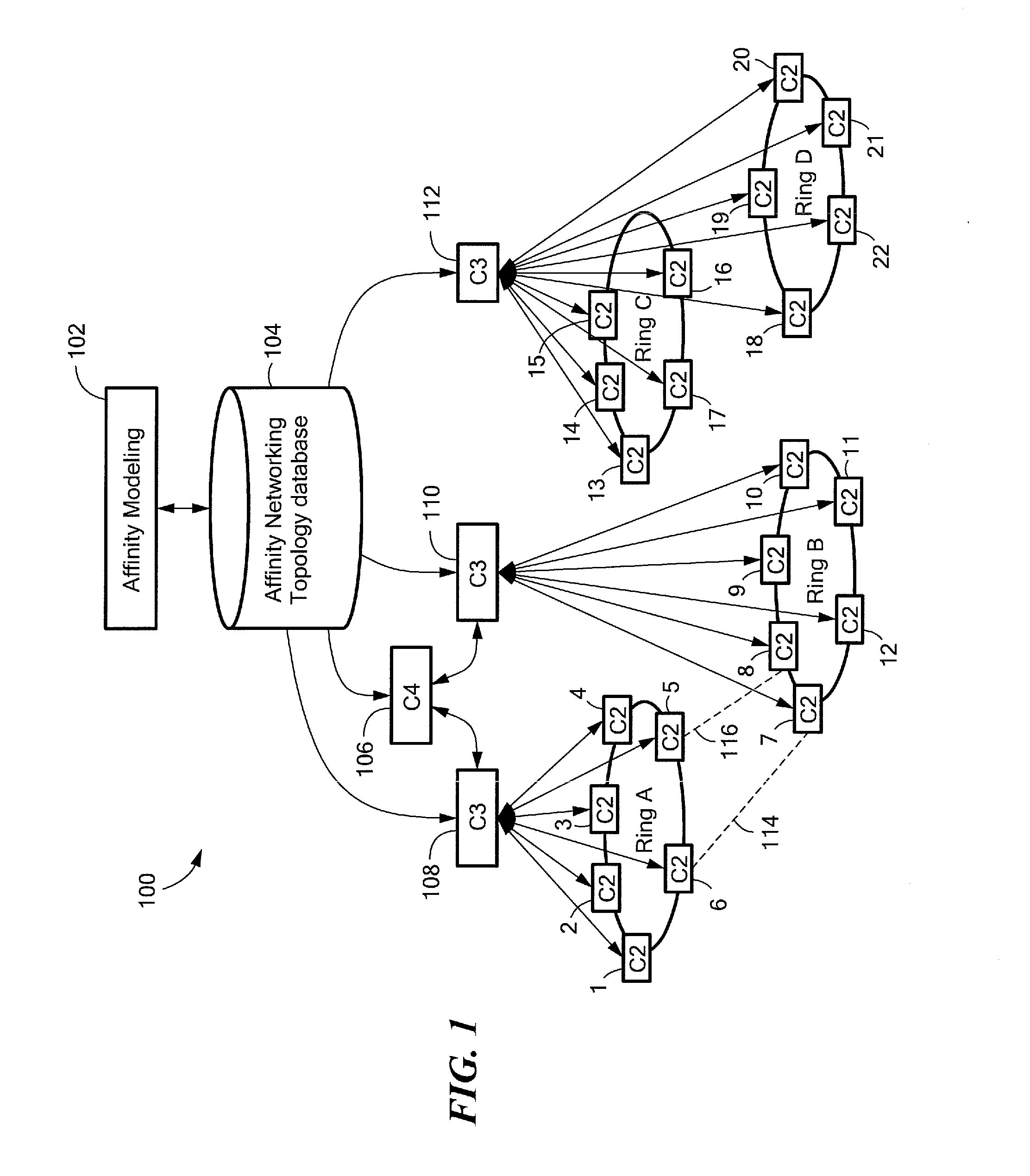

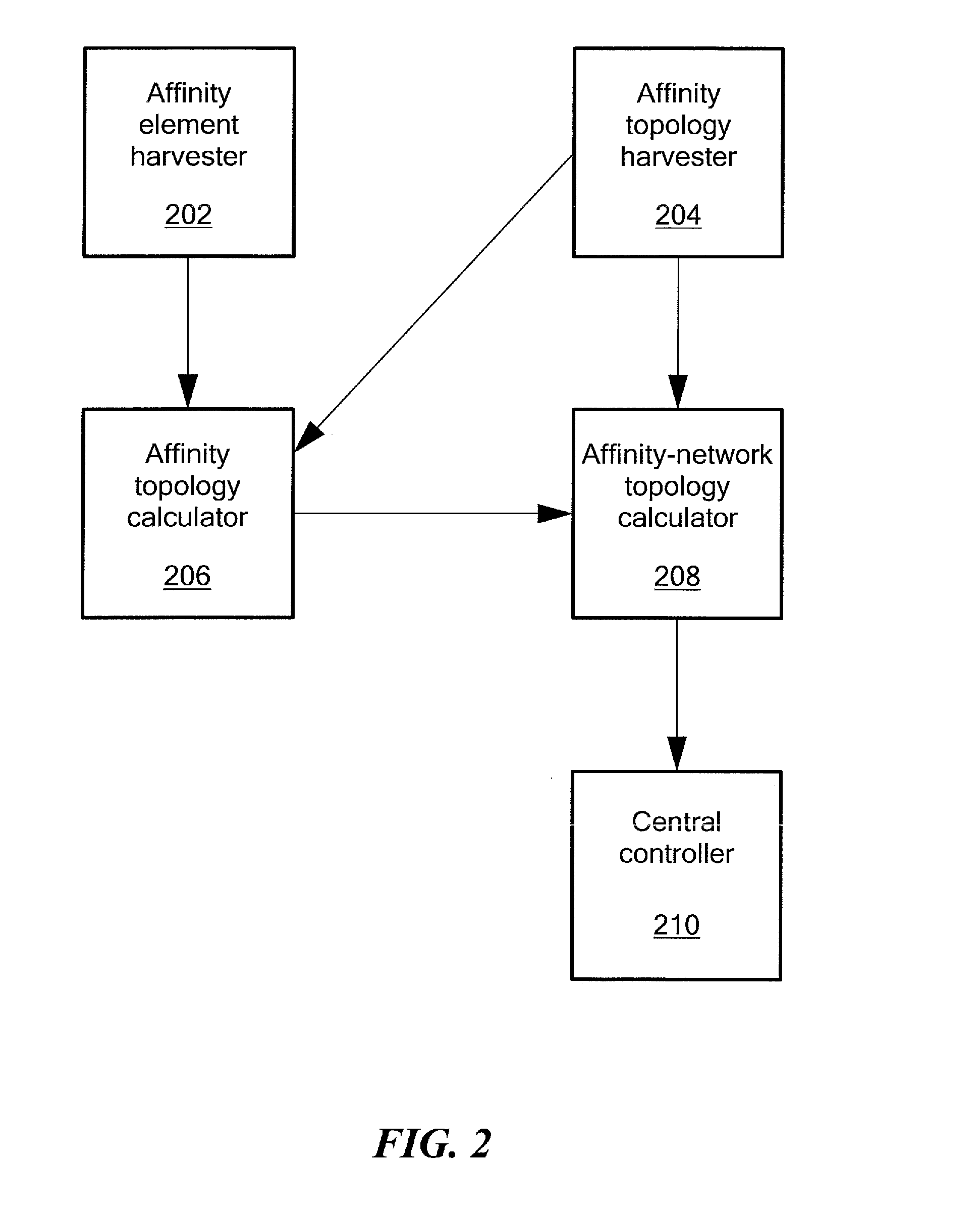

Active · US20130108259A1 · Enhance program performance · Effective distribution · Multiplex system selection arrangements · Electromagnetic network arrangements · Computational resource · Distributed computing

Systems and methods of affinity modeling in data center networks that allow bandwidth to be efficiently allocated within the data center networks, while reducing the physical interconnectivity requirements of the data center networks. Such systems and methods of affinity modeling in data center networks further allow computing resources within the data center networks to be controlled and provisioned based at least in part on the network topology and an application component topology, thereby enhancing overall application program performance.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

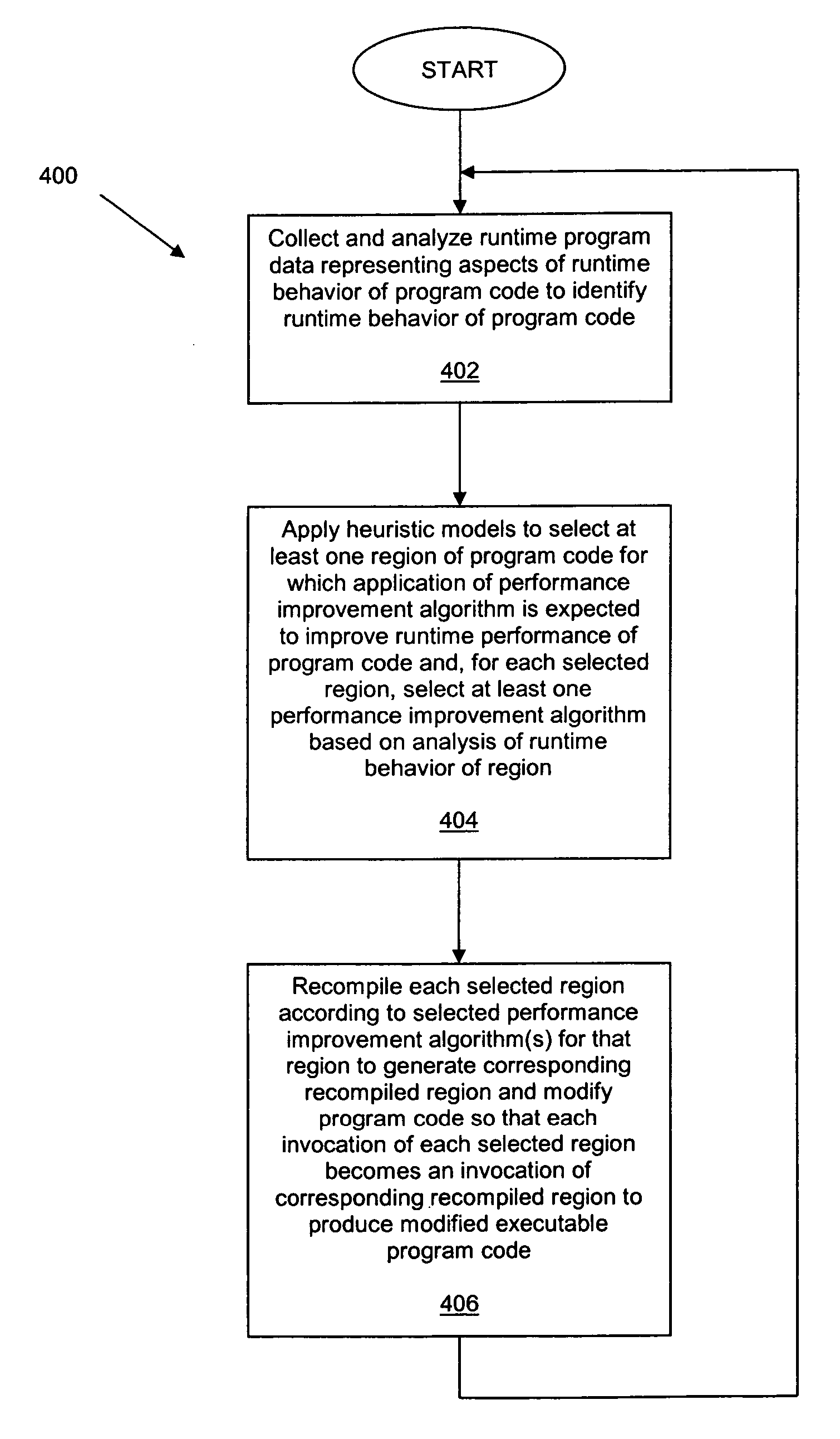

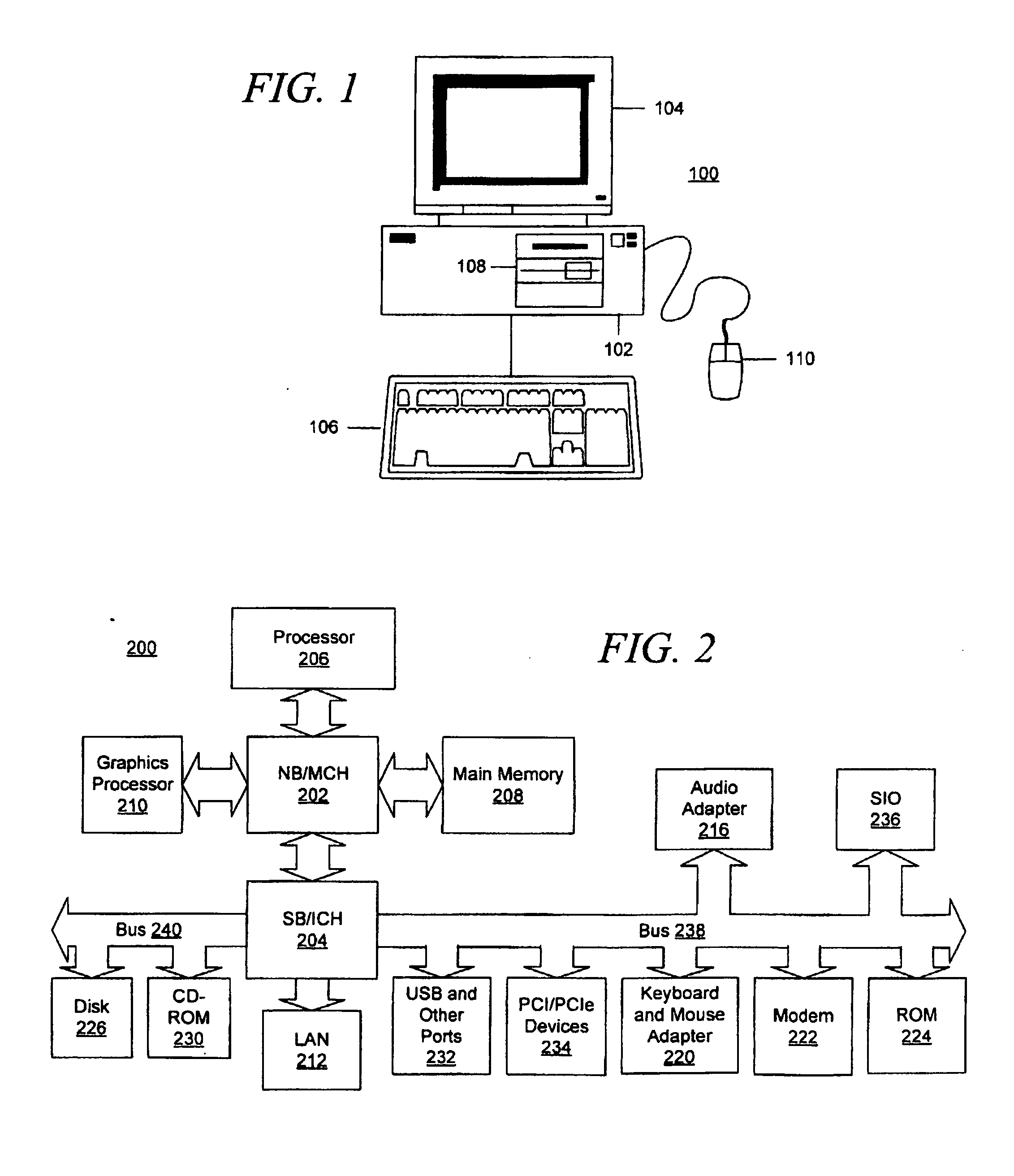

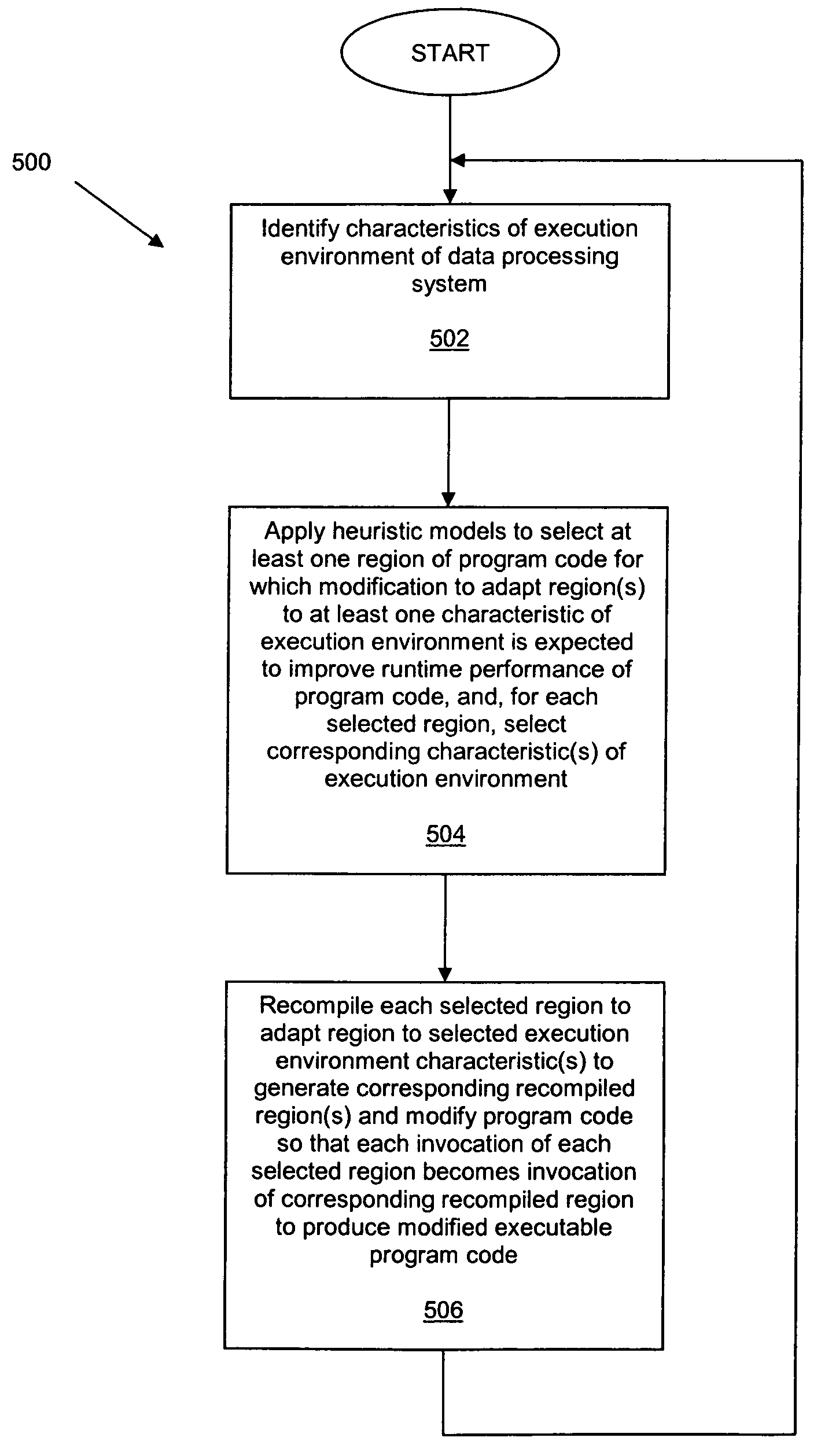

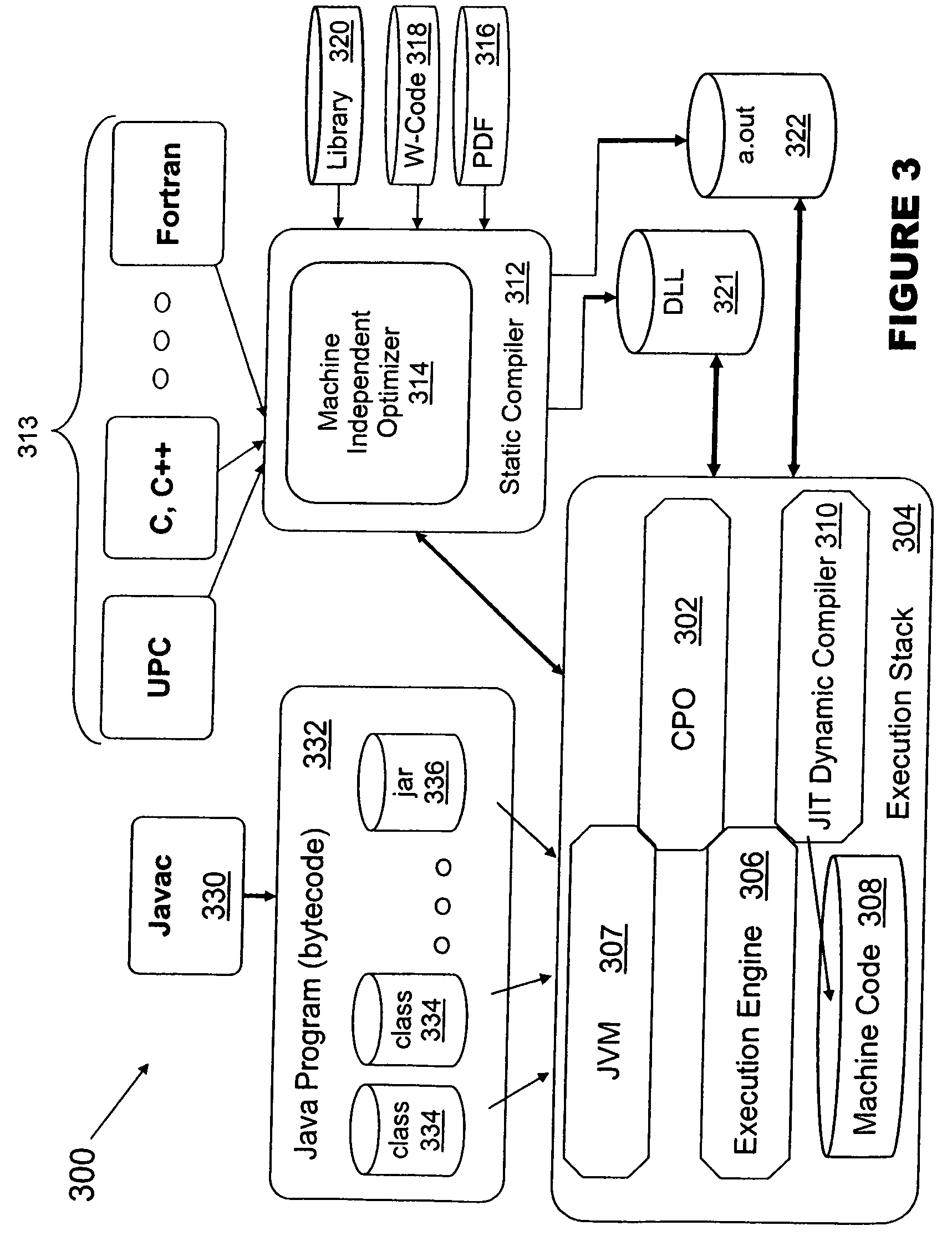

Method for improving performance of executable code

Inactive · US20070226698A1 · Enhance run-time performance · Improve programming performance · Specific program execution arrangements · Memory systems · Data processing system · Analysis data

A computer-implemented method, computer program product and data processing system to improve runtime performance of executable program code when executed on the data-processing system. During execution, data is collected and analyzed to identify runtime behavior of the program code. Heuristic models are applied to select region(s) of the program code where application of a performance improvement algorithm is expected to improve runtime performance. Each selected region is recompiled using selected performance improvement algorithm(s) for that region to generate corresponding recompiled region(s), and the program code is modified to replace invocations of each selected region with invocations of the corresponding recompiled region. Alternatively or additionally, the program code may be recompiled to be adapted to characteristics of the execution environment of the data processing system. The process may be carried out in a continuous recursive manner while the program code executes, or may be carried out a finite number of times.

Owner:IBM CORP
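
A heavily simplified sketch of the idea in this abstract: collect data while the code runs, decide heuristically that a region is hot, and redirect later invocations to an improved version. Swapping in a hand-written faster function stands in for recompilation, and the class `AdaptiveRegion` and its call-count threshold are hypothetical.

```python
class AdaptiveRegion:
    """Dispatches calls to a baseline version until the region proves hot."""

    def __init__(self, baseline, optimized, hot_threshold=3):
        self.baseline = baseline
        self.optimized = optimized
        self.hot_threshold = hot_threshold
        self.calls = 0
        self.active = baseline

    def __call__(self, *args):
        self.calls += 1  # runtime data collected during execution
        # Hypothetical heuristic: a region called often enough is worth replacing.
        if self.active is self.baseline and self.calls >= self.hot_threshold:
            self.active = self.optimized  # later invocations use the new version
        return self.active(*args)

def sum_to_loop(n):
    total = 0
    for i in range(n + 1):
        total += i
    return total

def sum_to_closed_form(n):
    return n * (n + 1) // 2

sum_to = AdaptiveRegion(sum_to_loop, sum_to_closed_form)
results = [sum_to(10) for _ in range(5)]
```

Callers keep invoking `sum_to` and see the same results before and after the switch; only the implementation behind the call changes.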

Hierarchy of control in a data center network

Active · US20130108264A1 · Efficiently allocate bandwidth · Reducing physical interconnectivity requirement · Data switching by path configuration · Optical multiplex · Interconnectivity · Data center

Data center networks that employ optical network topologies and optical nodes to efficiently allocate bandwidth within the data center networks, while reducing the physical interconnectivity requirements of the data center networks. Such data center networks provide a hierarchy of control for controlling and provisioning computing resources within the data center networks based at least in part on the network topology and an application component topology, thereby enhancing overall application program performance.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Method for improving performance of executable code

Inactive · US7954094B2 · Enhance run-time performance · Improve programming performance · Specific program execution arrangements · Memory systems · Data processing system · Analysis data

A computer-implemented method, computer program product and data processing system to improve runtime performance of executable program code when executed on the data-processing system. During execution, data is collected and analyzed to identify runtime behavior of the program code. Heuristic models are applied to select region(s) of the program code where application of a performance improvement algorithm is expected to improve runtime performance. Each selected region is recompiled using selected performance improvement algorithm(s) for that region to generate corresponding recompiled region(s), and the program code is modified to replace invocations of each selected region with invocations of the corresponding recompiled region. Alternatively or additionally, the program code may be recompiled to be adapted to characteristics of the execution environment of the data processing system. The process may be carried out in a continuous recursive manner while the program code executes, or may be carried out a finite number of times.

Owner:INT BUSINESS MASCH CORP

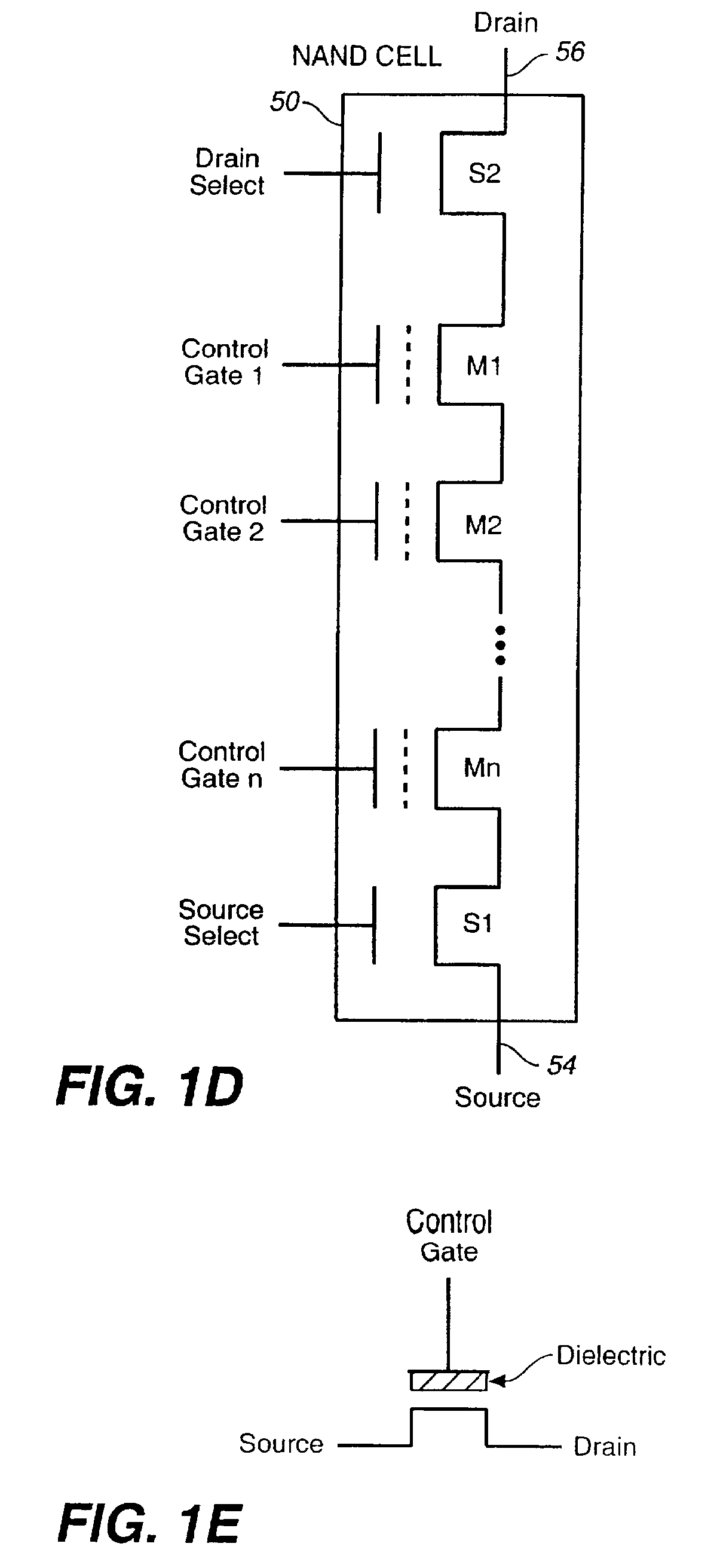

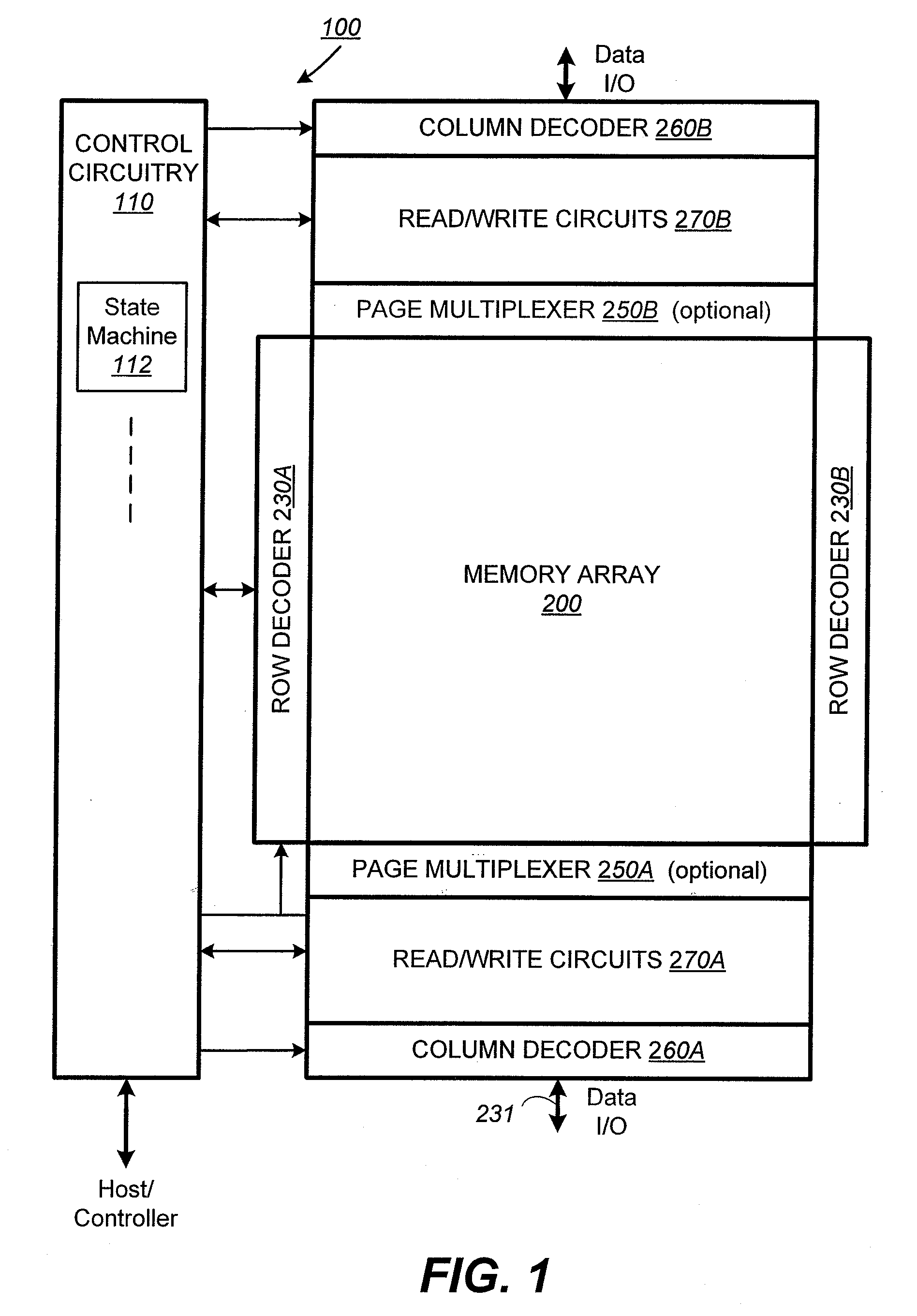

Method for Non-Volatile Memory with Managed Execution of Cached Data

Active · US20060239080A1 · Improve programming performance · More program data · Memory architecture accessing/allocation · Read-only memories · Control memory · Operating system

Methods and circuitry are presented for executing a current memory operation while multiple other pending memory operations are queued. Furthermore, when certain conditions are satisfied, some of these memory operations can be combined or merged for improved efficiency and other benefits. The multiple memory operations are managed through a memory operation queue controlled by a memory operation queue manager. The memory operation queue manager is preferably implemented as a module in the state machine that controls the execution of a memory operation in the memory array.

Owner:SANDISK TECH LLC
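
The queue-with-merging behavior in this abstract can be sketched in software. The abstract does not state the merge conditions, so the rule used here (two consecutive pending program operations on the same page are merged) is purely an assumption, as are the class and field names.

```python
from collections import deque

class MemoryOpQueue:
    """Holds pending memory operations and merges them when a rule allows it."""

    def __init__(self):
        self.pending = deque()

    def submit(self, kind, page, data=None):
        data = dict(data or {})
        if self.pending:
            last = self.pending[-1]
            # Assumed merge condition: newest pending op programs the same page.
            if kind == "program" and last["kind"] == "program" and last["page"] == page:
                last["data"].update(data)
                return
        self.pending.append({"kind": kind, "page": page, "data": data})

    def next_op(self):
        """Hand the oldest pending operation to the executor, if any."""
        return self.pending.popleft() if self.pending else None

queue = MemoryOpQueue()
queue.submit("program", page=1, data={"lower": 0xA5})
queue.submit("program", page=1, data={"upper": 0x3C})  # merged with the op above
queue.submit("read", page=2)
```

Three submissions leave two queued operations, because the two program operations on page 1 were combined into one.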

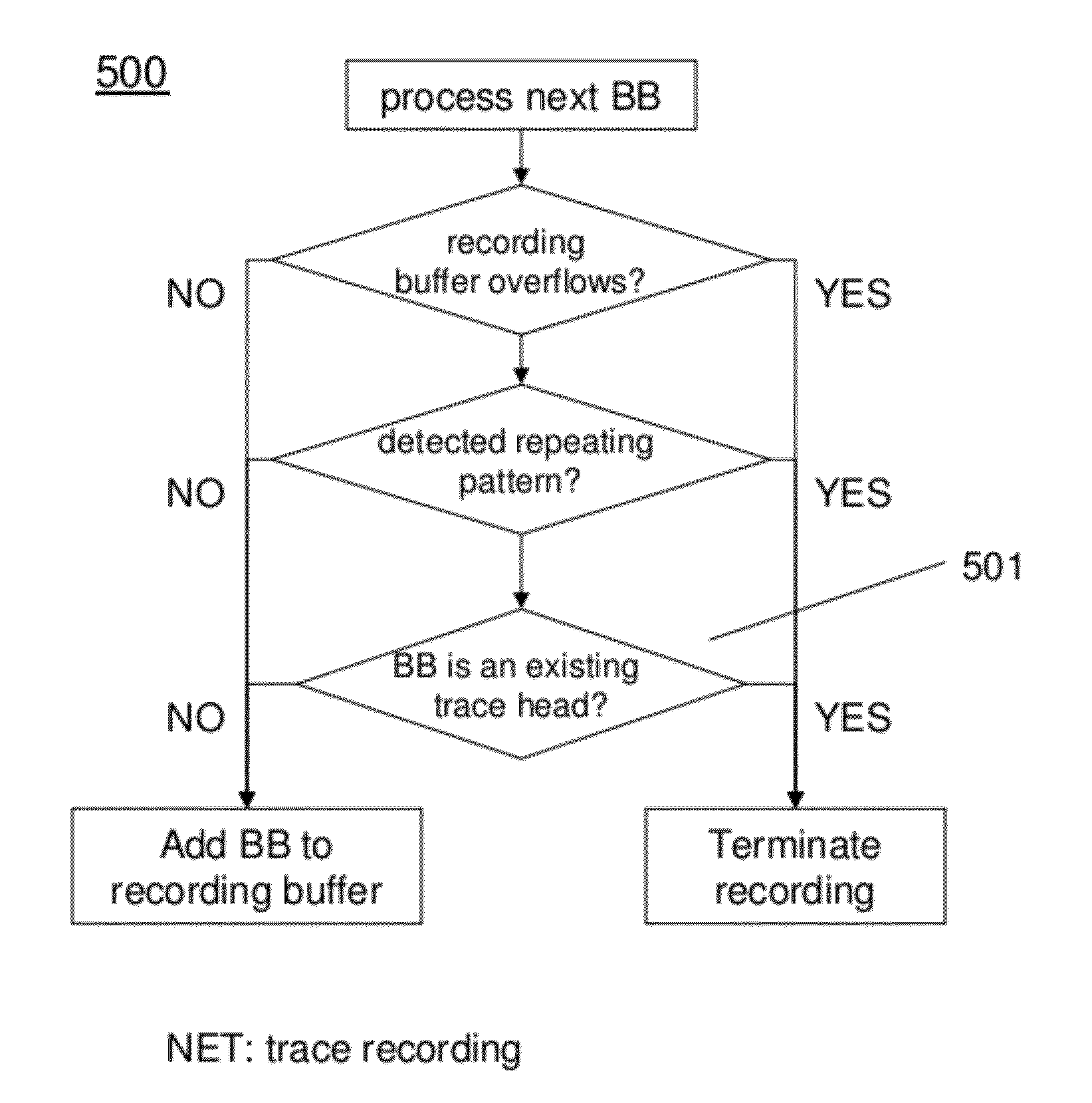

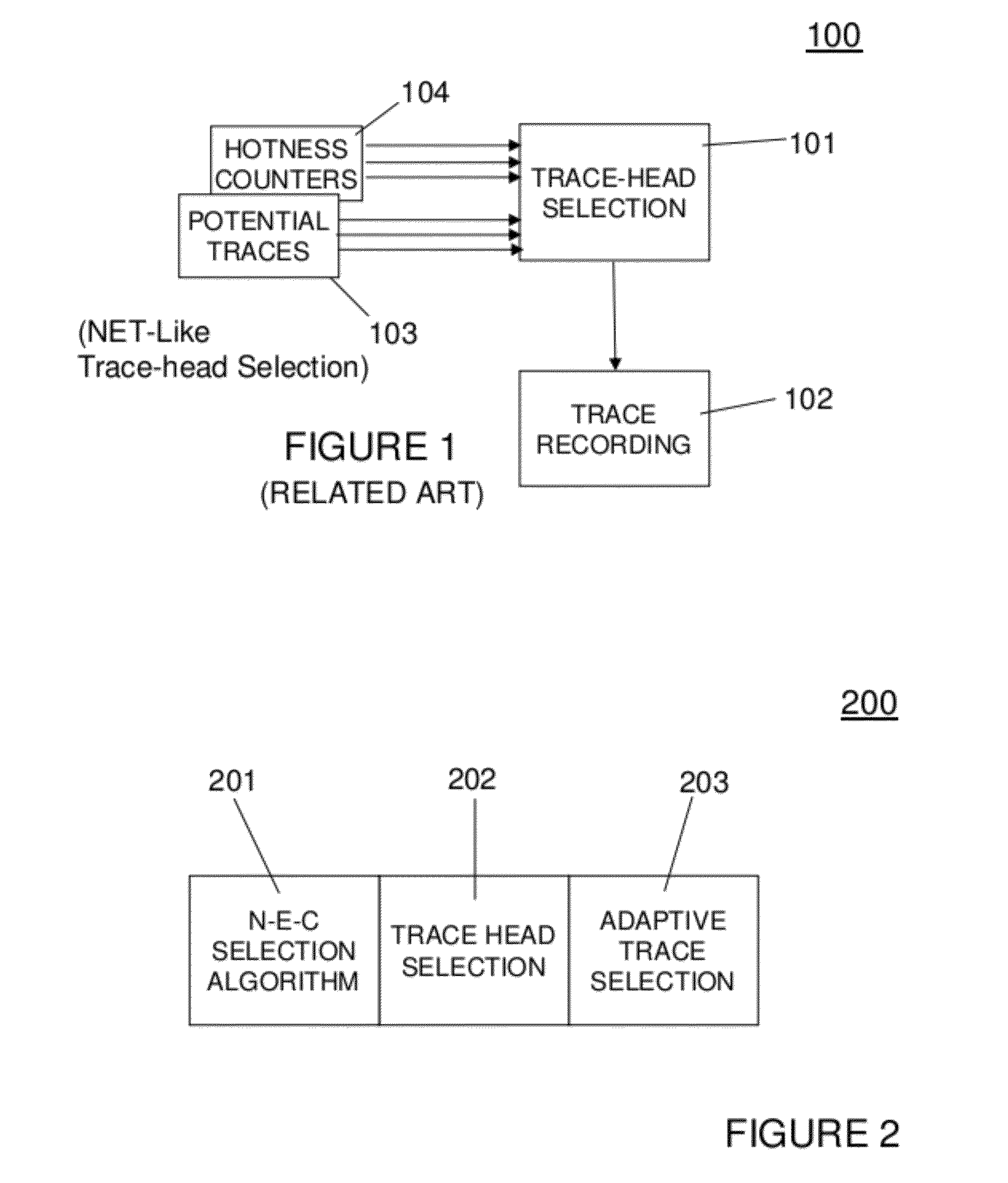

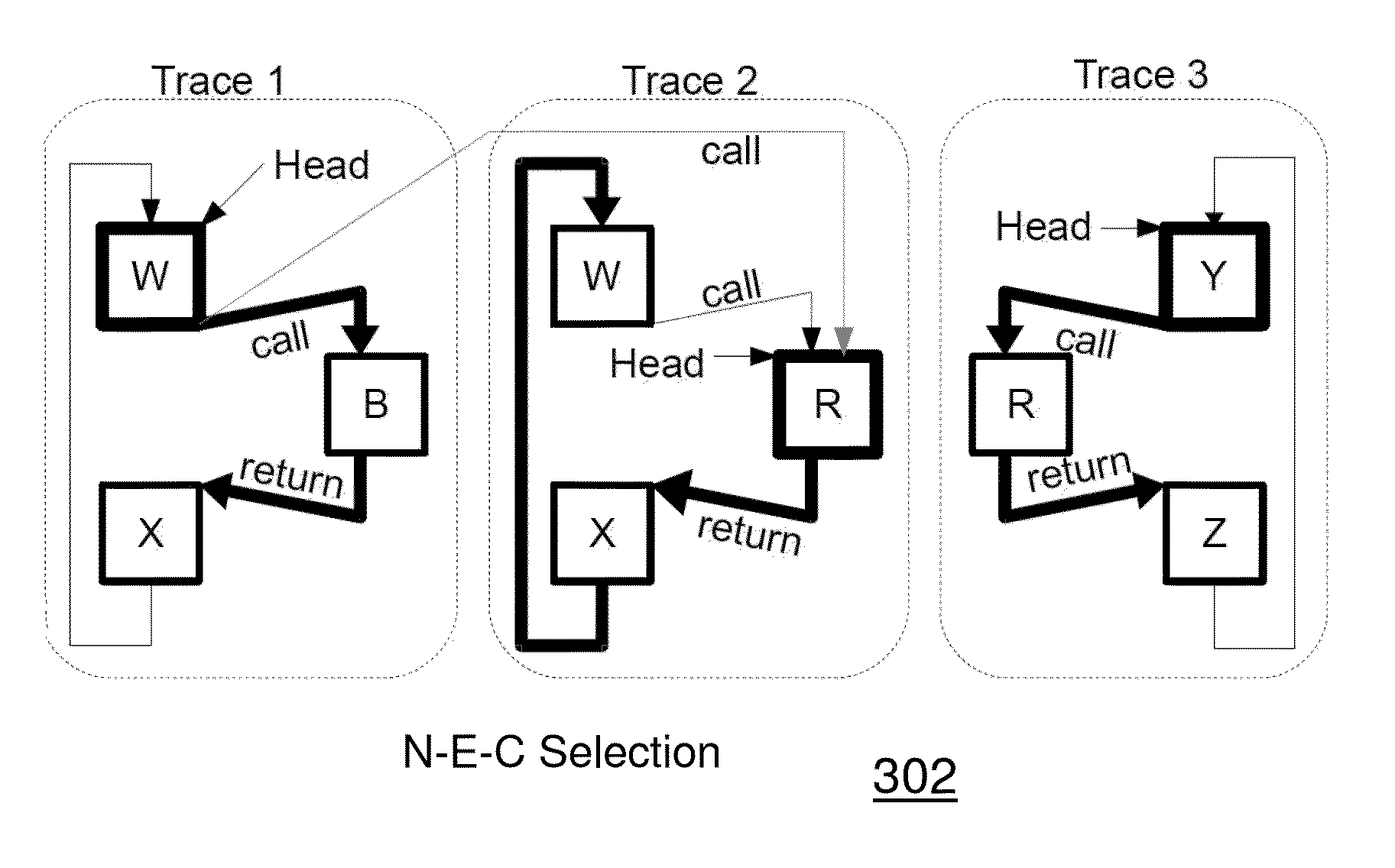

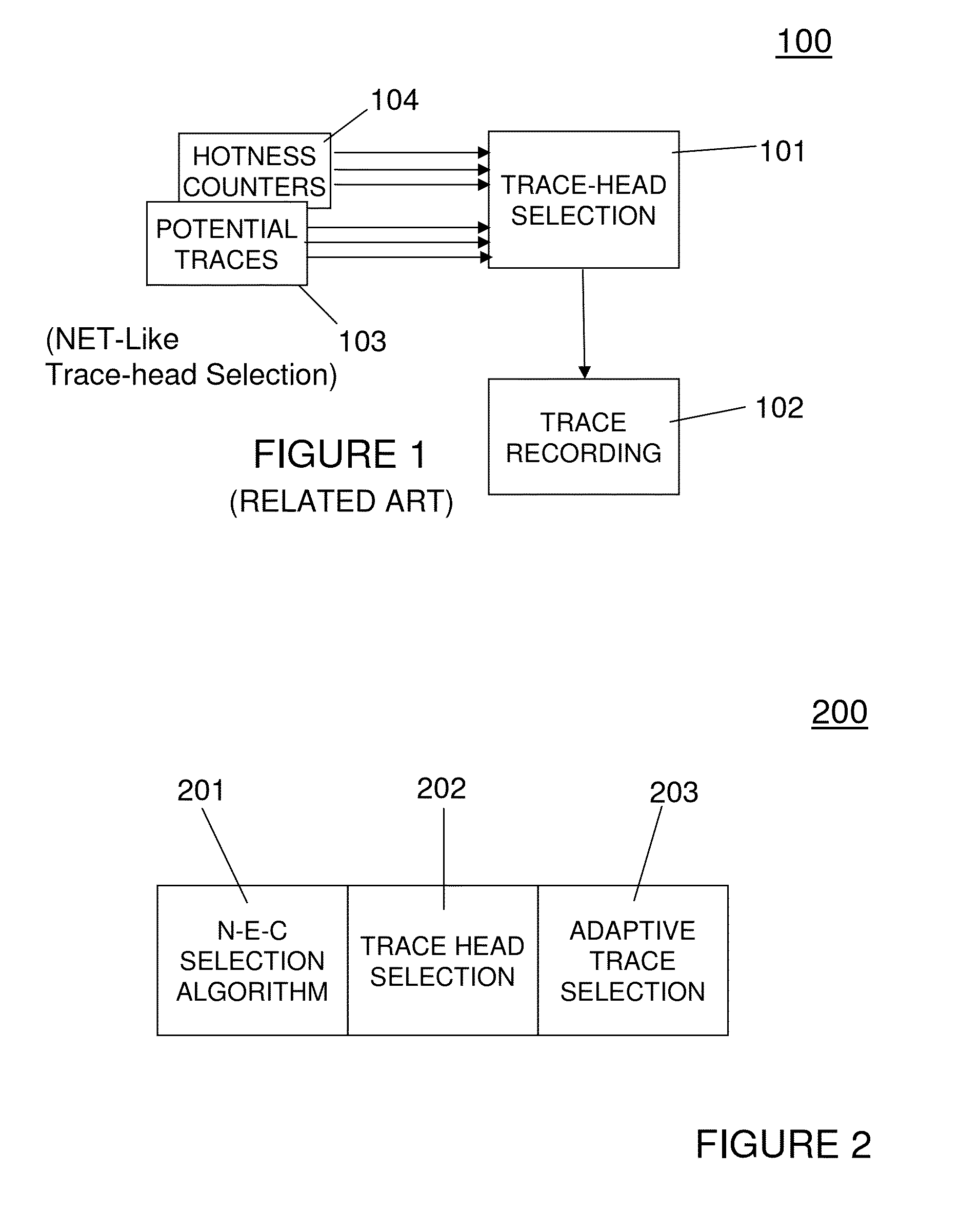

Adaptive next-executing-cycle trace selection for trace-driven code optimizers

Active · US20120204164A1 · Compilation is improved · Reduce the amount of solution · Software engineering · Program control · Dynamic compilation · Parallel computing

An apparatus includes a processor for executing instructions at runtime and instructions for dynamically compiling the set of instructions executing at runtime. A memory device stores the instructions to be executed and the dynamic compiling instructions. A memory device serves as a trace buffer that stores traces while they are being formed during the dynamic compiling. The dynamic compiling instructions include a next-executing-cycle (N-E-C) trace selection process for forming traces for the instructions executing at runtime. When forming traces, the N-E-C trace selection process continues through an existing trace-head, without terminating the recording of the current trace, if an existing trace-head is encountered.

Owner:GLOBALFOUNDRIES US INC

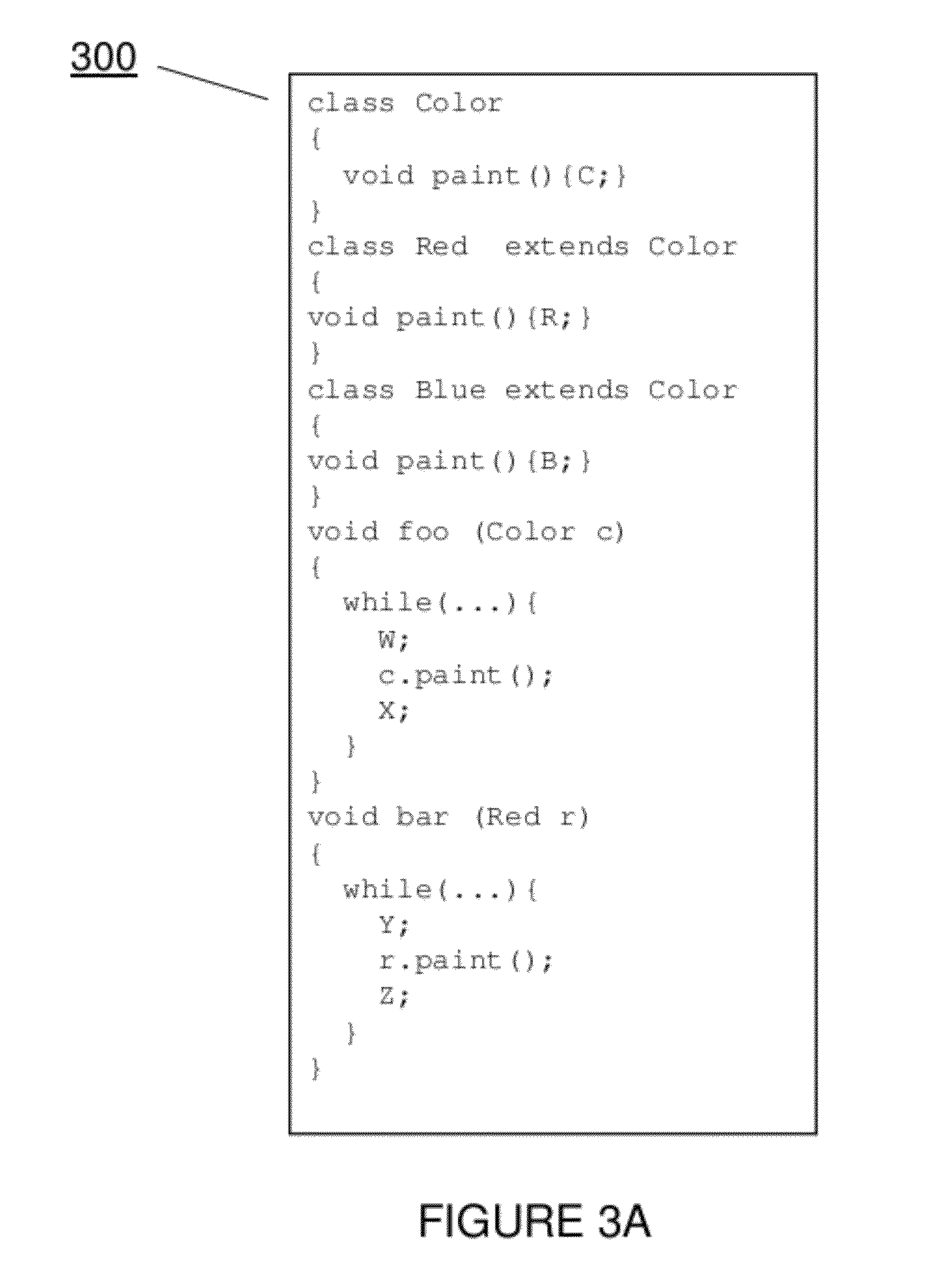

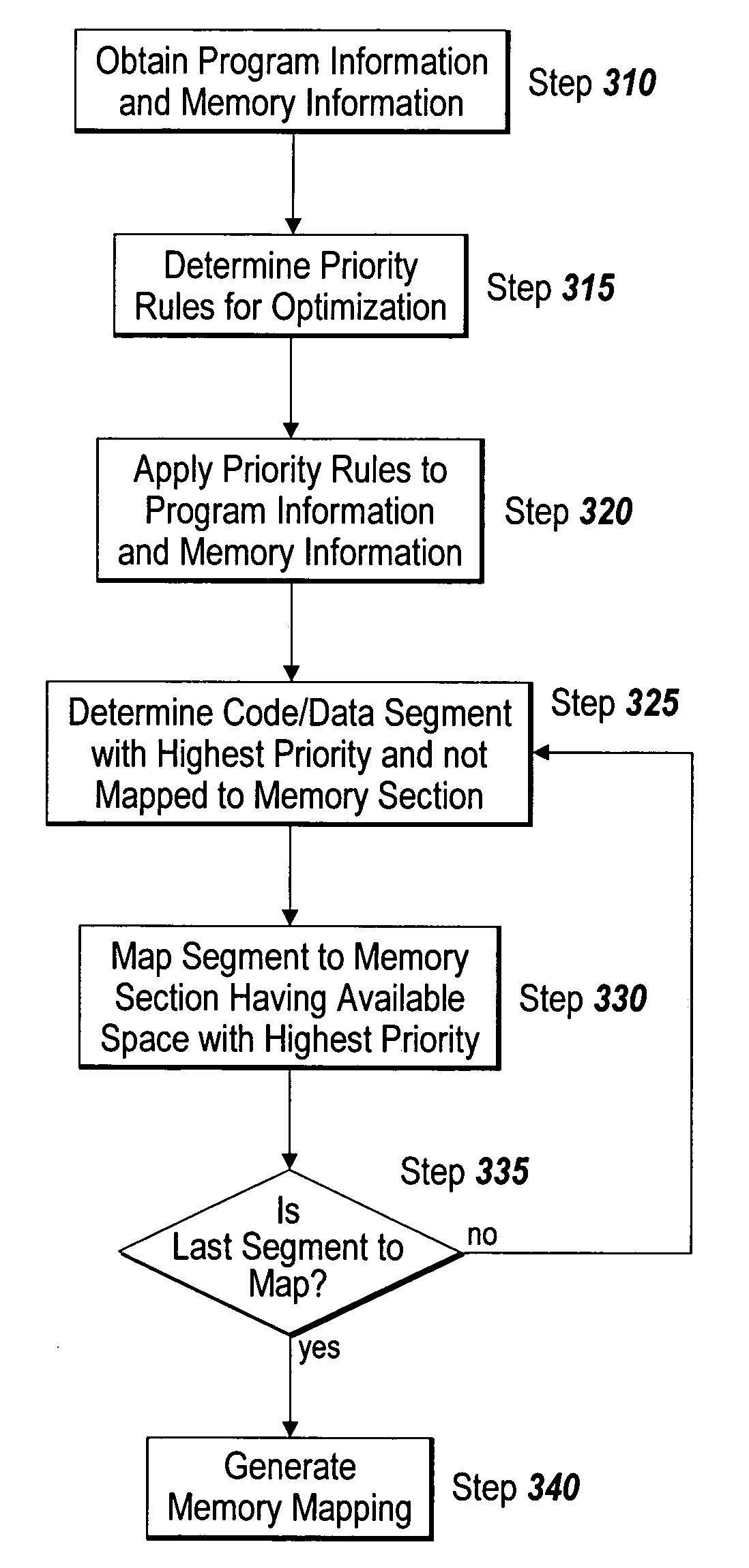

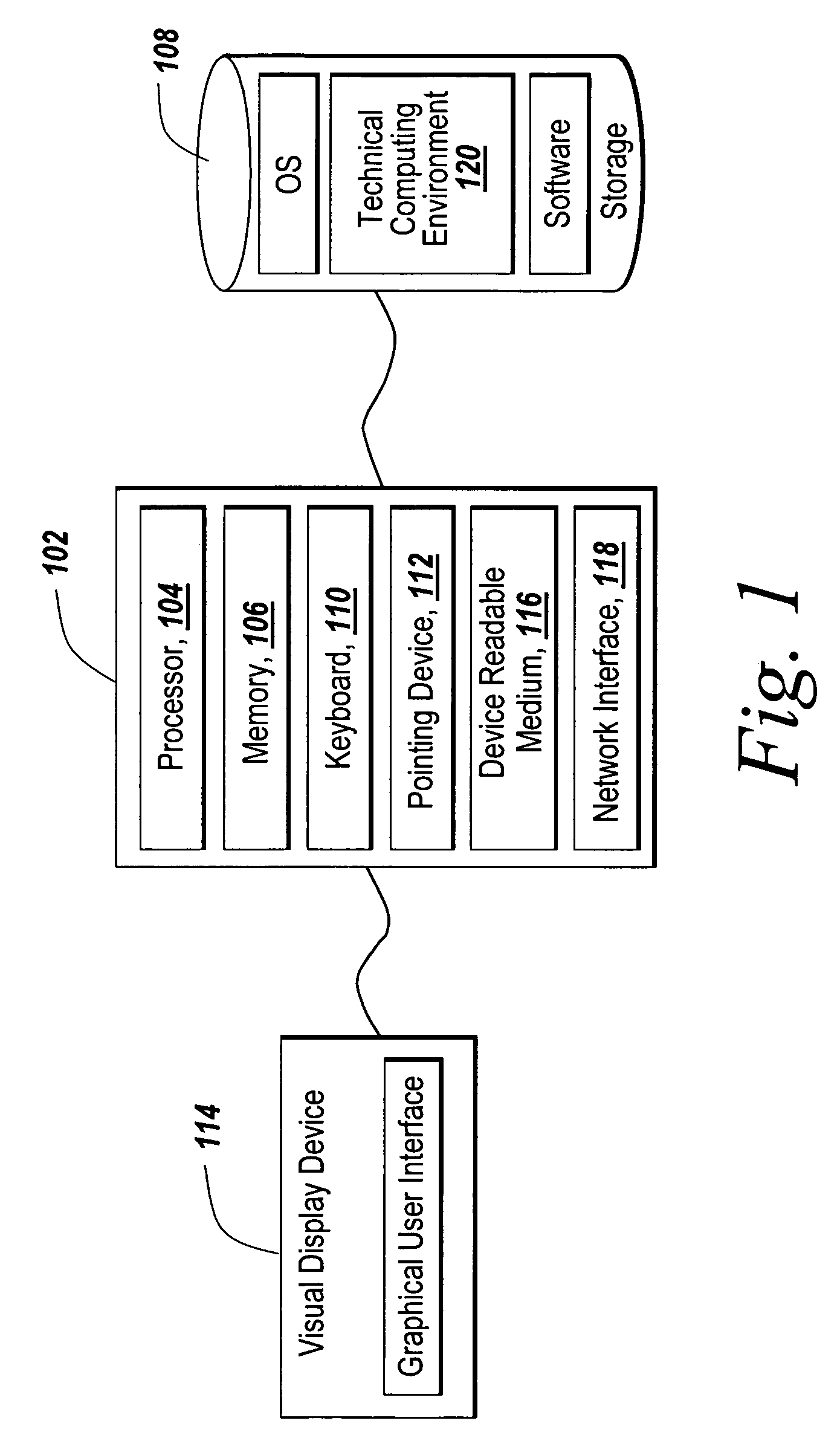

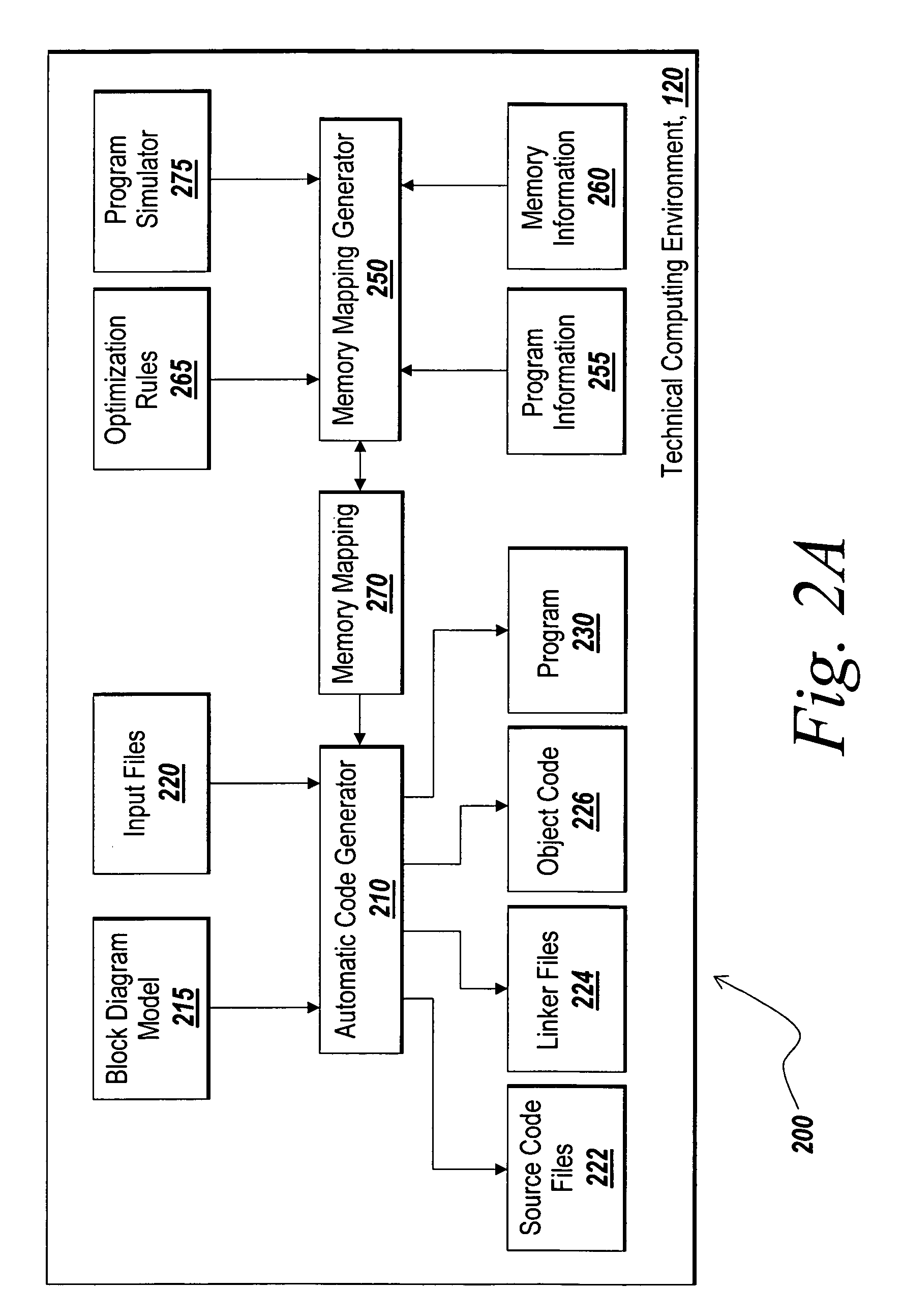

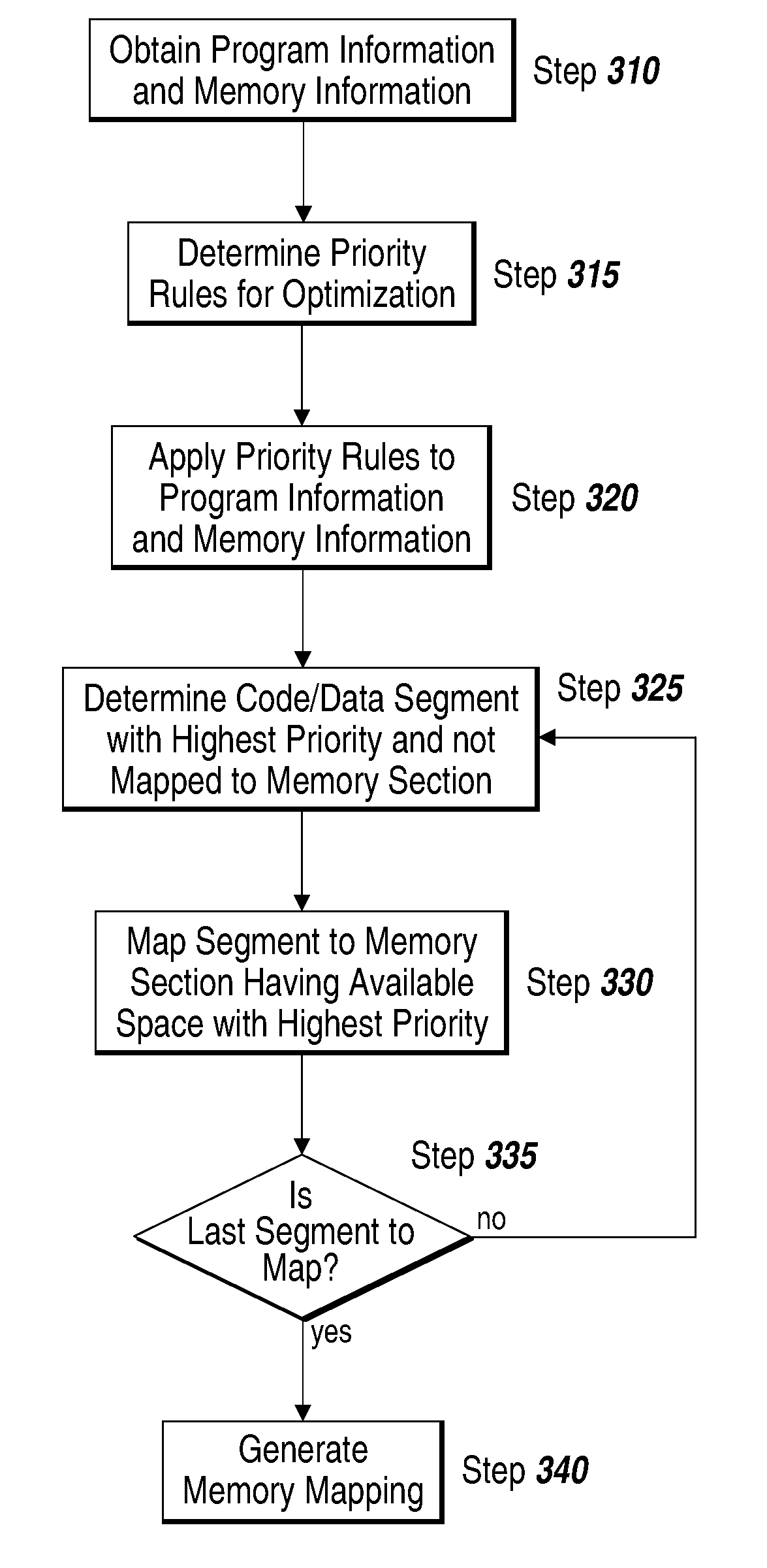

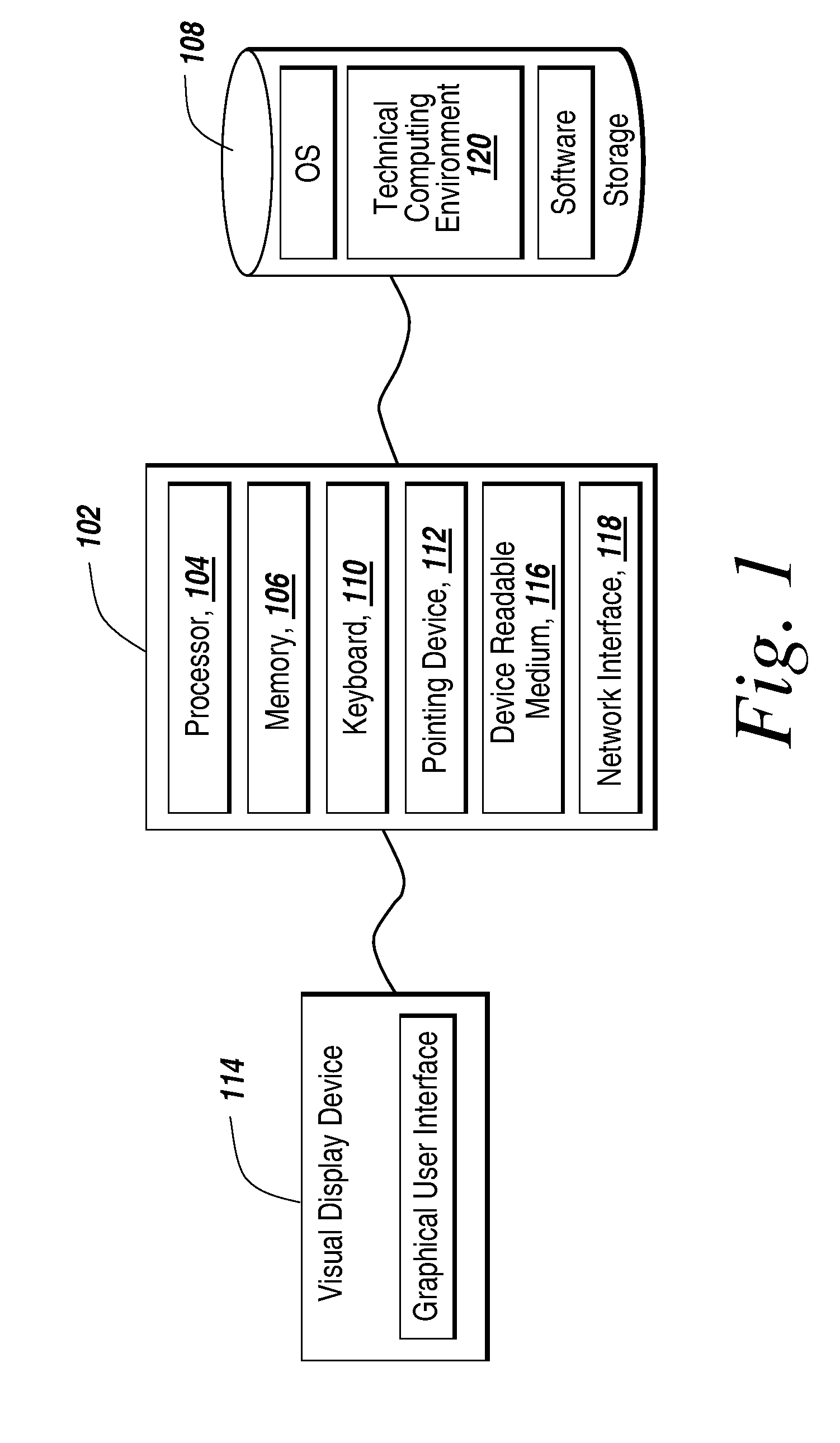

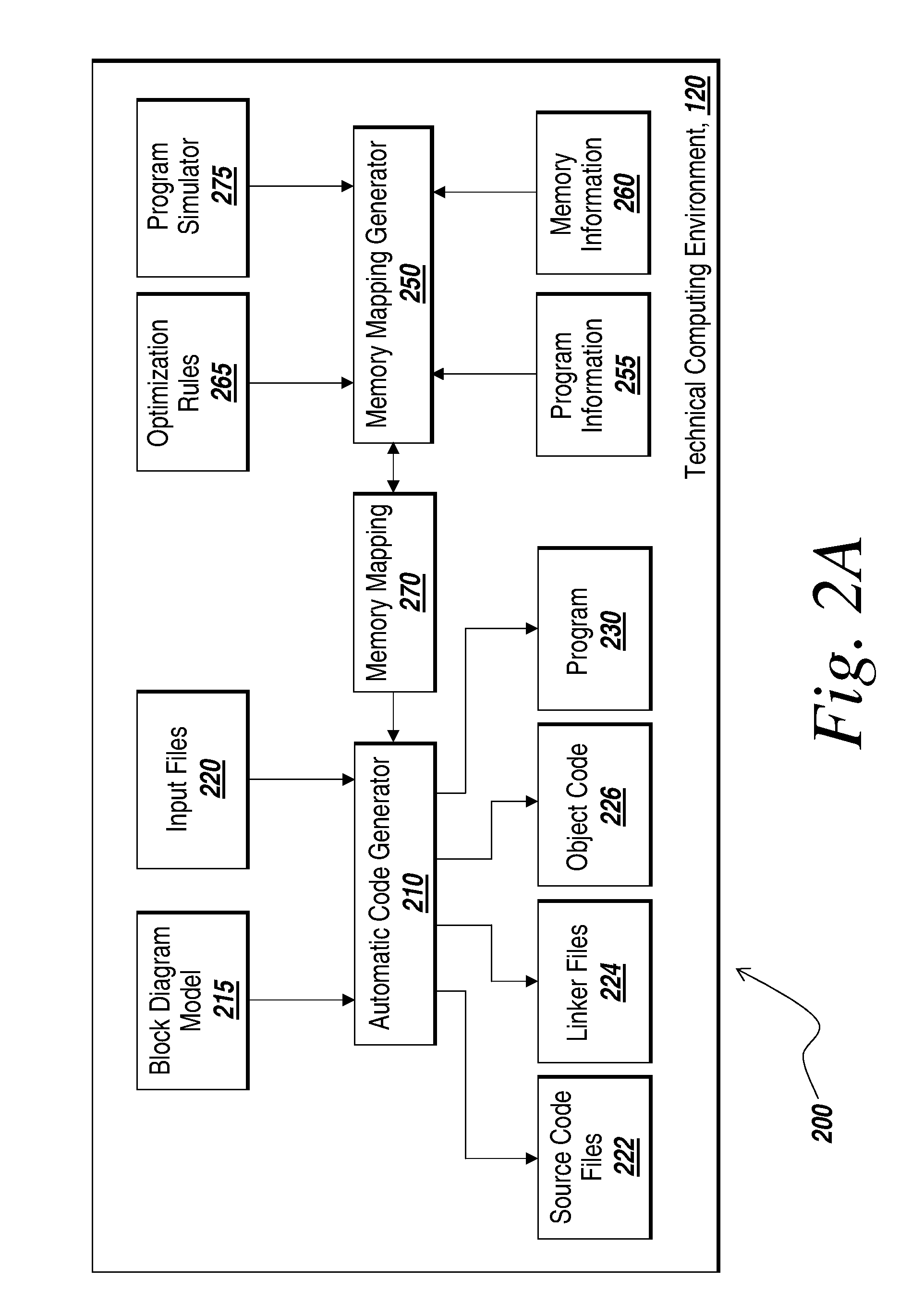

Memory mapping for single and multi-processing implementations of code generated from a block diagram model

Active · US7584465B1 · Improve programming performance · Visual/graphical programming · Specific program execution arrangements · Graphics · Model method

Methods and systems are provided for automatically generating code from a graphical model representing a design to be implemented on components of a target computational hardware device. During the automatic code generating process, a memory mapping is automatically determined and generated to provide an optimization of execution of the program on the target device. The optimized memory mapping is incorporated into building the program executable from the automatically generated code of the graphical model.

Owner:THE MATHWORKS INC

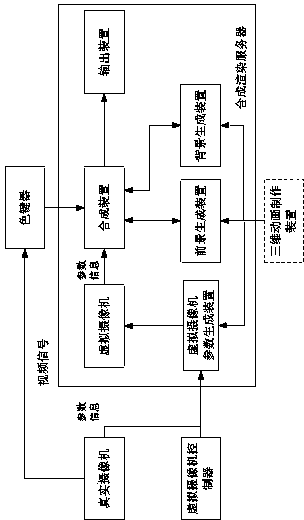

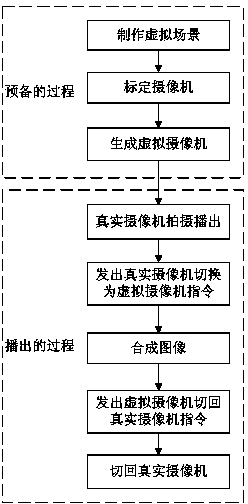

Virtual camera and real camera switching system and method

Active · CN104349020A · Make up for limitations · Realize automatic interpolation processing · Television system details · Color television details · Output device · Virtual camera

The invention relates to a system and method for switching between a virtual camera and a real camera. The system comprises a real camera connected to a chroma key device, and a synthesis rendering server connected to a virtual camera controller. A virtual camera parameter generating device, connected to the real camera and the virtual camera controller, is arranged inside the synthesis rendering server. A virtual camera and the virtual camera parameter generating device are connected to a synthesis device, which is in turn connected to the chroma key device. A foreground generating device, a background generating device and an output device, each connected to the synthesis device, are also arranged inside the synthesis rendering server. According to the method, virtual foregrounds, virtual backgrounds and virtual persons are produced on site and quickly composited; operating the virtual camera controller switches between the live real scene and the virtual scene; the limitations of the real camera when shooting a virtual object are compensated; and the expressiveness of virtual studio programs is greatly improved.

Owner:BEIJING DAYANG TECH DEV

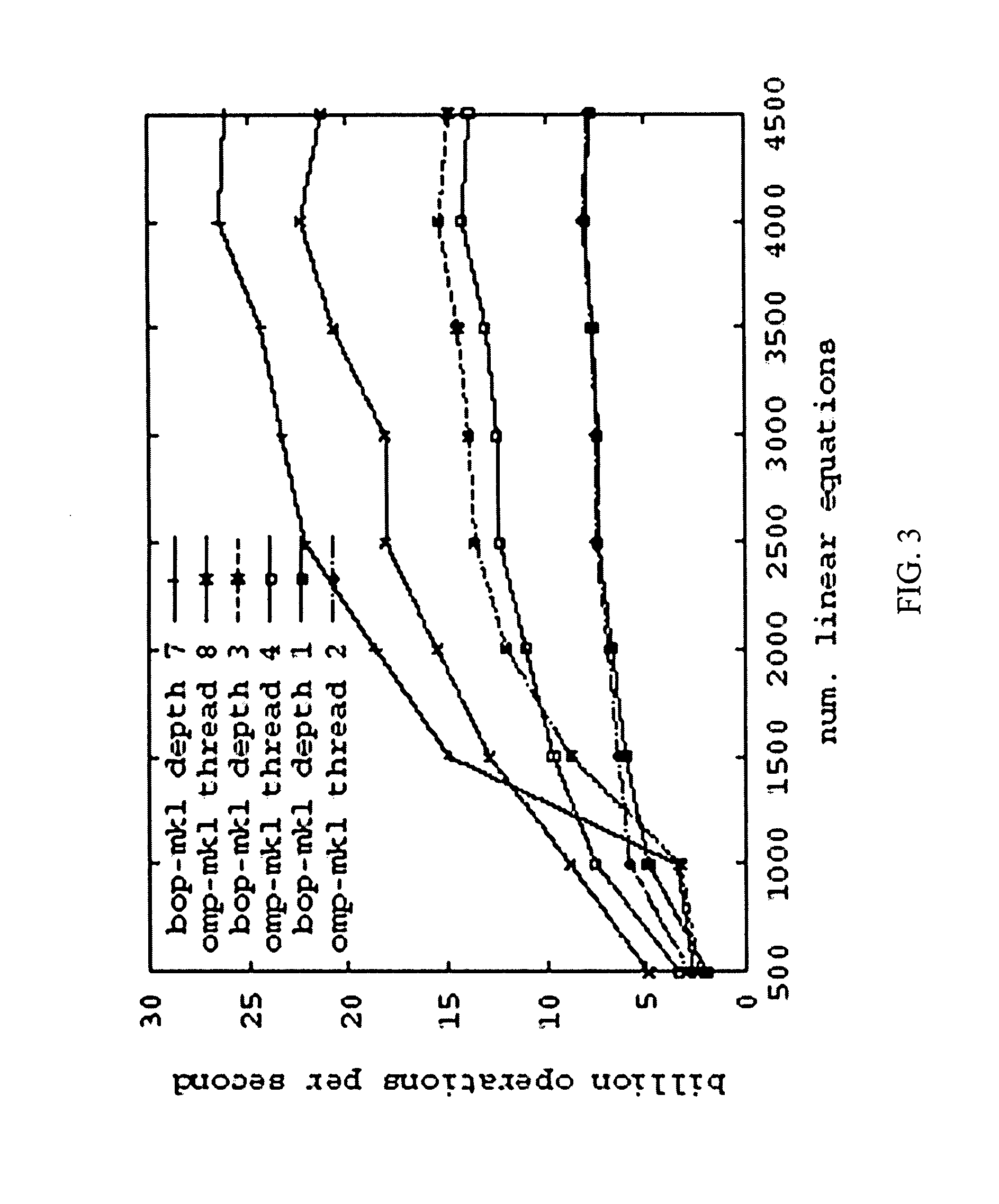

Parallel programming using possible parallel regions and its language profiling compiler, run-time system and debugging support

Inactive · US8549499B1 · Ensure performance · Improve programming performance · Software engineering · Digital computer details · Parallel programming model · Running time

A method of dynamic parallelization for programs in systems having at least two processors includes examining computer code of a program to be performed by the system, determining a largest possible parallel region in the computer code, classifying data to be used by the program based on a usage pattern and initiating multiple, concurrent processes to perform the program. The multiple, concurrent processes ensure a baseline performance that is at least as efficient as a sequential performance of the computer code.

Owner:UNIVERSITY OF ROCHESTER

Control and provisioning in a data center network with at least one central controller

Active · US20130279909A1 · Effective bandwidth · Reducing physical interconnectivity requirement · Multiplex system selection arrangements · Electromagnetic network arrangements · Interconnectivity · Data center

Data center networks that employ optical network topologies and optical nodes to efficiently allocate bandwidth within the data center networks, while reducing the physical interconnectivity requirements of the data center networks. Such data center networks employ at least one central controller for controlling and provisioning computing resources within the data center networks based at least in part on the network topology and an application component topology, thereby enhancing overall application program performance.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

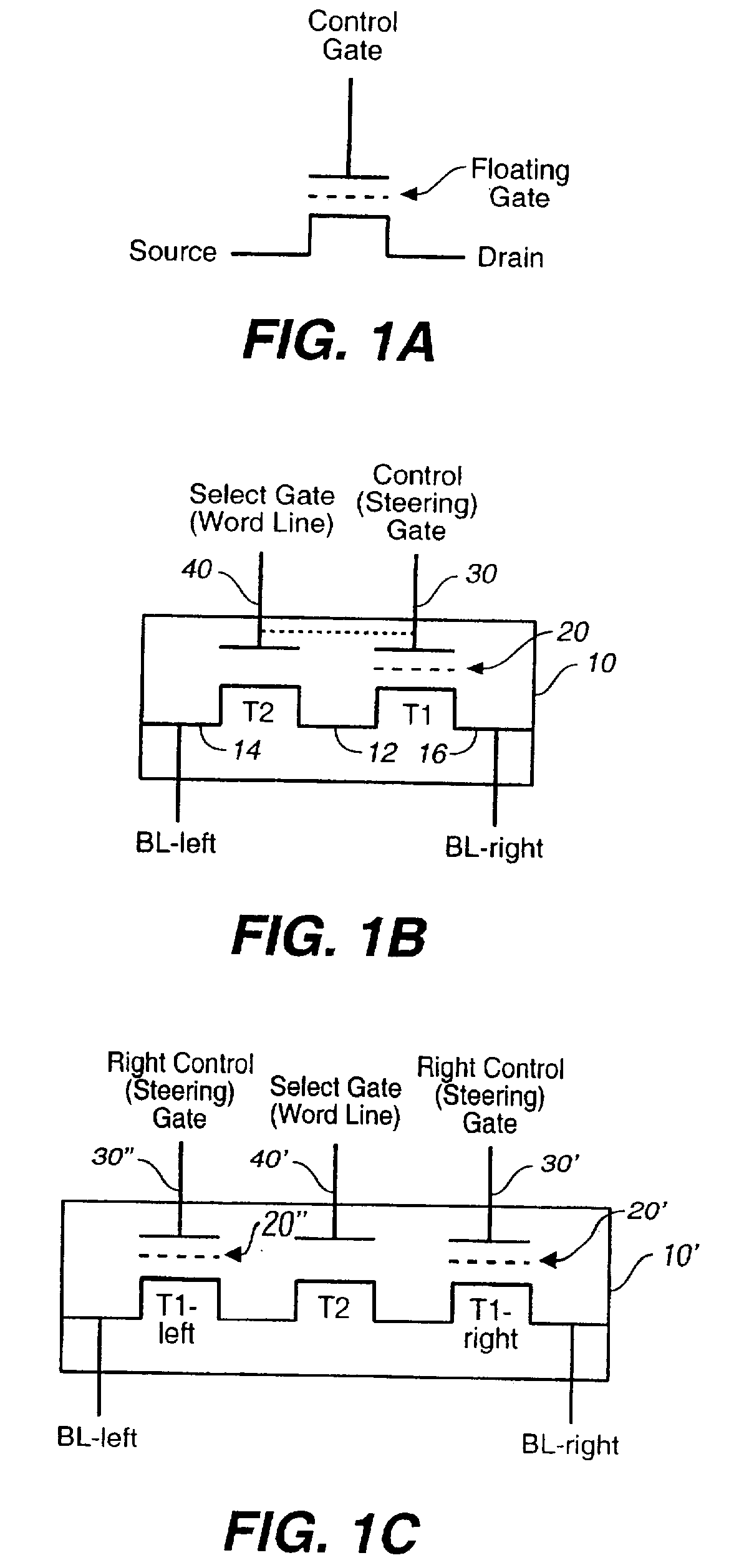

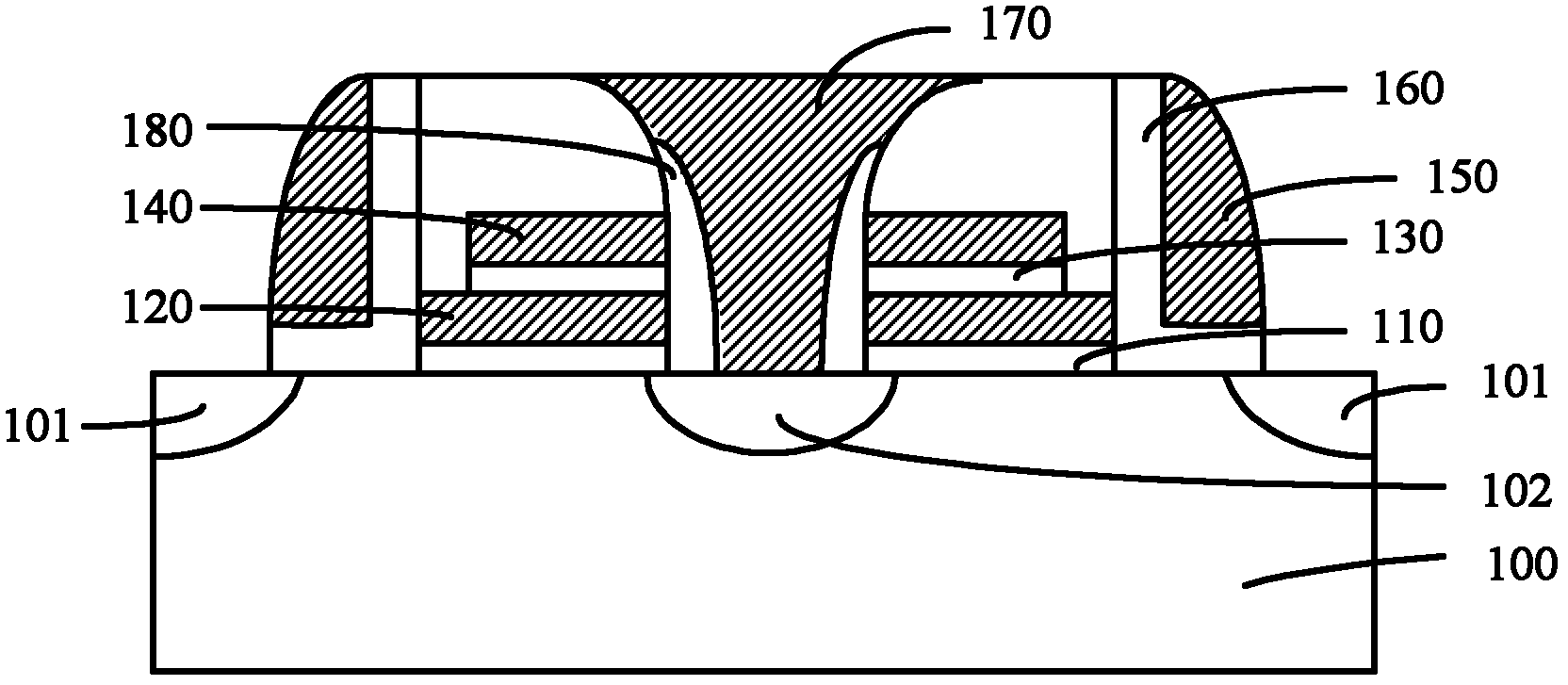

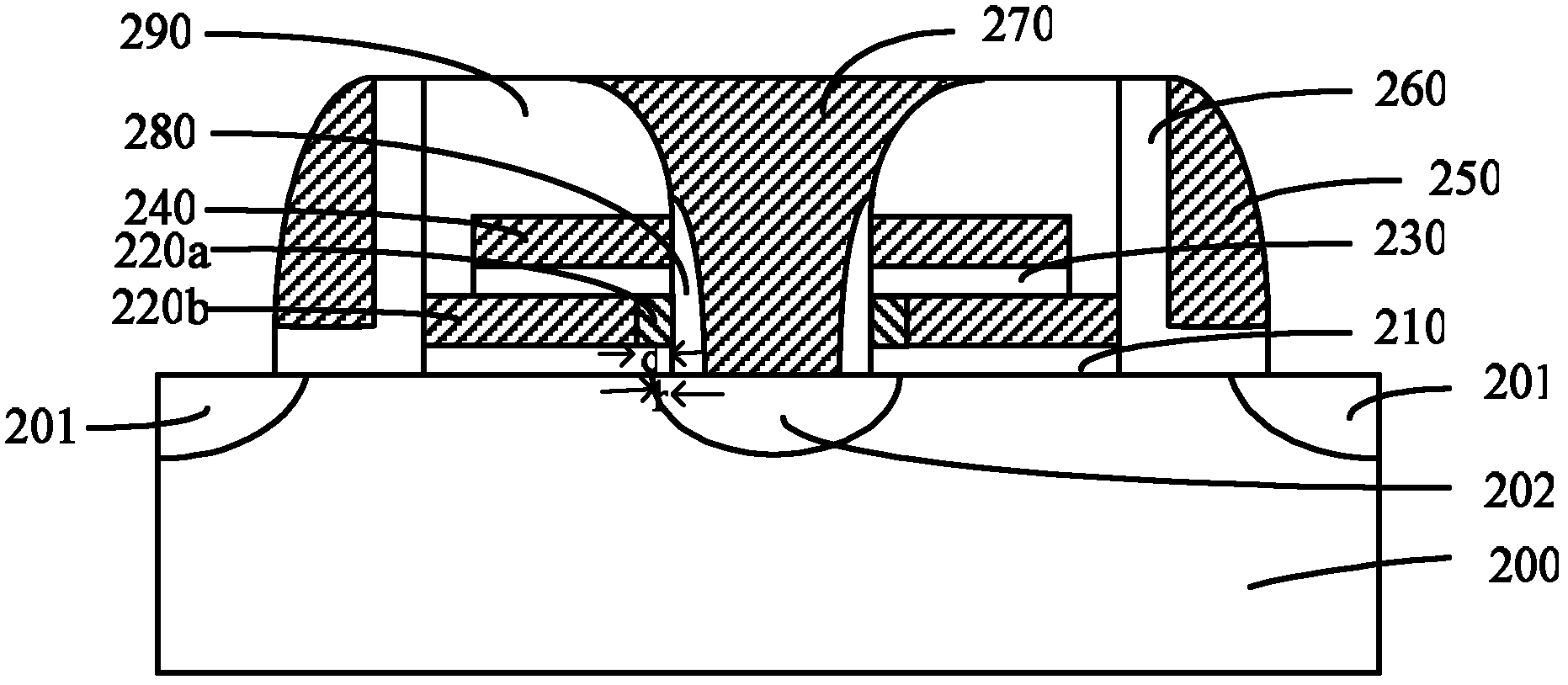

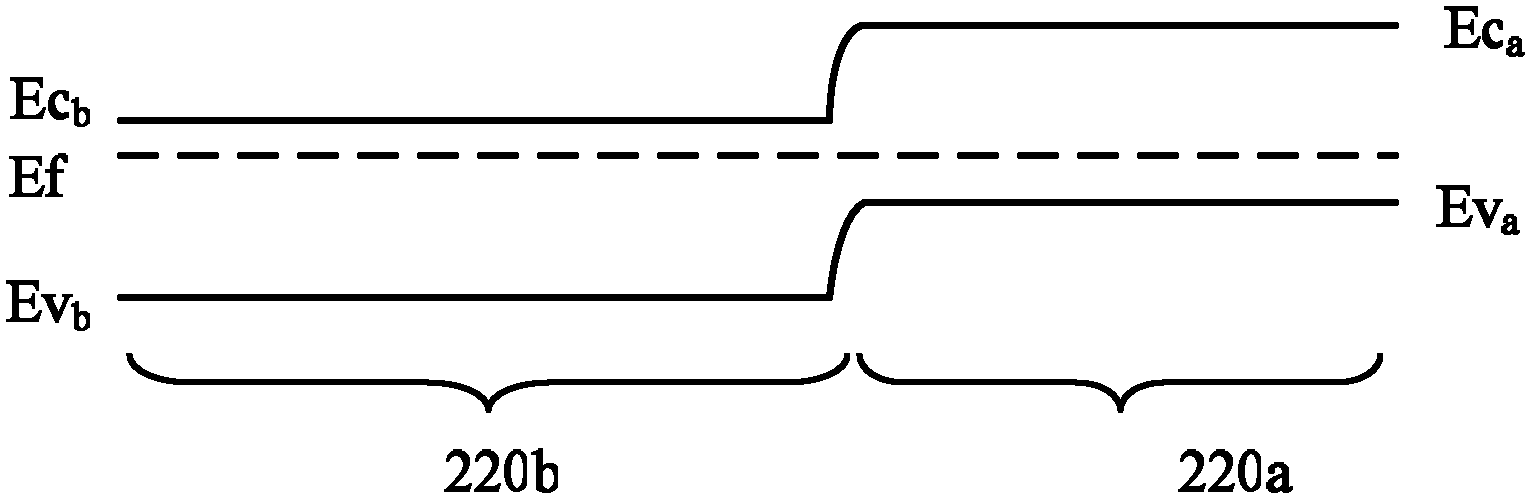

Flash memory unit for shared source line and forming method thereof

Active · CN102315252A · Improve coupling coefficient · Improved Stress Reliability · Solid-state devices · Semiconductor/solid-state device manufacturing · Gate dielectric · Engineering

The embodiment of the invention provides a flash memory unit for a shared source line and a forming method thereof. The flash memory unit comprises a semiconductor substrate, a source line, a floating gate dielectric layer, a floating gate, a control gate dielectric layer, a control gate, side wall dielectric layers, side walls, a tunneling oxide layer, a word line, a drain electrode and a source electrode. The source line is positioned on the surface of the semiconductor substrate. The floating gate dielectric layer, the floating gate, the control gate dielectric layer and the control gate are positioned in sequence on the surface of the semiconductor substrate on both sides of the source line. The side wall dielectric layers are positioned between the source line and the floating gate as well as between the source line and the control gate. The side walls are positioned on the sides of the floating gate and the control gate that are far from the source line. The tunneling oxide layer is adjacent to the side wall and positioned on the surface of the semiconductor substrate, and the word line is positioned on the surface of the tunneling oxide layer. The drain electrode is positioned in the semiconductor substrate on the side of the word line that is far from the source line, and the source electrode is positioned in the semiconductor substrate directly opposite the source line. The floating gate has a p-type doped end close to the source line, and the other parts of the floating gate are n-type doped.

Owner:SHANGHAI HUAHONG GRACE SEMICON MFG CORP

Memory mapping for single and multi-processing implementations of code generated from a block diagram model

Active · US8230395B1 · Improve programming performance · Visual/graphical programming · Specific program execution arrangements · Graphics · Model method

Methods and systems are provided for automatically generating code from a graphical model representing a design to be implemented on components of a target computational hardware device. During the automatic code generating process, a memory mapping is automatically determined and generated to provide an optimization of execution of the program on the target device. The optimized memory mapping is incorporated into building the program executable from the automatically generated code of the graphical model.

Owner:THE MATHWORKS INC

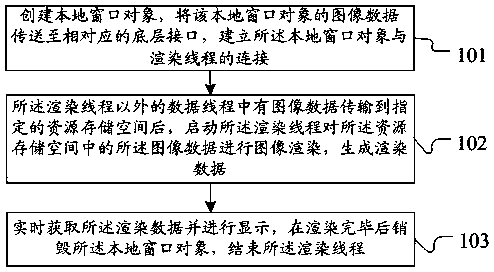

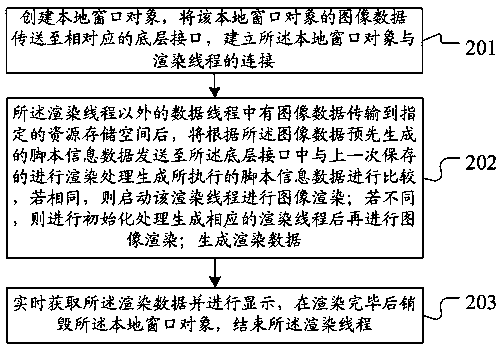

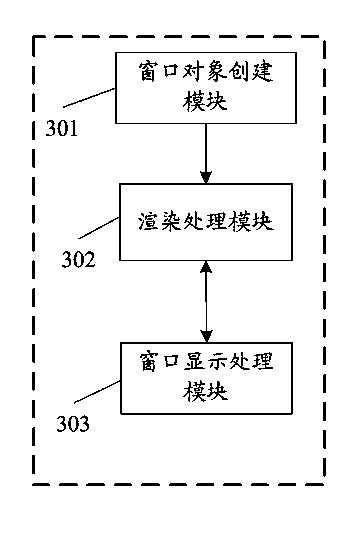

Android-based method and system for constructing image rendering engine

Active · CN103617027A · Increase openness · Improve operational efficiency · Specific program execution arrangements · Computer graphics (images) · Layer interface

The invention discloses an Android-based method and system for constructing an image rendering engine. The method comprises the steps of creating a local window object, transmitting image data of the local window object to a corresponding bottom-layer interface, and establishing connection between the local window object and a rendering thread; after the image data in data threads except for the rendering thread are transmitted to an appointed resource storage space, starting the rendering thread to conduct image rendering on the image data in the resource storage space, and generating rendering data; obtaining the rendering data in real time to display the rendering data, destroying the local window object after the rendering is completed, and finishing the rendering thread. According to the Android-based method and system for constructing the image rendering engine, the problem that operating efficiency of a rendering thread of a mobile device is low is solved.

Owner:ALIBABA (CHINA) CO LTD

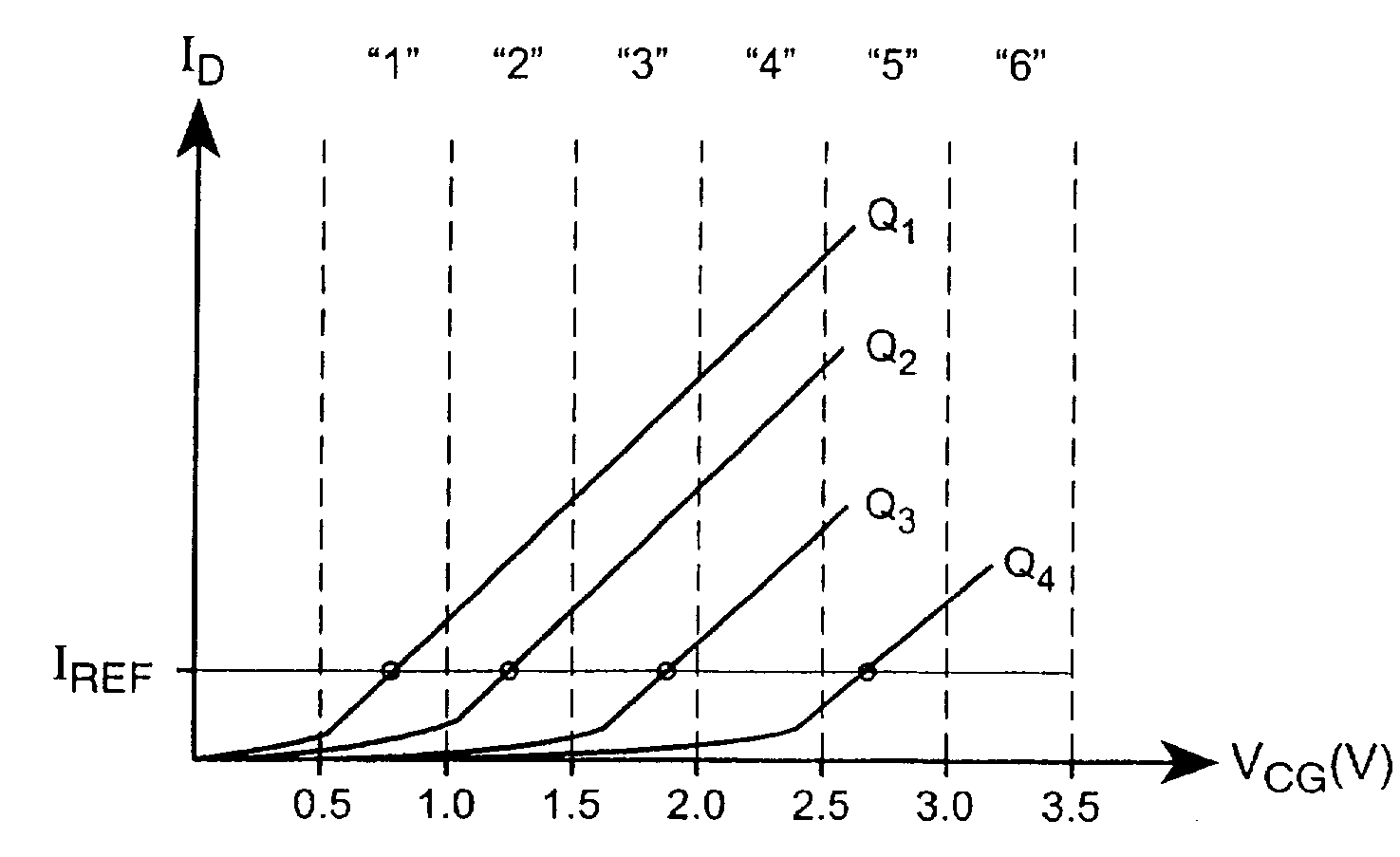

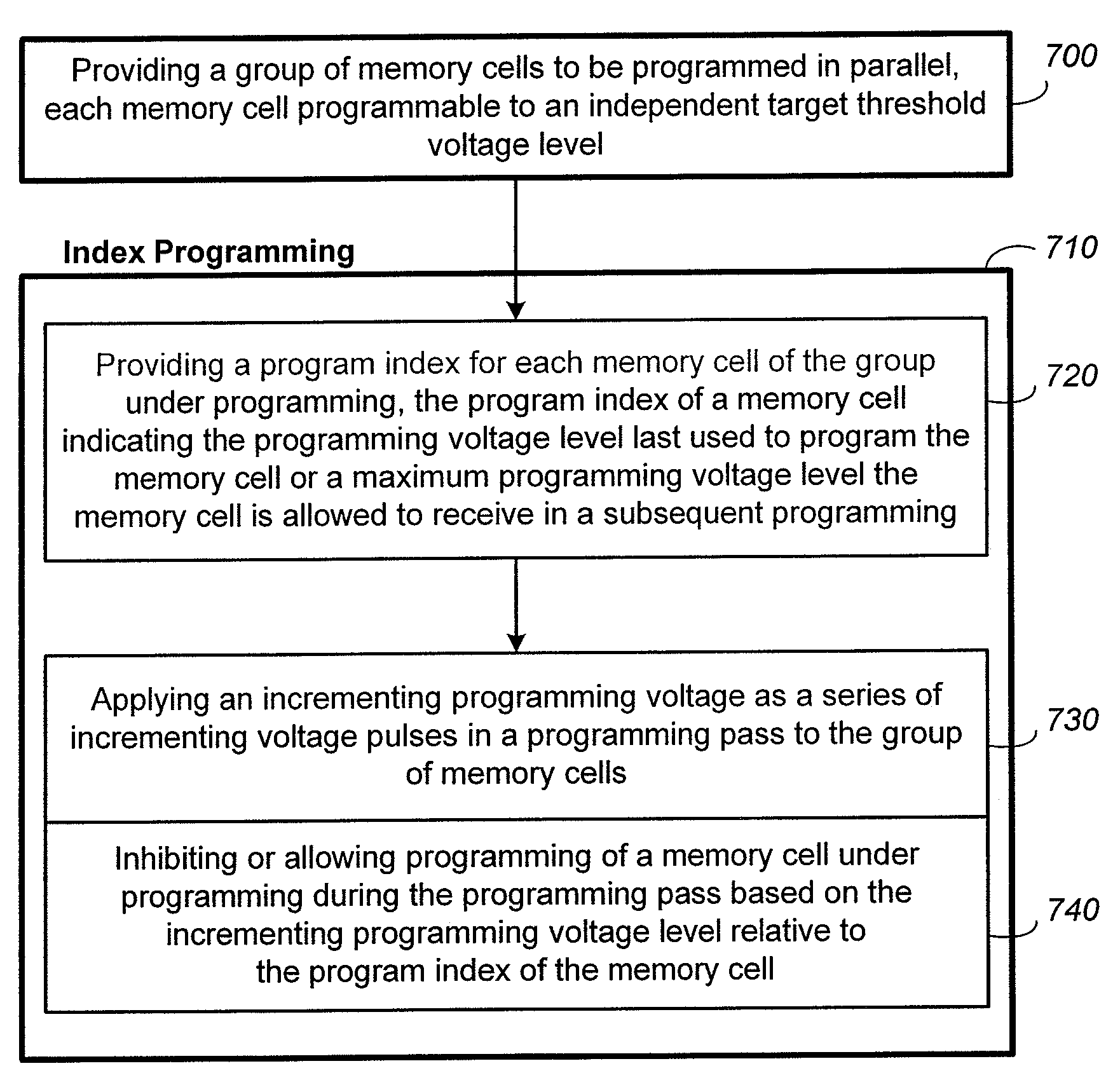

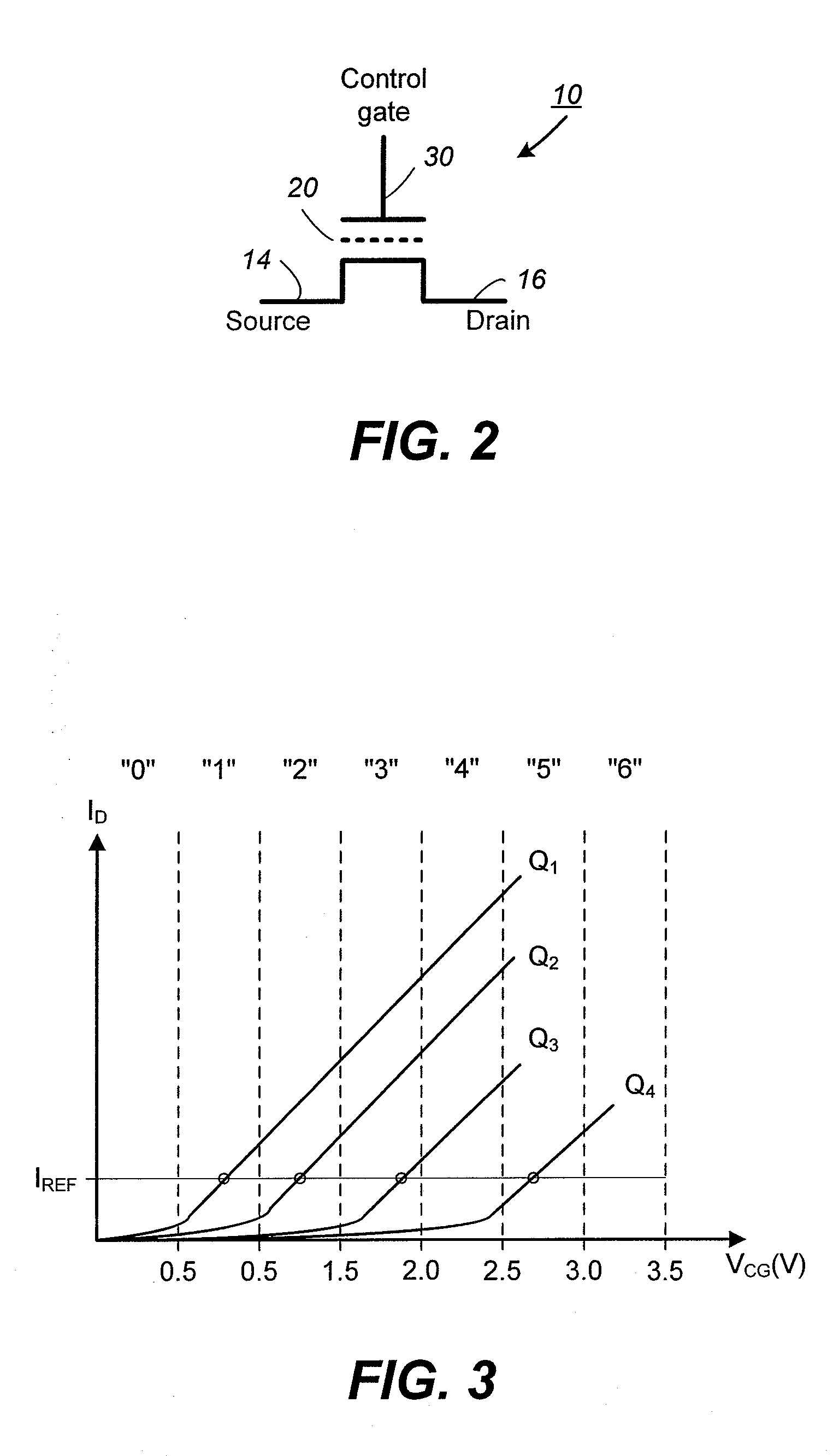

Nonvolatile Memory with Correlated Multiple Pass Programming

Active · US20090310421A1 · Fine step size · Improve programming performance · Read-only memories · Digital storage · Image resolution · Engineering

A group of memory cells is programmed respectively to their target states in parallel using a multiple-pass programming method in which the programming voltages in the multiple passes are correlated. Each programming pass employs a programming voltage in the form of a staircase pulse train with a common step size, and each successive pass has the staircase pulse train offset from that of the previous pass by a predetermined offset level. The predetermined offset level is less than the common step size and may be less than or equal to the predetermined offset level of the previous pass. Thus, the same programming resolution can be achieved over multiple passes using fewer programming pulses than a conventional method in which each successive pass uses a programming staircase pulse train with a finer step size. The multiple-pass programming serves to tighten the distribution of the programmed thresholds while reducing the overall number of programming pulses.

Owner:SANDISK TECH LLC
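
The relationship between the passes in this abstract can be made concrete with a short calculation. All numbers below are invented for illustration (voltages in millivolts), and `correlated_passes` is a hypothetical helper: every pass uses the same step size, and each successive pass starts a fixed offset above the previous one, with each offset smaller than the step.

```python
def correlated_passes(start_mv, step_mv, pulses, offsets_mv):
    """Return one staircase (list of pulse voltages in mV) per programming pass."""
    for off in offsets_mv:
        if not 0 < off < step_mv:
            raise ValueError("each offset must be smaller than the common step size")
    passes = []
    first = start_mv
    for off in [0] + list(offsets_mv):
        first += off  # shift this pass relative to the previous one
        passes.append([first + i * step_mv for i in range(pulses)])
    return passes

# Illustrative values only: 1000 mV common step, offsets of 500 mV then 250 mV.
passes = correlated_passes(start_mv=10000, step_mv=1000, pulses=4, offsets_mv=[500, 250])
for number, staircase in enumerate(passes, start=1):
    print(f"pass {number}: {staircase}")
```

In this example the three passes together place pulse levels between the coarse steps of the first pass while each pass keeps the same 1000 mV step size.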

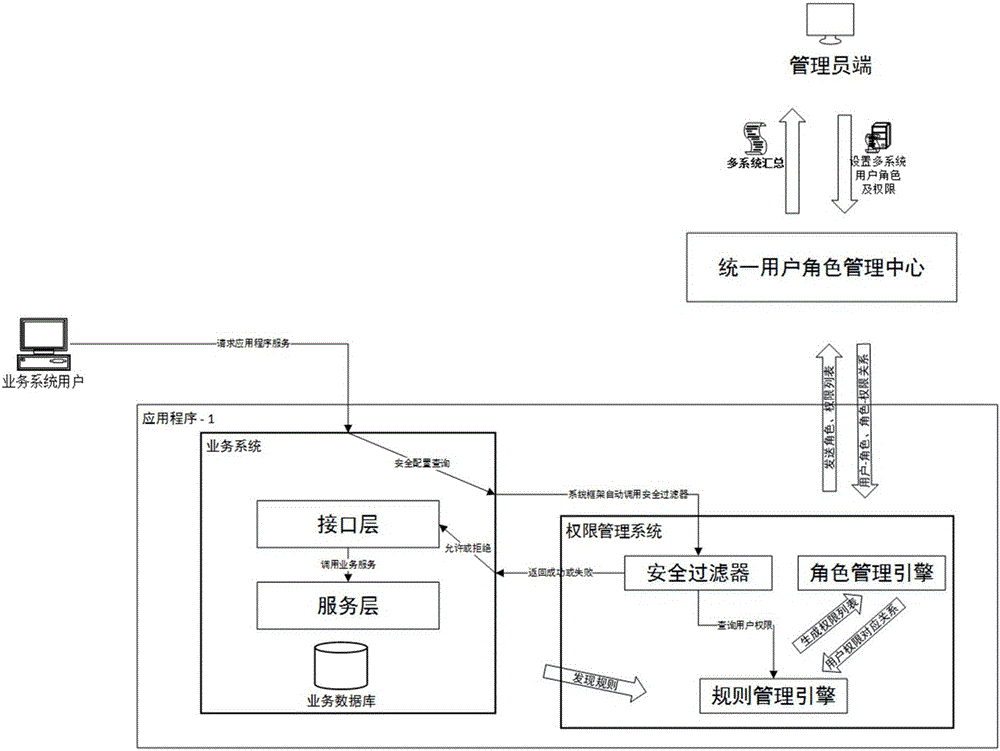

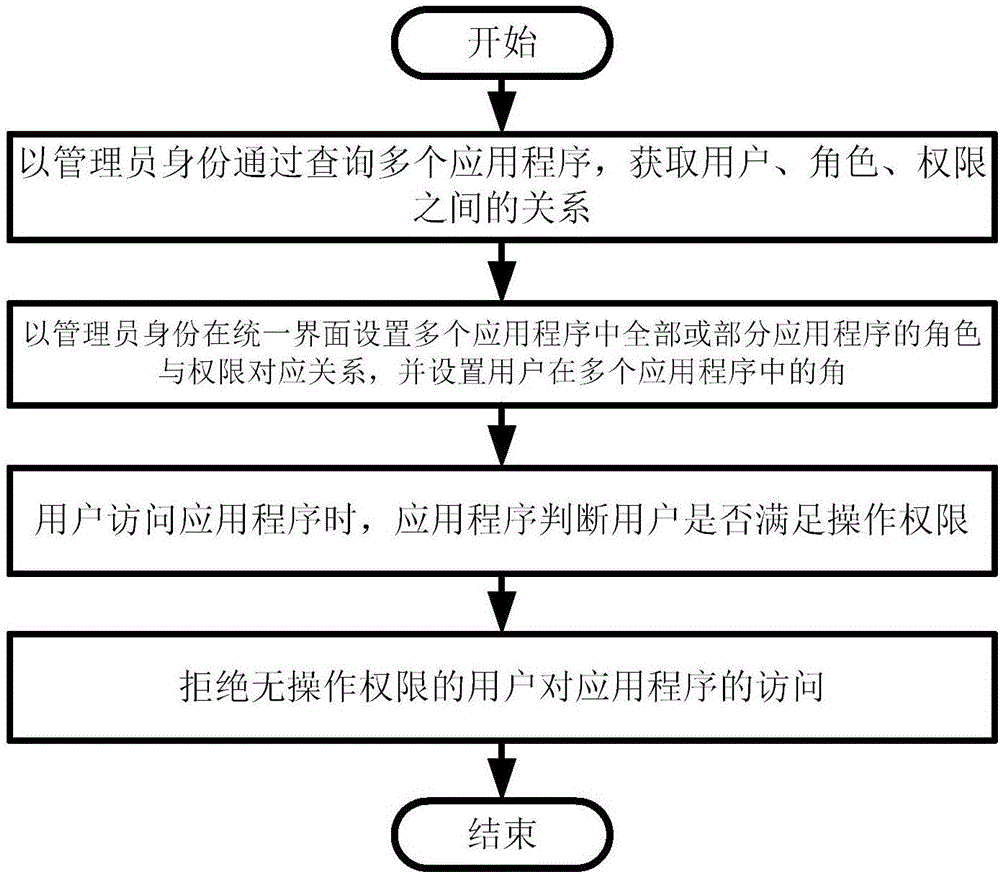

Multi-system role authority management method and system based on unified interface

Active · CN106790001A · Reduce complexity · Improve reliability · Data switching networks · Partial application · Application software

The invention provides a multi-system role authority management method and system based on a unified interface. The method comprises the following steps. Step 1: as an administrator, query multiple application programs to obtain the relationships among users, roles and authorities. Step 2: as the administrator, set on the unified interface the correspondence between roles and authorities for all or some of the application programs, and set the roles of the user in those application programs. Step 3: when the user accesses an application program, the application program judges whether the user has operation authority. Step 4: deny access to the application programs for any user who has no operation authority.

Owner:CETC CHINACLOUD INFORMATION TECH CO LTD
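
The four steps in this abstract map onto a small role-based access check. The sketch below is an illustration under assumed names (`UnifiedAuthority`, the `billing` application and the `auditor` role are all hypothetical); a single in-memory object stands in for the unified interface.

```python
class UnifiedAuthority:
    """One place to manage role/authority mappings for several applications."""

    def __init__(self):
        self.role_permissions = {}  # (app, role) -> set of permitted operations
        self.user_roles = {}        # (app, user) -> set of roles

    def set_role_permissions(self, app, role, operations):
        self.role_permissions[(app, role)] = set(operations)

    def assign_role(self, app, user, role):
        self.user_roles.setdefault((app, user), set()).add(role)

    def has_authority(self, app, user, operation):
        roles = self.user_roles.get((app, user), set())
        return any(operation in self.role_permissions.get((app, role), set())
                   for role in roles)

    def access(self, app, user, operation):
        # Steps 3 and 4: check on access, refuse users without authority.
        if not self.has_authority(app, user, operation):
            raise PermissionError(f"{user} may not {operation} in {app}")
        return f"{user} performed {operation} in {app}"

authority = UnifiedAuthority()
authority.set_role_permissions("billing", "auditor", {"read"})  # step 2
authority.assign_role("billing", "alice", "auditor")            # step 2
```

With this setup `authority.access("billing", "alice", "read")` succeeds, while a write attempt by the same user raises `PermissionError`.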

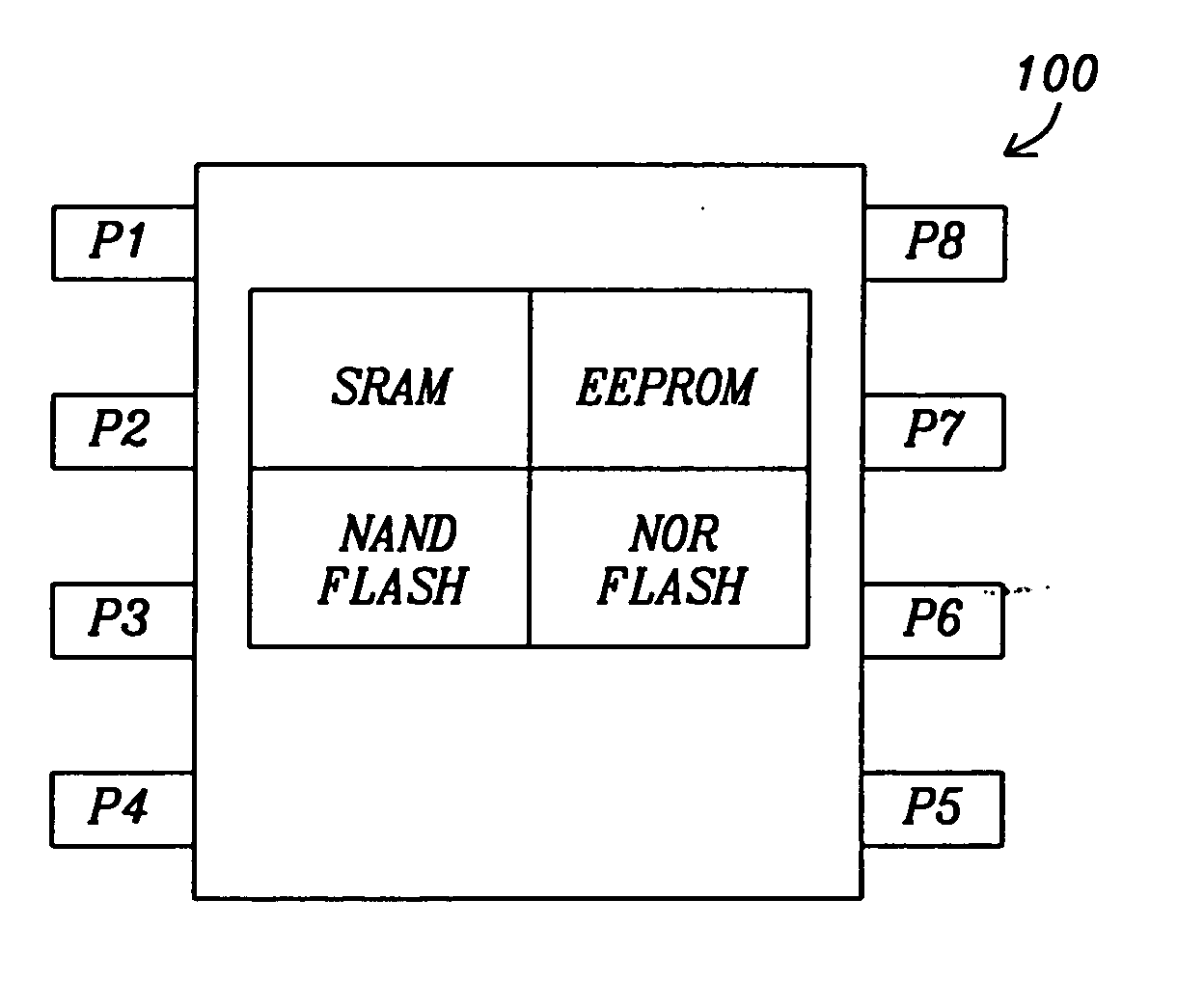

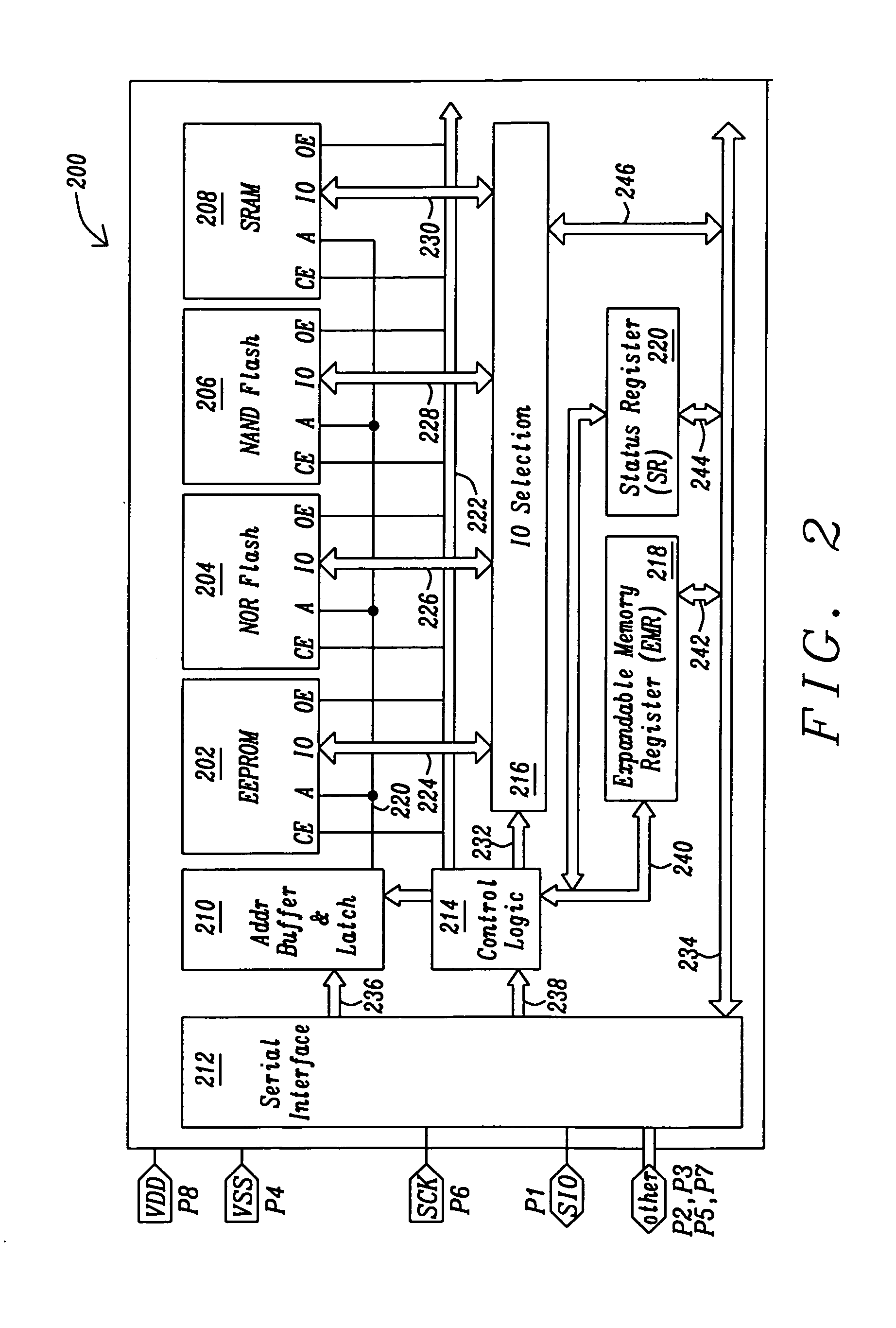

Different types of memory integrated in one chip by using a novel protocol

Inactive · US20120072647A1 · Improve programming performance · Improve read performance · Memory architecture accessing/allocation · Energy efficient ICT · Memory type · Memory chip

A semiconductor chip contains four different memory types, EEPROM, NAND Flash, NOR Flash and SRAM, and a plurality of major serial / parallel interfaces such as I2C, SPI, SDI and SQI in one memory chip. The memory chip features write-while-write and read-while-write operations as well as read-while-transfer and write-while-transfer operations. The memory chip provides eight pins, of which two are for power and up to four have no connection for specific interfaces, and uses a novel unified nonvolatile memory design that allows the aforementioned memory types to be integrated into the same semiconductor memory chip.

Owner:APLUS FLASH TECH

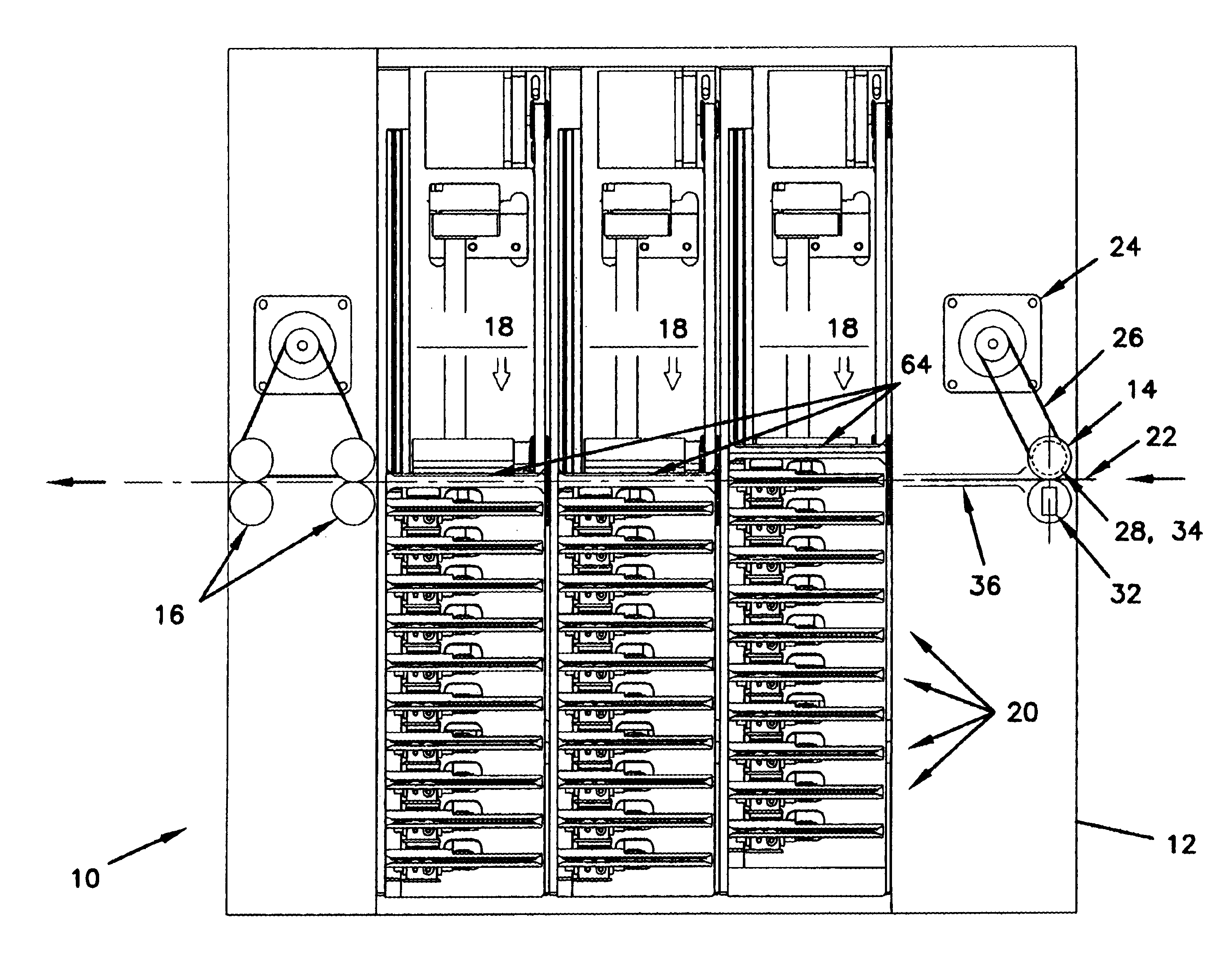

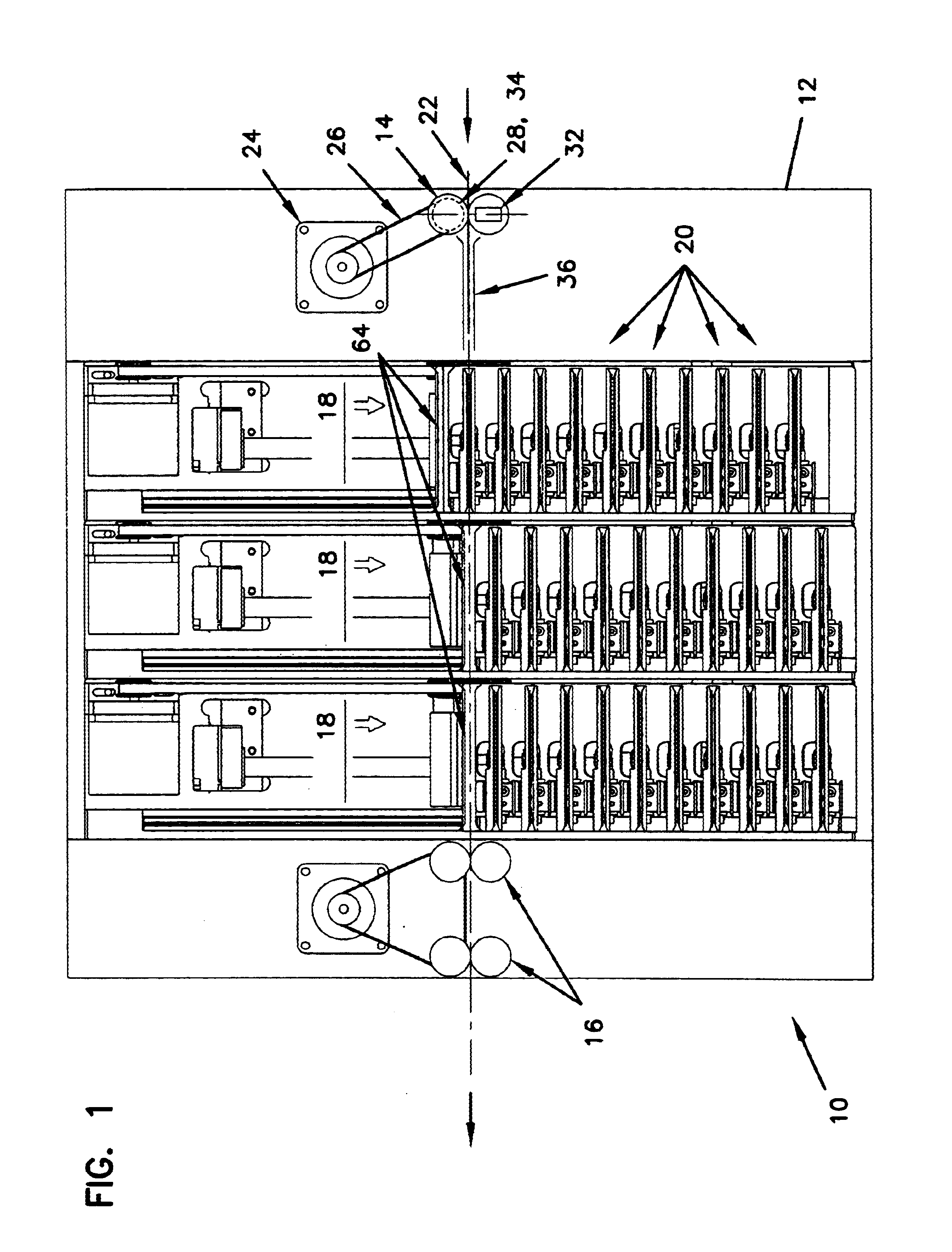

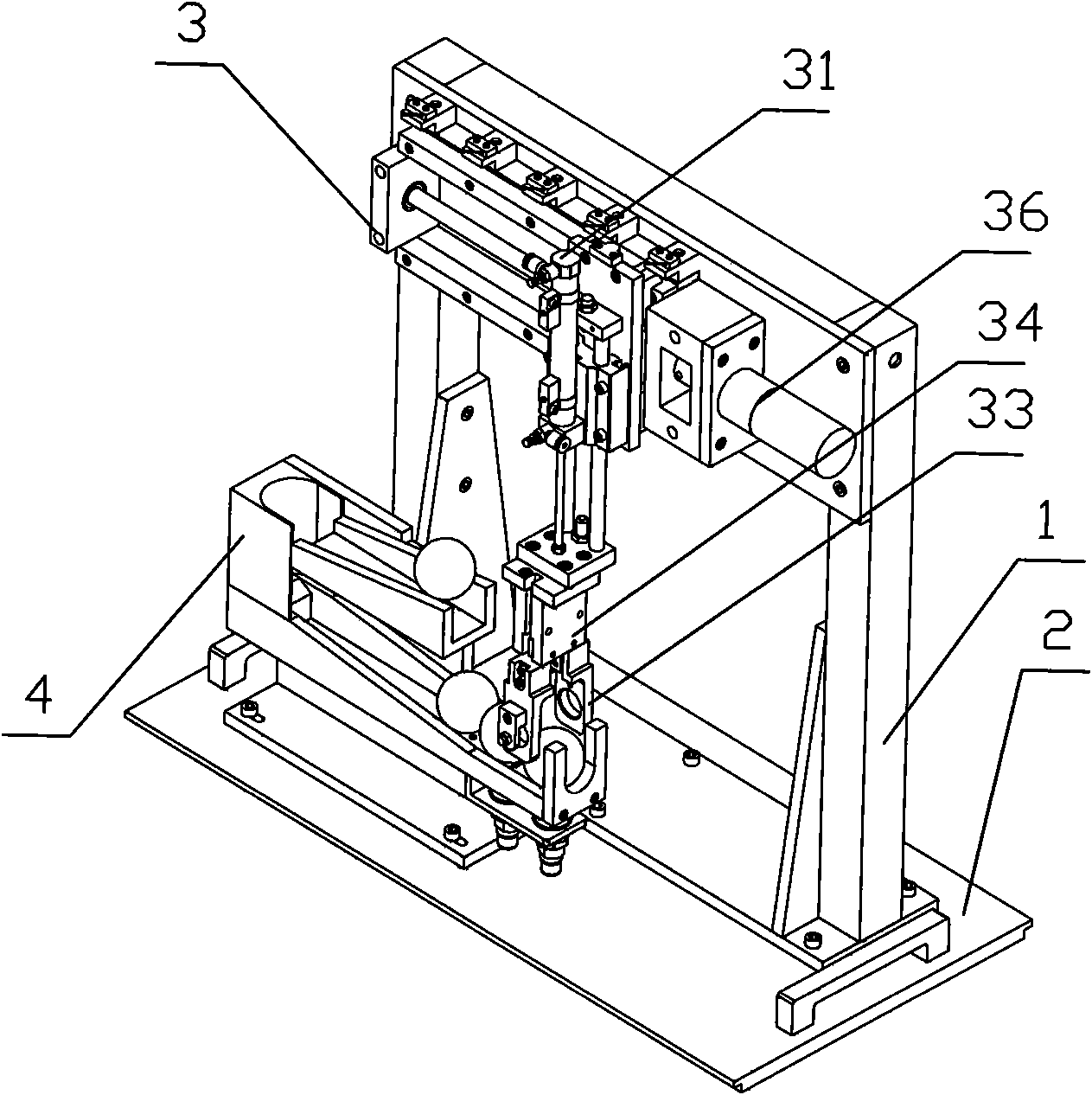

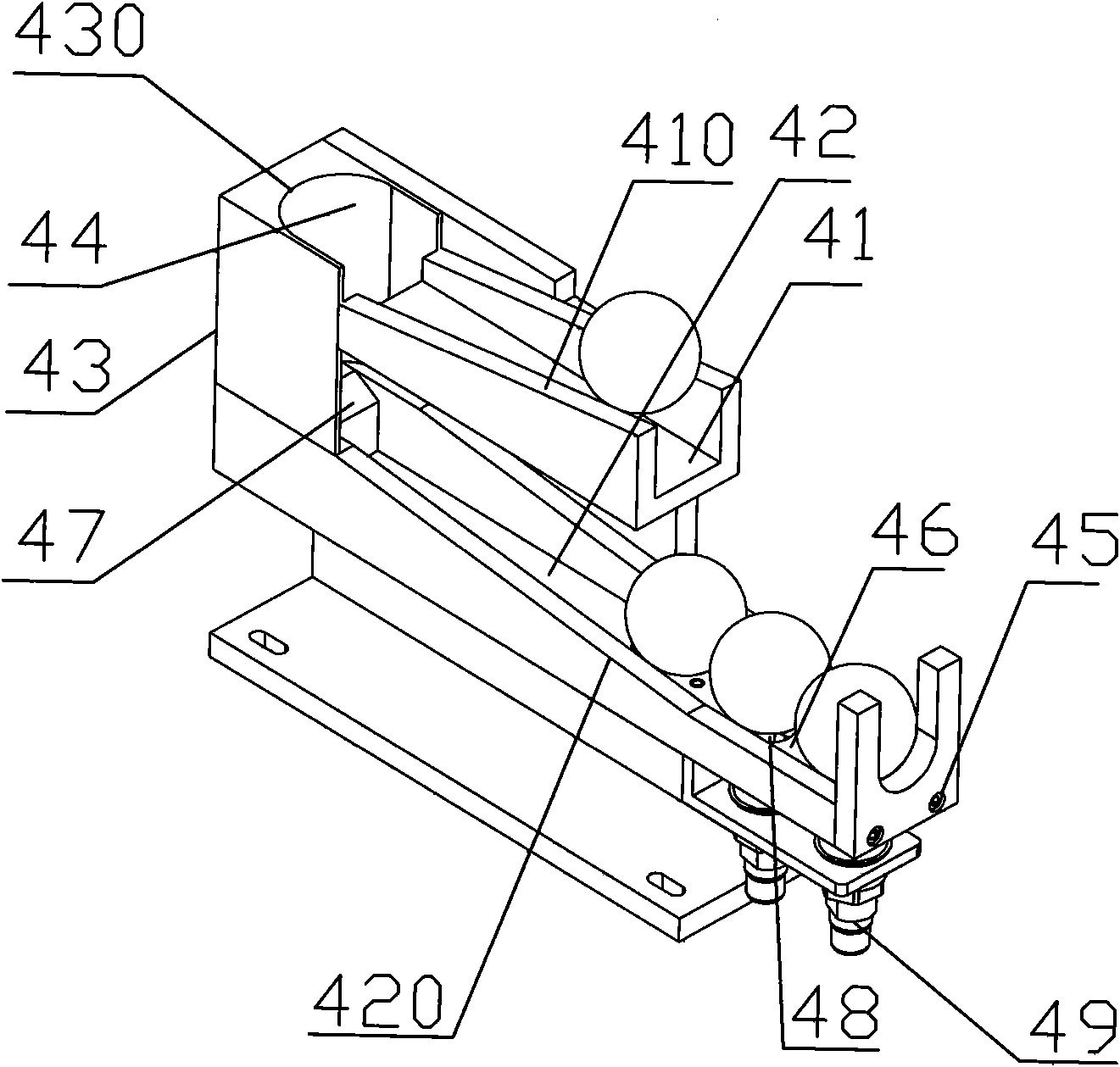

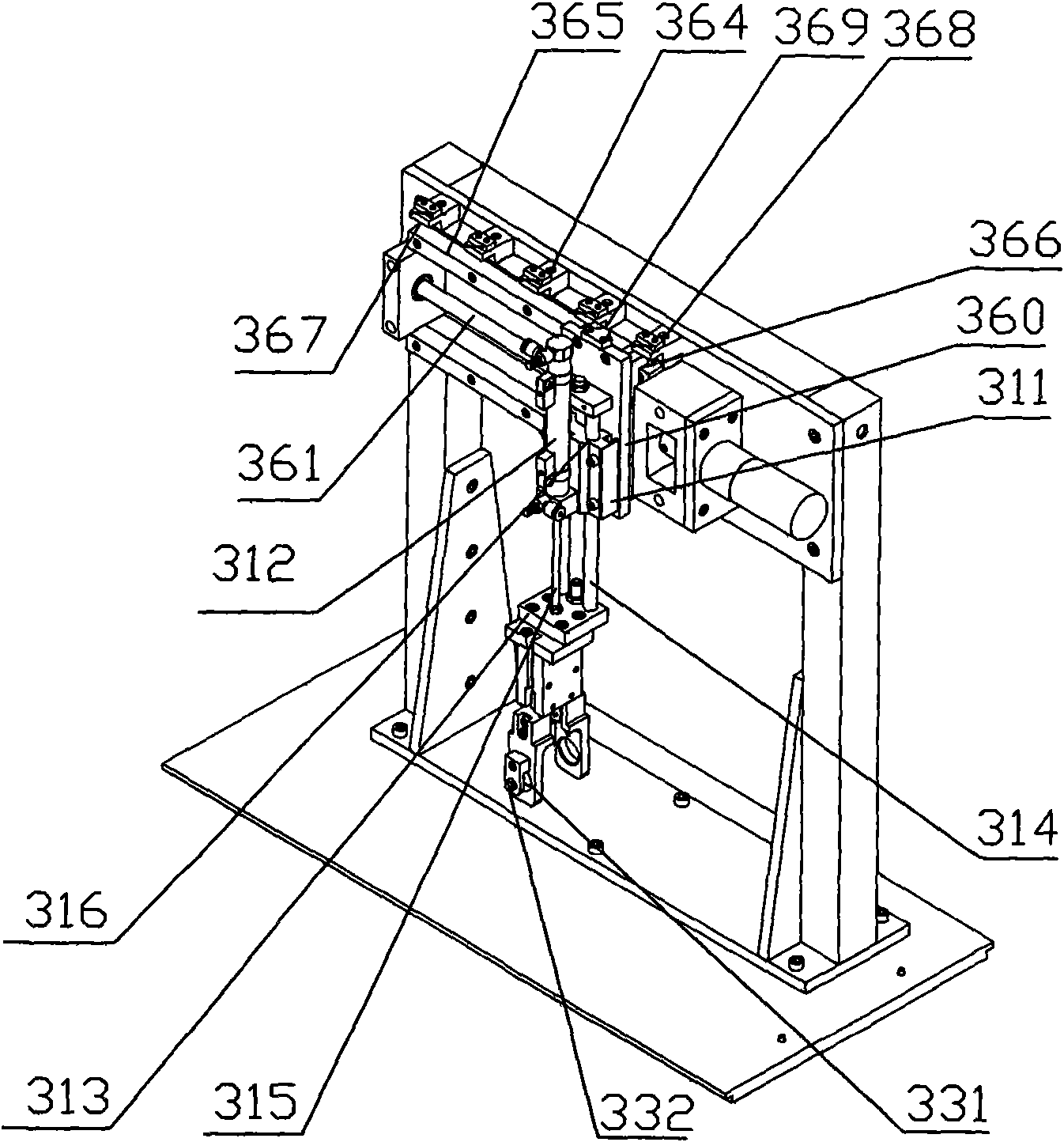

Intelligent material conveying device

Active · CN101930689A · Improve programming performance · Embodies self-circulation · Educational models · Manipulator · Production line · Ball screw

The invention relates to an intelligent material conveying device. The device comprises a bracket, a work bench, a double-shaft straight conveying device and an accepting and feeding device. The double-shaft straight conveying device comprises a mechanical gripper, a ball screw device that provides the mechanical gripper with a horizontal degree of freedom, and a guide rod cylinder device that provides the mechanical gripper with a vertical degree of freedom; the mechanical gripper is fixedly connected to the guide rod cylinder device. The accepting and feeding device has an upper slide way and a horizontal slide way, is arranged on the work bench, and is positioned below the mechanical gripper. The mechanical gripper grasps a workpiece from the horizontal slide way of the accepting and feeding device and sets it down on the upper slide way of the accepting and feeding device, so that the automatic production line operates without interruption throughout the process. The device demonstrates the self-circulating character of a production line and has a simple structure, using only two working stations.

Owner:YALONG INTELLIGENT EQUIP GRP CO LTD

Adaptive next-executing-cycle trace selection for trace-driven code optimizers

Active · US8756581B2 · Compilation is improved · Reduce the amount of solution · Error detection/correction · Software engineering · Dynamic compilation · Parallel computing

An apparatus includes a processor for executing instructions at runtime and instructions for dynamically compiling the set of instructions executing at runtime. A memory device stores the instructions to be executed and the dynamic compiling instructions. A memory device serves as a trace buffer that stores traces while they are being formed during the dynamic compiling. The dynamic compiling instructions include a next-executing-cycle (N-E-C) trace selection process for forming traces for the instructions executing at runtime. When forming traces, the N-E-C trace selection process continues through an existing trace-head, without terminating the recording of the current trace, if an existing trace-head is encountered.

Owner:GLOBALFOUNDRIES US INC

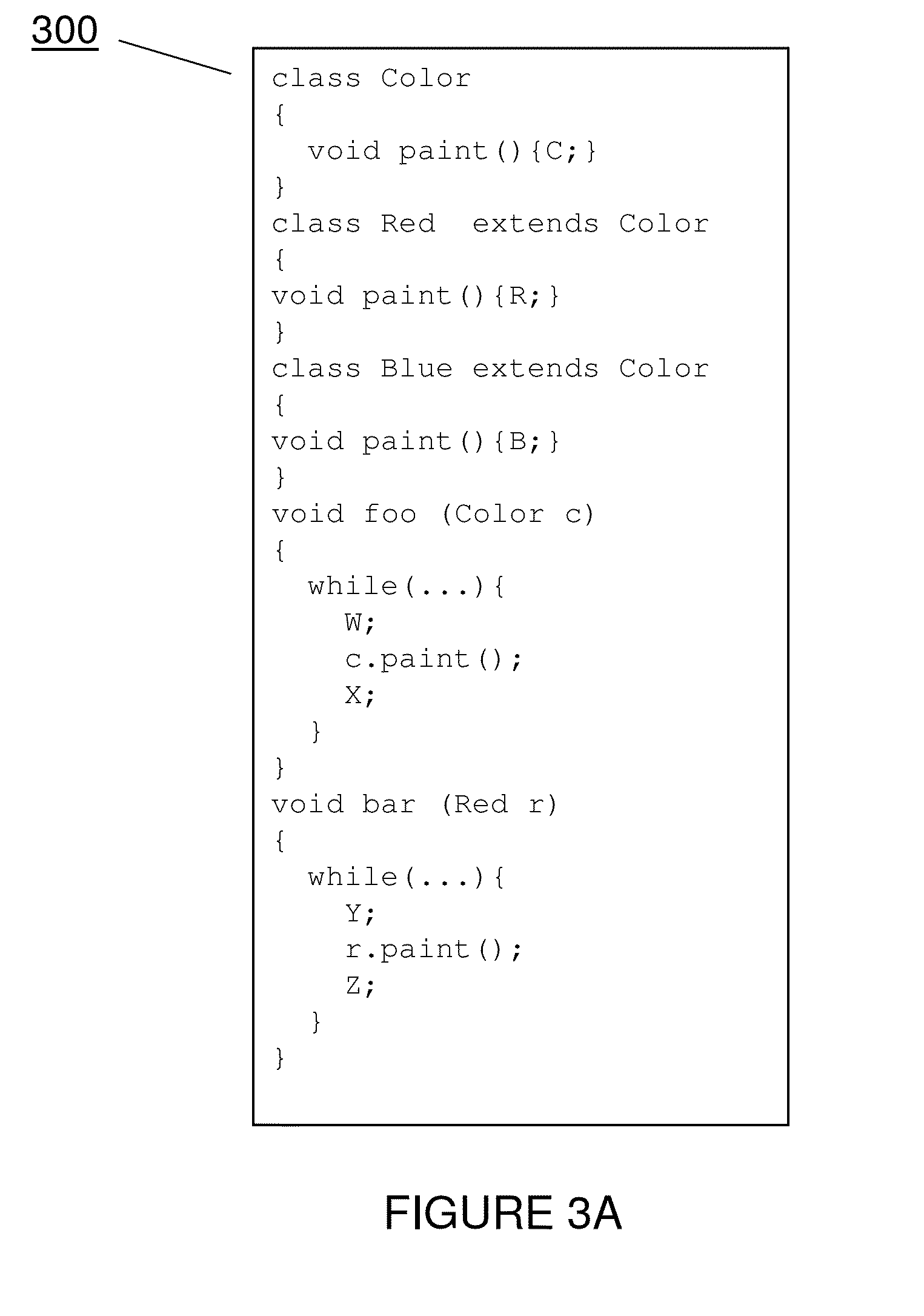

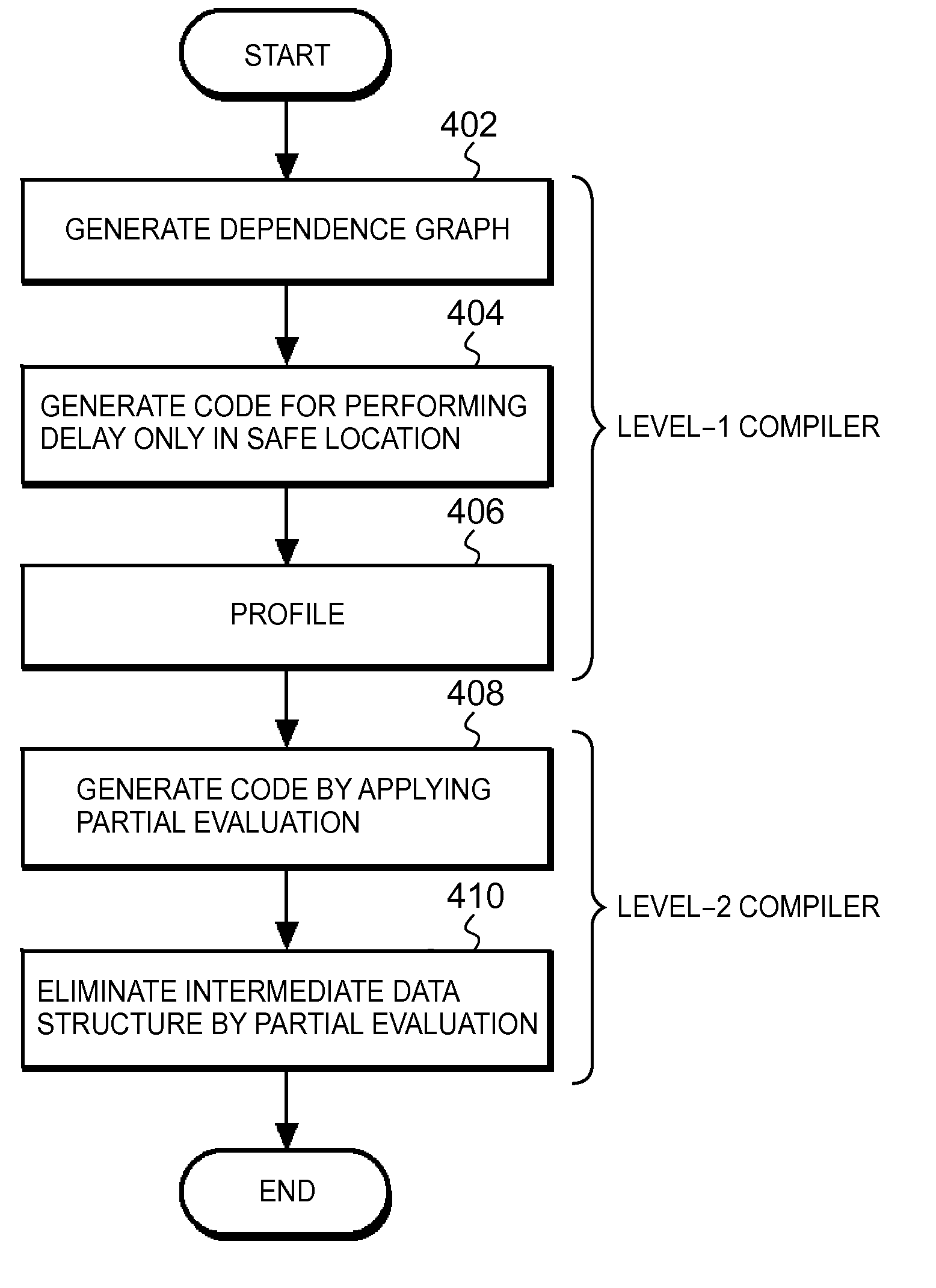

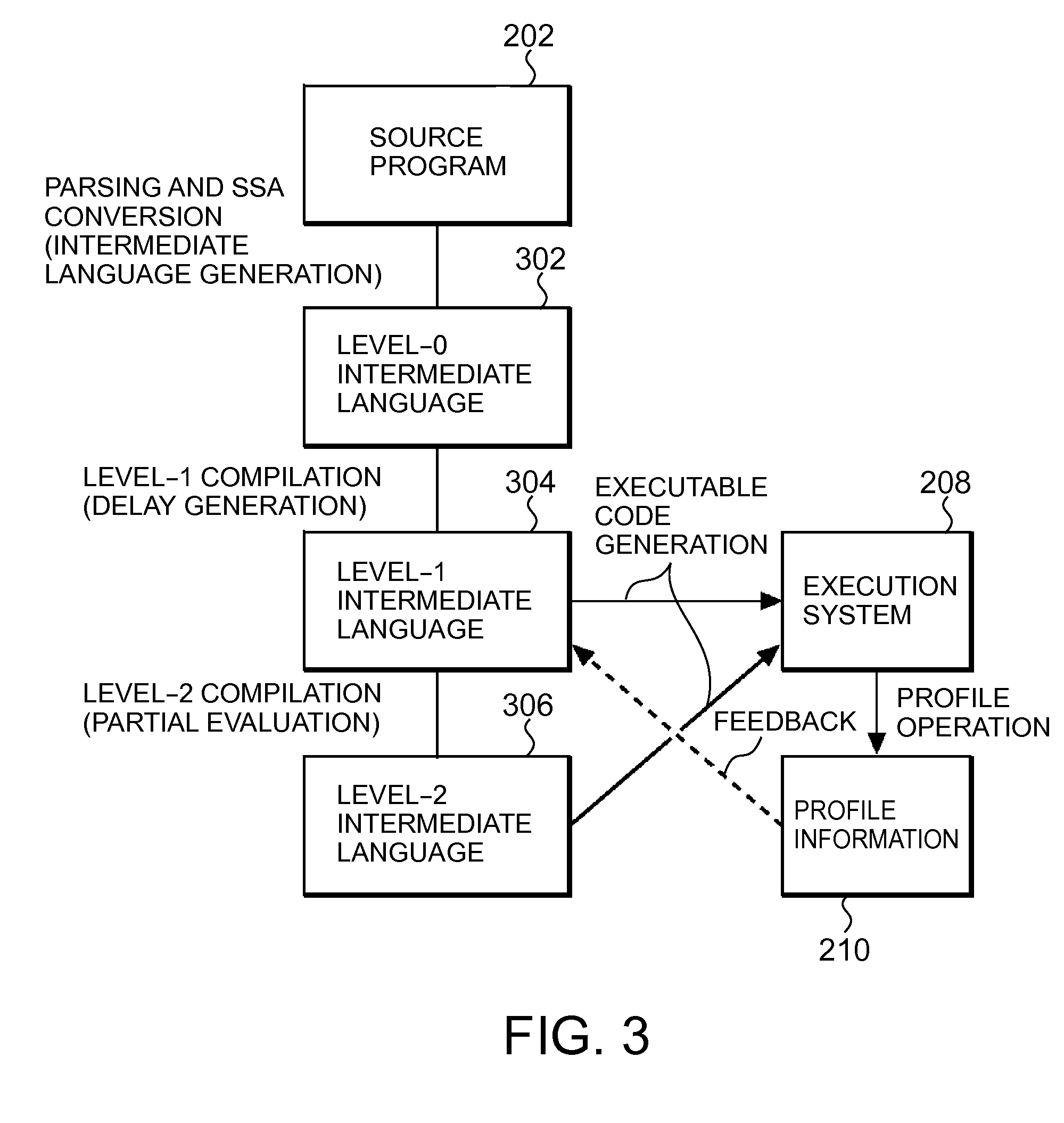

Compiler program, compilation method, and computer system

Inactive · US20110067018A1 · Improve programming performance · High frequency · Software engineering · Program control · Compilation error · Compiler

A method, computer program product and system for improving performance of a program during runtime. The method includes reading source code; generating a dependence graph with a dependency for (1) data or (2) side effects; generating a postdominator tree based on the dependence graph; identifying a portion of the program able to be delayed using the postdominator tree; generating delay closure code; profiling a location where the delay closure code is forced; inlining the delay closure code into a frequent location, that is, a location in which the delay closure code has been forced with high frequency; partially evaluating the program; and generating fast code which eliminates an intermediate data structure within the program, where at least one of the steps is carried out using a computer device so that performance of the program during runtime is improved.

Owner:IBM CORP
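
The terms "delay closure" and "forced" in this abstract correspond to lazy evaluation: a computation is wrapped in a closure and only run when its value is demanded. The sketch below shows that mechanism plus a simple per-location force count, as a hand-written analogy to what the compiler would generate and profile; `Delay`, `force_profile` and the site label `"report"` are hypothetical names.

```python
from collections import Counter

force_profile = Counter()  # how often each location forced a delayed value

class Delay:
    """A delayed computation that runs at most once, when first forced."""

    def __init__(self, compute):
        self._compute = compute
        self._forced = False
        self._value = None

    def force(self, site):
        force_profile[site] += 1     # profiling: record where forcing happens
        if not self._forced:
            self._value = self._compute()
            self._forced = True
            self._compute = None     # release the closure once evaluated
        return self._value

calls = []

def expensive():
    calls.append("ran")
    return 42

delayed = Delay(expensive)           # nothing is computed yet
first = delayed.force("report")      # computed here, on first demand
second = delayed.force("report")     # cached value reused
```

A location that shows up often in `force_profile` is the kind of frequent location the abstract describes as a candidate for inlining the delayed code.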

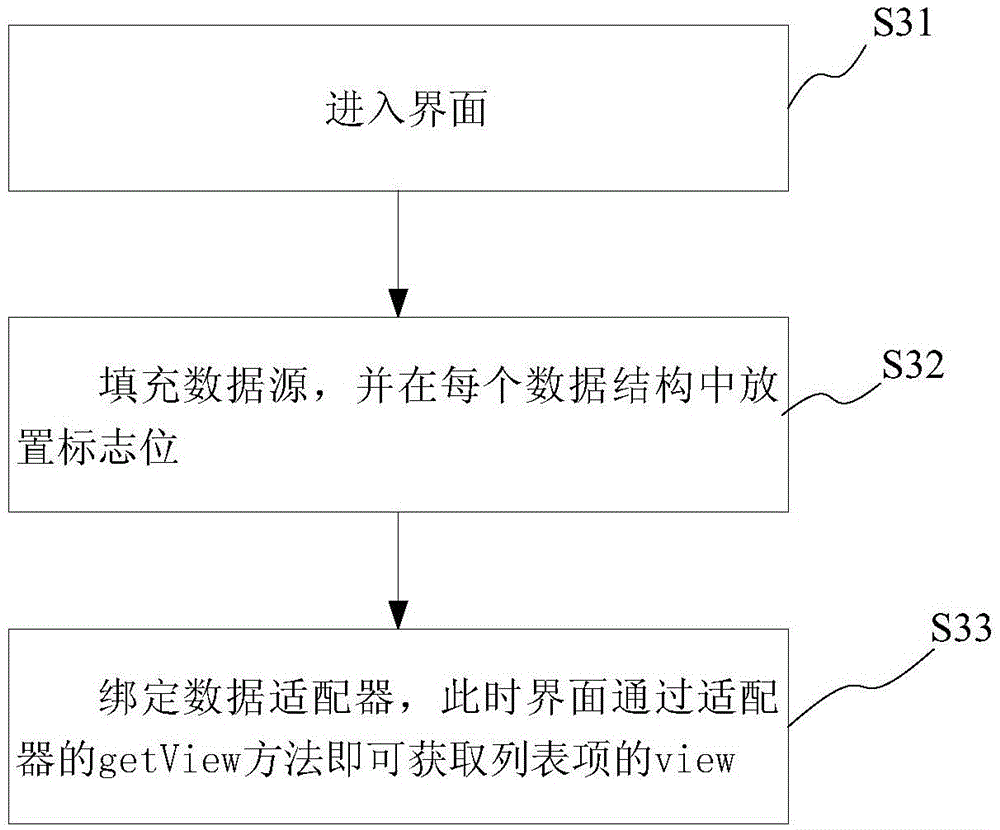

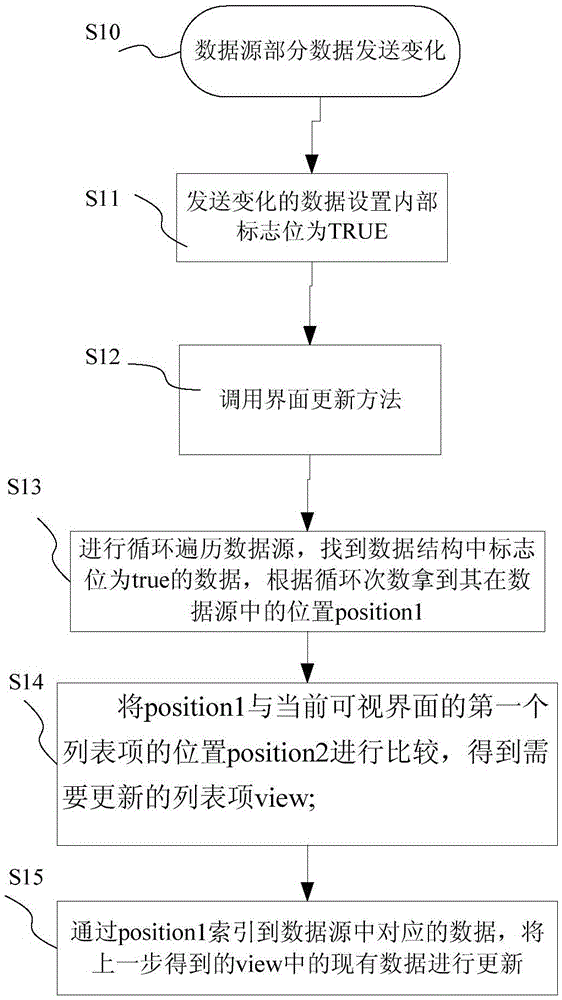

Data updating method and system for view list in list control

Inactive · CN105549973A · Improve program performance · Avoid wasting · Execution for user interfaces · Data adapter · Database

The invention provides a data updating method and system for a view list in a list control. The method comprises an interface initialization stage and an interface updating stage. During the interface initialization stage, the filling of a data source is carried out, namely, a first flag bit is added into a data structure corresponding to each view list item in the data source, and the filled data source is bound with a data adapter; and the list control obtains the data source through the data adapter, the view list of the corresponding list control is filled with the view list items in the data source, and a first view is drawn according to the view list of the list control. During the interface updating stage, when the data structure is changed, the first flag bit of the data structure is changed to a second flag bit, the view list of the list control obtains a data structure containing the second flag bit, and a corresponding view part of the data structure containing the second flag bit in the first view is drawn to obtain a second view. According to the data updating method and system, only the list item view with the changed data is updated, so that the resource waste is avoided and the updating performance is greatly improved.

Owner:PHICOMM (SHANGHAI) CO LTD
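
The flag-bit scheme in this abstract is a form of dirty tracking. The sketch below is a minimal illustration, not the patented Android implementation: `ListControl` is a hypothetical class, string formatting stands in for drawing, and resetting the flag after a redraw is an assumption the abstract does not state.

```python
UNCHANGED, CHANGED = 0, 1  # stand-ins for the first and second flag bits

class ListControl:
    def __init__(self, texts):
        # Initialization stage: every data structure gets the first flag bit.
        self.items = [{"text": t, "flag": UNCHANGED} for t in texts]
        self.view = [self._draw(item) for item in self.items]  # first view
        self.draws = len(self.view)

    @staticmethod
    def _draw(item):
        return f"[{item['text']}]"

    def update_item(self, index, text):
        self.items[index]["text"] = text
        self.items[index]["flag"] = CHANGED  # mark only the changed structure

    def refresh(self):
        """Updating stage: redraw only the items carrying the second flag bit."""
        redrawn = []
        for i, item in enumerate(self.items):
            if item["flag"] == CHANGED:
                self.view[i] = self._draw(item)
                item["flag"] = UNCHANGED     # assumed reset after redraw
                self.draws += 1
                redrawn.append(i)
        return redrawn

control = ListControl(["a", "b", "c"])
control.update_item(1, "B")
redrawn = control.refresh()
```

After the update, only index 1 is redrawn, so the total draw count rises from three to four instead of doubling to six.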

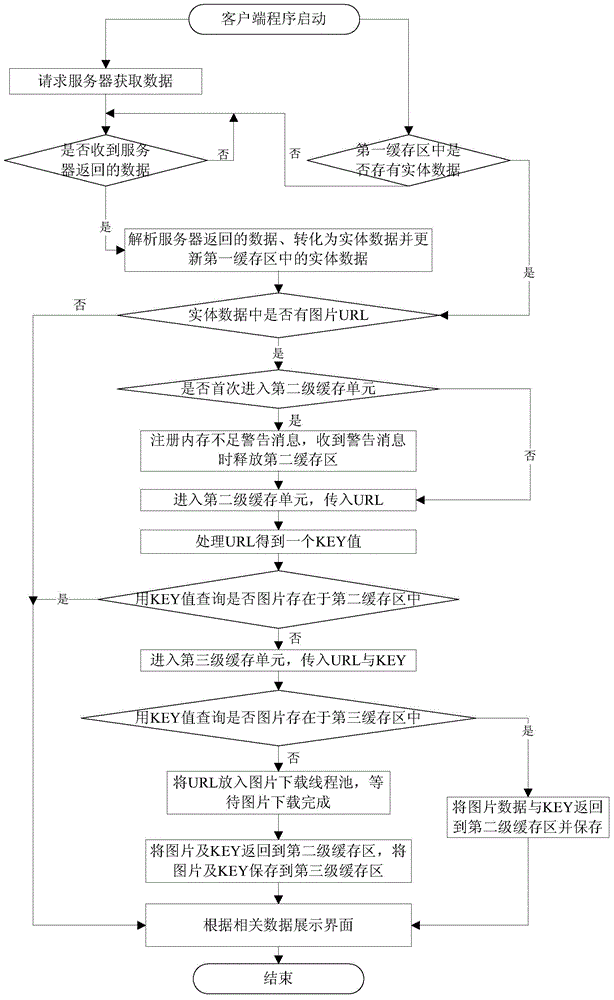

Data caching method and system for mobile equipment client

Active · CN104683329A · Improve experience · Improve fluency · Input/output to record carriers · Transmission · Client-side · Mobile device

The invention discloses a data caching method and a data caching system for a mobile equipment client, and aims to reduce the disk access rate when cached data is required to be used. The data caching method and the data caching system are characterized in that the latest updated historical entity data required by a display interface is stored in a first-level cache region, and pictures in the historical entity data are stored in a second-level cache region and a third-level cache region. After a client program is started, a data request is transmitted to a server to acquire the latest data required by current interface display, and meanwhile, the interface is displayed according to the latest updated historical entity data required by the display interface and the pictures in three caches.

Owner:CHENDU PINGUO TECH CO LTD

© 2025 PatSnap. All rights reserved.