56 results about "Dataflow computers" patented technology

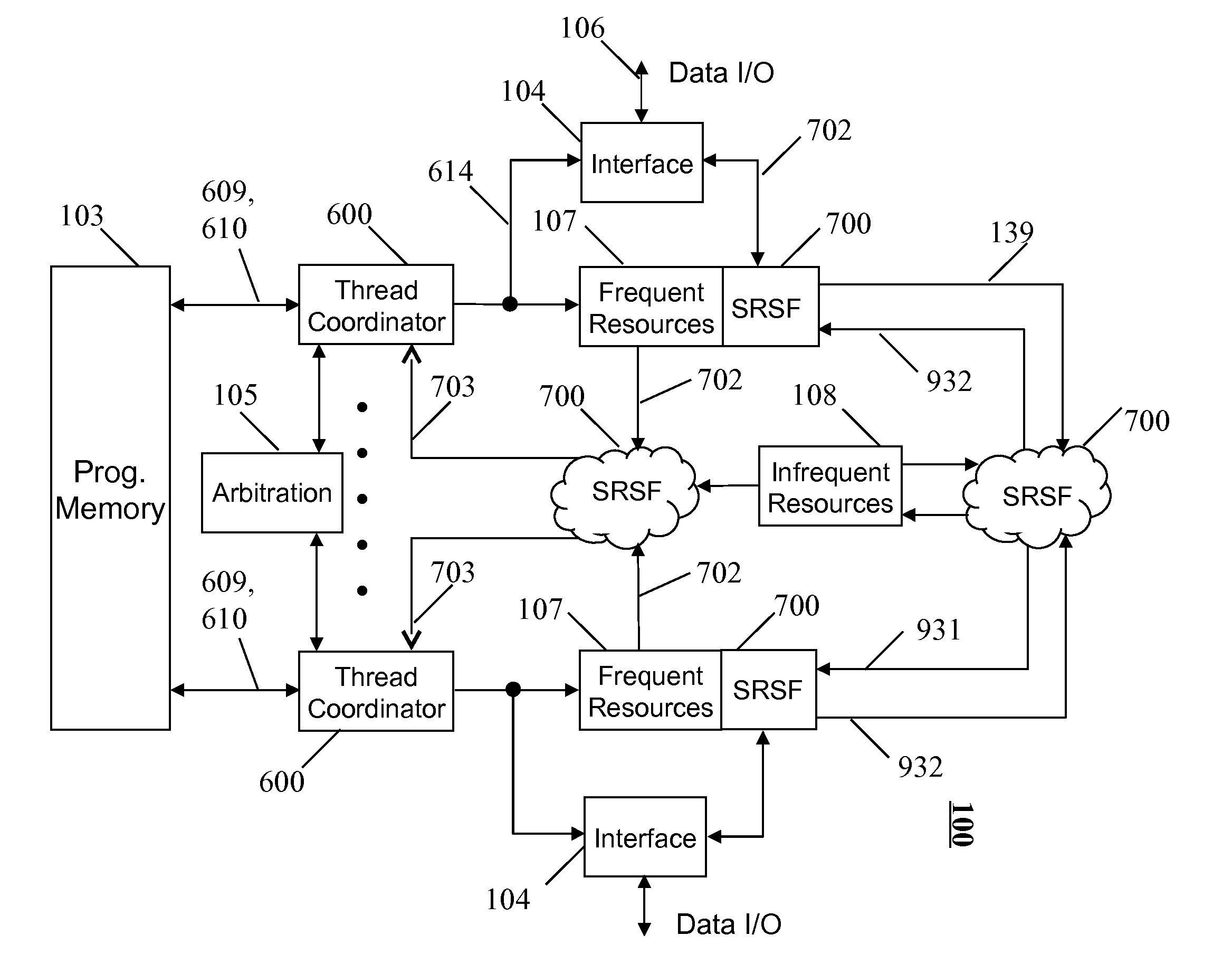

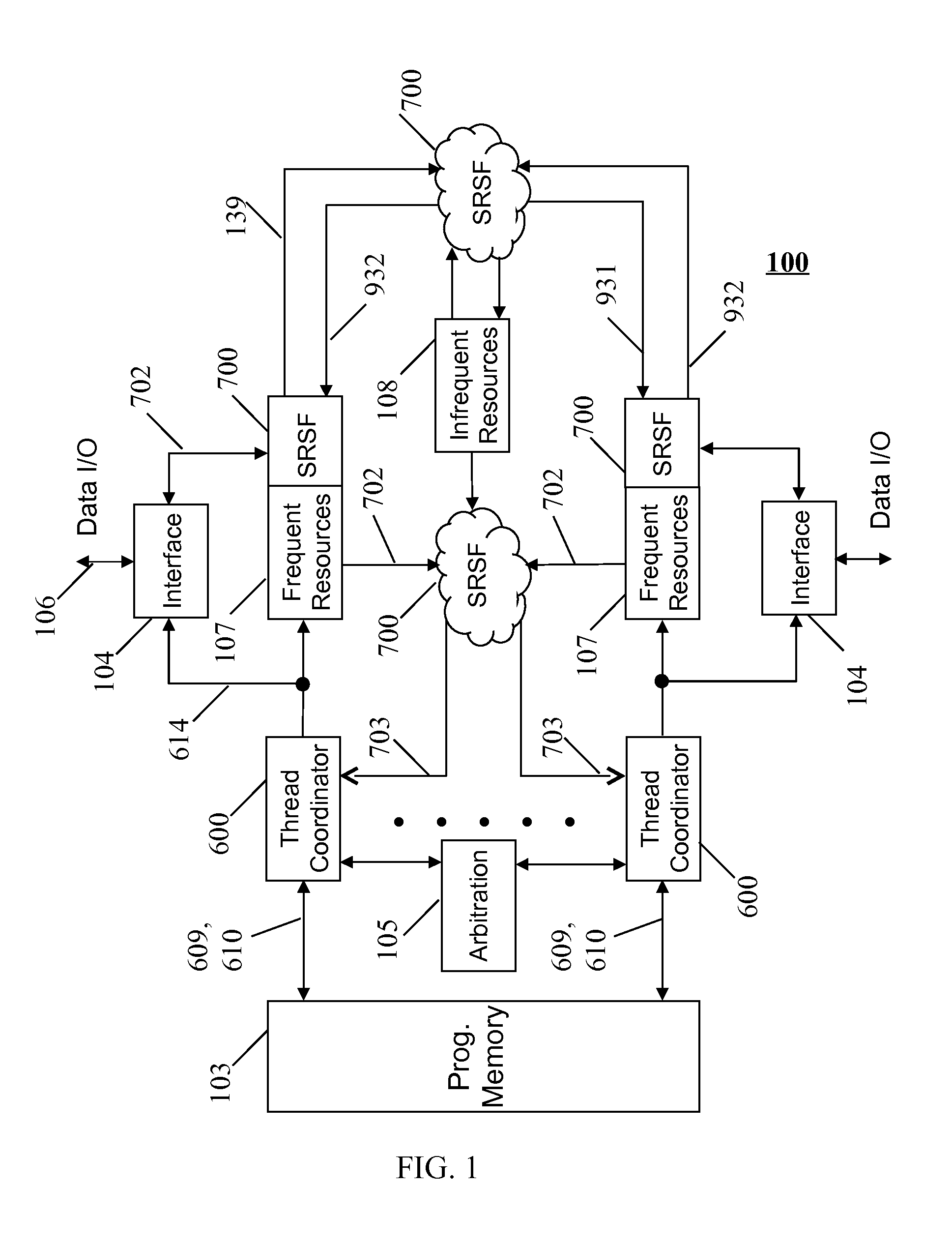

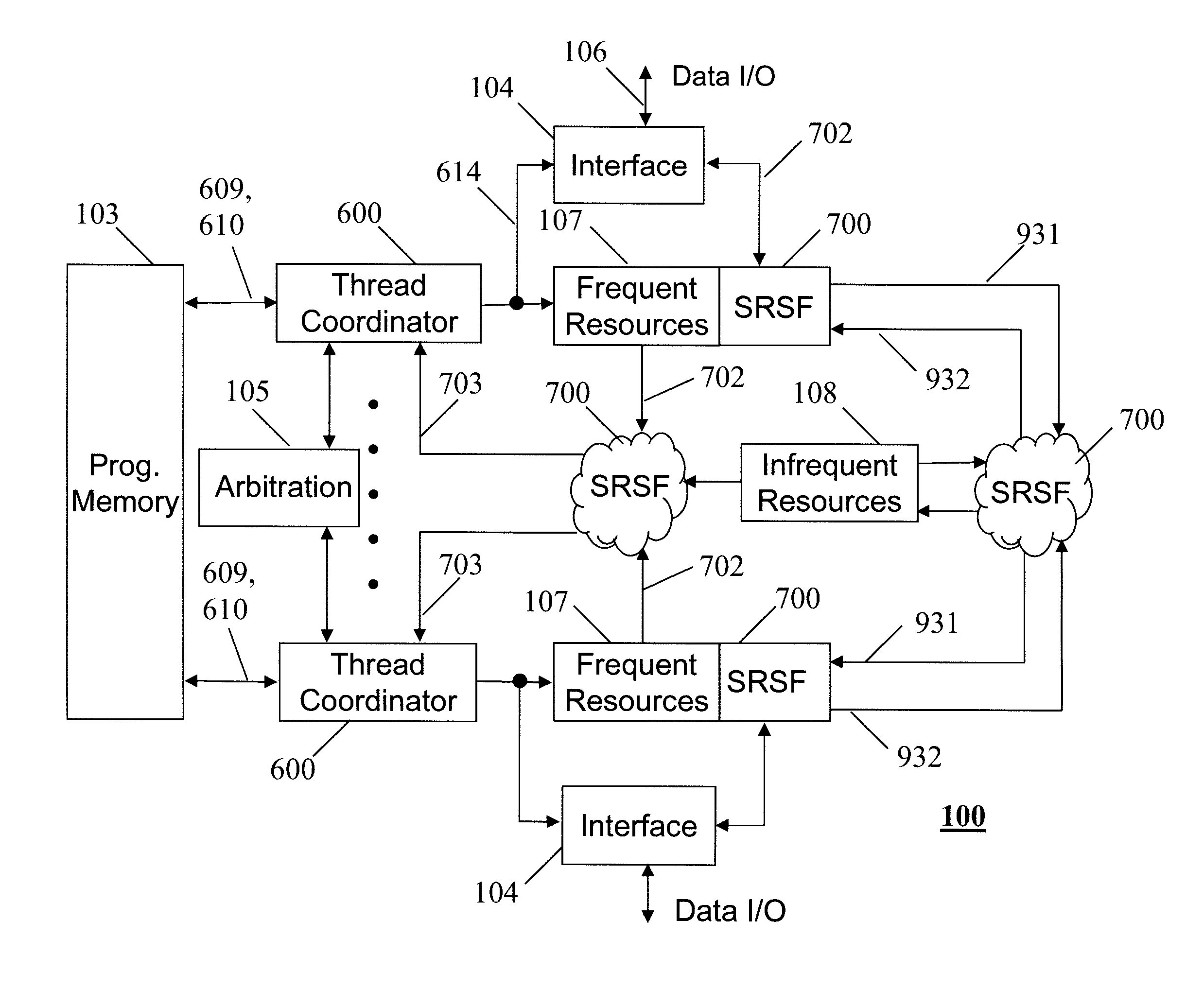

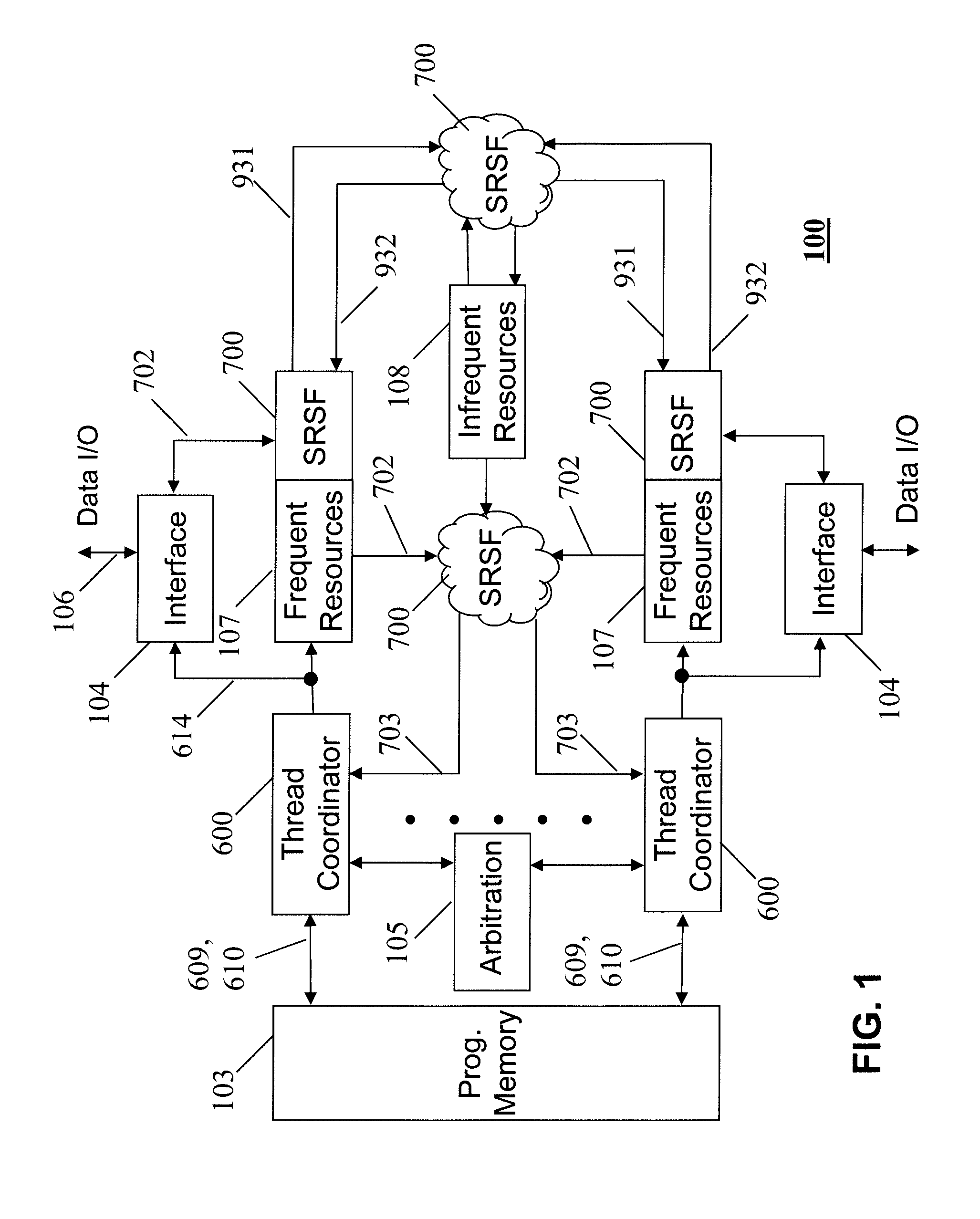

Shared resource multi-thread processor array

Active | US20120089812A1 | Dataflow computers | Program initiation/switching | Programmable circuits | Switched fabric

A shared resource multi-thread processor array wherein an array of heterogeneous function blocks is interconnected via a self-routing switch fabric, in which the individual function blocks have an associated switch port address. Each switch output port comprises a FIFO style memory that implements a plurality of separate queues. Thread queue empty flags are grouped using programmable circuit means to form self-synchronised threads. Data from different threads are passed to the various addressable function blocks in a predefined sequence in order to implement the desired function. The separate port queues allow data from different threads to share the same hardware resources, and the reconfiguration of switch fabric addresses further enables the formation of different data-paths, allowing the array to be configured for use in various applications.

Owner:SMITH GRAEME ROY
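The queueing idea in this abstract can be illustrated with a small software model. This is only a sketch under assumptions: the class names `SwitchPort` and `SwitchFabric`, the method names, and the use of one `deque` per thread are hypothetical and are not taken from the patent.

```python
from collections import deque


class SwitchPort:
    """One switch output port holding a separate FIFO queue per thread (hypothetical model)."""

    def __init__(self, num_threads):
        self.queues = [deque() for _ in range(num_threads)]

    def empty_flag(self, thread):
        # True when this thread has no data waiting at this port.
        return not self.queues[thread]


class SwitchFabric:
    """Routes each token to the port named by its destination address."""

    def __init__(self, num_ports, num_threads):
        self.ports = [SwitchPort(num_threads) for _ in range(num_ports)]

    def send(self, dest_port, thread, value):
        self.ports[dest_port].queues[thread].append(value)

    def thread_ready(self, thread, port_group):
        # Grouped empty flags: the thread may proceed only when every
        # port in the group holds data for it.
        return all(not self.ports[p].empty_flag(thread) for p in port_group)

    def receive(self, port, thread):
        return self.ports[port].queues[thread].popleft()


fabric = SwitchFabric(num_ports=2, num_threads=2)
fabric.send(0, 0, 3)    # thread 0 operand on port 0
fabric.send(0, 1, 10)   # thread 1 shares port 0 through its own queue
fabric.send(1, 0, 4)    # thread 0 operand on port 1

ready_t0 = fabric.thread_ready(0, [0, 1])
ready_t1 = fabric.thread_ready(1, [0, 1])
result_t0 = fabric.receive(0, 0) + fabric.receive(1, 0)
```

Keeping one queue per thread at each port is what lets two threads share a function block without their data interleaving.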

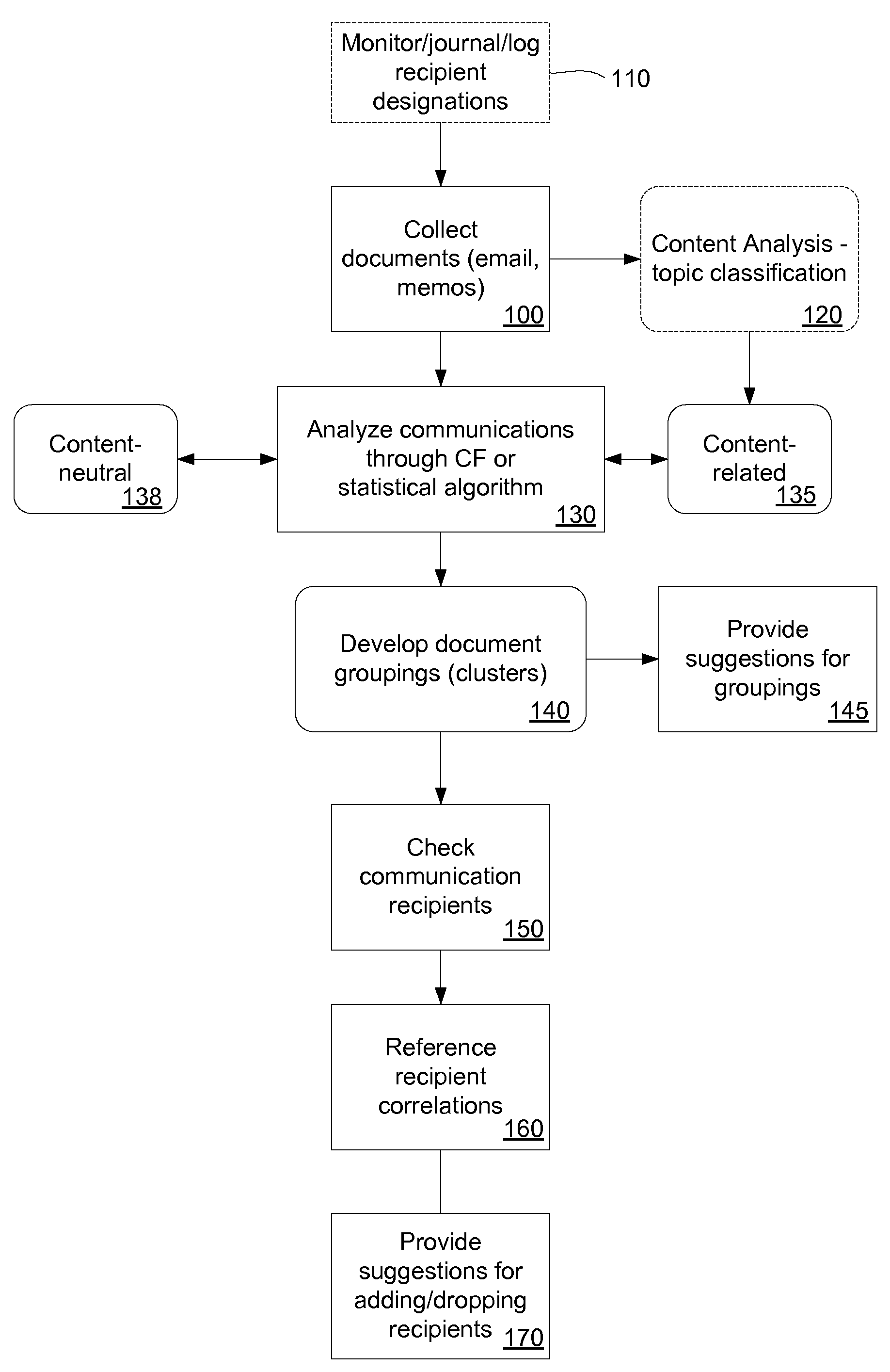

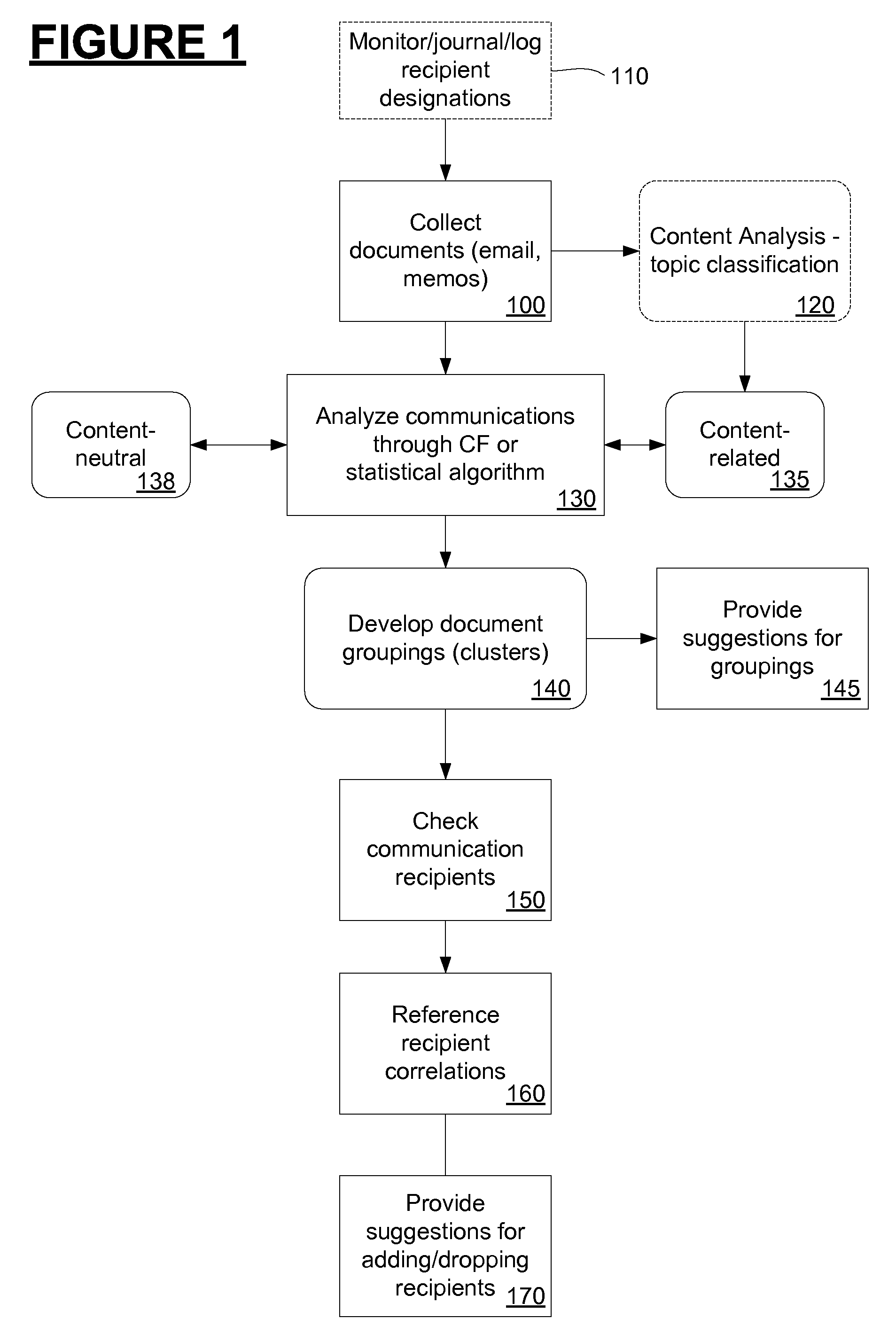

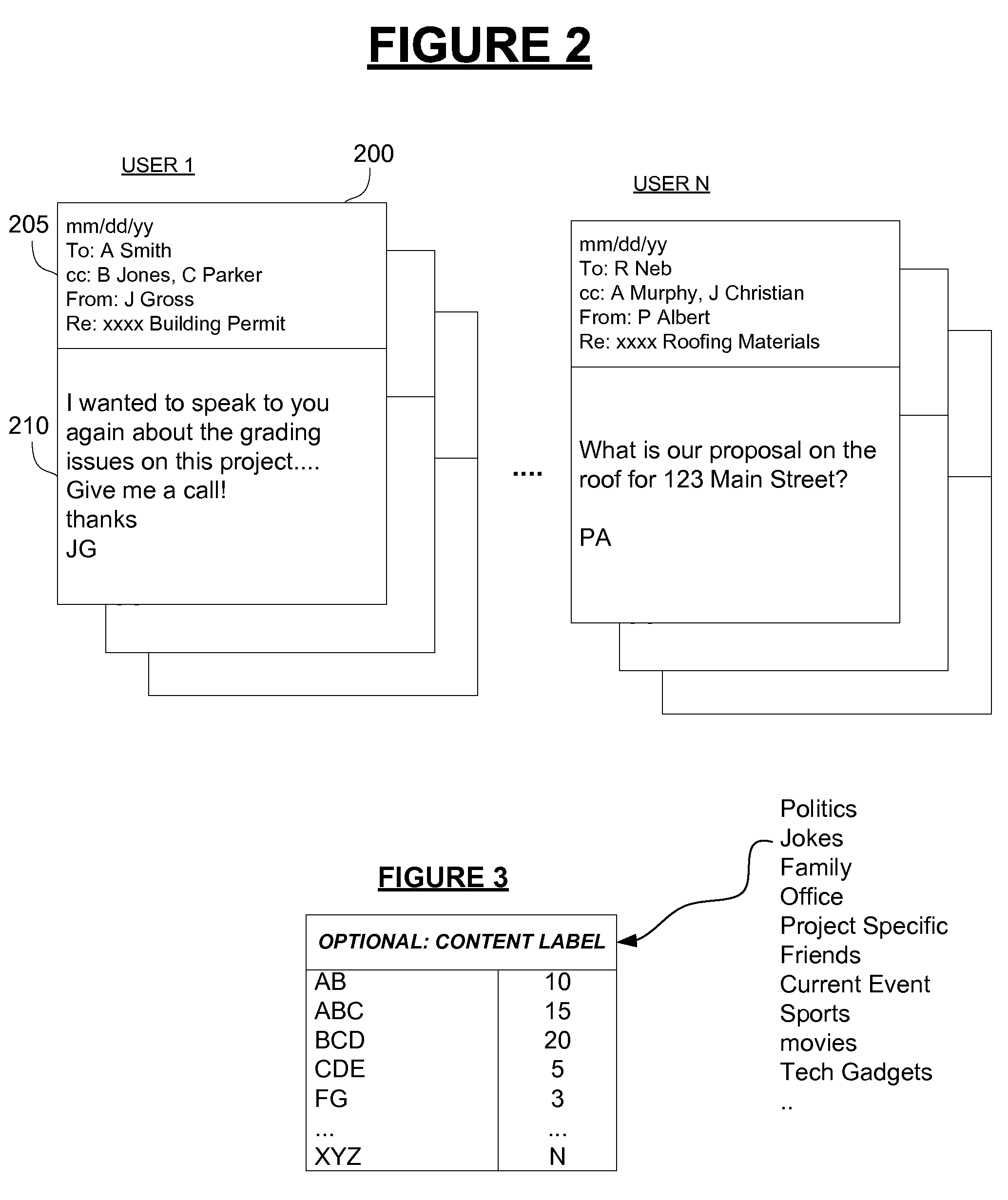

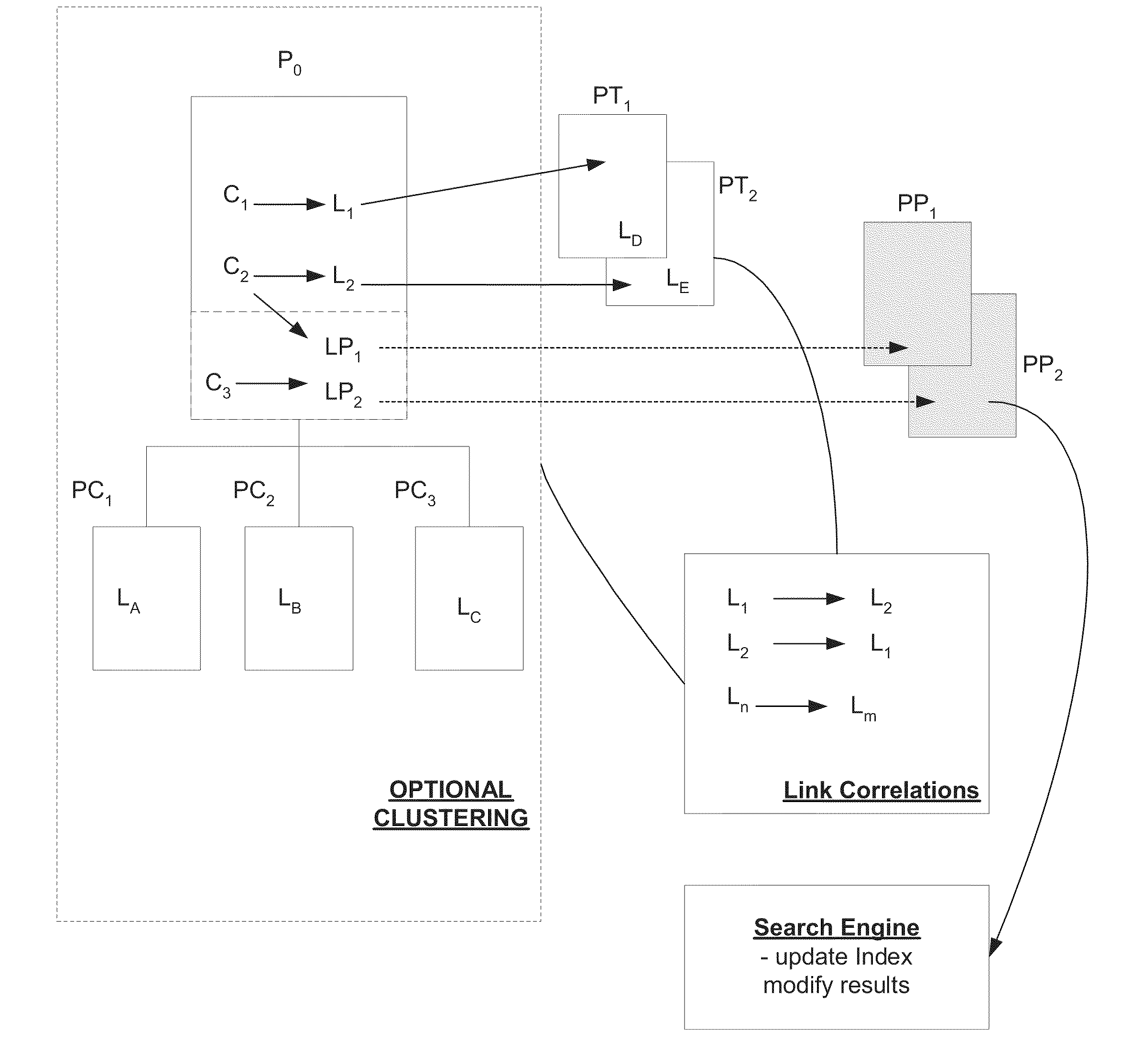

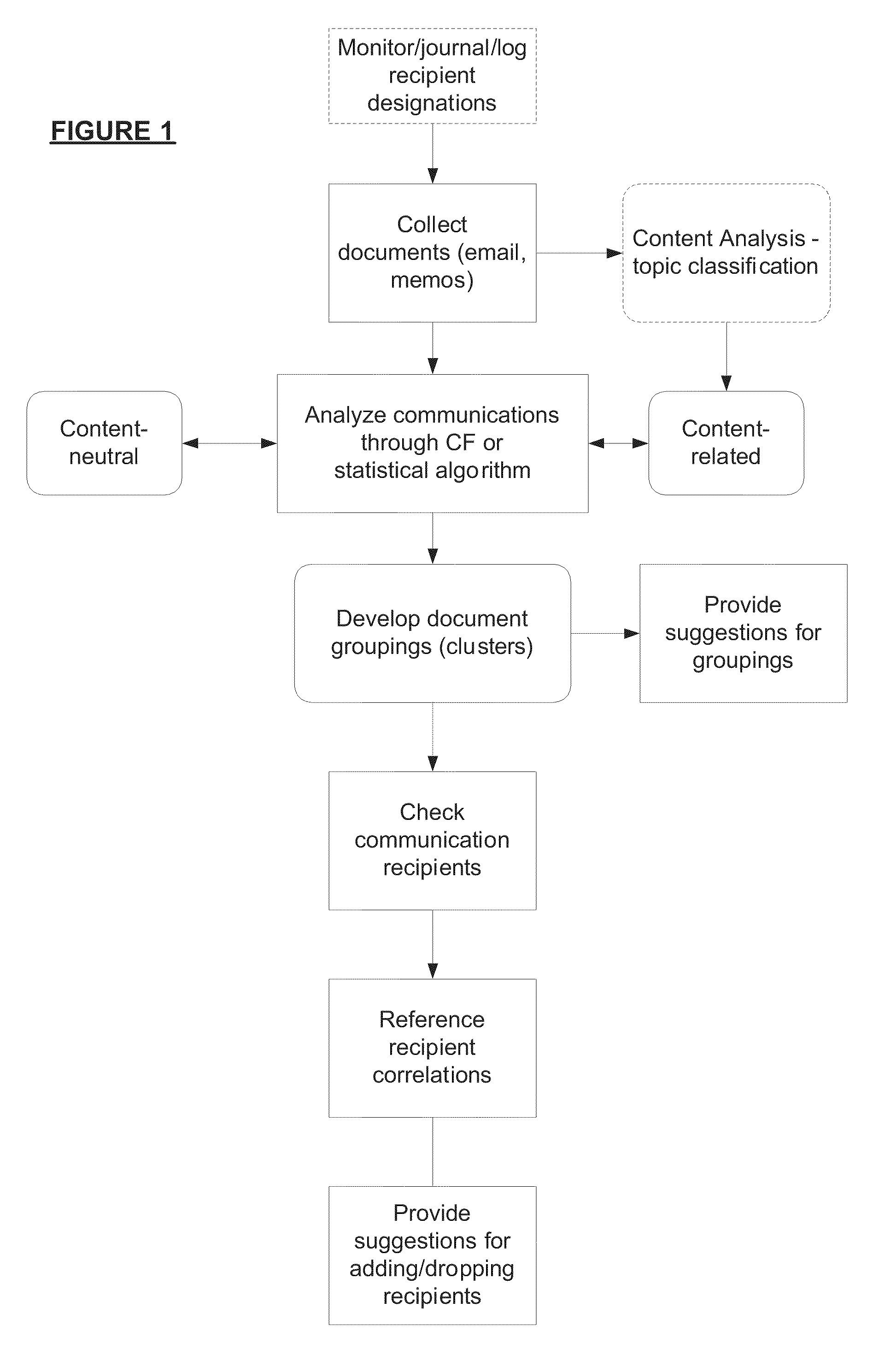

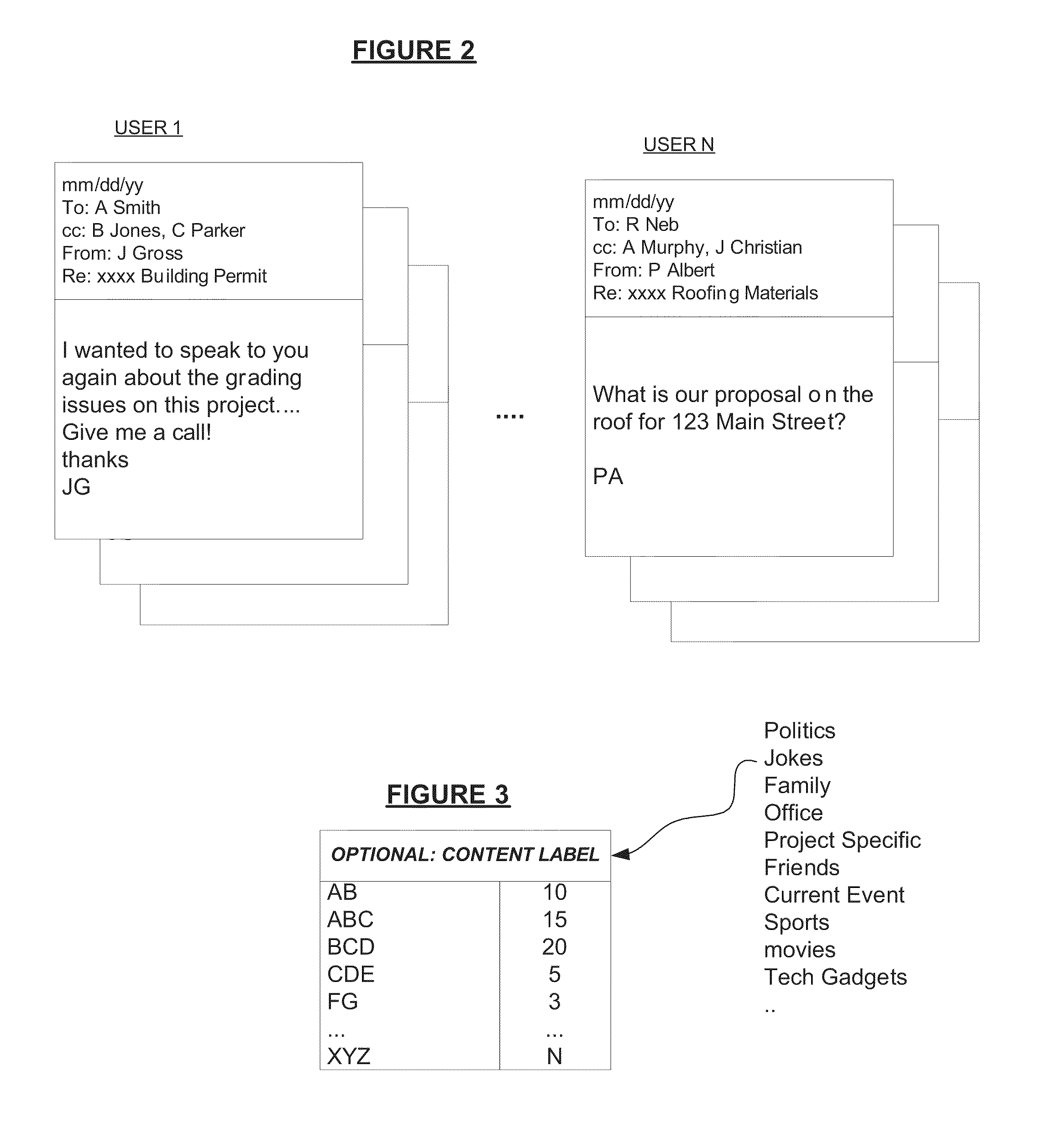

Document distribution recommender system and method

Active | US7996456B2 | Dataflow computers | Multiple digital computer combinations | Document preparation | Data mining

A document management system monitors proposed recipients for documents and provides recommendations on alterations to the distribution set, such as by adding or removing recipients.

Owner:META PLATFORMS INC

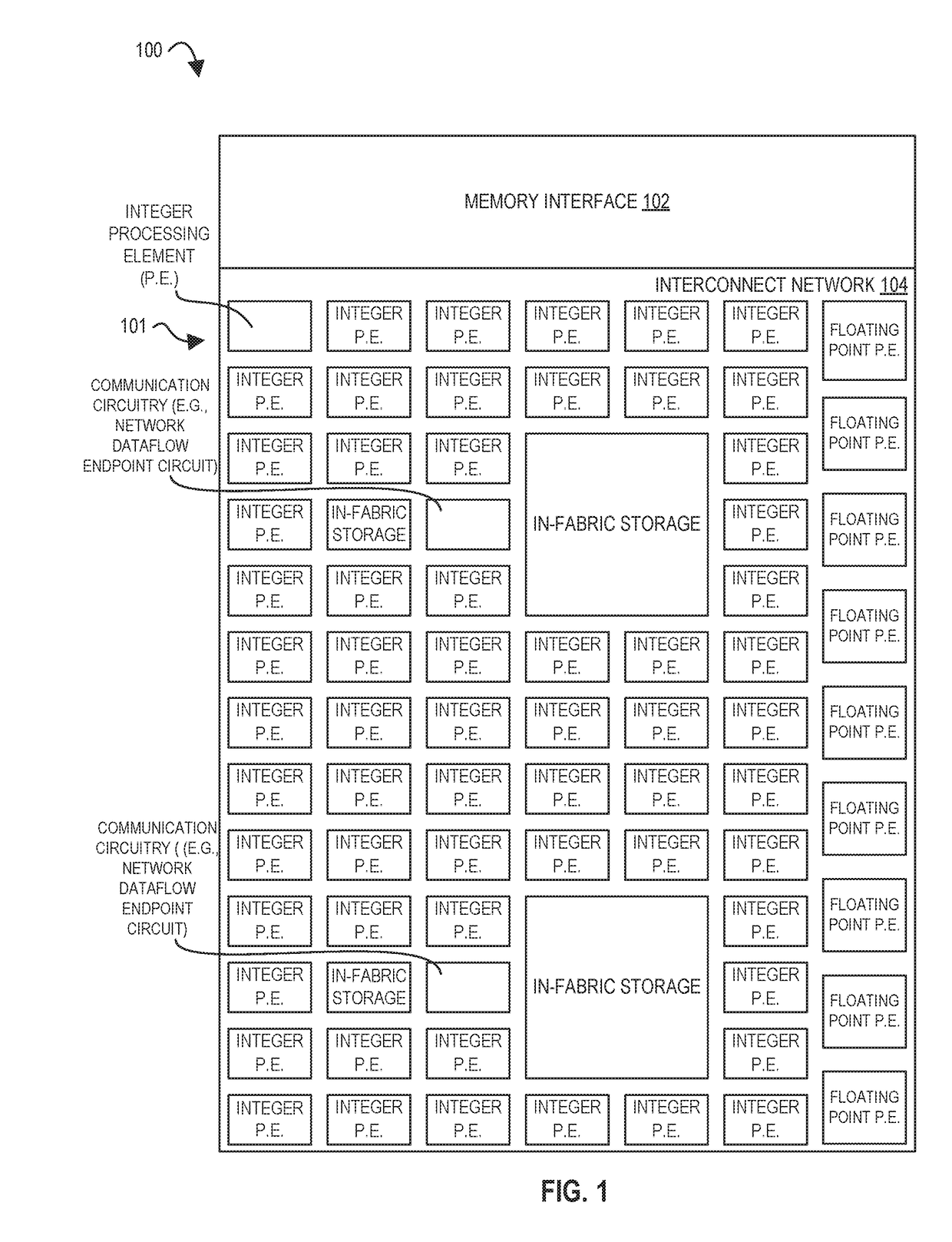

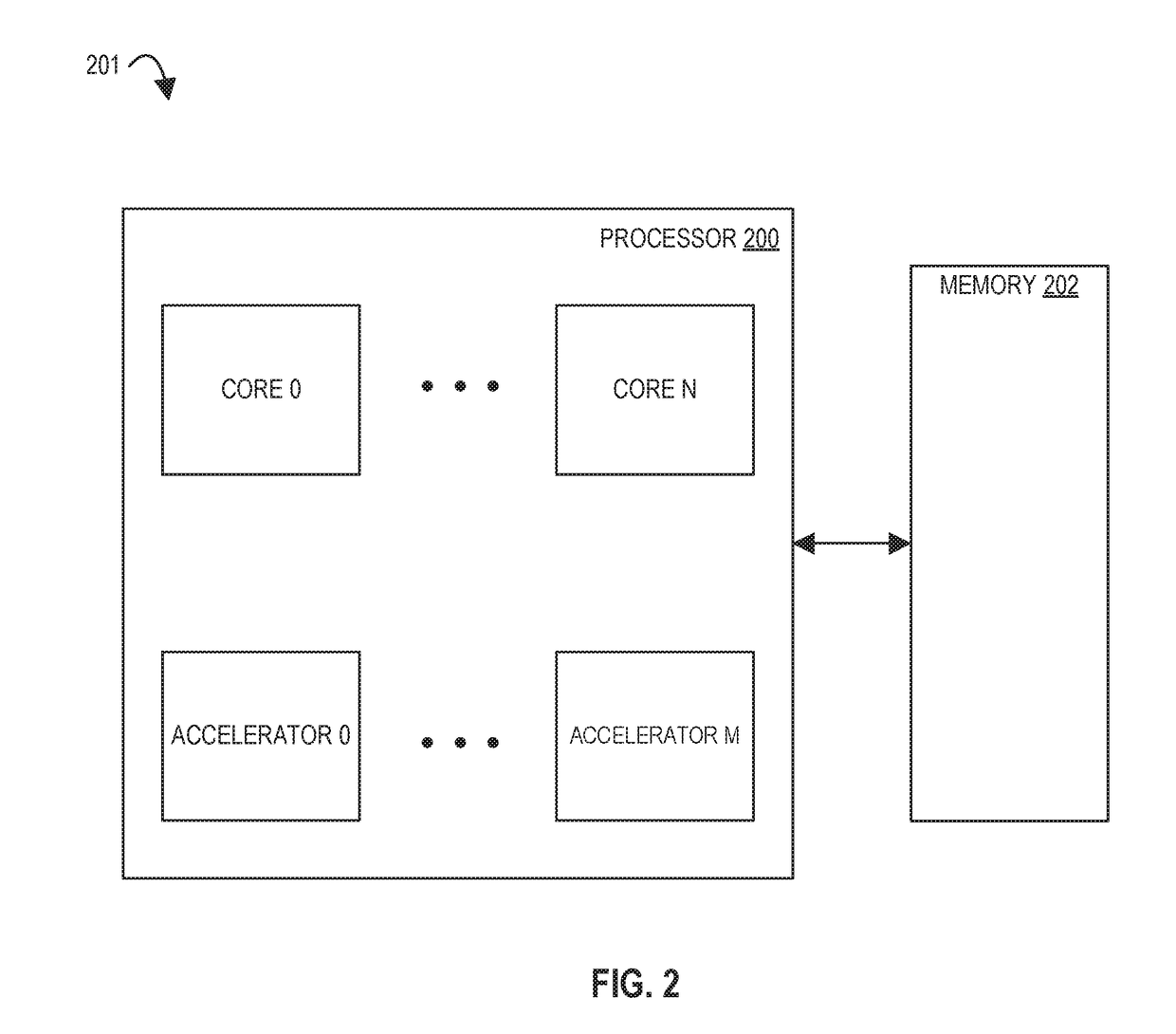

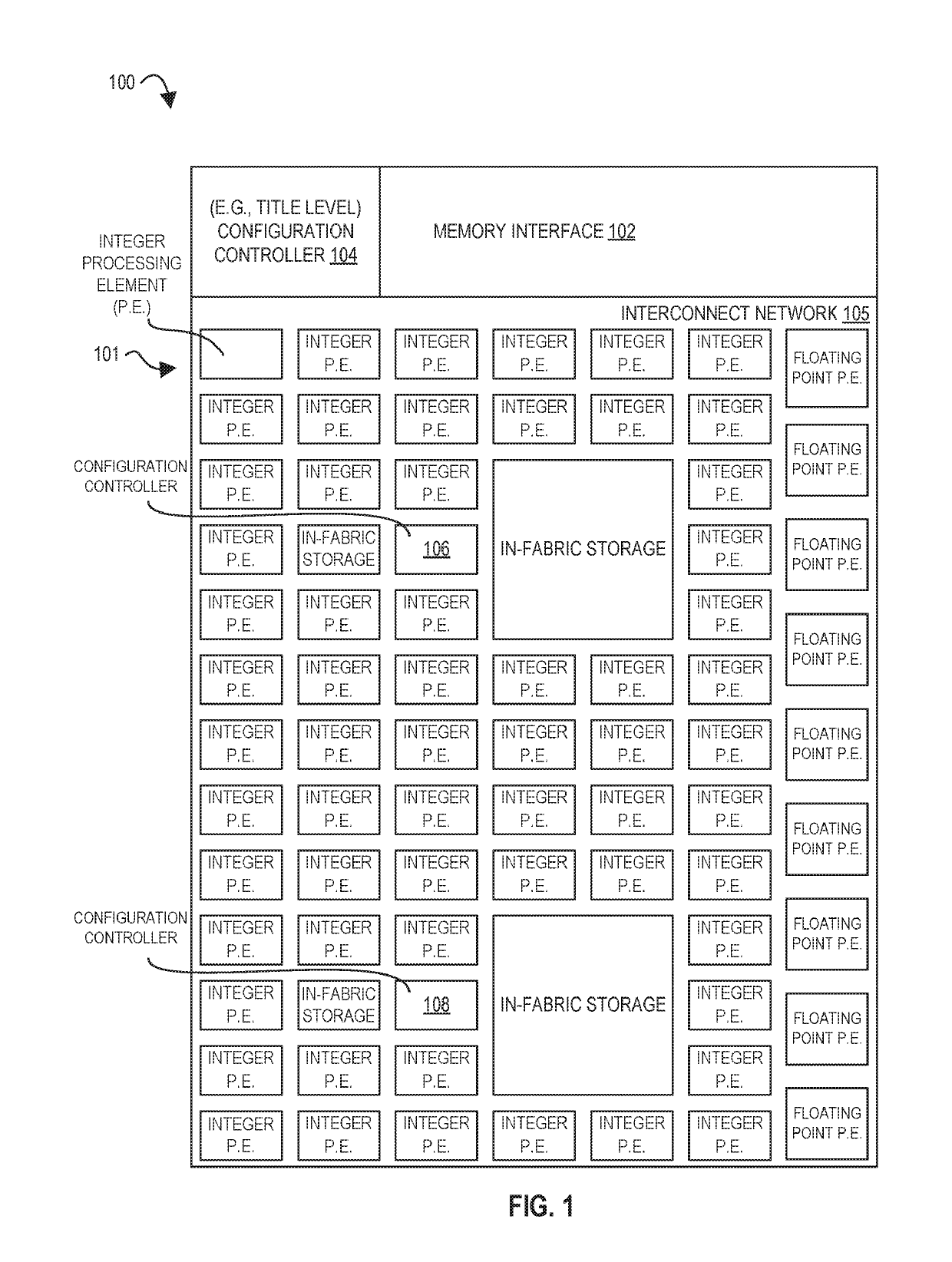

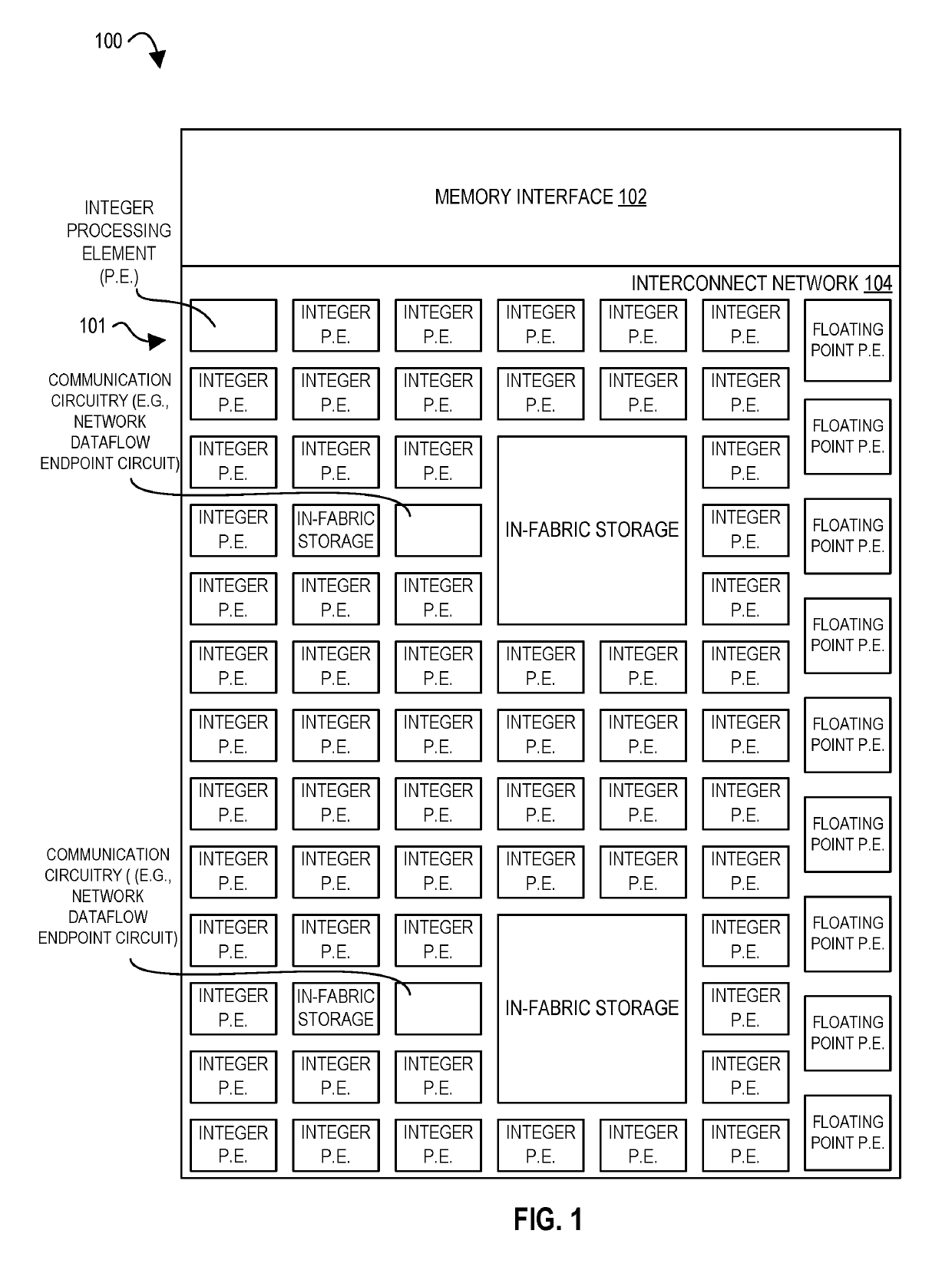

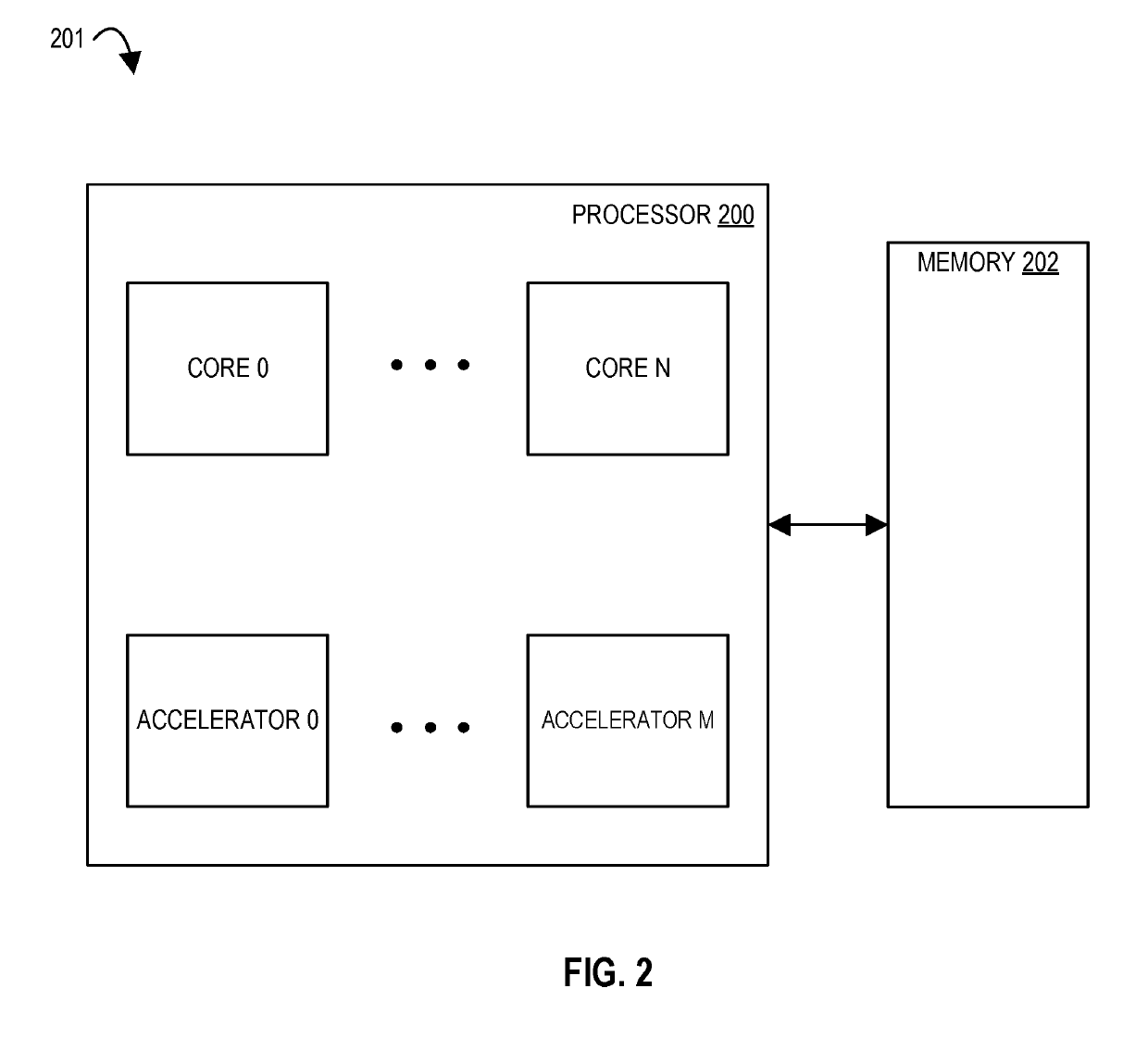

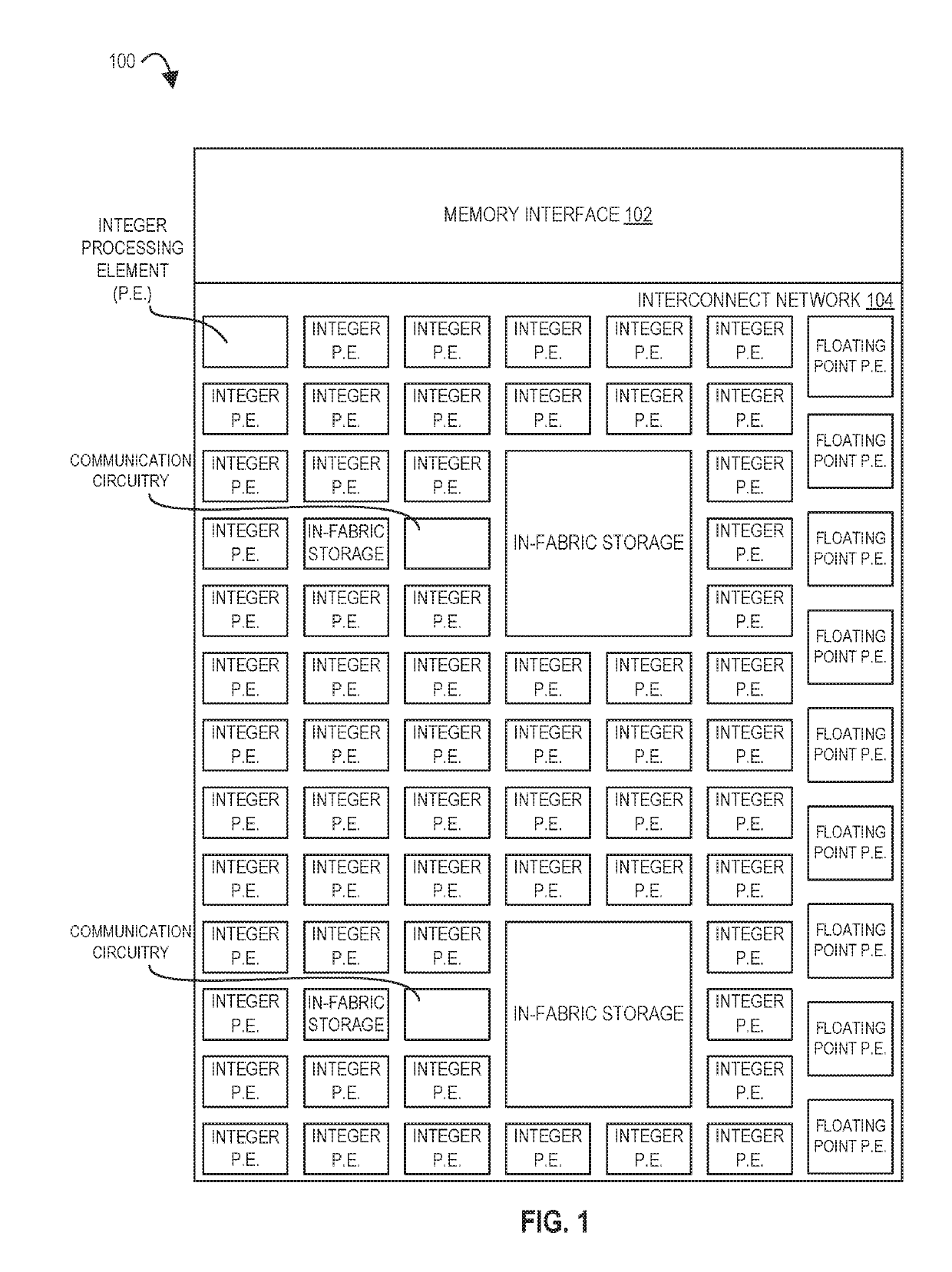

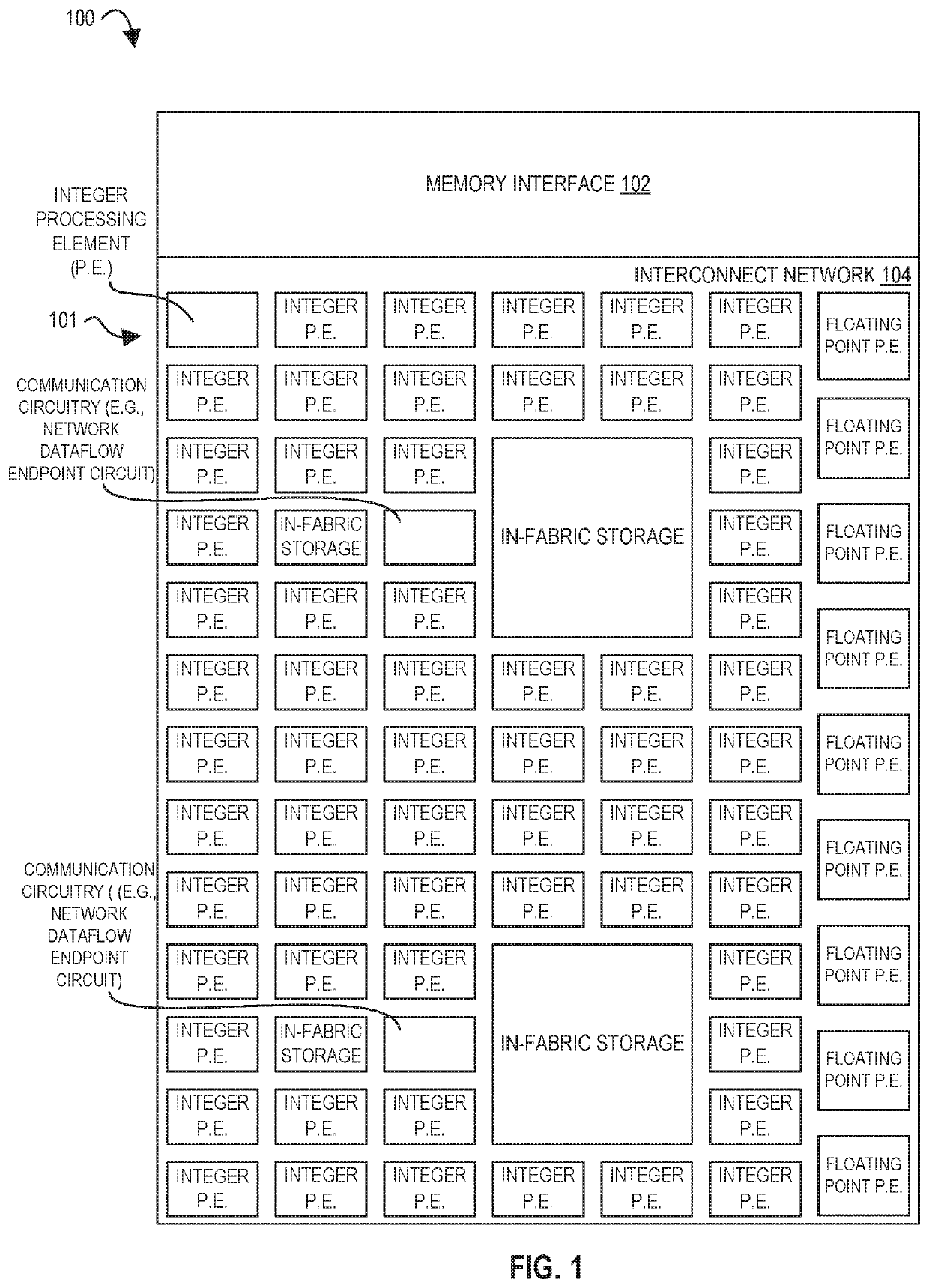

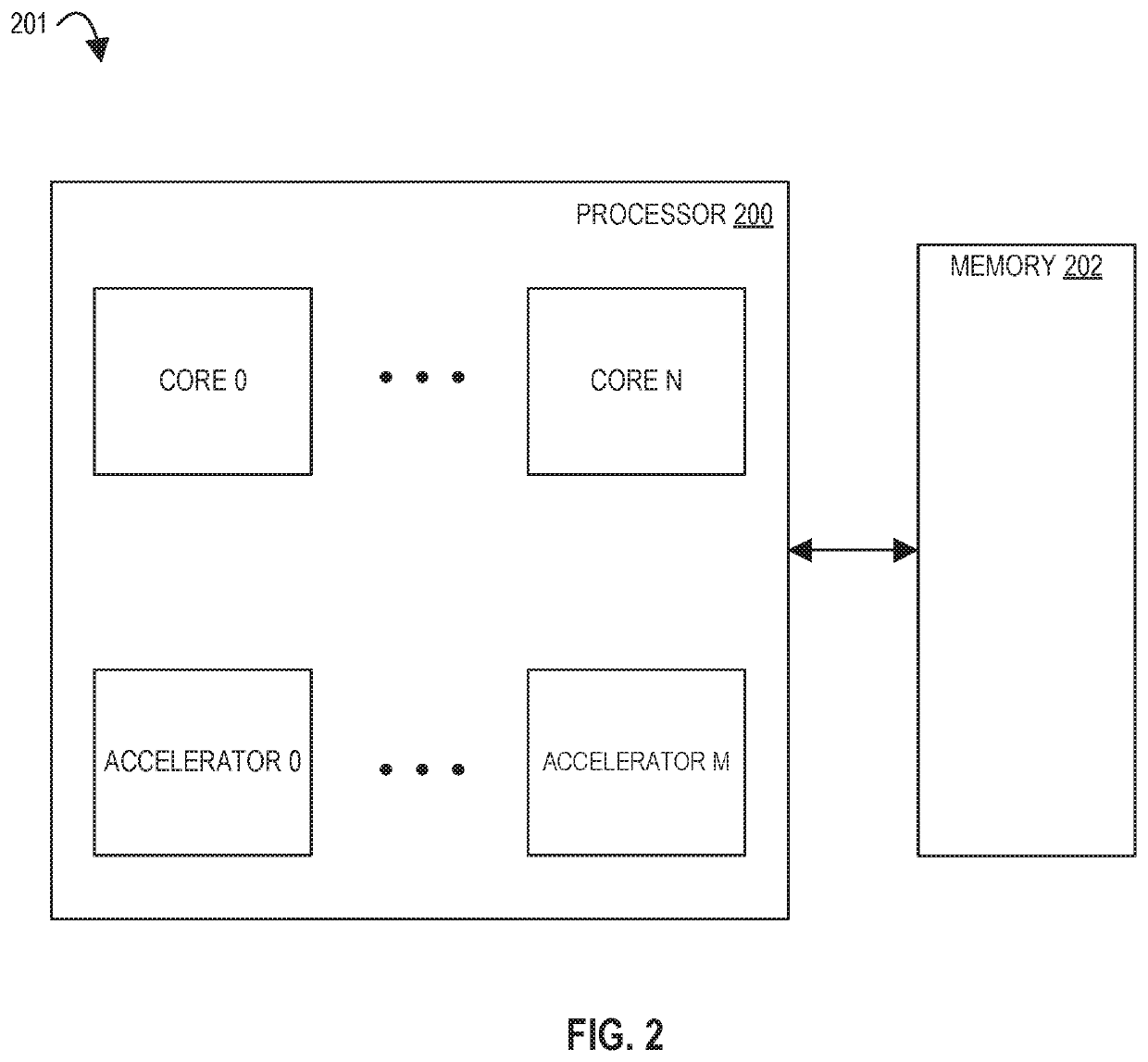

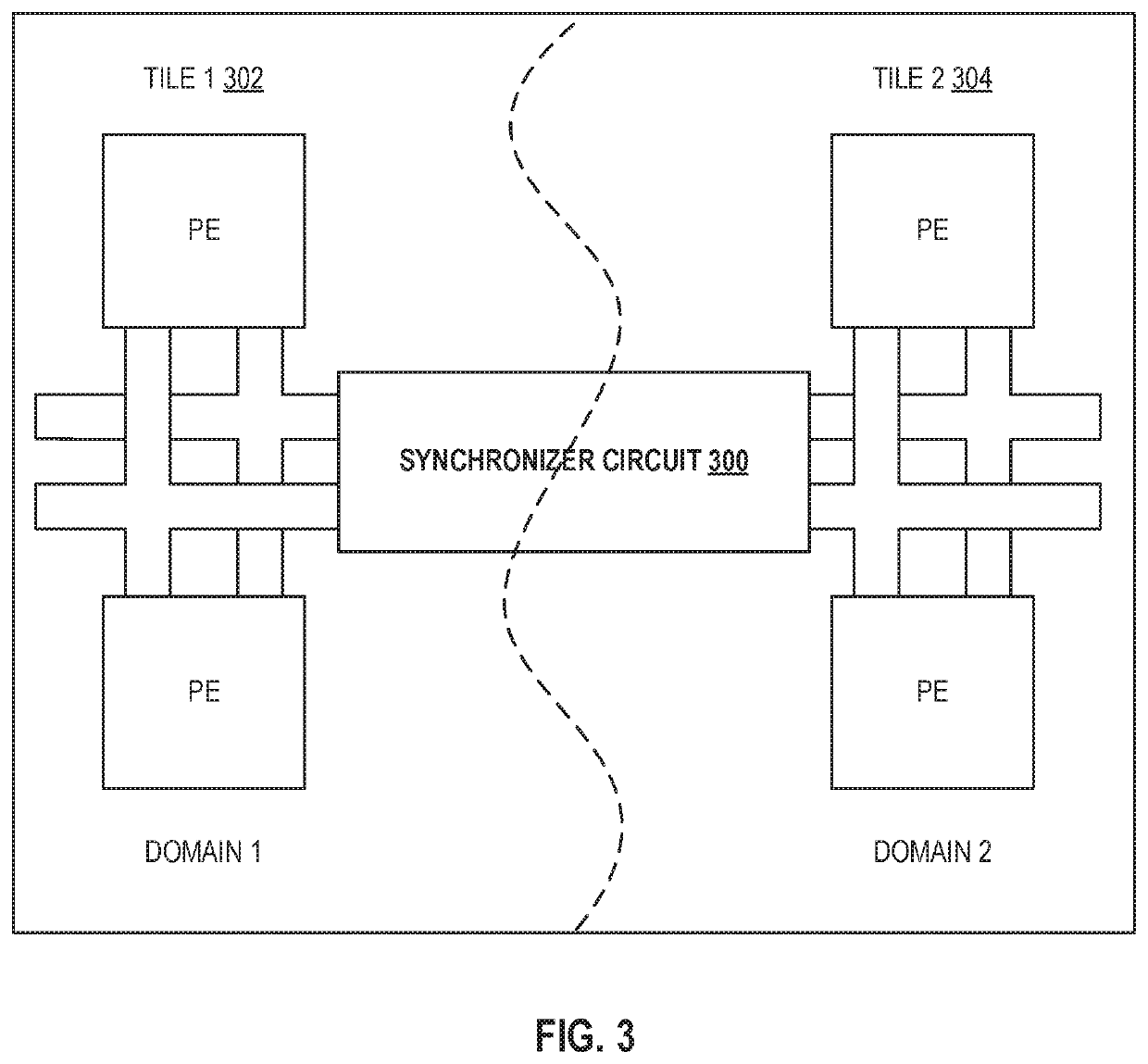

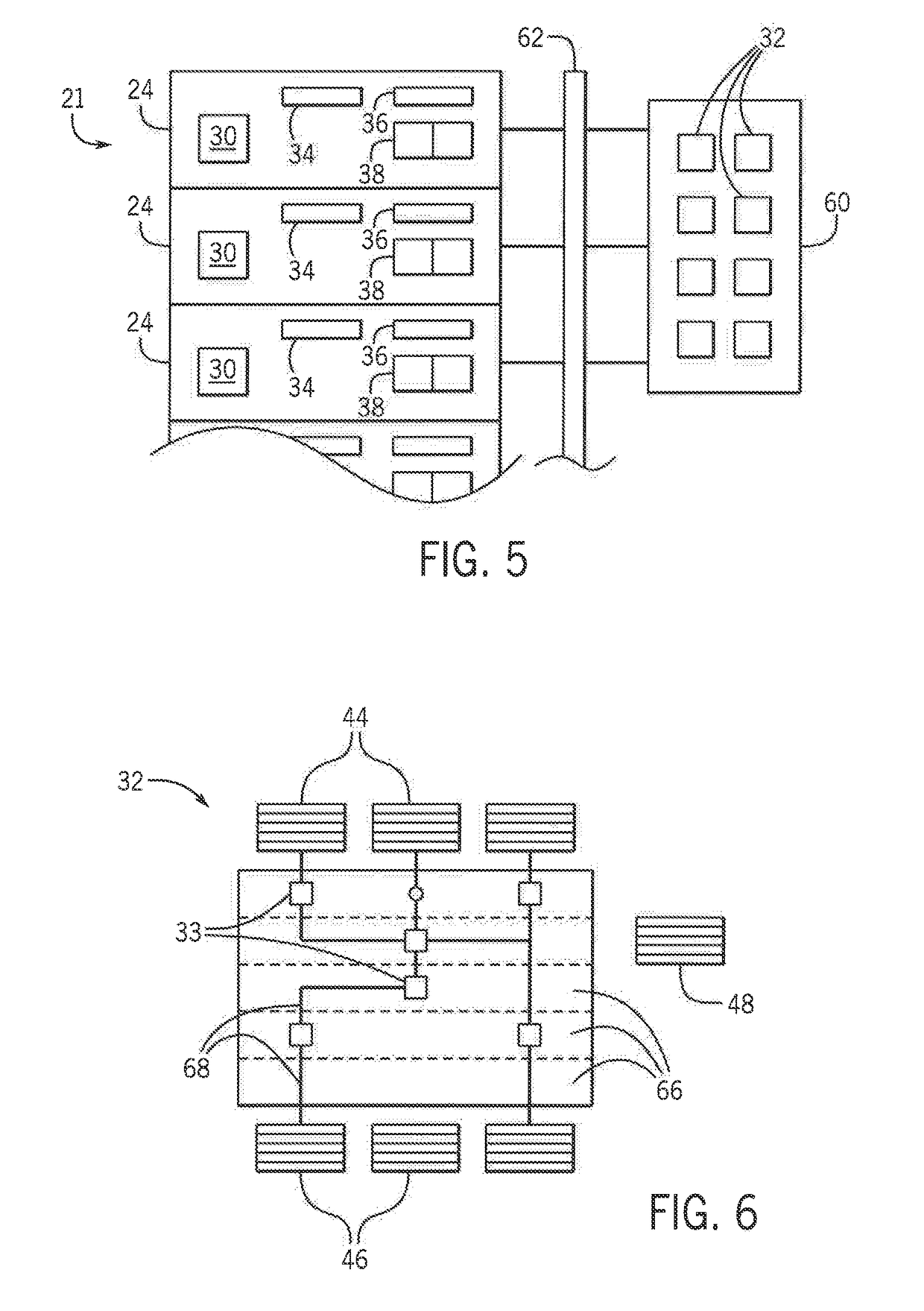

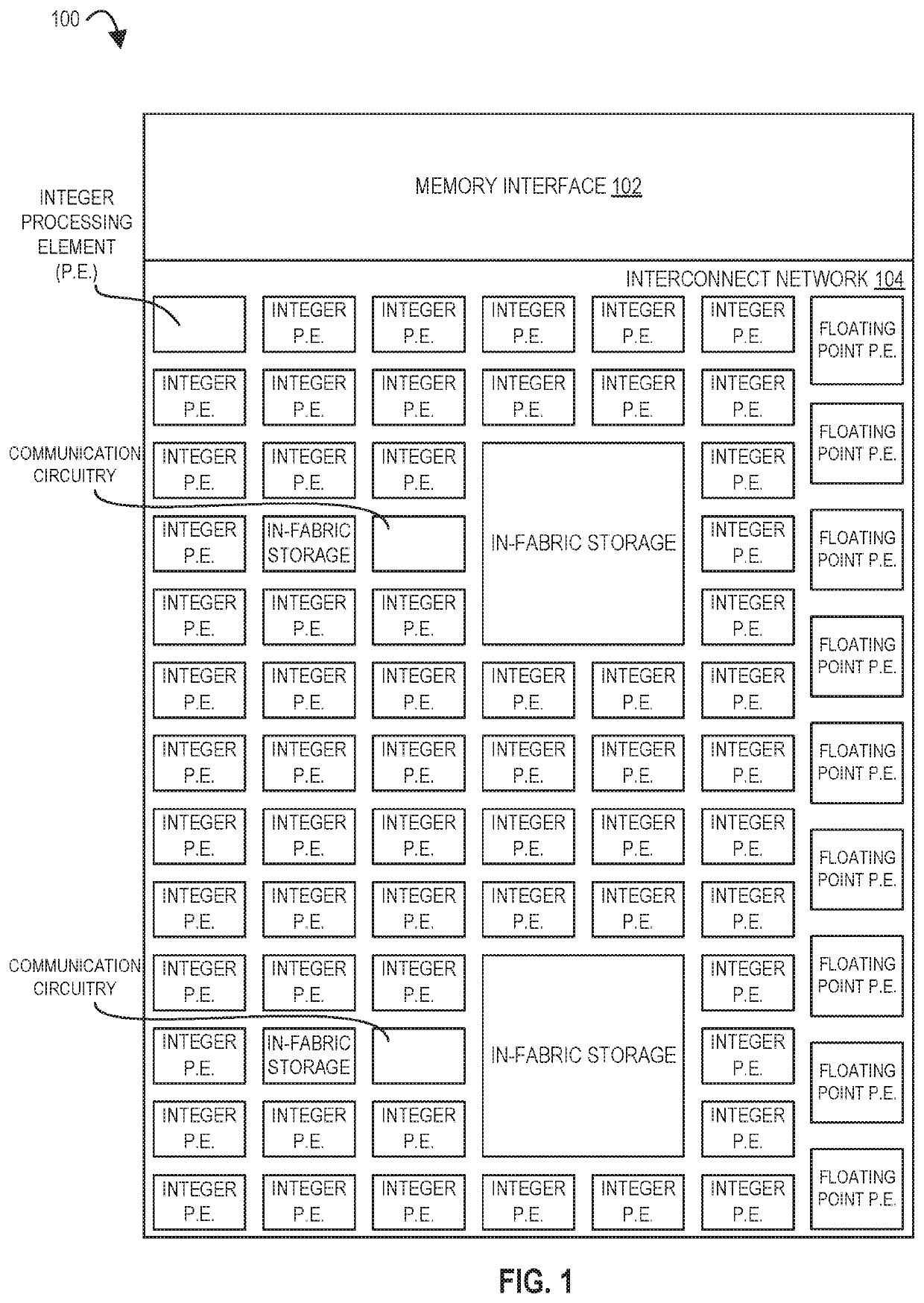

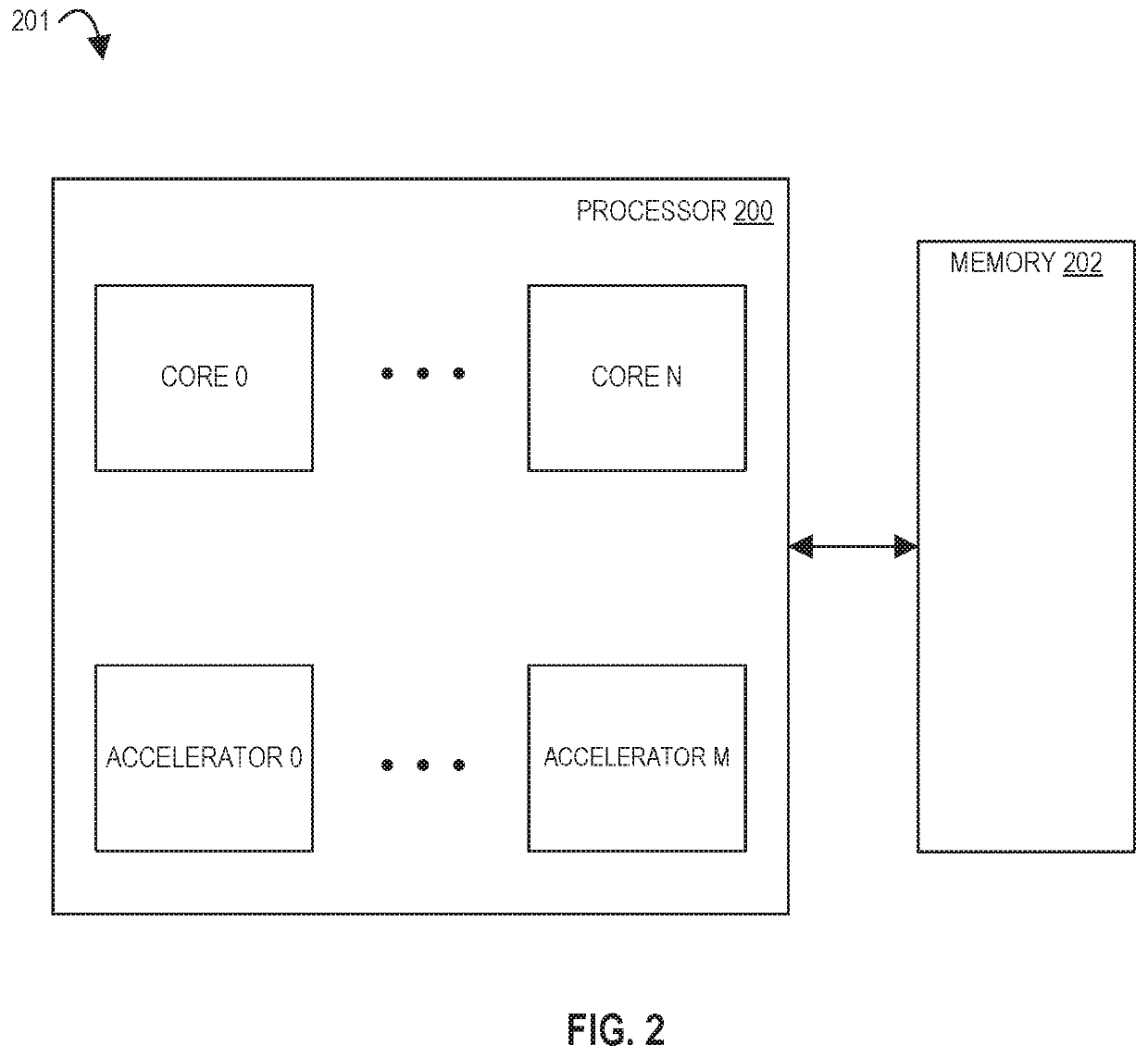

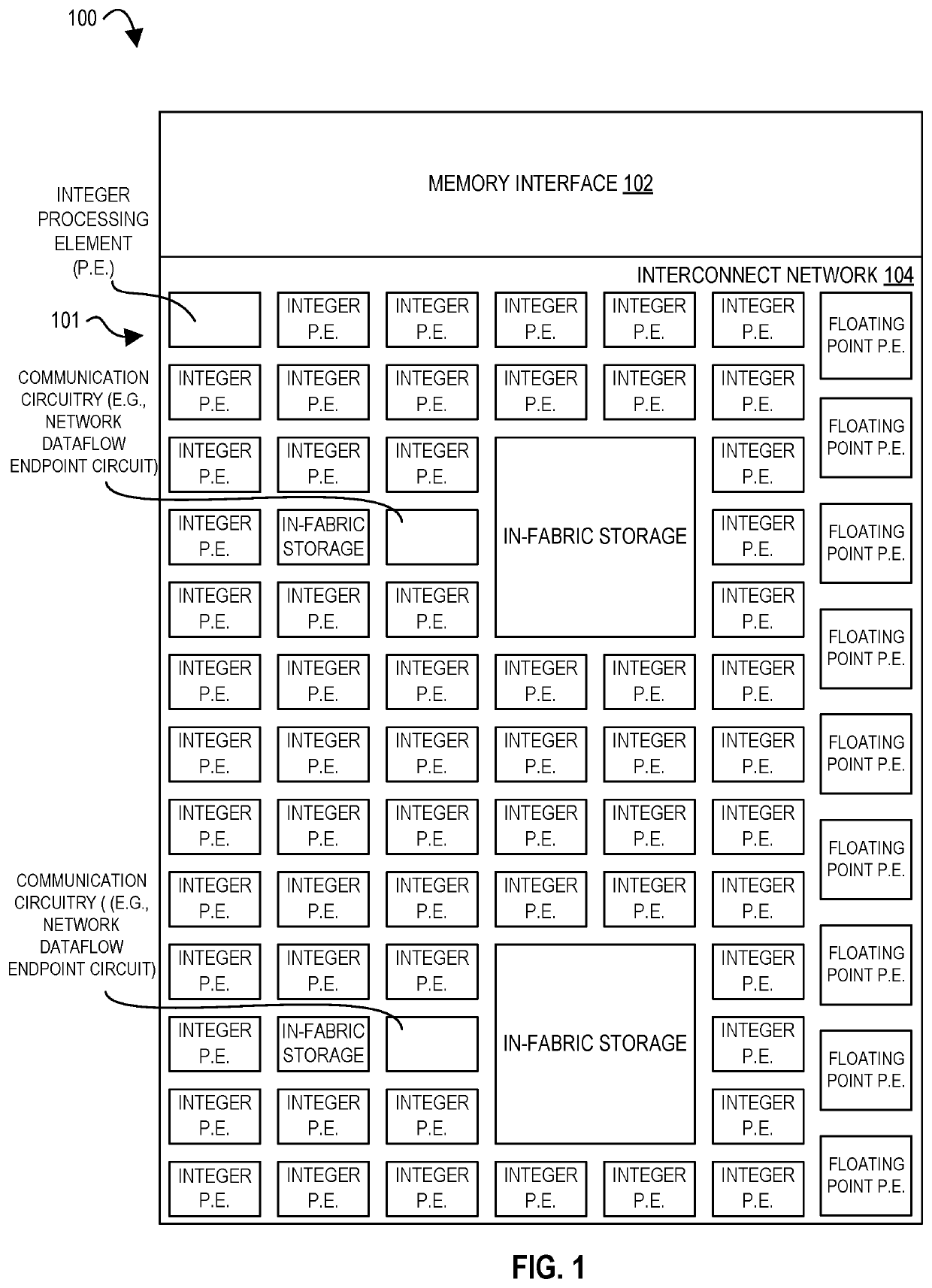

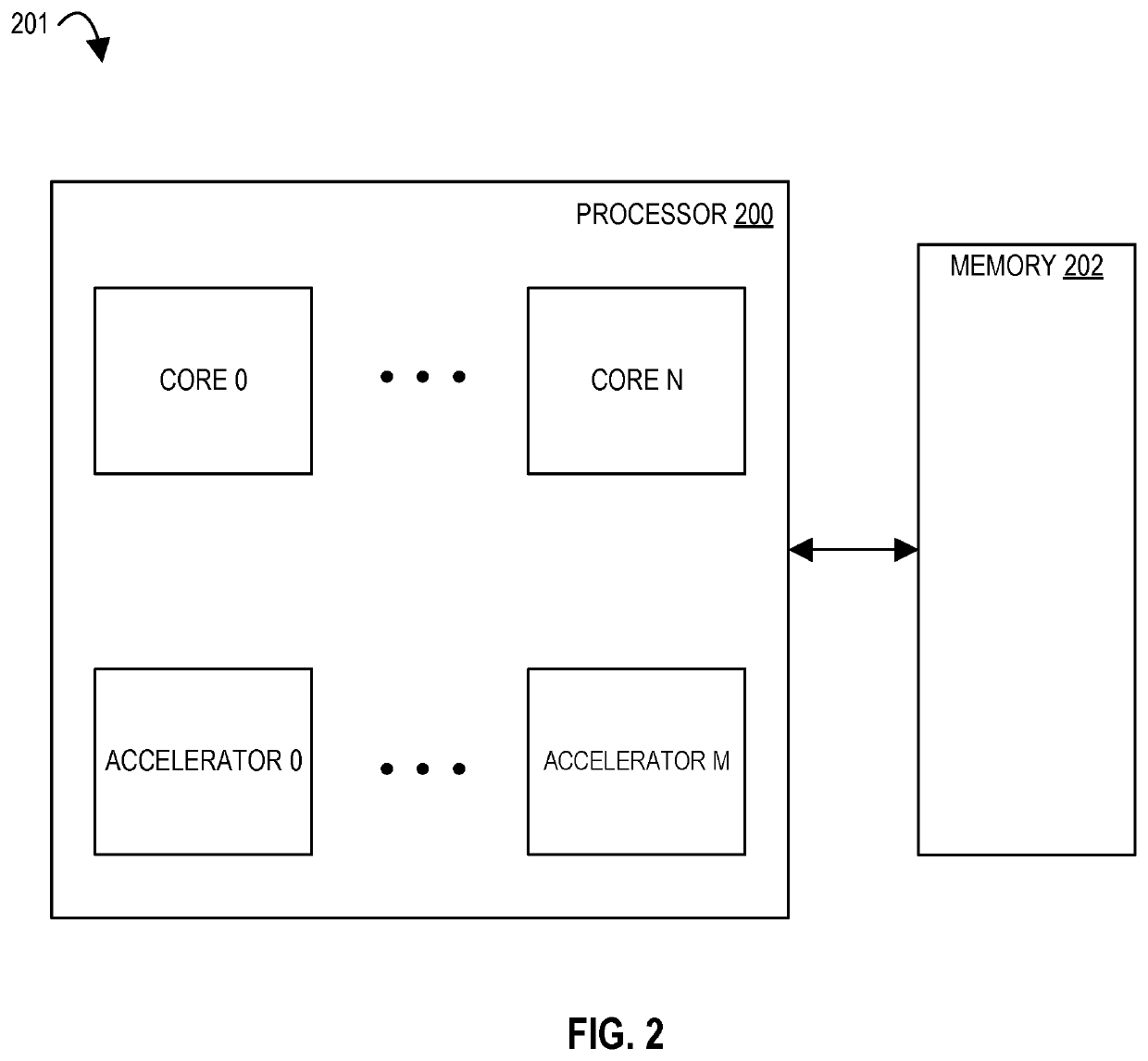

Processors, methods, and systems with a configurable spatial accelerator

Active | US20190018815A1 | Easy to adapt | Improve performance | Dataflow computers | Resource allocation | Computer science | Voltage

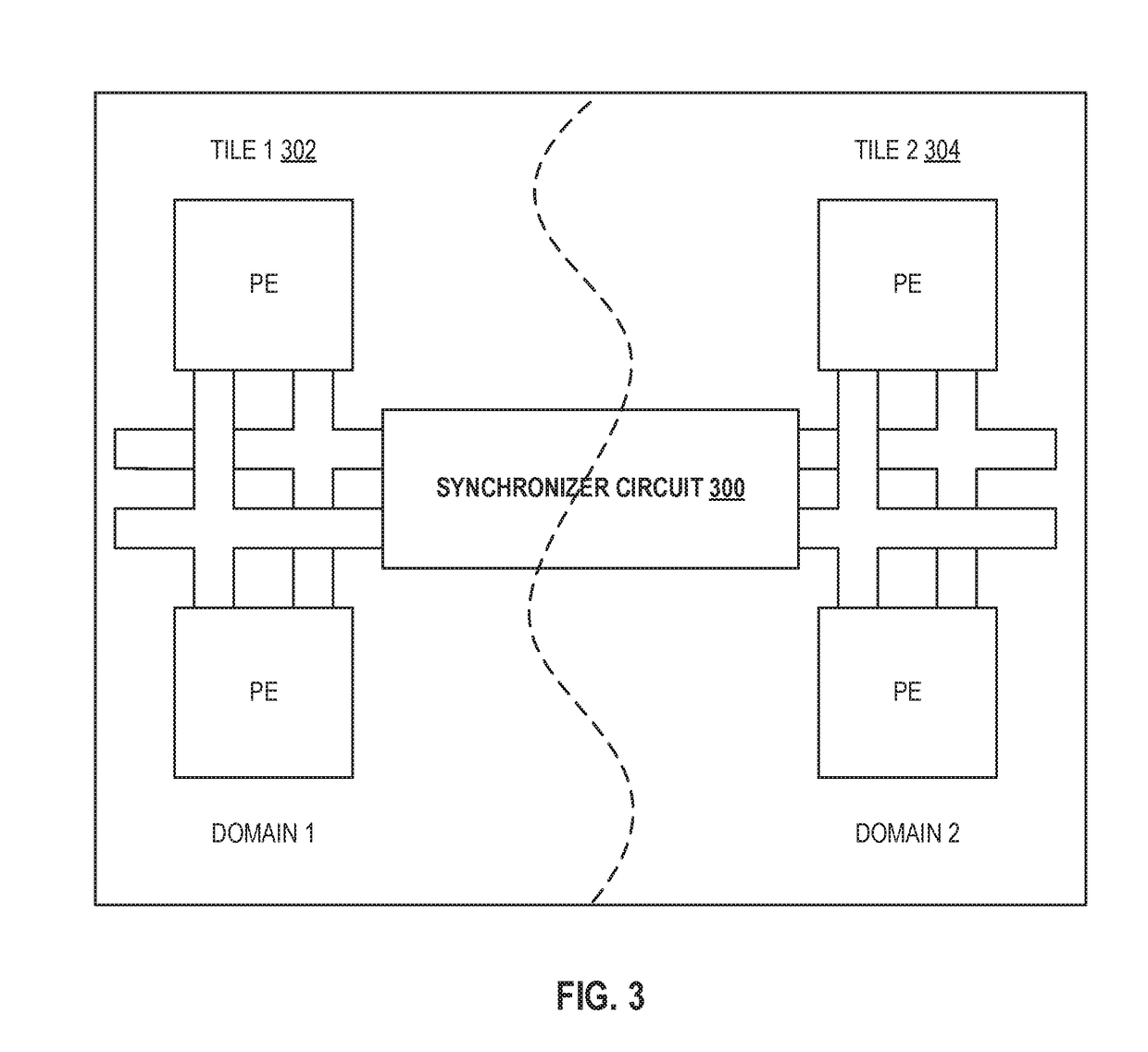

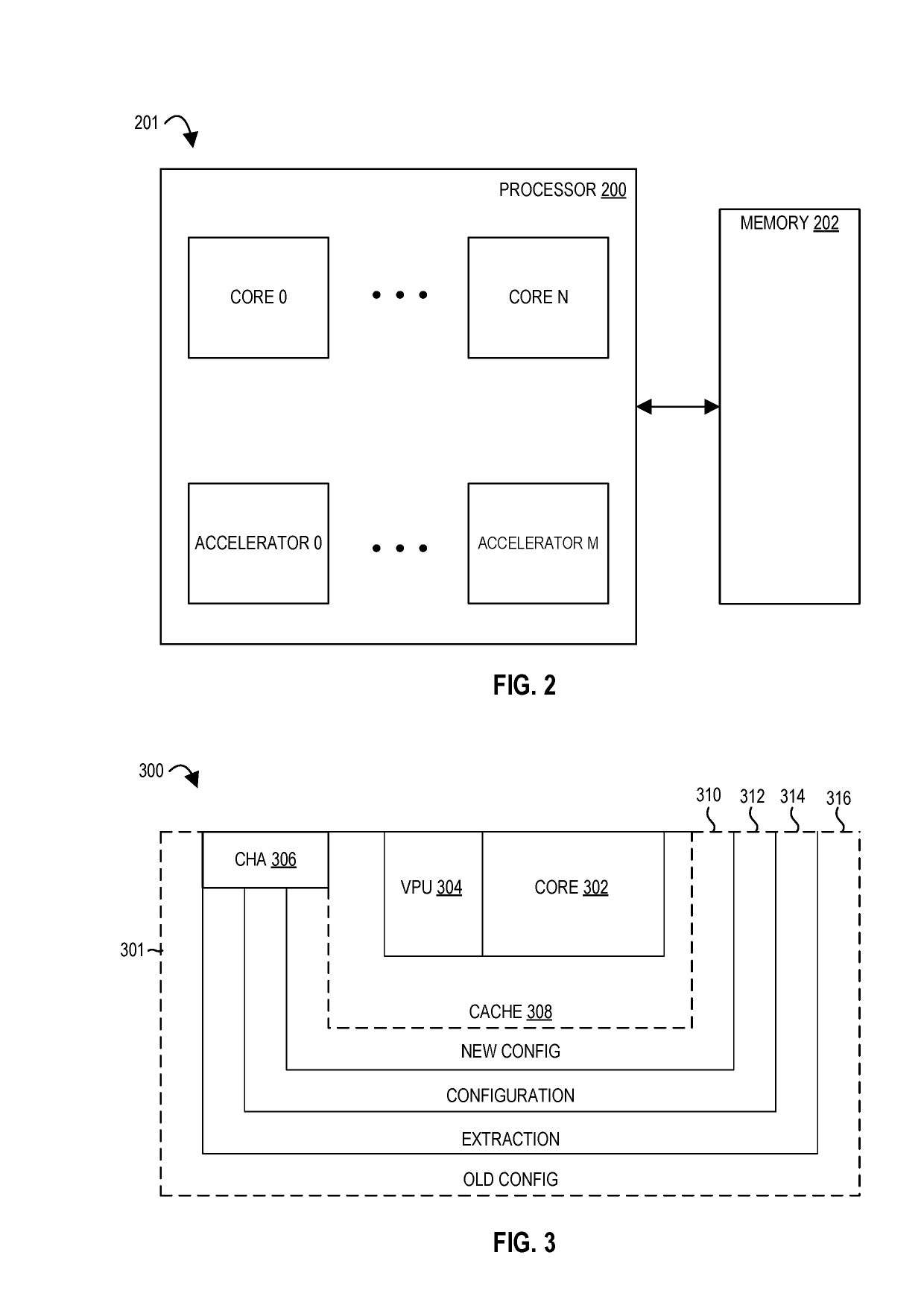

Systems, methods, and apparatuses relating to a configurable spatial accelerator are described. In one embodiment, a processor includes a synchronizer circuit coupled between an interconnect network of a first tile and an interconnect network of a second tile and comprising storage to store data to be sent between the interconnect network of the first tile and the interconnect network of the second tile, the synchronizer circuit to convert the data from the storage between a first voltage or a first frequency of the first tile and a second voltage or a second frequency of the second tile to generate converted data, and send the converted data between the interconnect network of the first tile and the interconnect network of the second tile.

Owner:INTEL CORP

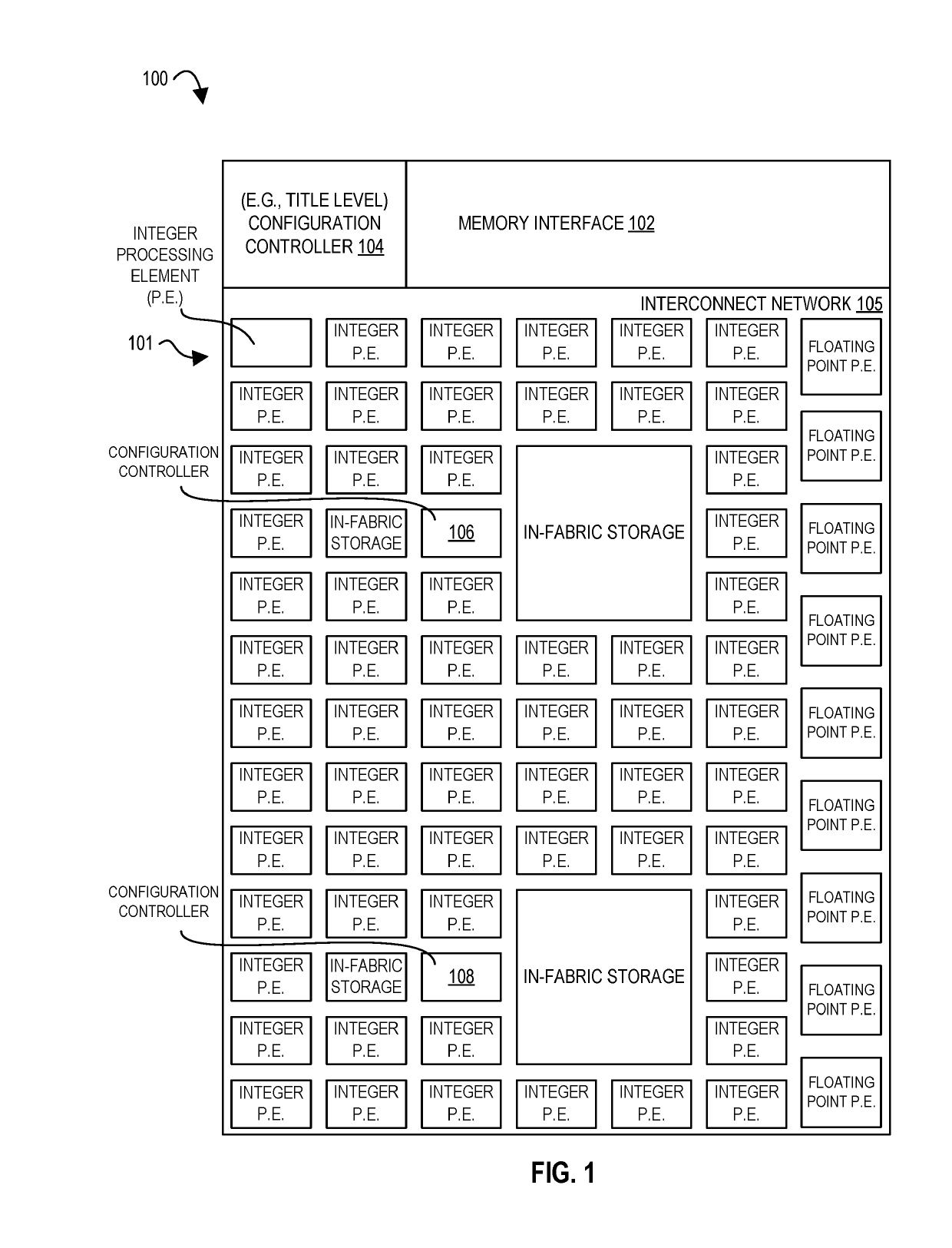

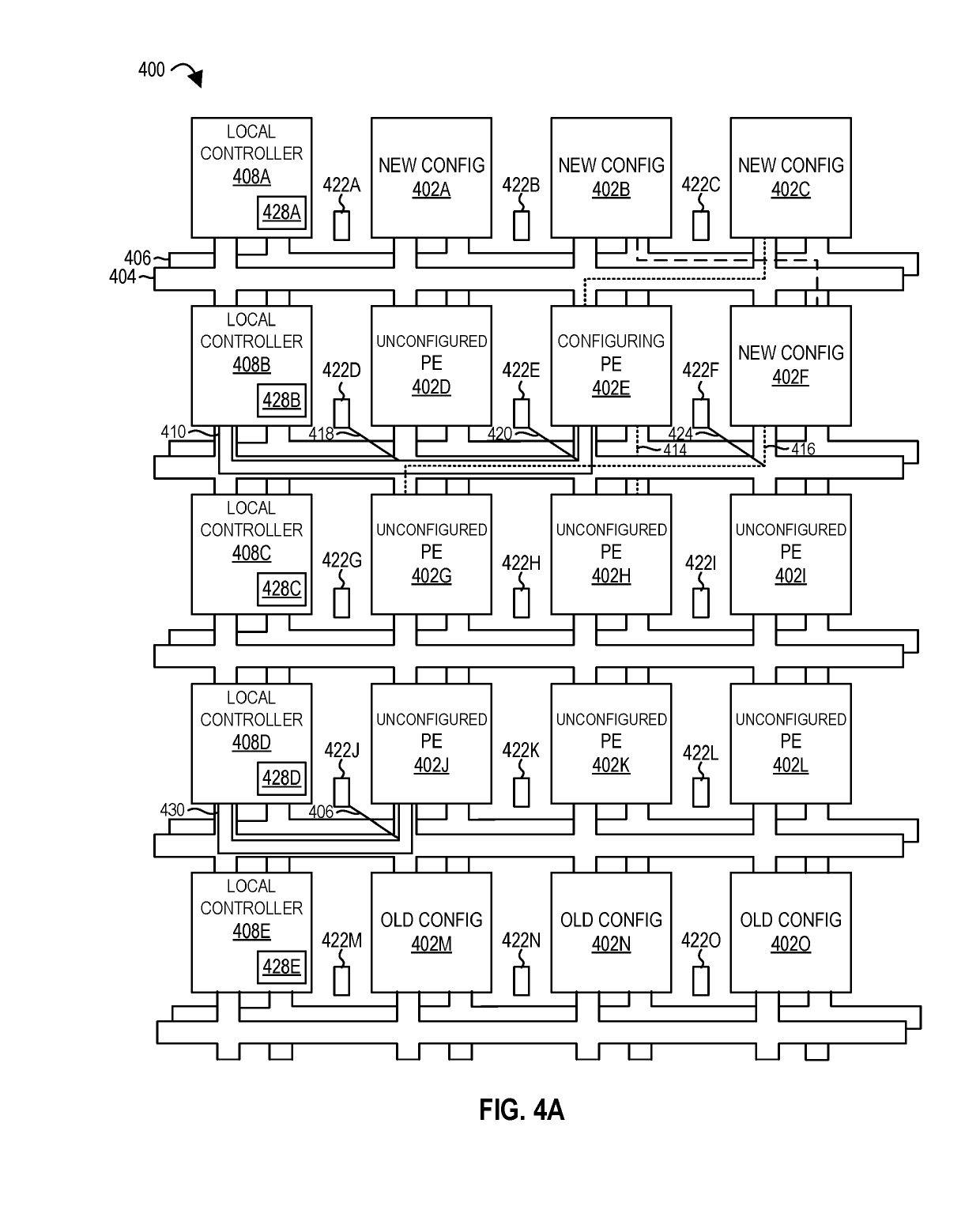

Processors and methods for configurable clock gating in a spatial array

Inactive | US20190101952A1 | Easy to adapt | Improve performance | Dataflow computers | Single instruction multiple data multiprocessors | Signal on | Parallel computing

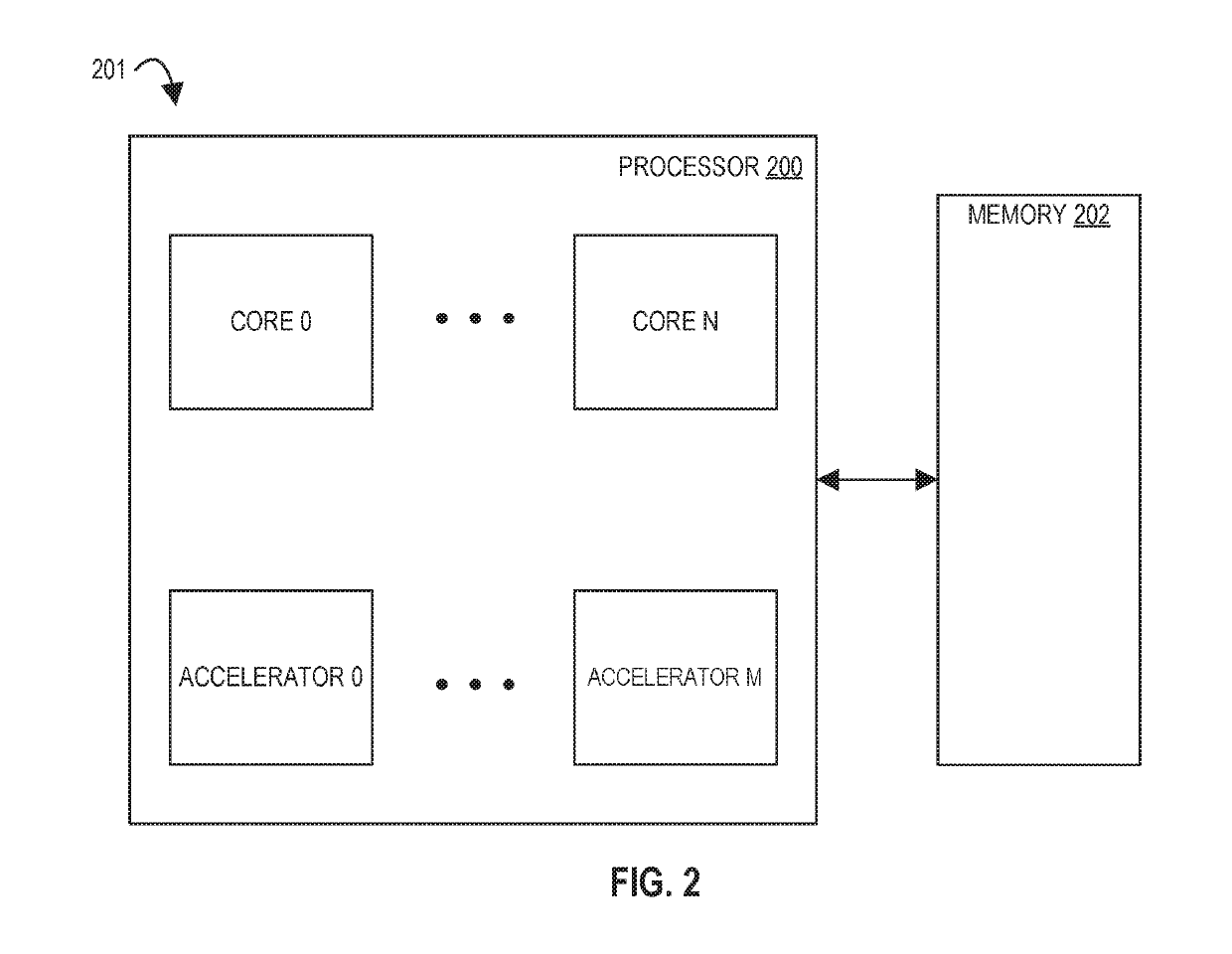

Methods and apparatuses relating to configurable clock gating in spatial arrays are described. In one embodiment, a processor includes processing elements; an interconnect network between the processing elements; and a configuration controller, coupled to a first processing element and a second processing element of the plurality of processing elements and the first processing element having an output coupled to an input of the second processing element, to configure the second processing element to clock gate at least one clocked component of the second processing element, and configure the first processing element to send a reenable signal on the interconnect network to the second processing element to reenable the at least one clocked component of the second processing element when data is to be sent from the first processing element to the second processing element.

Owner:INTEL CORP

Reconfigurable, Application-Specific Computer Accelerator

Pending | US20180210730A1 | Simple memory and reuse pattern | Increase intensity | Dataflow computers | Concurrent instruction execution | Data stream processing | Application specific

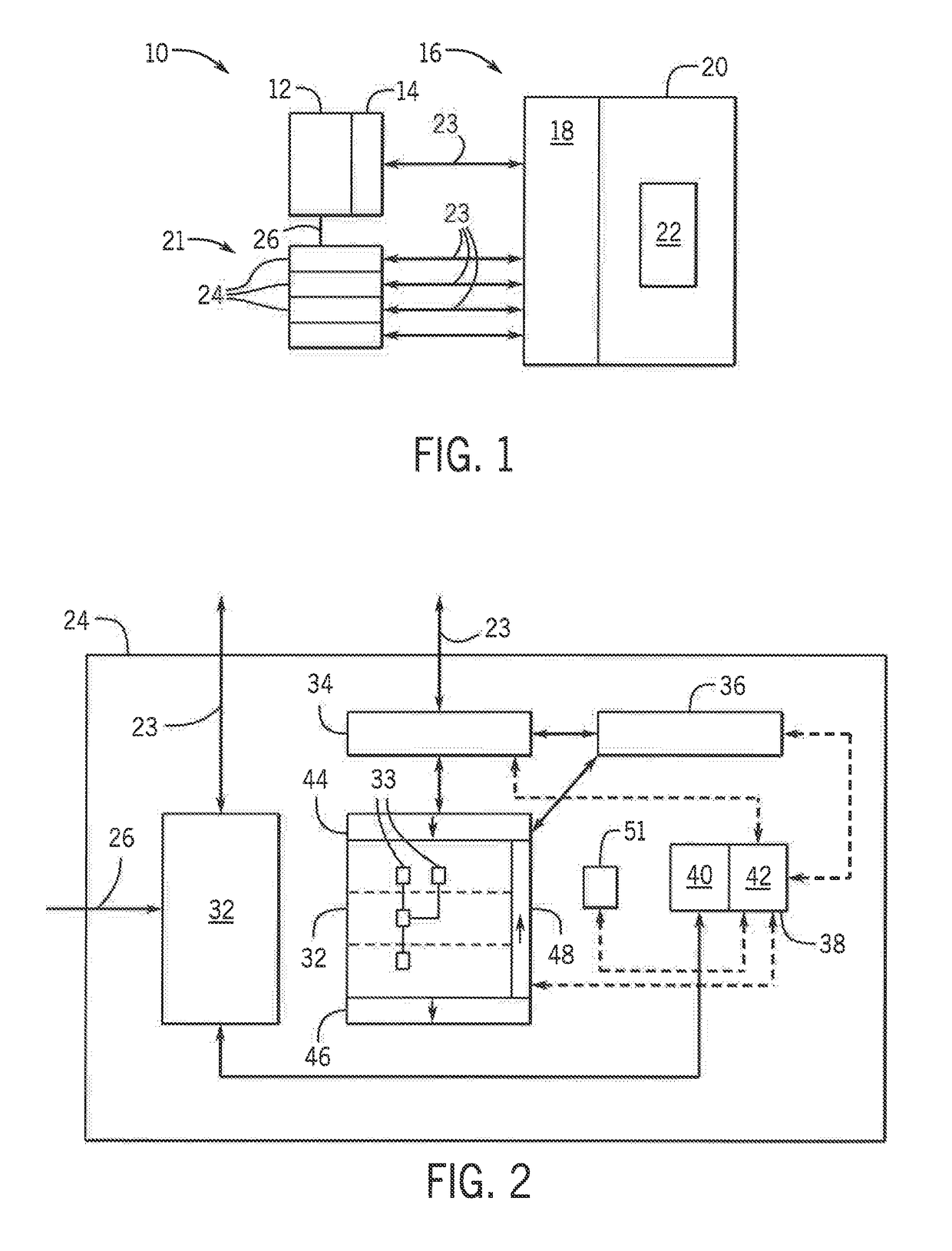

A reconfigurable hardware accelerator for computers combines a high-speed dataflow processor, having programmable functional units rapidly reconfigured in a network of programmable switches, with a stream processor that may autonomously access memory in predefined access patterns after receiving simple stream instructions. The result is a compact, high-speed processor that may exploit parallelism associated with many application-specific programs susceptible to acceleration.

Owner:WISCONSIN ALUMNI RES FOUND

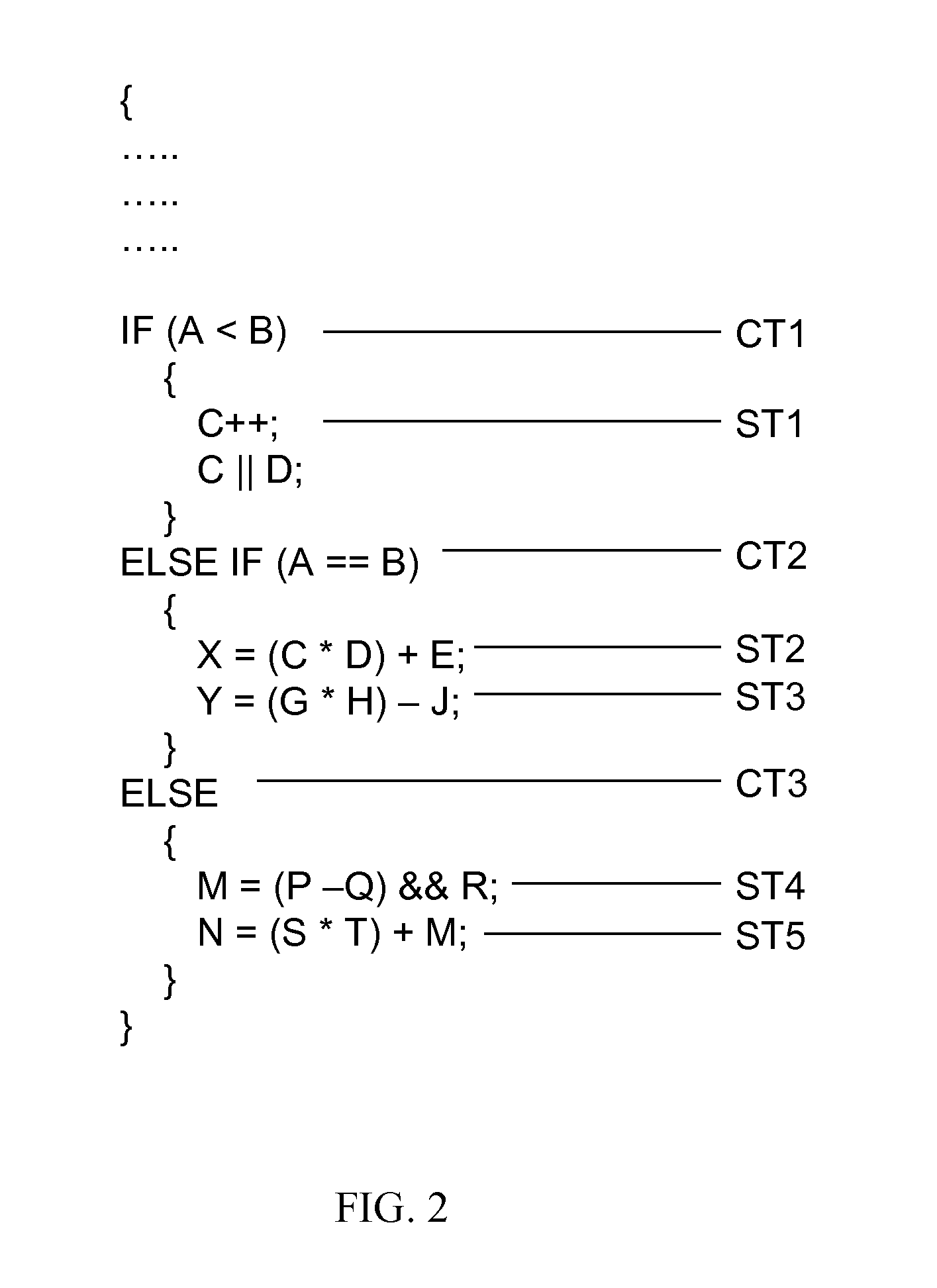

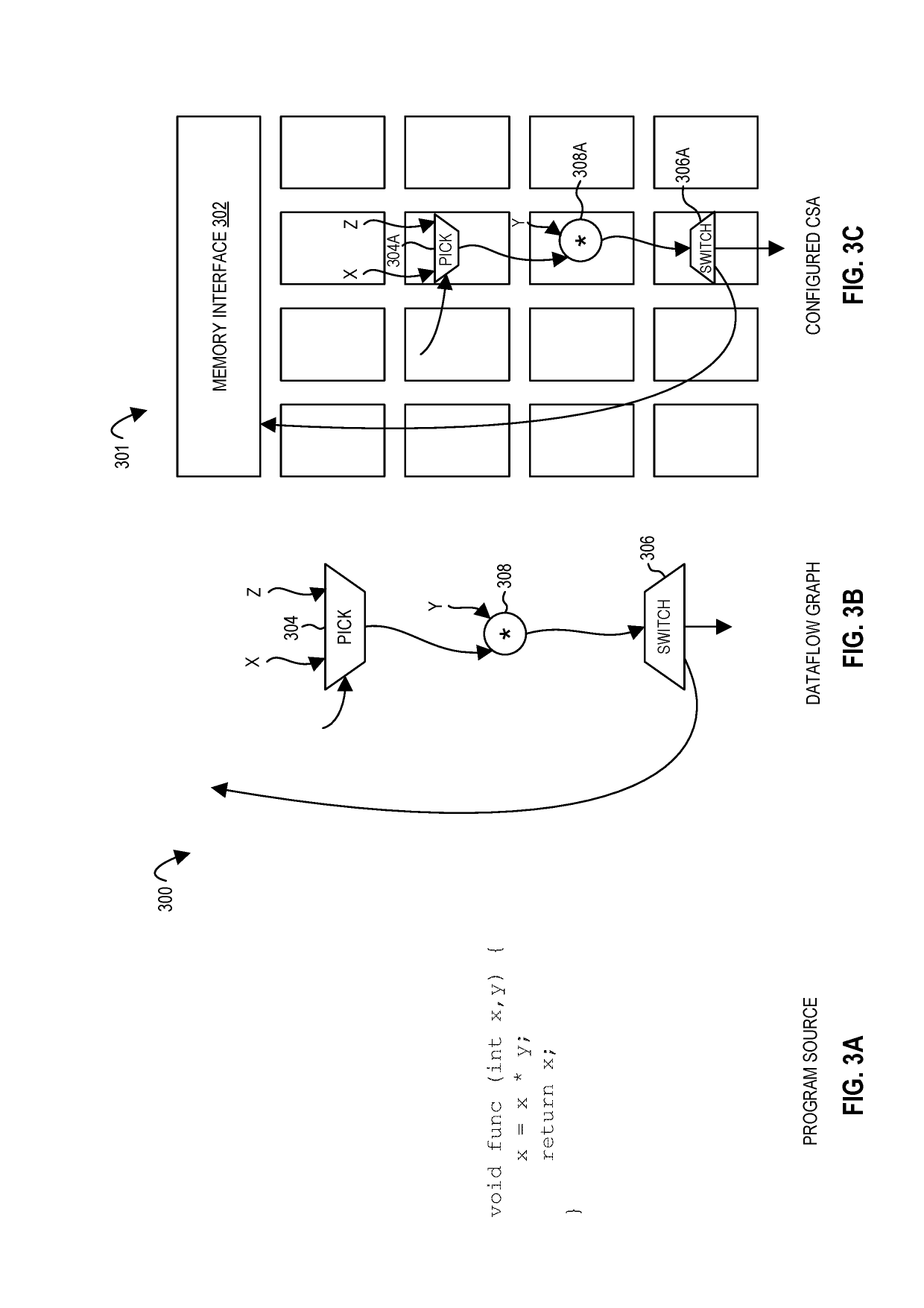

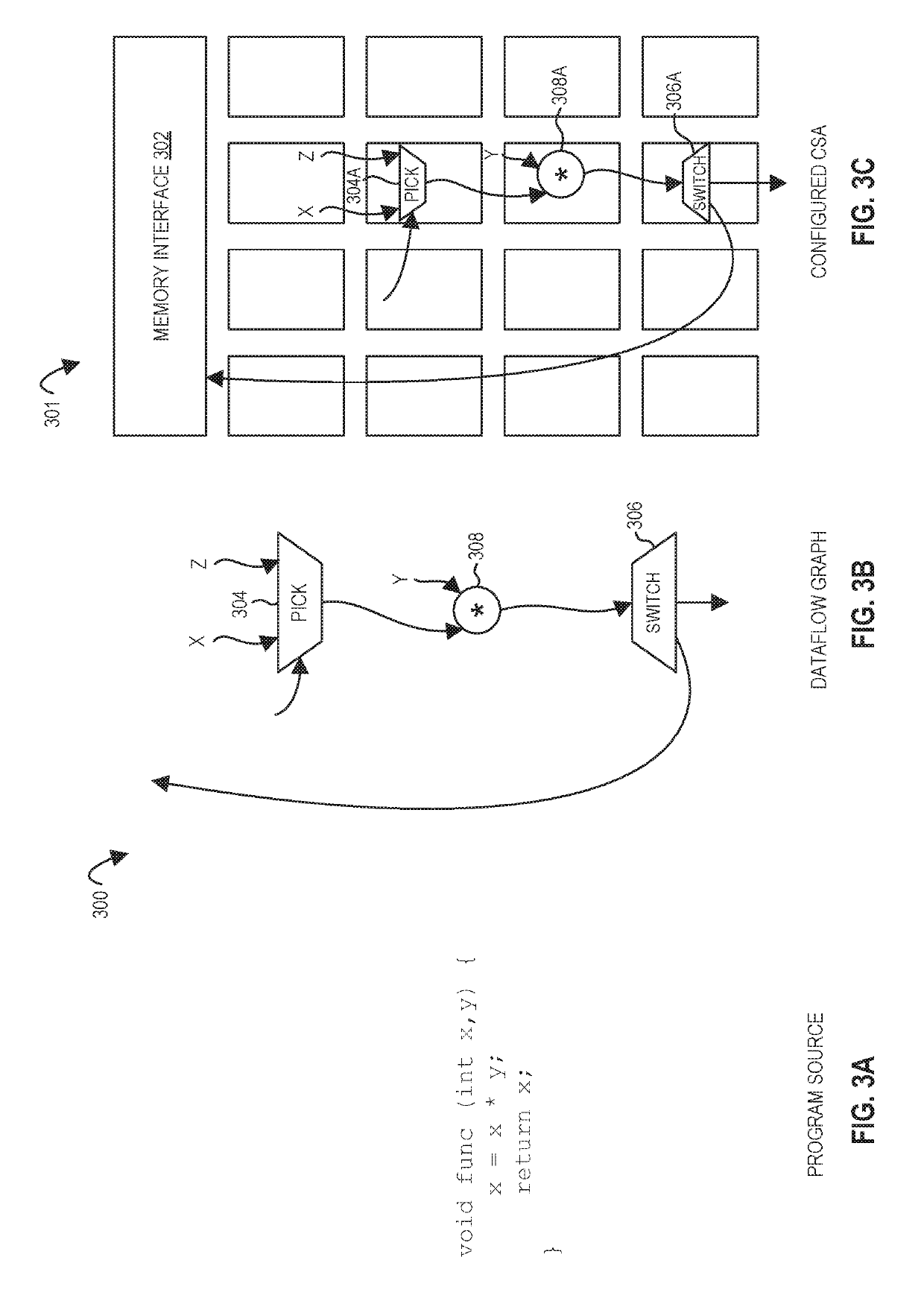

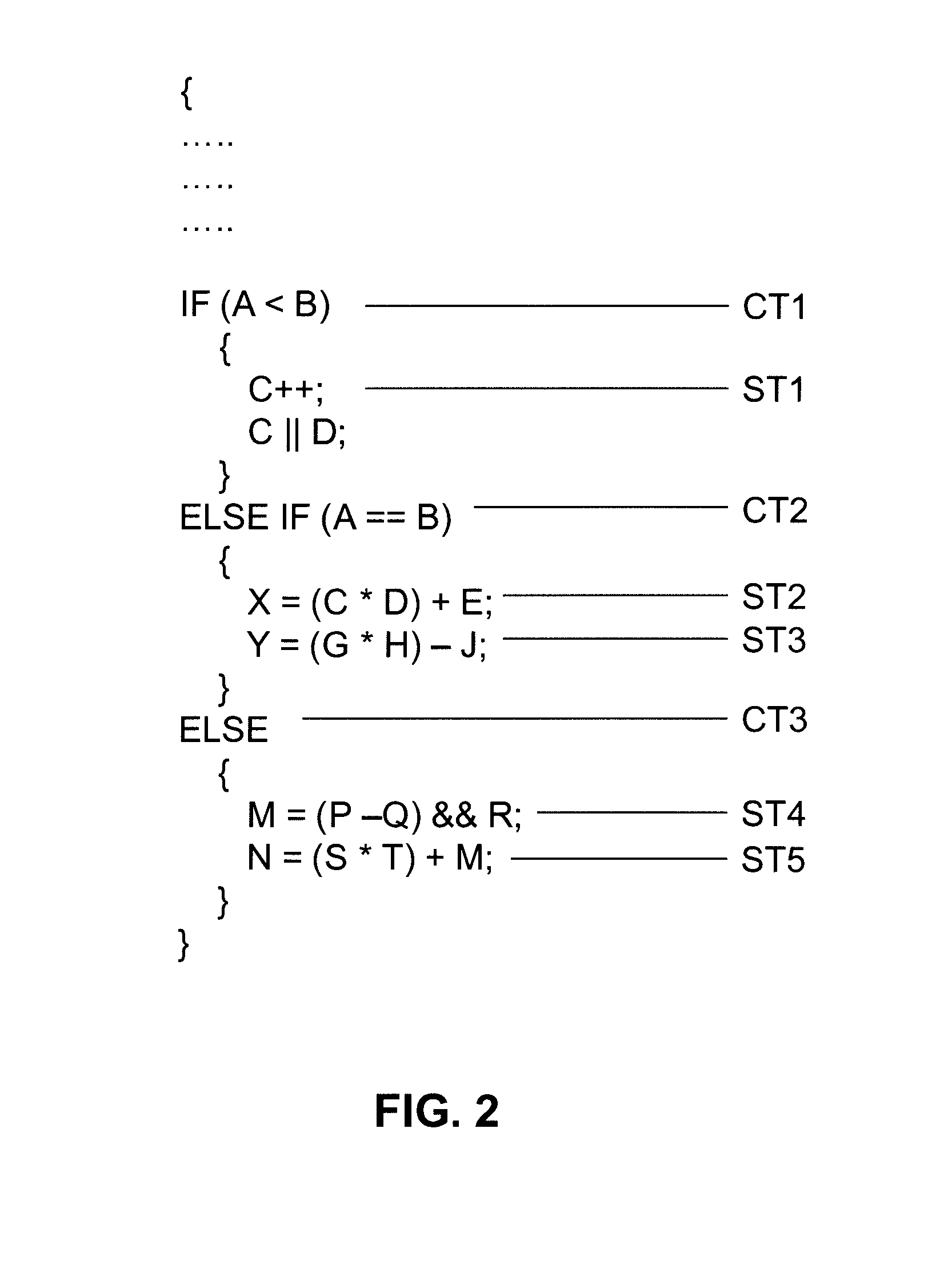

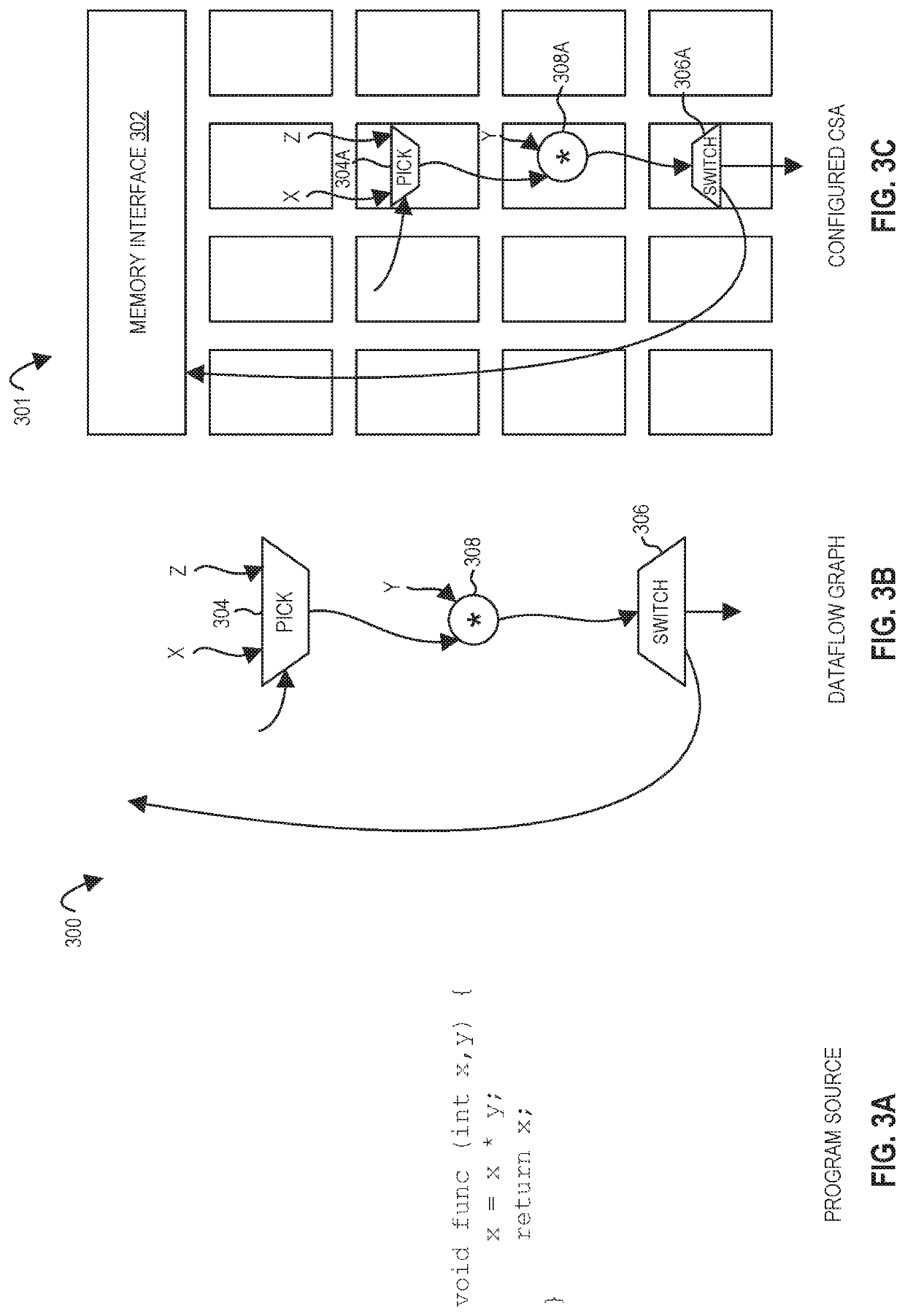

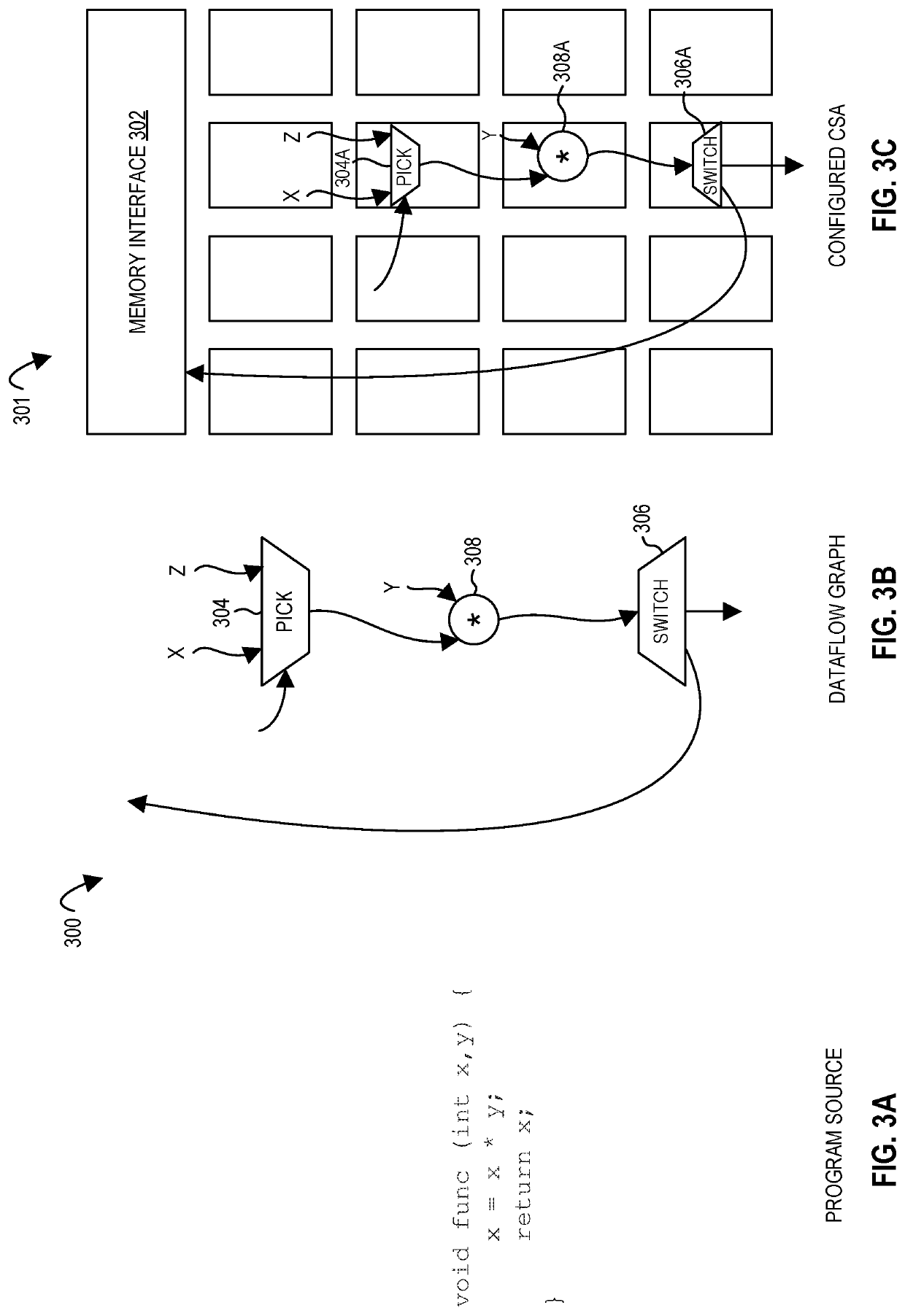

Apparatus, methods, and systems for unstructured data flow in a configurable spatial accelerator

Active | US20190303153A1 | Dataflow computers | Concurrent instruction execution | Processing element | Unstructured data

Systems, methods, and apparatuses relating to unstructured data flow in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator includes a data path having a first branch and a second branch, and the data path comprising at least one processing element; a switch circuit comprising a switch control input to receive a first switch control value to couple an input of the switch circuit to the first branch and a second switch control value to couple the input of the switch circuit to the second branch; a pick circuit comprising a pick control input to receive a first pick control value to couple an output of the pick circuit to the first branch and a second pick control value to couple the output of the pick circuit to a third branch of the data path; a predicate propagation processing element to output a first edge predicate value and a second edge predicate value based on (e.g., both of) a switch control value from the switch control input of the switch circuit and a first block predicate value; and a predicate merge processing element to output a pick control value to the pick control input of the pick circuit and a second block predicate value based on both of a third edge predicate value and one of the first edge predicate value or the second edge predicate value.

Owner:INTEL CORP
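The switch and pick circuits named in this abstract correspond to the usual steer and merge operators of dataflow graphs. The sketch below models only those two operators in software. The function names and the use of `None` for "no token" are assumptions, and the predicate propagation and predicate merge elements are not modelled.

```python
def switch(control, value):
    """Steer value to branch 0 or branch 1; the unselected branch gets no token (None)."""
    return (value, None) if control == 0 else (None, value)


def pick(control, branch0, branch1):
    """Forward the token from the branch selected by control."""
    return branch0 if control == 0 else branch1


def abs_dataflow(x):
    # The control value is computed upstream of the switch.
    control = 1 if x < 0 else 0
    pos, neg = switch(control, x)
    pos_out = pos                               # branch 0: pass the value through
    neg_out = -neg if neg is not None else None  # branch 1: negate the value
    return pick(control, pos_out, neg_out)
```

Here `abs_dataflow` expresses an if/else as data steering: only the selected branch ever receives a token, and `pick` rejoins the two paths.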

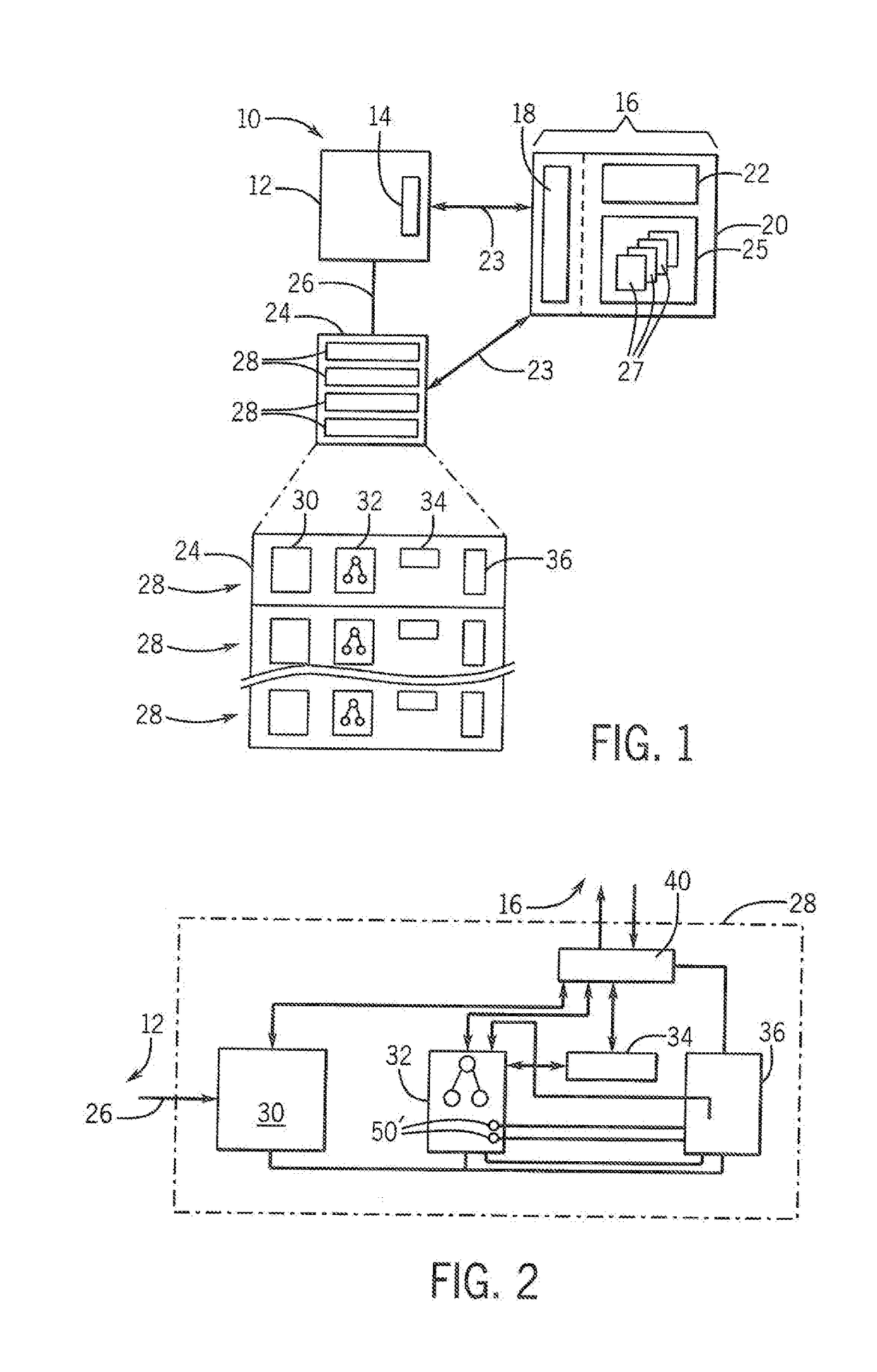

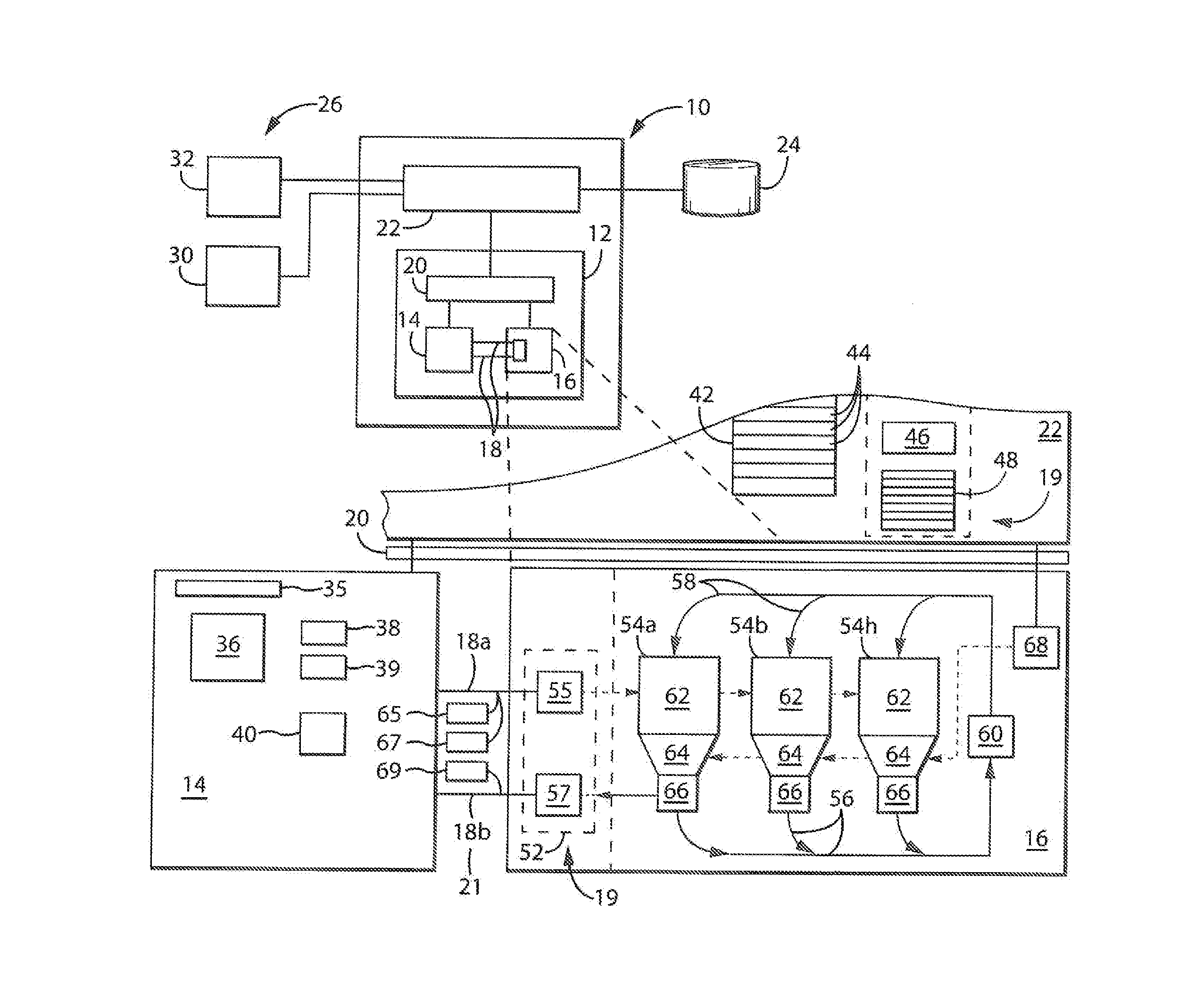

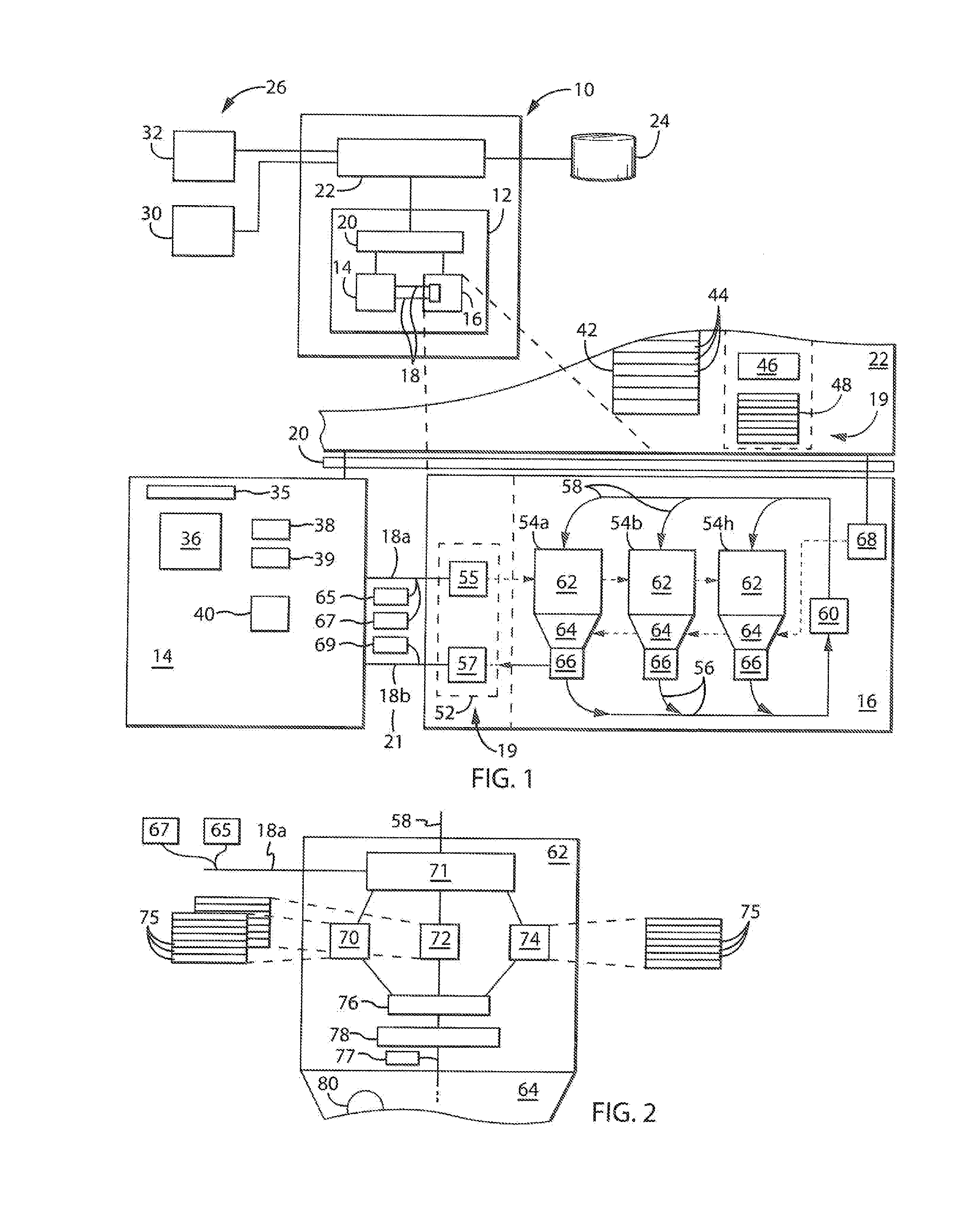

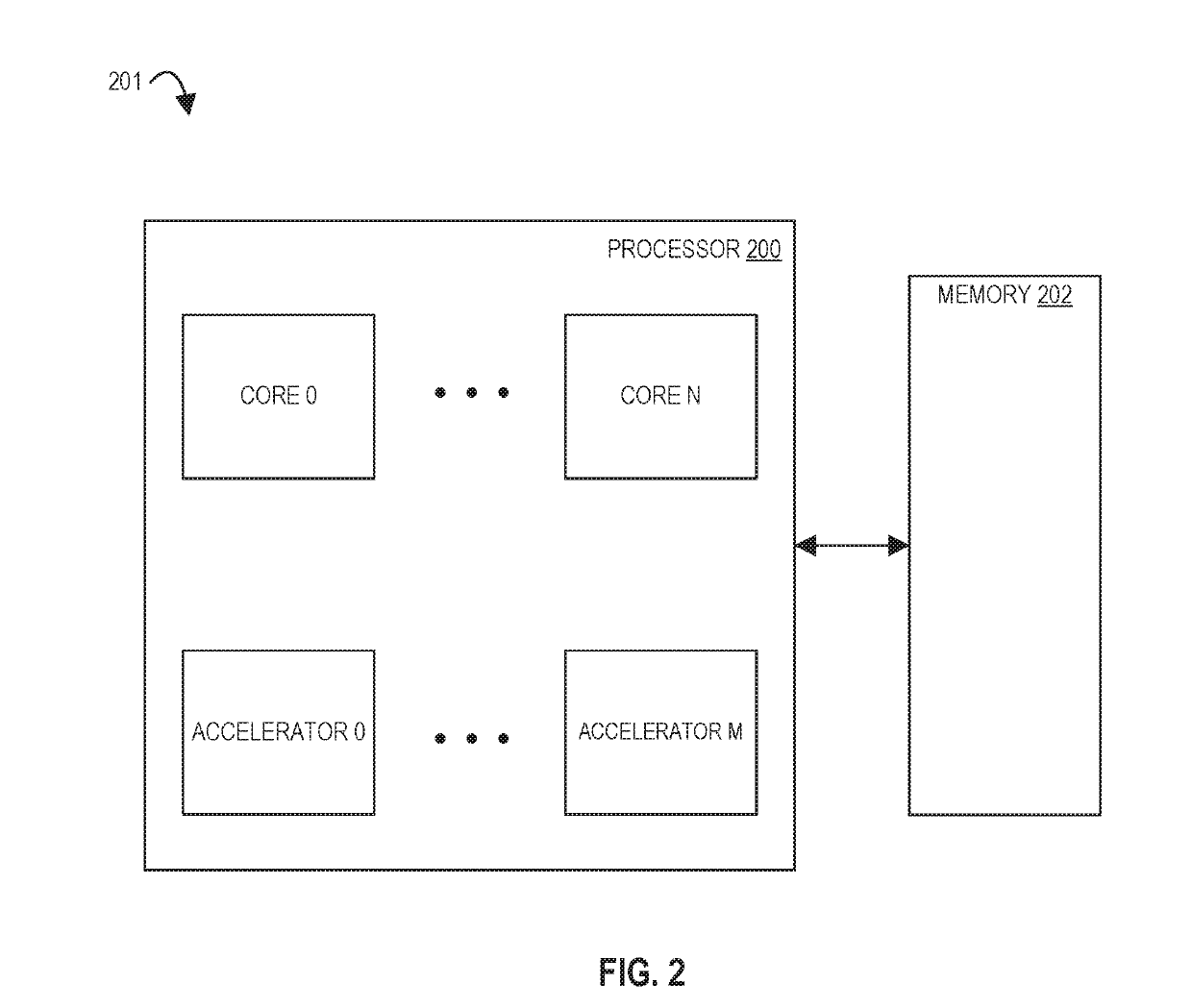

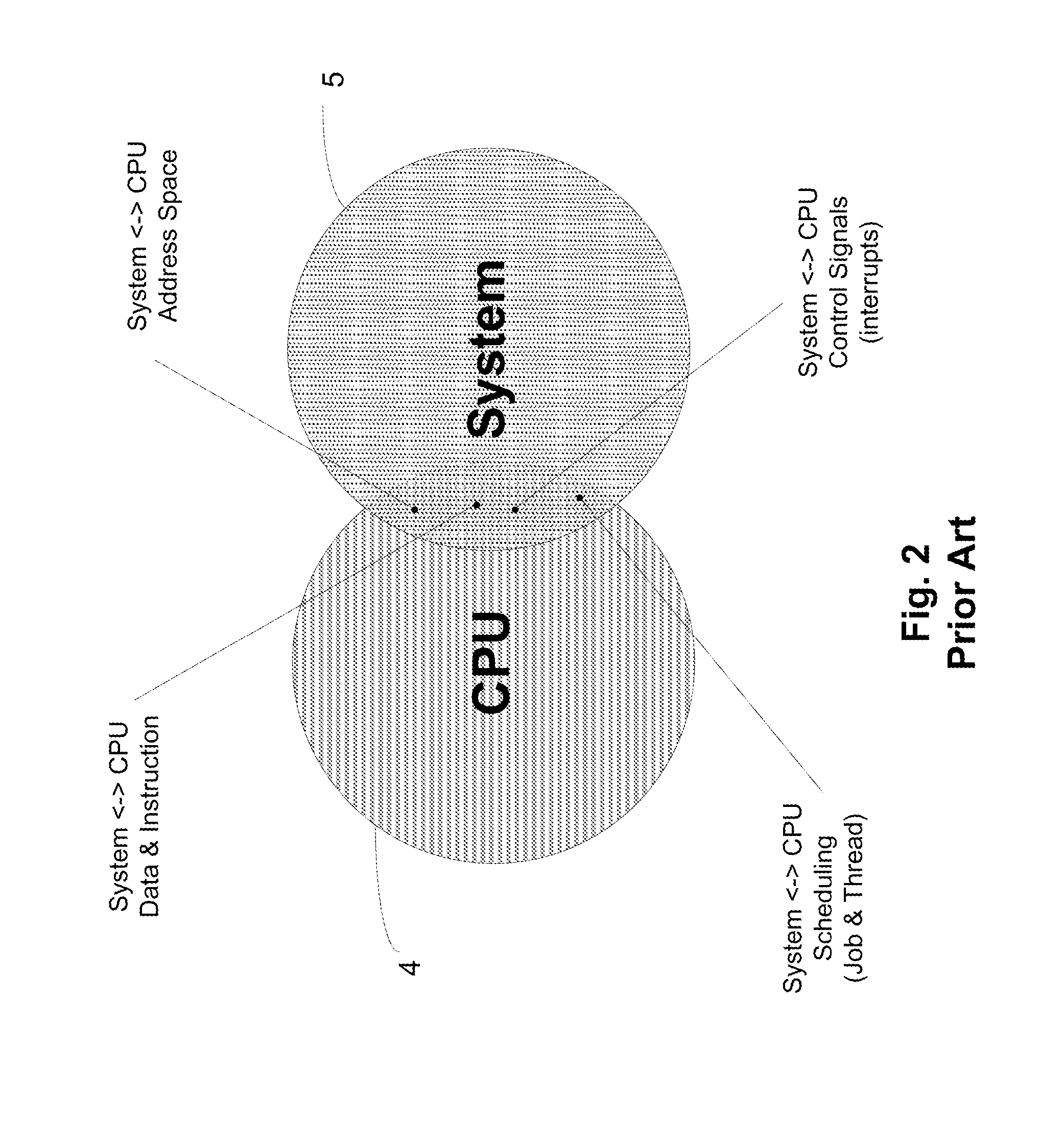

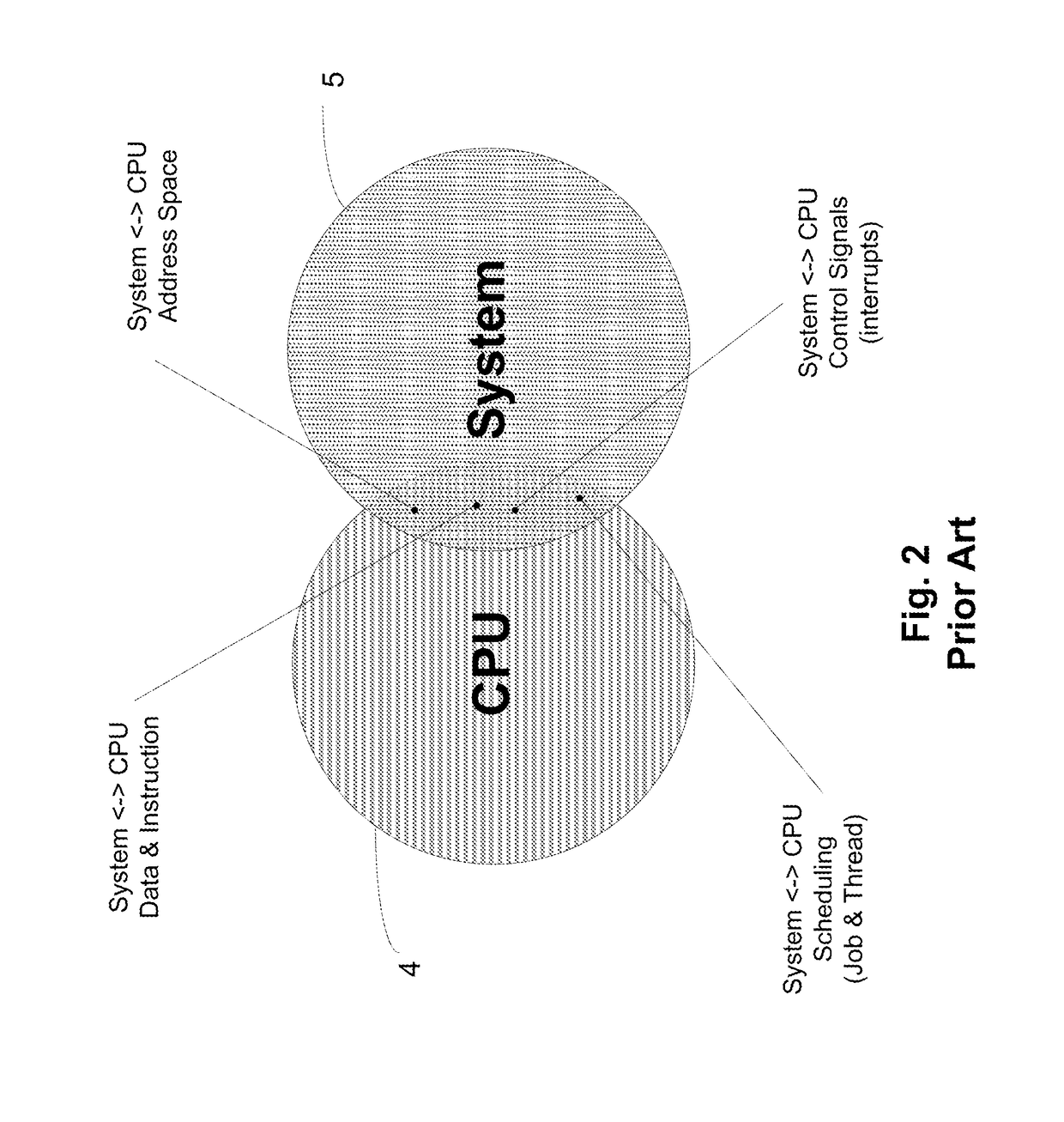

Computer with Hybrid Von-Neumann/Dataflow Execution Architecture

Active | US20170031866A1 | Simple control process | Avoid problems | Dataflow computers | Instruction analysis | Data stream processing | General purpose computer

A dataflow computer processor is teamed with a general computer processor so that program portions of an application program particularly suited to dataflow execution may be transferred to the dataflow processor during portions of the execution of the application program by the general computer processor. During this time the general computer processor may be placed in partial shutdown for energy conservation.

Owner:WISCONSIN ALUMNI RES FOUND

Processors, methods, and systems for debugging a configurable spatial accelerator

Active | US20190095383A1 | Easy to adapt | Improve performance | Associative processors | Dataflow computers | Processing element | Operand

Systems, methods, and apparatuses relating to debugging a configurable spatial accelerator are described. In one embodiment, a processor includes a plurality of processing elements and an interconnect network between the plurality of processing elements to receive an input of a dataflow graph comprising a plurality of nodes, wherein the dataflow graph is to be overlaid into the interconnect network and the plurality of processing elements with each node represented as a dataflow operator in the plurality of processing elements, and the plurality of processing elements are to perform an operation by a respective, incoming operand set arriving at each of the dataflow operators of the plurality of processing elements. At least a first of the plurality of processing elements is to enter a halted state in response to being represented as a first of the plurality of dataflow operators.

Owner:INTEL CORP
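The firing rule described here (an operator performs its operation once its incoming operand set has arrived) can be sketched as a tiny interpreter. Everything in it is an illustrative assumption: the `Operator` class, the `halted` flag standing in for the halted state, and the polling loop in `run` are not the patented hardware mechanism.

```python
import operator


class Operator:
    """A dataflow operator that fires once all of its operands have arrived."""

    def __init__(self, name, fn, arity):
        self.name, self.fn, self.arity = name, fn, arity
        self.operands = {}     # input slot -> value
        self.consumers = []    # (operator, slot) pairs fed by this node
        self.halted = False    # hypothetical debug flag

    def receive(self, slot, value):
        self.operands[slot] = value

    def ready(self):
        return not self.halted and len(self.operands) == self.arity


def run(operators):
    """Fire ready operators until nothing more can fire; return outputs by name."""
    results = {}
    progress = True
    while progress:
        progress = False
        for op in operators:
            if op.ready():
                args = [op.operands[i] for i in range(op.arity)]
                op.operands = {}
                out = op.fn(*args)
                results[op.name] = out
                for consumer, slot in op.consumers:
                    consumer.receive(slot, out)
                progress = True
    return results


def build():
    # Graph for (2 + 3) * 4.
    add = Operator("add", operator.add, 2)
    mul = Operator("mul", operator.mul, 2)
    add.consumers.append((mul, 0))
    add.receive(0, 2)
    add.receive(1, 3)
    mul.receive(1, 4)
    return add, mul


add, mul = build()
normal = run([add, mul])

add, mul = build()
mul.halted = True          # halt one operator for inspection
halted = run([add, mul])
```

In the halted run, `mul` keeps its pending operands instead of consuming them, so they can be inspected.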

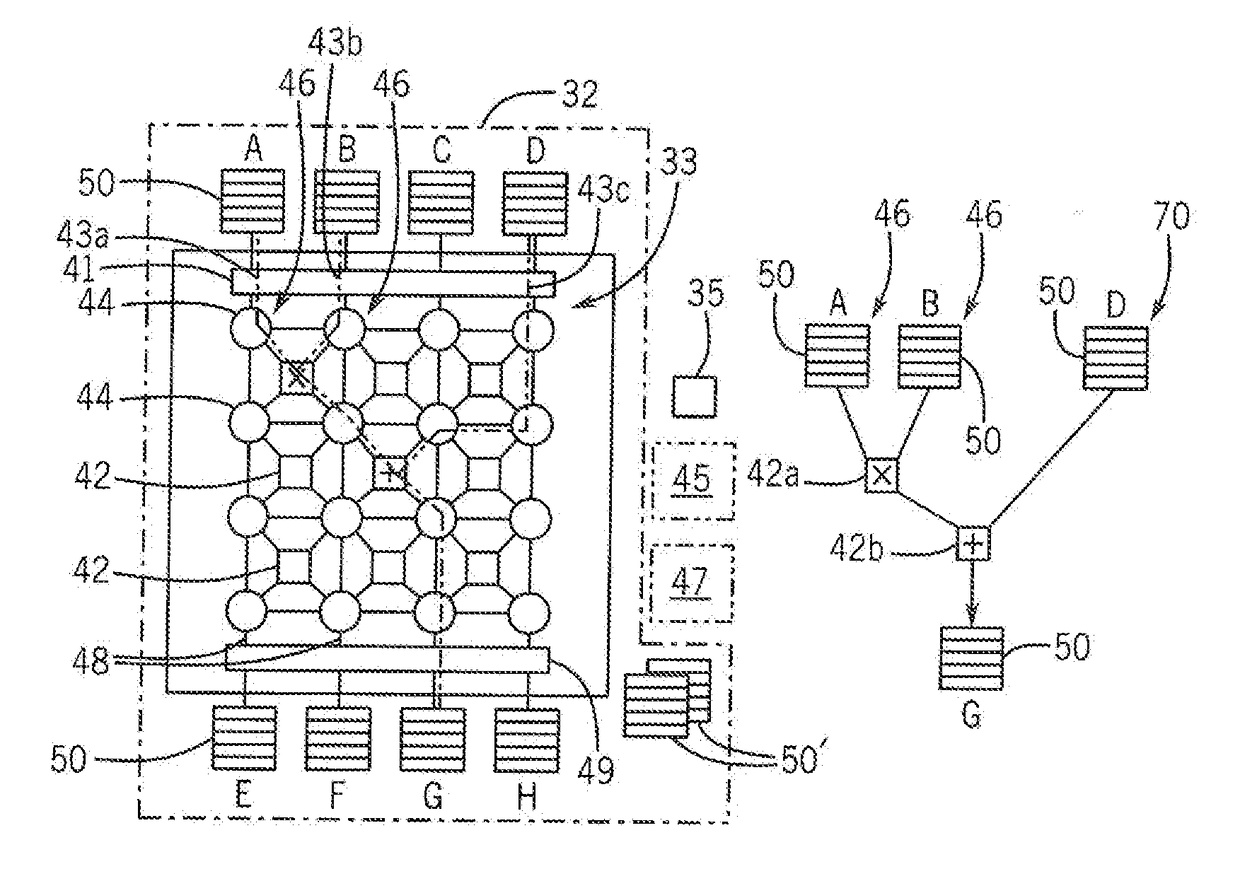

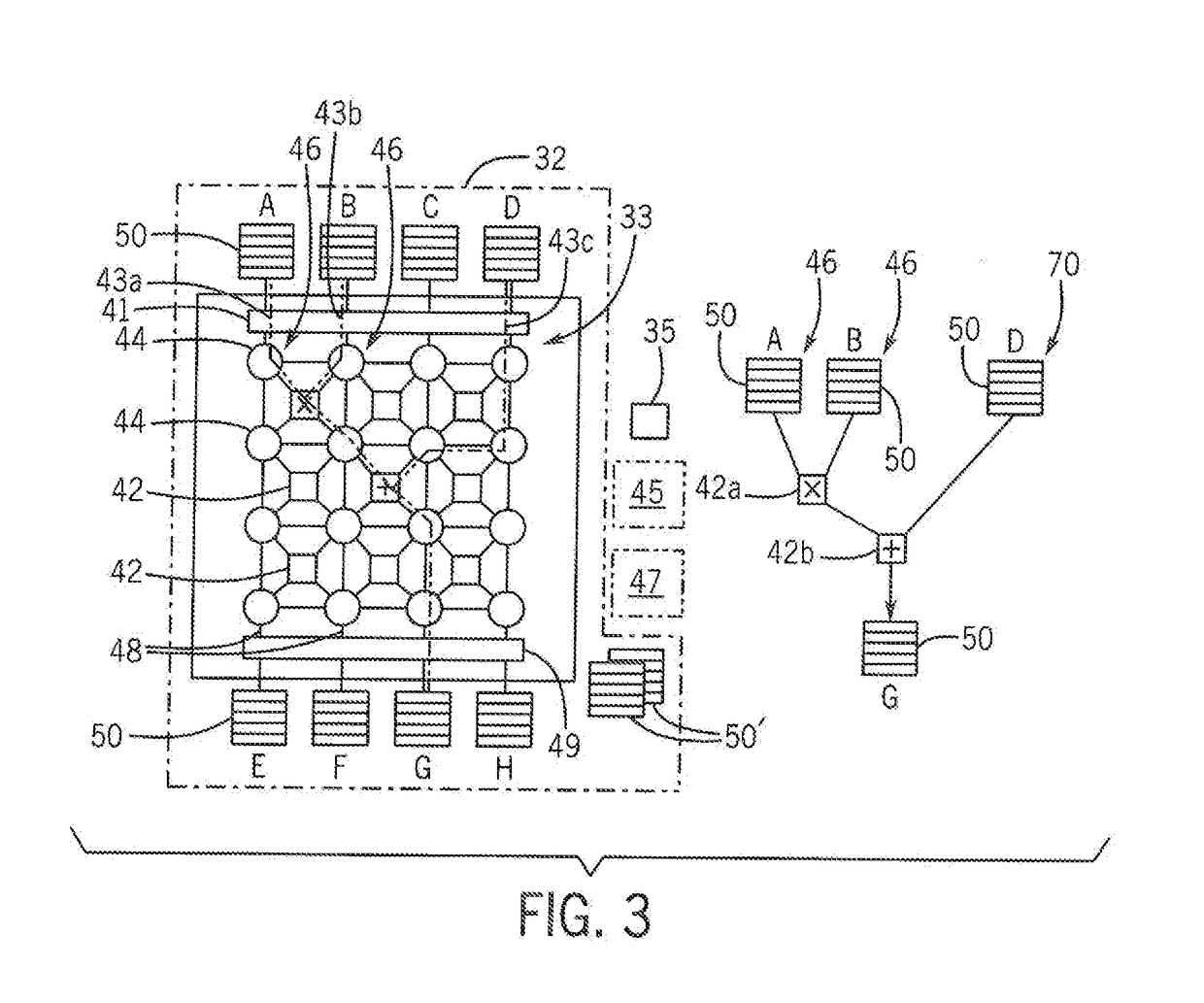

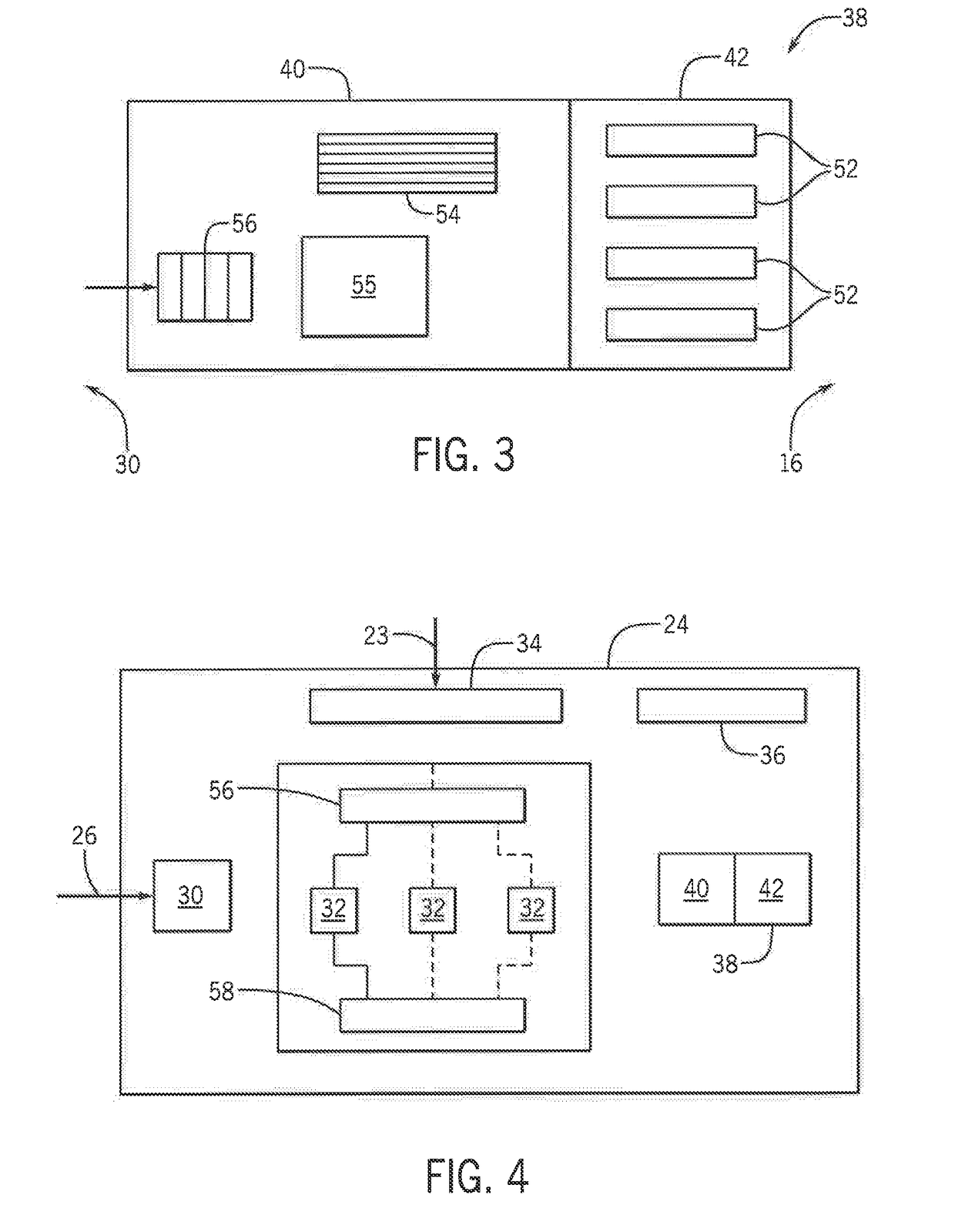

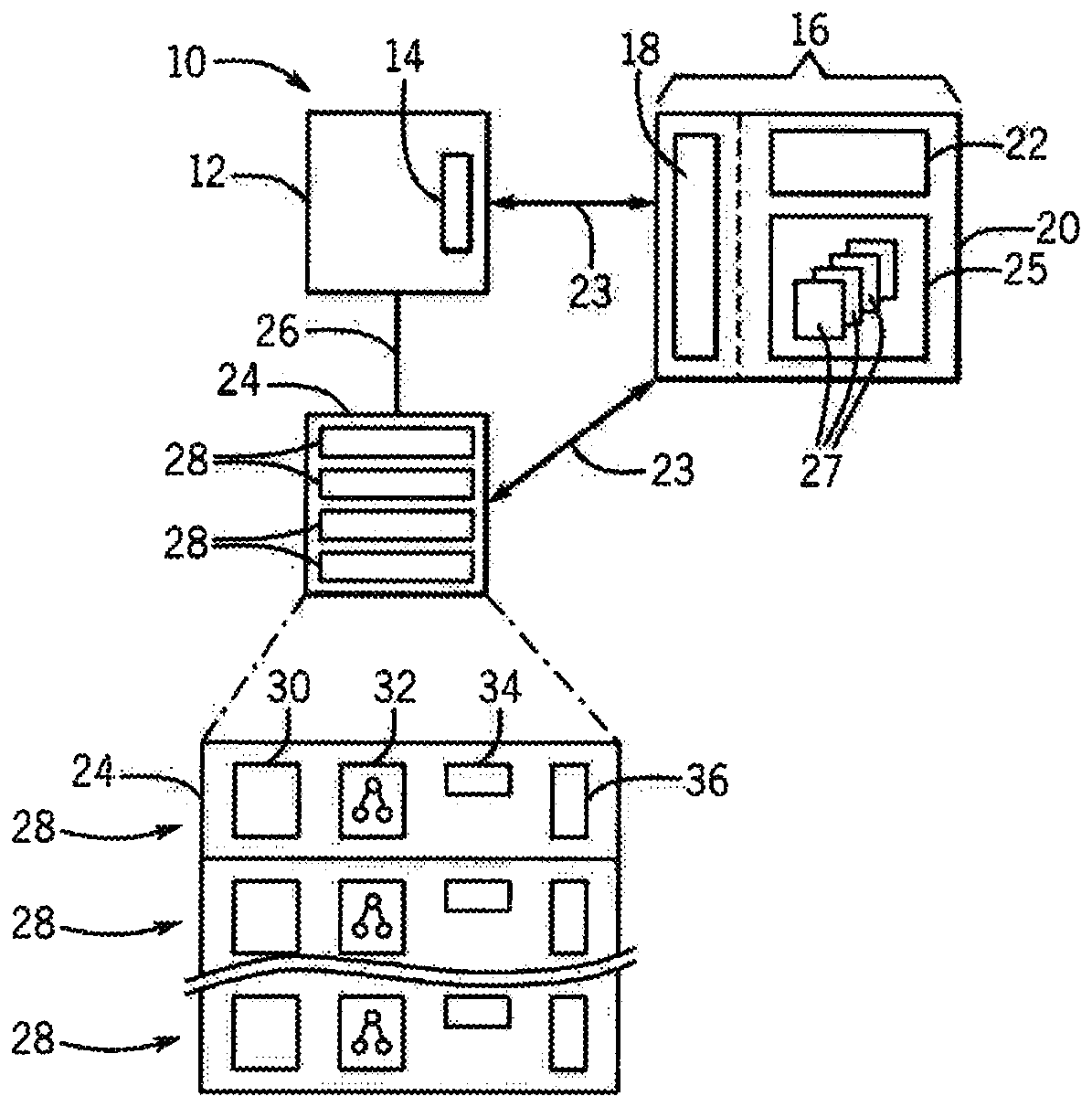

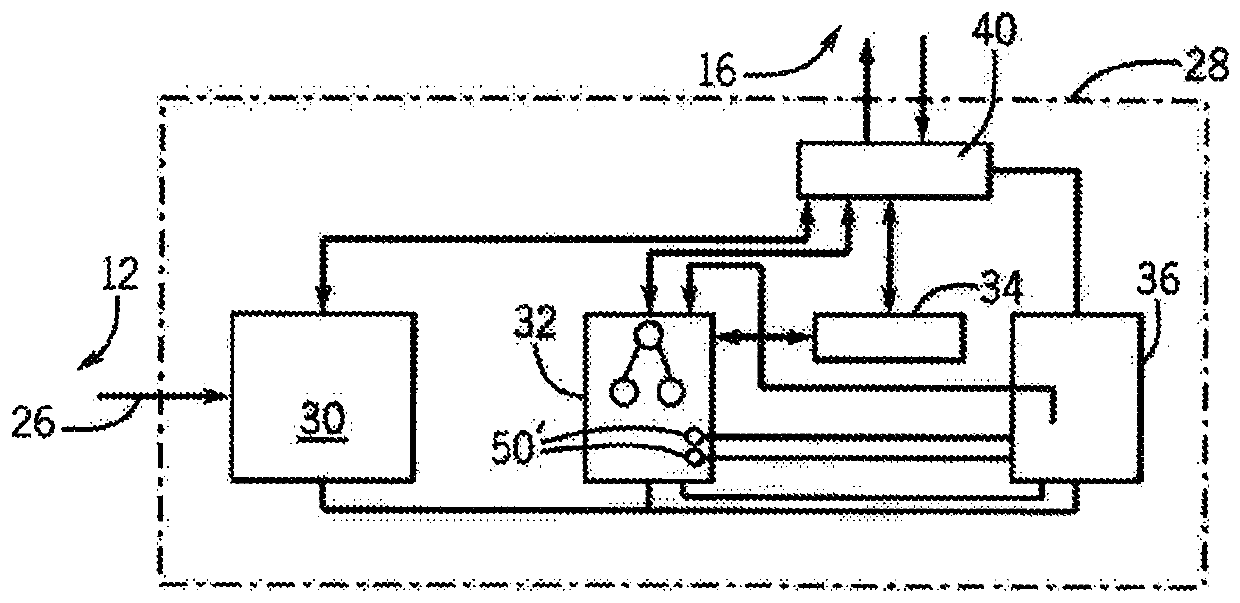

Multithreaded processor array with heterogeneous function blocks communicating tokens via self-routing switch fabrics

Active | US9158575B2 | Dataflow computers | Program initiation/switching | Self routing | Structure of Management Information

A shared resource multi-thread processor array wherein an array of heterogeneous function blocks is interconnected via a self-routing switch fabric, in which the individual function blocks have an associated switch port address. Each switch output port comprises a FIFO style memory that implements a plurality of separate queues. Thread queue empty flags are grouped using programmable circuit means to form self-synchronised threads. Data from different threads are passed to the various addressable function blocks in a predefined sequence in order to implement the desired function. The separate port queues allow data from different threads to share the same hardware resources, and the reconfiguration of switch fabric addresses further enables the formation of different data-paths, allowing the array to be configured for use in various applications.

Owner:SMITH GRAEME ROY

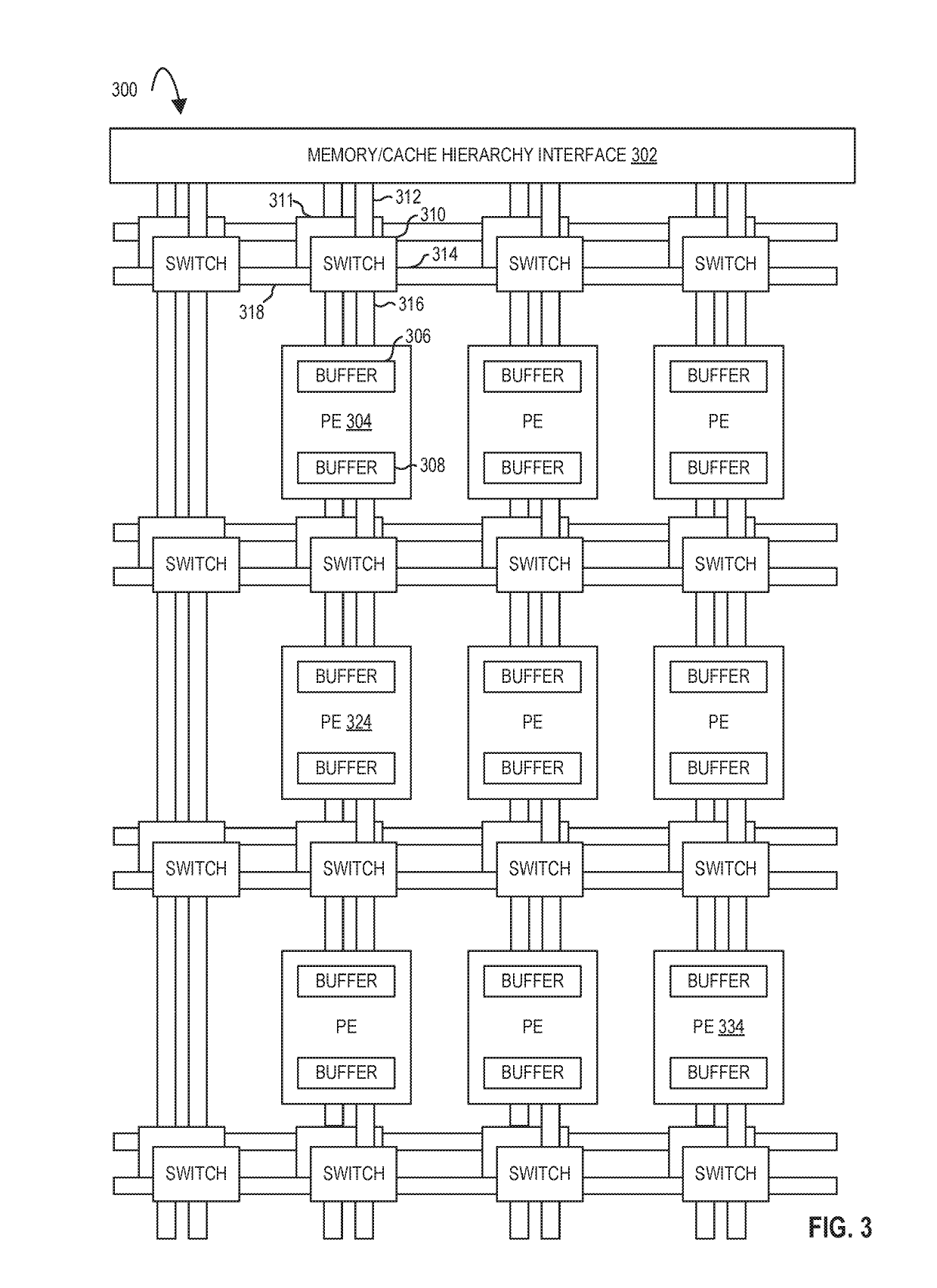

Processors and methods for pipelined runtime services in a spatial array

Active | US10467183B2 | Dataflow computers | Single instruction multiple data multiprocessors | Array data structure | Processing element

Methods and apparatuses relating to pipelined runtime services in spatial arrays are described. In one embodiment, a processor includes processing elements; an interconnect network between the processing elements; a first configuration controller coupled to a first subset of the processing elements; and a second configuration controller coupled to a second, different subset of the processing elements, the first configuration controller and the second configuration controller are to configure the first subset and the second, different subset according to configuration information for a first context, and, for a context switch, the first configuration controller is to configure the first subset according to configuration information for a second context after pending operations of the first context are completed in the first subset and block second context dataflow into the second, different subset's input from the first subset's output until pending operations of the first context are completed in the second, different subset.

Owner:INTEL CORP

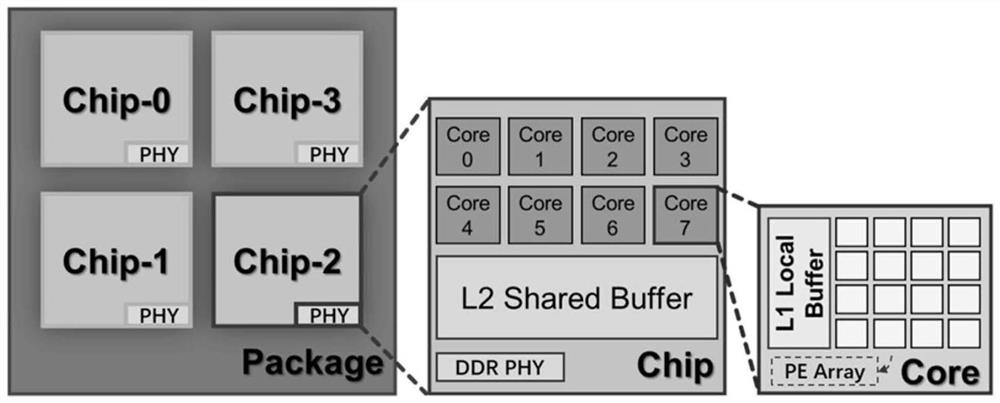

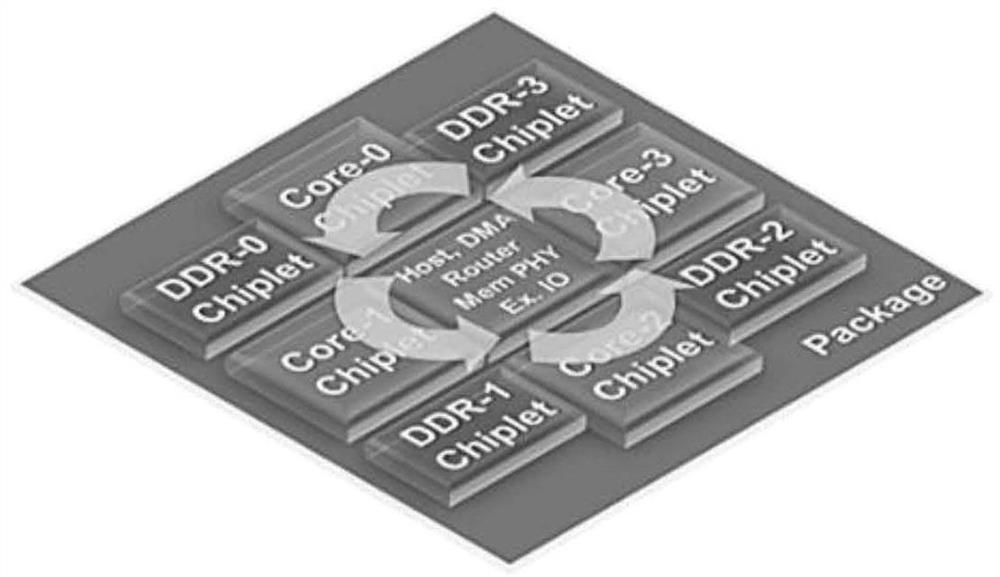

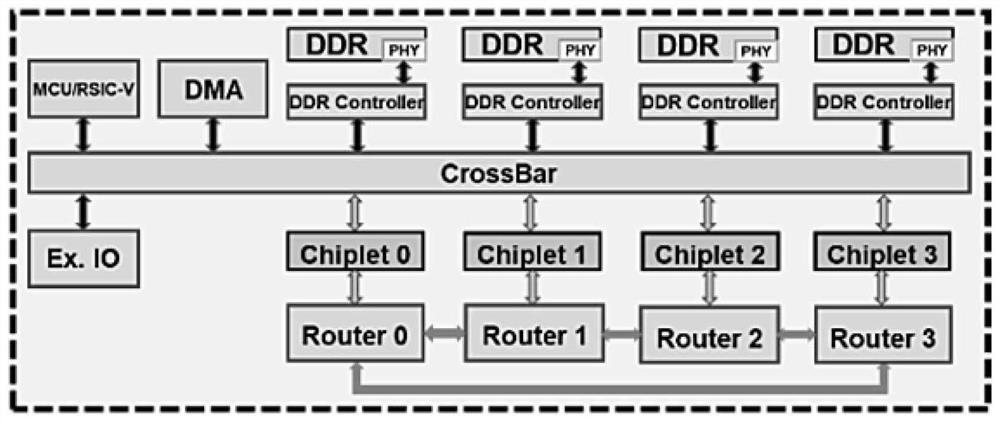

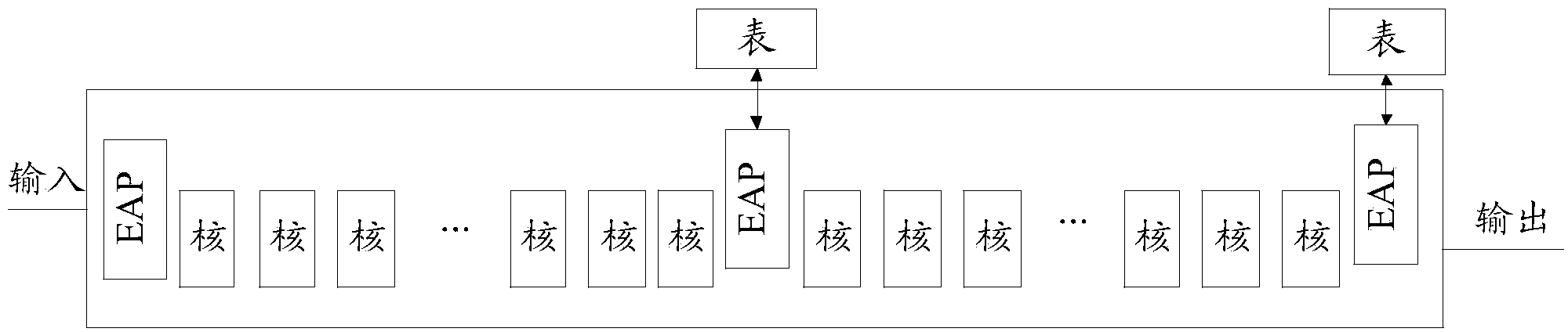

Multi-core package-level system based on core particle framework and task mapping method thereof for core particles

Pending | CN112149369A | Reduce occupancy | Improve processing efficiency | Dataflow computers | CAD circuit design | Computational science | Dram memory

The invention discloses a multi-core package-level system based on a core particle (chiplet) framework and a task mapping method for core particles. The system comprises a core unit, a core particle unit and a packaging unit; the core unit comprises a plurality of parallel processing units and an L1 local buffer unit shared by the plurality of processing units; the L1 local buffer unit is only used for storing weight data; the core particle unit comprises a plurality of parallel core units and an L2 shared buffer unit shared by the plurality of core units; the L2 shared buffer unit is only used for storing activation data; the packaging unit includes a plurality of parallel and interconnected core particle units, and a DRAM memory shared by the plurality of core particle units. According to the method, a scheme search is carried out through core particle (chiplet) calculation mapping, calculation mapping between core particles, a data distribution template of PE array calculation mapping within the core particles, and scale distribution of the calculation of each layer, so that less inter-chip communication, less on-chip storage and less DRAM access are achieved.

Owner:INST FOR INTERDISCIPLINARY INFORMATION CORE TECH XIAN CO LTD

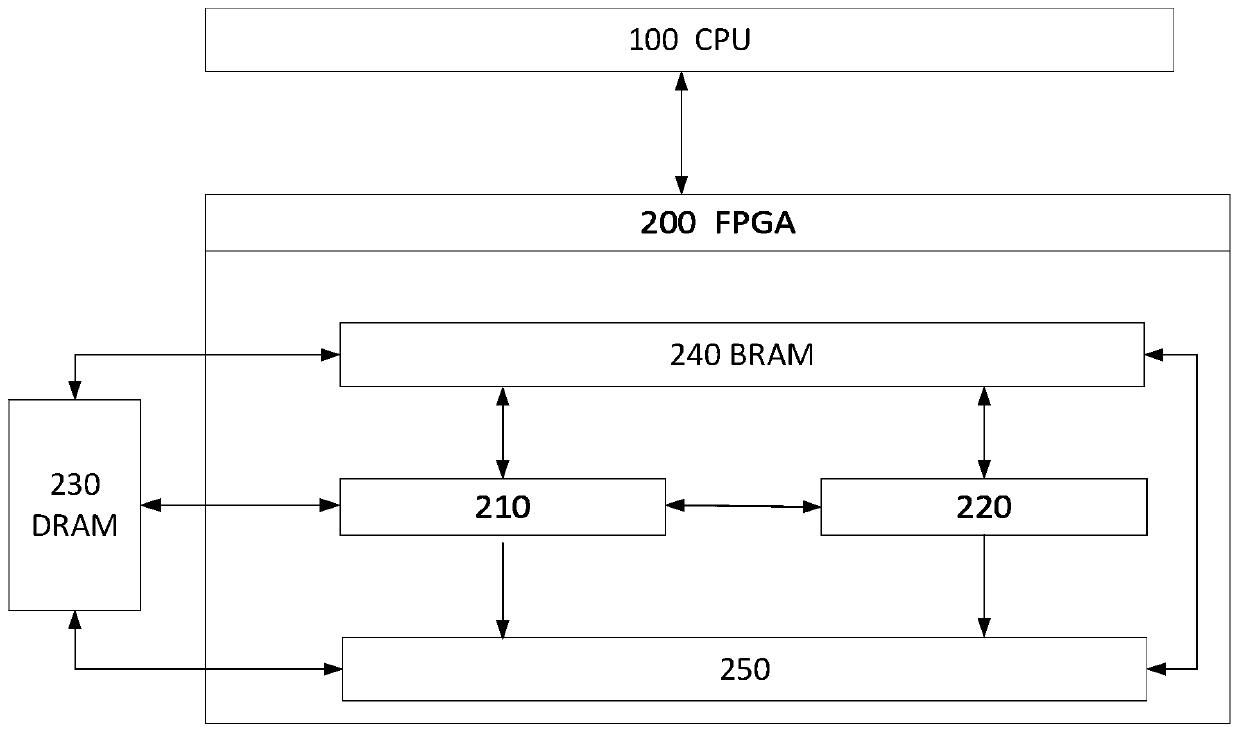

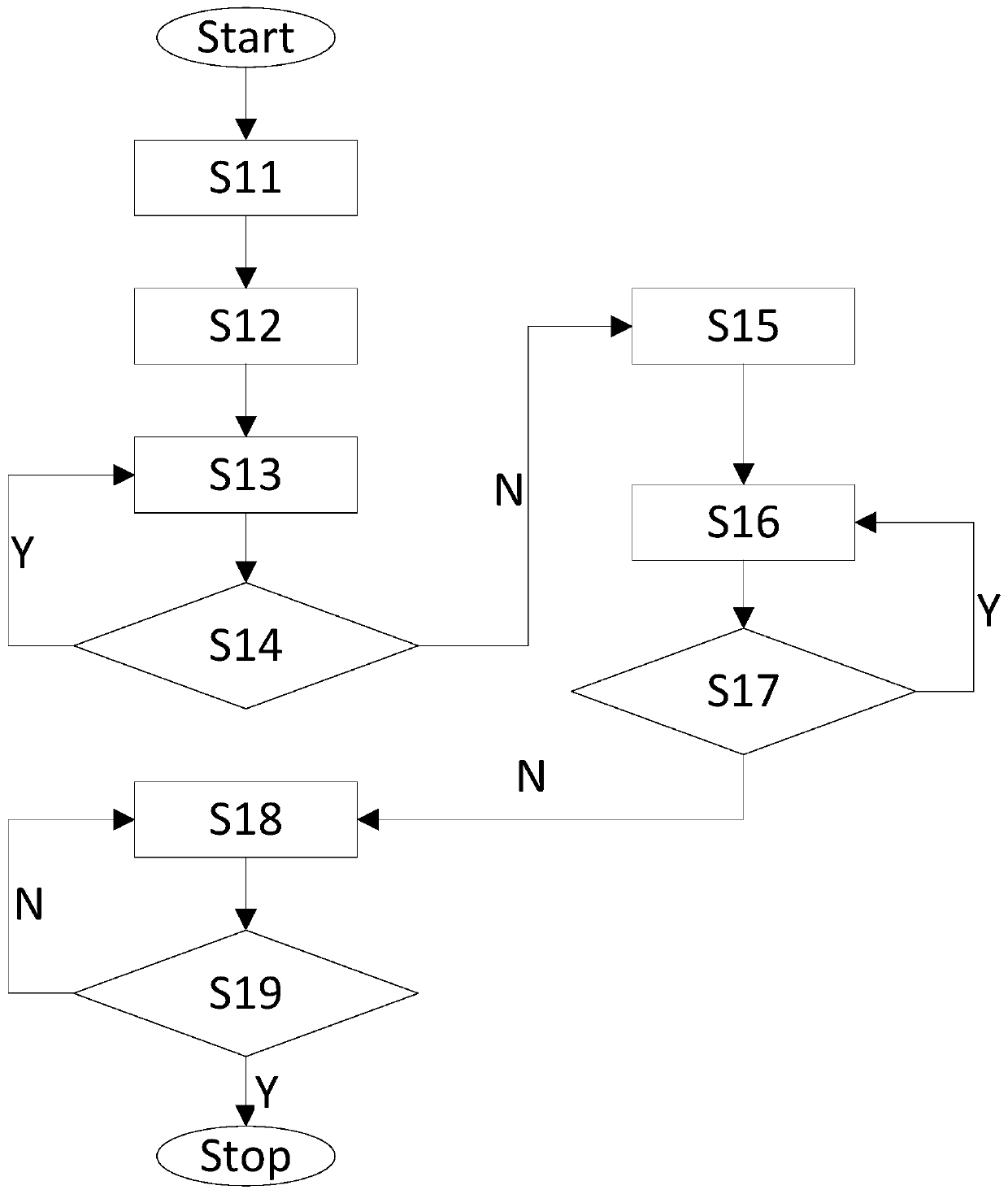

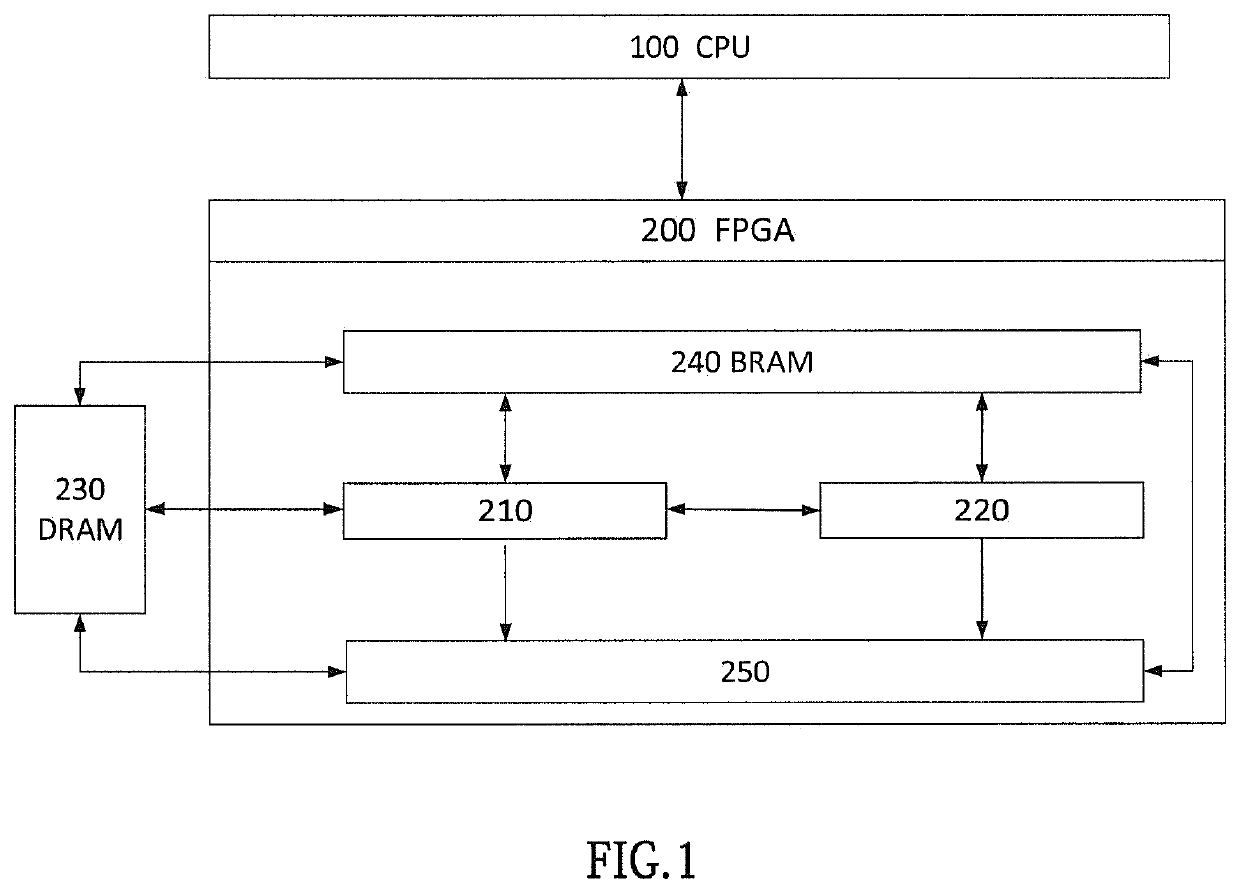

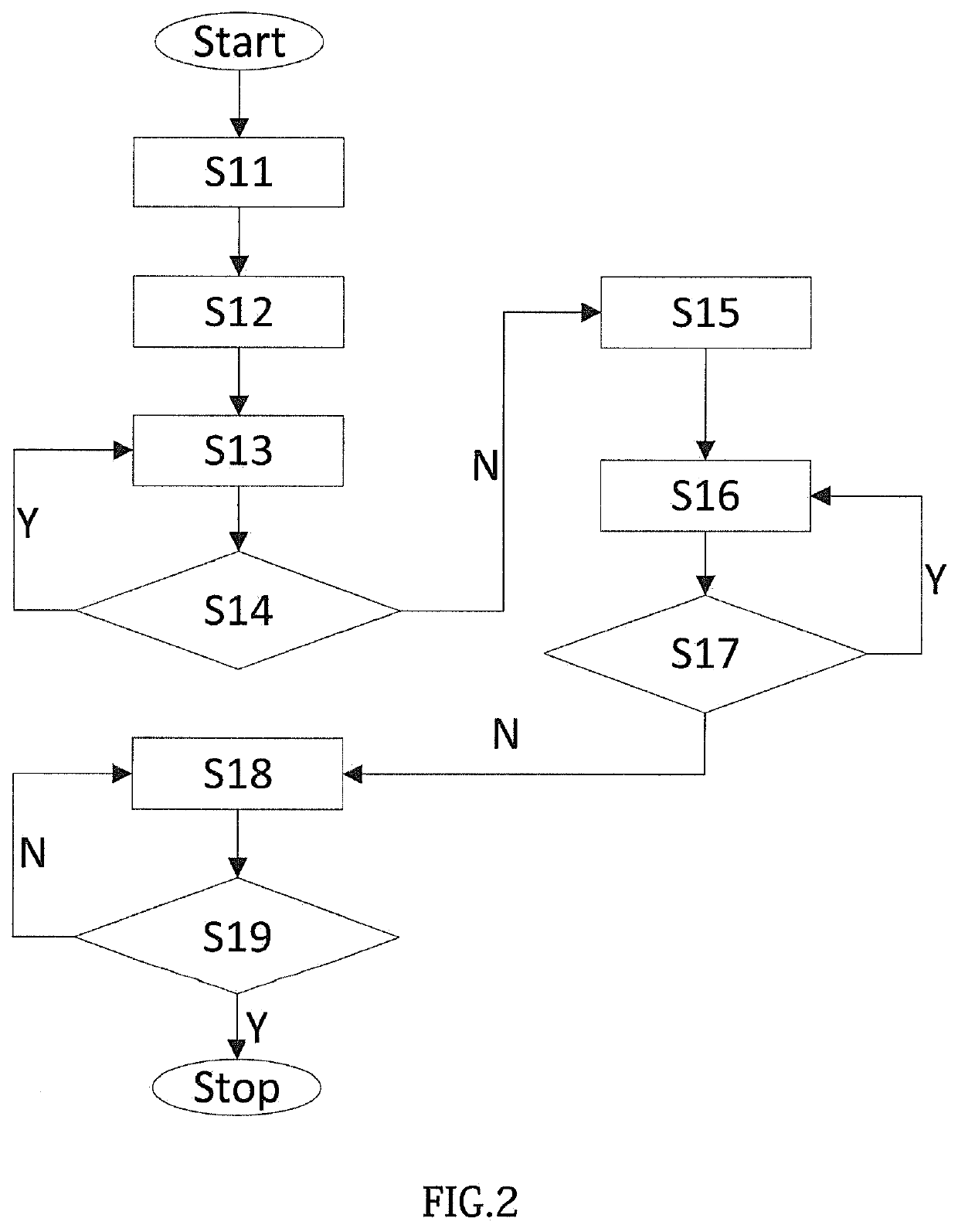

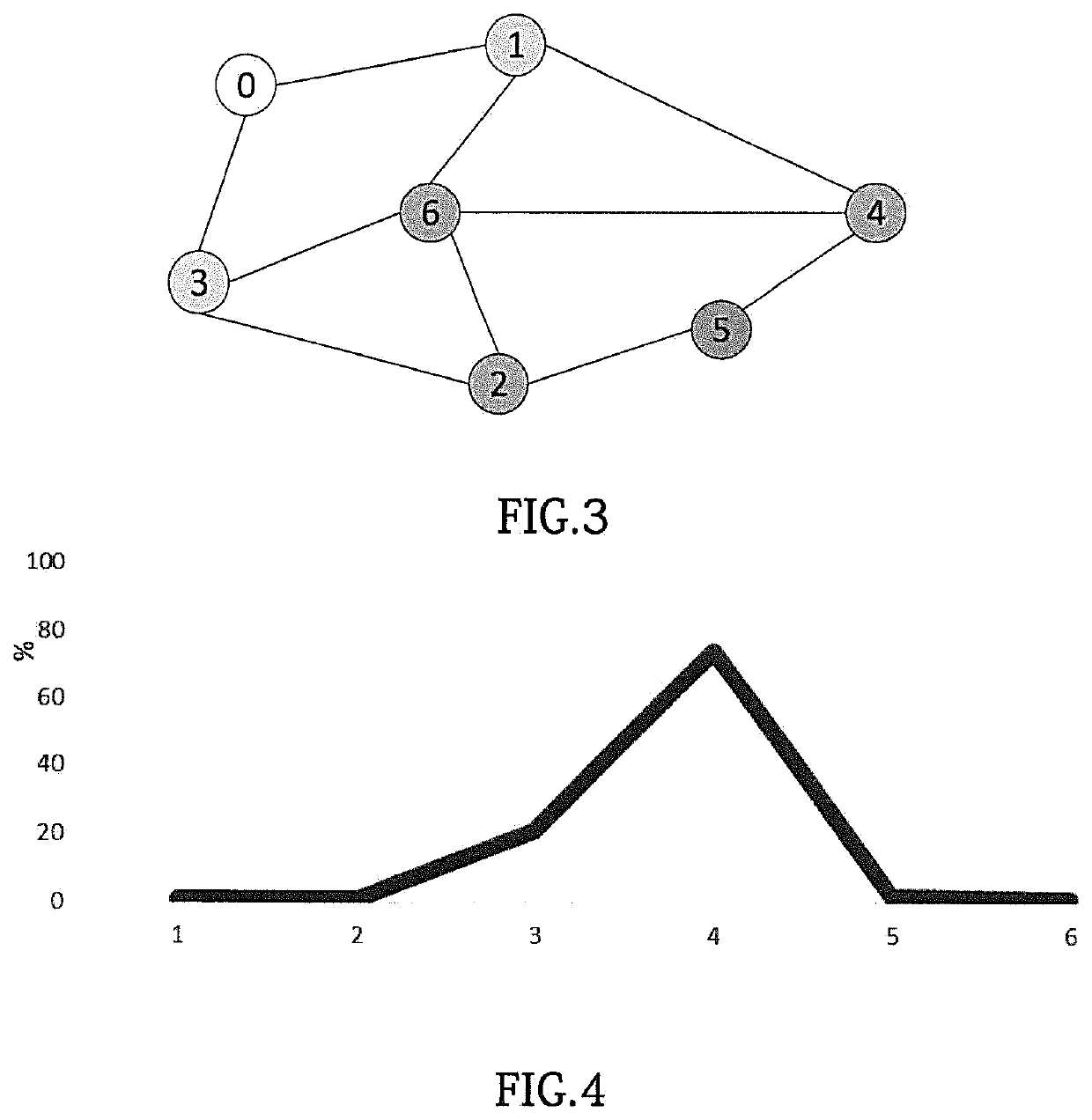

FPGA-based graph data processing method and system

Active | CN109785224A | Avoid designing complex control operations | Avoid huge time overhead | Dataflow computers | Processor architectures/configuration | Computer hardware | Graph traversal

The invention relates to an FPGA-based graph data processing method and system. The method is used for carrying out graph traversal on a graph with small-world network characteristics. The method comprises the following steps: obtaining a sample; and carrying out graph traversal by using a first processor and a second processor in communication connection with the first processor, where the first processor is a CPU and the second processor is an FPGA. The first processor transmits the graph data to be traversed to the second processor, and obtains the result data of the graph traversal for result output after the second processor completes the graph traversal of the graph data in a level-order traversal mode. The second processor comprises a low-peak processing module and a high-peak processing module, which respectively use on-chip logic resources in different areas of the second processor. The high-peak processing module has higher parallelism than the low-peak processing module; the low-peak processing module carries out graph traversal in a starting stage and/or an ending stage, and the high-peak processing module carries out graph traversal in an intermediate stage.

Owner:HUAZHONG UNIV OF SCI & TECH

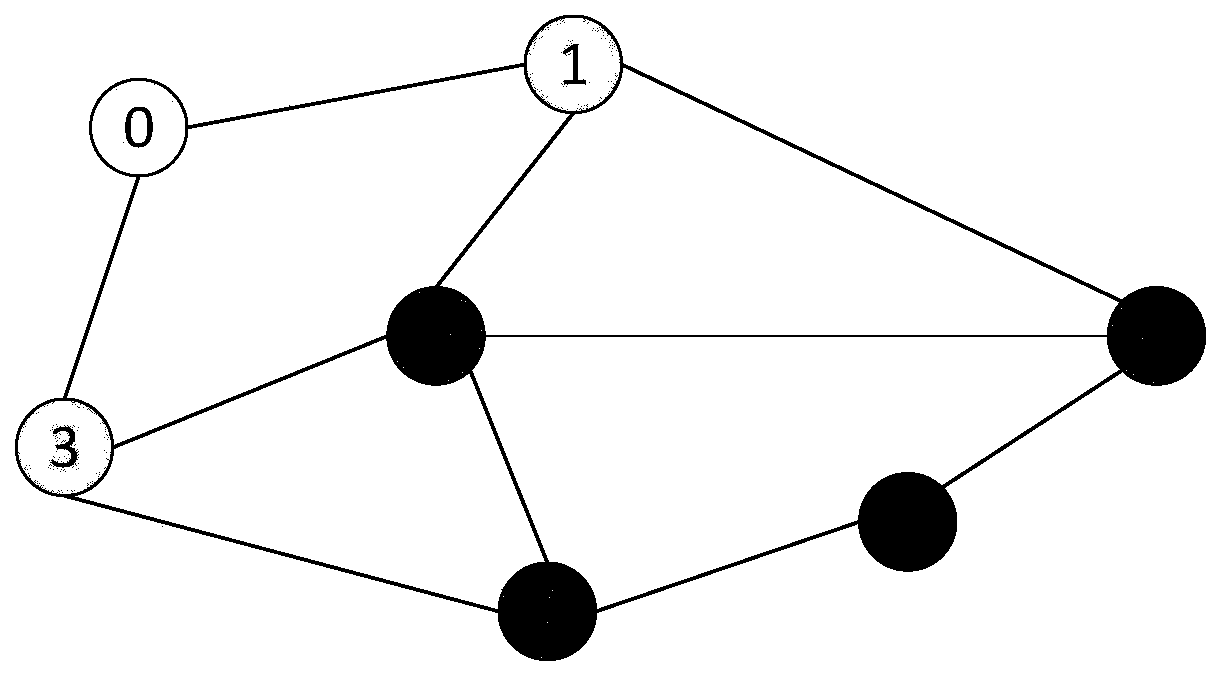

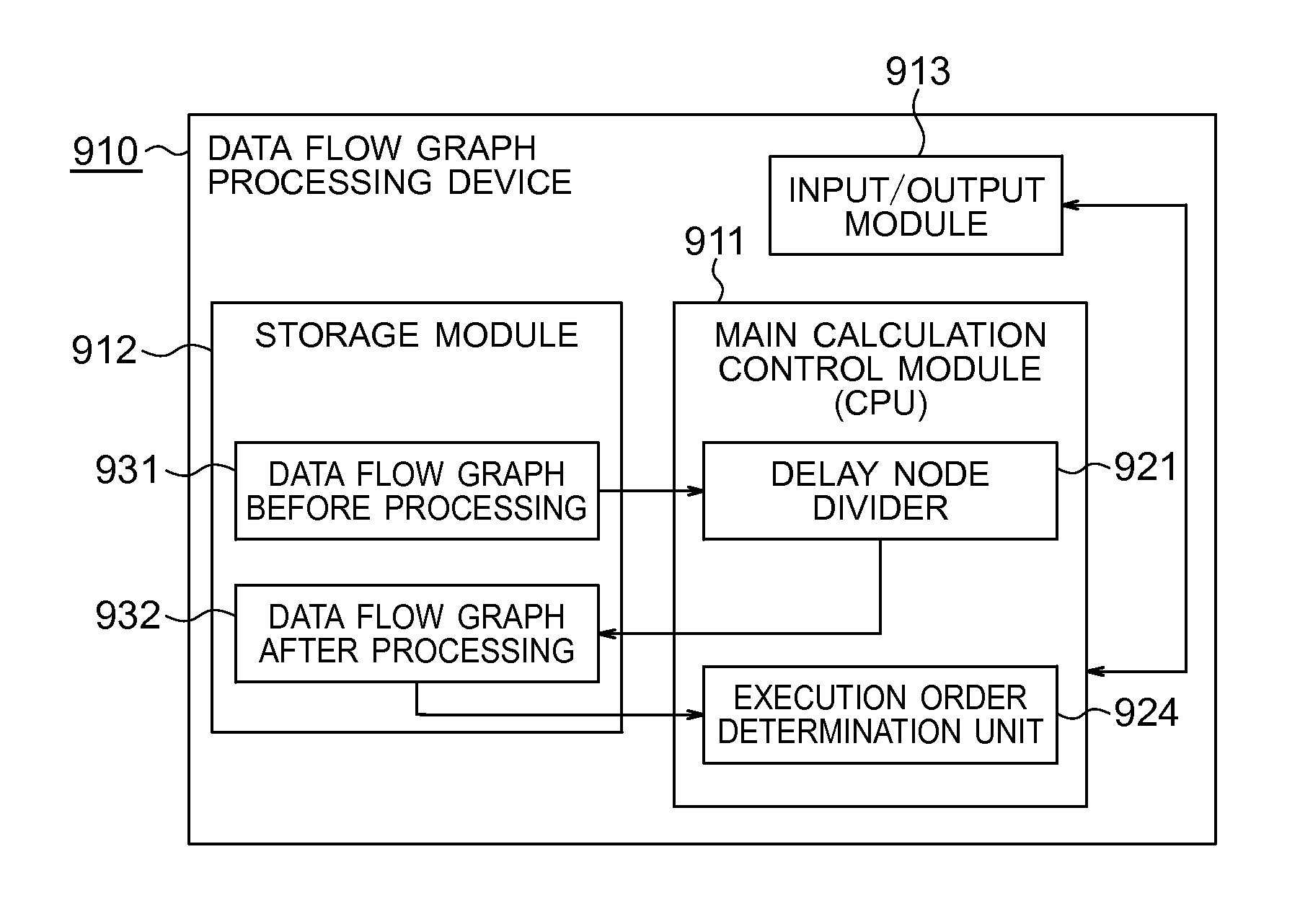

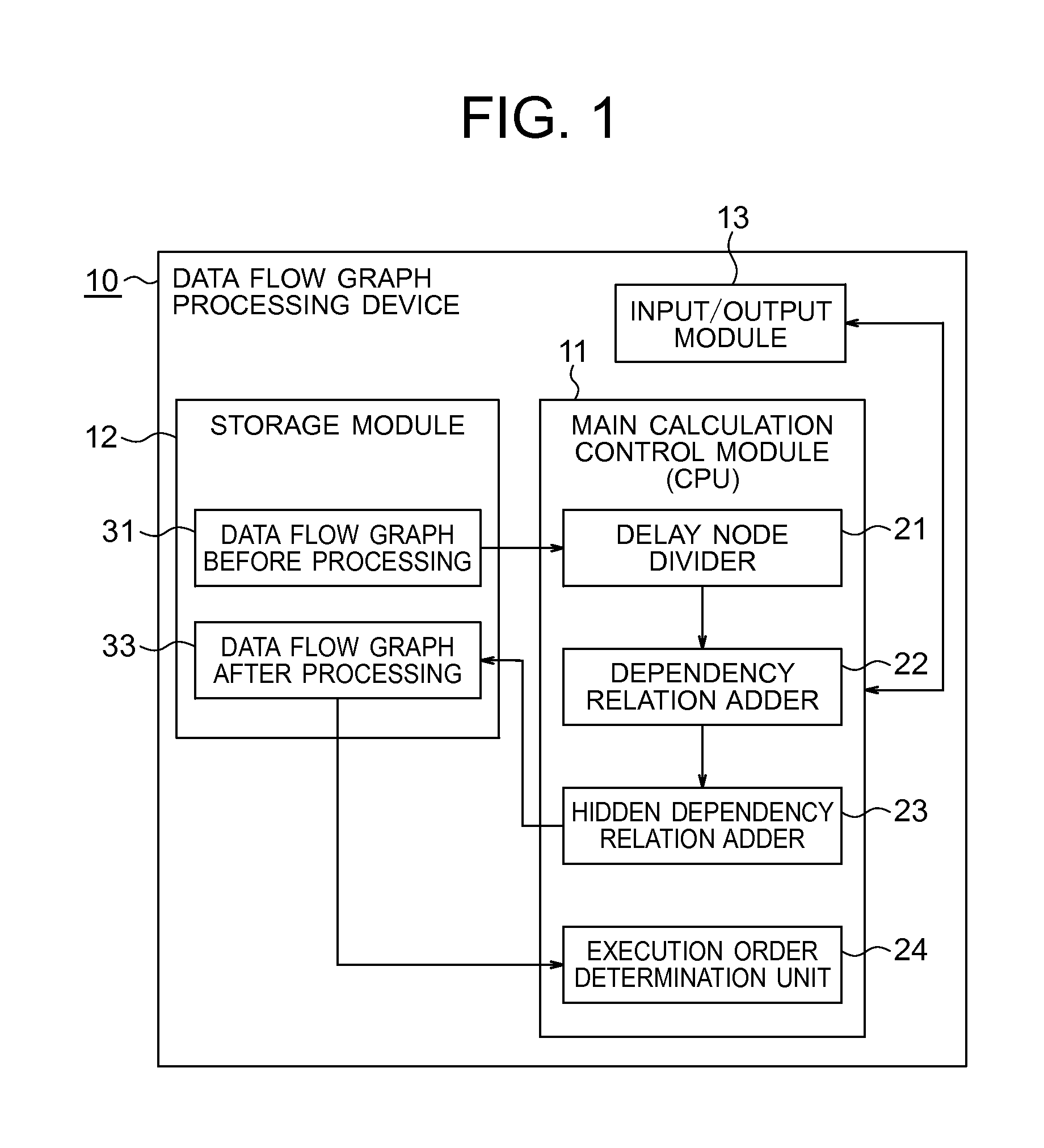

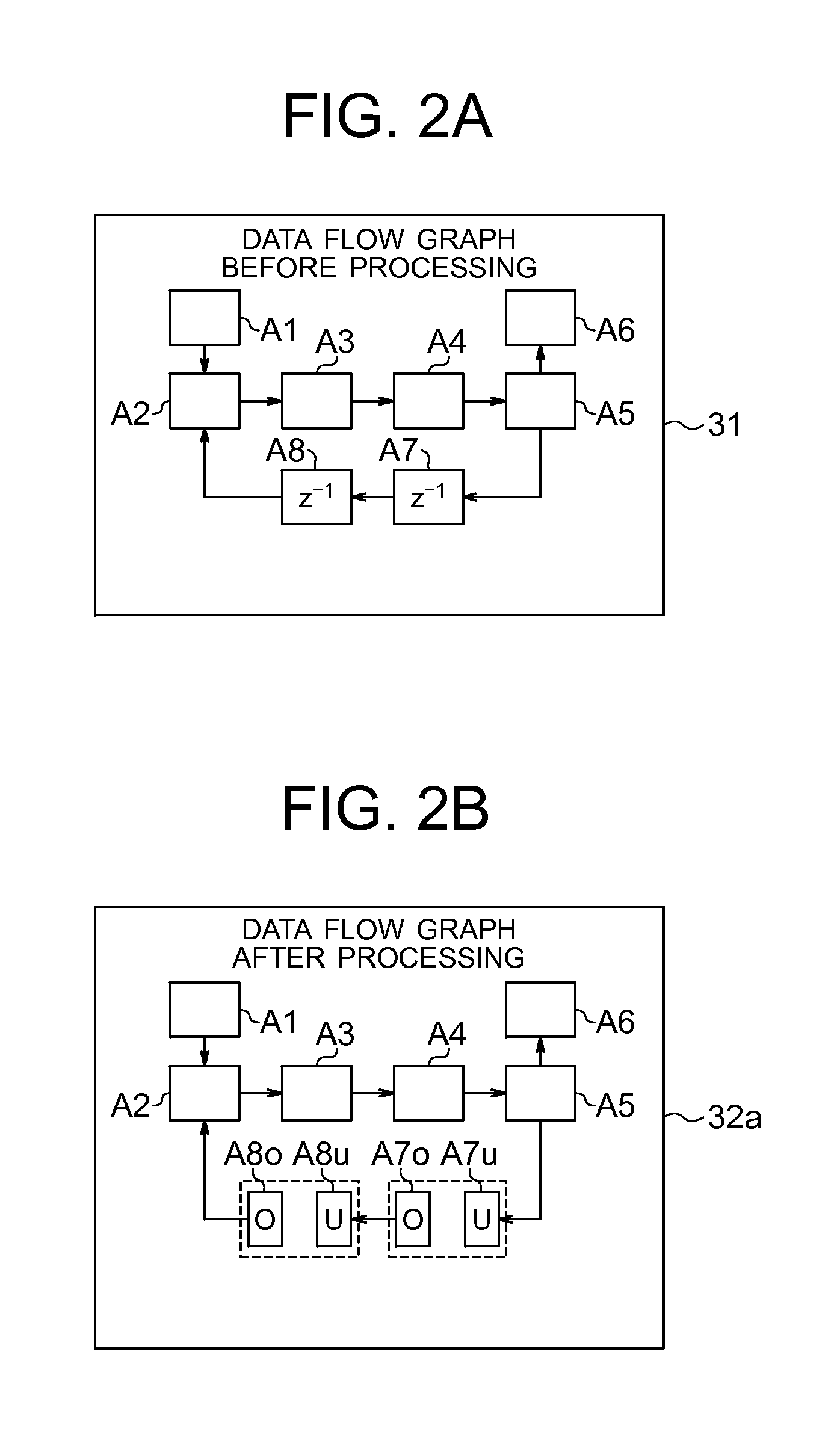

Data flow graph processing device, data flow graph processing method, and data flow graph processing program

A data flow graph processing device that transforms a data flow graph including a loop structure into a pipeline operation capable of determining node execution order and judging whether or not nodes are executable, comprises: a delay node divider that divides a delay node included in the data flow graph into a value update node and a value output node; a dependency relation adder that adds dependency relations from the start node of the data flow graph to the value output node; and a hidden dependency relation adder that adds hidden dependency relations, indicating previous iteration and current iteration dependencies, from the value update node to the value output node.

Owner:NEC CORP
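The delay-node split can be sketched on a small accumulator loop. This is one reading of the abstract, not the patented procedure: the function names, the `.out` and `.update` suffixes, and the use of `graphlib.TopologicalSorter` (Python 3.9+) to derive an execution order are all assumptions.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def split_delay_nodes(edges, delay_nodes, start):
    """Split each delay node into value-output and value-update halves.

    edges is a list of (src, dst) pairs. Returns (acyclic_edges, hidden_edges).
    """
    acyclic, hidden = [], []
    for src, dst in edges:
        # Edges leaving a delay node now leave its value-output half;
        # edges entering it now enter its value-update half.
        s = f"{src}.out" if src in delay_nodes else src
        d = f"{dst}.update" if dst in delay_nodes else dst
        acyclic.append((s, d))
    for node in sorted(delay_nodes):
        acyclic.append((start, f"{node}.out"))            # start -> value output
        hidden.append((f"{node}.update", f"{node}.out"))  # previous -> current iteration
    return acyclic, hidden


def execution_order(acyclic_edges):
    """Topologically sort the within-iteration dependencies."""
    sorter = TopologicalSorter()
    for src, dst in acyclic_edges:
        sorter.add(dst, src)   # dst depends on src
    return list(sorter.static_order())


# Accumulator loop: add -> delay -> add forms a cycle in the original graph.
edges = [("start", "in"), ("in", "add"), ("delay", "add"),
         ("add", "delay"), ("add", "out")]
acyclic, hidden = split_delay_nodes(edges, {"delay"}, "start")
order = execution_order(acyclic)
```

After the split the within-iteration graph is acyclic, so an execution order exists. The hidden edges record that an iteration's value output depends on the previous iteration's value update.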

Processors, methods, and systems with a configurable spatial accelerator

Systems, methods, and apparatuses relating to a configurable spatial accelerator are described. In one embodiment, a processor includes a synchronizer circuit coupled between an interconnect network of a first tile and an interconnect network of a second tile and comprising storage to store data to be sent between the interconnect network of the first tile and the interconnect network of the second tile, the synchronizer circuit to convert the data from the storage between a first voltage or a first frequency of the first tile and a second voltage or a second frequency of the second tile to generate converted data, and send the converted data between the interconnect network of the first tile and the interconnect network of the second tile.

Owner:INTEL CORP

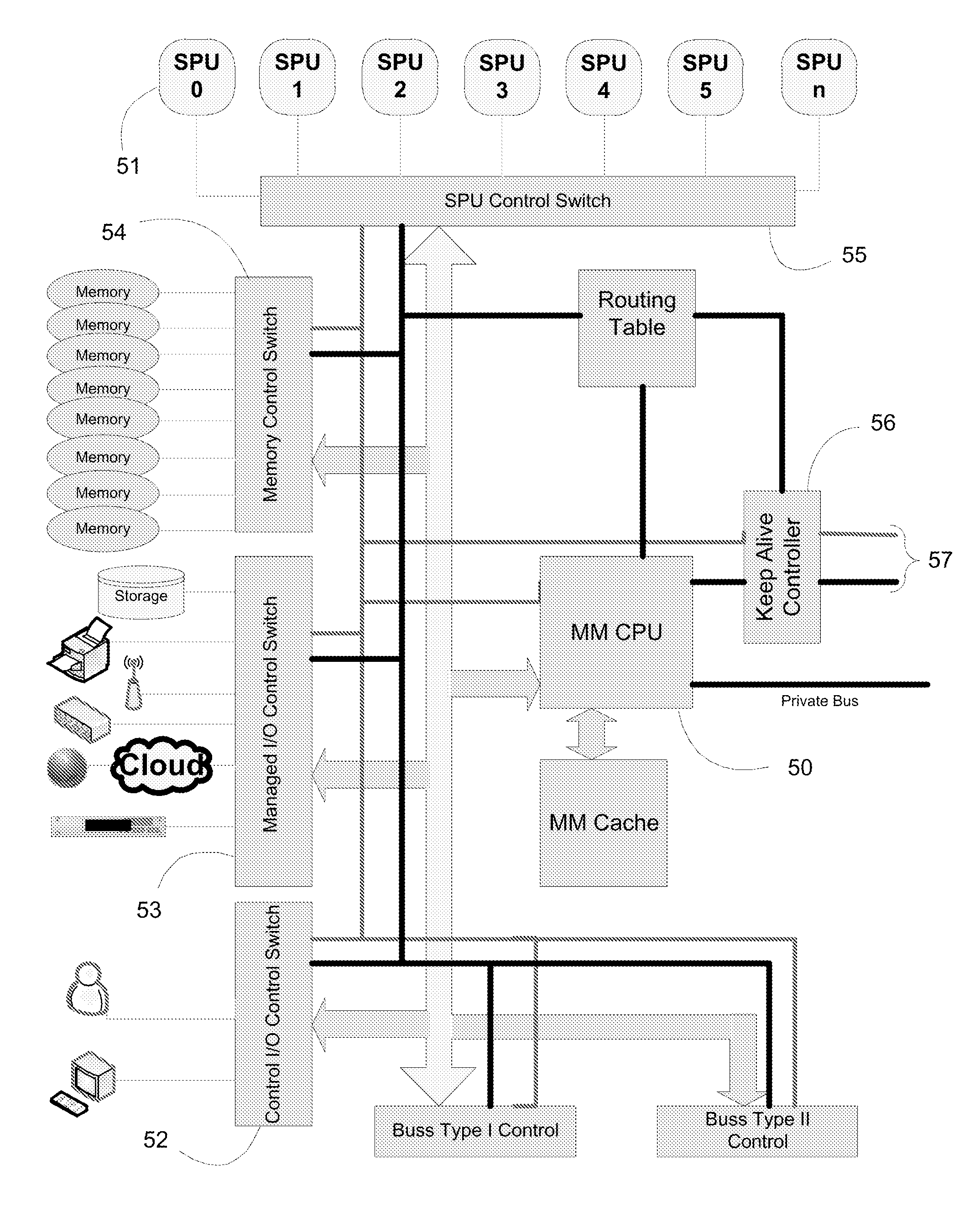

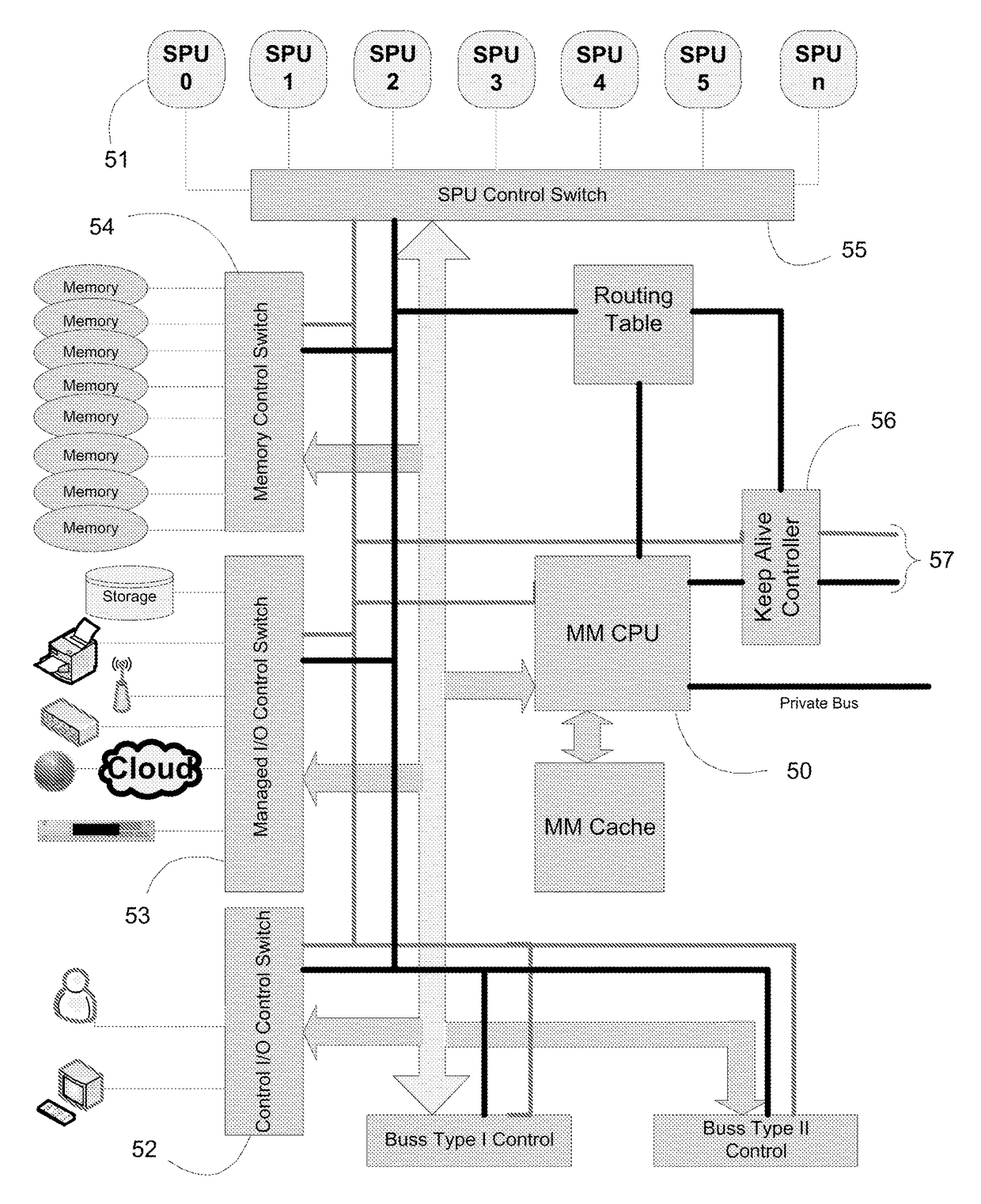

Dynamically Erectable Computer System

Active | US20150143082A1 | Memory architecture accessing/allocation | Dataflow computers | Virtualization | Computer architecture

A fault-tolerant computer system architecture includes two types of operating domains: a conventional first domain (DID) that processes data and instructions, and a novel second domain which includes mentor processors for mentoring the DID according to “meta information” which includes but is not limited to data, algorithms and protective rule sets. The term “mentoring” (as defined herein below) refers to, among other things, applying and using meta information to enforce rule sets and/or dynamically erecting abstractions and virtualizations by which resources in the DID are shuffled around for, inter alia, efficiency and fault correction. Meta Mentor processors create systems and sub-systems by means of fault tolerant mentor switches that route signals to and from hardware and software entities. The systems and sub-systems created are distinct sub-architectures and unique configurations that may be operated separately or concurrently as defined by the executing processes.

Owner:SMITH ROGER A

Social Network Site Recommender System & Method

Active | US20110289171A1 | Dataflow computers | Multiple digital computer combinations | Web site | Document preparation

A document management system monitors proposed recipients for documents and provides recommendations on alterations to the distribution set, such as by adding or removing recipients.

Owner:META PLATFORMS INC

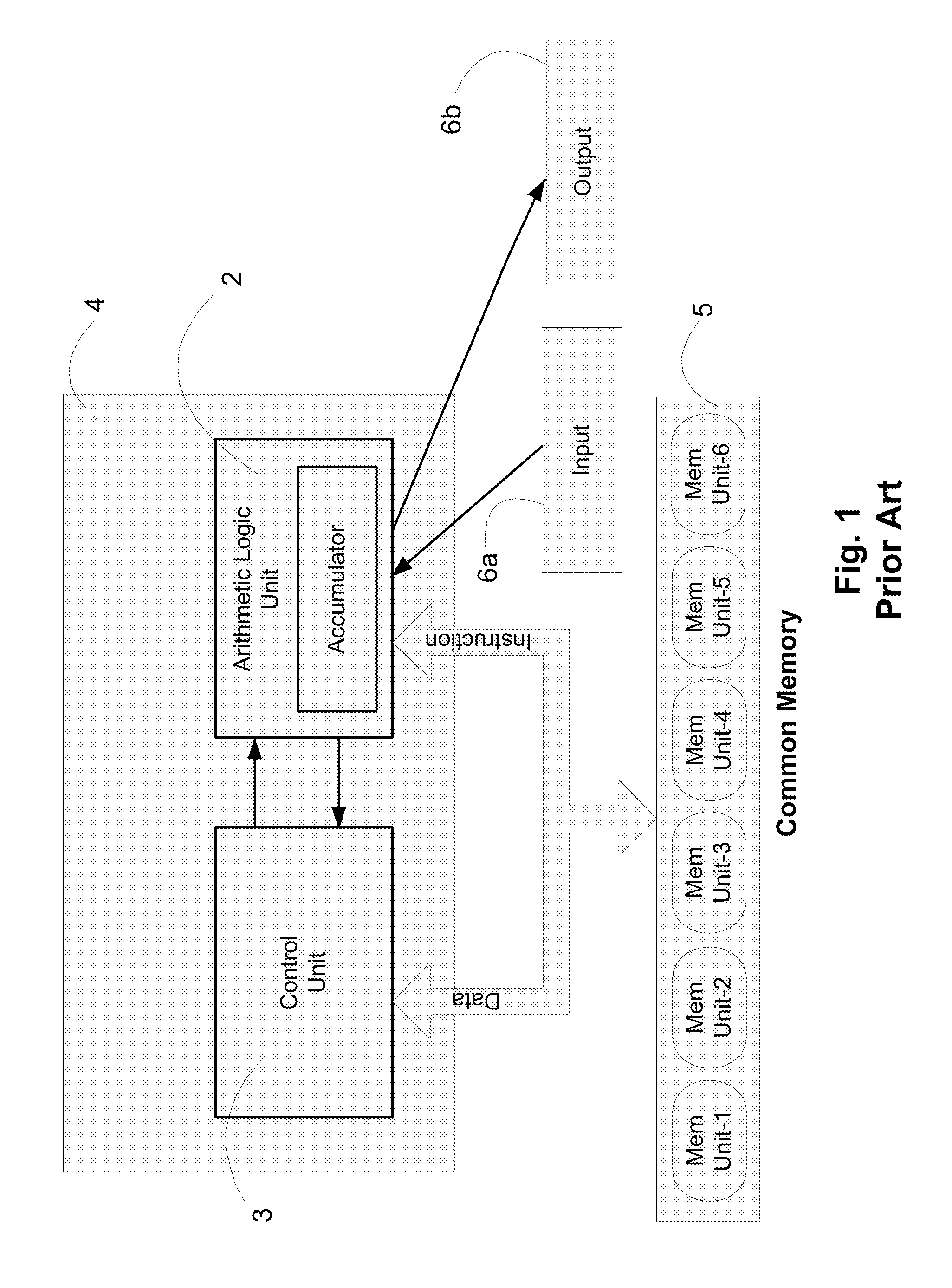

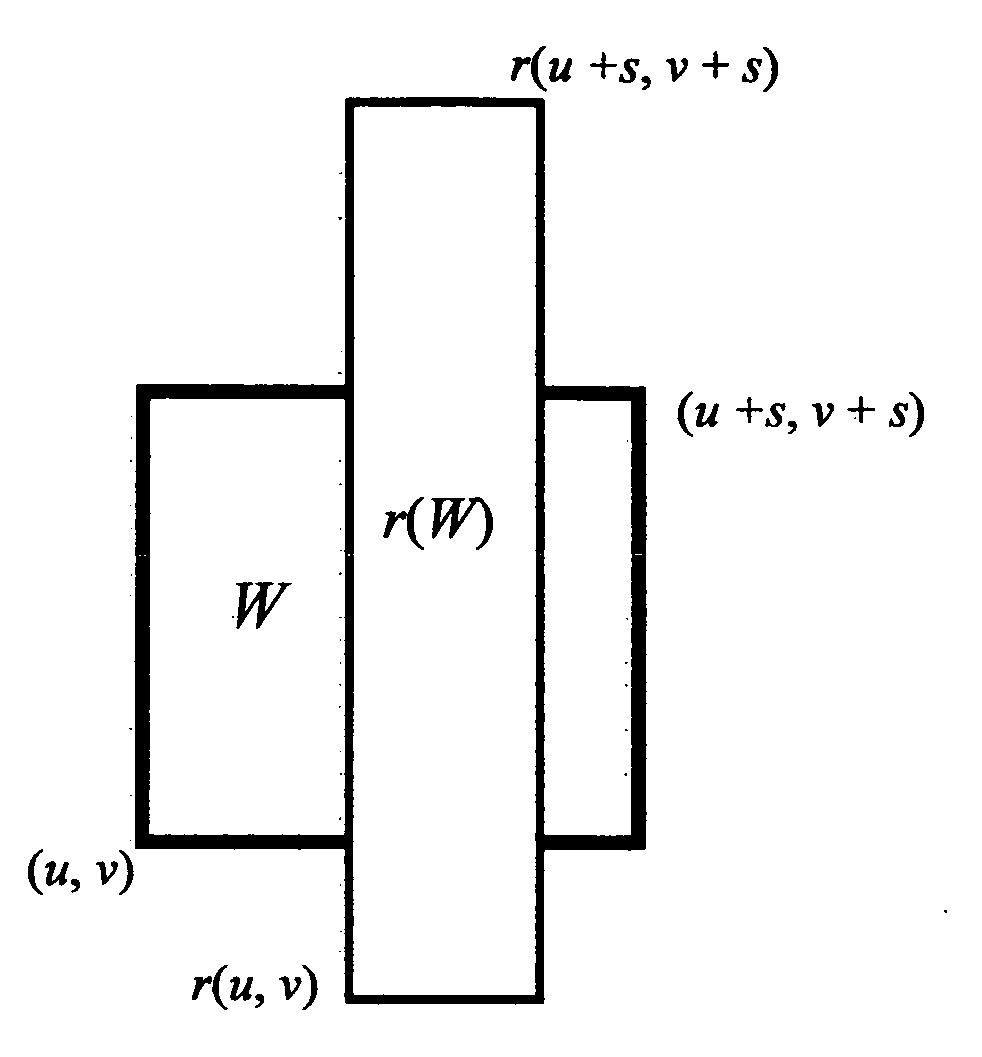

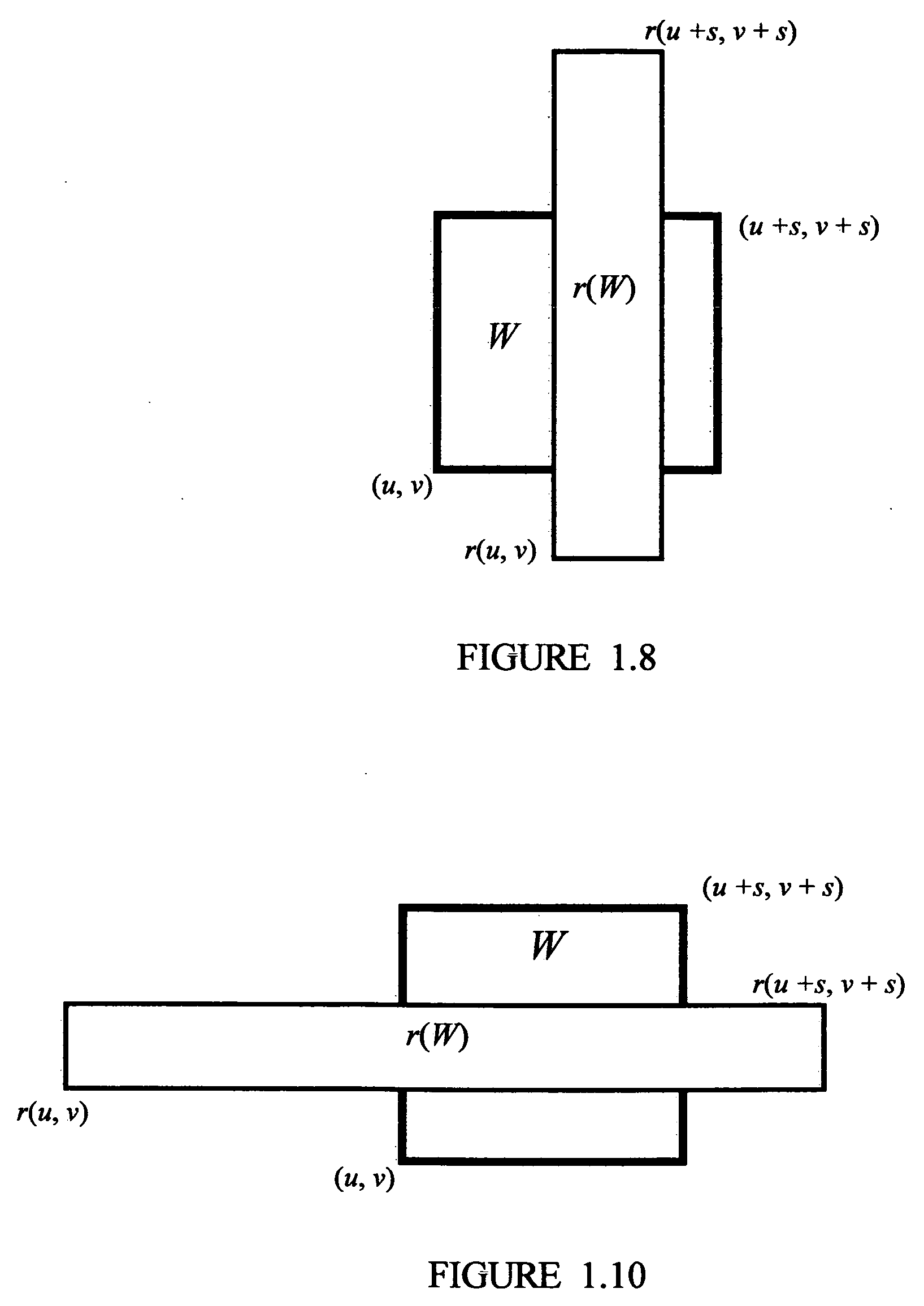

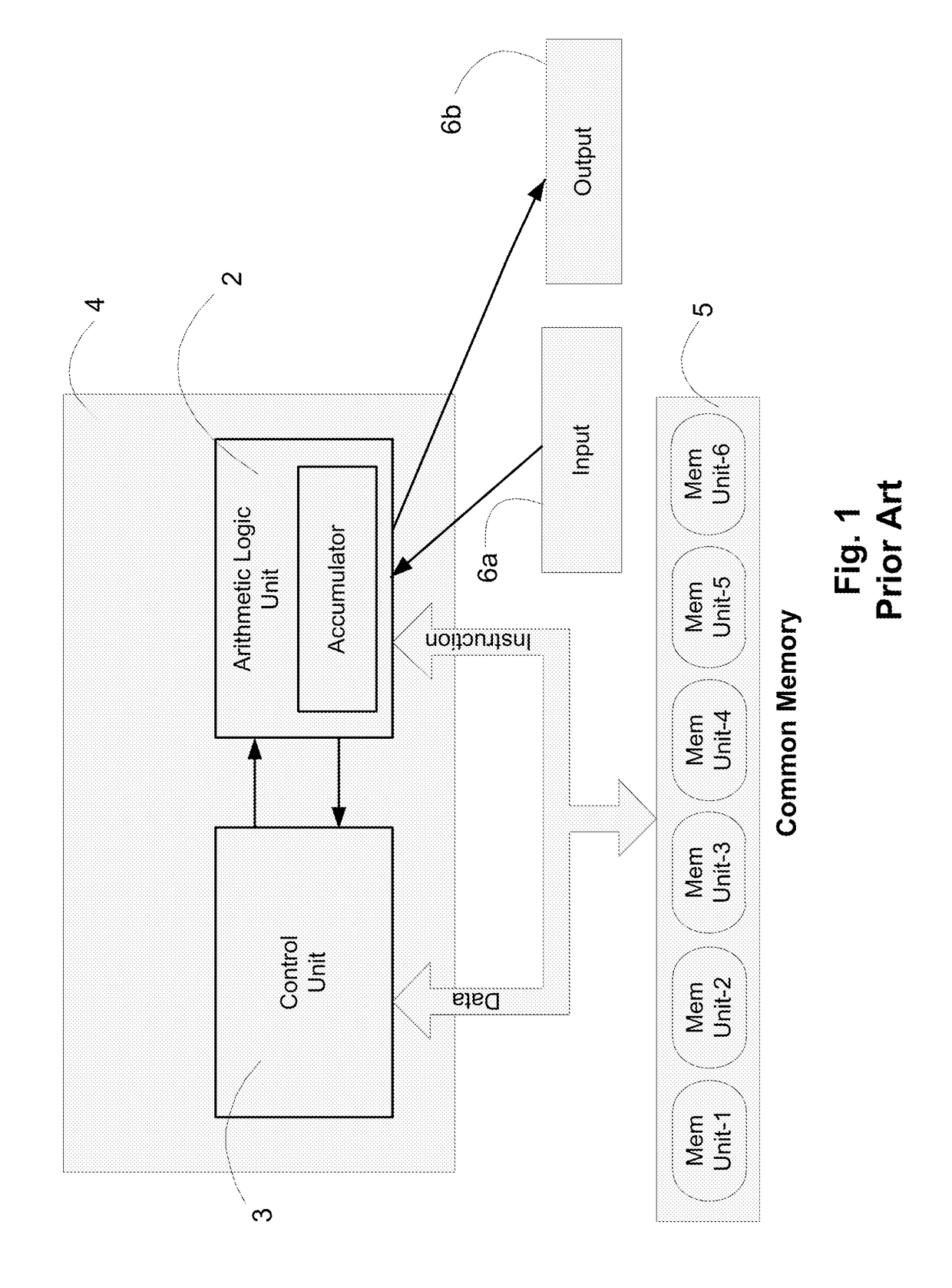

Execution engine for executing single assignment programs with affine dependencies

Active | US20150356055A1 | Efficient execution | Energy consumption | Dataflow computers | Single instruction multiple data multiprocessors | Digital data | Computer architecture

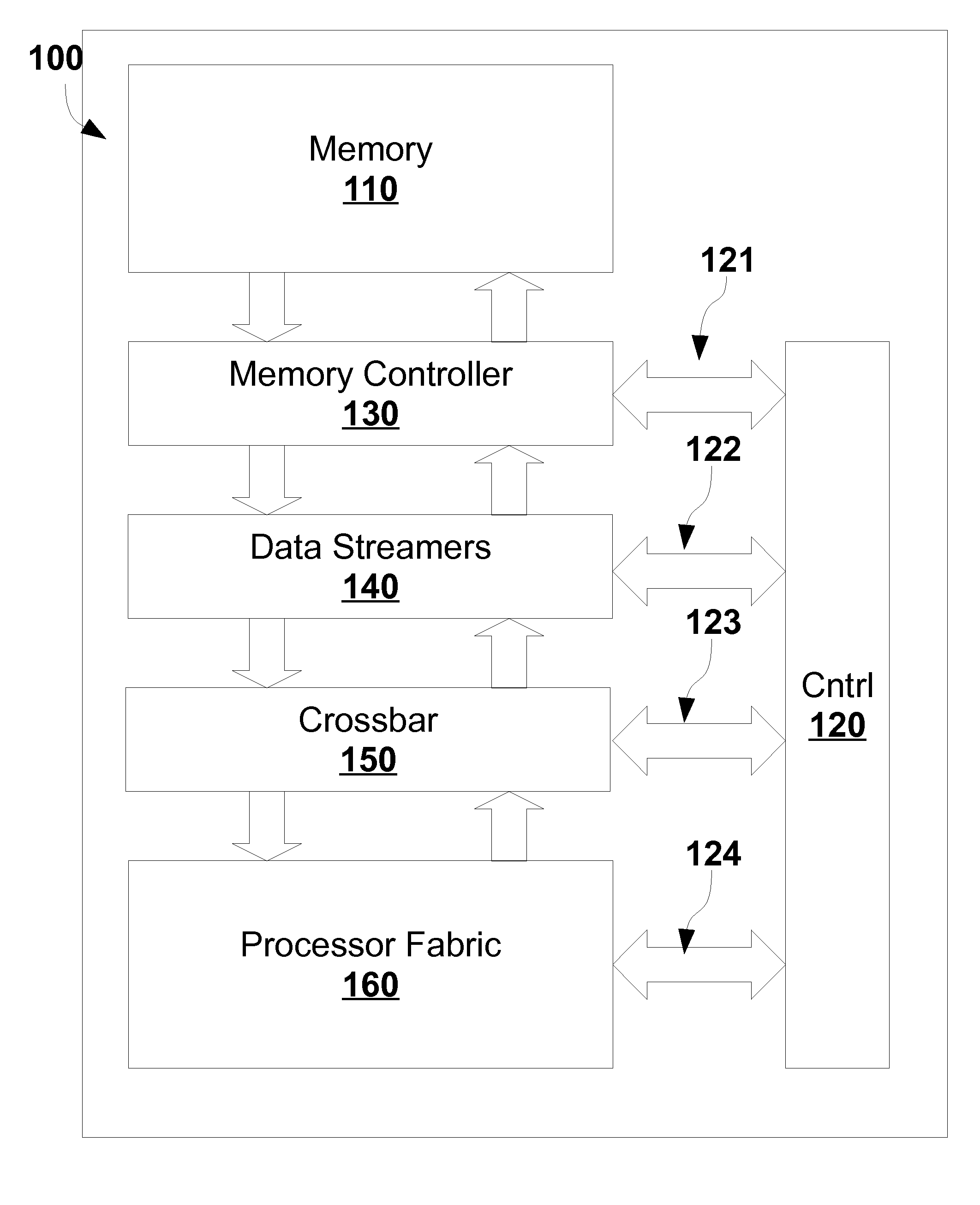

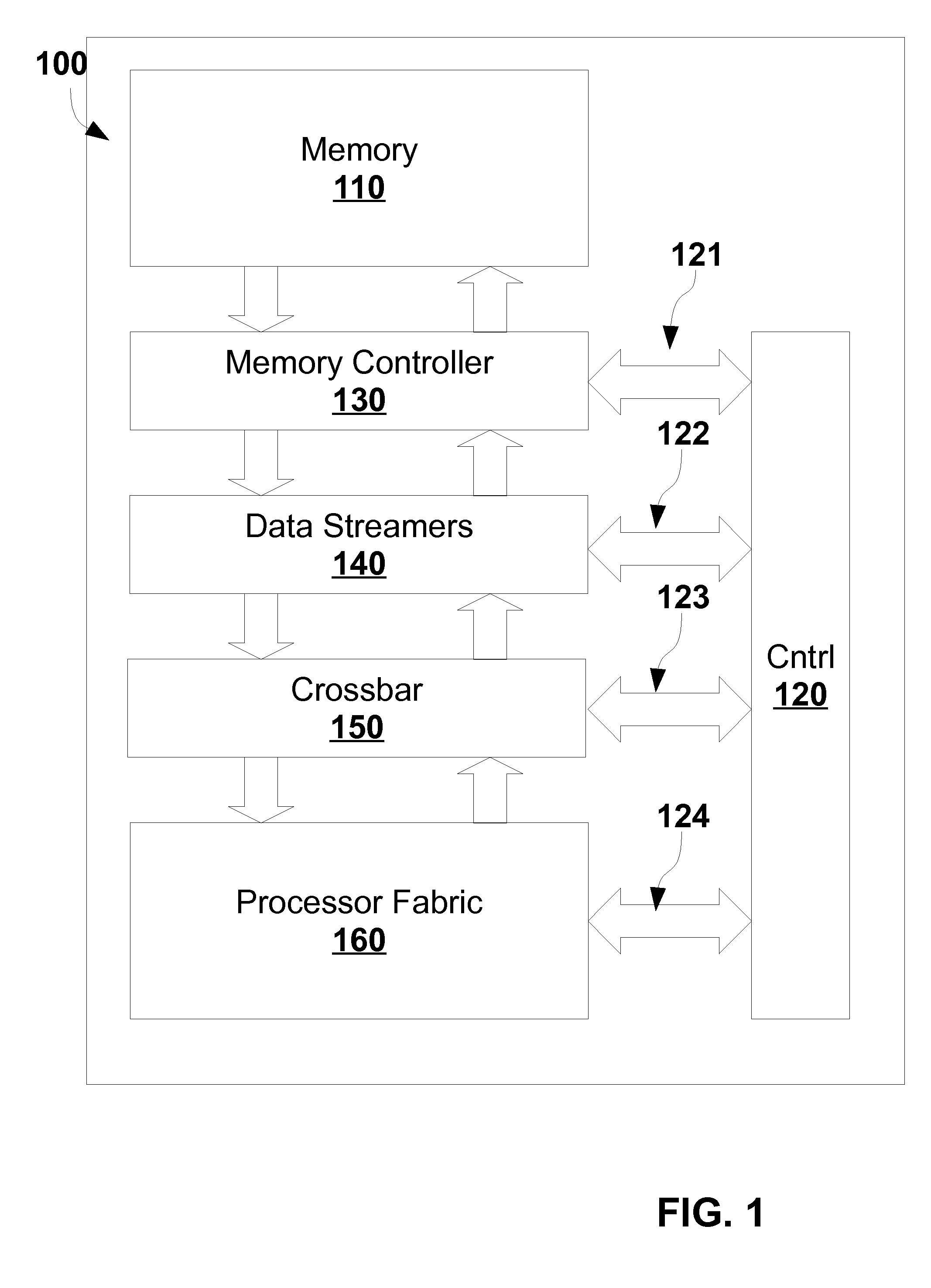

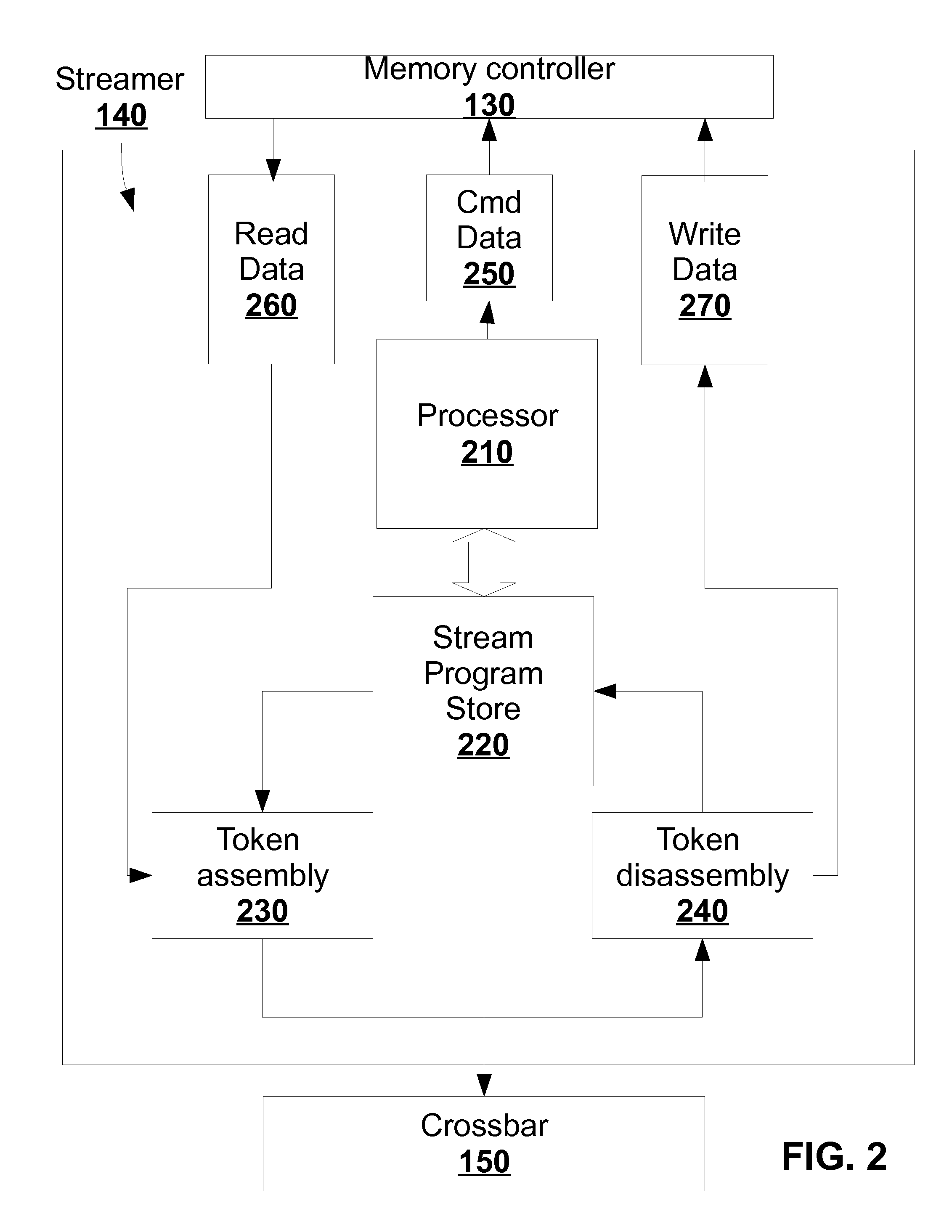

The execution engine is a new organization for a digital data processing apparatus, suitable for highly parallel execution of structured fine-grain parallel computations. The execution engine includes a memory for storing data and a domain flow program, a controller for requesting the domain flow program from the memory; and further for translating the program into programming information, a processor fabric for processing the domain flow programming information and a crossbar for sending tokens and the programming information to the processor fabric.

Owner:STILLWATER SUPERCOMPUTING

High-Speed, Fixed-Function, Computer Accelerator

Active | US20190004995A1 | Increase computing speed | Simple call | Dataflow computers | Concurrent instruction execution | Hardware acceleration | Dataflow

A hardware accelerator for computers combines a stand-alone, high-speed, fixed program dataflow functional element with a stream processor, the latter of which may autonomously access memory in predefined access patterns after receiving simple stream instructions and provide them to the dataflow functional element. The result is a compact, high-speed processor that may exploit fixed program dataflow functional elements.

Owner:WISCONSIN ALUMNI RES FOUND

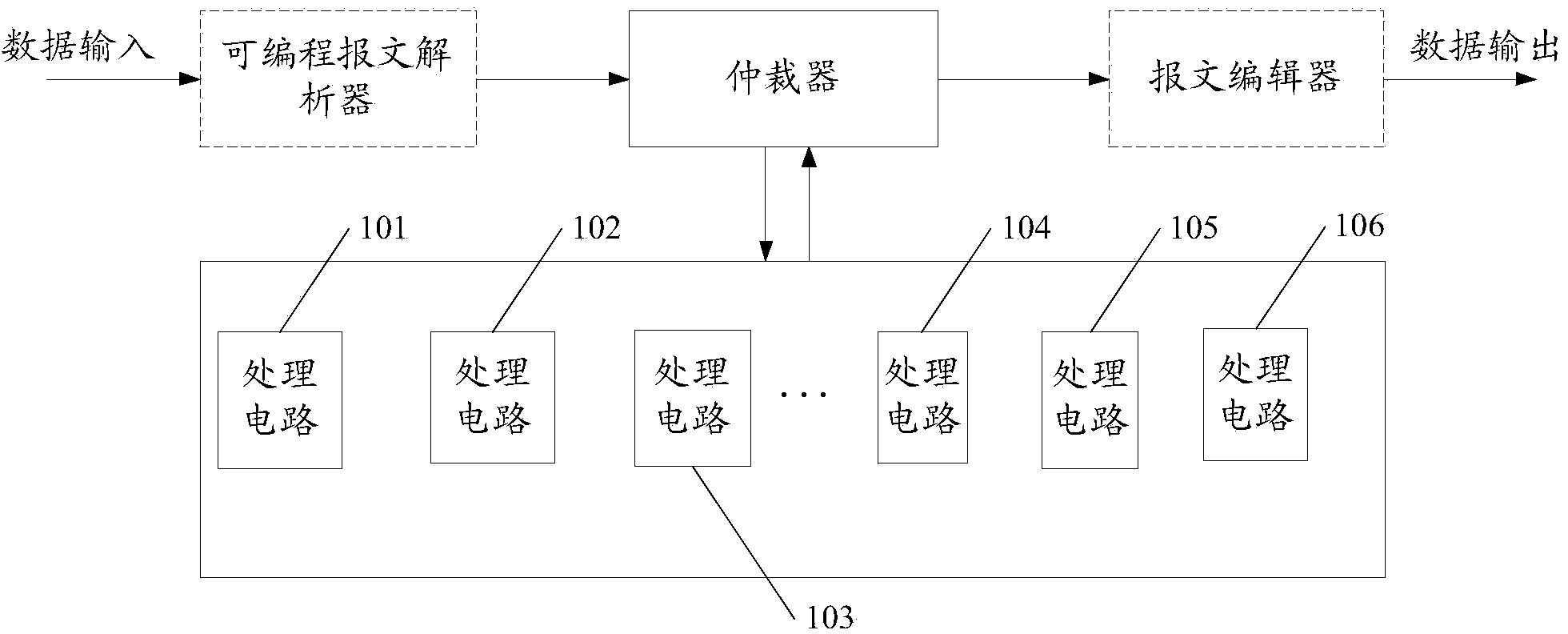

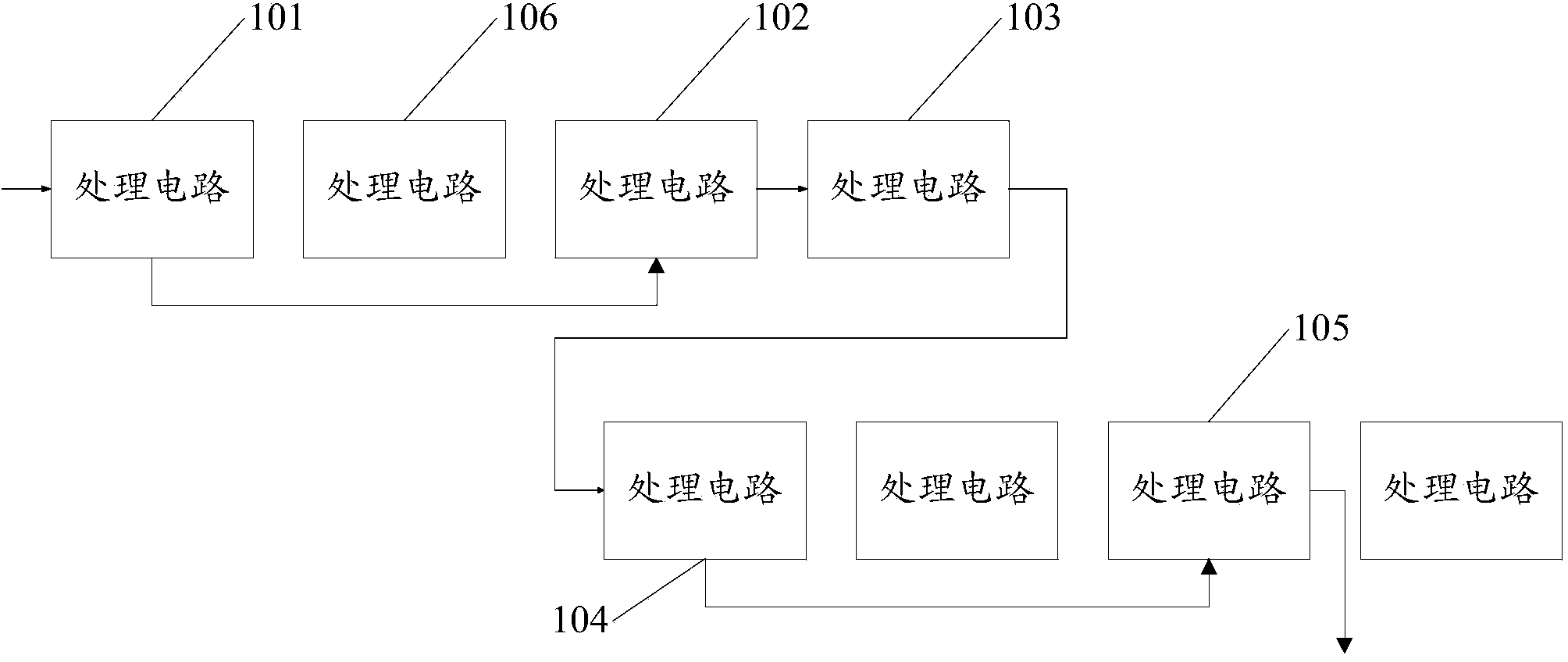

Data processing method, processor, and data processing equipment

Active | CN103955445A | Reduce latency | Improve processing efficiency | Dataflow computers | Multiprogramming arrangements | Parallel computing | Data processing

The invention discloses a data processing method, a processor, and data processing equipment. The method comprises the following steps: data D (a,1) is sent to a first processing circuit by an arbiter; the data D (a,1) is processed by the first processing circuit to obtain data D (1,2), wherein the first processing circuit is a processing circuit among m processing circuits; the data D (1,2) is sent to a second processing circuit by the first processing circuit; the received data is processed by the second processing circuit, a third processing circuit, ... and an mth processing circuit respectively; the data D (m,a) sent by the mth processing circuit is received by the arbiter, wherein the arbiter and the m processing circuits are components of the processor; the processor further comprises an m+1th processing circuit; each processing circuit of the first processing circuit, the second processing circuit, ... and the m+1th processing circuit can receive first data to be processed sent by the arbiter, and processes the first data to be processed. The scheme is helpful to improve efficiency of data processing.

Owner:HUAWEI TECH CO LTD
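As a rough illustration of the data path in this abstract, the sketch below has an arbiter hand data to a chain of processing circuits and collect the result from the last one. The function `run_chain` and its `entry` parameter are hypothetical. Letting the arbiter inject data at any circuit is one reading of "each processing circuit ... can receive first data to be processed sent by the arbiter".

```python
def run_chain(circuits, data, entry=0):
    """Pass data from the arbiter through circuits[entry:] and back to the arbiter.

    Returns the final data and the indices of the circuits that handled it.
    """
    trace = []
    for index in range(entry, len(circuits)):
        data = circuits[index](data)   # each circuit processes, then forwards
        trace.append(index)
    return data, trace


# Three stand-in processing circuits.
circuits = [lambda d: d + 1, lambda d: d * 2, lambda d: d - 3]

full_result, full_trace = run_chain(circuits, 5)             # ((5 + 1) * 2) - 3
late_result, late_trace = run_chain(circuits, 5, entry=1)    # (5 * 2) - 3
```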

Church-Turing thesis: the Turing immortality problem solved with a dynamic register machine

Active | US20110066833A1 | Dataflow computers | Specific program execution arrangements | Computational problem | Parallel computing

A new computing machine and new methods of executing and solving heretofore unknown computational problems are presented here. The computing system demonstrated here can be implemented with a program composed of instructions such that instructions may be added or removed while the instructions are being executed. The computing machine is called a Dynamic Register Machine. The methods demonstrated apply to new hardware and software technology. The new machine and methods enable advances in machine learning, new and more powerful programming languages, and more powerful and flexible compilers and interpreters.

Owner:AEMEA

Reconfigurable specific computer accelerator

Active | CN110214309A | Direct interconnection between switches and functional units | Dataflow computers | Energy efficient computing | Data stream processing | Application specific

A reconfigurable hardware accelerator for computers combines a high-speed dataflow processor, having programmable functional units rapidly reconfigured in a network of programmable switches, with a stream processor that may autonomously access memory in predefined access patterns after receiving simple stream instructions. The result is a compact, high-speed processor that may exploit parallelism associated with many application-specific programs susceptible to acceleration.

Owner:WISCONSIN ALUMNI RES FOUND

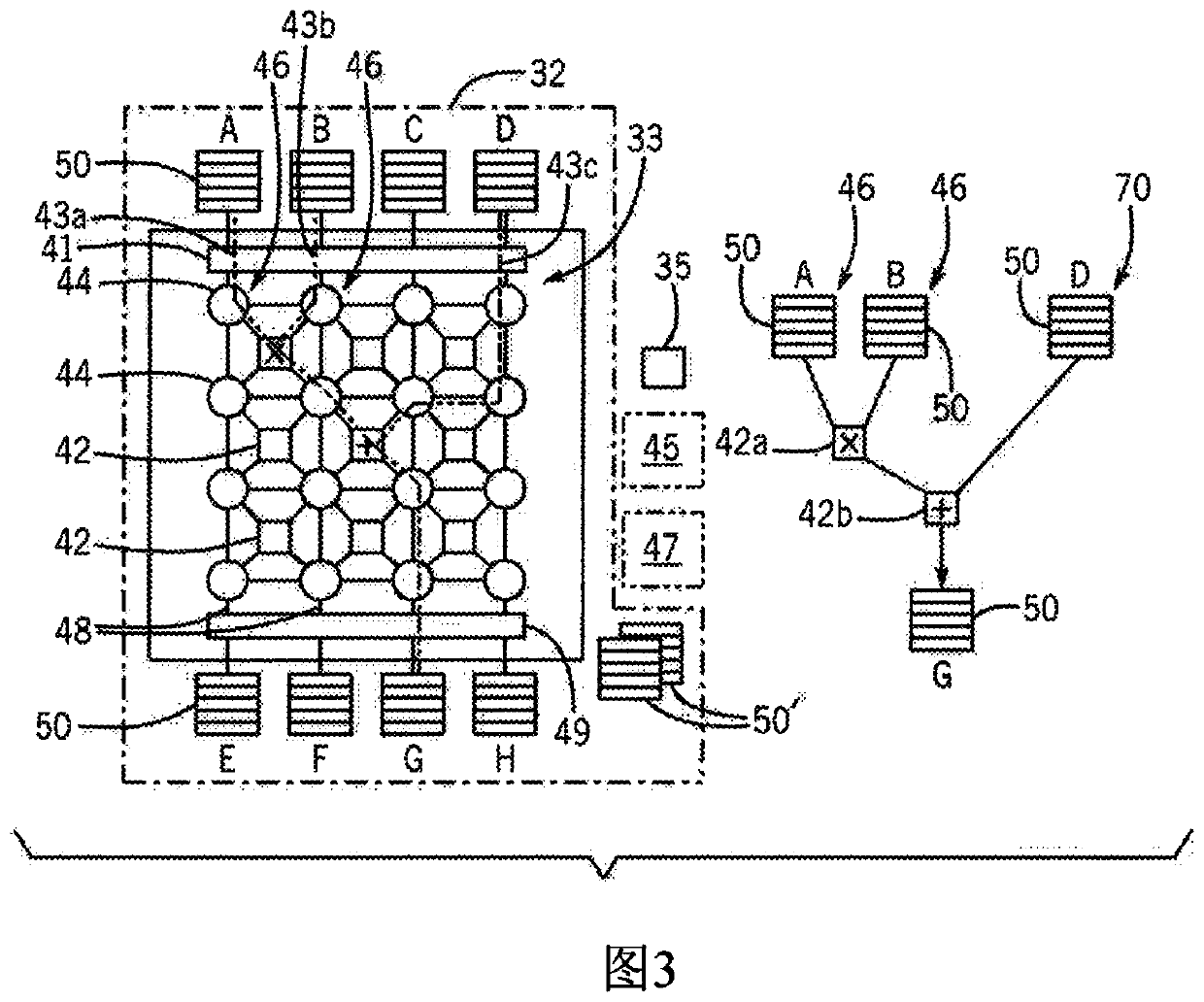

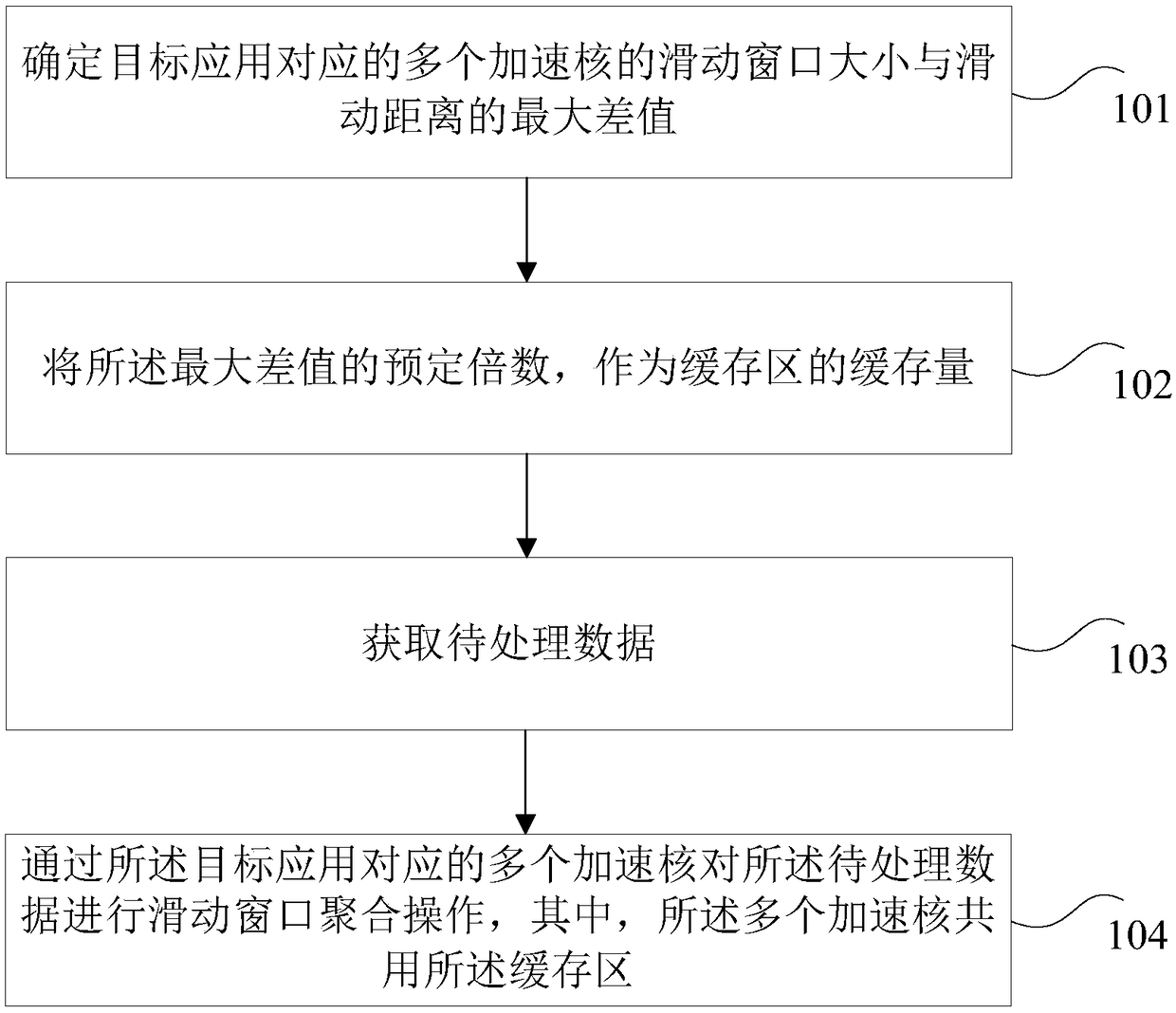

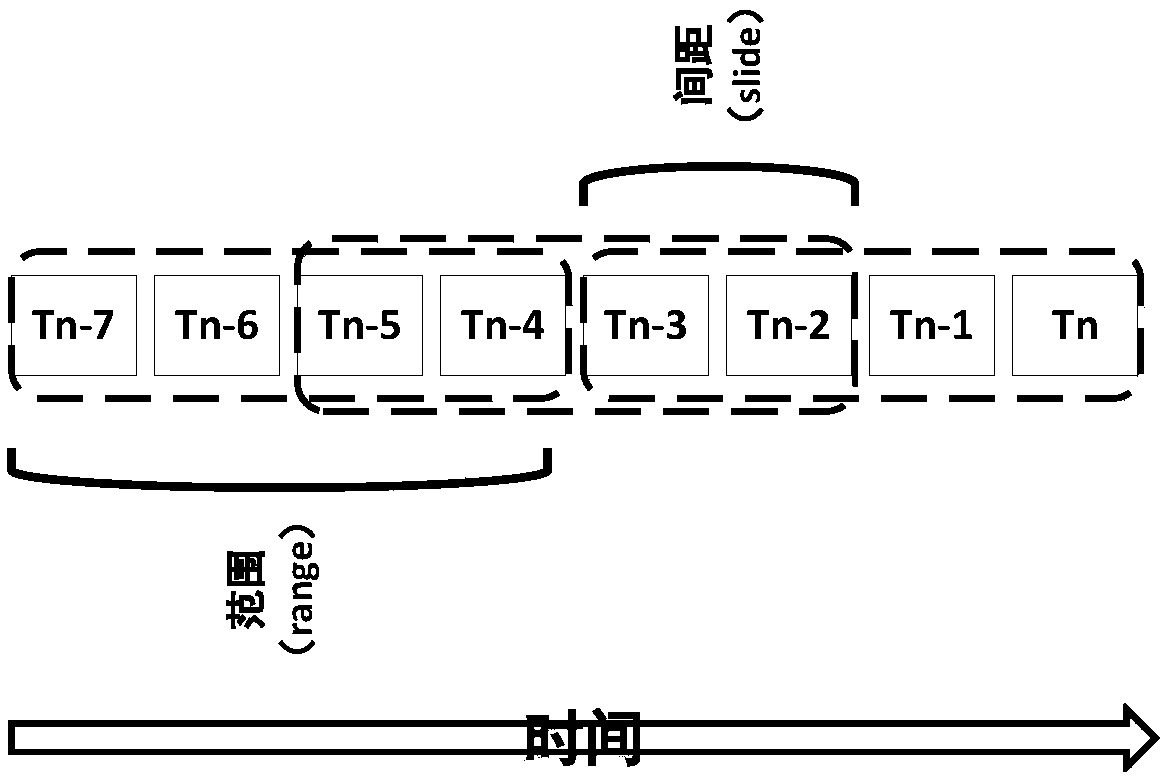

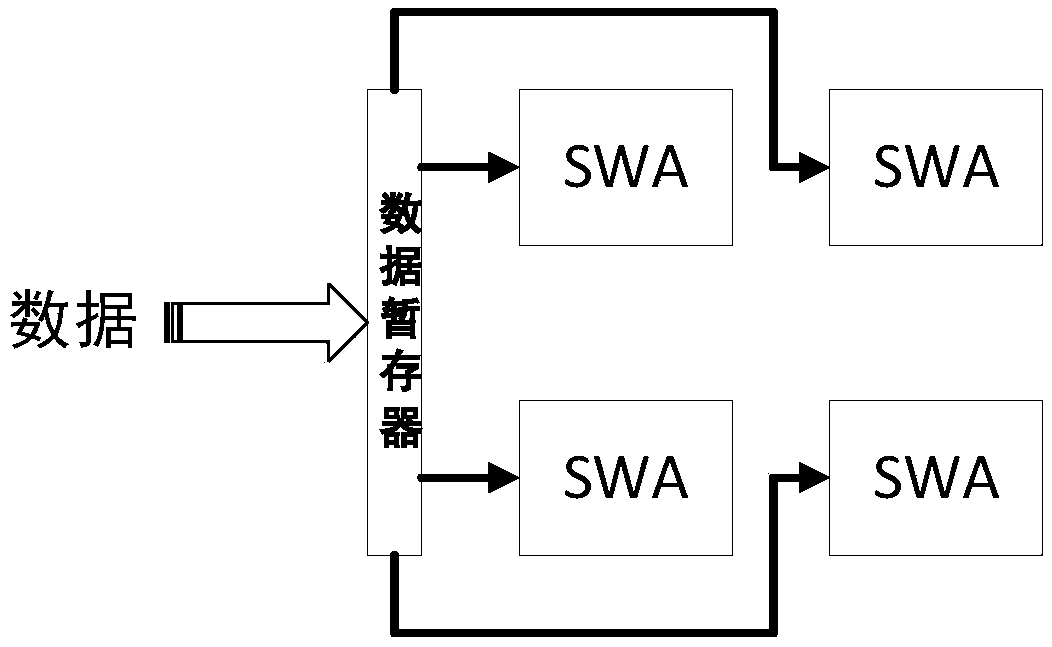

Data processing method and device based on acceleration kernel

Active | CN109388609A | Avoid waste seriously | Reduce demand | Dataflow computers | Multiple digital computer combinations | Slide window | Maximum difference

The invention provides a data processing method and device based on an acceleration kernel, wherein the method comprises the following steps: determining a maximum difference between the sliding window size and the sliding distance of a plurality of acceleration kernels corresponding to a target application; taking a predetermined multiple of the maximum difference as the amount of memory retardation of the buffer area; acquiring data to be processed; and performing a sliding window aggregation operation on the data to be processed by applying the plurality of acceleration cores corresponding to the target application, wherein the plurality of acceleration cores share the buffer area. The scheme solves the problems, in existing sliding window aggregation operations, of excessive cache resource demand and serious cache area waste caused by each acceleration core independently using its own cache area, and achieves the technical effect of effectively reducing the cache resource demand and improving the utilization rate of cache resources.

Owner:YUSUR TECH CO LTD
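The sizing rule in this abstract can be written out as a short calculation. Two things in the sketch are assumptions: the "amount of memory retardation" is read as the capacity of the shared buffer, and the `multiple` default of 2 is made up for illustration. `sliding_aggregate` is a plain reference implementation of sliding-window aggregation and does not model the shared buffer itself.

```python
def shared_buffer_capacity(kernels, multiple=2):
    """Size the shared buffer from (window_size, slide_distance) pairs.

    The capacity is a fixed multiple of the largest (window - slide) difference.
    """
    max_diff = max(window - slide for window, slide in kernels)
    return multiple * max_diff


def sliding_aggregate(data, window, slide, agg=sum):
    """Aggregate each full window, advancing by `slide` elements per step."""
    return [agg(data[i:i + window])
            for i in range(0, len(data) - window + 1, slide)]


# Three acceleration kernels with different window sizes and slide distances.
kernels = [(4, 2), (3, 1), (6, 5)]
capacity = shared_buffer_capacity(kernels)

data = [1, 2, 3, 4, 5, 6]
results = {kernel: sliding_aggregate(data, *kernel) for kernel in kernels}
```

The difference `window - slide` is the number of elements two consecutive windows have in common, which is why it drives how much data must be retained between steps.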

Dynamically erectable computer system

Active | US9772971B2 | Memory architecture accessing/allocation | Dataflow computers | Signal routing | Virtualization

A fault-tolerant computer system architecture includes two types of operating domains: a conventional first domain (DID) that processes data and instructions, and a novel second domain (MM domain) which includes mentor processors for mentoring the DID according to “meta information” which includes but is not limited to data, algorithms and protective rule sets. The term “mentoring” (as defined herein below) refers to, among other things, applying and using meta information to enforce rule sets and/or dynamically erecting abstractions and virtualizations by which resources in the DID are shuffled around for, inter alia, efficiency and fault correction. Meta Mentor processors create systems and sub-systems by means of fault tolerant mentor switches that route signals to and from hardware and software entities. The systems and sub-systems created are distinct sub-architectures and unique configurations that may be operated separately or concurrently as defined by the executing processes.

Owner:SMITH ROGER A

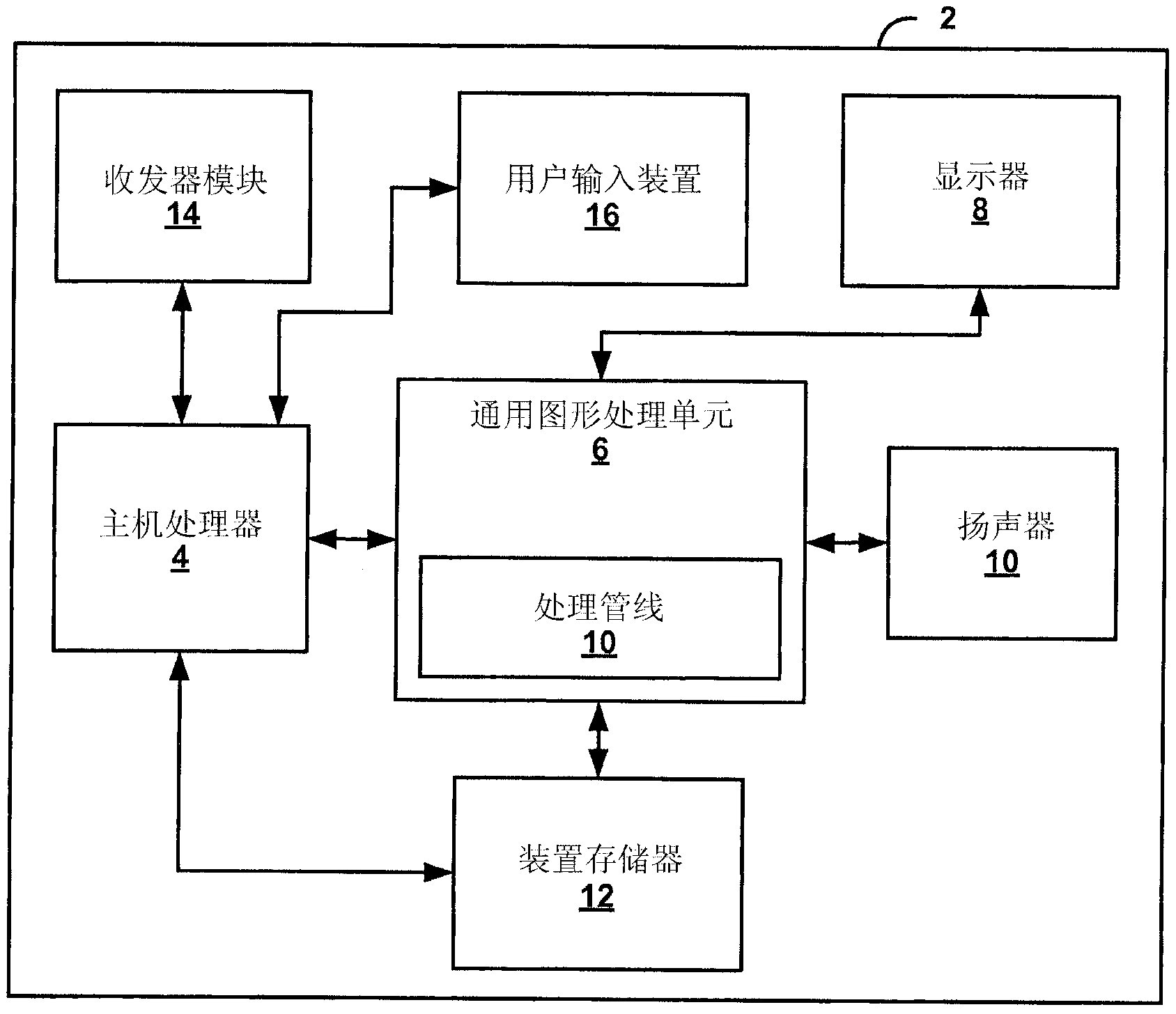

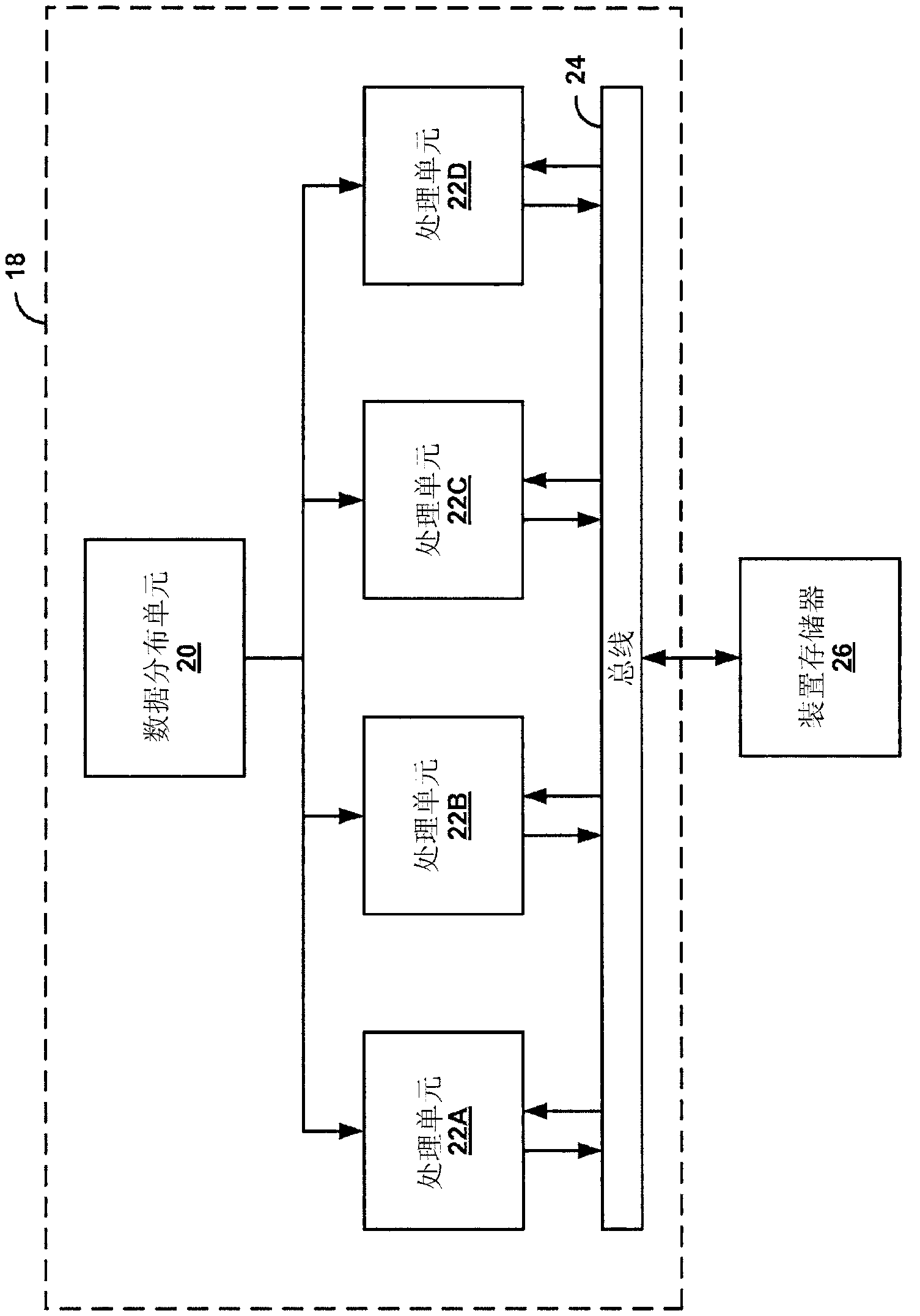

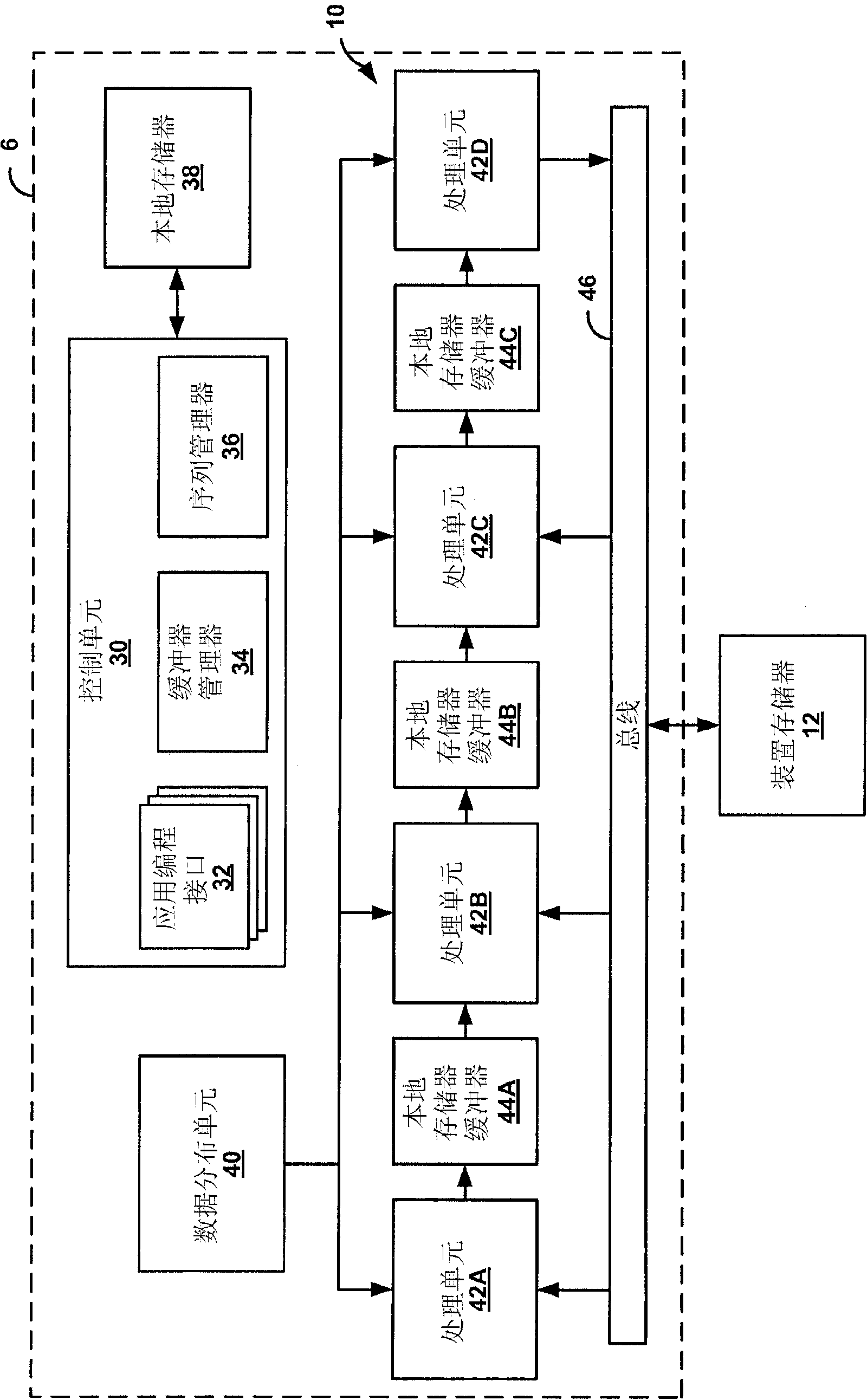

Computational resource pipelining in general purpose graphics processing unit

This disclosure describes techniques for extending the architecture of a general purpose graphics processing unit (GPGPU) with parallel processing units to allow efficient processing of pipeline-based applications. The techniques include configuring local memory buffers connected to parallel processing units operating as stages of a processing pipeline to hold data for transfer between the parallel processing units. The local memory buffers allow on-chip, low-power, direct data transfer between the parallel processing units. The local memory buffers may include hardware-based data flow control mechanisms to enable transfer of data between the parallel processing units. In this way, data may be passed directly from one parallel processing unit to the next parallel processing unit in the processing pipeline via the local memory buffers, in effect transforming the parallel processing units into a series of pipeline stages.

Owner:QUALCOMM INC
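A software analogy for the buffered pipeline described here uses one thread per stage and a bounded queue between neighbouring stages. The sketch is only an analogy under assumptions: `queue.Queue(maxsize=...)` stands in for a local memory buffer with flow control, and the names `stage`, `run_pipeline` and `SENTINEL` are hypothetical.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream


def stage(fn, inbox, outbox):
    """Read items from inbox, apply fn, and pass results on to outbox."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)
            return
        outbox.put(fn(item))


def run_pipeline(fns, items, buffer_size=2):
    """Run fns as pipeline stages connected by bounded buffers."""
    # A full buffer blocks the producing stage, which gives simple flow control.
    buffers = [queue.Queue(maxsize=buffer_size) for _ in range(len(fns) + 1)]
    threads = [threading.Thread(target=stage, args=(fn, buffers[i], buffers[i + 1]))
               for i, fn in enumerate(fns)]
    results = []

    def drain():
        while True:
            item = buffers[-1].get()
            if item is SENTINEL:
                return
            results.append(item)

    collector = threading.Thread(target=drain)
    for t in threads:
        t.start()
    collector.start()
    for item in items:
        buffers[0].put(item)
    buffers[0].put(SENTINEL)
    for t in threads:
        t.join()
    collector.join()
    return results


pipeline_out = run_pipeline([lambda x: x + 1, lambda x: x * 2], range(5))
```

Each item moves directly from one stage's output buffer to the next stage, without a round trip through a shared store.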

FPGA-based graph data processing method and system thereof

Active | US20200242072A1 | Difficult to operate | Increase time cost | Dataflow computers | Other databases indexing | Graph traversal | Theoretical computer science

An FPGA-based graph data processing method is provided for executing graph traversals on a graph having characteristics of a small-world network by using a first processor being a CPU and a second processor that is an FPGA and is in communicative connection with the first processor, wherein the first processor sends graph data to be traversed to the second processor, and obtains result data of the graph traversals from the second processor for result output after the second processor has completed the graph traversals of the graph data by executing level traversals, and the second processor comprises a sparsity processing module and a density processing module, the sparsity processing module operates in a beginning stage and/or an ending stage of the graph traversals, and the density processing module with a higher degree of parallelism than the sparsity processing module operates in the intermediate stage of the graph traversals.

Owner:HUAZHONG UNIV OF SCI & TECH
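The two-module level traversal can be sketched in software as a breadth-first search that records which module would handle each level. Choosing the module by comparing the frontier size with a `threshold` is an assumption made for illustration, since the abstract only says which stages each module serves. The labels "sparse" and "dense" are shorthand for the sparsity and density processing modules.

```python
def level_traversal(graph, source, threshold=2):
    """Level-order traversal of graph (dict: node -> list of neighbours).

    Returns (levels, modules): the level of each reached node and the
    module label chosen for each level.
    """
    levels = {source: 0}
    frontier = [source]
    modules = []
    depth = 0
    while frontier:
        # Small frontiers (start and end of the traversal) go to the
        # low-parallelism path, large ones to the high-parallelism path.
        modules.append("sparse" if len(frontier) < threshold else "dense")
        next_frontier = []
        for node in frontier:
            for neighbour in graph[node]:
                if neighbour not in levels:
                    levels[neighbour] = depth + 1
                    next_frontier.append(neighbour)
        frontier = next_frontier
        depth += 1
    return levels, modules


# Small example: one source fans out to three nodes that converge on one sink.
graph = {0: [1, 2, 3], 1: [4], 2: [4], 3: [4], 4: []}
levels, modules = level_traversal(graph, 0)
```

On small-world graphs the frontier is small at first, grows quickly over a few levels, and then shrinks again, which matches the beginning, intermediate and ending stages named in the abstract.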

Processors, methods, and systems for debugging a configurable spatial accelerator

Active | US11086816B2 | Easy to adapt | Improve performance | Associative processors | Dataflow computers | Computer architecture | Data stream

Systems, methods, and apparatuses relating to debugging a configurable spatial accelerator are described. In one embodiment, a processor includes a plurality of processing elements and an interconnect network between the plurality of processing elements to receive an input of a dataflow graph comprising a plurality of nodes, wherein the dataflow graph is to be overlaid into the interconnect network and the plurality of processing elements with each node represented as a dataflow operator in the plurality of processing elements, and the plurality of processing elements are to perform an operation by a respective, incoming operand set arriving at each of the dataflow operators of the plurality of processing elements. At least a first of the plurality of processing elements is to enter a halted state in response to being represented as a first of the plurality of dataflow operators.

Owner:INTEL CORP

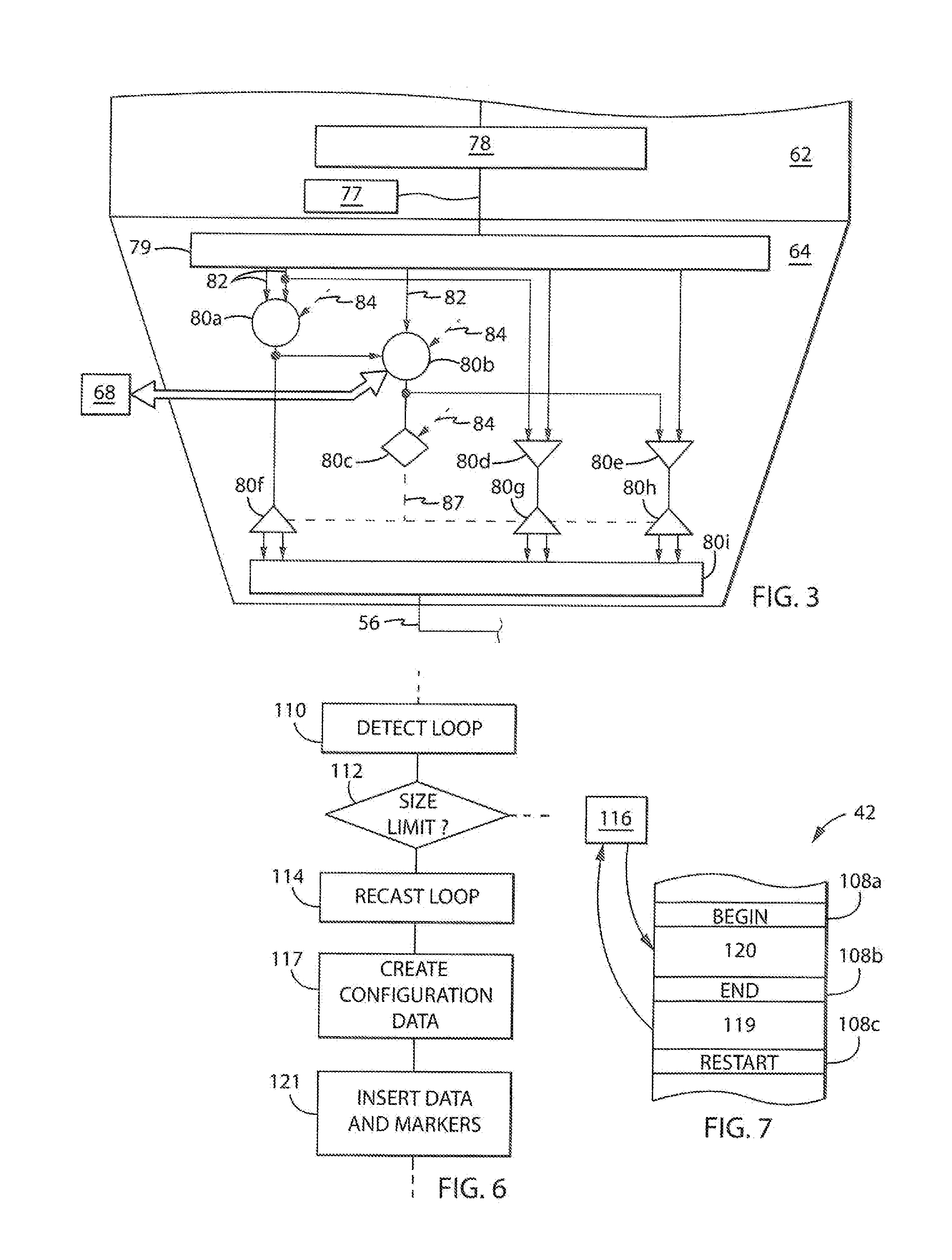

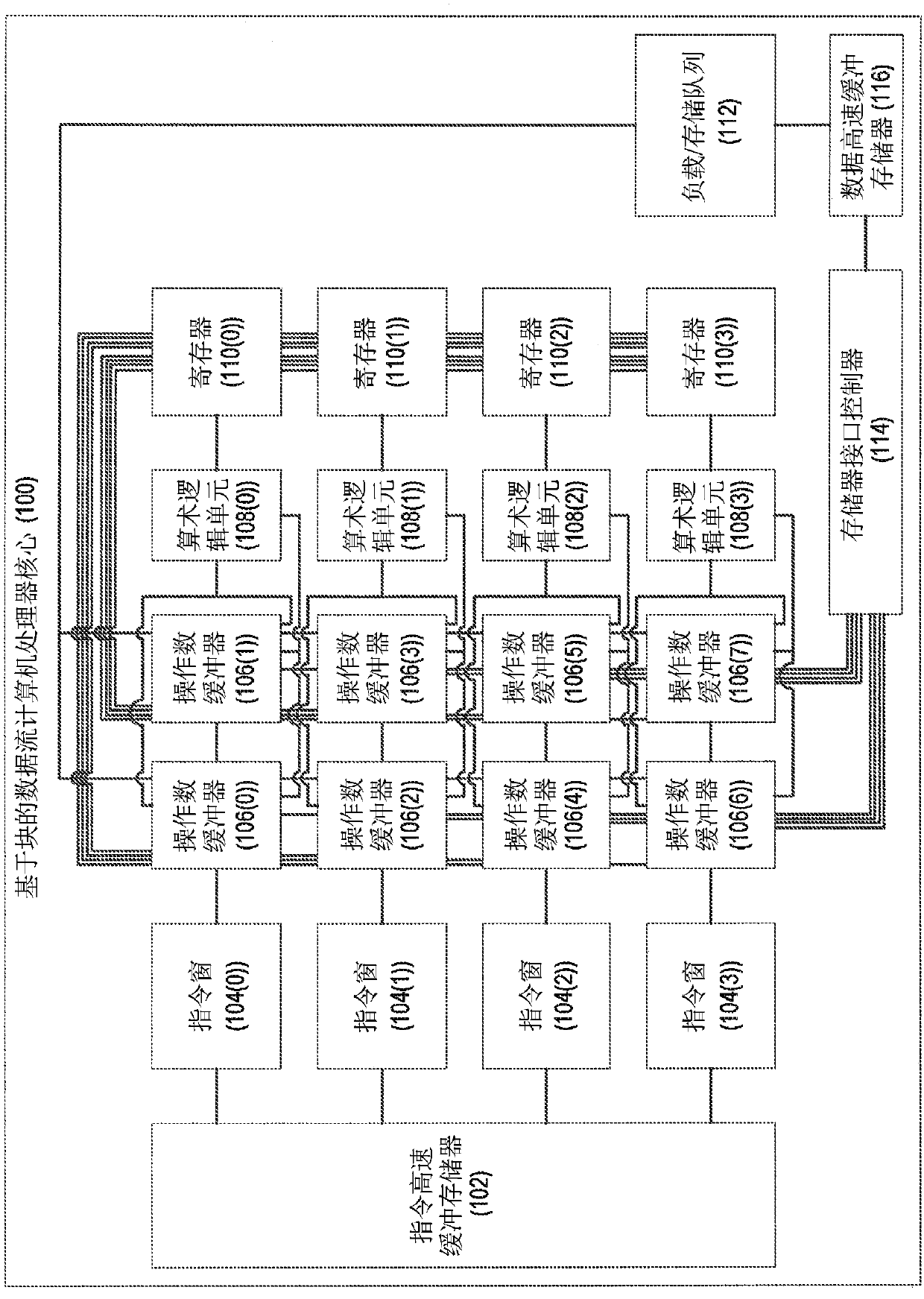

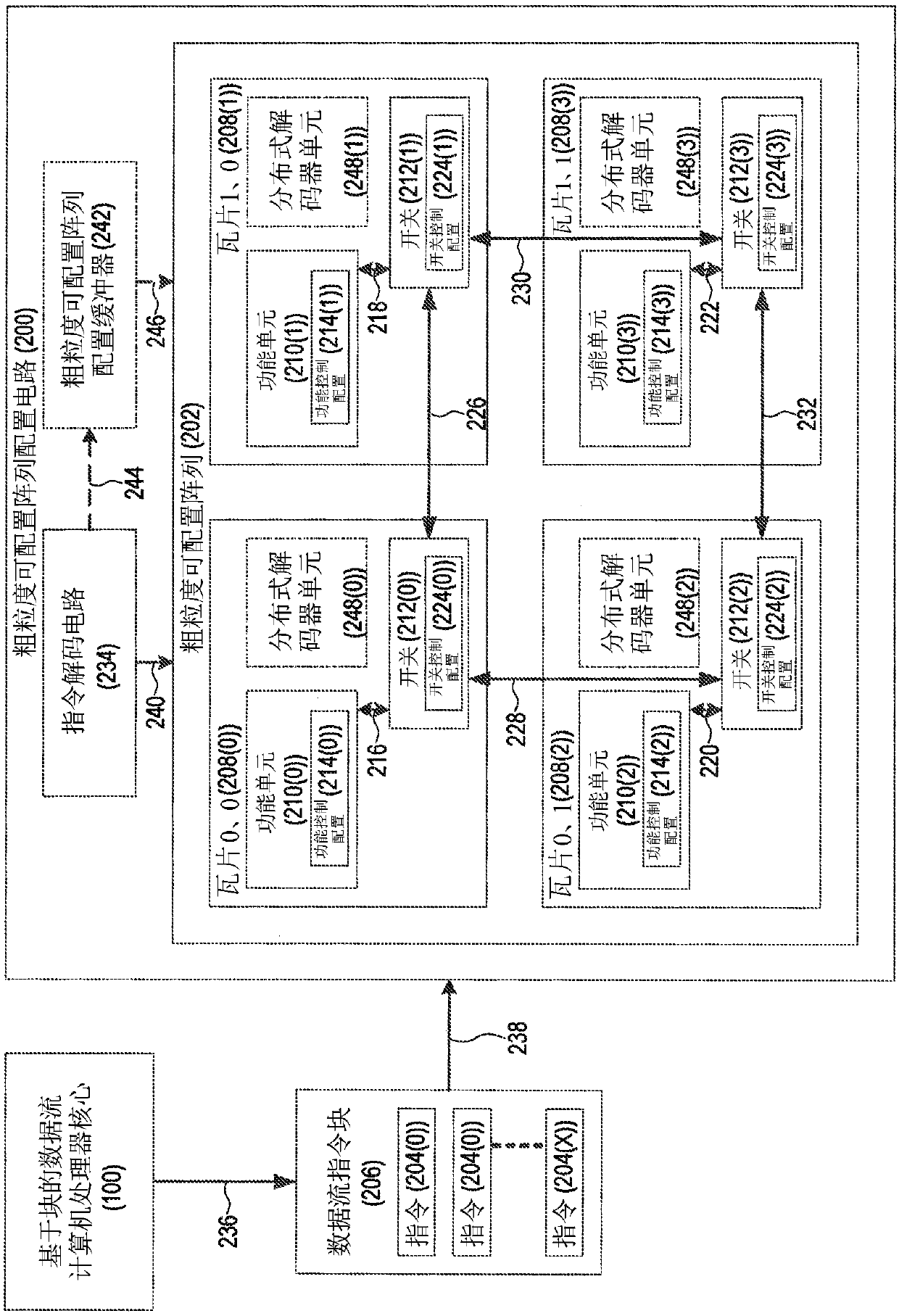

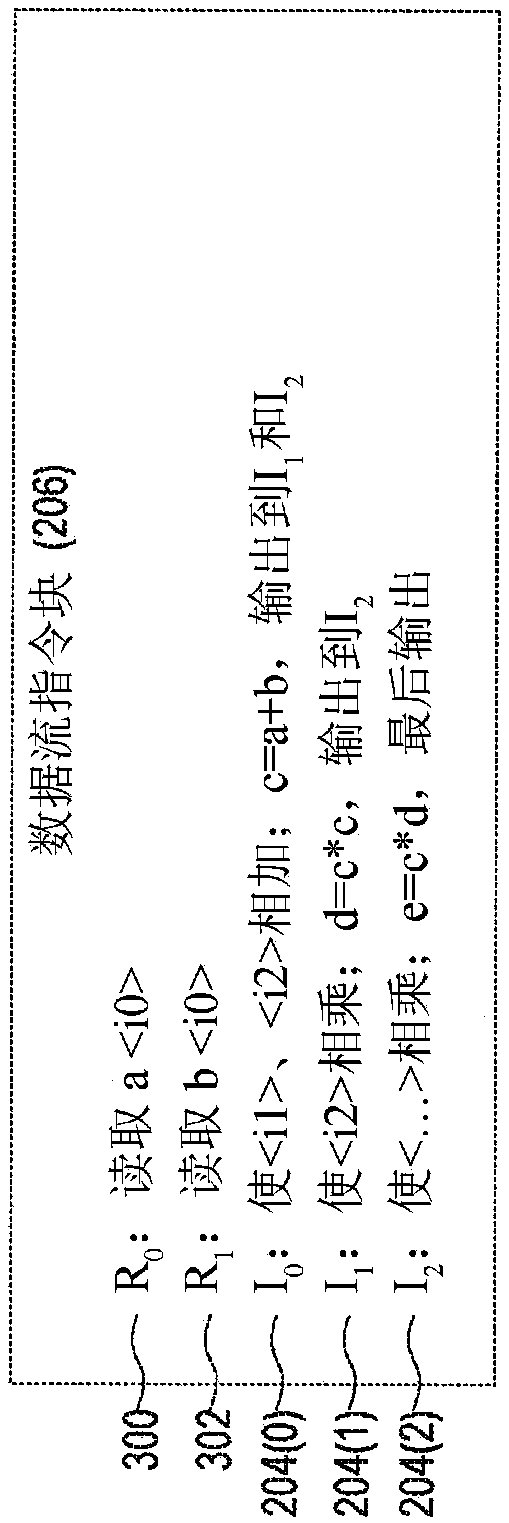

Configuring coarse-grained reconfigurable arrays (CGRAs) for dataflow instruction block execution in block-based dataflow instruction set architectures (ISAs)

Configuring coarse-grained reconfigurable arrays (CGRAs) for dataflow instruction block execution in block-based dataflow instruction set architectures (ISAs) is disclosed. In one aspect, a CGRA configuration circuit is provided, comprising a CGRA having an array of tiles, each of which provides a functional unit and a switch. An instruction decoding circuit of the CGRA configuration circuit maps a dataflow instruction within a dataflow instruction block to one of the tiles of the CGRA. The instruction decoding circuit decodes the dataflow instruction, and generates a function control configuration for the functional unit of the mapped tile to provide the functionality of the dataflow instruction. The instruction decoding circuit further generates switch control configurations for switches along a path of tiles within the CGRA so that an output of the functional unit of the mapped tile is routed to each tile corresponding to consumer instructions of the dataflow instruction.

Owner:QUALCOMM INC

Apparatuses, methods, and systems for time-multiplexing in a configurable spatial accelerator

Inactive | US20200409709A1 | Easy to adapt | Improve performance | Dataflow computers | Concurrent instruction execution | Hemt circuits | Processing element

Systems, methods, and apparatuses relating to time-multiplexing circuitry in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator (CSA) includes a plurality of processing elements; and a time-multiplexed, circuit switched interconnect network between the plurality of processing elements. In another embodiment, a configurable spatial accelerator (CSA) includes a plurality of time-multiplexed processing elements; and a time-multiplexed, circuit switched interconnect network between the plurality of time-multiplexed processing elements.

Owner:INTEL CORP

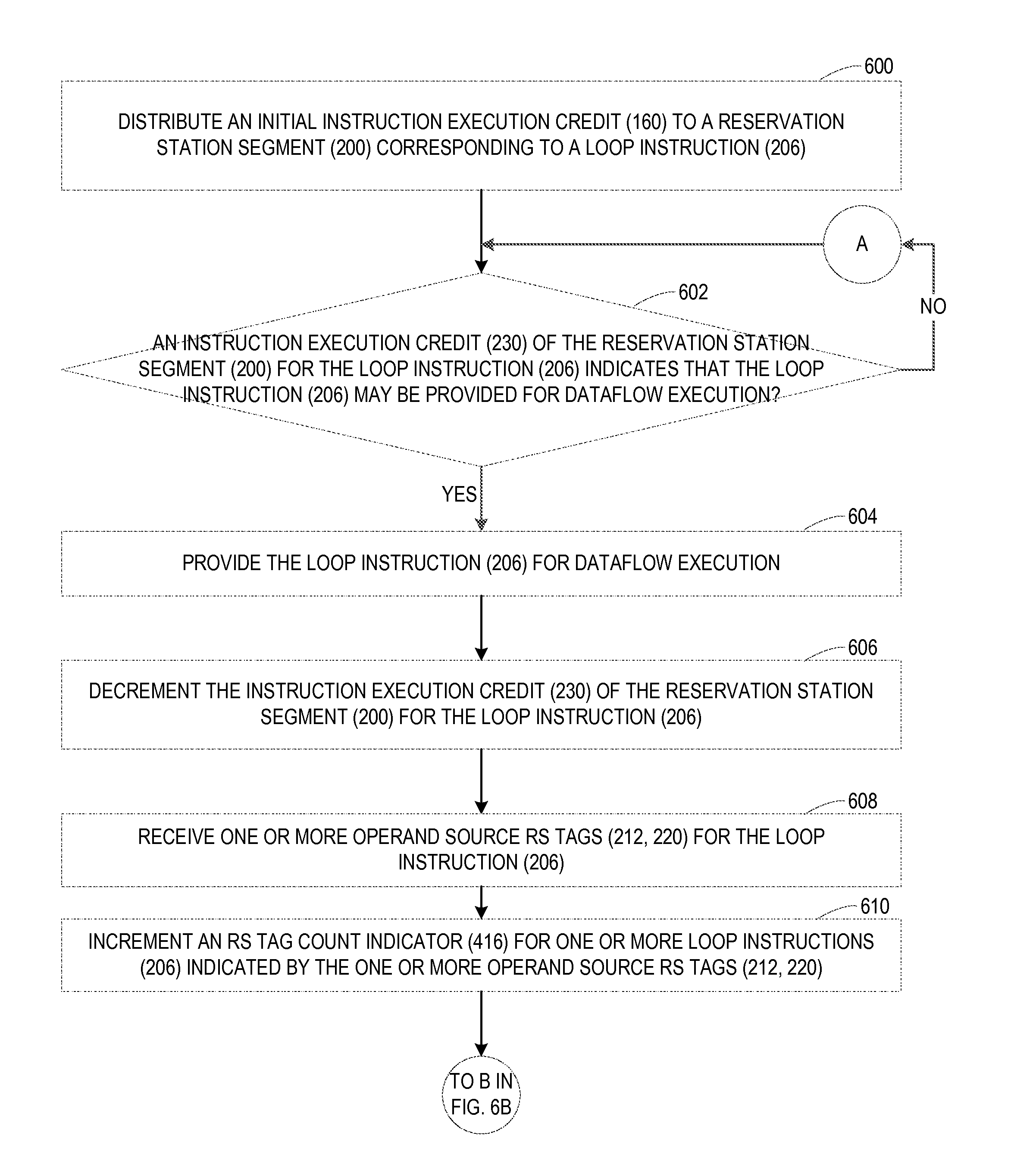

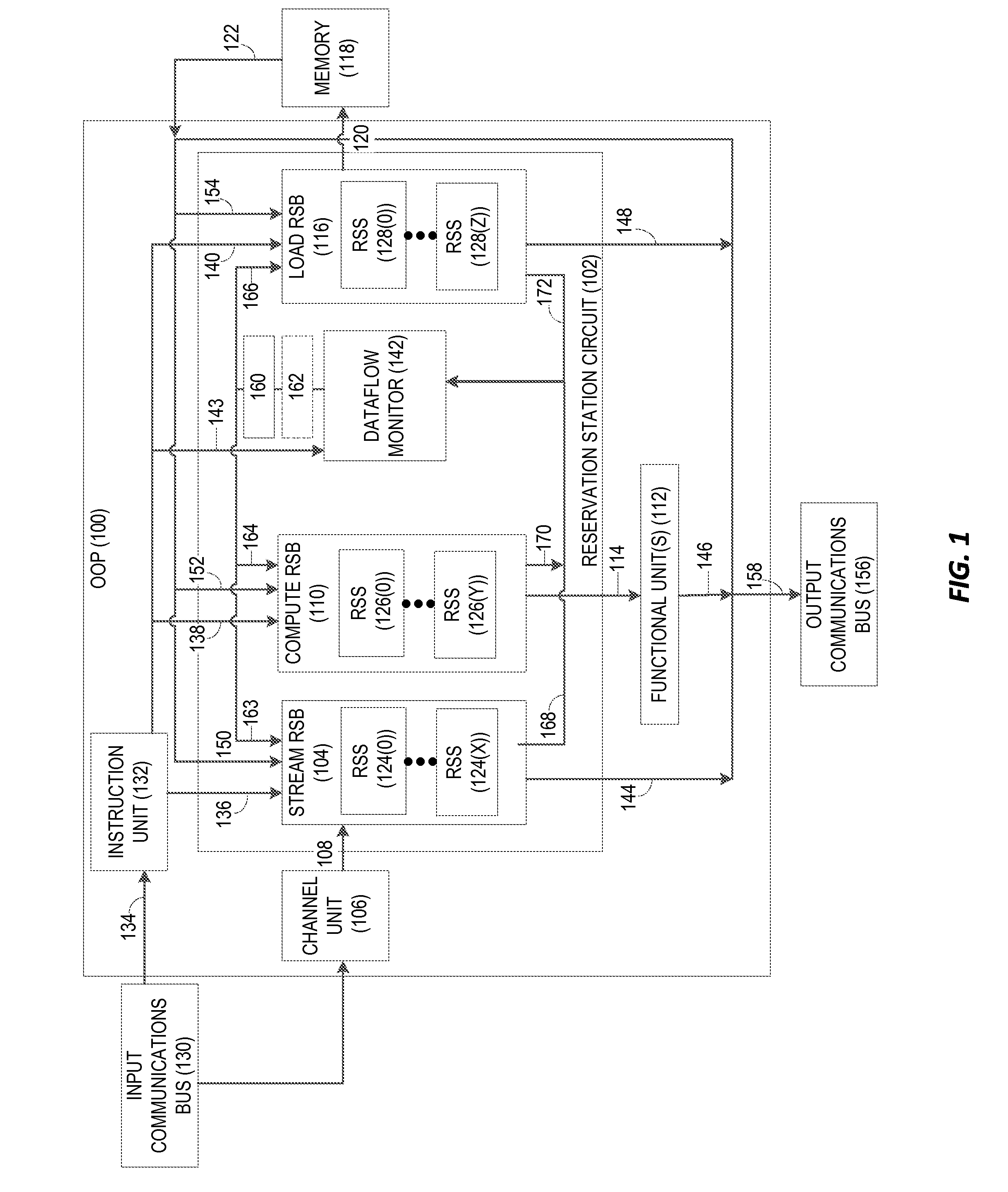

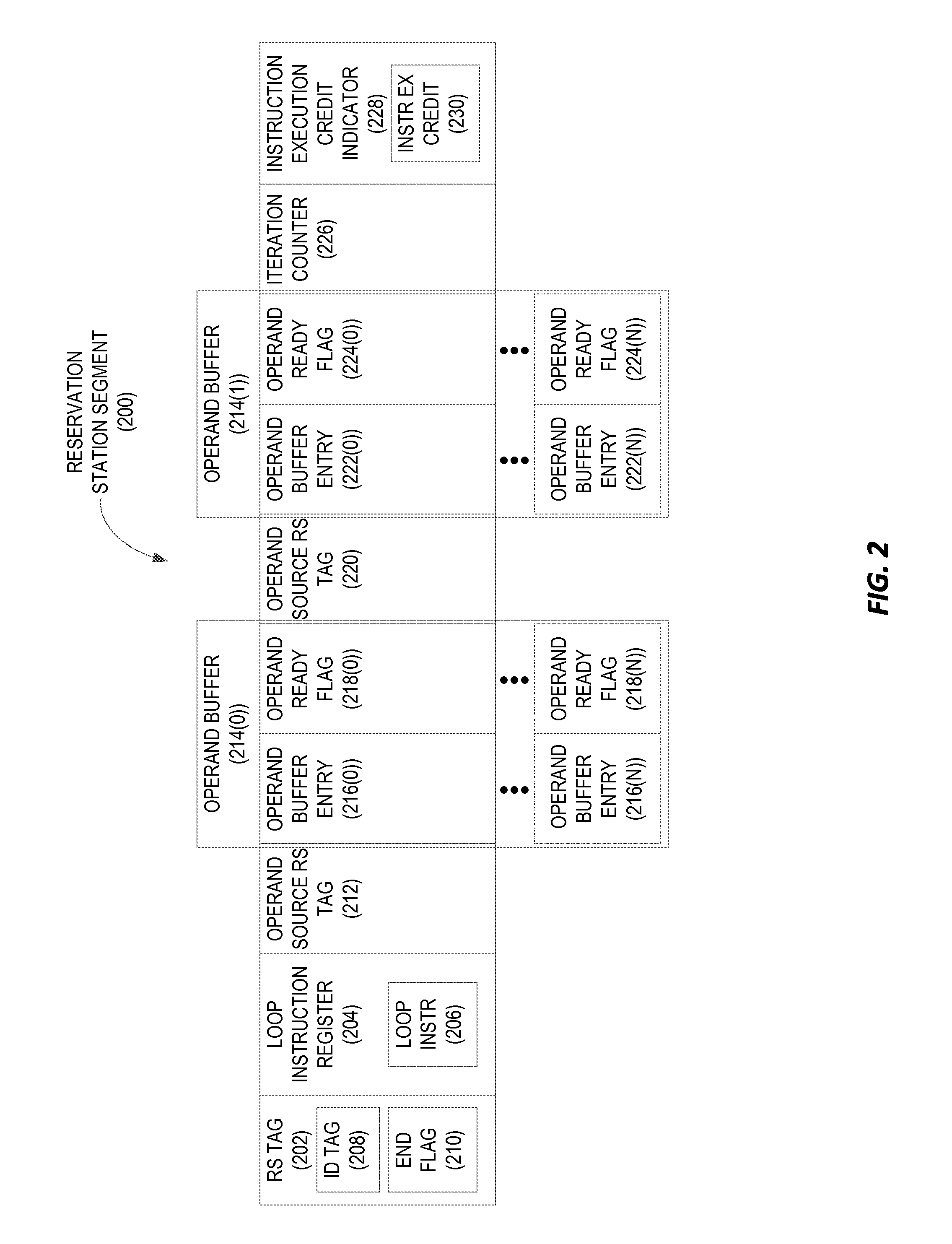

PROVIDING LOWER-OVERHEAD MANAGEMENT OF DATAFLOW EXECUTION OF LOOP INSTRUCTIONS BY OUT-OF-ORDER PROCESSORS (OOPs), AND RELATED CIRCUITS, METHODS, AND COMPUTER-READABLE MEDIA

Inactive | US20160274915A1 | Lower-overhead management | Dataflow computers | Next instruction address formation | Reservation station | Computer program

Providing lower-overhead management of dataflow execution of loop instructions by out-of-order processors (OOPs), and related circuits, methods, and computer-readable media are disclosed. In one aspect, a reservation station circuit including multiple reservation station segments, each storing a loop instruction of a computer program loop is provided. Each reservation station segment also stores an instruction execution credit indicator indicative of whether the corresponding loop instruction may be provided for dataflow execution. The reservation station circuit further includes a dataflow monitor providing an entry for each loop instruction, each entry comprising a consumer count indicator and a reservation station (RS) tag count indicator. The dataflow monitor is configured to determine whether all consumer instructions of a loop instruction have executed based on the consumer count indicator and the RS tag count indicator for the loop instruction. If so, the dataflow monitor issues an instruction execution credit to the loop instruction.

Owner:QUALCOMM INC

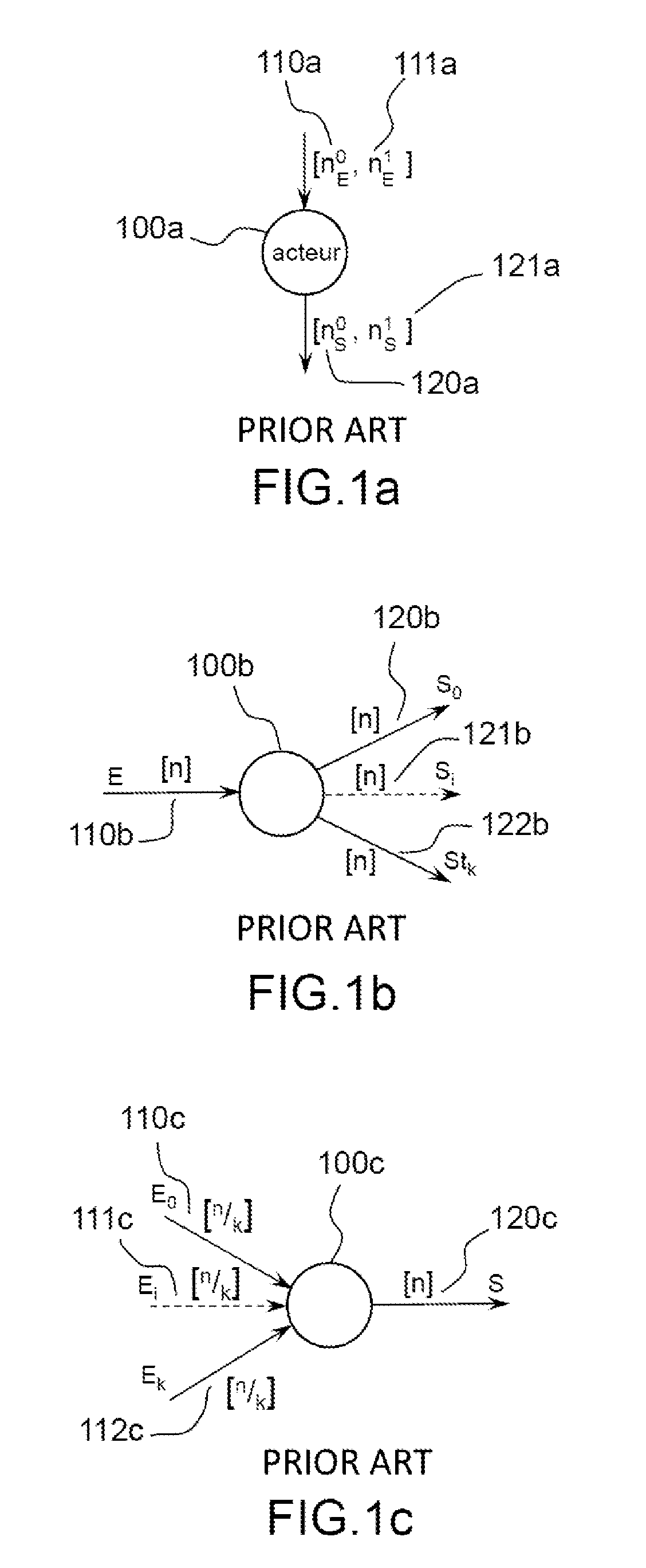

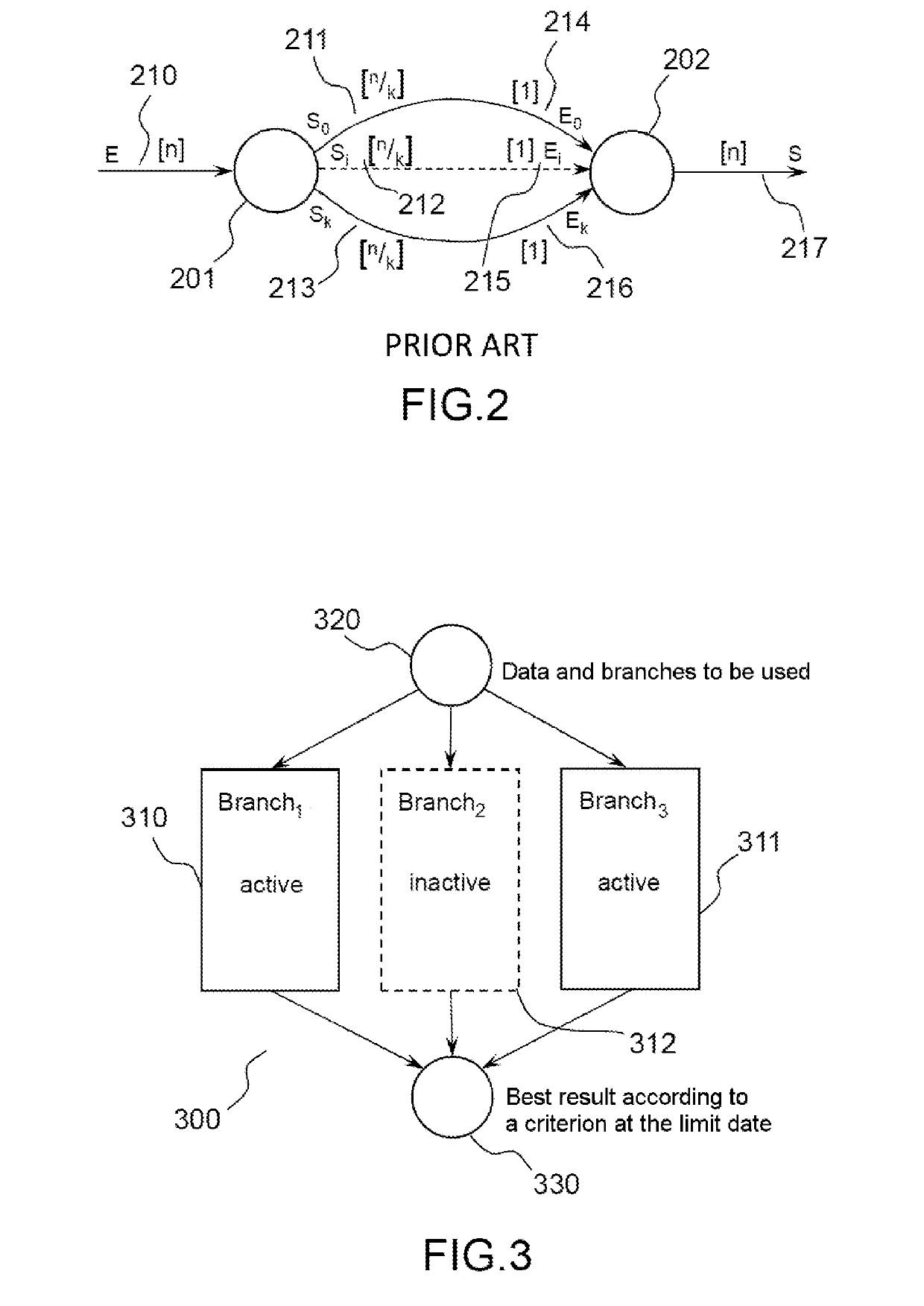

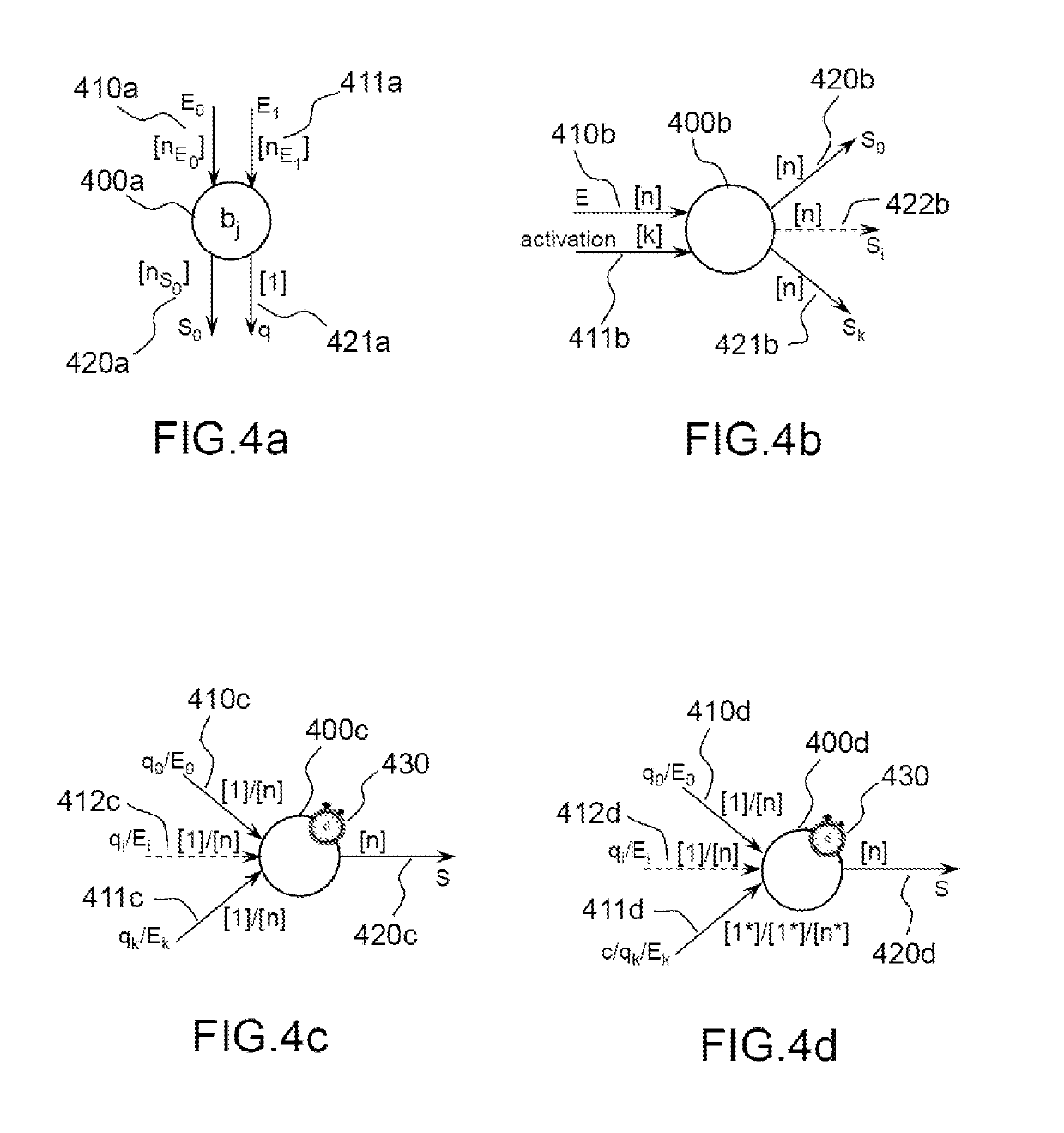

Speculative and iterative execution of delayed data flow graphs

Active | US10394729B2 | The result is valid | Good flexibility | Dataflow computers | Program initiation/switching | Data set | Computer science

A system for executing a data flow graph comprises: at least two first actors each comprising means for independently executing a computation of a same data set comprising at least one datum, and producing a quality descriptor of the data set, the execution of the computation by each of at least two first actors being triggered by a synchronization system; a third actor, comprising means for triggering the execution of the computation by each of at least two first actors, and initializing a clock configured to emit an interrupt signal when a duration has elapsed; a fourth actor, comprising means for executing, at the latest at the interrupt signal from the clock: the selection, from the set of at least two first actors having produced a quality descriptor, of the one whose descriptor exhibits the most favorable value; the transfer of the data set computed by the selected actor.

Owner:COMMISSARIAT A LENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES
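The selection step in this abstract can be sketched deterministically by giving each actor a simulated finish time instead of running real threads. The tuple layout `(finish_time, quality, data)`, the function name `select_result`, and the convention that a higher quality descriptor is more favourable are all assumptions.

```python
def select_result(actor_outputs, deadline):
    """Pick the data set of the best-quality actor that finished by the deadline.

    actor_outputs is a list of (finish_time, quality, data) tuples.
    Returns None when no actor finished in time.
    """
    finished = [out for out in actor_outputs if out[0] <= deadline]
    if not finished:
        return None
    # Assumption: a higher quality descriptor is the more favourable one.
    best = max(finished, key=lambda out: out[1])
    return best[2]


# Three actors computing the same data set at different speeds and qualities.
outputs = [(1.0, 0.60, "coarse"), (3.0, 0.90, "fine"), (10.0, 0.99, "exact")]
chosen_at_5 = select_result(outputs, deadline=5.0)
chosen_at_2 = select_result(outputs, deadline=2.0)
chosen_at_half = select_result(outputs, deadline=0.5)
```

Running several variants speculatively and committing at the clock interrupt trades extra computation for a bounded response time.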


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com