Content locality-based caching in a data storage system

A data storage system using content locality, applied in the field of data caching, addresses problems arising from the physical properties of SSDs, namely slow write and erase times and a significantly limited number of write/erase cycles, so as to achieve high-speed random reads, reduce costs, and increase capacity.


Examples

First embodiment

[0103] The inventive methods and systems described herein may be embedded inside a disk controller. Such an embodiment may include a disk controller board adapted to include a NAND-gate flash SSD or similar device, a GPU/CPU, and a DRAM buffer in addition to the existing disk control hardware and interfaces such as the host bus adapter (HBA). FIG. 8 depicts a block diagram of an HDD controller-embedded embodiment. A host system 802 may be connected to a disk controller 820 using a standard interface 812. Such an interface can be SCSI, SATA, SAS, PATA, iSCSI, FC, or the like. The flash memory 804 may be an SSD used to store reference blocks, compact delta blocks, hot independent blocks, and similar data. The intelligent processing unit 810 performs logical operations such as delta derivation, similarity detection, combining deltas with reference blocks, managing reference blocks, managing metadata, and other operations described herein or known for maximizing SSD-based cachi...
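As a rough illustration of the "delta derivation" and "combining delta with reference blocks" operations named above, the following minimal sketch encodes a block as the byte runs where it differs from a reference block, then rebuilds the block from the reference plus that delta. The run-based encoding, the 4096-byte block size, and the names `derive_delta` and `combine` are assumptions made for this example; the source does not specify how deltas are encoded.

```python
BLOCK_SIZE = 4096  # assumed block size; not stated in the source


def derive_delta(reference: bytes, target: bytes) -> list[tuple[int, bytes]]:
    """Return (offset, replacement_bytes) runs where target differs from reference."""
    if len(reference) != len(target):
        raise ValueError("blocks must be the same size")
    runs = []
    start = None  # offset where the current differing run began, if any
    for i, (r, t) in enumerate(zip(reference, target)):
        if r != t:
            if start is None:
                start = i
        elif start is not None:
            runs.append((start, target[start:i]))
            start = None
    if start is not None:  # a run that extends to the end of the block
        runs.append((start, target[start:]))
    return runs


def combine(reference: bytes, delta: list[tuple[int, bytes]]) -> bytes:
    """Rebuild the target block by patching the delta runs onto the reference."""
    block = bytearray(reference)
    for offset, data in delta:
        block[offset:offset + len(data)] = data
    return bytes(block)


if __name__ == "__main__":
    ref = bytes(BLOCK_SIZE)
    tgt = bytearray(ref)
    tgt[10:13] = b"abc"
    delta = derive_delta(ref, bytes(tgt))
    # Only the changed bytes need to be kept alongside the reference block.
    print(delta, combine(ref, delta) == bytes(tgt))
```

When two blocks are similar, the delta is far smaller than the block itself, which is what makes it practical to keep reference blocks on the SSD and buffer only compact deltas in RAM.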

Third embodiment

[0105] A third embodiment is implemented at the HBA level but includes no onboard flash memory. An external SSD drive such as a PCIe SSD, SAS SSD, SATA SSD, SCSI SSD, or other SSD drive may be used similarly to the SSD in the embodiment of FIG. 9. FIG. 10 depicts a block diagram describing this implementation. The HBA 1020 has an intelligent processing unit 1008 and a DRAM buffer 1004 in addition to the existing HBA control logic and interfaces. The host system 1002 may be connected to the system bus 1014, such as PCI, PCI-Express, PCI-X, HyperTransport, or InfiniBand. The bus interface 1010 allows the HBA card 1020 to be connected to the system bus 1014. The intelligent processing unit 1008 performs processing functions such as delta derivation, similarity detection, combining deltas with reference blocks, managing reference blocks, executing the cache algorithms described herein, managing metadata, and the like. The RAM cache 1004 temporarily stores deltas for active I/O operati...
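The "similarity detection" function listed above can be pictured with a small sketch that fingerprints each block by the checksums of its sub-blocks and picks the reference block sharing the most fingerprints. This is only one plausible approach under stated assumptions: the 512-byte sub-block size, the use of CRC32, and the names `signatures` and `most_similar` are all hypothetical and are not taken from the source.

```python
import zlib

SUB_BLOCK = 512  # assumed sub-block size; not stated in the source


def signatures(block: bytes) -> set[tuple[int, int]]:
    """Return a set of (offset, CRC32) pairs, one per sub-block."""
    return {
        (i, zlib.crc32(block[i:i + SUB_BLOCK]))
        for i in range(0, len(block), SUB_BLOCK)
    }


def most_similar(block: bytes, references: dict[int, bytes], min_shared: int = 1):
    """Return the id of the reference sharing the most sub-block signatures.

    Returns None when no reference shares at least `min_shared` signatures,
    in which case the block would be treated as independent.
    """
    sigs = signatures(block)
    best_id, best_score = None, 0
    for ref_id, ref in references.items():
        score = len(sigs & signatures(ref))
        if score > best_score:
            best_id, best_score = ref_id, score
    return best_id if best_score >= min_shared else None


if __name__ == "__main__":
    refs = {1: bytes(4096), 2: bytes([7]) * 4096}
    blk = bytearray(bytes([7]) * 4096)
    blk[0] = 0  # change one byte, so only the first sub-block differs
    print(most_similar(bytes(blk), refs))
```

A block matched this way could be stored as a delta against the chosen reference, while a block with no match would be kept whole.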

Fourth embodiment

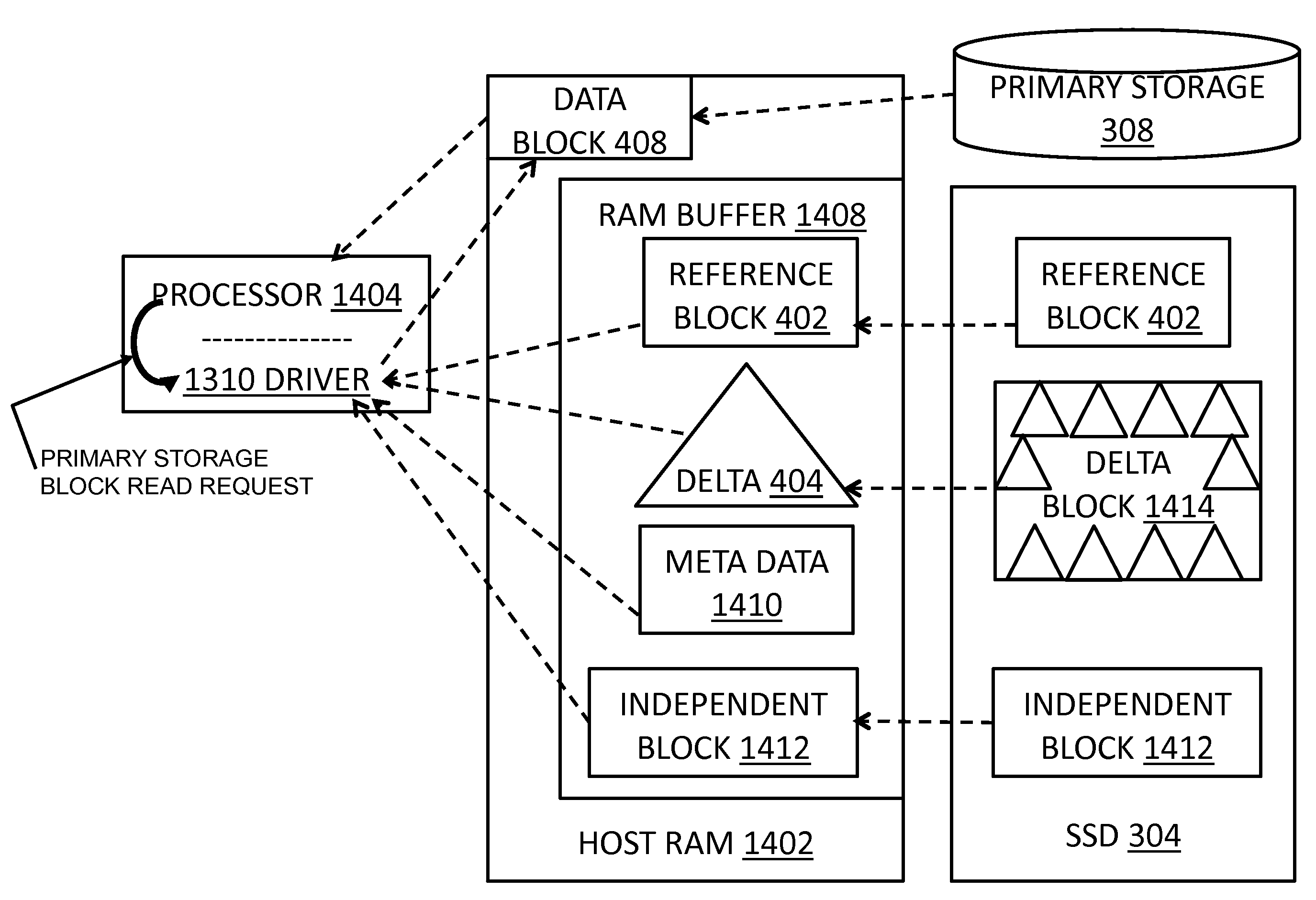

[0106] While the above implementations can provide great performance improvements, all of them require redesigning hardware such as a disk controller or an HBA card. A fourth implementation takes a software approach using commodity off-the-shelf hardware. A software application at the device driver level controls a separate SSD drive/card, a GPU/CPU embedded controller card, and an HDD connected to a system bus. FIG. 11 depicts a block diagram describing this software implementation. This implementation leverages standard off-the-shelf hardware such as an SSD drive 1114, an HDD 1118, and an embedded controller/GPU/CPU/MCU card 1120. All these standard hardware components may be connected to a standard system bus 1122, such as PCI, PCI-Express, PCI-X, HyperTransport, InfiniBand, and the like. The software for this fourth implementation may be divided into two parts: one running on a host computer system 1102 and another running on an embedded system 1120. One possible partition of softwar...
