296 results for patented technology related to the "Bag-of-words model"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

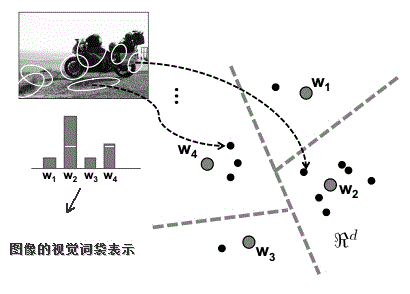

The bag-of-words model is a simplifying representation used in natural language processing and information retrieval (IR). In this model, a text (such as a sentence or a document) is represented as the bag (multiset) of its words, disregarding grammar and even word order but keeping multiplicity. The bag-of-words model has also been used for computer vision.
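As a minimal illustration of this definition, the sketch below represents a text as a multiset of lowercase tokens with Python's `collections.Counter` and projects it onto a fixed vocabulary. The whitespace tokenizer is a simplifying assumption; several of the patents below process Chinese text, which needs a proper word segmenter first.

```python
from collections import Counter

def bag_of_words(text: str) -> Counter:
    """Return the bag (multiset) of lowercase whitespace-separated tokens.

    Word order and grammar are discarded; multiplicity is kept.
    """
    return Counter(text.lower().split())

def to_vector(bag: Counter, vocabulary: list) -> list:
    """Project a bag onto a fixed vocabulary, giving a count vector."""
    return [bag[word] for word in vocabulary]  # missing words count as 0

a = bag_of_words("The dog bit the man")
b = bag_of_words("the man bit the dog")
vocab = sorted(set(a) | set(b))
print(a == b)               # True
print(vocab)                # ['bit', 'dog', 'man', 'the']
print(to_vector(a, vocab))  # [1, 1, 1, 2]
```

The two sentences mean different things yet receive identical bags, which is exactly the word-order information the model gives up.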

Mobile robot three-dimensional mapping and obstacle avoidance method based on space bag of words model

Active | CN105843223A | Reduced memory footprint | Improve accuracy | Position/course control in two dimensions | Laser ranging | Point cloud

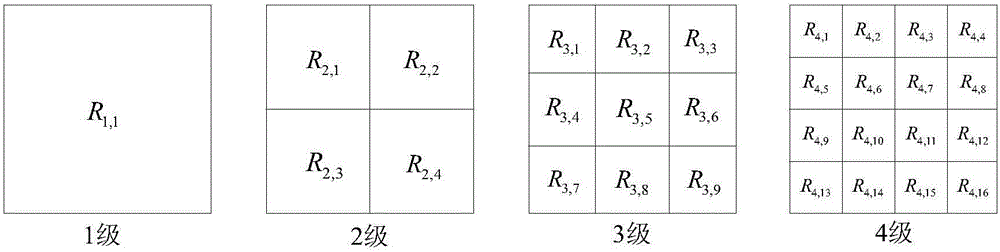

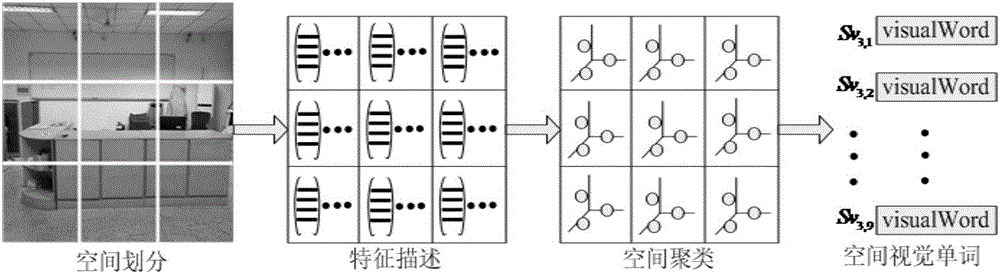

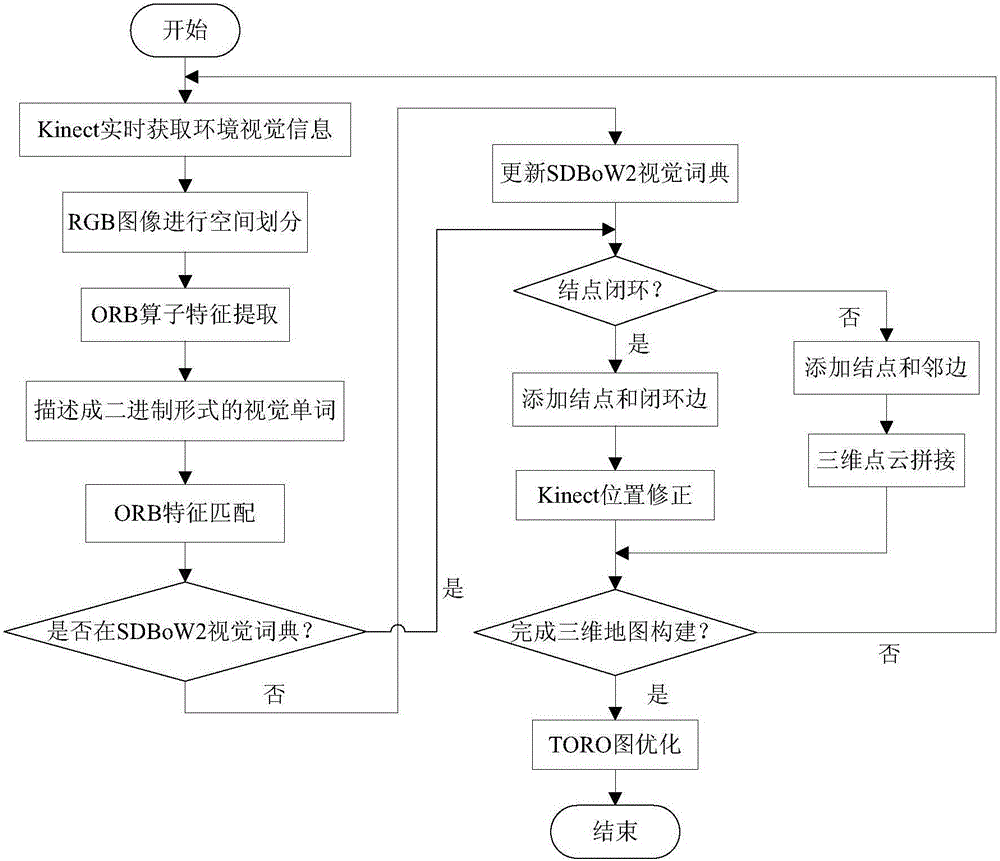

The invention discloses a mobile robot three-dimensional mapping and obstacle avoidance method based on a spatial bag-of-words model. The method comprises the following steps: 1) collecting Kinect sensor data and describing scene image features with a spatial bag-of-words model that fuses spatial relationships; 2) performing robot three-dimensional SLAM with the SDBoW2 model of the scene images to realize loop closure detection, three-dimensional point cloud registration and graph structure optimization, thereby creating a global dense three-dimensional point cloud map of the environment; 3) the robot using the created global three-dimensional map and the Kinect sensor information to perform real-time indoor obstacle avoidance and guidance. The method targets low-cost mobile robots without odometers or laser ranging sensors: reliable real-time three-dimensional mapping and obstacle avoidance can be achieved with Kinect sensors alone, and the method can be applied to long-term mobile robot service in large indoor environments such as homes and offices.

Owner:SOUTHEAST UNIV

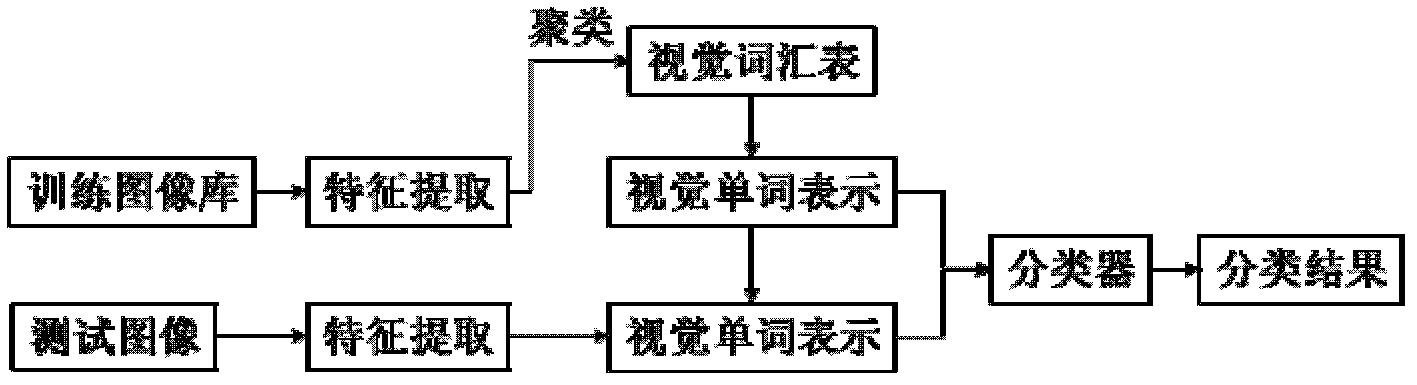

Remote sensing image classification method based on multi-feature fusion

Active | CN102622607A | Improve classification accuracy | Enhanced Feature Representation | Character and pattern recognition | Synthesis methods | Classification methods

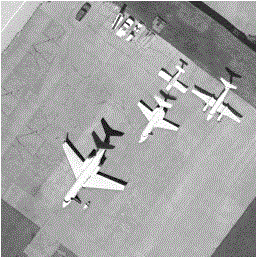

The invention discloses a remote sensing image classification method based on multi-feature fusion, which includes the following steps: A, extracting visual bag-of-words features, color histogram features and texture features of the training-set remote sensing images; B, training a support vector machine separately on each of the three feature types to obtain three different support vector machine classifiers; and C, extracting the visual bag-of-words, color histogram and texture features of unknown test samples, predicting their categories with the corresponding classifiers from step B to obtain three groups of category predictions, and combining the three groups by weighted fusion to obtain the final classification result. The method further adopts an improved bag-of-words model to extract the visual bag-of-words features. Compared with the prior art, it can obtain more accurate classification results.
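
Step C combines three per-feature classifiers by weighted fusion. The abstract does not specify the weighting scheme, so the sketch below assumes each classifier outputs one score per class and fuses them by a weighted sum followed by an argmax; the class names, scores and weights are hypothetical.

```python
def fuse_predictions(score_sets, weights):
    """Weighted sum of per-class scores from several classifiers.

    score_sets: list of dicts mapping class name -> score (one dict per classifier).
    weights: one non-negative weight per classifier (hypothetical values).
    Returns the class with the highest fused score (first one wins on a tie).
    """
    if len(score_sets) != len(weights):
        raise ValueError("need one weight per classifier")
    fused = {}
    for scores, w in zip(score_sets, weights):
        for label, s in scores.items():
            fused[label] = fused.get(label, 0.0) + w * s
    return max(fused, key=fused.get)

# Hypothetical outputs of the BoW, color-histogram and texture SVMs for one image.
bow_scores = {"forest": 0.6, "water": 0.3, "urban": 0.1}
color_scores = {"forest": 0.2, "water": 0.7, "urban": 0.1}
texture_scores = {"forest": 0.5, "water": 0.2, "urban": 0.3}
print(fuse_predictions([bow_scores, color_scores, texture_scores], [0.5, 0.2, 0.3]))  # forest
```

Here the color classifier alone would say "water", but the higher weight on the bag-of-words classifier tips the fused decision to "forest" (0.49 against 0.35).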

Owner:HOHAI UNIV

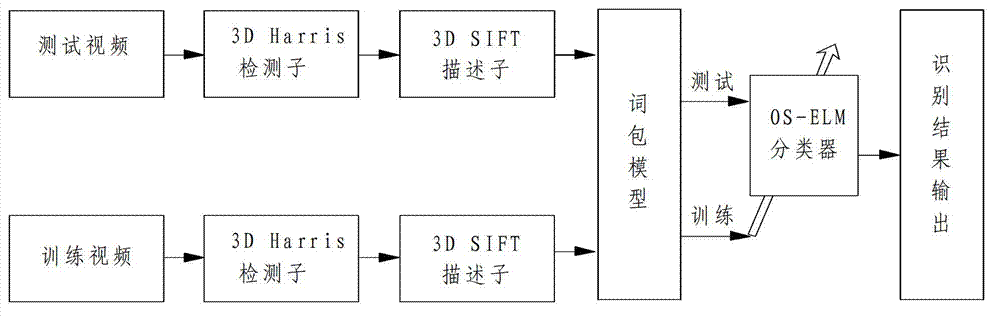

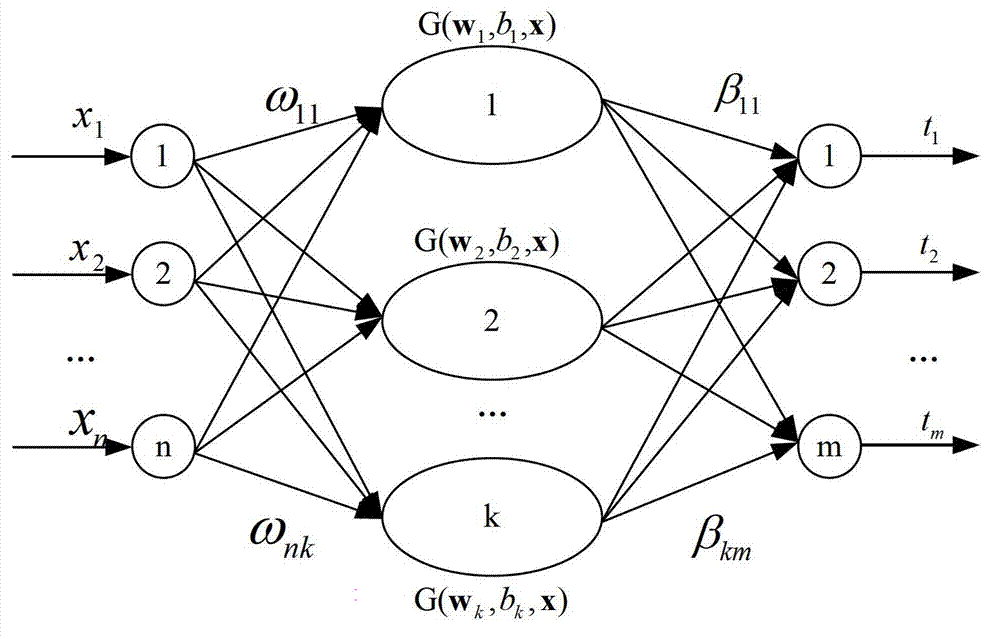

On-line sequential extreme learning machine-based incremental human behavior recognition method

Inactive | CN102930302A | Improve recognition accuracy | Adaptable | Character and pattern recognition | Human body | Learning machine

The invention discloses an incremental human behavior recognition method based on an online sequential extreme learning machine. In this method, the human body is captured by a video camera covering each person's range of activity. The method comprises the following steps: (1) extracting spatio-temporal interest points in a video with a three-dimensional (3D) Harris corner detector; (2) computing descriptors of the detected spatio-temporal interest points with a 3D SIFT descriptor; (3) generating a video dictionary with the K-means clustering algorithm and building a bag-of-words model of the video images; (4) training an online sequential extreme learning machine classifier on the resulting bag-of-words model; and (5) performing human behavior recognition with this classifier while continuing to learn online. The method can obtain accurate recognition results within a short training time from few training samples, and is, to a certain extent, insensitive to changes in scene, lighting, detected object and human body shape.
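
Steps (3) and (4) follow the usual visual bag-of-words recipe: cluster local descriptors into a codebook with K-means, then describe each video by a histogram of nearest codewords. The pure-Python sketch below uses toy 2-D points in place of 3D SIFT descriptors and plain Lloyd iterations seeded with the first k points; both are simplifying assumptions, not the patent's settings.

```python
import math

def nearest(point, centers):
    """Index of the center closest to point (Euclidean distance)."""
    return min(range(len(centers)), key=lambda i: math.dist(point, centers[i]))

def kmeans(points, k, iterations=20):
    """Plain Lloyd's K-means; the initial centers are the first k points."""
    centers = [list(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centers)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old center if a cluster becomes empty
                centers[i] = [sum(c) / len(members) for c in zip(*members)]
    return centers

def bow_histogram(descriptors, codebook):
    """Count how many descriptors fall on each codeword (visual word)."""
    hist = [0] * len(codebook)
    for d in descriptors:
        hist[nearest(d, codebook)] += 1
    return hist

training = [(0.0, 0.1), (5.0, 5.2), (0.2, 0.0), (5.1, 4.9), (0.1, 0.2), (4.8, 5.0)]
codebook = kmeans(training, k=2)
video = [(0.1, 0.1), (0.0, 0.3), (5.0, 5.0)]
print(bow_histogram(video, codebook))  # [2, 1]
```

The resulting fixed-length histogram is what a classifier such as the online sequential extreme learning machine in step (4) would consume, regardless of how many interest points each video produced.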

Owner:SHANDONG UNIV

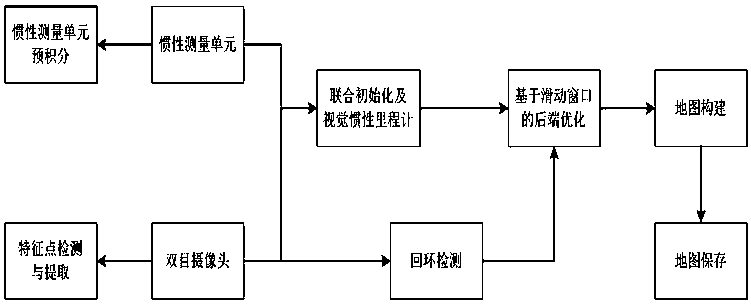

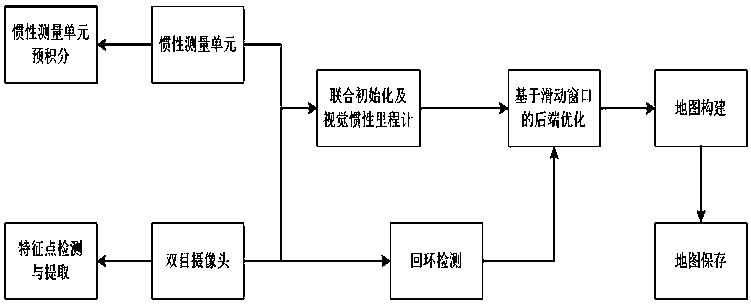

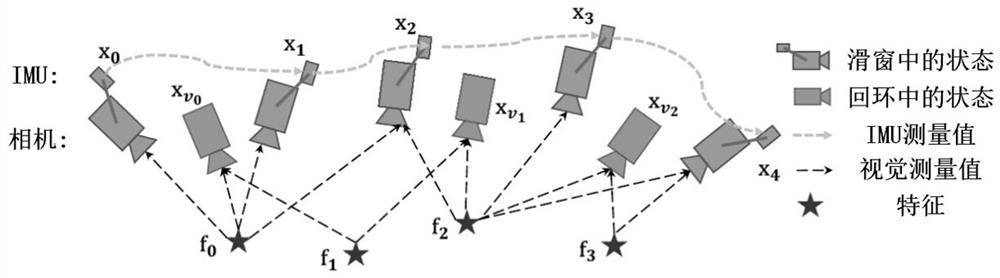

Binocular vision indoor positioning and mapping method and device

Active | CN110044354A | Pose optimization | High precision | Internal combustion piston engines | Navigational calculation instruments | Slide window | Angular velocity

The invention discloses a binocular vision indoor positioning and mapping method and device. The method comprises the following steps: collecting left and right images in real time and calculating the initial pose of the camera; collecting angular velocity and acceleration information in real time and pre-integrating it to obtain the state of an inertial measurement unit (IMU); constructing a sliding window containing several image frames, and nonlinearly optimizing the initial camera pose with the visual error terms between image frames and the IMU measurement error terms as constraints, to obtain the optimized camera pose and IMU measurements; constructing bag-of-words models for loop detection and correcting the optimized camera pose; and extracting features from the left and right images and converting them into words to match against the word bags of an offline map: if the match succeeds, the optimized camera pose is solved by optimization; if it fails, the left and right images are collected again and the word bags are matched again. The method and device can perform positioning and mapping in an unknown environment as well as positioning within an already mapped scene, with good precision and robustness.
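
Loop detection with word bags typically compares the bag-of-words vector of the current frame with those of earlier keyframes. The sketch below uses the L1-based score popularized by DBoW2-style systems, 1 - 0.5 × (L1 distance between the two L1-normalized vectors), on sparse word-weight dictionaries. The abstract does not state which score the patent uses; the threshold, word IDs and weights here are hypothetical.

```python
def l1_normalize(vec):
    """Scale a sparse {word_id: weight} vector to unit L1 norm."""
    total = sum(abs(w) for w in vec.values())
    return {word: w / total for word, w in vec.items()} if total else {}

def bow_score(v1, v2):
    """1 - 0.5 * L1 distance of the normalized vectors; 1 = identical, 0 = disjoint."""
    a, b = l1_normalize(v1), l1_normalize(v2)
    words = set(a) | set(b)
    return 1.0 - 0.5 * sum(abs(a.get(w, 0.0) - b.get(w, 0.0)) for w in words)

def detect_loop(current, keyframes, threshold=0.6):
    """Return (index, score) of the best keyframe, or None if it is below threshold."""
    best = max(((i, bow_score(current, kf)) for i, kf in enumerate(keyframes)),
               key=lambda pair: pair[1], default=None)
    return best if best is not None and best[1] >= threshold else None

keyframes = [{3: 2.0, 7: 1.0}, {1: 1.0, 4: 1.0, 9: 2.0}]
current = {1: 1.0, 4: 1.0, 9: 1.5}
match = detect_loop(current, keyframes)
print(match[0])  # 1
```

A real system would also check geometric consistency before accepting the candidate; that verification step is omitted here.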

Owner:SOUTHEAST UNIV

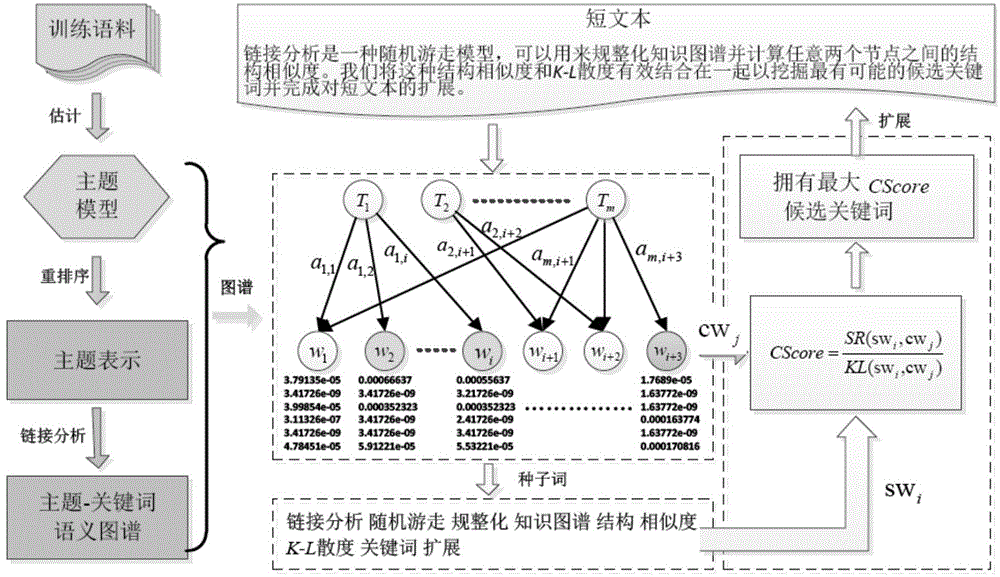

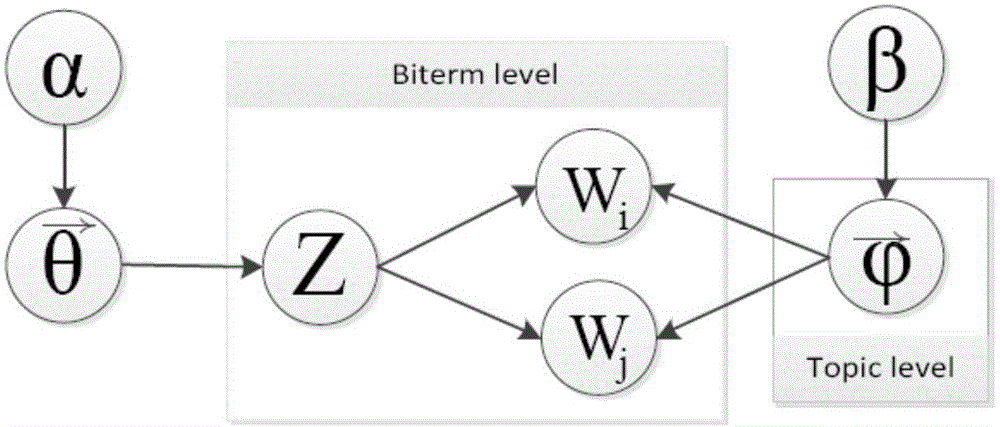

Short text characteristic expanding method based on semantic atlas

Active | CN104391942A | Improve classification performance | Solve the sparsity problem | Semantic analysis | Special data processing applications | Graph spectra | Data set

The invention discloses a short text feature expansion method based on a semantic atlas. The method includes the steps of: performing topic modeling on a training data set of short texts and extracting the topic-word distributions; reordering the topic-word distributions; building a candidate keyword dictionary and a topic-keyword semantic atlas; computing a comprehensive similarity score between candidate keywords and seed keywords with a link analysis method; and selecting the most similar candidate keywords to expand the short text. Compared with short text feature representation methods based on language models, the method is simple to operate and efficient to execute, and it makes full use of the semantic correlation between keywords. Compared with traditional short text feature representation methods based on the bag-of-words model, it effectively alleviates data sparsity and semantic sensitivity, and it does not depend on large external auxiliary training corpora or a search engine.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

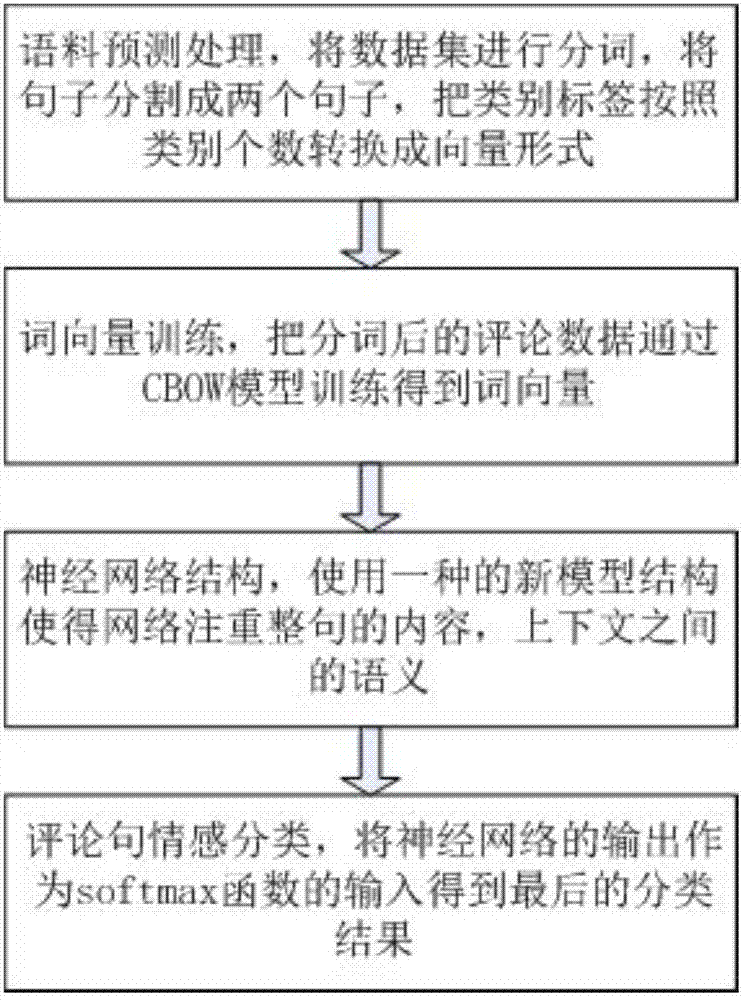

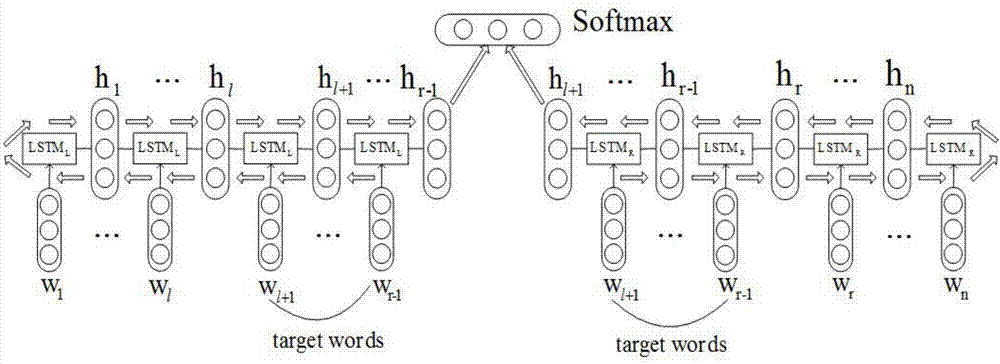

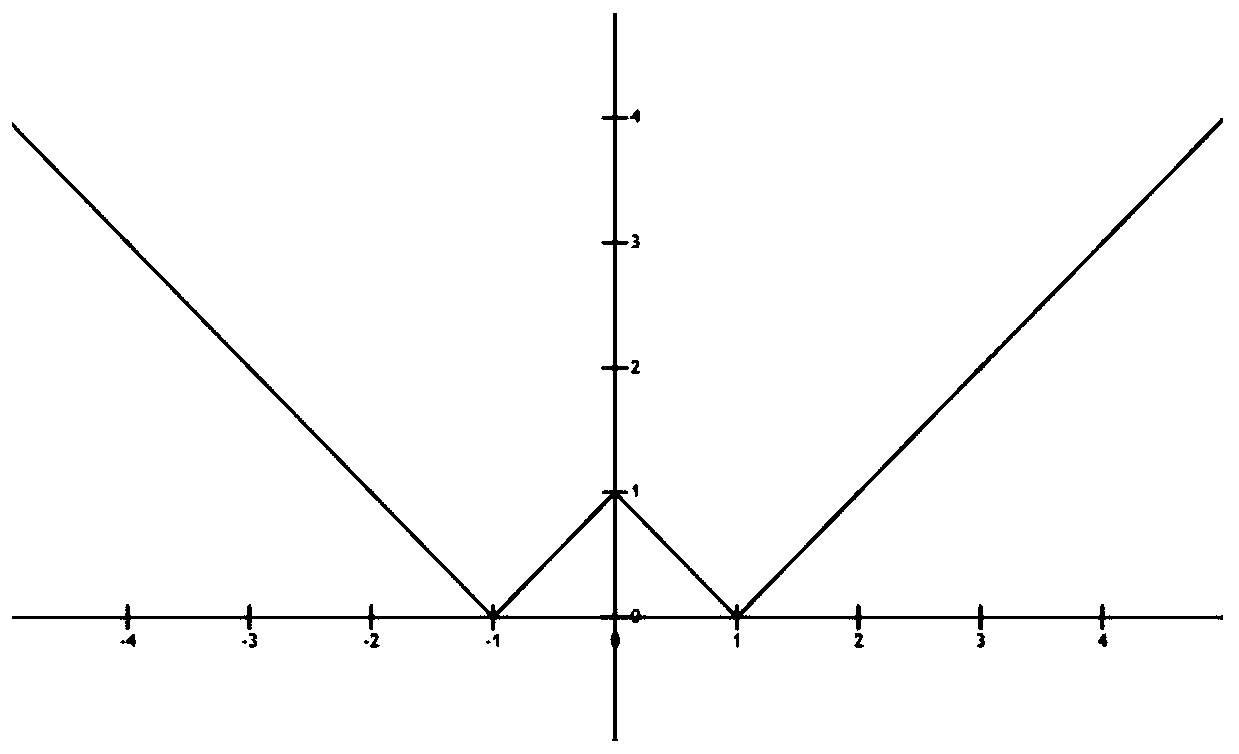

Commodity target word oriented emotional tendency analysis method

Inactive | CN107544957A | Semantic description is accurate | Accurate prediction | Special data processing applications | Data set | Bag-of-words model

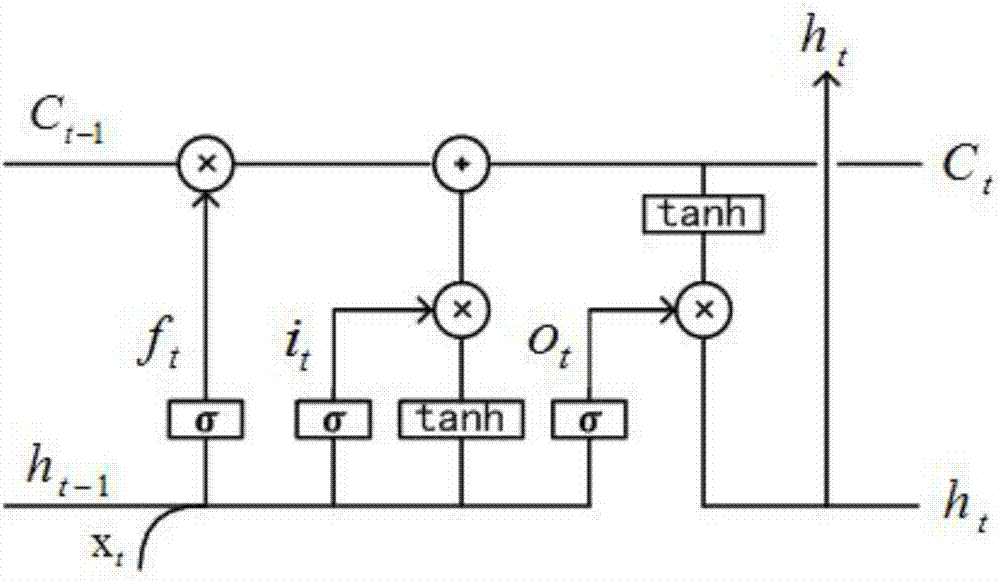

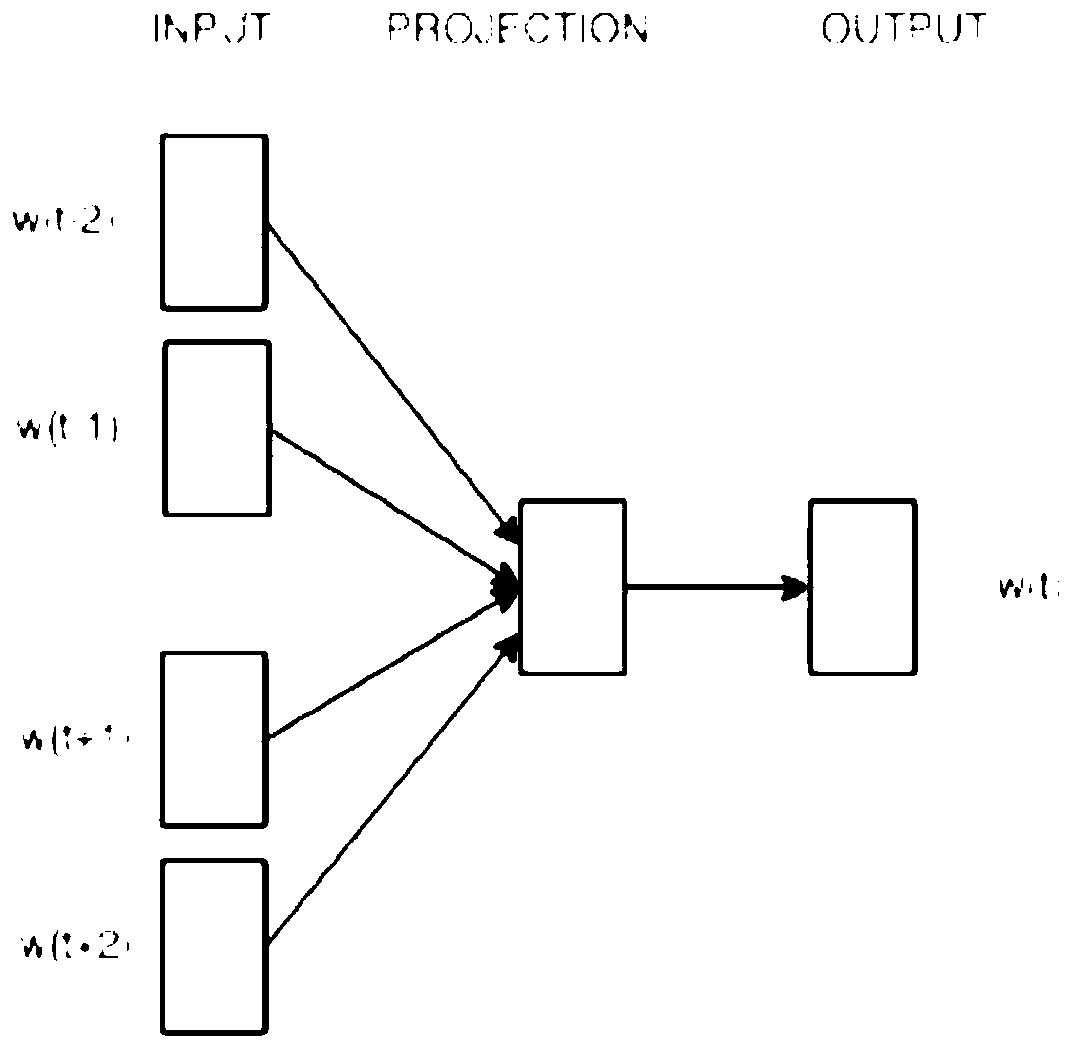

The invention discloses a commodity-target-word-oriented emotional tendency analysis method in the field of analyzing and processing online shopping product reviews. The method comprises four steps: 1, corpus preprocessing: performing word segmentation on the dataset and converting each category label into vector form according to the number of categories; 2, word vector training: training word vectors on the segmented review data with CBOW (Continuous Bag-of-Words Model); 3, adopting an LSTM (Long Short-Term Memory) network structure so that the network attends to the content of the whole sentence; and 4, review sentence emotion classification: feeding the output of the neural network into a Softmax function to obtain the final result. With this method, the semantic description in the semantic space is more accurate; training optimizes the network's weights and biases, the parameters obtained after repeated iterations minimize the loss value, and these trained parameters are then applied to the test set, so that higher accuracy can be obtained.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING)

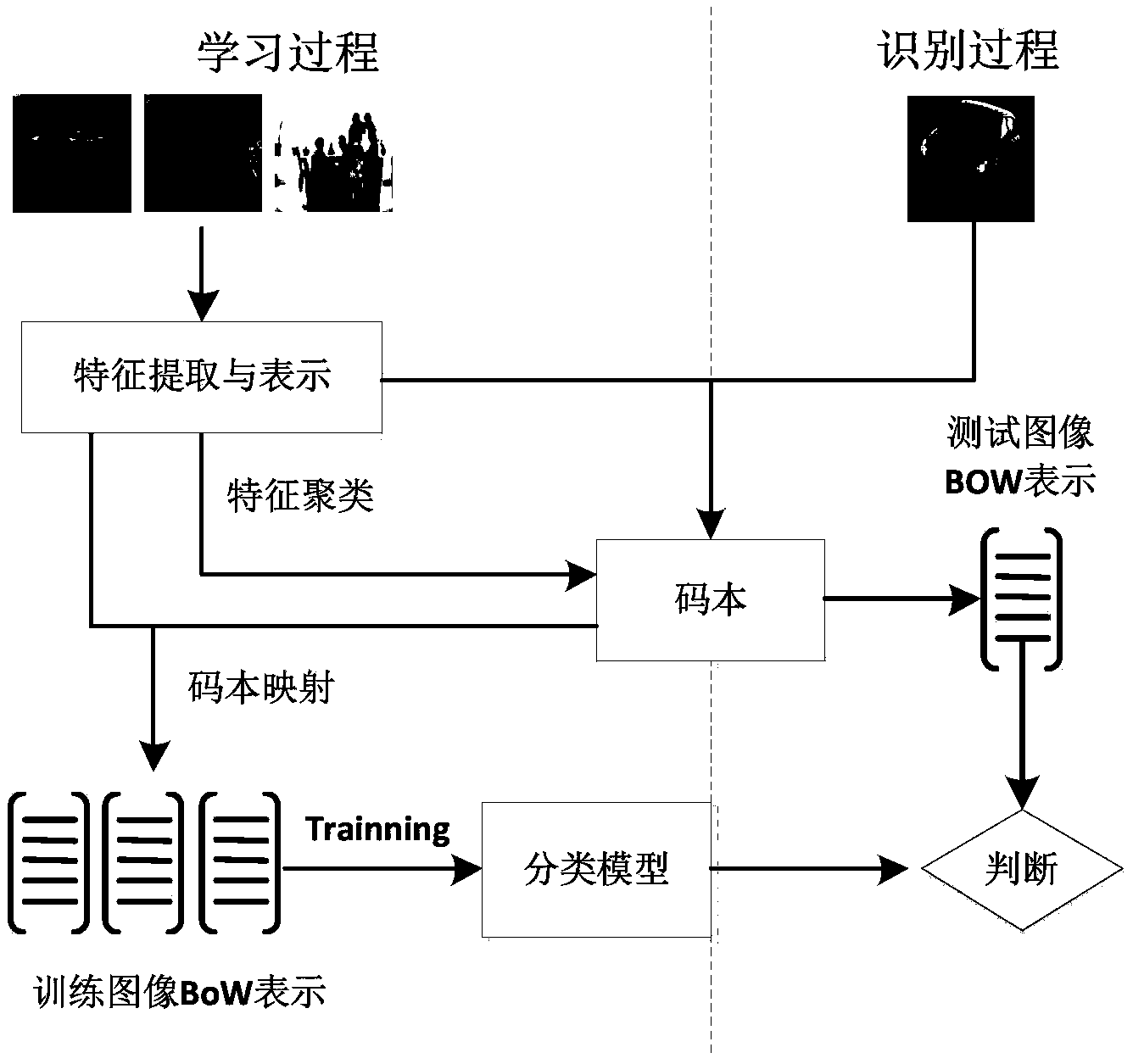

Image object recognition method based on SURF

Active | CN105389593A | Realize the recognition function | Objectively and accurately reflect | Character and pattern recognition | Bag-of-words model | Classification methods

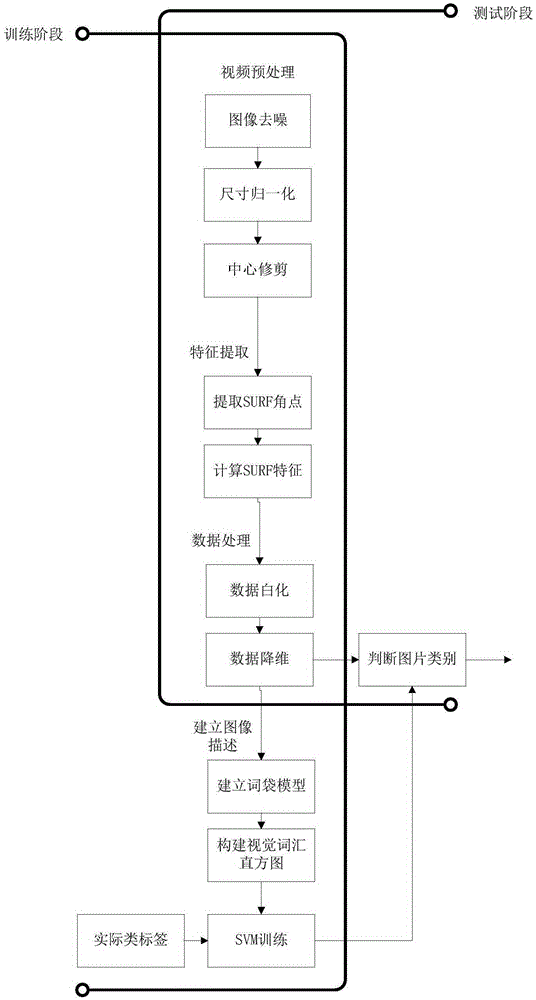

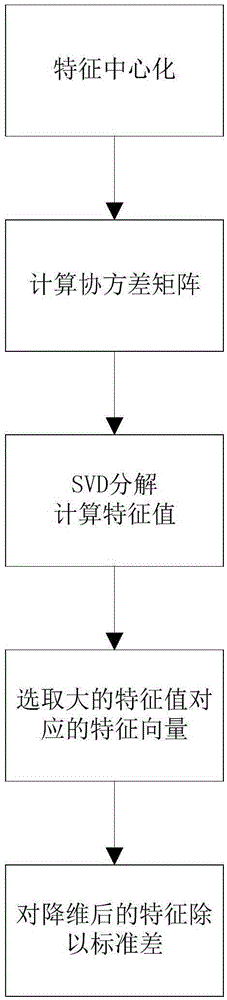

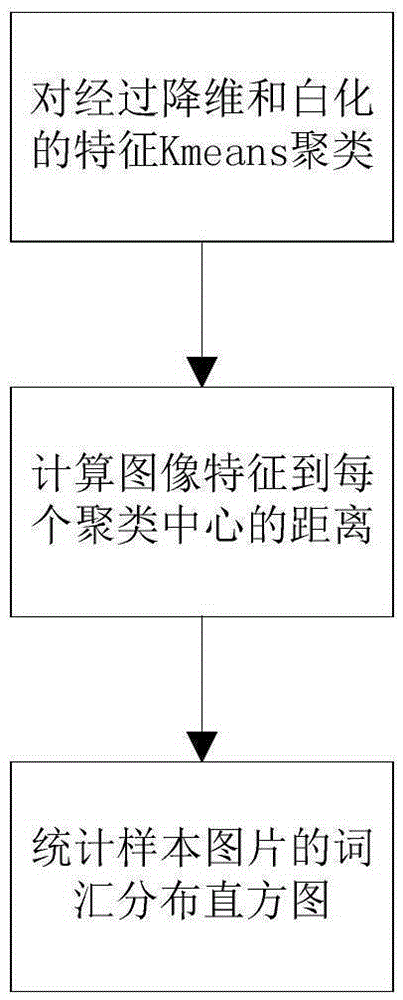

The invention provides an image object recognition method based on SURF (Speeded-Up Robust Features), comprising the following steps: first, preprocessing the images; second, extracting SURF interest points and SURF descriptors to describe the image features; third, applying PCA whitening and dimensionality reduction to the features; then building a bag-of-visual-words model by K-means clustering on the processed features and using it to construct a visual-word histogram for each image; and finally, training a nonlinear support vector machine (SVM) classifier and classifying the images into categories. After the classification models for the different image categories are built in the training phase, the test-set images are classified in the testing phase, so that different image objects can be recognized. The method performs well in both recognition rate and speed and reflects image content more objectively and accurately. In addition, the classification result of the SVM classifier is optimized, reducing the classifier's misjudgment rate and the limitation imposed by the categories of the training samples.

Owner:SHANGHAI JIAO TONG UNIV +1

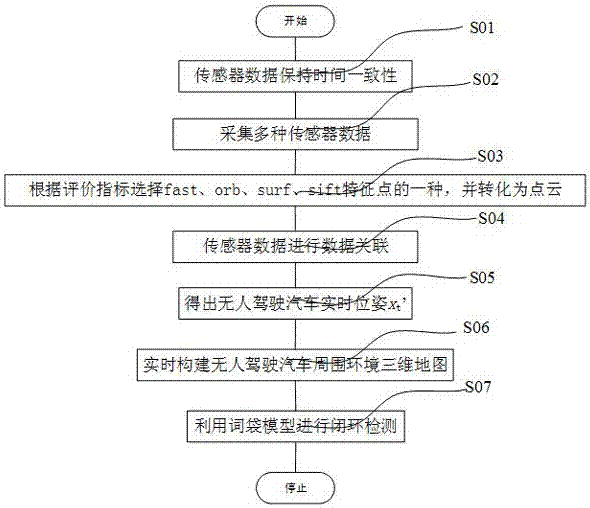

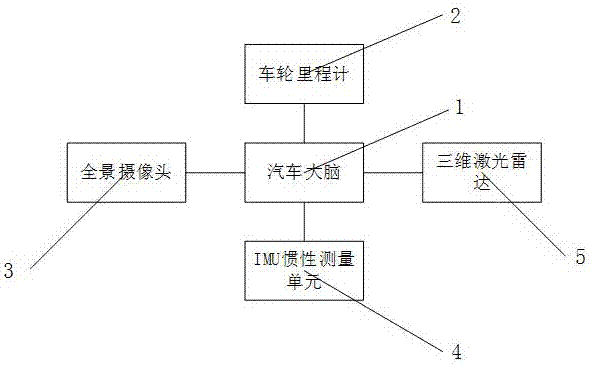

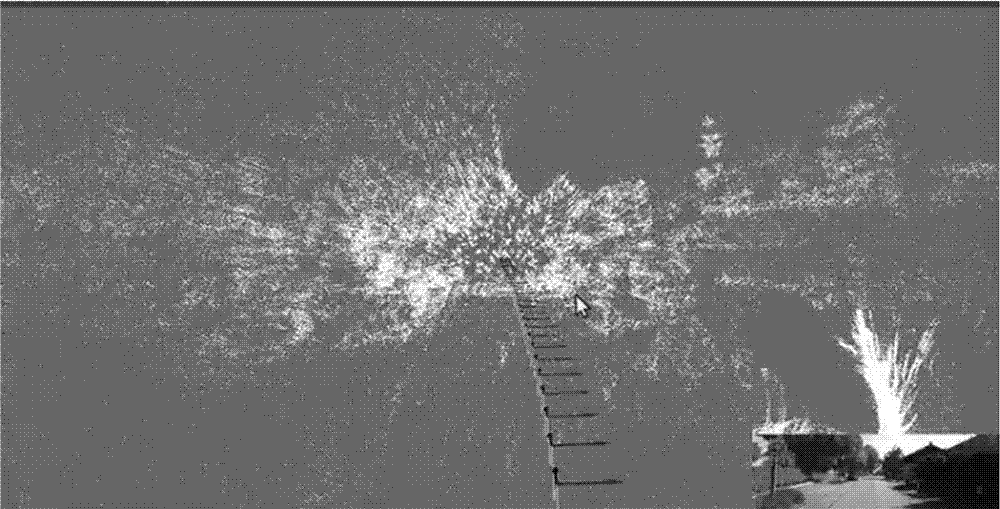

Method and system for achieving autonomous positioning and map building of pilotless automobile

Inactive | CN107246876A | Build stable and effective | Instruments for road network navigation | Radar | Closed loop

The invention discloses a method and system for autonomous positioning and map building of a pilotless automobile. SLAM technology is used to fuse data from multiple sensors, and a stable and effective framework is built with a novel algorithm structure: the three-dimensional lidar data are optimized with a particle filter and transformed into a visual model, and a bag-of-words model is used for loop closure detection, so that stable and effective autonomous positioning and map building are achieved for the pilotless automobile. The method improves computational efficiency and running speed, and the method and system can be used in pilotless automobile systems on a large scale.

Owner:Zhongbei Lithium Energy (Huaibei) Technology Co., Ltd. (中北锂能(淮北)科技有限责任公司)

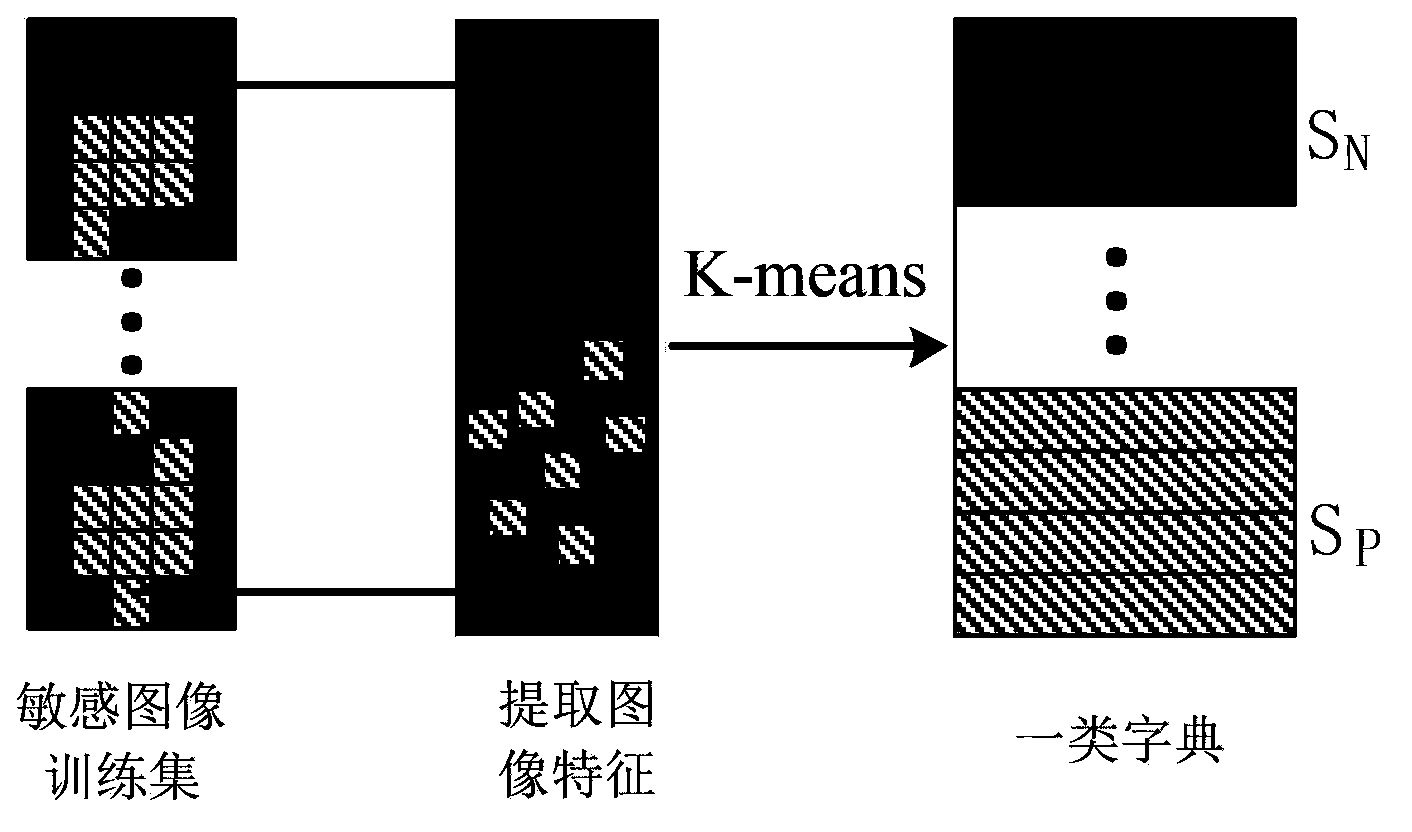

A sensitive image identification method and a system

The invention discloses a sensitive-image identification method and system in the technical field of image identification, characterized by the following steps: step 1, grid-based feature extraction fused with skin color detection, obtaining the original bag-of-words representation vectors of the images through a bag-of-words model; step 2, image feature optimization, obtaining dimension-reduced, optimized image vector representations with a random forest; step 3, identification model training, i.e., training a one-class classifier in the optimized vector space with a one-class support vector machine; and step 4, image identification: if an image contains no skin color pixels at all during the preprocessing of step 1, it is directly judged to be a normal image; otherwise, its optimized feature representation is computed and fed into the trained one-class classification model to obtain the identification result. The invention uses a one-class classification algorithm to solve the sensitive-image identification problem, fuses several techniques in the processing, and performs feature optimization, thereby improving the accuracy and efficiency of sensitive-image identification.

Owner:Nanjing Duomu Intelligent Technology Co., Ltd. (南京多目智能科技有限公司)

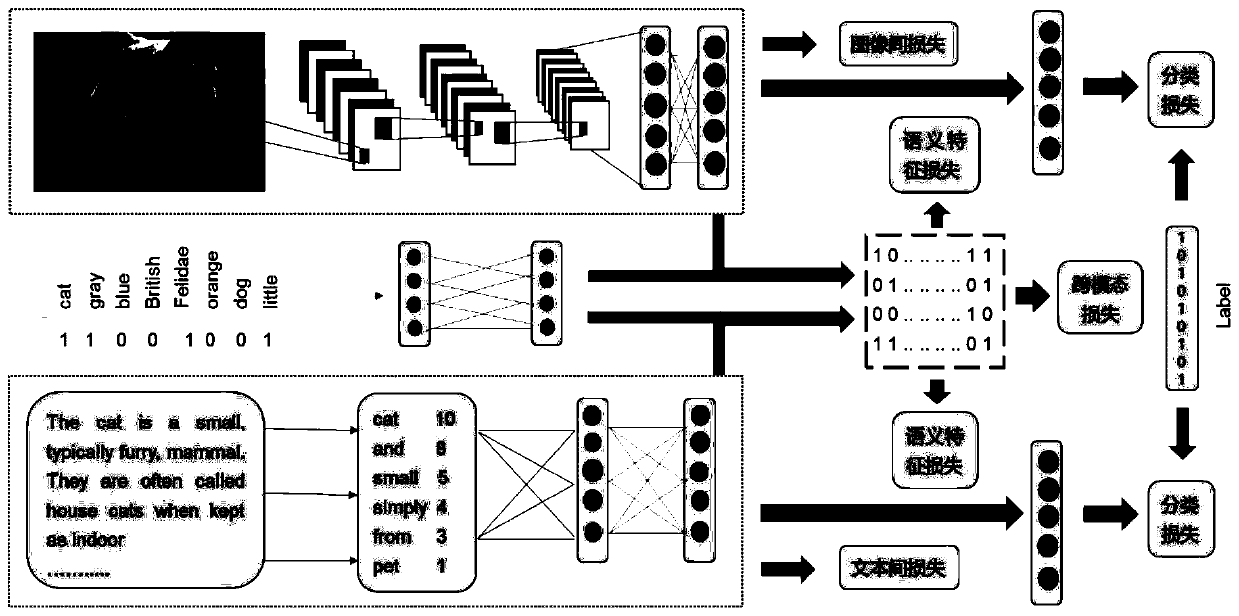

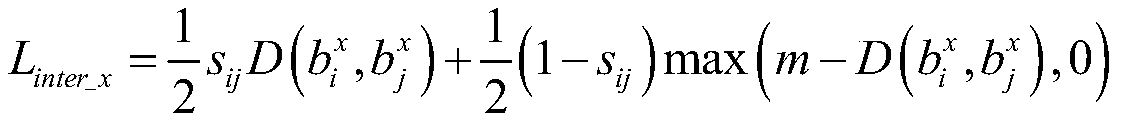

Cross-modal deep hash retrieval method based on self-supervision

Active | CN110309331A | Improve retrieval performance | Improve playback | Character and pattern recognition | Still image data indexing | Modal data | Feature extraction

The invention relates to a self-supervised cross-modal joint hash retrieval method. The method comprises the following steps: step 1, processing image modal data: extracting features from the image data with a deep convolutional neural network, performing hash learning on the image data, and setting the number of nodes of the network's last fully connected layer to the hash code length; step 2, processing text modal data: modeling the text data with a bag-of-words model and building a two-layer fully connected neural network to extract text features, where the input of the network is the bag-of-words vector of the text and the number of nodes of the first fully connected layer is the same as that of the second fully connected layer and the hash code length; step 3, processing category labels with a neural network that extracts semantic features from the label data by self-supervised training; and step 4, minimizing the distance between the features extracted by the image and text networks and the semantic features of the label network, so that the hash models of the image and text networks learn the semantic features shared across modalities more fully.
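
In deep cross-modal hashing, the real-valued outputs of the last layer (one node per hash bit, as in step 1) are commonly binarized with the sign function, and retrieval ranks database items by Hamming distance. A minimal sketch, assuming sign binarization; the network outputs below are made-up numbers standing in for the image and text networks.

```python
def to_hash(outputs):
    """Binarize real-valued network outputs into a +1/-1 hash code via the sign function."""
    return tuple(1 if x >= 0 else -1 for x in outputs)

def hamming(code_a, code_b):
    """Number of bit positions where two equal-length codes differ."""
    if len(code_a) != len(code_b):
        raise ValueError("hash codes must have equal length")
    return sum(a != b for a, b in zip(code_a, code_b))

def retrieve(query_code, database, top_k=2):
    """Return the keys of the top_k database items closest in Hamming distance."""
    ranked = sorted(database, key=lambda key: hamming(query_code, database[key]))
    return ranked[:top_k]

# Hypothetical 4-bit outputs: a text query searched against image codes.
text_query = to_hash([0.8, -0.3, 0.5, -0.9])
images = {
    "img_cat": to_hash([0.7, -0.1, 0.2, -0.4]),
    "img_car": to_hash([-0.6, 0.4, -0.2, 0.3]),
    "img_dog": to_hash([0.5, -0.2, -0.3, -0.8]),
}
print(retrieve(text_query, images))  # ['img_cat', 'img_dog']
```

Because both modalities are mapped into the same binary space, a text code can be compared directly with image codes, and Hamming distance reduces to cheap bit comparisons.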

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

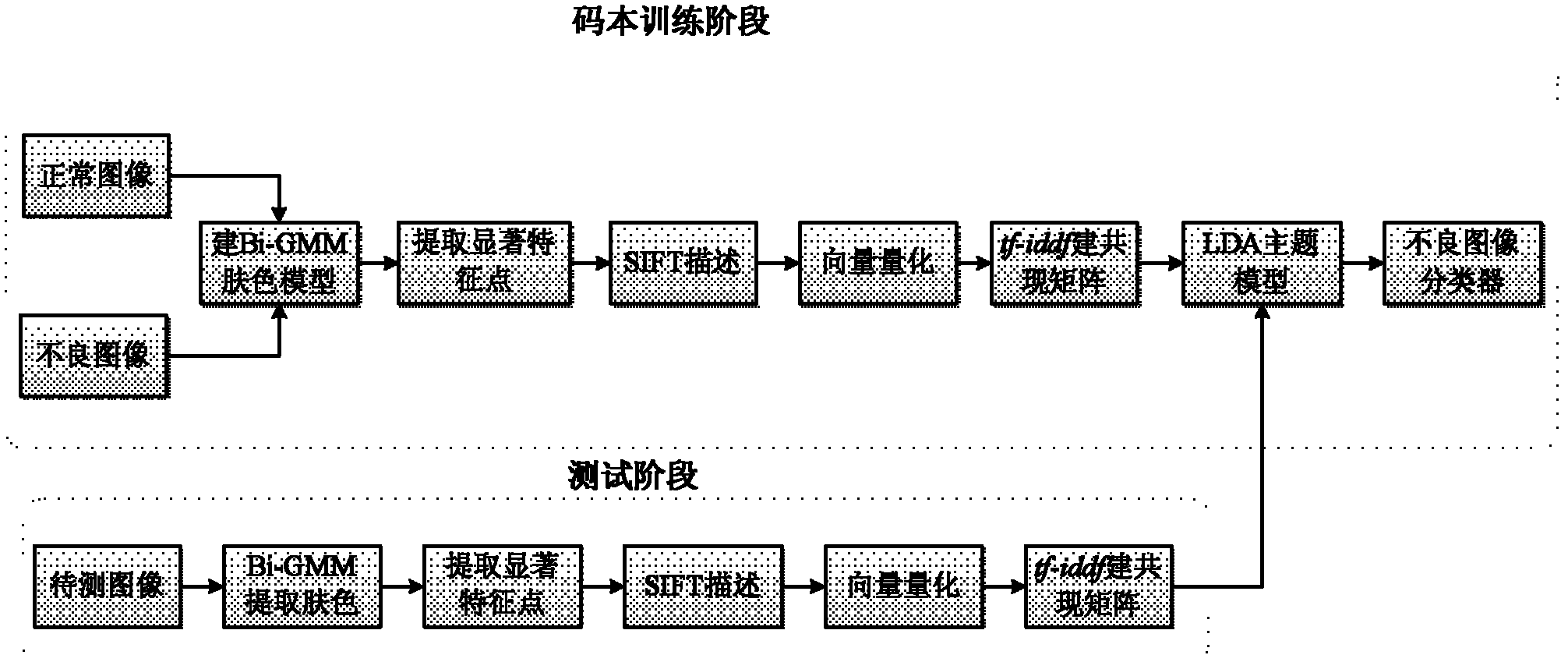

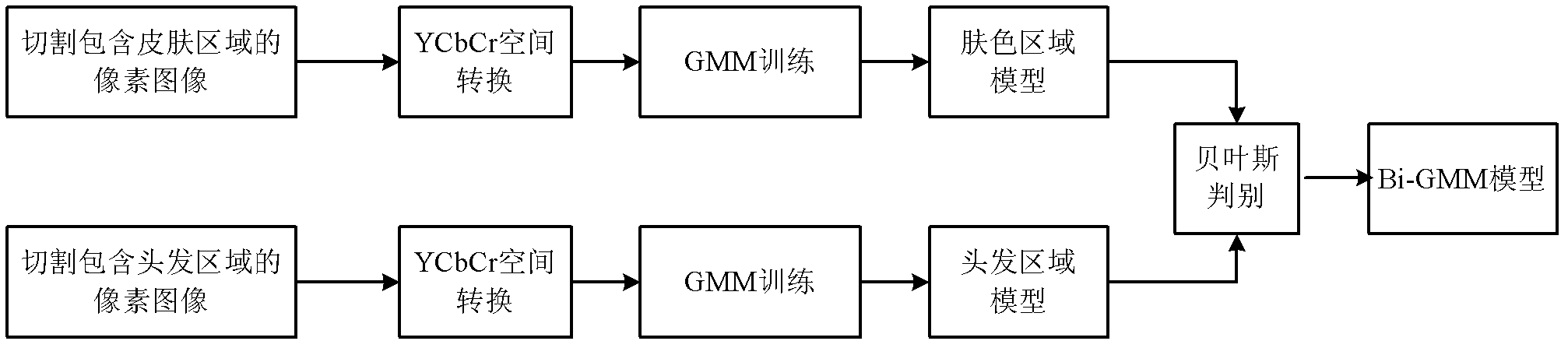

Undesirable image detecting method based on connotative theme analysis

Inactive | CN102360435A | Improve accuracy | Robust skin tone detection | Character and pattern recognition | Neural learning methods | Subject analysis | Co-occurrence

The invention discloses an undesirable image detection method based on connotative (latent) theme analysis, mainly intended to solve the problem that existing undesirable-content detection methods misjudge normal images because they do not take semantic information into account. The scheme is as follows: extracting the skin region of an image with a double Gaussian mixture model; generating a codebook of discriminative features in the skin region with a bag-of-words model, and representing each training image as a weighted word co-occurrence vector with the term frequency-inverse document frequency (TF-IDF) method; assembling all co-occurrence vectors into a co-occurrence matrix and building an LDA model on it to obtain the themes of the images; feeding the mixed themes of the training images into a BP neural network to train an undesirable image classifier; and obtaining the themes of an image under test, inputting them into the undesirable image classifier, and judging whether the image is undesirable, which completes the detection. Tests show that the invention distinguishes undesirable images from normal images better, so it can be used to filter pornographic content in images.
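
The weighting step turns raw visual-word counts into TF-IDF weights. The sketch below uses one common variant, tf(w, d) × log(N / df(w)) with raw counts as tf; the patent does not say which variant it uses, and the visual-word IDs are hypothetical.

```python
import math
from collections import Counter

def tfidf(documents):
    """Weight each document's word counts by tf * log(N / df).

    documents: list of lists of word IDs (e.g. quantized skin-region descriptors).
    Returns one {word: weight} dict per document.
    """
    n_docs = len(documents)
    counts = [Counter(doc) for doc in documents]
    doc_freq = Counter(word for c in counts for word in c)  # each word counted once per doc
    return [
        {word: tf * math.log(n_docs / doc_freq[word]) for word, tf in c.items()}
        for c in counts
    ]

images = [["w1", "w1", "w2"], ["w2", "w3"], ["w2", "w4", "w4"]]
for weights in tfidf(images):
    print(weights)
```

A word such as `w2` that occurs in every image receives weight 0, which is the intended effect: it carries no discriminative information, while rarer words such as `w1` are emphasized.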

Owner:XIDIAN UNIV

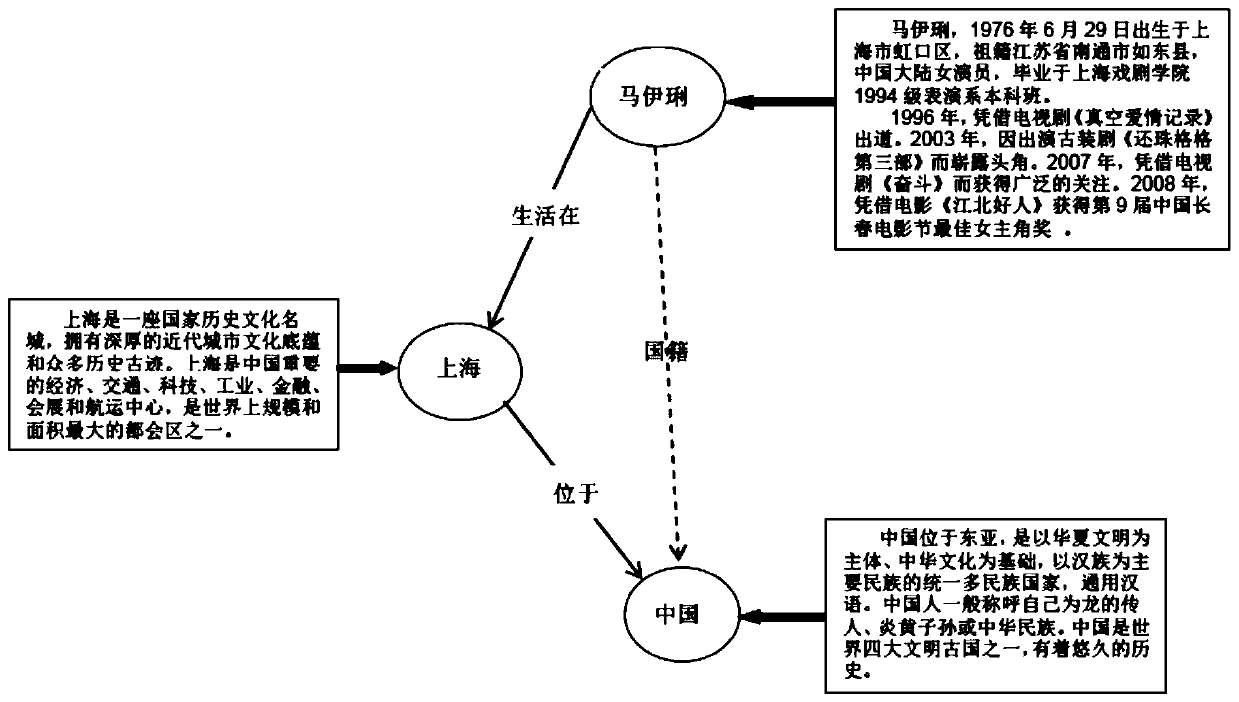

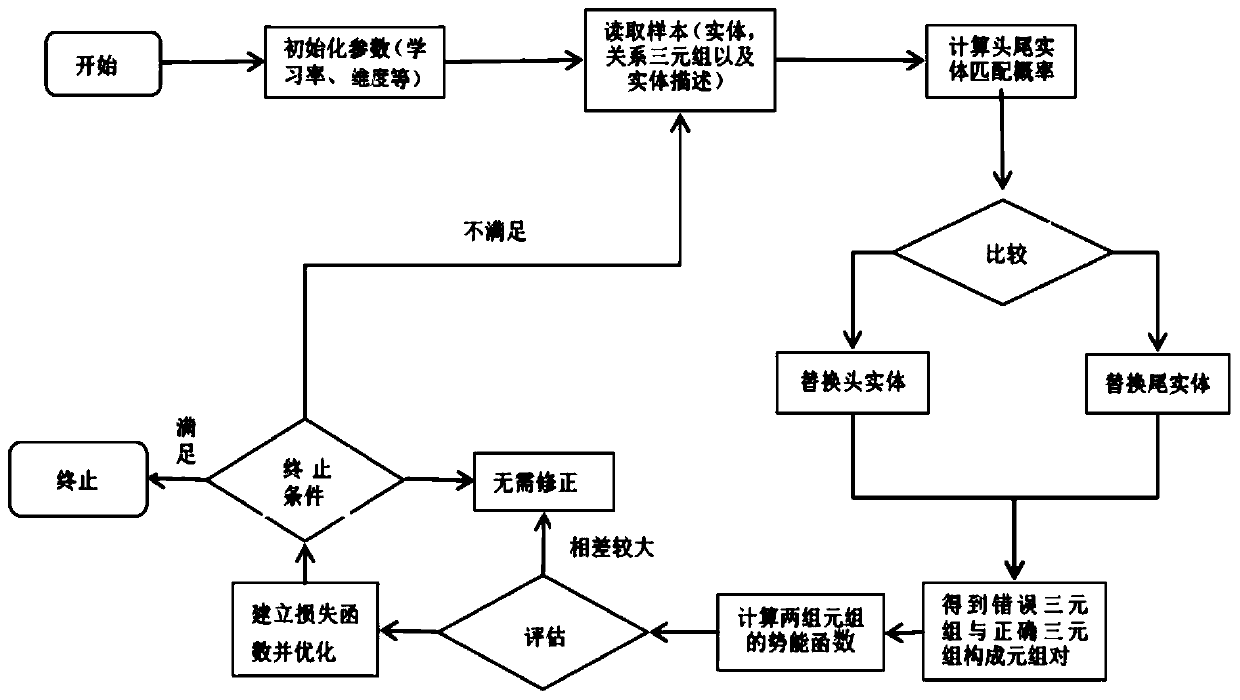

Knowledge graph completion method based on entity description and relationship path

Pending | CN111026875A | Improve computing efficiency | Improve accuracy | Special data processing applications | Semantic tool creation | Bag-of-words model | Word model

The invention relates to a knowledge graph completion method based on entity descriptions and relation paths, comprising the steps of: S1, building a continuous bag-of-words model on the entities' text descriptions and using it to represent the descriptions of entities in the knowledge graph as vectors, yielding description-based vectors; S2, using a translation-based model between entity vectors and relation vectors and between entity vectors and relation paths, defining score functions for relation triples (h, r, t) and path triples (h, p, t) together with a loss function over these scores, and learning vector representations of entities, relations and paths by minimizing the loss, yielding structure-based vectors; and S3, using the learned entity vector representations to obtain results in vector space for different tasks, thereby completing the knowledge graph or mining latent relationships.
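
Step S2 names a translation-based score for relation triples (h, r, t) and path triples (h, p, t). A common choice (TransE, with PTransE-style additive path composition) scores a triple by the distance between h + r and t and composes a path vector by summing its relations' vectors, trained with a margin ranking loss against corrupted triples. The sketch below assumes exactly those choices (L1 norm, additive paths, margin 1.0); the patent's actual formulas may differ, and the relation names and 2-D vectors are invented.

```python
def l1(vec):
    return sum(abs(x) for x in vec)

def add(*vecs):
    return [sum(xs) for xs in zip(*vecs)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def triple_score(h, r, t):
    """Translation score ||h + r - t||_1; lower means more plausible."""
    return l1(sub(add(h, r), t))

def path_score(h, path_relations, t):
    """Score a path triple (h, p, t), composing p as the sum of its relation vectors."""
    return triple_score(h, add(*path_relations), t)

def margin_loss(positive, negative, margin=1.0):
    """Hinge loss pushing a true triple's score below a corrupted one's by the margin."""
    return max(0.0, margin + positive - negative)

h, t = [0.0, 0.0], [1.0, 1.0]
born_in, located_in = [1.0, 0.0], [0.0, 1.0]   # hypothetical relation vectors
nationality = [1.0, 1.0]
print(triple_score(h, nationality, t))                  # 0.0
print(path_score(h, [born_in, located_in], t))          # 0.0
print(margin_loss(0.0, triple_score(h, born_in, t)))    # 0.0
```

In this toy setup the two-hop path born_in then located_in lands on the same tail as the direct relation, which is the property that lets relation paths serve as extra training signal for completion.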

Owner:RENMIN UNIVERSITY OF CHINA

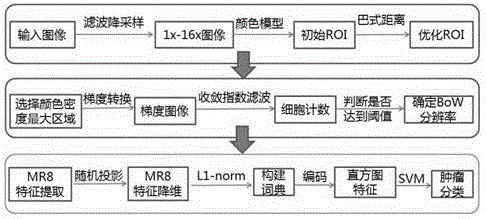

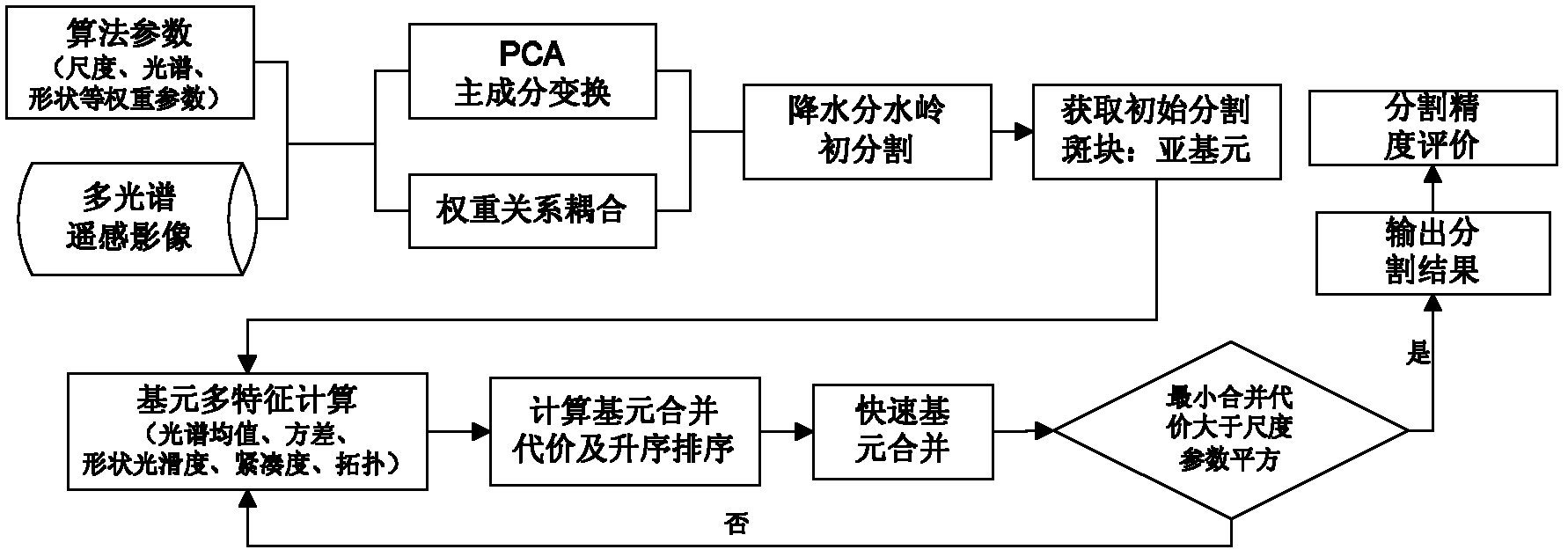

Automatic fast segmenting method of tumor pathological image

Active | CN104933711A | Reduce workload | Improve efficiency | Image enhancement | Image analysis | Abnormal tissue growth | Filter algorithm

The invention discloses an automatic fast segmentation method for tumor pathological images. The method comprises the following steps: first, filtering the original tumor pathological image with a Gaussian pyramid algorithm to obtain pathological images at the original, 2x, 4x, 8x and 16x resolution; determining an initial region of interest containing the tumor on the original-resolution image with an RGB color model and a morphological closing operation; iteratively refining the region of interest from the original resolution up to 4x resolution using the Bhattacharyya distance, and judging that the contribution of the RGB color model to the tumor region of interest has dropped to zero once the Bhattacharyya distance reaches a set threshold; adaptively selecting the high resolution for deep, precise segmentation with a convergence index filter, so that further segmentation is performed at the most suitable high resolution; and finally segmenting the normal tissue and the tumor tissue within the tumor region of interest with a bag-of-words model based on random projection. The method is accurate, fast and automatic.
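
The region-of-interest refinement is driven by the Bhattacharyya distance between color distributions. A minimal sketch for two discrete histograms: each histogram is normalized, the Bhattacharyya coefficient is the sum over bins of sqrt(p_i × q_i), and the distance is its negative natural logarithm. Other variants exist (OpenCV, for example, uses a Hellinger-style form based on sqrt(1 - coefficient)), and the abstract does not say which one the patent uses; the bin counts are hypothetical.

```python
import math

def normalize(hist):
    total = sum(hist)
    if total <= 0:
        raise ValueError("histogram must have positive mass")
    return [h / total for h in hist]

def bhattacharyya_distance(hist_p, hist_q):
    """D_B = -ln(sum_i sqrt(p_i * q_i)) for two histograms over the same bins."""
    if len(hist_p) != len(hist_q):
        raise ValueError("histograms must have the same number of bins")
    p, q = normalize(hist_p), normalize(hist_q)
    coefficient = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return math.inf if coefficient == 0 else -math.log(coefficient)

tumor_roi = [10, 30, 50, 10]   # hypothetical 4-bin color histogram
candidate = [12, 28, 48, 12]
print(round(bhattacharyya_distance(tumor_roi, candidate), 4))
```

The distance is 0 for identical distributions and grows without bound as they stop overlapping, which is what makes a fixed threshold usable as a stopping criterion.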

Owner:NANTONG UNIVERSITY

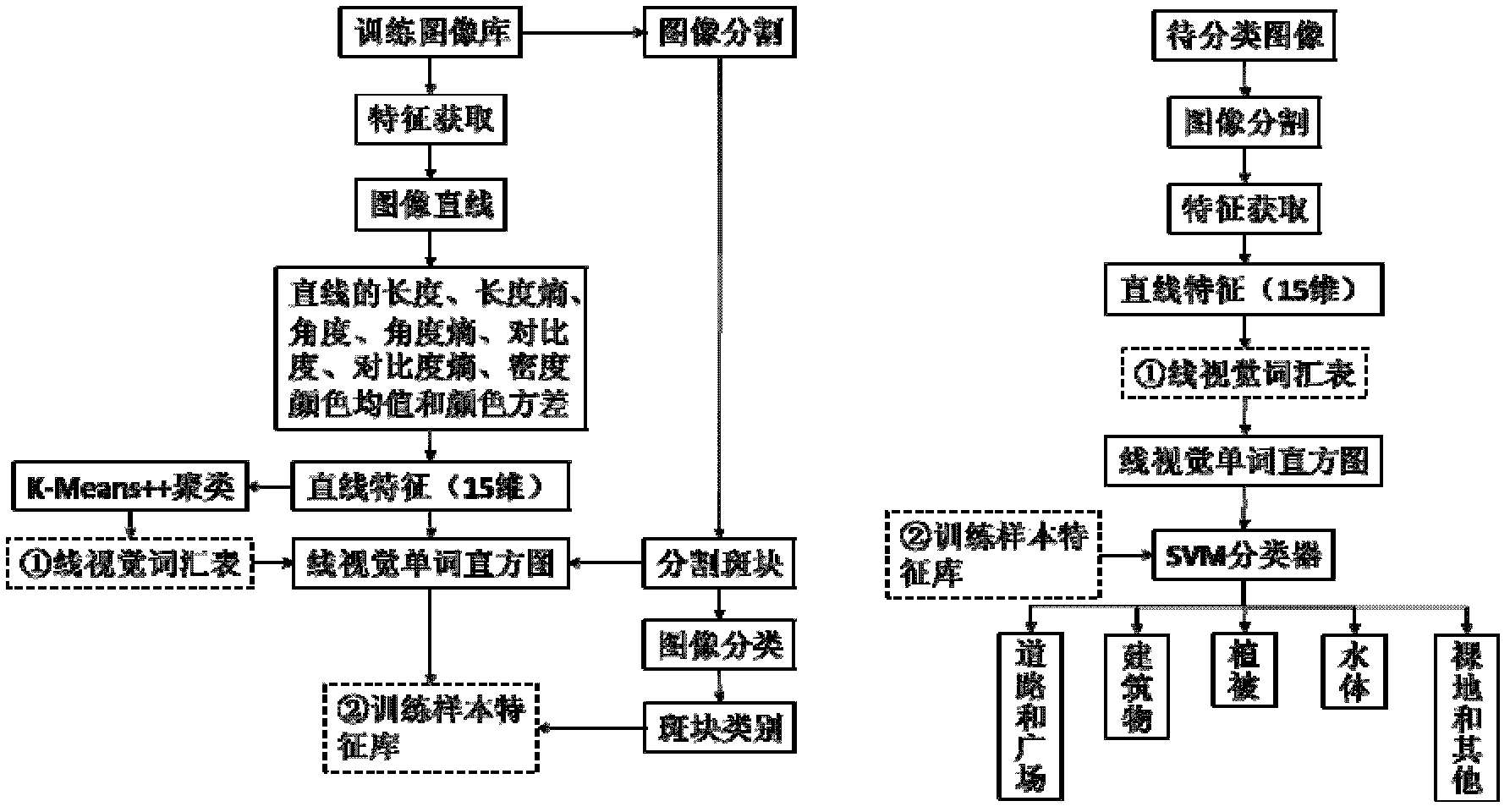

High-spatial resolution remote-sensing image bag-of-word classification method based on linear words

Inactive | CN102496034A | Simple calculation | Feature stabilization | Character and pattern recognition | Feature vector | Word list

The invention discloses a bag-of-words classification method for high spatial resolution remote sensing images based on linear words. The images to be classified are first divided into training samples and classification samples. For the training samples, the steps are: extracting the linear features of the training images and computing linear feature vectors; generating a linear visual vocabulary by clustering with the K-Means++ algorithm; segmenting the training images and, on that basis, computing the linear visual word histogram of each segmented patch; and labeling the patches and storing their classes together with their linear visual word histograms. After training, the steps for the classification samples are: extracting the linear features of the images to be classified, segmenting those images, computing linear feature vectors on that basis, obtaining the linear visual word histogram of each segmented patch, and classifying the images with an SVM classifier to obtain the classification results. The method builds bag-of-words models from linear features and can achieve better classification of high spatial resolution remote sensing images.
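
K-Means++ differs from plain K-means only in how the initial centers are chosen: after a uniformly random first center, each further center is drawn with probability proportional to its squared distance from the nearest center already chosen. A minimal sketch of that seeding step (the subsequent Lloyd iterations are the same as in ordinary K-means); the seeded `random.Random` and the 2-D stand-ins for linear feature vectors are assumptions for reproducibility.

```python
import math
import random

def kmeans_pp_seeds(points, k, rng=None):
    """Choose k initial centers with K-means++ (squared-distance-weighted) sampling."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    if rng is None:
        rng = random.Random(0)
    centers = [rng.choice(points)]
    while len(centers) < k:
        # Squared distance from each point to its nearest already-chosen center.
        d2 = [min(math.dist(p, c) ** 2 for c in centers) for p in points]
        if sum(d2) == 0:  # every point coincides with a chosen center
            centers.append(rng.choice(points))
            continue
        centers.append(rng.choices(points, weights=d2, k=1)[0])
    return centers

features = [(0.0, 0.0), (0.1, 0.2), (9.0, 9.1), (9.2, 8.8), (4.5, 0.1)]
print(kmeans_pp_seeds(features, k=3))
```

Points already chosen have zero weight, so distinct input points are never picked twice, and far-apart groups are likely to each receive a seed, which tends to give a more stable vocabulary than taking arbitrary initial centers.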

Owner:NANJING NORMAL UNIVERSITY

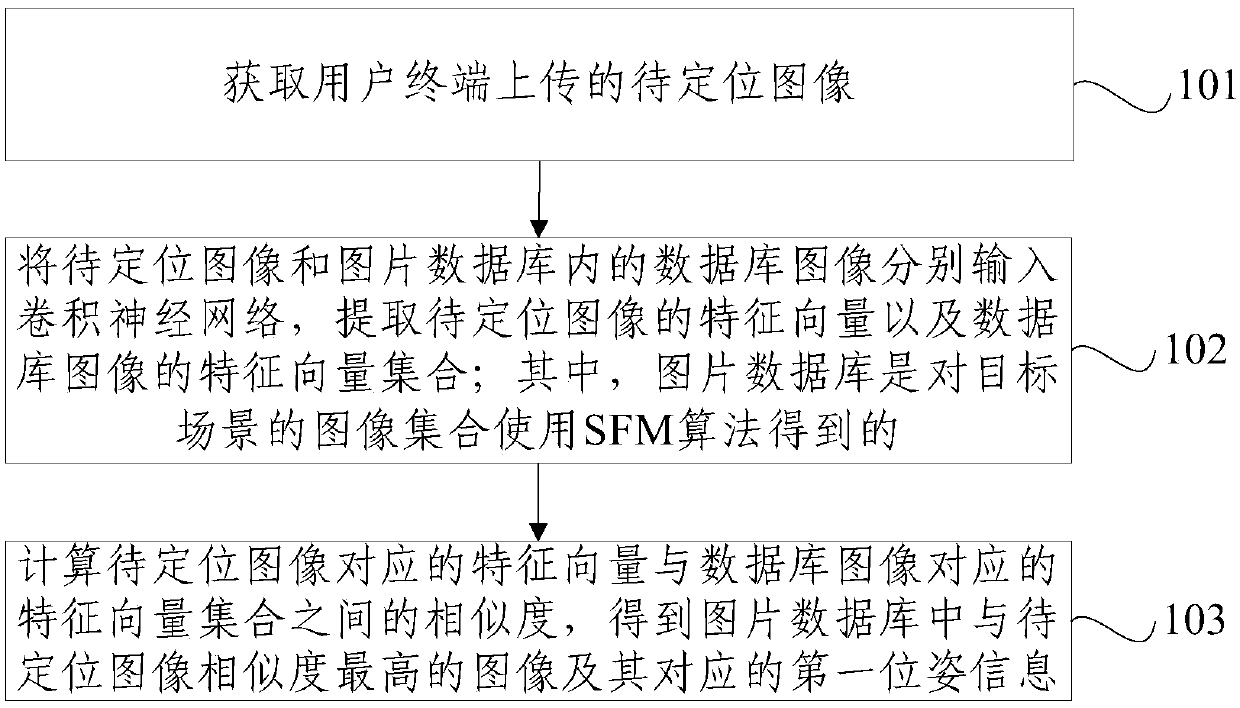

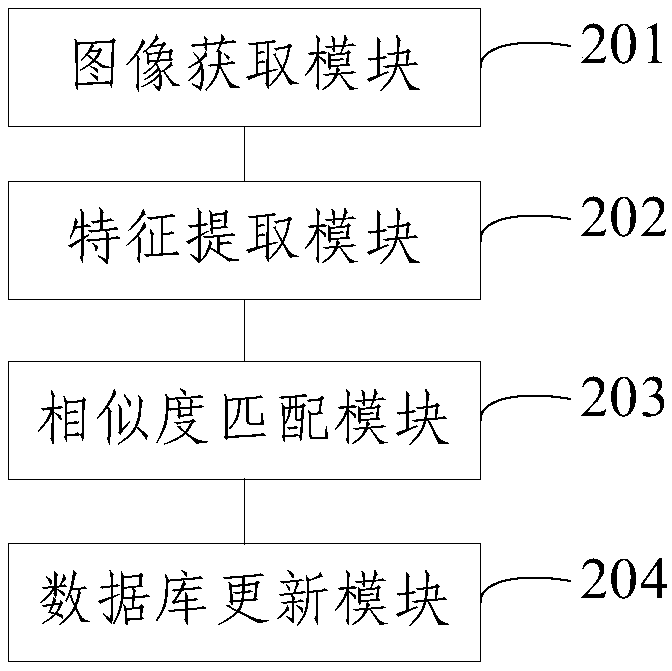

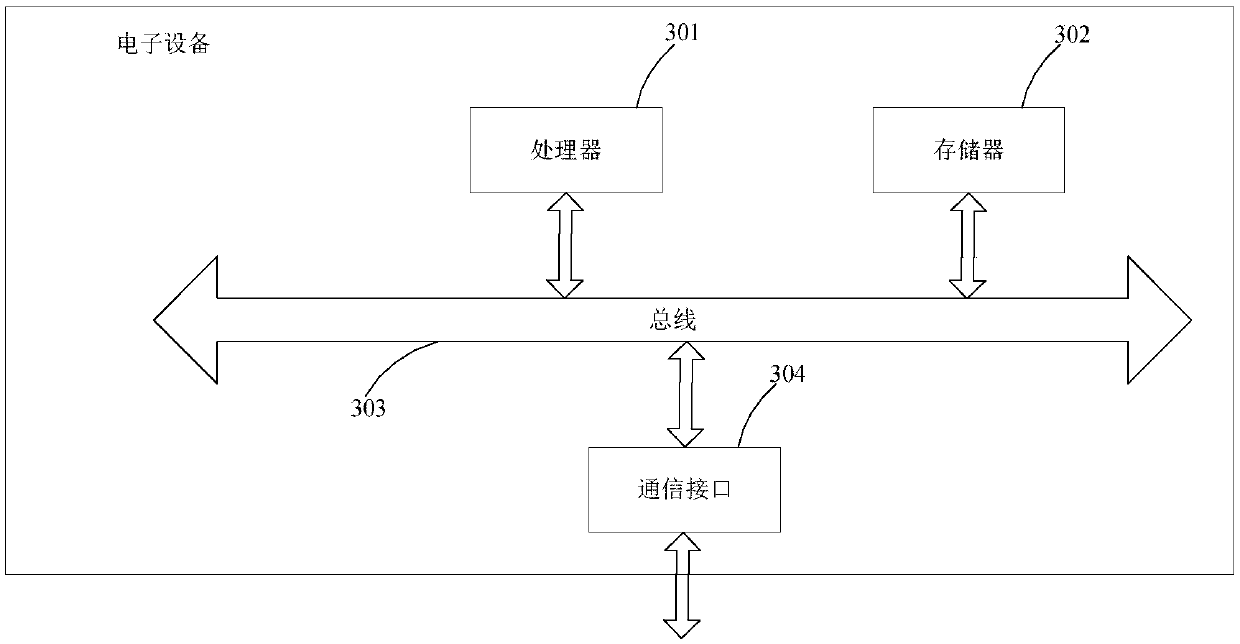

An indoor positioning method and device based on SLAM

Inactive | CN109671119A | Improve recognition accuracy | Accurate acquisition | Image enhancement | Image analysis | Feature vector | Neural network learning

An embodiment of the invention provides an indoor positioning method and device based on SLAM. The method comprises the steps of: obtaining a to-be-positioned image uploaded by a user terminal, and rapidly constructing an image database with pose information through an SfM (structure from motion) algorithm; extracting the feature vector of the to-be-positioned image and the set of feature vectors of the database images with a convolutional neural network; and calculating the similarity between the feature vectors of the to-be-positioned image and of the database images to complete loop detection, obtaining the database image most similar to the to-be-positioned image together with its corresponding first pose information, and thereby the accurate position and pose of the user terminal. Compared with traditional visual SLAM algorithms, which use a bag-of-words model with weak recognition capability, learning deep image features with a neural network achieves higher recognition accuracy and improves the accuracy of loop detection.

Owner:ACAD OF OPTO ELECTRONICS CHINESE ACAD OF SCI

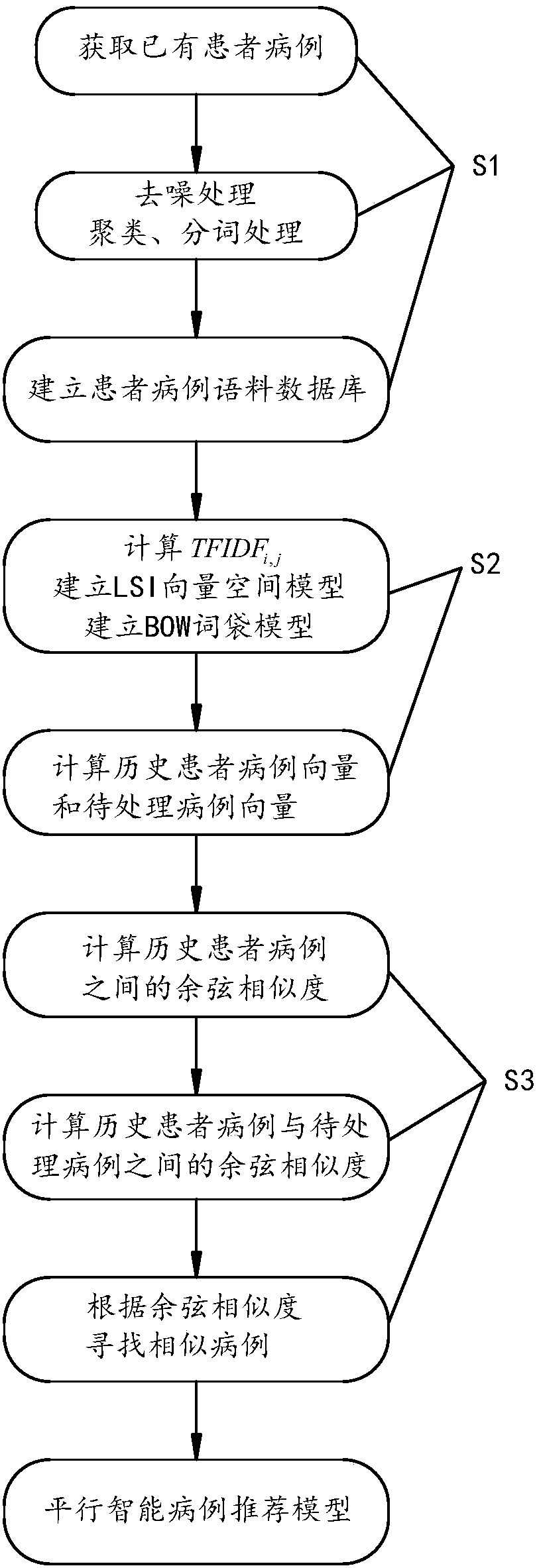

Modeling method for parallel smart case recommendation model

Active | CN107656952A | Improve work efficiency | Reduce diagnosis and treatment time | Medical data mining | Special data processing applications | Cosine similarity | Model method

The invention relates to a modeling method for a parallel smart case recommendation model. The method comprises the steps of: obtaining existing patient cases from an electronic case database, denoising, clustering and segmenting them into words, and building a patient case corpus; defining TFIDF(i, j) as the importance of a word or expression in a case of the patient case corpus, building an LSI vector space model from TFIDF(i, j), and building a BOW (bag-of-words) model from all words and expressions in the corpus; computing the vectors of historical cases and of to-be-processed cases with the LSI vector space model and the BOW model; computing and storing the cosine similarity among the historical patient cases; and computing the cosine similarity between the to-be-processed case vectors and the historical case vectors, and retrieving cases similar to each to-be-processed case according to this similarity. The model built with this method has high accuracy and low error, and its recommendations are of high quality.
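
The final retrieval step ranks historical cases by cosine similarity to the new case. A minimal sketch over plain bag-of-words count vectors; it omits the TF-IDF weighting and LSI projection the abstract describes, assumes already-segmented tokens, and the case IDs and texts are invented.

```python
import math
from collections import Counter

def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity of two sparse count vectors; 0.0 if either is empty."""
    dot = sum(u[w] * v[w] for w in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

def most_similar(query_tokens, history, top_k=1):
    """Return the IDs of the top_k historical cases most similar to the query."""
    q = Counter(query_tokens)
    ranked = sorted(history, key=lambda cid: cosine(q, Counter(history[cid])), reverse=True)
    return ranked[:top_k]

history = {
    "case-001": ["fever", "cough", "sore", "throat"],
    "case-002": ["chest", "pain", "shortness", "breath"],
    "case-003": ["fever", "cough", "fatigue"],
}
print(most_similar(["cough", "fever", "fatigue", "headache"], history, top_k=2))
# ['case-003', 'case-001']
```

Cosine similarity ignores vector length, so a short case record that shares most of its words with the query (case-003, about 0.87) outranks a longer one with the same absolute overlap (case-001, 0.5).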

Owner:QINGDAO ACADEMY OF INTELLIGENT IND

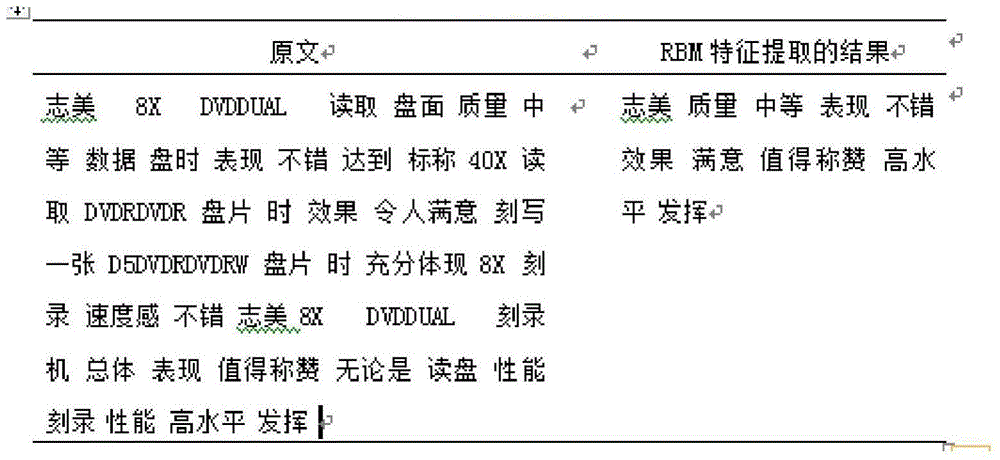

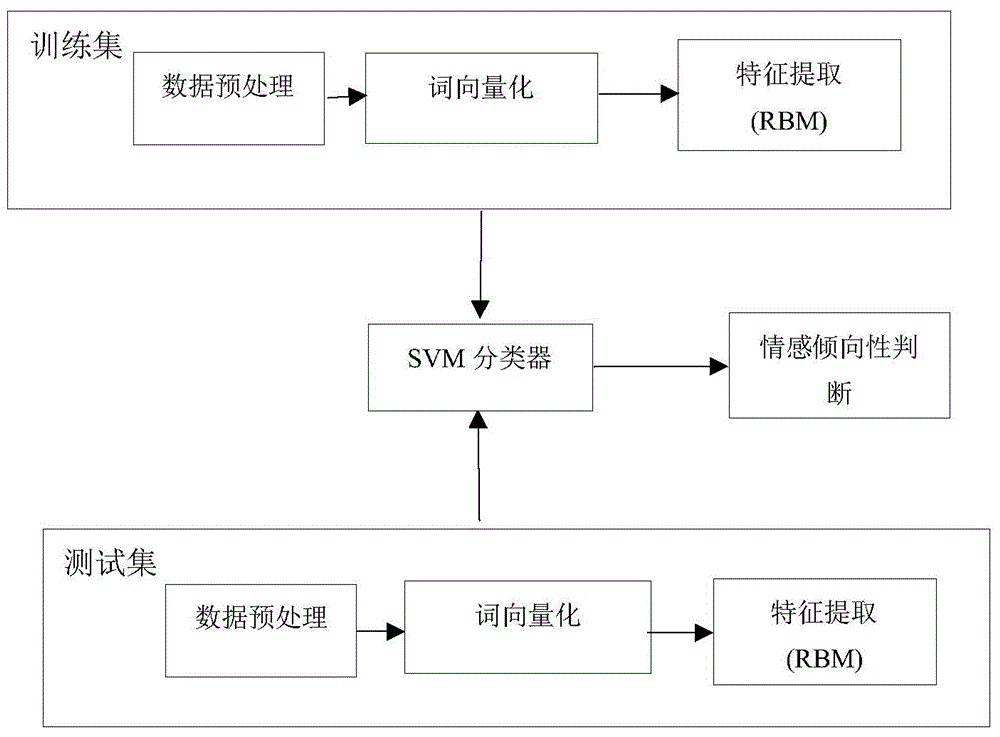

Emotion analysis method for Chinese texts based on computer information processing technology

Inactive | CN104965822A | High precision | Special data processing applications | Information processing | Restricted Boltzmann machine

The invention discloses an emotion analysis method for Chinese texts based on computer information processing technology. Chinese product reviews are segmented into words, and a bag-of-words model is used to generate vector representations of the reviews. The vector of each review is fed into the visible units of a restricted Boltzmann machine (RBM), a deep learning model, which extracts the emotional features of the Chinese text; the extracted features are then fed into an SVM for text emotion classification. The RBM makes the extracted features more relevant to emotional semantics, and the SVM improves the accuracy of emotion classification of Chinese product reviews.

Owner:CENT SOUTH UNIV

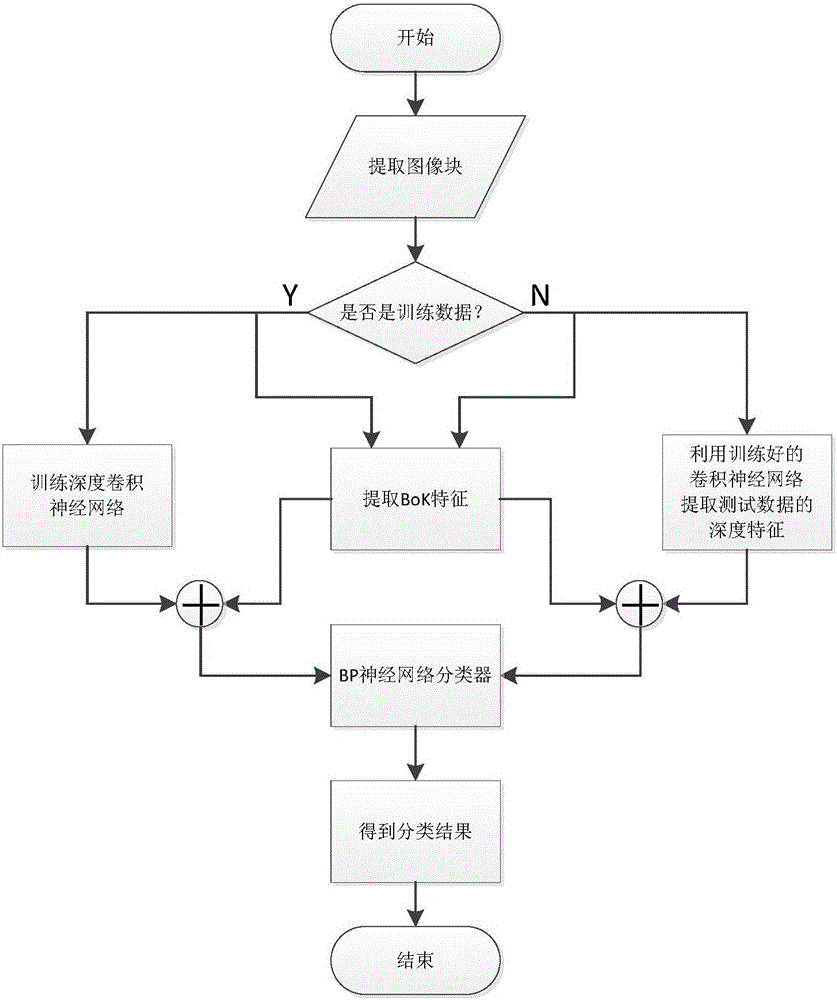

Medical image classification method for combining deep characteristic extraction and shallow characteristic extraction

Inactive | CN106156793A | Improve accuracy | Implement automatic classification | Character and pattern recognition | Feature extraction | Bag-of-words model

The invention relates to a medical image classification method combining deep and shallow feature extraction. The method comprises the following steps: first training a deep convolutional neural network model, a bag-of-words model and a BP neural network; then dividing the medical image to be classified into image sub-blocks, passing the sub-blocks in turn through the trained deep convolutional neural network model, bag-of-words model and BP neural network to obtain the class of each sub-block, and classifying the whole medical image by majority voting over the sub-blocks.
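
The final step labels the whole image by a majority vote over its sub-block predictions. A minimal sketch; the abstract does not say how ties are broken, so this version assumes ties go to the label that appears earliest among the sub-block predictions (the documented ordering of `Counter.most_common` for equal counts). The labels are hypothetical.

```python
from collections import Counter

def majority_vote(block_labels):
    """Return the most frequent label; ties go to the label encountered first."""
    if not block_labels:
        raise ValueError("no sub-block predictions to vote on")
    return Counter(block_labels).most_common(1)[0][0]

# Hypothetical per-sub-block predictions for one medical image.
predictions = ["benign", "malignant", "malignant", "benign", "malignant"]
print(majority_vote(predictions))  # malignant
```

Voting over many sub-blocks makes the image-level decision tolerant of a few misclassified blocks, at the cost of ignoring where in the image each block lies.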

Owner:NORTHWESTERN POLYTECHNICAL UNIV

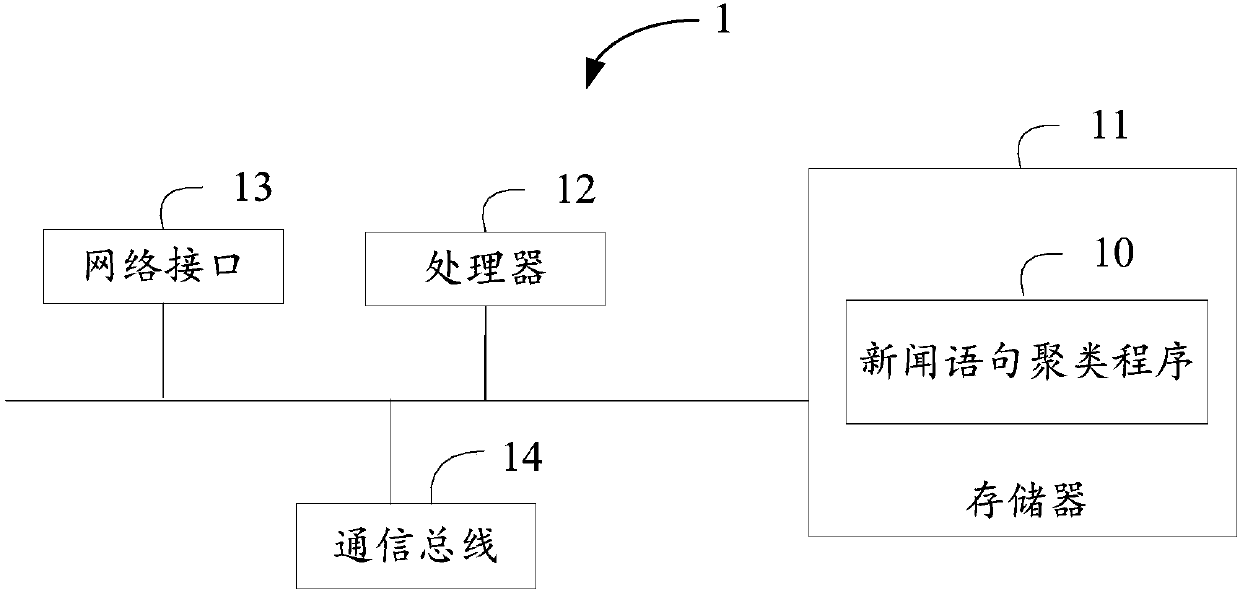

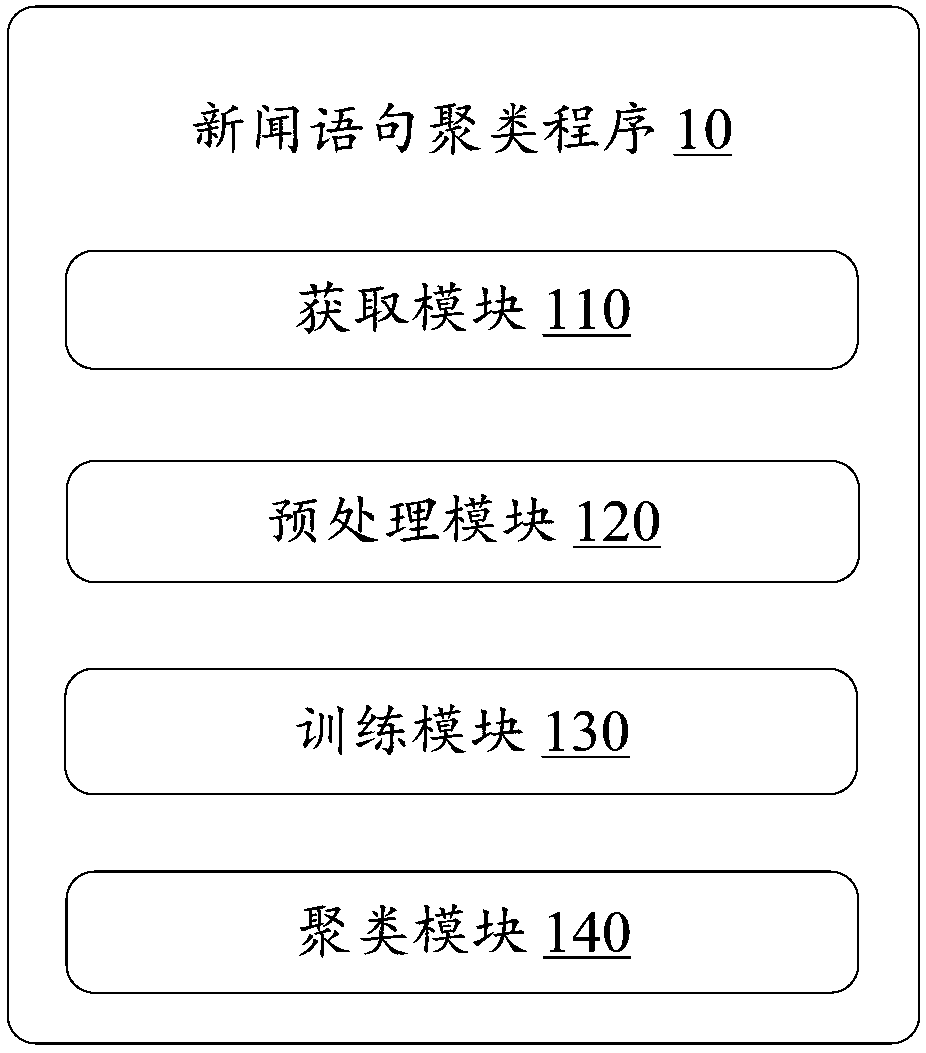

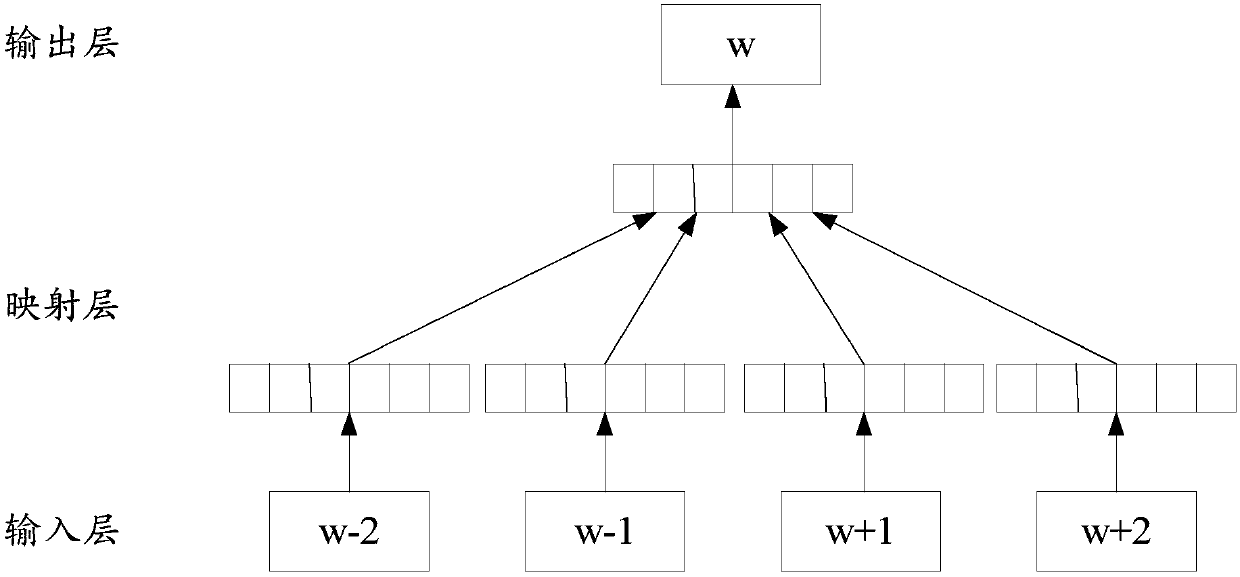

News sentence clustering method based on semantic similarity, device and storage medium

Active | CN107679144A | Accurate clustering | Efficient clustering | Semantic analysis | Special data processing applications | Computational semantics | Semantic vector

The invention provides a news sentence clustering method based on semantic similarity. The method includes the following steps: preprocessing the news sentences of a corpus and extracting usable words; training a continuous bag-of-words (CBOW) model on the usable words to obtain an initial word vector for each usable word; iteratively training the CBOW model with the initial sentence vector of each news sentence and the initial word vectors of the usable words immediately to the left and right of a given usable word in the sentence, obtaining the current word vector of each usable word in the sentence and the final sentence vector of the sentence; merging the average of the word vectors of all usable words, the one-hot vectors of high-frequency words and the final sentence vector of each news sentence to obtain its semantic vector; and computing distances between semantic vectors to obtain the semantic similarity between news sentences, and clustering the news sentences of the corpus accordingly. The invention also provides an electronic device and a computer-readable storage medium.
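
CBOW learns each word's vector by predicting it from the words on its left and right. The sketch below only builds the (context, target) training pairs and the model's hidden layer, which is the average of the context word vectors; the window size, tokens and vectors are assumptions, and the softmax output layer and gradient updates of a real CBOW trainer are omitted.

```python
def cbow_pairs(tokens, window=1):
    """Yield (context_words, target_word) pairs for CBOW training."""
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:
            yield context, target

def cbow_hidden(context, embeddings):
    """CBOW hidden layer: element-wise mean of the context word vectors."""
    vectors = [embeddings[w] for w in context]
    return [sum(xs) / len(vectors) for xs in zip(*vectors)]

tokens = ["markets", "rally", "after", "rate", "cut"]
for context, target in cbow_pairs(tokens, window=1):
    print(context, "->", target)

embeddings = {"rally": [1.0, 0.0], "rate": [0.0, 1.0]}  # hypothetical 2-D vectors
print(cbow_hidden(["rally", "rate"], embeddings))  # [0.5, 0.5]
```

Averaging makes the hidden layer independent of context word order, which is why the model is called a "bag" of words even though it is trained on local windows.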

Owner:PING AN TECH (SHENZHEN) CO LTD

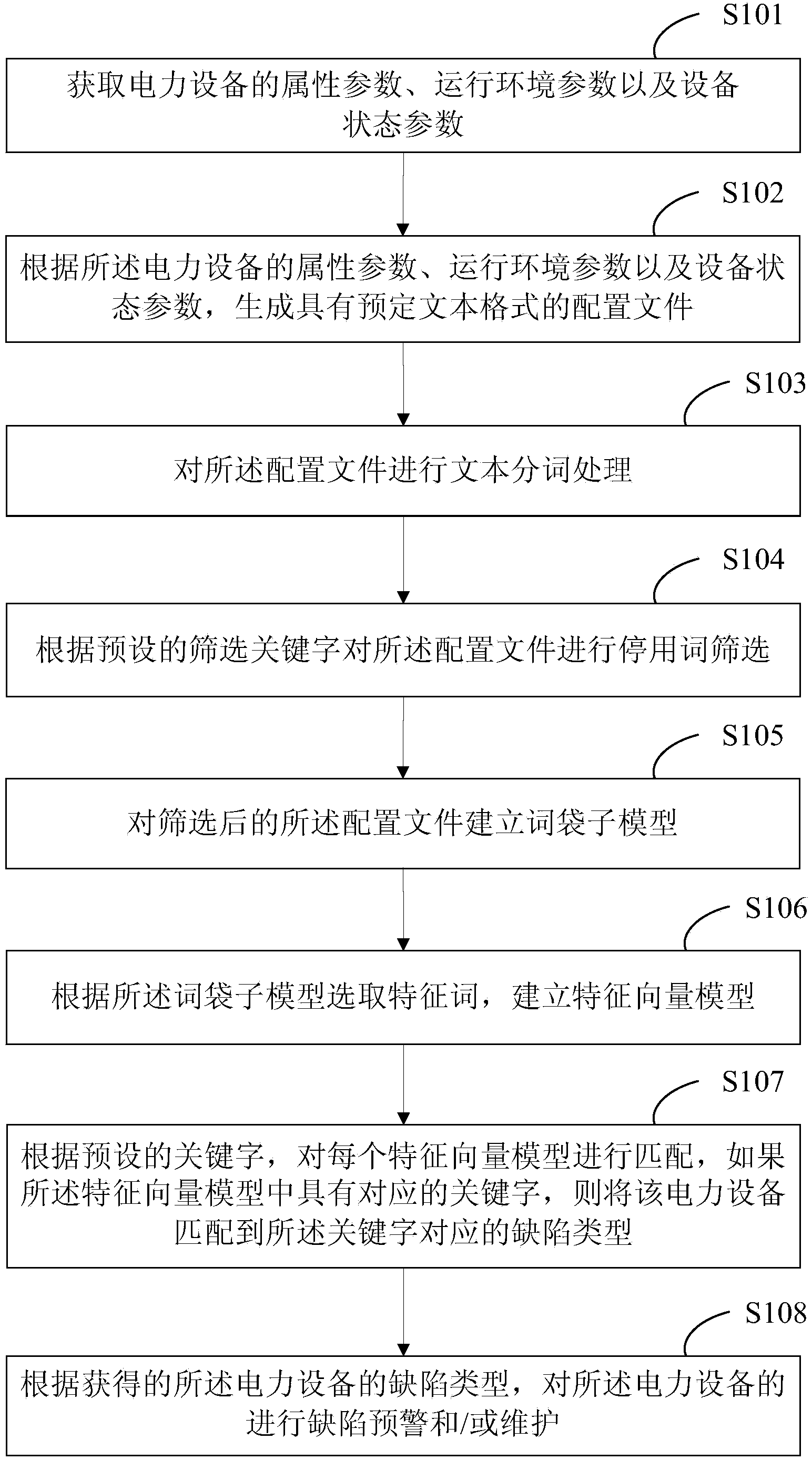

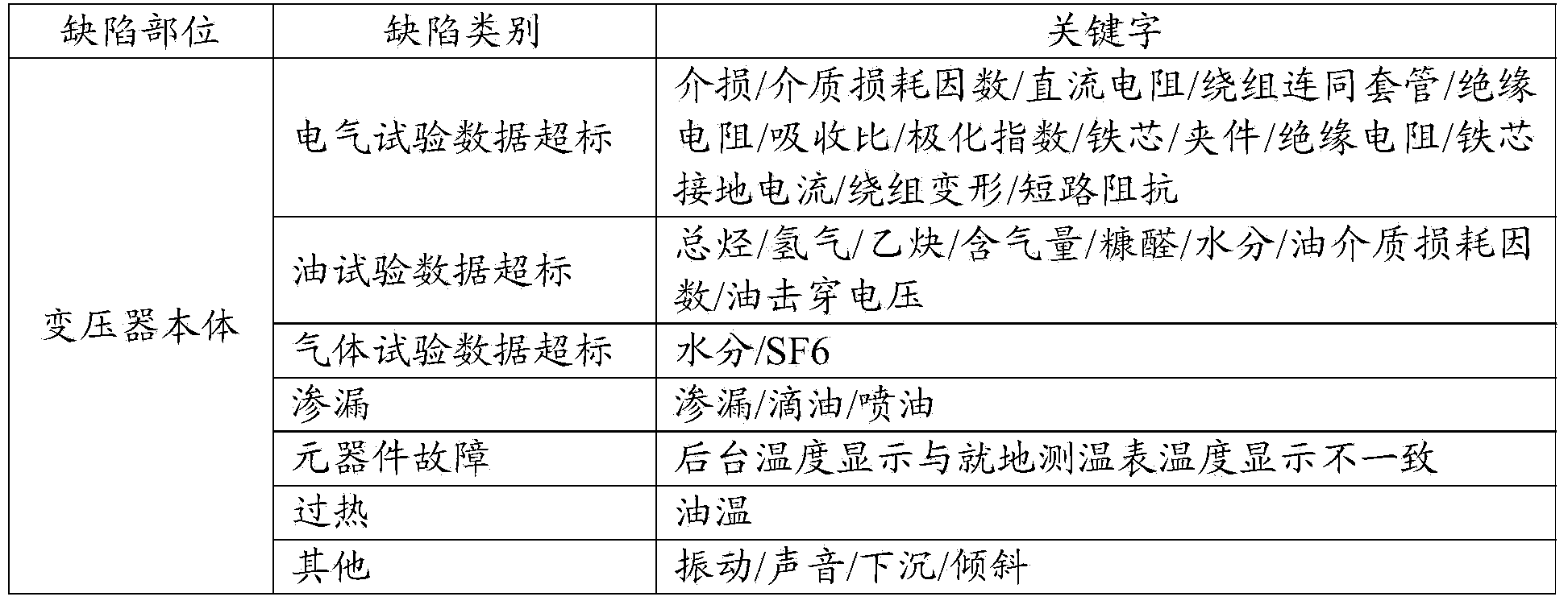

Electrical equipment defect detection and maintenance method

Active | CN103837770A | Improve detection efficiency | Improve maintenance efficiency | Electrical testing | Special data processing applications | Feature vector | Word selection

The invention provides an electrical equipment defect detection and maintenance method. The method includes the following steps: generating a configuration file in a preset text format from the attribute parameters, operating environment parameters and equipment state parameters of the electrical equipment; applying text clustering to the configuration file, including word segmentation, stop-word filtering, bag-of-words model construction, feature word selection and feature vector model construction, and automatically judging and classifying the defect information of the electrical equipment by similarity even when the number of defect types is not known in advance; then automatically matching against previously known defect types and their keywords to obtain the defect type of the electrical equipment, so that defect warning and maintenance can be carried out according to that type. The method improves the efficiency and accuracy of electrical equipment defect detection and maintenance while reducing cost.

Owner:ELECTRIC POWER RES INST OF GUANGDONG POWER GRID +1

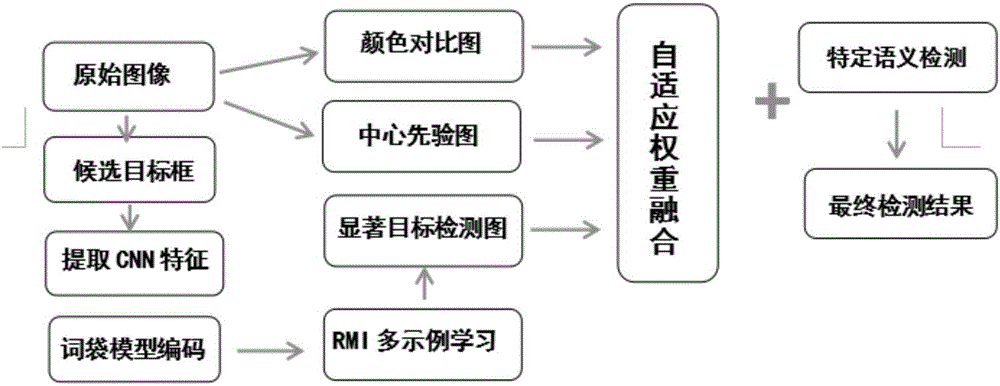

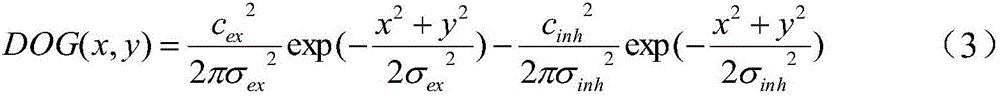

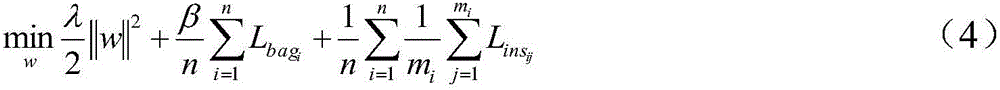

Point of regard detection method based on multilayer information fusion

Inactive | CN106815604A | High expression | Play a positioning role | Character and pattern recognition | Image extraction | Bag-of-words model

The present invention belongs to the field of computer vision and relates to a point of regard detection method based on multilayer information fusion. The method obtains a bottom-layer detection result map from low-level information such as color; uses spatial position to obtain a center prior map; extracts candidate object boxes and CNN deep features of the original image, encodes the features with a bag-of-words model, feeds the encoded visual features into a trained multi-instance RMI-SVM classifier for scoring, applies Gaussian smoothing centered on each box, and superimposes the boxes weighted by their scores to obtain an object-level detection result map; and extracts features describing the overall content of the original image, trains a softmax regressor to obtain fusion weights, and uses these weights to fuse the above result maps. The method jointly exploits three kinds of information (color contrast, salient objects and spatial position), adjusts their proportions for each image, and achieves a better detection rate on images with varied content.
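
The last step fuses the three result maps (low-level, center prior and object-level) with image-specific weights from a softmax regressor. A minimal sketch, assuming the regressor emits one logit per map and the fusion weights are the softmax of those logits; the logits and the tiny 2x2 maps are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_maps(maps, logits):
    """Weighted per-pixel sum of equally sized 2-D maps, weights = softmax(logits)."""
    weights = softmax(logits)
    rows, cols = len(maps[0]), len(maps[0][0])
    return [
        [sum(w * m[r][c] for w, m in zip(weights, maps)) for c in range(cols)]
        for r in range(rows)
    ]

low_level = [[0.9, 0.1], [0.2, 0.0]]
center_prior = [[0.3, 0.3], [0.3, 0.3]]
object_level = [[1.0, 0.0], [0.0, 0.0]]
fused = fuse_maps([low_level, center_prior, object_level], logits=[0.5, -1.0, 1.2])
print(fused)
```

Because the softmax weights are positive and sum to 1, the fused map stays within the range of its inputs, and a different image can shift weight toward whichever cue suits its content.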

Owner:DALIAN UNIV OF TECH
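
The abstract does not spell out the encoding or fusion arithmetic. The following is a rough sketch under stated assumptions. Each region feature is hard-assigned to its nearest codeword; the codebook is a hypothetical input, for example from k-means. The per-cue result maps are then combined as a weighted sum, with weights from a softmax over per-image scores standing in for the trained softmax regressor. The RMI-SVM scoring step is not shown.

```python
import math


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def bow_encode(features, codebook):
    """Hard-assign each feature to its nearest codeword and return the
    normalized histogram of codeword counts (the bag-of-words code)."""
    hist = [0.0] * len(codebook)
    for f in features:
        nearest = min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        hist[nearest] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_maps(maps, scores):
    """Weighted sum of equally sized 2-D maps, using softmax(scores) as weights."""
    weights = softmax(scores)
    height, width = len(maps[0]), len(maps[0][0])
    return [
        [sum(w * m[y][x] for w, m in zip(weights, maps)) for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0]]  # hypothetical two-word codebook
    print(bow_encode([[0.1, 0.0], [0.9, 1.0], [1.0, 0.8]], codebook))
```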

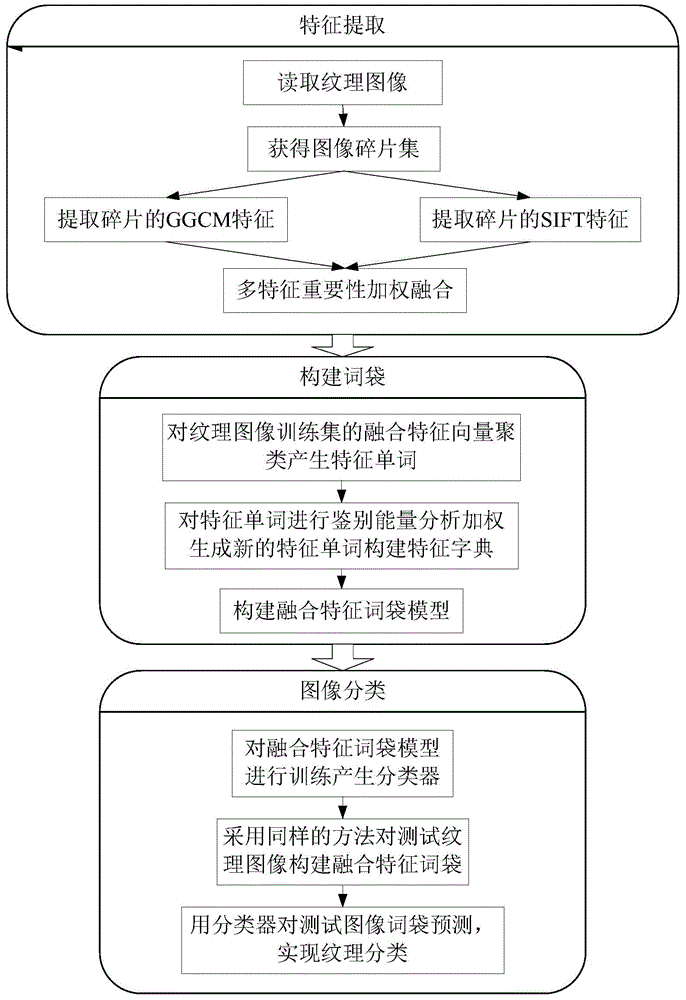

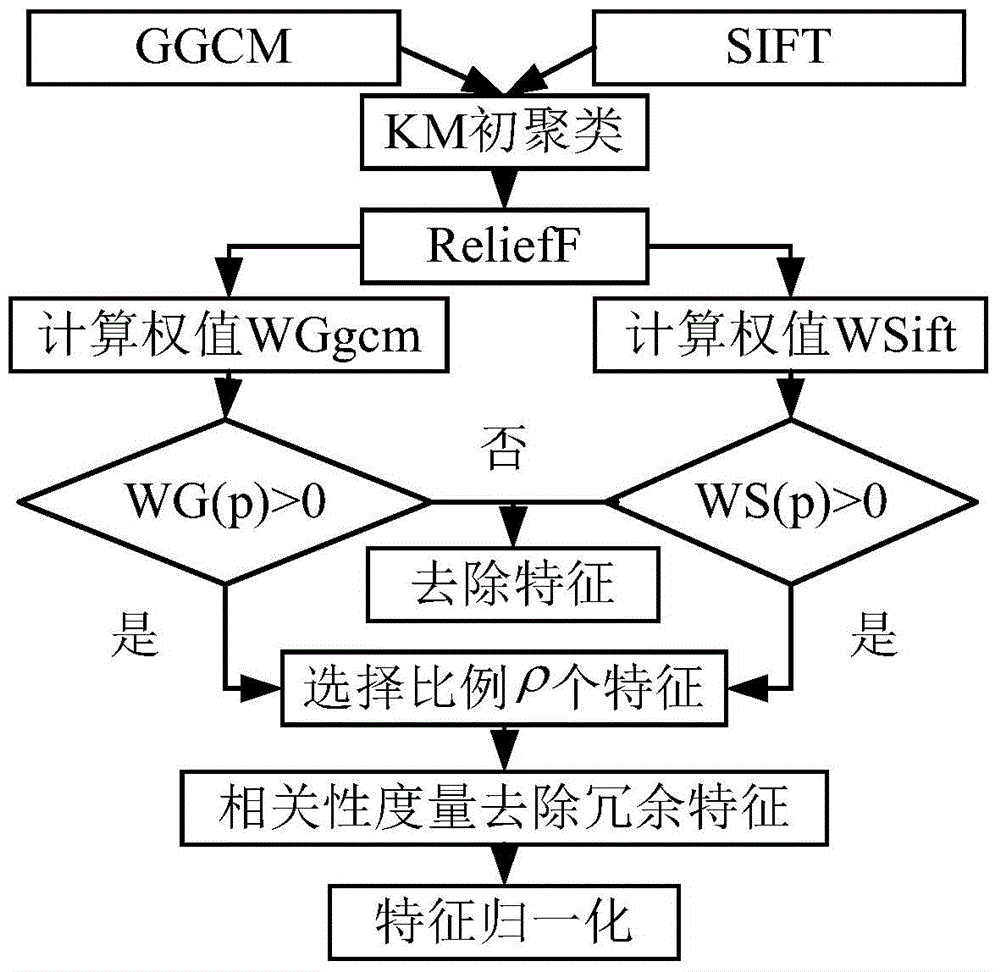

Texture image classification method based on BoF and multi-feature fusion

Active | CN105005786A | Improve classification accuracy | Improve robustness | Character and pattern recognition | Robustification | Bag-of-words model

The invention discloses a texture image classification method based on BoF (bag of features) and multi-feature fusion. The method comprises the following steps:

- sampling local patches from a texture image to form a patch set;
- extracting GGCM (gray level-gradient co-occurrence matrix) features and SIFT (scale-invariant feature transform) local features from every patch, and fusing the different features with importance weights;
- clustering the fused features to generate feature words, and selecting and weighting the words with DWDPA (dynamic weighted discrimination power analysis);
- assigning fused feature vectors to the selected, weighted words to form the fused-feature bag-of-words model of the training set;
- computing the fused feature vectors of a texture image under test and obtaining its fused-feature word bag;
- training an SVM (support vector machine) classifier on the feature bag-of-words model.

The method overcomes the low accuracy of GGCM in large-texture classification and compensates for the information loss of the BoF feature space. It is also more accurate and more robust.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
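
Generating feature words by clustering fused features is typically done with k-means. The patent does not name its clustering algorithm, so the following is an assumed, minimal pure-Python k-means that turns fused feature vectors into a codebook of visual words. Seeding from the first `k` points is a simplification chosen for determinism. DWDPA word selection and weighting are not shown.

```python
def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def kmeans_codebook(points, k, max_iters=50):
    """Cluster fused feature vectors into k centroids (the 'feature words').

    Centroids start at the first k points (a deterministic simplification;
    k-means++ seeding is the usual choice in practice).
    """
    centroids = [list(map(float, p)) for p in points[:k]]
    for _ in range(max_iters):
        # Assignment step: each point goes to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its previous centroid).
        updated = [
            [sum(col) / len(members) for col in zip(*members)] if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
        if updated == centroids:  # assignments have stabilized
            break
        centroids = updated
    return centroids


if __name__ == "__main__":
    fused = [[0, 0], [0, 1], [10, 10], [10, 11]]  # toy 2-D "fused features"
    print(sorted(kmeans_codebook(fused, 2)))  # [[0.0, 0.5], [10.0, 10.5]]
```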

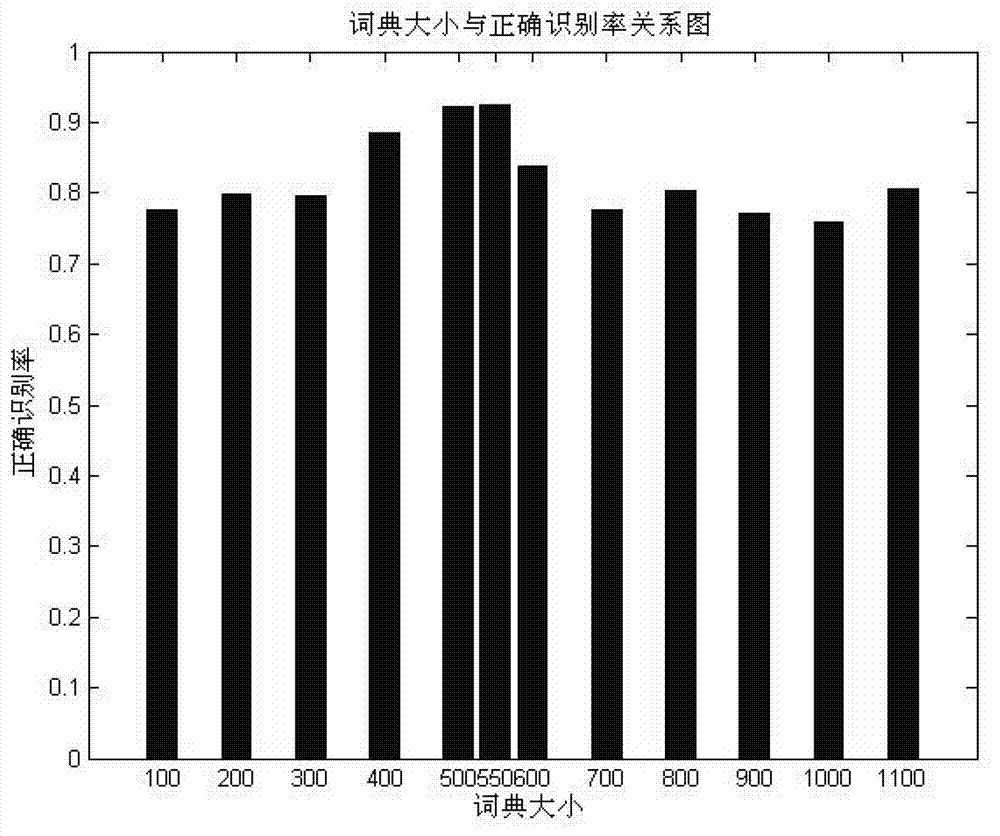

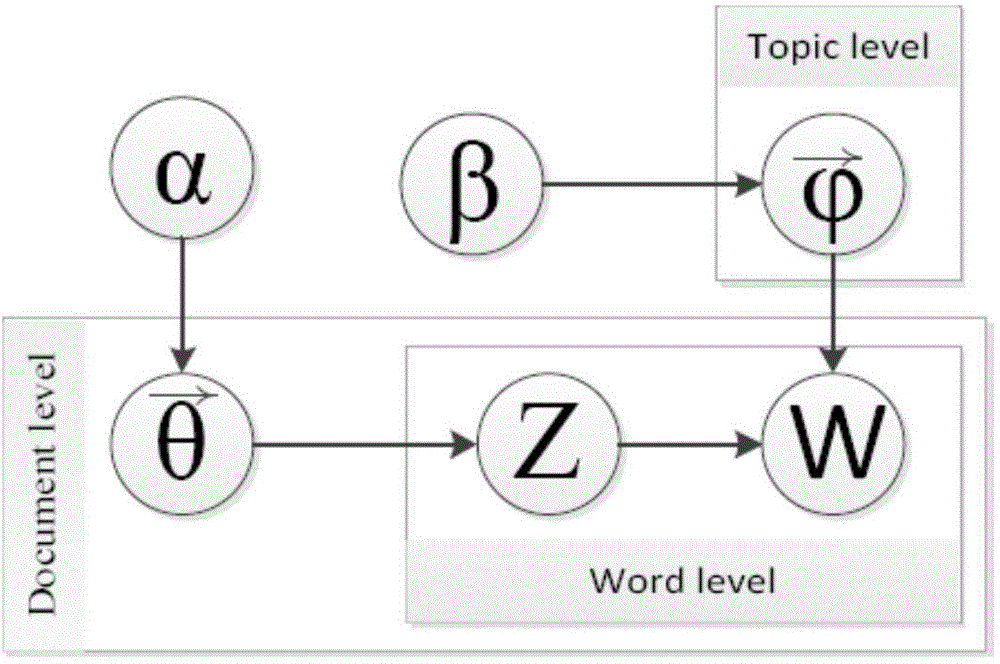

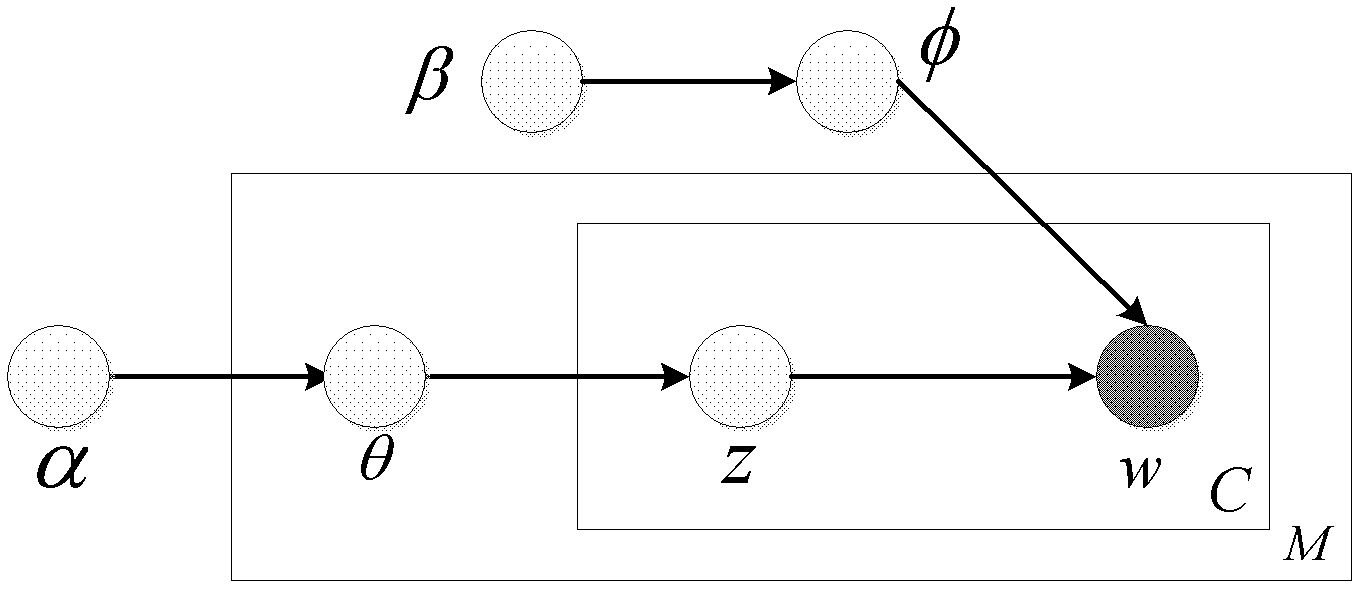

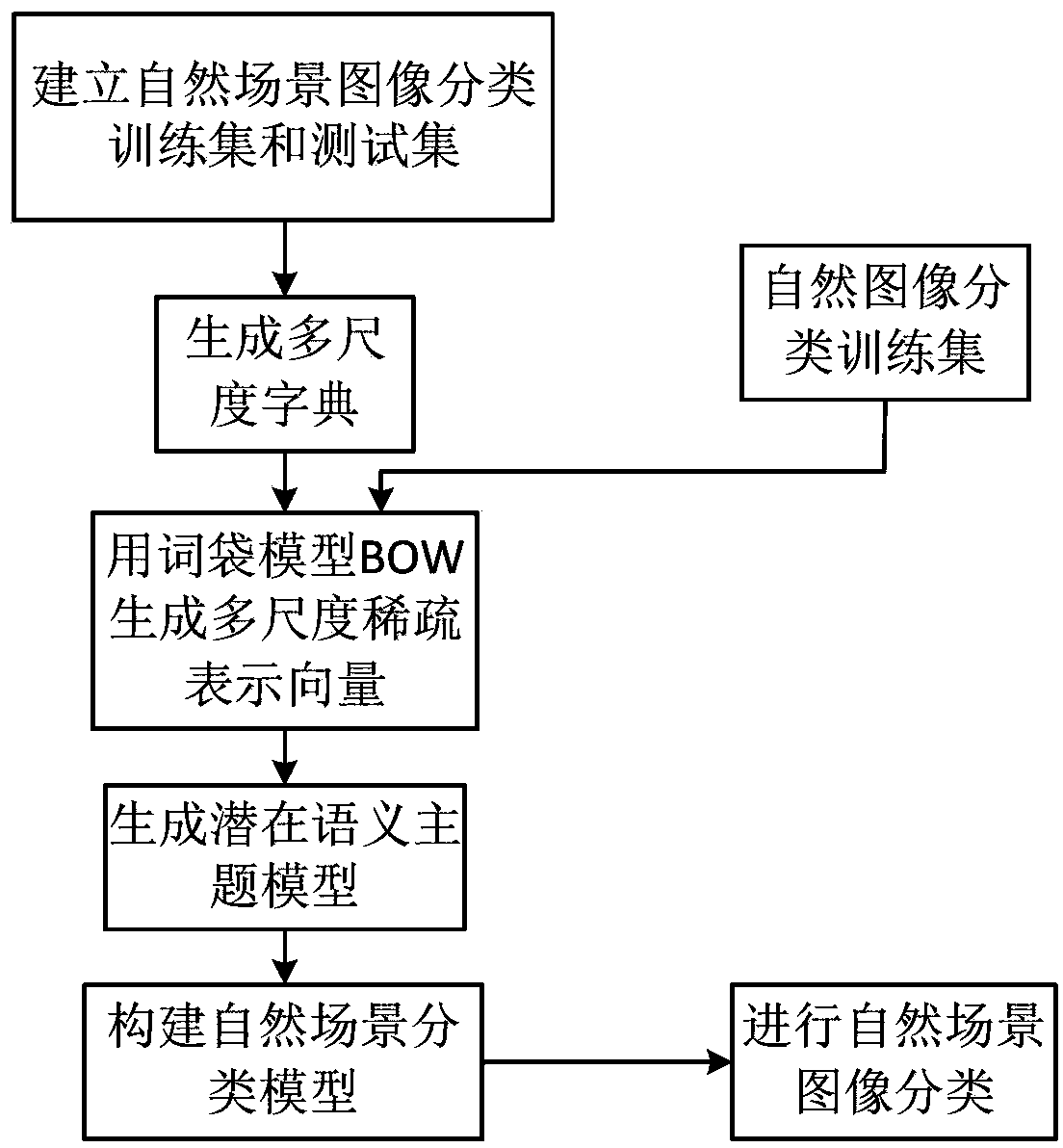

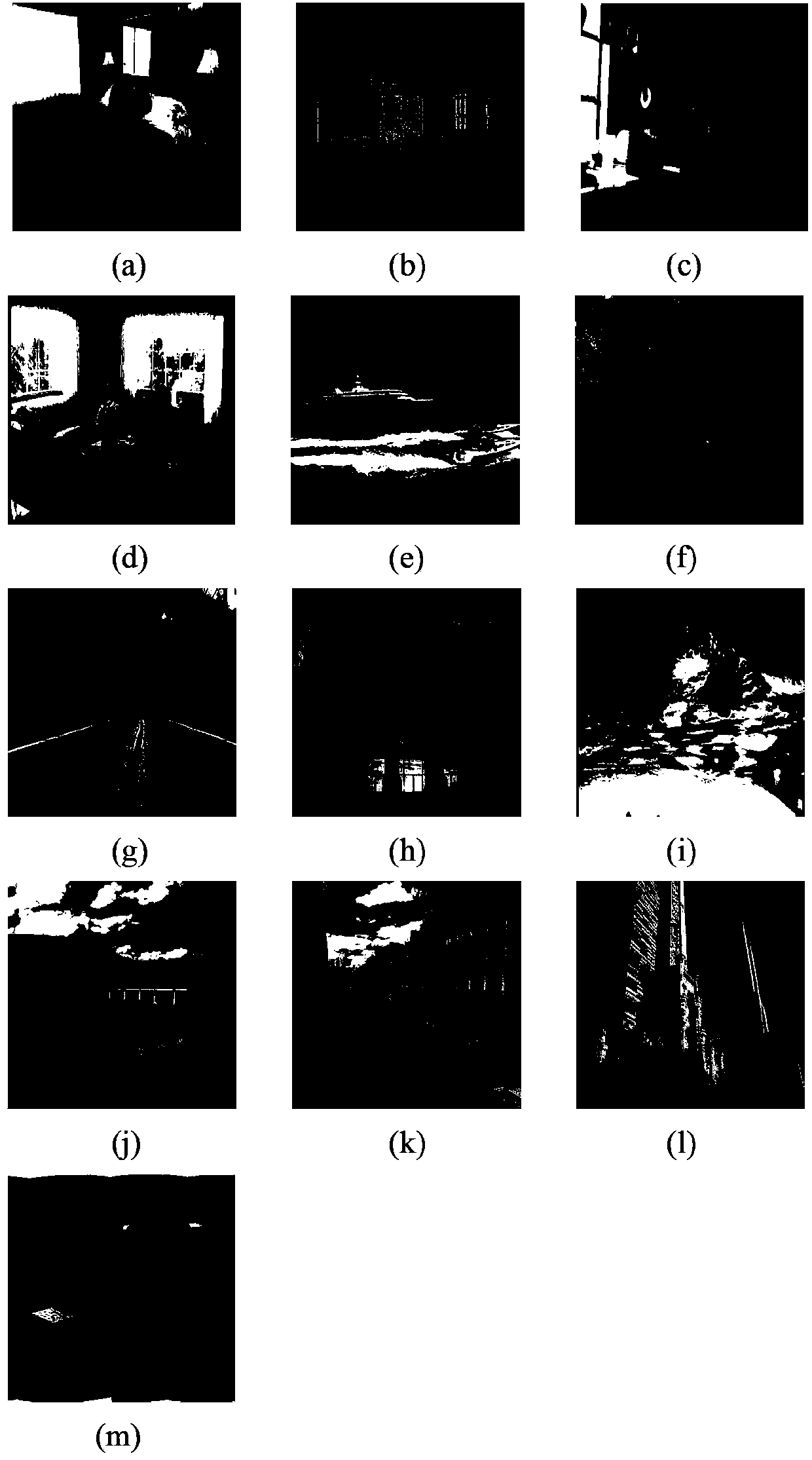

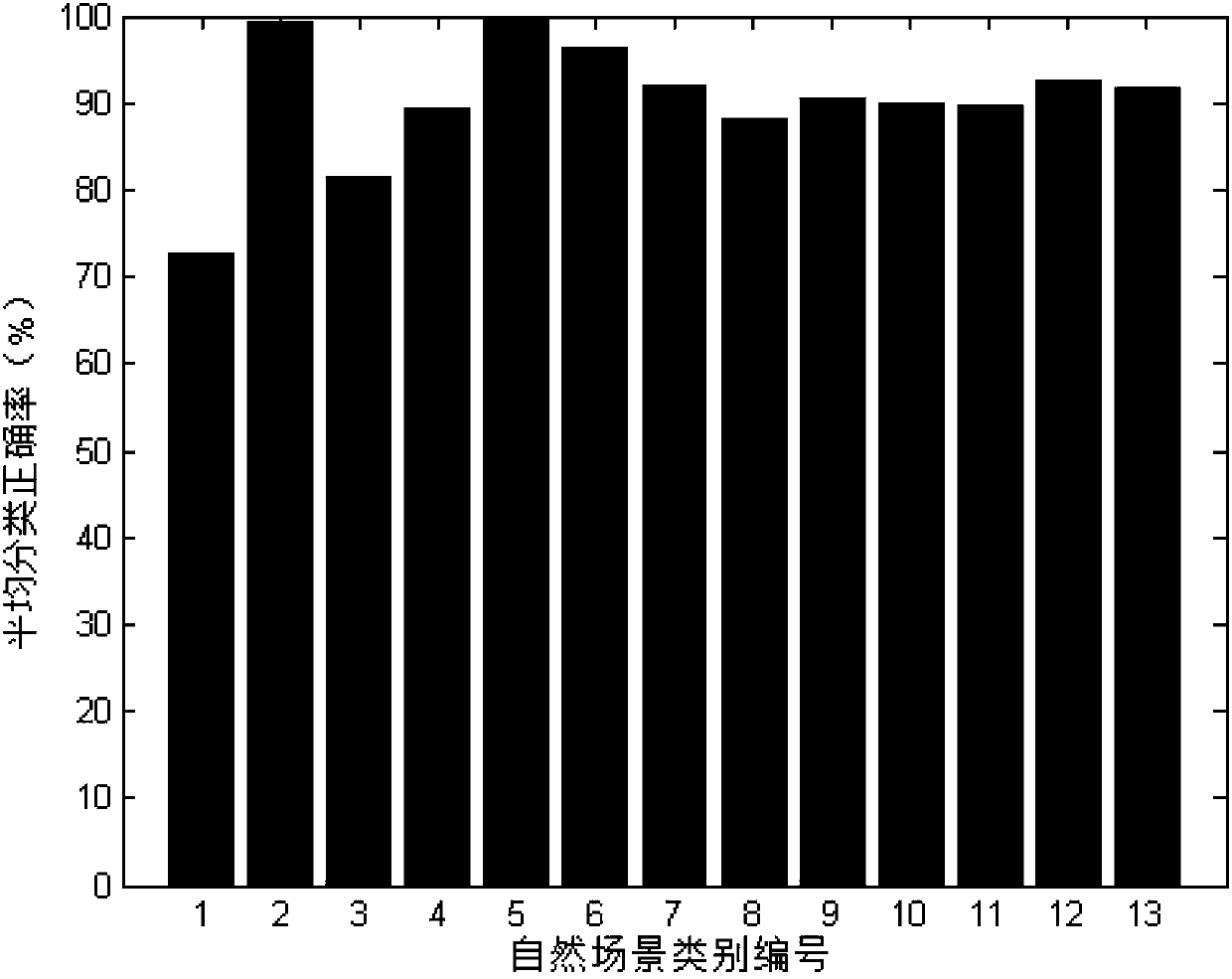

Multi-scale dictionary natural scene image classification method based on latent Dirichlet model

Inactive | CN103390046A | Rich scale information | Improve accuracy | Character and pattern recognition | Special data processing applications | Classification methods | Goal recognition

The invention discloses a multi-scale dictionary scene image classification method based on latent Dirichlet allocation. It mainly aims to solve two problems of traditional classification methods: a heavy manual labeling workload and low classification accuracy. The implementation steps are as follows:

- establishing a training set and a test set for natural scene image classification;
- extracting scale-invariant features from the training set to generate a multi-scale dictionary;
- mapping images onto the multi-scale dictionary and generating multi-scale sparse representation vectors with a BOW (bag-of-words) model;
- building a latent semantic topic model of these vectors by Gibbs sampling to obtain the latent topic distribution of each image, and from it a natural scene image classification model;
- classifying natural scene images with the classification model.

By combining multi-scale features with a latent semantic topic model, the method enriches the image feature information, avoids a large amount of manual labeling, and improves classification accuracy. It can be used for object recognition and for vehicle and robot navigation.

Owner:XIDIAN UNIV
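
The Gibbs-sampling step can be illustrated with a compact collapsed Gibbs sampler for LDA over bag-of-words documents. Here each "document" is an image, given as a list of visual-word indices from the dictionary. This is a generic textbook sampler, not the patent's implementation. The hyperparameters `alpha` and `beta`, the iteration count and the seed are illustrative assumptions.

```python
import random


def lda_gibbs(docs, n_topics, vocab_size, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of documents, each a list of word ids in [0, vocab_size).
    Returns theta, the per-document topic distributions.
    """
    rng = random.Random(seed)
    doc_topic = [[0] * n_topics for _ in docs]                 # n_dk
    topic_word = [[0] * vocab_size for _ in range(n_topics)]   # n_kw
    topic_total = [0] * n_topics                               # n_k
    assignments = []

    # Random initial topic for every token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    topics = range(n_topics)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # p(z = t | rest) is proportional to
                # (n_dt + alpha) * (n_tw + beta) / (n_t + V * beta)
                weights = [
                    (doc_topic[d][t] + alpha) * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in topics
                ]
                k = rng.choices(topics, weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    # theta_dt = (n_dt + alpha) / (N_d + K * alpha)
    return [
        [(doc_topic[d][t] + alpha) / (len(doc) + n_topics * alpha) for t in topics]
        for d, doc in enumerate(docs)
    ]
```

The resulting `theta` rows (one topic distribution per image) are what a downstream classifier would consume as image features.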

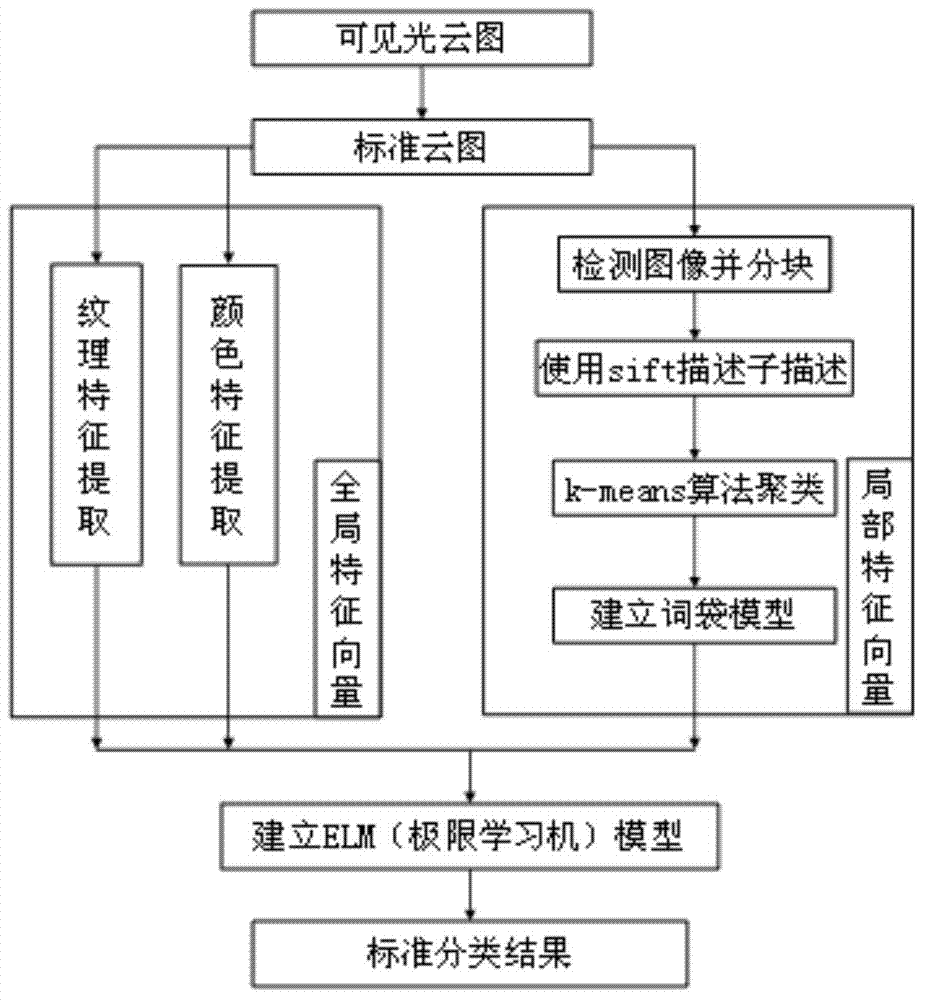

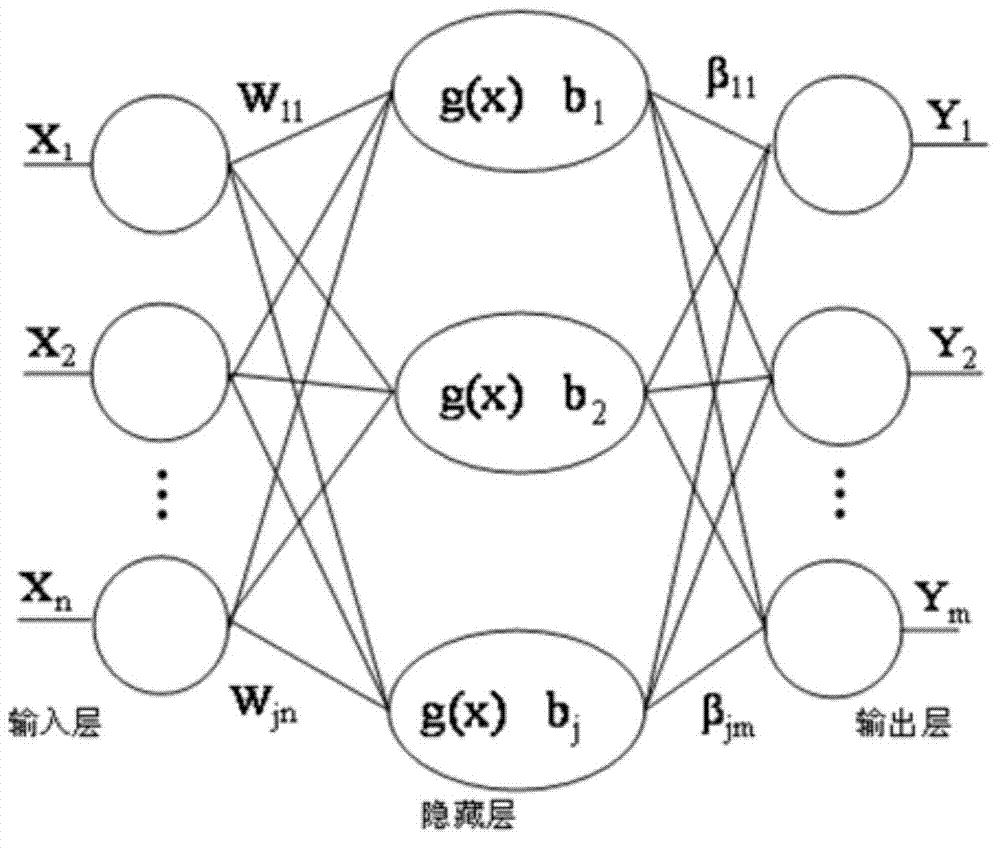

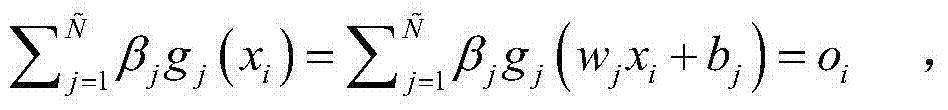

Classification method for ground-based visible-light cloud images

Inactive | CN103699902A | Accurate classification effect | Character and pattern recognition | Learning machine | Feature vector

The invention discloses a classification method for ground-based visible-light cloud images. The method comprises the following steps:

1. Preprocess the ground-based visible-light cloud images to obtain standard cloud images. Randomly select some of them as training samples and use the rest as test samples, with more training samples than test samples.
2. Extract global features of the standard cloud images. These comprise texture features (gray-level co-occurrence matrices and Tamura features) and color features.
3. Build a bag-of-words model on SIFT (scale-invariant feature transform) descriptors to extract local features of the standard cloud images.
4. Linearly fuse the global features from step 2 with the local features from step 3, and train an extreme learning machine model on the training samples to obtain a cloud image classifier.
5. Classify the test samples with the cloud image classifier to obtain the final classification result.

The method classifies ground-based visible-light cloud images more accurately.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
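
Step 4 says only that global and local features are "linearly fused". One common reading, assumed here, is to L2-normalize each part and concatenate the parts with a weight. This keeps either the texture/color descriptor or the bag-of-words histogram from dominating by scale alone. The weight `w_global` is a hypothetical parameter. The extreme learning machine that consumes the fused vector is not shown.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned as-is)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def fuse_features(global_feat, local_hist, w_global=0.5):
    """Weighted concatenation of normalized global features (e.g. GLCM, Tamura,
    color statistics) and a normalized SIFT bag-of-words histogram."""
    g = l2_normalize(global_feat)
    loc = l2_normalize(local_hist)
    return [w_global * x for x in g] + [(1.0 - w_global) * x for x in loc]


if __name__ == "__main__":
    print(fuse_features([3.0, 4.0], [0.0, 2.0]))  # approximately [0.3, 0.4, 0.0, 0.5]
```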

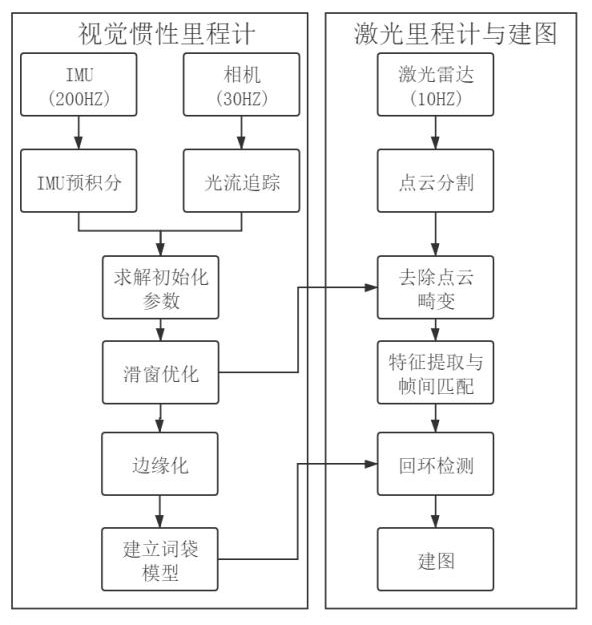

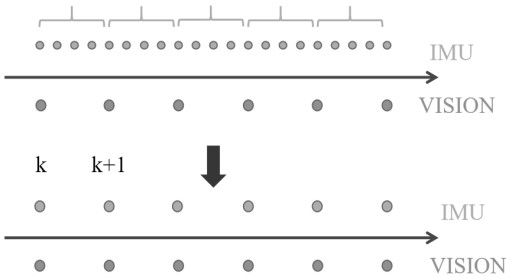

Outdoor large-scale scene three-dimensional mapping method fusing multiple sensors

Active | CN112634451A | Easy to adapt | High loop detection accuracy | Image enhancement | Image analysis | Feature extraction | Multiple sensor

The invention provides a three-dimensional mapping method for large outdoor scenes that fuses multiple sensors. The implementation is divided into two modules: a visual-inertial odometry module, and a lidar odometry and mapping module. The visual-inertial odometry module comprises optical-flow tracking, IMU pre-integration, initialization, sliding-window optimization, marginalization and bag-of-words model construction. The lidar odometry and mapping module comprises point cloud segmentation, point cloud de-distortion, feature extraction and inter-frame matching, loop closure detection, and mapping. Compared with a lidar-only mapping scheme, the method also fuses the high-frequency pose from the visual-inertial odometry. This gives better point cloud de-distortion, higher loop closure detection accuracy and higher mapping accuracy. The method addresses the low accuracy of three-dimensional maps of large outdoor scenes and supports the further development of autonomous driving.

Owner:FUZHOU UNIV
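
The bag-of-words model in the visual-inertial module makes loop closure detection cheap. Each keyframe becomes a sparse visual-word vector, and an earlier keyframe that scores highly against the current one is a loop candidate. The sketch below uses the L1 similarity score popularized by DBoW2. The abstract does not say whether this patent uses that particular score, and the `min_gap` and `threshold` values are illustrative assumptions.

```python
def l1_score(v1, v2):
    """Similarity in [0, 1] between sparse BoW vectors {word_id: weight}:

        s = 1 - 0.5 * || v1/|v1|_1 - v2/|v2|_1 ||_1
    """
    n1 = sum(abs(x) for x in v1.values())
    n2 = sum(abs(x) for x in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(w, 0) / n1 - v2.get(w, 0) / n2) for w in words)
    return 1.0 - 0.5 * diff


def detect_loop(query, history, min_gap=30, threshold=0.3):
    """Return the index of the best-matching earlier keyframe, or None.

    The most recent `min_gap` keyframes are skipped so that a frame is not
    matched against its immediate neighbours.
    """
    best_idx, best_score = None, threshold
    for idx, bow in enumerate(history[: max(0, len(history) - min_gap)]):
        score = l1_score(query, bow)
        if score >= best_score:
            best_idx, best_score = idx, score
    return best_idx


if __name__ == "__main__":
    keyframes = [{1: 1.0, 2: 1.0}, {3: 1.0}, {4: 1.0}]
    print(detect_loop({1: 1.0, 2: 1.0}, keyframes, min_gap=1))  # 0
```

In a full pipeline a candidate returned here would still be verified geometrically (for example by point cloud registration) before being accepted as a loop closure.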

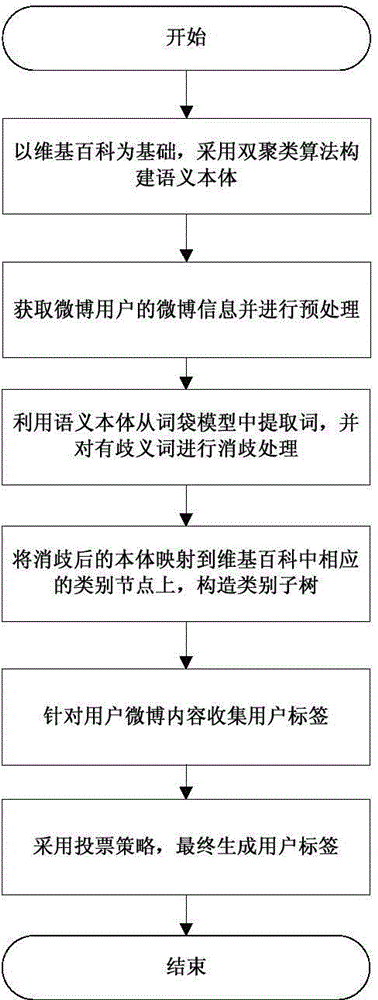

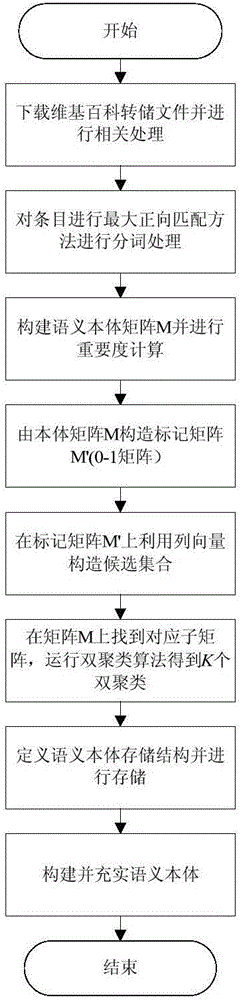

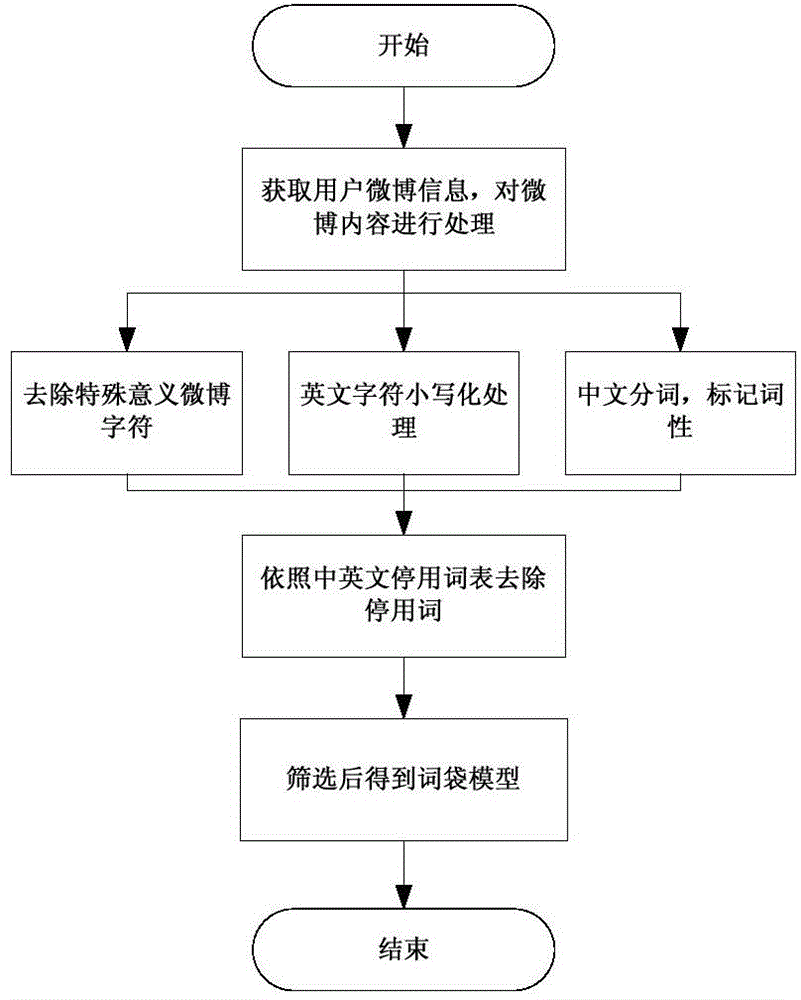

Biclustering-based algorithm for automatically generating microblog user labels

Inactive | CN104598588A | Improve accuracy | Special data processing applications | Microblogging | Bag-of-words model

The invention discloses a biclustering-based algorithm for automatically generating microblog user labels. The algorithm comprises the following steps:

- constructing a semantic ontology from Wikipedia with a biclustering algorithm;
- obtaining and preprocessing a microblog user's posts to build a bag-of-words model;
- extracting words from the bag-of-words model using the semantic ontology, and disambiguating ambiguous words;
- mapping the disambiguated words to the corresponding category nodes in Wikipedia to construct a category subtree;
- collecting candidate labels from the user's microblog content;
- finally, generating the user labels with a voting strategy.

Because the ontology is built from Wikipedia by biclustering, the algorithm can locate the Wikipedia concepts that words in microblog text refer to. It then disambiguates ambiguous words so that categories correspond accurately to words, and generates highly accurate labels for the user.

Owner:HOHAI UNIV
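
The final voting step can be sketched as follows: each Wikipedia category receives one vote per disambiguated word that maps to it, and the most-voted categories are kept as labels. The word-to-category table and `top_n` are hypothetical. The sketch assumes that the biclustering ontology and disambiguation have already run.

```python
from collections import Counter


def vote_labels(words, word_to_categories, top_n=3):
    """Each word votes once for every category it maps to; return the
    top_n categories by vote count (ties keep first-seen order)."""
    votes = Counter()
    for word in words:
        for category in word_to_categories.get(word, ()):
            votes[category] += 1
    return [category for category, _ in votes.most_common(top_n)]


if __name__ == "__main__":
    mapping = {  # hypothetical output of the disambiguation step
        "python": ["Programming"],
        "java": ["Programming"],
        "football": ["Sports"],
    }
    words = ["python", "java", "python", "football", "unknown"]
    print(vote_labels(words, mapping, top_n=2))  # ['Programming', 'Sports']
```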

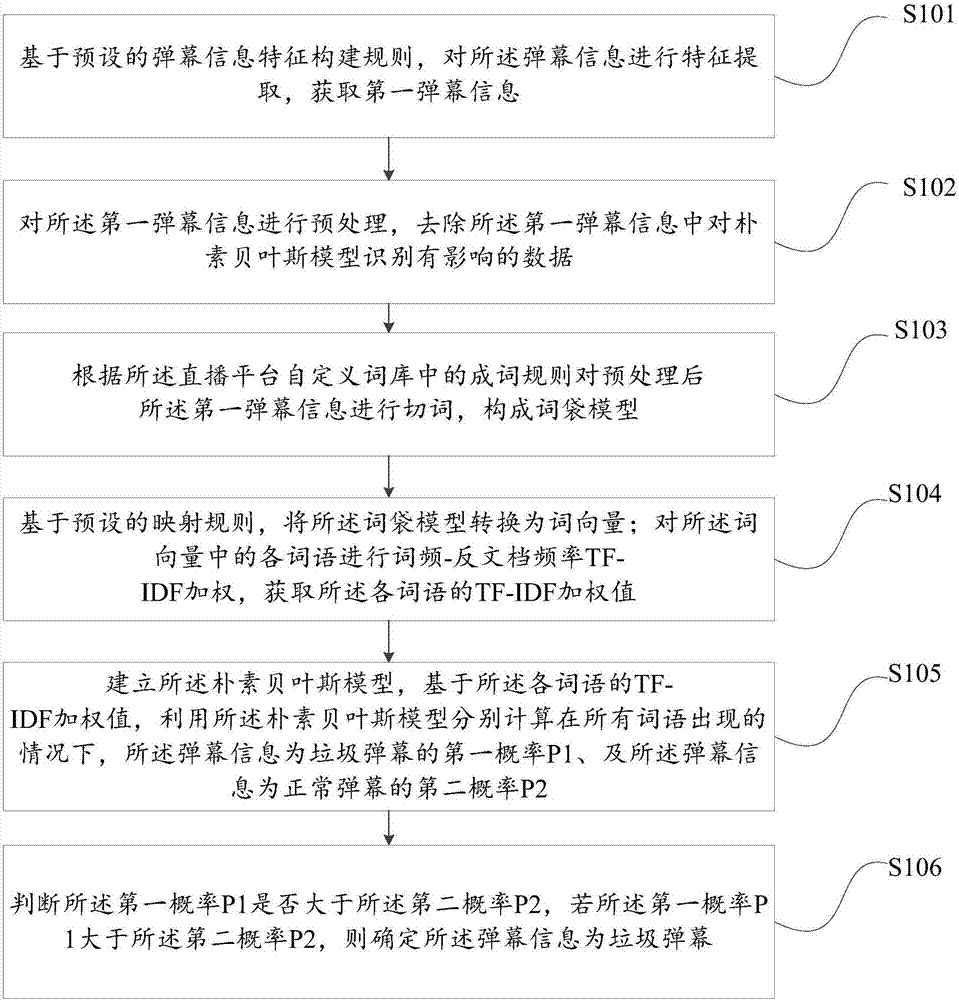

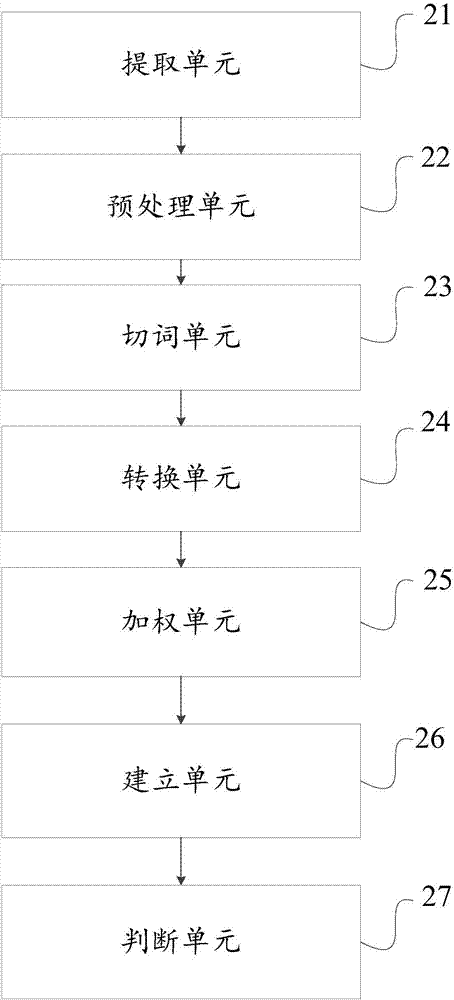

Method, device and computer equipment for identifying spam bullet comments (danmaku)

Active | CN107480123A | Express true meaning | High precision | Unstructured textual data retrieval | Natural language data processing | Feature extraction | Algorithm

The invention provides a method, a device and computer equipment for identifying spam bullet comments (danmaku). The method comprises the following steps:

- constructing rules from preset bullet-comment characteristics, and extracting features from the bullet-comment information to obtain first bullet-comment information;
- segmenting the first bullet-comment information into terms according to the term-formation rules of the live-streaming platform's custom term library, and forming a bag-of-words model;
- converting the bag-of-words model into a term vector according to preset mapping rules, applying term frequency-inverse document frequency (TF-IDF) weighting to the terms in the vector, and obtaining the TF-IDF weight of each term;
- building a naive Bayes model and, based on the TF-IDF weights, using it to calculate two probabilities given that all the terms appear: a first probability P1 that the bullet comment is spam, and a second probability P2 that it is a normal comment;
- judging whether P1 is greater than P2 and, if so, determining that the bullet comment is spam.

Owner:WUHAN DOUYU NETWORK TECH CO LTD
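
The classification step can be sketched as a multinomial naive Bayes classifier whose term counts are replaced by TF-IDF weights. To avoid floating-point underflow, it compares log P1 with log P2 rather than the raw probabilities. The smoothed IDF formula, the Laplace smoothing, the `"spam"`/`"normal"` labels and the toy training comments are assumptions for illustration. The patent's rule-based feature extraction and custom term library are not reproduced.

```python
import math
from collections import Counter, defaultdict


class TfidfNaiveBayes:
    """Multinomial naive Bayes with TF-IDF weights in place of raw counts."""

    def fit(self, docs, labels):
        self.n_docs = len(docs)
        self.df = Counter(t for d in docs for t in set(d))  # document frequency
        self.vocab = set(self.df)
        self.class_count = Counter(labels)
        self.weights = defaultdict(Counter)  # class -> term -> summed TF-IDF
        for doc, label in zip(docs, labels):
            for term, w in self._tfidf(doc).items():
                self.weights[label][term] += w
        return self

    def _idf(self, term):
        # Smoothed IDF (an assumption): log((1 + N) / (1 + df)) + 1
        return math.log((1 + self.n_docs) / (1 + self.df.get(term, 0))) + 1

    def _tfidf(self, doc):
        tf = Counter(doc)
        return {t: c / len(doc) * self._idf(t) for t, c in tf.items()}

    def log_prob(self, doc, label):
        """log P(label) + sum of log P(term | label), with Laplace smoothing."""
        total = sum(self.weights[label].values())
        lp = math.log(self.class_count[label] / self.n_docs)
        for term in doc:
            lp += math.log((self.weights[label][term] + 1) / (total + len(self.vocab)))
        return lp

    def is_spam(self, doc):
        """True when log P1 (spam) exceeds log P2 (normal)."""
        return self.log_prob(doc, "spam") > self.log_prob(doc, "normal")


if __name__ == "__main__":
    train = [["add", "qq", "free", "gift"], ["free", "gift", "click", "link"],
             ["nice", "play", "team"], ["great", "play", "wow"]]
    model = TfidfNaiveBayes().fit(train, ["spam", "spam", "normal", "normal"])
    print(model.is_spam(["free", "gift"]), model.is_spam(["nice", "play"]))  # True False
```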

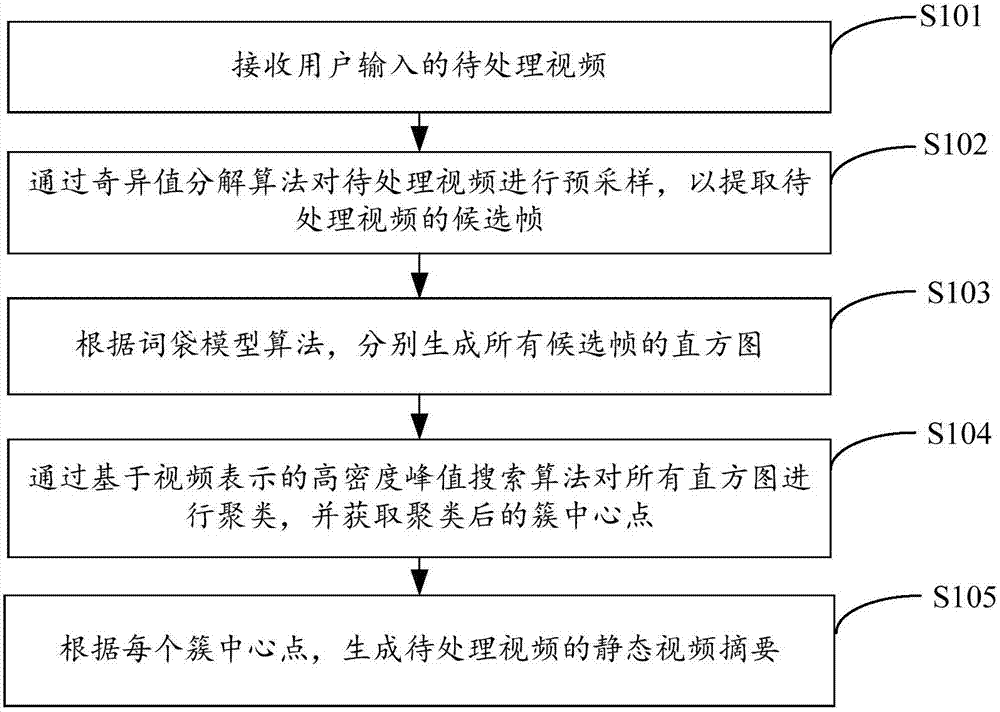

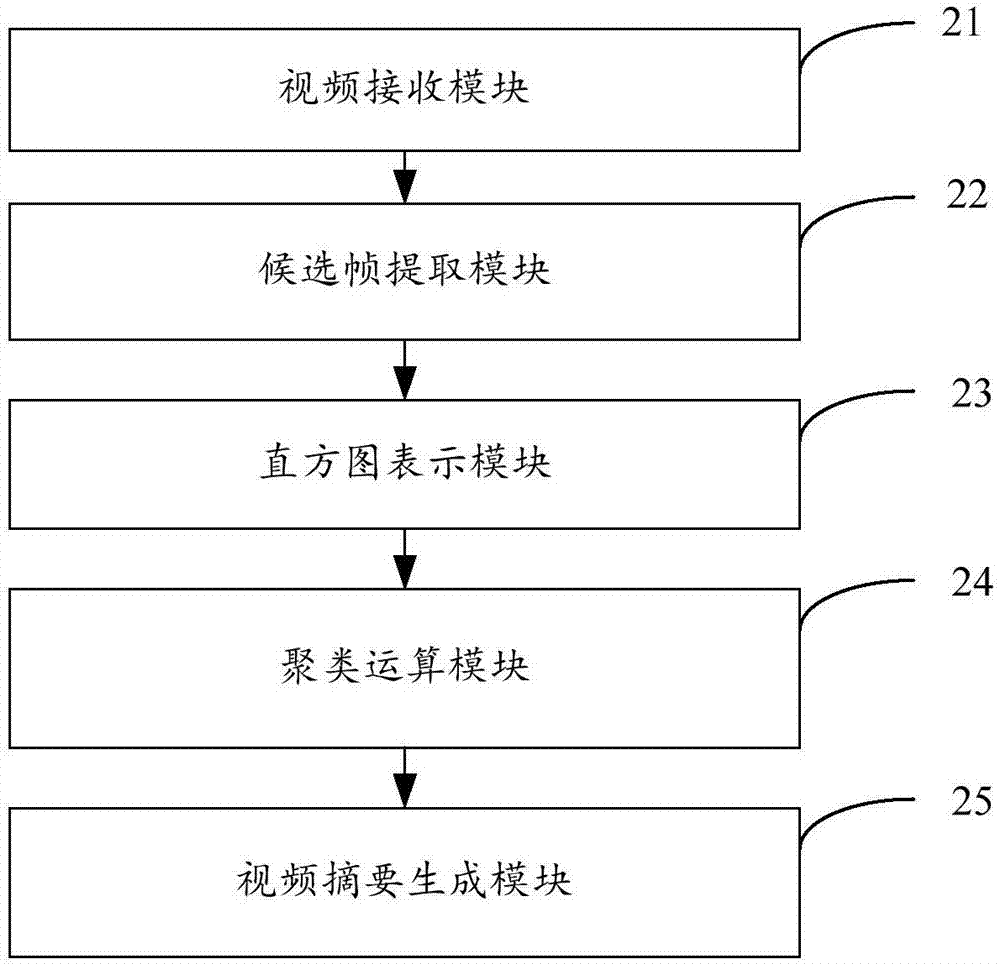

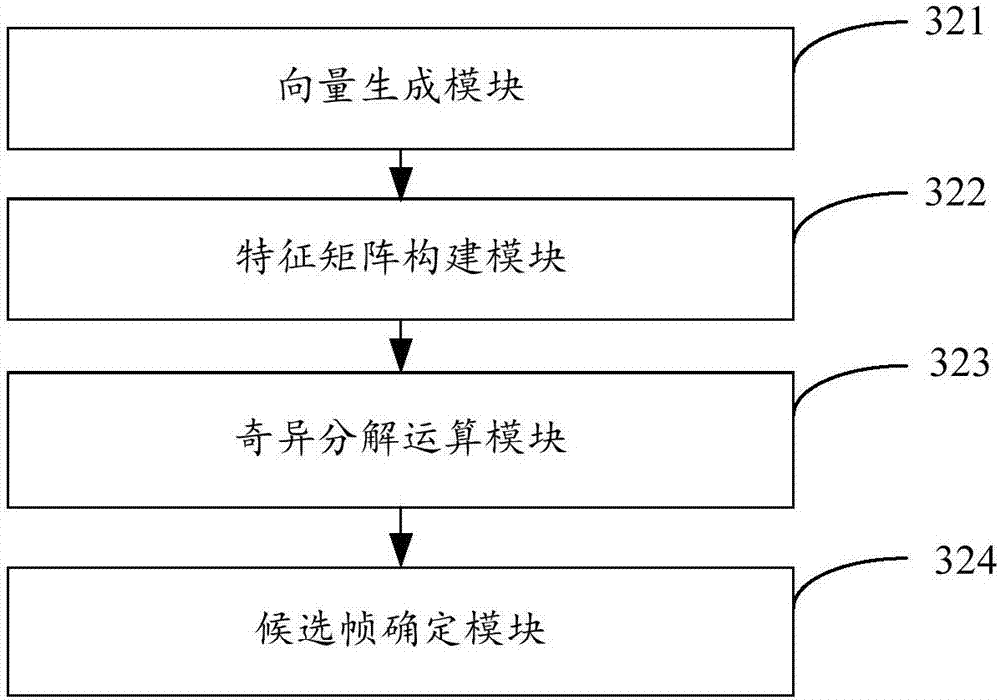

Method and apparatus for generating a still video abstract

Inactive | CN107223344A | Improve the de-redundancy effect | Improve stability | Character and pattern recognition | Selective content distribution | Singular value decomposition | Bag-of-words model

The invention belongs to the field of computer technology and provides a method and apparatus for generating a still video abstract. The method comprises the following steps:

- receiving a to-be-processed video input by a user;
- pre-sampling the video with a singular value decomposition algorithm to extract its candidate frames;
- generating, based on a bag-of-words model, a histogram that represents each candidate frame;
- clustering all histograms with a high-intensity peak search algorithm based on the video representation, and obtaining the cluster centers;
- generating the still video abstract of the video from the cluster centers.

Candidate-frame generation and histogram representation let the method thoroughly remove redundant frames. Cluster centers are generated adaptively during clustering, so the number of clusters need not be set in advance and no iteration is required. This improves the stability and adaptivity of clustering, reduces its time complexity, and improves the efficiency and quality of still video abstract generation.

Owner:SHENZHEN UNIV
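
Representing each candidate frame as a bag-of-words histogram makes frames directly comparable. The patent then clusters the histograms with its peak-search algorithm. The simpler greedy filter below is a swapped-in illustration, not that algorithm. It keeps a frame only if the frame's histogram-intersection similarity to every frame already kept is below a threshold. The per-frame visual-word IDs and the 0.8 threshold are assumptions.

```python
def frame_histogram(word_ids, vocab_size):
    """Normalized bag-of-words histogram of one frame's visual-word IDs."""
    hist = [0.0] * vocab_size
    for w in word_ids:
        hist[w] += 1.0
    n = len(word_ids)
    return [h / n for h in hist] if n else hist


def intersection(h1, h2):
    """Histogram intersection; equals 1 for identical normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def drop_redundant(hists, threshold=0.8):
    """Greedily keep frames that are not too similar to any already-kept frame."""
    kept = []
    for i, h in enumerate(hists):
        if all(intersection(h, hists[j]) < threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    frames = [[0, 0, 1], [0, 0, 1], [2, 2, 3]]  # hypothetical visual-word IDs
    hists = [frame_histogram(f, vocab_size=4) for f in frames]
    print(drop_redundant(hists))  # [0, 2]
```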

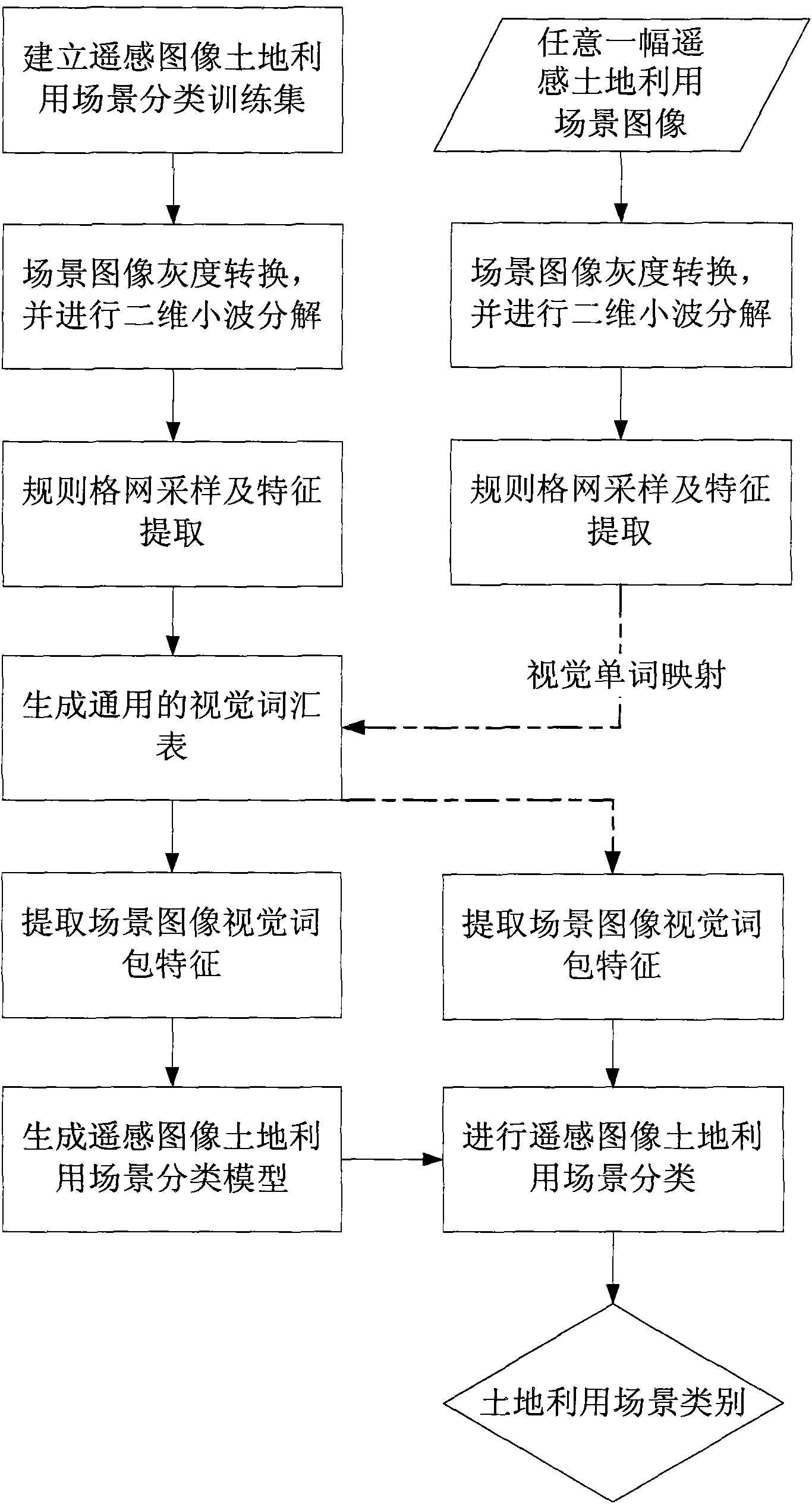

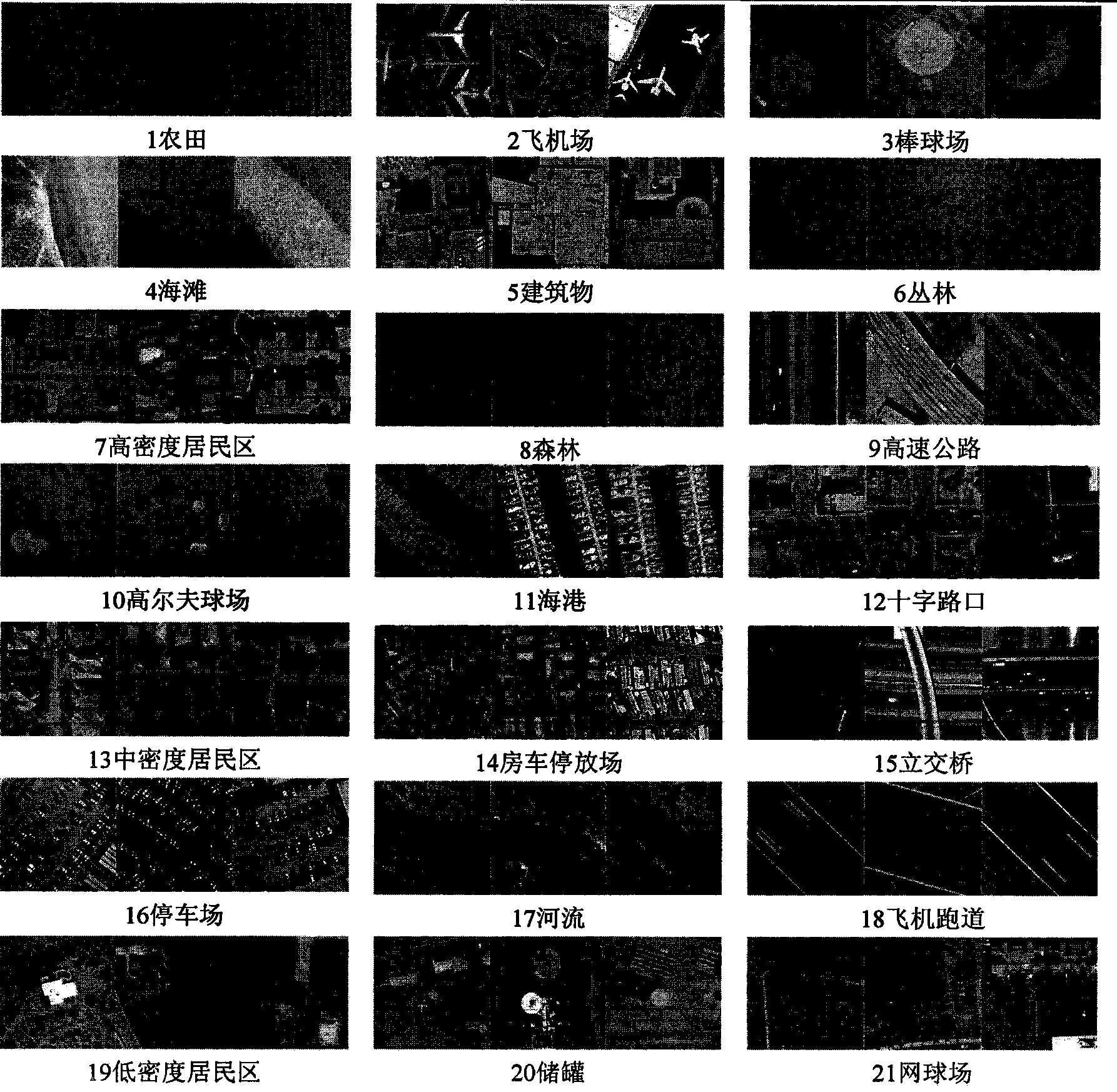

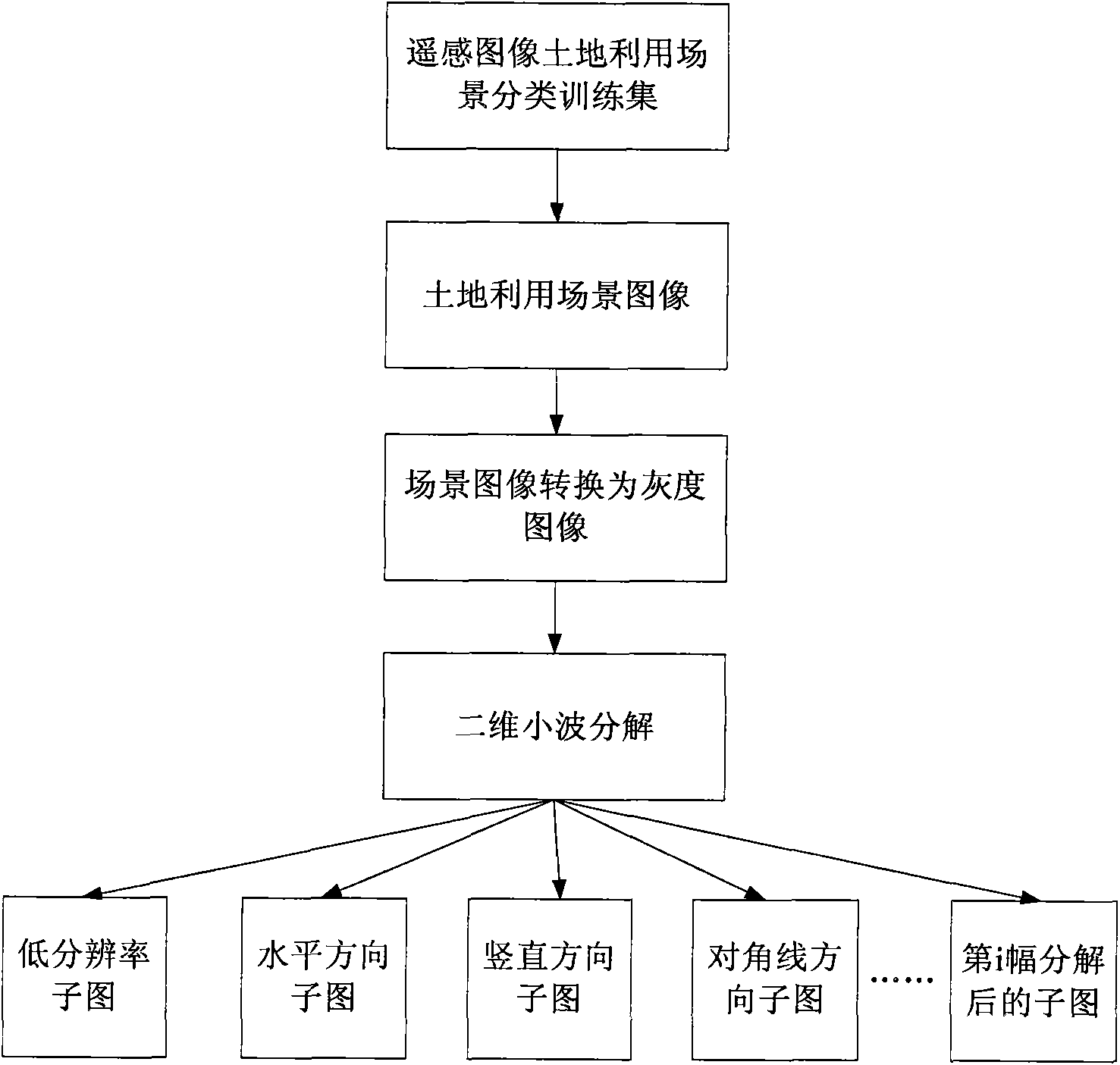

Remote sensing image land-use scene classification method based on two-dimensional wavelet decomposition and a visual bag-of-words model

Active | CN103413142A | Solve the lack of consideration | Improve utilization | Character and pattern recognition | Decomposition | Classification methods

The invention relates to a land-use scene classification method for remote sensing images based on two-dimensional wavelet decomposition and a visual bag-of-words model. The method comprises the following steps:

- building a training set for remote sensing land-use scene classification;
- converting the scene images in the training set to grayscale and applying two-dimensional wavelet decomposition to them;
- performing regular-grid sampling and SIFT extraction on the grayscale images and on the sub-images produced by the decomposition, and independently generating universal visual vocabularies for the grayscale images and for the sub-images by clustering;
- mapping each training image onto the visual words to obtain its bag-of-words features;
- using the bag-of-words features of each training image and its scene category number as training data to generate a classification model with an SVM algorithm;
- classifying the images of each scene with the classification model.

Existing scene classification methods based on the visual bag-of-words model make insufficient use of remote sensing image texture information. This method addresses that problem and can effectively improve scene classification accuracy.

Owner:INST OF REMOTE SENSING & DIGITAL EARTH CHINESE ACADEMY OF SCI
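
The abstract does not specify the wavelet. The simplest choice, assumed here, is a single-level 2-D Haar transform. Each 2x2 block of the grayscale image then yields one approximation coefficient and three detail coefficients, giving the four sub-images on which grid-sampled SIFT features would be extracted. This sketch uses the averaging (non-orthonormal) Haar normalization to keep the numbers readable, and it requires even image dimensions.

```python
def haar2d(image):
    """One level of 2-D Haar decomposition (averaging normalization).

    For each 2x2 block [[a, b], [c, d]] this computes
      approx     = (a + b + c + d) / 4
      horizontal = (a + b - c - d) / 4   # top row minus bottom row
      vertical   = (a - b + c - d) / 4   # left column minus right column
      diagonal   = (a - b - c + d) / 4
    The block is recoverable, e.g. a = approx + horizontal + vertical + diagonal.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even")
    approx, horiz, vert, diag = [], [], [], []
    for y in range(0, rows, 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for x in range(0, cols, 2):
            a, b = image[y][x], image[y][x + 1]
            c, d = image[y + 1][x], image[y + 1][x + 1]
            row_a.append((a + b + c + d) / 4)
            row_h.append((a + b - c - d) / 4)
            row_v.append((a - b + c - d) / 4)
            row_d.append((a - b - c + d) / 4)
        approx.append(row_a)
        horiz.append(row_h)
        vert.append(row_v)
        diag.append(row_d)
    return approx, horiz, vert, diag


if __name__ == "__main__":
    print(haar2d([[4, 2], [0, 2]]))  # ([[2.0]], [[1.0]], [[0.0]], [[1.0]])
```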

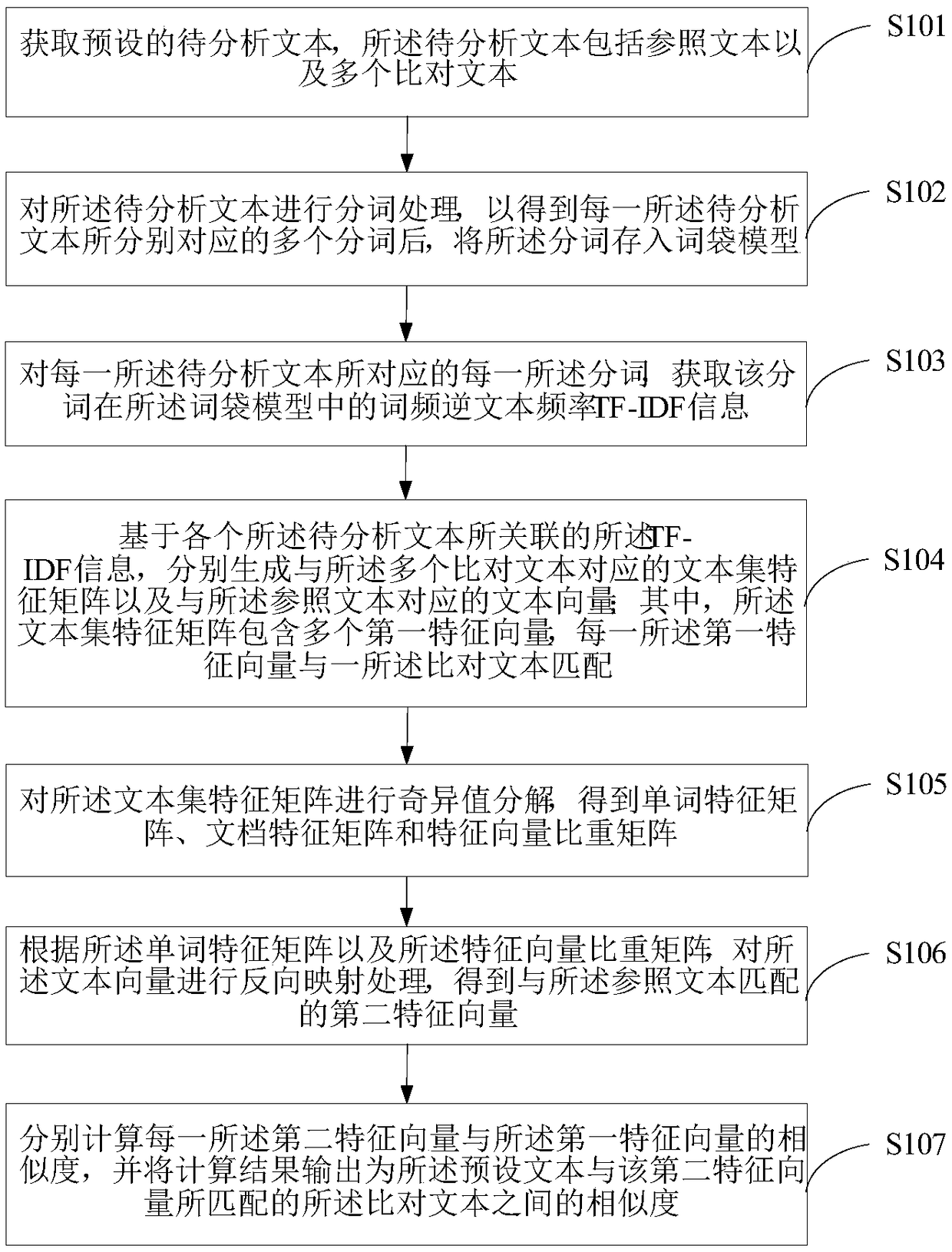

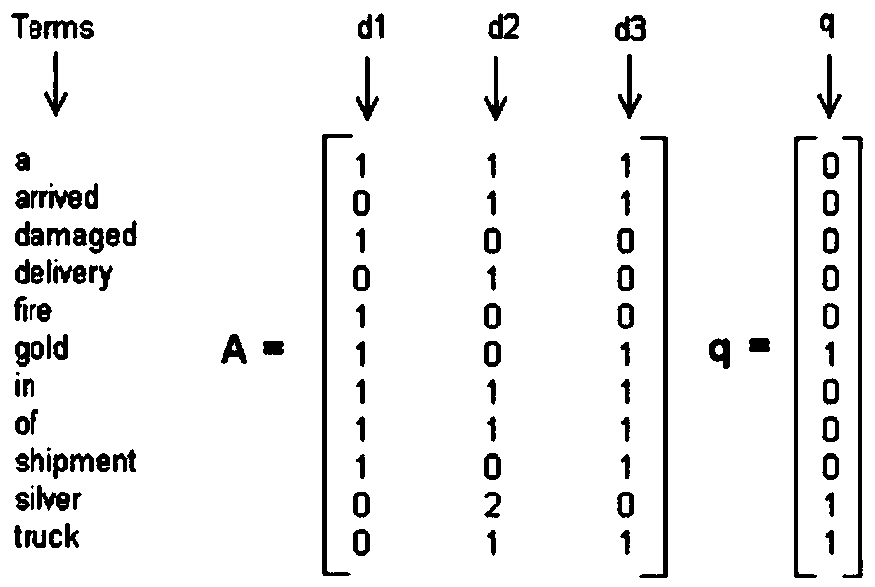

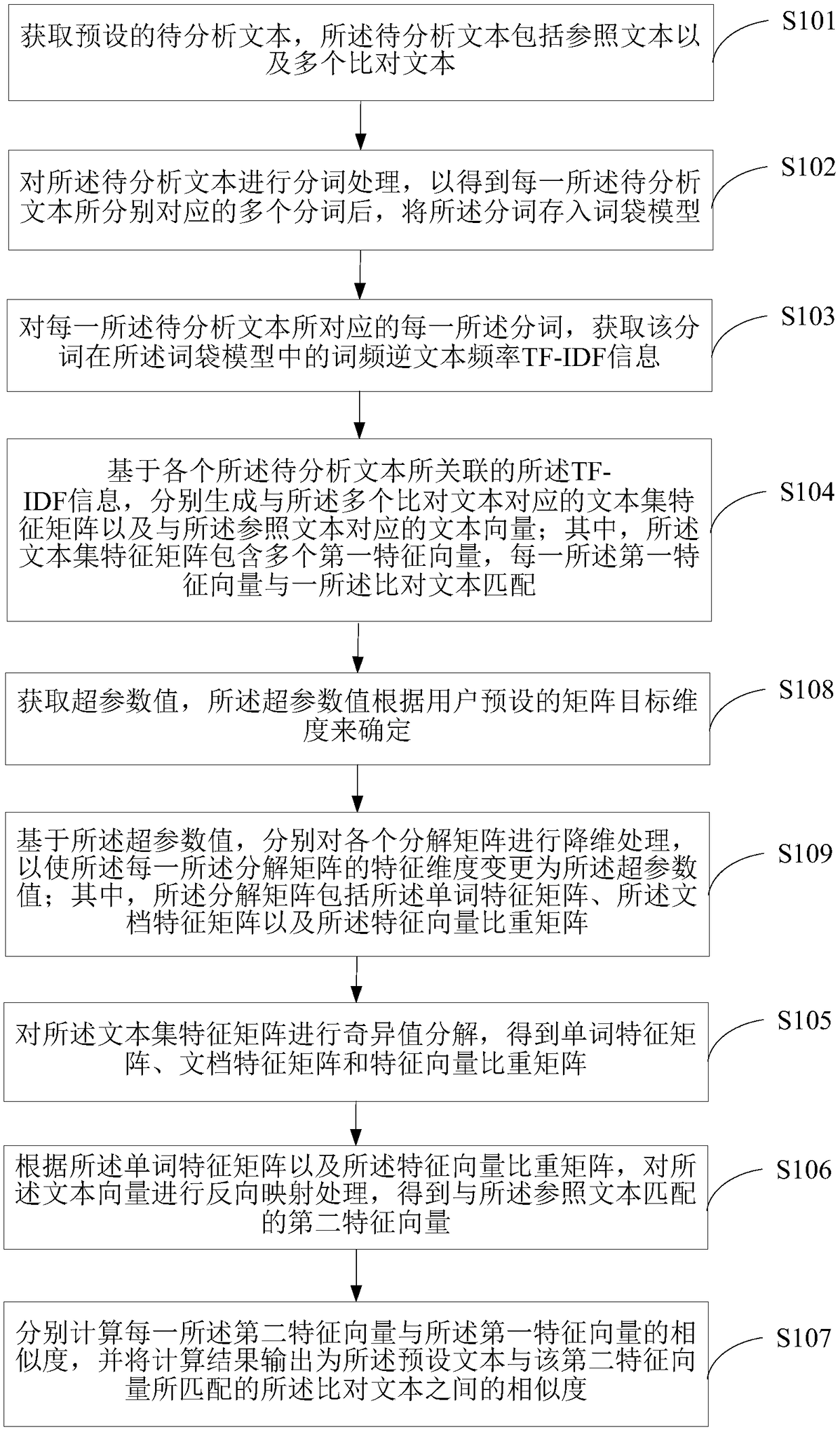

Method for acquiring text similarity, terminal device and medium

Active | CN108710613A | Improve calculation accuracy | Improve comparison efficiency | Natural language data processing | Special data processing applications | Singular value decomposition | Feature vector

The present invention relates to the field of data processing and provides a method for acquiring text similarity, a terminal device and a medium. The method comprises the following steps:

- after obtaining the word segments of each text to be analyzed, storing them in a bag of words;
- obtaining the TF-IDF information of each word segment in the bag-of-words model;
- based on the TF-IDF information associated with each text, generating a text-set feature matrix for the plurality of comparison texts and text vectors for the reference texts;
- performing singular value decomposition on the text-set feature matrix and, using the resulting word feature matrix and feature-vector weight matrix, inverse-mapping the text vectors to obtain second feature vectors;
- calculating the similarity between each second feature vector and the first feature vector, and outputting the result as the similarity between the preset text and the comparison text matched by that second feature vector.

The technical scheme improves both the accuracy of text similarity calculation and the efficiency of text comparison.

Owner:PING AN TECH (SHENZHEN) CO LTD
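
The SVD and inverse-mapping steps correspond to the folding-in operation of latent semantic analysis. The abstract gives neither the exact matrix orientation nor the normalization, so two assumptions are made here. First, the text-set feature matrix $A$ is an $m \times n$ term-by-text TF-IDF matrix (rows are word segments, columns are comparison texts) truncated to rank $k$. Second, $q$ is a reference text's TF-IDF vector over the same terms. Under these assumptions, the standard formulation is:

$$
A \approx U_k \Sigma_k V_k^{\top}, \qquad
\hat{q} = \Sigma_k^{-1} U_k^{\top} q, \qquad
\operatorname{sim}(q, j) = \frac{\hat{q} \cdot \hat{d}_j}{\lVert \hat{q} \rVert \, \lVert \hat{d}_j \rVert}
$$

Here $\hat{d}_j$ is the $j$-th row of $V_k$. Applying the same mapping to the $j$-th column $a_j$ of $A$ gives exactly this row, because $U_k^{\top} A = \Sigma_k V_k^{\top}$ and therefore $\Sigma_k^{-1} U_k^{\top} a_j = \hat{d}_j$. Reference and comparison texts are thus compared in the same $k$-dimensional latent space.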

© 2025 PatSnap. All rights reserved.