38 results for "Cross modality" patented technology

Cross-modality translation is the process of converting the affective, sensory, or evaluative perception of pain into a graded number, word, line, or color scale (e.g., …). However, it should be noted that many cross-modality equivalence classes have been demonstrated.

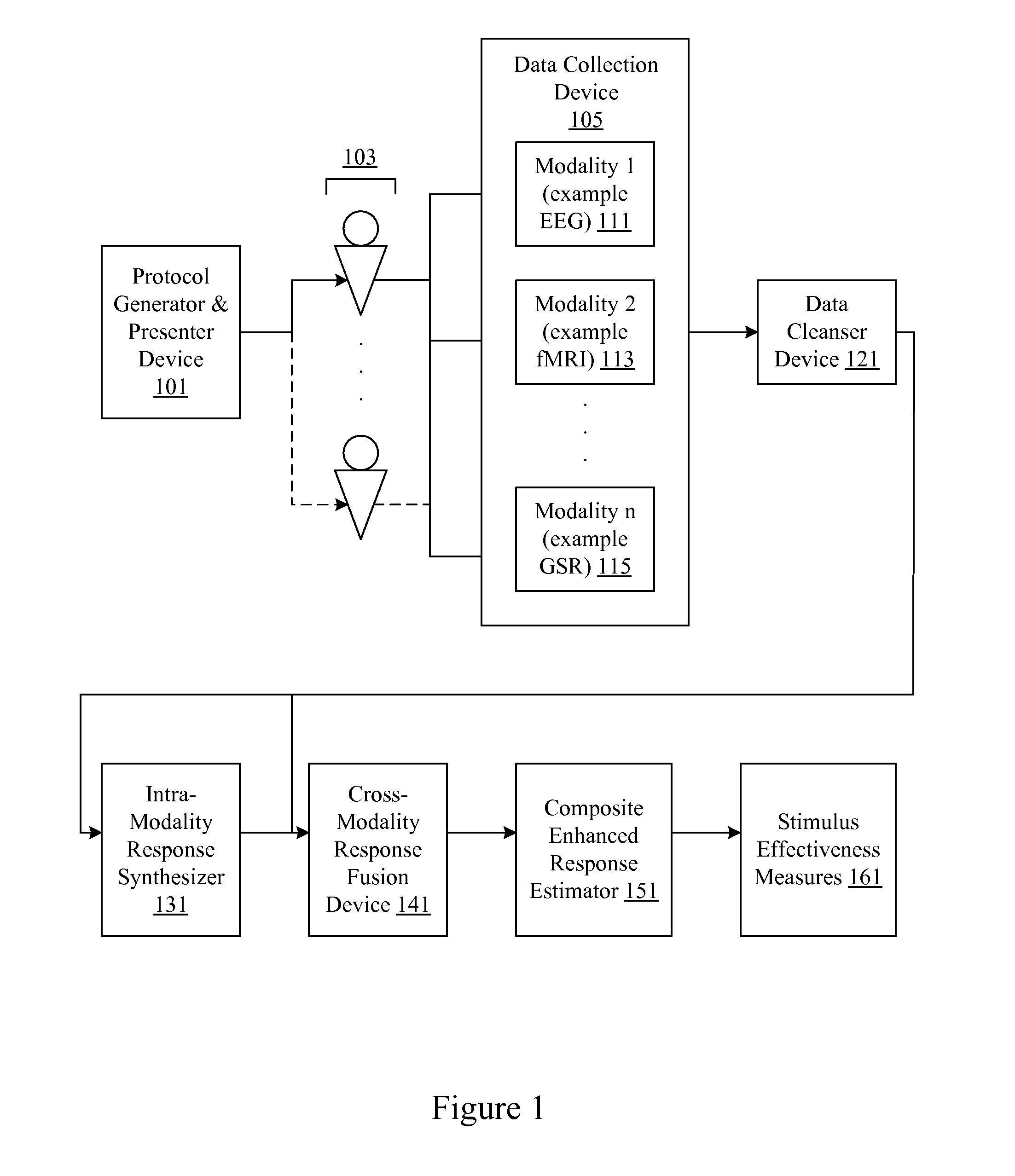

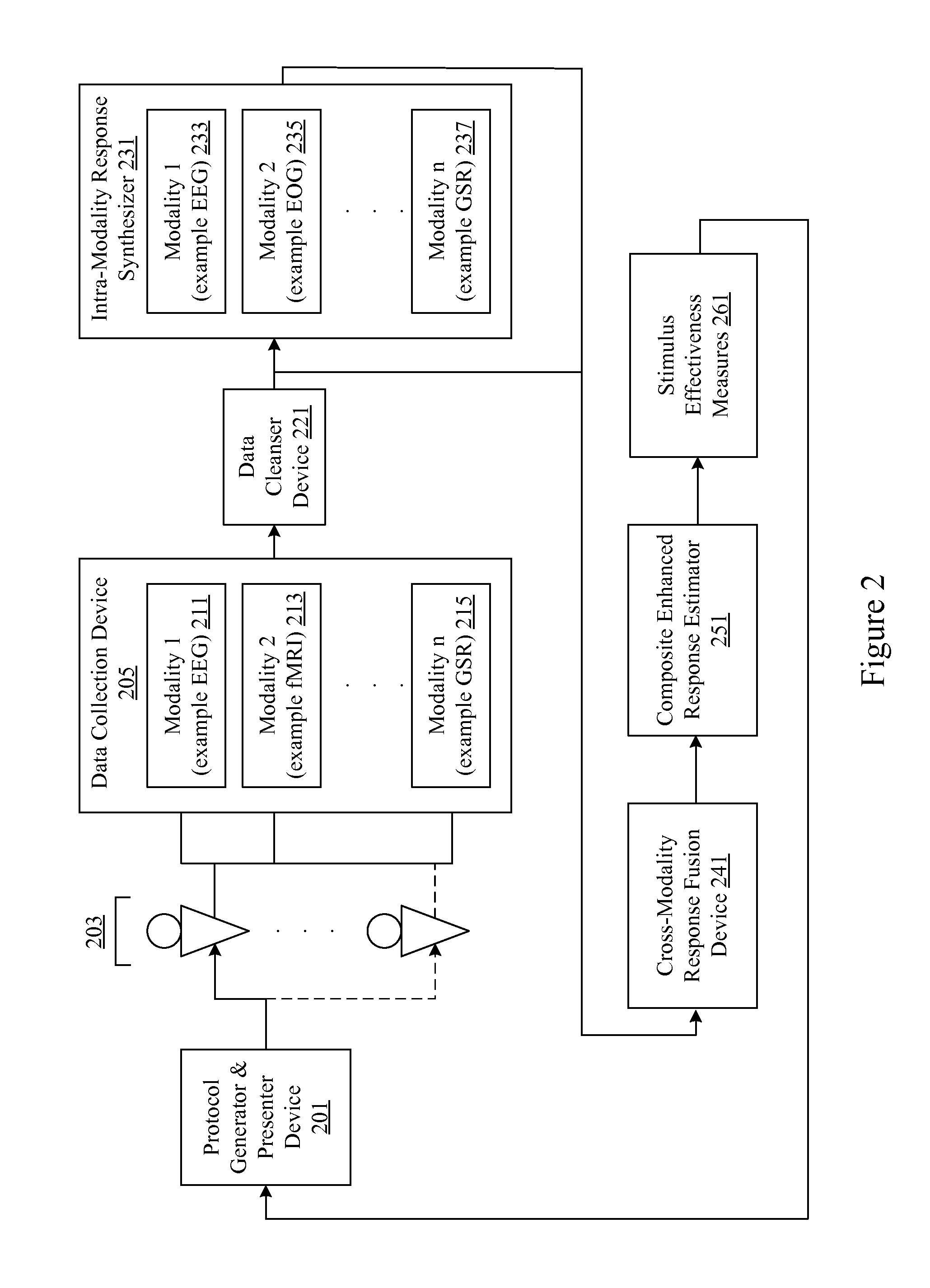

Audience response analysis using simultaneous electroencephalography (EEG) and functional magnetic resonance imaging (fMRI)

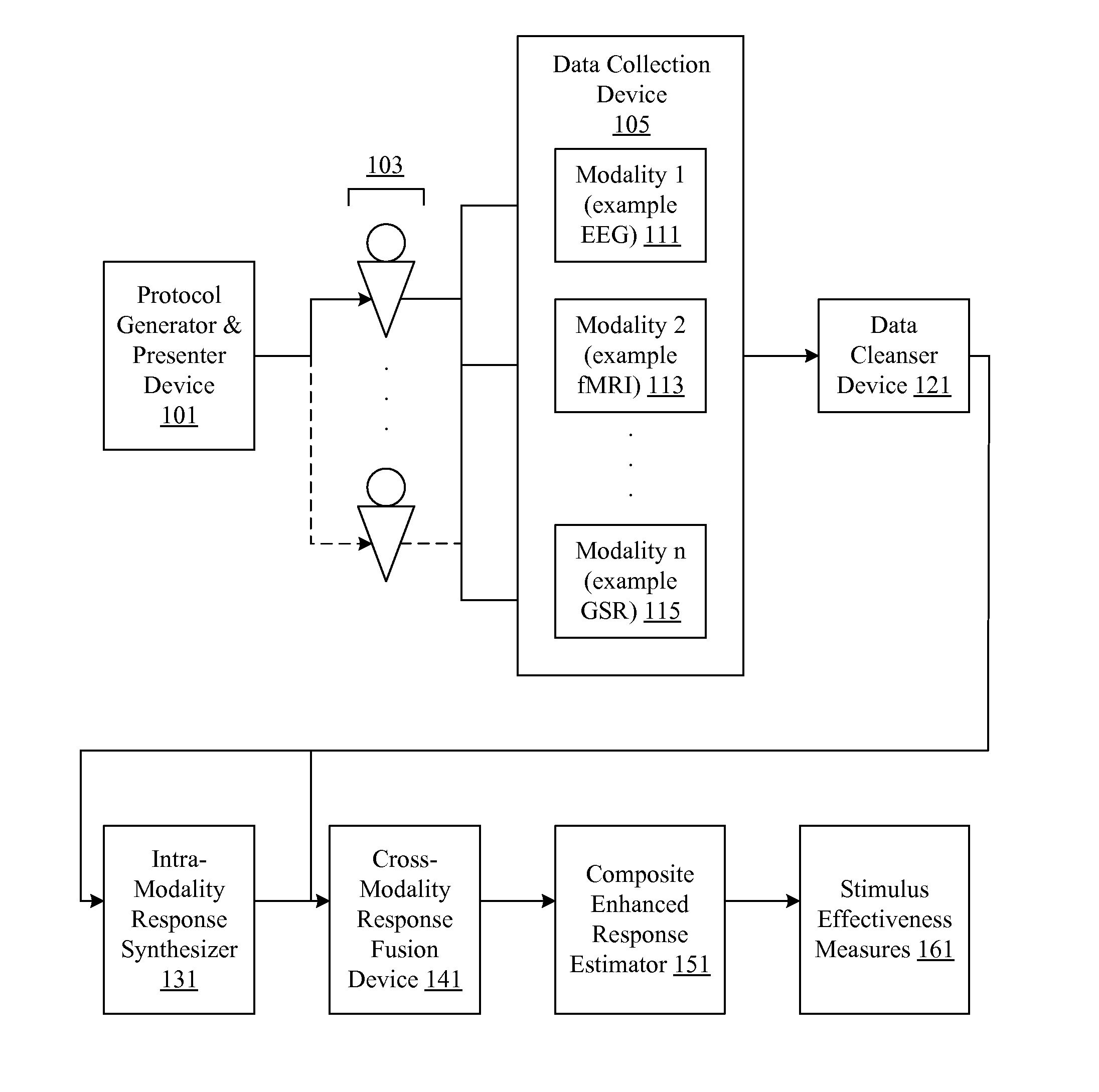

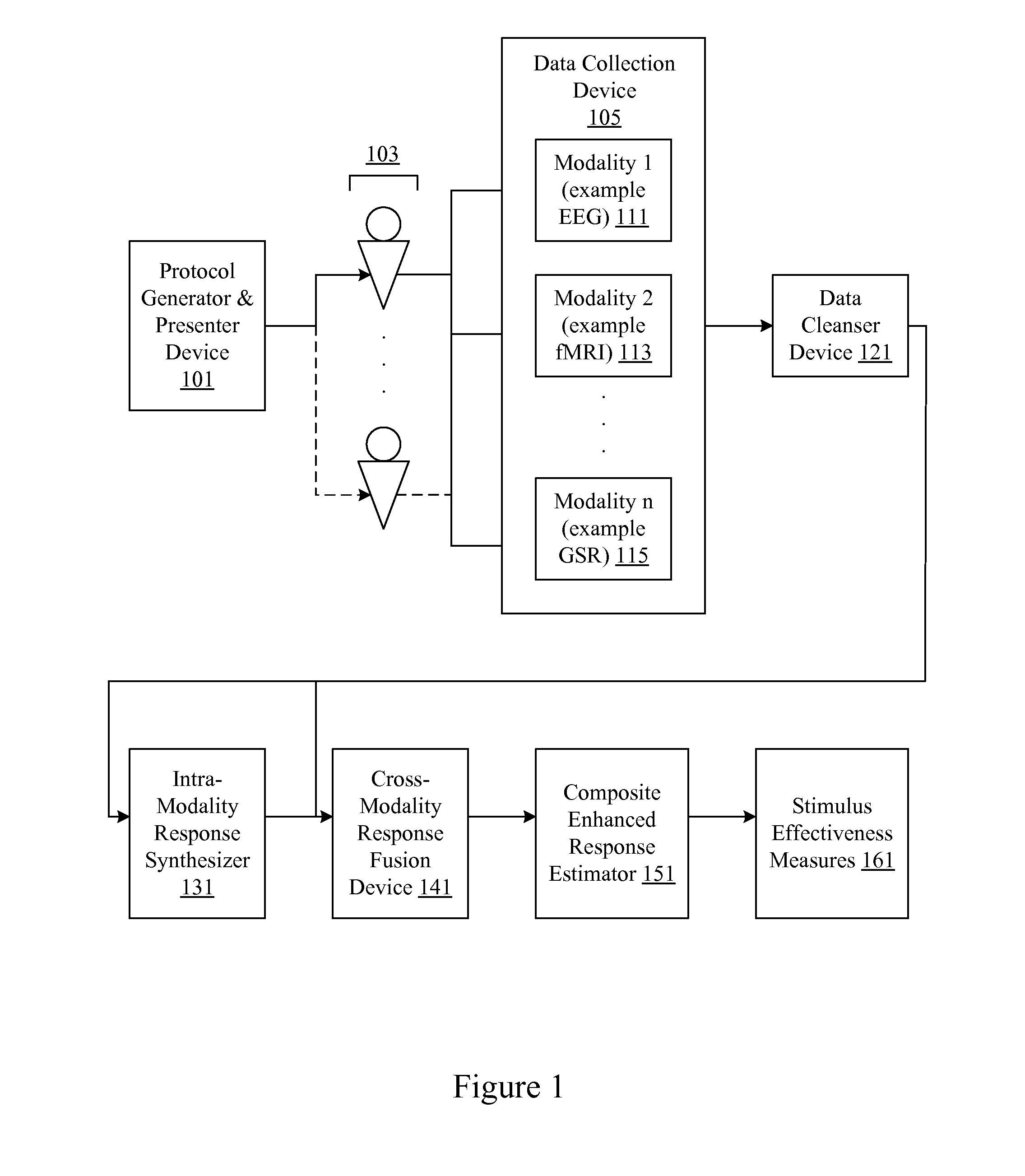

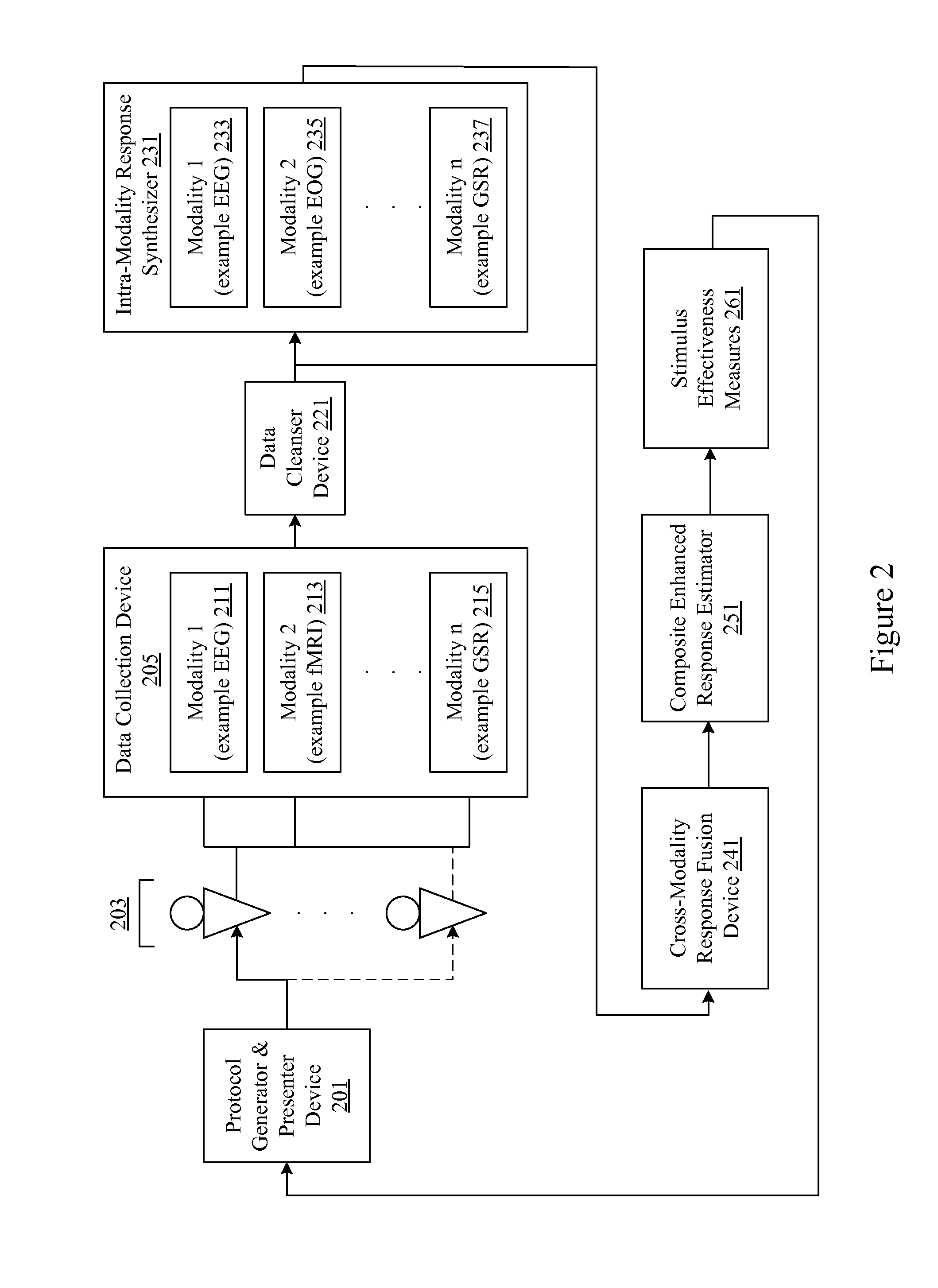

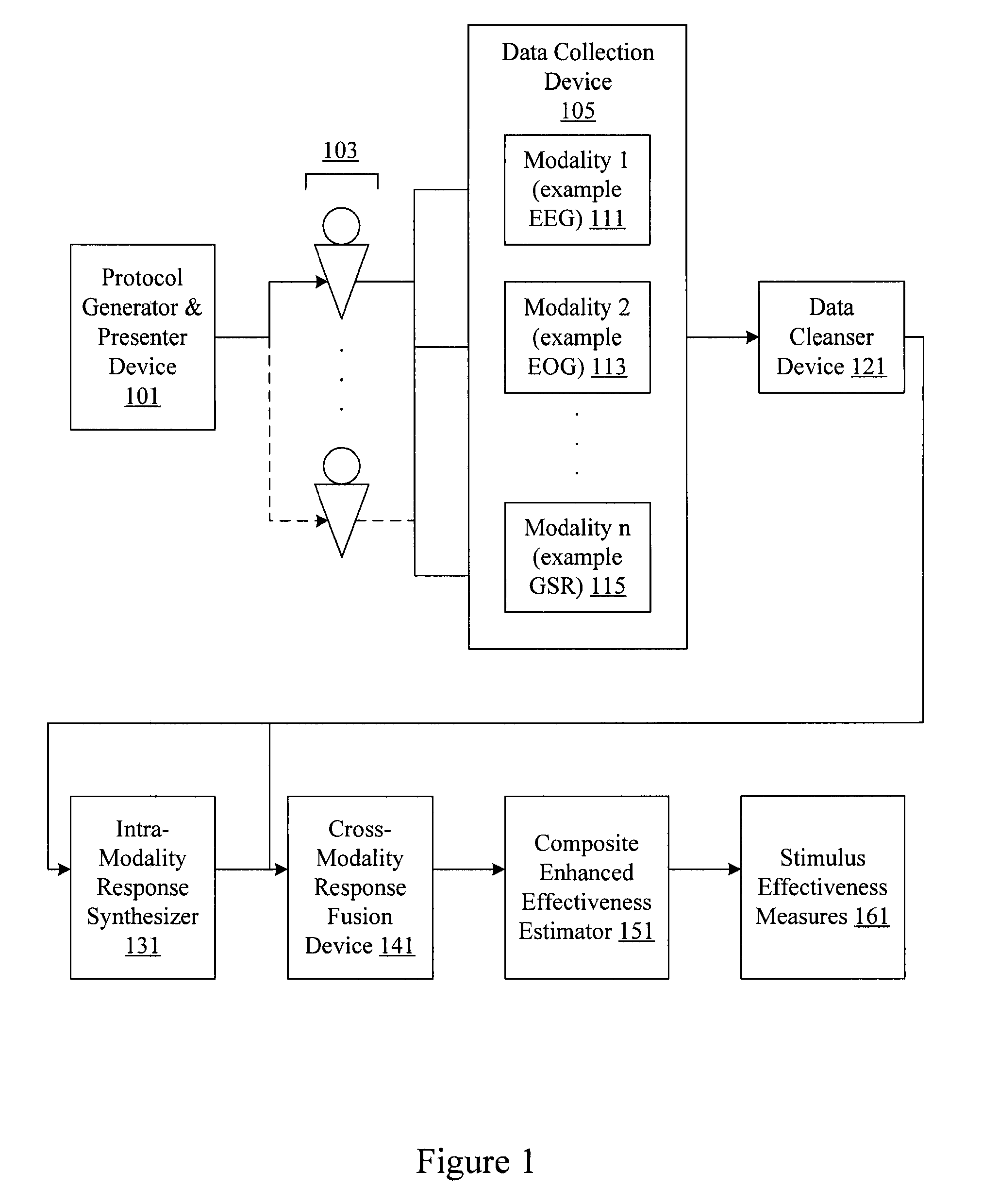

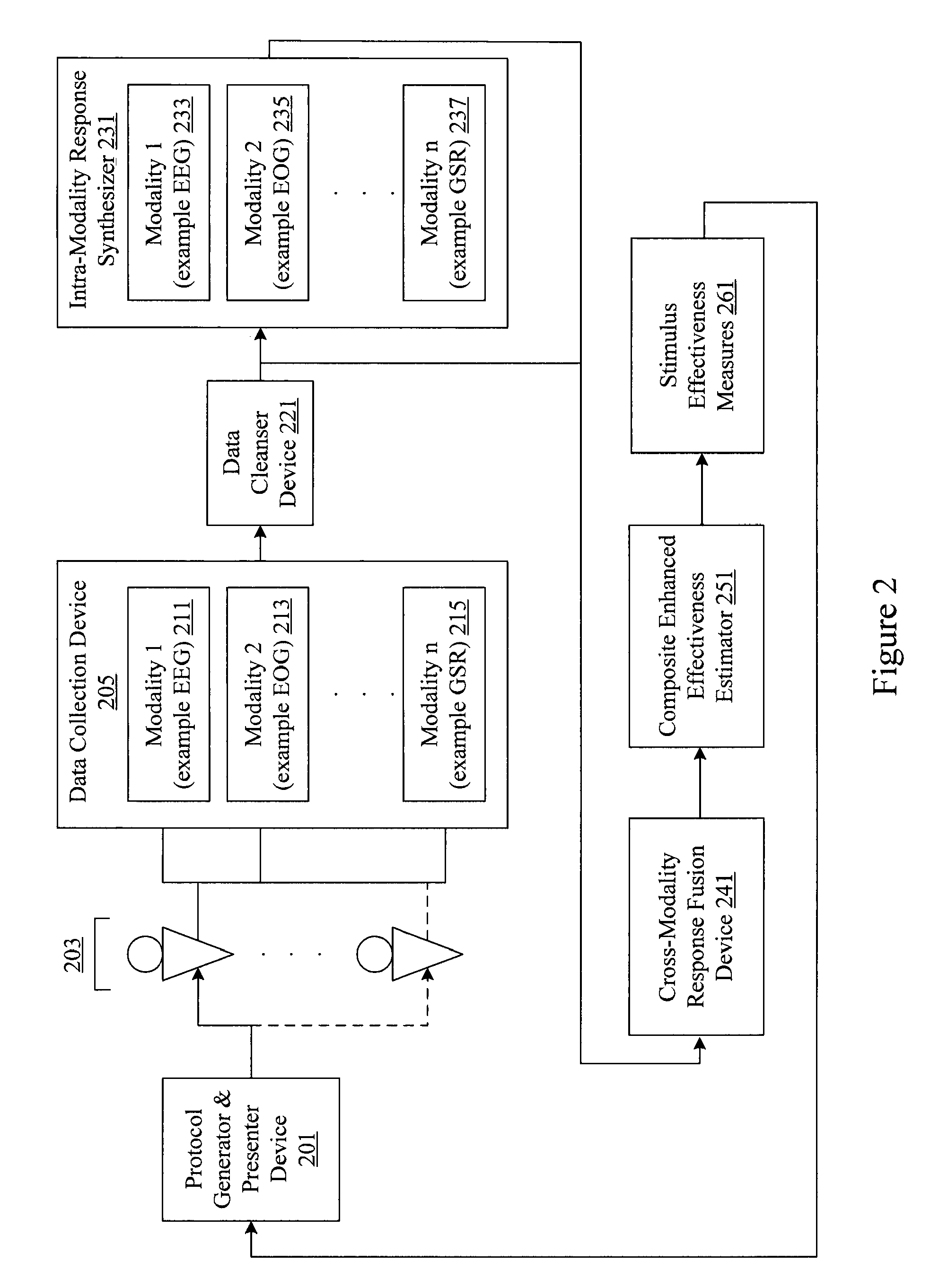

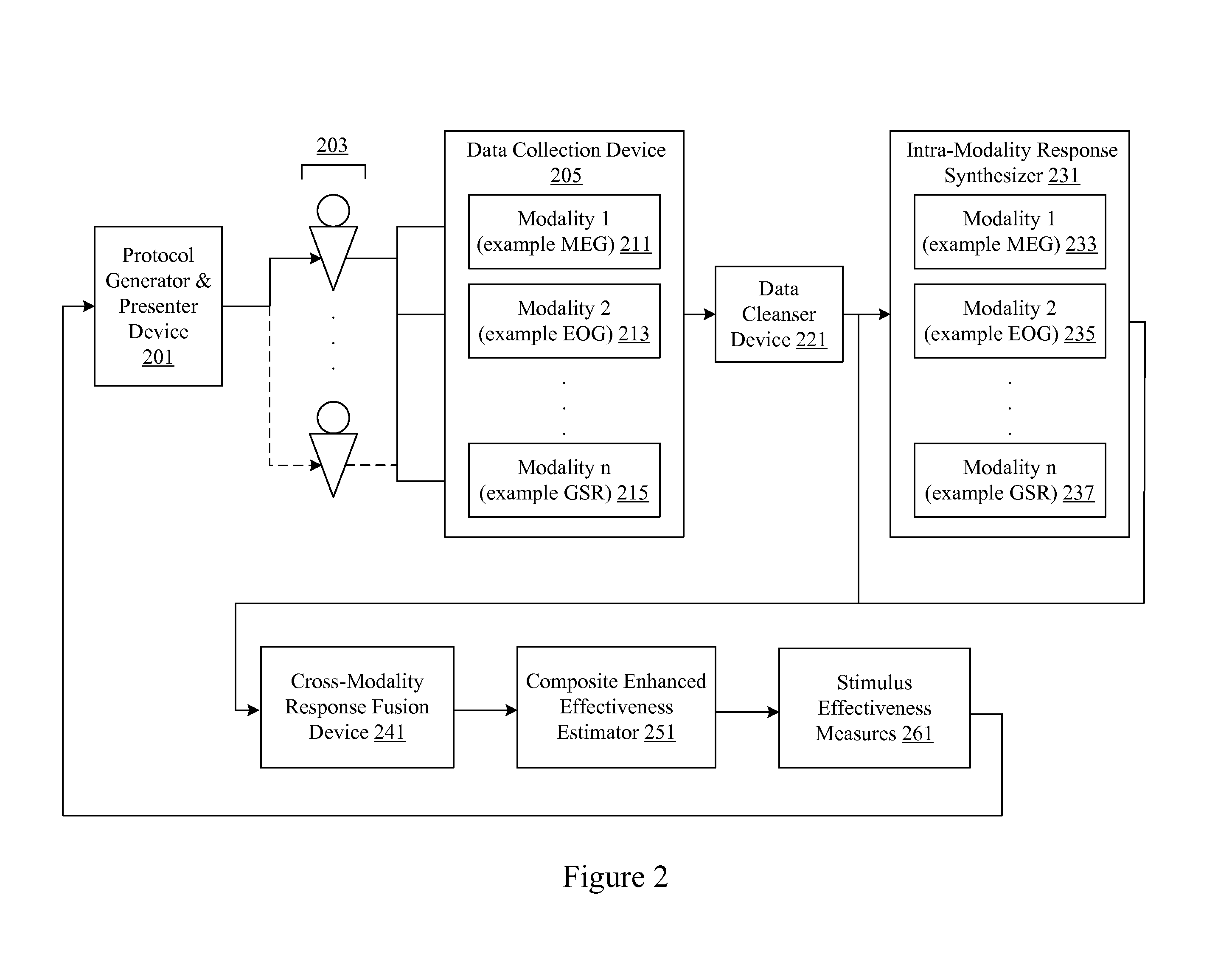

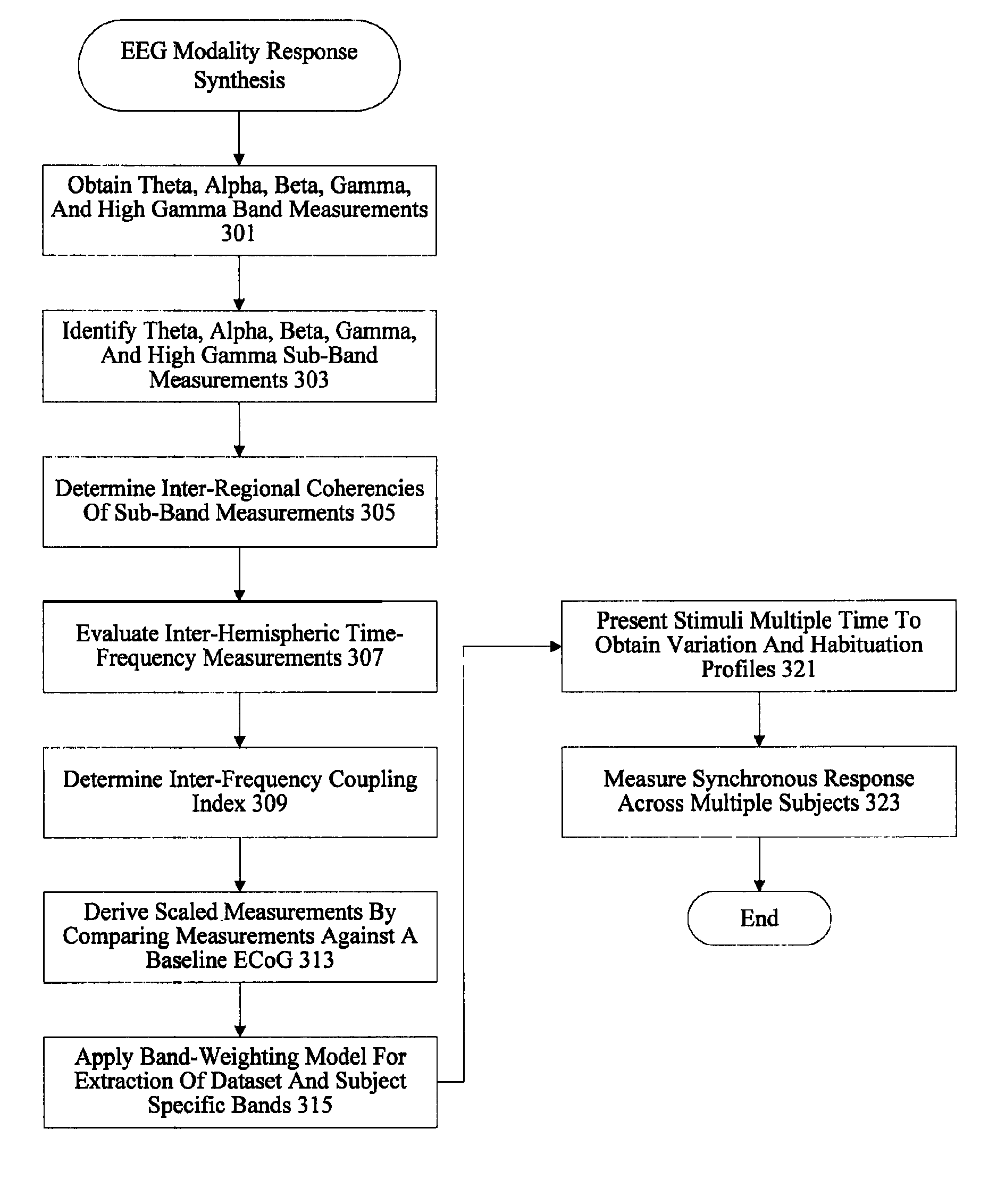

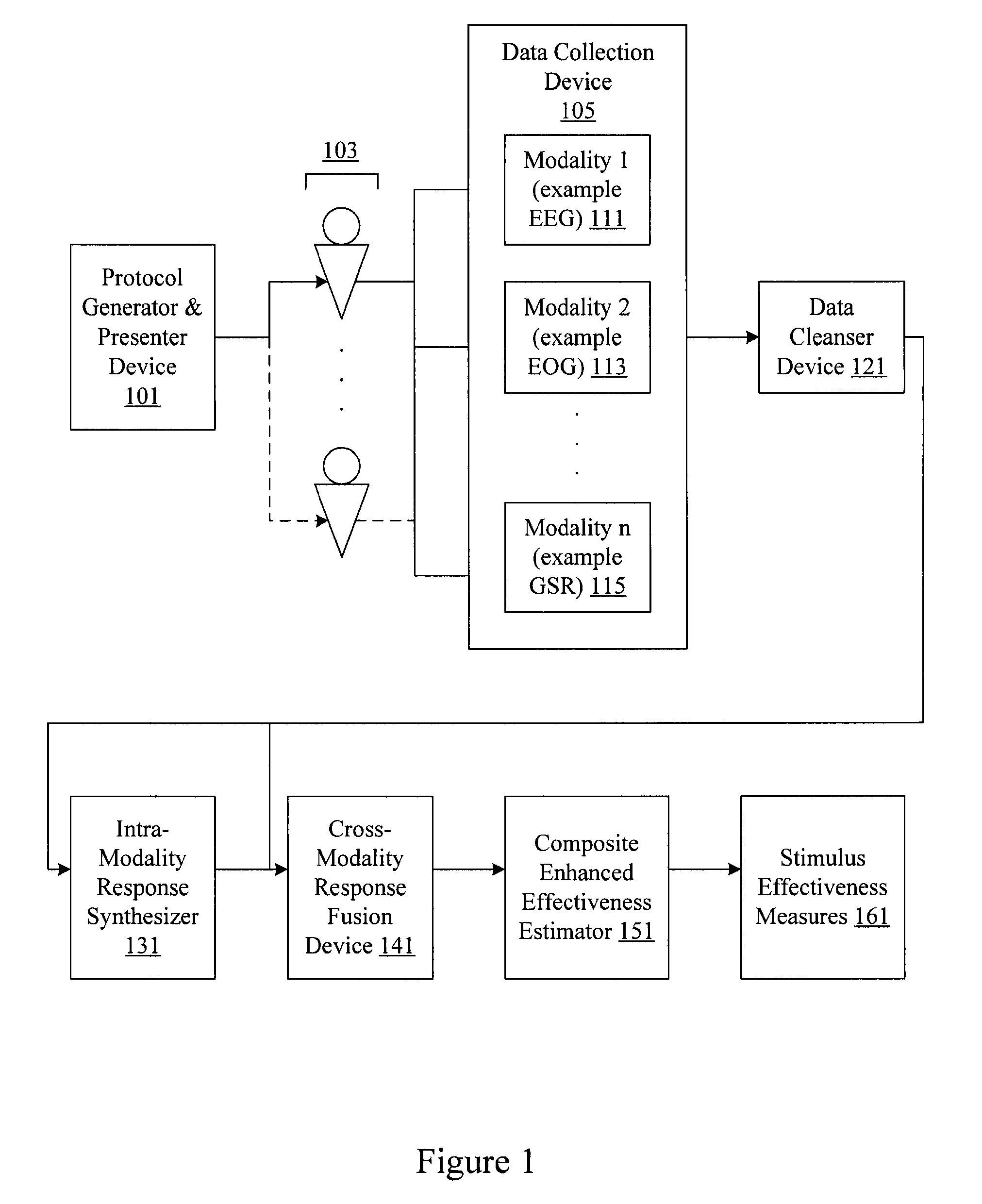

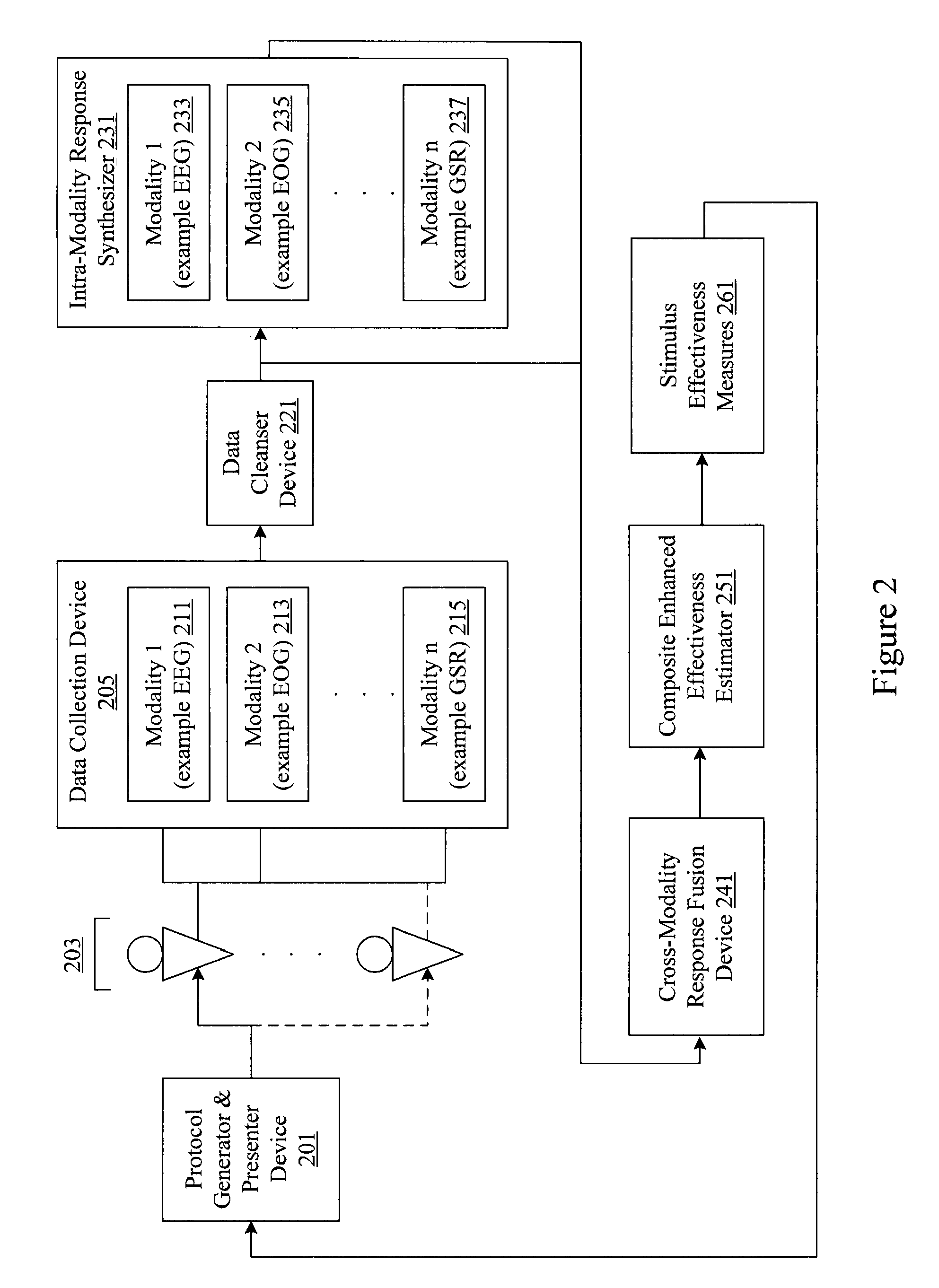

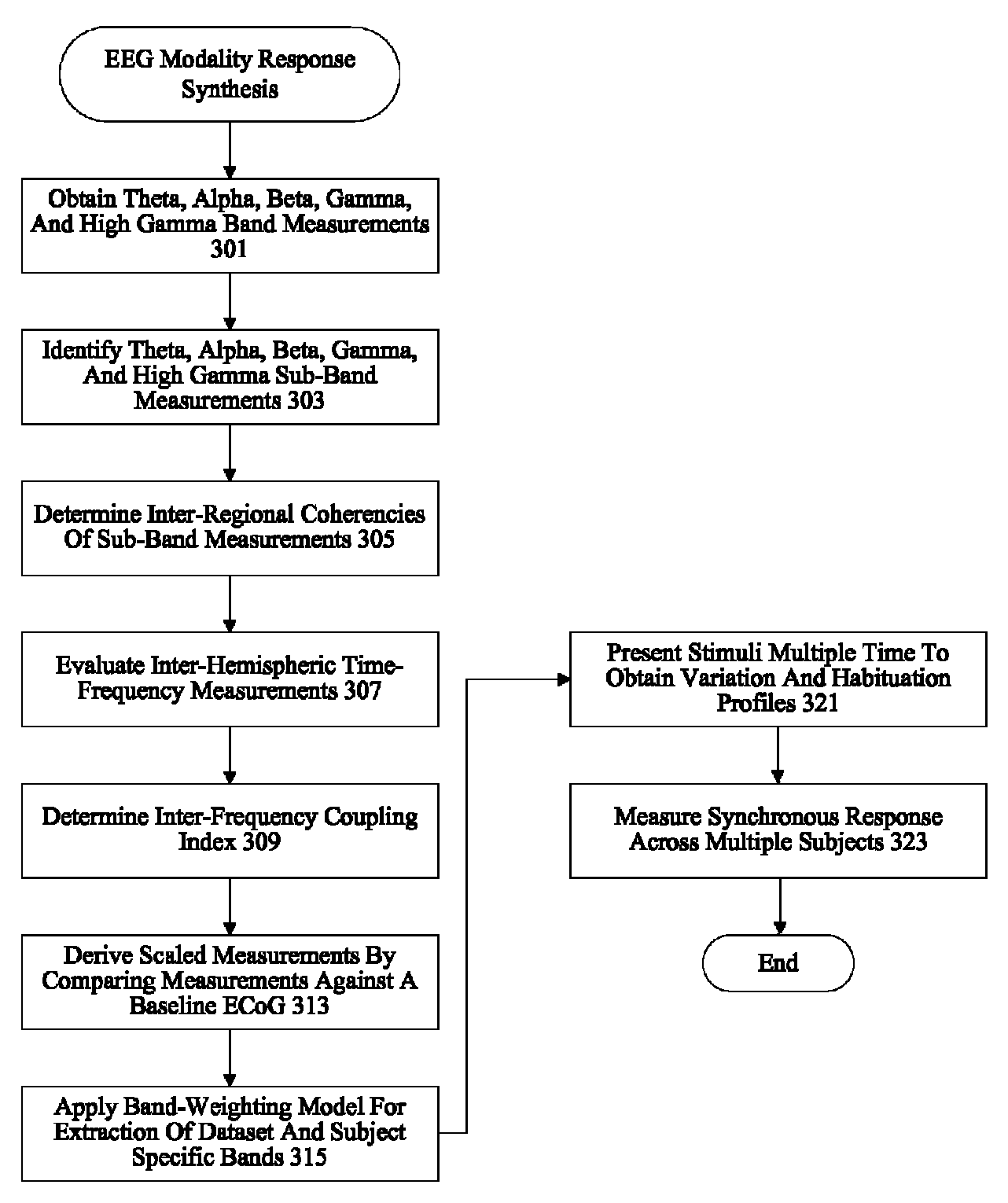

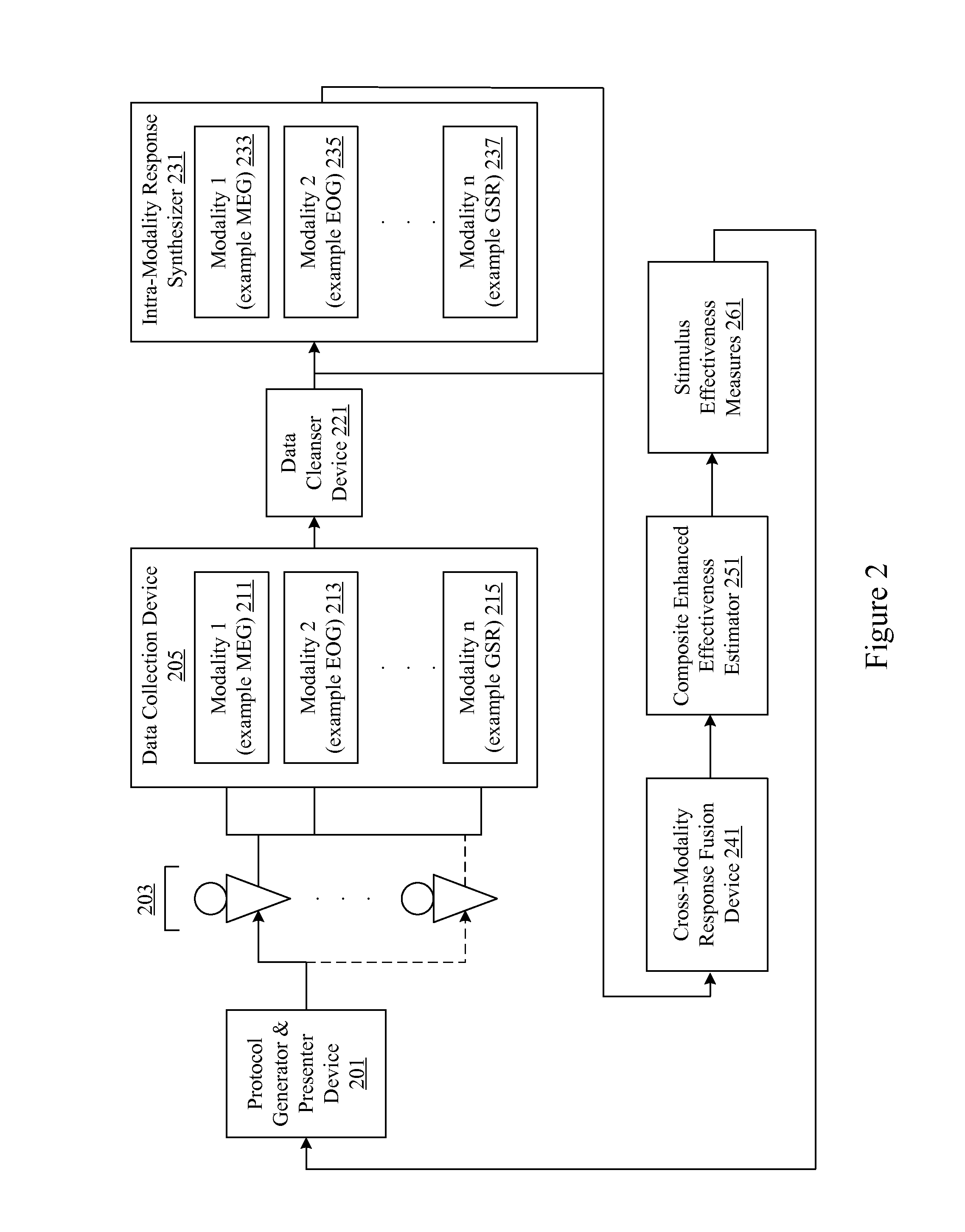

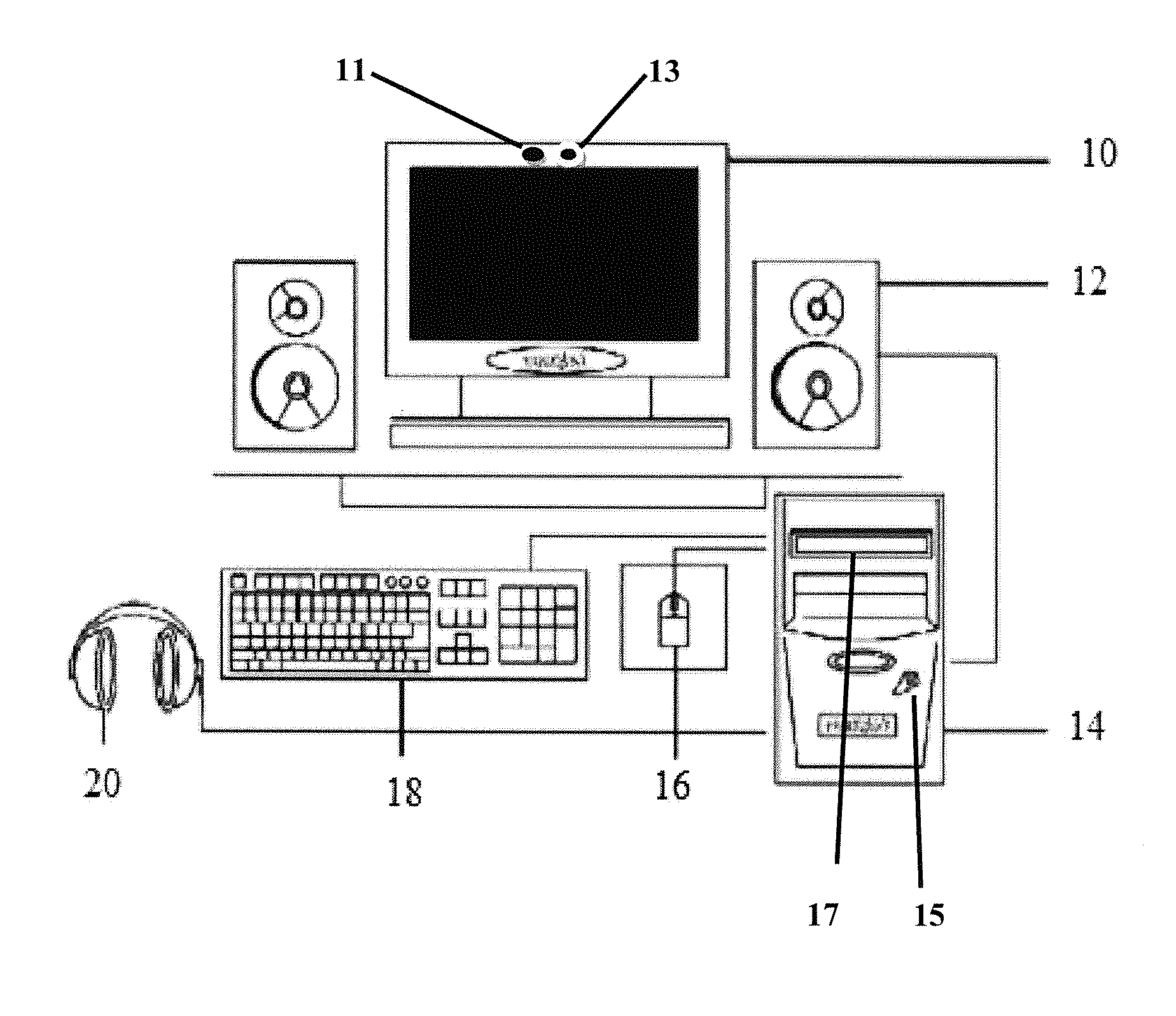

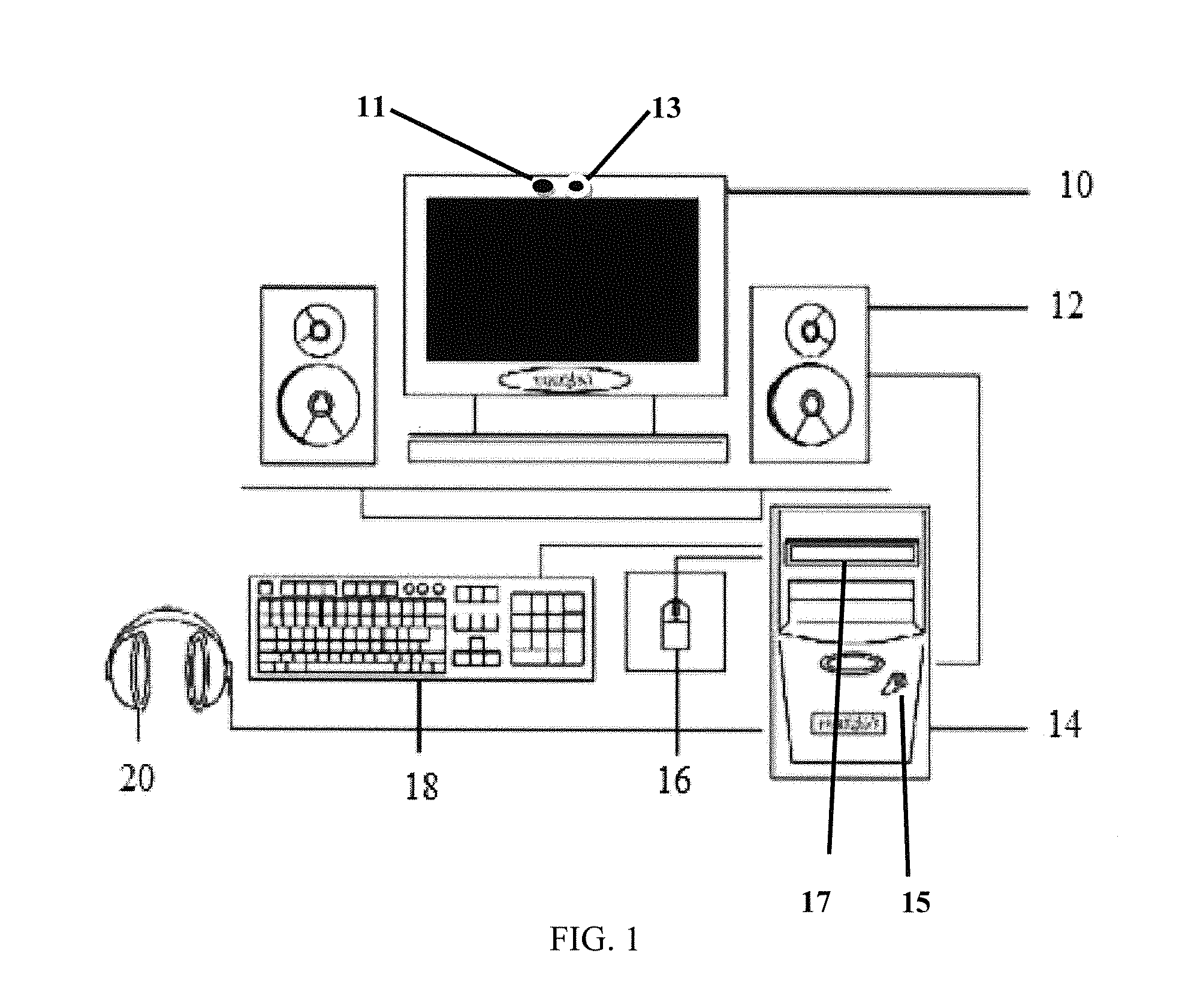

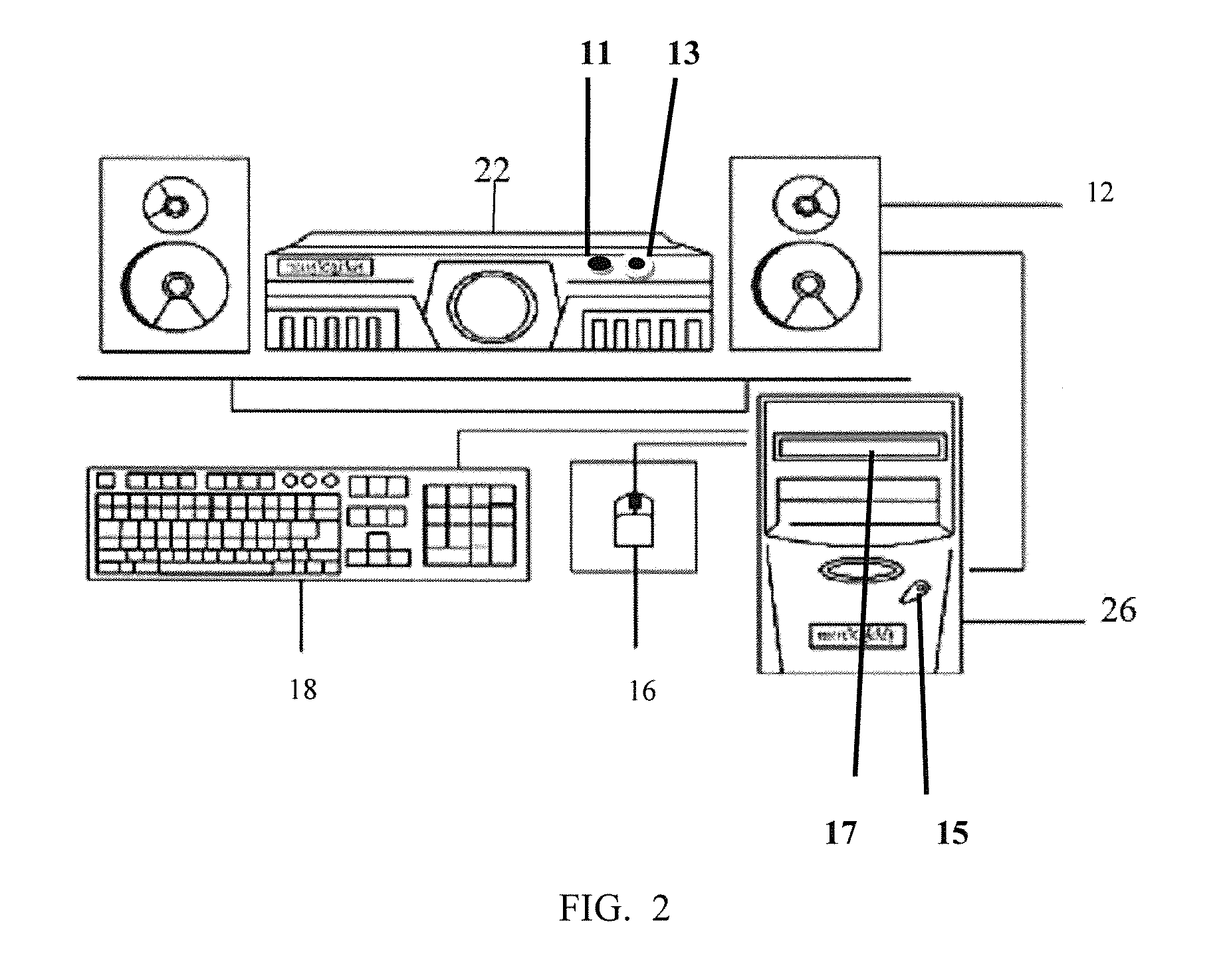

Neuro-response data, including Electroencephalography (EEG) and Functional Magnetic Resonance Imaging (fMRI) data, is filtered, analyzed, and combined to evaluate the effectiveness of stimulus materials such as marketing and entertainment materials. A data collection mechanism spanning multiple modalities, such as Electroencephalography (EEG), Functional Magnetic Resonance Imaging (fMRI), Electrooculography (EOG), and Galvanic Skin Response (GSR), collects response data from subjects exposed to marketing and entertainment stimuli. A data cleanser mechanism filters the response data and removes cross-modality interference.

Owner:NIELSEN CONSUMER LLC

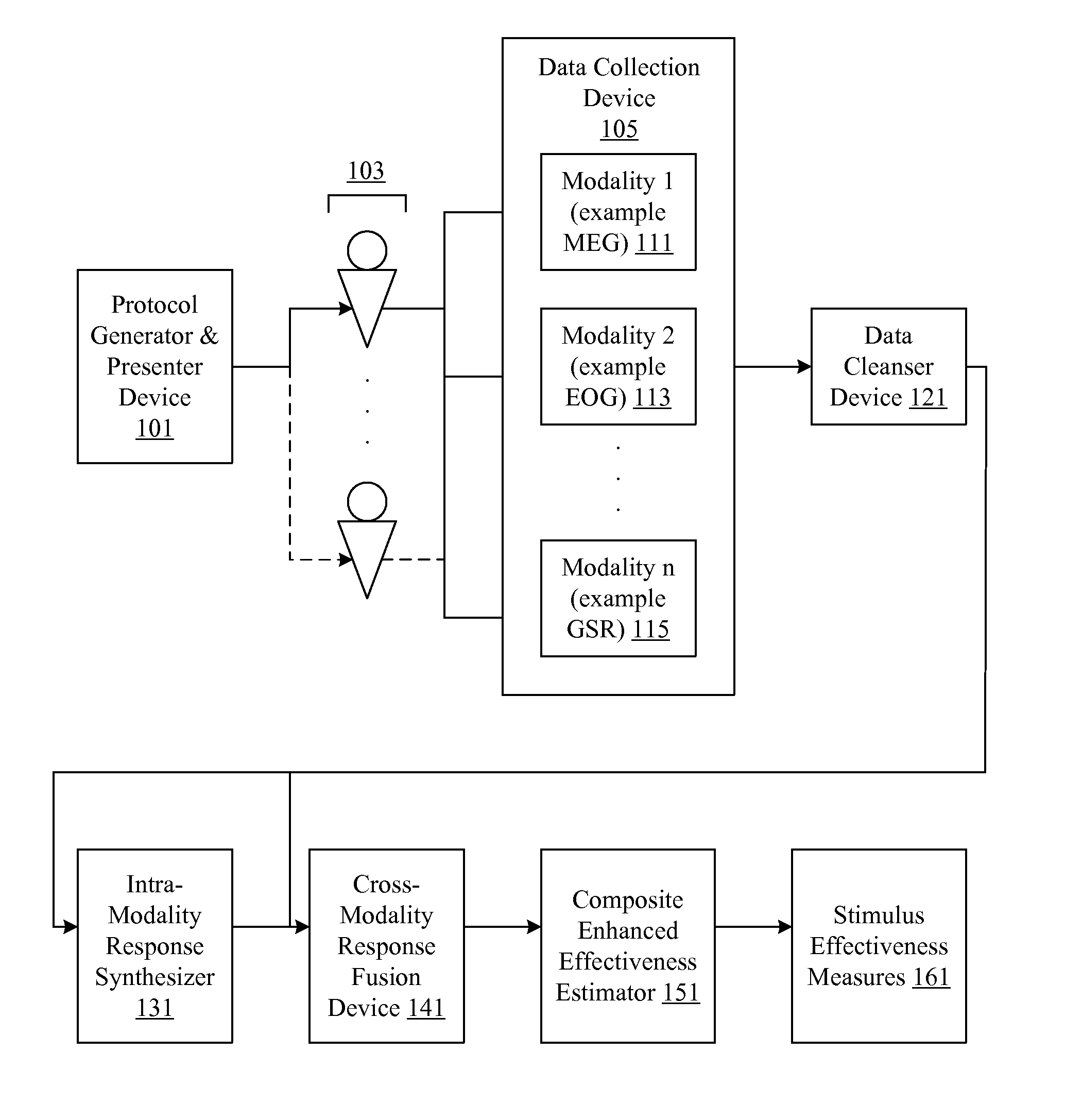

Analysis of marketing and entertainment effectiveness using central nervous system, autonomic nervous system, and effector data

Active | US20090024447A1 | Data acquisition and logging, Market data gathering, Cross modality, Diagnostic Radiology Modality

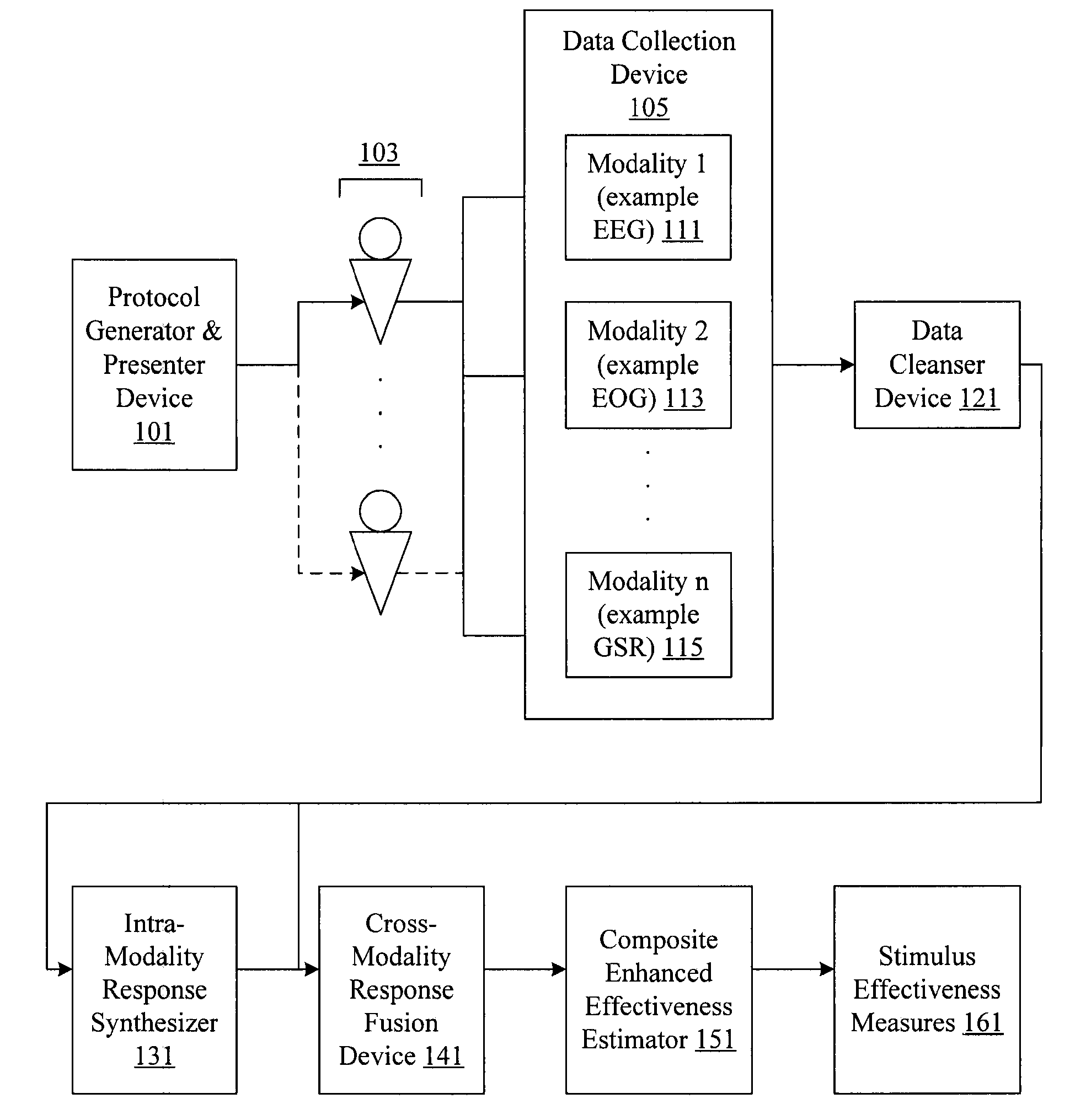

Central nervous system, autonomic nervous system, and effector data is measured and analyzed to determine the effectiveness of marketing and entertainment stimuli. A data collection mechanism including multiple modalities such as Electroencephalography (EEG), Electrooculography (EOG), Galvanic Skin Response (GSR), etc., collects response data from subjects exposed to marketing and entertainment stimuli. A data cleanser mechanism filters the response data. The response data is enhanced using intra-modality response synthesis and/or cross-modality response synthesis.

Owner:NIELSEN CONSUMER LLC

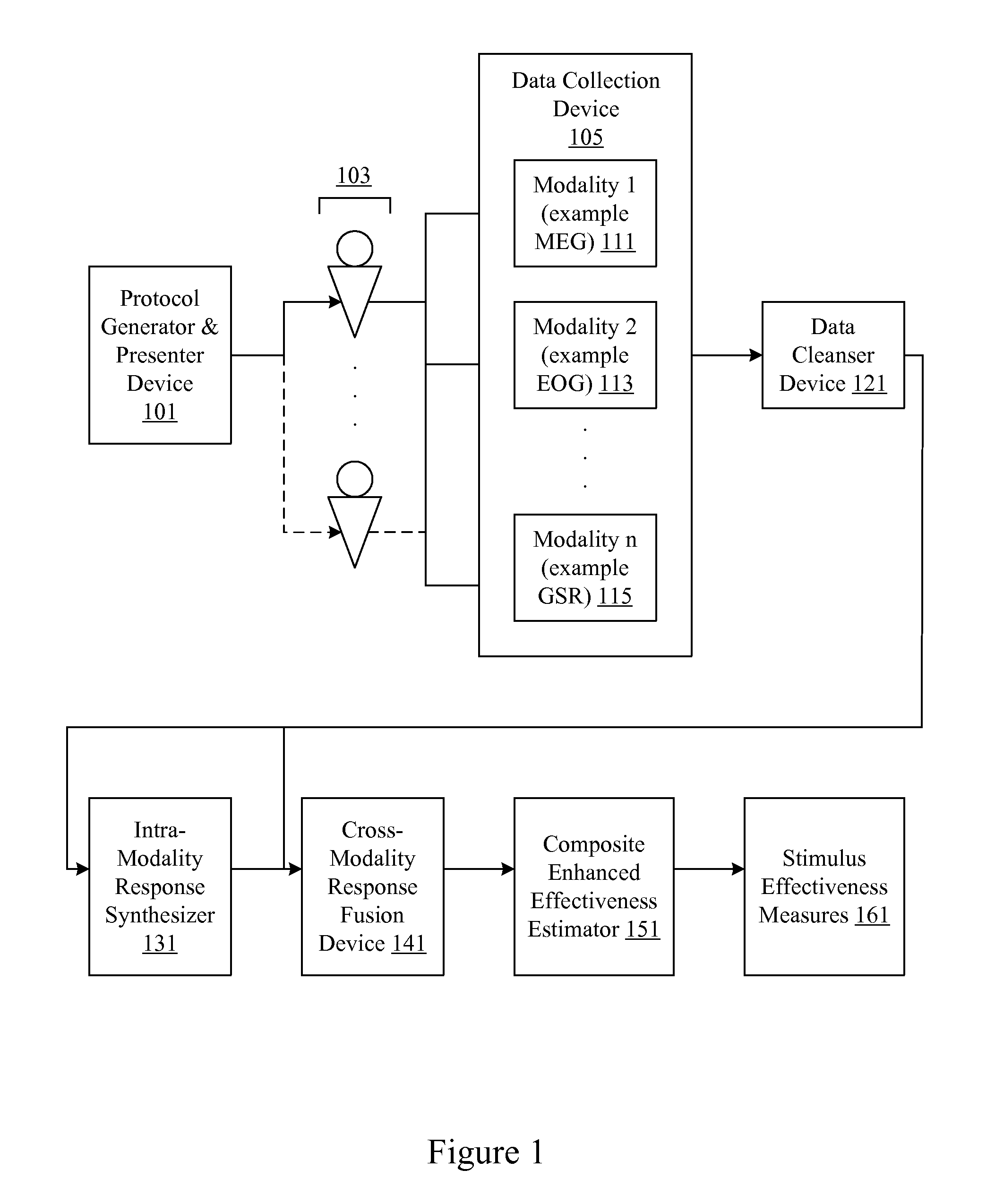

Analysis of marketing and entertainment effectiveness using magnetoencephalography

Active | US20090082643A1 | Analogue secrecy/subscription systems, Diagnostic recording/measuring, Diagnostic Radiology Modality, Cross modality

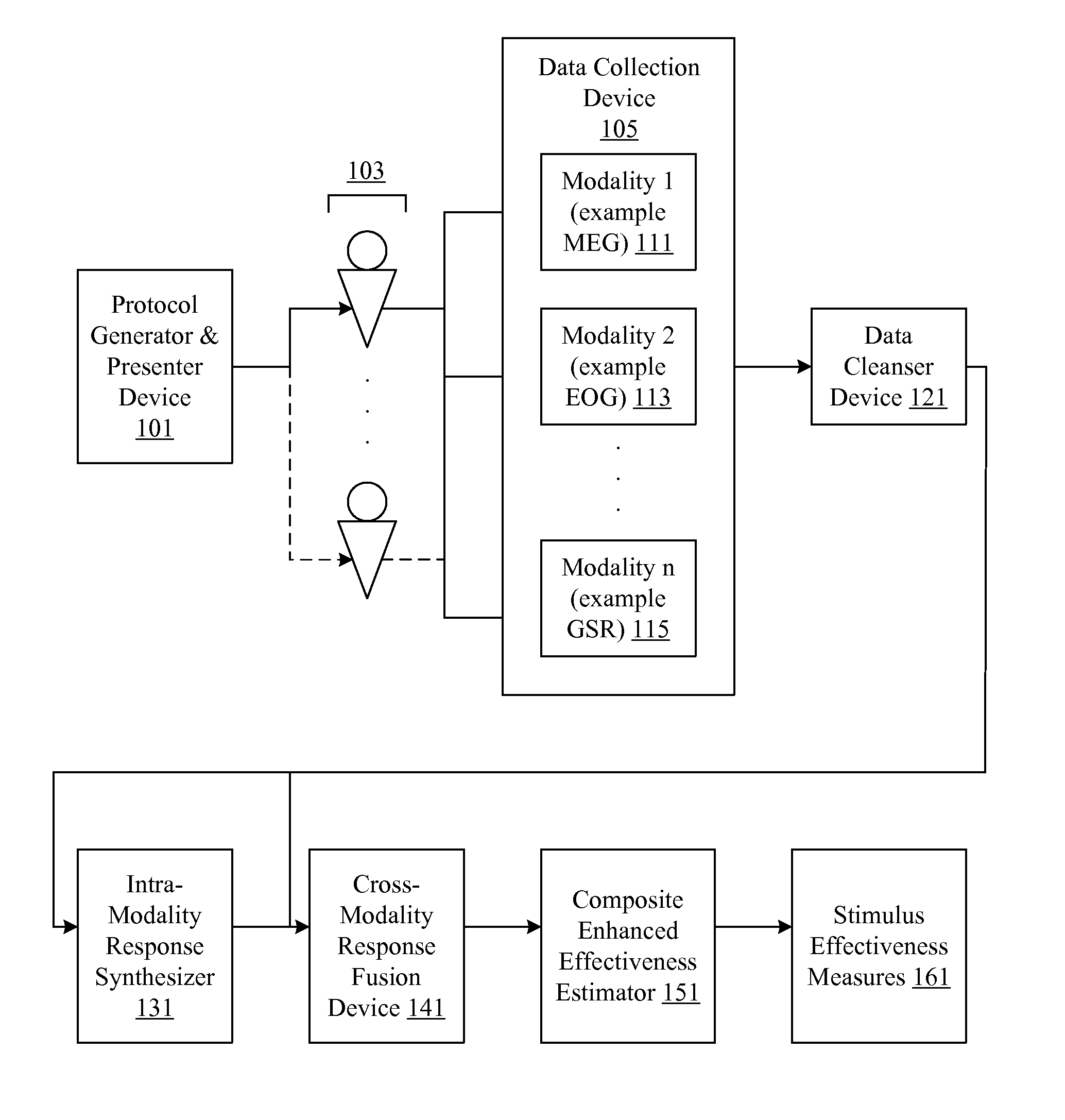

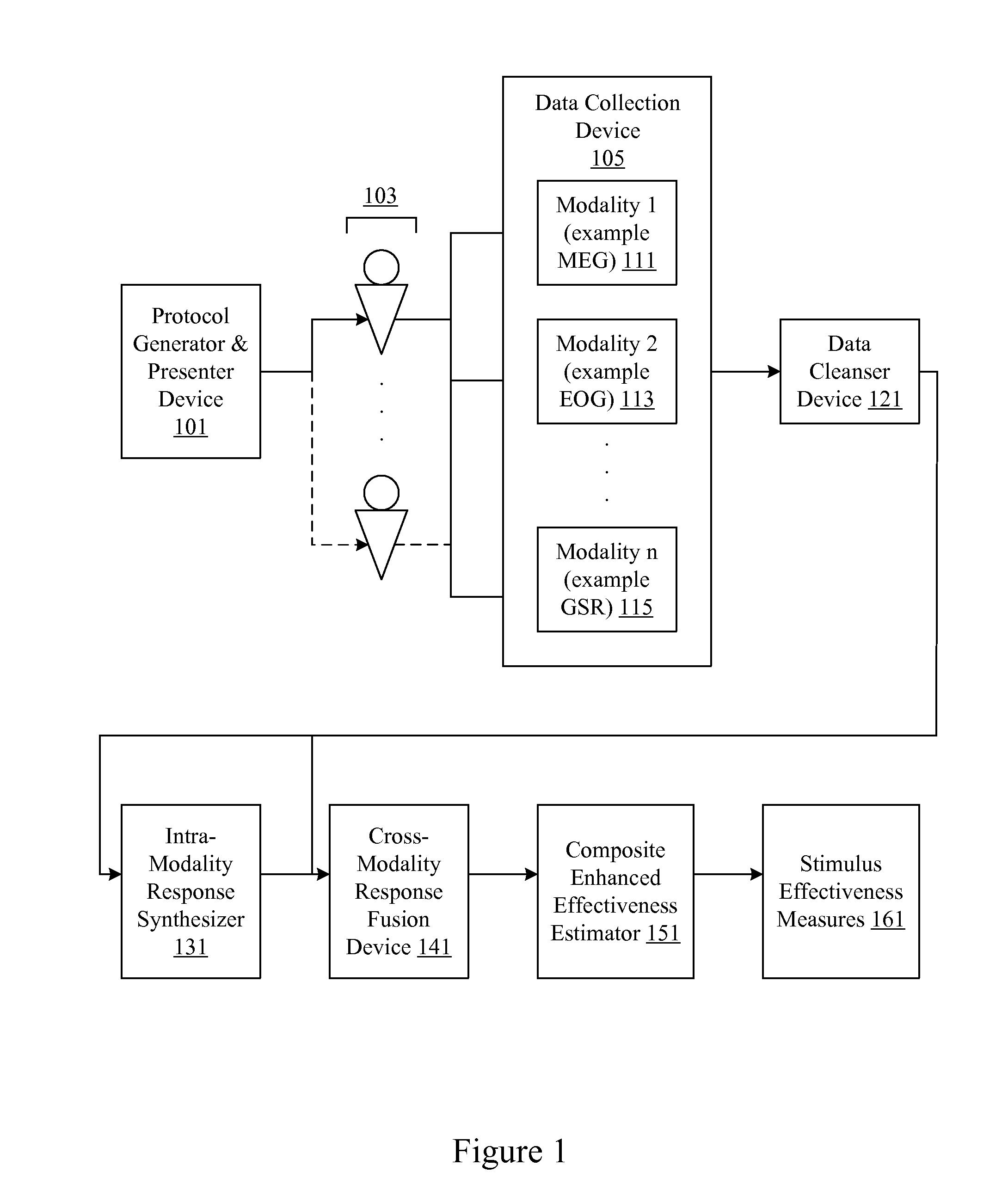

Central nervous system, autonomic nervous system, and effector data is measured and analyzed to determine the effectiveness of marketing and entertainment stimuli. A data collection mechanism including multiple modalities such as Magnetoencephalography (MEG), Electrooculography (EOG), Galvanic Skin Response (GSR), etc., collects response data from subjects exposed to marketing and entertainment stimuli. A data cleanser mechanism filters the response data. The response data is enhanced using intra-modality response synthesis and/or cross-modality response synthesis.

Owner:NIELSEN CONSUMER LLC

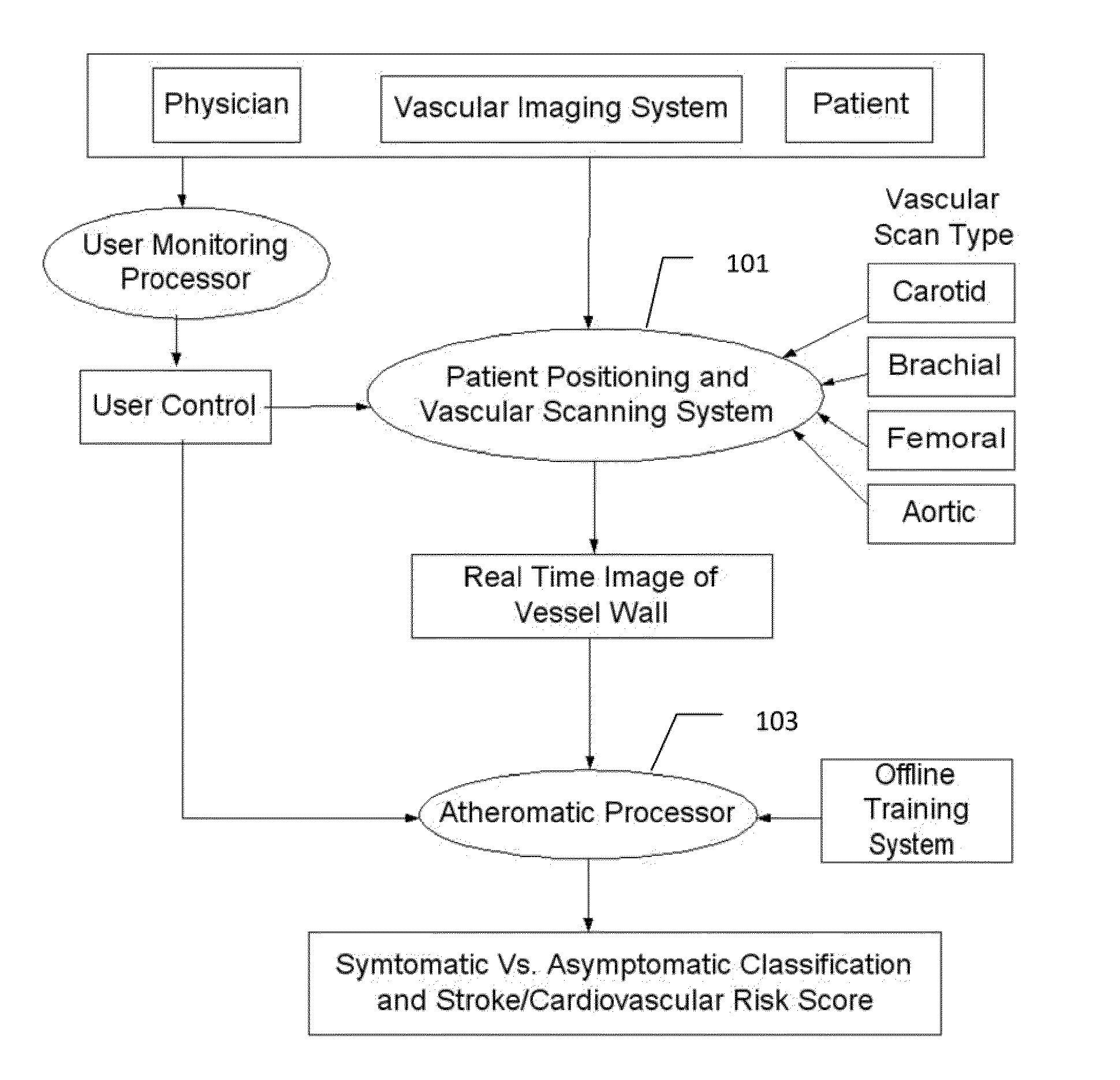

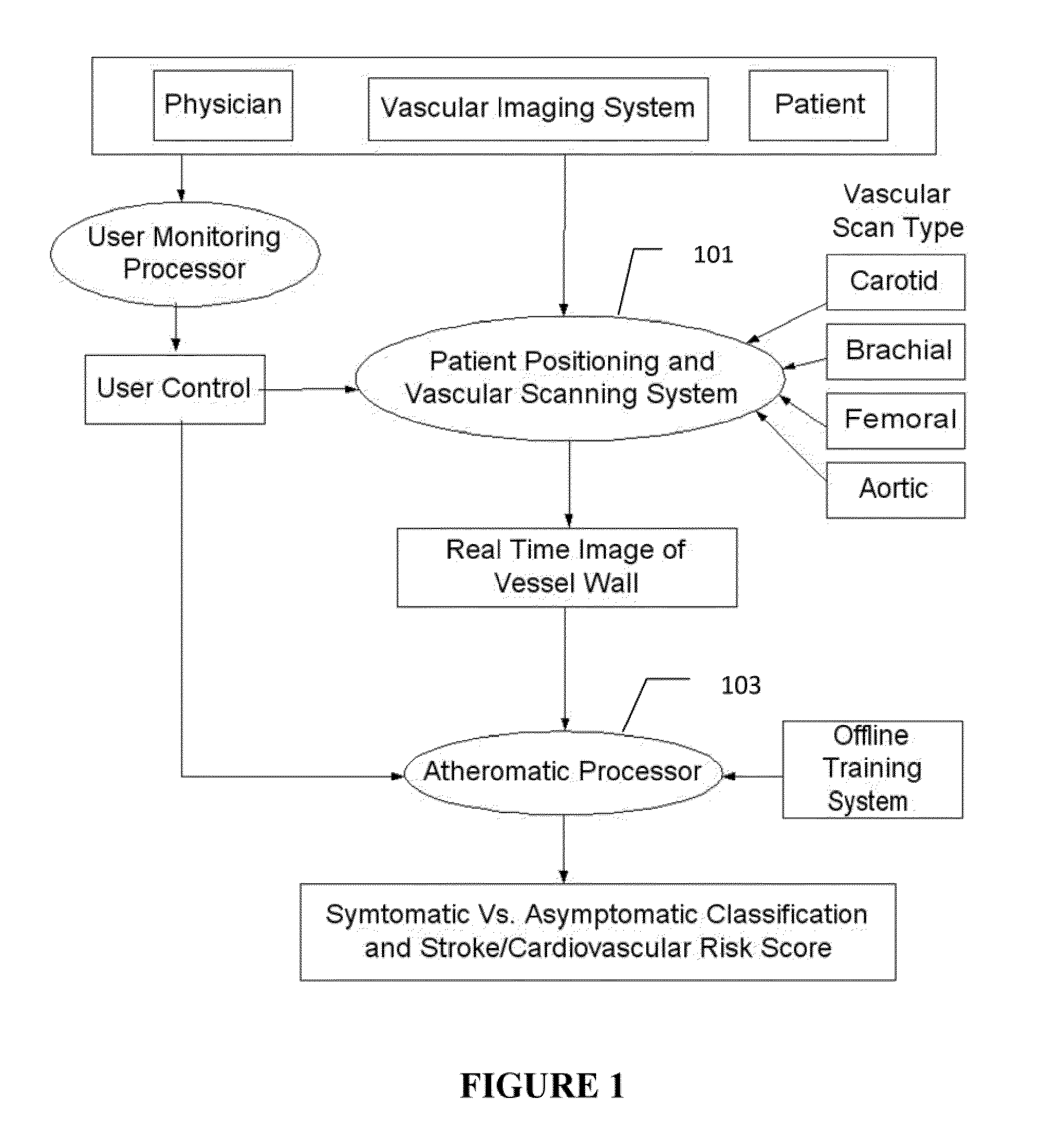

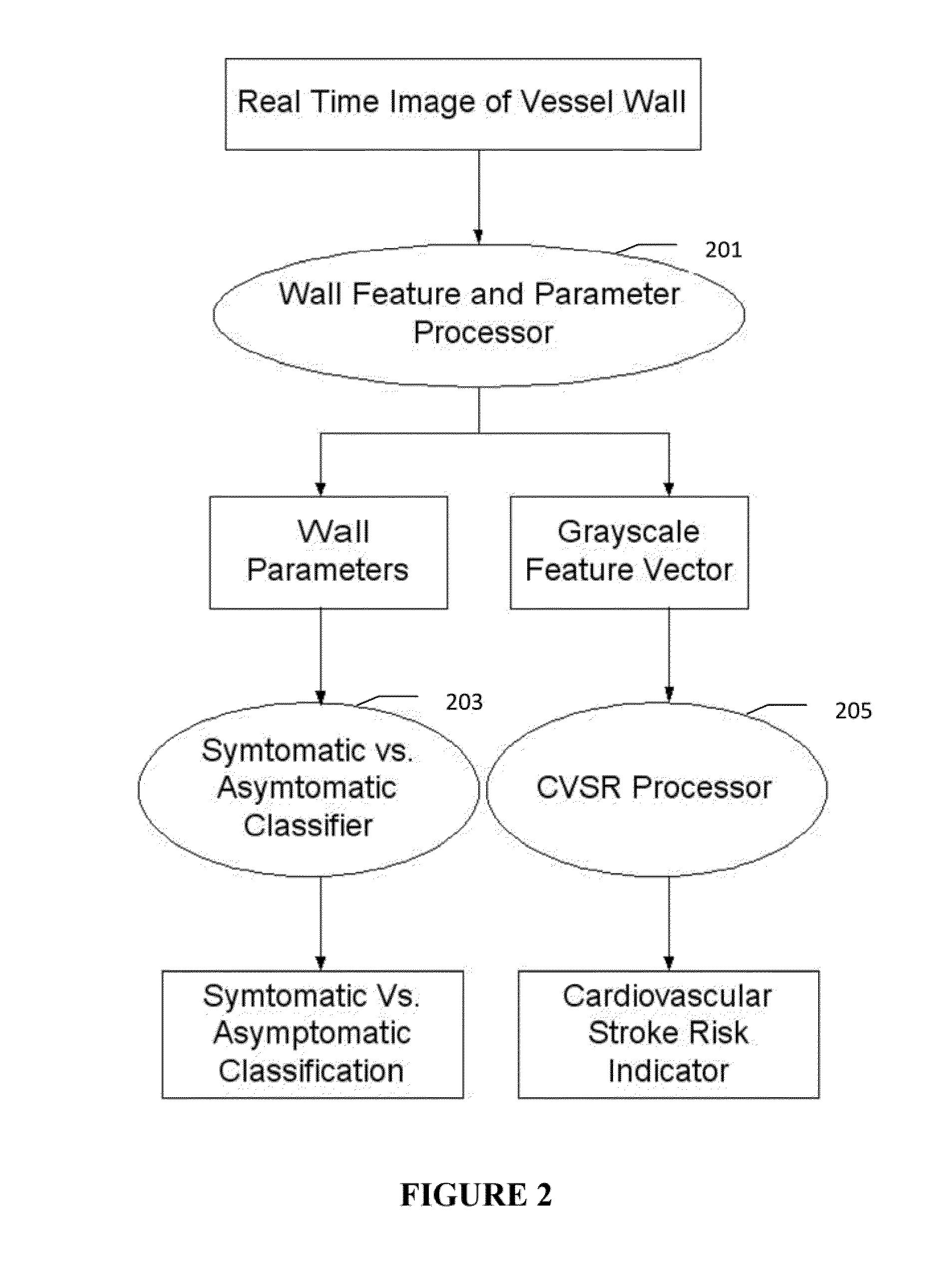

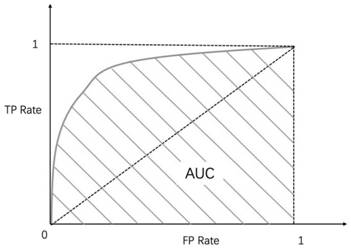

Imaging based symptomatic classification and cardiovascular stroke risk score estimation

Inactive | US20110257545A1 | Improve accuracy, Maximum accuracy, Ultrasonic/sonic/infrasonic diagnostics, Image enhancement, Ground truth, Cross modality

Characterization of carotid atherosclerosis and classification of plaque as symptomatic or asymptomatic, along with risk score estimation, are key steps that allow vascular surgeons to decide whether a patient must undergo the risky treatment procedures needed to unblock the stenosis. This application describes (a) a statistical Computer Aided Diagnostic (CAD) technique for automated classification of plaque in carotid ultrasound images as symptomatic or asymptomatic and (b) a cardiovascular stroke risk score computation. We demonstrate this for longitudinal ultrasound, CT, and MR modalities, and the technique is extendable to 3D carotid ultrasound. The on-line system consists of Atherosclerotic Wall Region estimation using AtheroEdge™ for longitudinal ultrasound, Athero-CTView™ for CT, or Athero-MRView for MR. This greyscale Wall Region is then fed to a feature extraction processor, which computes (a) Higher Order Spectra; (b) Discrete Wavelet Transform (DWT); (c) Texture; and (d) Wall Variability features. The output of the feature processor is fed to the Classifier, which is trained off-line on a database of similar Atherosclerotic Wall Region images. The off-line Classifier is trained on the significant features from (a) Higher Order Spectra; (b) DWT; (c) Texture; and (d) Wall Variability, selected using a t-test. Symptomatic ground truth for the training patients, in the form of 0 or 1, is drawn from cross-modality imaging such as CT, MR, or 3D ultrasound. A supervised Support Vector Machine (SVM) classifier with varying kernel functions is used off-line for training. The Atheromatic™ system is also demonstrated with Radial Basis Probabilistic Neural Network (RBPNN), K-Nearest Neighbor (KNN), and Decision Tree (DT) classifiers for automated symptomatic versus asymptomatic plaque classification. The obtained training parameters are then used to evaluate the test set. The system also yields the cardiovascular stroke risk score on the basis of the four sets of wall features.

Owner:SURI JASJIT S
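
The abstract above selects the significant features for the off-line classifier "using t-test". As a minimal sketch of that selection step only, the Python below ranks features by Welch's two-sample t-statistic between symptomatic (label 1) and asymptomatic (label 0) training patients. The feature names, the toy values, and the fixed |t| threshold (used here in place of a p-value cut-off) are all hypothetical, and the SVM training that follows in the abstract is not shown.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t-statistic for two independent samples with unequal variances."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))


def select_features(rows, labels, names, t_threshold=2.0):
    """Keep features whose |t| between the two classes exceeds t_threshold.

    rows: one feature vector per training patient.
    labels: 1 = symptomatic, 0 = asymptomatic (ground truth, e.g. from CT/MR).
    t_threshold: hypothetical cut-off standing in for a significance test.
    Returns a list of (feature_name, t_statistic) pairs.
    """
    selected = []
    for j, name in enumerate(names):
        sym = [r[j] for r, y in zip(rows, labels) if y == 1]
        asym = [r[j] for r, y in zip(rows, labels) if y == 0]
        t = welch_t(sym, asym)
        if abs(t) > t_threshold:
            selected.append((name, t))
    return selected


# Toy data: two hypothetical wall features for six patients.
names = ["hos_entropy", "wall_variability"]
rows = [[0.9, 5.0], [1.0, 4.0], [1.1, 6.0],   # symptomatic
        [0.1, 5.5], [0.2, 4.5], [0.0, 5.0]]   # asymptomatic
labels = [1, 1, 1, 0, 0, 0]
print(select_features(rows, labels, names))
```

With these toy values only `hos_entropy` separates the classes (its t-statistic is about 11), while `wall_variability` has identical class means and is dropped.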

Analysis of marketing and entertainment effectiveness using central nervous system, autonomic nervous system, and effector data

Active | US8484081B2 | Data acquisition and logging, Market data gathering, Diagnostic Radiology Modality, Cross modality

Central nervous system, autonomic nervous system, and effector data is measured and analyzed to determine the effectiveness of marketing and entertainment stimuli. A data collection mechanism including multiple modalities such as Electroencephalography (EEG), Electrooculography (EOG), Galvanic Skin Response (GSR), etc., collects response data from subjects exposed to marketing and entertainment stimuli. A data cleanser mechanism filters the response data. The response data is enhanced using intra-modality response synthesis and/or cross-modality response synthesis.

Owner:NIELSEN CONSUMER LLC

Analysis of marketing and entertainment effectiveness using magnetoencephalography

Active | US8494610B2 | Analogue secrecy/subscription systems, Diagnostic recording/measuring, Cross modality, Diagnostic Radiology Modality

Central nervous system, autonomic nervous system, and effector data is measured and analyzed to determine the effectiveness of marketing and entertainment stimuli. A data collection mechanism including multiple modalities such as Magnetoencephalography (MEG), Electrooculography (EOG), Galvanic Skin Response (GSR), etc., collects response data from subjects exposed to marketing and entertainment stimuli. A data cleanser mechanism filters the response data. The response data is enhanced using intra-modality response synthesis and/or cross-modality response synthesis.

Owner:NIELSEN CONSUMER LLC

Cognitive training system and method

A cognitive training system provides cognitive skills development using a suite of music- and sound-based exercises. Visual, auditory, and tactile sensory stimuli are paired in various combinations to build and strengthen cross-modality associations. The system includes a cognitive skills development platform in which training video games and cartoons are presented to the user. The platform uses computer-implemented systems and methods for training a user with the aim of enhancing processes, skills, and/or development of user intelligence, attention, language skills, and brain functioning. The cognitive training system may be used by people with varying musical skills, or none, and is presented at a difficulty level corresponding to user characteristics, age, interests, and attention span. User characteristics may be assessed in several ways, including retrieval of past performance data on one or more of the exercises.

Owner:MORENO SYLVAIN JEAN PIERRE DANIEL

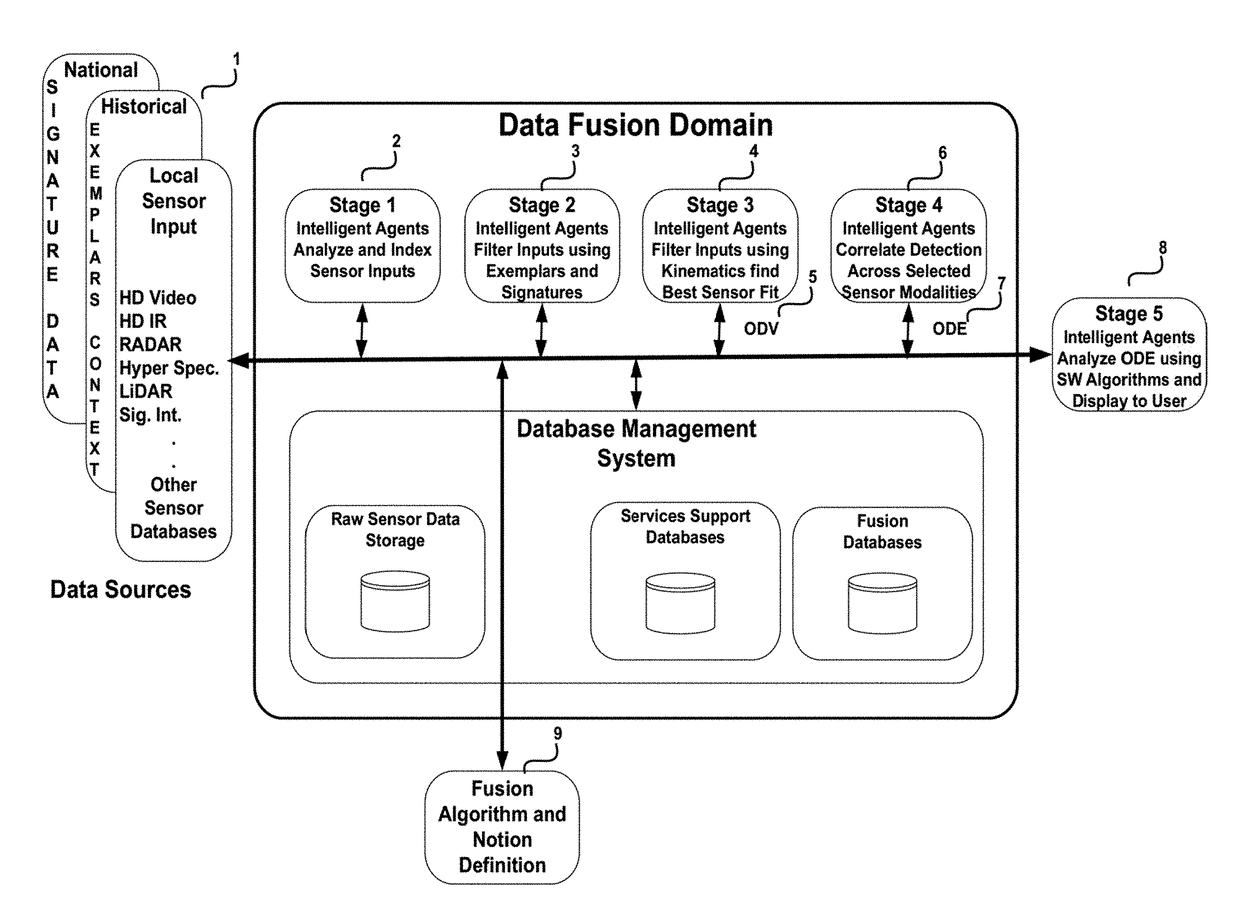

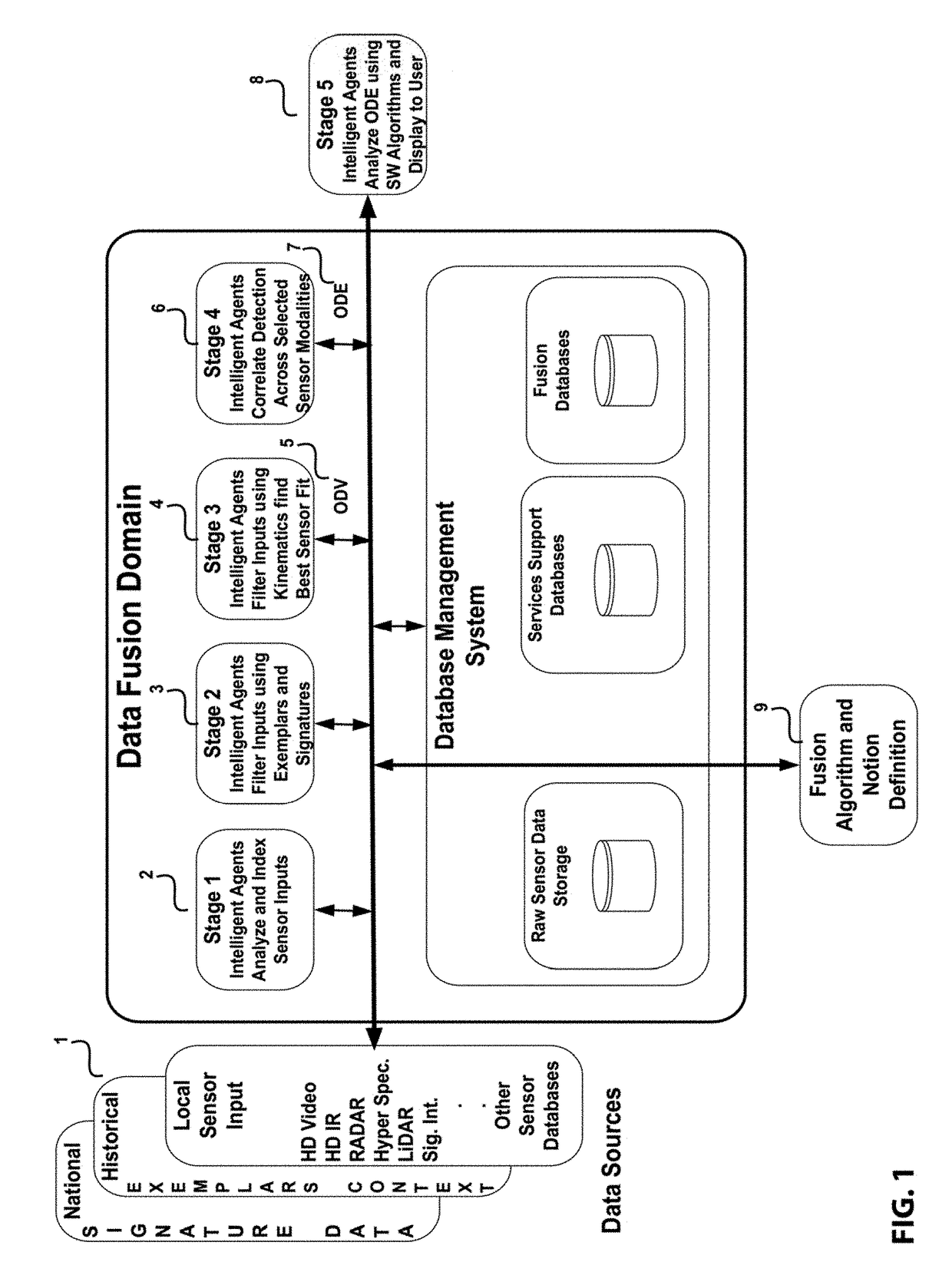

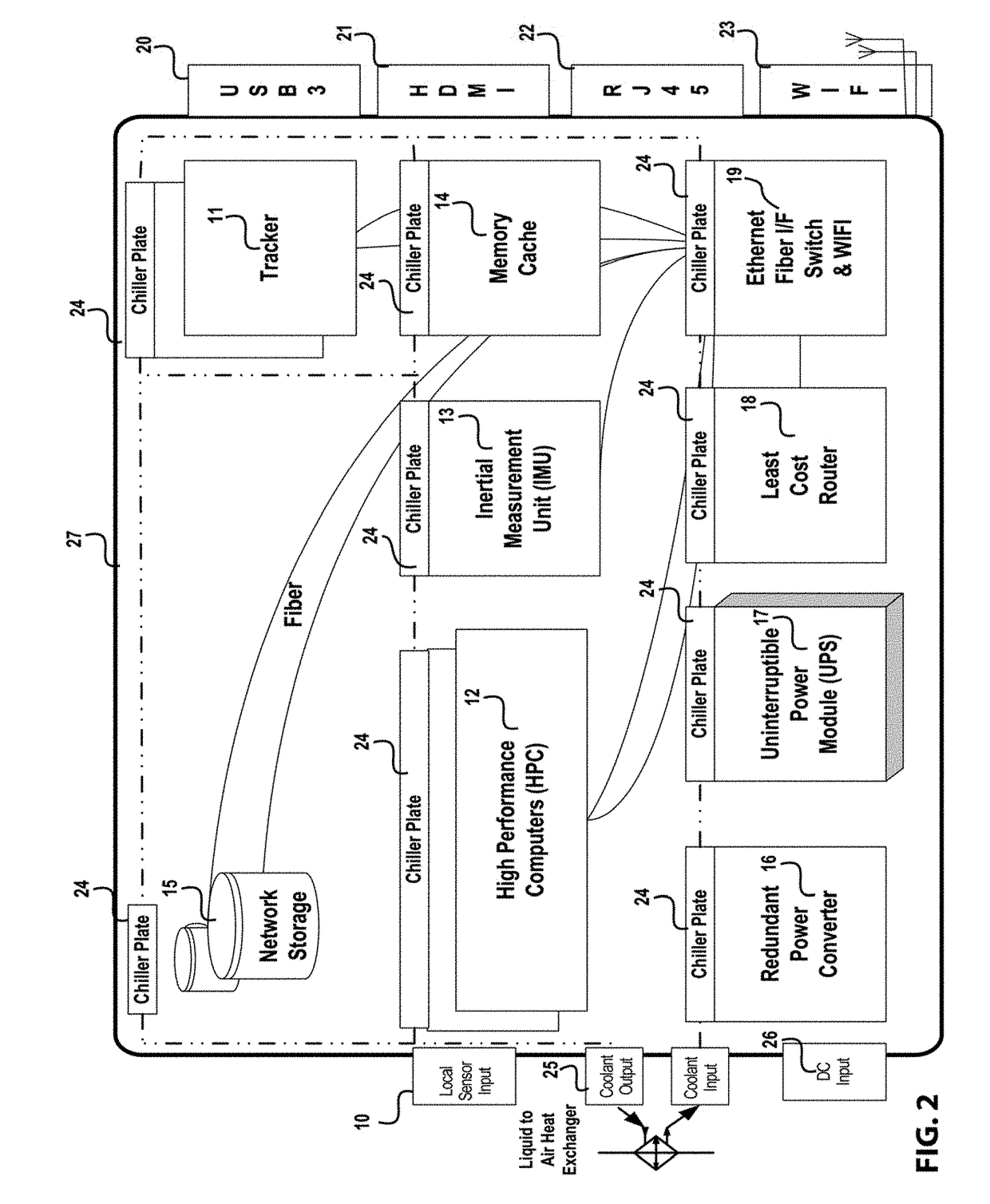

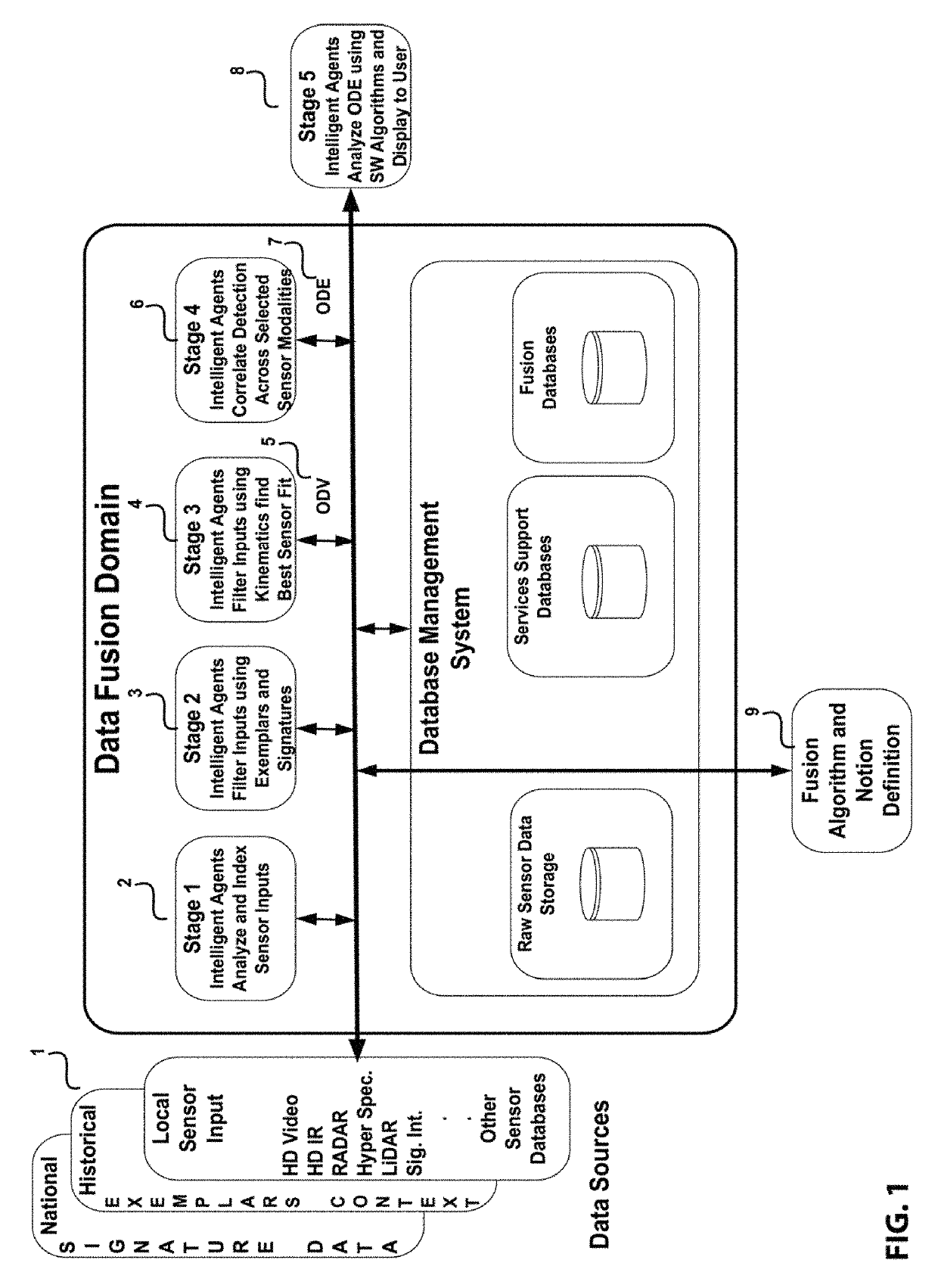

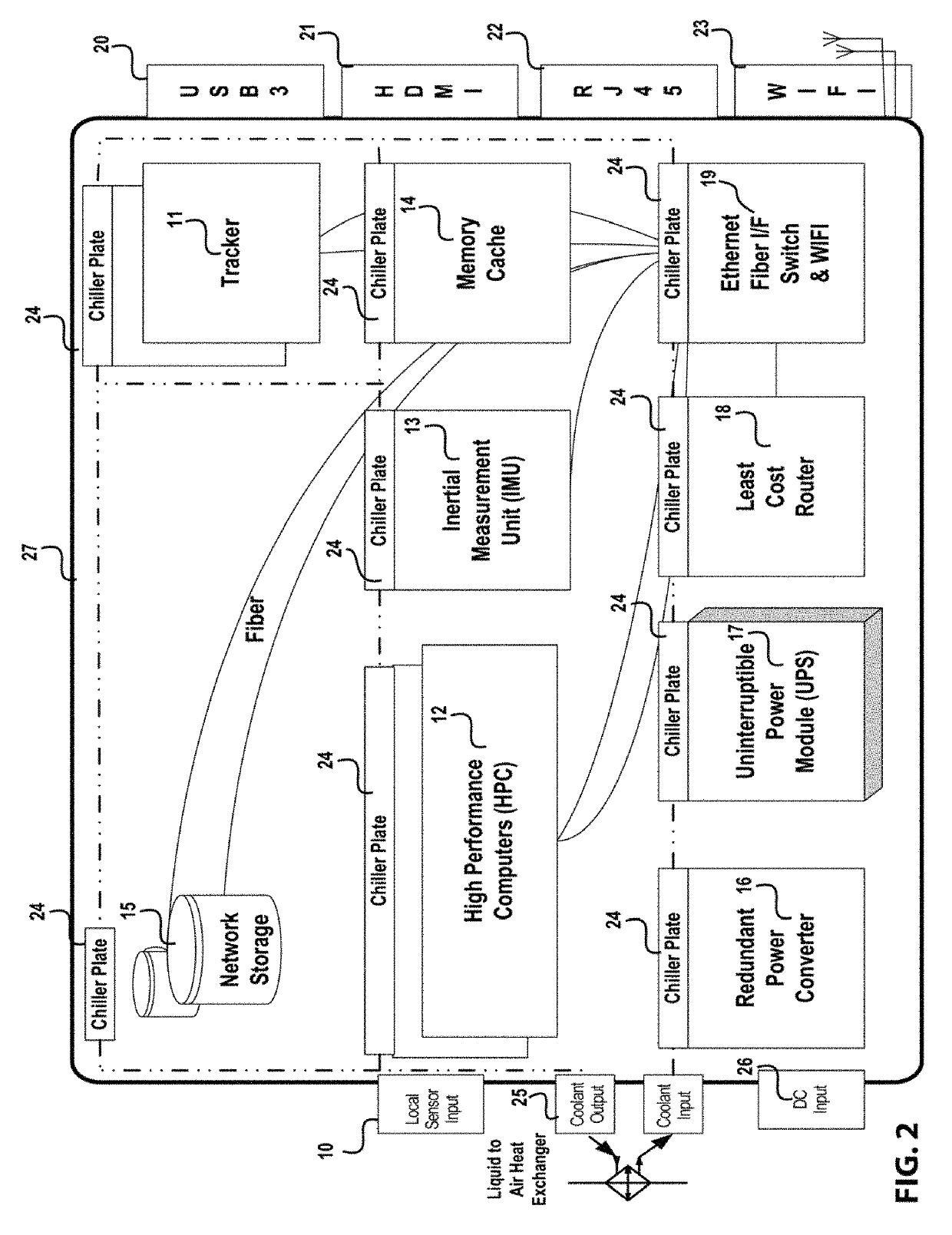

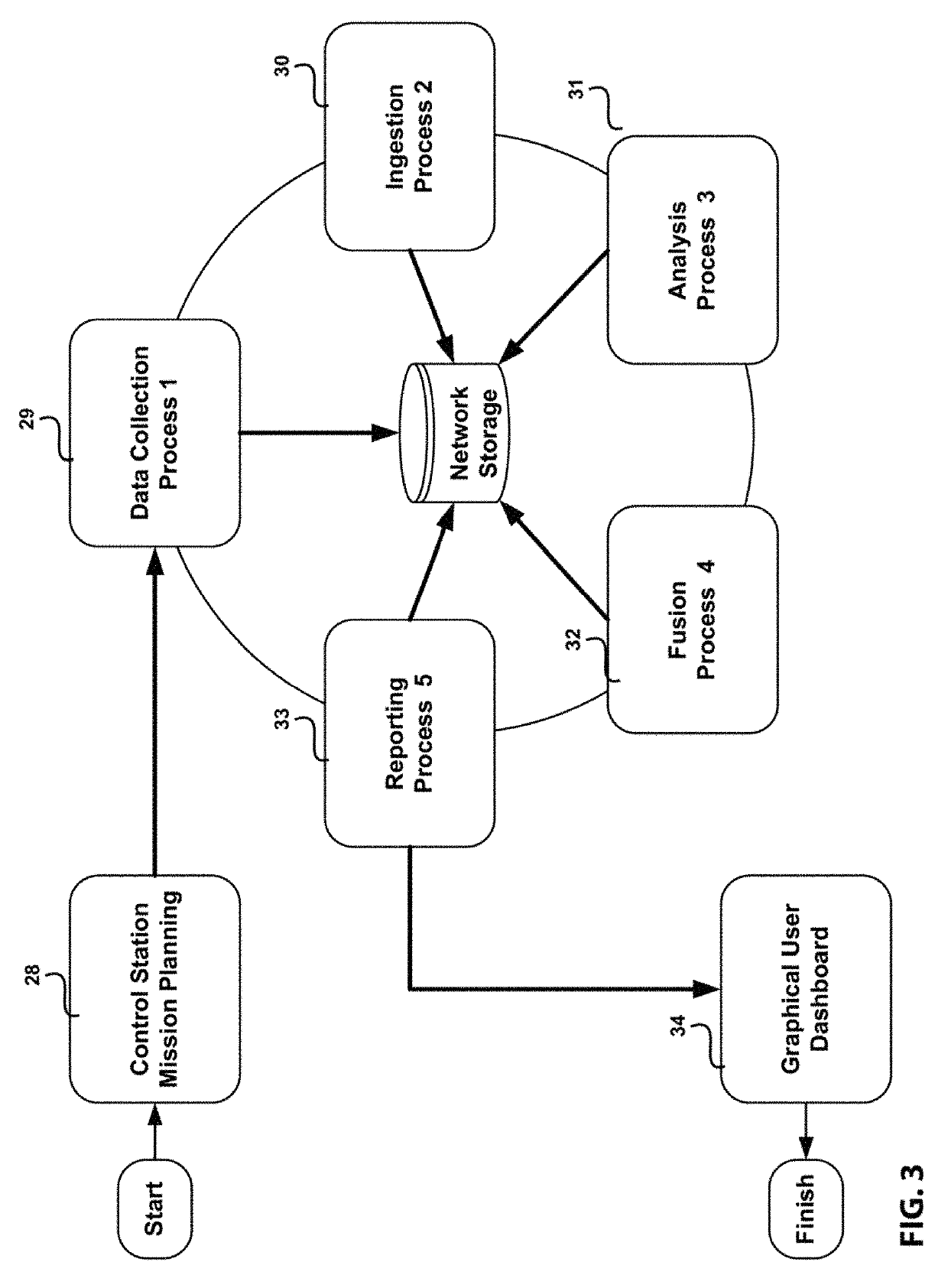

Portable apparatus and method for decision support for real time automated multisensor data fusion and analysis

Active | US20180239991A1 | Easy to detect, Reduce false detection, Scene recognition, Metadata based other databases retrieval, Cross modality, Cloud base

The present invention encompasses a physical or virtual computational analysis, fusion, and correlation system that can automatically, systematically, and independently analyze sensor data collected (upstream) aboard, or streamed from, aerial vehicles and/or other fixed or mobile single- or multi-sensor platforms. The resulting data is fused and presented locally, remotely, or at ground stations in near real time as it is collected from local and/or remote sensors. Compared with existing systems, the invention improves detection and reduces false detections, using a portable apparatus or cloud-based computation and capabilities designed to reduce the role of the human operator in reviewing, fusing, and analyzing cross-modality sensor data collected from ISR (Intelligence, Surveillance and Reconnaissance) aerial vehicles or other fixed and mobile ISR platforms. The invention replaces human sensor data analysts with hardware and software, providing two significant advantages over current manual methods.

Owner:MCLOUD TECH USA INC

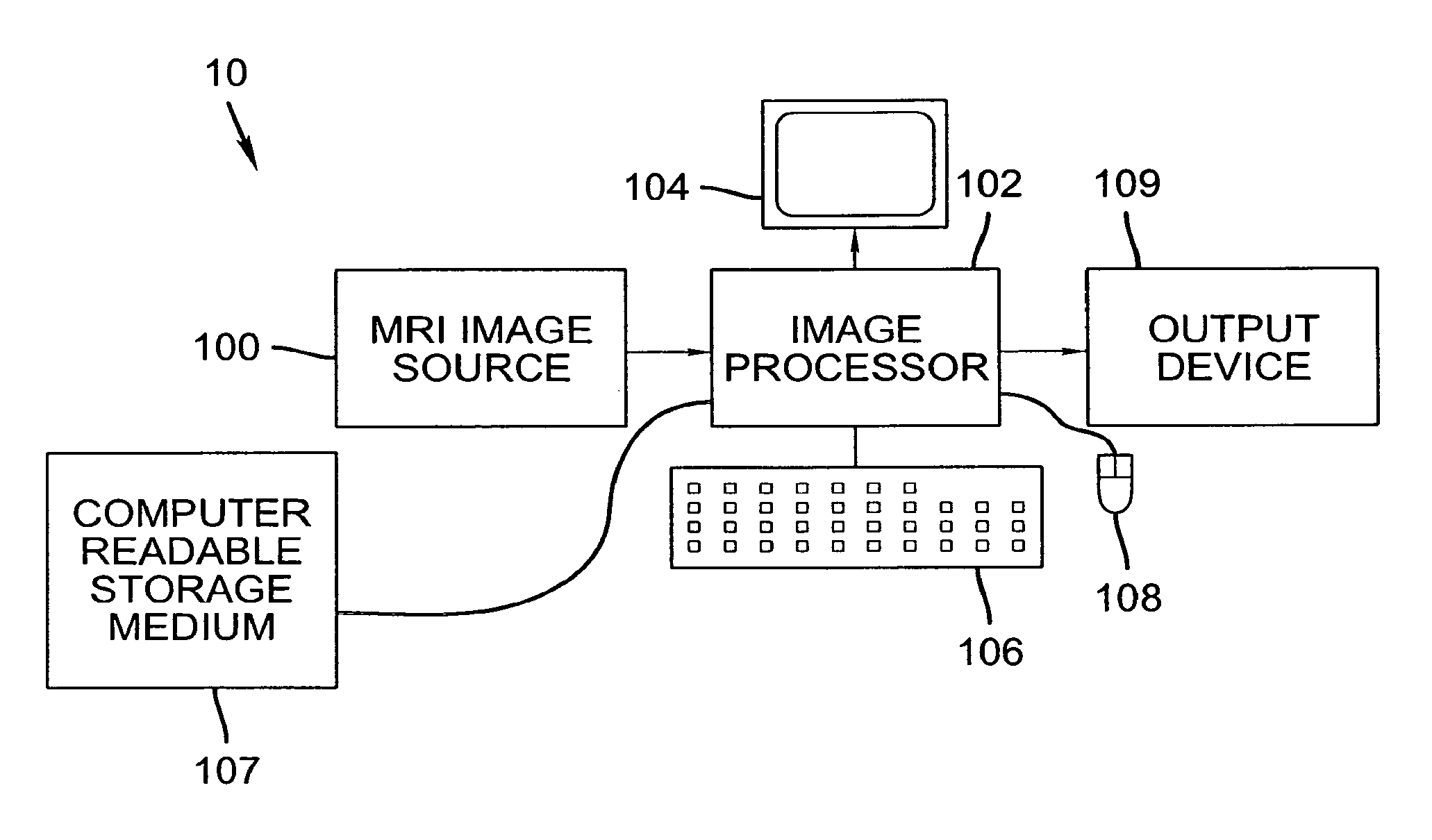

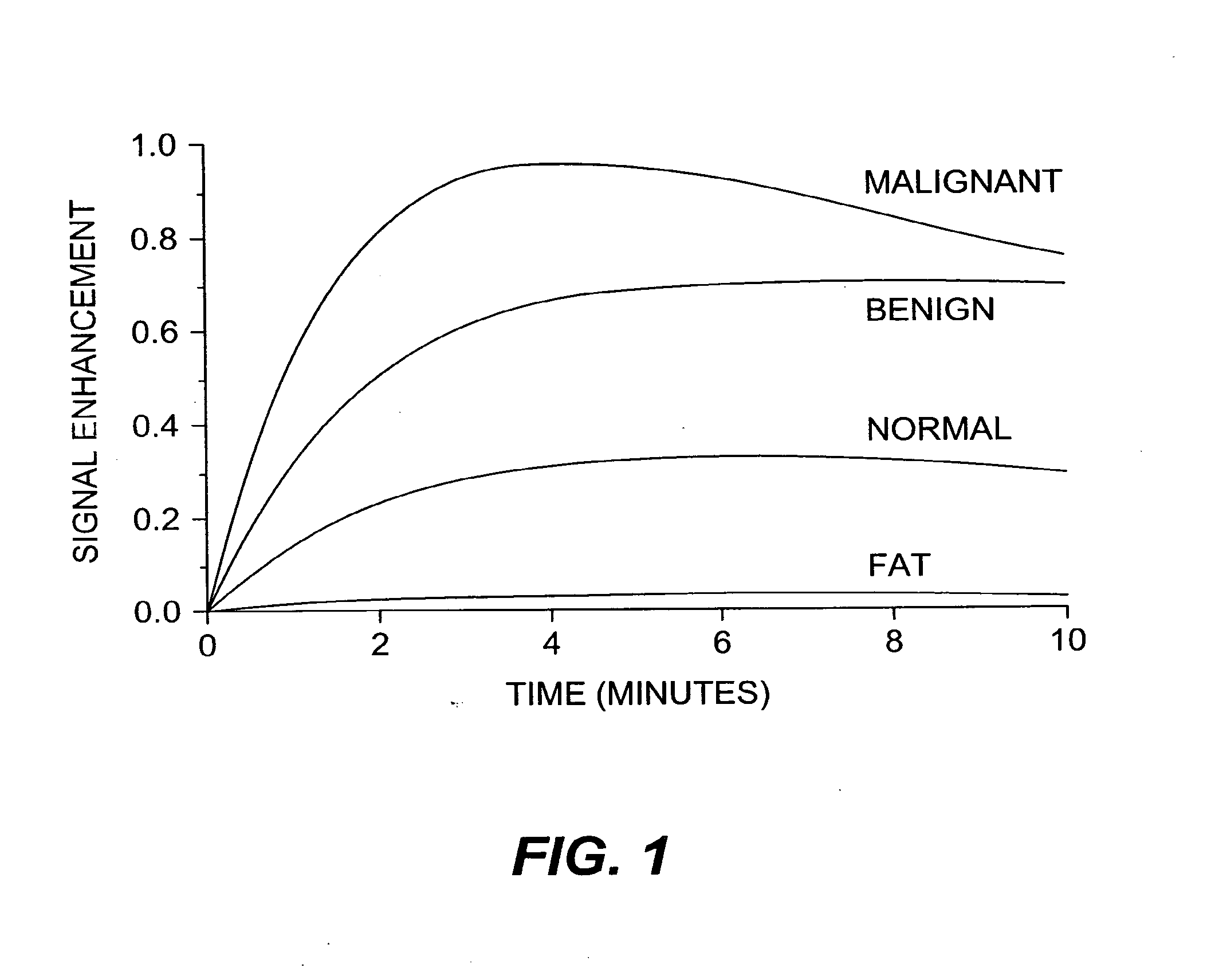

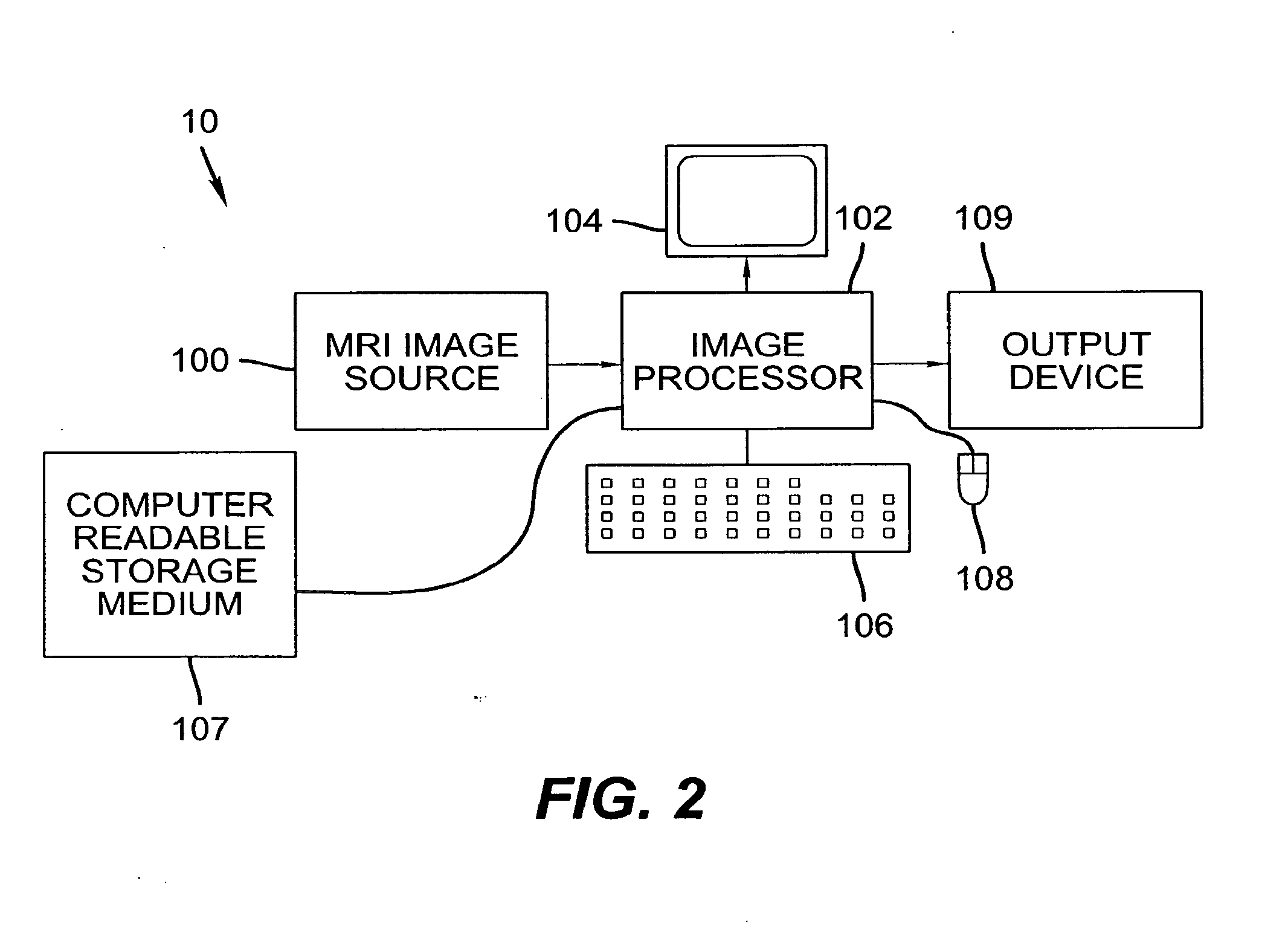

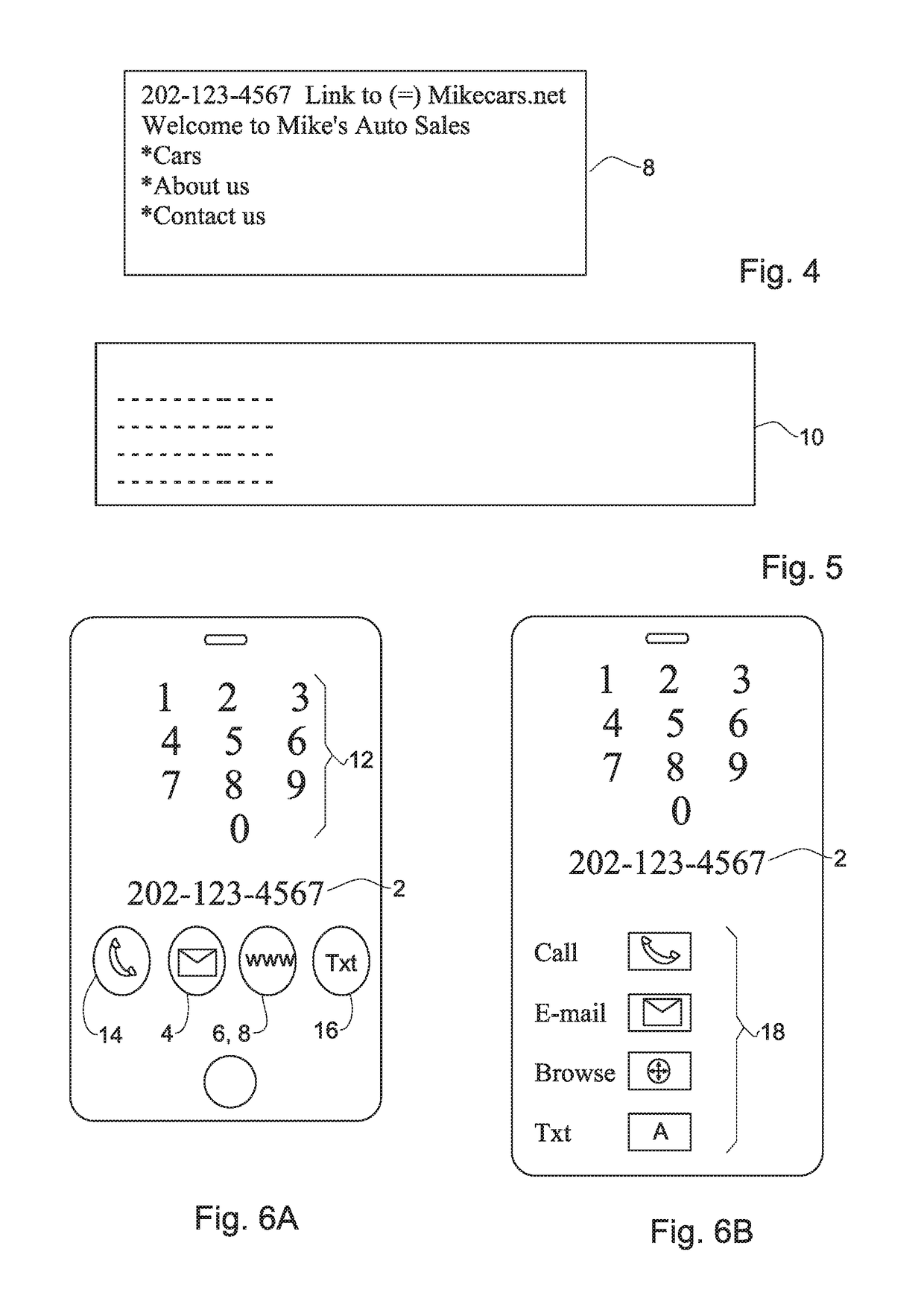

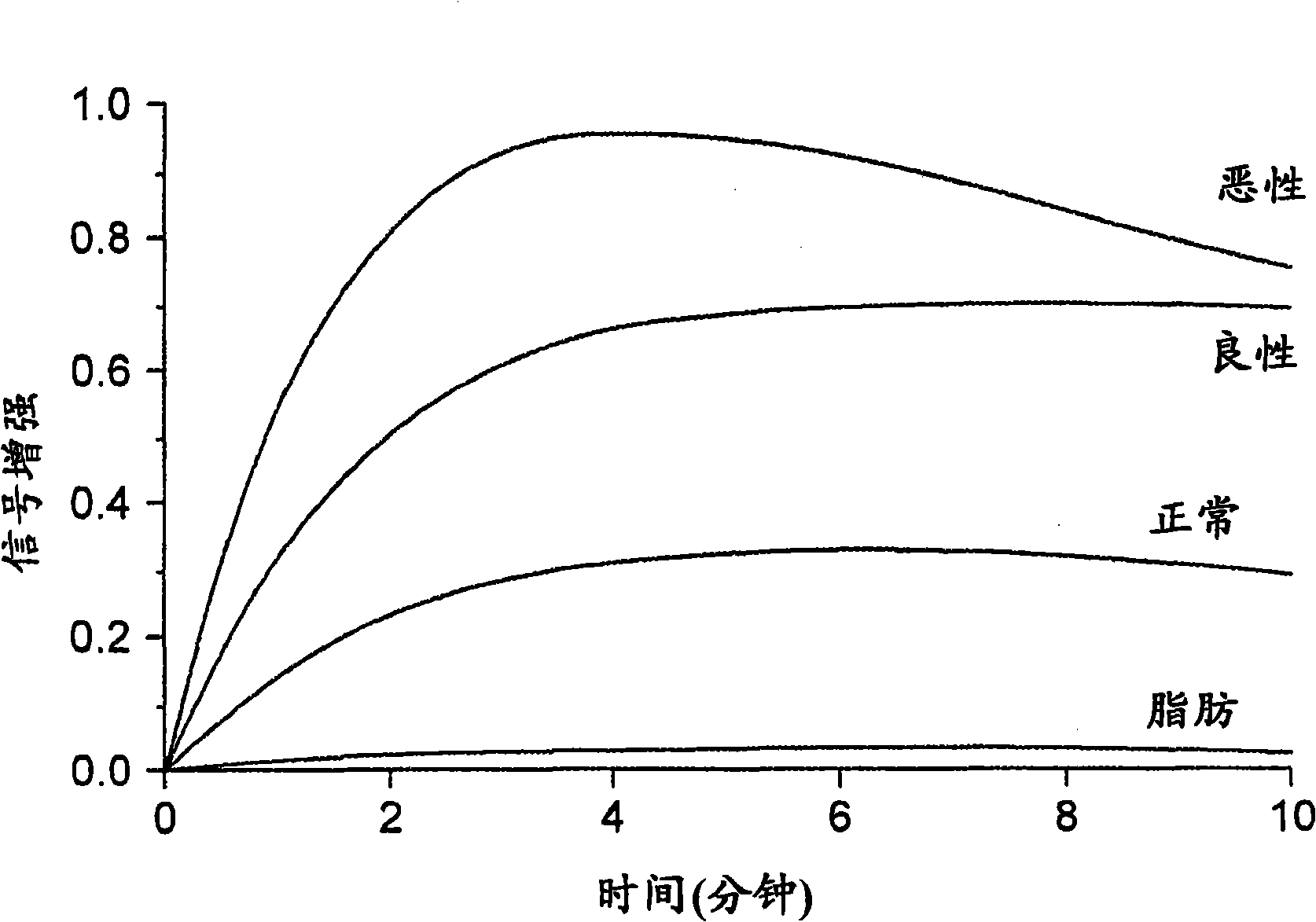

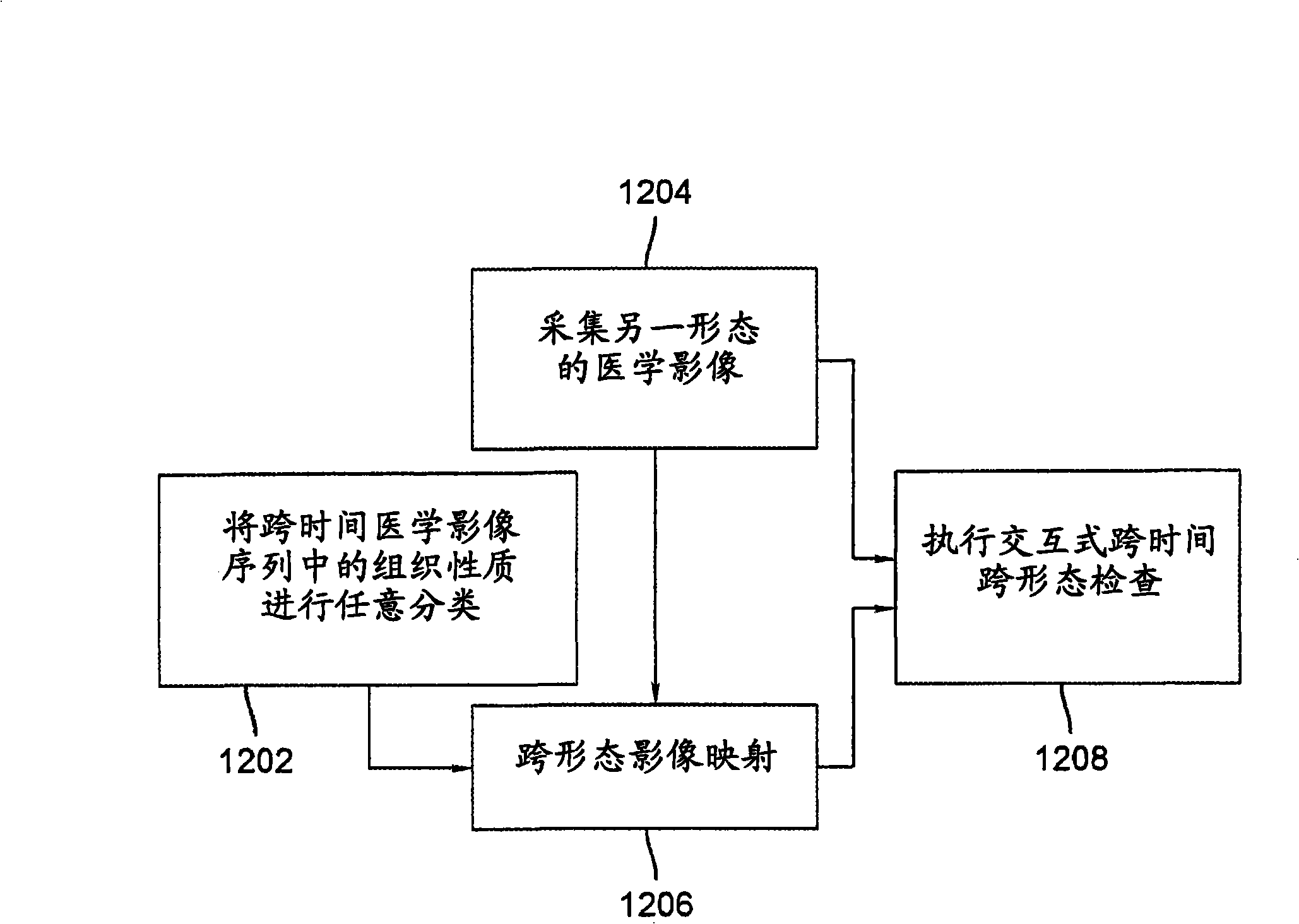

Cross-time and cross-modality inspection for medical image diagnosis

Inactive | US20070237372A1 | Image analysis, Recognition of medical/anatomical patterns, Cross modality, Imaging analysis

A cross-time and cross-modality inspection method for medical image diagnosis. A first set of medical images of a subject is accessed, wherein the first set is captured at a first time period by a first modality. A second set of medical images of the subject is accessed, wherein the second set is captured at a second time period by a second modality. Each of the first and second sets comprises a plurality of medical images. Image registration is performed by mapping the medical images of the first and second sets to predetermined spatial coordinates. A cross-time image mapping of the first and second sets is performed. Means are provided for interactive cross-time medical image analysis.

Owner:CARESTREAM HEALTH INC
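
The abstract says registration maps both image sets "to predetermined spatial coordinates" but does not say how. One simple way to illustrate the idea, assuming three corresponding landmarks have already been located in each image and that an affine transform is adequate, is to solve for the 2D affine map from landmark pairs. This is a sketch of landmark-based affine registration, not the patented method; clinical cross-modality registration more often relies on intensity-based measures, and the landmark coordinates below are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, v):
    """Solve m @ x = v for a 3x3 system using Cramer's rule."""
    d = det3(m)
    if d == 0:
        raise ValueError("landmarks are collinear; affine map is undetermined")
    out = []
    for col in range(3):
        mc = [row[:] for row in m]          # copy, then replace one column with v
        for r in range(3):
            mc[r][col] = v[r]
        out.append(det3(mc) / d)
    return out


def affine_from_landmarks(src, dst):
    """Fit x' = a*x + b*y + c and y' = d*x + e*y + f from three point pairs."""
    m = [[x, y, 1.0] for x, y in src]
    row_x = solve3(m, [p[0] for p in dst])  # (a, b, c)
    row_y = solve3(m, [p[1] for p in dst])  # (d, e, f)
    return row_x, row_y


def apply_affine(params, point):
    """Map a point from image coordinates into the reference coordinates."""
    (a, b, c), (d, e, f) = params
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)


# Hypothetical landmarks in a first-modality image and their reference positions.
params = affine_from_landmarks([(0, 0), (1, 0), (0, 1)], [(10, 20), (12, 20), (10, 23)])
print(apply_affine(params, (1, 1)))  # (12.0, 23.0)
```

The same fit would be done once per image set, each against the same reference landmarks, so that points from both time periods and both modalities end up in one coordinate frame.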

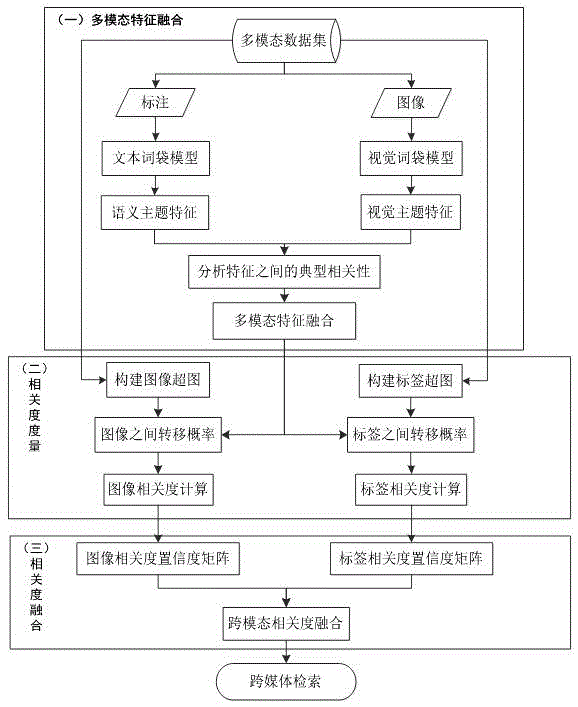

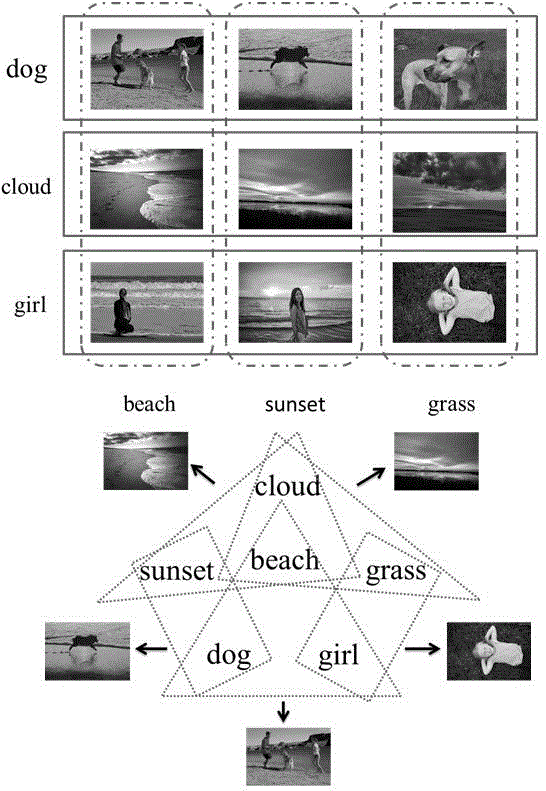

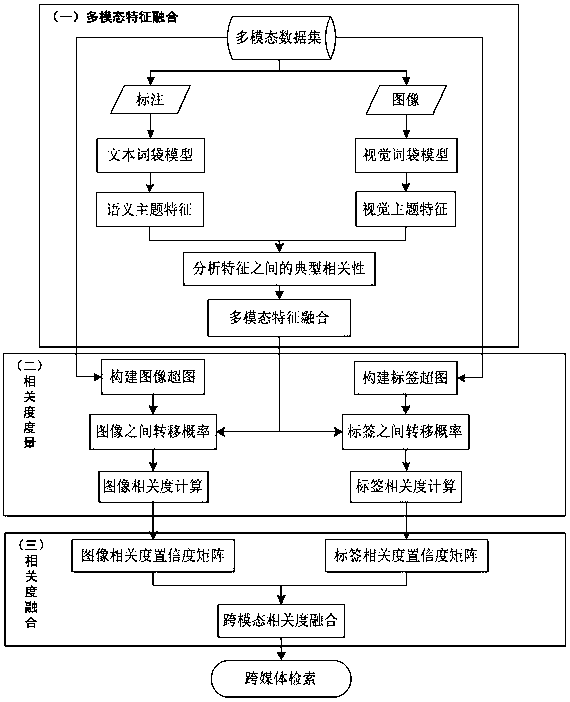

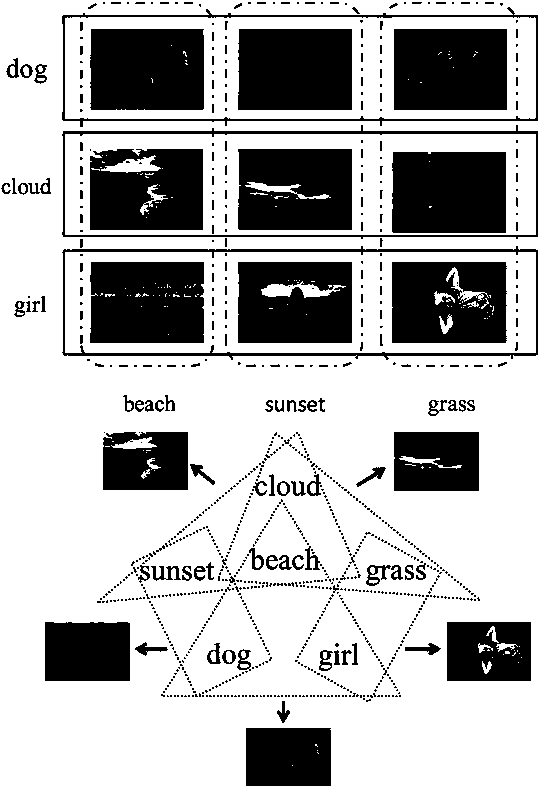

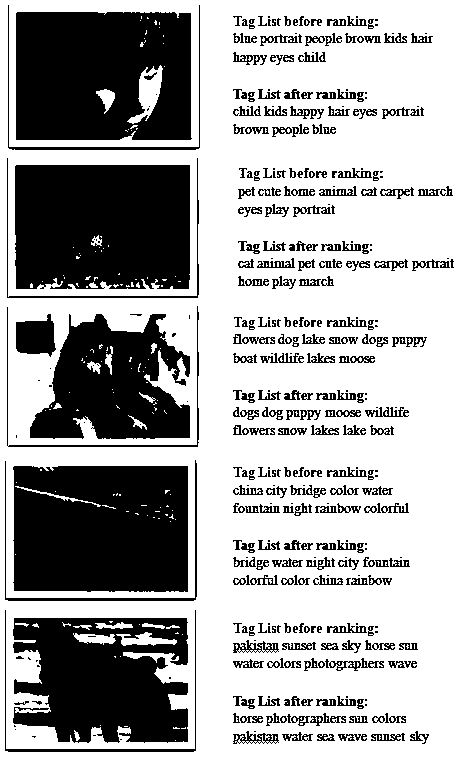

Cross-modality image-label relevance learning method facing social image

Active | CN104899253A | Learn precisely and effectively, Good correlation, Metadata still image retrieval, Special data processing applications, Cross modality, Data set

The invention belongs to the technical field of cross-media relevance learning and relates in particular to a cross-modality image-label relevance learning method for social images. The invention comprises three algorithms: multi-modal feature fusion, bidirectional relevance measurement, and cross-modality relevance fusion. The whole social image set is described using a hypergraph as the basic model; images and labels are each mapped to hypergraph nodes, an image-oriented relevance and a label-oriented relevance are obtained, and the two relevances are combined by a cross-modality fusion method to obtain a better relevance. Compared with traditional methods, the method offers high accuracy and high adaptivity. It supports efficient social image retrieval that takes multi-modal semantic information into account on large-scale, weakly labeled social image collections: retrieval relevance can be improved and user experience enhanced, and the method has application value in cross-media information retrieval.

Owner:FUDAN UNIV

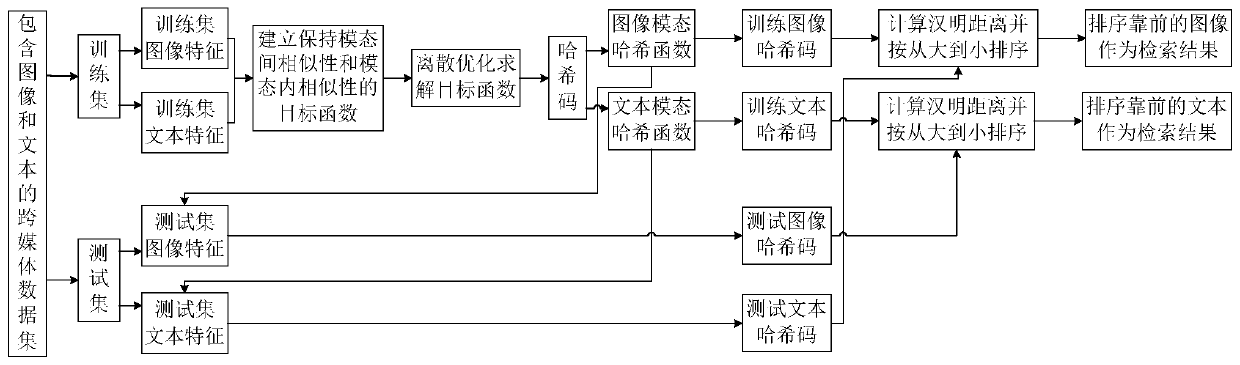

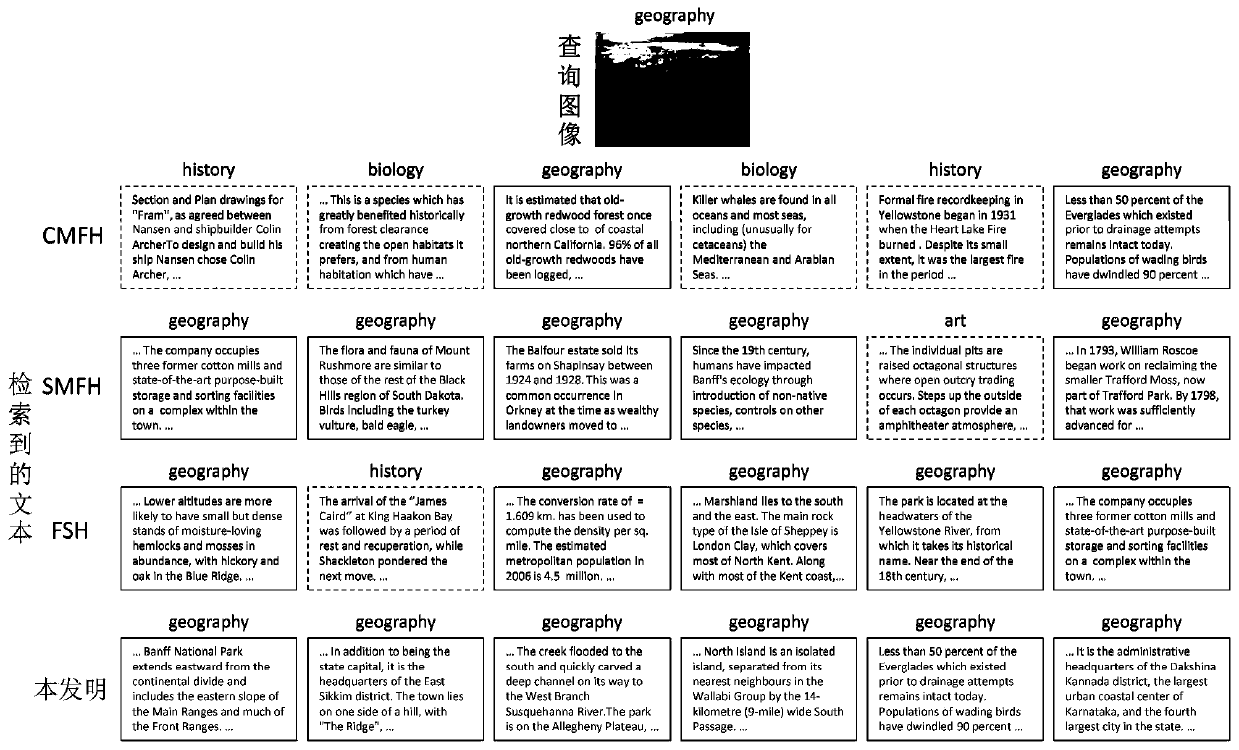

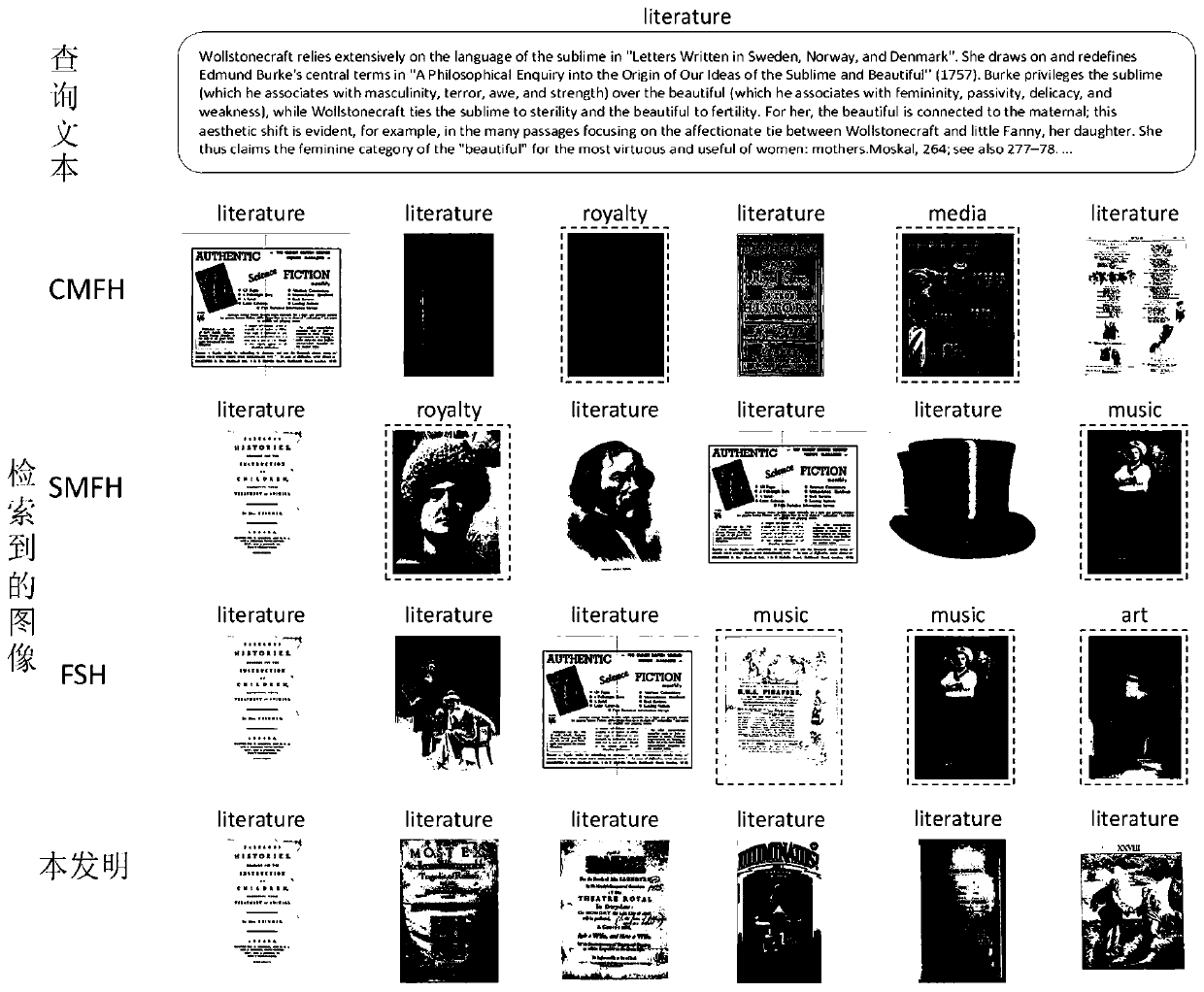

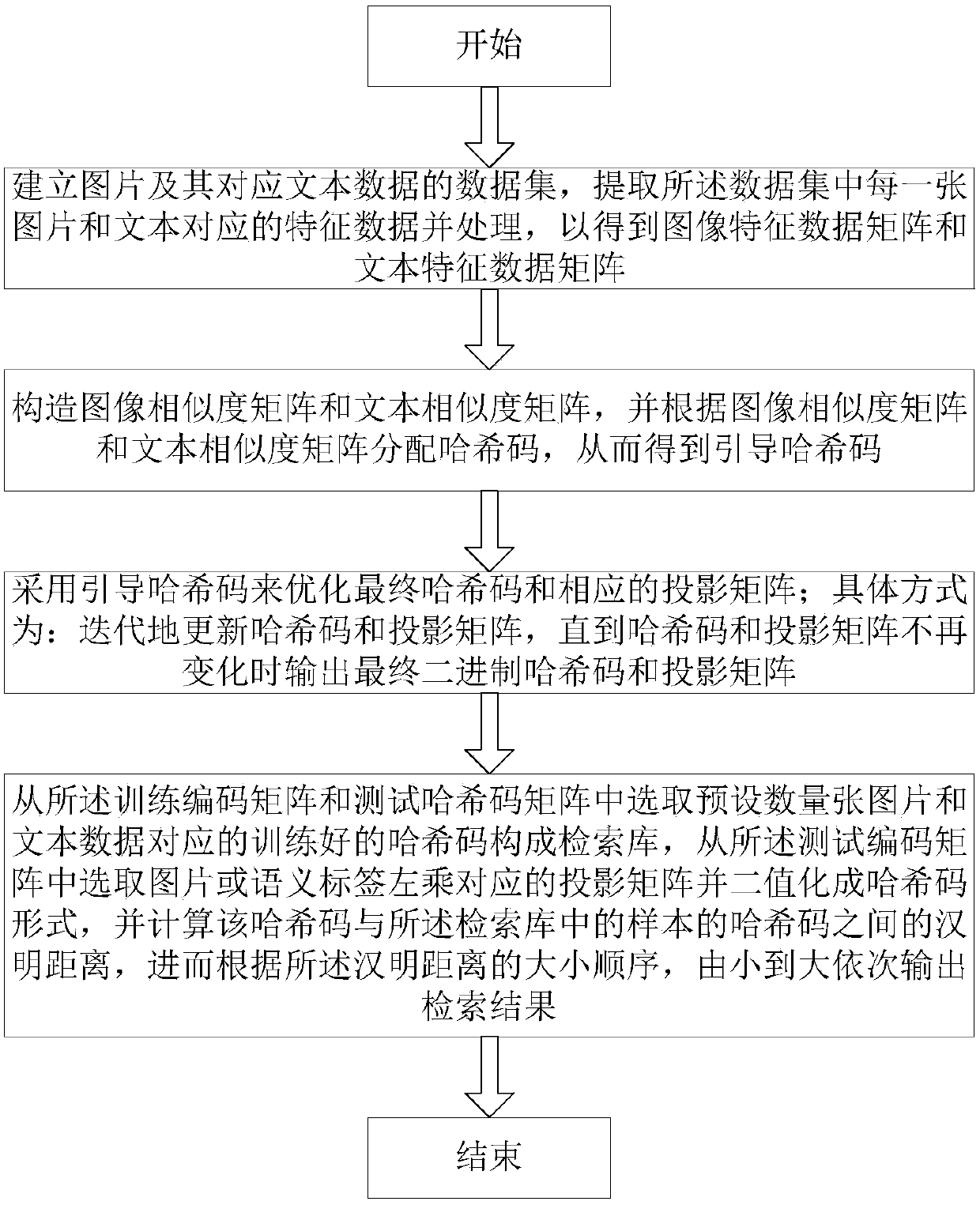

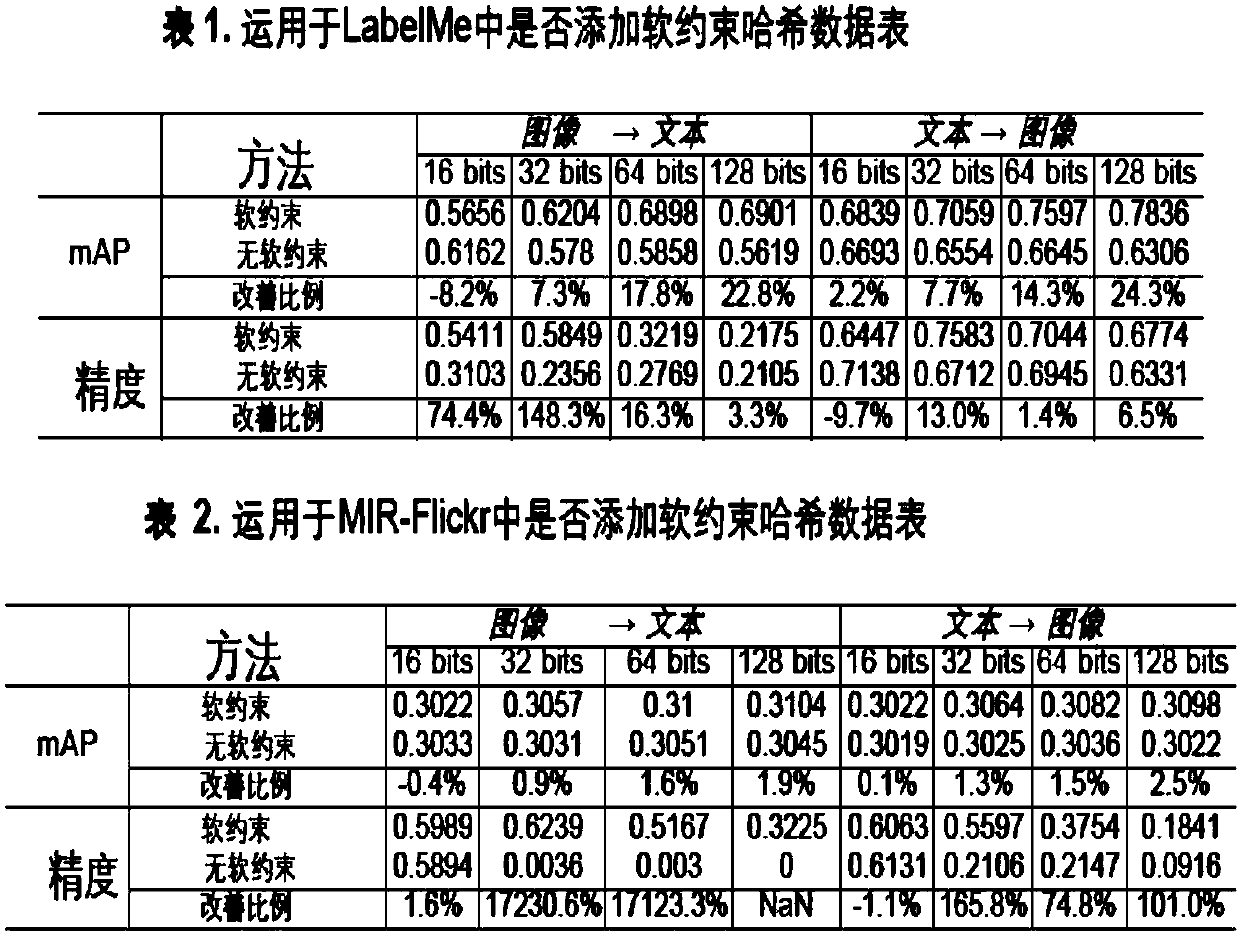

Cross-modal data discrete hash retrieval method based on similarity maintenance

Active | CN110059198A | Troubleshoot data retrieval issues, Improve accuracy, Multimedia data indexing, Multimedia data querying, Modal testing, Cross modality

The invention discloses a cross-modal data discrete hash retrieval method based on similarity preservation. The method comprises the following steps: establishing a cross-modal retrieval data set composed of samples containing two modalities and dividing it into a training set and a test set; establishing an objective function that preserves both inter-modality and intra-modality similarity, and solving it by a discrete optimization method to obtain a hash code matrix; learning a hash function for each modality from the hash code matrix; and calculating hash codes for all samples in the training and test sets using the hash functions. The test set of one modality serves as the query set and the training set of the other modality serves as the retrieval set; the Hamming distances between the hash codes of the query samples and those of the retrieval samples are calculated, and the resulting ranking serves as the retrieval result. The method effectively preserves inter-modality and intra-modality similarity and accounts for the discrete nature of hash codes by solving the objective function with discrete optimization, thereby improving cross-modality retrieval accuracy.

Owner:ZHEJIANG UNIV +1
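
The final retrieval step in the abstract ranks the retrieval set by Hamming distance to each query's hash code. Assuming the hash codes have already been learned and are stored as Python integers (one bit per hash bit), that step reduces to an XOR and a bit count. The codes below are made up for illustration, and the discrete optimization that learns them is not shown.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes stored as integers."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code: int, retrieval_codes: list) -> list:
    """Indices of the retrieval set ordered nearest first (smallest distance).

    sorted() is stable, so samples at equal distance keep their original order.
    """
    return sorted(range(len(retrieval_codes)),
                  key=lambda i: hamming(query_code, retrieval_codes[i]))


# Hypothetical 4-bit codes: one image query against four text samples.
print(rank_by_hamming(0b1011, [0b0000, 0b1010, 0b1111, 0b1011]))  # [3, 1, 2, 0]
```

Because XOR and popcount are cheap, this ranking stays fast even over large retrieval sets, which is the usual motivation for hashing-based cross-modal retrieval.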

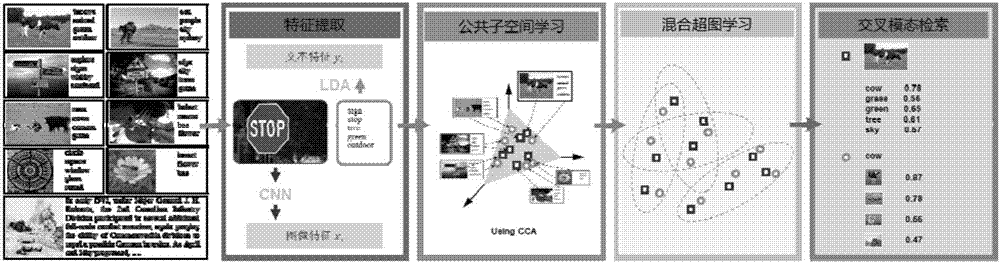

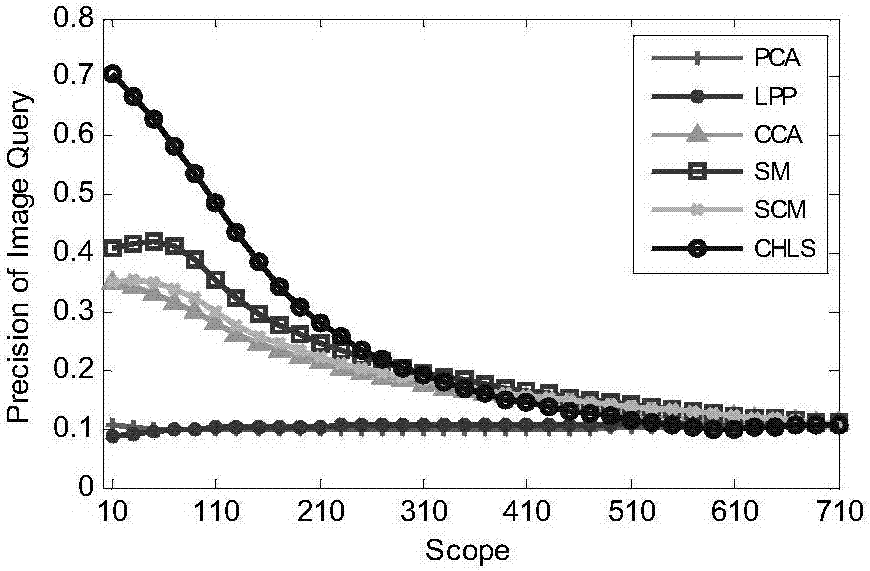

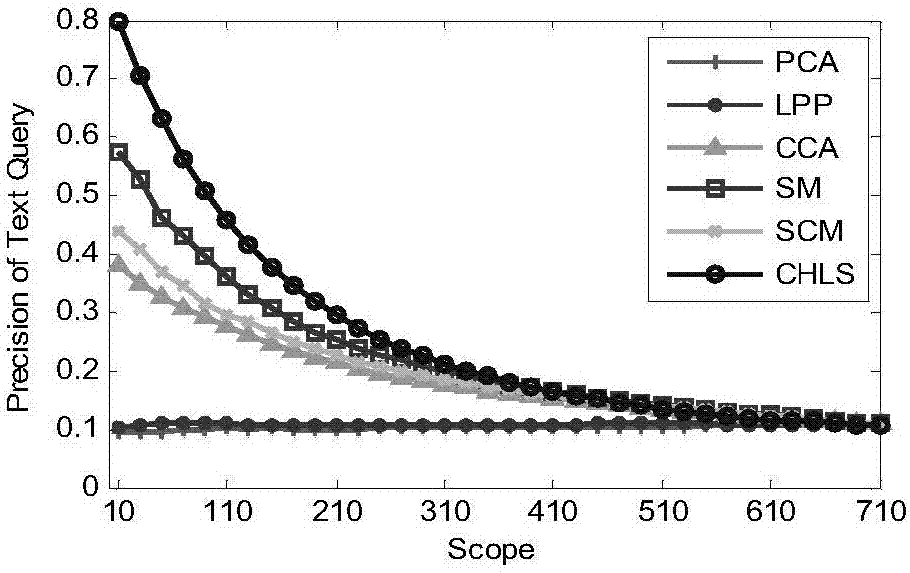

Cross-modal retrieval algorithm based on mixed hypergraph learning in subspace

The invention discloses a cross-modal retrieval algorithm based on mixed hypergraph learning in a subspace, built on cross-modal common subspace learning via canonical correlation analysis. Similarities within and across modalities are calculated from the mapping into the common subspace, and a mixed relation matrix is computed from these similarities. A mixed hypergraph model is built from the relation matrix, and cross-modal retrieval and sample ranking are finally performed through hypergraph learning. To address cross-modal heterogeneity and high-order relations among samples, the algorithm applies the hypergraph model together with cross-modal common subspace learning to cross-modal retrieval, so that the model considers intra-modality and inter-modality similarity simultaneously while also taking high-order relations among multiple samples into account. This effectively improves cross-modal retrieval performance, substantially raising both precision and recall.

Owner:DALIAN UNIV OF TECH

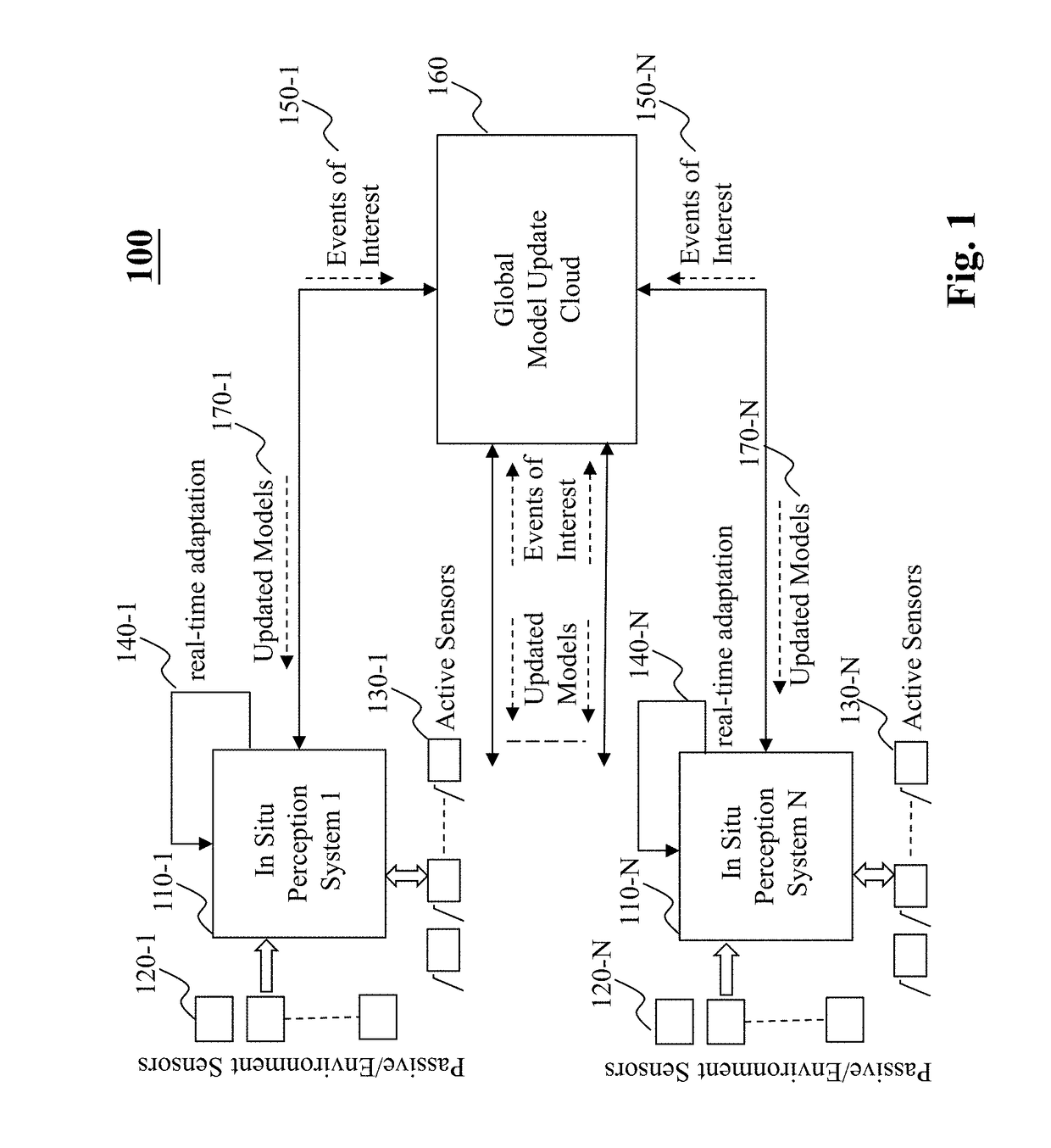

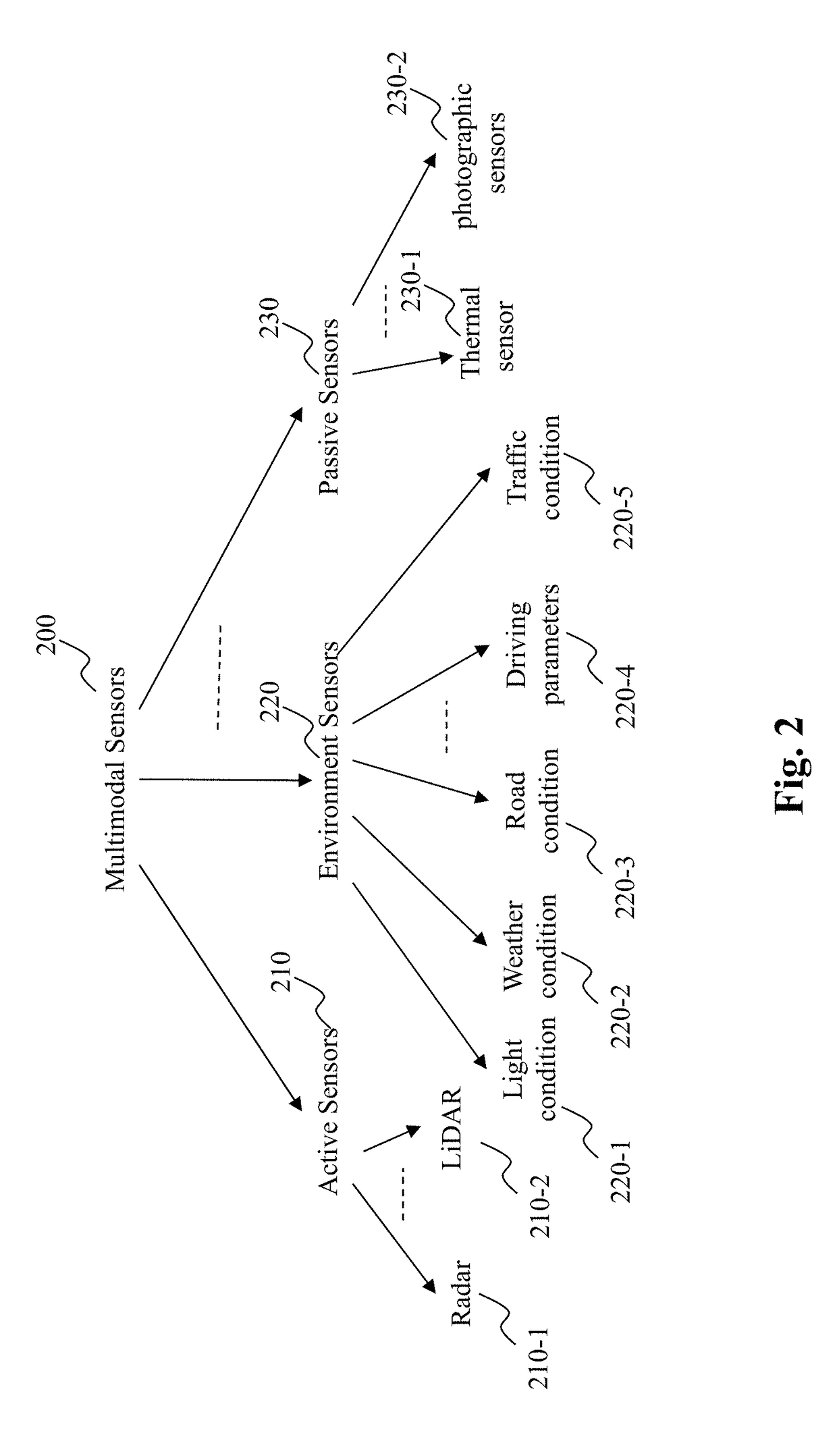

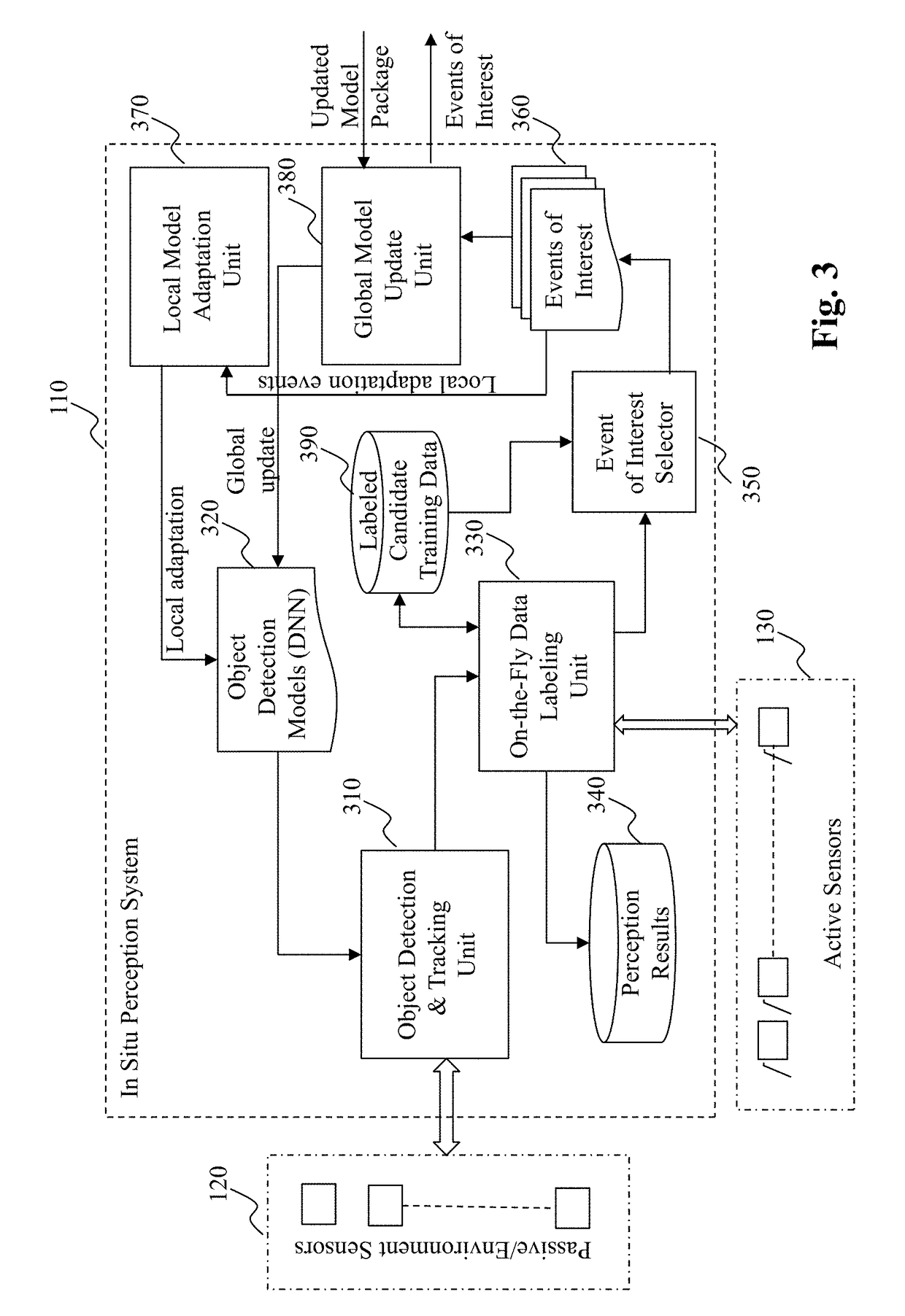

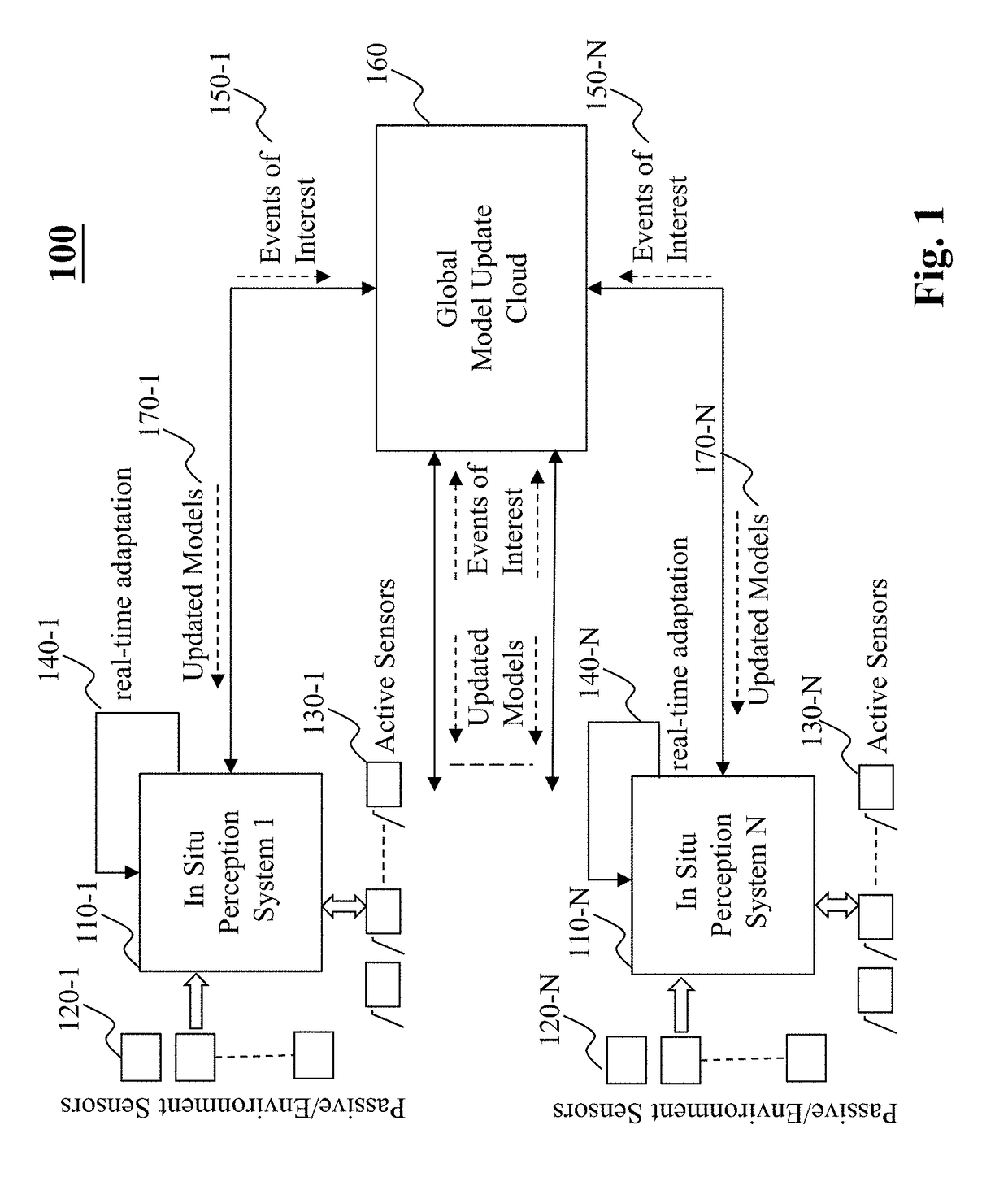

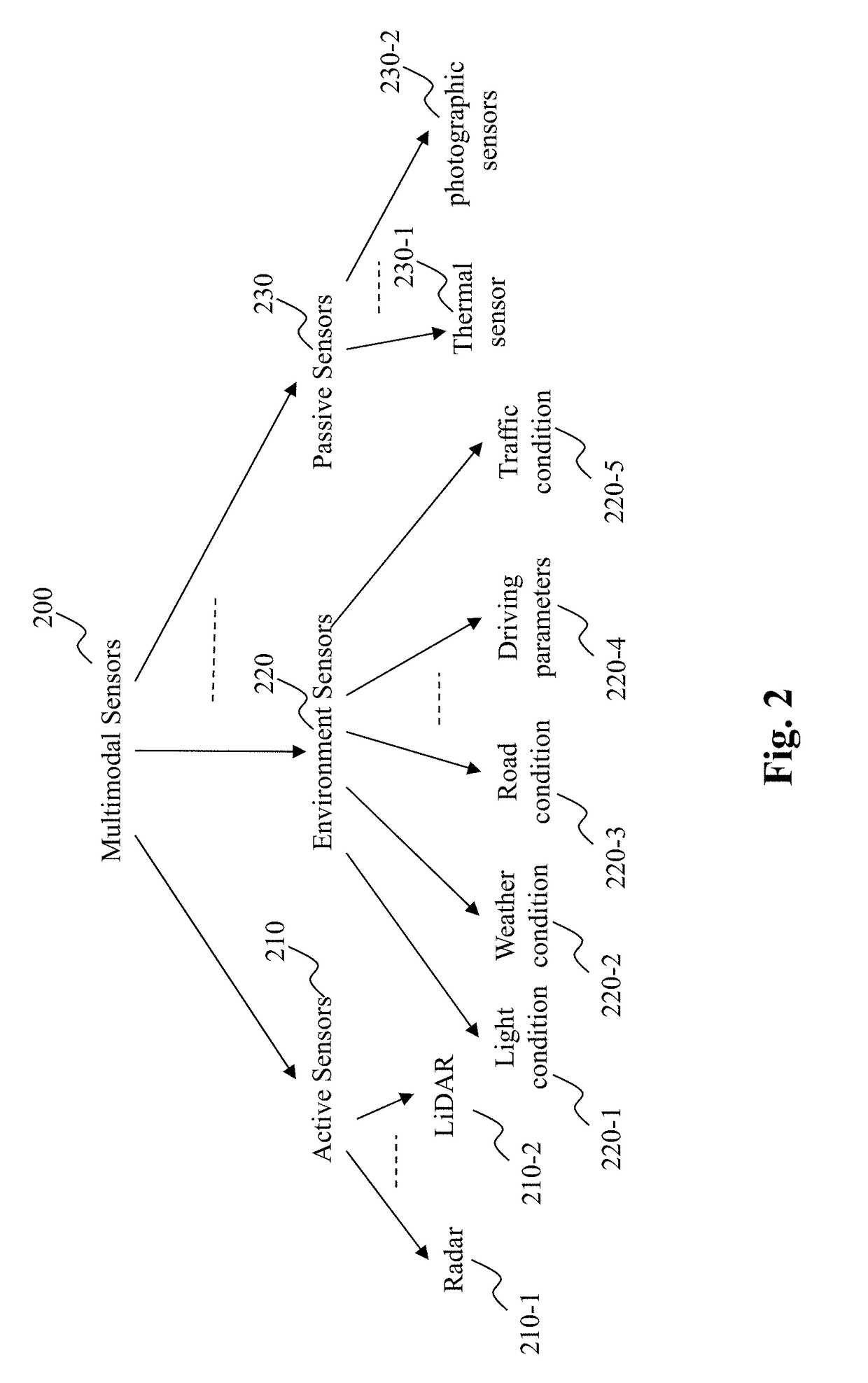

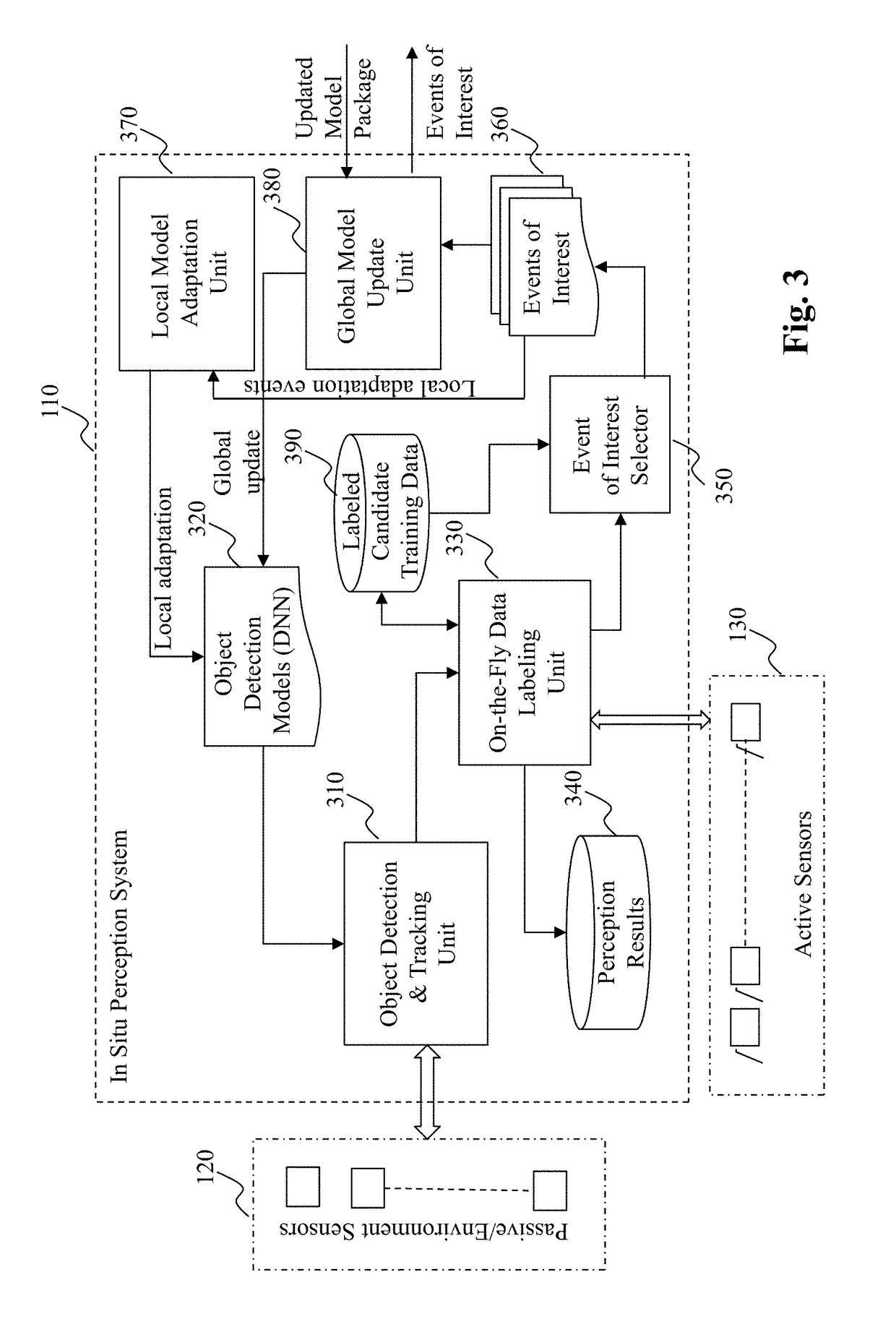

Method and system for on-the-fly object labeling via cross modality validation in autonomous driving vehicles

Active | US20180349784A1 | Autonomous decision making process, Scene recognition, Pattern recognition, Cross modality

The present teaching relates to a method, system, medium, and implementation for in-situ perception in an autonomous driving vehicle. Multiple types of sensor data, providing information about the vehicle's surroundings, are acquired continuously via multiple types of sensors deployed on the vehicle. One or more items surrounding the vehicle are tracked, based on at least one model, from a first type of sensor data acquired by a first type of sensor. A second type of sensor data is obtained from a second type of sensor and used to generate validation base data. At least some of the tracked items are labeled automatically via the validation base data, and the labeled items are used to generate model update information for updating the at least one model.

Owner:PLUSAI INC
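
The abstract leaves the validation rule open. As a hedged illustration only, the sketch below assumes that items tracked from a first sensor type (for example, a camera) carry estimated ground-plane positions, and that the validation base data from a second sensor type (for example, lidar) is a list of detected object positions; an item is labeled as confirmed when a validation detection lies within a hypothetical distance threshold. `TrackedItem`, `label_by_validation`, and the threshold are invented names for this sketch, not PlusAI's API.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class TrackedItem:
    """An item tracked from the first sensor type (hypothetical structure)."""
    item_id: int
    x: float  # estimated ground-plane position in metres
    y: float


def label_by_validation(items, validation_points, max_dist=1.0):
    """Label each tracked item True if a second-sensor detection lies within max_dist.

    items: TrackedItem objects derived from the first sensor type.
    validation_points: (x, y) detections from the second sensor type.
    Returns {item_id: bool}; such labels could then feed a model update step.
    """
    labels = {}
    for item in items:
        labels[item.item_id] = any(
            hypot(item.x - px, item.y - py) <= max_dist
            for px, py in validation_points
        )
    return labels


items = [TrackedItem(1, 10.0, 2.0), TrackedItem(2, 30.0, -1.0)]
print(label_by_validation(items, [(10.4, 2.3)]))  # {1: True, 2: False}
```

A nearest-neighbour distance gate is only one possible cross-modality check; a real system would also need to associate detections over time and handle occlusion.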

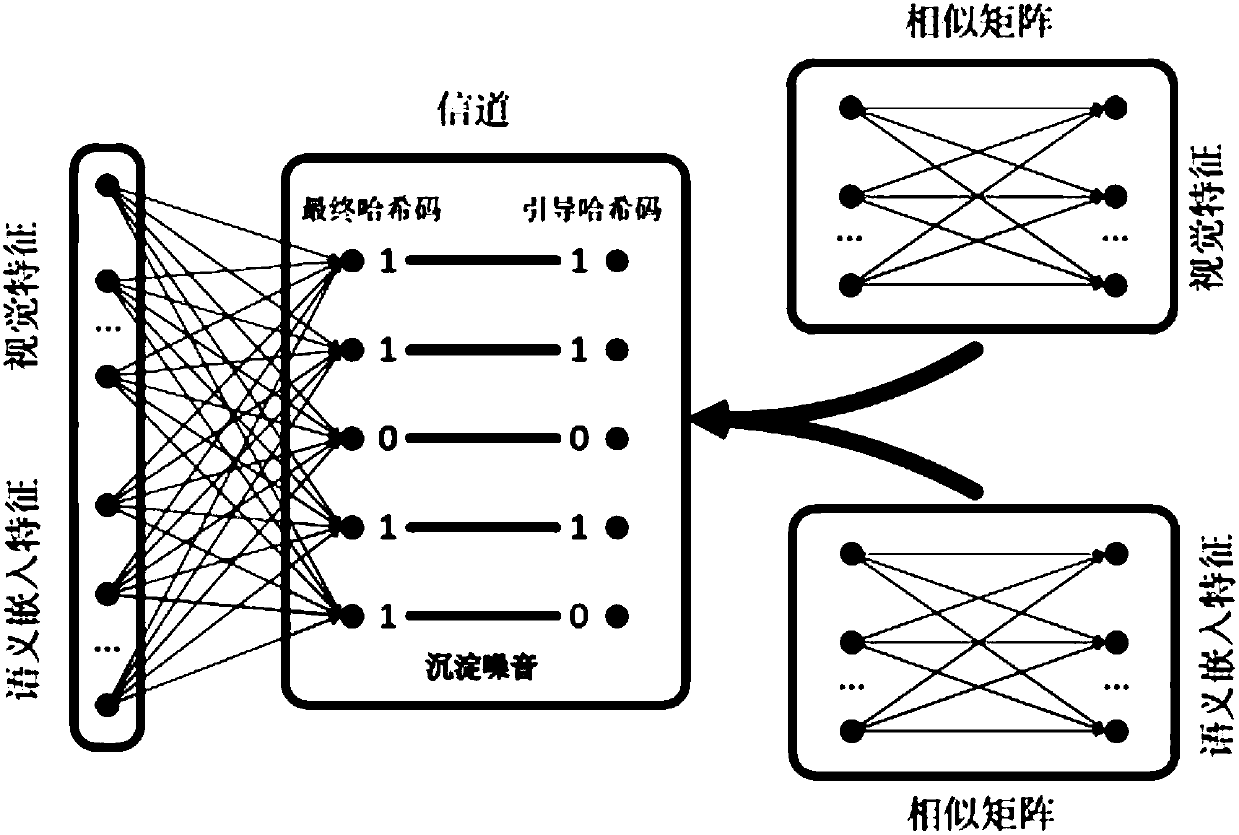

Image search method based on soft constraint non-supervision cross-modality hash

Inactive | CN107766555A | Reduce quantization loss, Reduce noise, Special data processing applications, Cross modality, Hat matrix

The invention discloses an image search method based on soft-constraint unsupervised cross-modality hashing. The method comprises the following steps in sequence: building a dataset of images and their corresponding text to obtain an image feature data matrix and a text feature data matrix; constructing an image similarity matrix and a text similarity matrix and assigning hash codes according to them, thereby obtaining guiding hash codes; using the guiding hash codes to optimize the final hash codes and the corresponding projection matrix; and calculating the Hamming distance between the query's hash codes and those of the samples in the search database, then outputting search results ranked by Hamming distance. The method can reduce the quantization loss of the hash codes, narrow the semantic gap, and obtain discrete solutions, thereby improving the accuracy and efficiency of cross-modal search between images and text.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

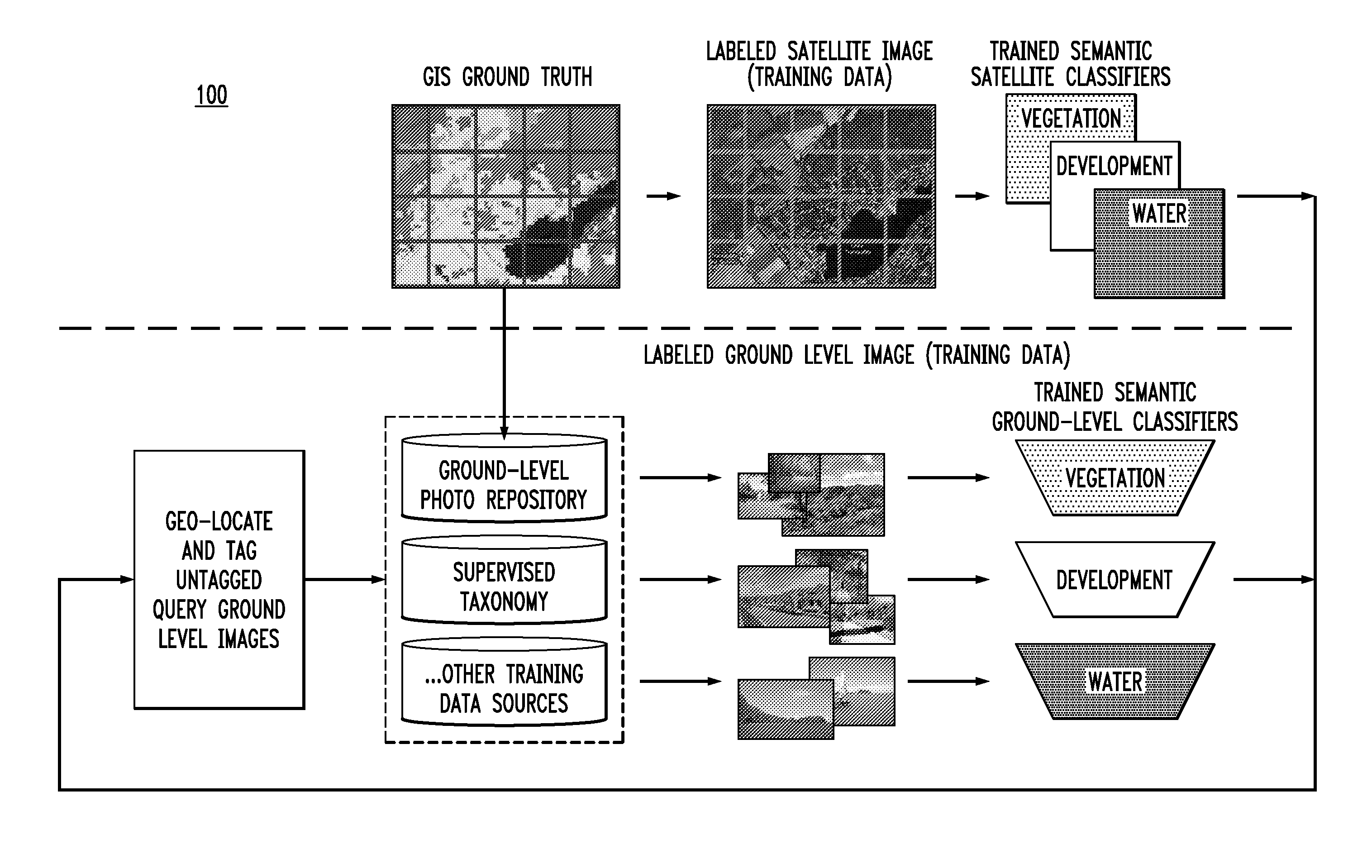

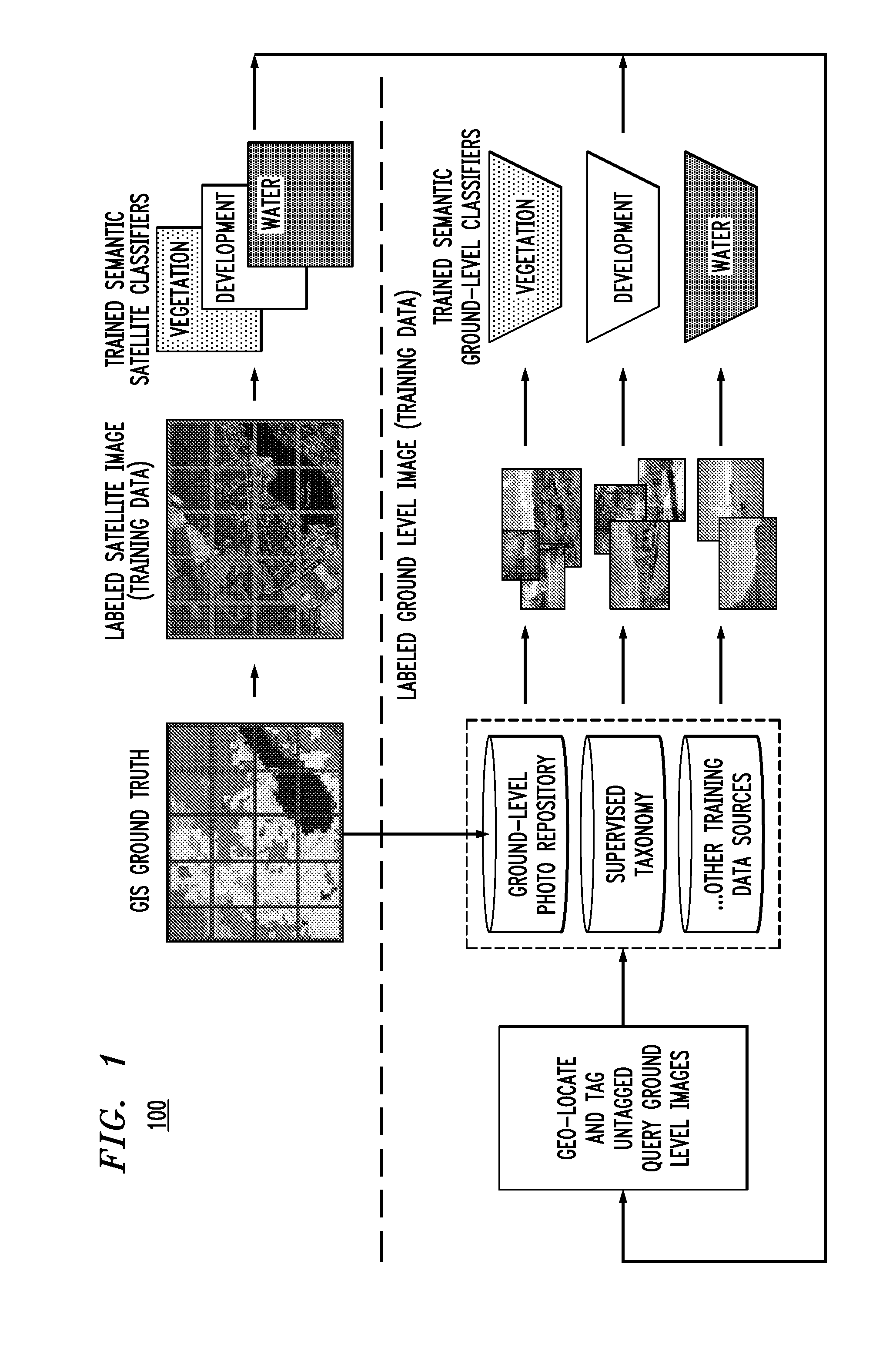

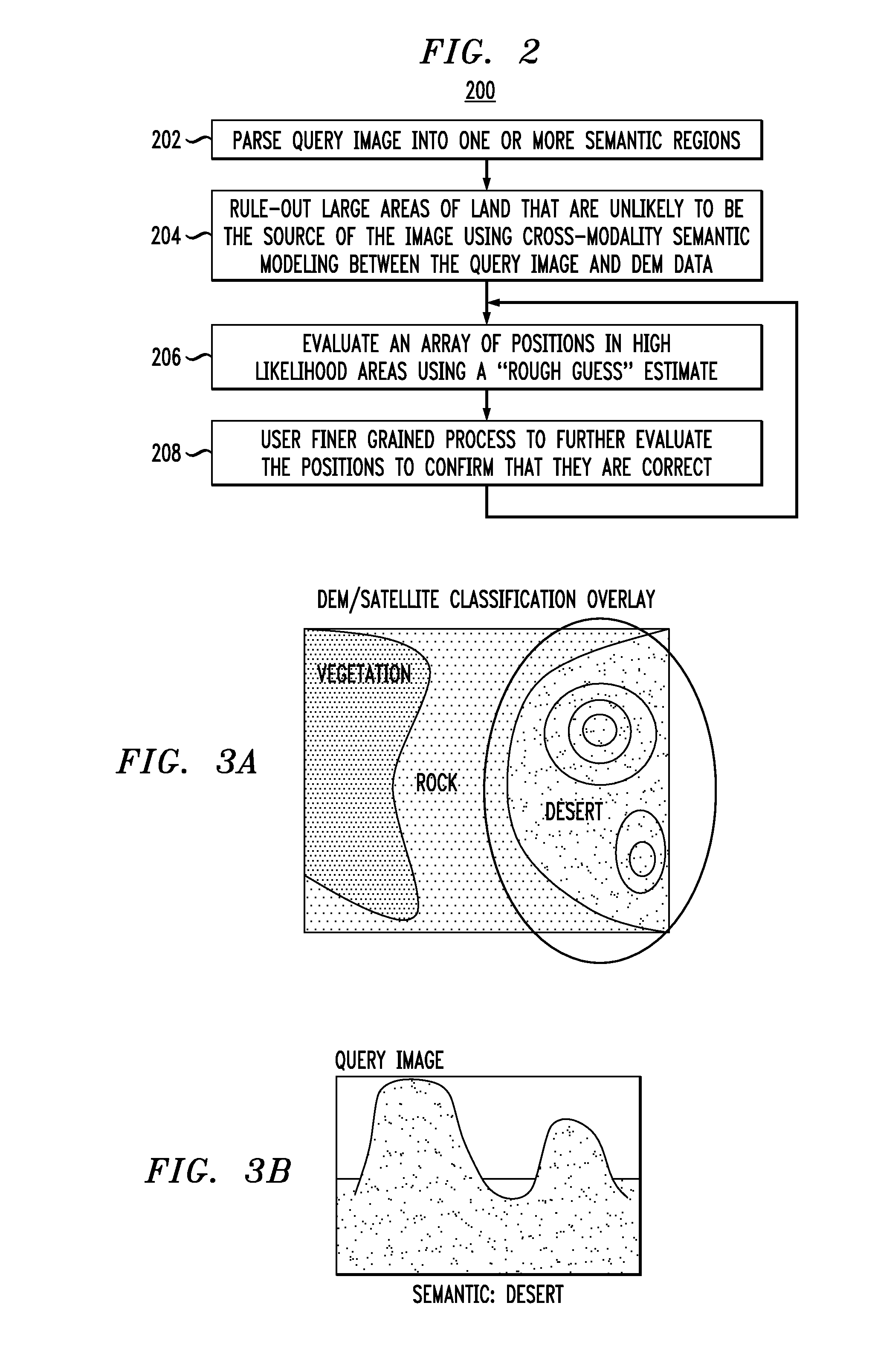

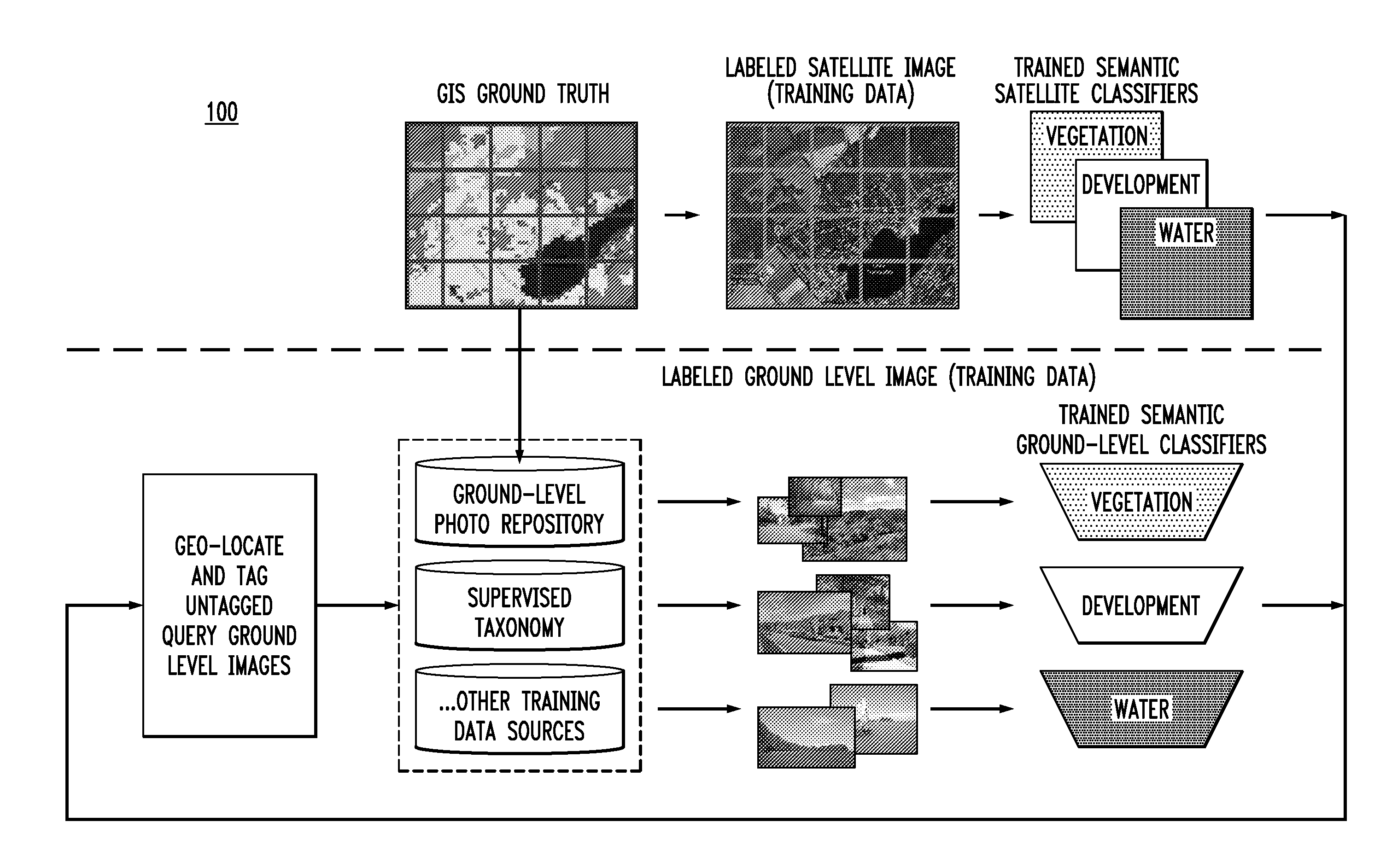

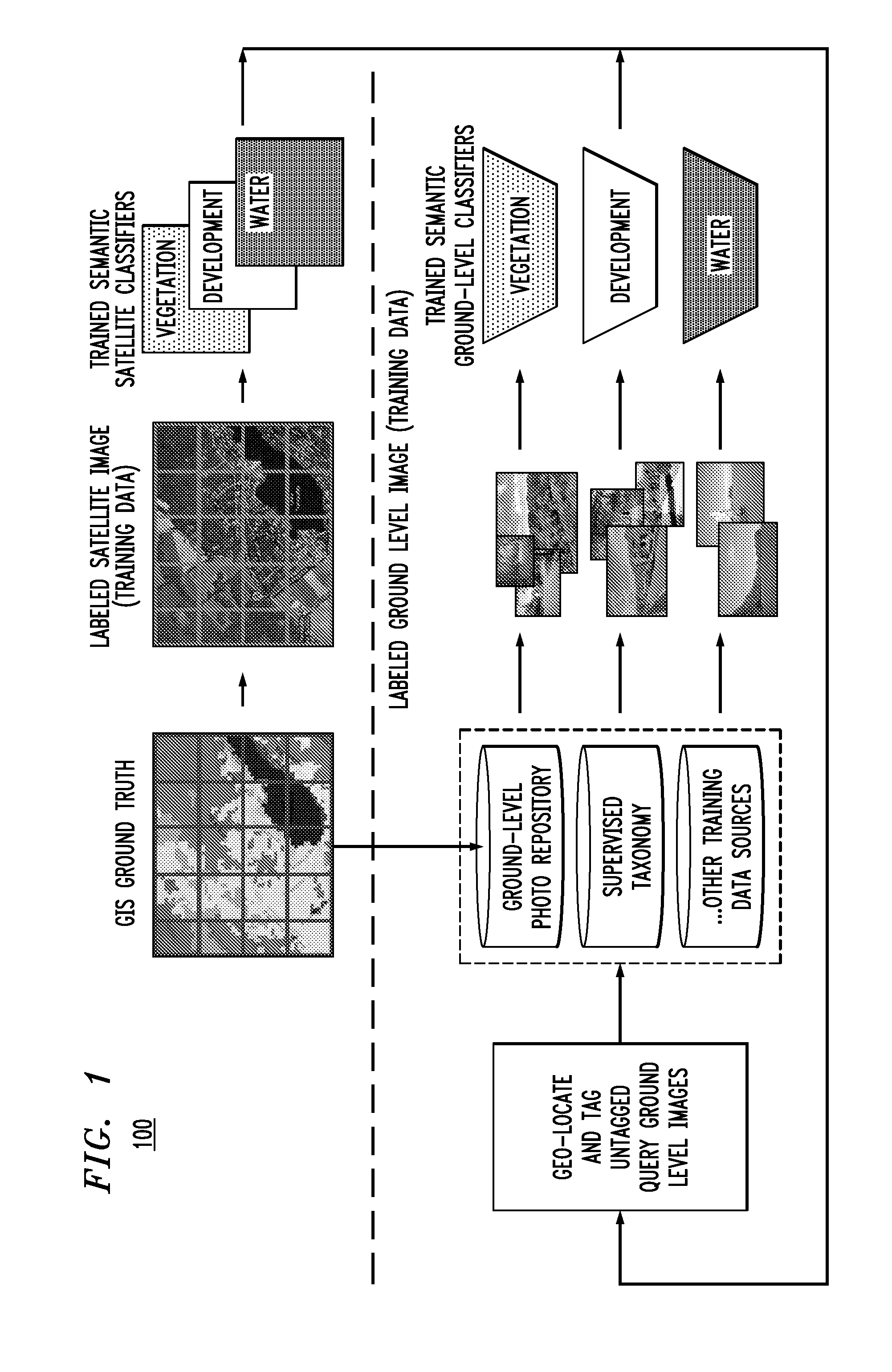

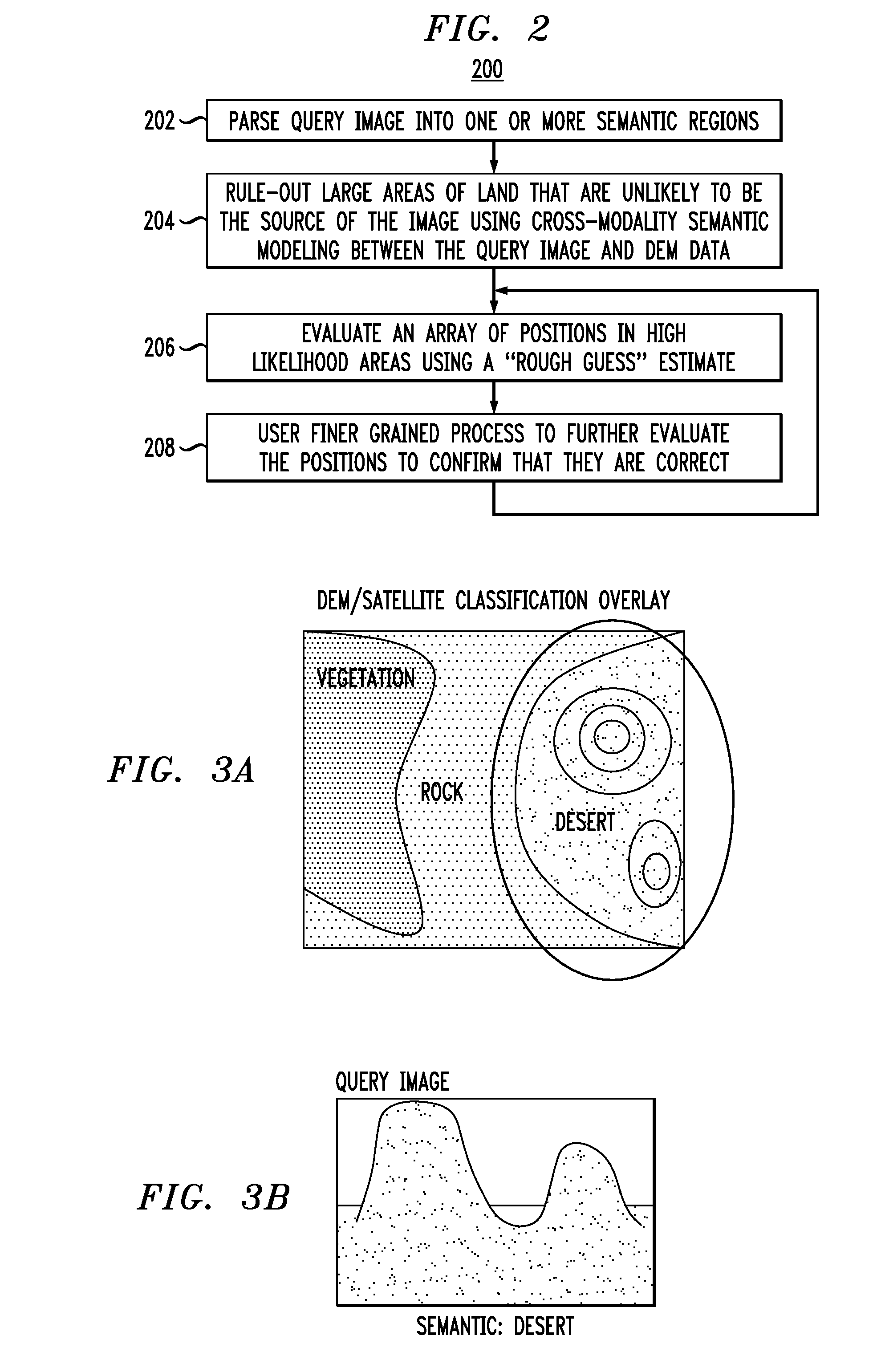

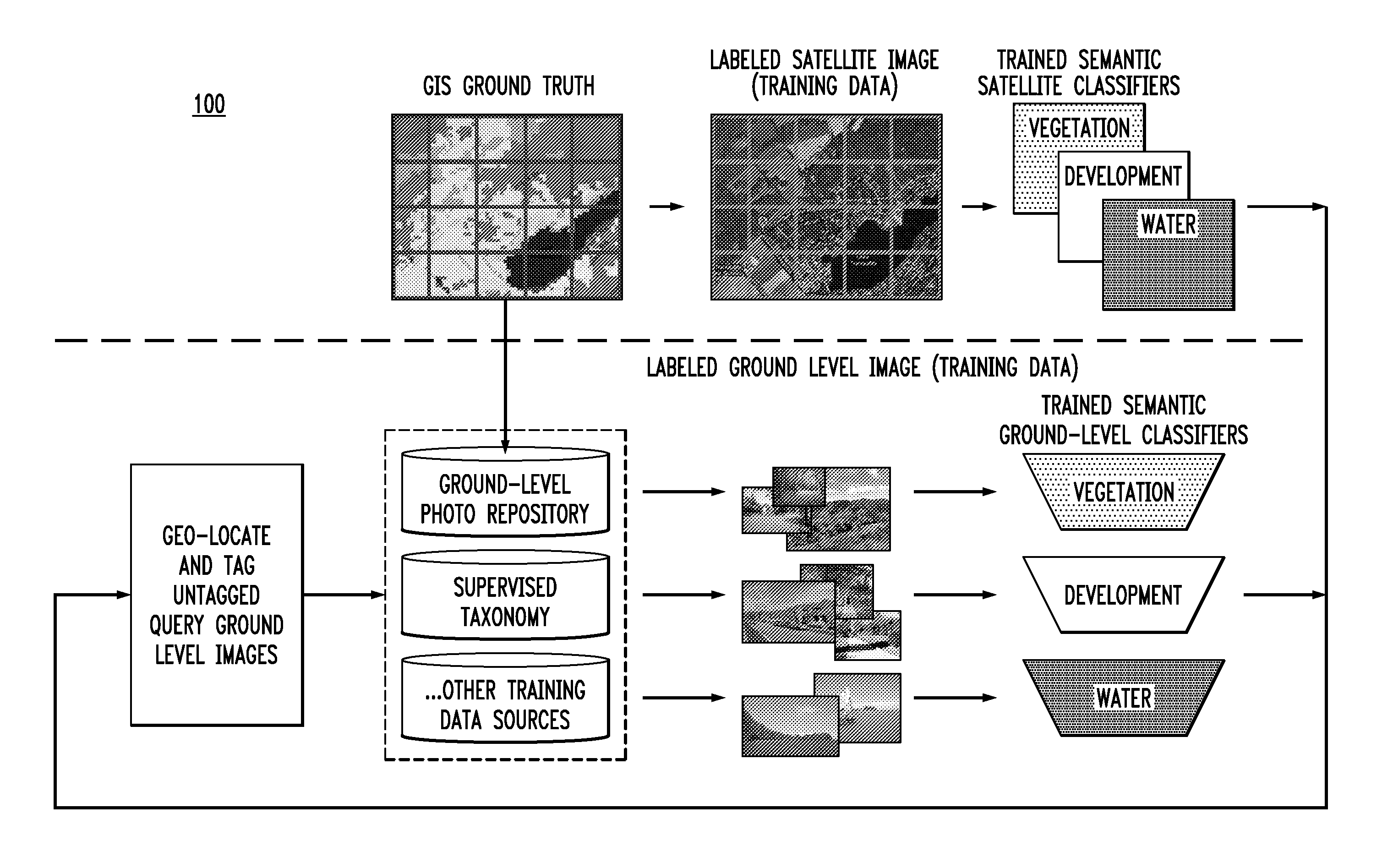

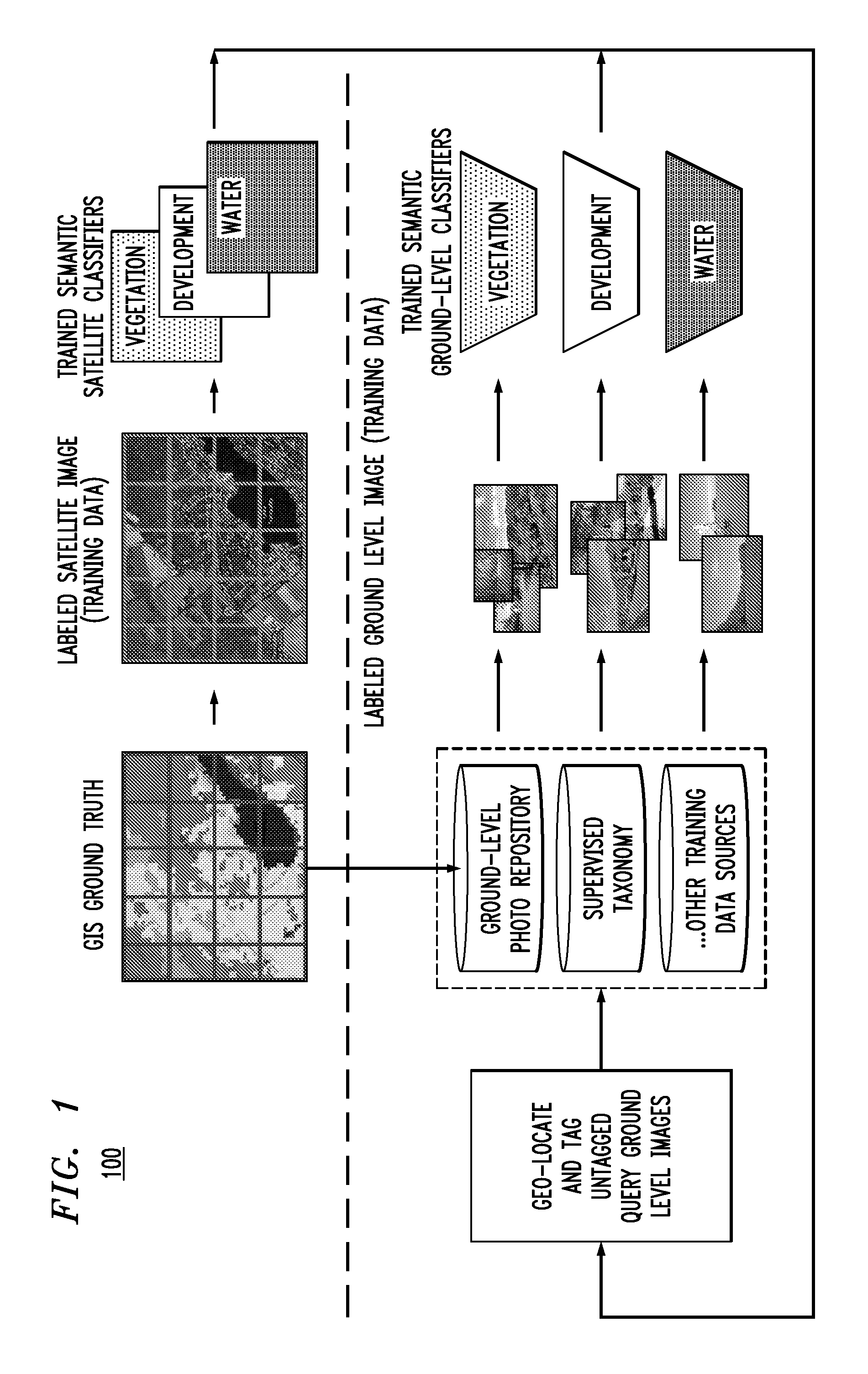

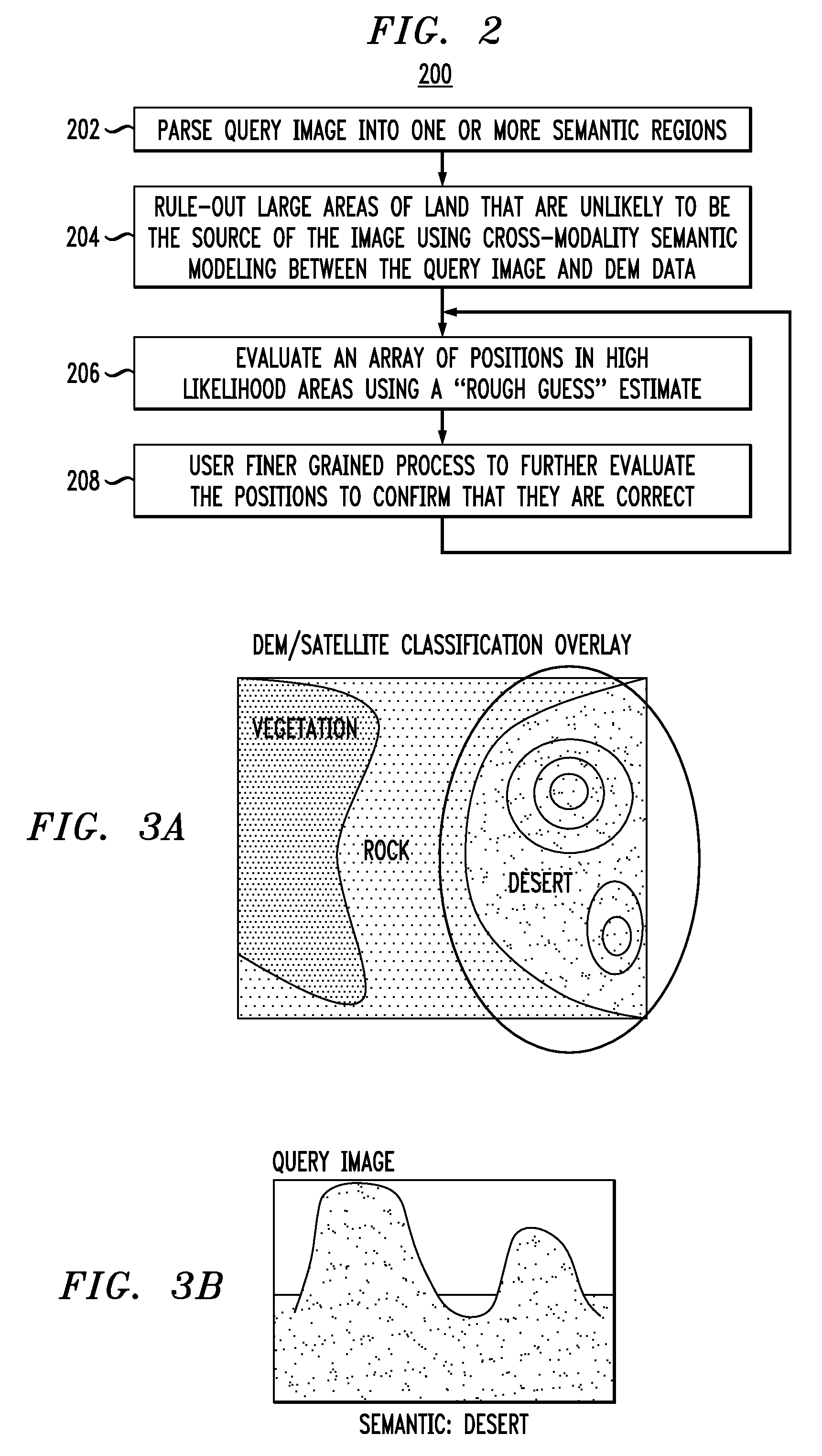

Techniques for Ground-Level Photo Geolocation Using Digital Elevation

Techniques for generating cross-modality semantic classifiers and using those cross-modality semantic classifiers for ground level photo geo-location using digital elevation are provided. In one aspect, a method for generating cross-modality semantic classifiers is provided. The method includes the steps of: (a) using Geographic Information Service (GIS) data to label satellite images; (b) using the satellite images labeled with the GIS data as training data to generate semantic classifiers for a satellite modality; (c) using the GIS data to label Global Positioning System (GPS) tagged ground level photos; (d) using the GPS tagged ground level photos labeled with the GIS data as training data to generate semantic classifiers for a ground level photo modality, wherein the semantic classifiers for the satellite modality and the ground level photo modality are the cross-modality semantic classifiers.

Owner:IBM CORP

Portable apparatus and method for decision support for real time automated multisensor data fusion and analysis

Active | US10346725B2 | Accurate review, Easy to detect, Force measurement, Scene recognition, Cross modality, Cloud base

The present invention encompasses a physical or virtual computational analysis, fusion, and correlation system that can automatically, systematically, and independently analyze sensor data collected (upstream) aboard, or streamed from, aerial vehicles and/or other fixed or mobile single- or multi-sensor platforms. The resulting data is fused and presented locally, remotely, or at ground stations in near real time as it is collected from local and/or remote sensors. Compared with existing systems, the invention improves detection and reduces false detections, using a portable apparatus or cloud-based computation and capabilities designed to reduce the role of the human operator in reviewing, fusing, and analyzing cross-modality sensor data collected from ISR (Intelligence, Surveillance and Reconnaissance) aerial vehicles or other fixed and mobile ISR platforms. The invention replaces human sensor data analysts with hardware and software, providing two significant advantages over current manual methods.

Owner:MCLOUD TECH USA INC

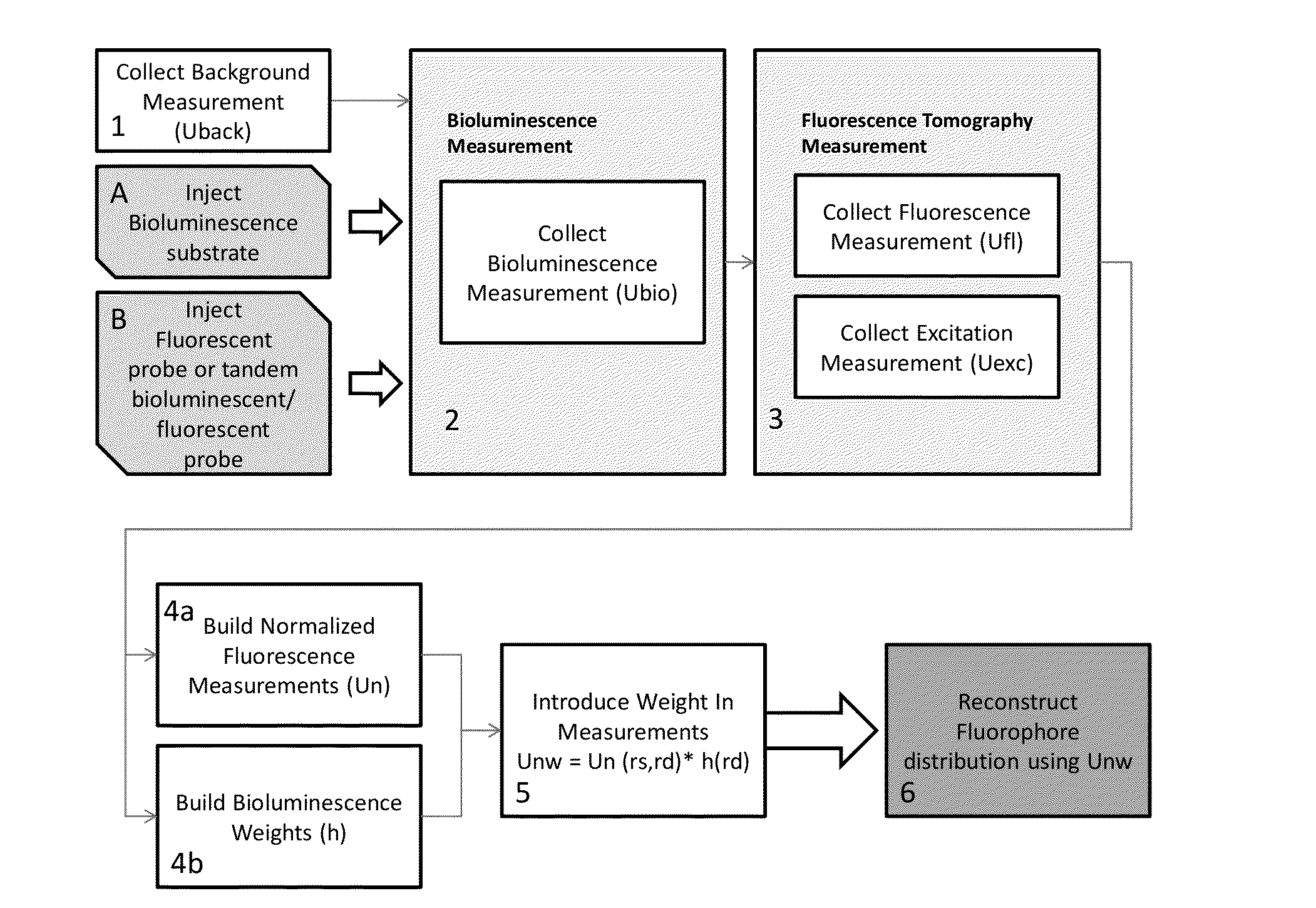

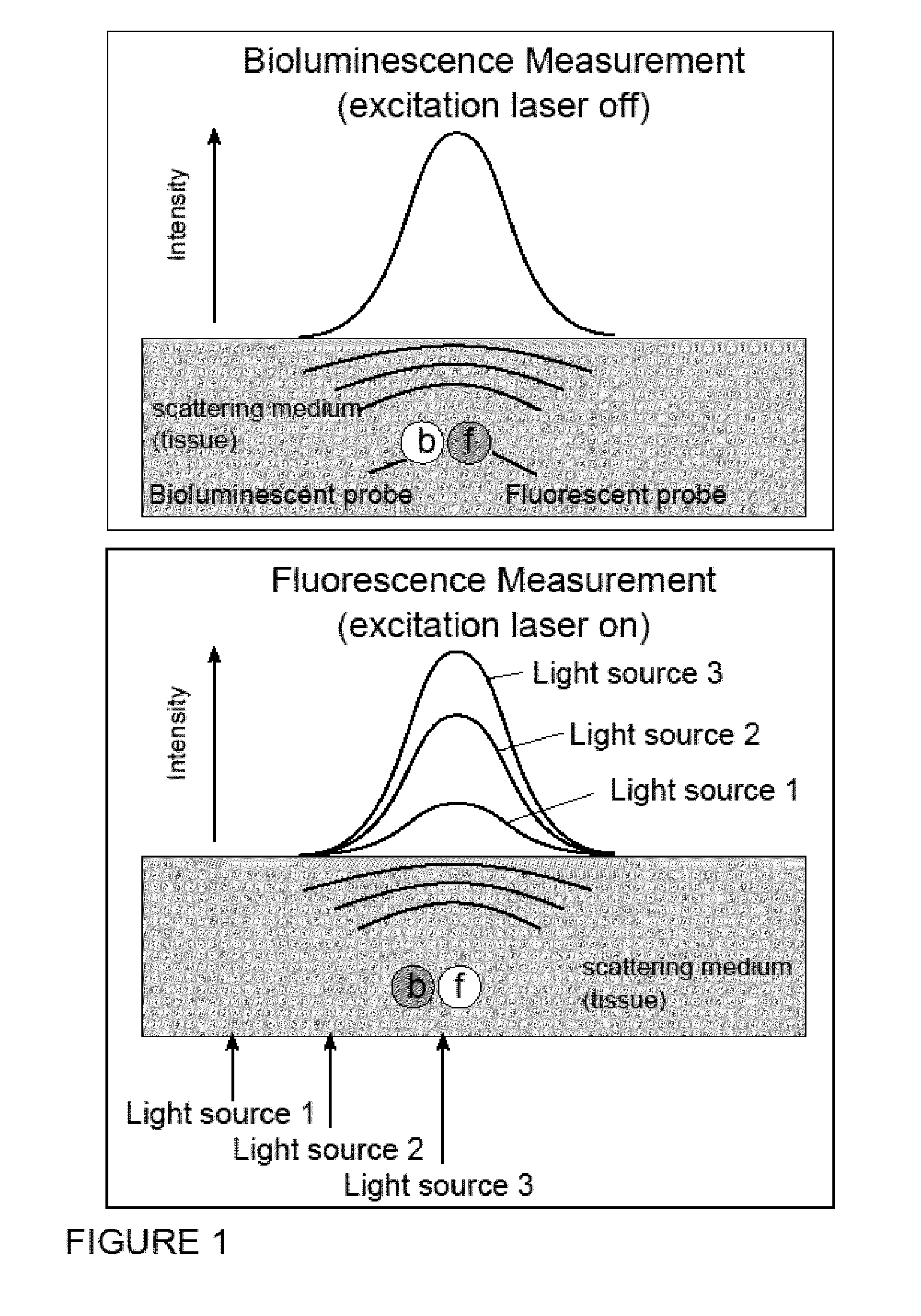

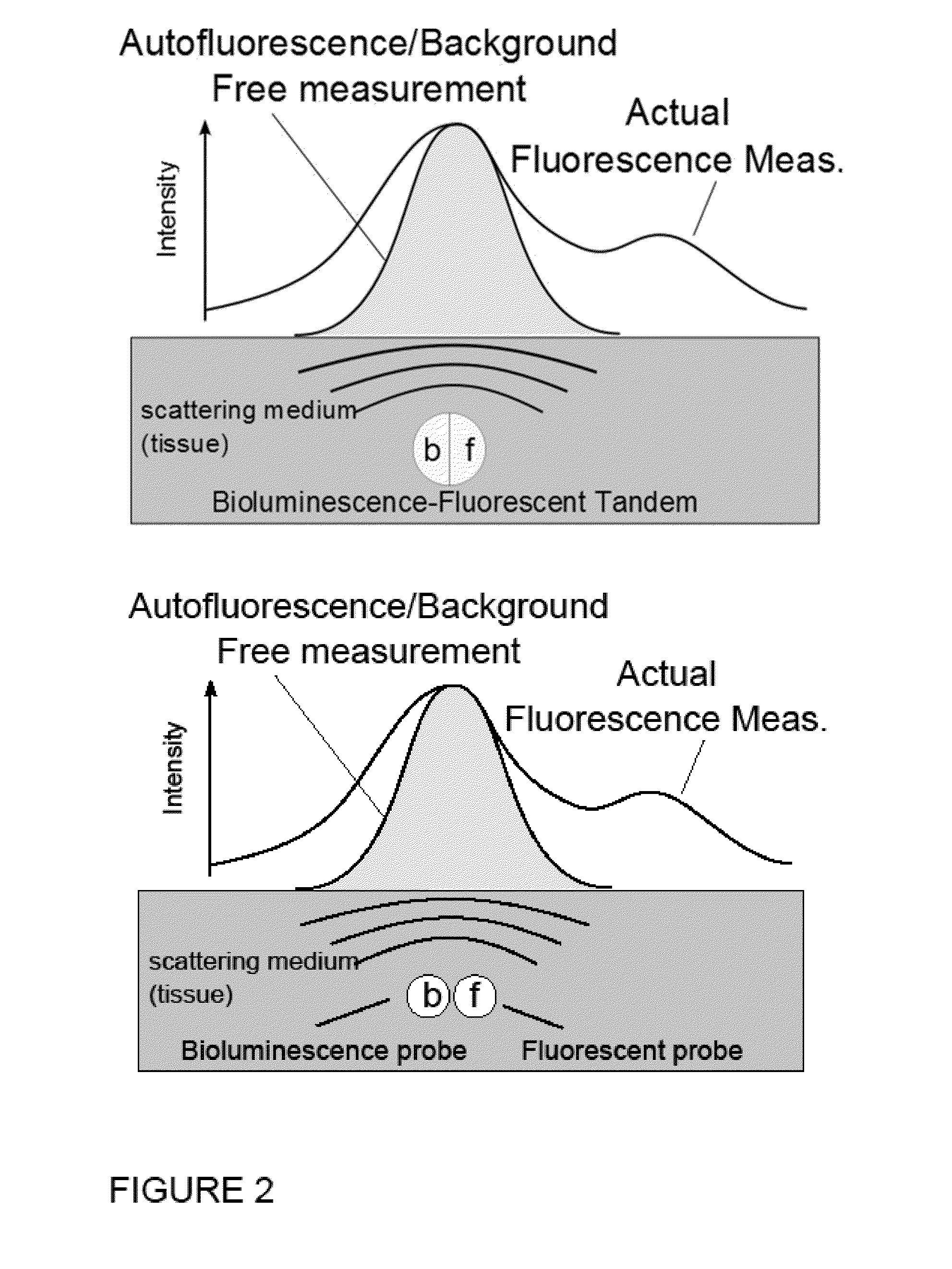

Systems, Methods, and Apparatus for Imaging of Diffuse Media Featuring Cross-Modality Weighting of Fluorescent and Bioluminescent Sources

Active | US20140105825A1 | Promote reconstruction, Ultrasonic/sonic/infrasonic diagnostics, Surgery, Cross modality, Mean free path

In certain embodiments, the invention relates to systems and methods for in vivo tomographic imaging of fluorescent probes and/or bioluminescent reporters, wherein a fluorescent probe and a bioluminescent reporter are spatially co-localized (e.g., located at distances equivalent to or smaller than the scattering mean free path of light) in a diffusive medium (e.g., biological tissue). Measurements obtained from bioluminescent and fluorescent modalities are combined per methods described herein.

Owner:VISEN MEDICAL INC

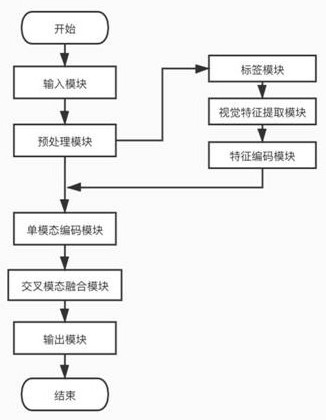

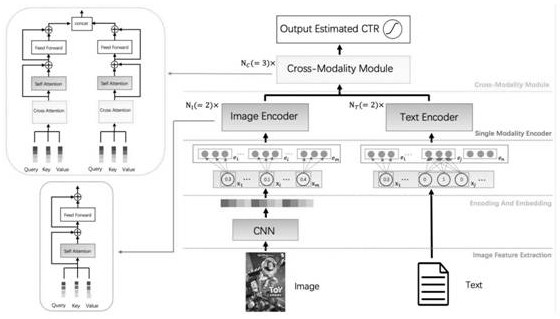

Movie recommendation system based on cross-modal fusion

Pending | CN113918764A | Rich useful information, Good predictive accuracy, Digital data information retrieval, Neural architectures, Cross modality, Code module

A movie recommendation system based on cross-modal fusion comprises an input module whose input includes feature information for each user in a user set, feature information for each movie in a movie set, interaction information between each user and each movie, and the poster image of each movie; the system further comprises a preprocessing module, a single-modality encoding module, a cross-modal fusion module, and an output module. On the one hand, visual information is extracted from movie posters with a deep neural network model, enriching the information available to the recommendation process; on the other hand, text and visual information interact and are fused through a cross-modal fusion algorithm, so that movies are recommended to users more effectively. Compared with traditional recommendation algorithms that use only single-modality information, do not let the features of the two modalities interact, and do not distinguish single-modality from multi-modality interaction patterns, the invention achieves better recommendation performance.

Owner:ZHEJIANG UNIV

Method and system for object centric stereo via cross modality validation in autonomous driving vehicles

Owner:PLUSAI INC

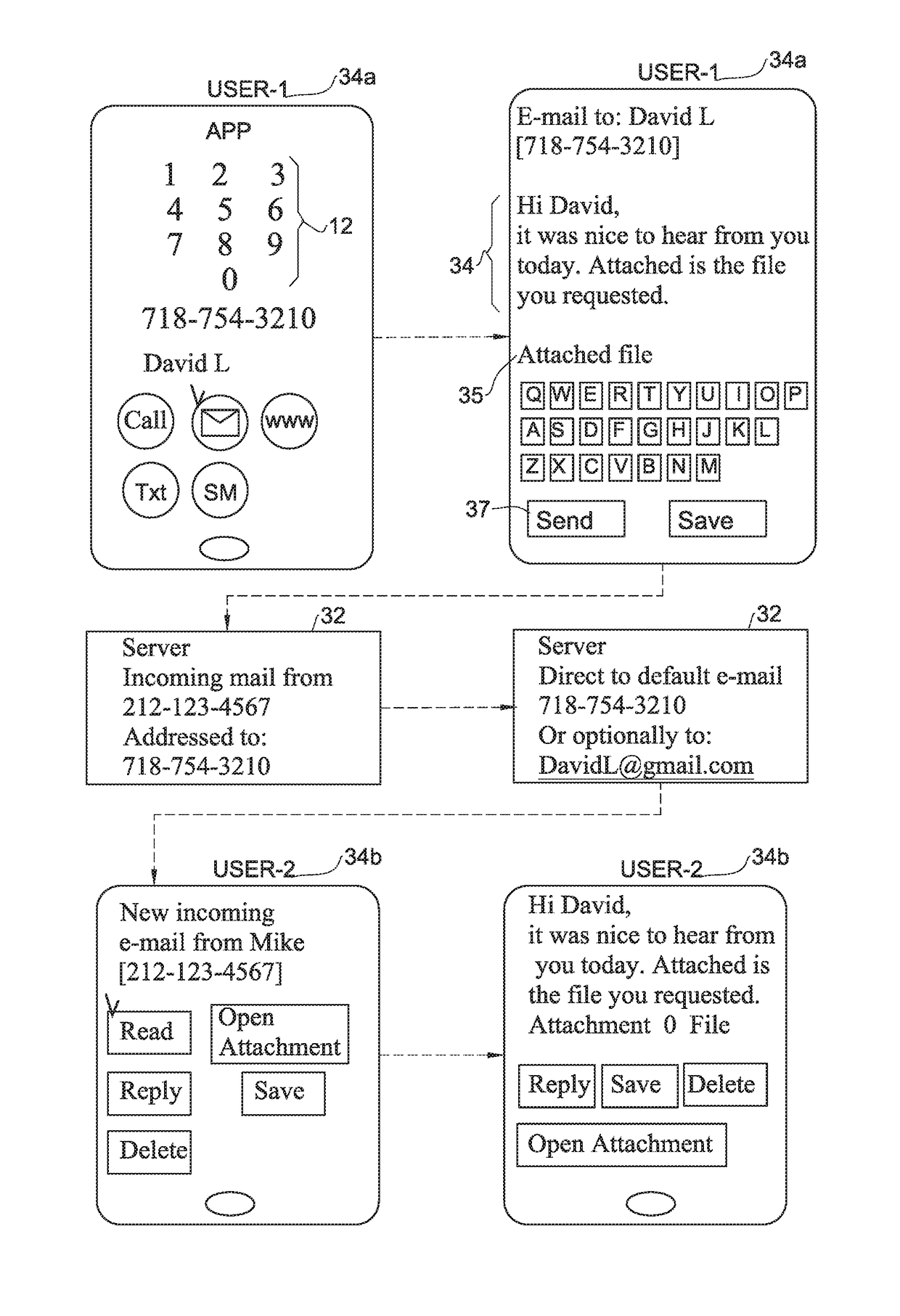

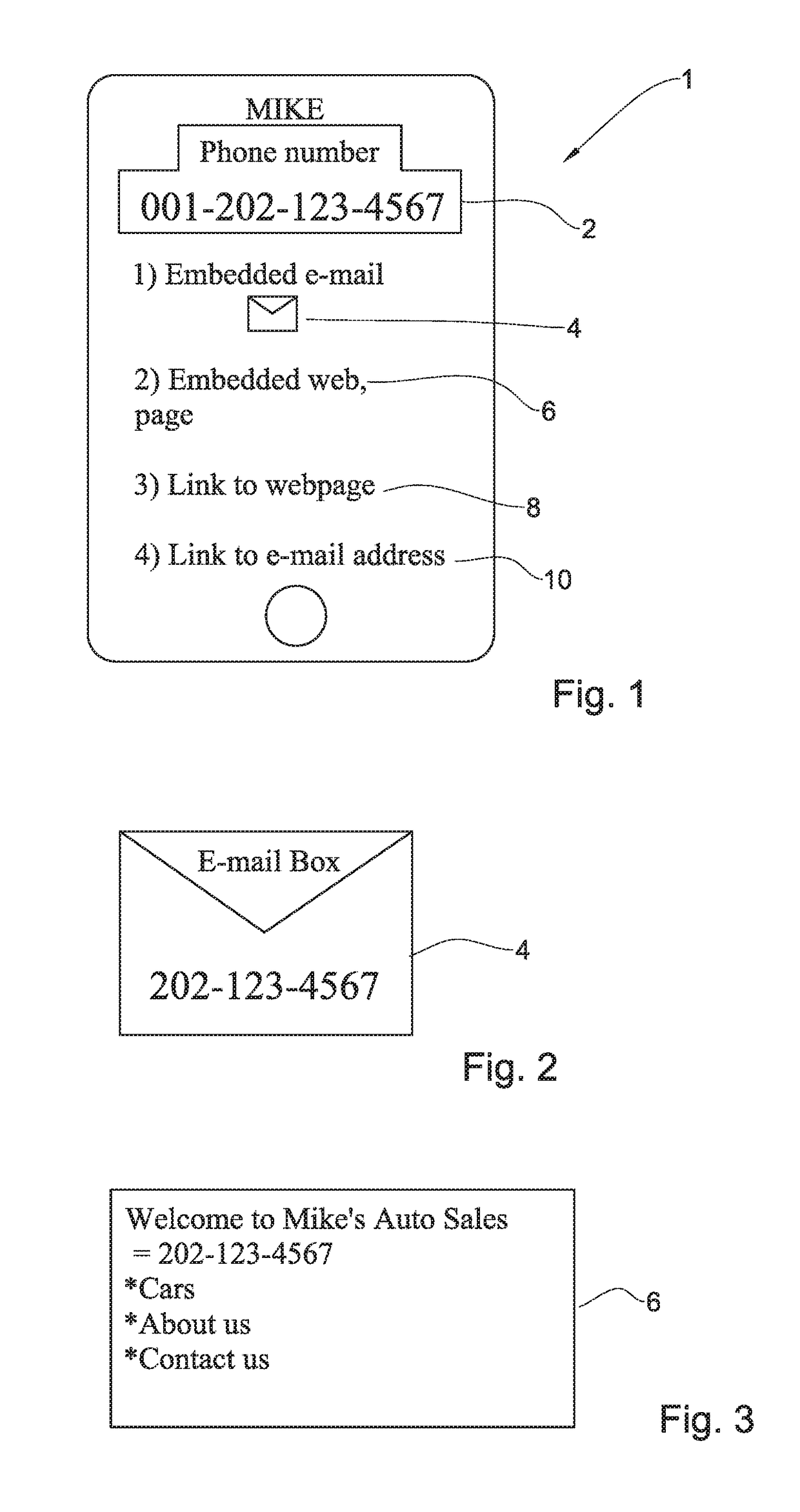

Systems and methods for cross-modality communication

Inactive | US20170339264A1 | Substation equipment, Office automation, Diagnostic Radiology Modality, Cross modality

A multi-modality electronic communication method for communicating via end-user-selected communication modalities. A server has access to one or more memory tables that store, for each of a multiplicity of end users, the destinations at which that end user receives communications via a corresponding plurality of communication modalities. The method includes: receiving one or more communications from one or more Tx client processors serving a Tx end user, each communication including content the Tx end user wishes to send to an Rx end user and, when a first communication modality is employed, an indication of a known destination of the Rx end user; extracting the known destination from the communication and retrieving from the table a different destination at which the Rx end user receives communications via a second communication modality; and sending the content to the Rx end user at the retrieved destination via the second communication modality.

Owner:STEEL NIR
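
The central server-side step above, looking up a different destination for the same recipient in a second modality, can be sketched with an in-memory dictionary standing in for the memory table. The table layout, the modality names, the sample destinations, and the `route` function are all hypothetical; this version simply raises `KeyError` when the recipient or the requested modality is unknown.

```python
# Hypothetical per-user table: end user -> {modality: destination}.
DESTINATIONS = {
    "alice": {"email": "alice@example.com", "sms": "+15550100"},
    "bob": {"email": "bob@example.com", "chat": "bob#42"},
}


def route(known_destination, first_modality, second_modality, table=DESTINATIONS):
    """Map a destination known in one modality to the same user's destination in another.

    Returns (rx_user, retrieved_destination).
    Raises KeyError if no user owns known_destination in first_modality,
    or if that user has no destination registered for second_modality.
    """
    for user, dests in table.items():
        if dests.get(first_modality) == known_destination:
            return user, dests[second_modality]
    raise KeyError(known_destination)


# The sender addressed Alice by e-mail; deliver the content by SMS instead.
print(route("alice@example.com", "email", "sms"))  # ('alice', '+15550100')
```

A linear scan keeps the sketch short; a production table would also index destinations in reverse so the Rx user can be found in constant time.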

Cross-time and cross-modality medical diagnosis

A cross-time and cross-modality inspection method for medical image diagnosis. A first set of medical images of a subject is accessed, wherein the first set is captured at a first time period by a first modality. A second set of medical images of the subject is accessed, wherein the second set is captured at a second time period by a second modality. Each of the first and second sets comprises a plurality of medical images. Image registration is performed by mapping the medical images of the first and second sets to predetermined spatial coordinates. A cross-time image mapping of the first and second sets is performed. Means are provided for interactive cross-time medical image analysis.

Owner:CARESTREAM HEALTH INC

Techniques for ground-level photo geolocation using digital elevation

Active | US9165217B2 | Character and pattern recognition, Special data processing applications, Diagnostic Radiology Modality, Cross modality

Owner:IBM CORP

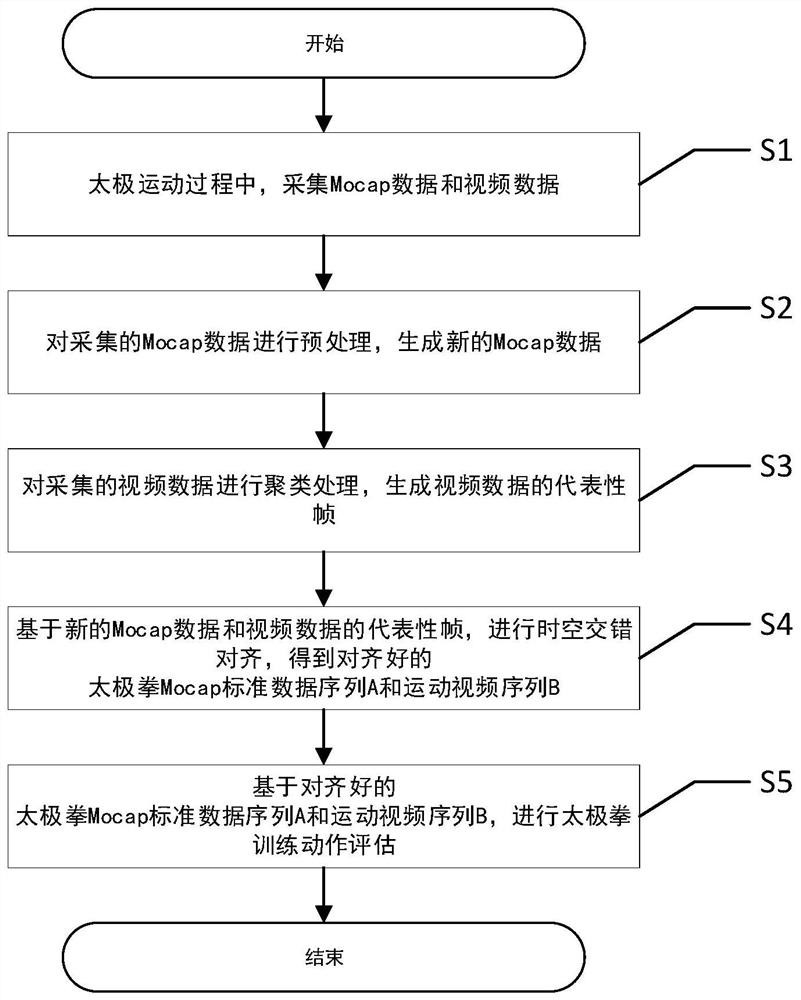

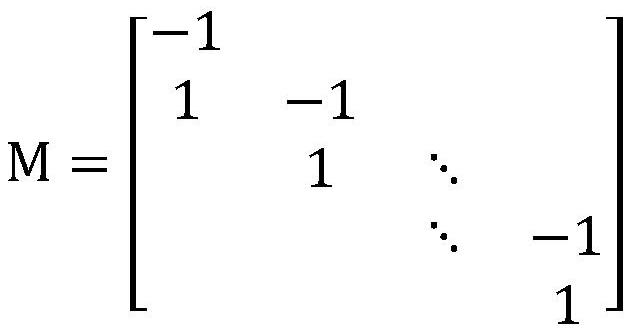

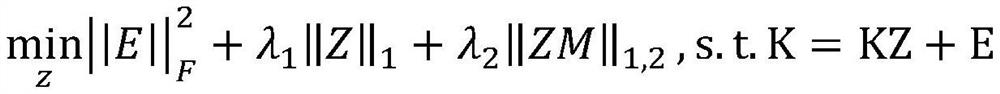

Cross-modal evaluation method for shadowboxing training actions

Active | CN113591726A | Improve refactoring effect, Reduce losses, Character and pattern recognition, Neural architectures, Cross modality, Medicine

The invention discloses a cross-modal evaluation method for shadowboxing (Tai Chi) training actions. To address the cross-modal sequence registration problem for original shadowboxing training actions, whose modalities are related nonlinearly, it provides a method for aligning a video sequence with a motion capture sequence. Tai Chi motion sequences of different lengths and dimensions are first denoised and reconstructed, and staged spatio-temporal registration is then carried out to resolve motion differences between subjects. This achieves spatio-temporal alignment of long cross-modal Tai Chi sequences and improves the accuracy of evaluating how completely Tai Chi moves are performed.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
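
The abstract does not name its registration algorithm. Dynamic time warping (DTW) is a standard, swapped-in technique for aligning two sequences of different lengths under a nonlinear time correspondence, which is the core difficulty described above; the minimal sketch below is not the patented staged method. The toy one-dimensional sequences are hypothetical; real video and motion-capture frames would be feature vectors compared with a vector distance passed as `dist` (for example, `math.dist`).

```python
def dtw(a, b, dist=lambda p, q: abs(p - q)):
    """Dynamic time warping between sequences a and b.

    Returns (total_cost, path), where path is a list of (i, j) index pairs
    aligning a[i] with b[j], running from (0, 0) to (len(a) - 1, len(b) - 1).
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end, always stepping to the cheapest predecessor.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    path.reverse()
    return cost[n][m], path


# A short sequence against a longer, time-stretched version of the same motion.
total, path = dtw([0, 1, 2, 3], [0, 0, 1, 2, 2, 3])
print(total)  # 0.0
```

The total cost is zero here because every frame of the longer sequence can be matched to an equal value in the shorter one; the path records which frames were paired.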

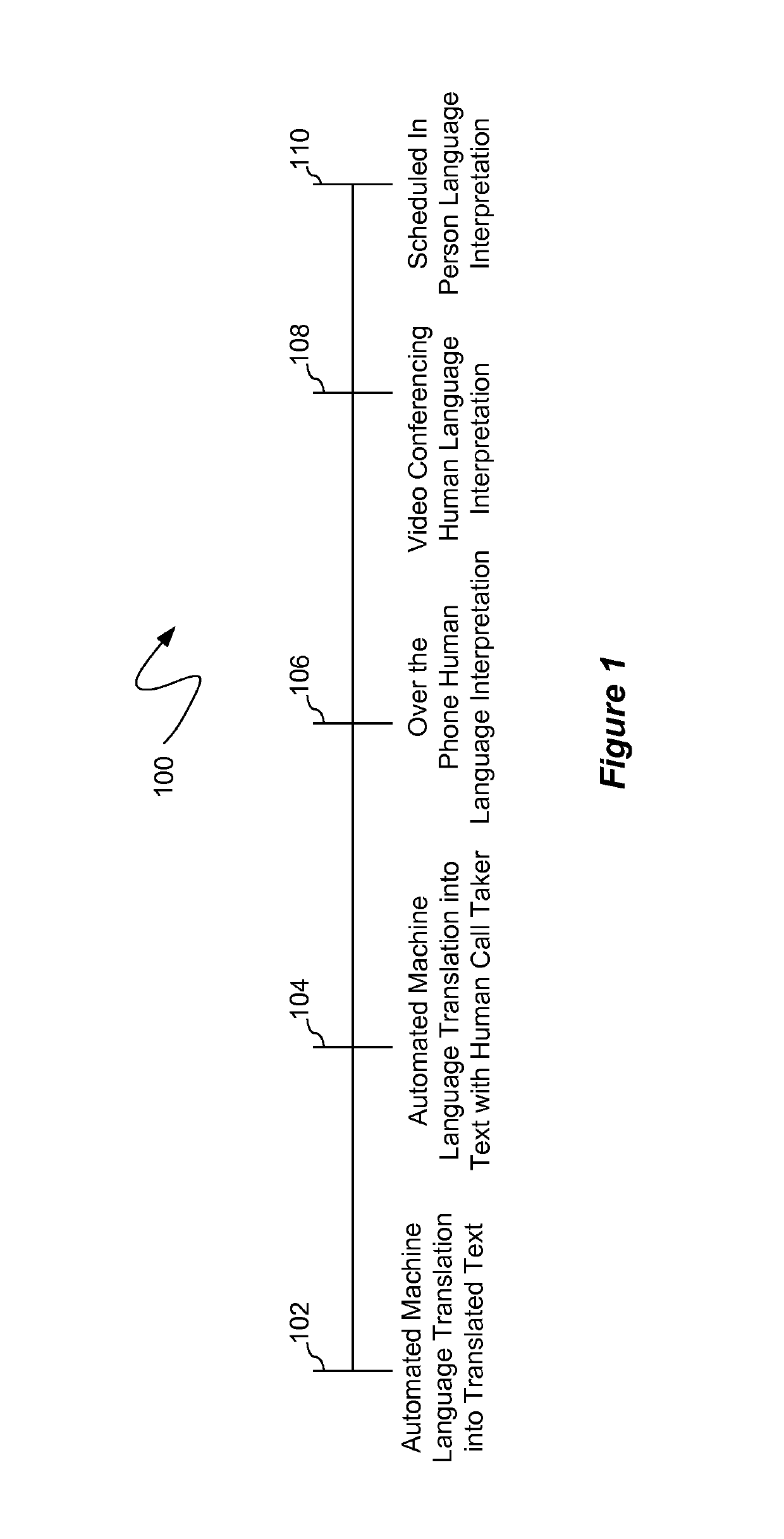

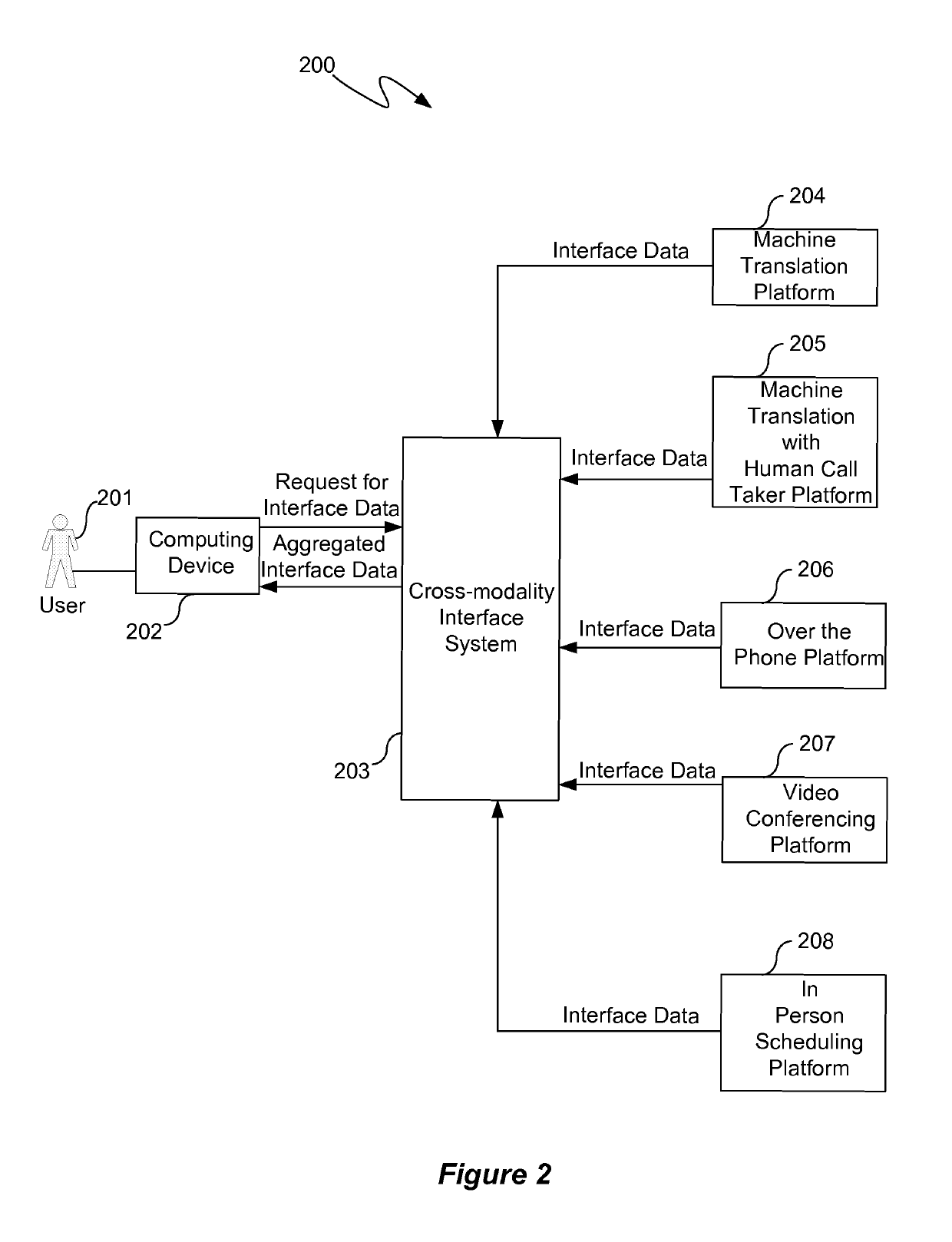

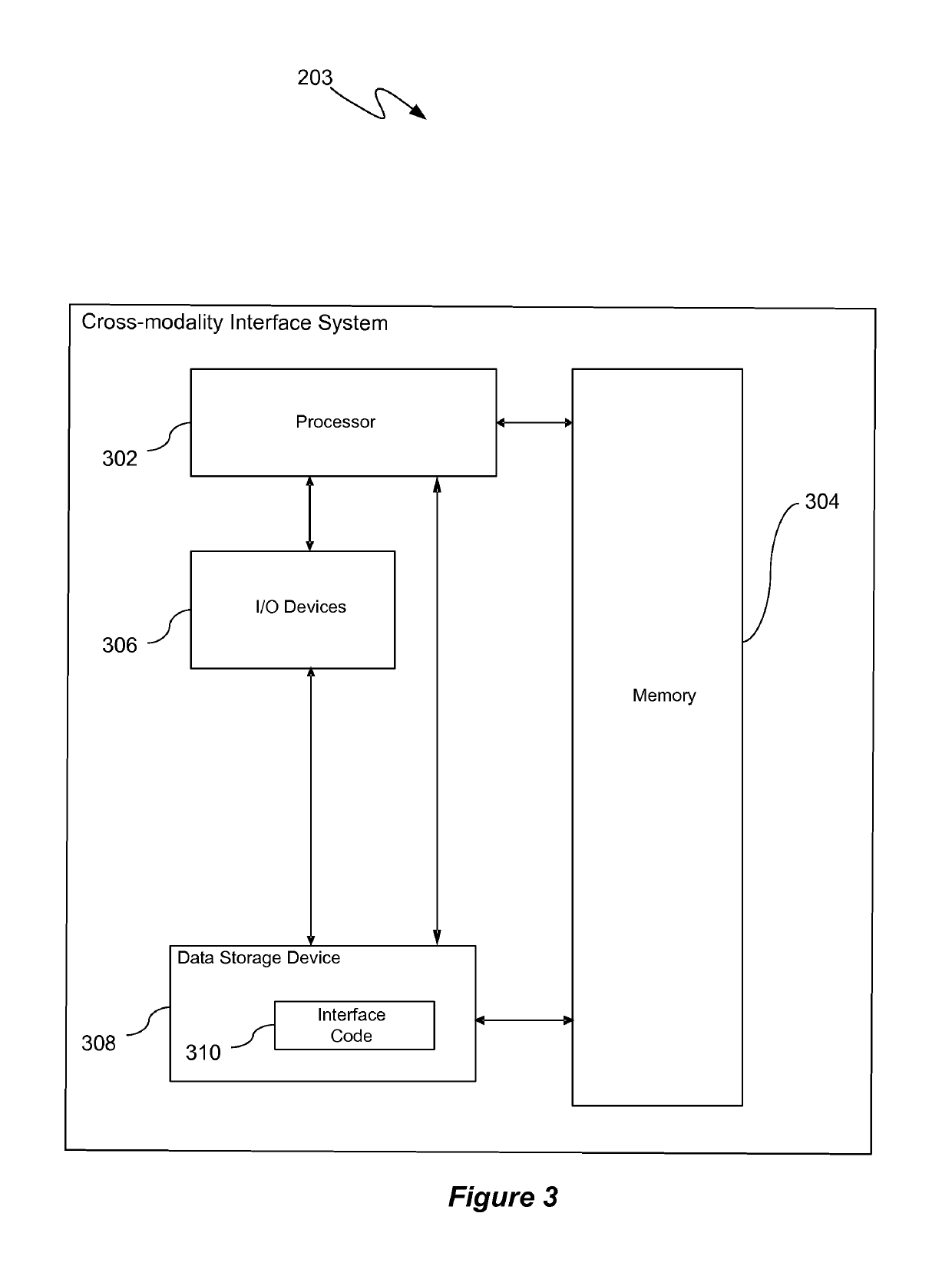

Multi-channel cross-modality system for providing language interpretation/translation services

Active | US10303776B2 | Natural language translation, Special data processing applications, Cross modality, Translation service

A computer-implemented language interpretation/translation platform is provided so that a computerized interface can be generated to display a variety of language interpretation/translation services. The platform includes a receiver that receives a request for computerized interface data from a computing device and receives interface data from a plurality of distinct language interpretation/translation platforms, each of which provides a language interpretation/translation service according to a distinct modality. The platform includes a processor that aggregates, in real time, the interface data from the distinct platforms into a computerized interface format, and a transmitter that sends the computerized interface format to the computing device for display, so that the computing device receives a selection of an optimal language interpretation/translation service.

Owner:LANGUAGE LINE SERVICES

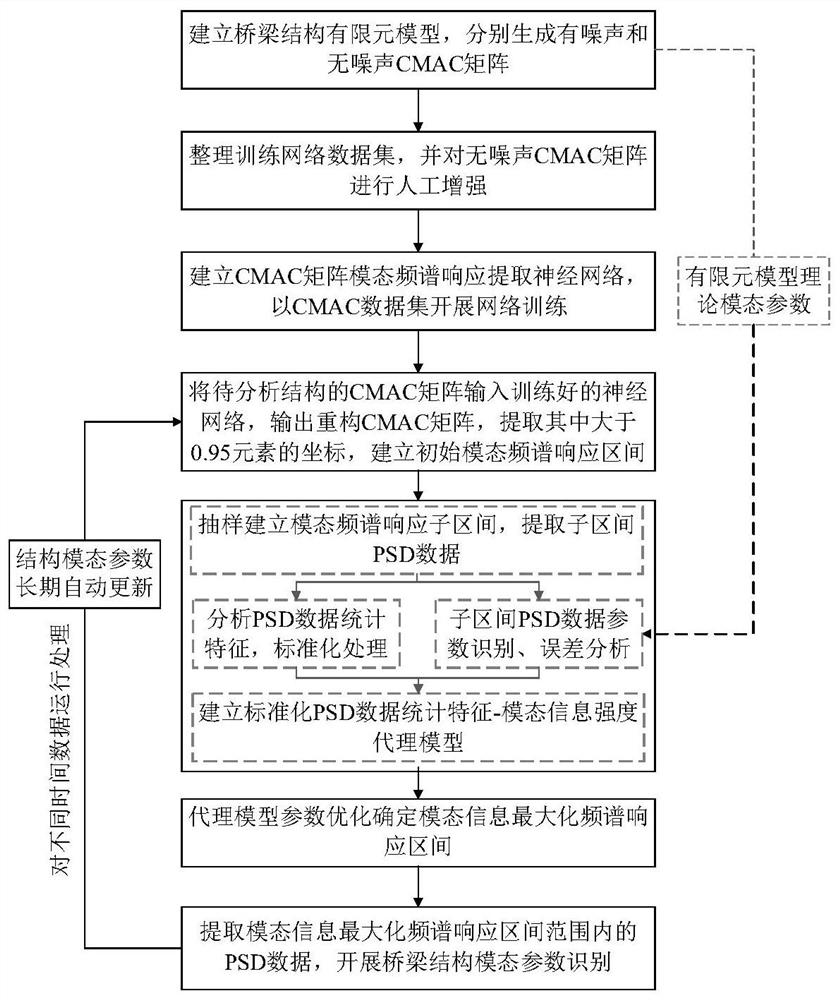

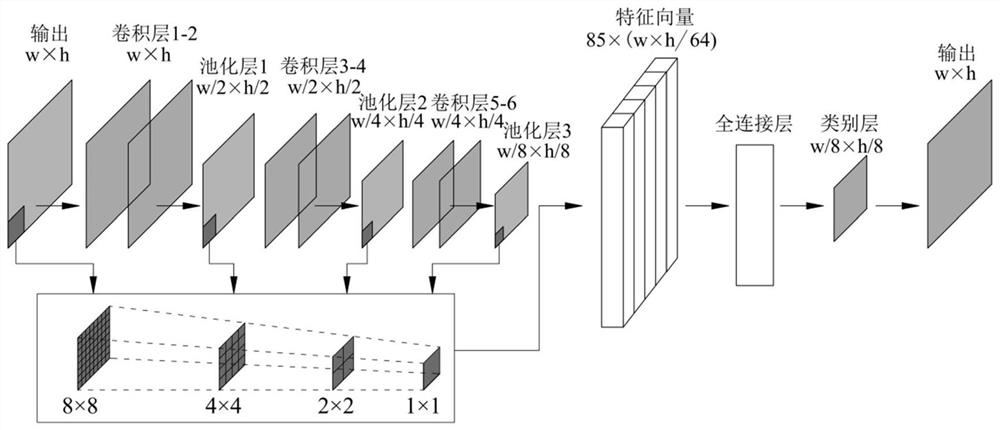

Bridge modal parameter intelligent updating method based on cross-modal confidence criterion matrix

Active | CN113159282A | Full display of modal response information, Easy to identify, Neural architectures, Neural learning methods, Cross modality, Element model

The invention discloses an intelligent bridge modal parameter updating method based on a cross-modal confidence criterion (CMAC) matrix. Taking the CMAC matrix as a basis, an adaptive convolution layer and a fully connected classification layer are combined into a neural network for intelligent extraction of modal spectral responses, which performs classification-based reconstruction of the CMAC matrix; the physical modal spectral response intervals of the bridge structure are then extracted. A surrogate model relating the power spectral density of bridge vibration data to modal information intensity is established from the initial modal spectral response intervals and the theoretical modal parameters of a finite element model, so that the interval of maximum modal information spectral response is determined and the modal parameters of the bridge structure are identified accordingly. By combining the CMAC matrix with an adaptive convolutional neural network for intelligent analysis and identification of structural modal parameters, the method trains efficiently, extracts the responses of weakly excited modes well, and can be applied to bridge structural health monitoring for automatic updating of modal parameters.

Owner:SOUTHEAST UNIV
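
Here "modal" refers to structural vibration modes rather than sensing modalities. The abstract does not define its CMAC matrix, so the sketch below assumes it is analogous to the widely used Modal Assurance Criterion (MAC), which for real mode-shape vectors phi and psi is (phi . psi)^2 / ((phi . phi)(psi . psi)): 1 for parallel shapes and 0 for orthogonal ones. The mode shapes are hypothetical three-point examples, not bridge data.

```python
def dot(u, v):
    """Inner product of two equal-length real vectors."""
    return sum(a * b for a, b in zip(u, v))


def mac(phi, psi):
    """Modal Assurance Criterion between two real mode-shape vectors (0 to 1)."""
    return dot(phi, psi) ** 2 / (dot(phi, phi) * dot(psi, psi))


def mac_matrix(modes_a, modes_b):
    """MAC value for every pair (mode i of set A, mode j of set B)."""
    return [[mac(p, q) for q in modes_b] for p in modes_a]


# Hypothetical measured mode shapes versus finite-element predictions.
measured = [[1, 2, 3], [1, 0, -1]]
finite_element = [[2, 4, 6], [1, 0, -1.0]]
for row in mac_matrix(measured, finite_element):
    print([round(v, 4) for v in row])
# [1.0, 0.1429]
# [0.1429, 1.0]
```

A strongly diagonal matrix like this one indicates that each measured mode pairs cleanly with one predicted mode; MAC ignores scaling, which is why [1, 2, 3] and [2, 4, 6] score exactly 1.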

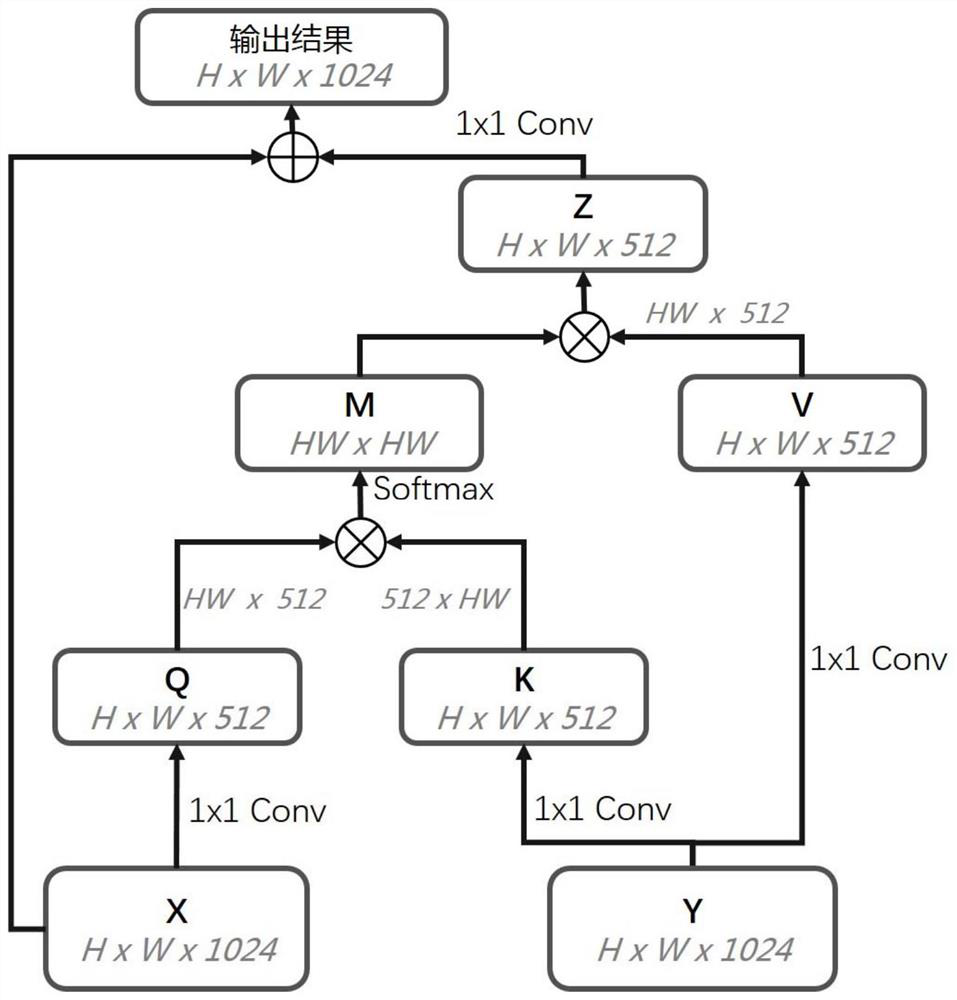

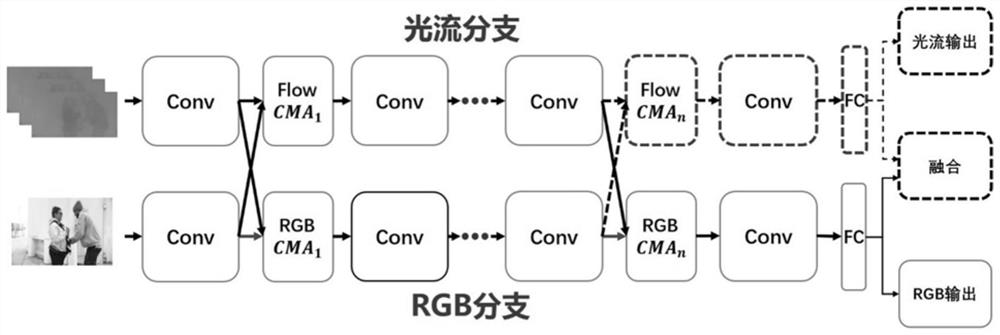

A two-stream video classification method and device based on cross-modal attention mechanism

Active | CN110188239B | Enhanced interaction, Take advantage of, Video data querying, Video data clustering/classification, Cross modality, Algorithm

The invention relates to a two-stream video classification method and device based on a cross-modal attention mechanism. Unlike the traditional two-stream method, the invention fuses the information of two (or more) modalities before predicting the result, which makes the fusion more efficient and more thorough. Because the information interaction happens at an earlier stage, each single branch already carries the important information of the other branch in its later stages: the accuracy of a single branch equals or even exceeds that of the traditional two-stream method, while a single branch has far fewer parameters. Compared with the non-local neural network, the attention module designed in the invention can operate across modalities instead of applying attention only within a single modality. When the two modalities are the same, the proposed method is equivalent to a non-local neural network.

Owner:PEKING UNIV +2
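The cross-modal attention described above can be illustrated with an embedded-Gaussian non-local block in which queries come from one modality and keys/values from the other. This is a minimal sketch, not the patented module: the weight names (`w_theta`, `w_phi`, `w_g`, `w_z`), the flattening of space-time positions into rows, and the RGB/optical-flow pairing are assumptions. It does reproduce the property stated in the abstract: passing the same modality twice yields an ordinary non-local block.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_attention(x_q, x_kv, w_theta, w_phi, w_g, w_z):
    """Non-local style attention across modalities.

    x_q:  (n_q, c) features of the modality being enhanced (e.g. RGB positions)
    x_kv: (n_kv, c) features of the other modality (e.g. optical-flow positions)
    Returns (n_q, c): x_q plus a residual gathered from x_kv.
    """
    theta = x_q @ w_theta                     # queries from modality A, (n_q, d)
    phi = x_kv @ w_phi                        # keys from modality B, (n_kv, d)
    g = x_kv @ w_g                            # values from modality B, (n_kv, d)
    attn = softmax(theta @ phi.T, axis=1)     # each query attends over all B positions
    return x_q + (attn @ g) @ w_z             # project back to c channels, add residual


def non_local(x, *weights):
    """Plain (single-modality) non-local block: both inputs are the same tensor."""
    return cross_modal_attention(x, x, *weights)


# Usage with random features for 16 flattened space-time positions.
rng = np.random.default_rng(0)
c, d = 8, 4
rgb = rng.standard_normal((16, c))
flow = rng.standard_normal((16, c))
weights = [rng.standard_normal((c, d)) for _ in range(3)] + [rng.standard_normal((d, c))]
rgb_enhanced = cross_modal_attention(rgb, flow, *weights)
```

In a two-stream network, a symmetric call with the roles of `rgb` and `flow` swapped would enhance the other branch in the same way.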

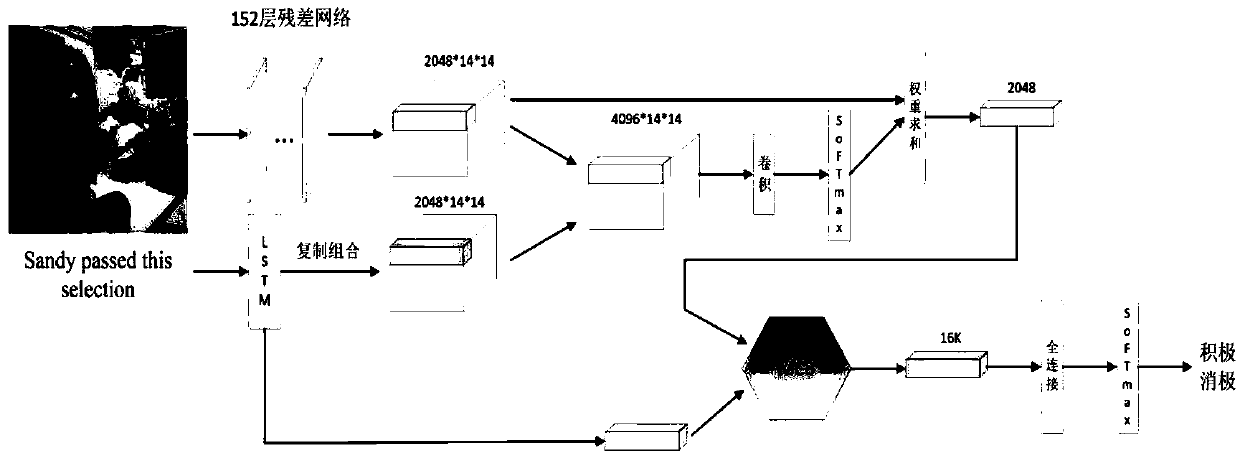

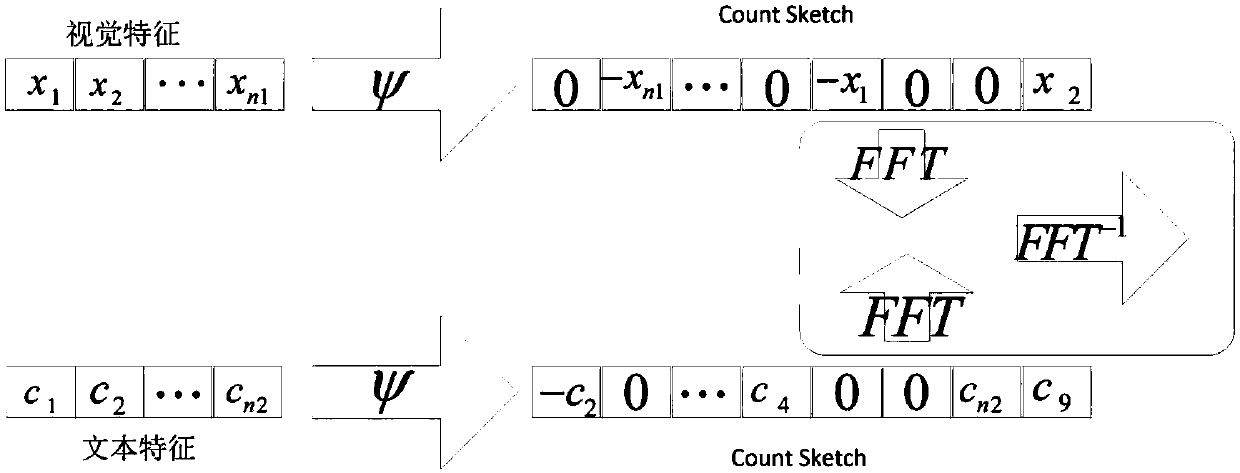

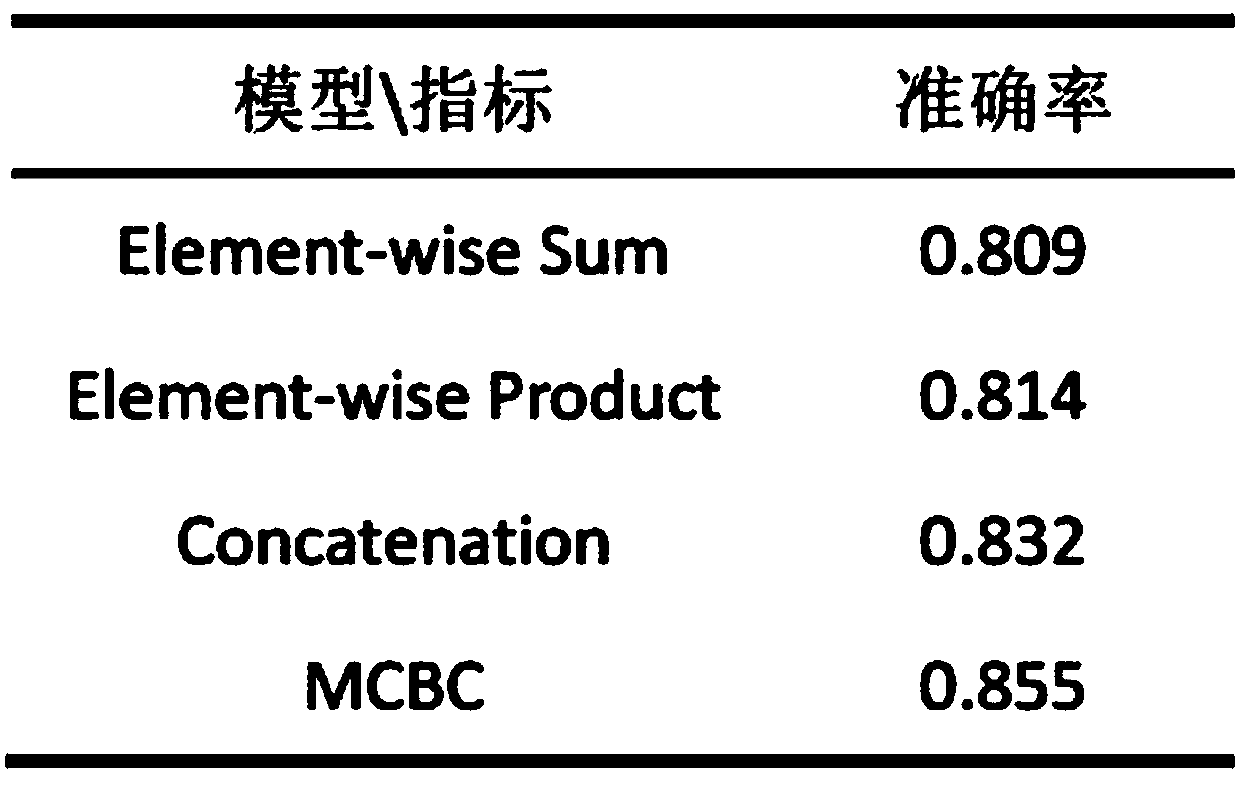

A Cross-Modal Sentiment Classification Method Based on Compact Bilinear Fusion of Image and Text

Active | CN107066583B | Frequent interaction | Good image characteristics | Character and pattern recognition | Special data processing applications | Cross modality | Diagnostic Radiology Modality

The present invention provides an image-text cross-modal emotion classification method based on compact bilinear fusion, comprising the following six steps: (1) extraction of the image feature representation; (2) extraction of the text feature representation; (3) soft attention map generation; (4) generation of the image attention feature representation; (5) fusion of the image attention feature representation and the text feature representation with a multimodal compact bilinear fusion algorithm; (6) image-text emotion classification. Using the soft attention map and the multimodal compact bilinear fusion algorithm can effectively improve the accuracy of emotion classification.

Owner:HUAQIAO UNIVERSITY
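Step (5) above fuses image and text features with multimodal compact bilinear fusion. The abstract does not give the algorithm; the sketch below assumes the count-sketch-and-FFT (Tensor Sketch) construction commonly used for multimodal compact bilinear pooling. Each feature vector is projected with a fixed random hash and sign, and the circular convolution of the two sketches, computed in the frequency domain, approximates the flattened outer product of the two features in only `d` dimensions. The feature sizes and `d` are illustrative.

```python
import numpy as np


def count_sketch(x: np.ndarray, h: np.ndarray, s: np.ndarray, d: int) -> np.ndarray:
    """Project x (length n) to length d: y[h[i]] += s[i] * x[i]."""
    y = np.zeros(d)
    np.add.at(y, h, s * x)
    return y


def compact_bilinear(x, y, hx, sx, hy, sy, d):
    """Tensor Sketch approximation of the outer product x y^T, in d dimensions.

    The circular convolution of the two count sketches is computed as an
    element-wise product in the frequency domain.
    """
    fx = np.fft.rfft(count_sketch(x, hx, sx, d))
    fy = np.fft.rfft(count_sketch(y, hy, sy, d))
    return np.fft.irfft(fx * fy, n=d)


# Usage: fixed random hashes and signs are drawn once and reused for every sample.
rng = np.random.default_rng(0)
n_img, n_txt, d = 6, 5, 16
hx, hy = rng.integers(0, d, size=n_img), rng.integers(0, d, size=n_txt)
sx, sy = rng.choice([-1.0, 1.0], size=n_img), rng.choice([-1.0, 1.0], size=n_txt)
img_feat = rng.standard_normal(n_img)   # stand-in for the image attention features
txt_feat = rng.standard_normal(n_txt)   # stand-in for the text features
fused = compact_bilinear(img_feat, txt_feat, hx, sx, hy, sy, d)
```

The FFT route costs O(d log d) per pair instead of materializing the n_img × n_txt outer product, which is the usual motivation for compact bilinear pooling.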

Cross-modal image-label correlation learning method for social images

Active | CN104899253B | Improve experience | Improve search relevance | Metadata still image retrieval | Special data processing applications | Cross modality | Data set

The invention belongs to the technical field of cross-media relevance learning and specifically relates to a cross-modal image-label relevance learning method for social images. It comprises three algorithms: multimodal feature fusion, bidirectional relevance measurement, and cross-modal relevance fusion. The whole social image set is described with a hypergraph as the basic model; images and labels are each mapped to hypergraph nodes, an image-oriented relevance and a label-oriented relevance are obtained, and the two are combined with a cross-modal fusion method to obtain a better relevance. Compared with traditional methods, the method offers higher accuracy and better adaptivity. It supports efficient retrieval over large-scale, weakly labeled social image collections by taking multimodal semantic information into account, which improves retrieval relevance and user experience, and it has application value in cross-media information retrieval.

Owner:FUDAN UNIV
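The abstract above describes images and labels as nodes of one hypergraph, relevance measured in both directions, and a final fusion, but gives no formulas. The sketch below substitutes the standard normalized hypergraph ranking of Zhou, Huang and Schölkopf, f = (I − αΘ)⁻¹y with Θ = Dv^(-1/2) H W De^(-1) Hᵀ Dv^(-1/2), and a simple linear fusion with a hypothetical weight `lam`. The toy incidence matrix, the per-direction normalization, and `alpha` are likewise assumptions for illustration only.

```python
import numpy as np


def hypergraph_operator(H: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Normalized hypergraph adjacency Dv^-1/2 H W De^-1 H^T Dv^-1/2.

    H: (n_vertices, n_edges) incidence matrix, H[v, e] = 1 if vertex v is on edge e
    w: (n_edges,) hyperedge weights; every vertex must lie on at least one edge
    """
    dv = H @ w                          # weighted vertex degrees
    de = H.sum(axis=0)                  # hyperedge degrees (vertices per edge)
    theta = (H * (w / de)) @ H.T
    inv_sqrt_dv = 1.0 / np.sqrt(dv)
    return inv_sqrt_dv[:, None] * theta * inv_sqrt_dv[None, :]


def rank(H, w, query, alpha=0.9):
    """Propagate relevance from a query vector: f = (I - alpha * Theta)^-1 y."""
    n = H.shape[0]
    return np.linalg.solve(np.eye(n) - alpha * hypergraph_operator(H, w), query)


def onehot(i: int, n: int) -> np.ndarray:
    y = np.zeros(n)
    y[i] = 1.0
    return y


def fuse(r_image, r_label, lam=0.5):
    """Hypothetical linear fusion of the two relevance directions."""
    return lam * r_image + (1.0 - lam) * r_label


# Toy social-image hypergraph: vertices 0-2 are images, 3-5 are labels.
IMAGES, LABELS = [0, 1, 2], [3, 4, 5]
H = np.array([
    # e0 e1 e2 e3   (e0-e2: an image with its user labels; e3: visually similar images)
    [1, 0, 0, 1],   # image 0
    [0, 1, 0, 1],   # image 1
    [0, 0, 1, 0],   # image 2
    [1, 0, 0, 0],   # label 3
    [1, 1, 0, 0],   # label 4
    [0, 0, 1, 0],   # label 5
], dtype=float)
w = np.ones(H.shape[1])
n = H.shape[0]

# Image-oriented relevance: propagate from image 0, normalize over the labels.
s = rank(H, w, onehot(0, n))[LABELS]
r_image = s / s.sum()


def label_to_image_share(label: int, image: int) -> float:
    """Label-oriented relevance: share of a label's propagated mass landing on one image."""
    scores = rank(H, w, onehot(label, n))[IMAGES]
    return scores[image] / scores.sum()


r_label = np.array([label_to_image_share(l, 0) for l in LABELS])
fused = fuse(r_image, r_label)   # relevance of labels 3, 4, 5 to image 0
```

Label 5 is attached only to image 2, which shares no hyperedge with image 0, so its fused relevance to image 0 is zero; labels 3 and 4 receive positive scores.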


© 2025 PatSnap. All rights reserved.