A Hairstyle Replacement Method Based on Generative Adversarial Network Model

A hairstyle replacement method and technology, applied in the fields of biological neural network models, neural network learning methods, and graphics and image conversion, addressing the problem that existing methods do not take hairstyle features into account.


Embodiment Construction

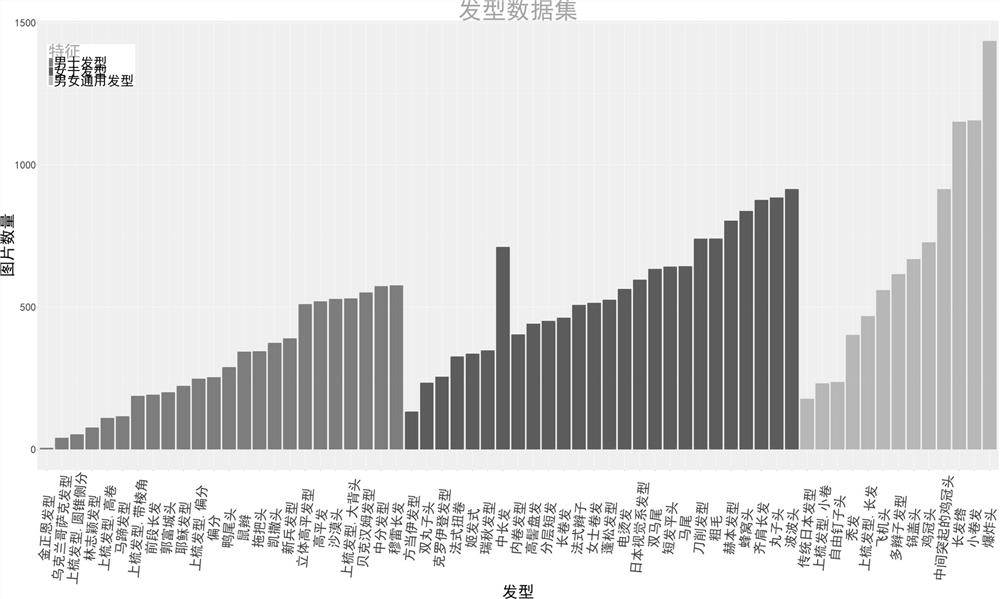

[0026] Step 1. Collect hairstyle images and label their attribute categories;
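The labeled attributes from Step 1 eventually have to be fed to the network as numbers. A common choice, assumed here rather than taken from the patent, is a multi-hot vector with one 0/1 entry per attribute category. The attribute vocabulary `HAIR_ATTRIBUTES` and the names `LabeledSample` and `encode_attributes` are hypothetical; the patent does not list its categories.

```python
from dataclasses import dataclass

# Hypothetical attribute vocabulary; the patent does not specify its categories.
HAIR_ATTRIBUTES = ("bangs", "black_hair", "blond_hair", "brown_hair",
                   "curly", "long_hair", "short_hair")


@dataclass
class LabeledSample:
    """One collected hairstyle image and the attribute categories assigned to it."""
    image_path: str
    attributes: list[str]


def encode_attributes(labels, vocabulary=HAIR_ATTRIBUTES):
    """Convert a list of attribute names into a multi-hot 0/1 vector in vocabulary order."""
    label_set = set(labels)
    unknown = label_set - set(vocabulary)
    if unknown:
        raise ValueError(f"unknown attribute(s): {sorted(unknown)}")
    return [1.0 if name in label_set else 0.0 for name in vocabulary]


sample = LabeledSample("images/0001.jpg", ["bangs", "long_hair", "black_hair"])
print(encode_attributes(sample.attributes))  # [1.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

Rejecting unknown labels early keeps annotation typos from silently producing all-zero vectors during training.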

[0027] Step 2. Perform face detection and crop out the face region, which prepares the image for processing by the deep neural network in the next step;
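A minimal sketch of the cropping part of Step 2, assuming some face detector (not specified in the patent) has already returned a bounding box `(x0, y0, x1, y1)`. Since a hairstyle extends beyond the face itself, the sketch enlarges the box by a relative margin before cropping; the margin and the helper names `expand_and_clamp_box` and `crop` are hypothetical. The image is a plain row-major list of rows so the example needs no imaging library.

```python
def expand_and_clamp_box(box, margin, width, height):
    """Enlarge a face box (x0, y0, x1, y1) by a relative margin and clamp it to the image bounds."""
    x0, y0, x1, y1 = box
    dx = int(round((x1 - x0) * margin))
    dy = int(round((y1 - y0) * margin))
    return (max(0, x0 - dx), max(0, y0 - dy), min(width, x1 + dx), min(height, y1 + dy))


def crop(image, box):
    """Crop a row-major image (list of rows) to box = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


# Toy 10 x 8 "image" whose pixel value encodes its position: 10 * y + x.
image = [[10 * y + x for x in range(10)] for y in range(8)]
face_box = (3, 2, 7, 6)  # assumed output of an external face detector
box = expand_and_clamp_box(face_box, margin=0.25, width=10, height=8)
face = crop(image, box)
print(box, len(face), len(face[0]))  # (2, 1, 8, 7) 6 6
```

In practice the crop would then be resized to the network's fixed input size; that step is omitted here.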

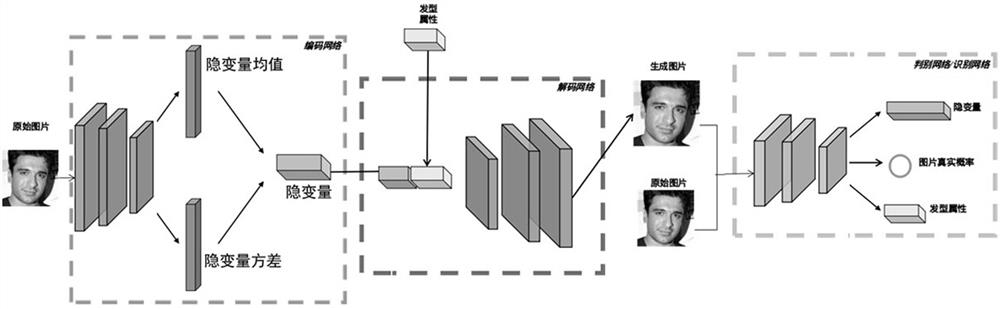

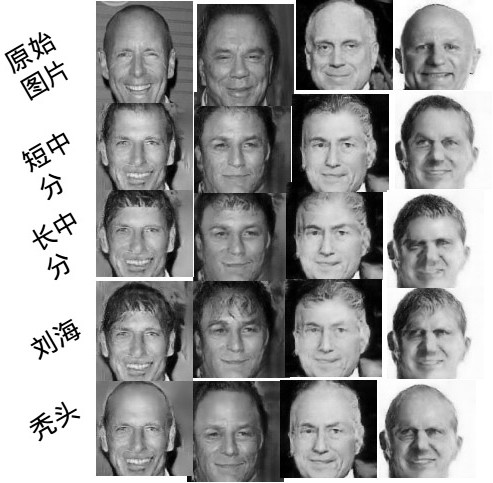

[0028] Step 3. Build the deep neural network. Figure 2 shows the structure of the designed deep neural network;
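Figure 2 is not reproduced here, so the layer sizes below are assumptions, not the patented design. The sketch only works through the shape arithmetic of a common encoder-decoder layout for attribute-conditioned generation: stride-2 convolutions halve the spatial size in the encoder, matching transposed convolutions double it in the decoder, and the decoder input is the latent code concatenated with the target attribute vector (as Step 4 describes the decoder using both). The 128-pixel input, kernel 4, padding 1, and channel counts are hypothetical.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a 2-D convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a transposed convolution without output padding: (n - 1)s - 2p + k."""
    return (size - 1) * stride - 2 * padding + kernel


def encoder_shapes(input_size=128, channels=(64, 128, 256, 512, 1024)):
    """(channels, height, width) after each hypothetical encoder convolution."""
    shapes, size = [], input_size
    for c in channels:
        size = conv_out(size)
        shapes.append((c, size, size))
    return shapes


def decoder_sizes(start_size, num_layers):
    """Spatial sizes produced by successive hypothetical decoder transposed convolutions."""
    sizes, size = [], start_size
    for _ in range(num_layers):
        size = deconv_out(size)
        sizes.append(size)
    return sizes


def decoder_input_channels(latent_channels, num_attributes):
    """Channels seen by the decoder when attributes are tiled and concatenated to the latent map."""
    return latent_channels + num_attributes


enc = encoder_shapes()
print(enc)  # [(64, 64, 64), (128, 32, 32), (256, 16, 16), (512, 8, 8), (1024, 4, 4)]
print(decoder_sizes(enc[-1][1], 5))  # [8, 16, 32, 64, 128]
```

With these settings the decoder exactly inverts the encoder's spatial downsampling, so the generated image has the same size as the input crop.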

[0029] Step 4. Train the deep neural network. Once the face images and their corresponding attribute information have been prepared, the deep network is trained. Each input training image is encoded by the encoder network to obtain a latent vector. From this latent vector and the specified hairstyle attributes, the decoder network generates an image. The generated image is fed to the discriminator network and to the recognition network, which learn the attributes corresponding to the generated image, and the feature maps of the discriminator network are used as the reconstruction...
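The loss terms implied by Step 4 can be sketched on plain numbers. This assumes, since the source sentence is truncated, that the discriminator feature maps serve a feature-level reconstruction loss: the input image and the image decoded with the input's own attributes are compared in the discriminator's feature space rather than pixel by pixel. The recognition network's attribute loss is modeled as per-attribute binary cross-entropy. All function names and the weights `w_adv`, `w_attr`, `w_rec` are hypothetical.

```python
import math


def bce(p, target, eps=1e-7):
    """Binary cross-entropy for one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def attribute_loss(pred_probs, target_attrs):
    """Mean BCE between the recognition network's attribute probabilities and the requested attributes."""
    return sum(bce(p, t) for p, t in zip(pred_probs, target_attrs)) / len(target_attrs)


def feature_reconstruction_loss(feat_input, feat_recon):
    """Mean squared distance between flattened discriminator feature maps of input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(feat_input, feat_recon)) / len(feat_input)


def discriminator_loss(d_real_prob, d_fake_prob):
    """The discriminator should score real images as 1 and generated images as 0."""
    return bce(d_real_prob, 1.0) + bce(d_fake_prob, 0.0)


def generator_loss(d_fake_prob, attr_probs, target_attrs, feat_input, feat_recon,
                   w_adv=1.0, w_attr=10.0, w_rec=100.0):
    """Weighted sum of adversarial, attribute, and feature-reconstruction terms (weights hypothetical)."""
    adversarial = bce(d_fake_prob, 1.0)  # the generator wants its output scored as real
    return (w_adv * adversarial
            + w_attr * attribute_loss(attr_probs, target_attrs)
            + w_rec * feature_reconstruction_loss(feat_input, feat_recon))
```

Comparing discriminator features instead of raw pixels tolerates small spatial misalignments, which tends to give sharper reconstructions than a plain pixel-wise loss.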
