Chinese Intelligent Dialogue Method Based on Transformer

A Chinese intelligent dialogue technology applied in the fields of neural learning methods, text database querying, and unstructured text data retrieval. It addresses the problem that dialogue methods do not reach the level of intelligent understanding of semantics and context, and it achieves real-time question answering with high accuracy and high efficiency, giving it broad application prospects.


Embodiment

[0057] For ease of description, the technical terms used in this embodiment are explained first:

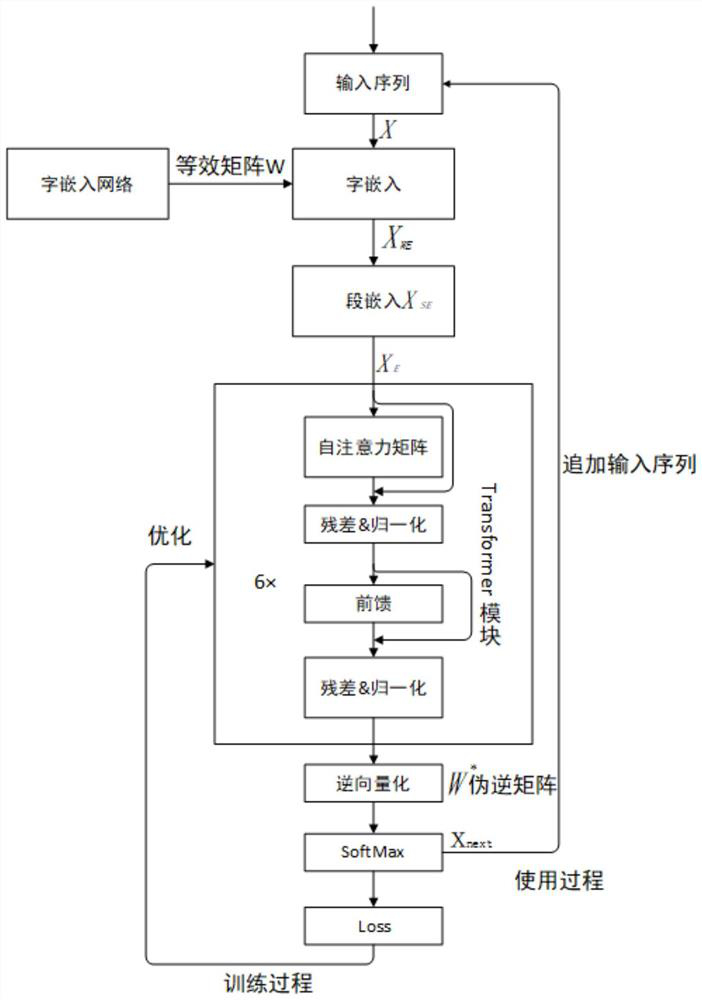

[0058] Figure 1 is the flow chart of the Transformer-based Chinese intelligent dialogue method of the present invention;

[0059] In this embodiment, as shown in Figure 1, the Transformer-based Chinese intelligent dialogue method of the present invention comprises the following steps:

[0060] S1. Build a training data set from LCCC (Large-scale Cleaned Chinese Conversation), a large-scale Chinese chat corpus, hereinafter referred to as the corpus;
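As a rough illustration of step S1, the sketch below turns raw corpus text into a list of multi-turn dialogues. It assumes (this is not stated in the source) that the corpus is a JSON array of dialogues, each a list of whitespace-tokenized utterance strings; the function name `load_dialogues` and the `min_turns` filter are hypothetical.

```python
import json
from typing import List


def load_dialogues(json_text: str, min_turns: int = 2) -> List[List[str]]:
    """Parse a JSON array of dialogues into lists of utterance strings.

    Assumption: each dialogue is a list of utterances whose tokens are
    separated by spaces; the spaces are removed to recover raw Chinese text.
    """
    raw = json.loads(json_text)
    dialogues: List[List[str]] = []
    for dialogue in raw:
        # Join whitespace-separated tokens back into plain Chinese text.
        turns = ["".join(utterance.split()) for utterance in dialogue]
        # Drop utterances that are empty after cleaning.
        turns = [t for t in turns if t]
        # Hypothetical filter: keep only conversations with enough turns.
        if len(turns) >= min_turns:
            dialogues.append(turns)
    return dialogues


if __name__ == "__main__":
    sample = '[["你 好", "你 好 呀"], ["在 吗"]]'
    print(load_dialogues(sample))
```

With the sample above, the single-utterance dialogue is filtered out and only `[["你好", "你好呀"]]` remains.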

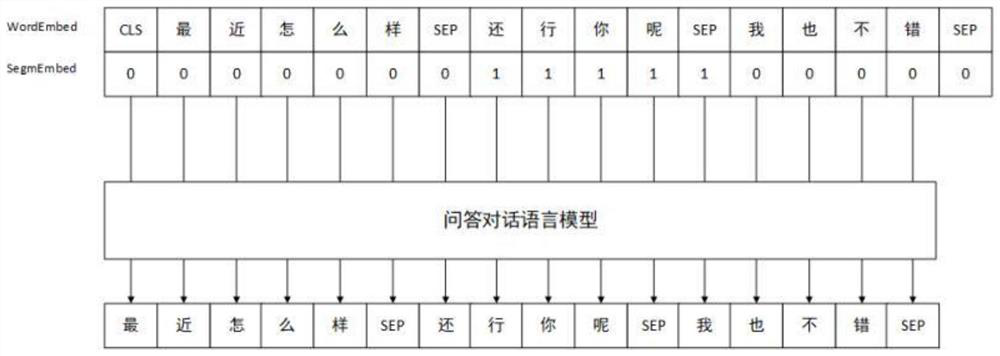

[0061] S1.1. Construct an input sequence of length N = 20;

[0062] Use the [CLS] token as the start of the input sequence; then extract consecutive dialogue sentences from the corpus, fill their words into the input sequence in sentence order, insert a [SEP] token between adjacent sentences, and, as each sentence is filled in, determine whether the t...
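Step S1.1 can be sketched as follows. This is a minimal sketch, not the patent's exact procedure. The stopping criterion in the source is truncated, so the rule used here is an assumption: a sentence is appended only if it and its preceding [SEP] still fit within N. Character-level tokenization and [PAD] filling are also assumptions. The name `build_input_sequence` is hypothetical.

```python
from typing import List

CLS, SEP, PAD = "[CLS]", "[SEP]", "[PAD]"


def build_input_sequence(sentences: List[str], n: int = 20) -> List[str]:
    """Pack consecutive dialogue sentences into one token sequence of length n.

    Assumptions: each Chinese character is one token, a sentence is added
    only if it fits entirely, and unused positions are filled with [PAD].
    """
    seq = [CLS]  # [CLS] marks the start of the input sequence
    for i, sentence in enumerate(sentences):
        tokens = list(sentence)  # character-level tokens
        # [SEP] goes *between* sentences, so the first sentence has none.
        needed = tokens if i == 0 else [SEP] + tokens
        if len(seq) + len(needed) > n:
            break  # hypothetical stop rule; the source's criterion is truncated
        seq.extend(needed)
    seq.extend([PAD] * (n - len(seq)))  # pad to the fixed length n
    return seq


if __name__ == "__main__":
    print(build_input_sequence(["你好", "你好呀"], n=10))
```

For `["你好", "你好呀"]` with `n=10`, this yields `[CLS] 你 好 [SEP] 你 好 呀` followed by three `[PAD]` tokens. Dropping a sentence that does not fit, rather than cutting it, keeps every sentence in the sequence whole. Other truncation policies are equally plausible.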
