A Reinforcement Learning Path Planning Method Using Artificial Potential Field

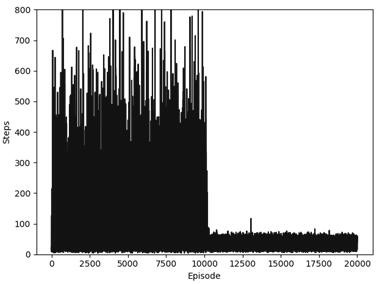

A reinforcement learning and path planning technology, applied in navigation computing tools, two-dimensional position/course control, vehicle position/route/altitude control, and similar fields. It addresses problems such as many invalid iterations, insufficient exploration of the environment, and difficult convergence, with the effect of reducing the number of iterations, improving the stability of the converged results, and shortening path planning time.
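The text shown here names the two ingredients (a reinforcement learner and an artificial potential field) but does not state how they are combined. The sketch below illustrates one pattern used in APF-assisted Q-learning: a tabular Q-function is seeded with the negated potential of the neighbouring cell, so the agent starts biased toward the goal and away from obstacles instead of exploring blindly. This is an assumption, not the patented procedure. The gains `k_att` and `k_rep`, the influence radius `d0`, the four-move action set, and all function names are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

Pos = Tuple[int, int]
QTable = Dict[Tuple[Pos, int], float]

# Hypothetical action set: up, down, left, right on the grid.
ACTIONS: List[Pos] = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def potential(p: Pos, goal: Pos, obstacles: Set[Pos],
              k_att: float = 1.0, k_rep: float = 100.0, d0: float = 3.0) -> float:
    """Classical artificial potential: a quadratic attractive bowl centred on
    the goal plus a repulsive term that is non-zero only within distance d0
    of the nearest obstacle (gains and radius are assumed values)."""
    u_att = 0.5 * k_att * math.dist(p, goal) ** 2
    u_rep = 0.0
    if obstacles:
        d = min(math.dist(p, o) for o in obstacles)
        if 0 < d <= d0:
            u_rep = 0.5 * k_rep * (1.0 / d - 1.0 / d0) ** 2
    return u_att + u_rep


def init_q_table(n: int, goal: Pos, obstacles: Set[Pos],
                 blocked: float = -1e6) -> QTable:
    """Seed Q(s, a) with the negated potential of the cell that action a
    leads to; moves off the grid or into an obstacle get a large penalty."""
    q: QTable = {}
    for r in range(n):
        for c in range(n):
            for a, (dr, dc) in enumerate(ACTIONS):
                nxt = (r + dr, c + dc)
                inside = 0 <= nxt[0] < n and 0 <= nxt[1] < n
                if not inside or nxt in obstacles:
                    q[((r, c), a)] = blocked
                else:
                    q[((r, c), a)] = -potential(nxt, goal, obstacles)
    return q


def greedy_action(q: QTable, s: Pos) -> int:
    """Index of the action with the highest current Q-value in state s."""
    return max(range(len(ACTIONS)), key=lambda a: q[(s, a)])


def q_update(q: QTable, s: Pos, a: int, reward: float, s_next: Pos,
             alpha: float = 0.1, gamma: float = 0.9) -> None:
    """Standard tabular Q-learning step, applied on top of the APF seed."""
    best_next = max(q[(s_next, b)] for b in range(len(ACTIONS)))
    q[(s, a)] += alpha * (reward + gamma * best_next - q[(s, a)])


if __name__ == "__main__":
    obstacles = {(10, 10), (10, 11)}
    q = init_q_table(20, goal=(19, 19), obstacles=obstacles)
    print(greedy_action(q, (19, 18)))  # 3: step right onto the goal
```

Because the initial greedy policy already descends the potential, early episodes in this sketch spend fewer steps on moves away from the goal. Pure potential fields can trap an agent in local minima; here the ordinary Q-learning updates are what would gradually correct such a biased seed.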

Embodiment Construction

[0035] Embodiments of the technical solution of the present invention are described in detail below with reference to the accompanying drawings. The following embodiments serve only to illustrate the technical solution of the present invention more clearly. They are examples only and do not limit the scope of protection of the present invention.

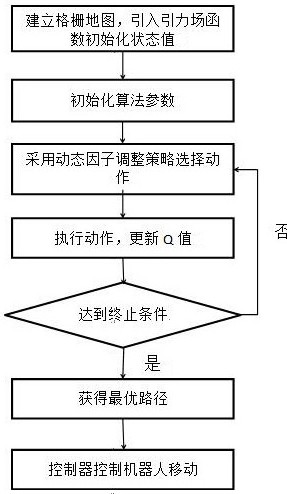

[0036] Referring to Figure 1, the present invention provides a reinforcement learning path planning method that introduces an artificial potential field. The steps of the method are as follows:

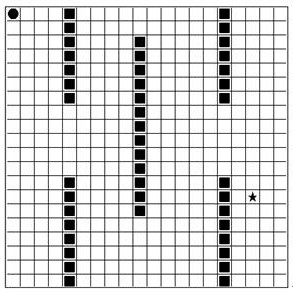

[0037] Step 1: Segment the environment image acquired by the mobile robot into a 20×20 grid and build an environment model using the grid method. If an obstacle is found in a grid cell, that cell is defined as an obstacle; if the target point is found in a grid cell, that cell is set as the target position, i.e. the final position the mobile robot is to reach; other cells are defined as grids without obsta...
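Step 1 can be sketched as follows. The sketch assumes the segmentation already yields a boolean obstacle mask whose height and width are divisible by 20, plus a single target pixel. The names `build_grid_model` and `Cell`, and the rule that a cell containing any obstacle pixel becomes an obstacle cell, are illustrative assumptions, since the excerpt does not say how partially occupied cells are treated.

```python
from enum import IntEnum
from typing import List, Tuple

GRID_SIZE = 20  # Step 1 divides the image into a 20x20 grid


class Cell(IntEnum):
    FREE = 0      # grid without obstacle
    OBSTACLE = 1  # an obstacle was found in this cell
    TARGET = 2    # final position the mobile robot must reach


def build_grid_model(obstacle_mask: List[List[bool]],
                     target_px: Tuple[int, int],
                     n: int = GRID_SIZE) -> List[List[Cell]]:
    """Partition a segmented image into an n x n grid environment model.

    Assumptions (not from the source): obstacle_mask[y][x] is True for
    obstacle pixels, the image height and width are divisible by n, a cell
    containing any obstacle pixel is an obstacle cell, and the target is a
    single (row, col) pixel whose cell overrides any obstacle marking.
    """
    h, w = len(obstacle_mask), len(obstacle_mask[0])
    if h % n or w % n:
        raise ValueError("image size must be divisible by the grid size")
    ch, cw = h // n, w // n  # pixels per cell, vertically and horizontally
    grid = [[Cell.FREE] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            if any(obstacle_mask[y][x]
                   for y in range(r * ch, (r + 1) * ch)
                   for x in range(c * cw, (c + 1) * cw)):
                grid[r][c] = Cell.OBSTACLE
    grid[target_px[0] // ch][target_px[1] // cw] = Cell.TARGET
    return grid


if __name__ == "__main__":
    mask = [[False] * 40 for _ in range(40)]  # 40x40 px -> 2x2 px per cell
    mask[5][7] = True                         # a single obstacle pixel
    grid = build_grid_model(mask, target_px=(39, 39))
    print(grid[2][3].name, grid[19][19].name)  # OBSTACLE TARGET
```

Marking a cell as an obstacle when any of its pixels is occupied is the conservative choice: the robot never plans through a partially blocked cell, at the cost of discarding some free space when the grid is coarse.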

© 2025 PatSnap. All rights reserved.