A sentiment classification method and system based on multimodal contextual semantic features

A semantic feature and sentiment classification technology, applied in the field of affective computing. It addresses the problem that existing methods ignore context dependencies and do not consider context information, and it increases the available feature information, improves classification accuracy, and improves generalization ability.


Embodiment Construction

[0088] The technical solutions of the present invention will be described in further detail below with reference to the accompanying drawings and specific embodiments of the description.

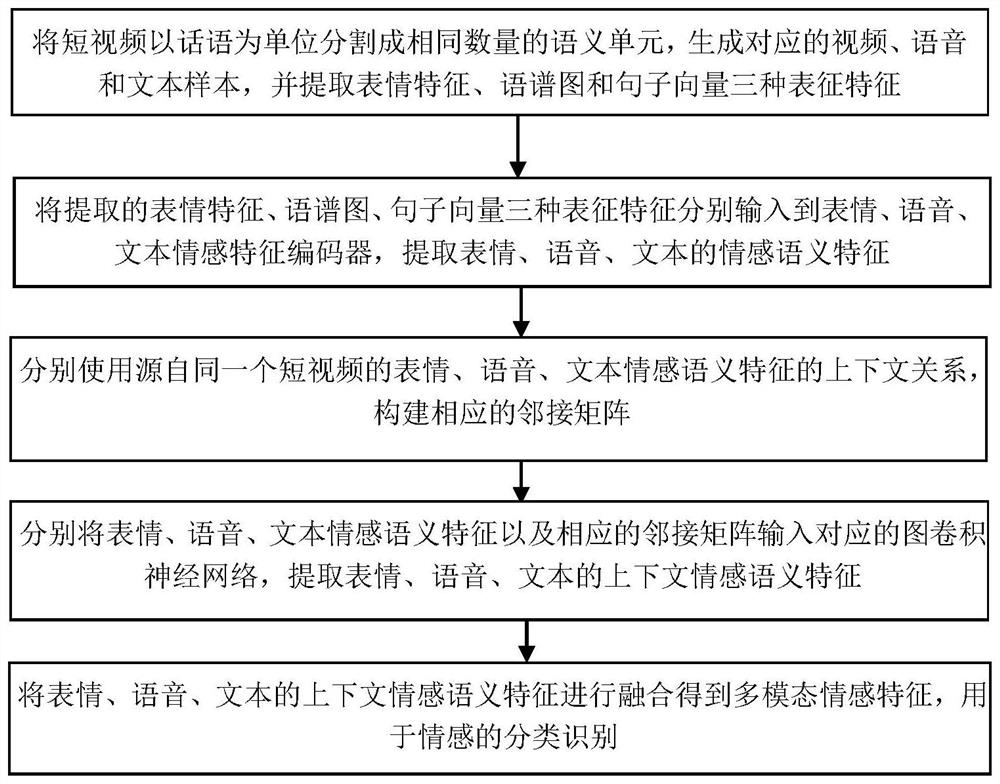

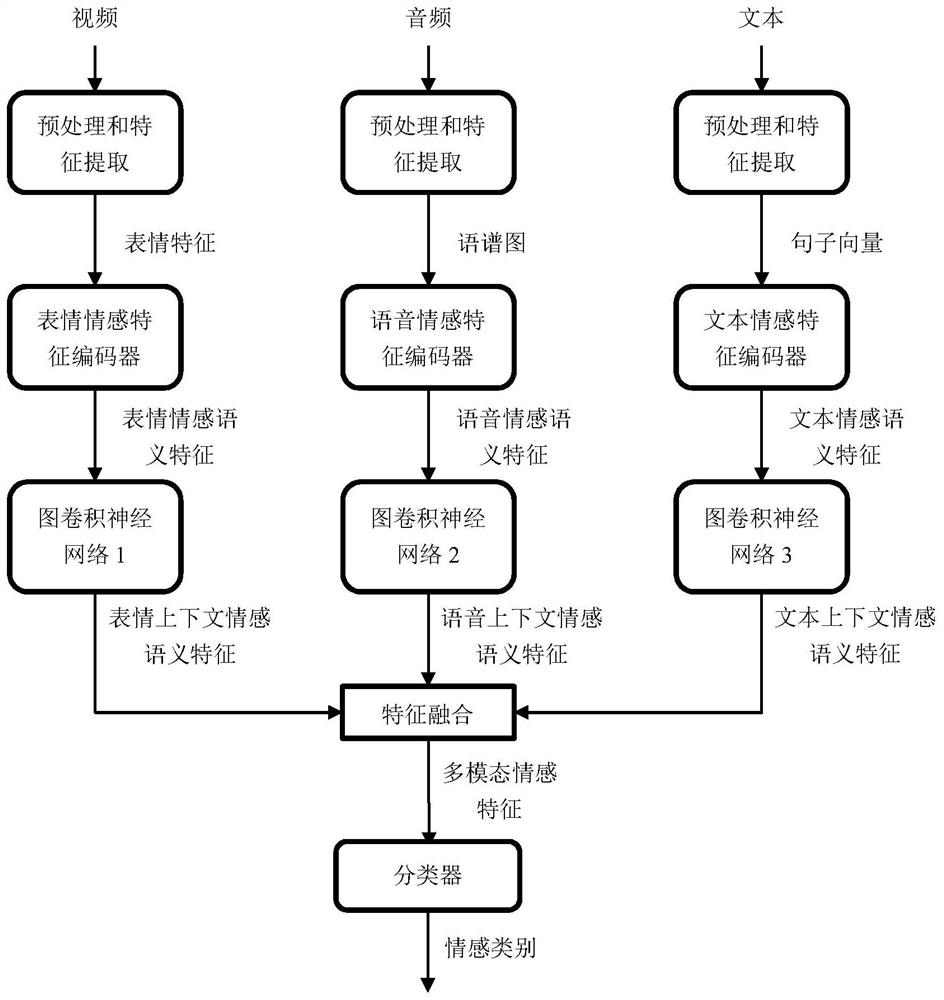

[0089] As shown in Figure 1, a sentiment classification method based on multimodal contextual semantic features provided by an embodiment of the present invention mainly includes the following steps:

[0090] Step (1) Data preprocessing and representation feature extraction: Using the utterance as the unit, each short video is divided into the same number N of semantic units (typically 12≤N≤60, depending on the length of the video), and each semantic unit is treated as one sample. The corresponding video samples, speech samples, and text samples are generated from the semantic units, and three kinds of representation features (the expression feature vector, the spectrogram, and the sentence vector) are extracted from the three types of samples, respectively.
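The bookkeeping in Step (1) can be illustrated with a minimal Python sketch. It only organizes utterances into a fixed number of semantic units with aligned video, speech, and text samples; it does not perform the feature extraction itself. The names `SemanticUnit` and `to_semantic_units` are hypothetical, and the truncate-or-pad strategy used to reach exactly N units is an assumption, since the description does not say how the equal count is obtained.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Range of N given in paragraph [0090].
N_MIN, N_MAX = 12, 60


@dataclass
class SemanticUnit:
    """One utterance-level unit and the three aligned samples cut from it."""
    video_span: Tuple[float, float]   # (start, end) in seconds of the video sample
    speech_span: Tuple[float, float]  # (start, end) in seconds of the speech sample
    text: str                         # transcript used as the text sample
    is_padding: bool = False          # True for units added only to reach N


def to_semantic_units(
    utterances: List[Tuple[float, float, str]], n_units: int
) -> List[SemanticUnit]:
    """Map (start, end, transcript) utterances to exactly n_units units.

    Assumption (not stated in the source): extra utterances are truncated
    and short videos are padded with empty units.
    """
    if not N_MIN <= n_units <= N_MAX:
        raise ValueError(f"n_units must be in [{N_MIN}, {N_MAX}]")

    # Each utterance yields one unit; video and speech share the same span.
    units = [
        SemanticUnit((start, end), (start, end), transcript)
        for start, end, transcript in utterances[:n_units]
    ]

    # Pad with empty units so every video has the same number of units.
    while len(units) < n_units:
        units.append(SemanticUnit((0.0, 0.0), (0.0, 0.0), "", is_padding=True))
    return units
```

In such a layout, each unit's spans would later be used to crop the frames and audio from which the expression feature vector and the spectrogram are computed, while the transcript would feed the sentence vector.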

[0091...
