Image Focus Error Estimation

A technique for estimating focus error from an image, applied in the field of autofocus optical systems. It addresses problems of existing approaches such as being unable to run in real-time view, high cost, and increased size and weight of the optical system.

Embodiment Construction

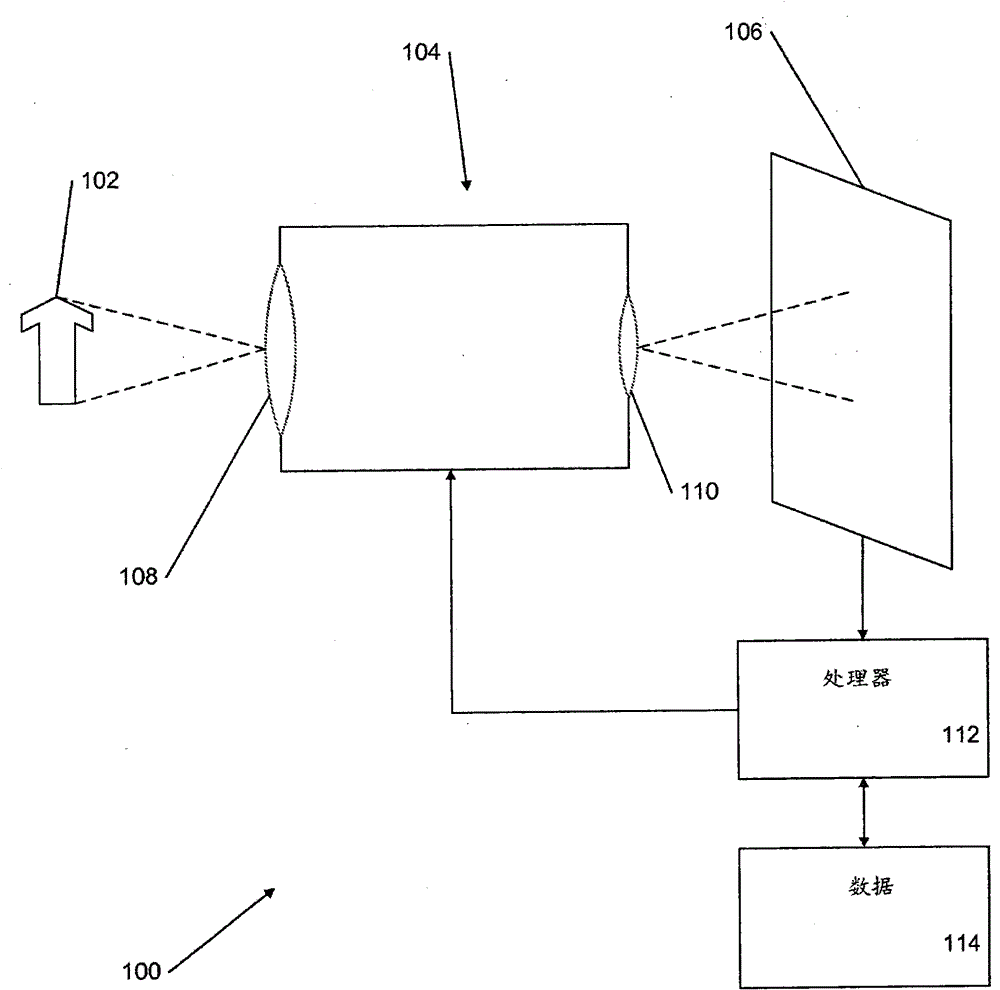

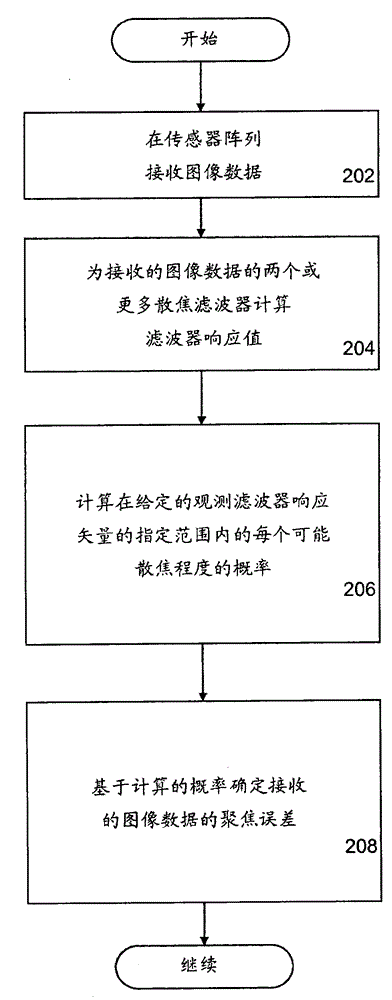

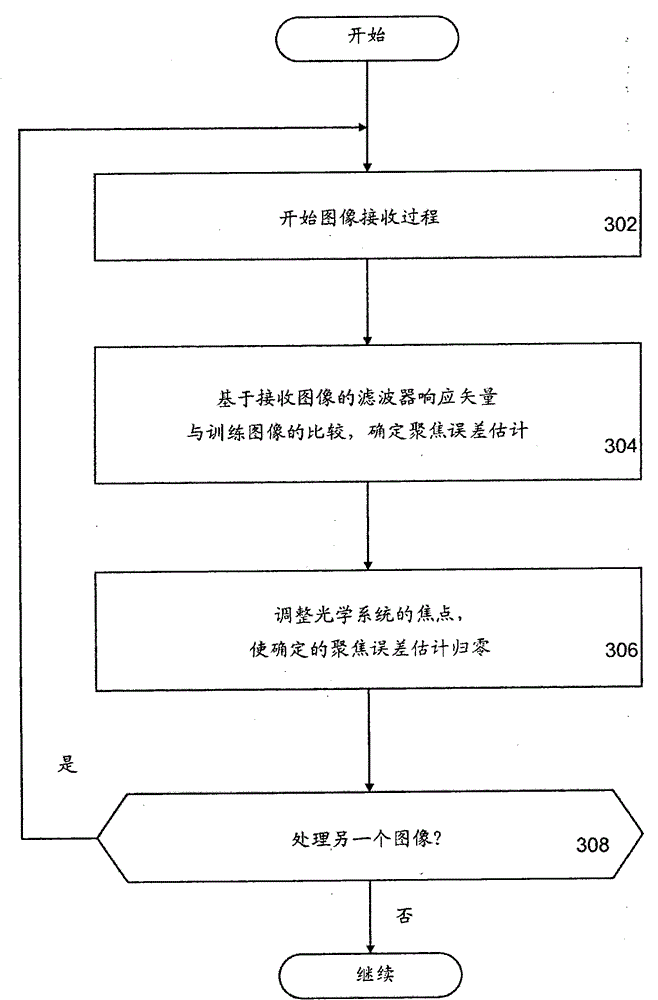

[0026] The present invention includes methods, systems and computer program products for estimating defocus (i.e., focus error) within an image. In one embodiment of the invention, the optical system is characterized by a wave optics model of the point-spread function, and the sensor array is characterized by the wavelength sensitivity, spatial sampling and noise functions of each sensor class. A training set of sharp image patches is collected. A point-spread function is computed for each sensor class at each of multiple degrees of defocus within a specified range. The point-spread function for each degree of defocus is then applied to each image patch, and the result is sampled using the wavelength sensitivity and spatial sampling functions of each sensor within the sensor array. Noise is added to the sampled response of each sensor element of each sensor in the sensor array. Through a statistical learning step, the sensor responses from the sensor array are used t...
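The simulation steps described in this paragraph (compute a point-spread function per degree of defocus, apply it to each training patch, sample, add noise) can be illustrated with a minimal sketch. It is not the patented implementation. It assumes a single monochromatic sensor class, a circular pupil with a purely quadratic defocus phase, regular subsampling as the spatial sampling function, and additive Gaussian noise. All function names and parameters (`defocus_psf`, `blur`, `simulate_responses`, `aperture_radius`, `step`, `noise_sigma`) are hypothetical and chosen for illustration only.

```python
import numpy as np

def defocus_psf(defocus_waves, size=32, aperture_radius=0.5):
    """Wave-optics PSF of a circular pupil with a quadratic defocus phase.

    Assumption: Fraunhofer approximation, one wavelength, no other aberrations.
    """
    coords = np.linspace(-1.0, 1.0, size, endpoint=False)
    x, y = np.meshgrid(coords, coords)
    rho2 = (x**2 + y**2) / aperture_radius**2   # squared normalised pupil radius
    pupil = (rho2 <= 1.0).astype(float)         # 1 inside the aperture, 0 outside
    phase = 2.0 * np.pi * defocus_waves * rho2  # defocus wavefront error in radians
    field = pupil * np.exp(1j * phase)
    psf = np.abs(np.fft.fftshift(np.fft.fft2(field))) ** 2
    return psf / psf.sum()                      # normalise to unit energy

def blur(patch, psf):
    """Circular convolution of a patch with a same-sized, centred PSF via FFT."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(patch) * otf))

def simulate_responses(patches, defocus_levels, noise_sigma=0.01, step=2, rng=None):
    """Return noisy sampled sensor responses and their defocus labels."""
    rng = np.random.default_rng(0) if rng is None else rng
    responses, labels = [], []
    for d in defocus_levels:
        psf = defocus_psf(d, size=patches.shape[-1])
        for patch in patches:
            sampled = blur(patch, psf)[::step, ::step]  # spatial sampling (assumed regular grid)
            noisy = sampled + rng.normal(0.0, noise_sigma, sampled.shape)
            responses.append(noisy)
            labels.append(d)
    return np.stack(responses), np.array(labels)

if __name__ == "__main__":
    demo_rng = np.random.default_rng(1)
    demo_patches = demo_rng.random((3, 32, 32))  # stand-in for sharp training patches
    resp, lab = simulate_responses(demo_patches, [0.0, 0.5, 1.0])
    print(resp.shape, lab.shape)
```

The labelled responses produced this way are the kind of input a statistical learning step could consume. Extending the sketch to several sensor classes would mean computing one point-spread function per class and weighting by that class's wavelength sensitivity, which is omitted here.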
