Adaptive Processing for Data Sharing with Lock Omission and Lock Selection

A technology relating to hardware lock omission and processing circuits, applied in the fields of electrical digital data processing, concurrent instruction execution, machine execution devices, and the like.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

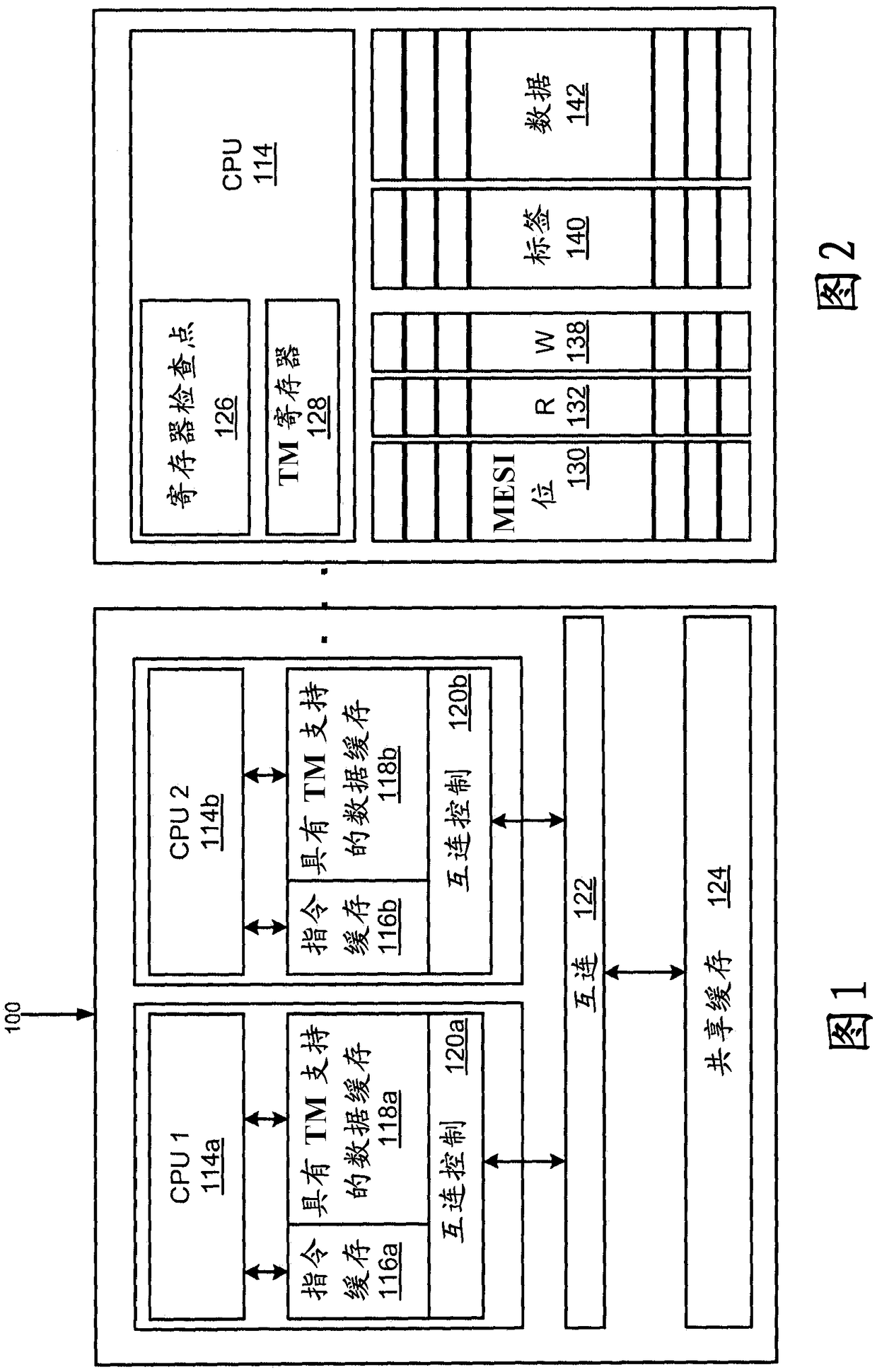

Image

Examples

Detailed Description

[0018] Historically, a computer system or processor had only a single processor (aka processing unit or central processing unit). The processor included an instruction processing unit (IPU), a branch unit, a memory control unit, and the like. Such processors were capable of executing a single thread of a program at a time. Operating systems were developed that could time-share a processor by dispatching one program to execute on it for a period of time, and then dispatching another program to execute on it for another period of time. As technology evolved, memory subsystem caches were often added to processors, as was complex dynamic address translation, including translation lookaside buffers (TLBs). The IPU itself was often referred to as the processor. As technology continued to develop, an entire processor could be packaged as a single semiconductor chip or die; such processors were called microprocessors. Then, processors were developed that added mult...

© 2025 PatSnap. All rights reserved.