A Derivation Method of Local Disparity Vector

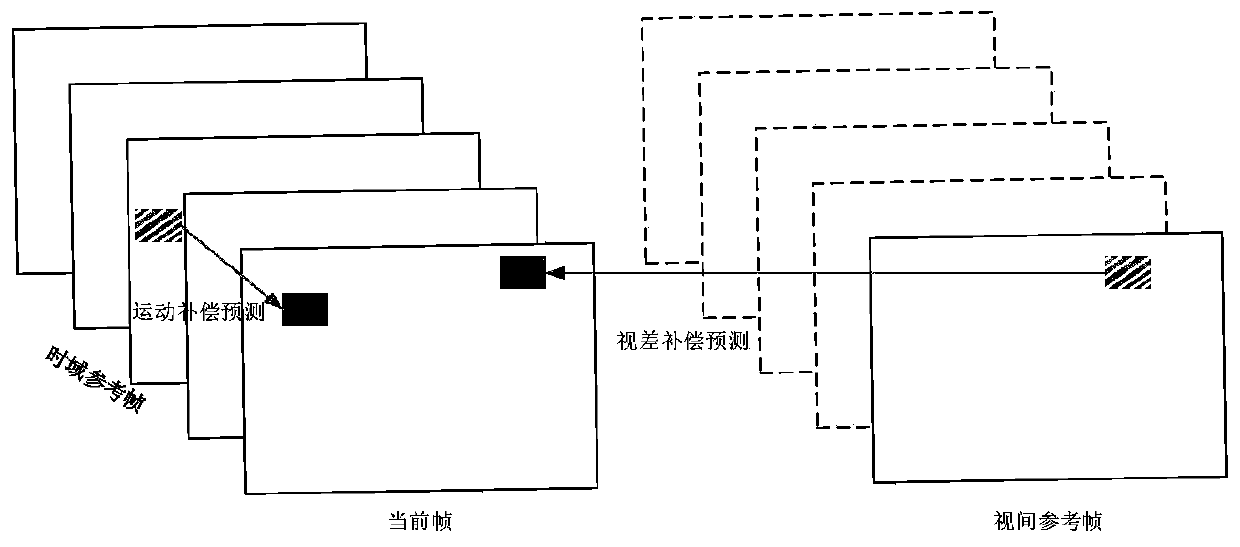

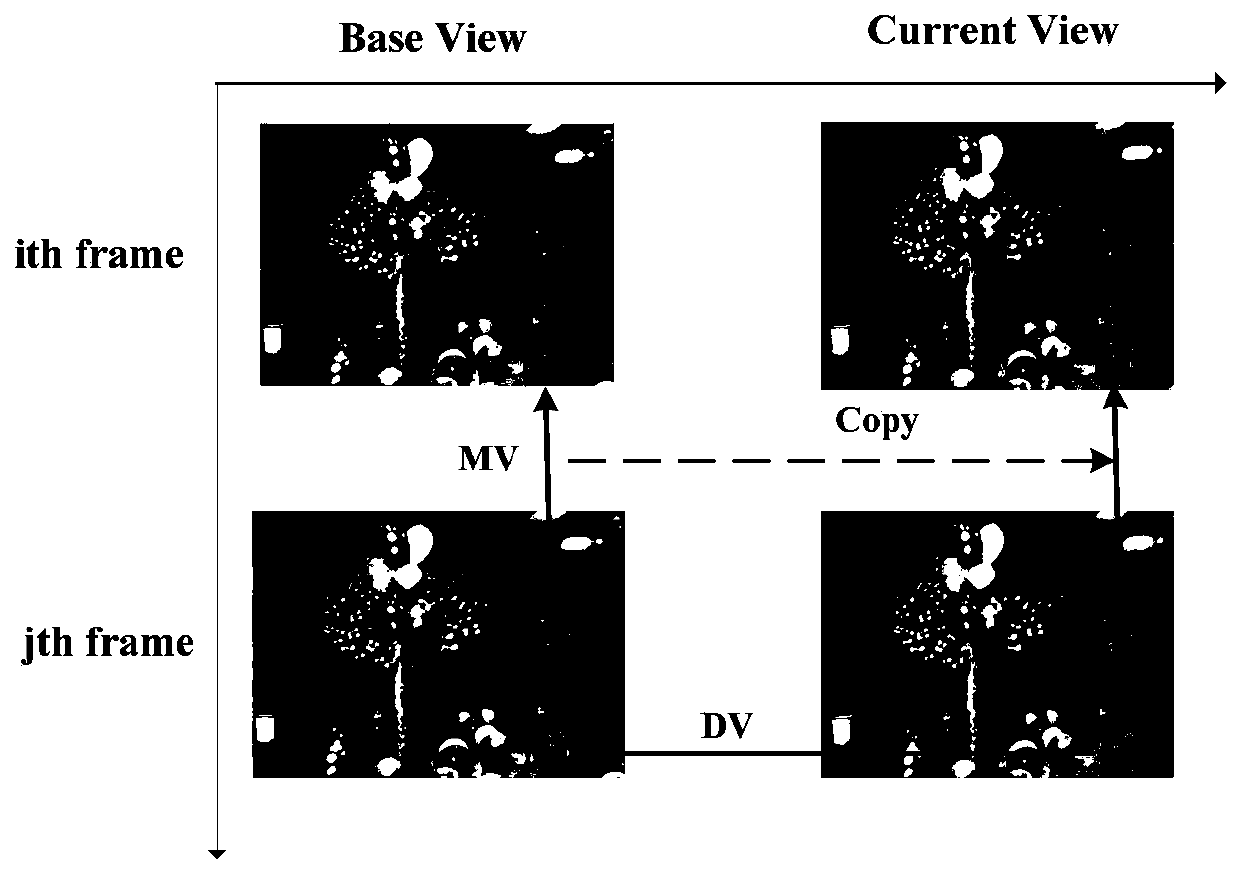

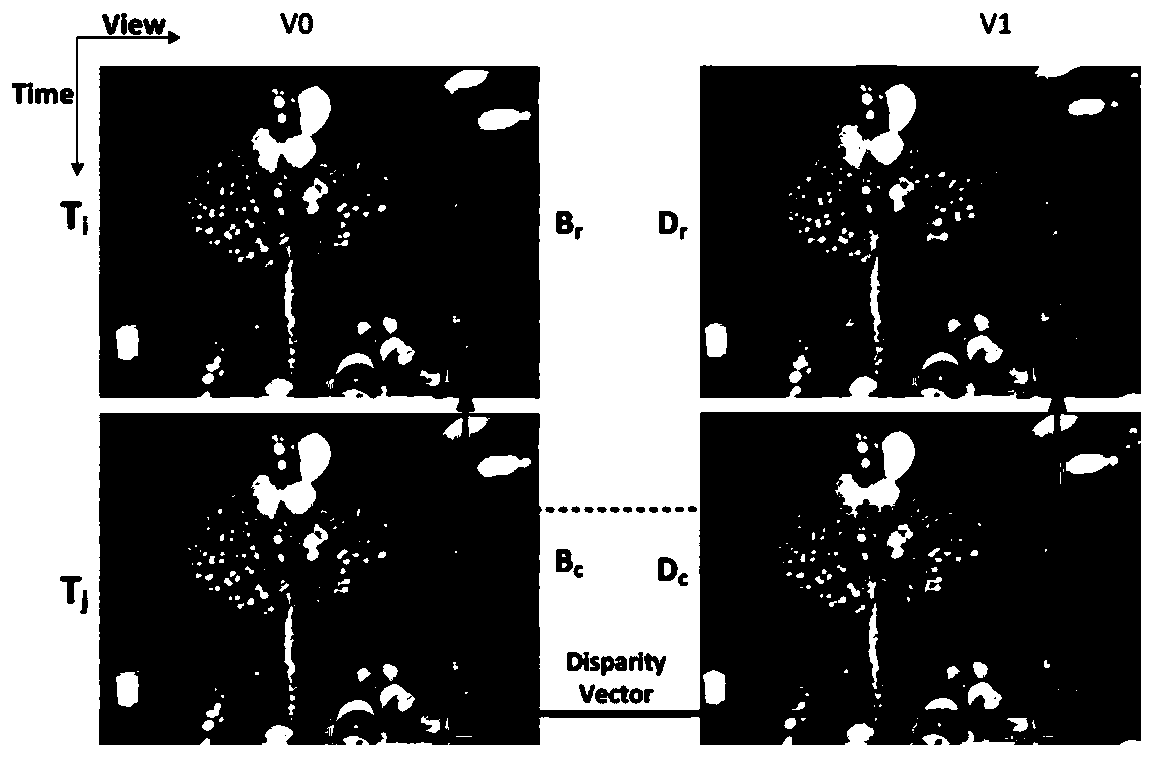

This local disparity vector derivation technique, applied in the field of multi-view video coding, addresses two problems, among others: the derived disparity vector is not accurate enough, and a non-zero disparity vector sometimes cannot be derived.



Examples

Specific Embodiment 1

[0050] In this embodiment, a disparity vector for multi-view video coding is derived by the following steps:

[0051] Step 1. Select the coded regions adjacent to the current prediction unit (PU) on its left, upper-left, upper, upper-right, and lower-left sides as five candidate regions, denoted a1, b2, b1, b0, and a0 respectively, as shown in Figure 7. The region sizes are R×W on the left, R×H on the top, and R×W on the right, where W and H are the width and height of the current PU and R is the region coefficient, with an initial value of 1 and a maximum value R_M, which is set to 4 in this embodiment. The size of each adjacent region therefore varies with the width and height of the current PU.
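
As a minimal sketch of Step 1, the Python below tabulates the stated region extents for each value of the region coefficient. It assumes R takes integer values from the initial value 1 up to R_M = 4 (reading R_M as the maximum of R) and that sizes are measured in luma samples; `region_extents` and `extents_for_all_r` are hypothetical names, not part of the patent.

```python
from typing import Dict, List

R_INIT = 1  # initial value of the region coefficient R (stated in Step 1)
R_M = 4     # value of R_M in this embodiment; assumed here to be the maximum of R


def region_extents(width: int, height: int, r: int) -> Dict[str, int]:
    """Candidate-region extents for a PU of size width x height.

    Follows Step 1 literally: R*W on the left, R*H on the top and R*W on
    the right. Which axis each extent is measured along is an assumption.
    """
    if not R_INIT <= r <= R_M:
        raise ValueError(f"R must lie in [{R_INIT}, {R_M}], got {r}")
    return {"left": r * width, "top": r * height, "right": r * width}


def extents_for_all_r(width: int, height: int) -> List[Dict[str, int]]:
    """Extents for every integer R from R_INIT to R_M, in increasing order."""
    return [region_extents(width, height, r) for r in range(R_INIT, R_M + 1)]


if __name__ == "__main__":
    # A 16x8 PU: each extent grows linearly with R.
    for r, ext in zip(range(R_INIT, R_M + 1), extents_for_all_r(16, 8)):
        print(r, ext)
```

For a 16×8 PU, R = 1 gives extents of 16, 8 and 16 samples, and R = 4 gives 64, 32 and 64, which is how the region size tracks both the PU dimensions and R.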

[0052] Step 2. Divide each candidate region into 4×4 units.
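
Step 2 can be sketched as a tiling of a rectangular region into 4×4 units. The sketch assumes each region is described by a top-left corner and a size in samples, all multiples of 4; `split_into_units` is a hypothetical helper, not something defined in the patent.

```python
from typing import List, Tuple

UNIT = 4  # side length of one unit, from Step 2


def split_into_units(x0: int, y0: int, width: int, height: int) -> List[Tuple[int, int]]:
    """Return the top-left corner of every 4x4 unit covering the region.

    Units are listed in raster order (row by row, left to right). The region
    is assumed to be aligned to the 4x4 grid; misaligned regions are rejected
    rather than padded.
    """
    if width % UNIT or height % UNIT or x0 % UNIT or y0 % UNIT:
        raise ValueError("region must be aligned to the 4x4 grid")
    return [
        (x, y)
        for y in range(y0, y0 + height, UNIT)
        for x in range(x0, x0 + width, UNIT)
    ]
```

A 16×8 region whose top-left corner is at (32, 0) yields 4 × 2 = 8 units, from (32, 0) to (44, 4).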

[0053] Step 3. Among the five candidate regions, in the order left a1, upper b1, upper-left b2, right u...
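
Because Step 3 is truncated, only the start of its scan order (a1, b1, b2) is known. The sketch below shows one plausible reading of an ordered search: regions are visited in a caller-supplied order, the 4×4 units of each region in raster order, and the first unit holding a disparity vector is returned. The stopping rule, the raster order within a region, and the `dv_map` lookup (a hypothetical table of per-unit disparity vectors) are all assumptions, not details taken from the patent.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Unit = Tuple[int, int]    # top-left corner of a 4x4 unit
Vector = Tuple[int, int]  # (horizontal, vertical) disparity


def first_disparity_vector(
    order: Sequence[str],
    regions: Dict[str, List[Unit]],
    dv_map: Dict[Unit, Vector],
) -> Optional[Tuple[str, Unit, Vector]]:
    """Visit regions in `order` and their units in the listed order; return
    (region name, unit, vector) for the first unit that has a disparity
    vector, or None if no unit does.

    `dv_map` is a hypothetical lookup that holds a vector only for units
    coded with disparity-compensated prediction (an assumption).
    """
    for name in order:
        for unit in regions.get(name, []):
            dv = dv_map.get(unit)
            if dv is not None:
                return name, unit, dv
    return None
```

With a PU at (32, 0), regions `{"a1": [(28, 0), (28, 4)], "b1": [(32, -4), (36, -4)], "b2": [(28, -4)]}` and vectors stored only at (36, -4) and (28, -4), the order ("a1", "b1", "b2") returns the vector at (36, -4) in b1, even though b2 also holds one, because b1 is visited first.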

Specific Embodiment 2

[0062] In this embodiment, a disparity vector for multi-view video coding is derived by the following steps:

[0063] Step 1. Select the coded regions adjacent to the current prediction unit (PU) on its left, upper-left, upper, upper-right, and lower-left sides as five candidate regions, denoted a1, b2, b1, b0, and a0 respectively, as shown in Figure 7. The region sizes are R×W on the left, R×H on the top, and R×W on the right, where W and H are the width and height of the current PU and R is the region coefficient, with an initial value of 1 and a maximum value R_M, which is set to 4 in this embodiment. The size of each adjacent region therefore varies with the width and height of the current PU.

[0064] Step 2. Divide each candidate region into 4×4 units.

[0065] Step 3. Among the five candidate regions, in the order left a1, upper b1, upper-right b0, left l...

Specific Embodiment 3

[0072] In this embodiment, a disparity vector for multi-view video coding is derived by the following steps:

[0073] Step 1. Select the coded regions adjacent to the current prediction unit (PU) on its left, upper-left, upper, upper-right, and lower-left sides as five candidate regions, denoted a1, b2, b1, b0, and a0 respectively, as shown in Figure 7. The region sizes are R×W on the left, R×H on the top, and R×W on the right, where W and H are the width and height of the current PU and R is the region coefficient, with an initial value of 1 and a maximum value R_M, which is set to 4 in this embodiment. The size of each adjacent region therefore varies with the width and height of the current PU.

[0074] Step 2. Divide each candidate region into 4×4 units.

[0075] Step 3. Among the five candidate regions, in the order left a1, upper b1, upper-left b2, left lo...
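
The three embodiments share Steps 1 and 2 and differ in the scan order of Step 3, which is truncated in all three. The sketch below records only the recoverable prefixes (the fourth entry begins "right u..." in Embodiment 1, "left l..." in Embodiment 2 and "left lo..." in Embodiment 3, and is not guessed) and computes the prefix that all of them share. `KNOWN_ORDER_PREFIXES` and `common_prefix` are hypothetical names used only for illustration.

```python
from typing import Dict, Sequence, Tuple

# Scan-order prefixes recoverable from the truncated Step 3 of each embodiment.
# Later positions are elided in the source and deliberately left out.
KNOWN_ORDER_PREFIXES: Dict[int, Tuple[str, ...]] = {
    1: ("a1", "b1", "b2"),
    2: ("a1", "b1", "b0"),
    3: ("a1", "b1", "b2"),
}


def common_prefix(orders: Sequence[Tuple[str, ...]]) -> Tuple[str, ...]:
    """Longest leading run of regions shared by every order in `orders`."""
    if not orders:
        return ()
    shortest = min(orders, key=len)
    for i, region in enumerate(shortest):
        # Stop at the first position where any order disagrees.
        if any(order[i] != region for order in orders):
            return shortest[:i]
    return shortest
```

All three orders start with a1 then b1; Embodiments 1 and 3 also agree on b2 in third place, while Embodiment 2 places b0 there.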
