Stereoscopic fitting method based on somatosensory feature parameter extraction

A technology based on extracting somatosensory feature parameters, applied in data processing applications, image data processing, cooperating devices, etc. It addresses the problems of wasted consumer time, reduced clothing-purchase efficiency, and the limited number of garments.


Embodiment Construction

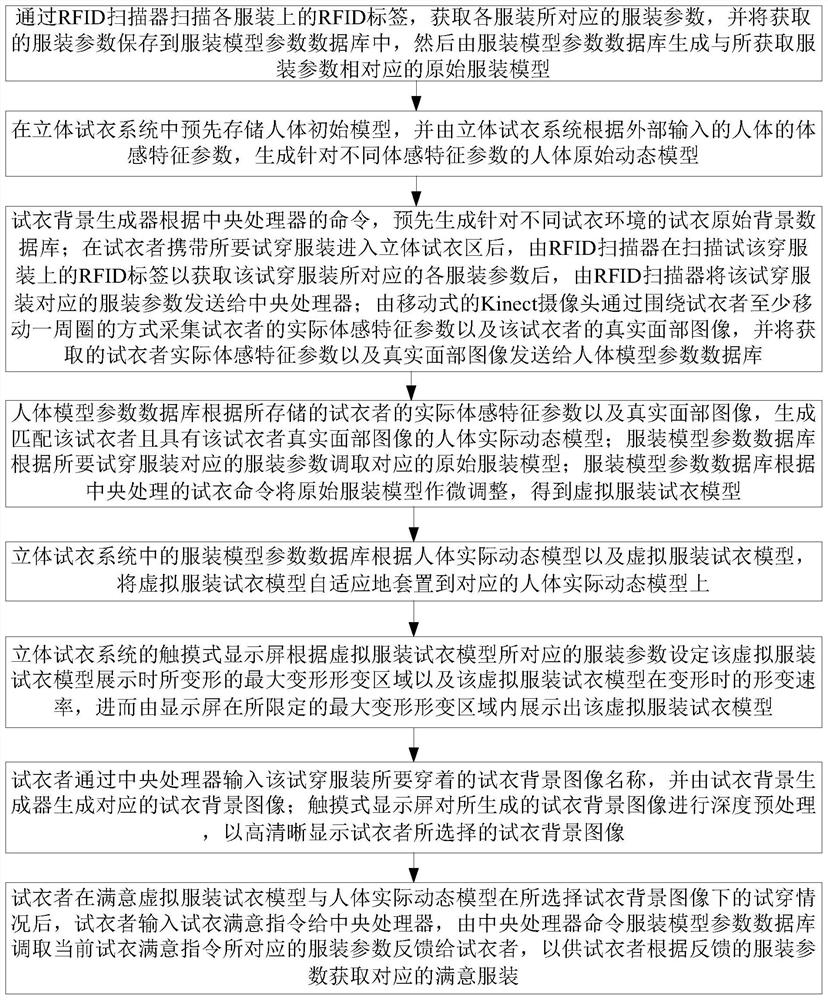

[0067] The present invention will be further described in detail below with reference to the accompanying drawings.

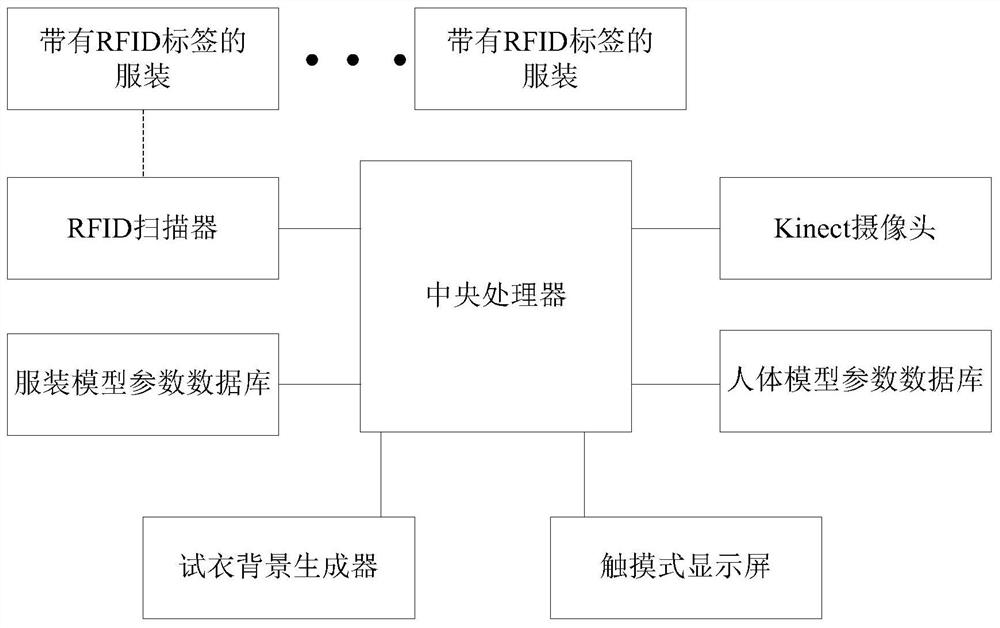

[0068] As shown in Figure 2, the stereoscopic fitting method based on somatosensory feature parameter extraction of this embodiment is used in a stereoscopic fitting system formed by a clothing model parameter database, a human body model parameter database, a movable Kinect camera, an RFID scanner, clothing carrying at least one RFID tag, a central processor, a touch screen, and a fitting background generator. The Kinect camera belongs to the prior art and is not described in detail here. The stereoscopic fitting system is shown in Figure 1. The stereoscopic fitting method comprises Step 1 through Step 8:

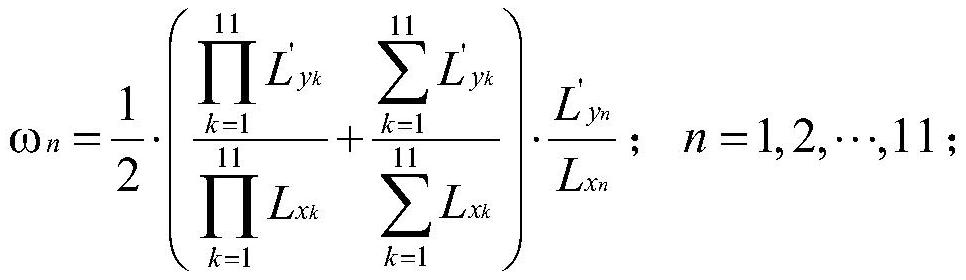

[0069] Step 1: the RFID scanner scans the RFID tag on the clothing to obtain the clothing parameters corresponding to that clothing, and the acquired clothing parameters are saved to the clothing model parameter database; the clothing parameters are then genera...
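
The visible part of Step 1 (scan a tag, obtain the matching clothing parameters, save them to the clothing model parameter database) could be sketched roughly as follows. This is only an illustrative sketch, not the patented implementation: the names `ClothingParameters`, `ClothingModelParameterDatabase`, and `register_scanned_clothing`, the parameter fields, and the in-memory dictionary standing in for the database are all assumptions, since the source does not specify them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClothingParameters:
    # Hypothetical fields; the source does not list the actual parameters.
    garment_id: str
    size_label: str
    chest_cm: float
    length_cm: float


class ClothingModelParameterDatabase:
    """In-memory stand-in (assumption) for the clothing model parameter database."""

    def __init__(self):
        self._records = {}

    def save(self, tag_id, params):
        # Store the parameters under the RFID tag identifier.
        self._records[tag_id] = params

    def get(self, tag_id):
        # Return the stored parameters, or None if the tag is unknown.
        return self._records.get(tag_id)


def register_scanned_clothing(tag_id, tag_payloads, database):
    """Look up the parameters tied to a scanned tag and save them.

    `tag_payloads` is a hypothetical mapping from tag identifier to
    clothing parameters; it stands in for whatever the scanner returns.
    """
    if tag_id not in tag_payloads:
        raise KeyError(f"no clothing parameters for tag {tag_id!r}")
    params = tag_payloads[tag_id]
    database.save(tag_id, params)
    return params
```

In this sketch the tag identifier serves as the database key, so a later step could retrieve the same garment's parameters with `database.get(tag_id)`.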
