Video classification method and device

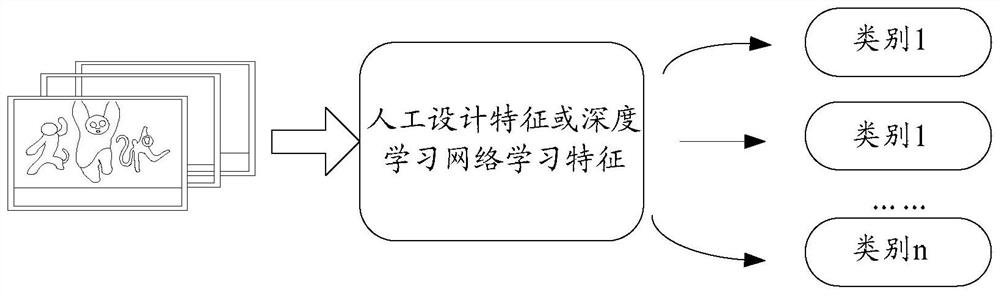

A video classification technology, applied in the video field, that addresses the problem that video classification results do not fit users closely.

Examples

Embodiment 1

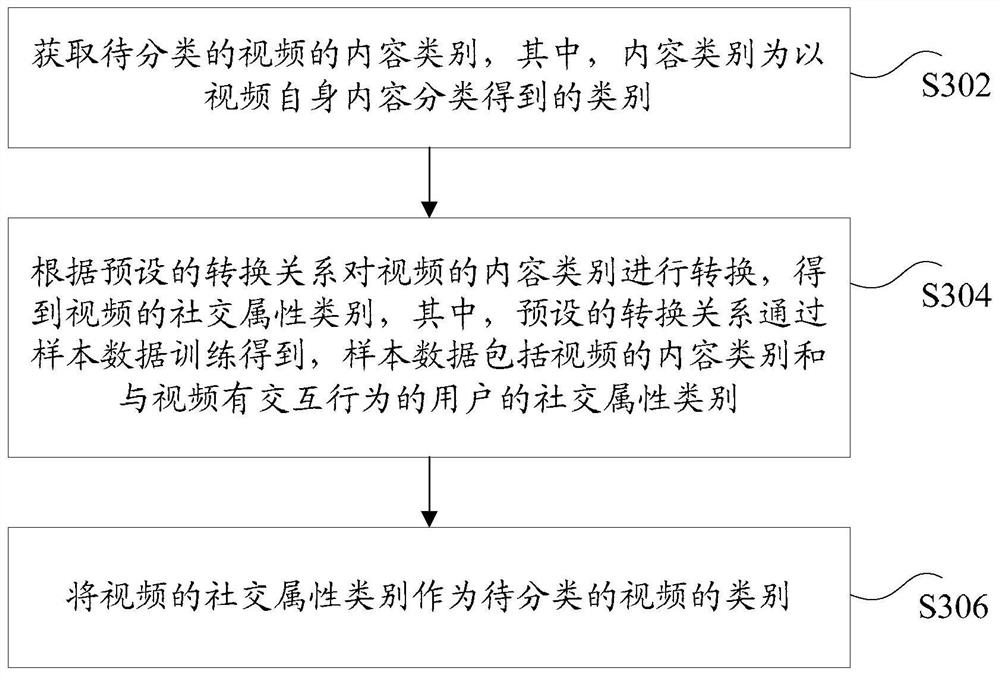

[0025] According to an embodiment of the present invention, an embodiment of a video classification method is provided.

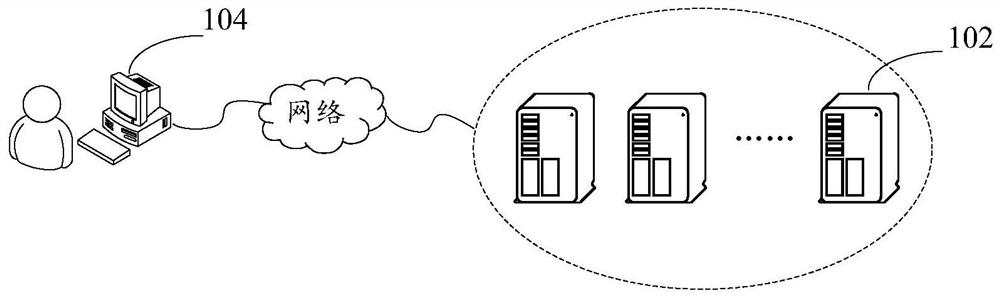

[0026] Optionally, in this embodiment, the above video classification method can be applied to the hardware environment constituted by the server 102 and the terminal 104, as shown in Figure 2. As shown in Figure 2, the server 102 is connected to the terminal 104 through a network. The network includes, but is not limited to, a wide area network, a metropolitan area network, or a local area network. The terminal 104 is not limited to a PC, a mobile phone, a tablet computer, or the like. The video classification method in the embodiment of the present invention may be executed by the server 102, by the terminal 104, or jointly by the server 102 and the terminal 104. Wherein, the video classification method of the embodiment of the present invention executed by the terminal 104 may also be executed b...

Embodiment 2

[0082] According to an embodiment of the present invention, a video classification device for implementing the above video classification method is also provided. Figure 9 is a schematic diagram of an optional video classification device according to an embodiment of the present invention. As shown in Figure 9, the device may include:

[0083] The first obtaining unit 10 is configured to obtain the content category of the video to be classified, wherein the content category is a category obtained by classifying the content of the video itself.

[0084] The conversion unit 20 is configured to convert the content category of the video according to a preset conversion relationship to obtain the social attribute category of the video, wherein the preset conversion relationship is obtained by training on sample data, and the sample data includes the content category of the video and the social attribute category of the user who interacted with the video.
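The relationship between the two units above can be illustrated with a minimal sketch. The excerpt does not say how the preset conversion relationship is trained, so the sketch assumes a simple stand-in: count how often each user social attribute category co-occurs with each content category in the sample data, and map every content category to its most frequent social attribute category. The function names `train_conversion` and `convert` and the category labels are hypothetical and do not come from the patent.

```python
from collections import Counter, defaultdict


def train_conversion(samples):
    """Build a content-category -> social-attribute-category mapping.

    `samples` is an iterable of (content_category, user_social_category)
    pairs, one per recorded interaction between a user and a video.
    Assumption: the mapping is a majority vote per content category;
    the patent excerpt does not specify the actual training procedure.
    """
    counts = defaultdict(Counter)
    for content_category, social_category in samples:
        counts[content_category][social_category] += 1
    # Keep the most frequent social attribute category for each content category.
    return {
        content_category: counter.most_common(1)[0][0]
        for content_category, counter in counts.items()
    }


def convert(content_category, conversion):
    """Return the social attribute category, or None if the category is unseen."""
    return conversion.get(content_category)


# Hypothetical sample data: (video content category, interacting user's category).
samples = [
    ("sports", "student"),
    ("sports", "student"),
    ("sports", "office_worker"),
    ("finance", "office_worker"),
]
conversion = train_conversion(samples)
print(convert("sports", conversion))
print(convert("finance", conversion))
```

In this sketch, obtaining the content category (the role of the first obtaining unit 10) is assumed to have already happened, and `convert` plays the role of the conversion unit 20. A trained classifier or a probability table could take the place of the majority vote without changing the interface.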

...

Embodiment 3

[0095] According to an embodiment of the present invention, a server or terminal for implementing the above video classification method is also provided.

[0096] Figure 10 is a structural block diagram of a terminal according to an embodiment of the present invention. As shown in Figure 10, the terminal may include one or more processors 201 (only one is shown in the figure), a memory 203, and a transmission device 205 (such as the sending device in the above-mentioned embodiment). As shown in Figure 10, the terminal may also include an input and output device 207.

[0097] Wherein, the memory 203 can be used to store software programs and modules, such as the program instructions/modules corresponding to the video classification method and device in the embodiment of the present invention, and the processor 201 runs the software programs and modules stored in the memory 203 to execute various functional applications and dat...
