An Approach to Visual Odometry Based on End-to-End Semi-Supervised Generative Adversarial Networks

A visual odometry method using semi-supervised learning, applied in the field of visual odometry. It addresses the problems of limited usage scenarios, limited accuracy, and the difficulty of obtaining data labeled with geometric information.


Embodiment Construction

[0032] The following embodiments are further described with reference to the accompanying drawings.

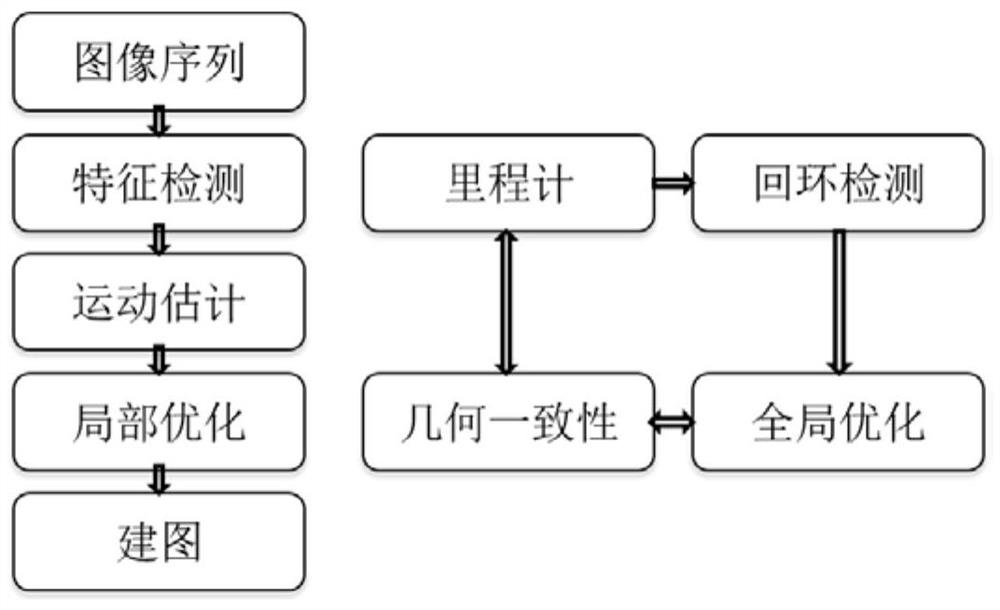

[0033] An embodiment of the present invention comprises the following steps:

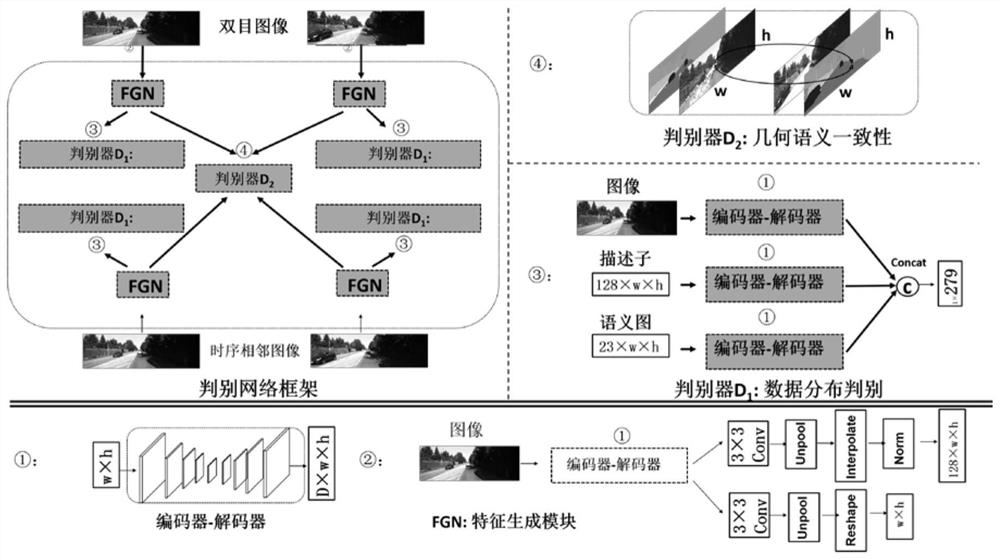

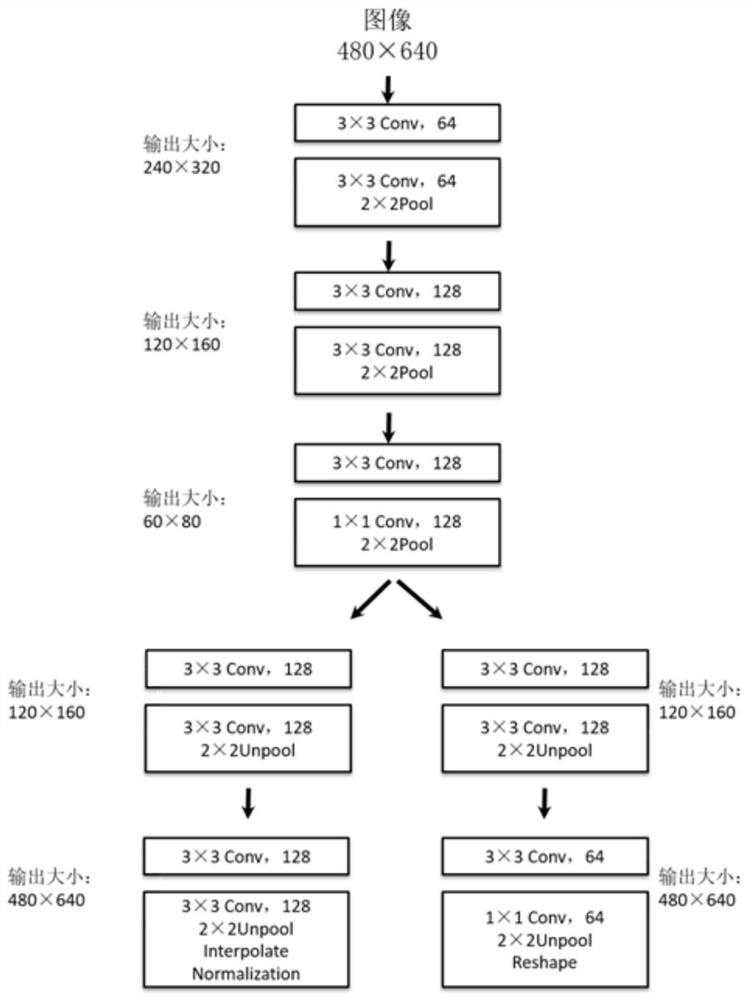

[0034] 1) Construct the feature generation network. The specific method is as follows: in the deep feature point detection and descriptor extraction process, feature points and descriptors are considered separately, while a generative network generates the feature points and deep descriptors simultaneously and can outperform the SIFT algorithm in speed. The generative network serves two functions, feature point detection and deep feature descriptor extraction: it takes an RGB image as input and, through an encoder and decoder, generates a pixel-level feature point probability map and deep feature descriptors. The feature point detector is designed with computational efficiency and real-time performance in mind, so that the network can run on a complex SLAM computing system, especially for resource-constrained computing ...
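The two outputs named in step 1), a pixel-level feature point probability map and a set of deep descriptors, can be illustrated with a small sketch of how they might be consumed downstream. This is not the patent's network or its post-processing: the function name `select_keypoints`, the fixed probability threshold, the L2 normalization of descriptors, and the toy values are all assumptions made for illustration.

```python
import math

def select_keypoints(prob_map, desc_map, threshold=0.5):
    """Pick pixels whose keypoint probability exceeds `threshold` and
    return them with their L2-normalized descriptors.

    prob_map: H x W nested list of probabilities in [0, 1].
    desc_map: H x W x D nested list of descriptor vectors.
    (Hypothetical helper; thresholding is an assumed selection rule.)
    """
    keypoints = []
    for r, row in enumerate(prob_map):
        for c, p in enumerate(row):
            if p <= threshold:
                continue
            vec = desc_map[r][c]
            norm = math.sqrt(sum(v * v for v in vec))
            # Guard against an all-zero descriptor.
            unit = [v / norm for v in vec] if norm > 0 else list(vec)
            keypoints.append((r, c, p, unit))
    # Strongest detections first.
    keypoints.sort(key=lambda k: k[2], reverse=True)
    return keypoints

# Toy 2x2 "image" with 2-dimensional descriptors (made-up values).
prob_map = [[0.1, 0.9],
            [0.7, 0.2]]
desc_map = [[[1.0, 0.0], [3.0, 4.0]],
            [[0.0, 2.0], [1.0, 1.0]]]
kps = select_keypoints(prob_map, desc_map, threshold=0.5)
```

Each entry of `kps` is a `(row, column, probability, descriptor)` tuple. A real system would likely add non-maximum suppression and operate on tensors produced by the encoder and decoder rather than nested lists.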

