A Video Generation Method Combining Variational Autoencoders and Generative Adversarial Networks

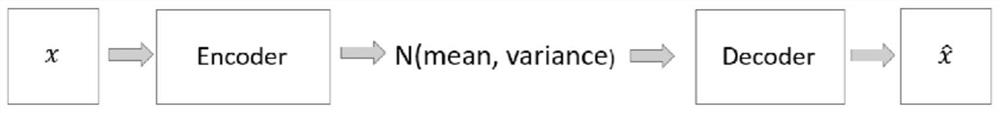

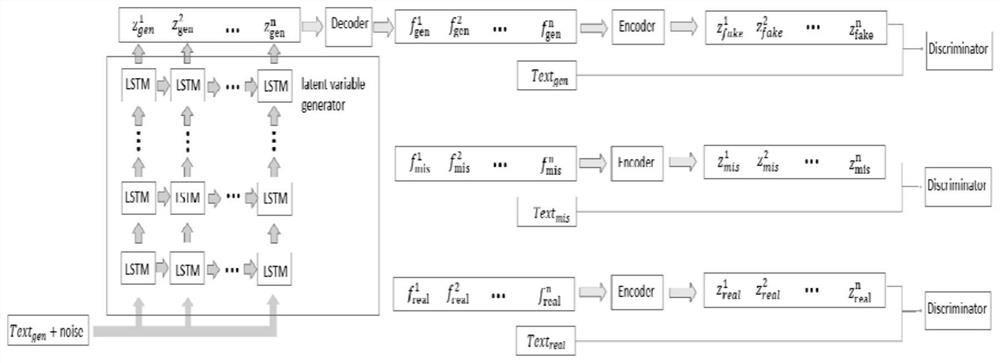

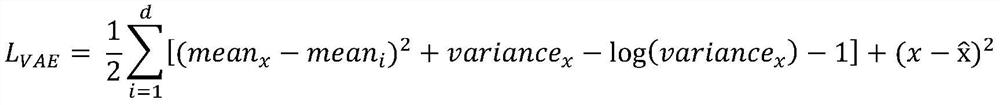

This technology combines a variational autoencoder with a generative adversarial network for video generation. It addresses degraded video quality, a lack of temporal continuity, and image deformation in generated videos, and it improves inter-frame continuity and stability while making the model easier to train.


Embodiment

[0054] Step 1. Take a handwritten digit image from the MNIST dataset. If the digit is one of "0, 1, 4, 6, 9", form a 16-frame, 48×48-pixel video of it in which the digit starts at an arbitrary position in the first frame and moves up and down over the 16 frames. If the digit is one of "2, 3, 5, 7, 8", form a 16-frame, 48×48-pixel video in which the digit starts at an arbitrary position in the first frame and moves left and right over the 16 frames. Write a text description for each moving-digit video, such as "The digit 0 is moving up and down" or "The digit 2 is moving left and right". This yields 10 categories of moving handwritten-digit videos, each with a corresponding text description;
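The data construction in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the step size of 2 pixels per frame, the reflection at the canvas border, and the helper name `make_moving_digit_video` are all assumptions. Only the 16 frames, the 48×48 canvas, the digit-to-direction grouping, and the caption wording come from the text. The input is any 2-D digit array (an MNIST image is 28×28).

```python
import numpy as np

# Digit groups and motion axes as stated in Step 1.
VERTICAL_DIGITS = {0, 1, 4, 6, 9}    # move up and down
HORIZONTAL_DIGITS = {2, 3, 5, 7, 8}  # move left and right


def make_moving_digit_video(digit_img, label, n_frames=16, canvas=48, step=2, rng=None):
    """Hypothetical helper: place a digit on a blank canvas and move it along one axis.

    Returns (video, caption), where video has shape (n_frames, canvas, canvas).
    The step size and the bounce-at-border rule are assumptions, not from the source.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = digit_img.shape
    max_y, max_x = canvas - h, canvas - w
    # Arbitrary starting position in the first frame.
    y = int(rng.integers(0, max_y + 1))
    x = int(rng.integers(0, max_x + 1))
    vertical = label in VERTICAL_DIGITS
    direction = 1
    video = np.zeros((n_frames, canvas, canvas), dtype=digit_img.dtype)
    for t in range(n_frames):
        video[t, y:y + h, x:x + w] = digit_img
        # Advance along the chosen axis, reversing direction at the border.
        pos, limit = (y, max_y) if vertical else (x, max_x)
        nxt = pos + direction * step
        if nxt < 0 or nxt > limit:
            direction = -direction
            nxt = pos + direction * step
        nxt = min(max(nxt, 0), limit)  # keep the digit fully inside the canvas
        if vertical:
            y = nxt
        else:
            x = nxt
    motion = "up and down" if vertical else "left and right"
    caption = f"The digit {label} is moving {motion}"
    return video, caption
```

For example, `make_moving_digit_video(np.ones((28, 28)), 0)` returns a `(16, 48, 48)` array and the caption "The digit 0 is moving up and down". Keeping the motion on a single axis per digit group is what lets the caption fully describe the video.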

[0055] Step 2. Preprocess the video dataset and the text descriptions obtained in Step 1 to obtain the "video-text" dataset used in the trai...
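The preprocessing details of Step 2 are cut off in this excerpt, so the following is only a hypothetical sketch of one common way to build a paired "video-text" dataset, not the patent's procedure. It assumes uint8 frames scaled to [-1, 1] (a range often paired with a tanh generator output) and captions encoded as zero-padded word-index sequences. The function names `build_vocab` and `preprocess` are illustrative.

```python
import numpy as np


def build_vocab(captions):
    """Map each distinct lower-cased word to an integer id; 0 is reserved for padding."""
    words = sorted({w for c in captions for w in c.lower().split()})
    return {w: i + 1 for i, w in enumerate(words)}


def preprocess(videos, captions):
    """Hypothetical Step 2 sketch: scale frames to [-1, 1] and encode captions as ids.

    videos: uint8 array of shape (N, T, H, W) with values in 0..255 (assumed layout)
    captions: list of N caption strings
    Returns (scaled_videos, token_ids, vocab).
    """
    vocab = build_vocab(captions)
    x = videos.astype(np.float32) / 127.5 - 1.0  # 0 -> -1.0, 255 -> 1.0
    max_len = max(len(c.split()) for c in captions)
    tokens = np.zeros((len(captions), max_len), dtype=np.int64)  # zero-padded
    for i, c in enumerate(captions):
        ids = [vocab[w] for w in c.lower().split()]
        tokens[i, :len(ids)] = ids
    return x, tokens, vocab
```

With captions such as "The digit 0 is moving up and down", each video keeps its caption at the same index, which is the pairing a text-conditioned generator would consume.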
