188 results for "Stable training" patented technology

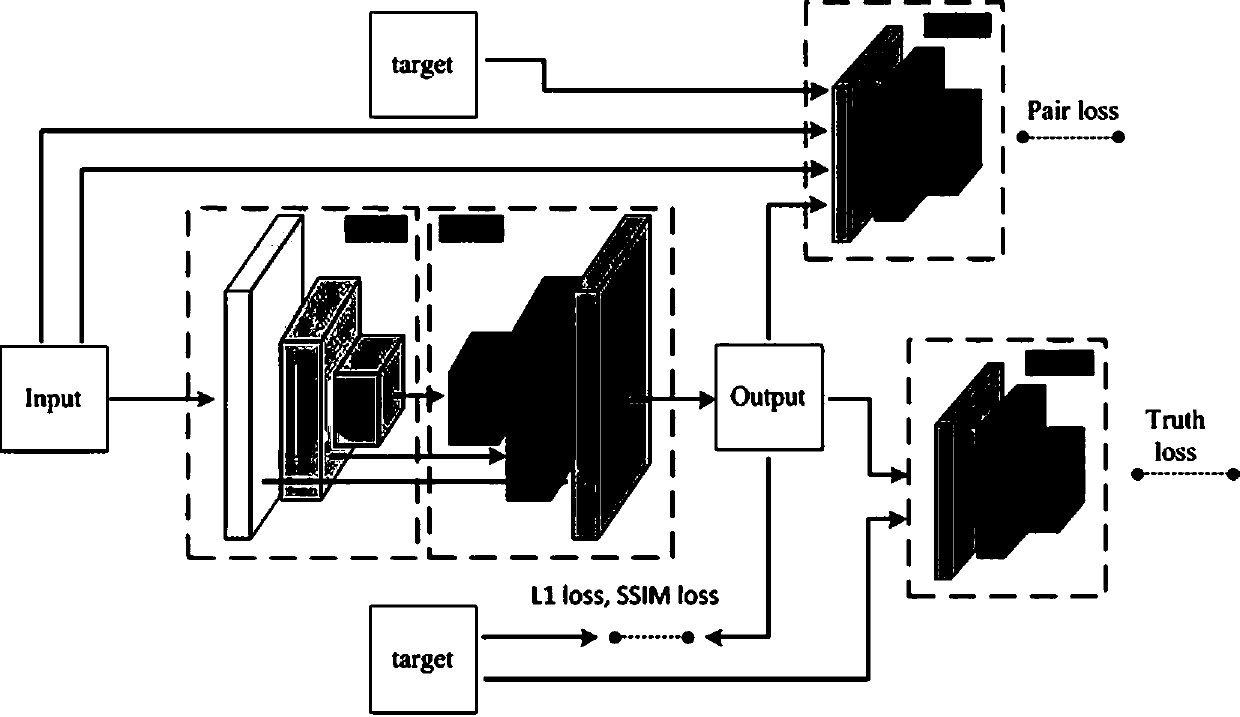

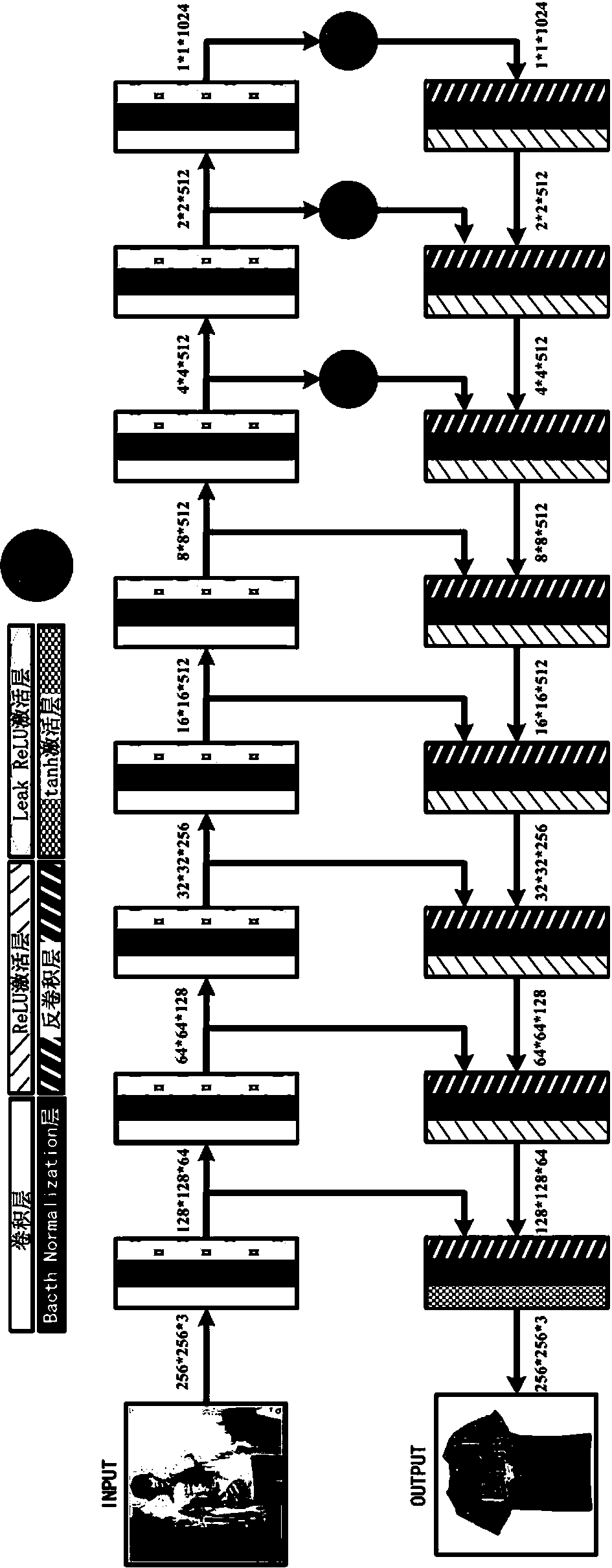

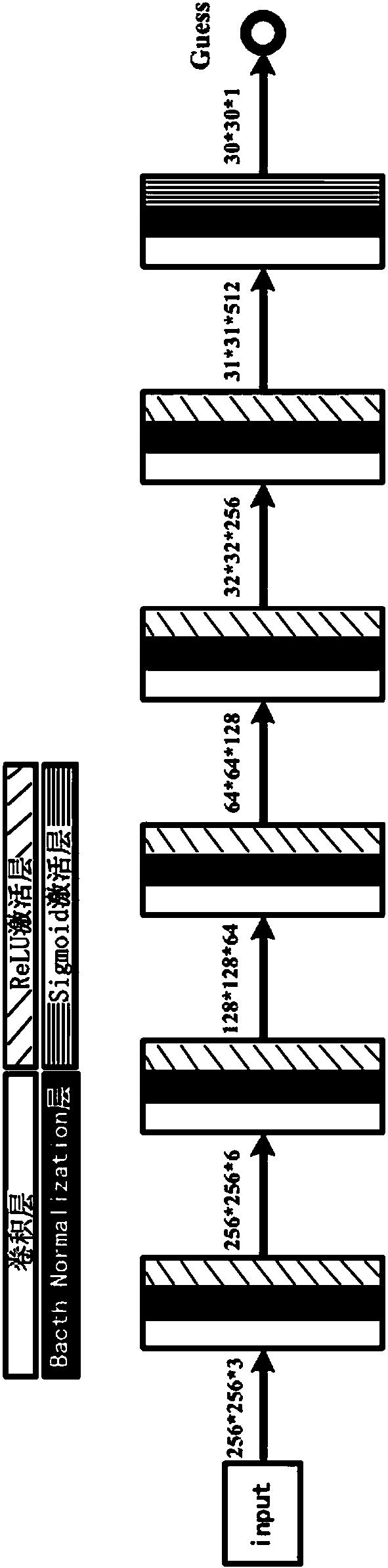

Image domain conversion network based on generative adversarial networks (GAN) and conversion method

Active | CN108171320A | Improve conversion performance | Improve reliability | Character and pattern recognition | Neural architectures | Generative adversarial network | Network model

The invention discloses an image domain conversion network based on generative adversarial networks (GAN) and a conversion method. The image domain conversion network includes a U-shaped generative network, an authenticity authentication network and a pairing authentication network. The image domain conversion process mainly includes the following steps: 1) training the U-shaped generative network and establishing its network model; and 2) normalizing a to-be-converted image and then inputting it into the network model established in step 1) to complete the image domain conversion. The network can convert the image domain of a local region in an image, with high local-domain conversion quality, strong discriminative ability, stable conversion, and greatly improved authenticity of the generated image.

Owner:XIAN TECHNOLOGICAL UNIV

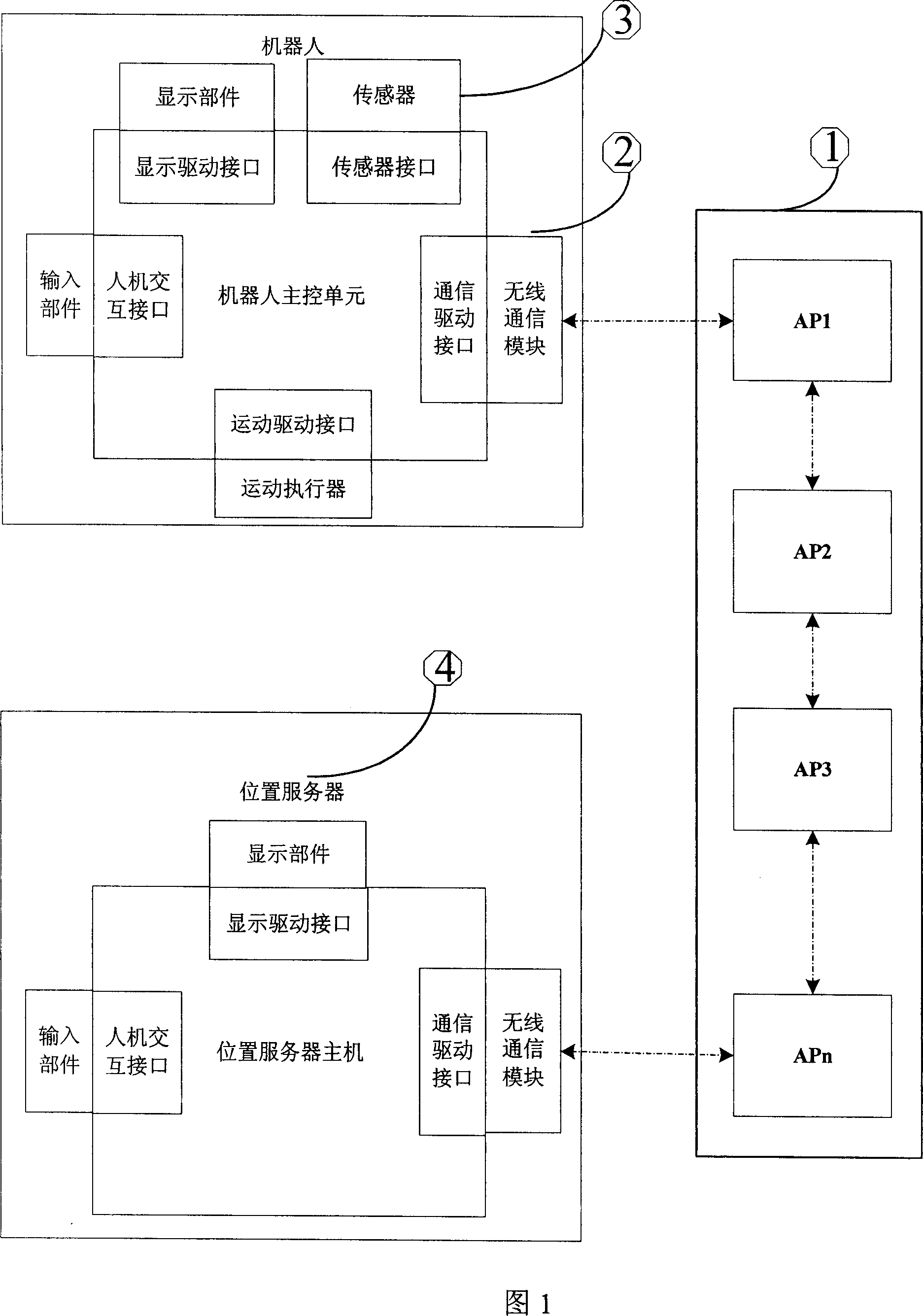

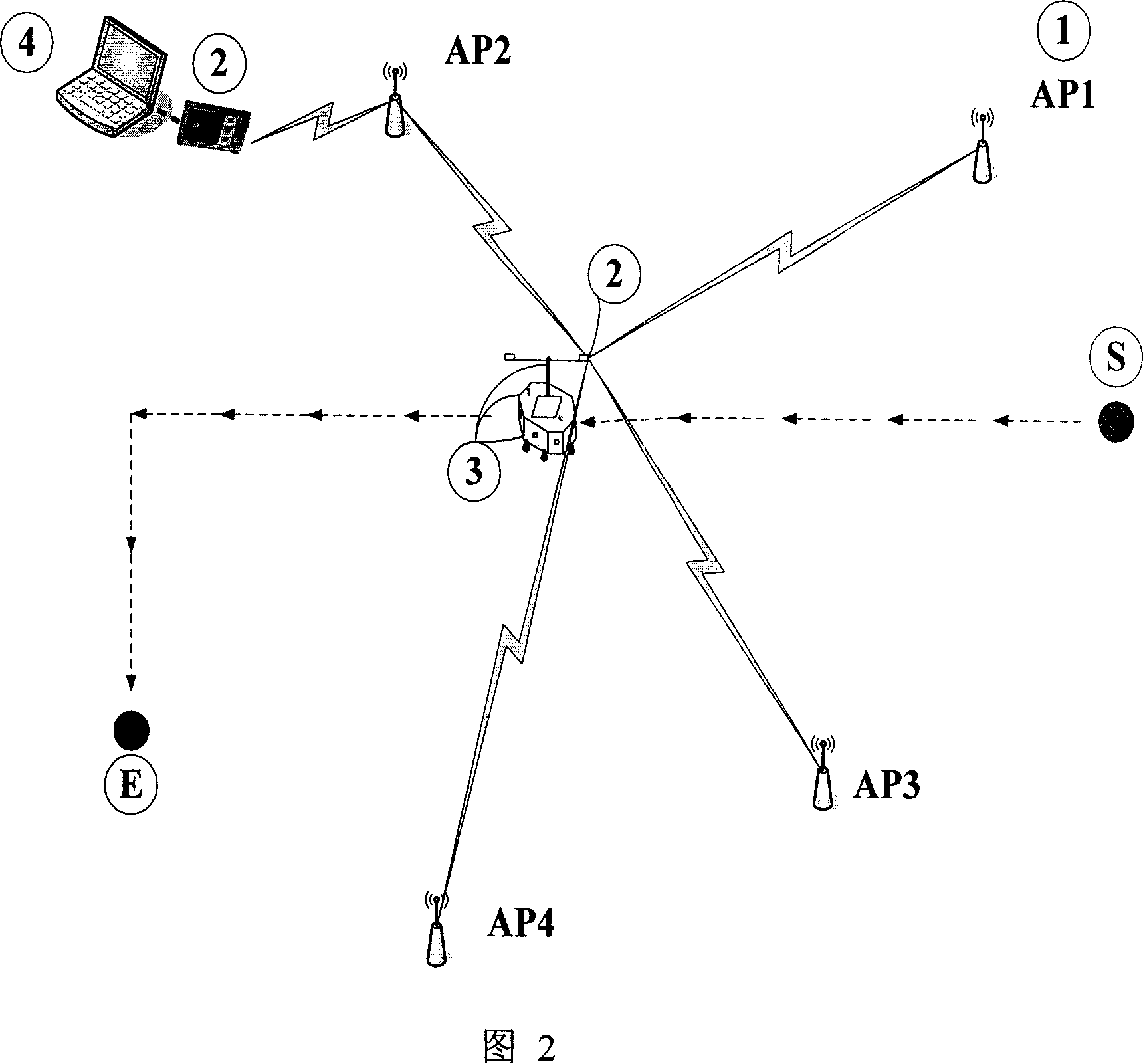

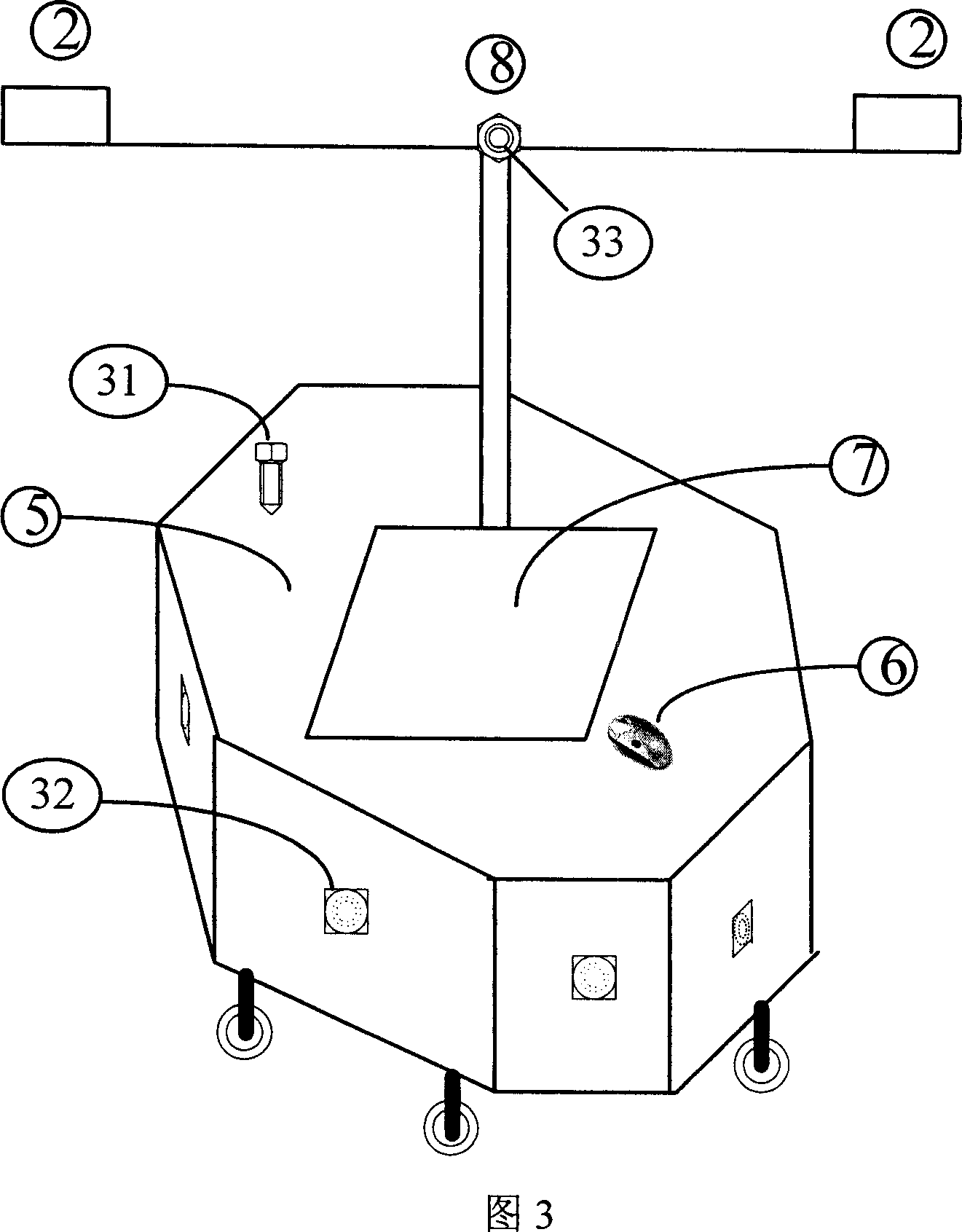

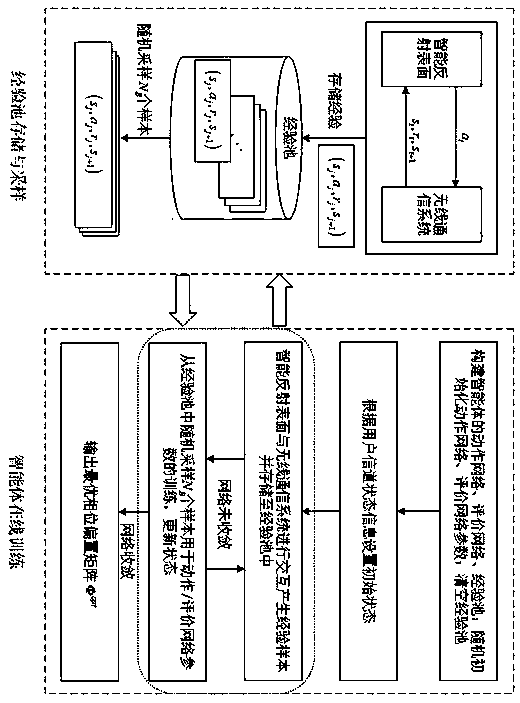

Robot navigation system and navigation method

Inactive | CN101126808A | Achieving Radio Signal Strength | Avoid re-entry | Navigation instruments | Radio/inductive link selection arrangements | Relevant information | Environment effect

The utility model discloses a robot navigation system and a navigation method. The robot navigation system comprises a navigation network formed by a plurality of wireless access points, a wireless communication module used for transferring data and collecting the signal intensity sequence of the wireless access points, a sensor used for detecting whether the robot has met a barrier, and a position server used for storing the reference intensity sequences and running the more complex positioning algorithm. The position server is connected with the wireless communication module and interacts with the navigation network. In the navigation method, the robot repeatedly chooses its next target position, until it reaches the destination, by comparing the intensity sequence collected in real time with the stored reference intensity sequences of the position points. When the robot meets a barrier, it records and marks the intensity sequence of that position so that it does not enter the position again, thereby achieving intelligent learning. The robot can also upload the relevant information to the position server and obtain assisted navigation and positioning from the server's database and positioning algorithm. The utility model is not easily affected by the environment and has a low maintenance cost.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
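
The matching step in the abstract above (comparing a live intensity sequence with stored reference sequences, and skipping positions marked as blocked) can be sketched as follows. This is only an illustration: the Euclidean distance, the function names and the sample values are assumptions, not details taken from the patent.

```python
import math


def nearest_reference(observed, references):
    """Return the name of the stored position whose reference
    signal-strength sequence is closest to the observed one.
    Euclidean distance is an assumed similarity measure."""
    def distance(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    return min(references, key=lambda name: distance(observed, references[name]))


def next_target(observed, references, blocked):
    """Pick the best-matching position, ignoring positions that were
    marked as blocked after the robot met a barrier there."""
    candidates = {n: seq for n, seq in references.items() if n not in blocked}
    return nearest_reference(observed, candidates)


# Hypothetical reference fingerprints (dBm per access point).
refs = {"A": [-40, -70], "B": [-60, -50]}
print(next_target([-42, -68], refs, blocked=set()))   # closest match
print(next_target([-42, -68], refs, blocked={"A"}))   # "A" is skipped
```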

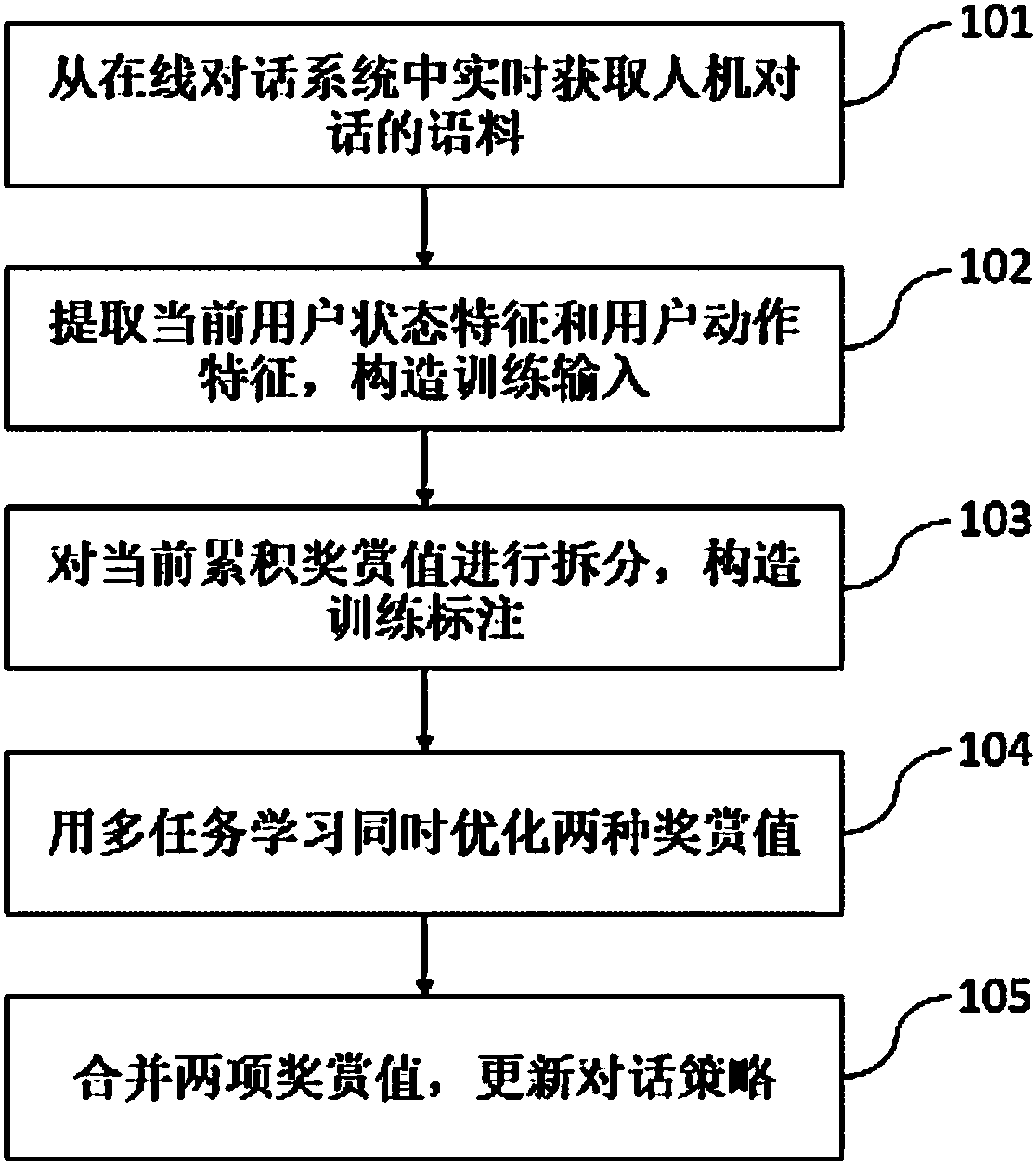

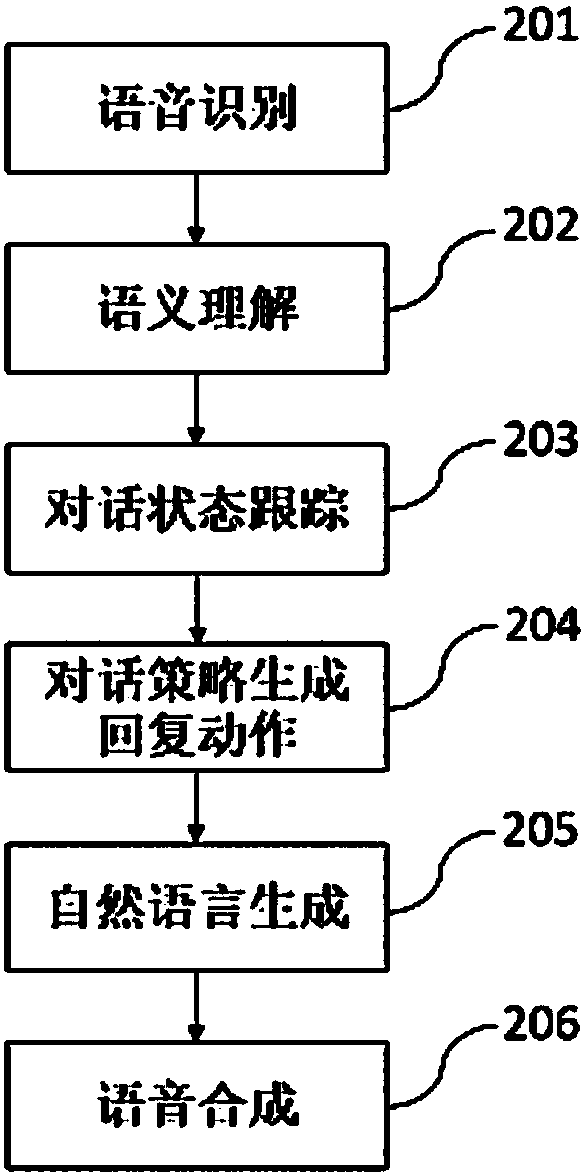

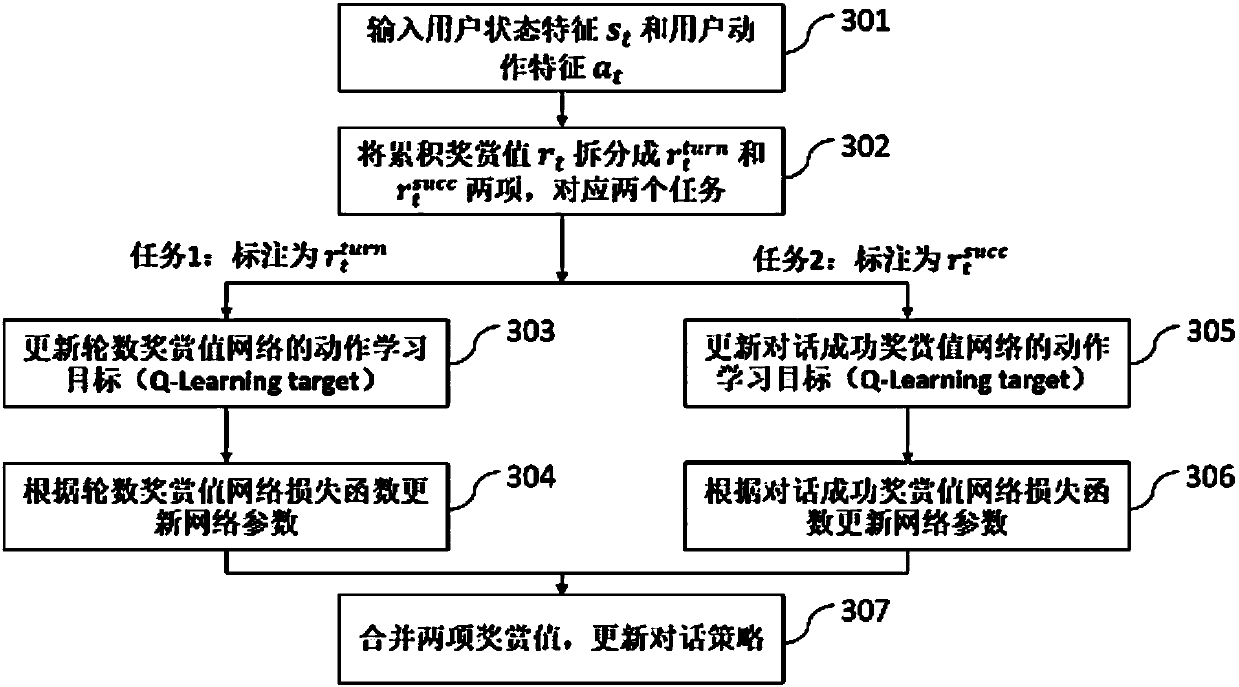

Dialog strategy online realization method based on multi-task learning

Active | CN107357838A | Avoid Manual Design Rules | Save human effort | Speech recognition | Special data processing applications | Man machine | Network structure

The invention discloses a dialog strategy online realization method based on multi-task learning. According to the method, corpus information of a man-machine dialog is acquired in real time, and the current user state features and user action features are extracted to construct the training input. The single accumulated reward value used in dialog strategy learning is then split into a dialog round number reward value and a dialog success reward value, which serve as training annotations, and two different value models are optimized simultaneously through multi-task learning during online training. Finally, the two reward values are merged and the dialog strategy is updated. The method adopts a reinforcement learning framework and optimizes the dialog strategy through online learning, so rules and strategies do not need to be designed manually for each domain, and the method can adapt to domain information structures of different complexity and to data of different scales. Because the original task of optimizing a single accumulated reward value is split and the parts are optimized simultaneously with multi-task learning, a better network structure is learned and the variance in the training process is lowered.

Owner:AISPEECH CO LTD
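
The reward split described above can be illustrated with a small sketch. The per-turn penalty, the success bonus, the discount factor and the additive merge are all assumed values and choices for illustration; the abstract does not state them.

```python
def split_rewards(num_turns, success, turn_penalty=-1.0, success_bonus=20.0):
    """Split one dialog's reward into a round-number part and a success part.
    turn_penalty and success_bonus are hypothetical values."""
    turn_rewards = [turn_penalty] * num_turns
    success_rewards = [0.0] * num_turns
    if success and num_turns > 0:
        success_rewards[-1] = success_bonus  # paid once, at the final turn
    return turn_rewards, success_rewards


def discounted_returns(rewards, gamma=0.99):
    """Discounted return from each turn onward: a training target for one value model."""
    returns, running = [], 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]


def merged_value(turn_value, success_value):
    """Merge the two value estimates; a plain sum is assumed here."""
    return turn_value + success_value


turn_r, succ_r = split_rewards(3, success=True)
turn_targets = discounted_returns(turn_r)
succ_targets = discounted_returns(succ_r)
print([merged_value(t, s) for t, s in zip(turn_targets, succ_targets)])
```

Because discounted returns are linear in the rewards, summing the two targets recovers the return of the original single reward, which is why an additive merge is a natural (though assumed) choice.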

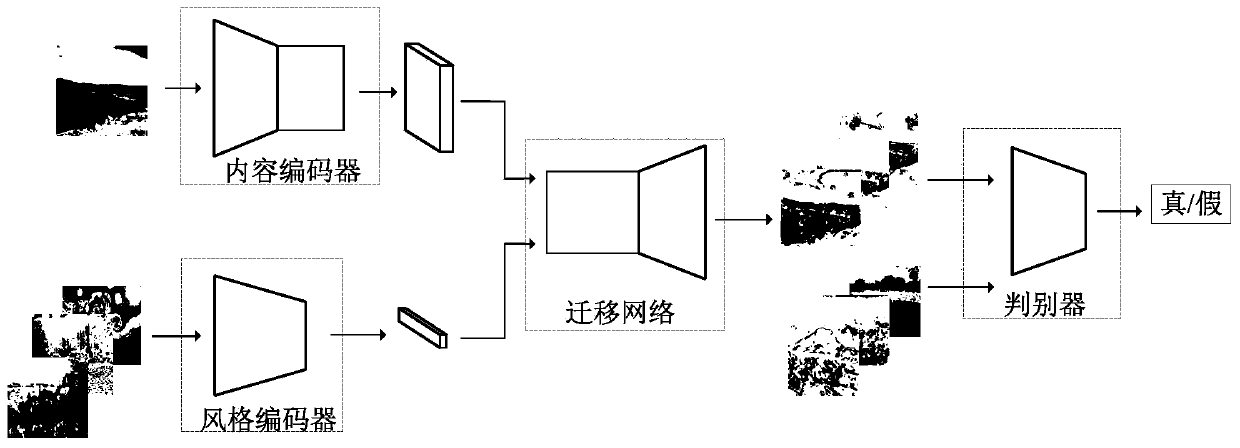

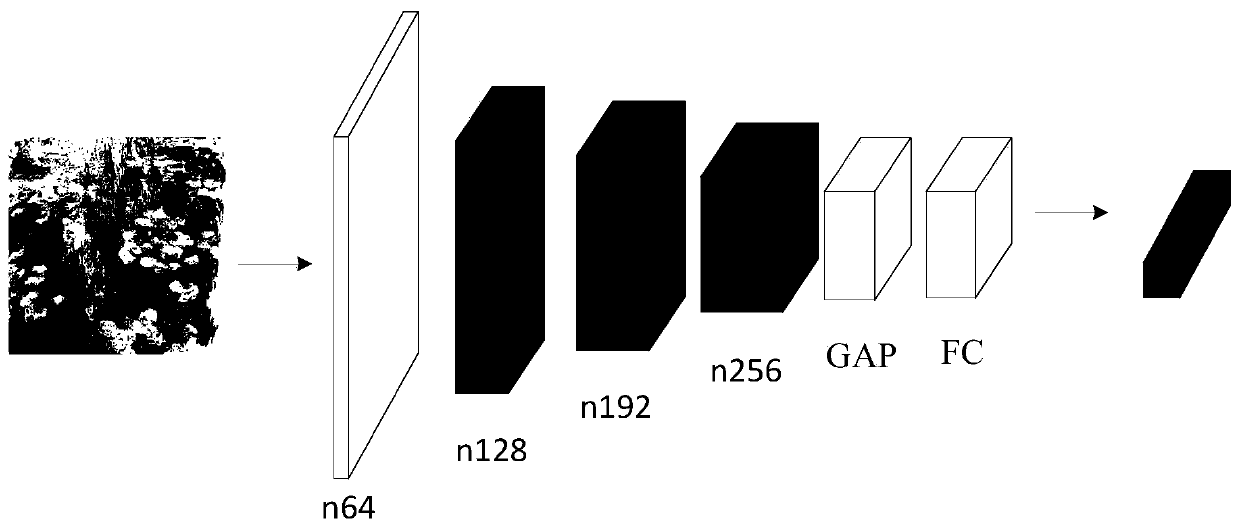

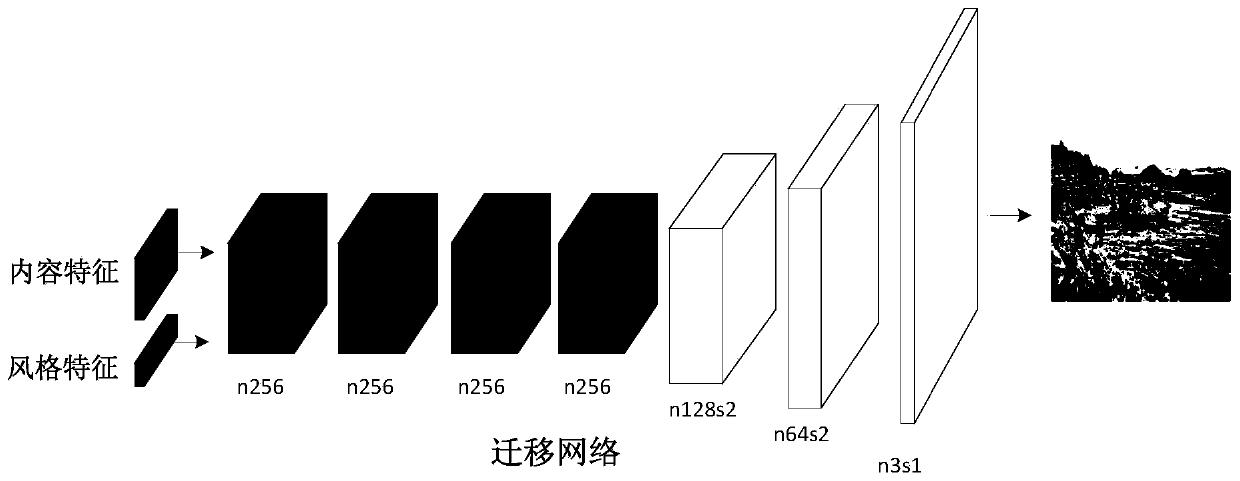

Multi-domain image style migration method based on generative adversarial network

Active | CN110310221A | Stable training | Geometric image transformation | Neural architectures | Generative adversarial network | Self adaptive

The invention provides a multi-domain image style migration method based on a generative adversarial network. It belongs to the field of computer vision and converts an image into multiple different artistic styles. The method designs an expert style network that, through a group of bidirectional reconstruction losses, extracts style feature codes containing the unique information of each domain from input images of different target domains. The method also designs a migration network that recombines the extracted style feature codes with the cross-domain shared semantic content extracted by a content encoder and, using adaptive instance normalization, generates a new image, so that style migration from a source domain to a plurality of target domains is realized. Experiments show that the model can effectively combine the content of any photo with the styles of a plurality of artworks to generate a new image.

Owner:DALIAN UNIV OF TECH
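
As a rough illustration of the adaptive instance normalization step mentioned above, the sketch below re-scales one feature channel to a target mean and standard deviation. It follows the commonly used AdaIN formula; how the patent derives the style statistics from its style feature codes is not stated, so `style_mean` and `style_std` are assumed inputs.

```python
import math


def adain(content, style_mean, style_std, eps=1e-5):
    """Normalize one channel of content features to zero mean and unit
    variance, then apply the style's scale and shift."""
    n = len(content)
    mean = sum(content) / n
    var = sum((v - mean) ** 2 for v in content) / n
    std = math.sqrt(var + eps)
    return [style_std * (v - mean) / std + style_mean for v in content]


# Hypothetical channel values and style statistics.
stylized = adain([1.0, 2.0, 3.0, 4.0], style_mean=10.0, style_std=2.0)
print(stylized)
```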

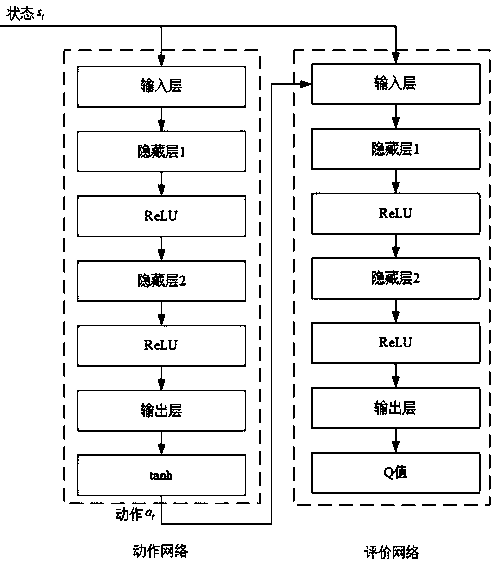

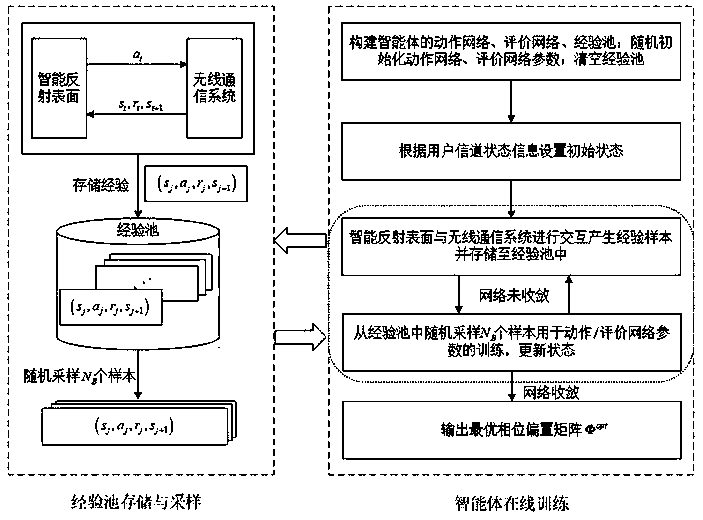

Intelligent reflection surface phase optimization method based on deep reinforcement learning

Active | CN111181618A | Reduce complexity | Stable training | Spatial transmit diversity | Neural architectures | Channel state information | Communications system

The invention discloses an intelligent reflection surface phase optimization method based on deep reinforcement learning. The method comprises the following steps: initializing an action network, an evaluation network, an intelligent reflection surface phase offset matrix and an experience pool in the intelligent reflection surface (the agent); acquiring the initial state of the agent from the user channel state information; filling the experience pool through interaction between the intelligent reflection surface and the wireless communication system; randomly sampling from the experience pool to train the action network and the evaluation network so as to maximize the evaluation value output by the evaluation network, and obtaining the network model parameters after convergence; and outputting the optimal phase offset matrix coefficients of the intelligent reflection surface, which maximize the user's receive signal-to-noise ratio under the given channel state information. The method can effectively reduce the time required to optimize the phase offset matrix and the storage space needed for training samples, and it offers better robustness.

Owner:SOUTHEAST UNIV

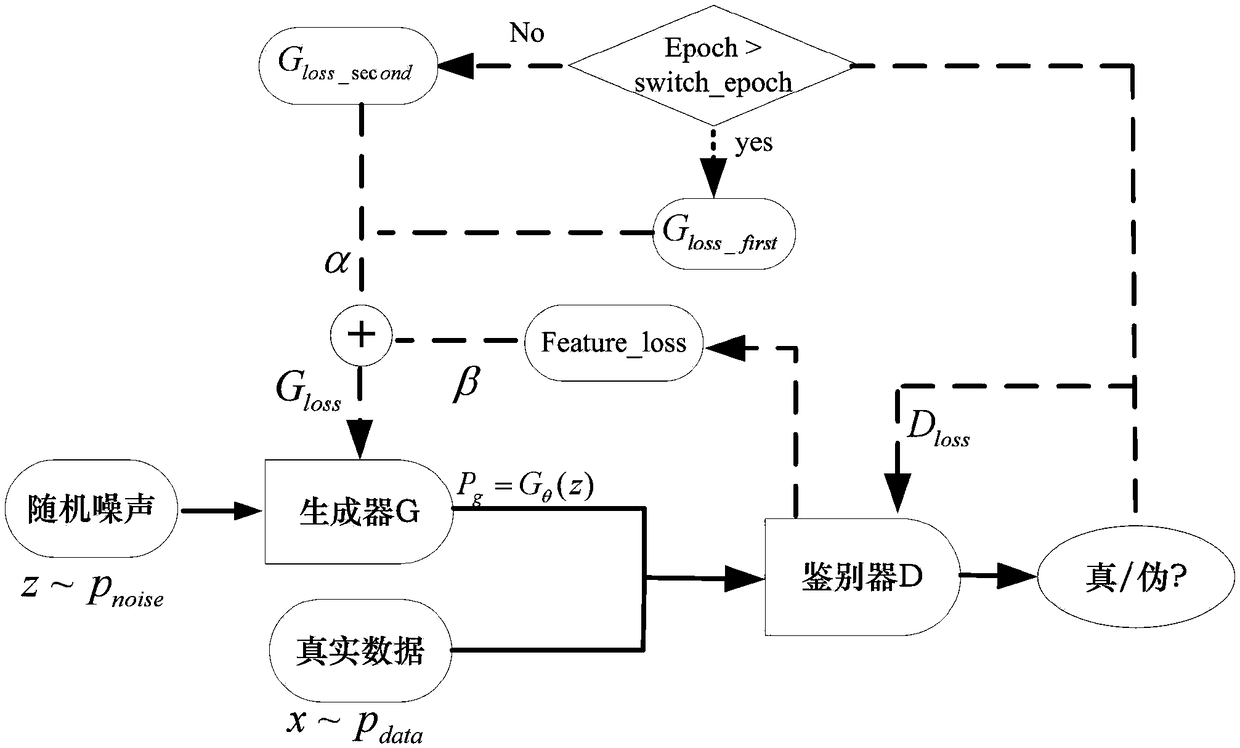

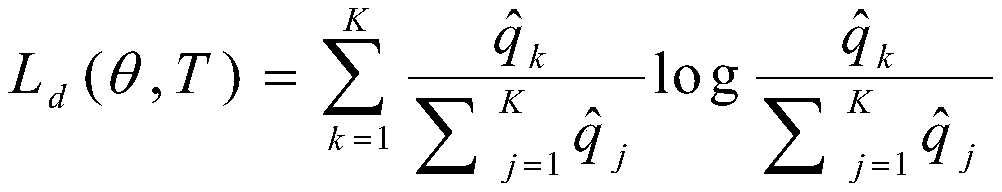

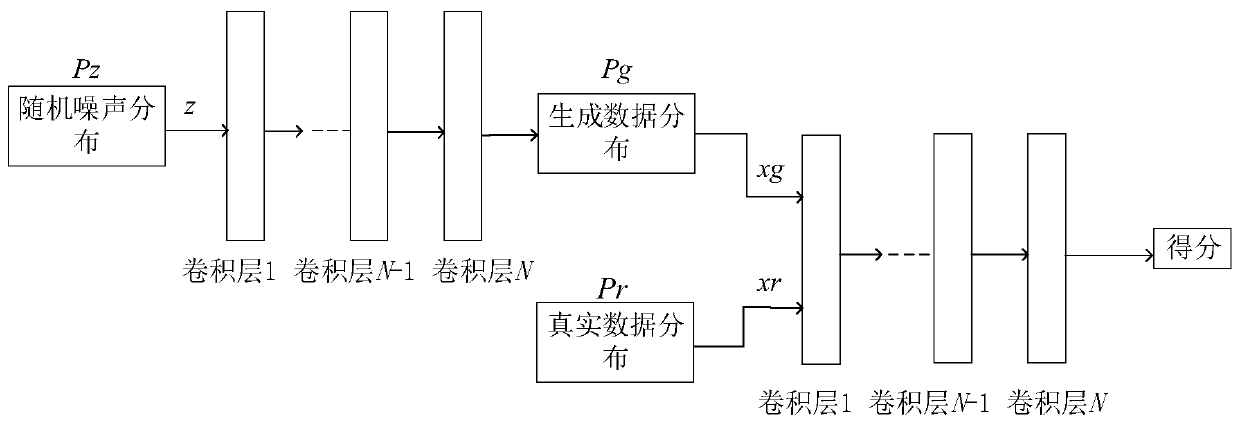

Segmentation loss-based generative adversarial network method

Active | CN108665058A | Improve mode collapse phenomenon | Stable training | Character and pattern recognition | Neural architectures | Discriminator | Algorithm

The invention discloses a segmentation loss-based generative adversarial network method. The method comprises the following steps: 1, parameter initialization: setting the batch size m to 100 and the hyper-parameter k to 1, initializing the parameters with the Xavier method, determining the maximum iteration count and the loss-switching iteration parameter T, and setting the iteration counter epoch to 0; 2, training the discriminator parameters: setting i to 1, where i is a loop variable; and 3, training the generator parameters: setting epoch = epoch + 1 and checking whether epoch is greater than the maximum iteration count; if it is smaller, steps 2 and 3 are repeated, and if it is greater, the training process ends. With this method the generator can adopt loss functions of different forms in different training stages, which to a certain extent makes up for the deficiency of GAN theory under a single loss form and makes network training more stable. By introducing a feature-level loss between real samples and generated samples, the features extracted by the discriminator become more robust.

Owner:XUZHOU UNIV OF TECH
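
The control flow of steps 1 to 3 above can be sketched as a loop that runs k discriminator updates per iteration and switches the generator's loss form once the iteration counter reaches T. The abstract does not say which loss forms are used before and after the switch, so the phase labels "early" and "late" and the callback names are placeholders.

```python
def train(max_iters, switch_iter, k=1, d_step=None, g_step=None):
    """Skeleton of the training schedule.

    d_step() stands for one discriminator update and g_step(phase) for one
    generator update using the loss form of that phase; both are
    hypothetical callbacks supplied by the caller."""
    schedule = []
    epoch = 0
    while epoch < max_iters:
        for _ in range(k):               # step 2: k discriminator updates
            if d_step:
                d_step()
        phase = "early" if epoch < switch_iter else "late"
        if g_step:                       # step 3: one generator update
            g_step(phase)
        schedule.append(phase)
        epoch += 1
    return schedule


print(train(max_iters=5, switch_iter=3))
```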

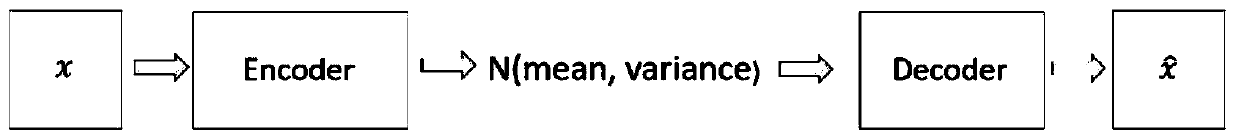

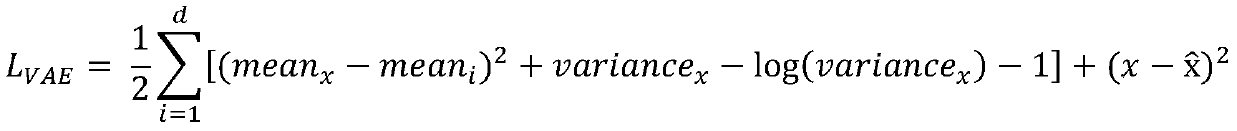

Video generation method combining variational auto-encoder and generative adversarial network

Active | CN110572696A | Overcoming the problem of poor continuity between frames | Improved continuity between frames | Selective content distribution | Discriminator | Pattern recognition

The invention discloses a video generation method combining a variational auto-encoder and a generative adversarial network, belonging to the technical field of video generation. In the method, the generator of the generative adversarial network does not generate a video directly but generates a series of associated hidden variables, which pass through the trained decoder of the variational auto-encoder to produce a series of related images. Likewise, the discriminator of the generative adversarial network does not discriminate the video directly; instead, the video passes through the encoder of the variational auto-encoder to obtain a series of low-dimensional hidden variables, and the discriminator discriminates these hidden variables. The method can generate a video from an input description text. Its advantages are that it addresses the problem of poor inter-frame continuity in video generation, and that training is split into two parts, first training the variational auto-encoder and then training the generative adversarial network on top of the trained auto-encoder, which makes training easier and more stable.

Owner:ZHEJIANG UNIV

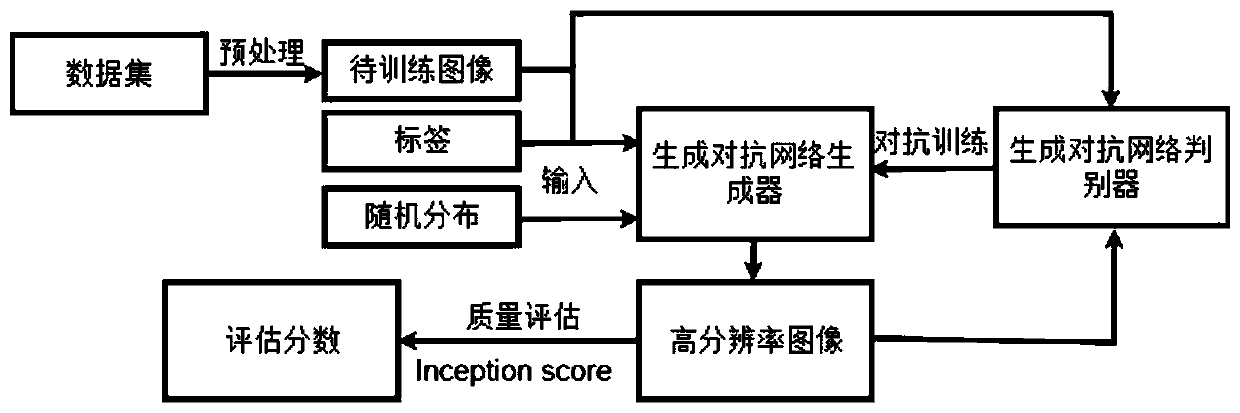

High-resolution image generation method based on generative adversarial network

Active | CN111563841A | Improve discrimination | Easy to distinguish | Internal combustion piston engines | Geometric image transformation | Pattern recognition | Data set

The invention discloses a high-resolution image generation method based on a generative adversarial network. The method comprises the following steps: first, preprocessing the images of the data set to be learned to obtain a training set; constructing a generative adversarial network comprising a generative network and a discrimination network; pre-training the generative adversarial network and using the pre-trained model parameters as its initialization parameters; then separately inputting the training set and the images generated by the generative network into the discrimination network, feeding the output of the discrimination network back to the generative network, carrying out adversarial training, optimizing the parameters of both networks, and ending the training when the loss function converges to obtain a trained generative adversarial network; and finally, inputting a random data distribution into the trained generative network to generate high-resolution images. According to the invention, the generated images are clearer, the training process is stable, and the network converges quickly.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

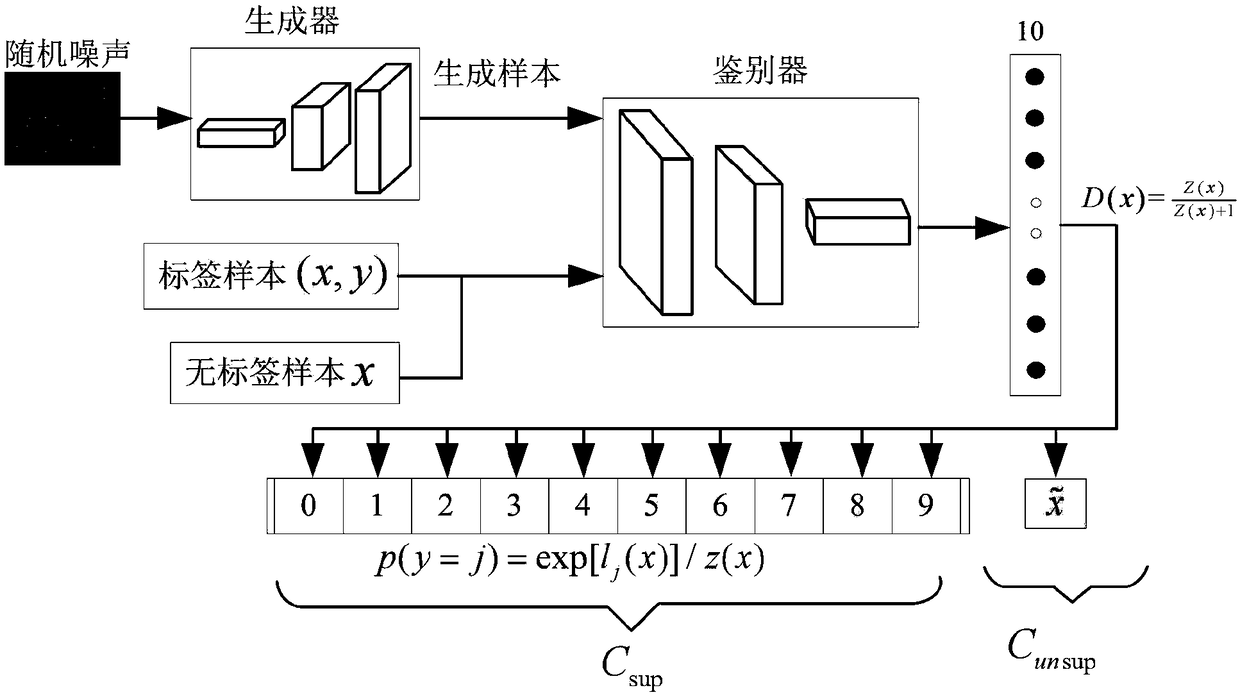

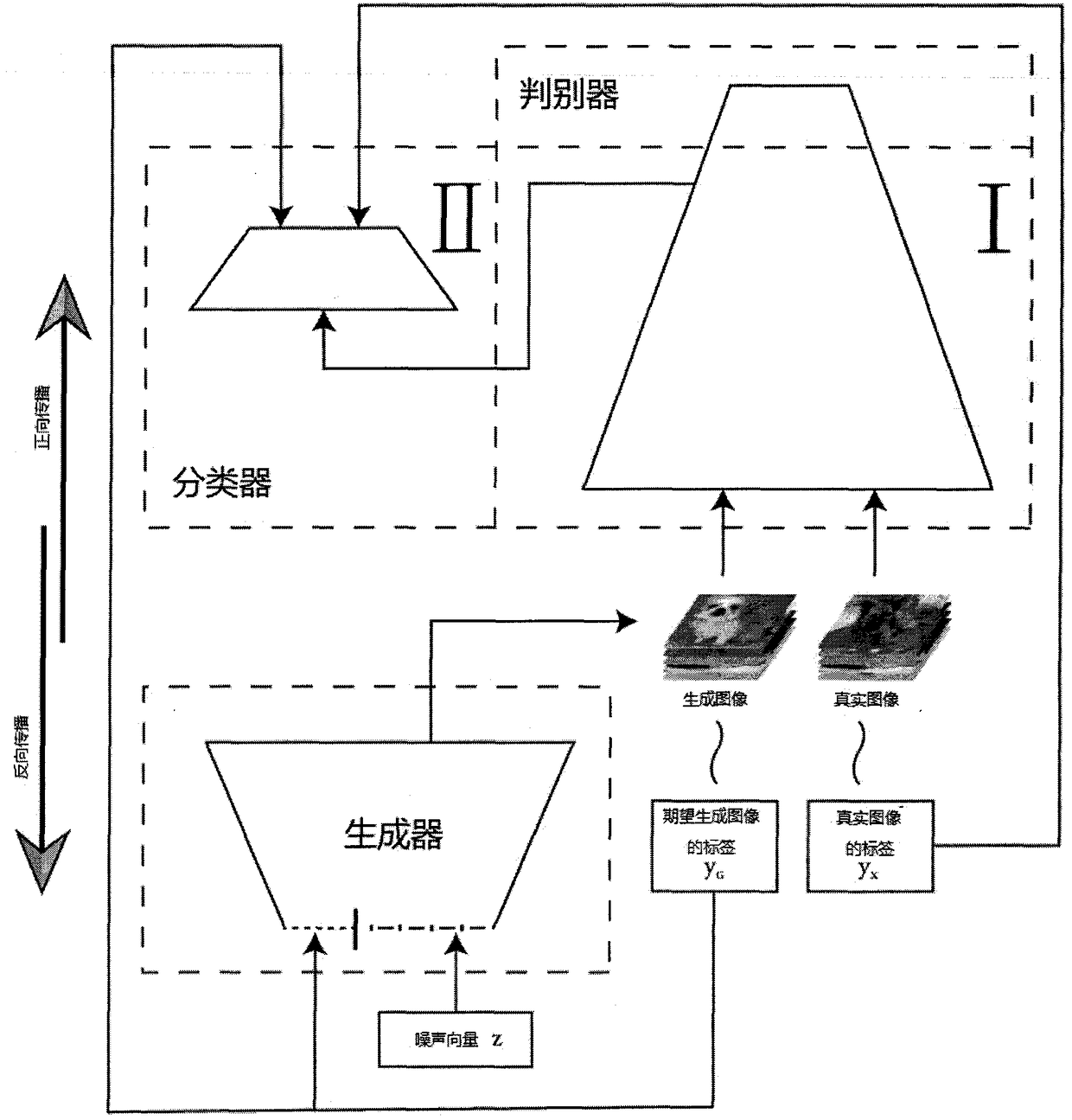

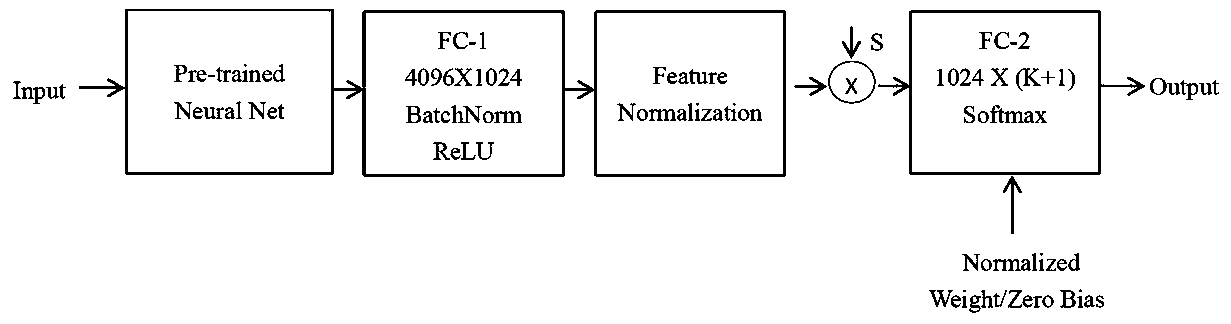

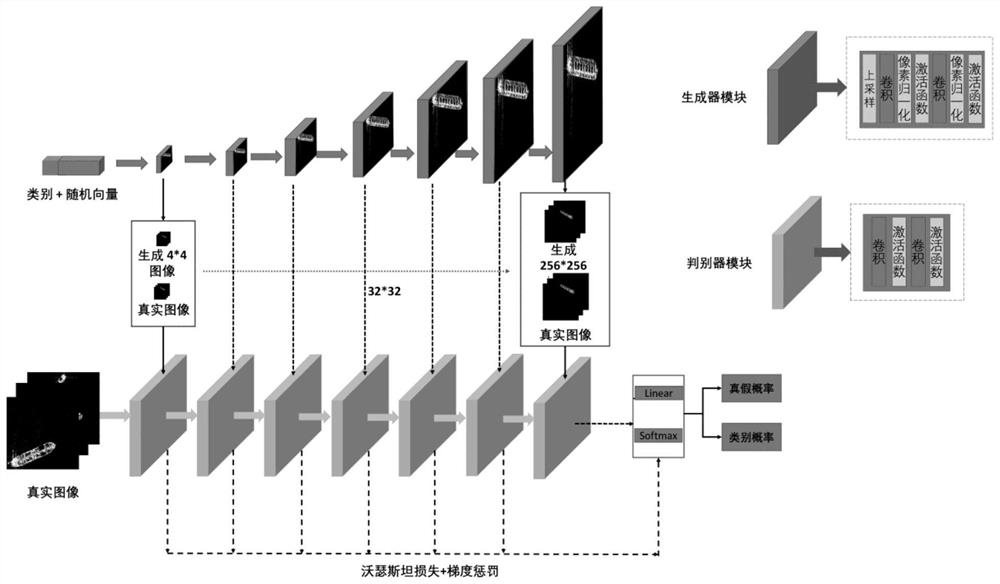

Mode collapse resistant robust image generation method based on novel conditional generative adversarial network

Inactive | CN108388925A | Improve performance | Improve robustness | Character and pattern recognition | Image watermarking | Generative adversarial network | Phases of clinical research

The invention discloses a mode collapse resistant robust image generation method based on a novel conditional generative adversarial network. Compared with methods in the related art, the disclosed technology has strong adaptability and good robustness: only the category of the required image needs to be specified in the use phase, no manual intervention is required during the process, the training phase is short, the training process is stable, and the balance between the diversity and the authenticity of the generated images is well maintained. The main innovation of the method is that it solves the mode collapse and training failure problems that arise when training other conditional generative methods. Parameters are optimized separately for a classifier and a discriminator, which avoids the unstable training and mode collapse seen in methods of the same category. In addition, the invention introduces a weight-sharing construction strategy, so that training speed is greatly improved and storage overhead is reduced without harming the original performance. The method is suited to low-cost, large-scale generation of diverse image data with specified labels.

Owner:TIANJIN POLYTECHNIC UNIV

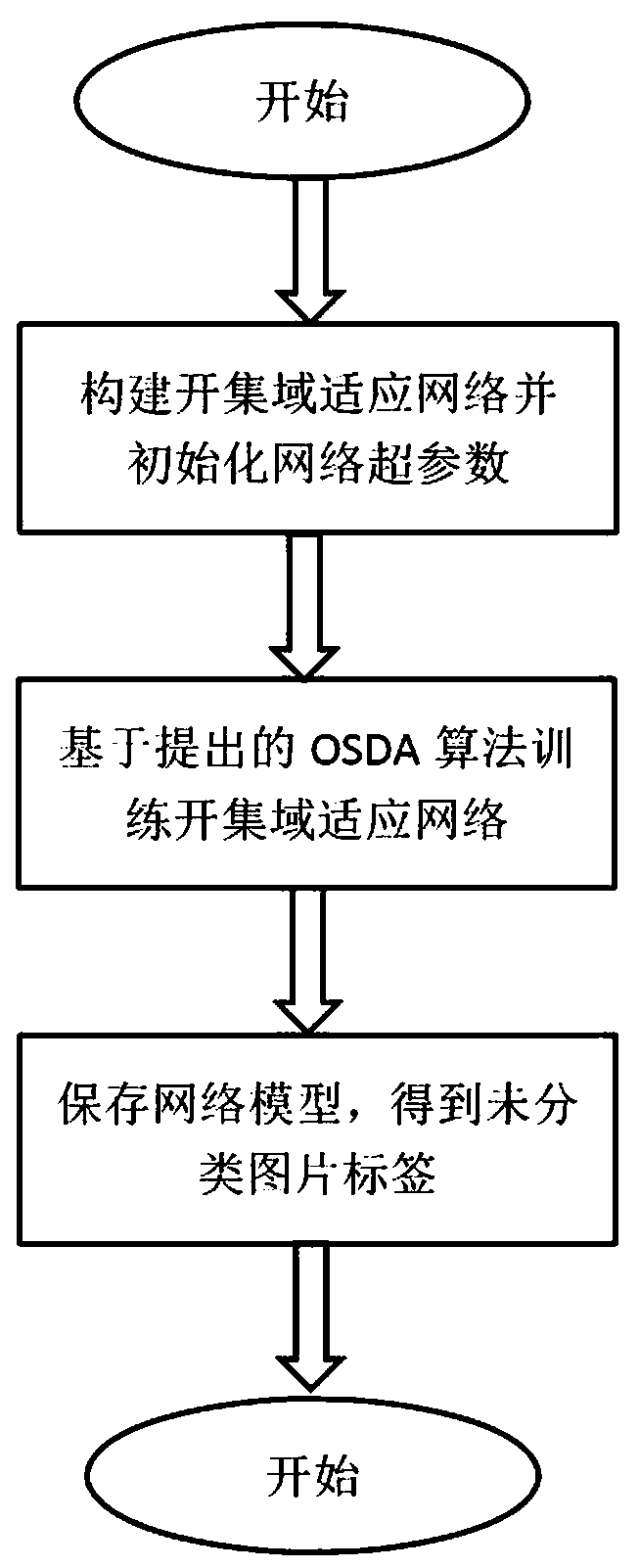

Open set domain adaptation method and system based on entropy minimization

Inactive | CN110750665A | Improve robustness | Stable training | Character and pattern recognition | Still image data clustering/classification | Data set | Feature extraction

The invention provides an open set domain adaptation method based on entropy minimization. The method comprises the steps of: creating an open set domain adaptation network and initializing the network hyper-parameters; inputting source domain pictures and target domain pictures into the network, obtaining deep picture features through the network's feature extraction layer, and then obtaining prediction probability vectors over the categories through a softmax layer applied to the feature map; calculating an entropy loss function from the known-category prediction probability vector and the picture label so that the common categories are identified correctly; on this basis, calculating a binary cross entropy and a binary diversity loss function from the unknown-category prediction probability so that unknown categories are classified correctly; updating the network parameters by back-propagating gradients until the loss function value reaches its minimum, and then stopping training; and storing the network model and the training result, and feeding the target domain data set into the network model to obtain the final target domain labels.

Owner:NANJING UNIV OF POSTS & TELECOMM
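
Two of the loss terms named above can be written down in a few lines. The sketch below shows a softmax, a cross-entropy loss on a known-category label, and a binary cross entropy on the predicted unknown-category probability. The binary diversity loss is omitted because the abstract does not define it, and the way the terms are weighted and combined is not specified either.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Loss for a source picture whose known category index is `label`."""
    return -math.log(probs[label])


def binary_cross_entropy(p_unknown, target):
    """Loss on the probability that a picture belongs to the unknown class.
    `target` is 1 for unknown and 0 for known (an assumed convention)."""
    eps = 1e-12
    p = min(max(p_unknown, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


probs = softmax([2.0, 0.5, -1.0])
print(cross_entropy(probs, label=0), binary_cross_entropy(probs[-1], target=0))
```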

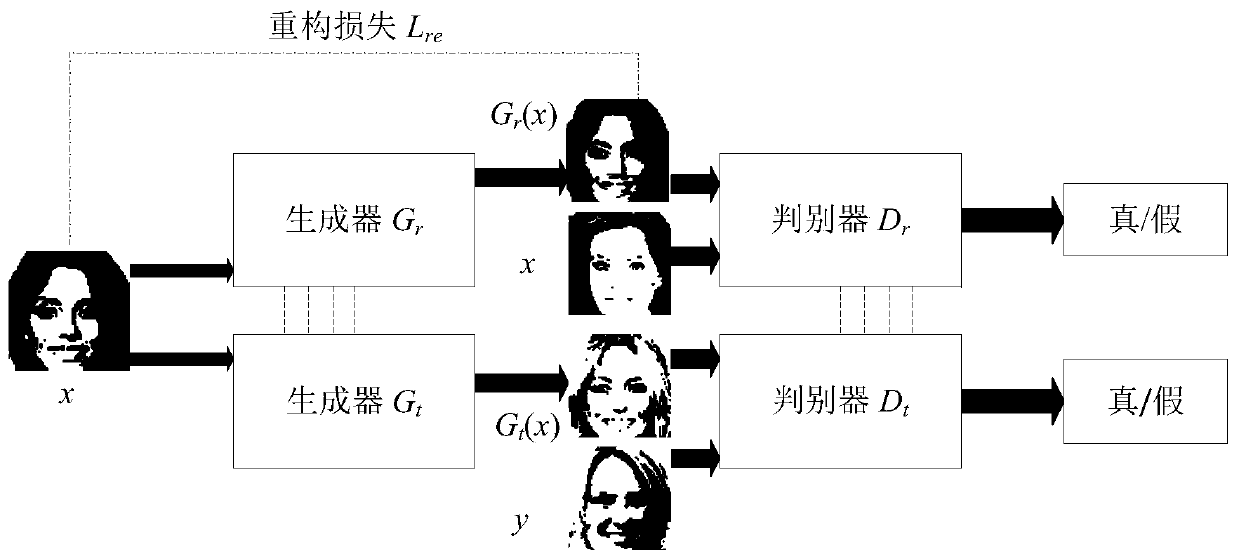

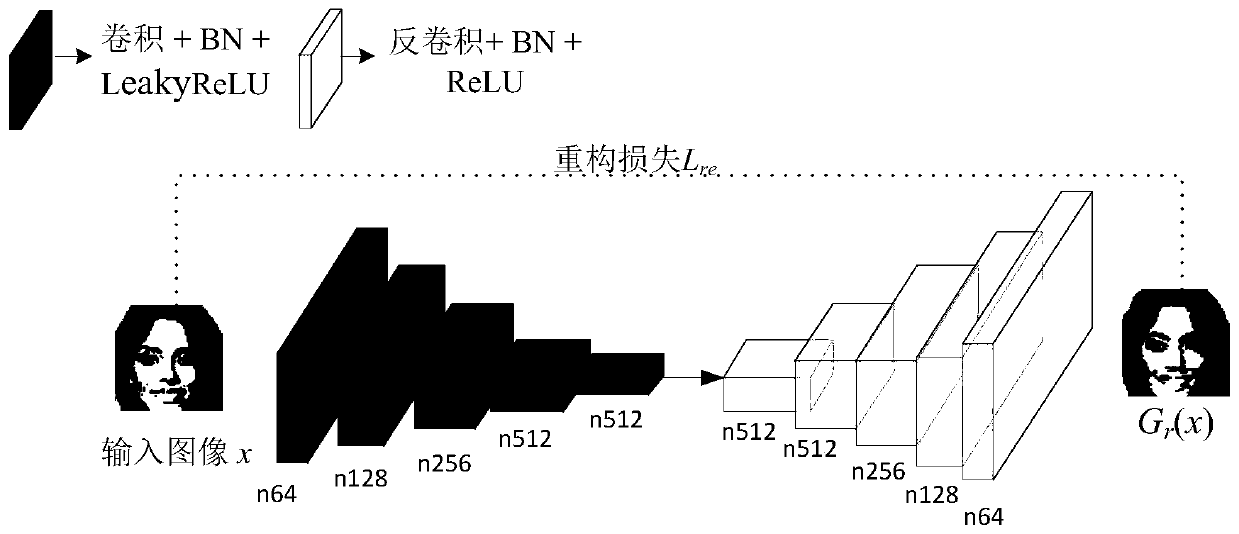

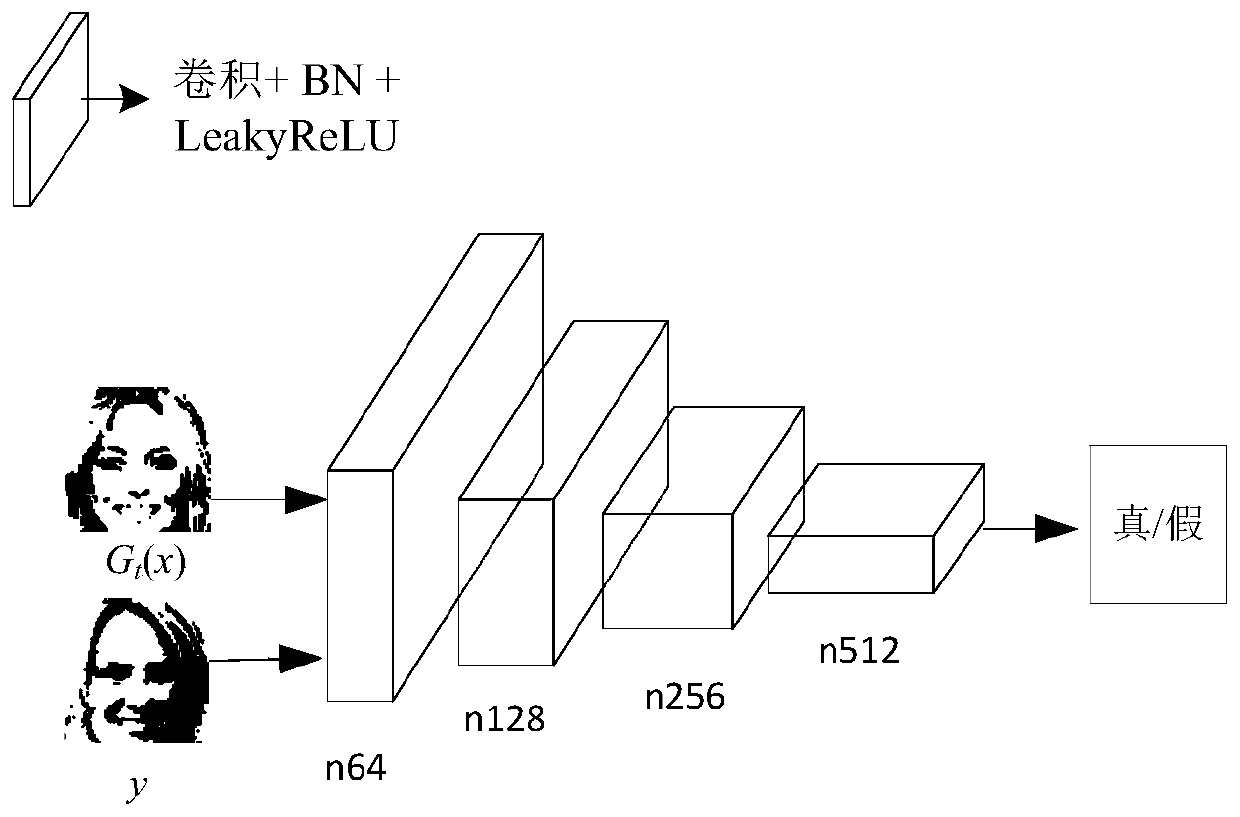

Target domain oriented unsupervised image conversion method based on generative adversarial network

Active | CN110335193A | Stable training | Geometric image transformation | Generative adversarial network | Network model

The invention provides a target domain oriented unsupervised image conversion method based on a generative adversarial network. It belongs to the field of computer vision and is used for unsupervised cross-domain image-to-image conversion. The method designs a self-encoding reconstruction network and extracts a hierarchical representation of the source domain image by minimizing its reconstruction loss. Through a weight sharing strategy, the weights of the network layers that encode and decode high-level semantic information are shared between the two groups of generative adversarial networks in the network model, so that the output image keeps the basic structure and characteristics of the input image. Two discriminators then judge, each in its own domain, whether an input image is a real image or a generated image. The method can effectively carry out unsupervised cross-domain image conversion and generate high-quality images. Experiments show that the method obtains good results on standard data sets such as CelebA.

Owner:DALIAN UNIV OF TECH

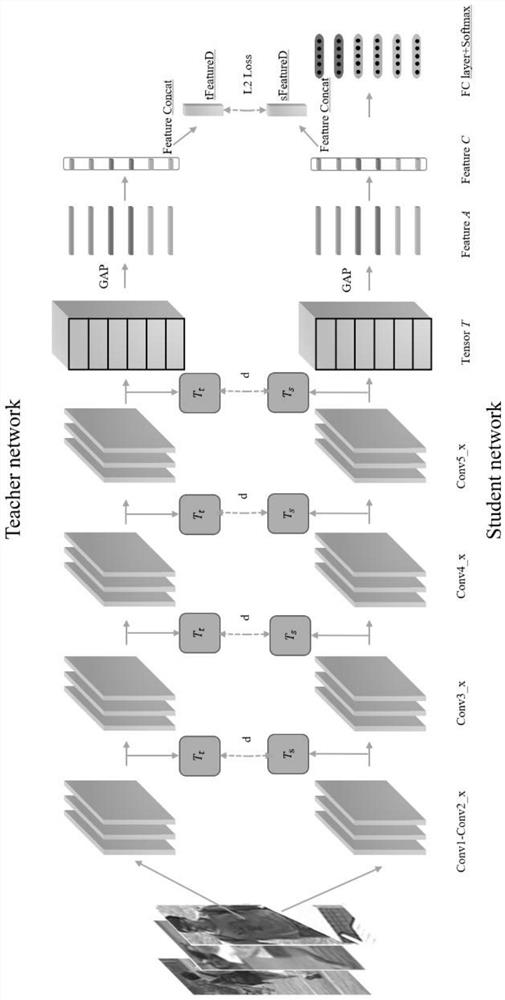

Pedestrian re-identification method based on knowledge distillation

Active | CN112560631A | Improve accuracy | Guaranteed accuracy | Character and pattern recognition | Neural architectures | Feature vector | Computation complexity

The invention discloses a pedestrian re-identification method based on knowledge distillation. The method comprises the steps of: inputting a pedestrian image training set into a teacher network and inputting the same data set into a student network; carrying out distillation at multiple stages of the whole backbone network simultaneously, through the combined effect of student network transfer, feature distillation positions and distance loss functions, so that the feature output of the student network moves steadily closer to the features output by the teacher network; updating the parameters of the student model by minimizing a distillation loss function, thereby training the student network; and measuring distances between the resulting feature vectors to find the pedestrian target image with the highest similarity. The accuracy of the student network, ResNet18, is greatly improved and approaches that of the teacher network, ResNet50. The method realizes pedestrian re-identification with a knowledge distillation transfer learning approach and replaces a large model with a small one, so computational complexity can be effectively reduced while the accuracy of the student model is maintained.

Owner:KUNMING UNIV OF SCI & TECH
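
A multi-stage feature distillation loss of the kind described above can be sketched as a weighted sum of per-stage distances between student and teacher features. Mean squared error and equal stage weights are assumptions, and the sketch presumes the student features have already been projected to the teacher's shape, since ResNet18 and ResNet50 stages differ in channel count.

```python
def stage_distance(student_feat, teacher_feat):
    """Mean squared distance between flattened features of one stage."""
    diffs = [(s - t) ** 2 for s, t in zip(student_feat, teacher_feat)]
    return sum(diffs) / len(diffs)


def distillation_loss(student_stages, teacher_stages, weights=None):
    """Sum the per-stage distances over all distillation positions.
    `weights` is a hypothetical per-stage weighting (default: all 1.0)."""
    if weights is None:
        weights = [1.0] * len(student_stages)
    return sum(
        w * stage_distance(s, t)
        for w, s, t in zip(weights, student_stages, teacher_stages)
    )


# Two hypothetical stages with tiny flattened feature vectors.
student = [[1.0, 2.0], [0.0]]
teacher = [[1.0, 4.0], [3.0]]
print(distillation_loss(student, teacher))
```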

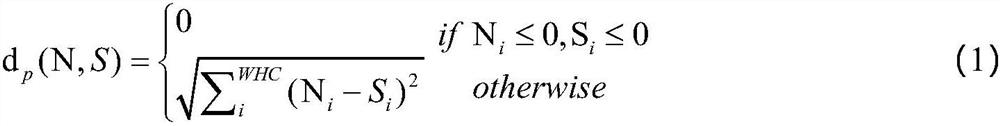

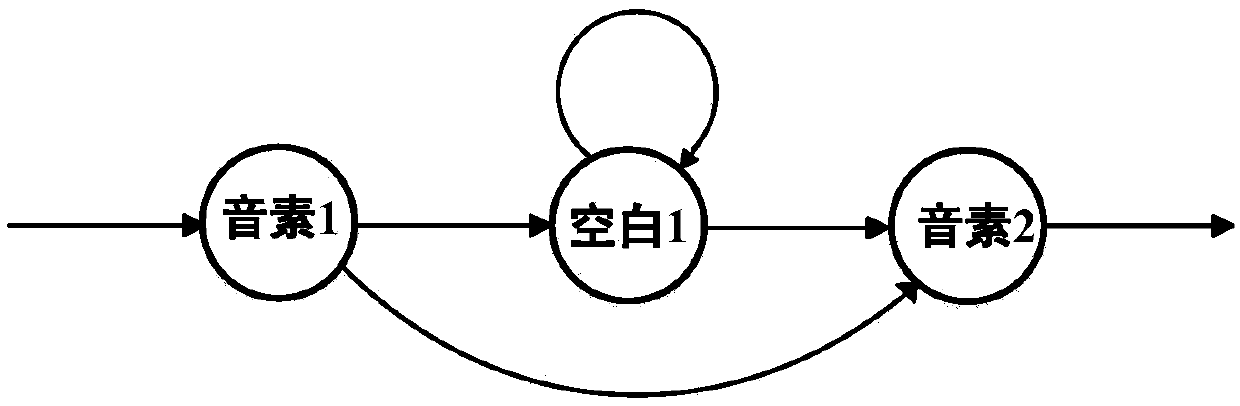

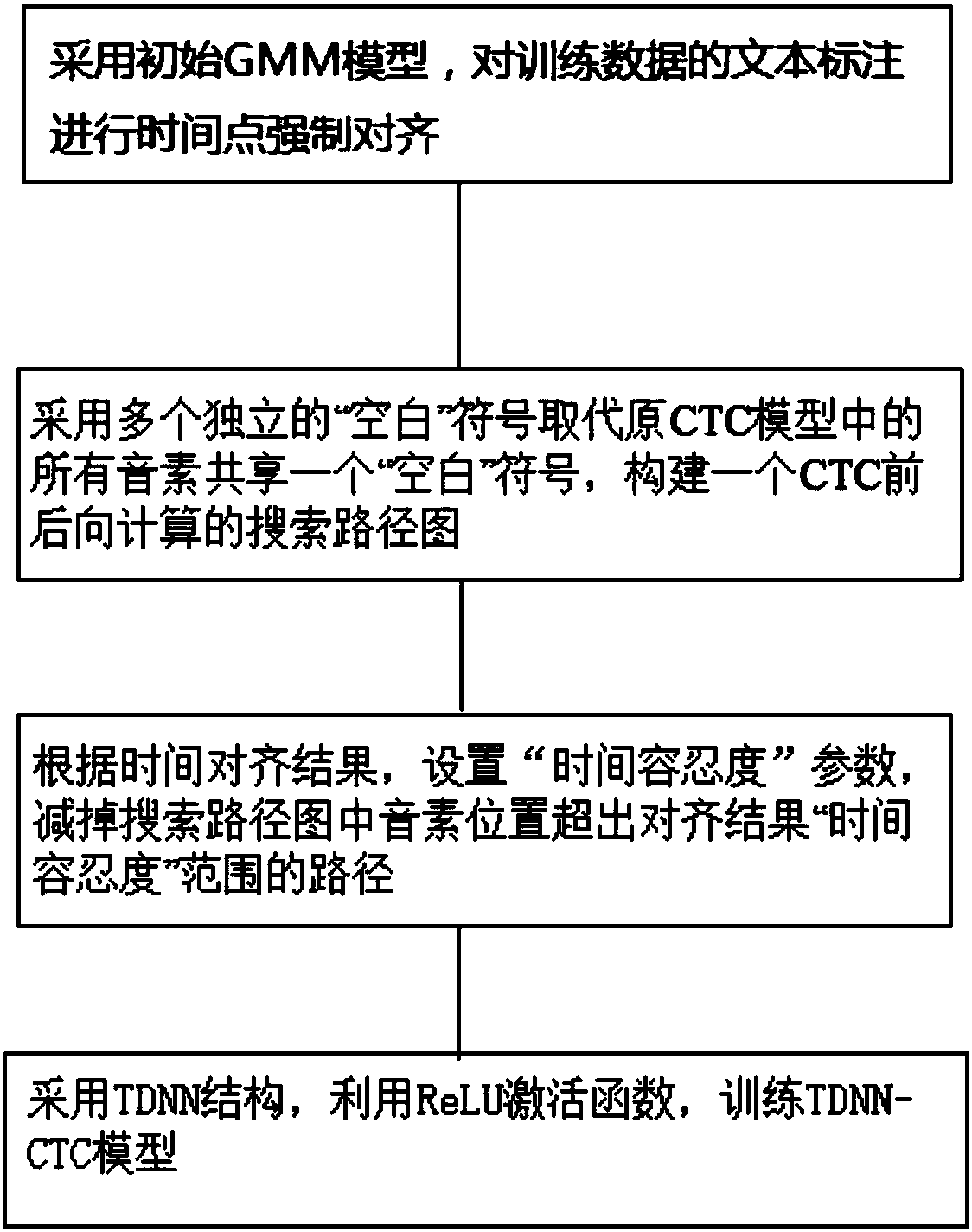

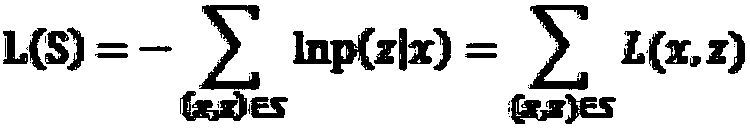

Method for training acoustic model based on CTC (Connectionist Temporal Classification)

Active | CN108269568A | Increase independence | Improve visibility | Speech recognition | Time range | Text annotation

The invention provides a method for training an acoustic model based on CTC (Connectionist Temporal Classification). The method comprises the steps of: 1, training an initial GMM (Gaussian Mixture Model) and using it to force-align the text annotation of the training data in time, obtaining the time region corresponding to each phoneme; 2, inserting, after each phoneme, a blank symbol associated with that phoneme, so that each phoneme has its own unique blank symbol; 3, using a finite state machine to construct the search path graph for the CTC forward-backward computation over the phoneme annotation sequence with the blank symbols added; 4, restricting the time range in which each phoneme may appear according to the time alignment result, pruning the search path graph, and cutting off paths in which a phoneme position exceeds its time restriction, so as to obtain the final search path graph needed to compute the network error in CTC; and 5, training the acoustic model with a time-delay neural network (TDNN) structure combined with the CTC method to obtain the final TDNN-CTC acoustic model.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
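
Steps 2 and 4 above lend themselves to a small sketch: adding a phoneme-specific blank after each phoneme, and turning a forced alignment into the frame window in which each phoneme is allowed to appear. The blank naming scheme and the `margin` tolerance are hypothetical; the abstract does not say how wide the time restriction is.

```python
def insert_blanks(phonemes):
    """Follow every phoneme with its own blank symbol (step 2).
    The '<blk_X>' naming is an illustrative convention."""
    out = []
    for p in phonemes:
        out.append(p)
        out.append(f"<blk_{p}>")
    return out


def allowed_frames(alignment, num_frames, margin):
    """Turn a forced alignment [(phoneme, start, end), ...] into the frame
    window each phoneme may occupy (step 4). Paths that place a phoneme
    outside its window would be pruned from the search graph."""
    return [
        (p, max(0, start - margin), min(num_frames, end + margin))
        for p, start, end in alignment
    ]


print(insert_blanks(["k", "ae", "t"]))
print(allowed_frames([("k", 0, 5), ("ae", 5, 12)], num_frames=12, margin=2))
```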

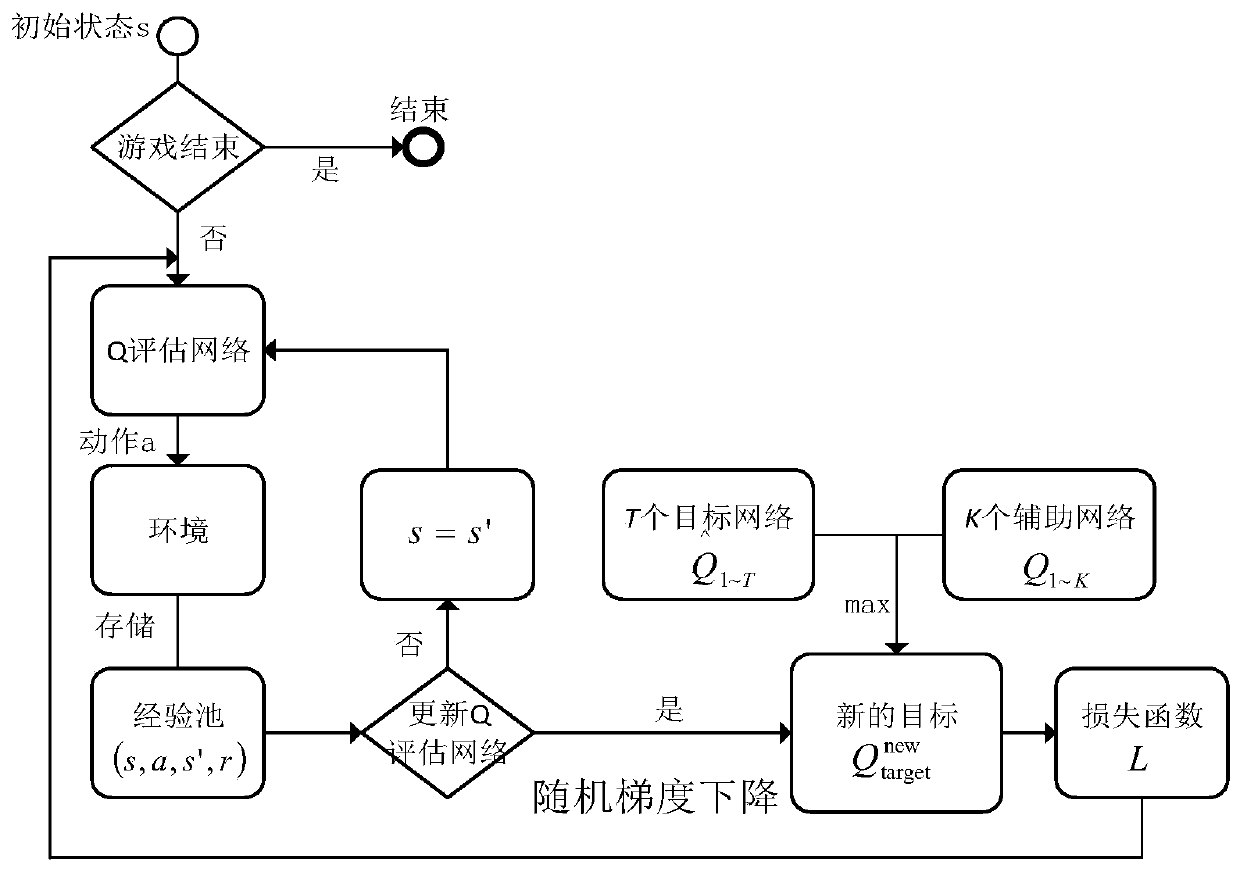

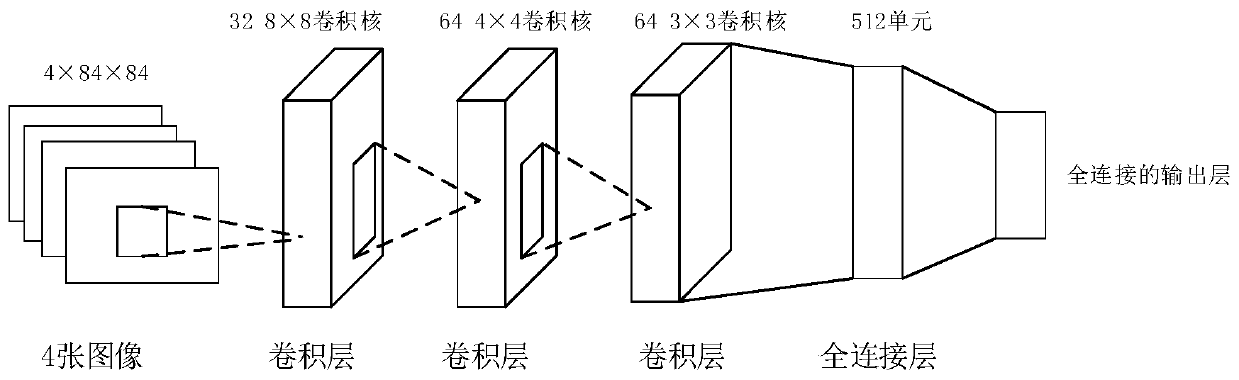

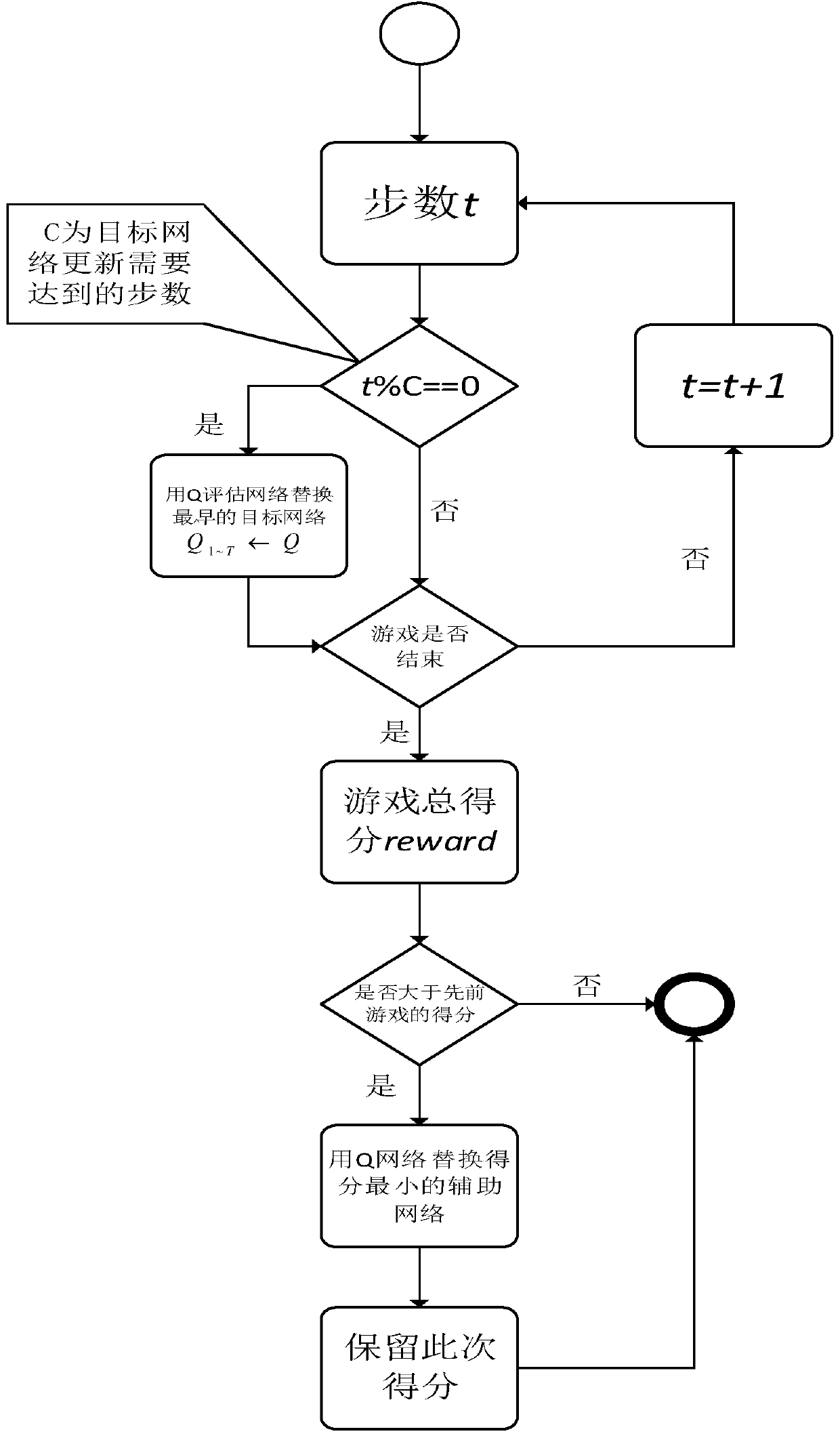

A deep reinforcement learning method and equipment based on a plurality of historical optimal Q networks

Inactive | CN109919319A | Stable training | Eliminate low-scoring phenomena | Machine learning | Interaction systems | State space

The invention provides a deep reinforcement learning method and device based on multiple historical optimal Q networks, for an intelligent robot interaction system comprising an agent. The method comprises the steps of: defining the attributes and rules of the agent, defining its state space and action space, and constructing or calling an agent motion environment; selecting the best several Q networks from all historical Q networks according to their interaction evaluation scores; and combining these historical optimal Q networks with the current Q network through a maximum operation to guide the agent in selecting an action strategy, training the parameters of the learning model, and autonomously carrying out the next decision action according to the environment in which the agent is located. With this method, a reasonable motion environment can be constructed according to actual needs, and the optimal Q networks produced during training are used to better guide the agent's decisions, achieving intelligent strategy optimization. The method has a positive effect on the development of robots and unmanned systems in China.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
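
One possible reading of "combining the historical optimal Q networks with the current Q network by a maximum operation" is sketched below: keep the top-scoring historical networks and take the maximum Q-value over all networks and actions when forming the learning target. Where exactly the patent applies the maximum is not stated in the abstract, so this placement, the function names and the toy Q functions are assumptions.

```python
def select_best(history, k):
    """history is a list of (evaluation_score, q_function) pairs;
    return the k q_functions with the highest scores."""
    ranked = sorted(history, key=lambda item: item[0], reverse=True)
    return [q for _, q in ranked[:k]]


def max_q_target(reward, next_state, actions, current_q, best_qs,
                 gamma=0.99, done=False):
    """Bootstrapped target using the maximum over the current network
    and the selected historical networks."""
    if done:
        return reward
    networks = [current_q] + list(best_qs)
    best = max(q(next_state, a) for q in networks for a in actions)
    return reward + gamma * best


# Toy Q functions standing in for trained networks.
history = [(10, lambda s, a: a), (30, lambda s, a: 2 * a), (5, lambda s, a: -a)]
best = select_best(history, k=2)
print(max_q_target(1.0, None, [0, 1], lambda s, a: 0.0, best, gamma=0.5))
```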

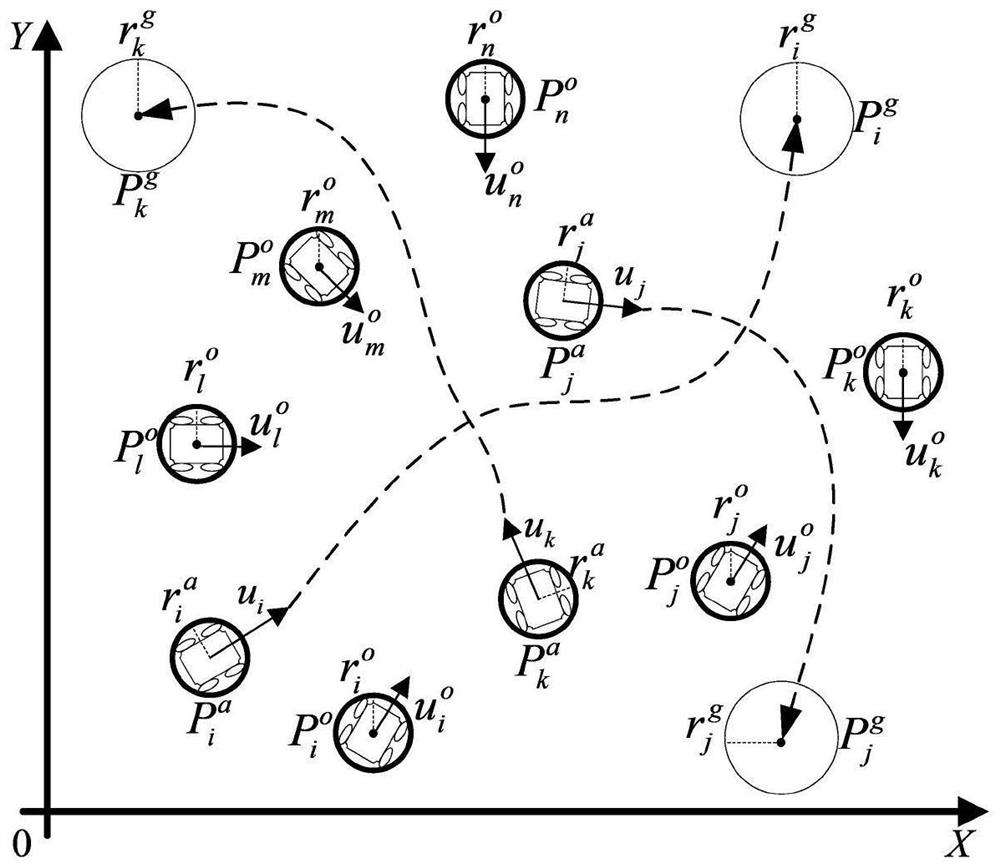

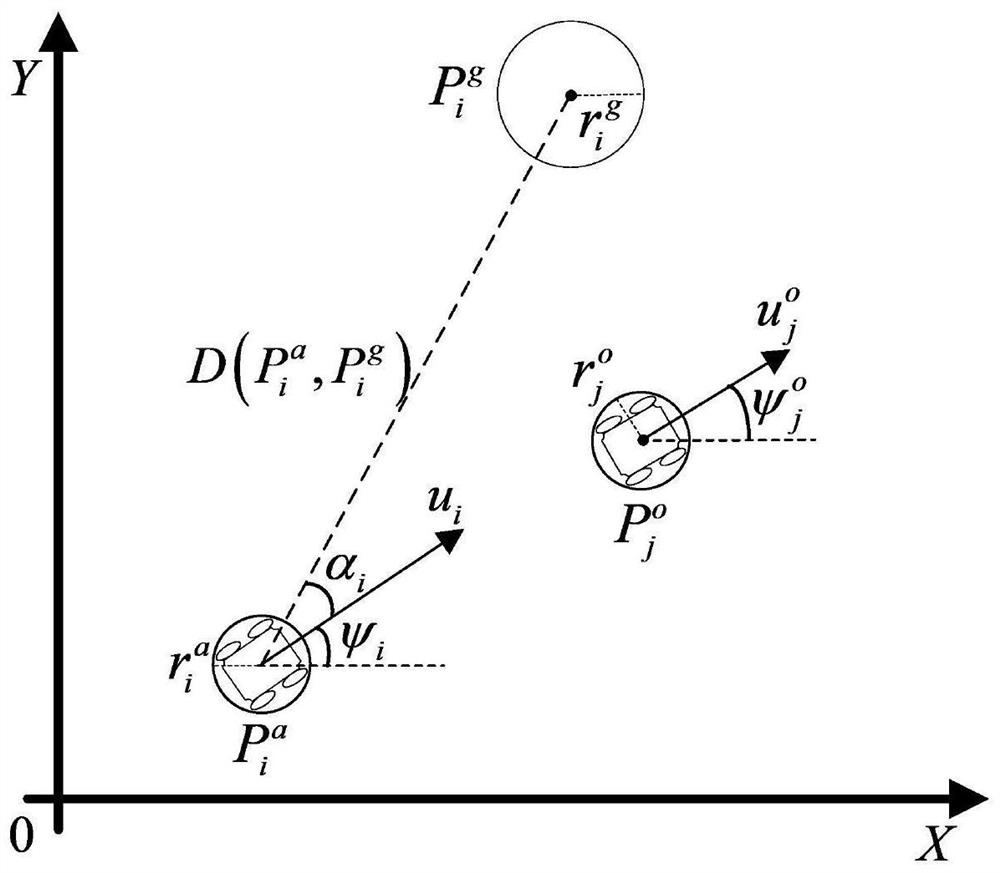

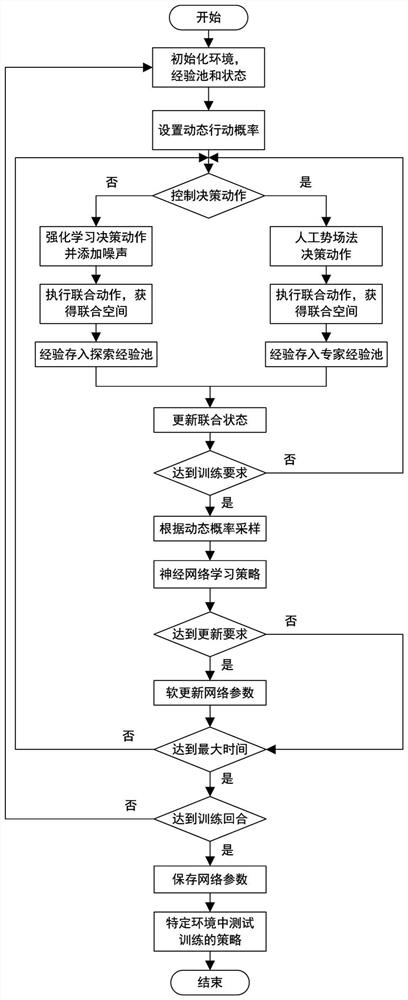

Mixed-experience multi-agent reinforcement learning motion planning method

Active | CN113341958A | Improve convergence rate | Lower Q value | Position/course control in two dimensions | Vehicles | Simulation | Uncrewed vehicle

The invention discloses a mixed-experience multi-agent reinforcement learning motion planning method, namely the ME-MADDPG algorithm. The method trains with the MADDPG algorithm. When samples are generated, in addition to the experience produced through exploration and learning, high-quality experience is added from runs in which an artificial potential field method successfully guides multiple unmanned aerial vehicles to their targets, and the two kinds of experience are stored in separate experience pools. During training, the neural network draws samples from the two experience pools through dynamic sampling with a changing probability; the state information and environment information of the agents serve as the network input, and the velocities of the agents serve as the output. The neural network is updated slowly during training so that the multi-agent motion planning strategy is trained stably, and the agents finally avoid obstacles autonomously in a complex environment and reach their respective target positions smoothly. The method can efficiently train a motion planning strategy with better stability and adaptability in a complex dynamic environment.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
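
The two mechanisms named above, dynamic sampling from two experience pools and slow network updates, can be sketched as follows. The linear decay of the demonstration probability, its start and end values, and the soft-update rate `tau` are assumptions; the abstract only says that the probability changes and that updates are slow.

```python
import random


def demo_probability(step, start=0.8, end=0.1, decay_steps=10000):
    """Probability of drawing from the potential-field experience pool.
    A linear decay from `start` to `end` is assumed."""
    frac = min(step / decay_steps, 1.0)
    return start + (end - start) * frac


def sample_mixed(explore_pool, demo_pool, batch_size, demo_prob, rng=random):
    """Build a batch by choosing, per sample, which pool to draw from."""
    batch = []
    for _ in range(batch_size):
        pool = demo_pool if rng.random() < demo_prob else explore_pool
        batch.append(rng.choice(pool))
    return batch


def soft_update(target, source, tau=0.01):
    """Slowly move target-network parameters toward the trained network."""
    return [(1 - tau) * t + tau * s for t, s in zip(target, source)]


batch = sample_mixed(["explore"] * 5, ["demo"] * 5, batch_size=4,
                     demo_prob=demo_probability(step=2000))
print(batch)
```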

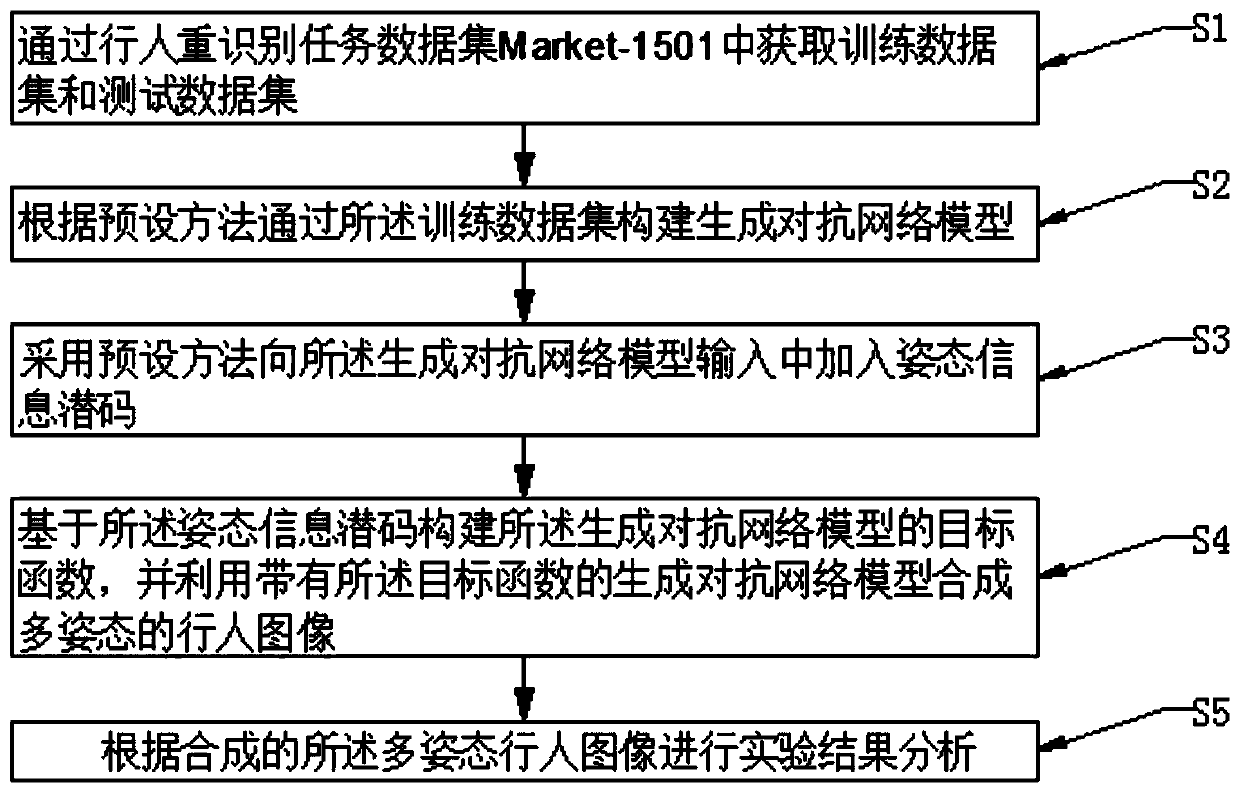

Multi-pose pedestrian image synthesis algorithm based on generative adversarial network

Active | CN110796080A | Narrow solution space | Decompression training | Internal combustion piston engines | Geometric image transformation | Pattern recognition | Data set

The invention discloses a multi-pose pedestrian image synthesis algorithm based on a generative adversarial network. The algorithm comprises the following steps: S1, obtaining a training data set and a test data set from the pedestrian re-identification data set Market-1501; S2, constructing a generative adversarial network model from the training data set according to a preset method; S3, adding a pose information latent code to the input of the generative adversarial network model by a preset method; S4, constructing an objective function for the generative adversarial network model based on the pose information latent code, and synthesizing multi-pose pedestrian images with the model and this objective function; and S5, analyzing the experimental results on the synthesized multi-pose pedestrian images. The beneficial effects are that the solution space of the generator is effectively reduced, so that training of the generative adversarial network is more stable and high-quality multi-pose pedestrian pictures can be generated.

Owner:CHONGQING UNIV

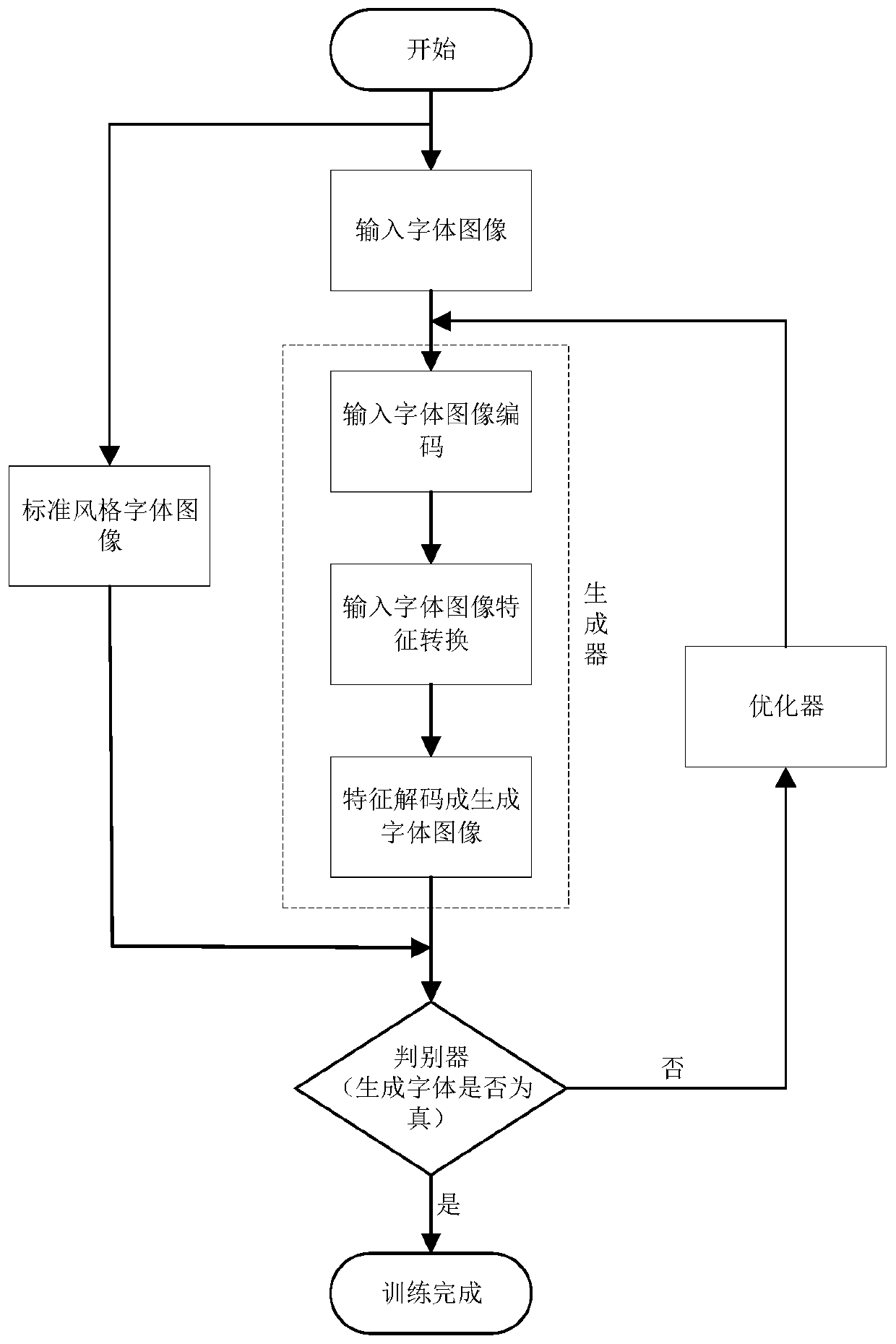

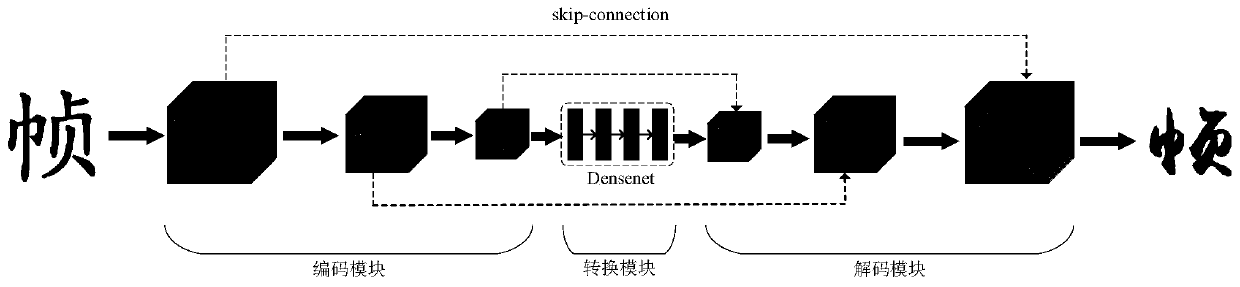

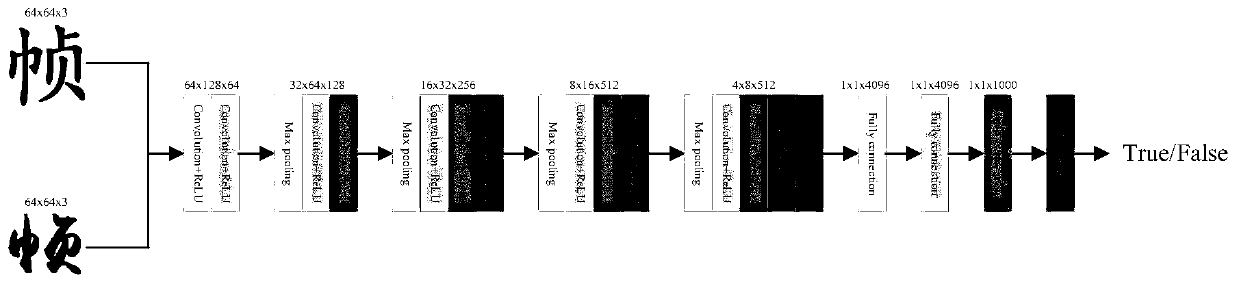

Calligraphy word stock automatic restoration method and system based on style migration

Pending | CN110570481A | Automatic extraction | Improve the situation where there is a large deformation | Texturing/coloring | Neural architectures | Code module | Discriminator

The invention provides a calligraphy font library automatic restoration method and system based on style migration. The method comprises the following steps: input fonts and standard style fonts are set; the input font image is fed into a coding module, which extracts latent feature information; a conversion module converts this feature information into feature information of the standard style font; a decoding module processes it to obtain a generated font image; the input font image and the generated font image are fed into a discriminator, which outputs the probability that the generated font image is a real standard style font; similarly, the input font image and the standard style font image are fed into the discriminator to obtain the probability that the standard style font image is a real standard style font; and finally, the loss functions of the generator and the discriminator are obtained from the two probabilities. An optimizer adjusts the generator and the discriminator according to the loss functions until both losses converge, yielding a trained generator. A complete font library of standard style fonts can then be obtained with the trained generator.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

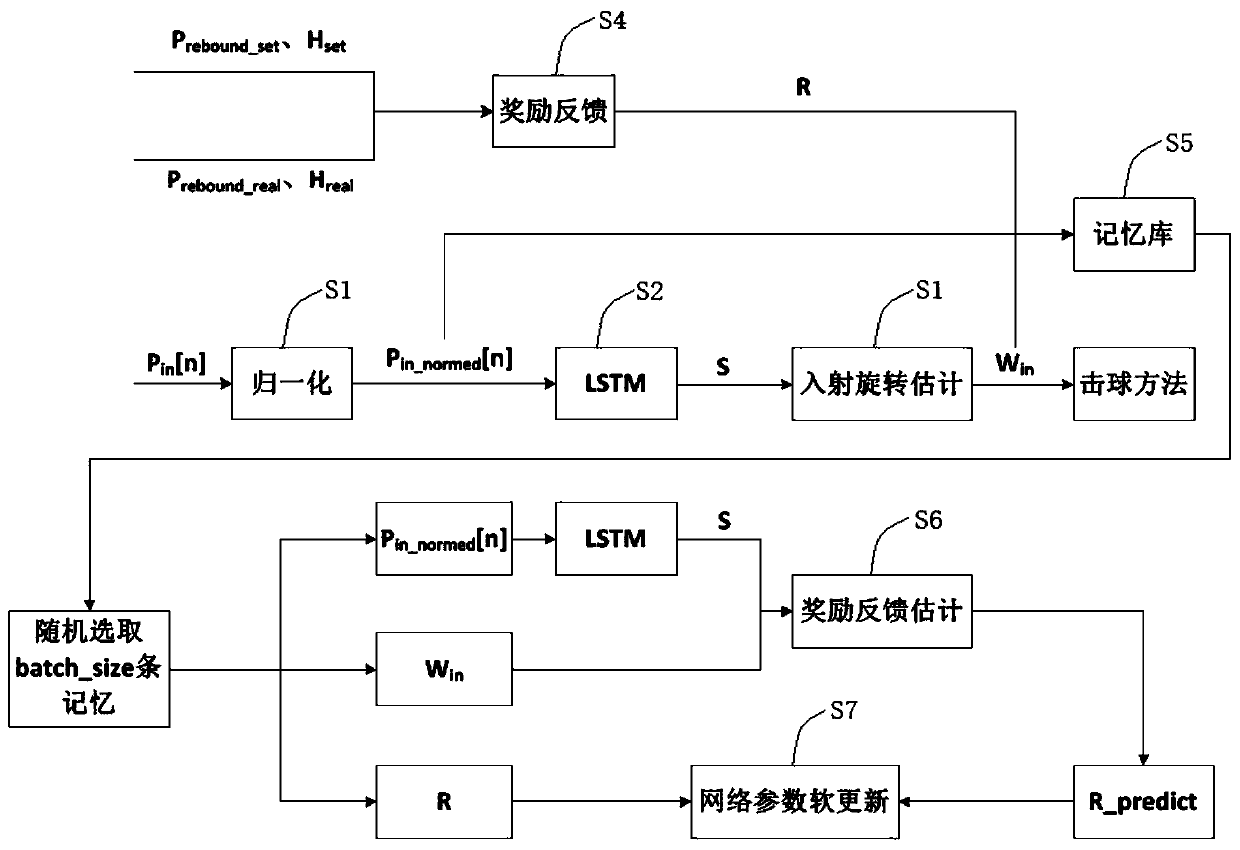

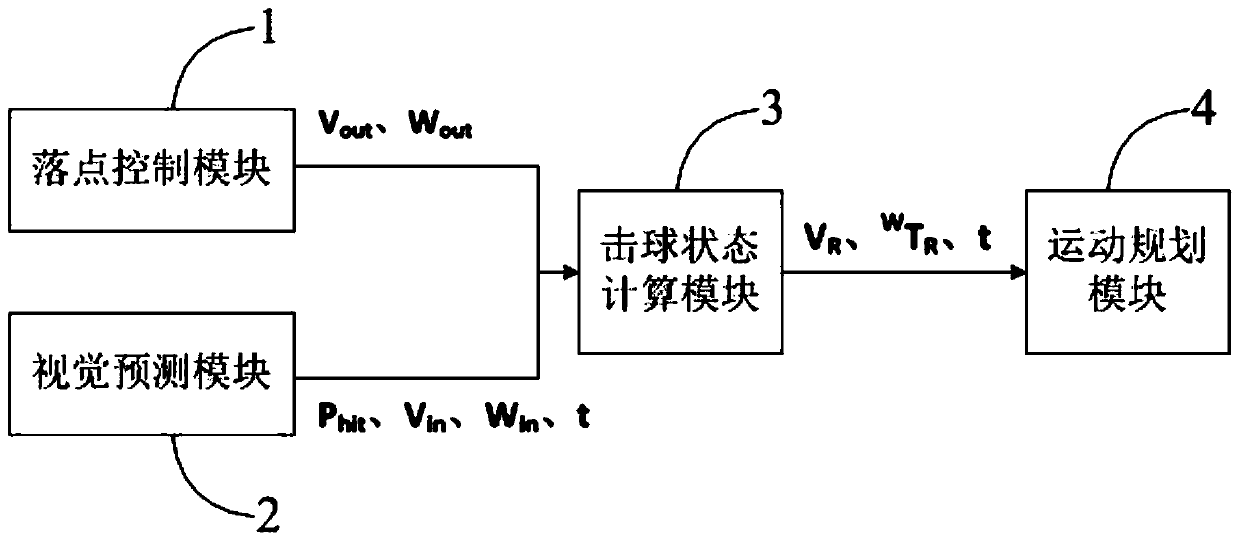

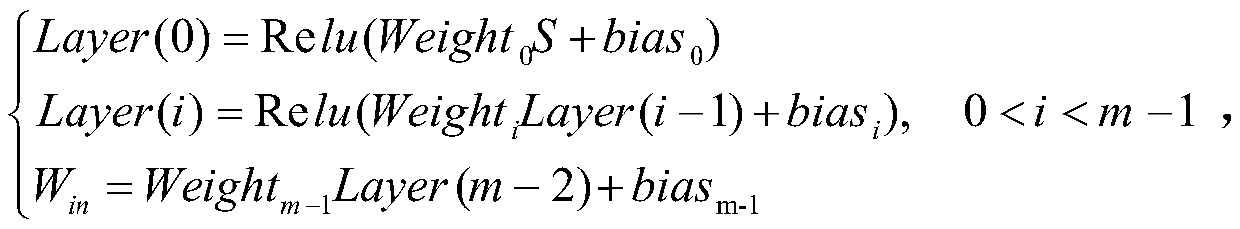

Deep reinforcement learning rotation speed prediction method and system for table tennis robot

Active | CN110458281A | Accurate return | Improve robustness | Neural architectures | Neural learning methods | Memory bank | Algorithm

The invention provides a deep reinforcement learning rotation speed prediction method and system for a table tennis robot. The prediction method comprises: normalizing the sequence of incoming ball positions sampled at equal time intervals; inputting the normalized sequence into a deep LSTM network; inputting the resulting LSTM state vector into an incident rotation estimation deep neural network to obtain the incident rotation speed; calculating the reward feedback for deep reinforcement learning; combining the incoming ball position sequence, the incident rotation speed and the reward feedback of the current stroke into one stroke memory and storing it in a memory bank; and randomly selecting at least one memory from the memory bank, inputting the LSTM state vector and the incident rotation speed of the ball into a reward feedback estimation deep neural network, outputting the estimated reward feedback, and performing back propagation and parameter updates on both the incident rotation estimation network and the reward feedback estimation network. The ball-returning device can then return spinning balls accurately.

Owner:上海创屹科技有限公司

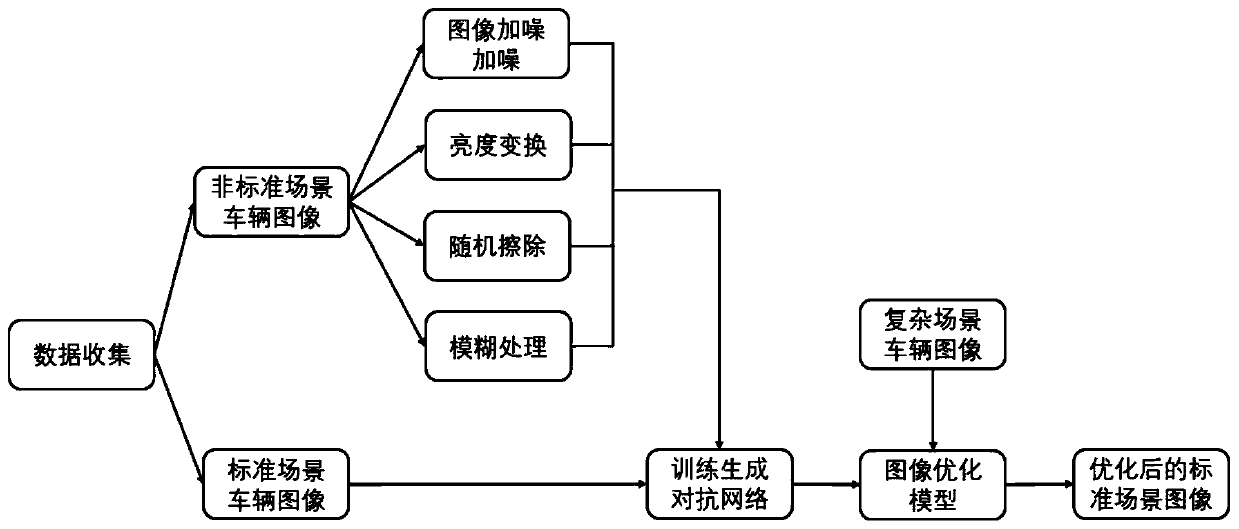

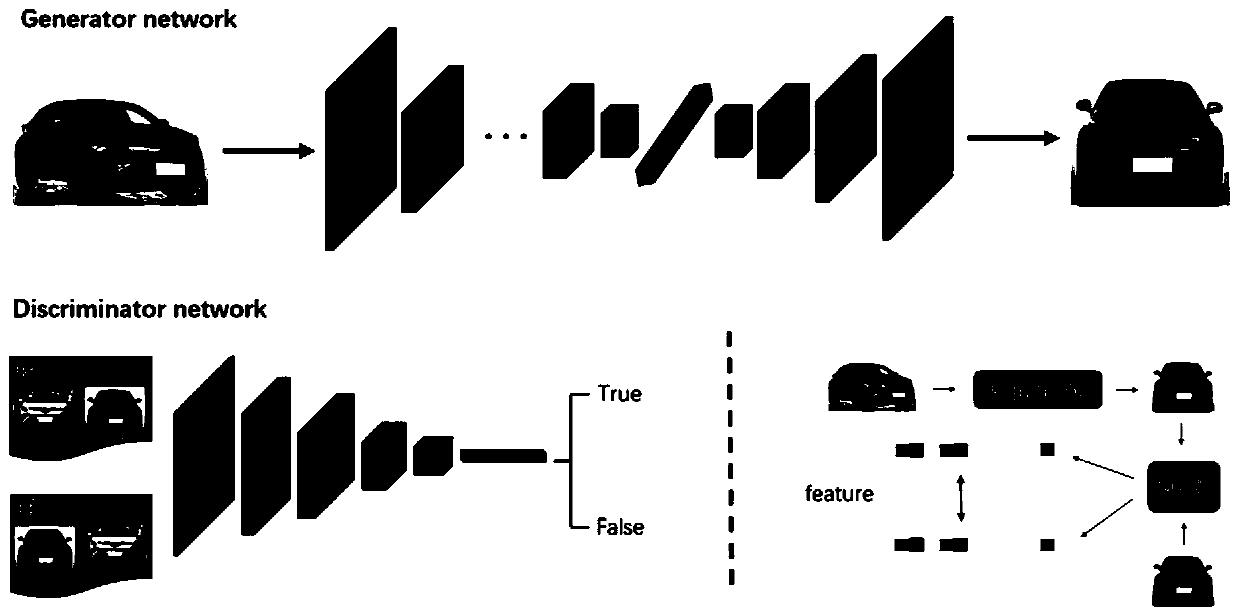

Vehicle image optimization method and system based on adversarial learning

Inactive | CN110458060A | Image optimization process is simple and efficient | Reduce labor burden | Internal combustion piston engines | Character and pattern recognition | Discriminator | Feature extraction

The invention discloses a vehicle image optimization method and system based on adversarial learning. The method comprises the steps of: collecting vehicle images photographed at different angles and dividing them into standard scene images and non-standard scene images; preprocessing the non-standard images to obtain a low-quality data set; constructing a vehicle image optimization model based on a generative adversarial network, the model being composed of a generator, a discriminator and a feature extractor; training the model by setting a loss function, calculating the network weight gradients by back propagation, and updating the model parameters; and, after training, keeping the generator as the final vehicle image optimization model, which takes multi-scene vehicle images as input and outputs optimized standard scene images. The invention realizes migration from complex scene vehicle images to standard scene vehicle images, which optimizes image quality and improves vehicle detection and recognition accuracy.

Owner:JINAN UNIVERSITY

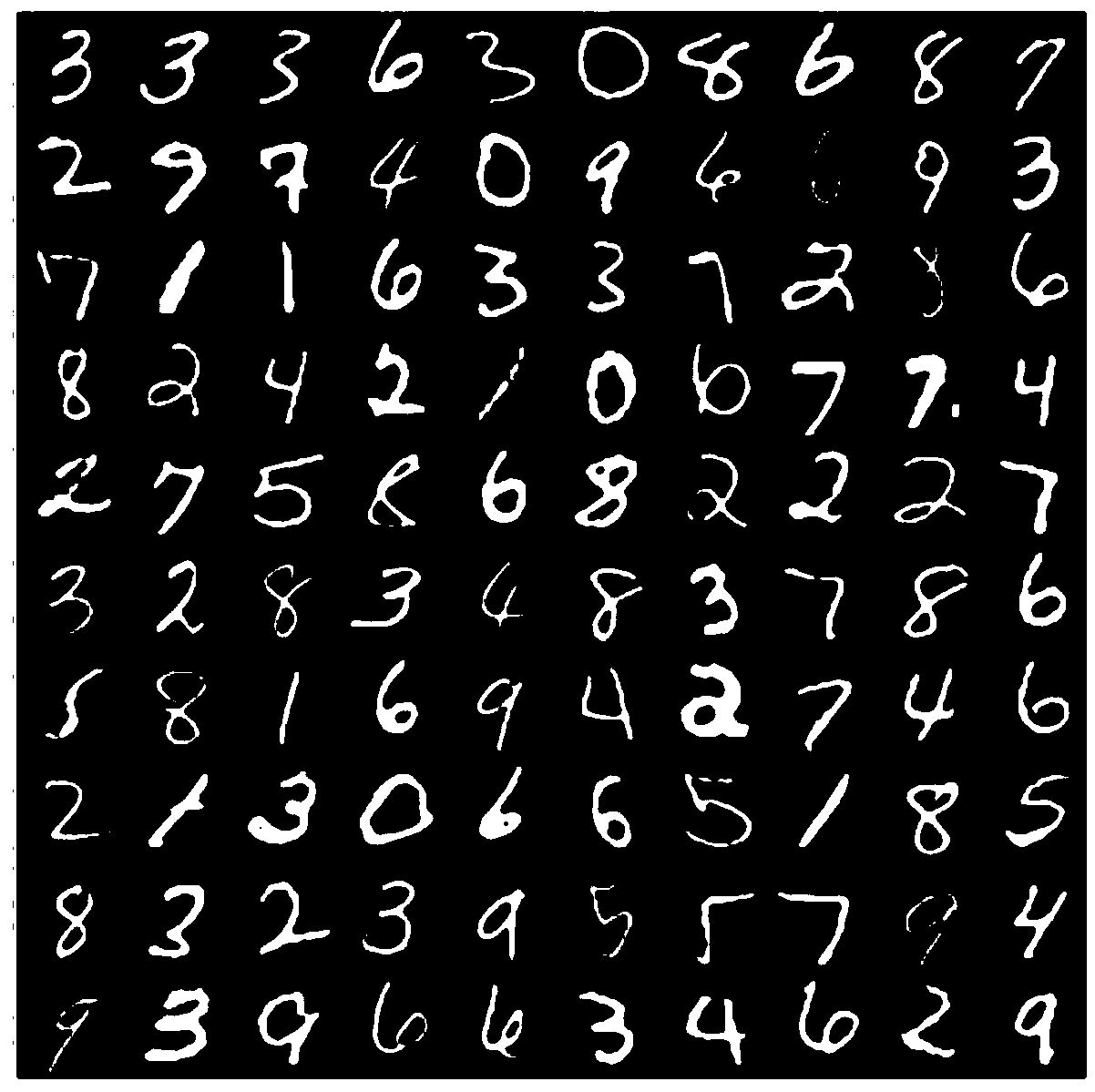

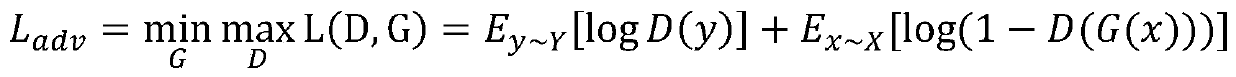

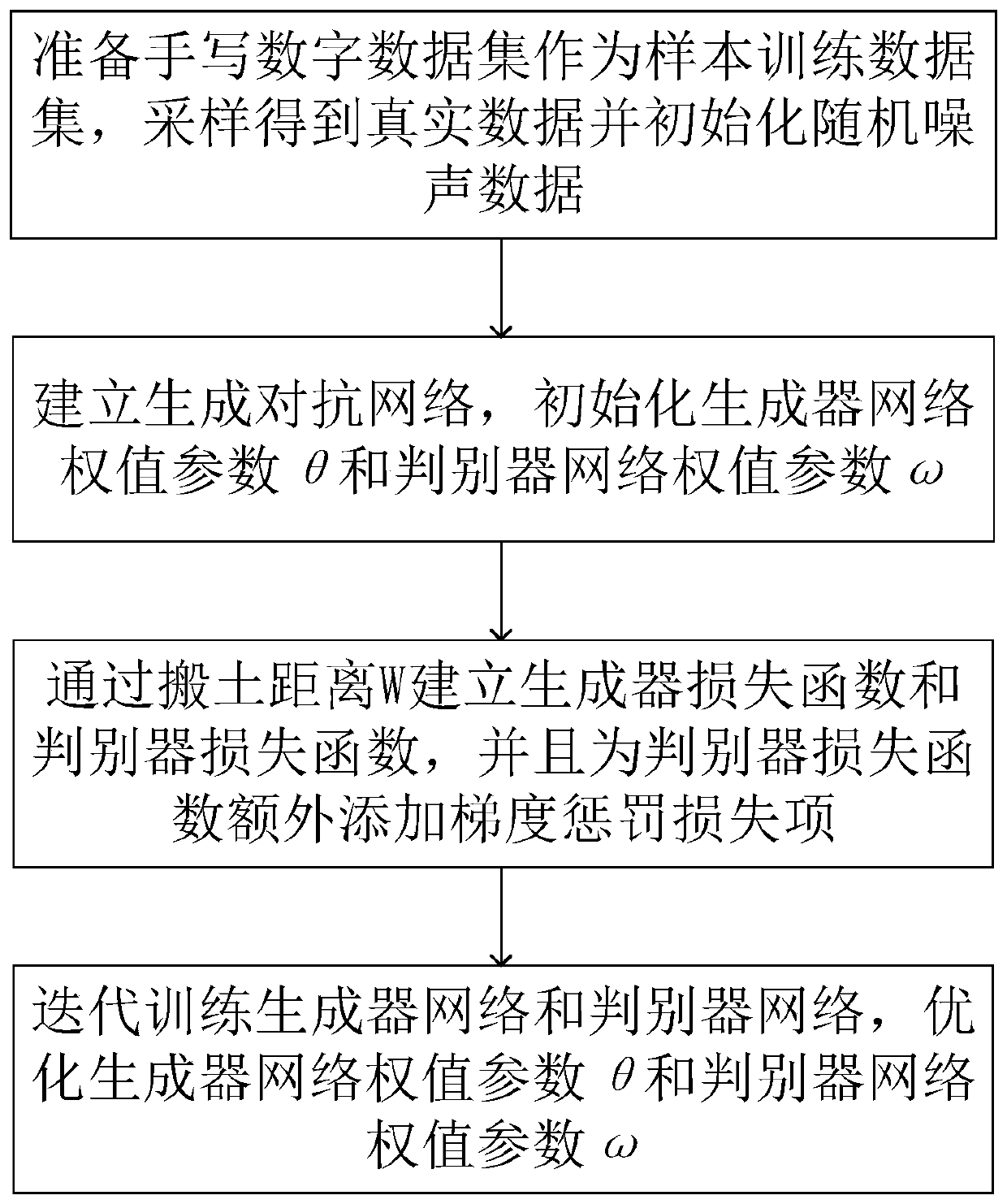

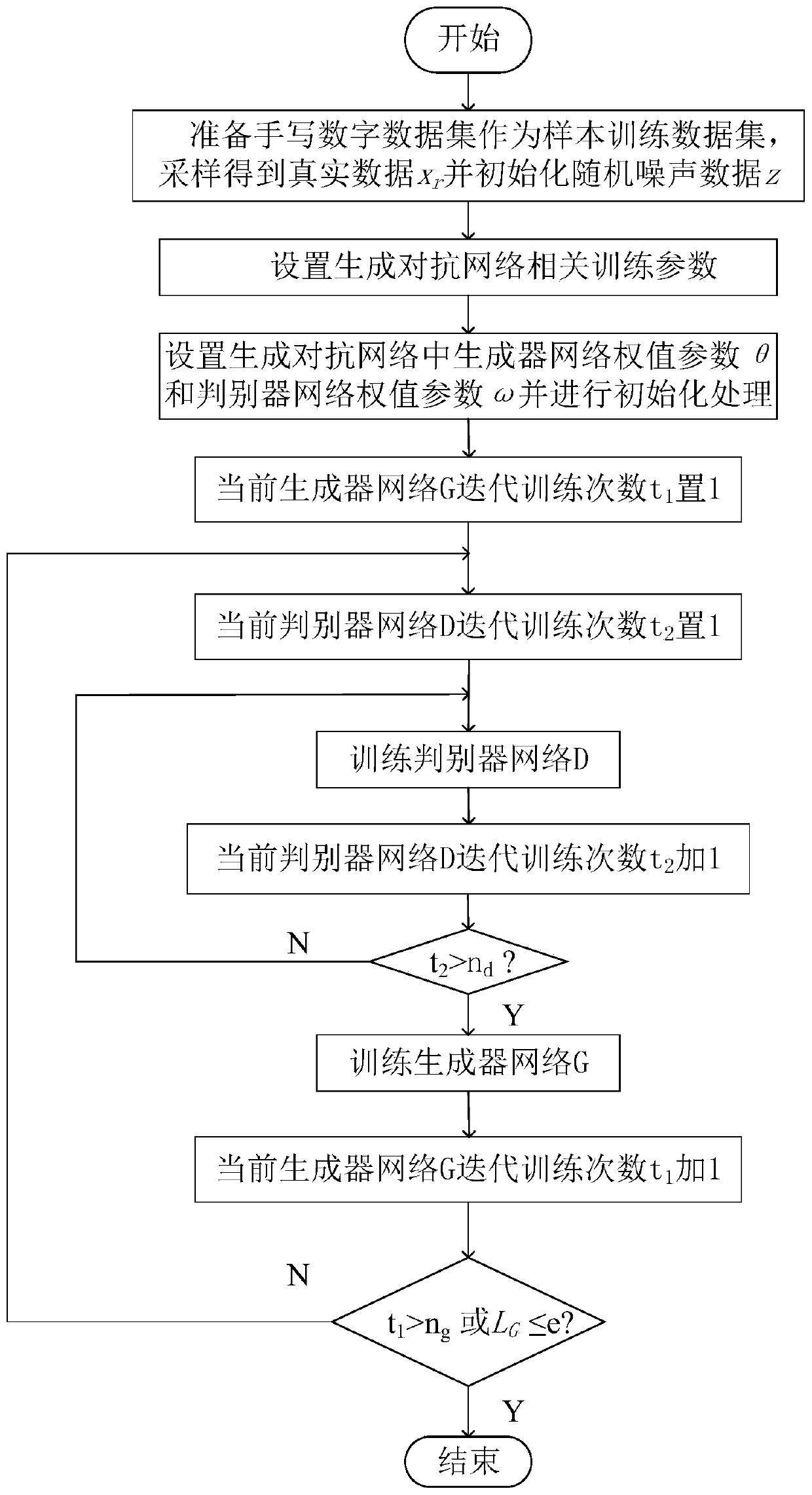

Handwritten numeral generation method based on parameter optimization generative adversarial network

Inactive | CN110598806A | Promote generation | Quality improvement | 2D-image generation | Neural architectures | Discriminator | Digital data

The invention provides a handwritten numeral generation method based on a parameter optimization generative adversarial network. The method comprises the following steps: preparing a handwritten digit data set as the sample training data set, sampling it to obtain real data, and initializing random noise data; establishing the generative adversarial network and initializing the generator network weight parameter theta and the discriminator network weight parameter omega; establishing the generator loss function and the discriminator loss function from the earth mover's distance W, and adding a gradient penalty term to the discriminator loss function; and iteratively training the generator network and the discriminator network to optimize theta and omega. According to the embodiment of the invention, the slow convergence, unstable training and high computational overhead of the original generative adversarial network are addressed, the performance of the generative adversarial network is improved, and the generator can produce handwritten digit images of higher quality.

Owner:HEFEI UNIV OF TECH
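
For orientation, losses built from the earth mover's distance with a gradient penalty are commonly written as below (the standard WGAN-GP formulation). The abstract does not give the patent's exact expressions, so the penalty weight \lambda, the interpolation \hat{x} and the sign conventions here are assumptions taken from that standard formulation, with G_\theta the generator and D_\omega the discriminator.

```latex
% Discriminator (critic) loss with gradient penalty; \lambda is an assumed penalty weight.
L_D(\omega) = \mathbb{E}_{z \sim p_z}\big[ D_\omega(G_\theta(z)) \big]
            - \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[ D_\omega(x) \big]
            + \lambda \, \mathbb{E}_{\hat{x}}\Big[ \big( \lVert \nabla_{\hat{x}} D_\omega(\hat{x}) \rVert_2 - 1 \big)^2 \Big]

% Interpolated samples between real and generated data.
\hat{x} = \epsilon \, x + (1 - \epsilon) \, G_\theta(z), \qquad \epsilon \sim U[0, 1]

% Generator loss.
L_G(\theta) = - \mathbb{E}_{z \sim p_z}\big[ D_\omega(G_\theta(z)) \big]
```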

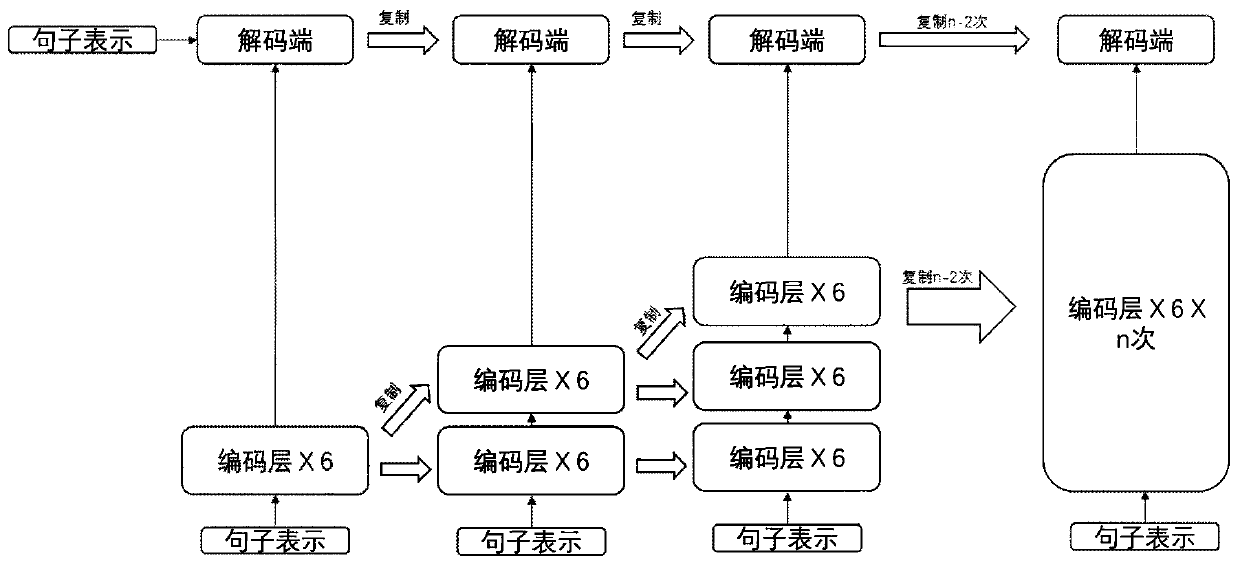

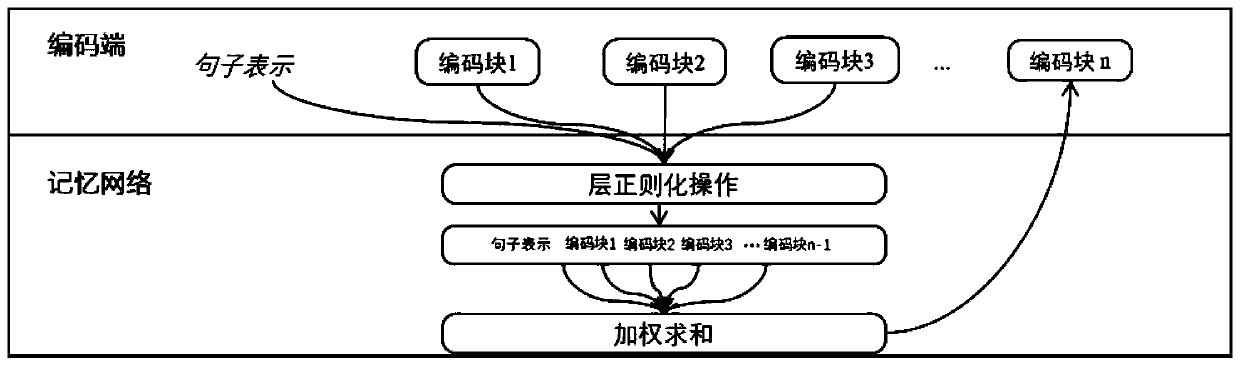

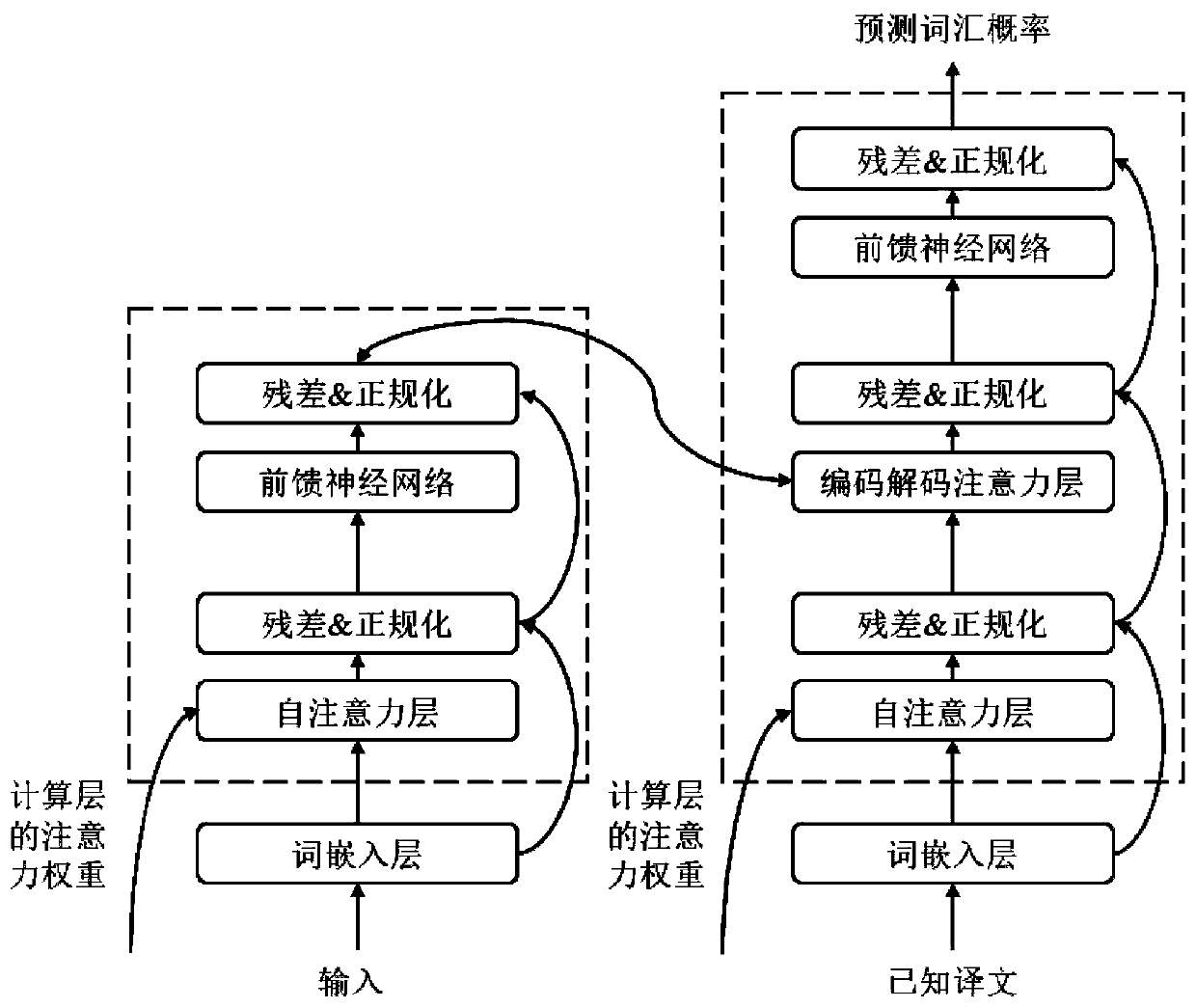

Neural machine translation system training acceleration method based on stacking algorithm

ActiveCN111178093ALess batch optimizationImprove performanceNatural language translationNeural architecturesCoding blockAlgorithm

The invention discloses a training acceleration method for a deep neural machine translation system based on a stacking algorithm. The method comprises the following steps: constructing a preceding Transformer model with a coding end containing one coding block and a decoding end; inputting sentences expressed as dense vectors into the coding end and the decoding end, and writing the input of the coding end into a memory network; after each coding block completes its operation, writing its output vector into the memory network and accessing the memory network to perform linear aggregation, which yields the output of the current coding block; training the current model; copying the parameters of the top coding block to construct a new coding block and stacking it on the current coding end, giving a model with two coding blocks; repeating the process to build a neural machine translation system with a deeper coding end, up to the target number of layers, and training it until convergence; and translating with the trained model. According to the method, a network with 48 coding layers can be trained, and model performance improves while a 1.4x training speed-up is obtained.
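
The copy-and-stack loop described above can be illustrated with a minimal sketch. It assumes each coding block is just a dictionary of parameters and that training is supplied as a callable. The names `grow_encoder`, `progressive_stack` and `train_fn` are hypothetical, and the memory network and linear aggregation are left out.

```python
import copy


def grow_encoder(blocks):
    """Return a new encoder with a copy of the top block stacked on top."""
    if not blocks:
        raise ValueError("encoder needs at least one block to copy")
    # Deep copy so the new block starts from the top block's parameters
    # but can be trained independently afterwards.
    return blocks + [copy.deepcopy(blocks[-1])]


def progressive_stack(initial_block, target_depth, train_fn):
    """Train a shallow encoder, then repeatedly copy the top block and retrain."""
    blocks = [copy.deepcopy(initial_block)]
    train_fn(blocks)  # train the initial one-block model
    while len(blocks) < target_depth:
        blocks = grow_encoder(blocks)
        train_fn(blocks)  # train the deeper model (placeholder callable)
    return blocks
```

In this sketch every new block starts from the parameters of the block below it rather than from a random initialization.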

Owner:沈阳雅译网络技术有限公司

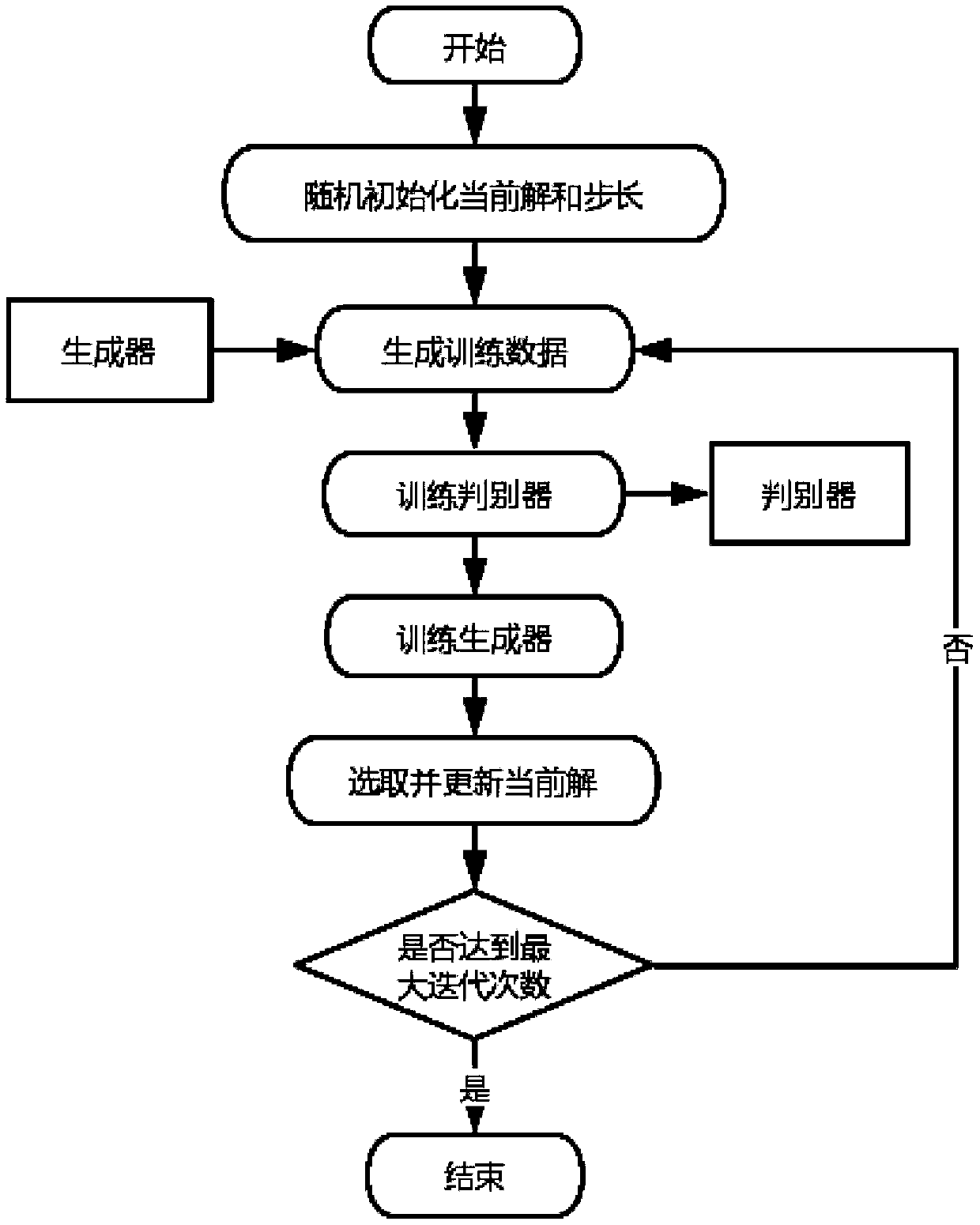

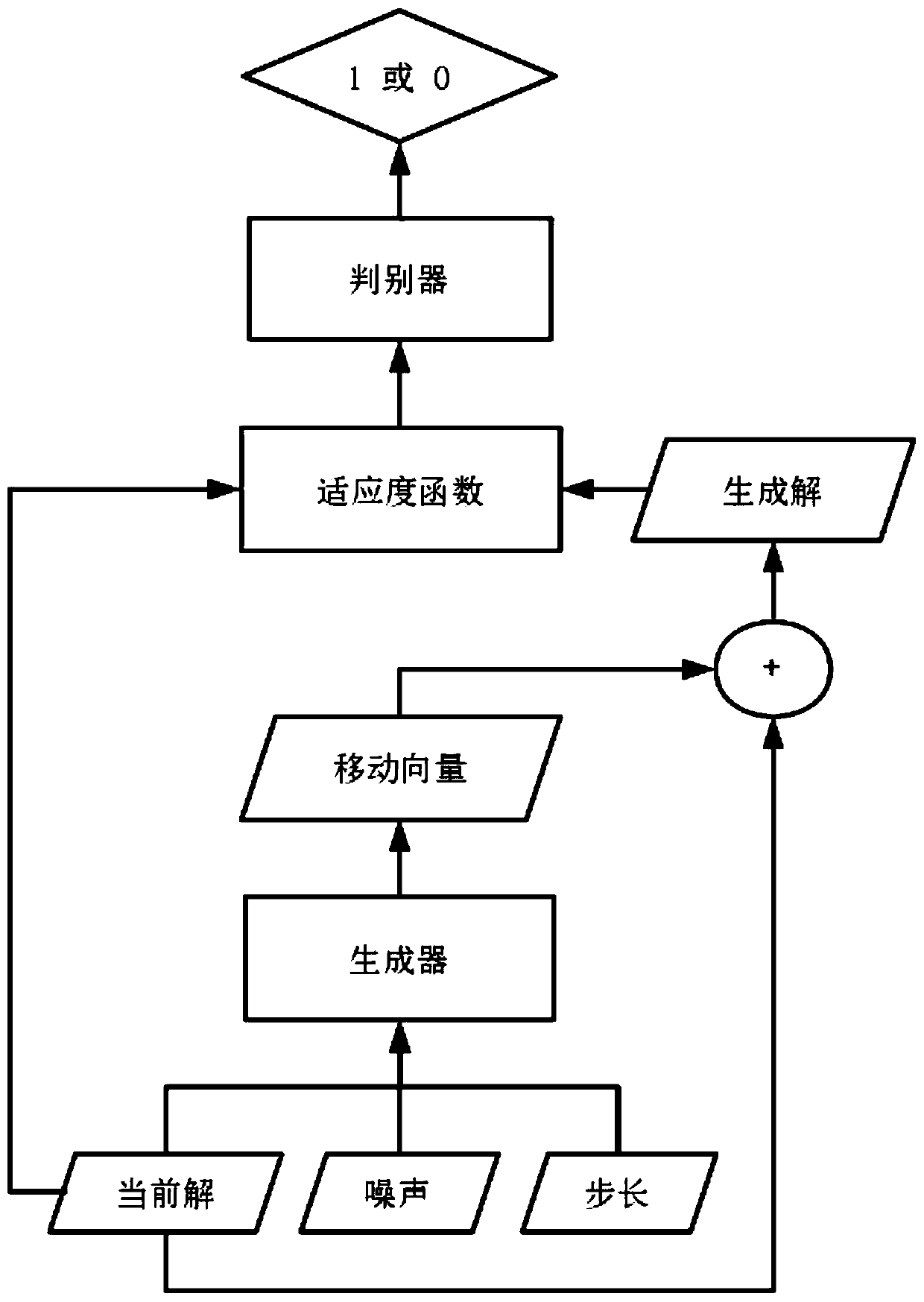

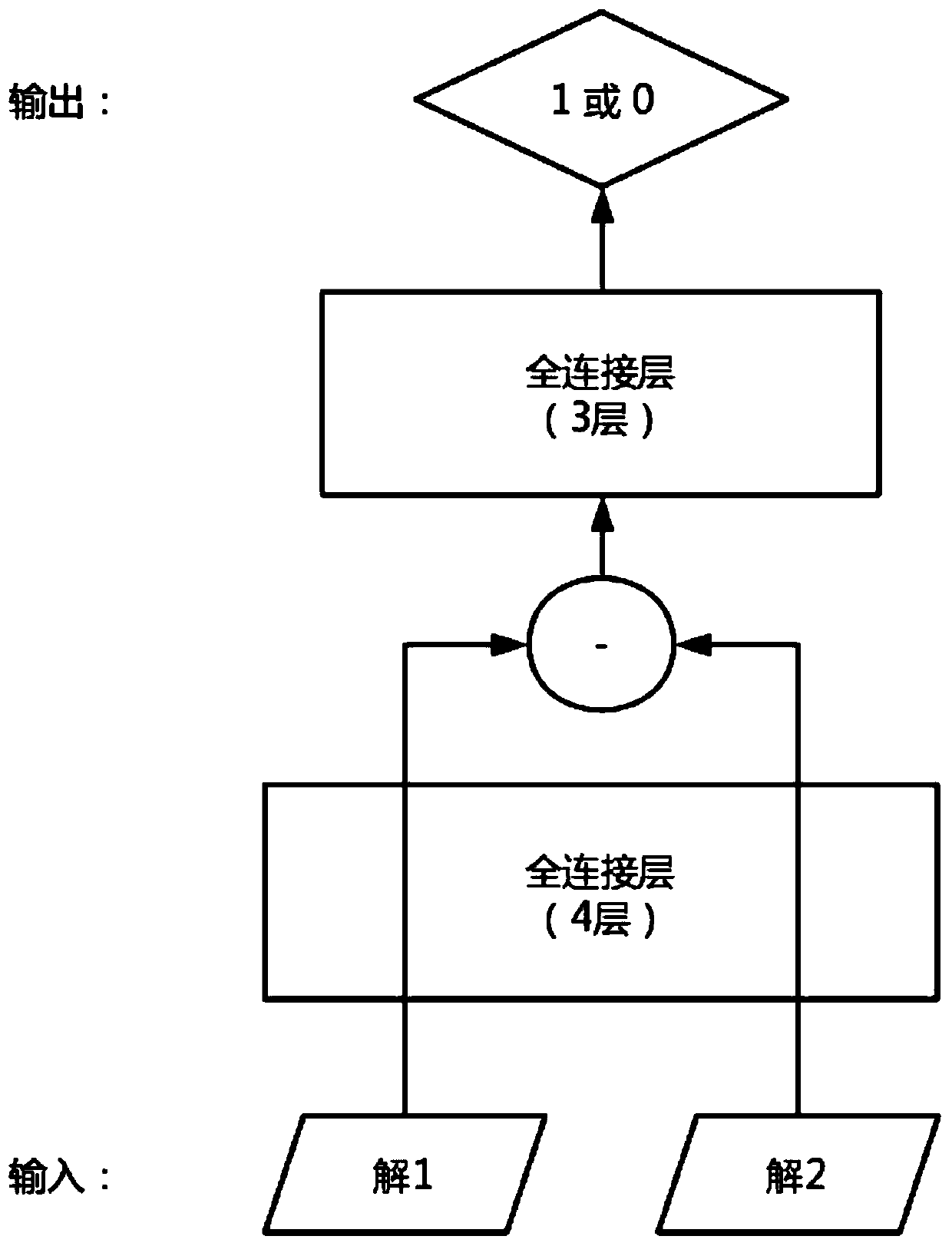

Optimization model method based on generative adversarial network and application

ActiveCN110097185ABoost parameter training processStable trainingLogisticsNeural learning methodsDiscriminatorLocal optimum

The invention discloses an optimization model method based on a generative adversarial network, called GAN-O, and its application. The method comprises the following steps: expressing the application (such as logistics distribution optimization) as a function optimization problem; establishing a function optimization model based on the generative adversarial network according to the test function and test dimension of the function optimization problem, which includes constructing a generator and a discriminator; training the function optimization model; and carrying out iterative computation with the trained model to obtain an optimal solution. According to the method, a better local optimal solution can be obtained in a shorter time, the training of the deep neural network is stable, and the local search capability is improved. The method can be used in application scenarios such as logistics distribution that can be converted into function optimization problems.

Owner:PEKING UNIV

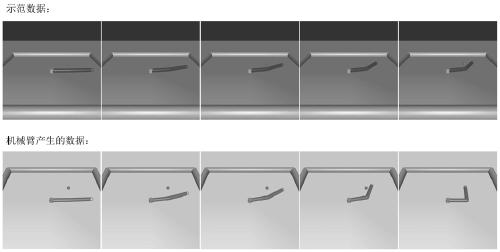

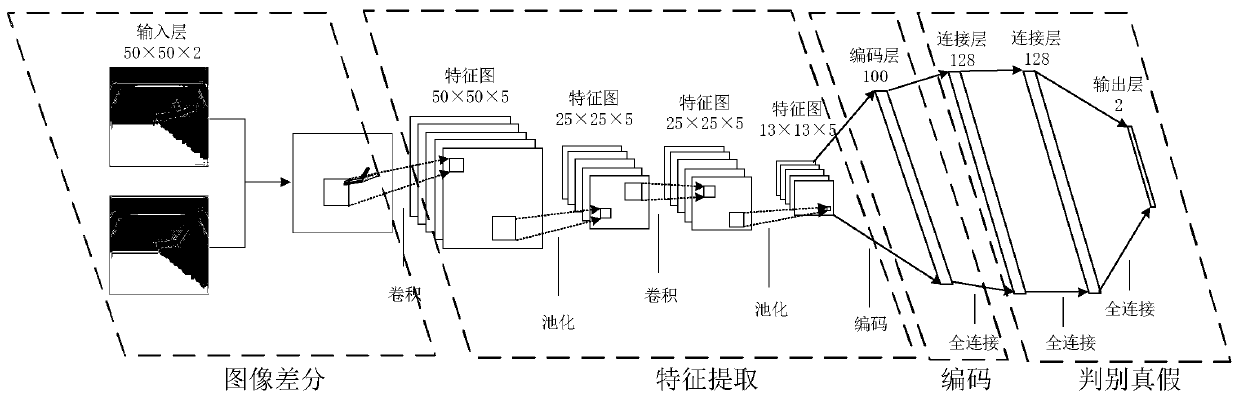

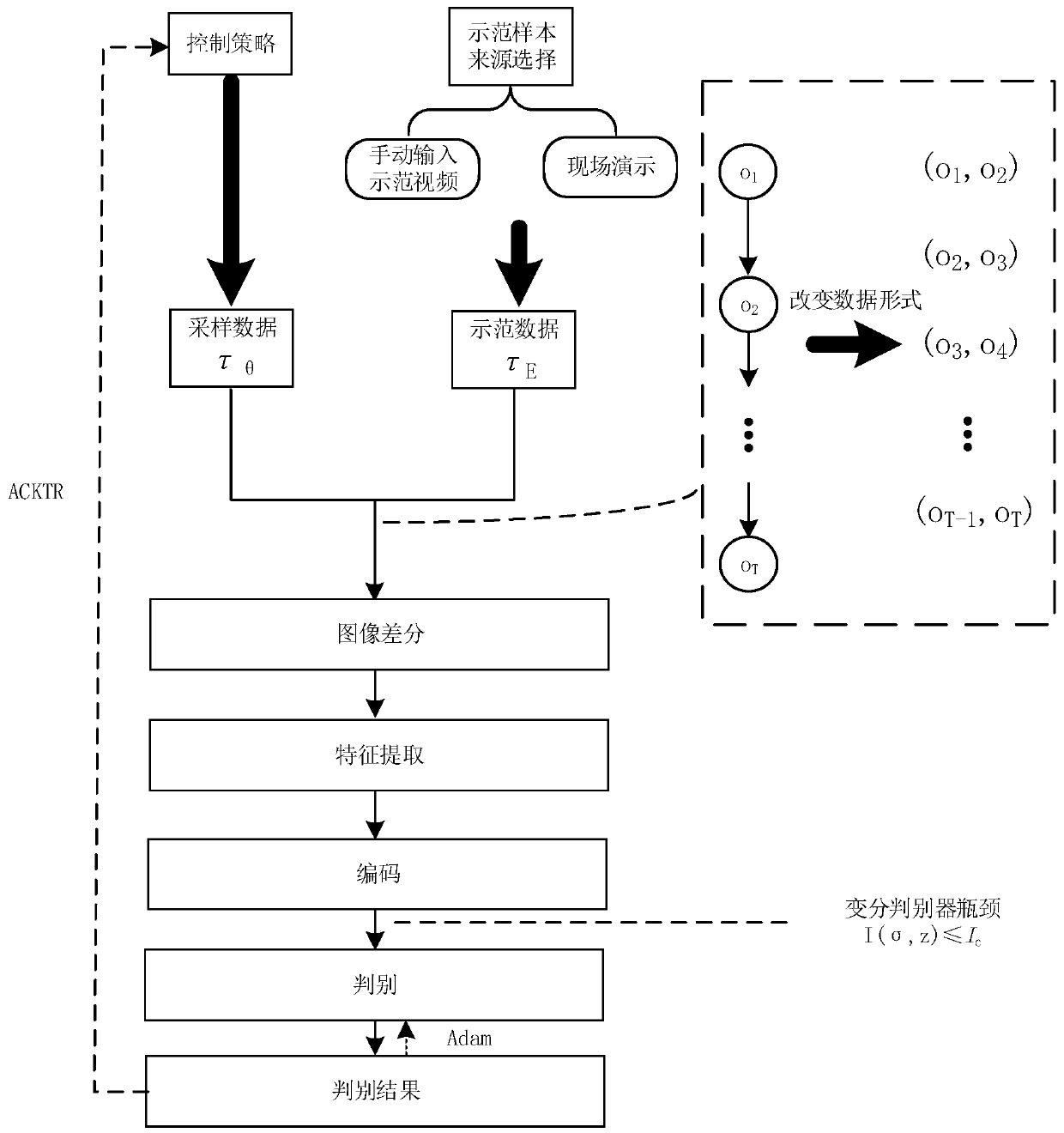

Mechanical arm action learning method and system based on third-person imitation learning

ActiveCN111136659ABreak the balance of the gameGame balance maintenanceProgramme-controlled manipulatorThird partyAutomatic control

The invention discloses a mechanical arm action learning method and system based on third-person imitation learning. The method and system are used for automatic control of a mechanical arm, so that the arm can learn how to complete a control task by watching a third-party demonstration. Samples exist in video form, which avoids the need for a large number of sensors to obtain state information. An image difference method is used in the discriminator module so that it can ignore the appearance and environment background of the learning object, which allows third-party demonstration data to be used for imitation learning and greatly reduces the sample acquisition cost. A variational discriminator bottleneck is used in the discriminator module to restrain the discriminator's accuracy on demonstrations generated by the mechanical arm, so that the training of the discriminator module and the control strategy module is better balanced. The demonstrated action of a user can be imitated quickly, operation is simple and flexible, and the requirements on the environment and demonstrators are low.

Owner:NANJING UNIV

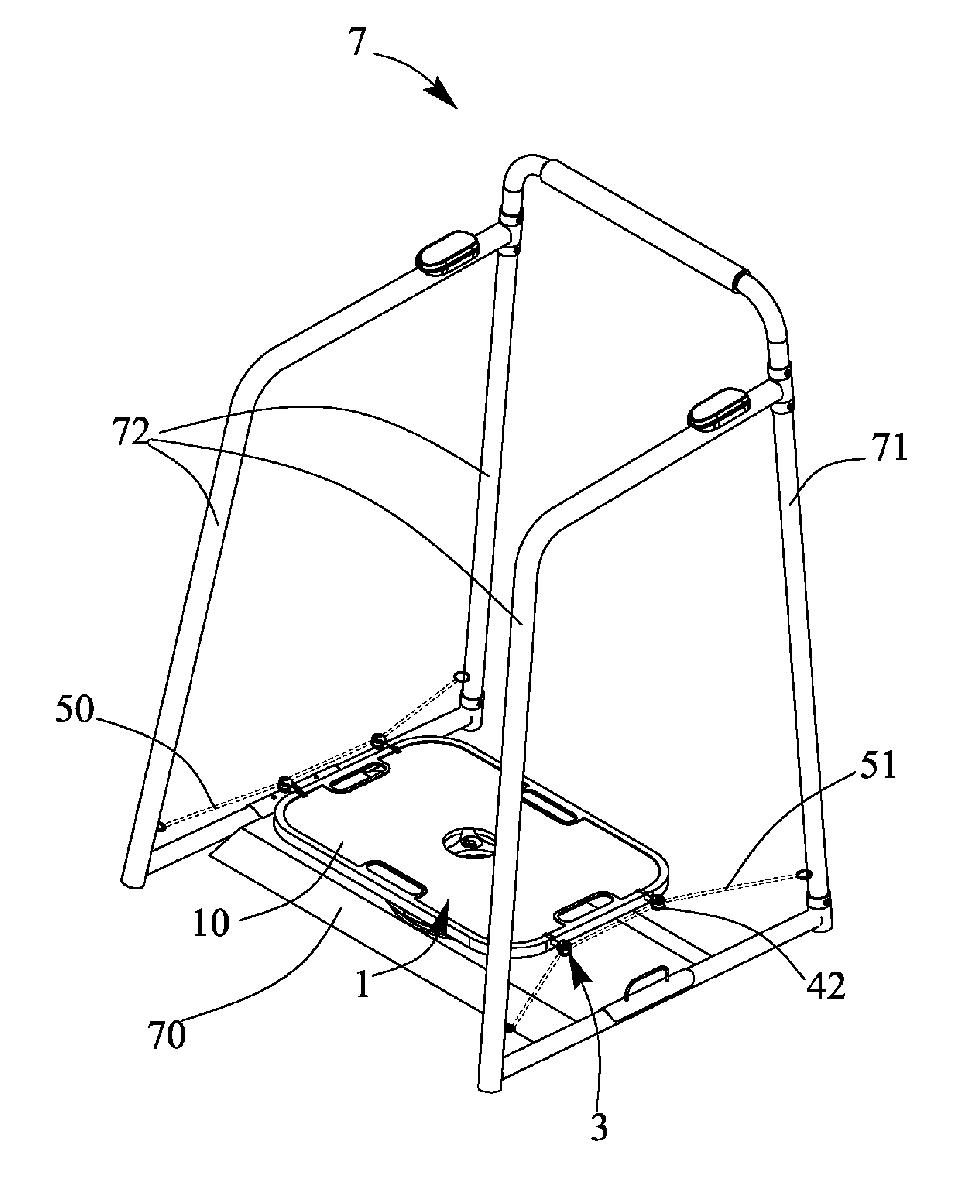

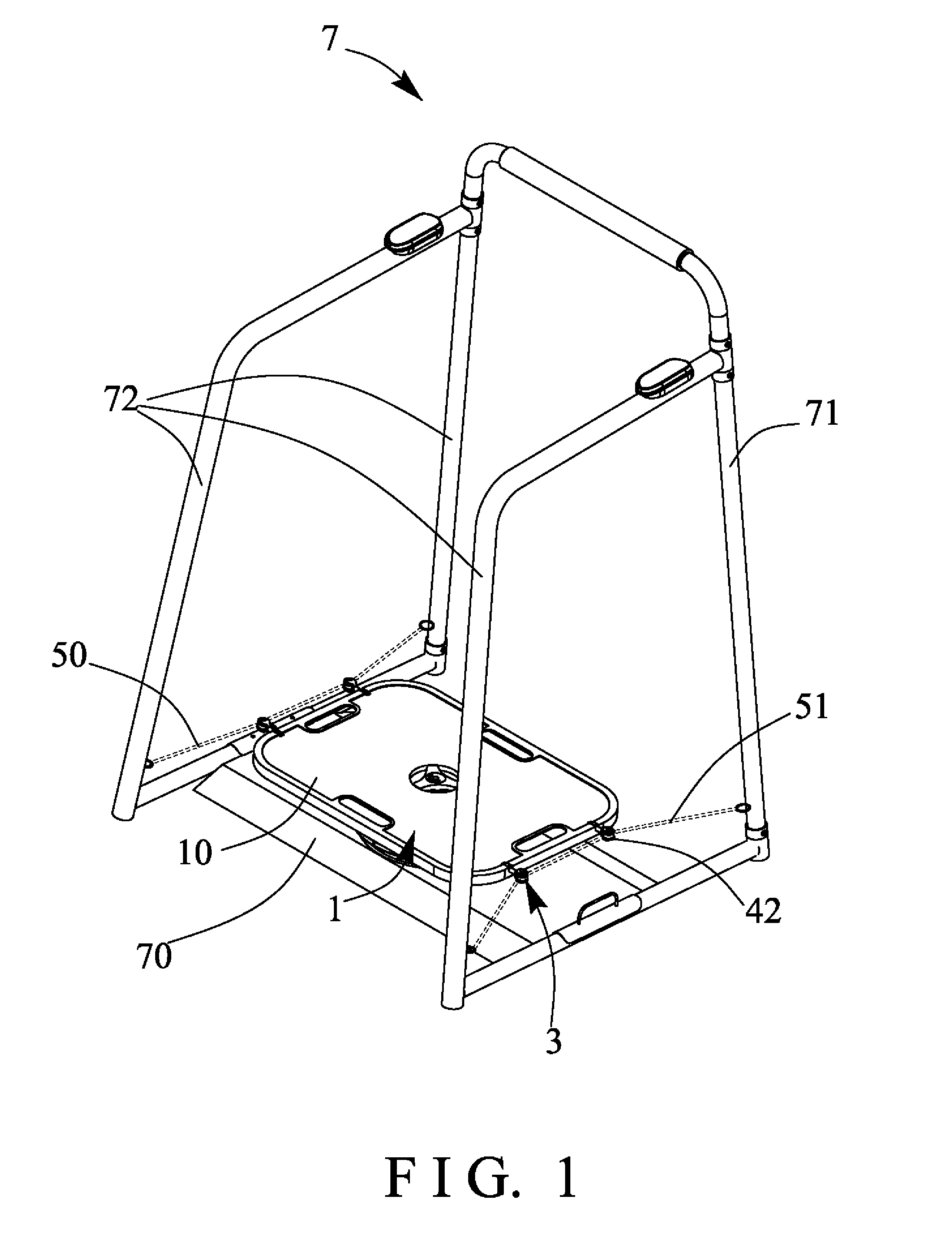

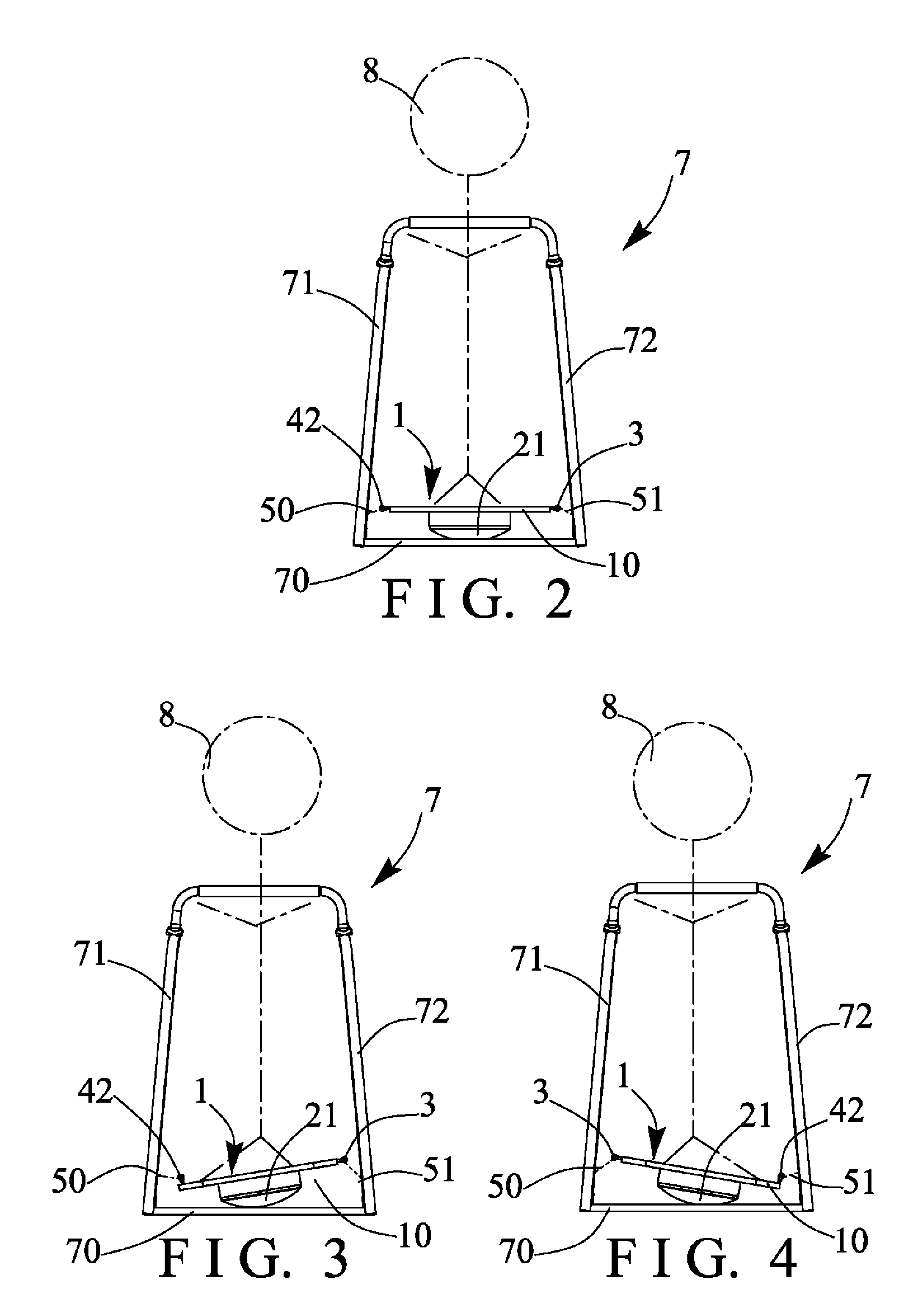

Pivotal pulley for exercise machine

InactiveUS20140018218A1Stable supportEasy to operateStiltsMuscle exercising devicesPhysical exerciseEngineering

A physical exercising machine includes a platform to support a user, an aperture formed in the platform, a pulley device includes a shaft for engaging with the aperture of the base and for pivotally attaching to the base, a shank is extended from the shaft and pivotal relative to the base with the shaft, a bracket is rotatably attached to the shank, and a pulley member is rotatably attached to the bracket with a spindle, and a cable is engaged with the pulley member, the pulley member is pivotal relative to the base with the shaft and rotatable relative to the shank for preventing the pulling cable from being disengaged from the pulley member and for allowing the user to suitably actuate or operate the pulling cable.

Owner:CHEN PAUL

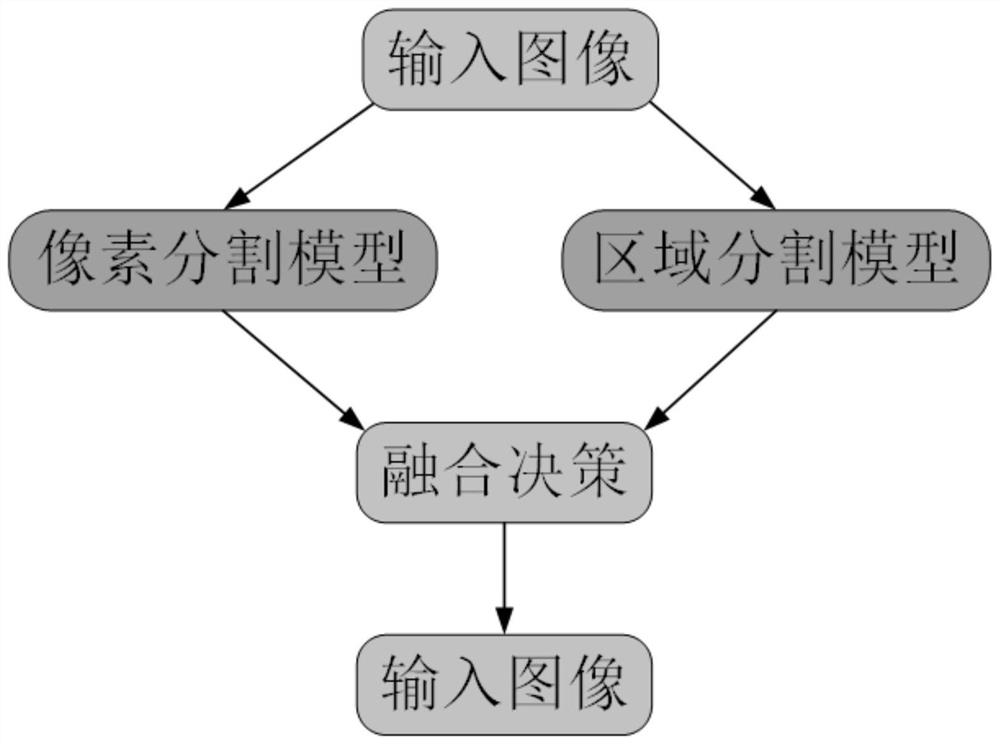

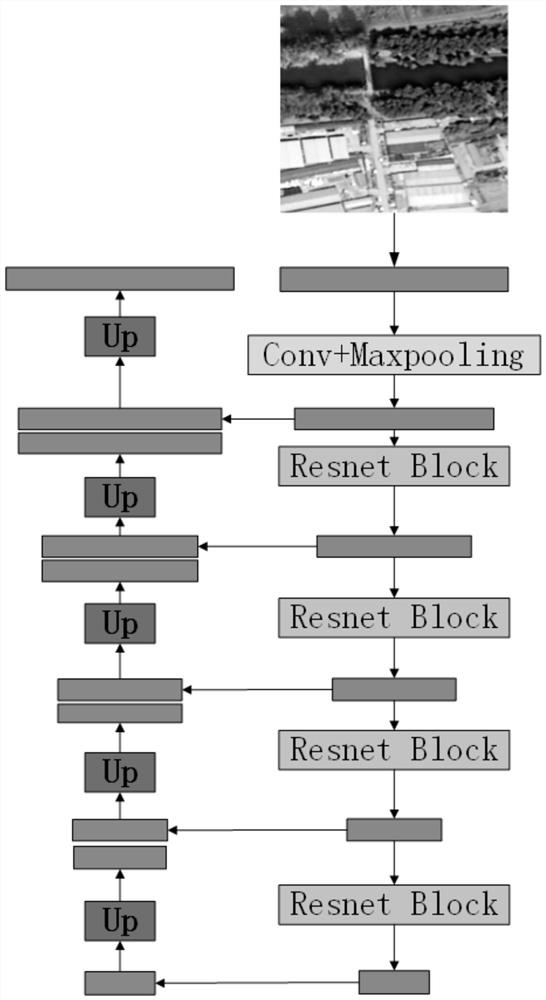

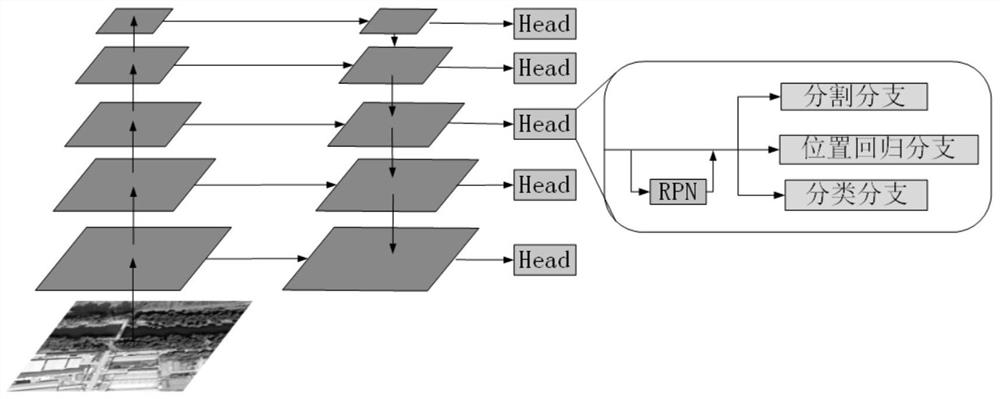

Building detection method based on pixel and region segmentation decision fusion

PendingCN111968088AImprove spatial continuityImprove liquidityImage enhancementImage analysisSensing dataTest sample

The invention discloses a building detection method based on pixel and region segmentation decision fusion. The method comprises the following steps: constructing a pixel segmentation model that introduces a residual structure and a region-based segmentation model that introduces a feature pyramid network, which together form a double-segmentation network; generating a training sample set and a test sample set from an optical remote sensing data set; preprocessing the images in the training sample set; training the pixel segmentation model with a mixed supervision loss that combines the Dice loss and the cross-entropy loss; inputting the test sample set into the trained double-segmentation network and outputting the prediction result of each model; and fusing the prediction results of the double-segmentation network according to the decision scheme to output the final detection result for the test sample set. The method attends to the spatial consistency of large-scale buildings, retains the multi-scale features of small-scale buildings, preserves the richness of building features, and improves building detection accuracy.
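
A mixed loss combining Dice loss and cross-entropy loss can be sketched as follows for a flattened binary mask. This is a minimal illustration, not the patent's formulation: the relative weighting (`dice_weight`), the smoothing constants, and the function names are assumptions.

```python
import math


def dice_loss(probs, targets, eps=1e-6):
    """1 - Dice coefficient between predicted probabilities and binary labels."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    total = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


def cross_entropy_loss(probs, targets, eps=1e-12):
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    losses = []
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        losses.append(-(t * math.log(p) + (1 - t) * math.log(1.0 - p)))
    return sum(losses) / len(losses)


def mixed_loss(probs, targets, dice_weight=1.0):
    """Cross-entropy plus a weighted Dice term (the weight is an assumed knob)."""
    return cross_entropy_loss(probs, targets) + dice_weight * dice_loss(probs, targets)
```

The cross-entropy term scores each pixel independently, while the Dice term scores the overlap of the whole predicted region with the labeled region.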

Owner:XIDIAN UNIV

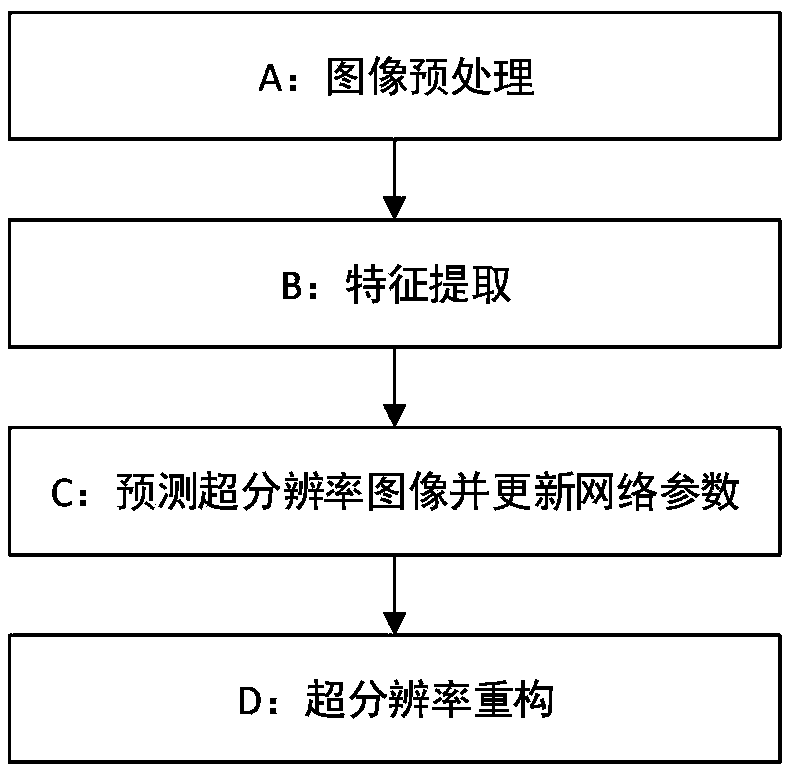

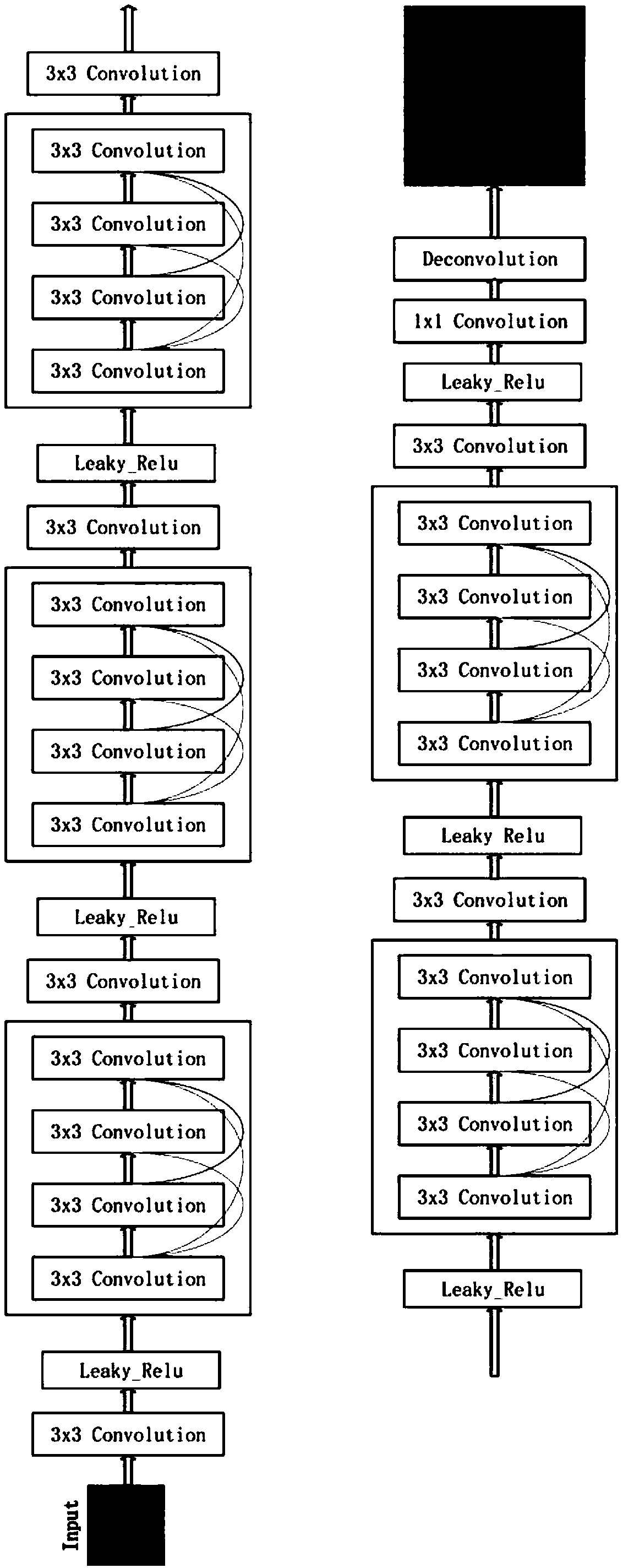

Image super-resolution method based on densely linked neural network, storage medium and terminal

InactiveCN109544457AImprove the ability to extract low-frequency and high-frequency features of imagesImprove the effectGeometric image transformationNeural architecturesImage resolutionDeconvolution

The invention discloses an image super-resolution method based on a densely linked neural network, a storage medium and a terminal. The method includes: preprocessing an image; feature extraction, in which a densely linked neural network is built, the low-resolution image (Input) is fed into the entrance of the network, and the feature information contained in Input is extracted; super-resolution prediction and parameter update, in which upsampling / deconvolution is performed on the feature-extracted image to obtain the predicted image (predict), the error between predict and the real image (label) is calculated, and the parameters of the densely linked neural network are updated by back propagation; and super-resolution reconstruction. The method can markedly improve a deep neural network's ability to extract the low-frequency and high-frequency features of an image, improve the super-resolution result, and increase the information a picture provides, so it applies wherever a high-resolution image with more detail is needed.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

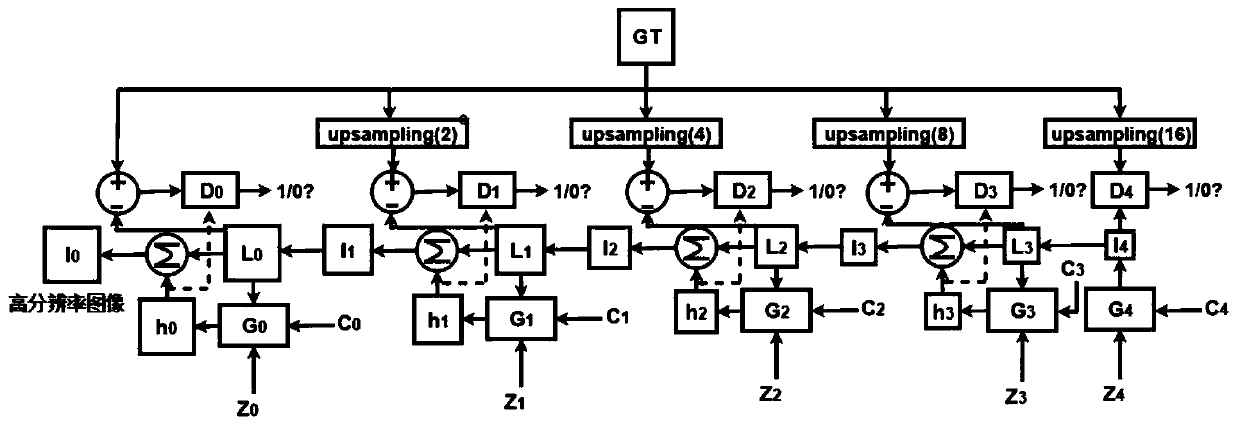

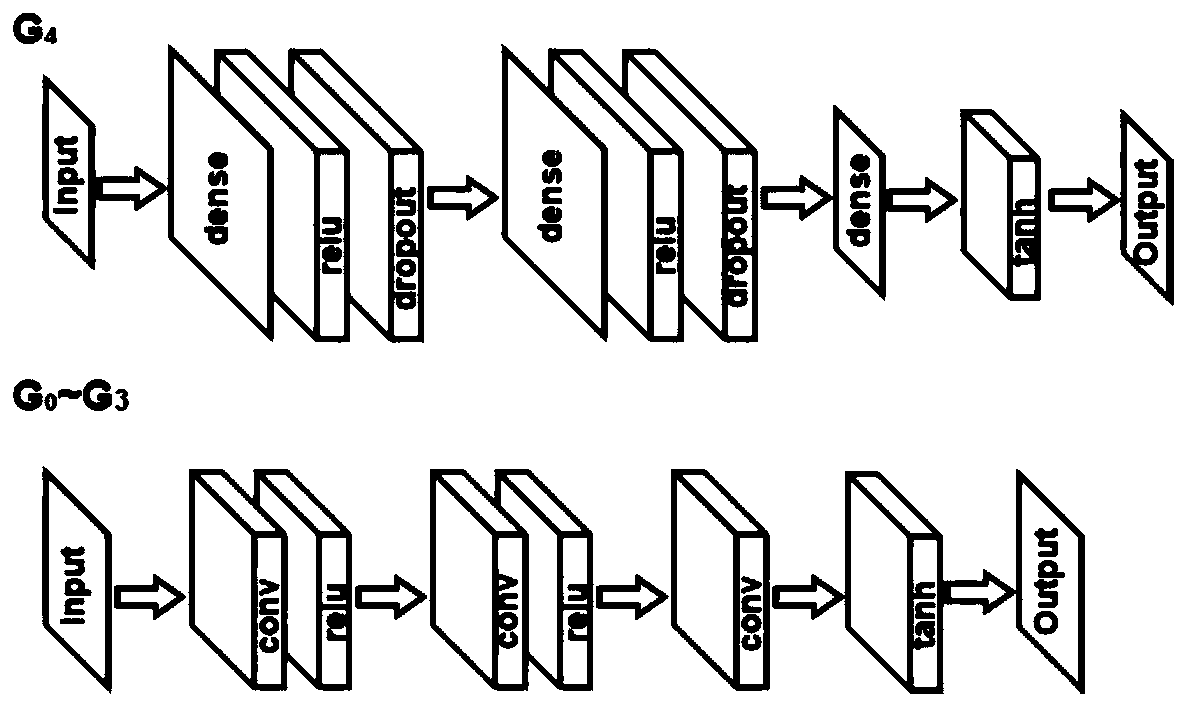

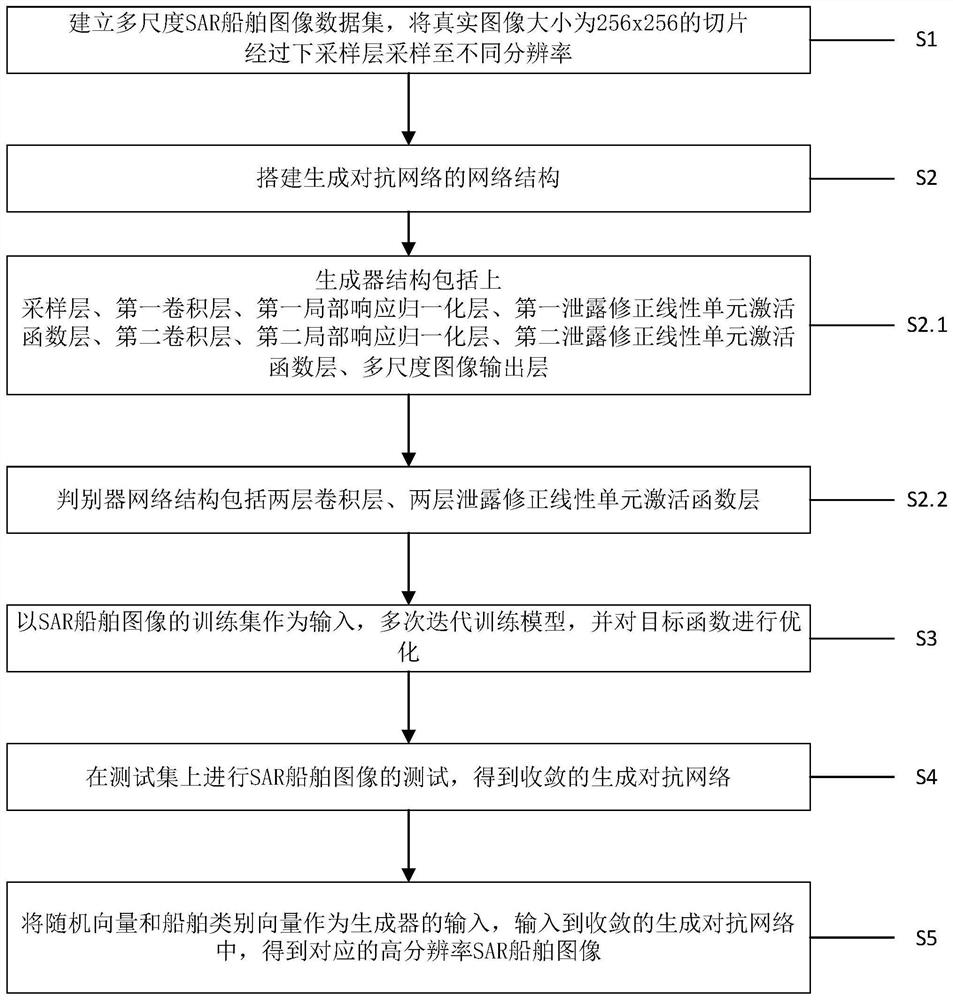

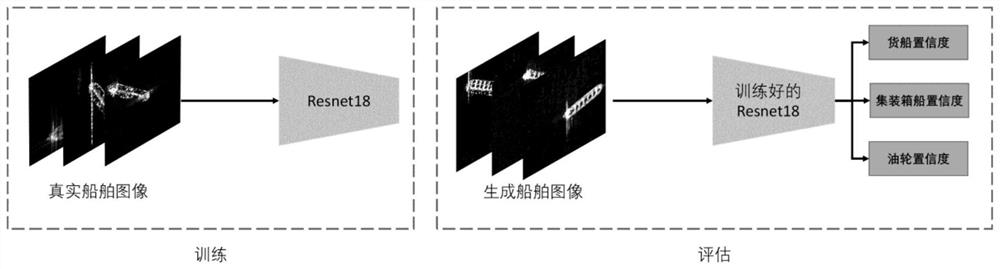

High-resolution SAR ship image generation method based on generative adversarial network

ActiveCN112132012AStable trainingQuality improvementTexturing/coloringScene recognitionData setAlgorithm

The invention relates to a high-resolution SAR image generation method based on a generative adversarial network. The method comprises the steps of: first, establishing a multi-scale SAR ship image data set by sampling slices with a real image size of 256*256 to different resolutions through a down-sampling layer, and establishing the network structure of the generative adversarial network; taking the training set of SAR ship images as input, iterating the training model multiple times to optimize the objective function, and testing on the test set to obtain a converged generative adversarial network; and finally, feeding noise and the ship category vector to the generator of the converged network to obtain the corresponding high-resolution SAR ship image. According to the method, a local response normalization layer is added to the generator to stabilize network training, and a multi-scale loss term is added: images generated at different scales are input into a discriminator, the losses at each scale are calculated and summed, and the quality of SAR images generated at different scales is improved.
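
The multi-scale idea above, downsampling an image to several resolutions and summing a loss over the scales, can be sketched in plain Python. This is only an illustration. Average pooling stands in for the patent's down-sampling layer, and `mean_abs_diff` stands in for the discriminator-based loss at each scale; both are assumptions, as are all function names.

```python
def downsample2x(img):
    """Average-pool a 2D list (even height and width) by a factor of 2."""
    height, width = len(img), len(img[0])
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]


def build_pyramid(img, levels):
    """Return [full resolution, 1/2, 1/4, ...] with `levels` entries."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def mean_abs_diff(a, b):
    """Placeholder per-scale loss: mean absolute pixel difference."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def multiscale_loss(real_pyramid, fake_pyramid, per_scale_loss=mean_abs_diff):
    """Sum the per-scale losses over all resolutions."""
    return sum(per_scale_loss(r, f) for r, f in zip(real_pyramid, fake_pyramid))
```

For a 256*256 slice, `build_pyramid(img, 4)` would give 256, 128, 64 and 32 pixel versions. The number of scales used in the patent is not stated in the abstract.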

Owner:AEROSPACE INFORMATION RES INST CAS

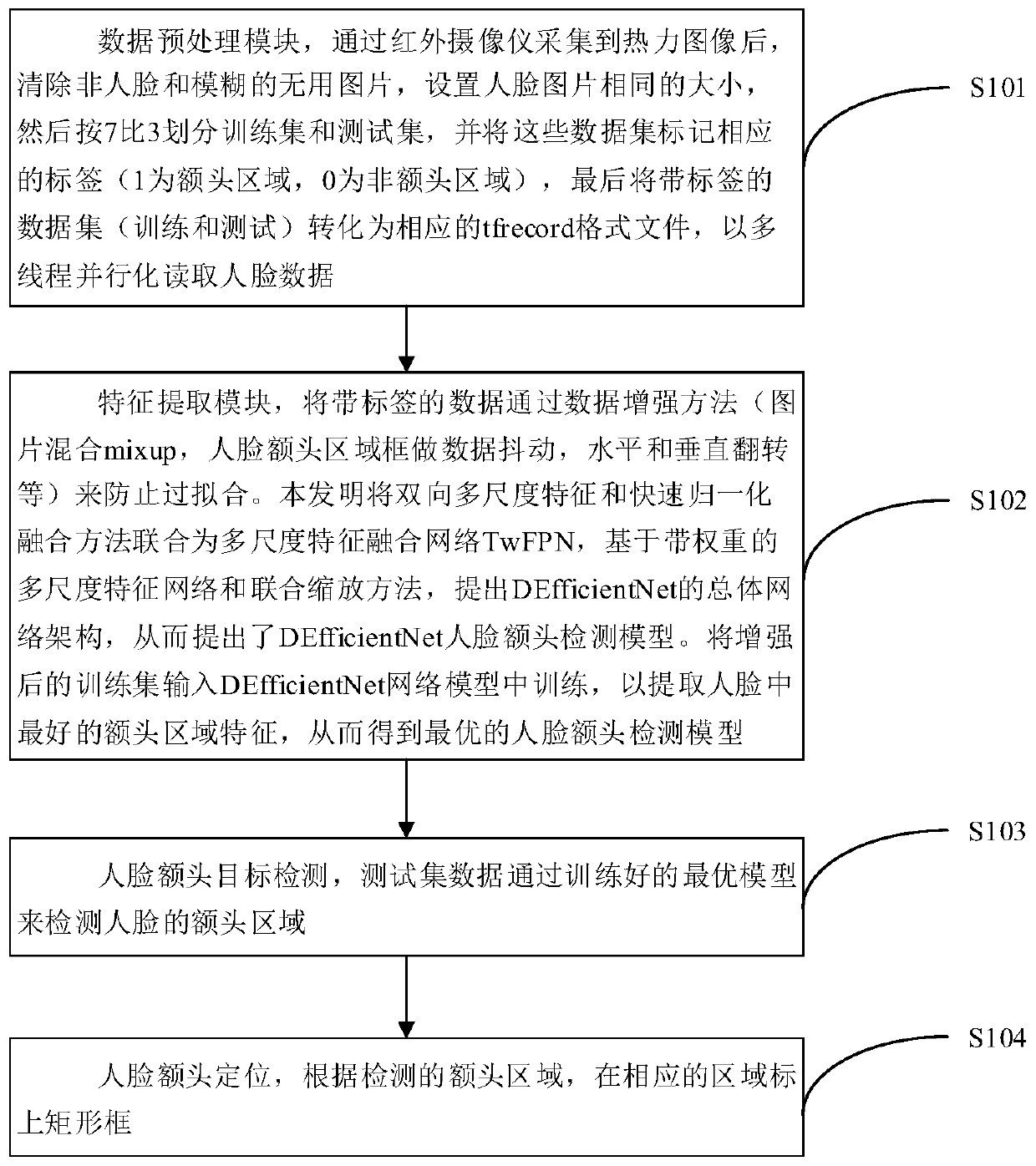

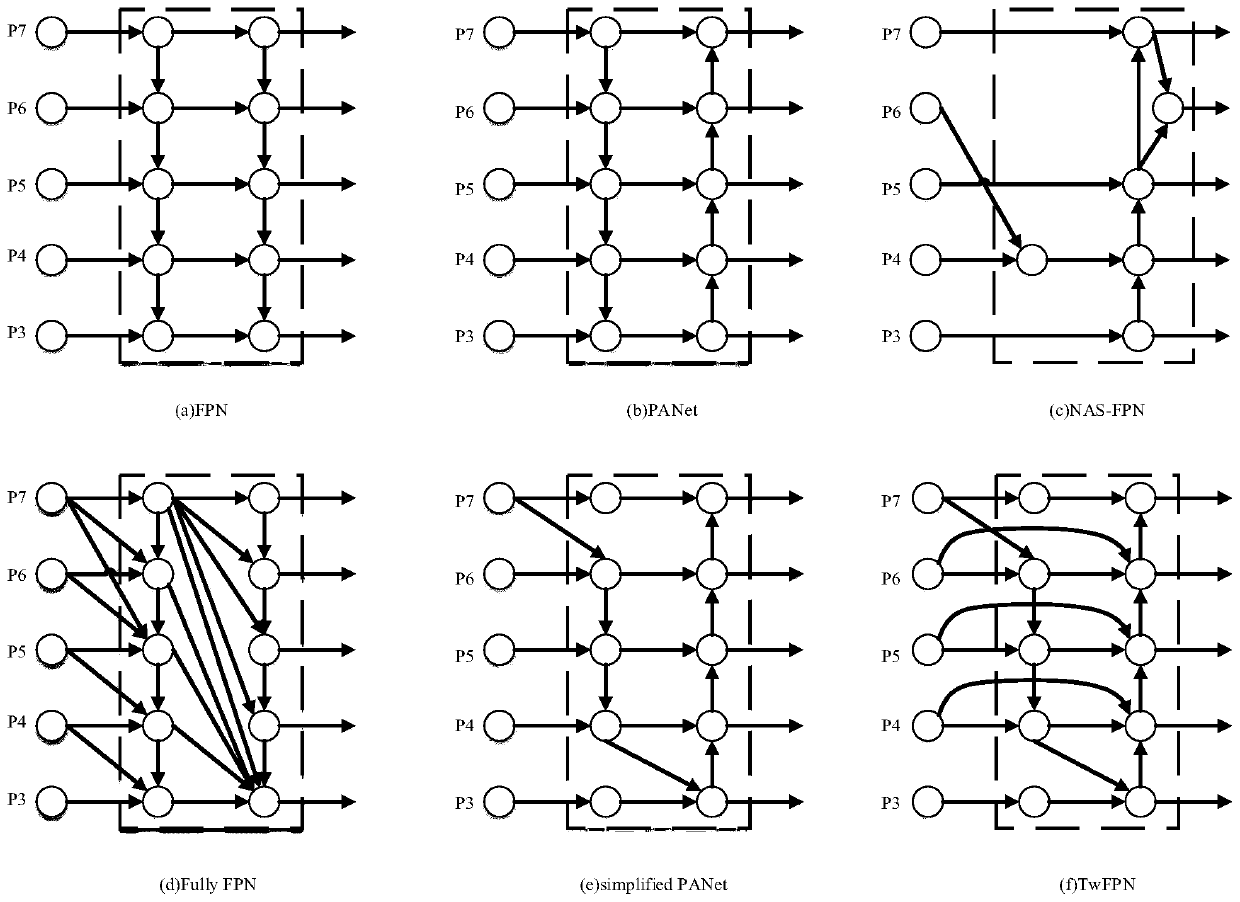

Human face forehead area detection and positioning method and system of low-resolution thermodynamic diagram

PendingCN111507248AImprove accuracyCalculation speedCharacter and pattern recognitionNeural architecturesComputer visionFeature fusion

Owner:成都东方天呈智能科技有限公司

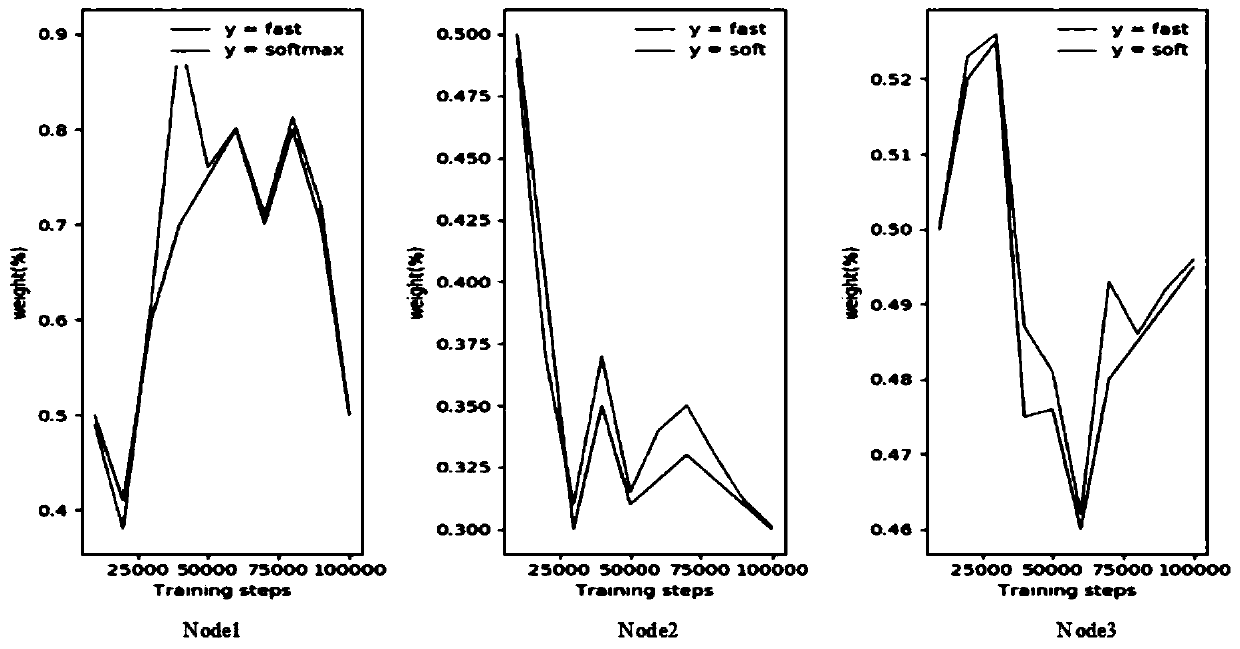

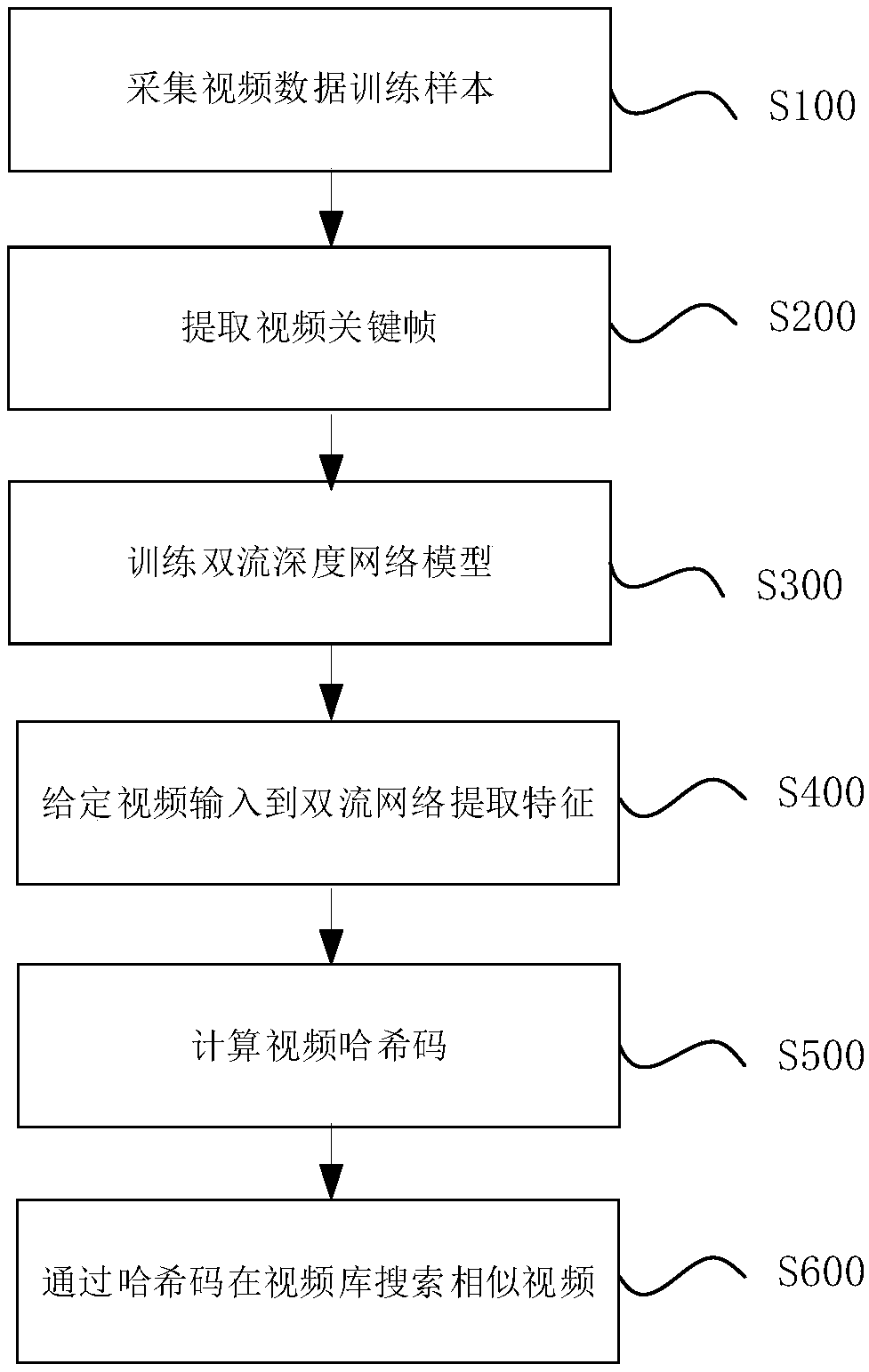

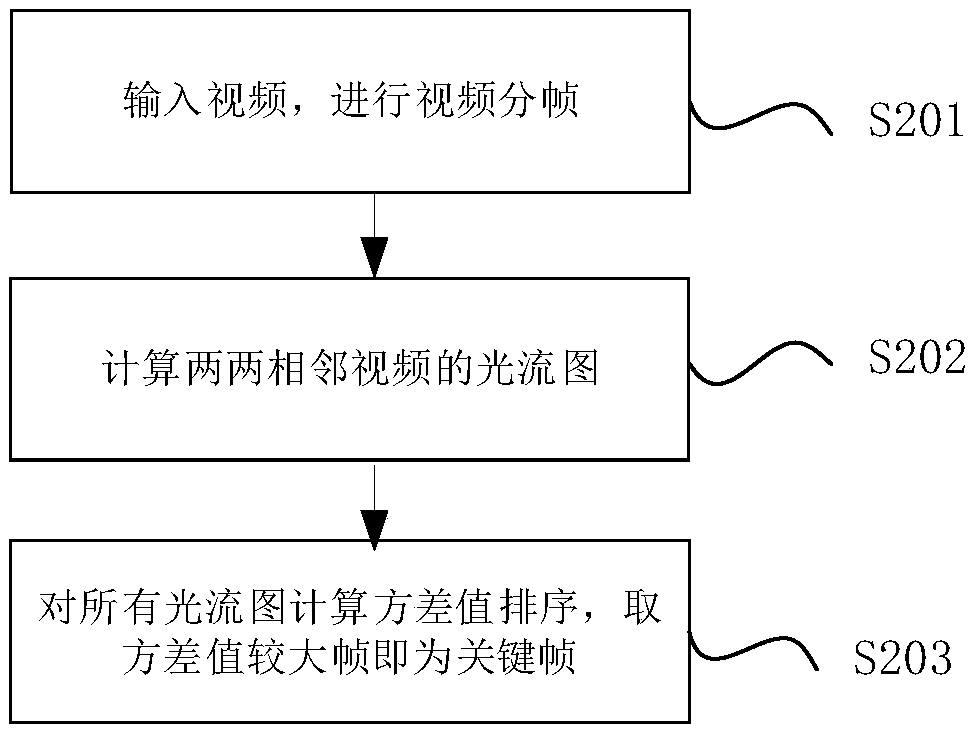

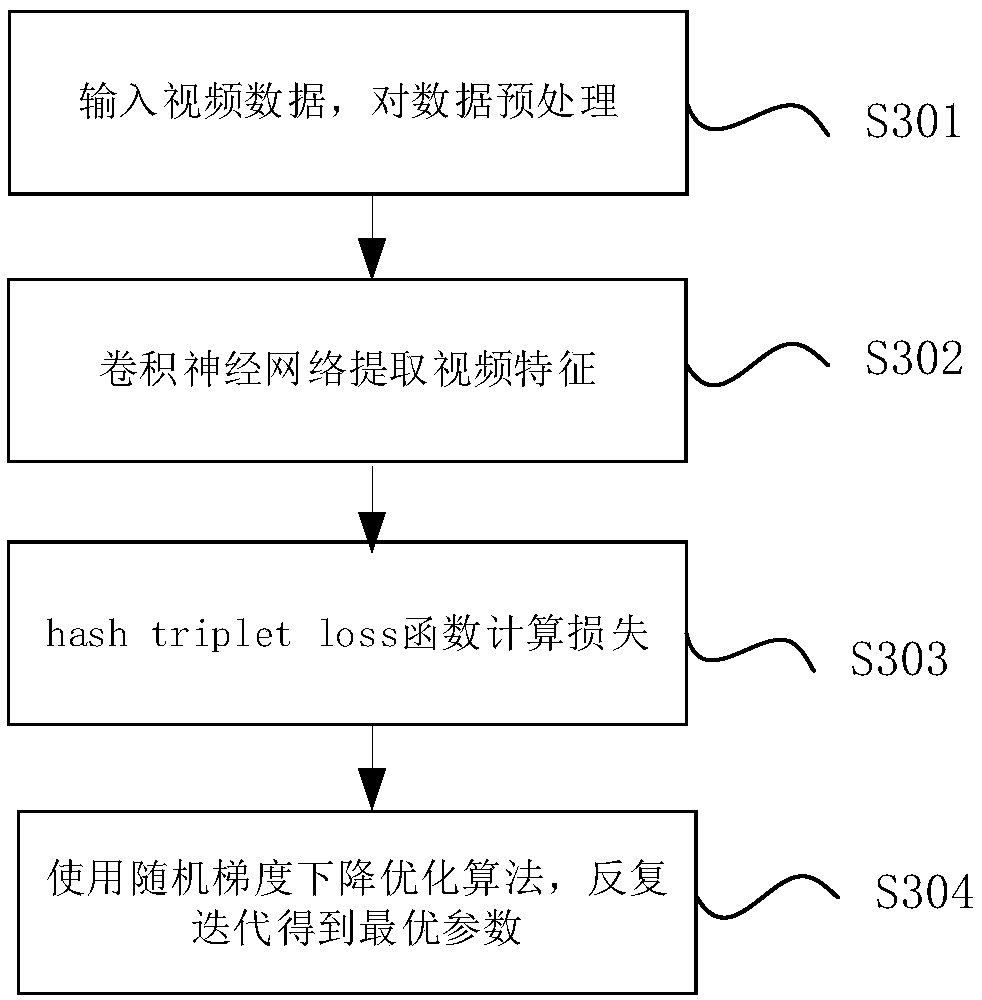

Similar video searching method and system based on a double-flow neural network

ActiveCN109492129AStable trainingFast convergenceDigital data information retrievalSpecial data processing applicationsTemporal informationHamming space

The invention provides a similar video searching method and system based on a double-flow neural network. A key frame extraction technique is used for video frame extraction, which greatly saves storage space, makes neural network training more stable, and speeds up its convergence. Video features are extracted by a double-flow convolutional neural network, so that the extracted features retain both the spatial information and the temporal information in the video and are more robust. The Hamming distance is used to measure the similarity of videos; because distance computation in Hamming space is a bit operation, its cost is far lower than in the original space even for a complex retrieval algorithm, which makes retrieval efficient.
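
The bit-operation point can be made concrete with a short sketch. It assumes each video has already been mapped to a binary hash code stored as a Python integer. The function names and the toy database are hypothetical, and the hashing network itself is not shown.

```python
def hamming_distance(code_a, code_b):
    """Number of differing bits: XOR the codes, then count the set bits."""
    return bin(code_a ^ code_b).count("1")


def search_similar(query_code, database, top_k=3):
    """Return the ids of the top_k videos whose codes are closest to the query."""
    ranked = sorted(
        database.items(),
        key=lambda item: hamming_distance(query_code, item[1]),
    )
    return [video_id for video_id, _ in ranked[:top_k]]


# Toy database of 4-bit codes (real codes would be much longer).
videos = {"a": 0b1011, "b": 0b1010, "c": 0b0100}
closest = search_similar(0b1011, videos, top_k=2)
```

Each comparison costs one XOR and one bit count, whereas a distance in the original feature space needs floating-point arithmetic over every feature dimension.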

Owner:WUHAN UNIV OF TECH

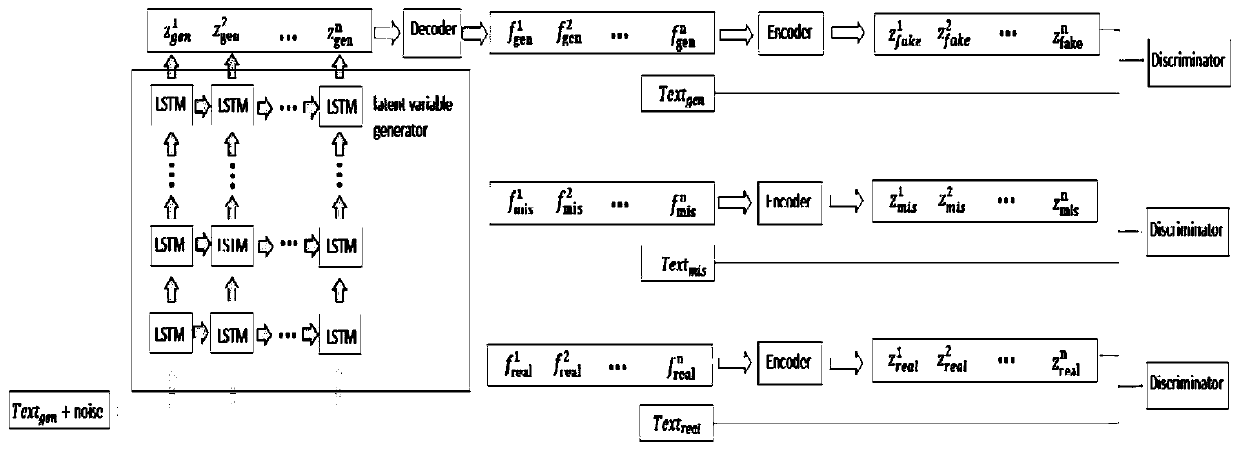

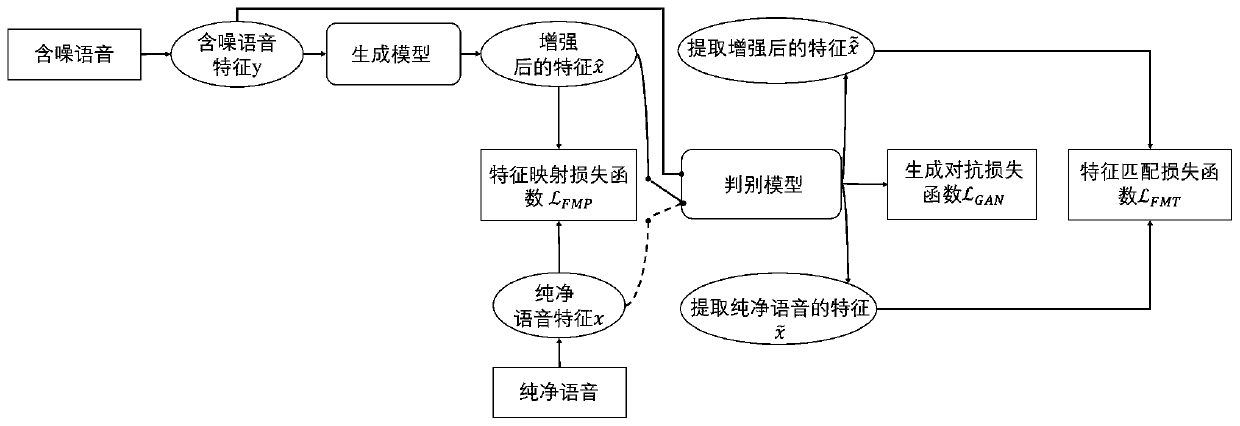

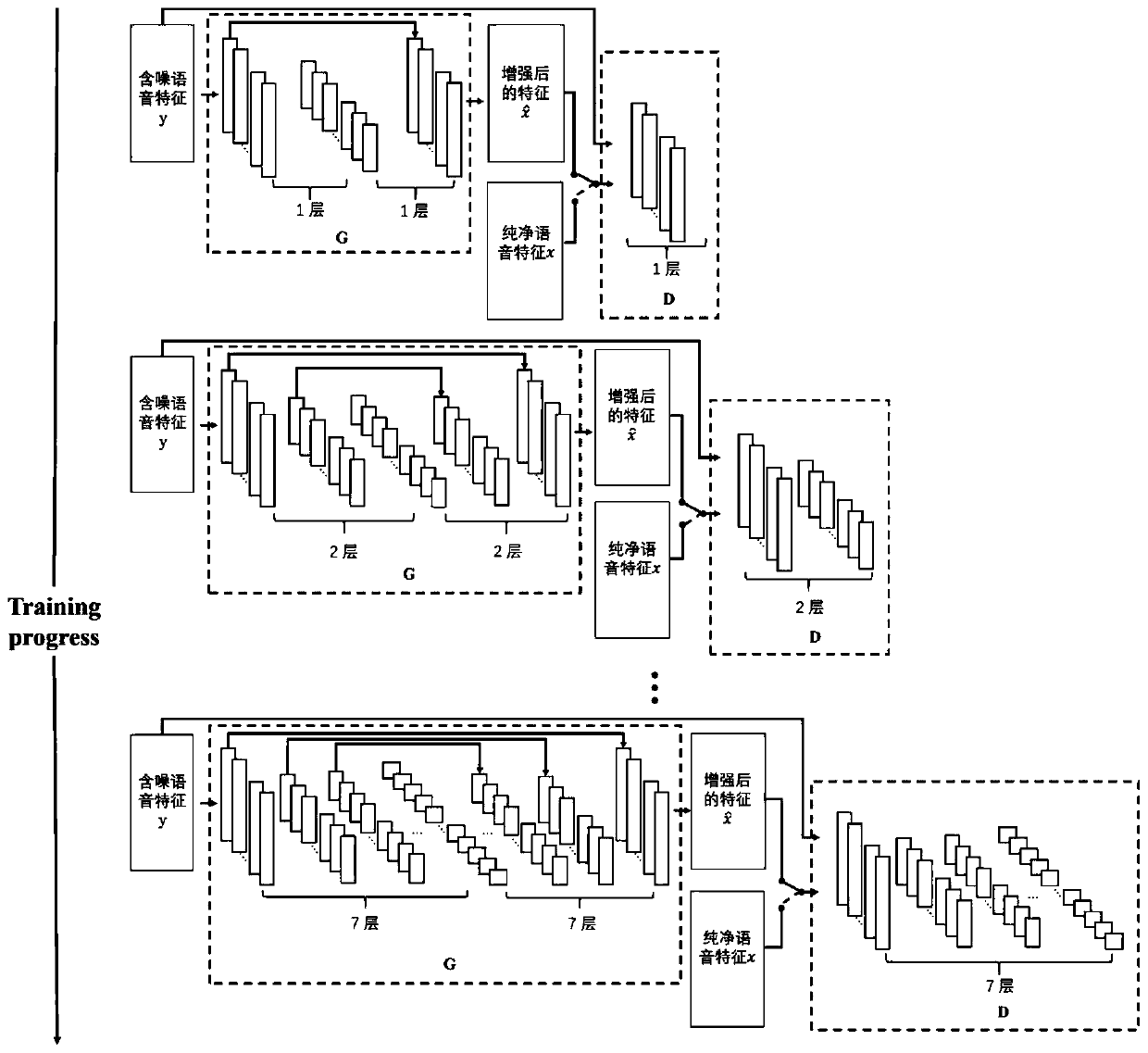

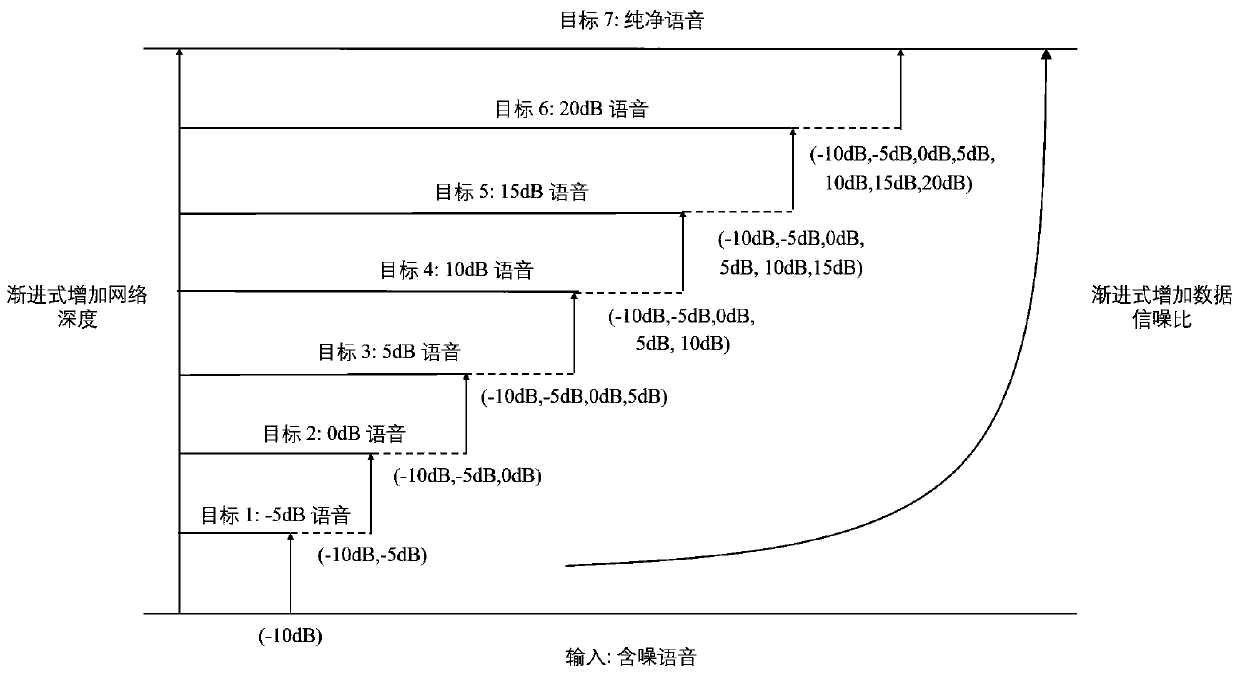

Voice enhancing method based on generative adversarial network

ActiveCN110428849AImprove signal-to-noise ratioRebuild distributionSpeech analysisDiscriminatorGenerative adversarial network

The invention discloses a voice enhancing method based on a generative adversarial network. The method is characterized by comprising the steps of: 1, adopting a progressive training mode to reconstruct the distribution of pure voice; 2, adopting a discriminator-based feature matching strategy to optimize the enhancement performance of the generator; and 3, training on multiple types of noise data to produce the generative adversarial network. According to the method, the discriminator-based feature matching method is combined with a traditional feature mapping method, which effectively reduces the difference between the feature distribution of the enhanced voice and that of the pure voice. In addition, a GAN objective function is used to jointly optimize the network, so that the loss between the generator and the discriminator is minimized.

Owner:珠海亿智电子科技有限公司
