Method for training acoustic model based on CTC (Connectionist Temporal Classification)

An acoustic-model training technology, applied in speech analysis, speech recognition, instruments, and related fields. It addresses the problems that CTC acoustic models perform worse than CE (cross-entropy) models, that model training is unstable, and that performance degrades on small and medium datasets. Its effects are improved independence and recognition performance, a reduced number of search paths, and easy parallel computation.

Embodiment Construction

[0029] The present invention will be further described in detail below in conjunction with the accompanying drawings.

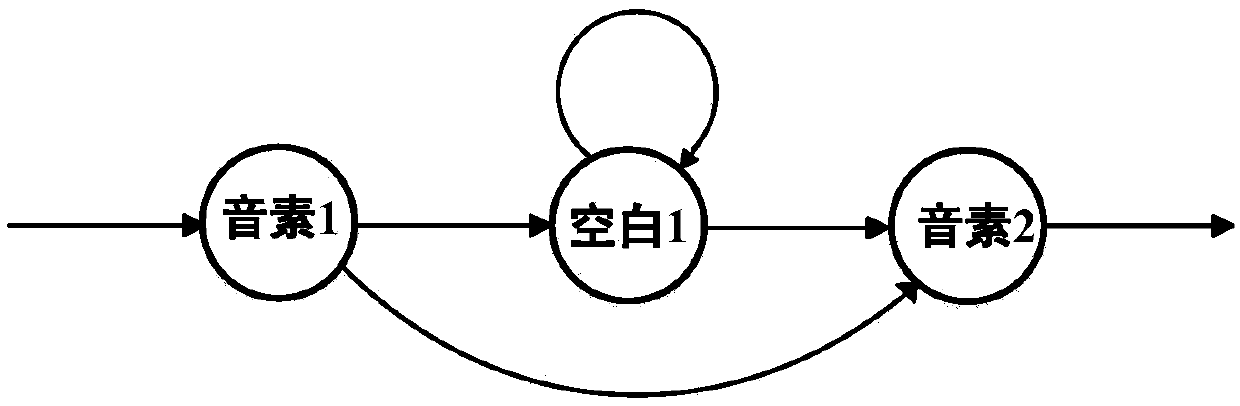

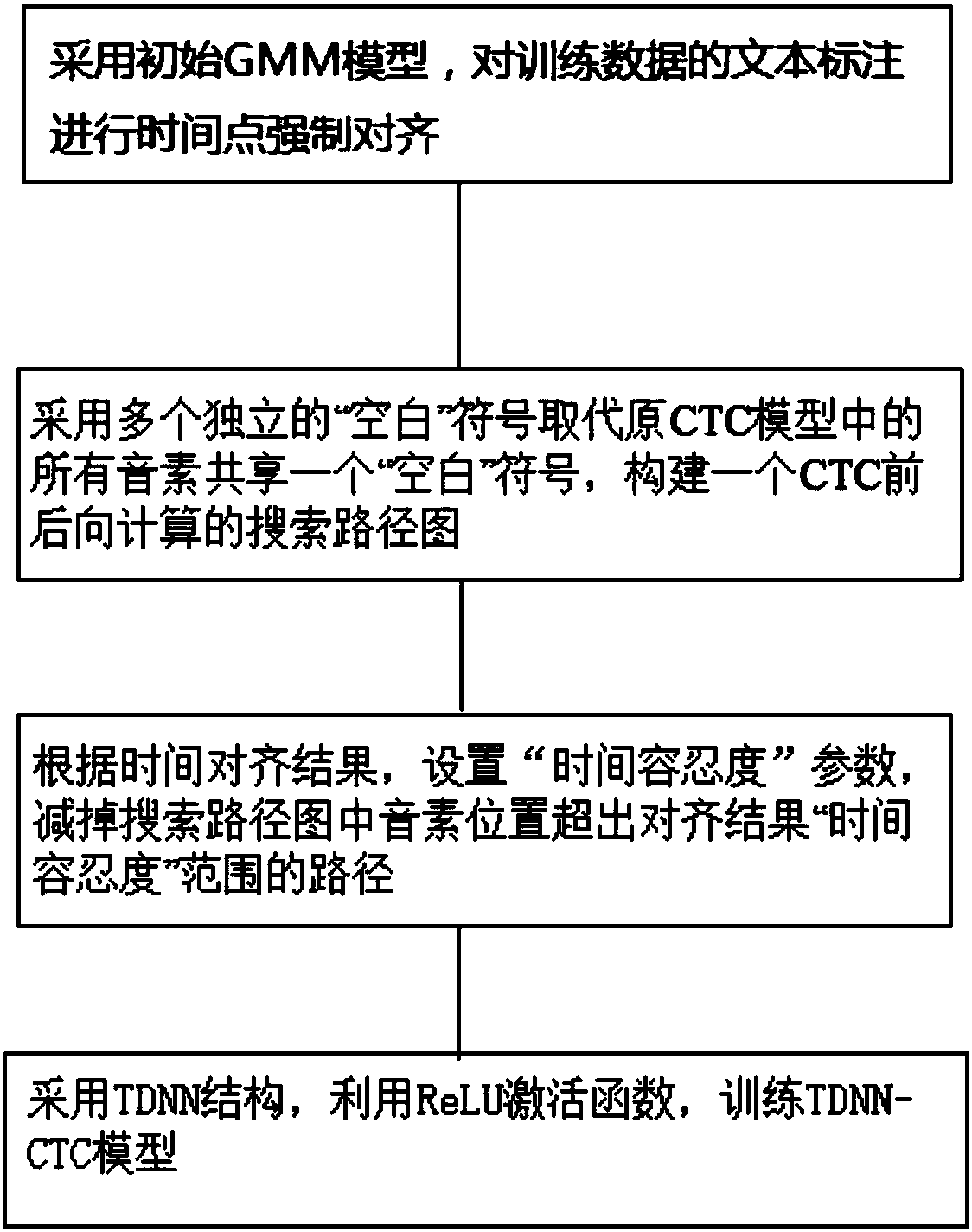

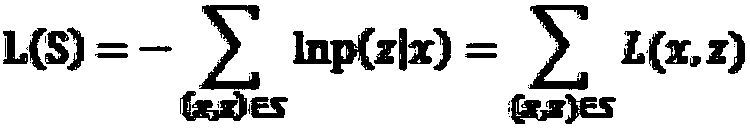

[0030] As shown in Figure 2, the present invention provides a CTC-based method for training an acoustic model. The method first uses multiple independent "blank" symbols to replace the single "blank" symbol shared by all phonemes in the original CTC model. Next, the phoneme label sequences of the training data are aligned in time using an initial GMM model to obtain the approximate location of each phoneme. A search path graph for the CTC forward-backward computation is then constructed for the phoneme label sequence after the "blank" symbols have been added. A configurable parameter, "Time Tolerance", then allows each phoneme to appear slightly earlier or later in the search path, but only within the "Time Tolerance" range. This parameter is the time range within which each element may occur and is usually set to 50-300 milliseconds. In this embodi...
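The two ideas in this paragraph, per-phoneme "blank" symbols and a time-tolerance window around an initial alignment, can be illustrated with a minimal Python sketch. It is not the patent's implementation. The function names, the `<blank_x>` naming, the placement of each blank directly after its phoneme, the 10 ms frame shift, and the `(start_frame, end_frame)` alignment format are all assumptions made for illustration.

```python
from typing import List, Tuple


def extend_with_blanks(phonemes: List[str]) -> List[str]:
    """Give every phoneme its own blank symbol instead of one shared blank.

    Assumption: the phoneme-specific blank is placed right after its phoneme.
    """
    extended = []
    for p in phonemes:
        extended.append(p)
        extended.append(f"<blank_{p}>")
    return extended


def tolerance_mask(
    alignment: List[Tuple[int, int]],
    num_frames: int,
    tolerance_ms: int = 100,
    frame_shift_ms: int = 10,  # assumed frame shift, not stated in the text
) -> List[List[bool]]:
    """Return mask[t][s]: may extended label s be visited at frame t?

    alignment[i] is the (start_frame, end_frame) of phoneme i, inclusive,
    as produced by some initial aligner (a GMM model in the text).
    Each phoneme and its blank may start or end up to `tolerance_ms`
    earlier or later than the alignment says.
    """
    tol = tolerance_ms // frame_shift_ms
    num_labels = 2 * len(alignment)
    mask = [[False] * num_labels for _ in range(num_frames)]
    for i, (start, end) in enumerate(alignment):
        lo = max(0, start - tol)
        hi = min(num_frames - 1, end + tol)
        for t in range(lo, hi + 1):
            mask[t][2 * i] = True       # the phoneme itself
            mask[t][2 * i + 1] = True   # its own blank
    return mask


if __name__ == "__main__":
    labels = extend_with_blanks(["a", "b"])
    mask = tolerance_mask([(0, 9), (10, 19)], num_frames=20, tolerance_ms=50)
    allowed = sum(cell for row in mask for cell in row)
    print(labels, allowed, "of", 20 * len(labels), "lattice cells allowed")
```

A forward-backward pass restricted to the cells where the mask is true visits fewer paths than one over the full lattice, which is consistent with the reduced search-path count claimed above. How the patent actually builds its path graph is not recoverable from the truncated text.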
