Multimodal continuous emotion recognition method, service reasoning method and system

A technology in the field of emotion recognition, including speech emotion recognition, applied to service robots. It addresses the poor robustness, scarce datasets, and low recognition accuracy of existing approaches, with the effect of improving recognition accuracy and user satisfaction.


Examples

Embodiment 1

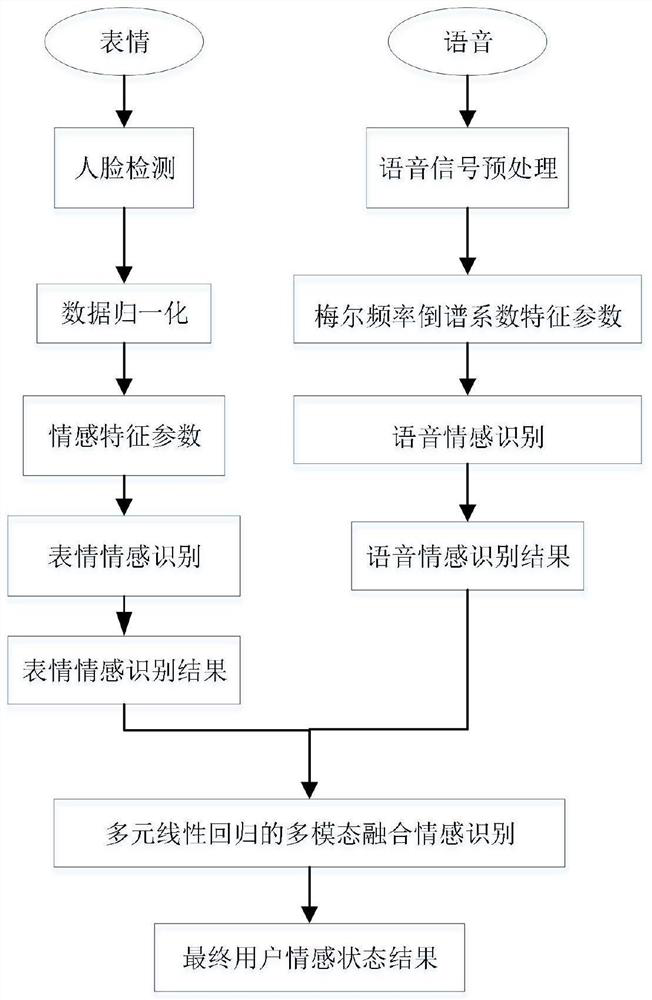

[0055] This embodiment discloses a multimodal continuous emotion recognition method based on facial expressions and speech. As shown in Figure 1, the method includes the following steps:

[0056] Step 1: Obtain video data containing the user's facial expressions and voice;
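Step 1 does not say how the visual and audio streams are paired in time. As a minimal sketch, assuming a constant video frame rate and an audio track already decoded into samples, each video frame can be mapped to the span of audio samples it covers. The names `align_frames_to_audio` and `FrameAudioWindow` are hypothetical and do not come from the source.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FrameAudioWindow:
    frame_index: int
    start_sample: int  # inclusive
    end_sample: int    # exclusive


def align_frames_to_audio(num_frames: int, fps: float, sample_rate: int,
                          num_samples: int) -> list[FrameAudioWindow]:
    """Assign to every video frame the audio samples that fall in its time interval.

    Assumes a constant frame rate and that audio and video start at t = 0.
    Windows are clipped to the available audio, so trailing frames may be empty.
    """
    if fps <= 0 or sample_rate <= 0:
        raise ValueError("fps and sample_rate must be positive")
    windows = []
    for i in range(num_frames):
        t_start, t_end = i / fps, (i + 1) / fps
        start = min(round(t_start * sample_rate), num_samples)
        end = min(round(t_end * sample_rate), num_samples)
        windows.append(FrameAudioWindow(i, start, end))
    return windows
```

For example, at 30 fps and 16 kHz each frame covers roughly 533 audio samples; consecutive windows share boundaries, so no sample is assigned to two frames.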

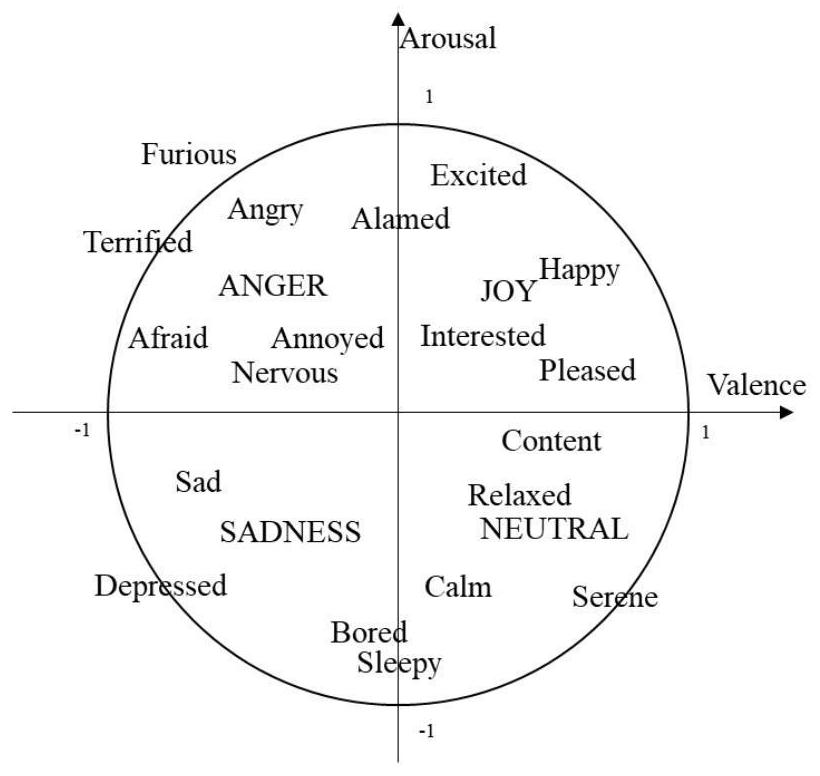

[0057] In this example, experimental verification is performed on the AVEC2013 dataset. The AVEC2013 database is an open dataset provided by the third Audio/Visual Emotion Challenge (AVEC 2013). It contains not only facial expression and speech emotion data but also emotion labels for two continuous dimensions, Arousal and Valence, as shown in Figure 2.
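Continuous Arousal and Valence labels are typically sampled on their own time grid, which need not match the video frame times. Assuming the labels are available as (time, value) pairs, one simple way to obtain a per-frame target is linear interpolation. This is a hypothetical helper (`labels_at_frames`); the source does not state how its labels were aligned to frames.

```python
import numpy as np


def labels_at_frames(label_times, label_values, frame_times):
    """Linearly interpolate continuous emotion labels onto frame timestamps.

    label_times:  (T,) increasing annotation times in seconds.
    label_values: (T, D) label values, e.g. D = 2 for (arousal, valence).
    frame_times:  (F,) video-frame timestamps in seconds.
    Returns an (F, D) array; times outside the annotated range take the
    nearest edge value (np.interp's default behaviour).
    """
    label_times = np.asarray(label_times, dtype=float)
    label_values = np.asarray(label_values, dtype=float)
    frame_times = np.asarray(frame_times, dtype=float)
    if label_values.ndim == 1:
        label_values = label_values[:, None]
    # Interpolate each label dimension independently.
    return np.stack([np.interp(frame_times, label_times, label_values[:, d])
                     for d in range(label_values.shape[1])], axis=1)
```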

[0058] Step 2: Extract face images using a pre-trained face recognition model and perform emotion recognition. Specifically, this step includes:

[0059] Step 2.1: Use a convolutional neural network with a cascade architecture to detect faces in the expression video frames, discard abnormal frames, and extract the face images;
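The source does not detail the cascaded network or define an abnormal frame. The sketch below only illustrates the cascade idea: candidate boxes pass through successive scoring stages, each rejecting low-scoring candidates, with greedy non-maximum suppression (NMS) removing duplicates between stages. The stages here are arbitrary scoring callables standing in for the CNN stages (box regression is omitted), and a frame is assumed to be "abnormal" when no face survives the cascade. All function names are hypothetical.

```python
import numpy as np


def iou(box, boxes):
    """Intersection-over-union of one [x1, y1, x2, y2] box with an (N, 4) array."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / np.maximum(area + areas - inter, 1e-12)


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep the best box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        order = rest[iou(boxes[i], boxes[rest]) < iou_thresh]
    return np.array(keep, dtype=int)


def cascade_detect(boxes, stages, thresholds, iou_thresh=0.5):
    """Filter candidate boxes through successive (scoring stage, threshold) pairs."""
    boxes = np.asarray(boxes, dtype=float).reshape(-1, 4)
    scores = np.zeros(len(boxes))
    for stage, thresh in zip(stages, thresholds):
        if len(boxes) == 0:
            break
        scores = np.asarray(stage(boxes), dtype=float)
        mask = scores >= thresh          # reject low-confidence candidates
        boxes, scores = boxes[mask], scores[mask]
        keep = nms(boxes, scores, iou_thresh)
        boxes, scores = boxes[keep], scores[keep]
    return boxes, scores


def face_boxes_or_discard(frames_candidates, stages, thresholds):
    """Return {frame_index: best face box}; frames with no surviving face are dropped."""
    kept = {}
    for idx, cands in enumerate(frames_candidates):
        boxes, scores = cascade_detect(cands, stages, thresholds)
        if len(boxes) == 0:
            continue                     # treated as an abnormal frame
        kept[idx] = boxes[int(np.argmax(scores))]
    return kept
```

Cheap early stages prune most candidates so that the costlier later stages only score a few boxes, which is the usual motivation for a cascade design.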

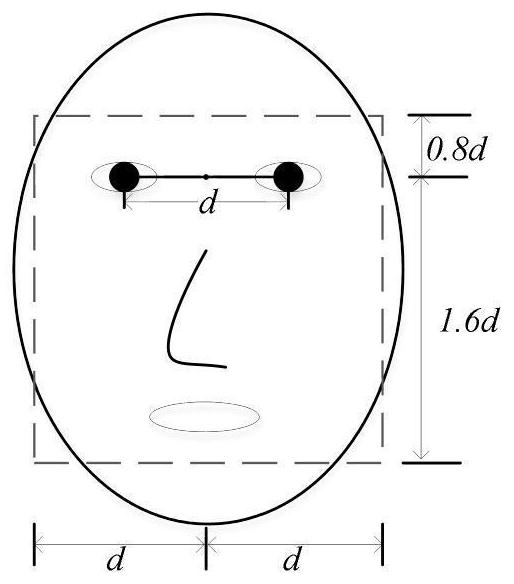

[0060] Fi...

Embodiment 2

[0107] The purpose of this embodiment is to provide a multimodal continuous emotion recognition system based on facial expressions and speech, including:

[0108] A data acquisition module, configured to acquire video data containing the user's facial expressions and voice;

[0109] A facial expression emotion recognition module, configured to extract face images from the video image sequence, perform feature extraction on the face images to obtain facial expression emotion features, and perform continuous emotion recognition on these features with a pre-trained deep learning model;

[0110] A speech emotion recognition module, configured to extract Mel-frequency cepstral coefficients (MFCCs) from the speech data as speech emotion features, and perform continuous emotion recognition on these features with a pre-trained transfer learning network;

[0111] A data fusion module, configured to fuse the ...
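The speech module relies on Mel-frequency cepstral coefficients. The sketch below shows the standard MFCC pipeline in NumPy (pre-emphasis, framing, Hamming window, power spectrum, triangular mel filterbank, log, orthonormal DCT-II). The parameter values (25 ms frames, 10 ms hop, 512-point FFT, 26 filters, 13 coefficients, 0.97 pre-emphasis) are common defaults assumed here, not values stated in the source, and `n_fft` is assumed to be at least the frame length.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters evenly spaced on the mel scale, shape (n_filters, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):        # rising edge
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):       # falling edge
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def dct_ii_ortho(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    i = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * i + 1) / (2 * n)) * np.sqrt(2.0 / n)
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T


def mfcc(signal, sample_rate, n_mfcc=13, frame_len=0.025, frame_step=0.010,
         n_fft=512, n_filters=26, pre_emphasis=0.97):
    """Return an (n_frames, n_mfcc) array of MFCCs for a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    # First-order high-pass filter that emphasises higher frequencies.
    emphasized = np.append(signal[0], signal[1:] - pre_emphasis * signal[:-1])
    flen = int(round(frame_len * sample_rate))
    fstep = int(round(frame_step * sample_rate))
    n_frames = 1 + max(0, int(np.ceil((len(emphasized) - flen) / fstep)))
    # Zero-pad so the last frame is complete.
    pad = (n_frames - 1) * fstep + flen - len(emphasized)
    emphasized = np.append(emphasized, np.zeros(max(pad, 0)))
    idx = np.arange(flen)[None, :] + fstep * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(flen)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    log_energies = np.log(np.maximum(energies, np.finfo(float).eps))
    return dct_ii_ortho(log_energies, n_mfcc)
```

One second of 16 kHz audio yields 99 frames of 13 coefficients with these settings. In practice a library implementation (for example librosa's `feature.mfcc`) would normally be used; its defaults differ from the values assumed here.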

Embodiment 3

[0116] The purpose of this embodiment is to provide a computer-readable storage medium.

[0117] A computer-readable storage medium having a computer program stored thereon, the program implementing the method described in Embodiment 1 when the program is executed by a processor.
