Joint learning method of label and interaction relation for human action recognition

A behavior and interaction labeling technology, applied in the field of human behavior recognition, that addresses problems such as interactive behaviors that cannot be recognized, non-convexity, and the inability to handle images containing multiple behavior categories.


Problems solved by technology

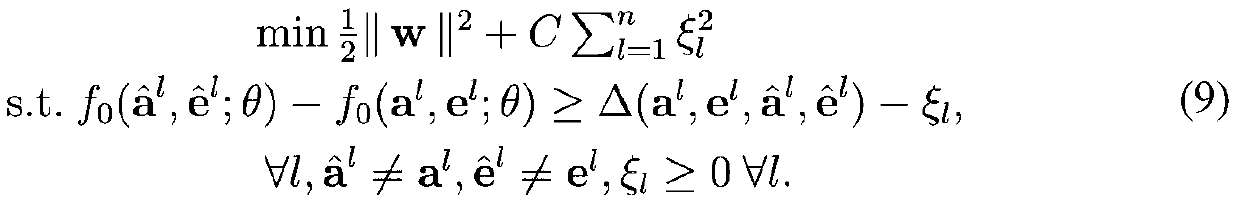


Detailed Description of Embodiments

[0031] The present invention will be further described below.

[0032] A method for jointly learning behavior labels and interaction relations for human action recognition comprises the following steps:

[0033] 1) Construct the energy function

[0034] Let $G = (V, E)$ denote a graph, where the node set $V$ represents the individual actions of all people and the edge set $E$ represents their interactions: $e_{ij} \in E$ indicates that person $i$ and person $j$ interact, while the absence of edge $e_{st}$ means that person $s$ and person $t$ do not interact. $I$ denotes an image, $a_i$ is the individual behavior label of person $i$, and $\mathbf{a} = [a_i]_{i=1,\dots,n}$ is the vector of individual behavior labels of the $n$ people;

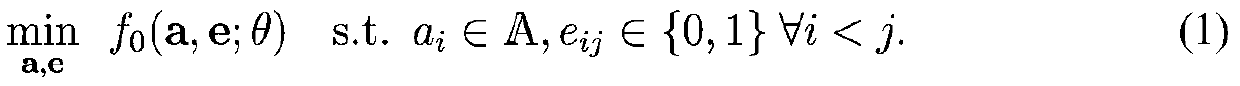
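The joint output $(\mathbf{a}, G)$ defined above can be held in a small data structure. The sketch below is a minimal illustration, not the patent's implementation: the class name `InteractionGraph`, its methods, and the example label strings are hypothetical, and it assumes interactions are undirected (an edge $e_{ij}$ is the same as $e_{ji}$).

```python
from dataclasses import dataclass, field
from itertools import combinations


@dataclass
class InteractionGraph:
    """Hypothetical container for the joint output (a, G) of one image I.

    Node i (0..n-1) is person i; a[i] is that person's individual behavior
    label. `edges` stores unordered pairs {i, j} for which an interaction
    e_ij is present (assumption: interactions are undirected).
    """
    a: list[str]
    edges: set[frozenset[int]] = field(default_factory=set)

    @property
    def n(self) -> int:
        # Number of people (nodes in V).
        return len(self.a)

    def add_interaction(self, i: int, j: int) -> None:
        # Insert edge e_ij; both endpoints must be distinct people in V.
        if i == j or not (0 <= i < self.n and 0 <= j < self.n):
            raise ValueError("an edge must join two distinct people in V")
        self.edges.add(frozenset((i, j)))

    def interacts(self, s: int, t: int) -> bool:
        # The absence of e_st means persons s and t do not interact.
        return frozenset((s, t)) in self.edges

    def all_pairs(self) -> list[tuple[int, int]]:
        # Every candidate edge, i.e. every unordered pair of people.
        return list(combinations(range(self.n), 2))
```

For example, `g = InteractionGraph(a=["handshake", "handshake", "walk"])` followed by `g.add_interaction(0, 1)` makes `g.interacts(1, 0)` true and `g.interacts(0, 2)` false.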

[0035] Given a new input image $I$, the goal is to predict the individual behavior labels $\mathbf{a}$ and the interaction structure $G$ by solving the following problem (1):

[0036]

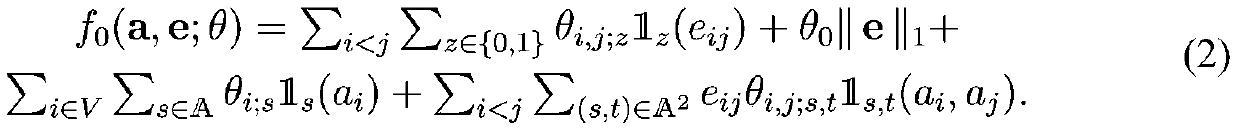

[0037] where

[0038]

[0039] where … is an indicator function: if $a_i = s$, its value is 1, o...
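Because the terms of problem (1) appear only as an image in this excerpt, the following is a hedged sketch under stated assumptions rather than the patent's objective. It assumes a score (higher is better) made of a hypothetical unary term $\sum_s \mathbb{1}(a_i = s)\, \mathbf{w}_s^\top \mathbf{x}_i$ per person, where the indicator selects the weight row of the chosen class, plus a hypothetical pairwise term $P[a_i, a_j]$ for each edge in $E$. The features `X`, weights `W`, and table `P` are illustrative names. Joint prediction of $(\mathbf{a}, G)$ is done by exhaustive search, which is only feasible for a handful of people.

```python
import itertools

import numpy as np


def indicator(cond: bool) -> int:
    # 1 if the condition holds (e.g. cond = (a_i == s)), 0 otherwise.
    return 1 if cond else 0


def unary_score(x_i: np.ndarray, label: int, W: np.ndarray) -> float:
    # Hypothetical unary term: sum_s 1(a_i = s) * <w_s, x_i>.
    # The indicator zeroes out every class row except the chosen label.
    return sum(indicator(label == s) * float(W[s] @ x_i) for s in range(W.shape[0]))


def joint_score(a, edges, X, W, P) -> float:
    # Hypothetical score: unary terms for all people plus a pairwise
    # term P[a_i, a_j] for every interacting pair (i, j) in E.
    score = sum(unary_score(X[i], a[i], W) for i in range(len(a)))
    score += sum(P[a[i], a[j]] for i, j in edges)
    return score


def brute_force_inference(X: np.ndarray, W: np.ndarray, P: np.ndarray):
    # Enumerate every label vector a and every edge subset E of the
    # complete graph on n people; return (best score, a, edge list).
    n, k = X.shape[0], W.shape[0]
    pairs = list(itertools.combinations(range(n), 2))
    best = (-np.inf, None, None)
    for a in itertools.product(range(k), repeat=n):
        for mask in itertools.product((0, 1), repeat=len(pairs)):
            edges = [p for p, m in zip(pairs, mask) if m]
            s = joint_score(a, edges, X, W, P)
            if s > best[0]:
                best = (s, a, edges)
    return best
```

With two people, two classes, `X = np.eye(2)`, `W = np.eye(2)` and `P = np.array([[-1.0, 2.0], [2.0, -1.0]])`, the search returns score 4.0 with labels `(0, 1)` and the single edge `(0, 1)`. The cost grows as $k^n \cdot 2^{n(n-1)/2}$, so a practical solver for problem (1) would need a smarter optimization strategy; the excerpt does not show which one the patent uses.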

