Emotion prediction method and system based on face and sound

An emotion prediction method and system, applied in the computer field, that addresses problems such as low prediction accuracy and the inability to meet the needs of practical applications.


Embodiment

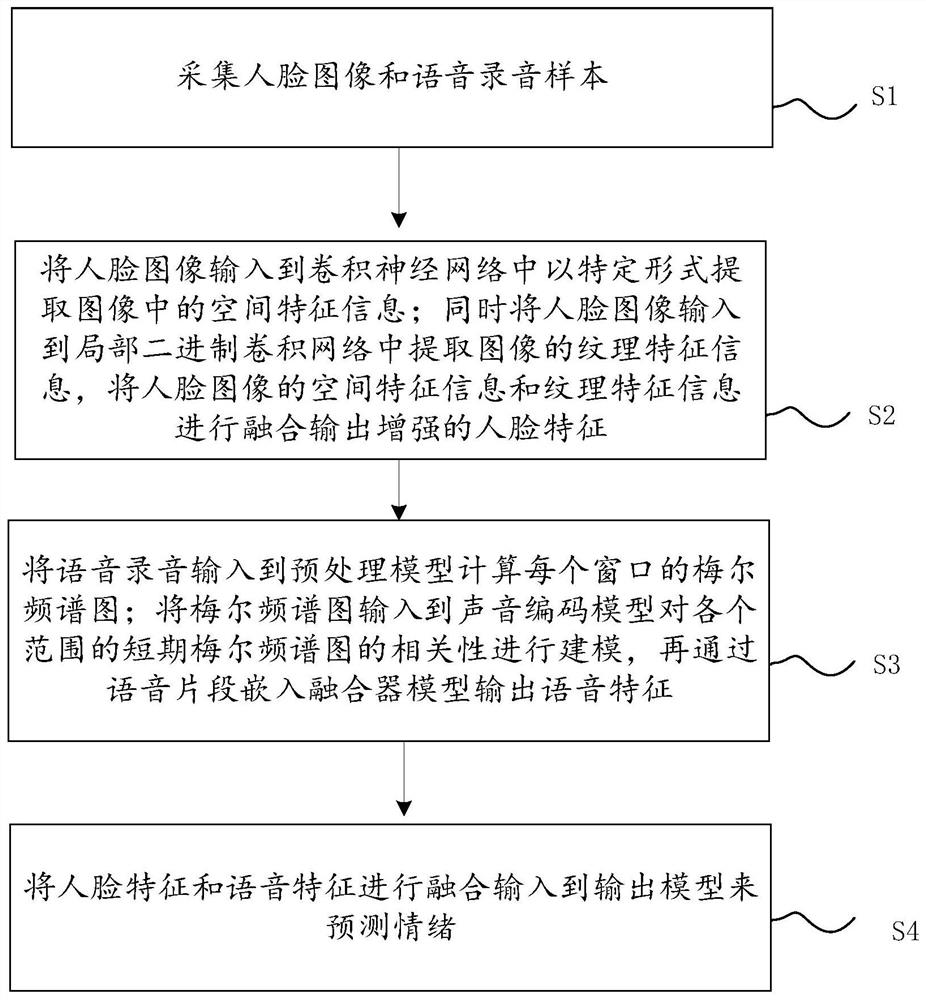

[0042] As shown in Figure 1, the emotion prediction method and system based on face and voice of the present invention specifically comprises the following steps:

[0043] Step S1, collecting face images and voice recording samples;

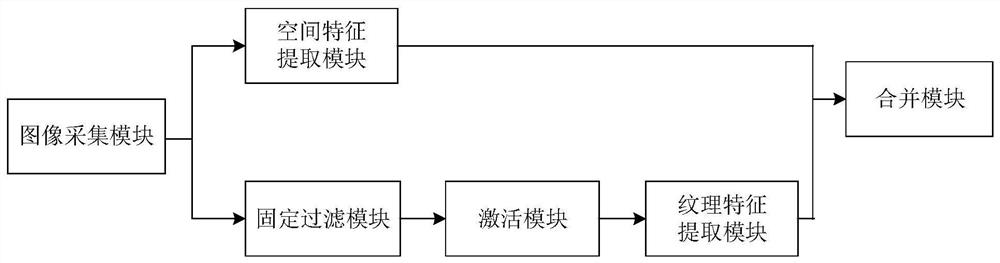

[0044] Step S2, inputting the face image into the convolutional neural network to extract, in a specific form, the spatial feature information in the image; at the same time, inputting the face image into the local binary convolution network to extract the texture feature information of the image; the spatial feature information and the texture feature information of the face image are then fused to output enhanced face features;
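The two-branch structure of step S2 can be illustrated with a minimal pure-Python sketch. It is not the patented network: the kernel sizes, the `{-1, 0, +1}` form of the fixed binary filters, the pooling, and fusion by concatenation are all assumptions made for illustration, and every function name below is hypothetical. The learned weights are replaced by random values.

```python
import random

def conv2d_valid(image, kernel):
    """Plain 'valid' 2-D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def global_avg_pool(fmap):
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)

def make_binary_kernel(rng, size=3, sparsity=0.5):
    """Fixed, non-learned kernel with entries in {-1, 0, +1} (assumed form)."""
    return [
        [rng.choice((-1.0, 1.0)) if rng.random() < sparsity else 0.0
         for _ in range(size)]
        for _ in range(size)
    ]

def spatial_branch(image, learned_kernels):
    """Stand-in for the CNN branch: one pooled response per learned kernel."""
    return [global_avg_pool(relu(conv2d_valid(image, k)))
            for k in learned_kernels]

def texture_branch(image, binary_kernels, mix_weights):
    """Stand-in for the local binary convolution branch: fixed binary
    filters, a non-linearity, then a learnable linear mix of the responses."""
    responses = [global_avg_pool(relu(conv2d_valid(image, k)))
                 for k in binary_kernels]
    return [sum(w * r for w, r in zip(weights, responses))
            for weights in mix_weights]

def fuse(spatial, texture):
    """Fusion by concatenation (an assumption; the text only says 'fused')."""
    return spatial + texture

# Toy usage with random stand-ins for a face image and learned weights.
rng = random.Random(0)
image = [[rng.random() for _ in range(6)] for _ in range(6)]
learned_kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
                   for _ in range(2)]
binary_kernels = [make_binary_kernel(rng) for _ in range(4)]
mix_weights = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]

fused = fuse(spatial_branch(image, learned_kernels),
             texture_branch(image, binary_kernels, mix_weights))
```

Here `fused` holds two spatial features followed by two texture features. In a real model both branches would keep full feature maps and be trained end to end; the sketch pools early only to stay short.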

[0045] Step S3, inputting the speech recording into the preprocessing model to calculate the mel spectrogram of each window; inputting the mel spectrogram into the voice coding model to model the correlation of short-term mel spectrograms over various ranges, and then, through the speech segment embedding fuser model, outpu...
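The per-window mel spectrogram computation mentioned in step S3 can be sketched as follows. This is a minimal pure-Python illustration, not the patent's preprocessing model: the window length, hop length, number of mel bands, Hann window, and log scaling are assumed values, and the function names are hypothetical. The mel scale uses the common formula mel = 2595 · log10(1 + f / 700).

```python
import cmath
import math

def hz_to_mel(hz):
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def frame_signal(signal, win_length, hop_length):
    """Split the recording into overlapping fixed-length windows."""
    last_start = len(signal) - win_length
    return [signal[s:s + win_length]
            for s in range(0, last_start + 1, hop_length)]

def power_spectrum(frame):
    """Hann-windowed power spectrum via a naive DFT (fine for short windows)."""
    n = len(frame)
    windowed = [x * (0.5 - 0.5 * math.cos(2.0 * math.pi * i / n))
                for i, x in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(windowed))
        spectrum.append(abs(acc) ** 2)
    return spectrum

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters with centers equally spaced on the mel scale."""
    top = hz_to_mel(sample_rate / 2.0)
    edges = [mel_to_hz(top * i / (n_mels + 1)) for i in range(n_mels + 2)]
    bin_freqs = [k * sample_rate / n_fft for k in range(n_fft // 2 + 1)]
    bank = []
    for m in range(1, n_mels + 1):
        left, center, right = edges[m - 1], edges[m], edges[m + 1]
        bank.append([
            max(0.0, min((f - left) / (center - left),
                         (right - f) / (right - center)))
            for f in bin_freqs
        ])
    return bank

def log_mel_spectrogram(signal, sample_rate,
                        win_length=64, hop_length=32, n_mels=8):
    """Return one row of log mel energies per window."""
    bank = mel_filterbank(n_mels, win_length, sample_rate)
    rows = []
    for frame in frame_signal(signal, win_length, hop_length):
        power = power_spectrum(frame)
        rows.append([
            math.log(sum(w * p for w, p in zip(filt, power)) + 1e-10)
            for filt in bank
        ])
    return rows

# Toy usage: a 1 kHz tone sampled at 8 kHz stands in for a voice recording.
sample_rate = 8000
tone = [math.sin(2.0 * math.pi * 1000.0 * t / sample_rate)
        for t in range(256)]
mel = log_mel_spectrogram(tone, sample_rate)
```

Each row of `mel` corresponds to one window of the recording and each column to one mel band. Production code would normally use an FFT and far longer windows; the naive DFT is used here only to keep the example dependency-free.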
