A method and device for adaptive inverse quantization in video coding

An adaptive video coding technology, applied in the field of data processing, that addresses problems such as limited coding efficiency.

Embodiment

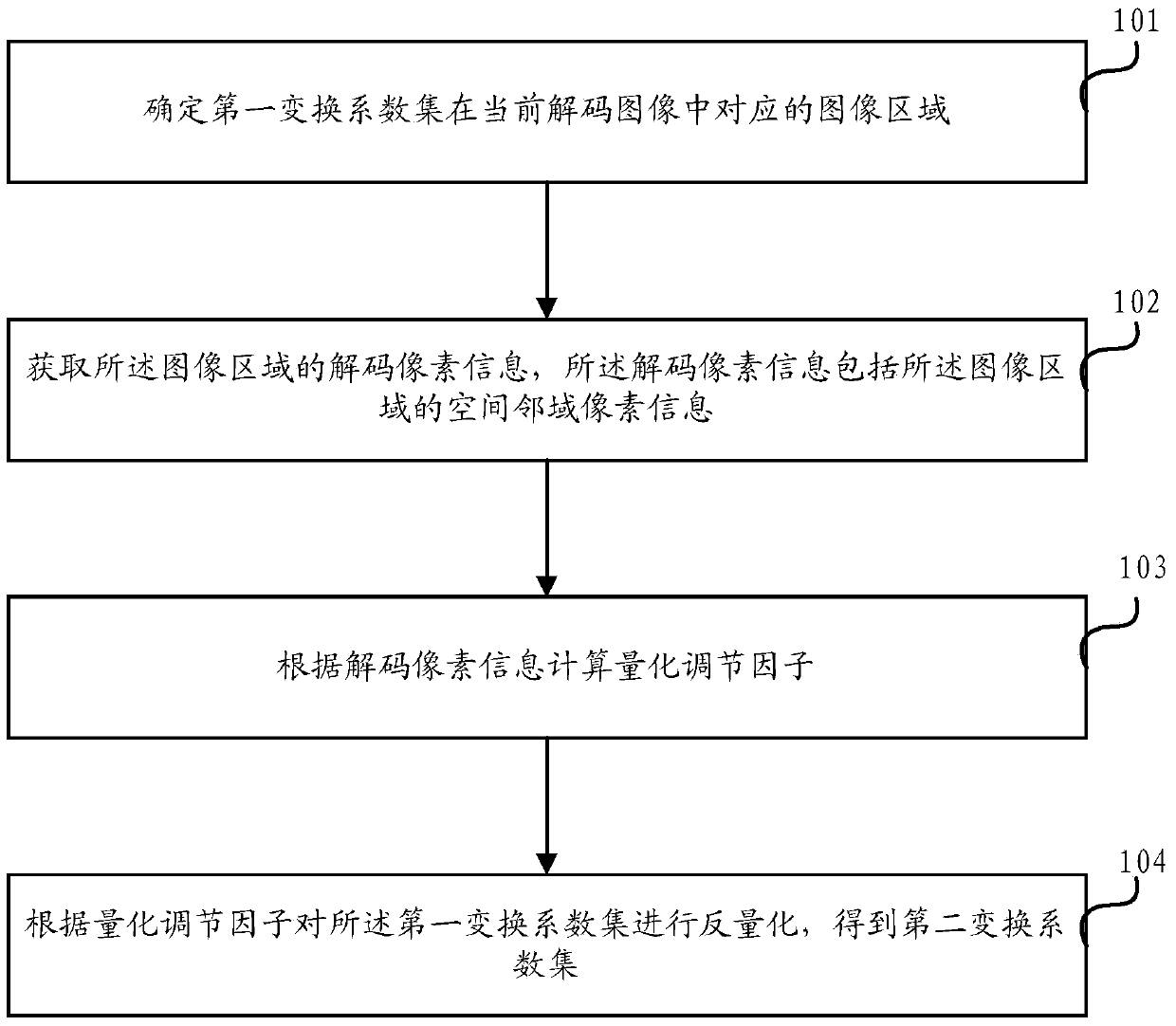

[0122] As shown in Figure 1, an embodiment of the present invention provides an adaptive inverse quantization method for video coding. The method comprises:

[0123] Step 101: Determine the image area in the current decoded image that corresponds to the first transform coefficient set. The first transform coefficient set includes N transform coefficients, where N is a positive integer. The transform coefficients belong to any one color space component of the current decoded image.
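Step 101 can be sketched as follows. This is a minimal illustration, not the method claimed here. It assumes that the first transform coefficient set covers one whole transform block at (`block_x`, `block_y`) of size `block_w` x `block_h`. It also assumes that the image area is the co-located rectangle of the same color component, optionally widened by a hypothetical `margin` and clipped to the picture. The names `ImageArea`, `image_area_for_coefficient_set` and `margin` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageArea:
    """A rectangular region of one color component of the decoded image (hypothetical type)."""
    x: int       # left column, in samples
    y: int       # top row, in samples
    width: int
    height: int


def image_area_for_coefficient_set(block_x, block_y, block_w, block_h,
                                   pic_w, pic_h, *, margin=0):
    """Hypothetical sketch of Step 101.

    Assumption: the coefficient set covers the whole transform block at
    (block_x, block_y) of size block_w x block_h. The image area is the
    co-located rectangle, widened by `margin` samples on each side and
    clipped to the picture bounds (pic_w x pic_h).
    """
    x0 = max(0, block_x - margin)
    y0 = max(0, block_y - margin)
    x1 = min(pic_w, block_x + block_w + margin)
    y1 = min(pic_h, block_y + block_h + margin)
    return ImageArea(x0, y0, x1 - x0, y1 - y0)
```

With `margin=0` the area is simply the block's own footprint, for example `image_area_for_coefficient_set(8, 8, 4, 4, 64, 64)` gives `ImageArea(8, 8, 4, 4)`. Clipping keeps a widened area inside the picture at its borders.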

[0124] In this embodiment of the present invention, the first transform coefficient set may include N transform coefficients A(i), i = 1, 2, ..., N, where N is a positive integer, for example, N = 1, 2, 4, or 16. The transform coefficients may be those of any color space component, for example, any component of RGB (such as the R component).
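One way to picture coefficient sets of size N is the sketch below. It assumes that the coefficients of one color component (for example, a 4x4 R-component block of 16 coefficients) are taken in a given scan order and split into consecutive groups of N. The grouping rule and the function name `split_into_coefficient_sets` are assumptions for illustration. Python indexes from 0, so the set element `A(i)` corresponds to list index `i - 1`.

```python
def split_into_coefficient_sets(coeffs, n):
    """Hypothetical grouping of one component's coefficients into sets A(1)..A(N).

    Assumption: coefficients are taken in the order given (e.g. scan order)
    and the total count is a multiple of n.
    """
    if n <= 0:
        raise ValueError("N must be a positive integer")
    if len(coeffs) % n:
        raise ValueError("coefficient count must be a multiple of N")
    # Consecutive, non-overlapping slices of length n.
    return [coeffs[i:i + n] for i in range(0, len(coeffs), n)]


# A 4x4 block of 16 coefficients, grouped with N = 4.
block = list(range(16))
sets = split_into_coefficient_sets(block, 4)
```

With N = 16 the whole 4x4 block forms a single set, and with N = 1 each coefficient is its own set.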

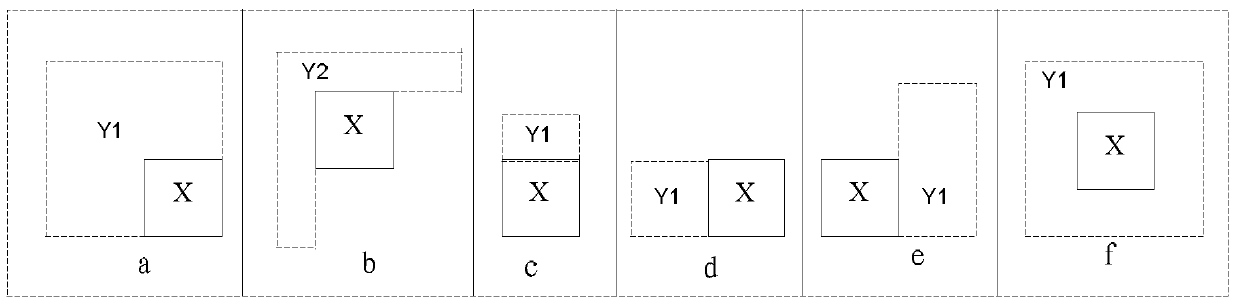

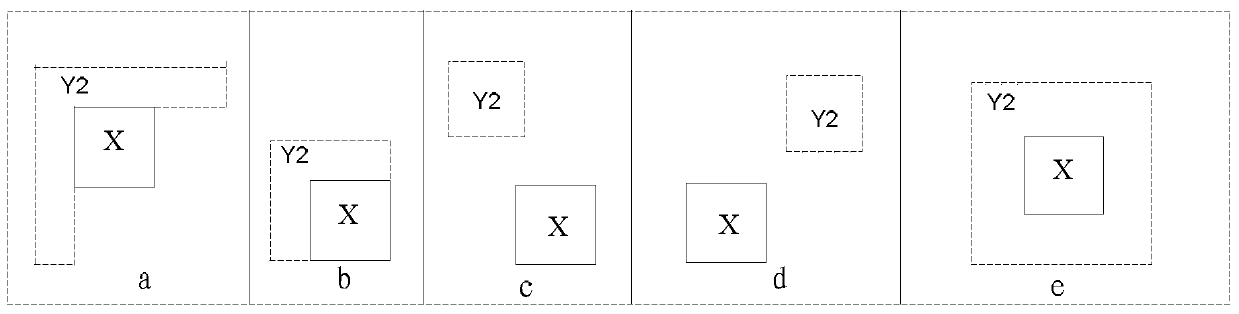

[0125] The image area corresponding to the first transform coefficient set is the area of the current decoded image that corresponds to the first transform coefficient set. Fo...
