Word embedding representation method based on internal semantic hierarchical structure

A technology involving hierarchical structures and embedding representations, applied in semantic analysis, neural architectures, natural language data processing, etc., which addresses the problem of neglecting rich semantic information.


Specific Embodiments

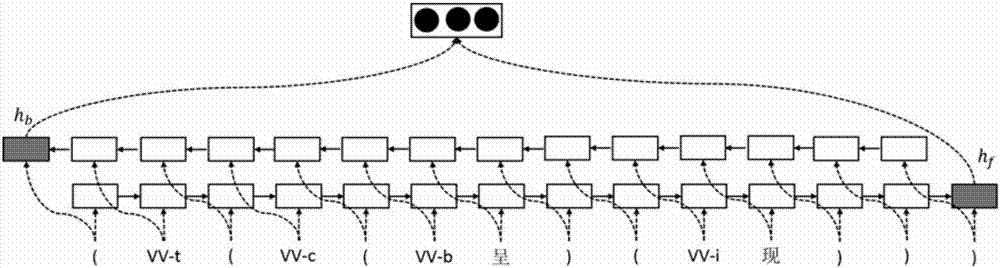

[0028] In the first step, the tree structure is serialized based on the invariance of the hierarchical structure formed by the characters inside the word;

[0029] In the second step, the resulting sequence is fed into a bidirectional GRU network, which encodes it into an embedding representation;

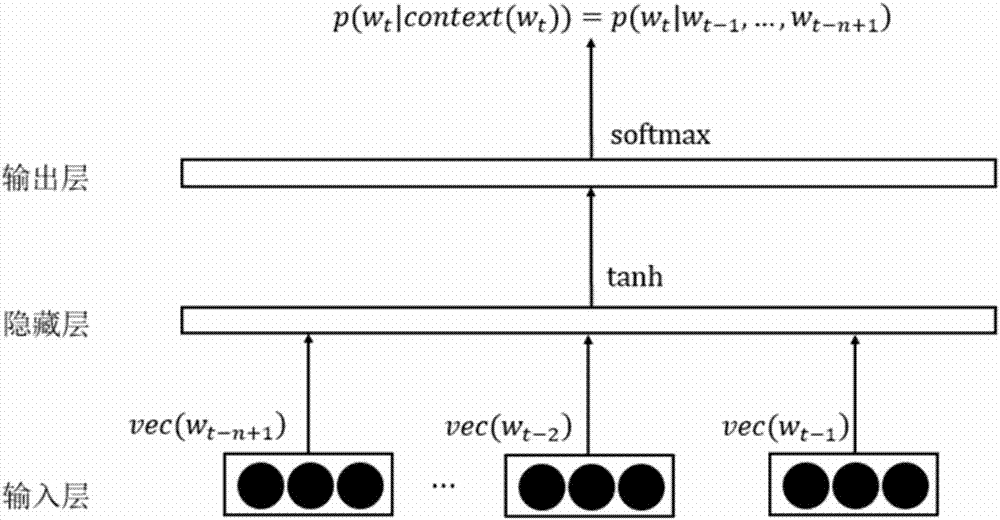

[0030] In the third step, the parameters are trained with the objective of maximizing the language model probability.
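The encoding step can be illustrated with a minimal, dependency-free sketch of a bidirectional GRU. Everything here is an assumption made for illustration: the bracketed token sequence used as input, the names `GRUCell` and `BiGRUWordEncoder`, the randomly initialized weights, the omission of bias terms, and the choice to form the word vector by concatenating the final forward and backward hidden states. The description does not specify these details.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """Standard GRU cell (update gate z, reset gate r); biases omitted for brevity."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: random.Random):
        self.hidden_dim = hidden_dim
        self.W = {g: rand_matrix(hidden_dim, input_dim, rng) for g in "zrn"}
        self.U = {g: rand_matrix(hidden_dim, hidden_dim, rng) for g in "zrn"}

    def step(self, x: Vector, h: Vector) -> Vector:
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W["z"], x), matvec(self.U["z"], h))]
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W["r"], x), matvec(self.U["r"], h))]
        rh = [ri * hi for ri, hi in zip(r, h)]  # reset gate applied to the previous state
        n = [math.tanh(a + b) for a, b in zip(matvec(self.W["n"], x), matvec(self.U["n"], rh))]
        # New state interpolates between the candidate n and the previous state h.
        return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]

    def run(self, xs: List[Vector]) -> Vector:
        h = [0.0] * self.hidden_dim
        for x in xs:
            h = self.step(x, h)
        return h


class BiGRUWordEncoder:
    """Hypothetical encoder: token lookup table plus forward and backward GRUs."""

    def __init__(self, vocab: List[str], emb_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.table: Dict[str, Vector] = {
            t: [rng.uniform(-0.1, 0.1) for _ in range(emb_dim)] for t in vocab
        }
        self.fwd = GRUCell(emb_dim, hidden_dim, rng)
        self.bwd = GRUCell(emb_dim, hidden_dim, rng)

    def encode(self, tokens: List[str]) -> Vector:
        xs = [self.table[t] for t in tokens]
        # Assumed readout: concatenate the last forward state and the last backward state.
        return self.fwd.run(xs) + self.bwd.run(xs[::-1])


# Hypothetical serialized word: bracketed characters of 建筑业 ("construction industry").
tokens = ["(", "(", "建", "筑", ")", "业", ")"]
enc = BiGRUWordEncoder(vocab=sorted(set(tokens)), emb_dim=8, hidden_dim=4)
vec = enc.encode(tokens)
print(len(vec))  # 8: forward and backward final states concatenated
```

Step 3 would then adjust the embedding table and GRU weights, for example by maximizing the log-likelihood, the sum over t of log p(w_t | w_1, ..., w_{t-1}), under a language model that consumes these word vectors. That training loop and the gradients it requires are not shown; in practice a framework with automatic differentiation would replace this hand-written cell.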

[0031] The following describes the implementation details of the key steps:

[0032] 1. Serialize the tree structure

[0033] In the present invention, an open-source tool [9] is used to obtain the internal hierarchy of each word in the form of a character-level tree. From this tree structure, serialized word-structure information can be extracted.
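One way to turn a character-level tree into a sequence while keeping its hierarchy recoverable is a bracketed pre-order traversal. The sketch below assumes that format; the tool [9] and the actual serialization scheme may differ. The `Node` class, the `serialize`/`deserialize` helpers, and the internal structure assigned to 建筑业 ("construction industry") are hypothetical. The round trip shows that no structural information is lost.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical tree node: internal nodes have children, leaves hold one character."""
    children: List["Node"] = field(default_factory=list)
    char: str = ""


def leaf(c: str) -> Node:
    return Node(char=c)


def serialize(node: Node) -> List[str]:
    """Pre-order bracketed linearization: '(' on entering an internal node, ')' on leaving."""
    if not node.children:
        return [node.char]
    seq = ["("]
    for child in node.children:
        seq.extend(serialize(child))
    seq.append(")")
    return seq


def deserialize(tokens: List[str]) -> Optional[Node]:
    """Rebuild the tree with a stack, showing the sequence preserves the hierarchy."""
    stack: List[Node] = []
    root: Optional[Node] = None
    for tok in tokens:
        if tok == "(":
            stack.append(Node())
        elif tok == ")":
            done = stack.pop()
            if stack:
                stack[-1].children.append(done)
            else:
                root = done
        elif stack:
            stack[-1].children.append(leaf(tok))
        else:
            root = leaf(tok)  # a single-character word has no brackets
    return root


# Assumed internal structure ((建 筑) 业) for illustration only.
word = Node(children=[Node(children=[leaf("建"), leaf("筑")]), leaf("业")])
print(serialize(word))  # ['(', '(', '建', '筑', ')', '业', ')']
print(deserialize(serialize(word)) == word)  # True
```

Reserving "(" and ")" as structural tokens assumes they never occur as characters inside a word; a real implementation would use dedicated vocabulary symbols instead.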

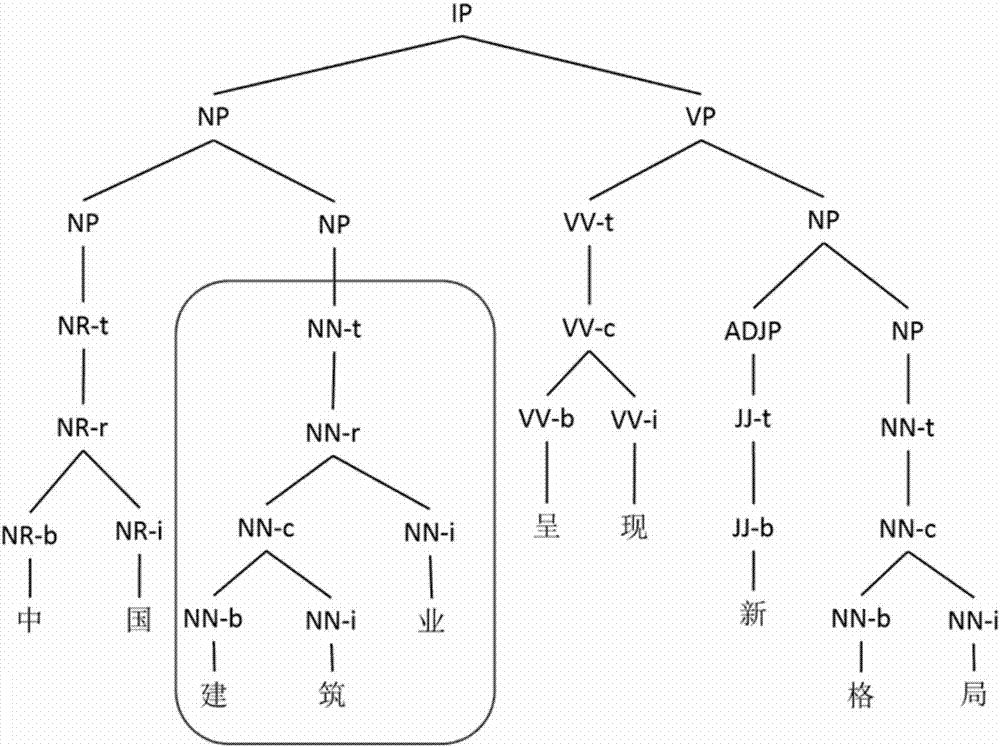

[0034] Figure 1 shows character-level tree structures obtained through the open-source tool. The character-level tree structure of the sentence "China's construction industry presents a new pattern" contains the words "Chi...
