Crowd counting method based on scene depth information

A crowd counting technique based on scene depth information, applicable to computing, image data processing, and related fields. It addresses the insufficient multi-scale adaptability, high degree of overfitting, and low counting accuracy of existing crowd counting methods, and achieves mitigation of insufficient sample size, a low degree of overfitting, and accurate counting.

Embodiment Construction

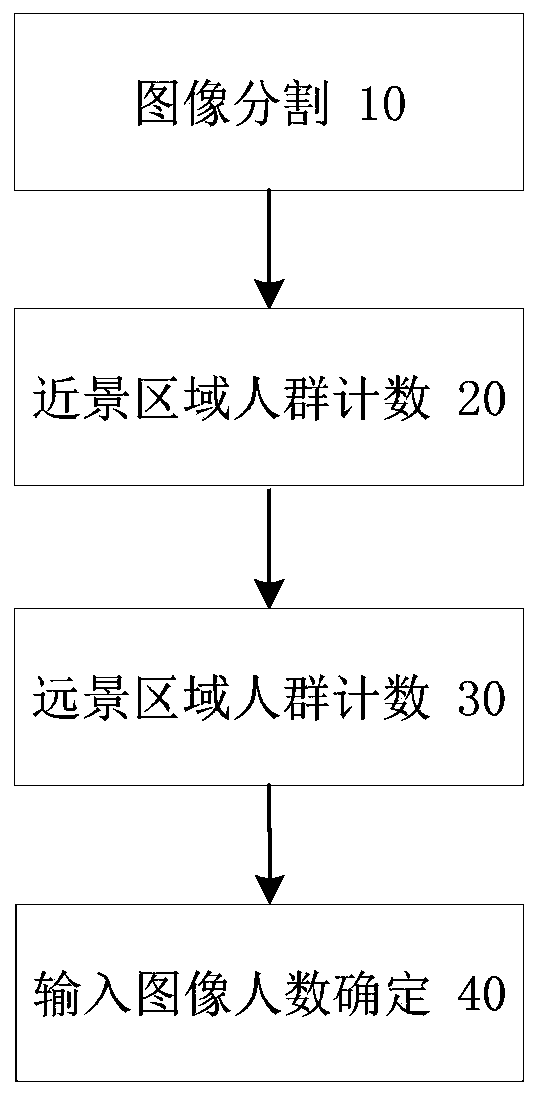

[0037] As shown in Figure 1, the crowd counting method based on scene depth information of the present invention comprises the following steps:

[0038] (10) Image segmentation: a monocular image depth estimation algorithm is used to extract the depth information of the input image, and the input image is segmented into near-view and distant-view areas according to that depth information;

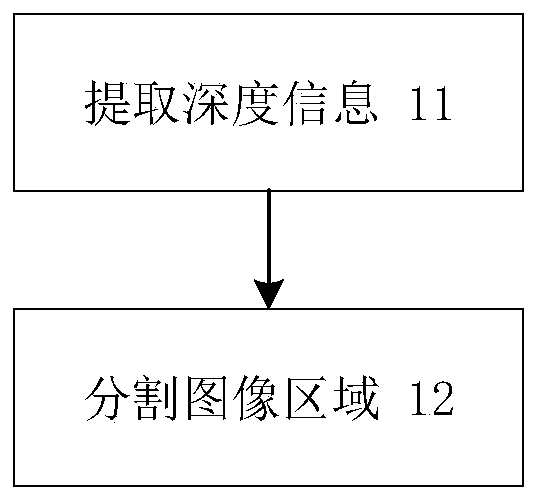

[0039] As shown in Figure 2, the image segmentation step (10) comprises:

[0040] (11) Extract depth information: a fully convolutional residual network predicts the depth map of a single RGB input image, mapping the input image to a corresponding depth map, which is then restored to the size of the input image;

[0041] (12) Segment image areas: using a linear iterative clustering method, the depth map is divided into two parts, and the segmentation result is then mapped onto the input image, so that the input image is divided into a near-view area and a dist...
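Steps (11) and (12) can be illustrated with a minimal sketch. It is not the patented implementation: the depth map is assumed to be already predicted (no fully convolutional residual network is included), a plain two-centre iterative clustering of depth values stands in for the linear iterative clustering method, and smaller depth values are assumed to mean "closer to the camera". The names `resize_nearest`, `two_cluster_split`, and `apply_mask` are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
from typing import List

Grid = List[List[float]]
Mask = List[List[bool]]


def resize_nearest(depth: Grid, out_h: int, out_w: int) -> Grid:
    """Restore a depth map to the input image size (nearest-neighbour sampling)."""
    in_h, in_w = len(depth), len(depth[0])
    return [
        [depth[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def two_cluster_split(depth: Grid, max_iter: int = 50) -> Mask:
    """Iteratively cluster depth values into two groups.

    Returns a mask where True marks the near-view area.
    Assumption: smaller depth values are closer to the camera.
    """
    values = [v for row in depth for v in row]
    near_c, far_c = min(values), max(values)  # initial cluster centres
    for _ in range(max_iter):
        threshold = (near_c + far_c) / 2.0
        near = [v for v in values if v <= threshold]
        far = [v for v in values if v > threshold]
        if not far:  # flat depth map: treat everything as one region
            break
        new_near = sum(near) / len(near)
        new_far = sum(far) / len(far)
        if new_near == near_c and new_far == far_c:
            break  # centres stopped moving
        near_c, far_c = new_near, new_far
    threshold = (near_c + far_c) / 2.0
    return [[v <= threshold for v in row] for row in depth]


def apply_mask(image: List[list], mask: Mask, keep_near: bool = True, fill=0) -> List[list]:
    """Map the depth segmentation onto the image, blanking the other region."""
    return [
        [px if m == keep_near else fill for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Usage: a 2x2 depth map restored to a 4x4 image, then split into two areas.
depth_small = [[1.0, 9.0], [2.0, 10.0]]
image = [[10, 20, 30, 40], [50, 60, 70, 80], [90, 100, 110, 120], [130, 140, 150, 160]]
depth_full = resize_nearest(depth_small, len(image), len(image[0]))
near_mask = two_cluster_split(depth_full)
near_view = apply_mask(image, near_mask, keep_near=True)
distant_view = apply_mask(image, near_mask, keep_near=False)
```

Clustering only on depth values ignores pixel position, so the resulting regions need not be spatially contiguous; a clustering method that also uses pixel coordinates would behave differently on real scenes.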

