A Gradient-Based Graph Adversarial Example Generation Method by Adding False Nodes for Document Classification

A technology relating to adversarial examples and document classification, applied in the field of artificial intelligence information security, which can solve problems such as being difficult to achieve and difficult to obtain, and can mislead the classification result of the target node.

Embodiment Construction

[0027] The present invention is described in further detail below with reference to the accompanying drawings and examples. The following examples are intended to facilitate understanding of the present invention and do not limit it in any way.

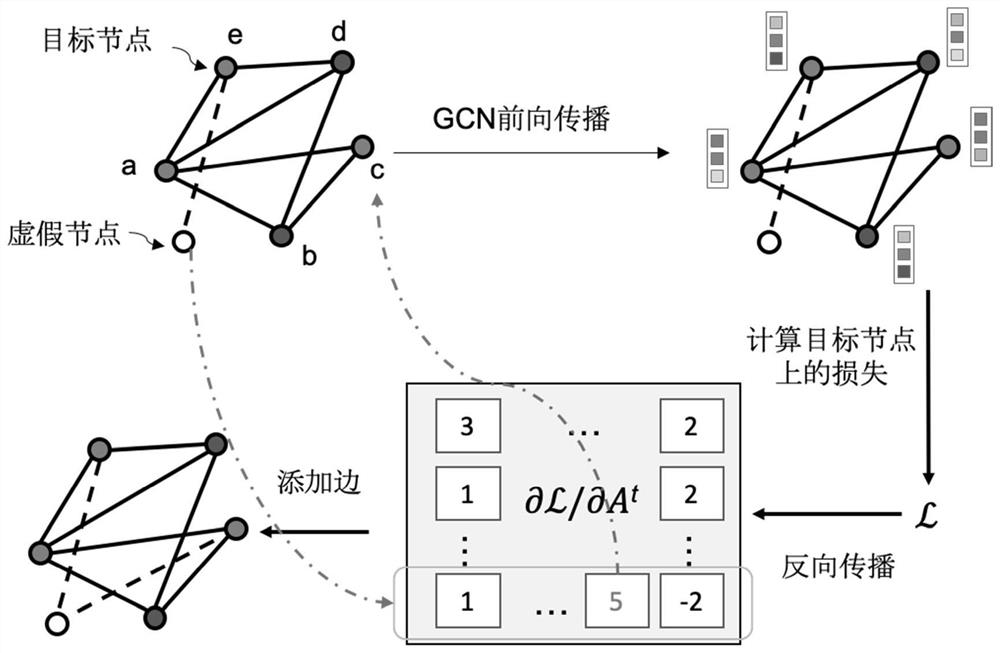

[0028] The overall process of the method of the present invention is shown in Figure 1.

[0029] Given graph data (A, X) with a total of Y class labels and a well-trained graph node classification model M, first input the graph data to model M and compute the classification result of each node. The correctly classified nodes form the target node set V. For each node v in V, assign an attack target label y (an incorrect class label for v) to form the attack target (v, y); these pairs together form the attack target set O, with |O| = (Y-1)·|V|, where |·| denotes the size of a set. For example, for 3-class graph data, the size of the attack target set is twice the size...
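The construction of the target node set V and the attack target set O in [0029] can be sketched in a few lines. This is a minimal illustration, not the patented implementation: it assumes the predicted class of each node under model M has already been computed (e.g. as the argmax of M's output on (A, X)) and that the true labels are available as integer lists. The function name `build_attack_targets` is hypothetical.

```python
from typing import List, Sequence, Tuple


def build_attack_targets(
    predictions: Sequence[int],
    labels: Sequence[int],
    num_classes: int,
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Hypothetical helper returning the target node set V and attack target set O.

    Assumes `predictions[v]` is model M's predicted class for node v and
    `labels[v]` is its true class, both in range(num_classes).
    """
    # V: nodes that model M classifies correctly
    target_nodes = [
        v for v, (p, t) in enumerate(zip(predictions, labels)) if p == t
    ]
    # O: pair each node in V with every class label other than its true one
    attack_targets = [
        (v, y)
        for v in target_nodes
        for y in range(num_classes)
        if y != labels[v]
    ]
    # Each node in V contributes exactly (Y - 1) attack targets
    assert len(attack_targets) == (num_classes - 1) * len(target_nodes)
    return target_nodes, attack_targets


if __name__ == "__main__":
    # Toy 3-class example; in practice predictions come from model M on (A, X)
    preds = [0, 1, 2, 1, 0]
    truth = [0, 1, 1, 1, 2]
    V, O = build_attack_targets(preds, truth, num_classes=3)
    print(V)       # [0, 1, 3]
    print(len(O))  # 6, i.e. (3 - 1) * |V|
```

In this toy run, nodes 2 and 4 are misclassified and therefore excluded from V, so the three remaining nodes each receive two wrong target labels, matching |O| = (Y-1)·|V| = 2·3.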
