Background clutter quantification method based on the contrast sensitivity function

A background clutter quantification technique based on the contrast sensitivity function, applied in the field of image processing. It addresses problems such as violating the physical nature of background clutter, difficulty in predicting and evaluating the field performance of photoelectric imaging systems, and failure to reasonably reflect the impact, and it achieves accurate prediction.

Embodiment Construction

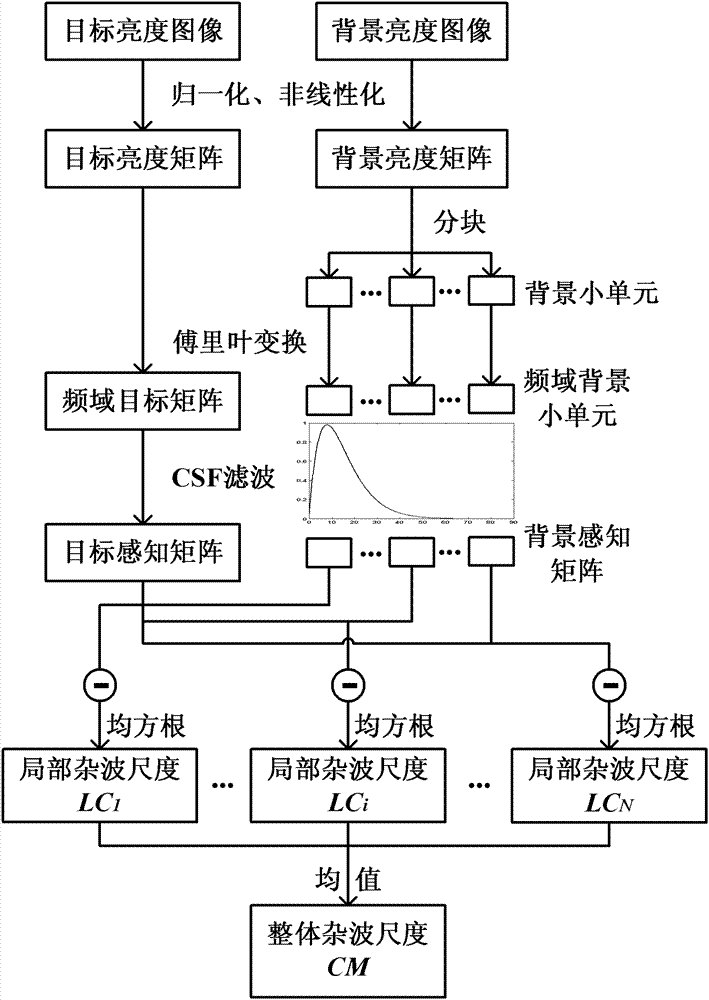

[0025] Referring to Figure 1, the background clutter quantification method of the present invention, based on the contrast sensitivity function, is implemented in the following steps:

[0026] Step 1. Normalize the target brightness image and the background brightness image and apply a nonlinear transform to each, obtaining the target brightness matrix x and the background brightness matrix y, respectively. The specific steps are as follows:

[0027] (1a) Divide the brightness value $L_t$ at each position of the target brightness image by the image mean $\bar{L}_t$ to normalize the target brightness image, then take the cube root of the result as the nonlinear transform, obtaining the target brightness matrix x:

[0028] $x = \sqrt[3]{L_t / \bar{L}_t}$;
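Step (1a) can be illustrated with a minimal NumPy sketch. The function name `normalize_and_cube_root` and the 3x3 sample patch are hypothetical and do not come from the patent; the sketch also assumes the mean is taken over the whole image and that brightness values are non-negative with a positive mean. Step 1 says the background image is processed as well, but (1b) is truncated here, so only (1a) is shown.

```python
import numpy as np


def normalize_and_cube_root(brightness):
    """Divide every value by the image mean, then take the cube root.

    Hypothetical helper illustrating step (1a); not the patent's own code.
    """
    lum = np.asarray(brightness, dtype=np.float64)
    mean = lum.mean()  # assumption: mean over the entire image
    if mean <= 0:
        raise ValueError("mean brightness must be positive")
    return np.cbrt(lum / mean)


# Hypothetical 3x3 target brightness patch: a bright centre on a dim surround.
L_t = np.ones((3, 3))
L_t[1, 1] = 64.0

x = normalize_and_cube_root(L_t)
print(x)
```

In this sample the mean brightness is 8, so the surround maps to 0.5 and the centre to 2. The cube root compresses the 64:1 brightness ratio to 4:1, which is the effect of the nonlinear step.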

[0029] (1b) Use the brightness ...
