Video annotation anchoring and matching method

A method for anchoring and matching video annotations. It addresses the problems of existing approaches, namely complexity, a large amount of computation, and unsuitability for fast processing on the player side, and achieves a low amount of computation, rich content, and accurate matching.

Examples

Embodiment 1

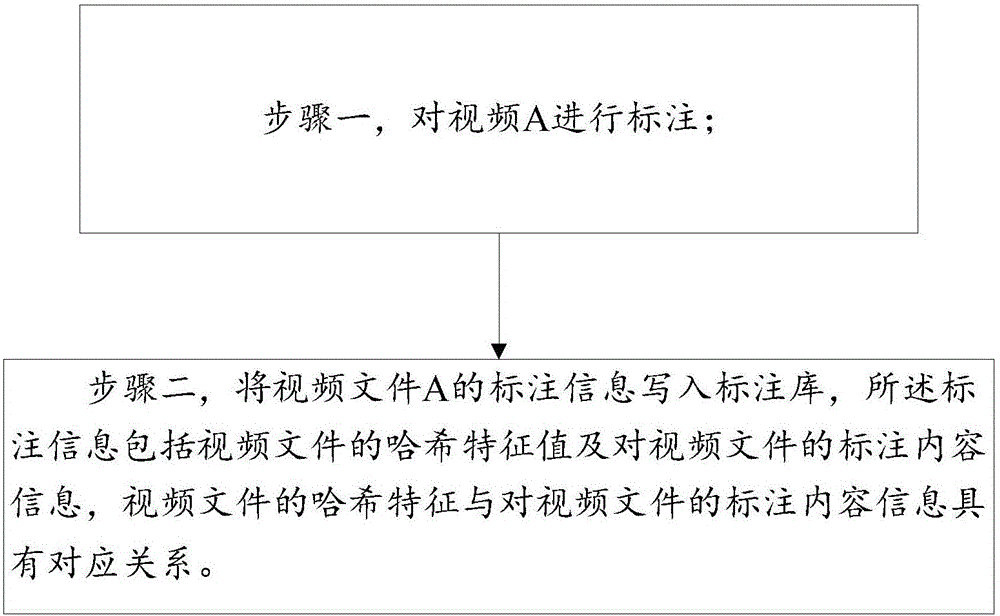

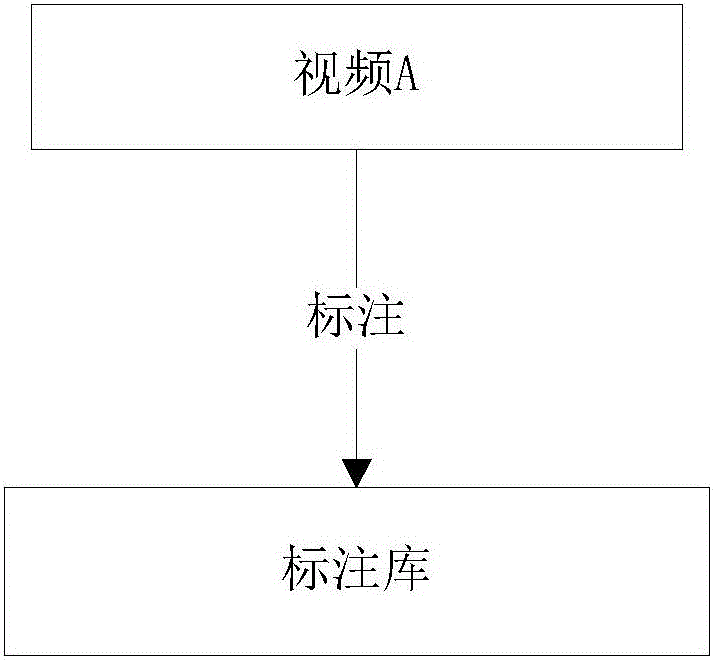

[0019] Embodiment 1: as shown in Figure 1 and Figure 2, an anchoring method for video annotation includes:

[0020] Step 1: annotate video A.

[0021] Each annotation consists of a position in the video and its associated content. Annotation types include, but are not limited to, video links, advertisements, bullet comments (danmaku), and subtitles.

[0022] Video link annotation: for example, when video A plays to the 20th-22nd second, an icon is displayed on an object in the video frame, and when the user clicks the icon, the specified text, picture, or map location is displayed, or the specified URL is opened.

[0023] Advertisement annotation: for example, when video A plays to the 20th-22nd second, a graphic advertisement is displayed at a specified position in the video frame, and when the user clicks the advertisement, more detailed content is displayed or the player jumps to the purchase screen.

[0024] Subtitle annotation: for example, when video A plays to the 20th-22nd second, the specified text subtitle is displayed below the video.
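The annotation types described above share the same shape: a time range, optional on-screen position, and associated content. The following is a minimal sketch of such a record and of the check a player might run at each playback position. The class name `Annotation`, its field names, and the helper `active_annotations` are hypothetical and chosen for illustration only; the patent text does not specify a data layout.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Annotation:
    """One annotation on a video (hypothetical layout, not from the patent)."""
    kind: str                      # e.g. "link", "ad", "danmaku", "subtitle"
    start_s: float                 # time at which the annotation appears, in seconds
    end_s: float                   # time at which the annotation disappears, in seconds
    content: str                   # text, image reference, or URL to show
    position: Optional[Tuple[float, float]] = None  # assumed normalized (x, y) in the frame


def active_annotations(annotations: List[Annotation], t: float) -> List[Annotation]:
    """Return the annotations whose time range contains playback time t."""
    return [a for a in annotations if a.start_s <= t <= a.end_s]


if __name__ == "__main__":
    annotations = [
        Annotation("subtitle", 20.0, 22.0, "Specified subtitle text"),
        Annotation("ad", 20.0, 22.0, "Graphic advertisement", position=(0.8, 0.1)),
        Annotation("link", 40.0, 45.0, "https://example.com", position=(0.5, 0.5)),
    ]
    for a in active_annotations(annotations, 21.0):
        print(a.kind, a.content)
```

In this sketch a subtitle leaves `position` unset because it is always drawn below the video, while link and advertisement annotations carry a position inside the frame.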

...

Embodiment 2

[0029] Embodiment 2: on the basis of Embodiment 1, more preferably, the hash feature value of the video file is specifically the hash feature value of the video's audio channel.

[0030] After decoding, each channel of the video's audio data is a continuous sequence of numbers representing the sample values obtained by sampling the sound each second. The zero-crossing rate is also resistant to editing: the audio samples are down-sampled, and the zero-crossing rate is calculated and matched at a lower sampling frequency, so subtle changes to the audio have little effect on the matching.
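To make the idea of computing a zero-crossing rate at a lower sampling frequency concrete, here is a minimal sketch using the textbook definition of the zero-crossing rate (the fraction of adjacent sample pairs whose signs differ). The function names, the naive decimation without an anti-aliasing filter, and the fixed non-overlapping windows are all assumptions made for illustration; the excerpt does not define how the patent derives its hash feature value from these rates.

```python
from typing import List, Sequence


def downsample(samples: Sequence[float], factor: int) -> List[float]:
    """Naive decimation: keep every `factor`-th sample (no anti-aliasing filter)."""
    return list(samples[::factor])


def zero_crossing_rate(samples: Sequence[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(samples) < 2:
        return 0.0
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(samples) - 1)


def zcr_sequence(samples: Sequence[float], factor: int, window: int) -> List[float]:
    """Zero-crossing rate of each non-overlapping window of the down-sampled signal."""
    low = downsample(samples, factor)
    return [
        zero_crossing_rate(low[i:i + window])
        for i in range(0, len(low) - window + 1, window)
    ]


if __name__ == "__main__":
    # A signal that flips sign on every sample has the maximum rate of 1.0.
    alternating = [1.0, -1.0] * 8
    print(zcr_sequence(alternating, factor=1, window=4))
```

Because each window is summarized by one number, the resulting sequence is much shorter than the raw audio, which is consistent with the low computational cost the text claims.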

[0031] More preferably, the annotation information includes, but is not limited to, the hash feature value of the audio channel of the video file, together with a combination of one or more of the following: the annotation content, the annotation position, the annotation start time and end time, and the image feature value of the video frame corresponding to the annotation start time. This standard inf...

Embodiment 3

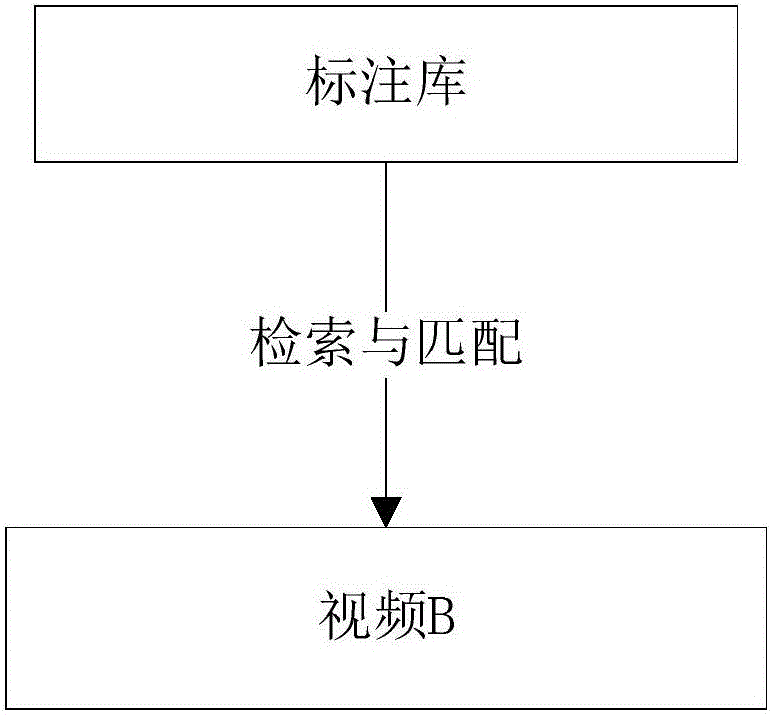

[0045] Embodiment 3: as shown in Figure 3, a matching method for video annotation includes:

[0046] A method for matching video annotations, characterized in that, when a video is played, the hash feature value of the video file is sent to an annotation library and the matching annotation information is retrieved from it; when playback reaches the corresponding position, the corresponding annotation content is displayed on the interface. The video played by the user may be a derivative video rather than the original video itself. Using the audio feature based on the one-way zero-crossing rate, the derivative video can be associated with the annotated video, and all annotations of the annotated video, together with the time offset between the two videos, can be extracted from the association library. The annotation information is matched accurately, with a relatively small amount of computation and high speed.
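The matching step can be illustrated with a small sketch that estimates the time offset between a derivative video and the annotated video, then shifts the annotations onto the derivative video's timeline. It assumes that each video is already summarized as a sequence of per-window feature values (for example zero-crossing rates), that the derivative video is a contiguous clip of the annotated one, and that a simple sliding comparison with mean absolute difference is good enough. These assumptions, and the names `best_offset` and `shift_annotations`, are illustrative only; the excerpt does not describe the patent's actual lookup or its "one-way" zero-crossing feature in detail.

```python
from typing import List, Optional, Sequence, Tuple


def best_offset(
    reference: Sequence[float], query: Sequence[float]
) -> Optional[Tuple[int, float]]:
    """Slide `query` over `reference` and return (offset, error) for the best fit.

    The error is the mean absolute difference between aligned feature values.
    Returns None when `query` is empty or longer than `reference`.
    """
    if not query:
        return None
    best: Optional[Tuple[int, float]] = None
    for off in range(len(reference) - len(query) + 1):
        err = sum(abs(r - q) for r, q in zip(reference[off:], query)) / len(query)
        if best is None or err < best[1]:
            best = (off, err)
    return best


def shift_annotations(
    annotations: List[Tuple[float, float, str]], offset_s: float, duration_s: float
) -> List[Tuple[float, float, str]]:
    """Map (start, end, content) tuples onto a clip that starts at `offset_s`.

    Annotations that fall entirely outside the clip are dropped; those that
    overlap its edges are clipped to the range [0, duration_s].
    """
    shifted = []
    for start, end, content in annotations:
        s, e = start - offset_s, end - offset_s
        if e > 0 and s < duration_s:
            shifted.append((max(s, 0.0), min(e, duration_s), content))
    return shifted


if __name__ == "__main__":
    window_s = 5.0  # assumed length of one feature window, in seconds
    reference = [0.1, 0.5, 0.2, 0.9, 0.4, 0.3]  # features of the annotated video
    query = [0.9, 0.4]                          # features of the derivative clip
    match = best_offset(reference, query)
    if match is not None:
        offset_s = match[0] * window_s
        print(shift_annotations([(20.0, 22.0, "subtitle")], offset_s, 10.0))
```

A production system would likely index the features rather than scan linearly, but the sketch shows why a known time offset is sufficient to reuse every annotation of the annotated video on a derivative video.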

