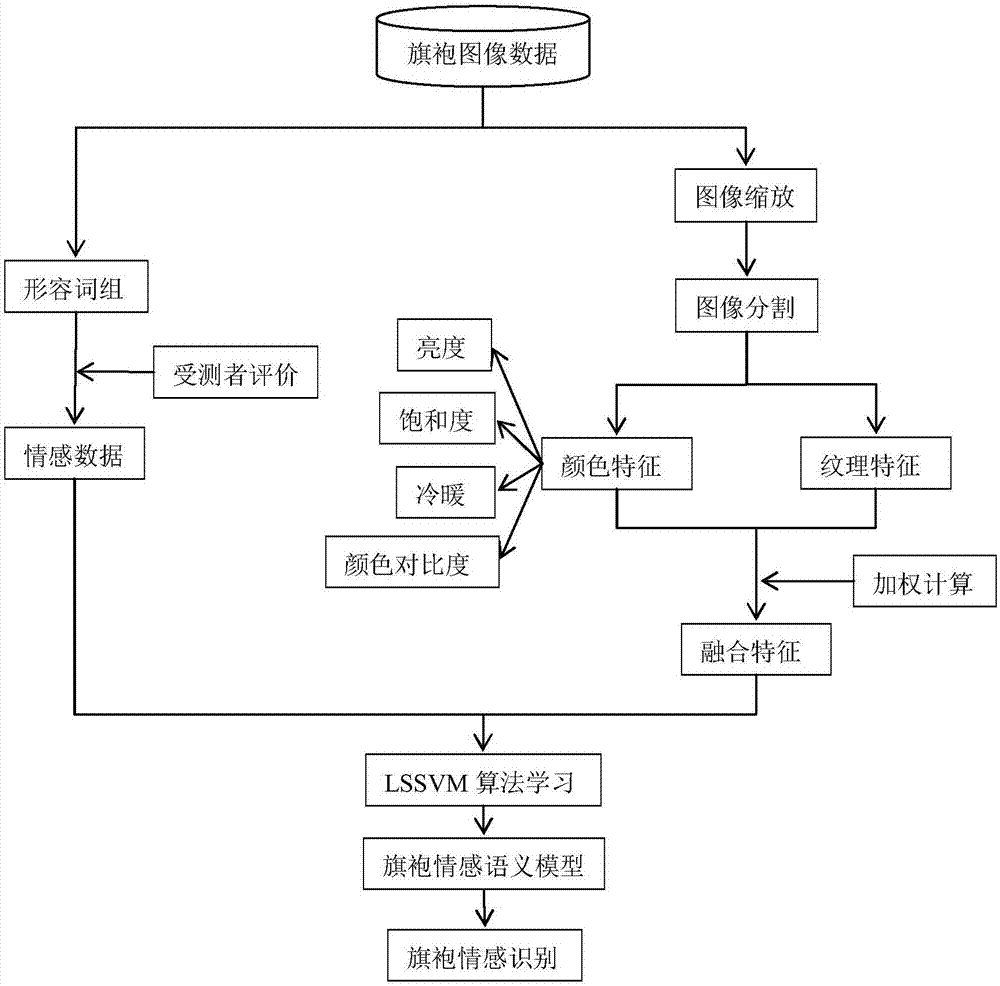

Cheongsam image emotional semantic recognition method based on fused features

A technology that combines feature fusion with semantic recognition, applied in image enhancement, image analysis, and image data processing.


Embodiment

[0125] Step 1. Adjective selection and construction of the emotional space:

[0126] 1.1 From 30 adjectives used to describe clothing pictures, select a representative group of four (quaint and steady, noble and elegant, gentle and romantic, plain and fresh) to describe the cheongsam;

[0127] 1.2 Collect 400 cheongsam pictures, all with solid-color backgrounds;

[0128] 1.3 Randomly divide the pictures from step 1.2 into 5 groups of 80 pictures each. Recruit 20 subjects, and have each set of 4 subjects evaluate the style of one group of pictures using the adjectives from step 1.1. The styles are coded as 1, 2, 3, and 4, where 1 represents "quaint and steady" clothing, 2 represents "noble and elegant" clothing, 3 represents "gentle and romantic" clothing, and 4 represents "plain and fresh" clothing. Keep the cheongsam pictures on which the subjects' evaluations agree, and remove pictures with ambiguous styles. Finally, 350 pictures and corresponding emoti...
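The grouping and screening in step 1.3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's own procedure: the function names, the fixed random seed, the assignment of consecutive subjects to each group, and the reading of "evaluations agree" as a vote threshold (unanimous, 4 of 4, by default) are all hypothetical choices.

```python
import random
from collections import Counter

# Style codes from step 1.3; the English names follow the translation above.
STYLES = {
    1: "quaint and steady",
    2: "noble and elegant",
    3: "gentle and romantic",
    4: "plain and fresh",
}


def split_into_groups(picture_ids, n_groups=5, seed=0):
    """Shuffle the pictures and split them into n_groups equal-sized groups."""
    ids = list(picture_ids)
    if len(ids) % n_groups != 0:
        raise ValueError("pictures must divide evenly into groups")
    random.Random(seed).shuffle(ids)  # assumption: fixed seed for reproducibility
    size = len(ids) // n_groups
    return [ids[i * size:(i + 1) * size] for i in range(n_groups)]


def assign_subjects(subject_ids, n_groups=5):
    """Give each picture group its own block of consecutive subjects (hypothetical scheme)."""
    per_group = len(subject_ids) // n_groups
    return [subject_ids[i * per_group:(i + 1) * per_group] for i in range(n_groups)]


def filter_consistent(ratings, min_agreement=4):
    """Keep pictures whose most common style code got at least min_agreement votes.

    ratings maps picture_id -> list of style codes (1-4), one per subject.
    Returns picture_id -> agreed code; ambiguous pictures are dropped.
    The threshold is an assumption; the source only says evaluations must agree.
    """
    kept = {}
    for pic, labels in ratings.items():
        if any(label not in STYLES for label in labels):
            raise ValueError(f"unknown style code for {pic}: {labels}")
        label, votes = Counter(labels).most_common(1)[0]
        if votes >= min_agreement:
            kept[pic] = label
    return kept


if __name__ == "__main__":
    groups = split_into_groups(range(400))
    subjects = assign_subjects([f"S{i:02d}" for i in range(1, 21)])
    print([len(g) for g in groups], [len(s) for s in subjects])
    # -> [80, 80, 80, 80, 80] [4, 4, 4, 4, 4]

    demo = {"p1": [2, 2, 2, 2], "p2": [1, 3, 3, 4]}
    print(filter_consistent(demo))  # -> {'p1': 2}
```

Exposing `min_agreement` as a parameter makes it easy to compare a strict unanimous rule with a looser majority rule when deciding which pictures count as ambiguous.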
