Sequence labeling model based on a fine-grained word representation model

A sequence labeling technology based on fine-grained word representation, applied in character and pattern recognition, special data processing applications, instruments, etc.

Embodiment Construction

[0065] The specific embodiments discussed are merely illustrative of implementations of the invention and do not limit its scope. Embodiments of the present invention are described in detail below with reference to the technical solutions and the accompanying drawings.

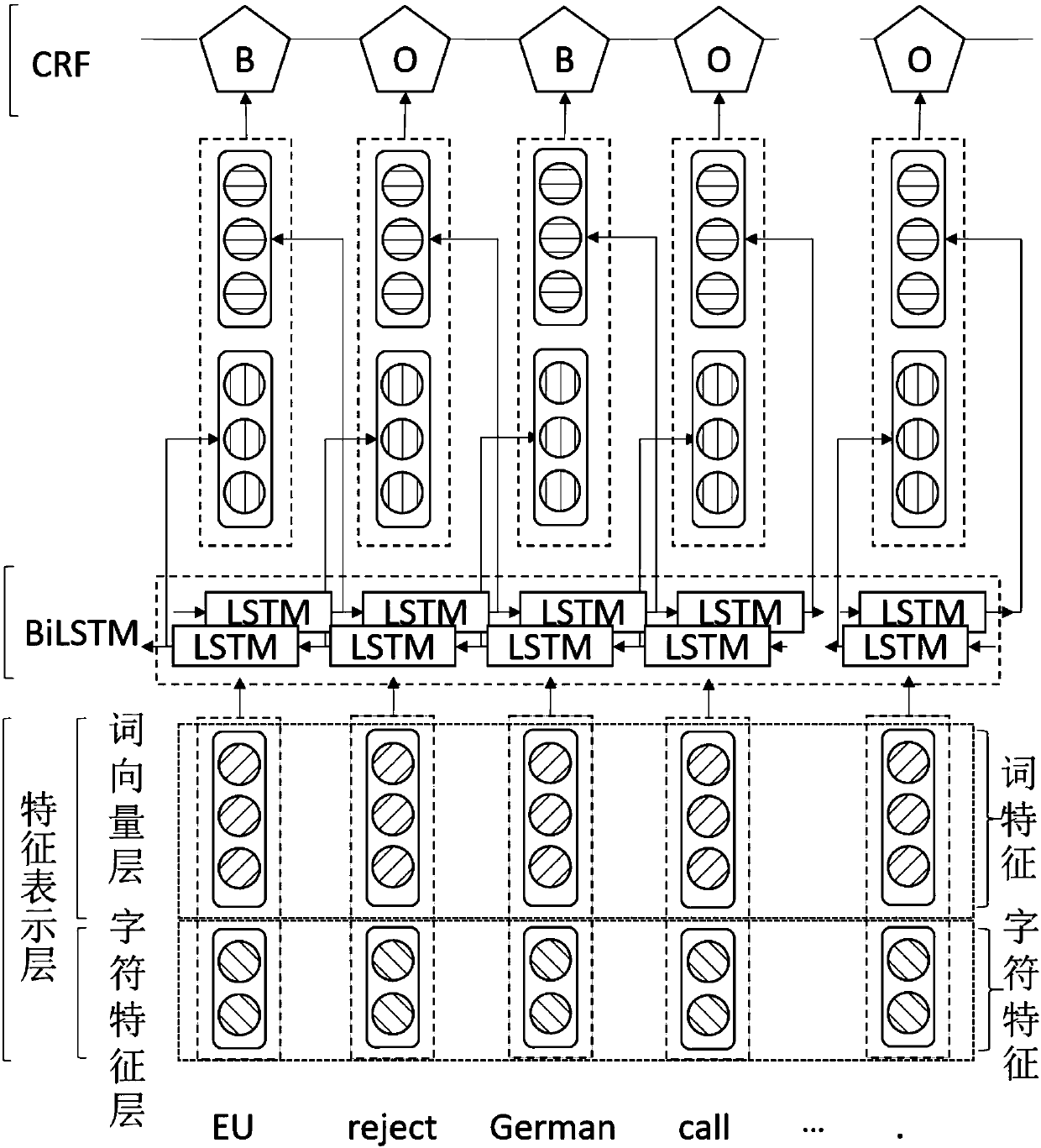

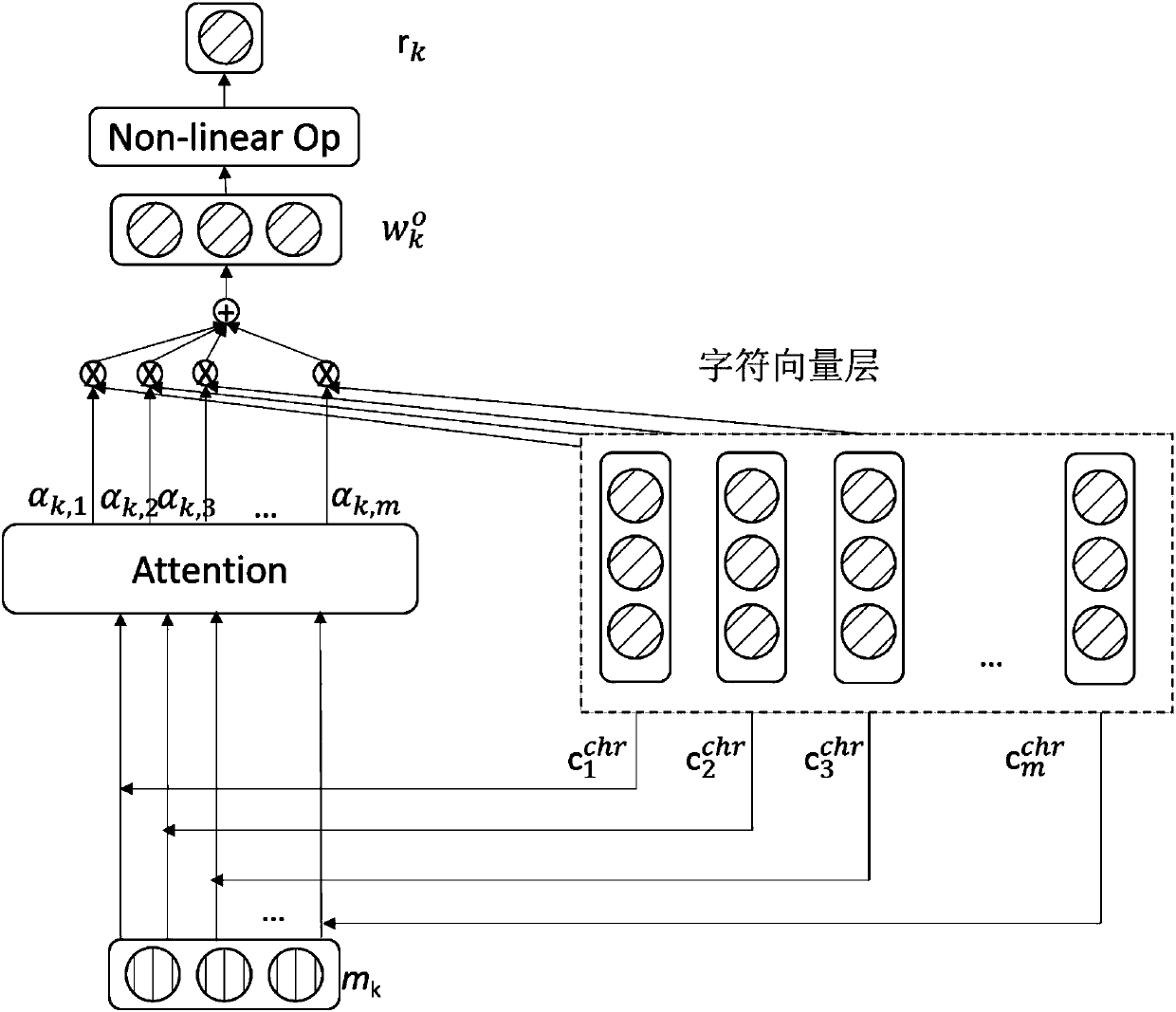

[0066] To represent the morphological information of words more accurately, the present invention designs Finger, a fine-grained word representation model based on the attention mechanism. Combining Finger with a BiLSTM-CRF model for sequence tagging tasks achieves the desired results.
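In a BiLSTM-CRF tagger, the CRF layer selects the single best tag sequence from per-token emission scores (produced by the BiLSTM) and tag-to-tag transition scores. The sketch below shows standard Viterbi decoding for a linear-chain CRF in plain Python. It is a generic illustration, not the patent's implementation: the function name `viterbi_decode`, the list-of-lists score layout, and the omission of explicit start/end transitions are all assumptions.

```python
from typing import List, Tuple


def viterbi_decode(emissions: List[List[float]],
                   transitions: List[List[float]]) -> Tuple[List[int], float]:
    """Return the highest-scoring tag path under a linear-chain CRF.

    Hypothetical interface (assumption, not from the patent):
      emissions[t][k]   score of tag k at token t (e.g. a BiLSTM output)
      transitions[i][j] score of moving from tag i to tag j
    Start/end transition scores are omitted for brevity.
    """
    num_tags = len(transitions)
    # score[k]: best total score of any path that ends in tag k so far
    score = list(emissions[0])
    backpointers: List[List[int]] = []

    for t in range(1, len(emissions)):
        new_score: List[float] = []
        pointers: List[int] = []
        for j in range(num_tags):
            # Best previous tag i for arriving at tag j at step t
            best_i = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)

    # Follow the back-pointers from the best final tag
    best_last = max(range(num_tags), key=lambda k: score[k])
    path = [best_last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[best_last]
```

With zero transition scores the decoder simply follows the per-token maxima. A strong penalty on switching tags instead makes it prefer a constant tag sequence, which is how the CRF layer enforces label consistency beyond what the BiLSTM sees token by token.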

[0067] 1. Representation stage

[0068] In the representation stage, given a sentence of arbitrary length, the word-vector representation and the character-vector representation of each word are computed by formulas (1)-(6), and the word vector and character vector of each word in the sequence are then concatenated (spliced) into a single representation.
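Formulas (1)-(6) are not reproduced in this excerpt, so the following is only a minimal sketch of the general idea: the character vectors of a word are pooled by attention and the result is spliced onto the word vector. It assumes plain dot-product attention against a single learned query vector `query`; that vector and the helper names `attend_characters`, `represent_word` and `represent_sentence` are hypothetical, and Finger's actual scoring function may differ.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_characters(char_vecs: List[Vector], query: Vector) -> Vector:
    """Pool a word's character vectors with dot-product attention (assumed scoring)."""
    scores = [sum(c_i * q_i for c_i, q_i in zip(c, query)) for c in char_vecs]
    weights = softmax(scores)
    dim = len(char_vecs[0])
    # Weighted sum of character vectors, dimension by dimension
    return [sum(w * c[d] for w, c in zip(weights, char_vecs)) for d in range(dim)]


def represent_word(word_vec: Vector, char_vecs: List[Vector], query: Vector) -> Vector:
    """Splice (concatenate) the word vector with its attention-pooled character vector."""
    return word_vec + attend_characters(char_vecs, query)


def represent_sentence(word_vecs: List[Vector],
                       char_vecs_per_word: List[List[Vector]],
                       query: Vector) -> List[Vector]:
    """Build the concatenated representation for every word in a sentence."""
    return [represent_word(w, cs, query) for w, cs in zip(word_vecs, char_vecs_per_word)]
```

Because the attention weights depend on the characters themselves, informative characters (for example affixes) can dominate the pooled vector. A plain average would instead weight every character equally; the sketch reduces to that average when `query` is all zeros.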

[0069] 2. Encoding stage

[0070] In the enc...
