A Robust Object Tracking Device and Tracking Method Based on Compact Expression

A robust tracking device and method, applied in image analysis, image enhancement, instrumentation, etc. It addresses the unsolved problem of robust tracking and achieves the effects of reducing time consumption and stabilizing tracking.

Examples

Embodiment 1

[0048] As shown in Figure 1, a robust object tracking device based on compact representations comprises a computer and a camera connected to it.

Embodiment 2

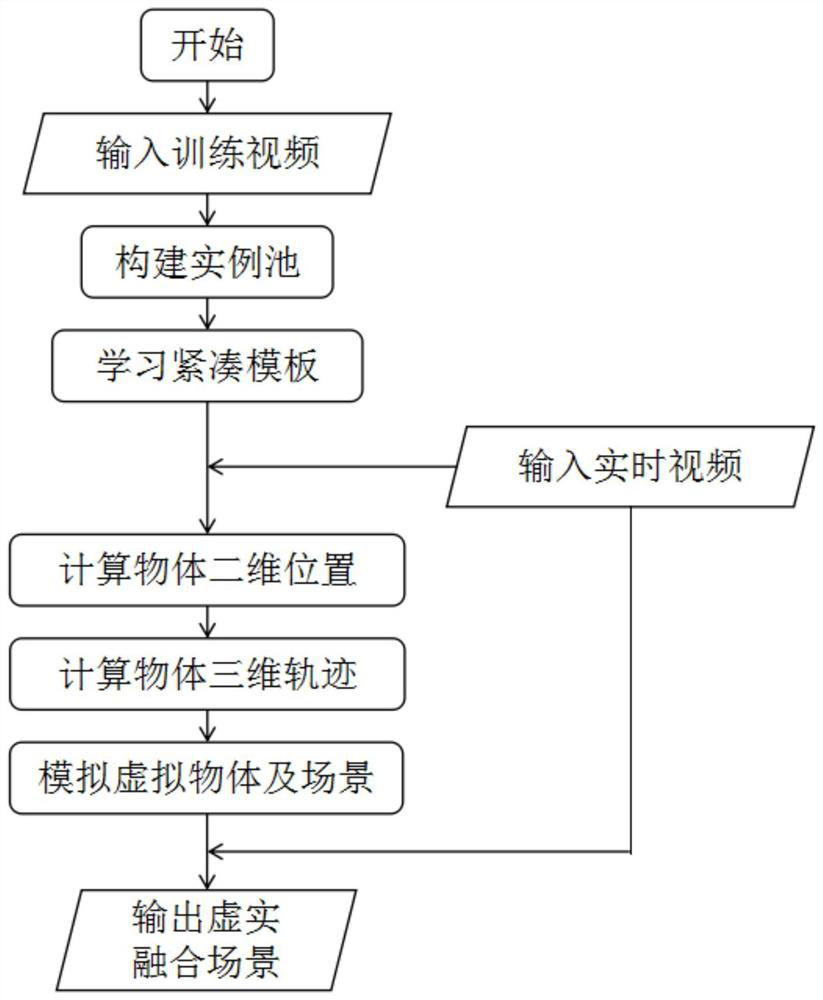

[0050] As shown in Figure 2, a robust object tracking method based on compact representations includes the following steps:

[0051] 1) Construct a complete and compact target dictionary. To achieve robust tracking in marker-free AR environments, we first need to create a representation of the object, and we frame this as the problem of constructing a high-dimensional manifold of the normalized sub-images of all possible appearances of the target object. This construction is divided into the following five steps:

[0052] 1.1) Capture sub-images of the target from multiple viewpoints, against multiple backgrounds, and under varying lighting conditions to form an instance pool. These sub-images must be free of any occlusion. To acquire such images quickly and in large quantities, recorded video sequences and computer-synthesized images can be used. The sub-images should constitute a complete description of the target, i.e. they should contain i...
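The normalized instance pool described in steps 1) and 1.1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's procedure: the 16x16 patch size, nearest-neighbour resizing, zero-mean/unit-norm normalization and gamma-based lighting synthesis are all assumptions, and `normalize_patch`, `lighting_variants` and `build_instance_pool` are hypothetical helper names. Each column of the returned matrix plays the role of one instance x_j.

```python
import numpy as np

# Assumed template size; the excerpt only says sub-images are "normalized".
PATCH_SHAPE = (16, 16)


def resize_nearest(img: np.ndarray, shape=PATCH_SHAPE) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D grayscale array to `shape`."""
    rows = (np.arange(shape[0]) * img.shape[0] / shape[0]).astype(int)
    cols = (np.arange(shape[1]) * img.shape[1] / shape[1]).astype(int)
    return img[np.ix_(rows, cols)]


def normalize_patch(img: np.ndarray) -> np.ndarray:
    """Resize, flatten, subtract the mean and scale to unit L2 norm."""
    v = resize_nearest(img.astype(np.float64)).ravel()
    v = v - v.mean()
    n = np.linalg.norm(v)
    return v / n if n > 0 else v


def lighting_variants(img: np.ndarray, gammas=(0.6, 1.0, 1.6)):
    """Synthesize illumination changes with a gamma curve (hypothetical augmentation)."""
    base = np.clip(img.astype(np.float64), 0.0, 255.0) / 255.0
    for g in gammas:
        yield 255.0 * base ** g


def build_instance_pool(subimages) -> np.ndarray:
    """Stack the normalized sub-images and their lighting variants as columns of an n-by-m matrix."""
    cols = [normalize_patch(v) for img in subimages for v in lighting_variants(img)]
    return np.stack(cols, axis=1)
```

Zero-mean, unit-norm normalization already cancels global gain and offset changes in brightness, which is why the synthetic lighting variation in this sketch uses a non-linear gamma curve instead of a simple brightness scale.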

Embodiment 3

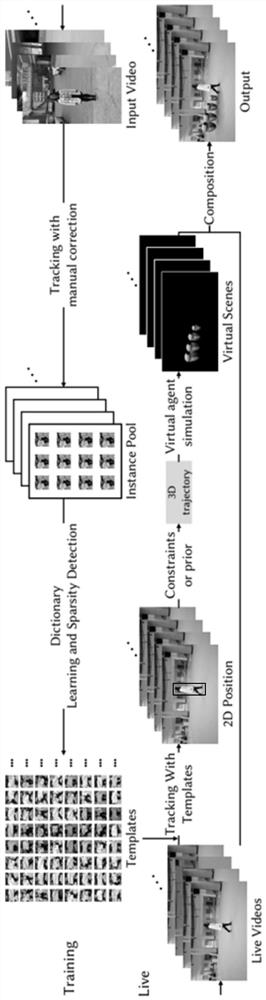

[0073] Figure 3 illustrates a robust object tracking method based on compact representations. In the training phase (the upper row of Figure 3, read from right to left), various video images of a person are collected and general-purpose target tracking is run on the person; whenever tracking fails, the error is removed or corrected through manual intervention. The sub-images of the accurately tracked target are then extracted, yielding thousands to hundreds of thousands of instances $\{x_j, j = 1, 2, \ldots, m\}$ that form the instance pool. An iterative sparse learning method then selects a compact template set $T^*$. In the online phase (the lower row of Figure 3, read from left to right), the template set $T^*$ is used to track the target and obtain a stable two-dimensional region. Under the constraint that the person walks on the ground, the bottom of the two-dimensional region must be in contact with the ground plane, so as to obtain the three-di...
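The training and online phases can be illustrated with the sketch below. It is a hedged stand-in: the excerpt does not describe the iterative sparse learning method, so template selection here uses a plain greedy rule (repeatedly add the instance that the current templates reconstruct worst, measured by least-squares residual), and online candidate patches are scored by the same residual rather than by a sparse solver. `select_templates`, `best_candidate`, `tol` and `max_templates` are hypothetical names; the columns of `X` are assumed to be normalized instances x_j.

```python
import numpy as np


def residuals(T: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Least-squares reconstruction error of each column of X from the columns of T."""
    coef, *_ = np.linalg.lstsq(T, X, rcond=None)
    return np.linalg.norm(X - T @ coef, axis=0)


def select_templates(X: np.ndarray, tol: float = 0.1, max_templates: int = 50) -> list:
    """Greedily pick columns of X until every instance is reconstructed within `tol`.

    Stand-in for the patent's iterative sparse learning: each round adds the
    instance that the current template set explains worst.
    """
    chosen = [int(np.argmax(np.linalg.norm(X, axis=0)))]
    while len(chosen) < max_templates:
        r = residuals(X[:, chosen], X)
        worst = int(np.argmax(r))
        if r[worst] <= tol:
            break  # every instance is already well represented
        chosen.append(worst)
    return chosen


def best_candidate(T: np.ndarray, candidates: np.ndarray) -> int:
    """Online phase: index of the candidate patch best explained by the template set T*."""
    return int(np.argmin(residuals(T, candidates)))
```

Because each added instance is one the current set cannot reconstruct, the selected templates stay linearly independent, which keeps the set compact when the instance pool lies near a low-dimensional subspace.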

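The ground-contact constraint in [0073] can be sketched as back-projecting the bottom-centre of the tracked two-dimensional region onto the ground plane. The excerpt is truncated before the details, so this assumes a calibrated pinhole camera with known intrinsics `K`, a world-to-camera pose `(R, t)`, and a ground plane at world Z = 0; `foot_point_on_ground` is a hypothetical helper.

```python
import numpy as np


def foot_point_on_ground(box, K: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Back-project the bottom-centre of a 2-D box onto the world plane Z = 0.

    box = (x_min, y_min, x_max, y_max) in pixels; K is the 3x3 intrinsic matrix and
    (R, t) map world points to camera coordinates: x_cam = R @ X + t (assumed model).
    """
    x_min, y_min, x_max, y_max = box
    pixel = np.array([(x_min + x_max) / 2.0, y_max, 1.0])  # bottom-centre, homogeneous
    center = -R.T @ t                                        # camera centre in world frame
    direction = R.T @ np.linalg.solve(K, pixel)              # viewing ray in world frame
    if abs(direction[2]) < 1e-12:
        raise ValueError("viewing ray is parallel to the ground plane")
    s = -center[2] / direction[2]                            # ray parameter where Z = 0
    if s <= 0:
        raise ValueError("ground intersection lies behind the camera")
    return center + s * direction
```

Only the bottom edge of the region is used because, under the walking-on-the-ground constraint, that is the one image point whose depth is fixed by the plane.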
