Decentralized parameter server optimization algorithm

A decentralized optimization algorithm, applied in the field of deep learning, that can address problems such as aggravation, bandwidth waste, and communication congestion.


Embodiment

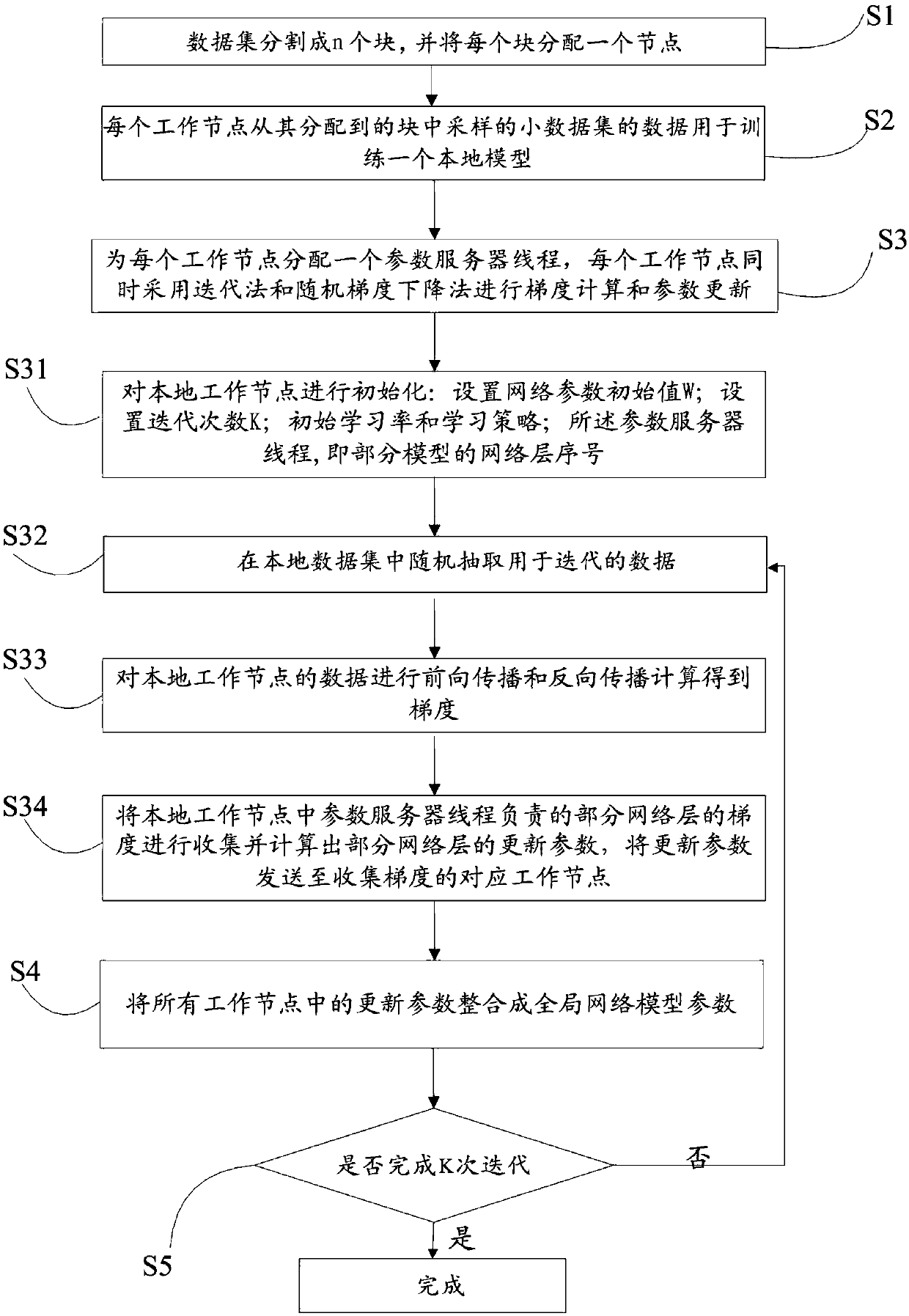

[0049] The traditional central parameter server algorithm is commonly used to train network models in distributed deep learning. The present invention optimizes this algorithm by removing its central parameter server. Global network model training is carried out over a communication network with n working nodes, and the global network trained by deep learning has M layers. The global network model is partitioned by layer, and each working node is assigned part of the partitioned model, which it maintains and updates through its parameter server thread. The training data set is divided into n blocks, and each block is assigned to a single working node for local model training. Each working node uses gradient descent. The parameter update in the iterative model training is carried out by the iterativ...
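Paragraph [0049] describes the scheme only in outline. The following is a minimal single-process sketch of the idea, built on several assumptions that do not come from the patent: a toy M-layer scalar linear network (prediction = x · w_1 · … · w_M), a synthetic target y = 2x, contiguous layer-to-node assignment, and synchronous steps in which each layer's owner averages the gradients pushed by all n nodes. The helper names (`partition_layers`, `split_data`, `WorkingNode`, `ps_update`, `train`) are hypothetical, and the sequential loop merely stands in for the per-node parameter server threads.

```python
import random
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy scalar regression task (assumption)


def partition_layers(num_layers: int, num_nodes: int) -> List[List[int]]:
    """Split layer indices 0..M-1 into n contiguous groups, one per working node."""
    base, extra = divmod(num_layers, num_nodes)
    groups, start = [], 0
    for node in range(num_nodes):
        size = base + (1 if node < extra else 0)
        groups.append(list(range(start, start + size)))
        start += size
    return groups


def split_data(data: List[Sample], num_nodes: int) -> List[List[Sample]]:
    """Divide the training set into n blocks (strided here), one per working node."""
    return [data[i::num_nodes] for i in range(num_nodes)]


def layer_grads(weights: List[float], block: List[Sample]) -> List[float]:
    """Mean gradient of 0.5 * (x * prod(w) - y)^2 w.r.t. each layer weight over one block."""
    grads = [0.0] * len(weights)
    for x, y in block:
        prod = 1.0
        for w in weights:
            prod *= w
        err = x * prod - y
        for l in range(len(weights)):
            others = 1.0  # product of every layer weight except layer l
            for k, w in enumerate(weights):
                if k != l:
                    others *= w
            grads[l] += err * x * others
    return [g / len(block) for g in grads]


class WorkingNode:
    """One working node: owns a shard of layers plus a local data block."""

    def __init__(self, owned_layers: List[int], block: List[Sample], init: float = 1.0):
        self.owned = {l: init for l in owned_layers}  # shard this node maintains
        self.block = block
        self.inbox = {l: [] for l in owned_layers}  # gradients pushed by all nodes

    def ps_update(self, lr: float) -> None:
        """Parameter-server role: average received gradients, apply one descent step."""
        for l, grads in self.inbox.items():
            self.owned[l] -= lr * sum(grads) / len(grads)
            grads.clear()


def train(num_layers: int = 4, num_nodes: int = 2, steps: int = 200,
          lr: float = 0.05, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    data = [(x, 2.0 * x) for x in (rng.uniform(-1, 1) for _ in range(64))]
    groups = partition_layers(num_layers, num_nodes)
    blocks = split_data(data, num_nodes)
    nodes = [WorkingNode(groups[i], blocks[i]) for i in range(num_nodes)]
    owner = {l: i for i, g in enumerate(groups) for l in g}
    for _ in range(steps):
        # Pull: assemble the full model from the shard owners (no central server).
        weights = [nodes[owner[l]].owned[l] for l in range(num_layers)]
        # Local training: each node computes gradients on its own block and pushes
        # each layer's gradient directly to the node that owns that layer.
        for node in nodes:
            for l, g in enumerate(layer_grads(weights, node.block)):
                nodes[owner[l]].inbox[l].append(g)
        # Each owner updates its shard (stands in for the per-node PS thread).
        for node in nodes:
            node.ps_update(lr)
    return [nodes[owner[l]].owned[l] for l in range(num_layers)]


if __name__ == "__main__":
    # Each weight should approach 2 ** 0.25 (about 1.189), so their product approaches 2.
    print(train())
```

Because every node pulls the full model before any shard is updated, this sketch behaves like synchronous data-parallel gradient descent; the only change from a central design is that each layer's gradients travel to that layer's owning node rather than to a single server. An asynchronous or threaded variant would need explicit synchronization that the source does not specify.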

