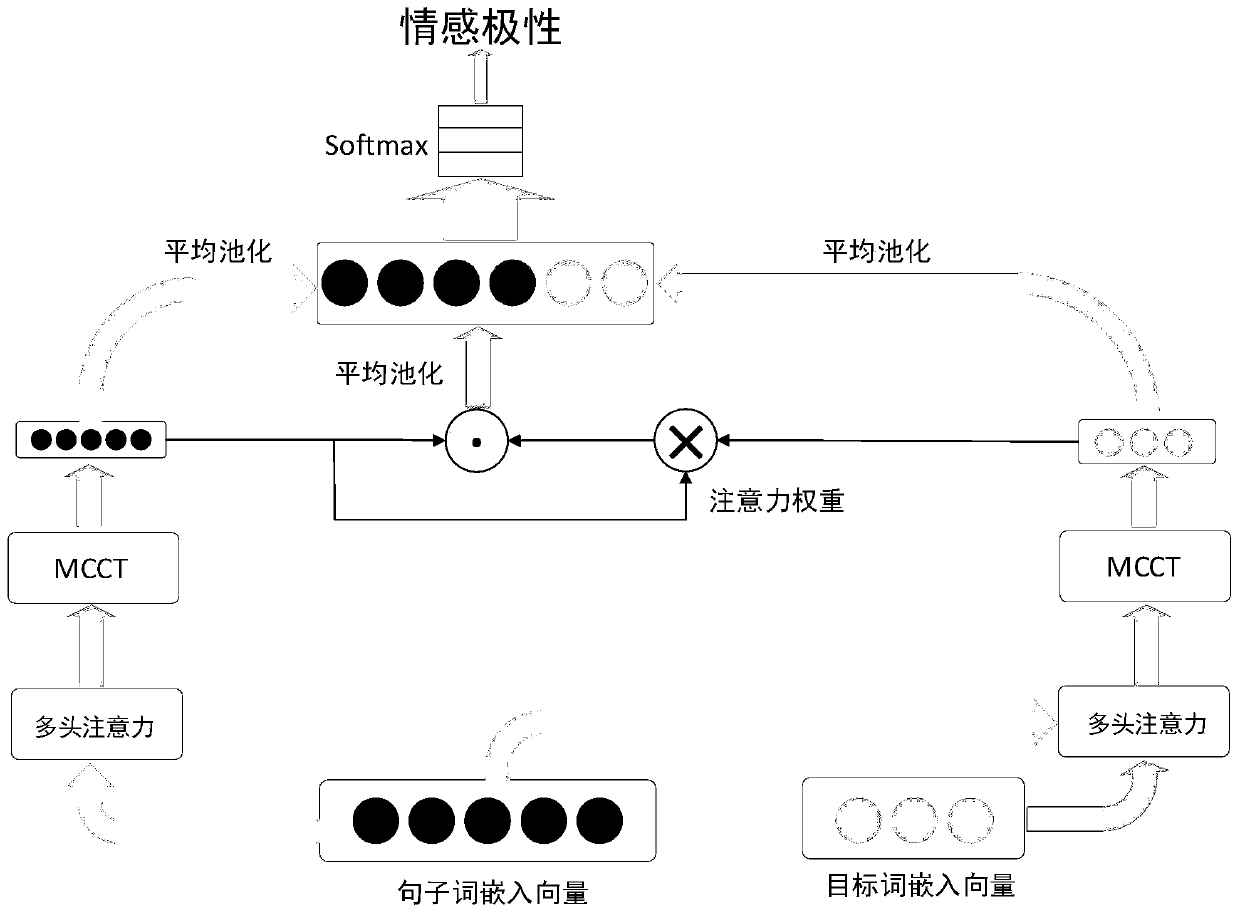

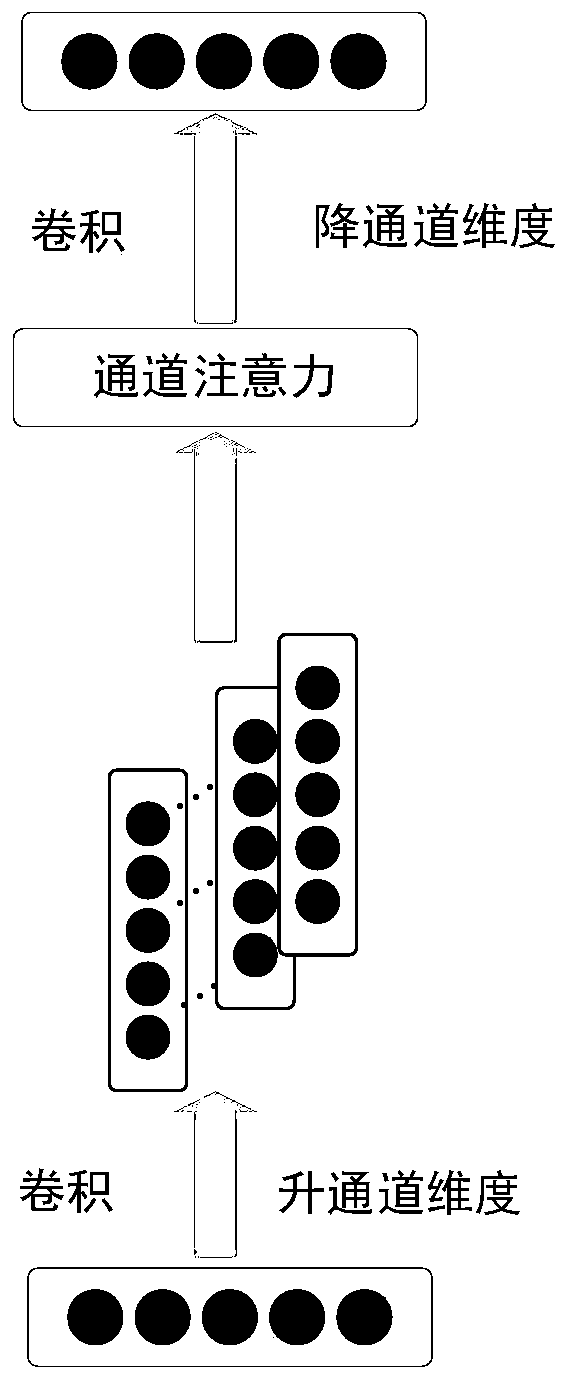

Video bullet screen emotion analysis method based on multi-scale attention convolutional coding network

A convolutional coding and attention technique, applied in the fields of deep learning and sentiment analysis, that addresses problems such as insufficient extraction of emotional polarity, poorly extracted sample features, and errors.

Embodiment Construction

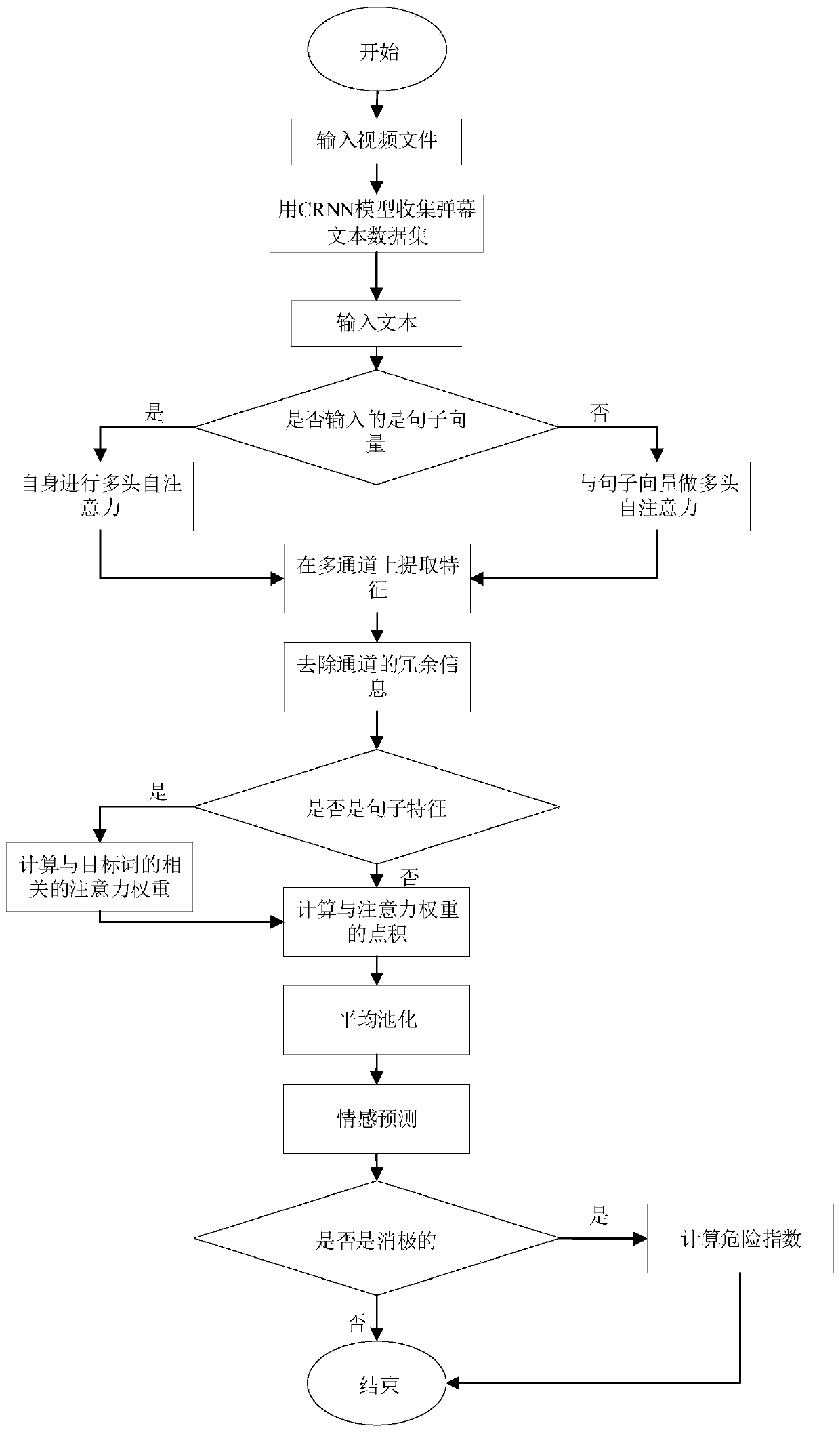

[0080] To make the purpose, technical solutions, and advantages of the present invention clearer, its specific use is further described below in combination with the technical solutions given above and the accompanying drawings.

[0081] As shown in image 3, the video bullet screen sentiment analysis method based on a multi-scale attention convolutional coding network comprises the following steps:

[0082] Step 1. Collect video files and use CRNN to extract bullet screen samples from the video. Identify the target words in each sample sentence and label the emotional polarity of each target word to obtain a bullet screen sample data set. The sentence samples and target words in the data set are each preprocessed with GloVe to convert them into a vector form that the neural network can handle easily. The GloVe loss function is:

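[0083] $$J = \sum_{i,j=1}^{V} f(X_{ij}) \left( w_i^{T} \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2$$

[0084] where $w_i$ and $\tilde{w}_j$ are the word vectors to be solved for, and $f(X_{ij})$ is the weight function. ...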
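The GloVe loss referenced in Step 1 can be illustrated with a minimal NumPy sketch. It assumes the standard weighted least-squares form of the GloVe objective; the function names `glove_weight` and `glove_loss`, the co-occurrence matrix `X`, the bias vectors `b` and `b_tilde`, and the defaults `x_max=100` and `alpha=0.75` are illustrative assumptions taken from the commonly published GloVe formulation, not values stated in this document.

```python
import numpy as np


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weight function f(X_ij). x_max and alpha are assumed defaults,
    not values given in the patent text."""
    x = np.asarray(x, dtype=float)
    # Rare co-occurrences are down-weighted; frequent ones are capped at 1.
    return np.where(x < x_max, (x / x_max) ** alpha, 1.0)


def glove_loss(X, W, W_tilde, b, b_tilde):
    """Weighted least-squares GloVe objective over nonzero co-occurrences.

    X        : (V, V) co-occurrence counts
    W        : (V, d) word vectors
    W_tilde  : (V, d) context word vectors
    b, b_tilde : (V,) bias terms (hypothetical names)
    """
    i, j = np.nonzero(X)           # log(0) is undefined, so skip zero counts
    x_ij = X[i, j]
    pred = np.sum(W[i] * W_tilde[j], axis=1) + b[i] + b_tilde[j]
    err = pred - np.log(x_ij)
    return float(np.sum(glove_weight(x_ij) * err ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    V, d = 5, 3
    X = rng.integers(0, 10, size=(V, V)).astype(float)
    W = rng.normal(scale=0.1, size=(V, d))
    W_tilde = rng.normal(scale=0.1, size=(V, d))
    b = np.zeros(V)
    b_tilde = np.zeros(V)
    print("GloVe loss on toy data:", glove_loss(X, W, W_tilde, b, b_tilde))
```

In practice the vectors would be obtained by minimizing this loss with a gradient-based optimizer, or loaded from pretrained GloVe embeddings, before the sentence samples and target words are mapped to vectors.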
